Auditory Display of Fluorescence Image Data in an In Vivo Tumor Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Cells and Animal Experiments

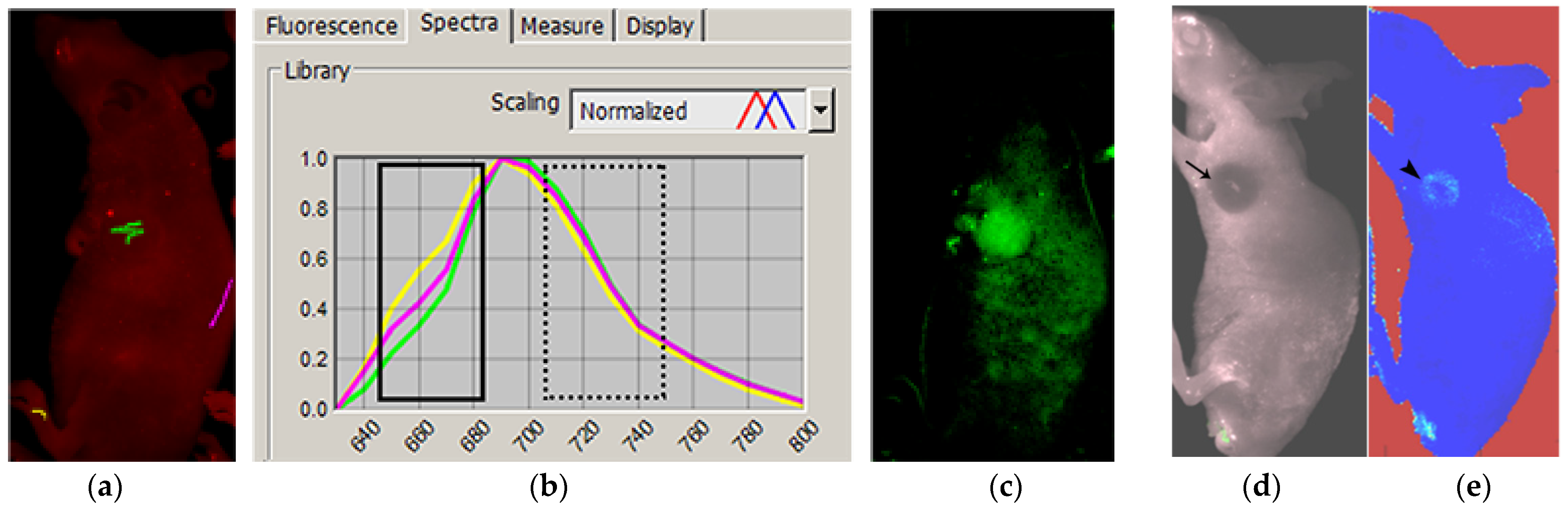

2.2. Pre-Analysis of Data

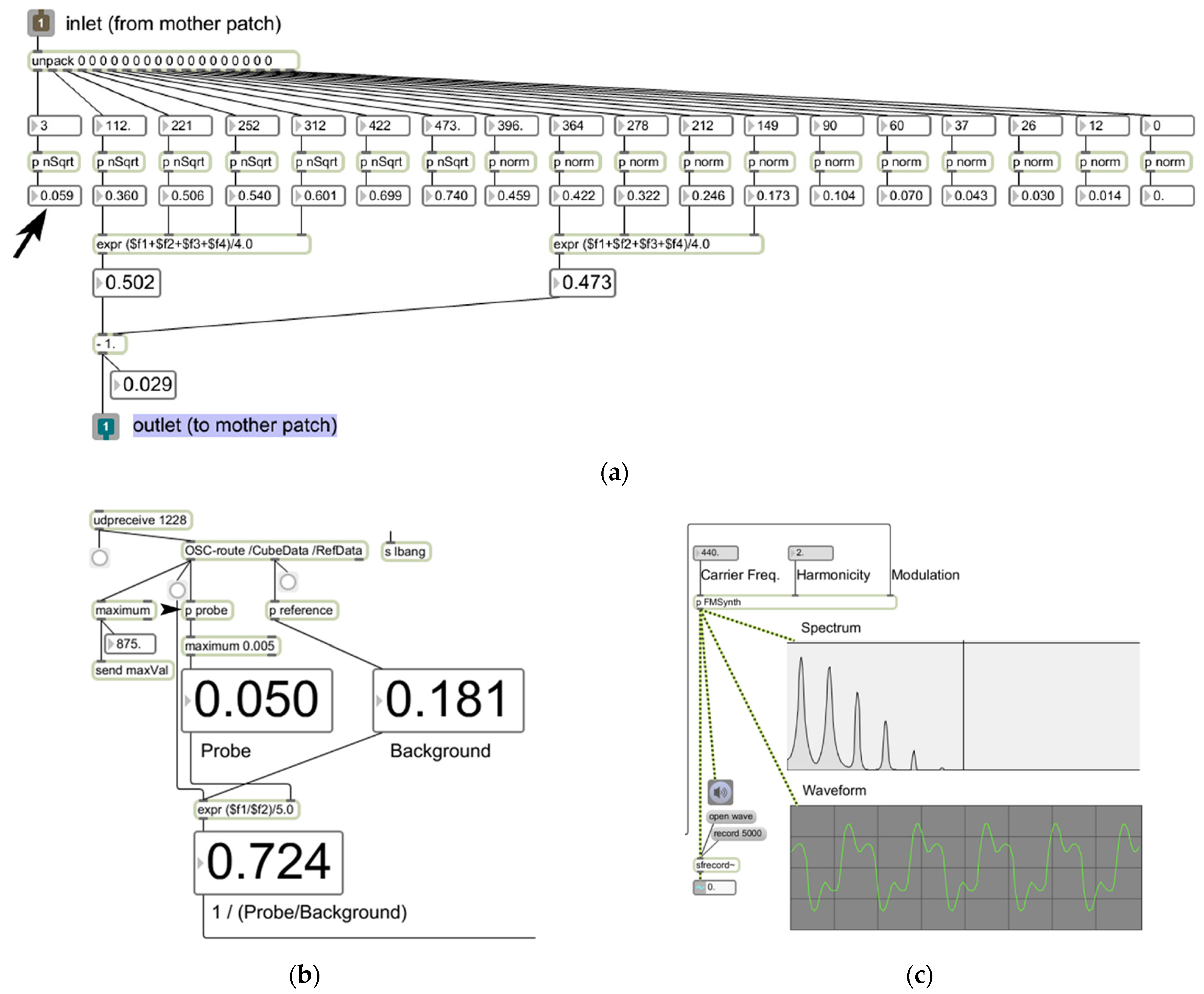

2.3. Data Transport and Auditory Display

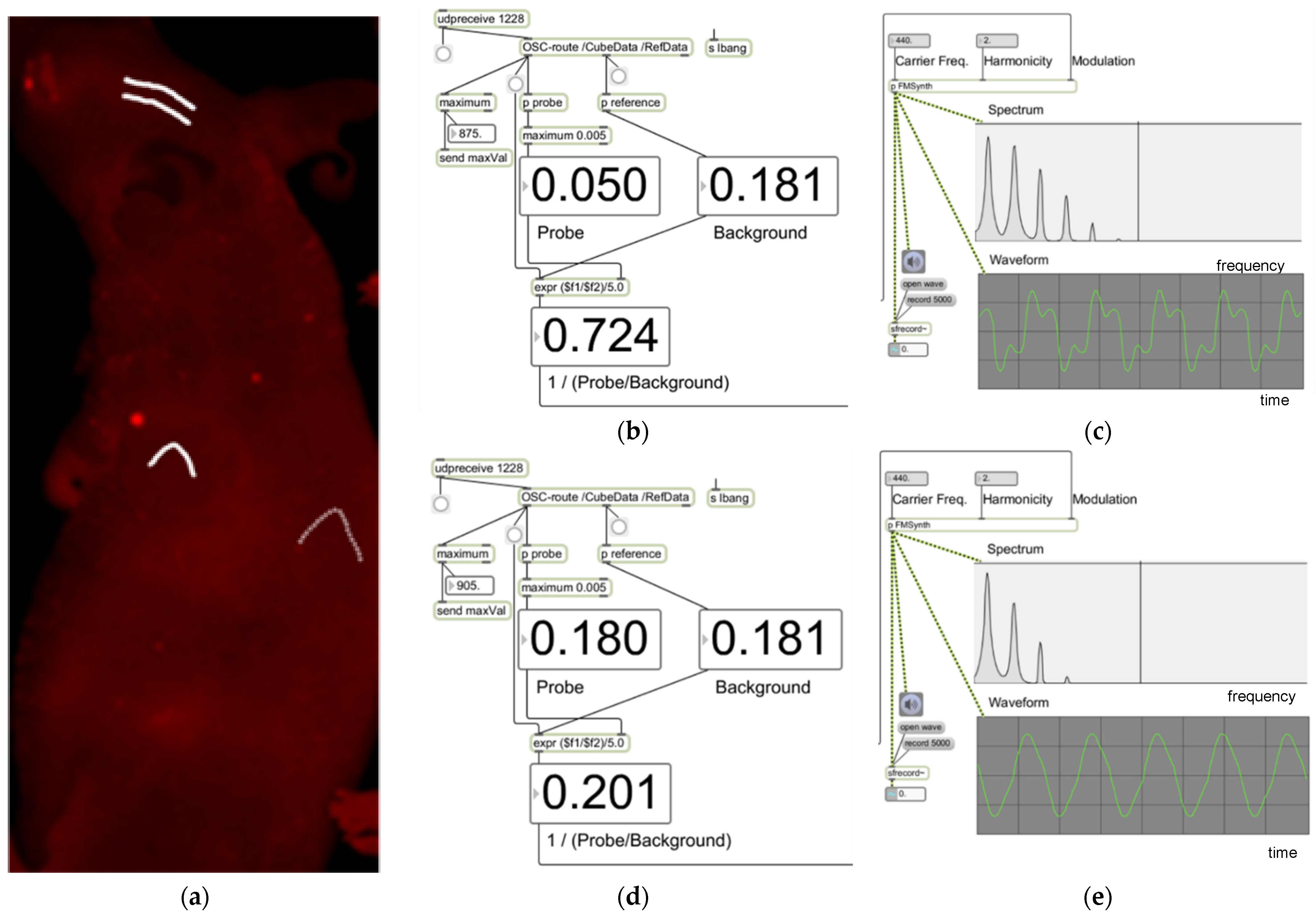

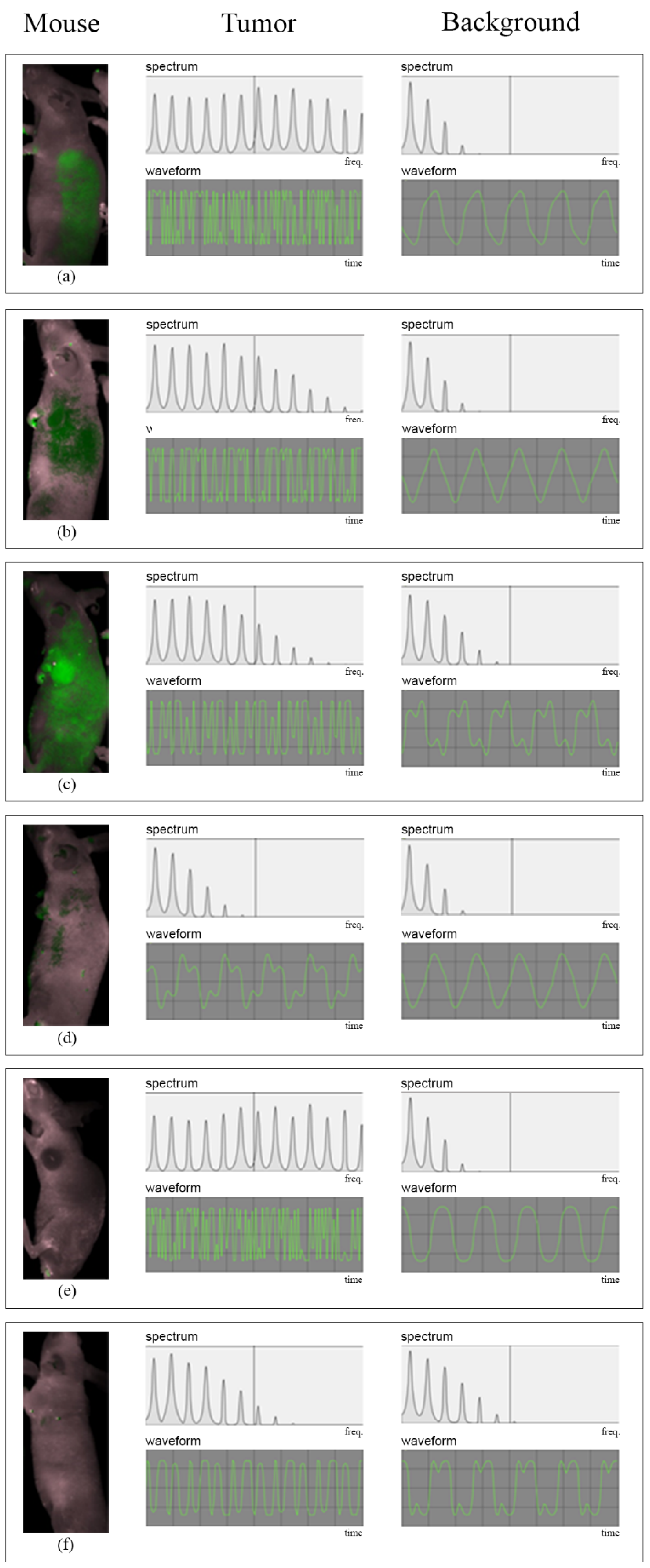

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Skull | Tumor | Left Flank |
|---|---|---|---|
| All mice | 0.34 (0.13–1.59) | 4.91 (0.23–8.21) | 0.55 (0.17–6.62) |
| Mouse A | 0.31 (0.25–0.45) | 2.2 (1.02–8.21) | 0.98 (0.56–2.2) |
| Mouse B | 0.47 (0.23–1.59) | 6.62 (5.19–7.70) | 5.18 (0.86–6.62) |
| Mouse C | 0.66 (0.34–0.99) | 5.17 (4.89–6.23) | 3.8 (1.24–4.9) |
| Mouse D | 0.41 (0.34–0.67) | 6.47 (5.70–7.27) | 0.51 (0.35–0.54) |
| Mouse E | 0.21 (0.19–0.23) | 0.26 (0.23–0.35) | 0.24 (0.17–0.54) |
| Mouse F | 0.71 (0.13–0.34) | 0.75 (0.44–1.13) | 0.26 (0.19–0.34) |
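The table lends itself to a small illustration of the kind of value-to-sound mapping that an auditory display performs. The Python sketch below is hypothetical and is not the authors' mapping. It assumes that each cell lists a central value followed by its range in parentheses. It maps values to pitch on a logarithmic scale between an assumed 220 Hz and 880 Hz. It also uses the pooled minimum (0.13) and maximum (8.21) of the "All mice" row as the display range. The names `value_to_frequency`, `F_MIN_HZ`, and `F_MAX_HZ` are illustrative only.

```python
import math

# Hypothetical display settings: the frequency bounds and the logarithmic
# scaling are illustrative assumptions, not the mapping used in the study.
F_MIN_HZ = 220.0
F_MAX_HZ = 880.0


def value_to_frequency(value, v_min, v_max, f_min=F_MIN_HZ, f_max=F_MAX_HZ):
    """Map a positive value in [v_min, v_max] to a frequency in [f_min, f_max].

    Both axes are treated logarithmically, so equal ratios between values
    produce equal pitch intervals. Values outside the range are clamped.
    """
    if not (0 < v_min < v_max):
        raise ValueError("require 0 < v_min < v_max")
    v = min(max(value, v_min), v_max)  # clamp to the display range
    t = math.log(v / v_min) / math.log(v_max / v_min)  # normalized position, 0..1
    return f_min * (f_max / f_min) ** t


# Central values from the "All mice" row of the table
sites = {"Skull": 0.34, "Tumor": 4.91, "Left Flank": 0.55}
# Display range: pooled minimum and maximum over that row's ranges
V_MIN, V_MAX = 0.13, 8.21

for site, value in sites.items():
    freq = value_to_frequency(value, V_MIN, V_MAX)
    print(f"{site:10s} {value:5.2f} -> {freq:6.1f} Hz")
```

The sketch uses a logarithmic mapping so that a value ratio is heard as the same musical interval wherever it falls in the range. With these assumed settings, the tumor value sounds clearly higher than both the skull and the left-flank values. A linear mapping, or another sound parameter such as loudness or timbre, would be equally plausible design choices.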

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, S.-W.; Lee, S.H.; Cheng, Z.; Yeo, W.S. Auditory Display of Fluorescence Image Data in an In Vivo Tumor Model. Diagnostics 2022, 12, 1728. https://doi.org/10.3390/diagnostics12071728


Chicago/Turabian style: Lee, Sheen-Woo, Sang Hoon Lee, Zhen Cheng, and Woon Seung Yeo. 2022. "Auditory Display of Fluorescence Image Data in an In Vivo Tumor Model." Diagnostics 12, no. 7: 1728. https://doi.org/10.3390/diagnostics12071728
