Artificial Intelligence-Based Prediction of Oroantral Communication after Tooth Extraction Utilizing Preoperative Panoramic Radiography

Abstract

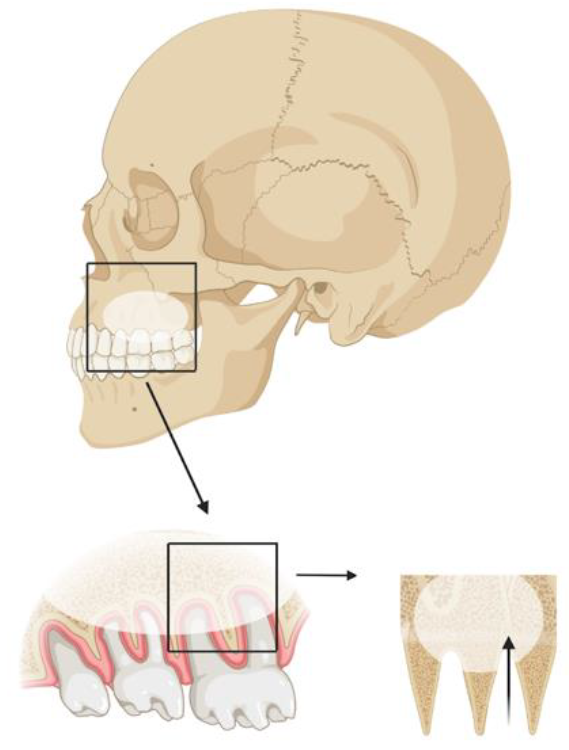

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Expert Evaluations

2.3. Convolutional Neural Networks

- CPU: AMD Ryzen 9 5950X 16-Core Processor;

- RAM: 64 GB;

- GPU: NVIDIA GeForce RTX 3090 (24 GB GDDR6X RAM);

- Python version: 3.10.4 (64-bit);

- OS: Windows 10.
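
Environment details like those listed above can be captured programmatically so they are reported consistently across runs. The following is a minimal sketch using only the Python standard library; the function name `describe_environment` and the dictionary keys are illustrative assumptions and are not taken from the study's code. GPU details are not covered here, since querying them requires a third-party library.

```python
import platform
import sys


def describe_environment() -> dict[str, str]:
    """Collect basic interpreter and OS details (hypothetical helper)."""
    return {
        # e.g. "3.10.4" on the setup described above
        "python_version": platform.python_version(),
        # sys.maxsize exceeds 2**32 only on 64-bit interpreters
        "bitness": "64-bit" if sys.maxsize > 2**32 else "32-bit",
        # e.g. "Windows 10"
        "os": f"{platform.system()} {platform.release()}",
        # may be an empty string on some platforms, so fall back to "unknown"
        "processor": platform.processor() or "unknown",
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```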

3. Results

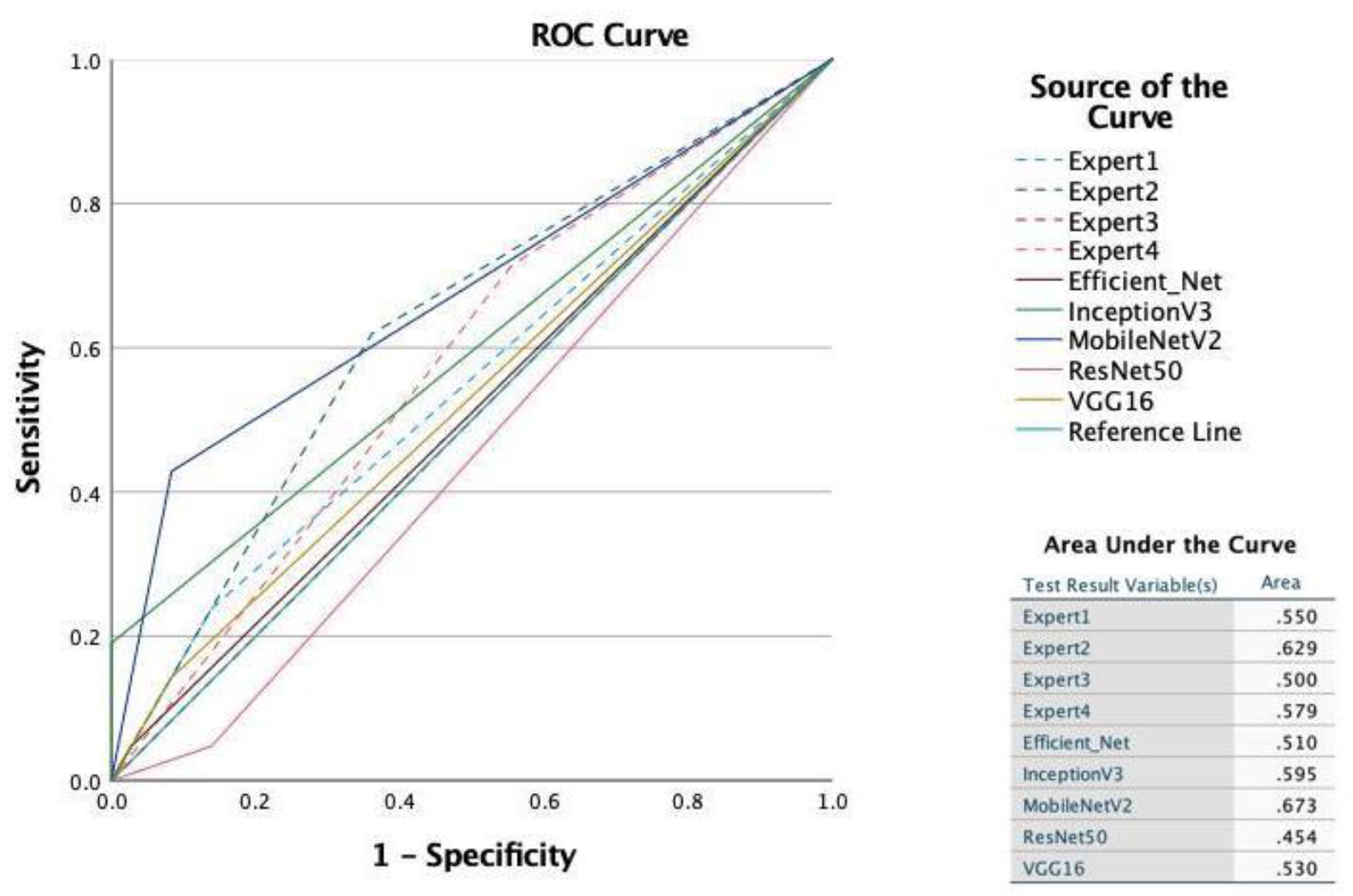

3.1. Convolutional Neural Network Performance

3.2. Expert Evaluations

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithm | Accuracy | AUC | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| VGG16 | 0.63 | 0.53 | 0.50 | 0.14 | 0.22 |
| MobileNetV2 | 0.74 | 0.67 | 0.75 | 0.43 | 0.55 |
| InceptionV3 | 0.70 | 0.60 | 1.00 | 0.19 | 0.32 |
| ResNet50 | 0.56 | 0.45 | 0.17 | 0.05 | 0.07 |
| EfficientNet | 0.63 | 0.51 | 0.50 | 0.05 | 0.09 |
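
The F1-score column can be cross-checked against the precision and recall columns, since F1 is the harmonic mean of the two. The sketch below recomputes it from the rounded values in the table above; the names `f1_score`, `REPORTED`, and `recomputed_f1` are illustrative and not from the study. Because the inputs are already rounded to two decimals, small deviations are expected: for ResNet50 the recomputed value is about 0.077 against a reported 0.07, which is presumably a rounding effect.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
REPORTED = {
    "VGG16": (0.50, 0.14, 0.22),
    "MobileNetV2": (0.75, 0.43, 0.55),
    "InceptionV3": (1.00, 0.19, 0.32),
    "ResNet50": (0.17, 0.05, 0.07),
    "EfficientNet": (0.50, 0.05, 0.09),
}


def recomputed_f1() -> dict[str, float]:
    """Recompute F1 for every network from its rounded precision and recall."""
    return {name: f1_score(p, r) for name, (p, r, _) in REPORTED.items()}


if __name__ == "__main__":
    for name, value in recomputed_f1().items():
        reported = REPORTED[name][2]
        print(f"{name}: recomputed {value:.3f}, reported {reported:.2f}")
```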

| Observer | Sensitivity (%) | Specificity (%) | Correctly Classified (%) | AUC |
|---|---|---|---|---|
| 1 | 14.14 | 95.02 | 68.33 | 0.5458 |
| 2 | 59.60 | 81.59 | 74.33 | 0.7059 |
| 3 | 34.69 | 76.12 | 62.54 | 0.5541 |
| 4 | 68.69 | 51.74 | 57.33 | 0.6021 |
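
When a rater gives only a yes/no judgment, the ROC curve has a single operating point, and the area under the polyline from (0, 0) through (1 - specificity, sensitivity) to (1, 1) reduces to (sensitivity + specificity) / 2. The AUC values in the table above are consistent with this relation, although whether they were actually computed this way is an assumption. The sketch below checks the relation against the tabulated values; `single_point_auc` and `OBSERVERS` are illustrative names.

```python
def single_point_auc(sensitivity_pct: float, specificity_pct: float) -> float:
    """Area under the ROC polyline (0,0) -> (1 - spec, sens) -> (1,1)."""
    sens = sensitivity_pct / 100.0
    spec = specificity_pct / 100.0
    return (sens + spec) / 2.0


# observer -> (sensitivity %, specificity %, reported AUC), from the table above.
OBSERVERS = {
    1: (14.14, 95.02, 0.5458),
    2: (59.60, 81.59, 0.7059),
    3: (34.69, 76.12, 0.5541),
    4: (68.69, 51.74, 0.6021),
}


if __name__ == "__main__":
    for observer, (sens, spec, reported) in OBSERVERS.items():
        auc = single_point_auc(sens, spec)
        print(f"Observer {observer}: computed {auc:.4f}, reported {reported:.4f}")
```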

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vollmer, A.; Saravi, B.; Vollmer, M.; Lang, G.M.; Straub, A.; Brands, R.C.; Kübler, A.; Gubik, S.; Hartmann, S. Artificial Intelligence-Based Prediction of Oroantral Communication after Tooth Extraction Utilizing Preoperative Panoramic Radiography. Diagnostics 2022, 12, 1406. https://doi.org/10.3390/diagnostics12061406
