1. Introduction

Tissue morphology in digital images can be assessed as accurately and efficiently as with conventional light microscopy [1,2]. Pattern recognition methods are available for conventional morphological staining and immunohistochemical antigen detection, which adds significant diagnostic value in clinical pathology [3,4]. More developed, machine-learning-based methods have been successfully used to stratify inter alia lung cancers that harbor different mutational statuses and also gastrointestinal cancers based on microsatellite instability status [5,6].

There is an unmet need to improve cancer outcome prediction based on histological cancer samples. AI methods are applicable for the recognition of cell and tissue components (epithelium, stroma, vessels, immune cells, etc.) in addition to their distribution and proportions. These methods have been successfully used to predict the progression of cancer [7,8]. Computer vision and neural networks have also been used in the search for predictive features that are independent of the traditional parameters, such as stage and grade [9,10,11]. There is growing interest in prostate cancer (PCa) histological grading by means of neural networks, and some recent studies have touched upon the possible use of deep learning (DL) methods in the prediction of PCa progression and survival [12,13,14]. AI, or more precisely trained neural networks, has shown great promise in detecting histological morphologies and changes that pathologists have traditionally determined [15]. Gleason grading is the strongest predictor of survival after primary therapy for PCa. From the introduction of the Gleason Score (GS) in 1966 until now, Gleason grade patterns have been modified and recapitulated into GS 6 to 10. A new system with five distinct grade groups (GGs) has been adopted for use in conjunction with the Gleason grading, as it is simpler and reflects the PCa outcome more accurately [16]. However, as GGs are based on the modified GS groups, the accuracy of Gleason grading is as important as before (Table 1) [16].

In terms of survival, there is clear evidence [17,18,19] of the difference between GG2 and GG3, which emphasizes the importance of accurate and standardized assignment of Gleason grading patterns. The interobserver reproducibility of grading among urologic pathologists has been shown to be within an acceptable range [20]. However, among general pathologists, there is still a need for training to reduce the interobserver variability in Gleason scoring [21]. The cribriform growth pattern of the Gleason grade 4 has a high level of reproducibility between pathologists and is a highly specific predictor for metastasis, disease-specific death, and biochemical recurrence (BCR) [22,23,24]. The independent predictive value of high Gleason grade patterns 4 and 5 has been previously shown as well [25,26].

PCa-related intra- and interobserver variation has recently been challenged by AI methodology. Specifically, neural networks have mostly succeeded in binary classification of benign tissue vs. cancer or reached the level of expert pathologists in grading prostate biopsies [12,13,14,27]. To become usable in everyday pathology practice and decision making, any neural-network-based algorithm must also succeed in outcome prediction. Thus far, only a few studies have tried to address this problem in PCa, and some of these studies have shown results at least at the human level when applied to radical prostatectomy (RALP) cohorts [12,14].

For a long time, training new AI-augmented image analysis models has been labor intensive and has required expert annotations of large data sets. Recent advances in neural networks and other AI models have enabled faster and more efficient development of methods for detecting changes in histological tissue slides.

Here, we have trained a robust Gleason grade classifier in prostate biopsies by using a relatively small training cohort. We have endeavored to create an efficient and accurate algorithm that also reproduces the characteristics of grading for the prediction of cancer recurrence after primary therapy.

2. Materials and Methods

Our cohort consisted of 750 patients undergoing prostate biopsy at the Helsinki University Hospital (HUS) between 2016 and 2017. For cohort demographics, see Table 2.

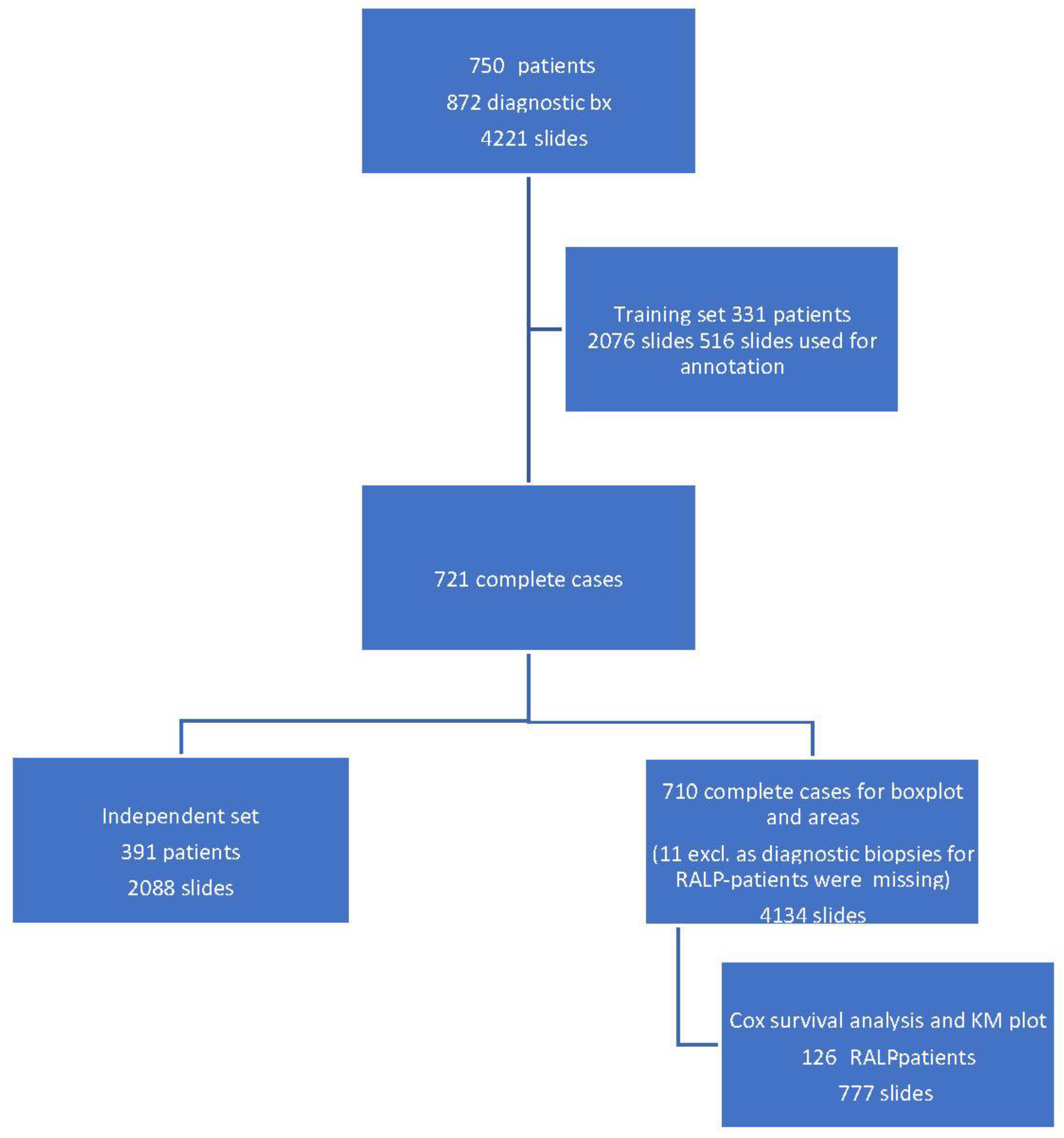

A total of 872 biopsy sessions resulted in 4221 hematoxylin-and-eosin-stained (H&E) slides that were scanned for AI development (Figure 1).

Clinical and pathological data were collected for all the patients. For RALP patients, information about extraprostatic extension (EPE), seminal vesicle invasion (SVI), node status (NS), and pathologic stage (pT) was collected from electronic surgical pathology reports. Adverse pathologic findings in RALP specimens were defined as EPE, SVI, or positive NS. All post-operative prostate-specific antigen (PSA) values during the follow-up were collected for survival analysis. Detection of two consecutive post-operative PSA values equal to or higher than 0.2 ng/mL was considered a BCR, with the date of the first of these PSA values as the date of the event. The follow-up of the RALP cohort ended in May 2020. Of the biopsies, 79% were diagnosed by nine pathologists who sign out prostate biopsies at least on a weekly basis, and the remaining 21% were diagnosed by six pathologists with less uropathology experience. All 15 pathologists were considered equal in the analysis.
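The BCR definition above can be illustrated with a minimal Python sketch. It is not the code used in the study; the function name `find_bcr_date` and the input format (a list of date and PSA value pairs) are assumptions made for illustration only.

```python
from datetime import date
from typing import Optional, Sequence, Tuple

BCR_THRESHOLD_NG_ML = 0.2  # threshold stated in the text


def find_bcr_date(
    psa_series: Sequence[Tuple[date, float]],
    threshold: float = BCR_THRESHOLD_NG_ML,
) -> Optional[date]:
    """Return the date of the first of two consecutive PSA values
    at or above the threshold, or None if no such pair exists.

    The (date, value) input format is a hypothetical choice for this sketch.
    """
    # Sort by measurement date so "consecutive" means adjacent in time.
    ordered = sorted(psa_series, key=lambda measurement: measurement[0])
    for (first_date, first_value), (_, second_value) in zip(ordered, ordered[1:]):
        if first_value >= threshold and second_value >= threshold:
            return first_date
    return None


# Example with made-up values: a single elevated value is not an event.
example = [
    (date(2018, 1, 1), 0.05),
    (date(2018, 7, 1), 0.25),
    (date(2019, 1, 1), 0.10),
    (date(2019, 7, 1), 0.21),
    (date(2020, 1, 1), 0.30),
]
bcr_date = find_bcr_date(example)
```

In this made-up series, the isolated value of 0.25 ng/mL does not count as an event, because the following measurement falls below the threshold.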

The H&E biopsy slides were scanned using a Pannoramic 250 Flash III scanner (3DHistech, Budapest, Hungary) with a 20× objective (numerical aperture 0.8) at 0.26 µm/pixel resolution, resulting in an optical magnification of 41×. The digital slide images were compressed to 50% in JPEG format and uploaded to Aiforia software (Aiforia Technologies Plc., Helsinki, Finland) for annotation, development, and testing of the AI algorithm.

For the AI algorithm, two independent convolutional neural networks (CNNs) were trained for multi-class semantic segmentation of tissue (CNN-T) and Gleason grades (CNN-GG). The training was fully supervised, and the training data were annotated as strong pixel-level multi-class segments for both tissue and Gleason grades in the Aiforia software by two expert uropathologists (K.S. and T.M.) in consensus. All training data were digitally augmented with geometric (i.e., rotation and scale) and photometric (i.e., contrast and noise) transformations to avoid overfitting and to ensure generalization of the CNNs. CNN-T and CNN-GG included 12 convolutional layers each and were trained using a 90 µm × 90 µm and a 100 µm × 100 µm field of view, respectively. Both networks were trained with a multi-class logistic regression loss function. The final AI algorithm inference pipeline combined both CNN-T and CNN-GG in a nested design where Gleason grades were detected within the detected tissue regions.
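The nested design can be pictured as masking one segmentation with the other. The following Python sketch is only an illustration under stated assumptions: the actual pipeline runs inside the Aiforia software and is not described at code level, so the function name `nest_grades_in_tissue`, the boolean tissue mask, the integer grade labels, and the `BACKGROUND` label are all hypothetical.

```python
from typing import List

BACKGROUND = 0  # hypothetical label meaning "no Gleason pattern assigned"


def nest_grades_in_tissue(
    tissue_mask: List[List[bool]],
    grade_map: List[List[int]],
) -> List[List[int]]:
    """Keep a Gleason grade label only where the tissue network
    (CNN-T in the text) marked the pixel as relevant tissue."""
    same_rows = len(tissue_mask) == len(grade_map)
    same_cols = all(len(t) == len(g) for t, g in zip(tissue_mask, grade_map))
    if not (same_rows and same_cols):
        raise ValueError("tissue_mask and grade_map must have the same shape")
    return [
        [grade if is_tissue else BACKGROUND for is_tissue, grade in zip(t_row, g_row)]
        for t_row, g_row in zip(tissue_mask, grade_map)
    ]


# Toy 2 x 2 example: the grade-4 pixel lies outside detected tissue and is dropped.
tissue = [[True, False], [True, True]]
grades = [[3, 4], [0, 5]]
nested = nest_grades_in_tissue(tissue, grades)
```

The point of such a design is that grade predictions outside the detected tissue regions cannot contribute to the final result.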

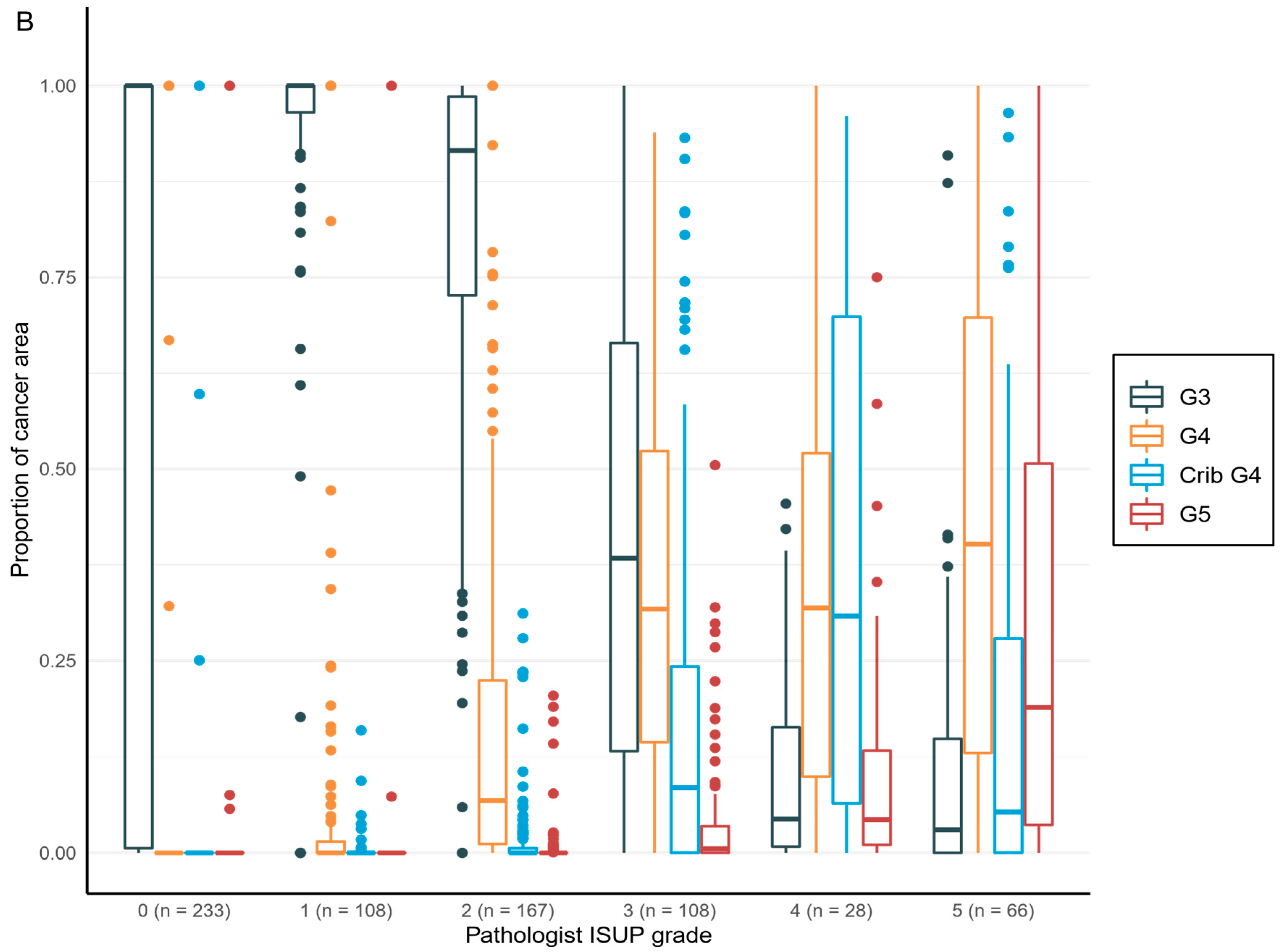

To enable comparison between the AI model and the original clinical pathological diagnoses, each determined by 1 of the 15 pathologists, the proportions of each growth pattern, i.e., Gleason grade 3, grade 4, grade 4 with cribriform pattern, and grade 5, in each set of digital diagnostic biopsies were calculated to generate a Gleason score. The GS is based on the reporting criteria for prostate biopsies detailed in the International Society of Urological Pathology (ISUP) Consensus Conference in 2014, which addressed the most common and the highest Gleason grade patterns [16]. The result was then converted to an ISUP grade group (Table 1) to enable cross-tabulation and comparison between AI and pathologists for GG in a six-tier grouping (benign and GGs 1–5).
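A minimal Python sketch of the conversion from growth-pattern areas to a GS and an ISUP grade group is given below. The grade group mapping follows the standard ISUP 2014 definition (GS ≤ 6, 3 + 4, 4 + 3, 8, and 9–10). The rule for picking the primary and secondary patterns is a simplified assumption (most common pattern plus highest pattern, no minimum-percentage cut-offs, ties resolved toward the higher pattern, cribriform grade 4 counted as pattern 4) and may differ from the rule used in the study; the function names are hypothetical.

```python
from typing import Dict, Optional, Tuple

GleasonScore = Tuple[int, int]  # (primary pattern, secondary pattern)


def gleason_score(pattern_areas: Dict[int, float]) -> Optional[GleasonScore]:
    """Derive a simplified biopsy GS from areas per Gleason pattern (3, 4, 5).

    Returns None when no pattern has a positive area (benign).
    """
    present = {p: a for p, a in pattern_areas.items() if a > 0}
    if not present:
        return None
    # Most common pattern; ties resolved toward the higher pattern (assumption).
    primary = max(present, key=lambda p: (present[p], p))
    highest = max(present)
    if highest != primary:
        secondary = highest
    else:
        # Primary is already the highest pattern: use the next most common one.
        others = {p: a for p, a in present.items() if p != primary}
        secondary = max(others, key=lambda p: (others[p], p)) if others else primary
    return primary, secondary


def grade_group(score: Optional[GleasonScore]) -> int:
    """Map a GS to the six-tier grouping: 0 = benign, 1-5 = ISUP grade groups."""
    if score is None:
        return 0
    primary, secondary = score
    total = primary + secondary
    if total <= 6:
        return 1
    if total == 7:
        return 2 if primary == 3 else 3
    if total == 8:
        return 4
    return 5


# Made-up areas (arbitrary units): mostly pattern 3 with a smaller pattern 4 focus.
example_group = grade_group(gleason_score({3: 10.0, 4: 2.0, 5: 0.0}))
```

With these made-up areas the score is 3 + 4, which corresponds to GG2; swapping the two areas gives 4 + 3 and GG3.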

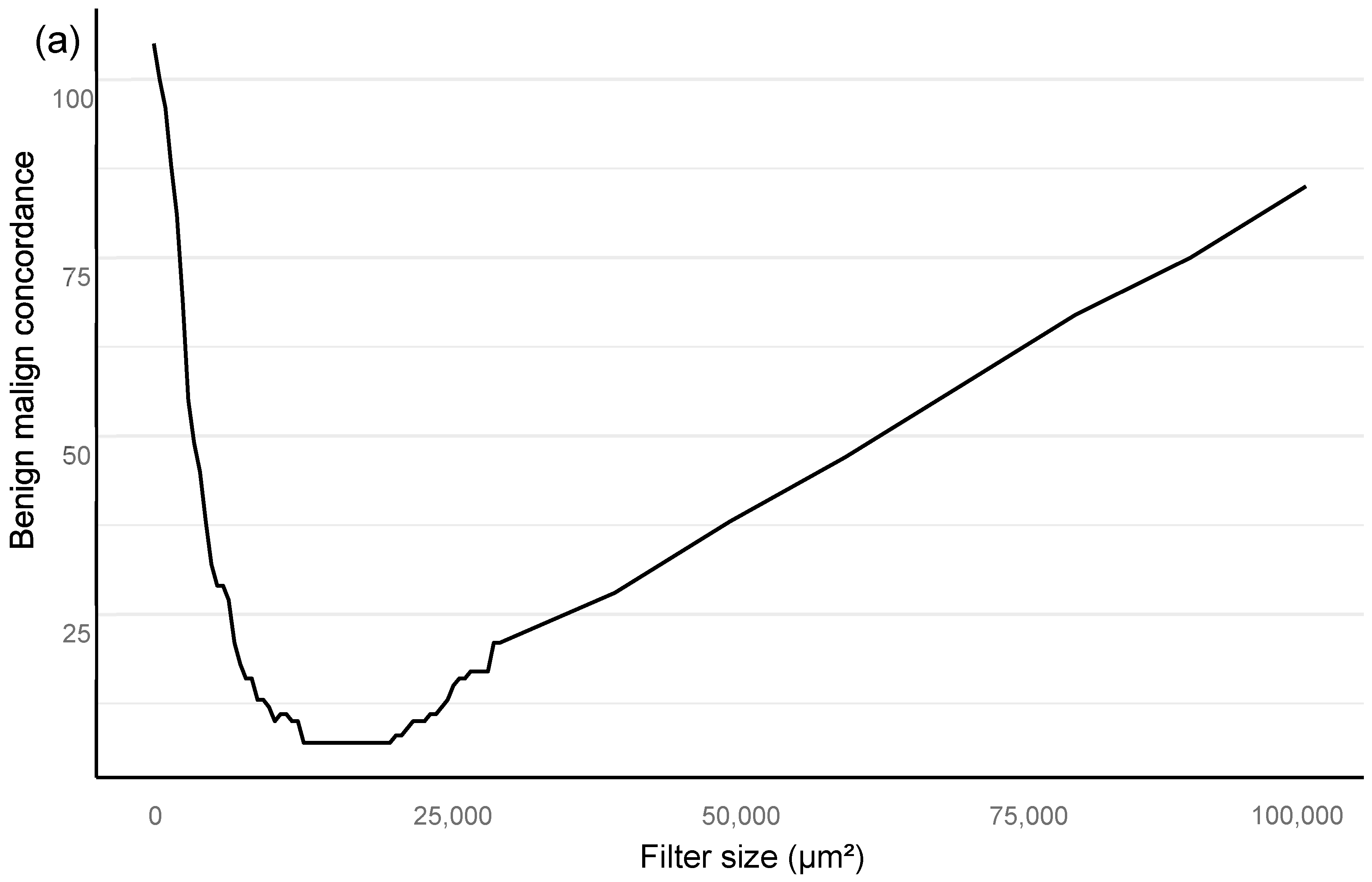

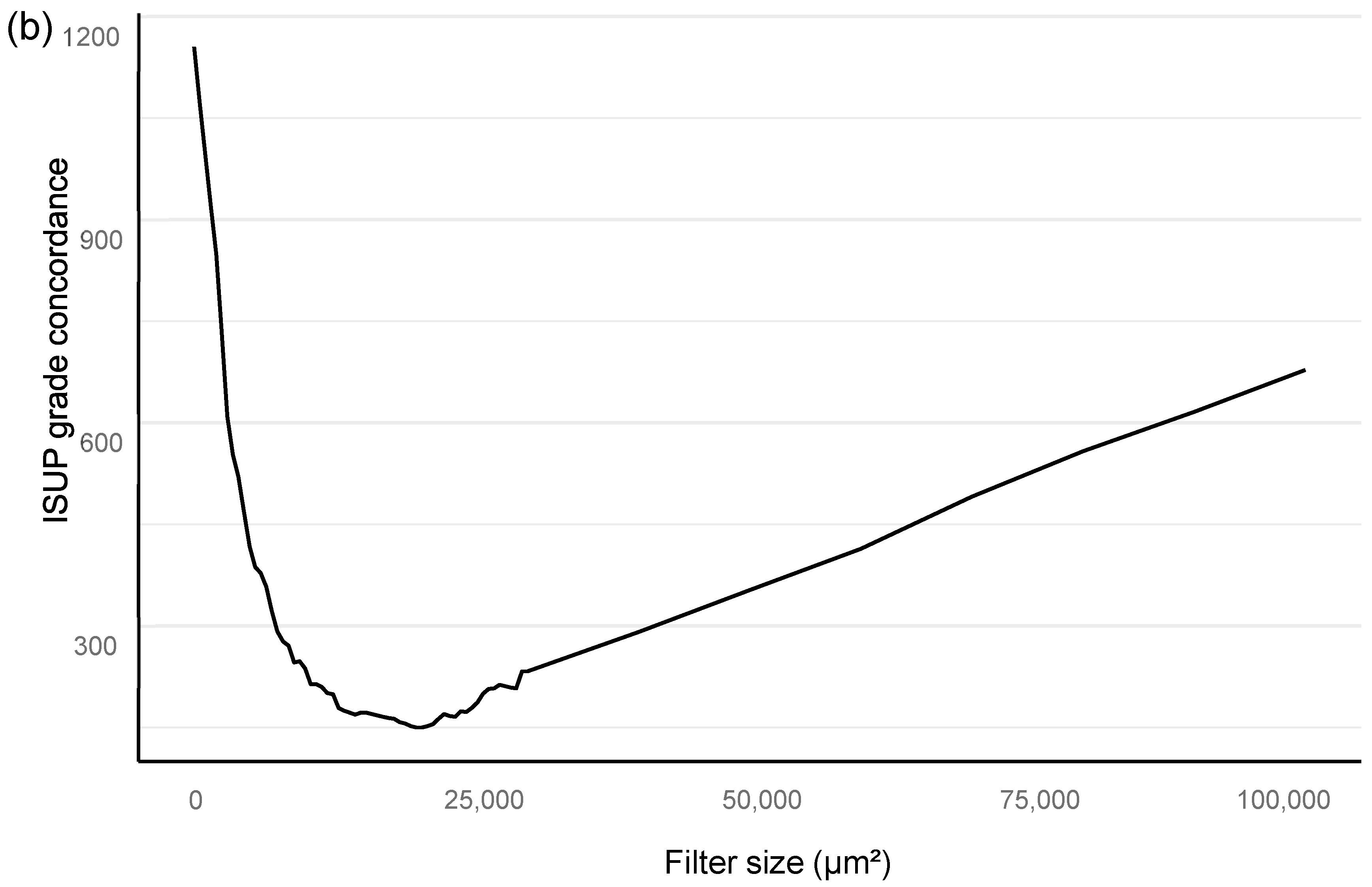

Training of the algorithm was performed in iterations to optimize the performance of the AI algorithm. The training included primary annotations, correction of annotations, and addition of new sections from independent material that were selected based upon outliers in the AI vs. pathologist confusion matrix. An area filter curve that was based upon this confusion matrix was then constructed to find the optimal minimum for the filter area, which corresponded with benign vs. malignant differentiation and also GG stratification (Figure 2; see also Section 2.1).
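The idea of an area filter curve can be sketched as a sweep over candidate minimum areas. The Python example below is an illustration only and makes several assumptions that are not stated in the text: that the filter discards detected cancer regions smaller than the minimum area, that a case is called malignant if any region survives the filter, and that the curve is scored by simple accuracy against the pathologist's benign vs. malignant call. All names and numbers are hypothetical.

```python
from typing import List, Sequence, Tuple

# (areas of detected cancer regions in square microns, pathologist says malignant)
Case = Tuple[List[float], bool]


def is_malignant(region_areas: Sequence[float], min_area: float) -> bool:
    """A case is called malignant if any detected region passes the area filter."""
    return any(area >= min_area for area in region_areas)


def accuracy_at(cases: Sequence[Case], min_area: float) -> float:
    """Fraction of cases where the filtered AI call matches the pathologist."""
    correct = sum(is_malignant(areas, min_area) == truth for areas, truth in cases)
    return correct / len(cases)


def filter_curve(cases: Sequence[Case], candidates: Sequence[float]) -> List[Tuple[float, float]]:
    """Accuracy for every candidate minimum area (the 'filter curve')."""
    return [(min_area, accuracy_at(cases, min_area)) for min_area in candidates]


def best_filter(cases: Sequence[Case], candidates: Sequence[float]) -> float:
    """Candidate with the highest accuracy; the first one wins on ties."""
    return max(filter_curve(cases, candidates), key=lambda point: point[1])[0]


# Made-up cases: small spurious detections in benign cases, larger foci in cancers.
cases = [
    ([20000.0, 500.0], True),
    ([3000.0], False),
    ([16000.0], True),
    ([], False),
]
candidates = [0.0, 1000.0, 5000.0, 15000.0, 50000.0]
chosen = best_filter(cases, candidates)
```

A filter that is too small lets spurious detections through, while one that is too large removes true cancer foci, which is why the curve has an optimum in between.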

In the algorithm training phase, the clinically applicable filter size was determined iteratively based on the judgment of expert urological pathologists.

The filter curve was configured to be compliant with a clinical workflow. The AI algorithm training material consisted of a subset of 516 slides selected from 2076 slides that came from 331 patients. A total of 3606 separate glandular areas and stromal background were annotated on the training set slides, which resulted in a total area of 94 mm² of completely annotated prostate tissue (Table 3).

The independent control set comprised 2088 slides taken from 391 patients.

Figure 1 shows the full study flowchart with patient stratification. The median number of slides in a complete preoperative slide set per patient (total number of patients 721) was 6, and most of the slides represented systematic biopsies (2 cores per slide). The average area of tissue was 25 mm² per slide, consisting of two needle biopsies and two levels each, which then equals approximately 6.3 mm² per individual needle core level on the slide. Therefore, the area of the annotated training data corresponded to a total area of four slides or 15 needle cores.
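The area comparison above can be checked with a short calculation that uses only the figures given in the text (25 mm² of tissue per slide, two cores with two levels each per slide, and 94 mm² of annotated tissue). Note that the figure of 15 is obtained by dividing by the area of one needle core level:

```latex
\begin{align*}
\text{area per core level} &= \frac{25\ \text{mm}^2}{2 \times 2} = 6.25\ \text{mm}^2 \approx 6.3\ \text{mm}^2,\\
\text{slide equivalents} &= \frac{94\ \text{mm}^2}{25\ \text{mm}^2} \approx 3.8 \approx 4,\\
\text{core-level equivalents} &= \frac{94\ \text{mm}^2}{6.3\ \text{mm}^2} \approx 14.9 \approx 15.
\end{align*}
```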

The RALP-specimen-related-adverse-outcome data and the follow-up PSA data of the RALP subcohort are completely independent from AI training and the associated data. Thus, the survival analysis was executed blinded to the annotating pathologists, and post-operative data were not used in the generation of the AI algorithm. The data were accessed and collected between October 2016 and May 2020 from the electronic health records at the HUS Helsinki University Hospital. The original data were accessed by using the patients’ social security numbers, but in the study database, all data were pseudonymized according to the study approval by the Helsinki University Hospital (HUS) (latest update 16 November 2019, diary number §166 HUS/44/2019). As this was a retrospective registry study, no informed consent was required as stipulated by Finnish national and European Union legislation. The study was conducted according to the Declaration of Helsinki.

2.1. Statistical Analyses

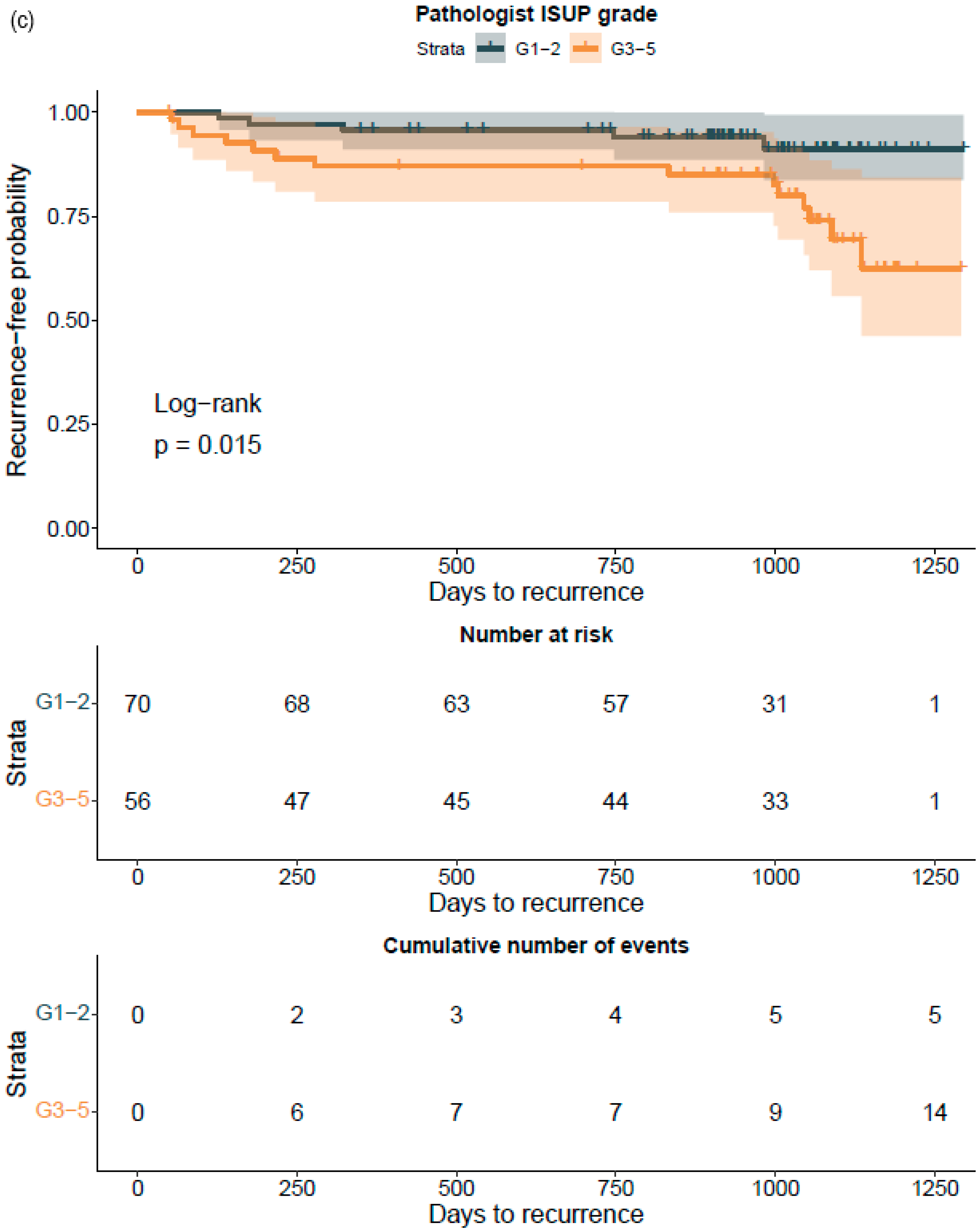

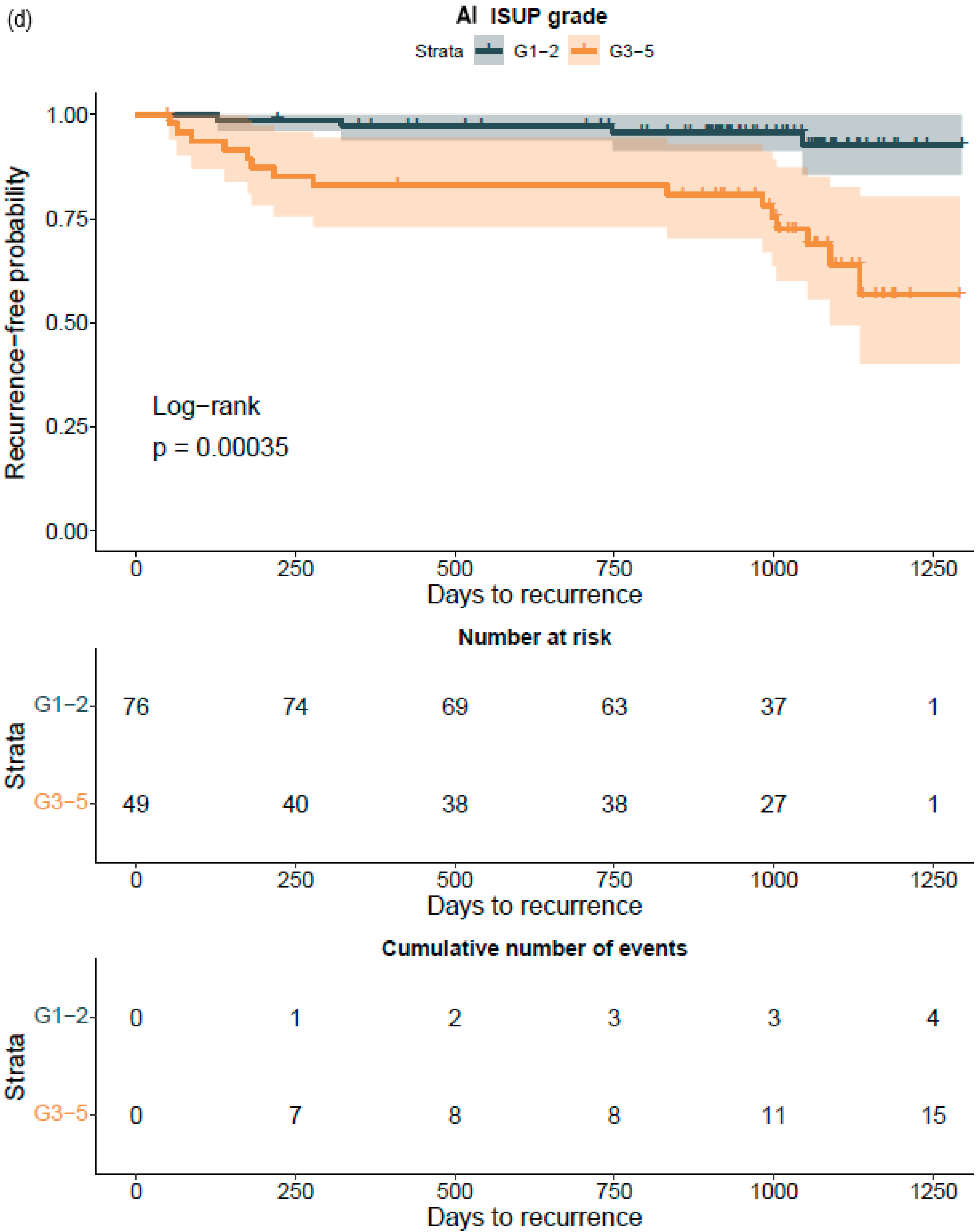

All statistical analyses were performed for a filter size of 15,000 square microns, which showed the best performance in the area filter analysis as a determinant of class stratification (Figure 2). ROC AUC analysis was performed and predictive accuracies were determined for the algorithm. Cohen’s weighted kappa coefficient was used to measure interrater agreement between the AI model and each of the original classifications. Generalized linear models were applied to determine the relationship between the clinical GG and the AI-determined tumor area. Multivariable logistic regression models were used to determine the relationship between biopsy AI results and adverse outcomes on the RALP specimen (EPE, SVI, or positive NS). Cox proportional hazard regression models and Kaplan–Meier survival curves were applied to assess the predictive value of the AI-based GG and the original GG on BCR during the post-operative follow-up. An alpha level of 0.05 was used for statistical significance. All statistical analyses were made using R Statistical Software version 4.1.0 [28] and the caret and survival packages [29,30].
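As an illustration of the agreement measure, the following self-contained Python sketch computes Cohen's weighted kappa for two raters on an ordinal scale such as the six-tier grouping (0 = benign, 1 to 5 = GG). It is not the code used in the study, which relied on R. The weighting scheme is not stated in the text, so linear weights are assumed by default (`power=1`; `power=2` gives quadratic weights), and the function name is hypothetical.

```python
from typing import Sequence


def weighted_kappa(
    rater_a: Sequence[int],
    rater_b: Sequence[int],
    n_classes: int,
    power: int = 1,
) -> float:
    """Cohen's weighted kappa with disagreement weights |i - j| ** power.

    Labels must be integers in the range 0 .. n_classes - 1.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters need the same, non-zero number of ratings")
    n = len(rater_a)

    # Observed confusion matrix (counts).
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1

    row_totals = [sum(row) for row in observed]
    col_totals = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # The usual normalization of the weights cancels in the ratio below.
            weight = abs(i - j) ** power
            weighted_observed += weight * observed[i][j]
            # Expected count under independence of the two raters.
            weighted_expected += weight * row_totals[i] * col_totals[j] / n

    if weighted_expected == 0:
        raise ValueError("kappa is undefined when all ratings fall in one class")
    return 1.0 - weighted_observed / weighted_expected


# Made-up ratings: identical ratings give kappa = 1, chance-level ratings give 0.
perfect = weighted_kappa([0, 1, 2, 5], [0, 1, 2, 5], n_classes=6)
chance = weighted_kappa([0, 0, 1, 1], [0, 1, 0, 1], n_classes=2)
```

Weighting matters for ordinal grades because a disagreement between GG2 and GG3 is penalized less than one between GG1 and GG5.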

4. Discussion

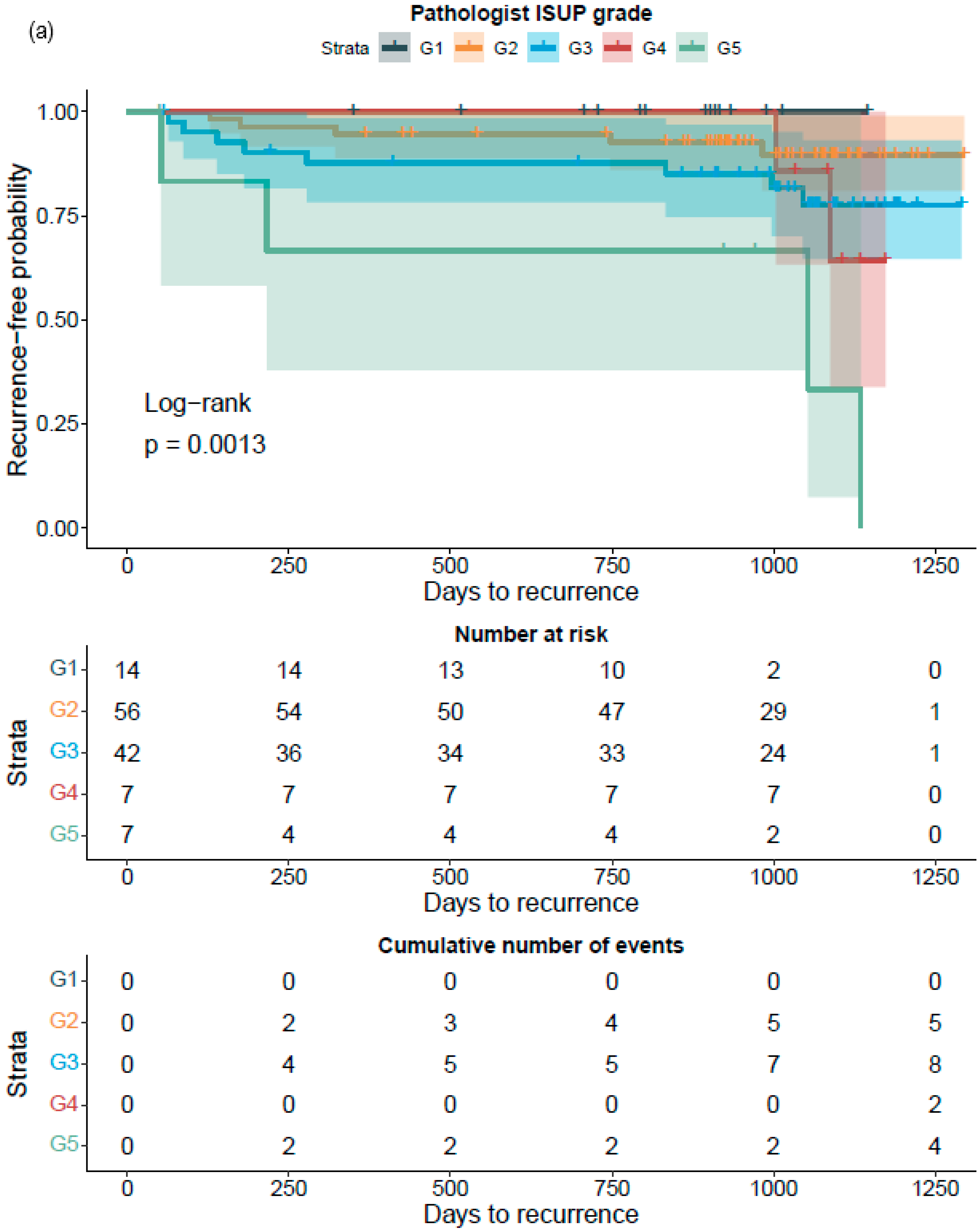

To the best of our knowledge, this is the first report that shows the prognostic value of a CNN-model-based grade group assignment in diagnostic prostate biopsies. Not only did the model accurately classify the diagnostic biopsies, it also accurately categorized patients into different risk groups for BCR after surgical treatment. Although our RALP cohort is limited in size, and only a limited number of biochemical relapses were observed due to short follow-up times, the strength of the AI model is shown in its predictive accuracy, which is at least on a par with that of the pathologists.

In PCa diagnostics, the correct identification of benign tissue is generally not an issue. Furthermore, this task can be performed by an AI-based approach, as shown by Campanella et al., who obtained slides from multiple institutions to develop an algorithm for cancer detection [27]. However, their algorithm remains a binary cancer detection algorithm, and its ability to predict the risk of disease recurrence has, to the best of our knowledge, not been reported. The current clinical challenges instead relate to consistently accurate grading of prostate biopsy samples to support clinical decision making.

Compared to other similar, although single-slide-based, studies, our more clinically oriented study is unique, as it used a neural network algorithm that reached the performance of pathologists at the patient level in the whole biopsy session [12,13,14]. The practical clinical setting, with 15 independent pathologists with varying experience in prostate diagnostics who signed out the biopsies, did not compromise our results. The algorithm was trained by two uropathologists, who annotated strong image labels, which resulted in a small training set compared with the independent testing group. The strong image labeling seemed to improve the results for differentiating GG1–2 versus GG3–5, compared with a previous report that used pure Gleason 3 + 3, 4 + 4, and 5 + 5 slides for training its algorithm [13]. Pure tumor differentiation that represents just one GG is seldom observed in clinical practice, as it is a rare phenomenon in biology.

The glandular architecture-based Gleason grading has been the most significant prognostic and predictive factor since its implementation in the 1960s and its subsequent gradual adoption [31,32]. The growth pattern assessment is forced into scale-wise grades, which undoubtedly reduces the meaningful information inherent to gland formation and cellular morphology. Modification of the Gleason grading system over the years has led to a regression toward the mean, i.e., clumping of GSs into intermediate scores of 7 and 8. The newly adopted grade grouping system in practice reproduces the same problem [16]. There have been efforts to quantify and weigh the significance of growth patterns by means of visual quantification of different growth patterns [32] in addition to computer-vision-based algorithms [14]. In our study, the diagnostic GG determined by general pathologists tended to underestimate the presence of Gleason grade 4 or higher patterns, as the AI algorithm trained by expert pathologists detected smaller, but clinically significant, foci of high-grade cancer. In the future, more consistent recognition of growth patterns, their proportions, and their relationships aided by computer vision and AI models will provide finer stratification within the rigid GG categories and aid in personalized treatment approaches. AI-based grading creates consistency and reduces the interobserver variation that is characteristic of the categorical grouping performed by pathologists. A trained algorithm would not perform differently from day to day, as a human observer does. However, at the institutional level, there might be issues related to differences in the adopted interpretation of grade patterns. This creates a challenge for the generalization of a new grading algorithm. This problem may, however, be overcome by training the algorithm in-house with locally selected cases. Our algorithm also has real potential to help those pathologists who are not genitourinary oriented or have limited experience in prostate pathology.

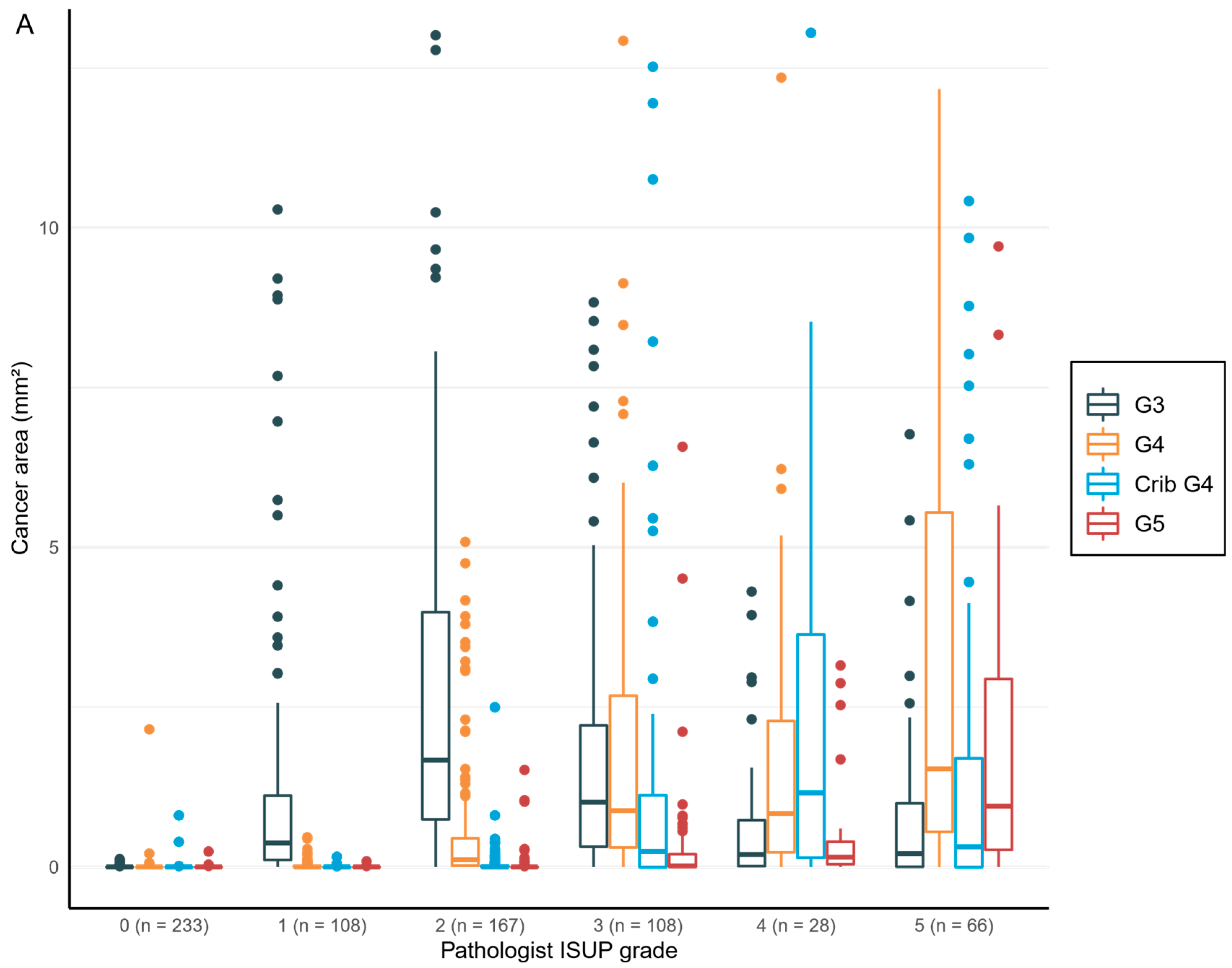

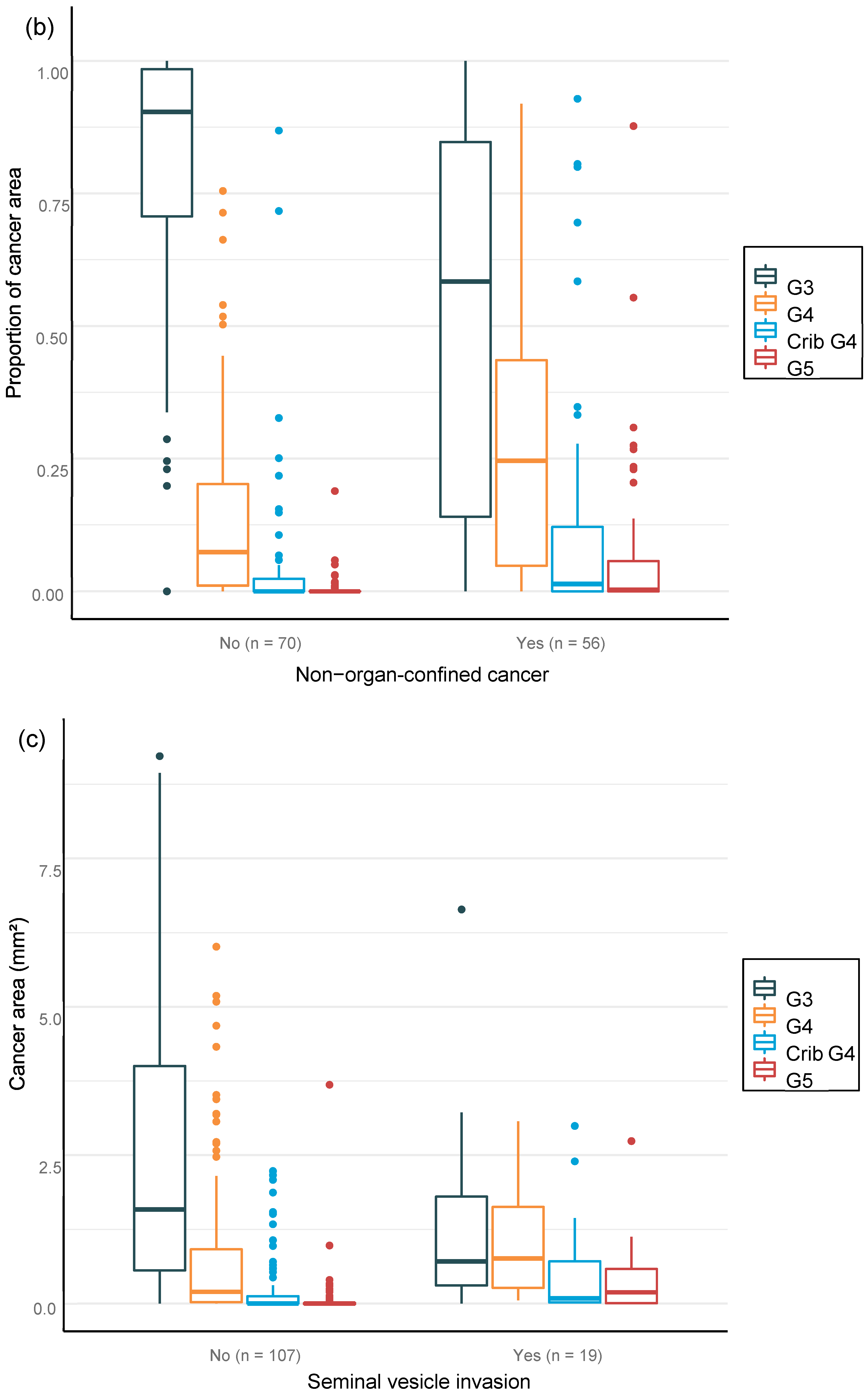

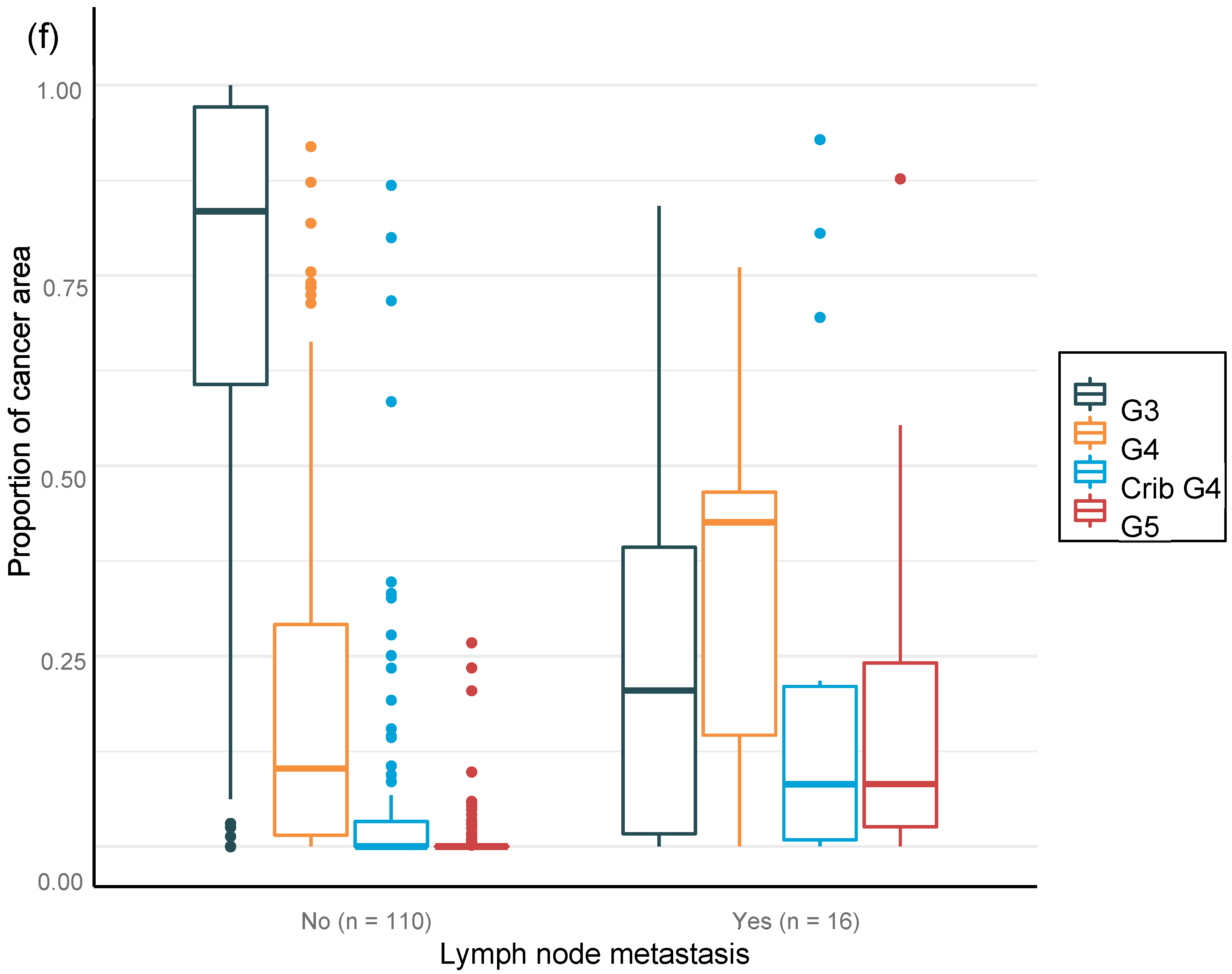

We were able to confirm that the AI-based area of cribriform G4 and G5 in prostate biopsies correlated with subsequent RALP adverse outcomes such as seminal vesicle invasion and positive lymph node status. The area of G5 in the biopsies significantly correlated with non-organ-confined disease. We observed that the proportion of Gleason grade pattern 5 is a strong predictor of BCR. This is in line with previous publications that show that the presence of any Gleason grade pattern 5 predicts an elevated probability of a relapse after primary treatment [26,33]. This is the first study to focus on patient-level outcome after prostatectomy based upon AI grading of the full series of preoperative diagnostic prostate biopsies per patient. Our results confirm, at a minimum, a predictive performance comparable to that of a human observer and suggest refined predictive accuracy that should subsequently be confirmed after a longer follow-up period. Based on mathematical modeling, we chose to work with a filter of 15,000 square microns to reproduce a comprehensive clinical workflow. The algorithm did not miss any cancer areas, although the use of the filter as an aid for fluent clinical workflow produced five false negative findings, of which three represented GG1, one represented GG2, and one GG3. Only two patients with benign biopsies were falsely classified as cancerous, and both were assigned GG1. There is a definite need to develop more advanced AI models to detect small Gleason grade pattern 3 and 4 areas accurately if the sole aim is to mimic human ground truth with known inherent variability.

A weakness of the study is the lack of an external-site validation cohort. Similar, well-curated cohorts with matched preoperative biopsies, surgical specimens, and follow-up for BCR are scarce. Moreover, there are differences in the biopsy techniques and tissue preparation methods between institutions. It is known that the performance of an algorithm usually does not reproduce as accurately in external validation cohorts as in the original cohort. All these limitations underscore the need for future prospective, preferably randomized, clinical trials to show that computer-vision-based cancer detection and grading performs similarly to the current gold standard of the expert human eye [15,34].

Ultimately, histology may include biologically and clinically relevant signals currently overlooked by the human eye, which may improve our ability to predict cancer behavior [35]. The AI-based methods may help to stratify patients with different life expectancies within a given GG. The future of clinical decision making is likely shared decision making conjoined with supporting multidisciplinary prognostic models. For example, it has been shown in brain tumors that an AI algorithm complemented with molecular characteristics provides better predictive information than the information about the morphological differentiation or mutational status alone [36]. DL models have also been used to explore molecular determinants of treatment response in PCa [37]. It remains to be shown whether the biological signals and drivers of PCa progression and treatment resistance can be predicted in the tissue phenotype at a level that is clinically applicable [38,39].