1. Introduction

Colonoscopy is considered the standard method for the diagnosis and surveillance of colon polyps. Polypectomy following colonoscopy is the most effective means of colorectal cancer (CRC) prevention [1]. Early colonoscopic detection and removal of polyps reduces the incidence of CRC by 76% [2]. The most common polyps are hyperplastic and adenomatous. According to the American Society for Gastrointestinal Endoscopy, the “resect and discard” and “diagnose and leave” strategies propose that hyperplastic polyps need not be removed. Since hyperplastic polyps are mostly diminutive and non-malignant, these strategies save a great deal of resection time and pathologic analysis cost [3,4]. Traditional white light (WL) colonoscopy yields an adenoma miss rate of 26%, especially for adenomas <5 mm in size [5,6]. Adenomatous polyps are the primary lesions that evolve into CRC, and an adenoma missed at an initial colonoscopy can develop into an interval cancer. The identification and resection of adenomatous polyps is therefore essential to prevent CRC [7]. Innovative methods have been introduced to decrease polyp and adenoma miss rates [8,9].

As the field of colonoscopy technology thrives, new diagnostic modalities have been introduced to improve polyp detection. Image-enhanced endoscopy (IEE) is one of the state-of-the-art tools. Digital IEE includes Olympus narrow-band imaging (NBI), PENTAX i-scan, and FUJI linked-color imaging (LCI), which improve diagnostic ability by enhancing the microstructure and microvasculature of the polyp mucosa. Olympus NBI filters specific wavelengths to enhance the mucosal and vascular patterns. PENTAX i-scan is a real-time, software-driven post-processing modification of contrast, hue and sharpness that enhances the polyp mucosa. NBI remains the most widely adopted method at present [10].

NBI incorporated Olympus colonoscopy has a superb ability to detect and identify hyperplastic and adenomatous polyps. This one-button-activated electrical system is an innovative image technology and aids endoscopists to better detect and characterize polyps [

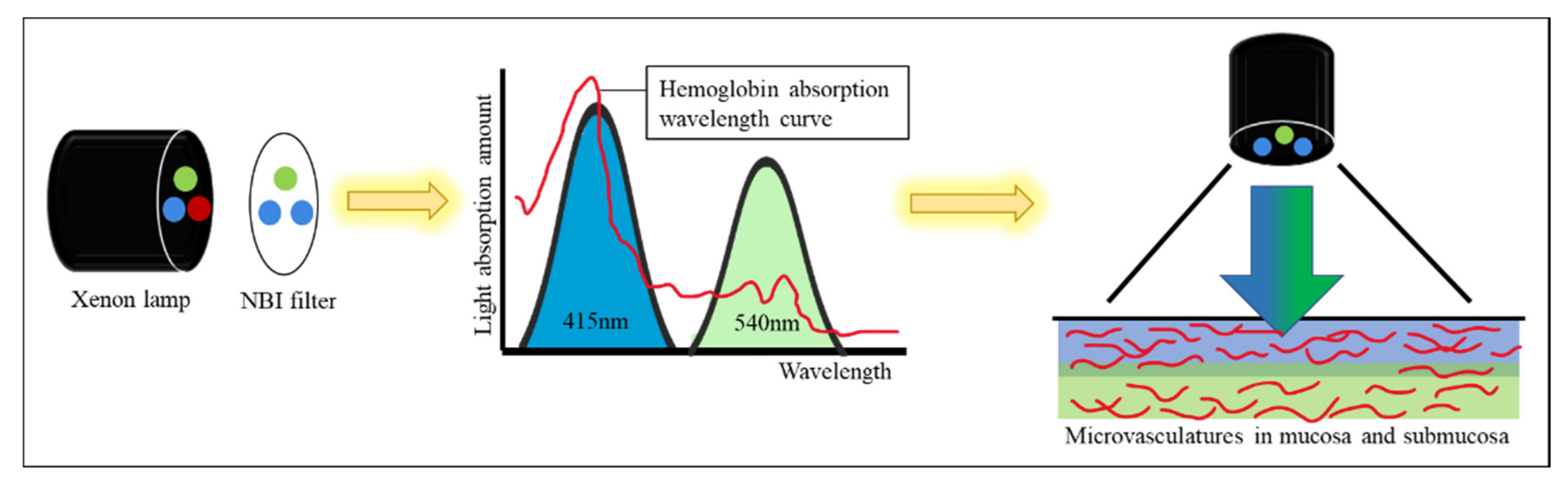

11]. NBI technology allows only blue and green lights to pass through a filter placed in the colonoscope light source. The NBI wavelength of the trichromatic optical filters is between 415 and 540 nm with a bandwidth of 30 nm, which has a shallow penetration depth [

12]. Two peaks of tissue hemoglobin are absorbed with the wavelength at 415 nm (blue light) and 540 nm (green light) [

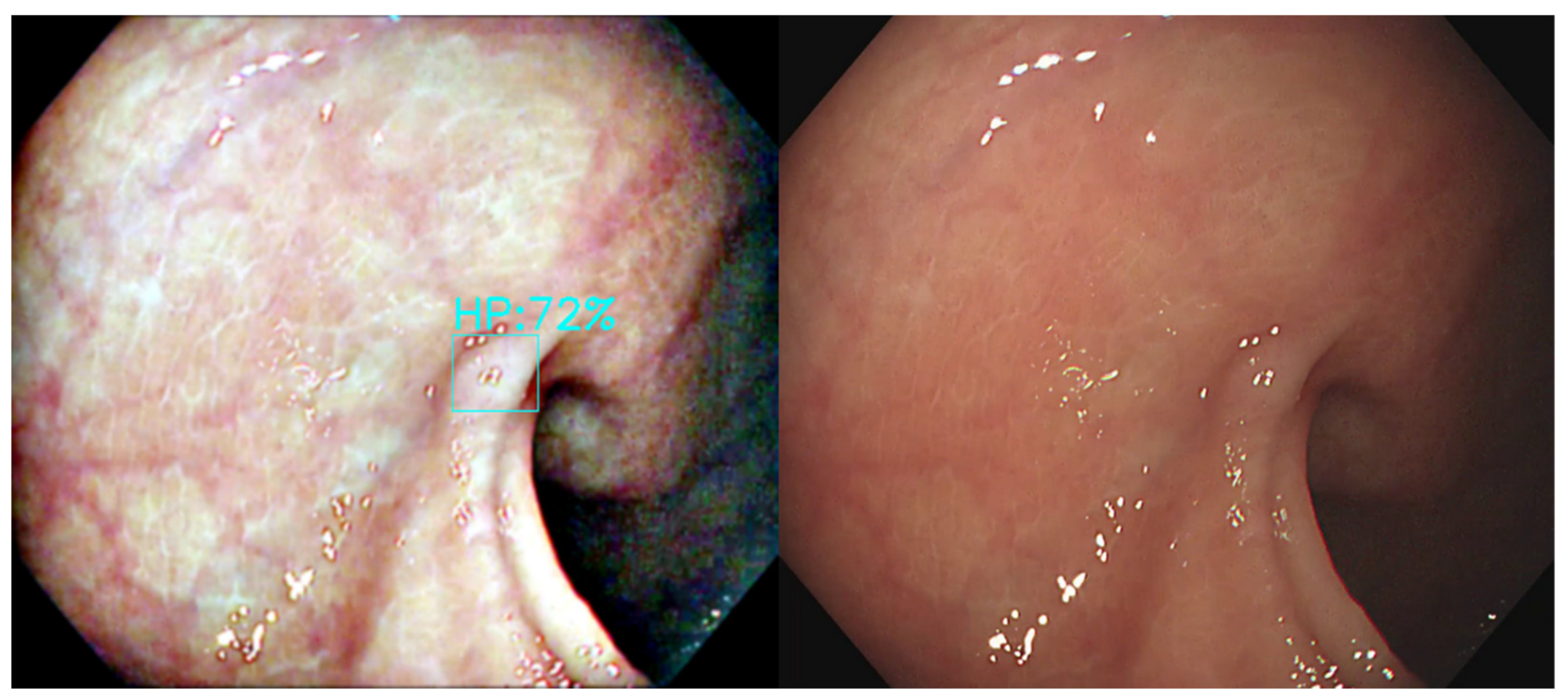

13]. The narrowed spectrum light highlights the mucosa surface microvasculature pattern to differentiate non-neoplastic (hyperplastic) from neoplastic (adenoma) polyp [

14,

15] (

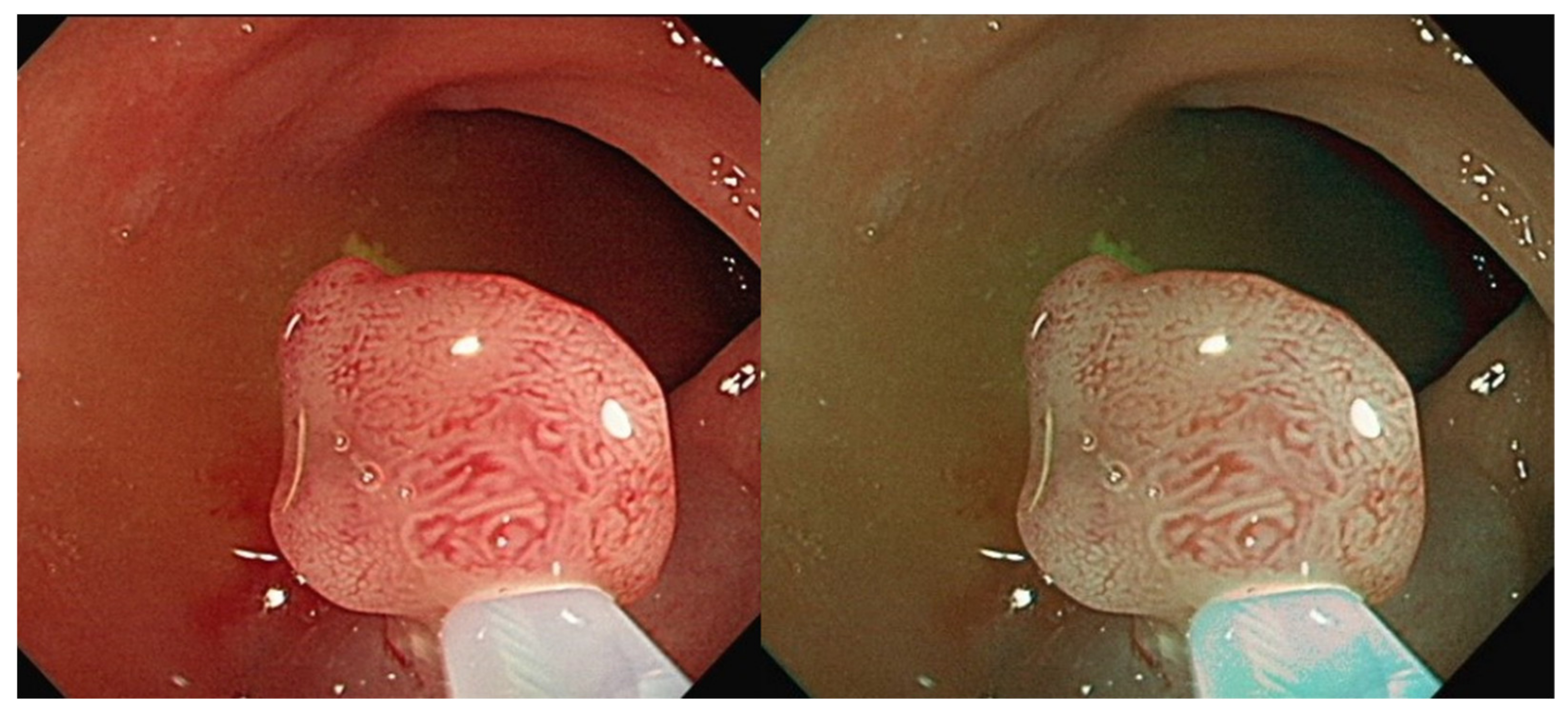

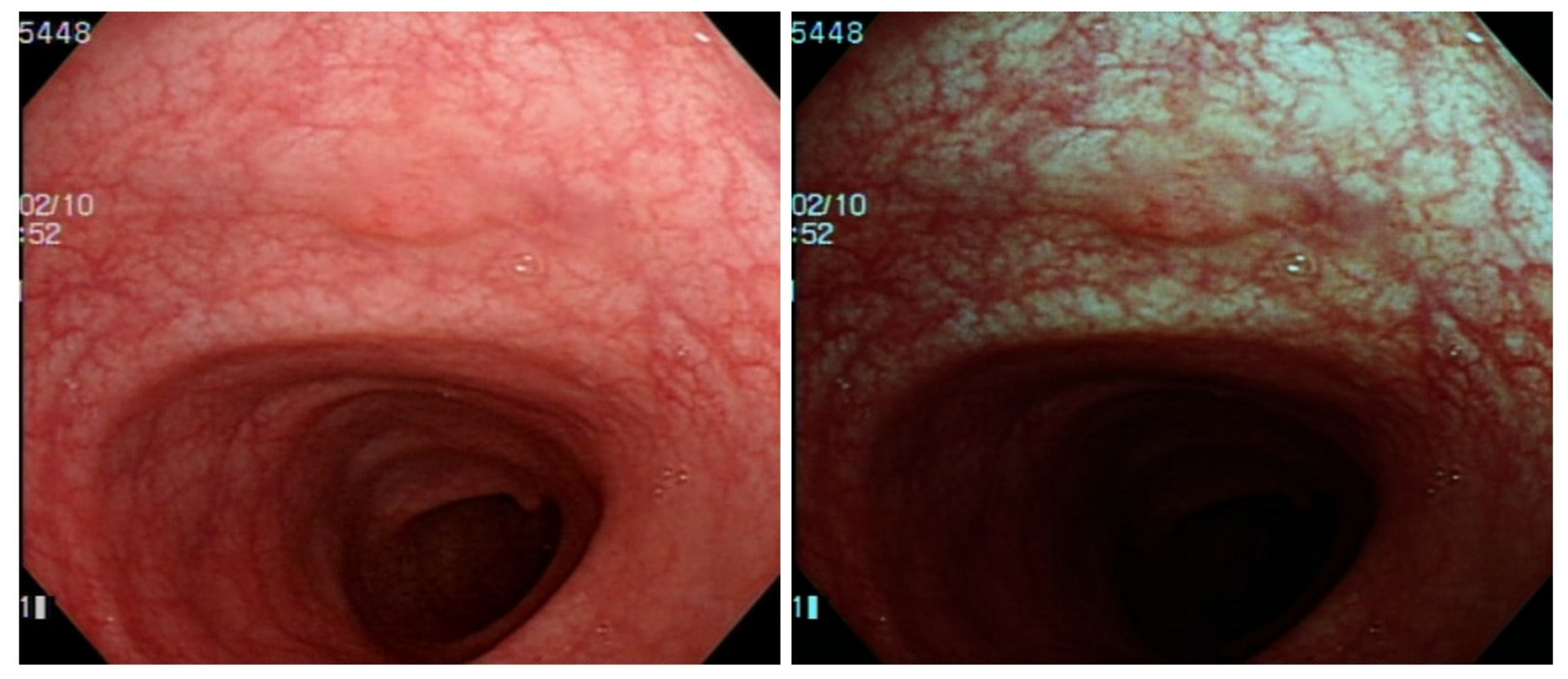

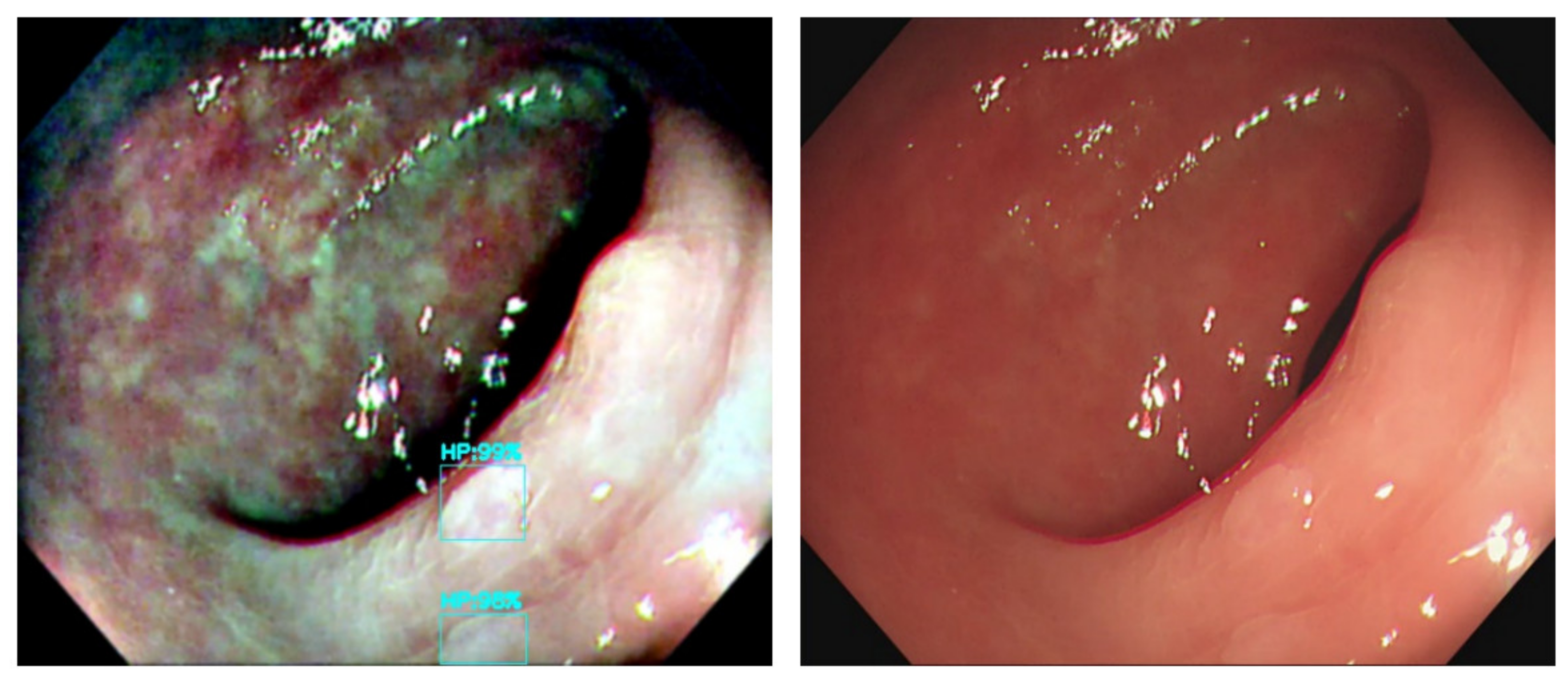

Figure 1). However, the bowel content of fecal material, debris or filthy water appears bright red color in contrast to the deep dark brown normal mucosa in the NBI environment, which is a visual irritant. The full-time activated NBI system might trigger visual fatigue and discomfort owing to the high color contrast image. Studies indicate that NBI increases polyp and adenoma detection rate with the full-time activated system [

12,

16,

17,

18]. In the real world, endoscopists only activate the NBI system in the circumstances of analyzing the type and margin of the polyp. Switching between WL and NBI back and forth during the withdrawal phase in a colonoscopy is time consuming and not cost-effective. As a consequence, endoscopists leave the system off in most of the colonoscopy observation period. The polyp and adenoma detection rates are not increased with the NBI system in daily clinical practice. In the era of artificial intelligence, we can overcome this issue with a tailor-made image enhancement CNN model to boost the polyp detection and classification without affecting an endoscopist’s routine performance.

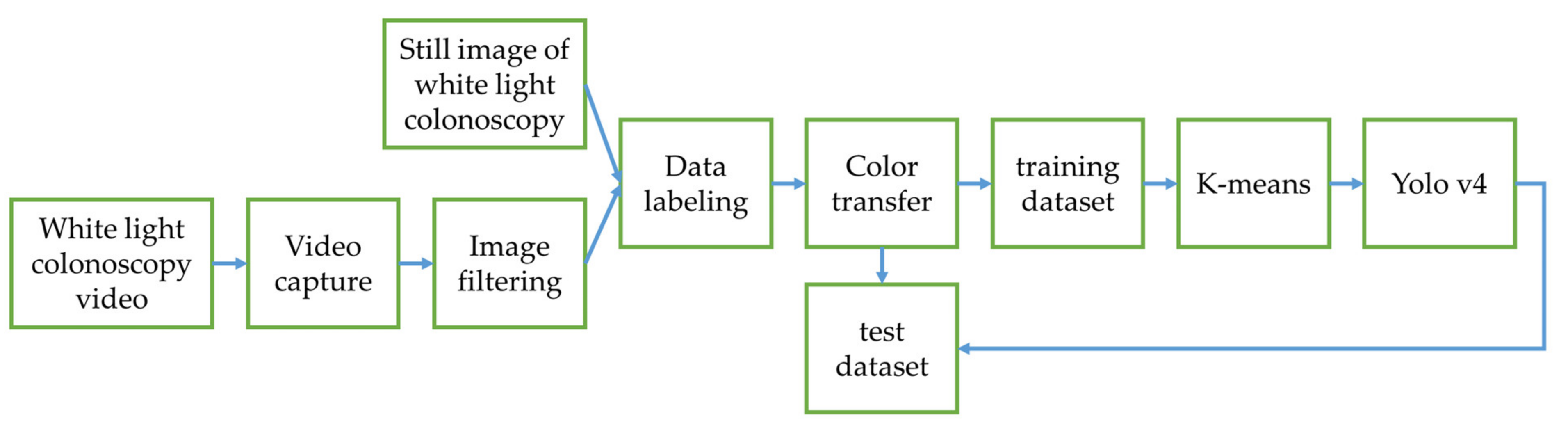

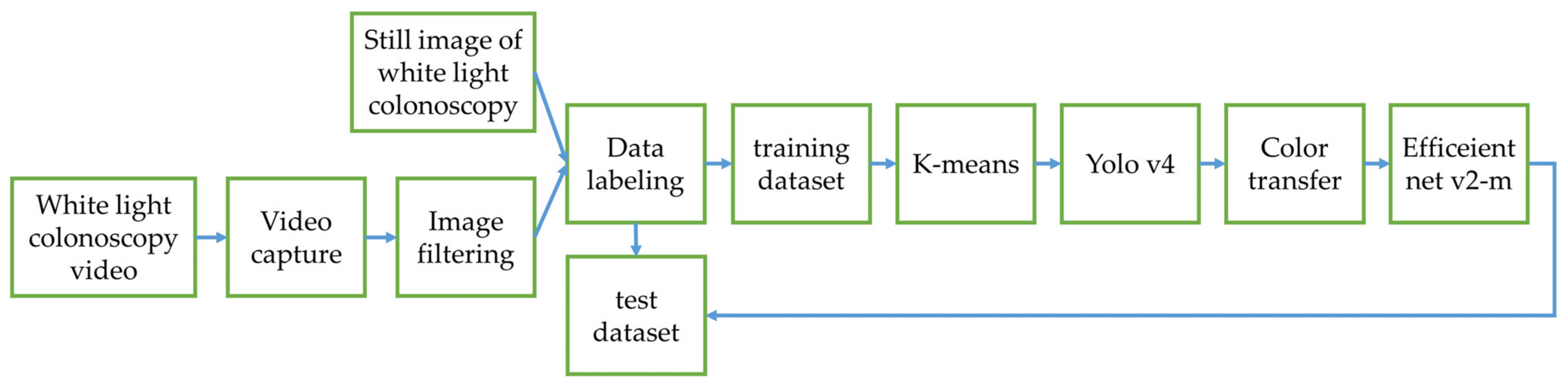

The output connection of the Olympus NBI system from the colonoscopy equipment to an external computer is not feasible. We need to convert the original WL image from the colonoscopy source to an NBI simulated CNN-based model on the background and show the WL image with the bounding box in the monitor (

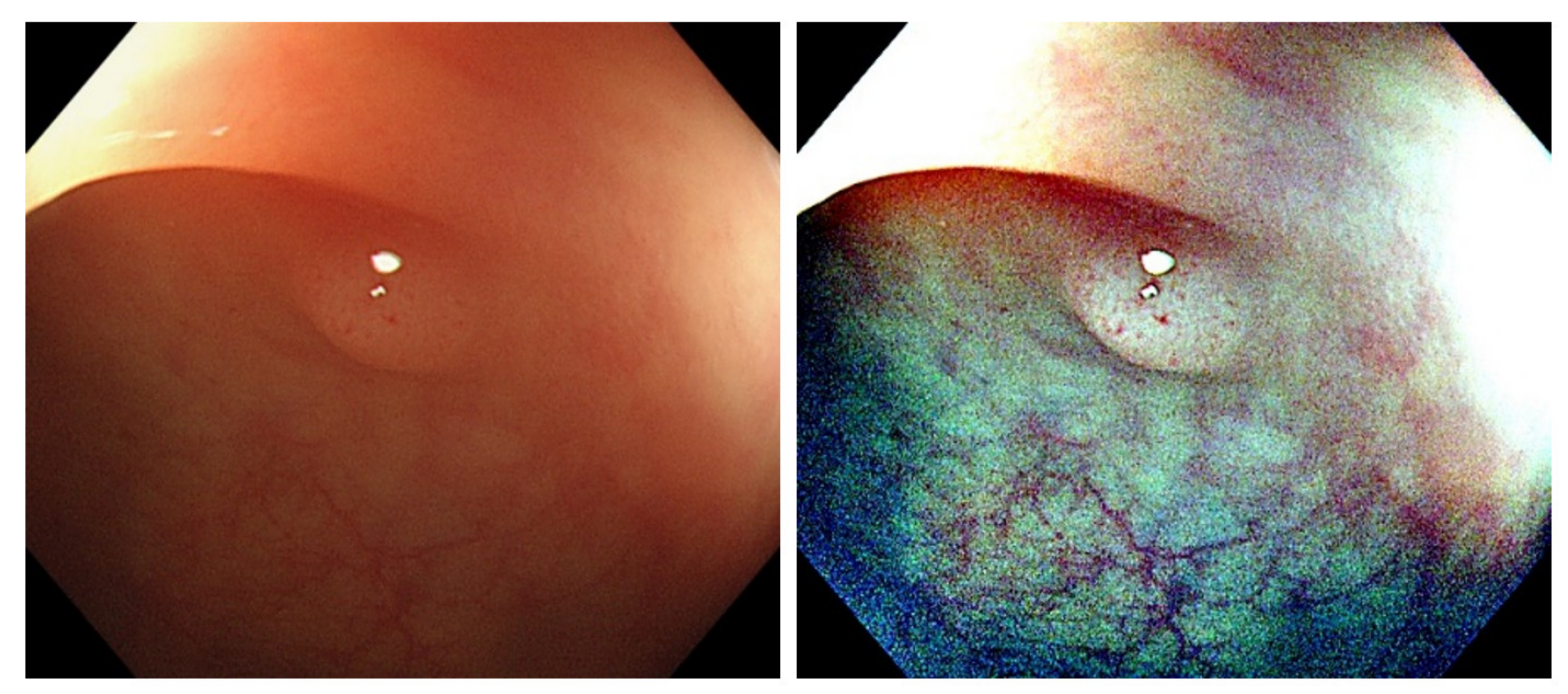

Figure 2). We selected three methods to simulate the NBI system for image enhancement: Color Transfer with Mean Shift (CTMS), Multi-scale Retinex (MSR), and gamma and sigmoid conversions. We also compared the selected methods with two conventional image enhancement methods, Histogram Equalization (HE) [

19,

20] and Contrast Limited Adaptive Histogram Equalization (CLAHE) [

21].

The CTMS conversion process is inspired by the work of Xiao et al. [22], who transferred the images of an insufficient training dataset from the RGB color space to the CIE-LAB color space using a U-Net architecture to generate augmented images.

The MSR algorithm is an image enhancement method that mimics human visual perception and provides dynamic range compression, tonal rendition and color constancy [23,24,25,26]. Our eyes perceive color from light of particular wavelengths reflected by an object. The human visual system perceives the colors of a scene largely irrespective of the illumination source, even under different spectral lighting conditions. The MSR algorithm separates the original image into a base layer and a detail layer, which are processed to correct nonuniform illumination [27]. It has been used for various tasks such as image dehazing [28], image enhancement and defogging [29] and color constancy computation [30]. In real-world colonoscopy images, the illumination is uneven, with dark and bright regions, owing to the light source on the tip of the colonoscope [31]. Luo et al. [32] used a modified MSR with a detail layer to address nonuniform and directional illumination in the surgical endoscopy field. Their combined visibility improved from 0.81 to 1.06 and outperformed existing Retinex methods. Wang et al. [33] corrected color images using MSR with a nonlinear functional transformation. They improved the overall brightness and contrast of an image while preserving image details. Vani et al. [31] discussed the use of MSR and Adaptive Histogram Equalization to suppress noise and improve visibility in wireless capsule endoscopy. Deeba et al. [34] proposed a two-stage automated algorithm that combines Retinex with a saliency region detection algorithm. They achieved a sensitivity of 97.33% and a specificity of 79%. MSR thus provides endoscopy image enhancement with balanced brightness and contrast, which helps detect subtle lesions in colonoscopy.
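As a rough illustration of the idea behind MSR, the following sketch implements the textbook formulation, in which each single-scale Retinex output is the log of the image minus the log of its Gaussian-blurred version (the estimated illumination), and the outputs are averaged over several scales. It is a minimal sketch under stated assumptions, not the configuration used in this study: the function names, the default scales `(15, 80, 250)`, the equal weighting and the final min-max stretch are all illustrative choices.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel covering about +/- 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array with edge replication."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(channel, radius, mode="edge")
    # Blur along rows, then along columns; "valid" removes the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def multi_scale_retinex(image: np.ndarray, sigmas=(15.0, 80.0, 250.0)) -> np.ndarray:
    """Average of log(I) - log(G_sigma * I) over the given scales, per channel.

    `image` is assumed to be an H x W x 3 uint8 array. The default scales are
    illustrative assumptions, not values reported in this study.
    """
    img = image.astype(np.float64) + 1.0  # offset avoids log(0)
    out = np.zeros_like(img)
    for c in range(img.shape[2]):
        log_channel = np.log(img[..., c])
        for sigma in sigmas:
            out[..., c] += log_channel - np.log(gaussian_blur(img[..., c], sigma))
    out /= len(sigmas)
    # Stretch the result to 0..255 for display (an assumed post-processing step).
    lo, hi = out.min(), out.max()
    if hi - lo < 1e-12:
        return np.zeros(image.shape, dtype=np.uint8)
    return ((out - lo) / (hi - lo) * 255.0).astype(np.uint8)
```

The pure-NumPy blur keeps the sketch self-contained but is slow for large scales; an optimized Gaussian filter from an image-processing library would normally replace it.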

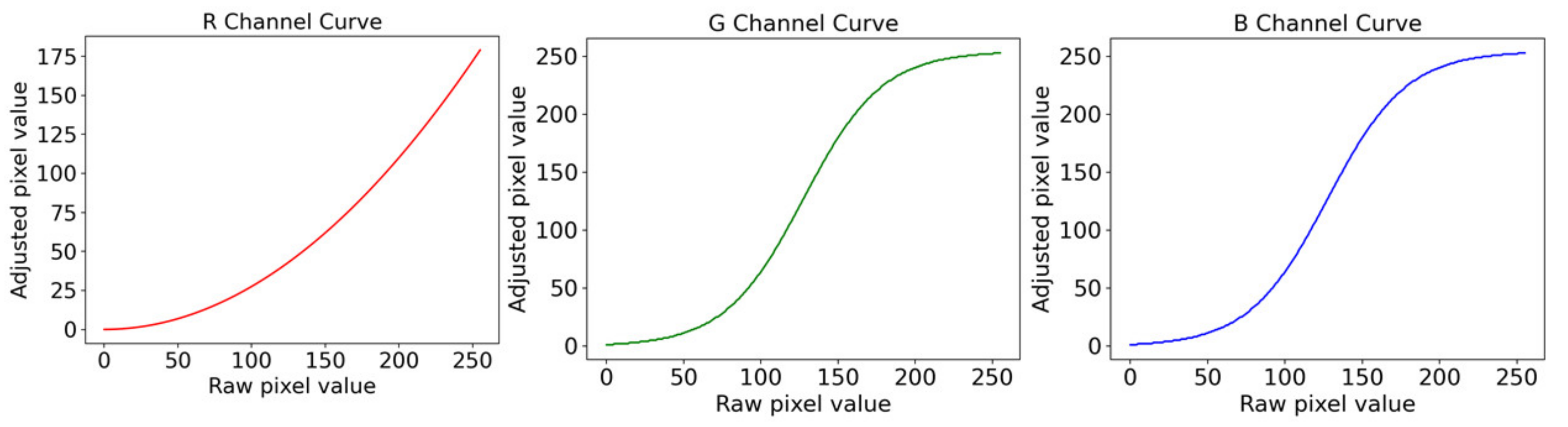

The sigmoidal-remapping function enhances image contrast within a limited dynamic range. That is, the lightness between the highlights and shadows of an image can be controlled through the lightness and darkness of the contrast in the sigmoid function [35]. The sigmoidal-remapping function is a continuous nonlinear activation curve [36]. Deeba et al. [37] used a sigmoidal remapping curve to enhance the blue and green channels of endoscopy images, combined with saliency map formation and histogram of gradients for feature extraction. They achieved a recall and F2 score of 86.33% and 75.51%, respectively.
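The two intensity mappings can be sketched as follows. This is a generic illustration of a gamma curve and a sigmoidal remapping curve on 8-bit intensities, not the conversion used in this study: the parameter names `gamma`, `gain` and `cutoff`, their default values, and the rescaling that pins 0 and 255 to themselves are assumptions made for the example.

```python
import numpy as np


def gamma_correction(image: np.ndarray, gamma: float = 0.8) -> np.ndarray:
    """Apply out = in ** gamma on intensities scaled to [0, 1].

    gamma < 1 brightens shadows; gamma > 1 darkens them. The default is an
    illustrative assumption.
    """
    x = image.astype(np.float64) / 255.0
    y = np.power(x, gamma)
    return np.clip(np.round(y * 255.0), 0, 255).astype(np.uint8)


def sigmoid_remap(image: np.ndarray, gain: float = 8.0, cutoff: float = 0.5) -> np.ndarray:
    """S-shaped contrast curve, rescaled so that 0 maps to 0 and 255 to 255.

    `gain` controls the steepness of the curve and `cutoff` the intensity
    (in [0, 1]) around which contrast is increased; both are assumed values.
    """
    def logistic(v):
        return 1.0 / (1.0 + np.exp(-gain * (v - cutoff)))

    x = image.astype(np.float64) / 255.0
    y = (logistic(x) - logistic(0.0)) / (logistic(1.0) - logistic(0.0))
    return np.clip(np.round(y * 255.0), 0, 255).astype(np.uint8)
```

With these defaults, the sigmoid pushes intensities below the cutoff darker and those above it brighter, which is the contrast-stretching behavior described above.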

In this study, we aim to establish an NBI-simulated image enhancement technique combined with a computer-aided system to boost polyp detection and classification. We chose three different methods and compared them with one another for their effectiveness in endoscopy image enhancement.

3. Results

In this study, deep learning networks were trained on images produced by different color transfer methods and compared with a model trained on the original images, to evaluate the effect of color transfer on polyp identification and classification. The data are organized into three datasets according to different classification schemes. The first dataset is divided into three types of polyps (TA, HP and SSA). The second dataset divides polyps into neoplastic (TA and SSA) and non-neoplastic (HP). The third dataset excludes SSA polyps because they are scarce.
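The three labeling schemes can be expressed as a simple relabeling of the same annotated samples. The sketch below is only an illustration: the scheme names, the function names and the `(sample_id, polyp_type)` sample format are hypothetical and do not come from this study.

```python
from typing import List, Optional, Tuple

POLYP_TYPES = ("TA", "HP", "SSA")
SCHEMES = ("three_class", "neoplastic_vs_non", "no_ssa")  # hypothetical scheme names


def relabel(polyp_type: str, scheme: str) -> Optional[str]:
    """Map a polyp type to its label under a scheme; None means 'drop the sample'."""
    if polyp_type not in POLYP_TYPES:
        raise ValueError(f"unknown polyp type: {polyp_type}")
    if scheme == "three_class":
        return polyp_type
    if scheme == "neoplastic_vs_non":
        # TA and SSA are grouped as neoplastic; HP is non-neoplastic.
        return "neoplastic" if polyp_type in ("TA", "SSA") else "non-neoplastic"
    if scheme == "no_ssa":
        # SSA samples are excluded; TA and HP keep their own labels.
        return None if polyp_type == "SSA" else polyp_type
    raise ValueError(f"unknown scheme: {scheme}")


def build_dataset(samples: List[Tuple[str, str]], scheme: str) -> List[Tuple[str, str]]:
    """Return (sample_id, label) pairs for a scheme, skipping dropped samples."""
    dataset = []
    for sample_id, polyp_type in samples:
        label = relabel(polyp_type, scheme)
        if label is not None:
            dataset.append((sample_id, label))
    return dataset
```

For example, `build_dataset([("a", "TA"), ("b", "SSA")], "no_ssa")` keeps only sample `"a"`, whereas the `"neoplastic_vs_non"` scheme keeps both and labels each as neoplastic.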

3.1. YOLOv4 Training Results in Experimental Design 1

In this section, models are trained with the YOLOv4 network on each of the three datasets using MSRCR, gamma and sigmoid conversion, Color Transfer with Mean Shift, and the original images, and the results are compared. The neoplastic polyps, TA and SSA, are first grouped into the same category for color transfer comparison. Among the color transfer methods, MSRCR shows the highest mAP, at 77.6162. The results of the conventional HE and CLAHE are also included as a benchmark for the selected color transfer methods. The images are first converted from the RGB color space to the LAB color space, and HE and CLAHE are performed on the lightness (L) channel. The CLAHE image enhancement process has two main operational parameters, tile size and clip limit. The tile size is the number of non-overlapping tiles into which the original image is partitioned and is set to 8 × 8 in the experiments. The clip limit is the threshold used to trim the histograms of the pixel distribution and is set to 2 in the experiments. MSRCR performs best for the two groups of polyps (Table 2). Therefore, MSRCR is the most suitable color transfer method for the two-class setting.
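To make the role of the clip limit concrete, the sketch below equalizes a single 8-bit lightness channel and optionally trims the histogram before building the mapping. It is a simplified, global illustration only: real CLAHE applies this step per tile (8 × 8 tiles in the experiments above) and interpolates between neighboring tiles, which is omitted here. The function name is hypothetical, the L channel is assumed to be already extracted, and interpreting the clip limit as a multiple of the mean bin count is an assumption borrowed from common implementations.

```python
import numpy as np


def equalize_lightness(lightness: np.ndarray, clip_limit=None) -> np.ndarray:
    """Histogram-equalize an 8-bit single-channel image (e.g. the L channel).

    If `clip_limit` is given, bins above clip_limit * (mean bin count) are
    trimmed and the excess is redistributed uniformly over all 256 bins,
    which limits how strongly contrast is amplified. This is a global
    simplification of CLAHE, not a tiled implementation.
    """
    if lightness.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) channel")
    hist = np.bincount(lightness.ravel(), minlength=256).astype(np.float64)
    if clip_limit is not None:
        ceiling = clip_limit * hist.mean()
        excess = np.sum(np.maximum(hist - ceiling, 0.0))
        hist = np.minimum(hist, ceiling) + excess / 256.0
    # The normalized cumulative histogram is the intensity mapping.
    cdf = np.cumsum(hist)
    cdf /= cdf[-1]
    lookup = np.round(cdf * 255.0).astype(np.uint8)
    return lookup[lightness]
```

On a low-contrast channel, plain equalization spreads the intensities over nearly the full range, while a clip limit of 2 yields a more moderate stretch.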

Then, the dataset is divided into three categories according to polyp type for further comparison of the color transfer methods. Gamma and sigmoid conversion has the highest mAP, at 72.1863 (Table 3). Therefore, gamma and sigmoid conversion is the most suitable color transfer method for the three polyp classes.

Since SSA is a rare polyp type with insufficient data, it affects the accuracy of the identification results. We therefore excluded the SSA category and compared the mAP results for the two categories HP and TA. MSRCR is the most suitable color transfer method here, with an mAP of 86.8422 (Table 4).

Comparing the results of the three color transfer methods, MSRCR has the best mAP for two polyp classes. Although gamma and sigmoid conversion gives a better result in the three-class analysis, we need to consider that the SSA group is underrepresented owing to scarce data. When SSA is excluded, the mAP of MSRCR is higher than that of gamma and sigmoid conversion with TA and SSA combined. We speculate that the higher accuracy of gamma and sigmoid conversion on SSA accounts for its better mAP than MSRCR in the three-class analysis. After considering the distribution of the dataset and the mAP results, this study selects MSRCR as the color transfer method.
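For readers unfamiliar with the metric, mAP is the mean over classes of the average precision (AP), where AP summarizes the precision-recall curve of the ranked detections for one class. The sketch below shows one common way to compute it. It assumes that each detection has already been matched to the ground truth (for example by an IoU threshold) and uses all-point interpolation; the matching rule, the interpolation and the function names are assumptions, and the evaluation code of this study may differ.

```python
from typing import Dict, List, Tuple

Detection = Tuple[float, bool]  # (confidence, is_true_positive), an assumed format


def average_precision(detections: List[Detection], num_ground_truth: int) -> float:
    """Area under the precision-recall curve, using the precision envelope."""
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    precisions, recalls = [], []
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += int(is_tp)
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[List[Detection], int]]) -> float:
    """Mean AP over classes, as a percentage to match the values reported above."""
    if not per_class:
        raise ValueError("at least one class is required")
    aps = [average_precision(dets, n_gt) for dets, n_gt in per_class.values()]
    return 100.0 * sum(aps) / len(aps)
```

Because mAP averages per-class AP with equal weight, a class with few samples, such as SSA, can shift the overall value noticeably.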

3.2. Classifier Training Results in Experimental Design 2

In this section, models are trained with the EfficientNetV2-M network on the three datasets using MSRCR, gamma and sigmoid conversion, Color Transfer with Mean Shift and the original images. The polyps are automatically cropped from the images, and classification is performed on the cropped polyp images. Accuracy is used as the criterion for evaluating polyp classification: a classification is correct if the polyp type identified by the model matches the ground truth.
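The cropping step and the accuracy criterion can be sketched in a few lines. This is an illustrative sketch only: the box format `(x_min, y_min, x_max, y_max)`, the representation of an image as a list of pixel rows and the function names are hypothetical and are not taken from this study.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels, assumed format


def crop_polyp(image: Sequence[Sequence], box: Box) -> List[list]:
    """Cut the bounding-box region out of an image given as a list of pixel rows."""
    x_min, y_min, x_max, y_max = box
    return [list(row[x_min:x_max]) for row in image[y_min:y_max]]


def accuracy(predicted: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Fraction of polyps whose predicted type matches the ground-truth type."""
    if len(predicted) != len(ground_truth) or len(ground_truth) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == g for p, g in zip(predicted, ground_truth))
    return correct / len(ground_truth)
```

For instance, three matching labels out of four predictions give an accuracy of 0.75.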

The results for HP versus the combination of TA and SSA polyps are shown in Table 5. MSRCR shows the best accuracy, 0.8643. Therefore, MSRCR is the most accurate color transfer method for this classification task.

Then, classification was performed on three polyp classes. Gamma and sigmoid conversion shows the best accuracy, 0.7517, and the accuracy of MSRCR is 0.7491 (Table 6). The values are similar, so both methods are suitable for this task.

After excluding SSA polyps, MSRCR has the highest accuracy, 0.8428 (Table 7). For this classification task, the most suitable color transfer method is MSRCR.

Comparing the accuracy of the different methods across the class settings, the classification accuracy on the original images is the lowest; every color transfer method performs better. The results demonstrate that color transfer has a positive impact on polyp identification. Combining the mAP results from the previous section with the accuracy results in this section, we conclude that Retinex (MSRCR) has an excellent outcome and is the best choice for color transfer.