Design and Testing of Augmented Reality-Based Fluorescence Imaging Goggle for Intraoperative Imaging-Guided Surgery

Abstract

1. Introduction

2. Materials and Methods

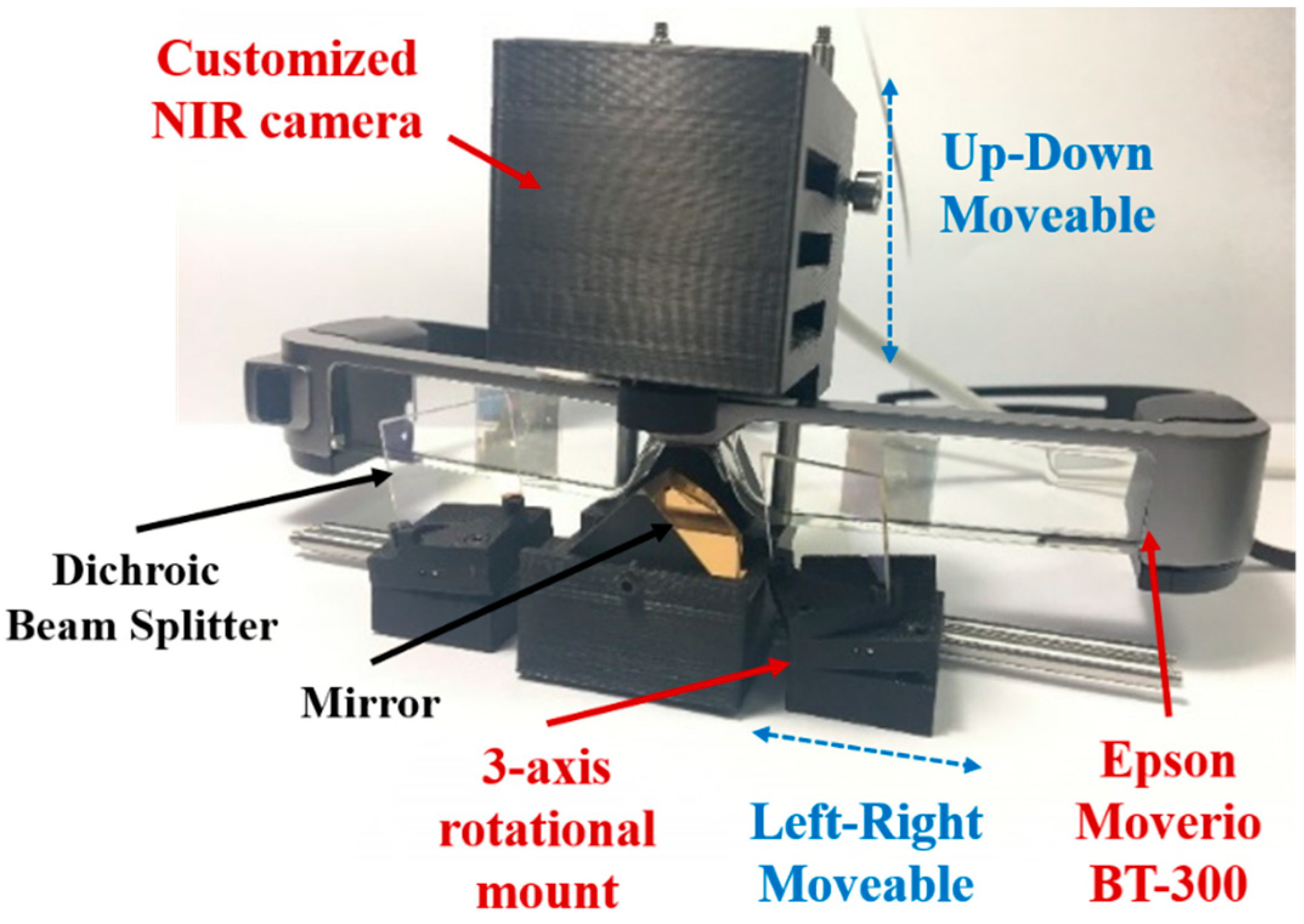

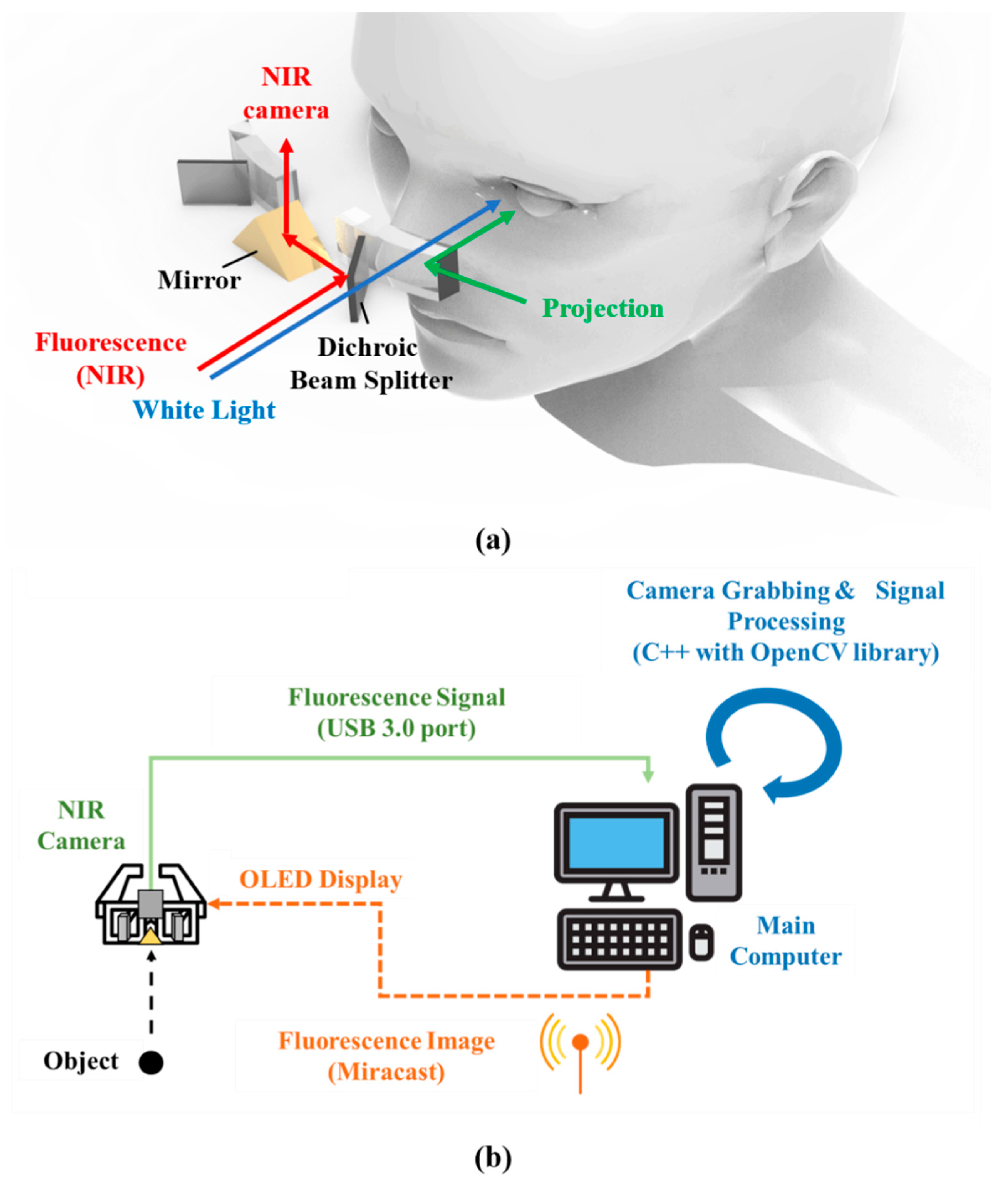

2.1. Hardware Design for the ARFI System

2.2. Operational Principle of the ARFI System

2.3. In Vivo Animal Studies

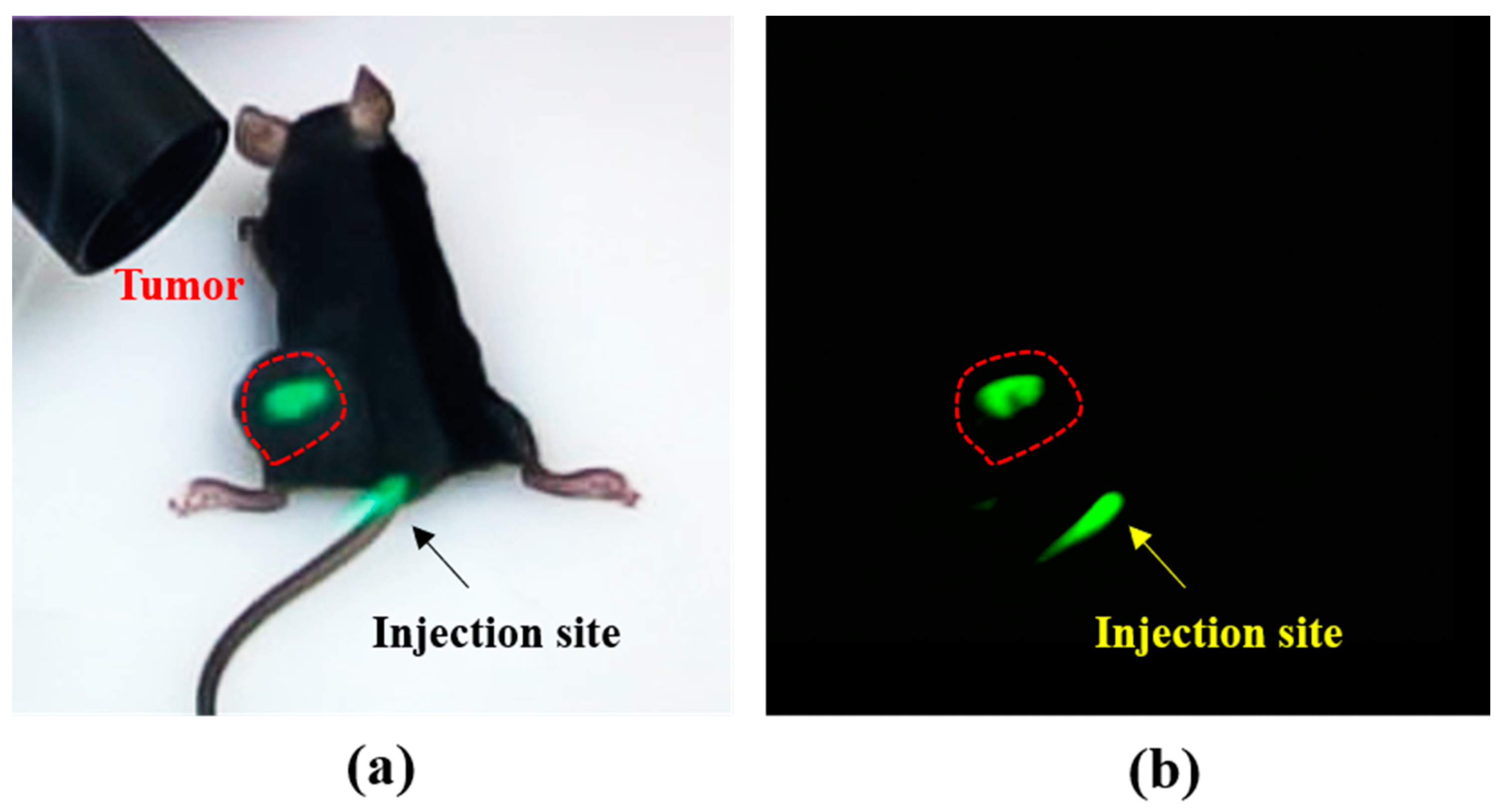

2.3.1. Mouse Subcutaneous Tumor Model

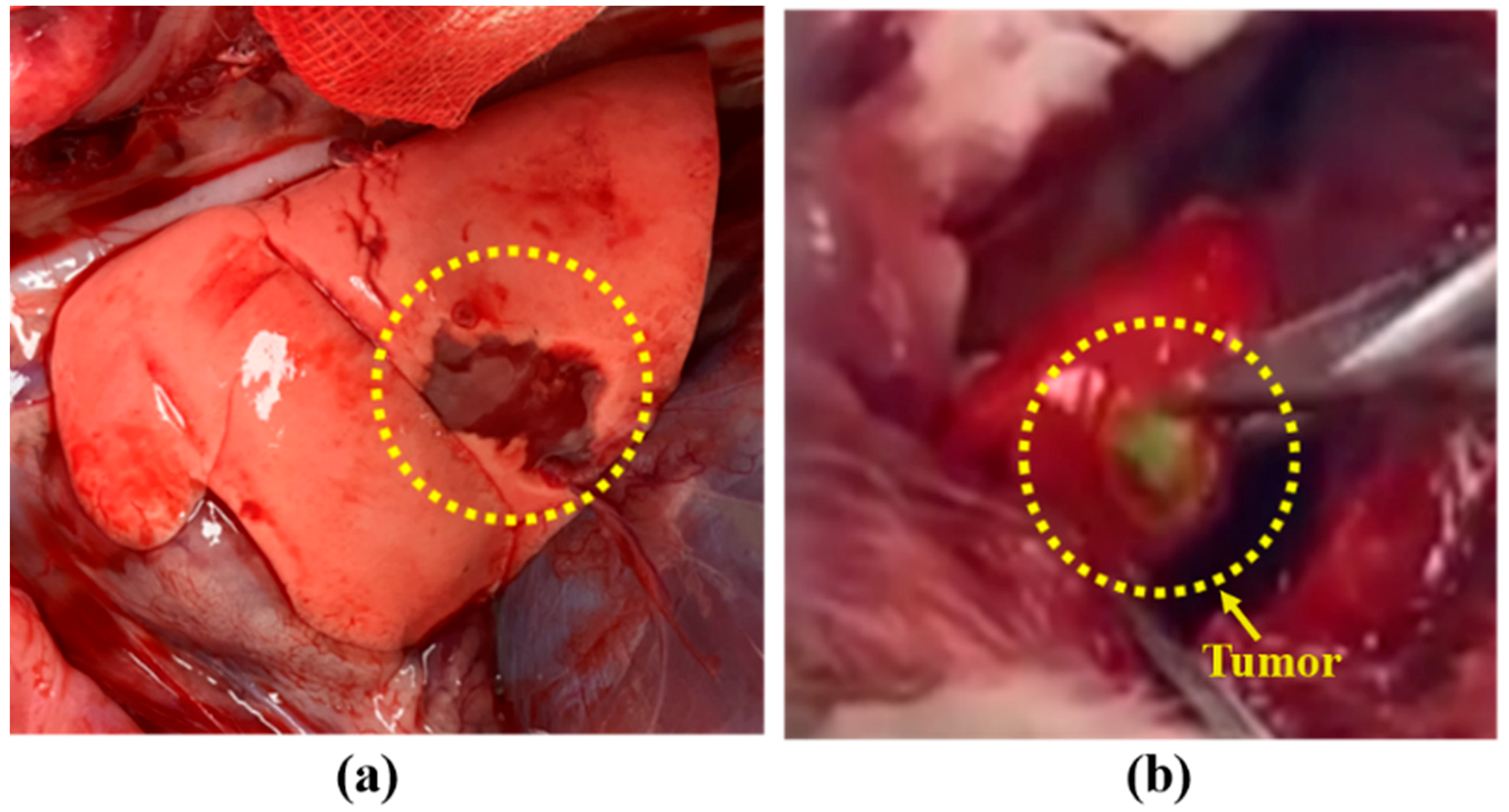

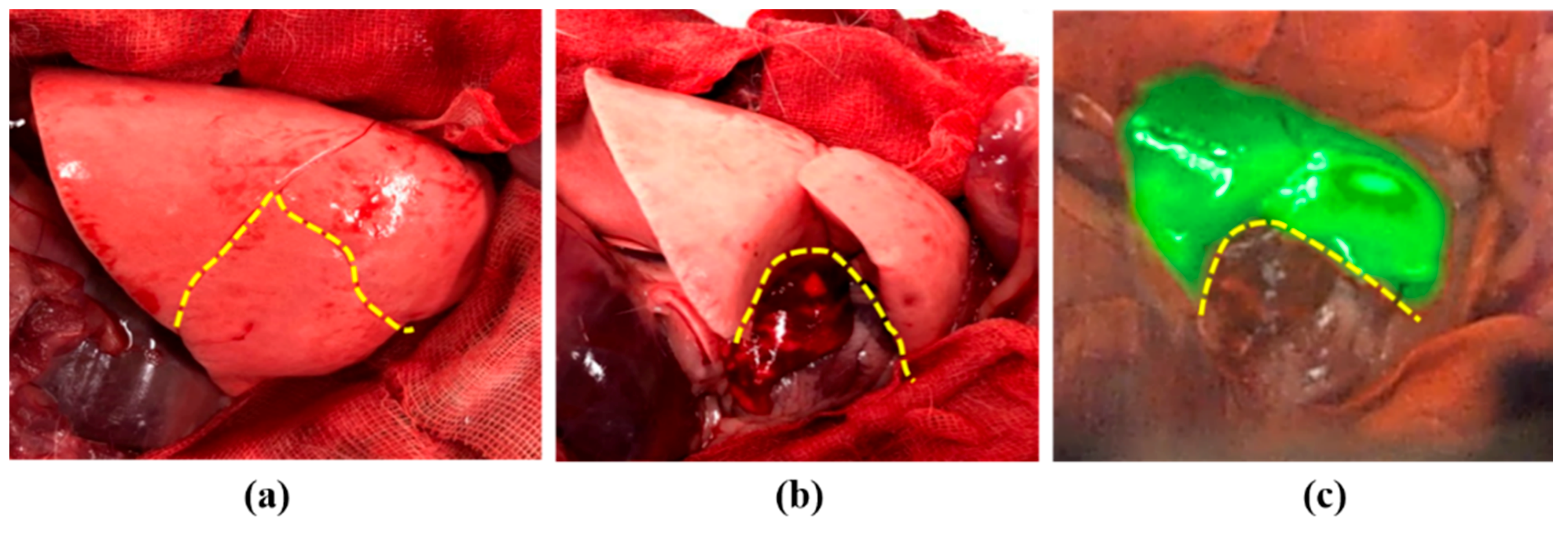

2.3.2. Rabbit Lung Cancer Model

3. Results

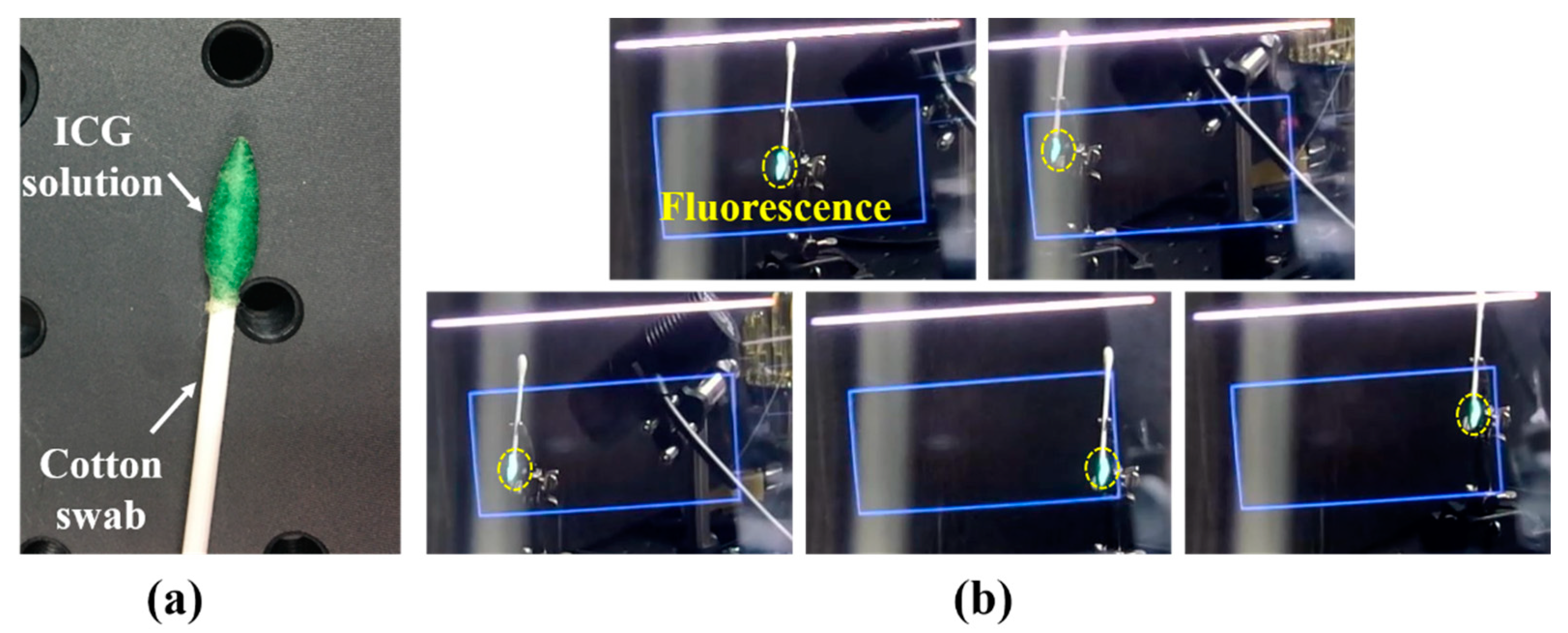

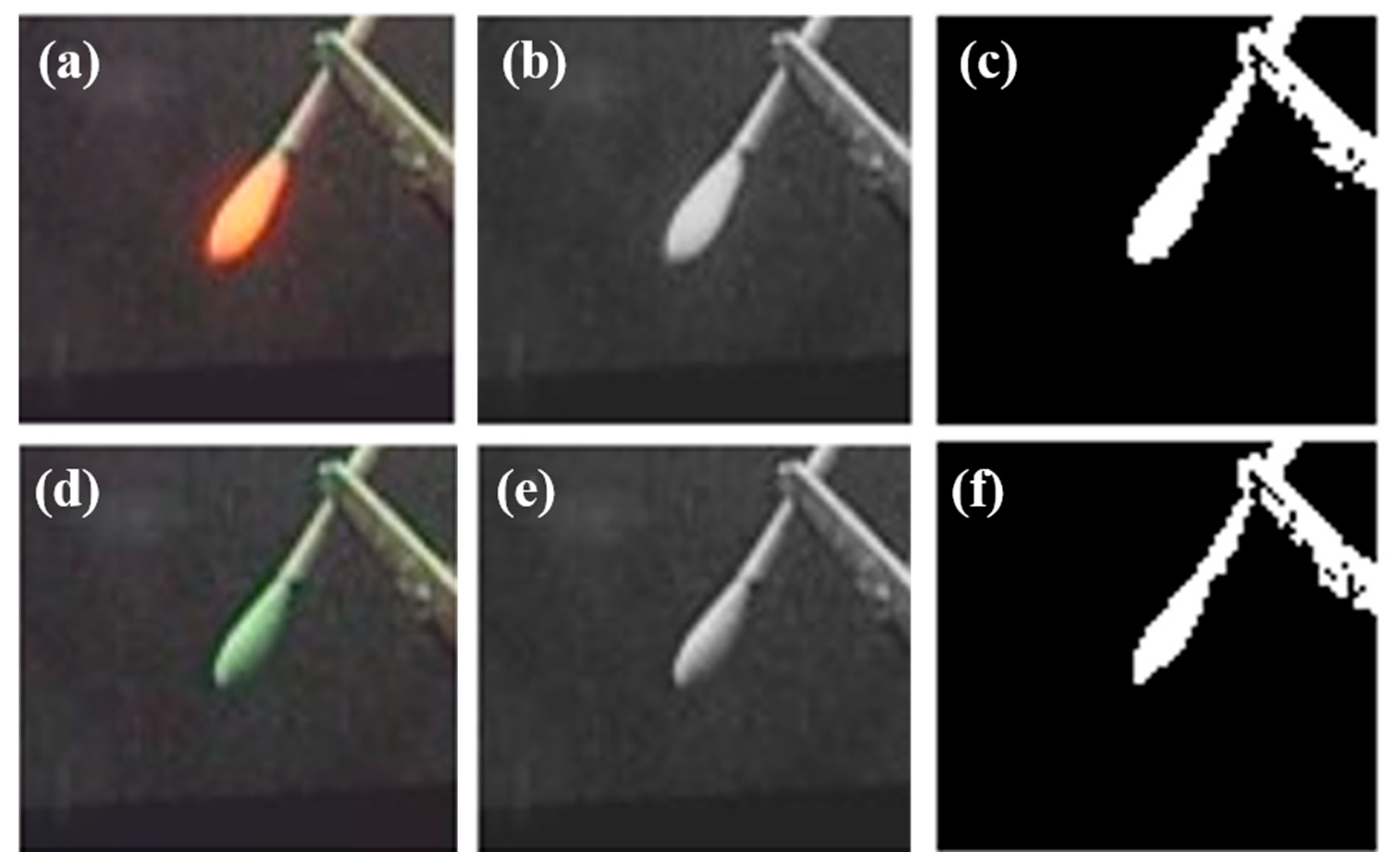

3.1. System Evaluation

3.2. Tumor Detection Using the ARFI System in the Mouse Tumor Model

3.3. Tumor Detection Using the ARFI System in the Rabbit Lung Tumor Model

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cuevas, C.; Shibata, D. Medical imaging in the diagnosis and management of cancer pain. Curr. Pain Headache Rep. 2009, 13, 261–270.

- Wagner, R.F.; Metz, C.E.; Campbell, G. Assessment of medical imaging systems and computer aids: A tutorial review. Acad. Radiol. 2007, 14, 723–748.

- Gorpas, D.; Koch, M.; Anastasopoulou, M.; Bozhko, D.; Klemm, U.; Nieberler, M.; Ntziachristos, V. Multi-parametric standardization of fluorescence imaging systems based on a composite phantom. IEEE Trans. Biomed. Eng. 2019, 67, 185–192.

- Collins, L.; Schnitt, S.; Achacoso, N.; Haque, R.; Nekhlyudov, L.; Fletcher, S.; Quesenberry, C.; Habel, L. Outcome of women with ductal carcinoma in situ (DCIS) treated with breast-conserving surgery alone: A case-control study of 225 patients from the Cancer Research Network. In Proceedings of the Laboratory Investigation, New York, NY, USA, 1 January 2009; pp. 34A–35A.

- Vicini, F.A.; Kestin, L.L.; Goldstein, N.S.; Chen, P.Y.; Pettinga, J.; Frazier, R.C.; Martinez, A.A. Impact of young age on outcome in patients with ductal carcinoma-in-situ treated with breast-conserving therapy. J. Clin. Oncol. 2000, 18, 296.

- Vahrmeijer, A.L.; Hutteman, M.; Van Der Vorst, J.R.; Van De Velde, C.J.; Frangioni, J.V. Image-guided cancer surgery using near-infrared fluorescence. Nat. Rev. Clin. Oncol. 2013, 10, 507.

- Predina, J.D.; Keating, J.; Newton, A.; Corbett, C.; Xia, L.; Shin, M.; Frenzel Sulyok, L.; Deshpande, C.; Litzky, L.; Nie, S. A clinical trial of intraoperative near-infrared imaging to assess tumor extent and identify residual disease during anterior mediastinal tumor resection. Cancer 2019, 125, 807–817.

- Keating, J.J.; Nims, S.; Venegas, O.; Jiang, J.; Holt, D.; Kucharczuk, J.C.; Deshpande, C.; Singhal, S. Intraoperative imaging identifies thymoma margins following neoadjuvant chemotherapy. Oncotarget 2016, 7, 3059.

- Keating, J.; Tchou, J.; Okusanya, O.; Fisher, C.; Batiste, R.; Jiang, J.; Kennedy, G.; Nie, S.; Singhal, S. Identification of breast cancer margins using intraoperative near-infrared imaging. J. Surg. Oncol. 2016, 113, 508–514.

- Kim, H.K.; Quan, Y.H.; Choi, B.H.; Park, J.-H.; Han, K.N.; Choi, Y.; Kim, B.-M.; Choi, Y.H. Intraoperative pulmonary neoplasm identification using near-infrared fluorescence imaging. Eur. J. Cardio-Thorac. Surg. 2015, 49, 1497–1502.

- DSouza, A.V.; Lin, H.; Henderson, E.R.; Samkoe, K.S.; Pogue, B.W. Review of fluorescence guided surgery systems: Identification of key performance capabilities beyond indocyanine green imaging. J. Biomed. Opt. 2016, 21, 080901.

- Gilmore, D.M.; Khullar, O.V.; Jaklitsch, M.T.; Chirieac, L.R.; Frangioni, J.V.; Colson, Y.L. Identification of metastatic nodal disease in a phase 1 dose-escalation trial of intraoperative sentinel lymph node mapping in non–small cell lung cancer using near-infrared imaging. J. Thorac. Cardiovasc. Surg. 2013, 146, 562–570.

- Imai, K.; Minamiya, Y.; Saito, H.; Nakagawa, T.; Ito, M.; Ono, T.; Motoyama, S.; Sato, Y.; Konno, H.; Ogawa, J.-I. Detection of pleural lymph flow using indocyanine green fluorescence imaging in non-small cell lung cancer surgery: A preliminary study. Surg. Today 2013, 43, 249–254.

- Oh, Y.; Quan, Y.H.; Kim, M.; Kim, B.-M.; Kim, H.K. Intraoperative fluorescence image-guided pulmonary segmentectomy. J. Surg. Res. 2015, 199, 287–293.

- Hsieh, C.-P.; Liu, Y.-H.; Wu, Y.-C.; Hsieh, M.-J.; Chao, Y.-K. Indocyanine green fluorescence-navigated robotic segmentectomy. Surg. Endosc. 2017, 31, 3347–3348.

- Quan, Y.H.; Han, K.N.; Kim, H.K. Fluorescence Image-Based Evaluation of Gastric Tube Perfusion during Esophagogastrostomy. Korean J. Thorac. Cardiovasc. Surg. 2020, 53, 178.

- Oh, Y.; Quan, Y.H.; Choi, Y.; Kim, C.K.; Kim, H.; Kim, H.K.; Kim, B.-M. Intraoperative combined color and fluorescent images–based sentinel node mapping in the porcine lung: Comparison of indocyanine green with or without albumin premixing. J. Thorac. Cardiovasc. Surg. 2013, 146, 1509–1515.

- Oh, Y.; Lee, Y.-S.; Quan, Y.H.; Choi, Y.; Jeong, J.M.; Kim, B.-M.; Kim, H.K. Thoracoscopic color and fluorescence imaging system for sentinel lymph node mapping in porcine lung using indocyanine green-neomannosyl human serum albumin: Intraoperative image-guided sentinel nodes navigation. Ann. Surg. Oncol. 2014, 21, 1182–1188.

- Kim, H.K.; Quan, Y.H.; Oh, Y.; Park, J.Y.; Park, J.-H.; Choi, Y.; Lee, Y.-S.; Jeong, J.M.; Choi, Y.H.; Kim, B.-M. Macrophage-targeted indocyanine green-neomannosyl human serum albumin for intraoperative sentinel lymph node mapping in porcine esophagus. Ann. Thorac. Surg. 2016, 102, 1149–1155.

- Quan, Y.H.; Kim, M.; Kim, H.K.; Kim, B.-M. Fluorescent image-based evaluation of gastric conduit perfusion in a preclinical ischemia model. J. Thorac. Dis. 2018, 10, 5359.

- Liu, Y.; Bauer, A.Q.; Akers, W.J.; Sudlow, G.; Liang, K.; Shen, D.; Berezin, M.Y.; Culver, J.P.; Achilefu, S. Hands-free, wireless goggles for near-infrared fluorescence and real-time image-guided surgery. Surgery 2011, 149, 689–698.

- Troyan, S.L.; Kianzad, V.; Gibbs-Strauss, S.L.; Gioux, S.; Matsui, A.; Oketokoun, R.; Ngo, L.; Khamene, A.; Azar, F.; Frangioni, J.V. The FLARE™ intraoperative near-infrared fluorescence imaging system: A first-in-human clinical trial in breast cancer sentinel lymph node mapping. Ann. Surg. Oncol. 2009, 16, 2943–2952.

- Liu, Y.; Akers, W.J.; Bauer, A.Q.; Mondal, S.; Gullicksrud, K.; Sudlow, G.P.; Culver, J.P.; Achilefu, S. Intraoperative detection of liver tumors aided by a fluorescence goggle system and multimodal imaging. Analyst 2013, 138, 2254–2257.

- Mondal, S.B.; Gao, S.; Zhu, N.; Sudlow, G.P.; Liang, K.; Som, A.; Akers, W.J.; Fields, R.C.; Margenthaler, J.; Liang, R. Binocular Goggle Augmented Imaging and Navigation System provides real-time fluorescence image guidance for tumor resection and sentinel lymph node mapping. Sci. Rep. 2015, 5, 12117.

- Badiali, G.; Ferrari, V.; Cutolo, F.; Freschi, C.; Caramella, D.; Bianchi, A.; Marchetti, C. Augmented reality as an aid in maxillofacial surgery: Validation of a wearable system allowing maxillary repositioning. J. Cranio-Maxillofac. Surg. 2014, 42, 1970–1976.

- Rolland, J.P.; Fuchs, H. Optical versus video see-through head-mounted displays in medical visualization. Presence Teleoperators Virtual Environ. 2000, 9, 287–309.

- Shao, P.; Ding, H.; Wang, J.; Liu, P.; Ling, Q.; Chen, J.; Xu, J.; Zhang, S.; Xu, R. Designing a wearable navigation system for image-guided cancer resection surgery. Ann. Biomed. Eng. 2014, 42, 2228–2237.

- Zhang, Z.; Pei, J.; Wang, D.; Gan, Q.; Ye, J.; Yue, J.; Wang, B.; Povoski, S.P.; Martin, E.W., Jr.; Hitchcock, C.L. A wearable Goggle navigation system for dual-mode optical and ultrasound localization of suspicious lesions: Validation studies using tissue-simulating phantoms and an ex vivo human breast tissue model. PLoS ONE 2016, 11, e0157854.

- Mondal, S.B.; Gao, S.; Zhu, N.; Habimana-Griffin, L.; Akers, W.J.; Liang, R.; Gruev, V.; Margenthaler, J.; Achilefu, S. Optical see-through cancer vision goggles enable direct patient visualization and real-time fluorescence-guided oncologic surgery. Ann. Surg. Oncol. 2017, 24, 1897–1903.

- Maruyama, K.; Watanabe, E.; Kin, T.; Saito, K.; Kumakiri, A.; Noguchi, A.; Nagane, M.; Shiokawa, Y. Smart glasses for neurosurgical navigation by augmented reality. Oper. Neurosurg. 2018, 15, 551–556.

- Ewers, R.; Schicho, K.; Undt, G.; Wanschitz, F.; Truppe, M.; Seemann, R.; Wagner, A. Basic research and 12 years of clinical experience in computer-assisted navigation technology: A review. Int. J. Oral Maxillofac. Surg. 2005, 34, 1–8.

- Liu, Y.; Njuguna, R.; Matthews, T.; Akers, W.J.; Sudlow, G.P.; Mondal, S.B.; Tang, R.; Gruev, V.; Achilefu, S. Near-infrared fluorescence goggle system with complementary metal–oxide–semiconductor imaging sensor and see-through display. J. Biomed. Opt. 2013, 18, 101303.

- Mela, C.A.; Patterson, C.; Thompson, W.K.; Papay, F.; Liu, Y. Stereoscopic integrated imaging goggles for multimodal intraoperative image guidance. PLoS ONE 2015, 10, e0141956.

- Wi-Fi Alliance. Miracast. Available online: https://www.wi-fi.org/discover-wi-fi/miracast (accessed on 19 October 2012).

- Choi, B.H.; Young, H.S.; Quan, Y.H.; Rho, J.; Eo, J.S.; Han, K.N.; Choi, Y.H.; Hyun Koo, K. Real-time computed tomography fluoroscopy-guided solitary lung tumor model in a rabbit. PLoS ONE 2017, 12, e0179220.

- Zhu, N.; Mondal, S.; Gao, S.; Achilefu, S.; Gruev, V.; Liang, R. Dual-mode optical imaging system for fluorescence image-guided surgery. Opt. Lett. 2014, 39, 3830–3832.

- Noll, M.; Noa-Rudolph, W.; Wesarg, S.; Kraly, M.; Stoffels, I.; Klode, J.; Spass, C.; Spass, G. ICG based augmented-reality-system for sentinel lymph node biopsy. In Proceedings of the Eurographics Workshop on Visual Computing for Biology and Medicine, Granada, Spain, 20–21 September 2018; pp. 11–15.

- Mondal, S.B.; Tsen, S.W.D.; Achilefu, S. Head-Mounted Devices for Noninvasive Cancer Imaging and Intraoperative Image-Guided Surgery. Adv. Funct. Mater. 2020, 30, 2000185.

- Kraft, J.C.; Ho, R.J. Interactions of indocyanine green and lipid in enhancing near-infrared fluorescence properties: The basis for near-infrared imaging in vivo. Biochemistry 2014, 53, 1275–1283.

- Homulle, H.; Powolny, F.; Stegehuis, P.; Dijkstra, J.; Li, D.-U.; Homicsko, K.; Rimoldi, D.; Muehlethaler, K.; Prior, J.; Sinisi, R. Compact solid-state CMOS single-photon detector array for in vivo NIR fluorescence lifetime oncology measurements. Biomed. Opt. Express 2016, 7, 1797–1814.

- Stewart, H.L.; Hungerford, G.; Birch, D.J. Characterization of single channel liquid light guide coupling and SPAD array imaging for tumour margin estimation using fluorescence lifetime. Meas. Sci. Technol. 2020, 31, 125701.

| Authors | Display Module | Hardware Design | Image | Application |
|---|---|---|---|---|
| Y. Liu et al. 2011 [21] | Monocular night vision viewer | Combined night vision viewer, white/NIR light source | Monochrome/fluorescence fusion image | SLN mapping (preclinical); HCC imaging (clinical) |
| Y. Liu et al. 2013 [32] | Binocular HMD (ST1080, Silicon microdisplay) | Combined HMD, CMOS camera, NIR light source | Monochrome/fluorescence fusion image or natural vision | SLN mapping; liver cancer surgery (preclinical) |
| P. Shao et al. 2014 [27] | Monocular HMD (Google Glass, Google Labs); binocular HMD (Personal Cinema System, Headplay) | Non-combined HMD, CCD camera, NIR light source; combined HMD, CMOS camera; non-combined CCD camera, NIR light source | Fluorescence image superimposed on natural vision; color/fluorescence fusion image | Phantom study |
| C. A. Mela et al. 2015 [33] | Binocular HMD (lab made) | Combined HMD, four CMOS sensors; non-combined hand-held microscopy, NIR light, ultrasound scanner | Color/fluorescence fusion image | Phantom study |
| S. B. Mondal et al. 2015 [24] | Binocular HMD (Carl Zeiss) | Combined HMD, custom VIS-NIR camera; non-combined NIR light | Color/fluorescence fusion image | Ovarian cancer surgery (preclinical); SLN mapping (clinical) |
| Z. Zhang et al. 2016 [28] | Monocular HMD (Google Glass, Google Labs) | Non-combined HMD, CCD camera, NIR light, ultrasound probe | Fluorescence image superimposed on natural vision | SLN mapping (clinical) |
| S. B. Mondal et al. 2017 [29] | Binocular HMD (Carl Zeiss) | Combined HMD, custom VIS-NIR camera; non-combined NIR light | Color/fluorescence fusion image | Tumor resection (preclinical); SLN biopsy (clinical) |
| K. Maruyama et al. 2018 [30] | Binocular HMD (Moverio BT-200, Epson) | Combined HMD, optical markers; non-combined motion capture cameras | Fluorescence image superimposed on natural vision | Brain tumors |
| Our smart goggle system | Binocular HMD (Moverio BT-300, Epson) | Combined HMD, CMOS camera, and optical system; non-combined NIR light source | Fluorescence image superimposed on natural vision | Cancer detection, segmental line identification (preclinical) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, S.H.; Quan, Y.H.; Kim, M.S.; Kwon, K.H.; Choi, B.H.; Kim, H.K.; Kim, B.-M. Design and Testing of Augmented Reality-Based Fluorescence Imaging Goggle for Intraoperative Imaging-Guided Surgery. Diagnostics 2021, 11, 927. https://doi.org/10.3390/diagnostics11060927
