Novel Multimodal, Multiscale Imaging System with Augmented Reality

Abstract

1. Introduction

2. Materials and Methods

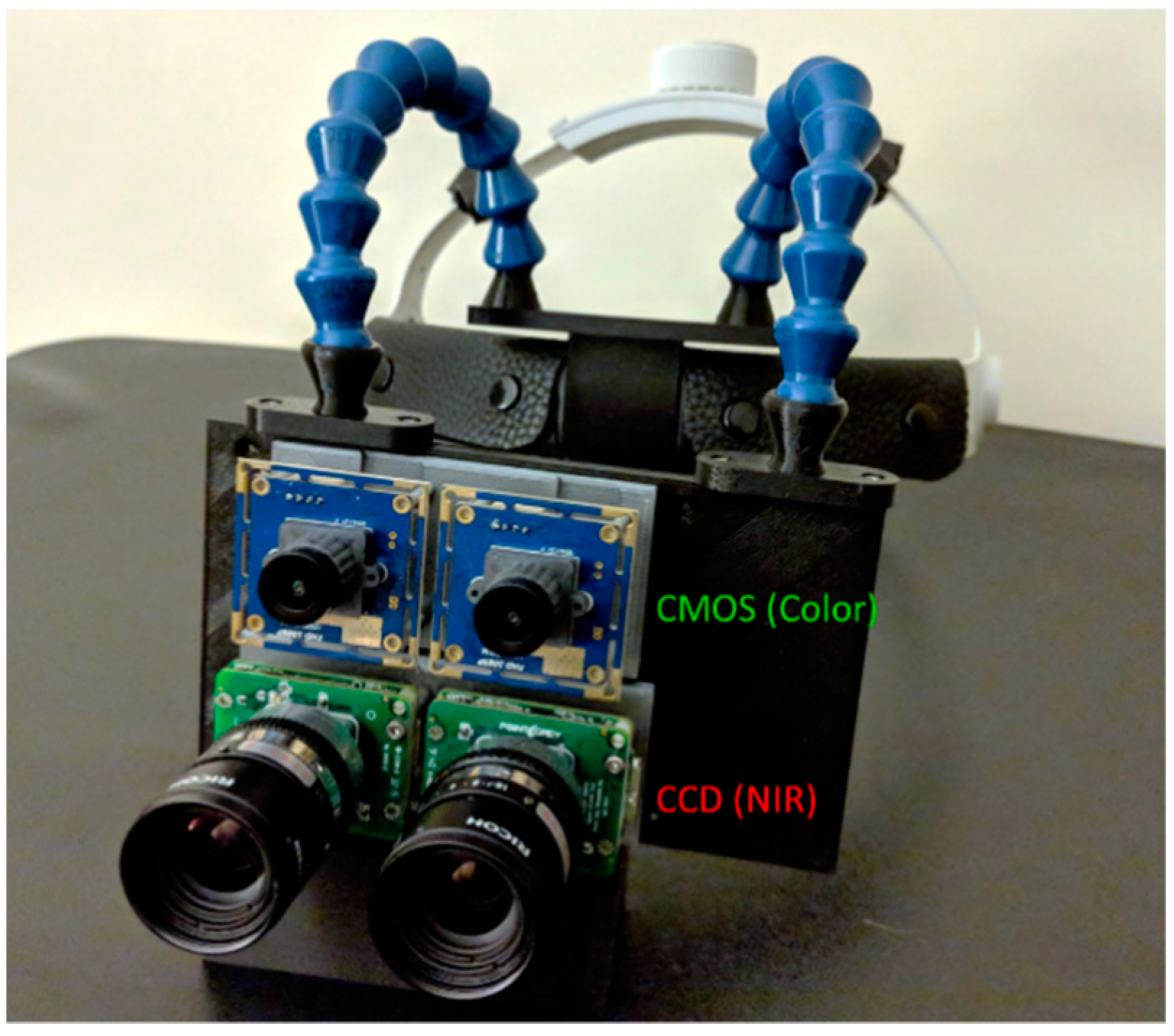

2.1. Multimodal Optical Imaging and Display

2.2. Ultrasound Imaging

2.3. Computation

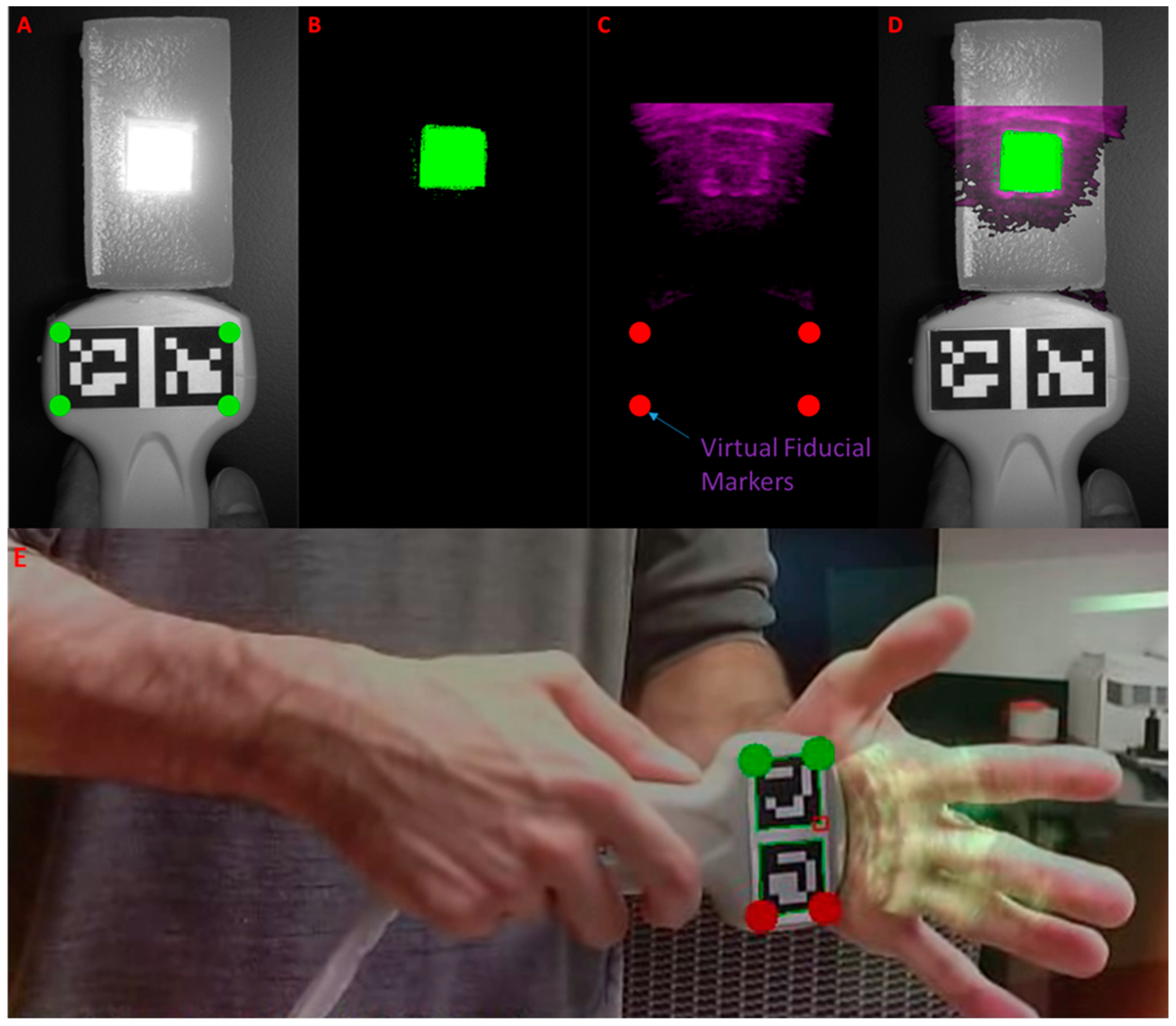

2.4. Ultrasound-to-Fluorescence Registration

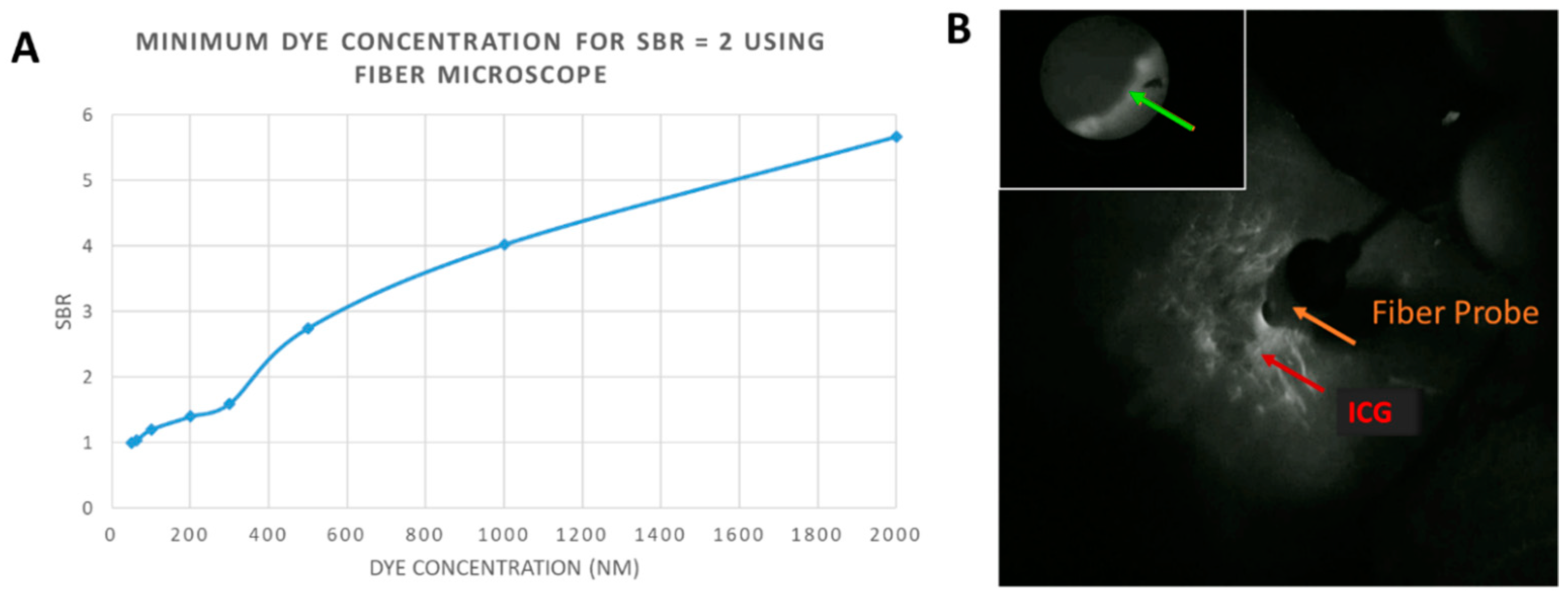

2.5. Multiscale Imaging with Fiber Microscope

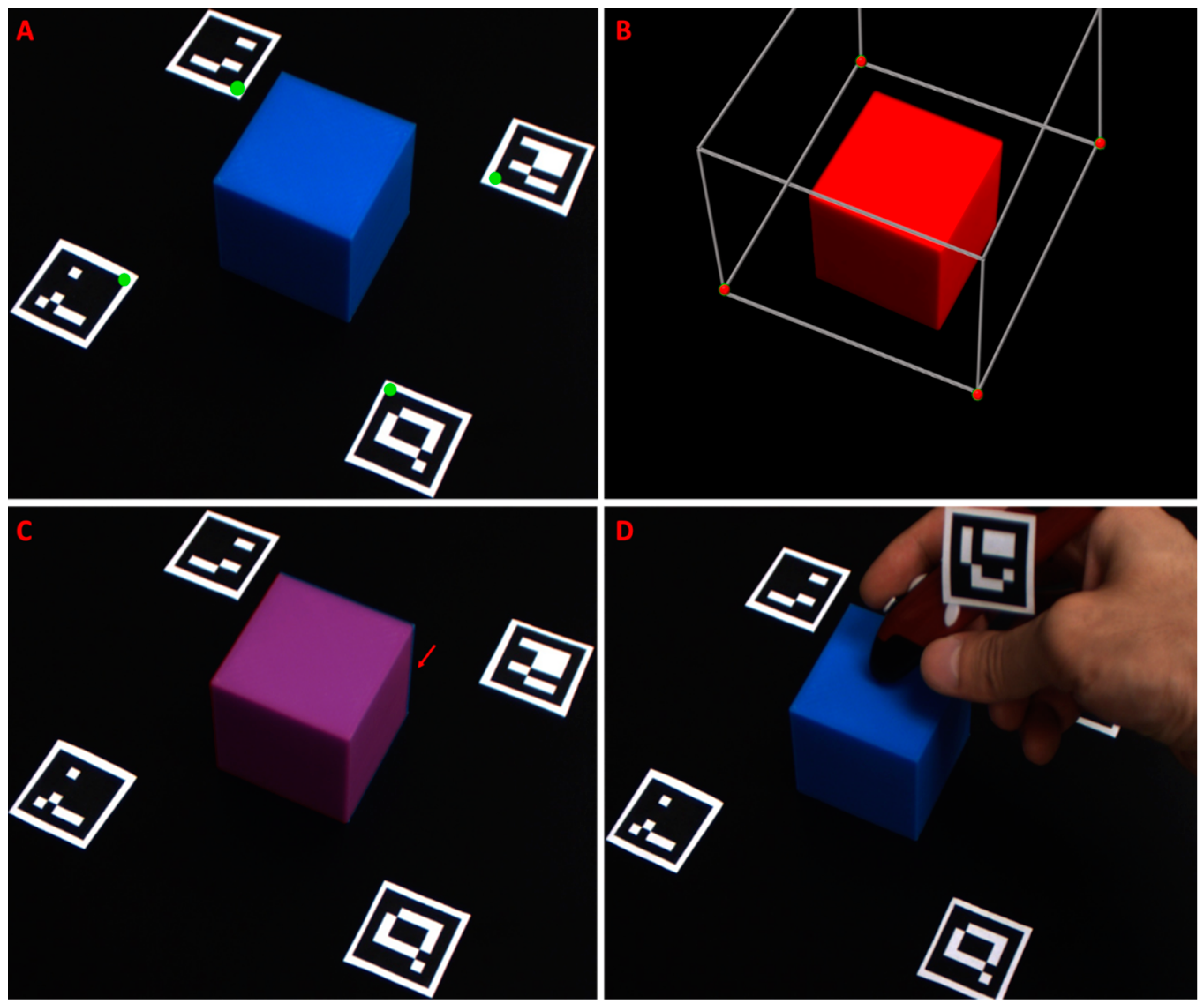

2.6. 3D Object Registration and AR Overlay Using Optical Markers

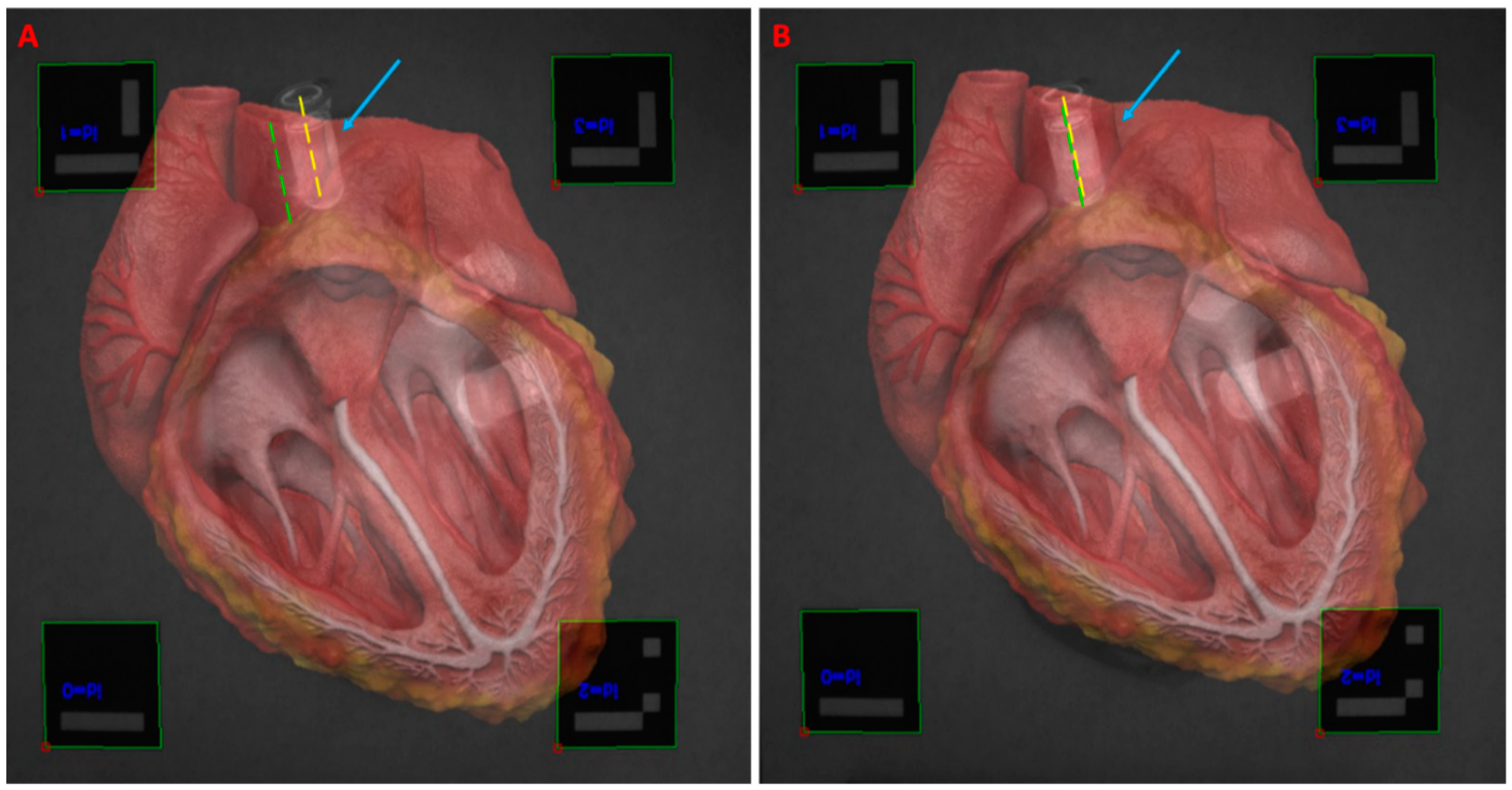

3. Results

4. Discussion

Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | FRE Tx (mm) | FRE Ty (mm) | TRE Tx (mm) | TRE Ty (mm) | R (°) | S (%) |
|---|---|---|---|---|---|---|
| Mean | 1.22 | 1.15 | 1.86 | 1.81 | 2.19 | 3.89 |
| STD | 0.35 | 0.30 | 0.53 | 0.55 | 1.42 | 2.07 |
| Maximum | 1.8 | 1.8 | 2.50 | 2.55 | 6.00 | 7.50 |
| Minimum | 0.7 | 0.8 | 0.80 | 0.80 | 0.50 | 1.10 |

| | FRE Tx (mm) | FRE Ty (mm) | TRE Tx (mm) | TRE Ty (mm) | R (°) | S (%) |
|---|---|---|---|---|---|---|
| Mean | 1.41 | 1.11 | 1.74 | 1.37 | 0.95 | 1.01 |
| STD | 0.31 | 0.37 | 0.47 | 0.36 | 0.63 | 0.00 |
| Maximum | 1.9 | 1.8 | 2.4 | 2 | 2.5 | 1.8 |
| Minimum | 0.8 | 0.5 | 0.8 | 0.8 | 0.1 | 0.4 |
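The tables report fiducial registration error (FRE) and target registration error (TRE) as x and y translation components in mm, together with rotation (R, degrees) and scale (S, %) errors. How these quantities were computed is not given here, so the following is only a hedged illustration. It assumes a paired-point 2D similarity transform (translation, rotation, isotropic scale) fitted by least squares. FRE is taken as the mean absolute x/y residual at the fiducials used for the fit, TRE as the same residual at a held-out target, and R and S as deviations of the recovered rotation and scale from a known ground truth. These error definitions, the function names (`fit_similarity`, `transform`, `mean_abs_xy_error`, `rotation_scale_error`), and the synthetic points are hypothetical and are not taken from the paper.

```python
import cmath
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares 2D similarity fit dst ~ a * src + b, with points as complex numbers.

    a = scale * exp(1j * theta) holds rotation and isotropic scale; b is the translation.
    (Hypothetical helper; an assumed model, not the authors' confirmed method.)
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two paired points")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Closed-form complex least squares on centered coordinates.
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("source points must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def transform(a: complex, b: complex, p: Point) -> Point:
    """Map one 2D point through the fitted similarity transform."""
    q = a * complex(p[0], p[1]) + b
    return (q.real, q.imag)


def mean_abs_xy_error(a: complex, b: complex, src: List[Point], dst: List[Point]) -> Tuple[float, float]:
    """Mean absolute x and y residuals after mapping src onto dst (input units, e.g. mm)."""
    mapped = [transform(a, b, p) for p in src]
    ex = sum(abs(m[0] - d[0]) for m, d in zip(mapped, dst)) / len(dst)
    ey = sum(abs(m[1] - d[1]) for m, d in zip(mapped, dst)) / len(dst)
    return ex, ey


def rotation_scale_error(a: complex, true_deg: float, true_scale: float) -> Tuple[float, float]:
    """Rotation error in degrees (wrapped to [0, 180]) and scale error in percent."""
    est_deg = math.degrees(cmath.phase(a))
    rot_err = abs((est_deg - true_deg + 180.0) % 360.0 - 180.0)
    scale_err_pct = abs(abs(a) - true_scale) / true_scale * 100.0
    return rot_err, scale_err_pct


if __name__ == "__main__":
    # Synthetic example (hypothetical values): 10 deg rotation, 1.05 scale, (2, -1) mm shift.
    true_a = 1.05 * cmath.exp(1j * math.radians(10.0))
    true_b = complex(2.0, -1.0)
    fiducials = [(0.0, 0.0), (20.0, 0.0), (20.0, 20.0), (0.0, 20.0)]
    noise = [(0.3, -0.2), (-0.1, 0.4), (0.2, 0.1), (-0.4, -0.3)]  # simulated localization error, mm
    observed = [transform(true_a, true_b, p) for p in fiducials]
    observed = [(x + nx, y + ny) for (x, y), (nx, ny) in zip(observed, noise)]

    a, b = fit_similarity(fiducials, observed)
    target = (10.0, 30.0)  # held out: not used in the fit
    print("FRE (x, y) mm:", mean_abs_xy_error(a, b, fiducials, observed))
    print("TRE (x, y) mm:", mean_abs_xy_error(a, b, [target], [transform(true_a, true_b, target)]))
    print("R (deg), S (%):", rotation_scale_error(a, 10.0, 1.05))
```

Representing 2D points as complex numbers keeps the closed-form fit short: the single coefficient `a` carries rotation in its argument and scale in its modulus. A full 3D registration, or an RMS-distance definition of FRE/TRE, would need a different formulation.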

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mela, C.; Papay, F.; Liu, Y. Novel Multimodal, Multiscale Imaging System with Augmented Reality. Diagnostics 2021, 11, 441. https://doi.org/10.3390/diagnostics11030441
