Development of an Artificial Intelligence System for the Automatic Evaluation of Cervical Vertebral Maturation Status

Abstract

1. Introduction

2. Materials and Methods

2.1. Patients and Dataset

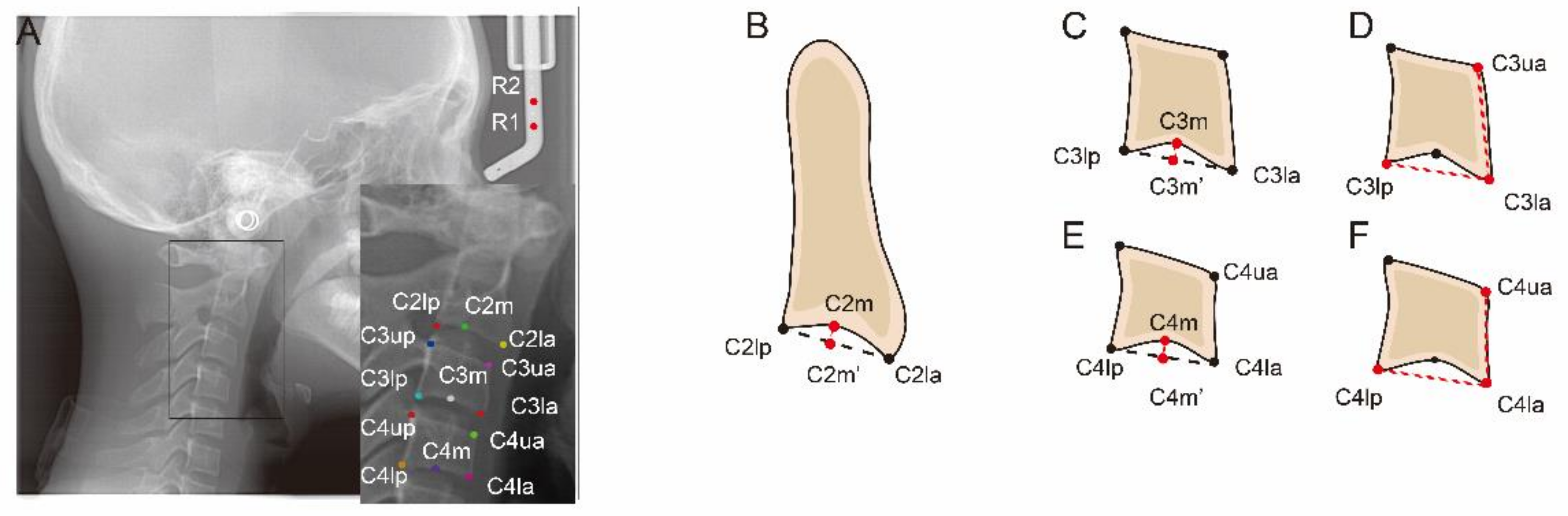

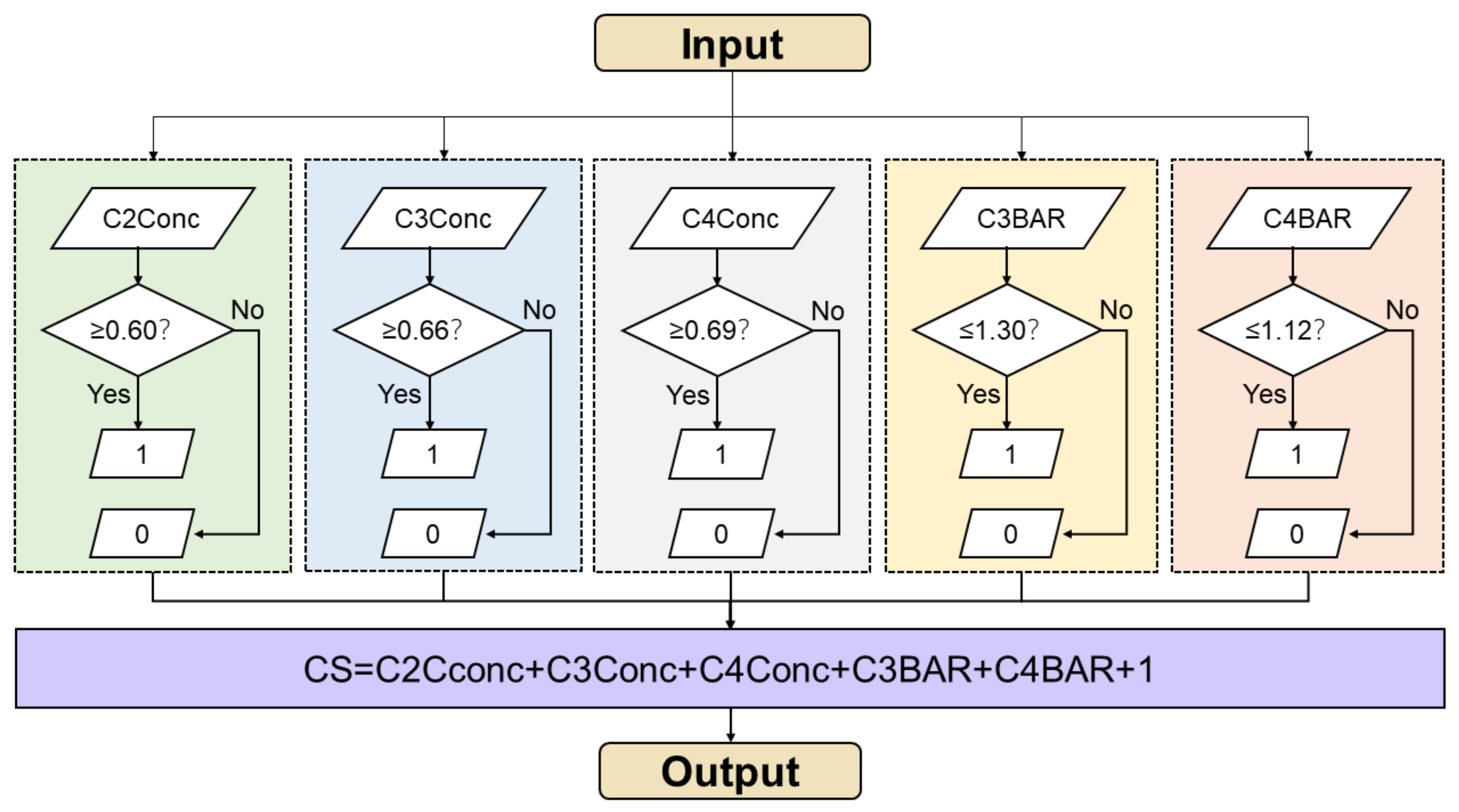

2.2. Manual CVM Staging

2.3. Manual Labelling

2.4. Model Training and Testing

2.5. Statistical Analysis

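In the formulas below, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

- Accuracy = $\frac{TP + TN}{TP + TN + FP + FN}$, which is a general evaluation of AI performance.

- Precision = $\frac{TP}{TP + FP}$ = positive predictive value (PPV), which reflects the ability of AI to correctly predict positives.

- Recall = $\frac{TP}{TP + FN}$ = sensitivity, which reflects the ability of AI to find all the positive samples.

- Specificity = $\frac{TN}{TN + FP}$, which reflects the ability of AI to find all the negative samples.

- F1 score = $\frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$, which is the harmonic mean of precision and recall and gives a balanced evaluation of AI performance.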
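
As an illustration of the metric definitions above, the following minimal Python sketch computes the per-class (one-vs-rest) metrics and the overall accuracy from a multi-class confusion matrix. The function names `per_class_metrics` and `overall_accuracy`, the row/column convention, and the toy matrix are assumptions made for illustration only; they are not taken from the study's code or data.

```python
from typing import Dict, List


def per_class_metrics(confusion: List[List[int]]) -> List[Dict[str, float]]:
    """One-vs-rest metrics for each class of a square confusion matrix.

    Assumed convention: confusion[i][j] counts samples whose true class
    is i and whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                       # true k, predicted other
        fp = sum(confusion[i][k] for i in range(n)) - tp  # true other, predicted k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({
            "precision": precision,
            "recall": recall,
            "specificity": specificity,
            "f1": f1,
        })
    return results


def overall_accuracy(confusion: List[List[int]]) -> float:
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total if total else 0.0


# Hypothetical 3-class matrix, not study data.
toy = [[5, 1, 0],
       [2, 3, 1],
       [0, 0, 8]]
for label, metrics in enumerate(per_class_metrics(toy)):
    print(label, {name: round(value, 2) for name, value in metrics.items()})
print("accuracy:", overall_accuracy(toy))
```

For a six-stage problem such as CVM staging, the same functions would apply to a 6 × 6 matrix, with each stage treated in turn as the positive class.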

3. Results

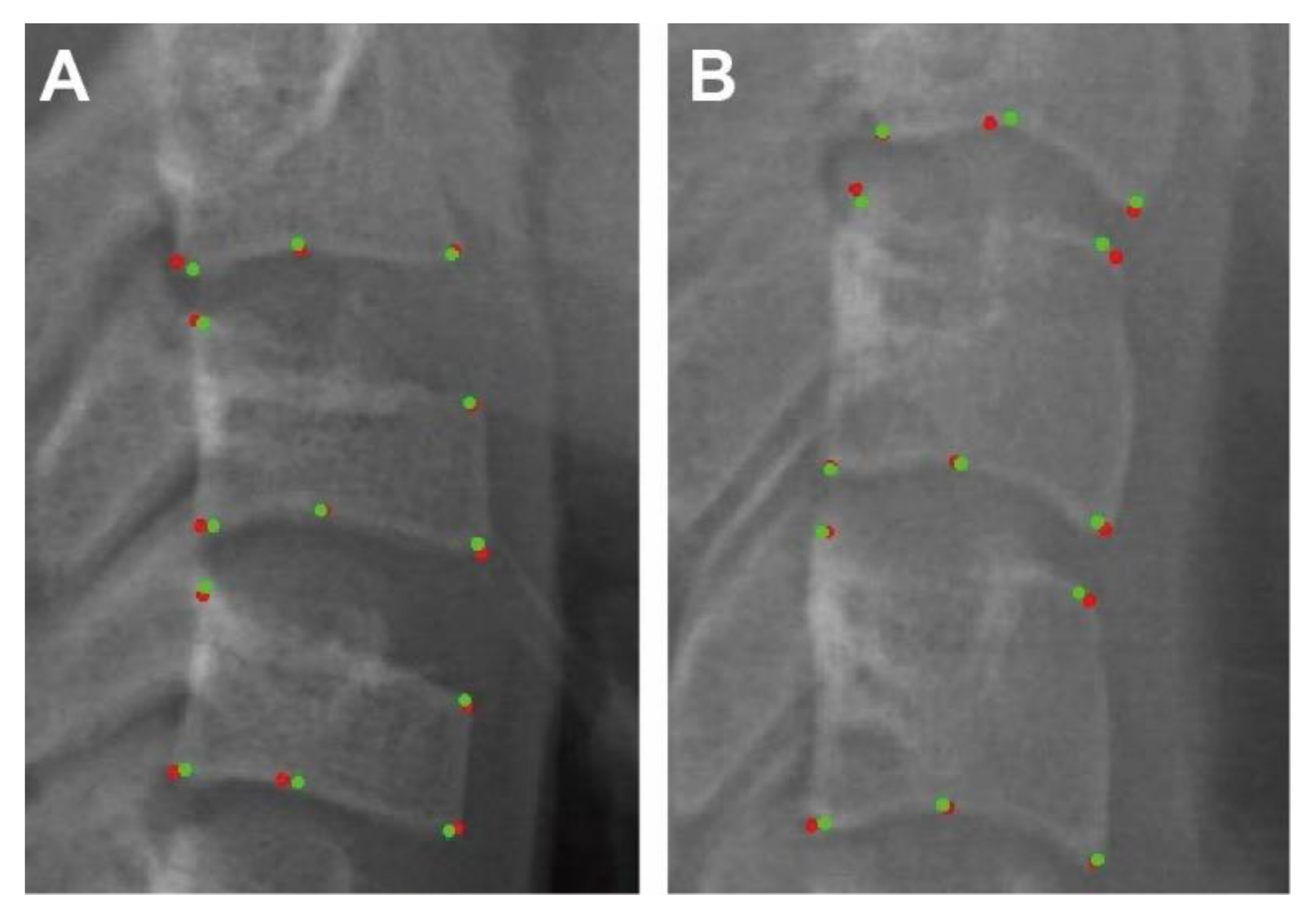

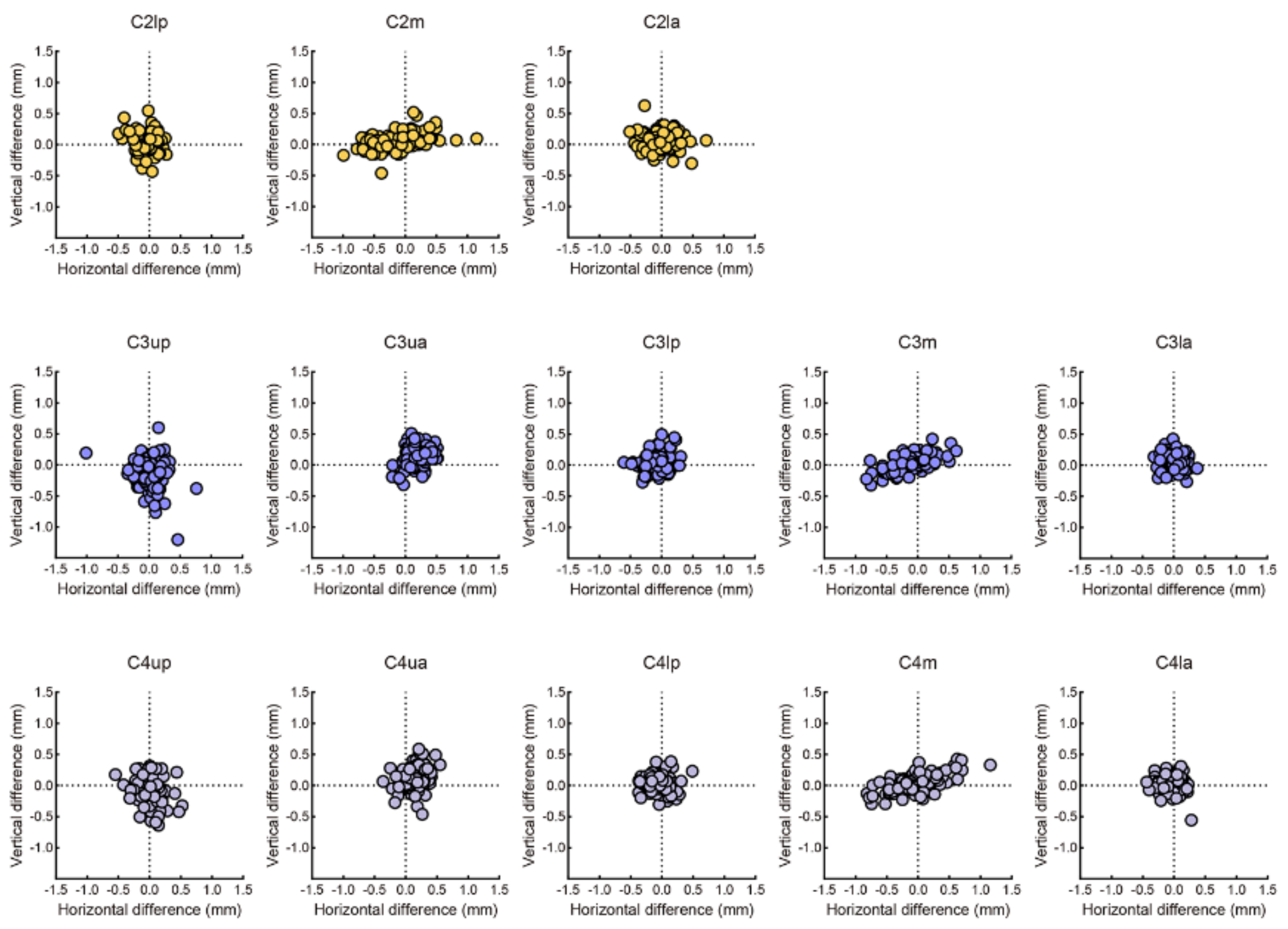

3.1. Evaluation of Labelling

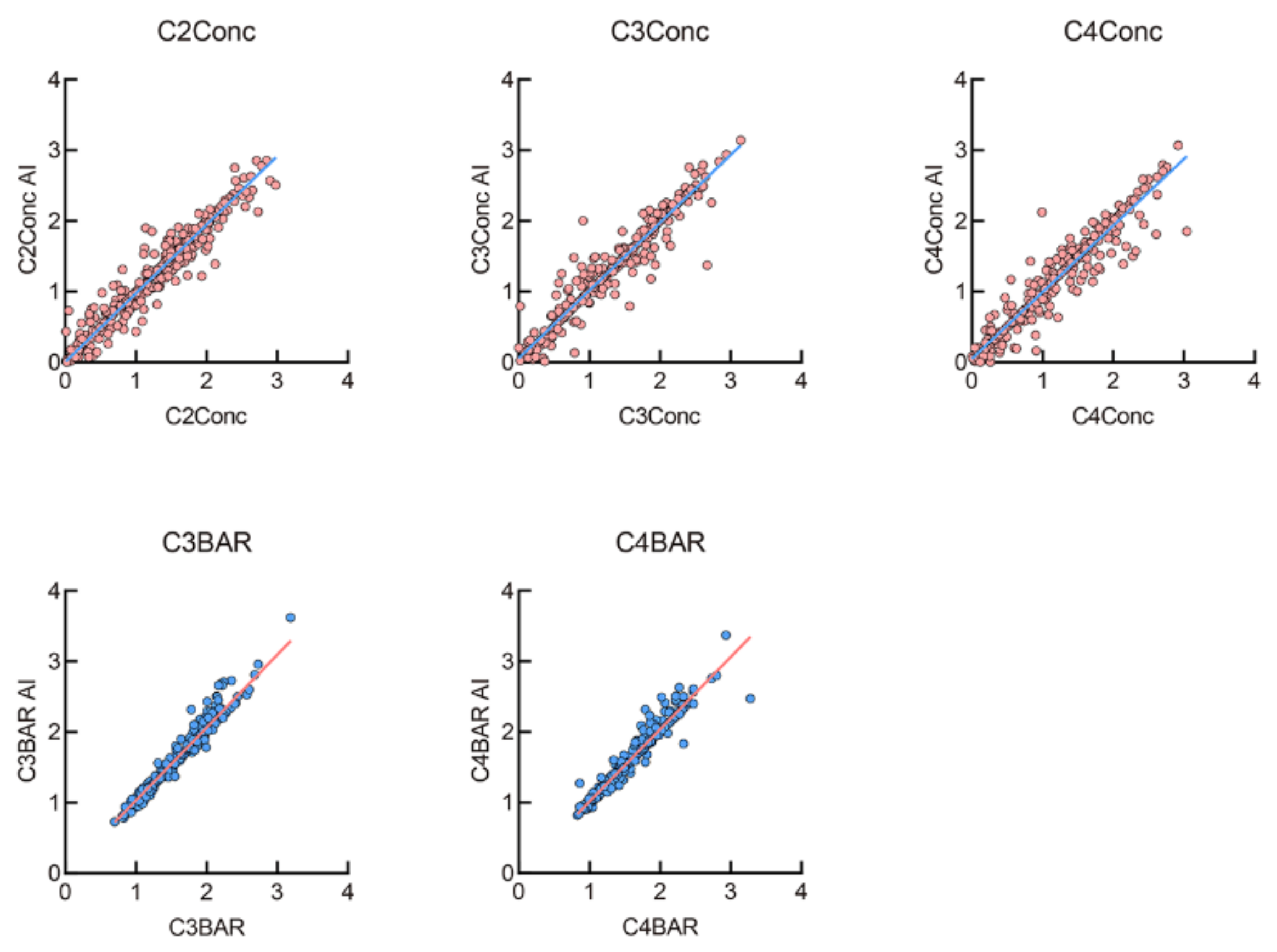

3.2. Evaluation of Measurements

3.3. Evaluation of AI Staging

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Training | Testing |
|---|---|---|
| Age (years, mean ± SD) | 12.02 ± 4.71 | 14.27 ± 4.78 |
| Gender (M/F) | 432/548 | 43/57 |
| CS1 | 242 | 13 |
| CS2 | 214 | 11 |
| CS3 | 164 | 5 |
| CS4 | 108 | 24 |
| CS5 | 52 | 12 |
| CS6 | 200 | 35 |
| Total | 980 | 100 |

| Landmark/Measurement | Definition |
|---|---|
| C2lp | The most posterior point of C2 on the lower border |
| C2la | The most anterior point of C2 on the lower border |
| C2m | The deepest point of the concavity at the lower border of C2 |
| C2Conc | The distance between C2m and the line connecting C2lp and C2la |
| C3up | The most posterior point of C3 on the upper border |
| C3ua | The most anterior point of C3 on the upper border |
| C3lp | The most posterior point of C3 on the lower border |
| C3la | The most anterior point of C3 on the lower border |
| C3m | The deepest point of the concavity at the lower border of C3 |
| C3Conc | The distance between C3m and the line connecting C3lp and C3la |
| C3BAR | Ratio between the length of the base (distance C3lp − C3la) and the anterior height (distance C3ua − C3la) of the body of C3 |
| C4up | The most posterior point of C4 on the upper border |
| C4ua | The most anterior point of C4 on the upper border |
| C4lp | The most posterior point of C4 on the lower border |
| C4la | The most anterior point of C4 on the lower border |
| C4m | The deepest point of the concavity at the lower border of C4 |
| C4Conc | The distance between C4m and the line connecting C4lp and C4la |
| C4BAR | Ratio between the length of the base (distance C4lp − C4la) and the anterior height (distance C4ua − C4la) of the body of C4 |
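
The measurement definitions in the table above reduce to two geometric operations: a point-to-line distance (the concavity depths) and a ratio of two point-to-point distances (the base-to-anterior-height ratios). The Python sketch below shows one way to compute them from landmark coordinates. The function names, the coordinate convention (x, y in millimetres), and the example coordinates are hypothetical and serve only to illustrate the definitions; the study's own implementation is not described here.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y), assumed to be in millimetres


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two landmarks."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def concavity(m: Point, lp: Point, la: Point) -> float:
    """Perpendicular distance from m to the line through lp and la.

    Matches the table's definition of C2Conc, C3Conc, and C4Conc.
    """
    base = distance(lp, la)
    if base == 0:
        raise ValueError("lp and la coincide; the base line is undefined")
    # |cross product| / base length gives the point-to-line distance.
    cross = (la[0] - lp[0]) * (m[1] - lp[1]) - (la[1] - lp[1]) * (m[0] - lp[0])
    return abs(cross) / base


def base_anterior_ratio(lp: Point, la: Point, ua: Point) -> float:
    """Base length (lp to la) divided by anterior height (ua to la).

    Matches the table's definition of C3BAR and C4BAR.
    """
    height = distance(ua, la)
    if height == 0:
        raise ValueError("ua and la coincide; the anterior height is zero")
    return distance(lp, la) / height


# Hypothetical C3 landmarks, not study data.
c3lp, c3la = (0.0, 0.0), (12.0, 0.0)
c3m, c3ua = (6.0, 1.5), (12.0, -9.0)
print("C3Conc:", concavity(c3m, c3lp, c3la))                     # 1.5
print("C3BAR:", round(base_anterior_ratio(c3lp, c3la, c3ua), 3))  # 1.333
```

If landmarks are detected in pixel coordinates, they would need to be scaled by the image's pixel spacing before the concavity depths are read in millimetres; the ratio is unaffected by uniform scaling.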

| Landmark | Between human examiners: mean (mm) | Between human examiners: SD (mm) | Between AI and human examiner: mean (mm) | Between AI and human examiner: SD (mm) |
|---|---|---|---|---|
| C2lp | 0.38 | 0.23 | 0.29 | 0.25 |
| C2m | 0.70 | 0.44 | 0.47 | 0.33 |
| C2la | 0.41 | 0.28 | 0.35 | 0.27 |
| C3up | 0.56 | 0.40 | 0.48 | 0.38 |
| C3ua | 0.54 | 0.28 | 0.42 | 0.24 |
| C3lp | 0.41 | 0.24 | 0.24 | 0.15 |
| C3m | 0.57 | 0.37 | 0.37 | 0.28 |
| C3la | 0.32 | 0.19 | 0.24 | 0.17 |
| C4up | 0.50 | 0.29 | 0.42 | 0.36 |
| C4ua | 0.53 | 0.30 | 0.43 | 0.27 |
| C4lp | 0.37 | 0.21 | 0.25 | 0.21 |
| C4m | 0.62 | 0.45 | 0.46 | 0.27 |
| C4la | 0.35 | 0.20 | 0.27 | 0.18 |
| Total | 0.48 | 0.12 | 0.36 | 0.09 |
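
The Total row of the table above appears to be consistent with the mean and the sample standard deviation of the 13 per-landmark mean errors, rather than with a pooled statistic over all individual measurements. That reading is an inference from the numbers, not something the table states. A short Python check, with the values copied from the table in row order:

```python
from statistics import mean, stdev

# Per-landmark mean errors in mm, copied from the table in row order.
human_vs_human = [0.38, 0.70, 0.41, 0.56, 0.54, 0.41, 0.57,
                  0.32, 0.50, 0.53, 0.37, 0.62, 0.35]
ai_vs_human = [0.29, 0.47, 0.35, 0.48, 0.42, 0.24, 0.37,
               0.24, 0.42, 0.43, 0.25, 0.46, 0.27]


def summarize(values):
    """Mean and sample SD (n - 1 denominator), rounded to two decimals."""
    return round(mean(values), 2), round(stdev(values), 2)


print(summarize(human_vs_human))  # (0.48, 0.12)
print(summarize(ai_vs_human))     # (0.36, 0.09)
```

Both results match the Total row, so the 0.12 mm and 0.09 mm figures are best read as the spread across landmarks.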

| | Precision/PPV | Recall/Sensitivity | Specificity | F1 Score |
|---|---|---|---|---|
| CS1 | 0.67 | 0.92 | 0.93 | 0.77 |
| CS2 | 1.00 | 0.36 | 1.00 | 0.53 |
| CS3 | 0.25 | 0.40 | 0.94 | 0.31 |
| CS4 | 0.83 | 0.63 | 0.96 | 0.71 |
| CS5 | 0.46 | 1.00 | 0.84 | 0.63 |
| CS6 | 1.00 | 0.74 | 1.00 | 0.85 |
| Accuracy | 0.71 | | | |
| ICC | 0.98 | | | |
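
The staging results above can be cross-checked against the metric definitions and the test-set stage counts in the dataset table (13, 11, 5, 24, 12, and 35 images for CS1 to CS6). The Python sketch below recomputes each F1 score from the reported precision and recall, and estimates the number of correctly staged images per stage as recall × stage count, rounded to the nearest integer. The rounding step is an assumption needed because the table reports two-decimal values; the variable names are illustrative.

```python
# Values copied from the dataset table (testing column) and the staging table.
test_counts = {"CS1": 13, "CS2": 11, "CS3": 5, "CS4": 24, "CS5": 12, "CS6": 35}
precision = {"CS1": 0.67, "CS2": 1.00, "CS3": 0.25,
             "CS4": 0.83, "CS5": 0.46, "CS6": 1.00}
recall = {"CS1": 0.92, "CS2": 0.36, "CS3": 0.40,
          "CS4": 0.63, "CS5": 1.00, "CS6": 0.74}
reported_f1 = {"CS1": 0.77, "CS2": 0.53, "CS3": 0.31,
               "CS4": 0.71, "CS5": 0.63, "CS6": 0.85}


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


# Gap between the recomputed and the reported F1 score for each stage.
f1_gap = {stage: abs(f1_score(precision[stage], recall[stage]) - reported_f1[stage])
          for stage in test_counts}

# Estimated true positives per stage (assumption: recall * count, rounded).
true_positives = {stage: round(recall[stage] * test_counts[stage])
                  for stage in test_counts}

accuracy = sum(true_positives.values()) / sum(test_counts.values())

print("largest F1 gap:", round(max(f1_gap.values()), 4))
print("estimated true positives:", true_positives)
print("reconstructed accuracy:", accuracy)
```

Under these assumptions the recomputed F1 scores agree with the reported ones to within 0.01 (the residual comes from using rounded inputs), and the estimated true positives sum to 71 of 100 test images, which matches the reported accuracy of 0.71.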

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Zhou, H.; Pu, L.; Gao, Y.; Tang, Z.; Yang, Y.; You, M.; Yang, Z.; Lai, W.; Long, H. Development of an Artificial Intelligence System for the Automatic Evaluation of Cervical Vertebral Maturation Status. Diagnostics 2021, 11, 2200. https://doi.org/10.3390/diagnostics11122200
