Abstract

The fast, reliable, and accurate identification of intrinsically disordered protein regions (IDPRs) is essential, as it has become increasingly recognized in recent years that IDPRs have a wide impact on many important physiological processes, such as molecular recognition and molecular assembly, the regulation of transcription and translation, protein phosphorylation, and cellular signal transduction. Because experimental identification is expensive and time-consuming, it is imperative to develop computational approaches for identifying IDPRs. In this study, a deep neural structure in which a variant VGG19 is situated between two MLP networks is developed for identifying IDPRs. Furthermore, for the first time, three novel sequence features (persistent entropy and the probabilities associated with two and three consecutive amino acids of the protein sequence) are introduced for identifying IDPRs. The simulation results show that our neural structure either performs considerably better than other known methods or, when relying on a much smaller training set, attains a similar performance. Our deep neural structure, which exploits the VGG19 structure, is therefore effective for identifying IDPRs, and the three novel sequence features could serve as valuable sequence features in the further development of IDPR identification.

1. Introduction

Protein regions that lack stable three-dimensional structures are referred to as intrinsically disordered protein regions (IDPRs) [1]. In recent years, it has become increasingly recognized that IDPRs have a major impact on many important physiological processes [2,3], such as molecular recognition and molecular assembly, the regulation of transcription and translation, protein phosphorylation, and cellular signal transduction [4,5,6]. Furthermore, some human diseases, such as certain types of cancer, Parkinson’s disease, and cardiovascular disease [7,8,9], have been found to be linked with IDPRs. However, the experimental methods used to identify IDPRs are usually expensive and time-consuming [10]. Thus, the fast, reliable, and accurate identification of IDPRs by computational methods is a valuable complement to experimental studies.

There are many computational methods for identifying IDPRs. These methods can be divided into three categories: (1) Physicochemical-based methods, such as FoldIndex [11], GlobPlot [12], IUPred [13], FoldUnfold [14], and IsUnstruct [15], which rely on the physicochemical properties of amino acids for identifying disorder. (2) Machine learning-based methods, for instance DISvgg [16], RFPR-IDP [17], IDP-Seq2Seq [18], SPOT-Disorder [19], SPOT-Disorder2 [20], DISOPRED3 [21], SPINE-D [22], ESpritz [23], BVDEA [10], POODLE-S [24], RONN [25], and PONDRs [26], which treat the identification of IDPRs as labeling each amino acid of a protein sequence or as a classification problem. (3) Meta methods, including MFDp [27], MetaPrDOS [28], and Meta-Disorder predictor [29], which fuse multiple predictors to yield the final prediction for IDPRs.

While all of the above methods have contributed to the development of the field, informative sequence features likely remain unexplored. Because the amino acids in a sequence interact with one another, the question of how to describe these interactions is key to improving predictions based on protein sequences.

In this paper, we develop a deep neural structure for identifying IDPRs in which a variant VGG19 [30] is situated between two multilayer perceptron (MLP) networks. In the variant VGG19, we remove the fully connected (FC) layers of VGG19 but preserve the other parts of the VGG19 structure and the related parameters. In comparison with ResNet, the parameters of VGGNet can be manipulated easily. Each MLP network consists of an input layer, hidden layers, and an output layer. The two MLPs transform the features into formats suitable as inputs for the variant VGG19 and for the classification network, respectively. Compared with our previous DISpre algorithm [31] and DISvgg algorithm [16], the network incorporates VGG19 rather than consisting of a single MLP network, and it uses one VGG19 instead of ten VGG16 networks. Moreover, to further improve the prediction performance, we introduce new features. For the first time, three sequence features, namely the persistent entropy based on persistent homology and the probabilities associated with two and three consecutive amino acids of the protein sequence (PCAA2, PCAA3), are introduced for identifying IDPRs. These three novel sequence features, together with those used in [32] (i.e., two sequence features, seven physicochemical propensities, and three propensities of amino acids, as well as twenty evolutionary features), are used as the inputs for our neural structure. The simulation results obtained for two blind testing sets, R80 [25] and MXD494 [33], show that our neural structure either performs considerably better than other well-known methods [17,20] or, when relying on a much smaller training set (DIS1616) compared to the one used in [18], attains a similar performance.

2. Datasets and Input Features

In this section, the datasets used for training and blind testing are presented, and the features extracted from them are described. In particular, we introduce three novel features, which are used for the first time for identifying IDPRs: persistent entropy based on persistent homology, PCAA2, and PCAA3.

2.1. Datasets

The dataset DIS1616 from DisProt [34] (accessed in June 2020) is employed for training and cross-validation, while the datasets R80 [25] and MXD494 [33] are used for blind testing. The training dataset DIS1616 consists of 1616 protein sequences, which contain 182,316 disordered and 706,362 ordered amino acids. DIS1616 is randomly split into two subsets, DIS1450 and DIS166, which contain 1450 and 166 protein sequences and are used for training and testing, respectively. The blind testing dataset R80 has 78 protein sequences, in which there are 3566 disordered and 29,243 ordered amino acids. The blind testing dataset MXD494 has 494 protein sequences, in which there are 44,087 disordered and 152,414 ordered amino acids.
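Because the evaluation later relies on balanced accuracy and the Matthews correlation coefficient, it can help to see how imbalanced these datasets are. The following sketch only recomputes the disordered fraction from the residue counts quoted above; the dictionary layout and the function name are illustrative and are not part of the original pipeline.

```python
# Residue counts quoted in Section 2.1, stored as (disordered, ordered).
RESIDUE_COUNTS = {
    "DIS1616": (182_316, 706_362),
    "R80": (3_566, 29_243),
    "MXD494": (44_087, 152_414),
}


def disordered_fraction(disordered: int, ordered: int) -> float:
    """Return the share of residues that are labelled as disordered."""
    return disordered / (disordered + ordered)


for name, (n_disordered, n_ordered) in RESIDUE_COUNTS.items():
    fraction = disordered_fraction(n_disordered, n_ordered)
    print(f"{name}: {fraction:.1%} of residues are disordered")
```

Disordered residues are a clear minority in all three sets, which is why plain accuracy alone would be a misleading performance measure here.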

2.2. Input Features Used for the Identification of IDPRs

The features fed to our neural structure for identifying IDPRs can be summarized as five sequence features, seven physicochemical propensities, and three propensities of amino acids, as well as twenty evolutionary features of the given protein sequence. Of these five sequence features, persistent entropy based on persistent homology, PCAA2, and PCAA3 are introduced for the first time for identifying IDPRs. The remaining two sequence features are the Shannon entropy and topological entropy [32]. Topological entropy is used to describe the complexity of the protein sequence. The seven physicochemical properties of the amino acids are steric parameter, polarizability, volume, hydrophobicity, isoelectric point, helix probability, and sheet probability, as described in [35]. The three propensities of the amino acids are Remark 465, Deleage/Roux, and Bfactor(2STD), which are derived from the GlobPlot NAR paper [12]. The twenty evolutionary features are determined through the Position-Specific Scoring Matrix (PSSM) [36], which is computed using the Position-Specific Iterative Basic Local Alignment Search Tool (PSI-BLAST) [37].

2.2.1. The Computation of Persistent Entropy

In this section, we briefly illustrate the procedure used for computing the persistent homology and the associated persistent entropy from a given protein sequence. More information related to the computation of the persistent homology and its persistent entropy can be found in [38,39].

Given a protein sequence $w$ of length $L$, we choose a sliding window of odd length $N$ to extract $N$ consecutive amino acids from $w$. For simplicity, we first transform $w$ into a sequence of size $L+N-1$ through appending $(N-1)/2$ amino acids to both ends of $w$. The appended amino acids at both ends are identical to either the first or last amino acid of the protein sequence $w$. Thus, utilizing a sliding window of size $N$, we can slice the transformed $w$ of size $L+N-1$ into $L$ amino acid subsequences $w_i$ with $1 \le i \le L$. To compute the persistent entropy of $w_i$, we need to map each amino acid in $w_i$ to a set of points, which leads us to define

where the value for k is and is the delta function. We use a one to one correspondence to represent the set of amino acid symbols as:

Thus, each amino acid symbol with in () is mapped to , where we have:

We use and to project different amino acids to different positions on the axis. and are combined with ; then, all amino acids in are projected to different positions on the axis. Thus, we can map each amino acid for in () to a unique element in the set of in through Equations (1)–(3).

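The persistent entropy of the subsequence $w_i$ associated with its point set $P_i$ can be computed as

$$E(F) = -\sum_{j=1}^{n} p_j \log p_j, \tag{4}$$

where $F$ denotes a filtration with its associated persistence diagram $\mathrm{dgm}(F) = \{(x_j, y_j) \mid 1 \le j \le n\}$ (we assume $x_j < y_j$ for all $j$). We have $p_j = \ell_j / S_\ell$, $\ell_j = y_j - x_j$, and $S_\ell = \sum_{j=1}^{n} \ell_j$. A filtration of the simplicial complex $VR_\epsilon(P_i)$ associated with $P_i$ is obtained through increasing the parameter values $\epsilon$, i.e.,

$$VR_{\epsilon_0}(P_i) \subseteq VR_{\epsilon_1}(P_i) \subseteq \cdots \subseteq VR_{\epsilon_m}(P_i), \tag{5}$$

with $\epsilon_0 < \epsilon_1 < \cdots < \epsilon_m$. In Equation (5), the simplicial complex $VR_\epsilon(P_i)$ is chosen to be the Vietoris-Rips complex of $P_i$, which is defined as:

$$VR_\epsilon(P_i) = \{\sigma \subseteq P_i \mid B_\epsilon(u) \cap B_\epsilon(v) \neq \emptyset \ \text{for all}\ u, v \in \sigma\}, \tag{6}$$

where $B_\epsilon(u)$ is the ball centered at $u$ with the radius $\epsilon$. Given a filtration defined by (5), a bar in the $k$-dimensional persistence with endpoints $[x_j, y_j)$ corresponds to a $k$-dimensional hole that appears at filtration time $x_j$ and remains until filtration time $y_j$. The set of bars $[x_j, y_j)$, representing the birth and death times of homology classes, is called the persistence barcode for the filtration of (5). Analogously, the set of points $(x_j, y_j)$ is called the persistence diagram of the filtration of (5). The persistent entropy of the amino acid at position $i$ ($1 \le i \le L$) in $w$ is therefore equal to the persistent entropy of $w_i$ associated with $P_i$.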
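As a rough illustration of the last step, persistent entropy is the Shannon entropy of the normalized bar lengths of a persistence barcode [38]. The sketch below assumes the bars are already available as (birth, death) pairs. The helper `h0_bars_on_line` is hypothetical: it lists only the finite 0-dimensional bars of a Vietoris-Rips filtration for points on a line, under the convention that two balls of radius ε meet once the distance between their centers is at most 2ε, and it drops the single infinite bar. It does not reproduce the amino-acid-to-point mapping of Equations (1)–(3).

```python
import math
from typing import Iterable, List, Tuple

Bar = Tuple[float, float]  # (birth, death) of one homology class


def persistent_entropy(bars: Iterable[Bar]) -> float:
    """Shannon entropy of the normalized bar lengths (natural logarithm)."""
    lengths = [death - birth for birth, death in bars if death > birth]
    total = sum(lengths)
    if total == 0:
        return 0.0  # no finite bar of positive length
    return -sum((l / total) * math.log(l / total) for l in lengths)


def h0_bars_on_line(points: Iterable[float]) -> List[Bar]:
    """Finite 0-dimensional bars for points on a line (illustrative helper).

    Every point is born at time 0; neighbouring components merge when the
    radius reaches half of the gap between consecutive sorted points.
    """
    xs = sorted(points)
    return [(0.0, (b - a) / 2.0) for a, b in zip(xs, xs[1:]) if b > a]


if __name__ == "__main__":
    bars = h0_bars_on_line([6.0, 0.0, 2.0])
    print(bars, persistent_entropy(bars))
```

Bars of equal length maximize the entropy, while a barcode dominated by one long bar drives it toward zero.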

2.2.2. The Computation of the Features Using the Probabilities Associated with the Protein Sequence

The probability associated with two and three consecutive amino acids of the protein sequence depends on the probability of each amino acid occurring in the observed protein, which in turn depends on the protein sequence length and the number of occurrences of each individual amino acid in the protein sequence. We pool all amino acids from all proteins in DIS1616 and, based on this set, calculate the probabilities associated with two and three consecutive amino acids of the protein sequence. Consider a given protein sequence. For convenience, we define two sets:

which represent all the possible combinations of two or three consecutive amino acids in this protein sequence. Two novel features introduced in this paper are:

which can be derived from the probability features and , respectively, associated with two or three consecutive amino acids of the protein sequence . Using the notation function, and in Equations (9) and (10) for can, respectively, be computed using:

In view of (7) and (8), functions and in (11) and (12) are defined as:

where and , respectively, represent and . It is easy to verify and for and . Functions and in (11) and (12) defined over the sets and , respectively, are scaled probability features and , with

where we have and . The probability features and associated with two and three consecutive amino acids of the protein sequence, respectively, are equal to:

In (17) and (18), we have:

where we assume that the set of the protein sequences is denoted by (in this paper, we have DIS1616). For a given protein sequence , the functions and , which, respectively, count the total number of occurrences of a particular combination of two or three consecutive amino acids in , are equal to:

where and , respectively, represent for and for .
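The counting step behind these probabilities can be sketched as follows. This is a minimal illustration that pools all overlapping pairs or triples of consecutive amino acids over a set of sequences and divides each count by the pooled total. The function names are hypothetical, and the scaling step and the exact per-residue assignment used for PCAA2 and PCAA3 are not reproduced here.

```python
from collections import Counter
from typing import Dict, Iterable, List


def kmer_probabilities(sequences: Iterable[str], k: int) -> Dict[str, float]:
    """Empirical probability of each run of k consecutive amino acids,
    pooled over all sequences (k=2 for pairs, k=3 for triples)."""
    counts: Counter = Counter()
    for seq in sequences:
        for start in range(len(seq) - k + 1):
            counts[seq[start:start + k]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {kmer: n / total for kmer, n in counts.items()}


def kmer_track(seq: str, probs: Dict[str, float], k: int) -> List[float]:
    """Probability of the k-mer starting at each position of one sequence.

    Unseen k-mers get probability 0.0 (an assumption of this sketch).
    """
    return [probs.get(seq[start:start + k], 0.0)
            for start in range(len(seq) - k + 1)]


if __name__ == "__main__":
    toy_corpus = ["AAB", "AB"]  # toy stand-in for the pooled training set
    pair_probs = kmer_probabilities(toy_corpus, k=2)
    print(pair_probs)
    print(kmer_track("AAB", pair_probs, k=2))
```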

2.2.3. Pre-Processing the Data Extracted from the Protein Sequences

In this section, we illustrate how to compute the input of our deep neural network, which is composed of 35 features derived from a protein sequence. Of these 35 features, twenty are evolutionary features determined through the PSSM [36] computed by PSI-BLAST [37]. The seven physicochemical properties of the amino acids are steric parameter, polarizability, volume, hydrophobicity, isoelectric point, helix probability, and sheet probability, which can be obtained from [35]. The three propensities of the amino acids are Remark 465, Deleage/Roux, and Bfactor (2STD), as detailed in the GlobPlot NAR paper [12]. Two further features, used to measure the complexity of the protein sequence, are Shannon entropy and topological entropy [32]. The remaining three features are the persistent entropy, PCAA2, and PCAA3 introduced above.

Given a protein sequence $w$ of length $L$, we choose a sliding window of odd size $N$ to extract $N$ consecutive amino acids. Then, for the amino acids in the sliding window, we compute the evolutionary features, physicochemical properties, and propensities, as defined in the previous paragraph. These thirty computed feature values of the amino acids in the sliding window are averaged, and the averaged results are used to represent the feature values of the amino acid in the center of the sliding window. For simplicity, we first transform $w$ into a sequence of size $L+N-1$ by appending $(N-1)/2$ zeros to both ends of the protein sequence. With this sliding window of size $N$, we also compute the Shannon and topological entropy through the procedure from Equations (1)–(14), as described in the paper [32], as well as the persistent entropy defined in (4). Thus, for each amino acid $w(i)$ with $1 \le i \le L$ in the protein sequence $w$, we can combine it with a feature matrix

where for , , and , respectively, align to a 20-dimensional PSSM of the evolutionary information [36,37], seven physiochemical properties [35], three propensities of amino acids from the paper [12], and three entropies (Shannon, topological [32], and persistent entropy). We also use Equations (11) and (12) to compute two novel features and () that are associated with two or three consecutive amino acids of the protein sequence and set and . Finally, we modify the feature matrix defined in (23) to a feature matrix:

with

where () is defined in (23). The input to our deep neural network is (), as defined in (25).
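The window-averaging step described in this section can be sketched in a few lines. The example below assumes that the per-residue feature values are stored as a list of rows (one row per amino acid) and that the sequence is zero-padded with (N−1)/2 rows at each end so that every residue sits at the center of one window of odd size N. The function name and the data layout are illustrative choices, not taken from the original code.

```python
from typing import List


def window_average(values: List[List[float]], window: int) -> List[List[float]]:
    """Average each feature over a centered sliding window of odd size.

    `values` holds one row of feature values per amino acid. The sequence
    is zero-padded at both ends, so the output has the same length as the
    input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window size must be a positive odd integer")
    if not values:
        return []
    half = (window - 1) // 2
    zero_row = [0.0] * len(values[0])
    padded = [zero_row] * half + values + [zero_row] * half
    averaged = []
    for center in range(len(values)):
        block = padded[center:center + window]  # window centered on residue
        averaged.append([sum(column) / window for column in zip(*block)])
    return averaged


if __name__ == "__main__":
    toy = [[1.0], [2.0], [3.0]]  # three residues, one feature each
    print(window_average(toy, window=3))
```

Note that with zero padding the averages near both termini are pulled toward zero, because the padded positions still count toward the divisor N.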

3. The Structure of Our Neural Network and Training Procedure

In this section, we develop a deep neural structure composed of a variant VGG19, where the variant VGG19 is situated between two MLP networks used for identifying IDPRs. Then, we introduce the process of training the deep neural network.

3.1. The Structure of Our Deep Neural Network

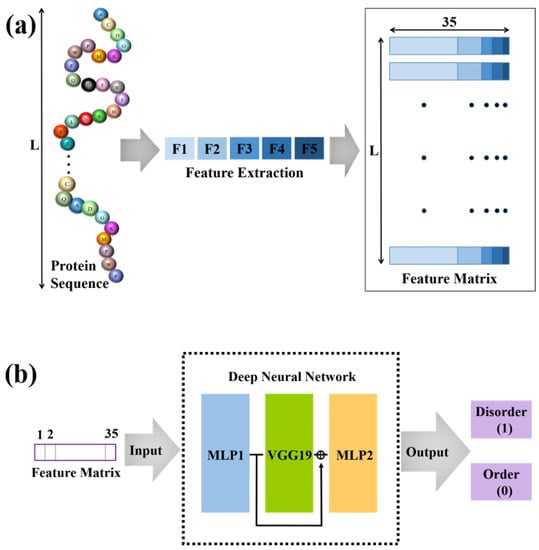

The overall architecture of our model, shown in Figure 1, is a cascade in which a variant VGG19 is situated between two MLP networks. In the variant VGG19, we remove the fully connected (FC) layers of VGG19 but preserve the remaining VGG19 structure and its associated weights and biases.

Figure 1. The overall framework for the prediction of intrinsically disordered proteins. (a) We extract five types of features from the protein sequence and obtain the feature matrix with 35 features for each amino acid. (b) The obtained feature matrix is input into the deep neural network, whose output is used to predict IDPRs.

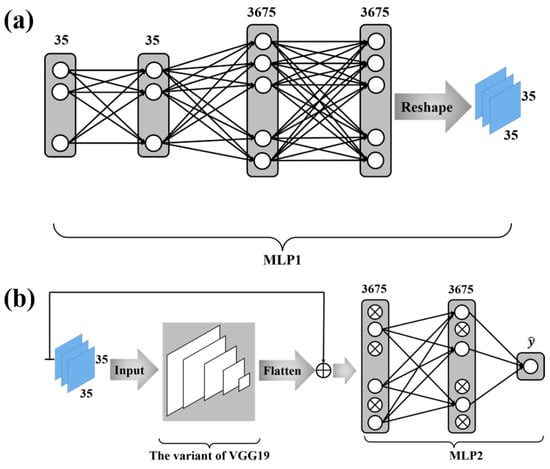

Figure 2a depicts the structure of the MLP network whose outputs are fed as the inputs to the variant VGG19. This MLP network with two hidden layers takes each column (i.e., the 35 features of one amino acid) defined in (25) as its input and yields a vector as its output. The output vector of this MLP network is then mapped to a matrix through the reshape function of Keras, and this matrix is fed as the input to the variant VGG19. The two hidden layers contain 35 and 3675 neurons, respectively. The neurons in this MLP use the rectified linear unit (ReLU) as their activation function.

Figure 2. The deep neural network configuration. (a) The first part of the configuration: MLP1 converts the protein sequence features into a form suitable for VGG19 input. (b) The second part of the configuration: a variant of VGG19 is used for further feature extraction and MLP2 for classification. Dropout is used in MLP2.
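The shapes involved in passing the first MLP's output through the variant VGG19 can be checked with simple arithmetic. The sketch below rests on an inference that the surviving text does not state explicitly: since 3675 = 35 × 35 × 3, the 3675-neuron layer is assumed to be reshaped into a 35 × 35 image with 3 channels. It also assumes the standard VGG19 convolutional layout [30] (five blocks of 3 × 3 convolutions with "same" padding, each followed by 2 × 2 max pooling with stride 2).

```python
from typing import Tuple

# Standard VGG19 convolutional blocks: (number of 3x3 conv layers, filters).
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]

MLP1_UNITS = 3675             # size of the second hidden layer of the first MLP
ASSUMED_INPUT = (35, 35, 3)   # assumed reshape target (height, width, channels)


def vgg19_conv_output_shape(height: int, width: int) -> Tuple[int, int, int]:
    """Output shape of the VGG19 convolutional stack without its FC layers."""
    channels = 3
    for _n_convs, filters in VGG19_BLOCKS:
        channels = filters                        # 'same' convs keep H and W
        height, width = height // 2, width // 2   # 2x2 max pool, stride 2
    return height, width, channels


if __name__ == "__main__":
    h, w, c = ASSUMED_INPUT
    print("reshape is size-consistent:", h * w * c == MLP1_UNITS)
    print("conv stack output shape:", vgg19_conv_output_shape(h, w))
```

Under these assumptions, the spatial size shrinks as 35, 17, 8, 4, 2, 1, so the convolutional stack would emit a single 512-dimensional feature vector per amino acid.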

The output of the variant VGG19 is a vector. As shown in Figure 2b, the skip connection is employed, where the sum of the output from the variant VGG19 and the output from the MLP network connecting to the features defined in (25) is fed as the input to a novel MLP network. This MLP network contains one hidden layer with 3675 neurons, whose activation functions are chosen to be the ReLU. The output layer has only 1 neuron with the sigmoid function as its activation function—i.e.,

where () is the output of this sigmoid function and the index i is the i-th amino acid in the protein sequence . The dropout algorithm [40] with a dropout percentage of is employed for this MLP network.

The total loss function of our model for a package of size m (i.e., the number of amino acids used in each iteration during the training) is therefore defined as:

In Equation (27), the predicted probability of the output is equal to:

where is equal to either 1, suggesting that the i-th amino acid is disordered, or to 0, implying that it is ordered.
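For readers who want a concrete picture of the output activation and the package loss, the following sketch assumes the logistic sigmoid for the single output neuron and the standard binary cross-entropy averaged over a package of m amino acids. The cross-entropy form is an assumption here, chosen because it is the usual companion of a sigmoid output with 0/1 labels. The function names are illustrative.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def package_loss(probabilities: Sequence[float],
                 labels: Sequence[int],
                 eps: float = 1e-12) -> float:
    """Average binary cross-entropy over one package.

    labels[i] is 1 for a disordered amino acid and 0 for an ordered one;
    probabilities[i] is the sigmoid output for that amino acid.
    """
    if len(probabilities) != len(labels) or not labels:
        raise ValueError("need equally sized, non-empty inputs")
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


if __name__ == "__main__":
    outputs = [sigmoid(z) for z in (2.0, -1.0, 0.0)]
    print(package_loss(outputs, [1, 0, 1]))
```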

3.2. Training Procedure

In this section, we present the process of training the deep neural network developed in the previous section. The training dataset we use in this paper is DIS1450 from DisProt [34]. We pool all amino acids from all proteins in DIS1450 and randomly divide them into packages of 128 amino acids. The training procedure is as follows: For each amino acid in a given package, we use the deep neural network constructed above to calculate the predicted probability defined in Equation (28). Once all predicted probabilities for the package have been calculated, we use Equation (27) to compute the average loss for the package. This average loss is used to update the weights and biases of our network via a stochastic gradient descent (SGD) algorithm [41], where the learning rate . We repeat the above process until all the packages have been processed, and we refer to one such pass as an epoch. Then, we repeat epochs until the loss function stops decreasing or the maximum number of epochs is reached.
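The package-and-epoch loop described above can be outlined as follows. This is only a skeleton under stated assumptions: `step` is a hypothetical callback that performs one SGD update on a package and returns its average loss, the packages are formed once before training, and the stopping tolerance `tol` is an illustrative value rather than one taken from the paper.

```python
import random
from typing import Callable, List, Tuple


def make_packages(n_residues: int, package_size: int = 128,
                  seed: int = 0) -> List[List[int]]:
    """Randomly split residue indices into packages of `package_size`."""
    indices = list(range(n_residues))
    random.Random(seed).shuffle(indices)
    return [indices[start:start + package_size]
            for start in range(0, n_residues, package_size)]


def train(n_residues: int, step: Callable[[List[int]], float],
          max_epochs: int, tol: float = 1e-4,
          package_size: int = 128) -> Tuple[int, float]:
    """Run epochs until the mean loss stops decreasing or max_epochs is hit.

    Returns the number of epochs run and the last mean epoch loss.
    """
    if max_epochs < 1 or n_residues < 1:
        raise ValueError("need at least one epoch and one residue")
    packages = make_packages(n_residues, package_size)
    previous = float("inf")
    epochs_run, epoch_loss = 0, previous
    for _ in range(max_epochs):
        # One epoch: one update per package, then average the package losses.
        epoch_loss = sum(step(package) for package in packages) / len(packages)
        epochs_run += 1
        if previous - epoch_loss < tol:
            break  # loss no longer decreasing
        previous = epoch_loss
    return epochs_run, epoch_loss


if __name__ == "__main__":
    print([len(p) for p in make_packages(300)])
```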

3.3. Performance Evaluation

Four metrics were used to evaluate the performance of IDPR prediction [42]. These were sensitivity ($Sens$), specificity ($Spec$), balanced accuracy ($BACC$), and Matthews correlation coefficient ($MCC$). The related formulas are as follows:

$$Sens = \frac{TP}{TP + FN}$$

$$Spec = \frac{TN}{TN + FP}$$

$$BACC = \frac{Sens + Spec}{2}$$

$$MCC = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

We use $TP$, $FP$, $TN$, and $FN$ to represent the number of true positives, false positives, true negatives, and false negatives, respectively. The values of $MCC$ can be any number between $-1$ and 1. The prediction accuracy for both ordered and disordered residues increases as the $MCC$ value becomes closer to 1.
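The four metrics follow directly from the confusion-matrix counts. The sketch below uses their standard definitions, with disordered residues treated as the positive class. The function name and the convention of returning 0.0 when a denominator vanishes are choices made for this illustration.

```python
import math
from typing import Dict


def disorder_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Sensitivity, specificity, balanced accuracy, and MCC from counts."""
    sens = tp / (tp + fn) if (tp + fn) else 0.0
    spec = tn / (tn + fp) if (tn + fp) else 0.0
    bacc = (sens + spec) / 2.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"Sens": sens, "Spec": spec, "BACC": bacc, "MCC": mcc}


if __name__ == "__main__":
    # Toy counts, not results from the paper.
    print(disorder_metrics(tp=50, fp=10, tn=90, fn=50))
```

Unlike plain accuracy, both the balanced accuracy and the MCC penalize a predictor that labels every residue as ordered, which matters when ordered residues dominate the data.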

4. Experimental Results

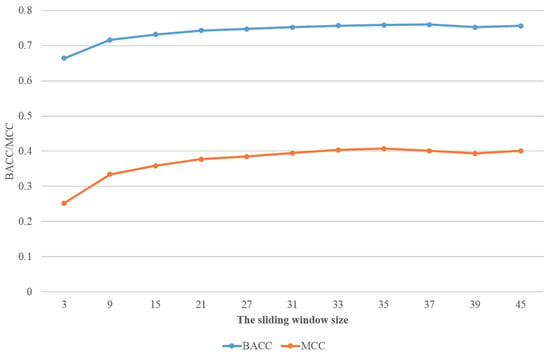

In this section, we will demonstrate the performance of our deep neural network on the different test sets: DIS166 [34], R80 [25], and MXD494 [33]. As a comparison, we also present the simulation results of the best known predictors for these datasets, such as RFPR-IDP (available at http://bliulab.net/RFPR-IDP/server (accessed on 26 March 2021)), SPOT-Disorder2 (available at https://sparks-lab.org/server/spot-disorder2/ (accessed on 26 March 2021)), DISvgg [16], and IDP-Seq2Seq [18]. For convenience, we refer to our method as MLP-VGG19-MLP. A ten-fold cross validation was performed on the training dataset DIS1450. The results of MLP-VGG19-MLP with different window sizes are shown in Table 1. In addition, the values achieved for and with different sliding window sizes are shown in Figure 3. When the sliding window size was larger than 33, the values tended to be smooth. Thus, we used the sliding window size of in subsequent simulations.

Table 1. Performance on dataset DIS1450 with different sliding window sizes.

Figure 3.

The performance with different sliding window sizes on and .

On the test sets DIS166, R80, and MXD494, the performance of MLP-VGG19-MLP was superior to that of RFPR-IDP, SPOT-Disorder2, and DISvgg. The value of MLP-VGG19-MLP is on the test set DIS166, on the blind test set R80, and on the blind test set MXD494. The simulation results show that MLP-VGG19-MLP either considerably outperforms these methods or, when relying on a much smaller training dataset compared to the one used in [18], attains a performance similar to that of IDP-Seq2Seq [18]. Table 2, Table 3 and Table 4, respectively, present the performances of all these methods on test sets DIS166, R80, and MXD494.

Table 2. Performance of various methods on dataset DIS166.

Table 3. Performance of various methods on blind test dataset R80.

Table 4. Performance of various methods on blind test dataset MXD494.

5. Conclusions

In this study, a deep neural structure in which a variant VGG19 is situated between two MLP networks is developed for identifying IDPRs. Furthermore, for the first time, three novel sequence features (persistent entropy, PCAA2, and PCAA3) are introduced for identifying IDPRs. MLP-VGG19-MLP outperformed our previous DISvgg algorithm while using only one VGG19, whereas ten VGG16 networks were employed in the previous paper. The simulation results show that our neural structure either considerably outperforms other known methods, such as RFPR-IDP and SPOT-Disorder2, or, when relying on a much smaller training set, attains a performance similar to that of IDP-Seq2Seq. The three novel sequence features could serve as valuable sequence features in the further development of IDPR identification.

Author Contributions

Z.W. designed the study, carried out the data analysis, and drafted the manuscript. J.Z. participated in the design of the study and revised the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets DIS1616, DIS1450, and DIS166, together with the models and code, can be found at https://github.com/ZakeWang/MLP_VGG19_MLP.git (accessed on 9 January 2022).

Acknowledgments

We would like to thank the DisProt database (http://www.disprot.org/, accessed on 9 January 2022), which is the basis of our research.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Dyson, H.J.; Wright, P.E. Intrinsically unstructured proteins and their functions. Nat. Rev. Mol. Cell Biol. 2005, 6, 197–208.

2. Iakoucheva, L.M.; Brown, C.J.; Lawson, J.D.; Obradović, Z.; Dunker, A.K. Intrinsic disorder in cell-signaling and cancer-associated proteins. J. Mol. Biol. 2002, 323, 573–584.

3. Piovesan, D.; Tabaro, F.; Mičetić, I.; Necci, M.; Quaglia, F.; Oldfield, C.J.; Aspromonte, M.C.; Davey, N.E.; Davidović, R.; Dosztányi, Z. DisProt 7.0: A major update of the database of disordered proteins. Nucleic Acids Res. 2017, 45, D219–D227.

4. Uversky, V.N. Functional roles of transiently and intrinsically disordered regions within proteins. FEBS J. 2015, 282, 1182–1189.

5. Holmstrom, E.D.; Liu, Z.; Nettels, D.; Best, R.B.; Schuler, B. Disordered RNA chaperones can enhance nucleic acid folding via local charge screening. Nat. Commun. 2019, 10, 1–11.

6. Sun, X.L.; Jones, W.T.; Rikkerink, E.H.A. GRAS proteins: The versatile roles of intrinsically disordered proteins in plant signalling. Biochem. J. 2012, 442, 1–12.

7. Uversky, V.N.; Oldfield, C.J.; Dunker, A.K. Intrinsically disordered proteins in human diseases: Introducing the D2 concept. Annu. Rev. Biophys. 2008, 37, 215–246.

8. Uversky, V.N.; Oldfield, C.J.; Midic, U.; Xie, H.; Xue, B.; Vucetic, S.; Iakoucheva, L.M.; Obradovic, Z.; Dunker, A.K. Unfoldomics of human diseases: Linking protein intrinsic disorder with diseases. BMC Genom. 2009, 10, S7.

9. Kulkarni, V.; Kulkarni, P. Intrinsically disordered proteins and phenotypic switching: Implications in cancer. Prog. Mol. Biol. Transl. Sci. 2019, 166, 63–84.

10. Kaya, I.E.; Ibrikci, T.; Ersoy, O.K. Prediction of disorder with new computational tool: BVDEA. Expert Syst. Appl. 2011, 38, 14451–14459.

11. Prilusky, J.; Felder, C.E.; Zeev-Ben-Mordehai, T.; Rydberg, E.H.; Man, O.; Beckmann, J.S.; Silman, I.; Sussman, J.L. FoldIndex: A simple tool to predict whether a given protein sequence is intrinsically unfolded. Bioinformatics 2005, 21, 3435–3438.

12. Linding, R.; Russell, R.B.; Neduva, V.; Gibson, T.J. GlobPlot: Exploring Protein Sequences for Globularity and Disorder. Nucleic Acids Res. 2003, 31, 3701–3708.

13. Dosztanyi, Z.; Csizmok, V.; Tompa, P.; Simon, I. IUPred: Web server for the prediction of intrinsically unstructured regions of proteins based on estimated energy content. Bioinformatics 2005, 21, 3433–3434.

14. Galzitskaya, O.V.; Garbuzynskiy, S.O.; Lobanov, M.Y. FoldUnfold: Web server for the prediction of disordered regions in protein chain. Bioinformatics 2006, 22, 2948–2949.

15. Lobanov, M.Y.; Galzitskaya, O.V. The Ising model for prediction of disordered residues from protein sequence alone. Phys. Biol. 2011, 8, 35004.

16. Xu, P.; Zhao, J.; Zhang, J. Identification of Intrinsically Disordered Protein Regions Based on Deep Neural Network-VGG16. Algorithms 2021, 14, 107.

17. Liu, Y.; Wang, X.; Liu, B. RFPR-IDP: Reduce the false positive rates for intrinsically disordered protein and region prediction by incorporating both fully ordered proteins and disordered proteins. Briefings Bioinform. 2021, 22, 2000–2011.

18. Tang, Y.J.; Pang, Y.H.; Liu, B. IDP-Seq2Seq: Identification of intrinsically disordered regions based on sequence to sequence learning. Bioinformatics 2020, 36, 5177–5186.

19. Hanson, J.; Yang, Y.; Paliwal, K.; Zhou, Y. Improving protein disorder prediction by deep bidirectional long short-term memory recurrent neural networks. Bioinformatics 2017, 33, 685–692.

20. Hanson, J.; Paliwal, K.K.; Litfin, T.; Zhou, Y. SPOT-Disorder2: Improved Protein Intrinsic Disorder Prediction by Ensembled Deep Learning. Genom. Proteom. Bioinform. 2019, 17, 645–656.

21. Jones, D.T.; Cozzetto, D. DISOPRED3: Precise disordered region predictions with annotated protein-binding activity. Bioinformatics 2015, 31, 857–863.

22. Zhang, T.; Faraggi, E.; Xue, B.; Dunker, A.K.; Uversky, V.N.; Zhou, Y. SPINE-D: Accurate prediction of short and long disordered regions by a single neural-network based method. J. Biomol. Struct. Dyn. 2012, 29, 799–813.

23. Walsh, I.; Martin, A.J.; Domenico, T.D.; Tosatto, S.C. ESpritz: Accurate and fast prediction of protein disorder. Bioinformatics 2012, 28, 503–509.

24. Shimizu, K.; Hirose, S.; Noguchi, T. POODLE-S: Web application for predicting protein disorder by using physicochemical features and reduced amino acid set of a position-specific scoring matrix. Bioinformatics 2007, 23, 2337–2338.

25. Yang, Z.R.; Thomson, R.; McNeil, P.; Esnouf, R.M. RONN: The bio-basis function neural network technique applied to the detection of natively disordered regions in proteins. Bioinformatics 2005, 21, 3369–3376.

26. Peng, K.; Vucetic, S.; Radivojac, P.; Brown, C.J.; Dunker, A.K.; Obradovic, Z. Optimizing long intrinsic disorder predictors with protein evolutionary information. J. Bioinform. Comput. Biol. 2005, 3, 35–60.

27. Mizianty, M.J.; Stach, W.; Chen, K.; Kedarisetti, K.D.; Disfani, F.M.; Kurgan, L. Improved sequence-based prediction of disordered regions with multilayer fusion of multiple information sources. Bioinformatics 2010, 26, 489–496.

28. Kozlowski, L.P.; Bujnicki, J.M. MetaDisorder: A meta-server for the prediction of intrinsic disorder in proteins. BMC Bioinform. 2012, 13, 111.

29. Schlessinger, A.; Punta, M.; Yachdav, G.; Kajan, L.; Rost, B. Improved disorder prediction by combination of orthogonal approaches. PLoS ONE 2009, 4, e4433.

30. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556.

31. He, H.; Zhao, J.; Sun, G. The Prediction of Intrinsically Disordered Proteins Based on Feature Selection. Algorithms 2019, 12, 46.

32. He, H.; Zhao, J.X. A Low Computational Complexity Scheme for the Prediction of Intrinsically Disordered Protein Regions. Math. Probl. Eng. 2018, 2018, 8087391.

33. Peng, Z.L.; Kurgan, L. Comprehensive comparative assessment of in-silico predictors of disordered regions. Curr. Protein Pept. Sci. 2012, 13, 6–18.

34. Hatos, A.; Hajdu-Soltész, B.; Monzon, A.M.; Palopoli, N.; Álvarez, L.; Aykac-Fas, B.; Bassot, C.; Benítez, G.I.; Bevilacqua, M.; Chasapi, A.; et al. DisProt: Intrinsic protein disorder annotation in 2020. Nucleic Acids Res. 2020, 48, D269–D276.

35. Meiler, J.; Muller, M.; Zeidler, A.; Schmaschke, F. Generation and evaluation of dimension-reduced amino acid parameter representations by artificial neural networks. J. Mol. Model. 2001, 7, 360–369.

36. Jones, D.T.; Ward, J.J. Prediction of disordered regions in proteins from position specific score matrices. Proteins Struct. Funct. Genet. 2003, 53, 573–578.

37. Pruitt, K.D.; Tatusova, T.; Klimke, W.; Maglott, D.R. NCBI Reference Sequences: Current status, policy and new initiatives. Nucleic Acids Res. 2009, 37, D32–D36.

38. Atienza, N.; Gonzalez-Diaz, R.; Rucco, M. Persistent entropy for separating topological features from noise in Vietoris-Rips complexes. J. Intell. Inf. Syst. 2019, 52, 637–655.

39. Edelsbrunner, H.; Harer, J. Persistent homology - a survey. Contemp. Math. 2008, 453, 257–282.

40. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

41. Bottou, L.; Curtis, F.E.; Nocedal, J. Optimization Methods for Large-Scale Machine Learning. SIAM Rev. 2018, 60, 223–311.

42. Monastyrskyy, B.; Fidelis, K.; Moult, J.; Tramontano, A.; Kryshtafovych, A. Evaluation of disorder predictions in CASP9. Proteins 2011, 79, 107–118.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).