3.1. Deep Learning Networks and Dataset

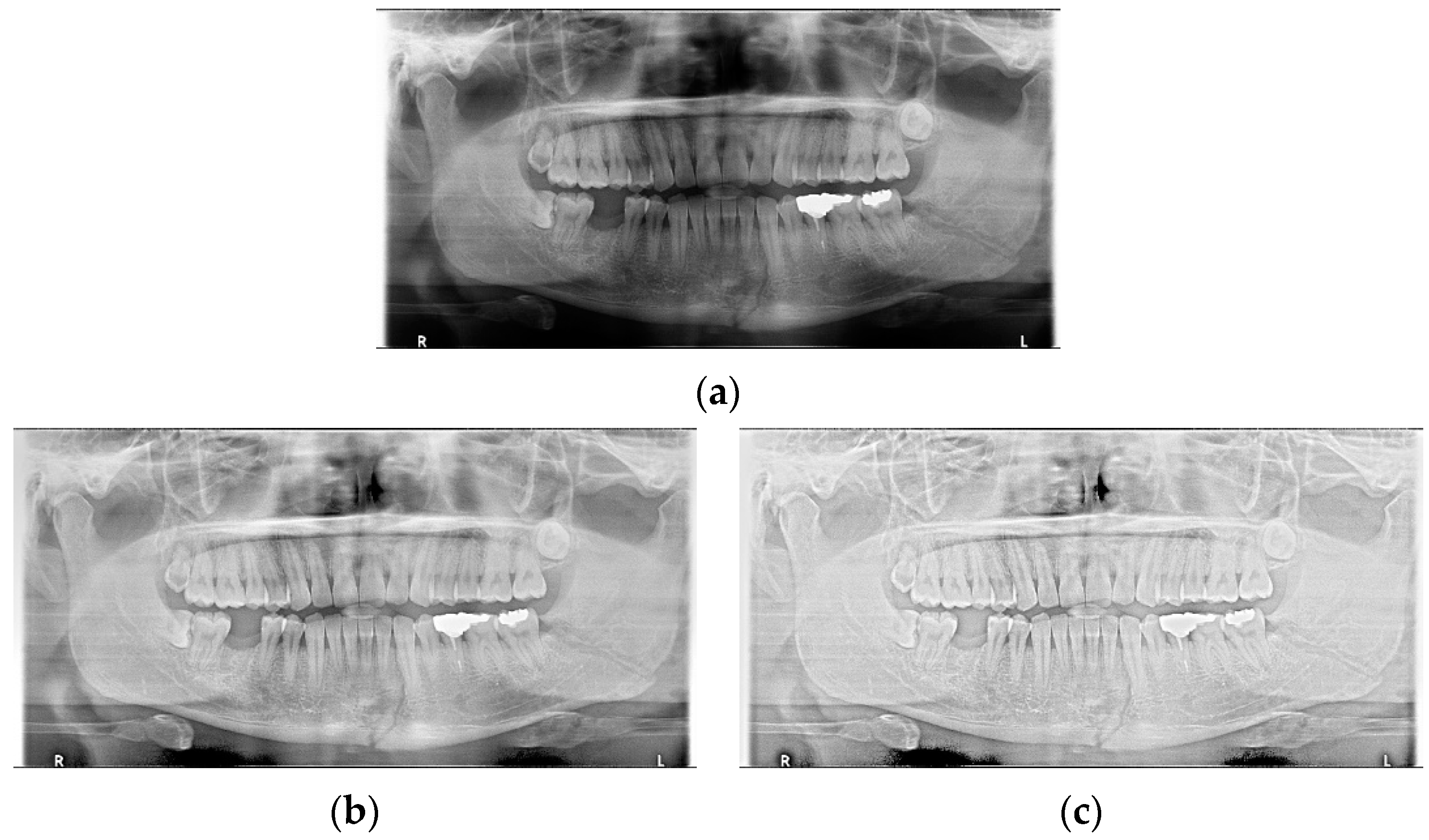

Deep learning methods were implemented on a PC with an Intel i7-9700K processor, 32 GB RAM, and an NVIDIA TITAN RTX GPU. The deep learning networks were the Windows version of YOLOv4 and a U-Net implemented in PyTorch. The training dataset consisted of 360 panoramic radiographs of mandibular fractures, and the test dataset consisted of 60 panoramic radiographs of mandibular fractures. The resolution of the radiographs ranged from to pixels. All datasets were approved by the Institutional Review Board (IRB) of Kyungpook National University Dental Hospital.

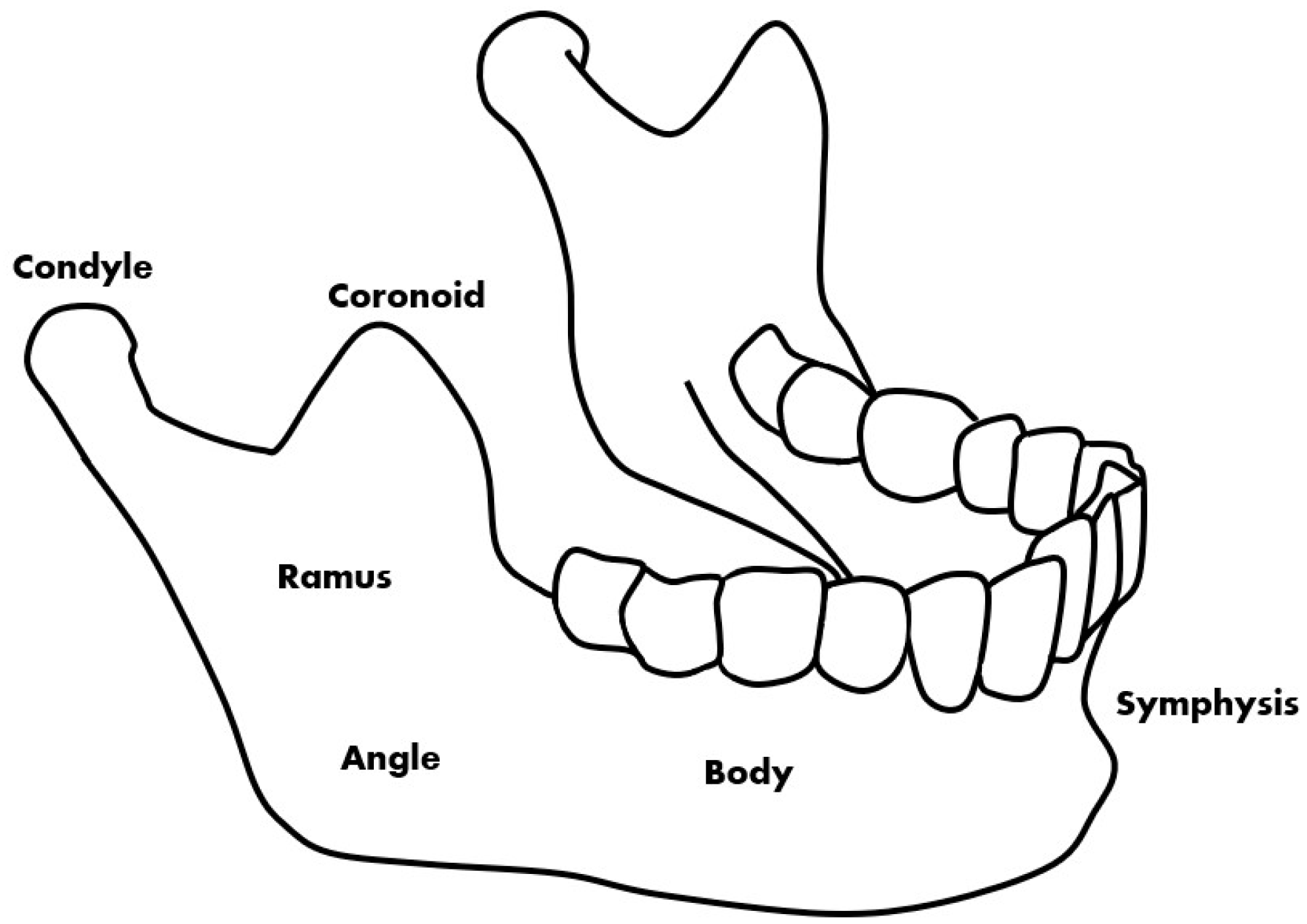

Of the 420 panoramic radiographs of mandibular fractures, 360 images in which the fractures could be clearly identified were selected as training data, and the remaining 60 images were used as test images. The training and test data are completely separate. Mandibular fractures are classified into six anatomical types. In this study, symphysis, body, angle, and ramus fractures are referred to as middle fractures, and condyle and coronoid process fractures are referred to as side fractures. When divided into middle and side fractures, the fracture distributions of the training data and the test data are similar, as shown in Table 4 and Table 5.

In Dongas, P. et al. [1], the mandible is divided into seven regions: symphysis, body, angle, ramus, subcondyle, condyle, and coronoid. In that case, middle fractures (symphysis, body, angle, ramus, and subcondyle) account for 90.4% and side fractures (condyle and coronoid) account for 9.6%. In our study, ramus and subcondyle are grouped together as ramus, giving six regions. The training data contain 91.666% middle fractures and 8.334% side fractures, and the test data contain 84.536% middle fractures and 15.084% side fractures, which is broadly consistent with the reference. The fracture distributions of the training and test data used in this study are therefore close to the general distribution of mandibular fractures.
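The middle/side percentages above are simple counts over per-fracture region labels. The following Python sketch shows that arithmetic, assuming each fracture is recorded with one of the six region names used in this study (ramus including subcondyle); the helper name `middle_side_shares` and the example labels are hypothetical and are not the study's data.

```python
from collections import Counter

# Six-region grouping used in this study (ramus includes subcondyle fractures).
MIDDLE = {"symphysis", "body", "angle", "ramus"}
SIDE = {"condyle", "coronoid"}


def middle_side_shares(region_labels):
    """Return (middle %, side %) for a list of per-fracture region labels."""
    counts = Counter(region_labels)
    unknown = set(counts) - MIDDLE - SIDE
    if unknown:
        raise ValueError(f"unexpected region labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no fracture labels given")
    middle = sum(counts[r] for r in MIDDLE)
    side = sum(counts[r] for r in SIDE)
    return 100 * middle / total, 100 * side / total


# Hypothetical labels for illustration only (not the study's data).
labels = ["symphysis"] * 3 + ["body"] * 4 + ["angle"] * 2 + ["ramus"] + ["condyle"] * 2
print(middle_side_shares(labels))
```

Because each fracture is assigned to exactly one group, the two shares always sum to 100%.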

3.2. Evaluation Metric

Evaluation metrics are used to assess the performance of the deep learning modules. In this study, performance was compared using three metrics: precision, recall, and F1 score.

The precision score reflects misdetections in fracture diagnosis, as shown in Equation (1): the fewer the misdetections, the higher the precision. The recall score reflects ‘undetections’, that is, missed fractures, as shown in Equation (2): the fewer the missed fractures, the higher the recall. A higher precision or a higher recall alone therefore does not show that one module performs better than another, because the two scores usually trade off against each other. The F1 score, the harmonic mean of precision and recall, combines both into a single value and is used here as the overall performance metric. True negatives are not used in these metrics for two reasons: the test panoramic images contain no images of normal patients, and in a fracture-localization task a true negative is not well defined, because it does not correspond to any specific fracture location.
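Assuming Equations (1) and (2) follow the standard definitions, precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = 2·precision·recall/(precision + recall), where FP counts misdetections and FN counts undetections. A minimal Python sketch, assuming detections have already been matched to ground-truth fractures to obtain these counts (the matching rule is not specified here, and the counts below are hypothetical):

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall and F1 from detection counts.

    tp: fractures correctly detected
    fp: misdetections (a detection where there is no fracture)
    fn: undetections (a fracture that was missed)
    True negatives are not needed, matching the evaluation described above.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


# Hypothetical counts, not results from this study.
# A cautious detector: few misdetections, many missed fractures.
print(precision_recall_f1(tp=60, fp=5, fn=40))
# A detector that finds more fractures at the cost of more misdetections.
print(precision_recall_f1(tp=90, fp=10, fn=10))
```

The two hypothetical calls illustrate the trade-off discussed above: the first detector has the higher precision but the lower F1 score because it misses many fractures.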

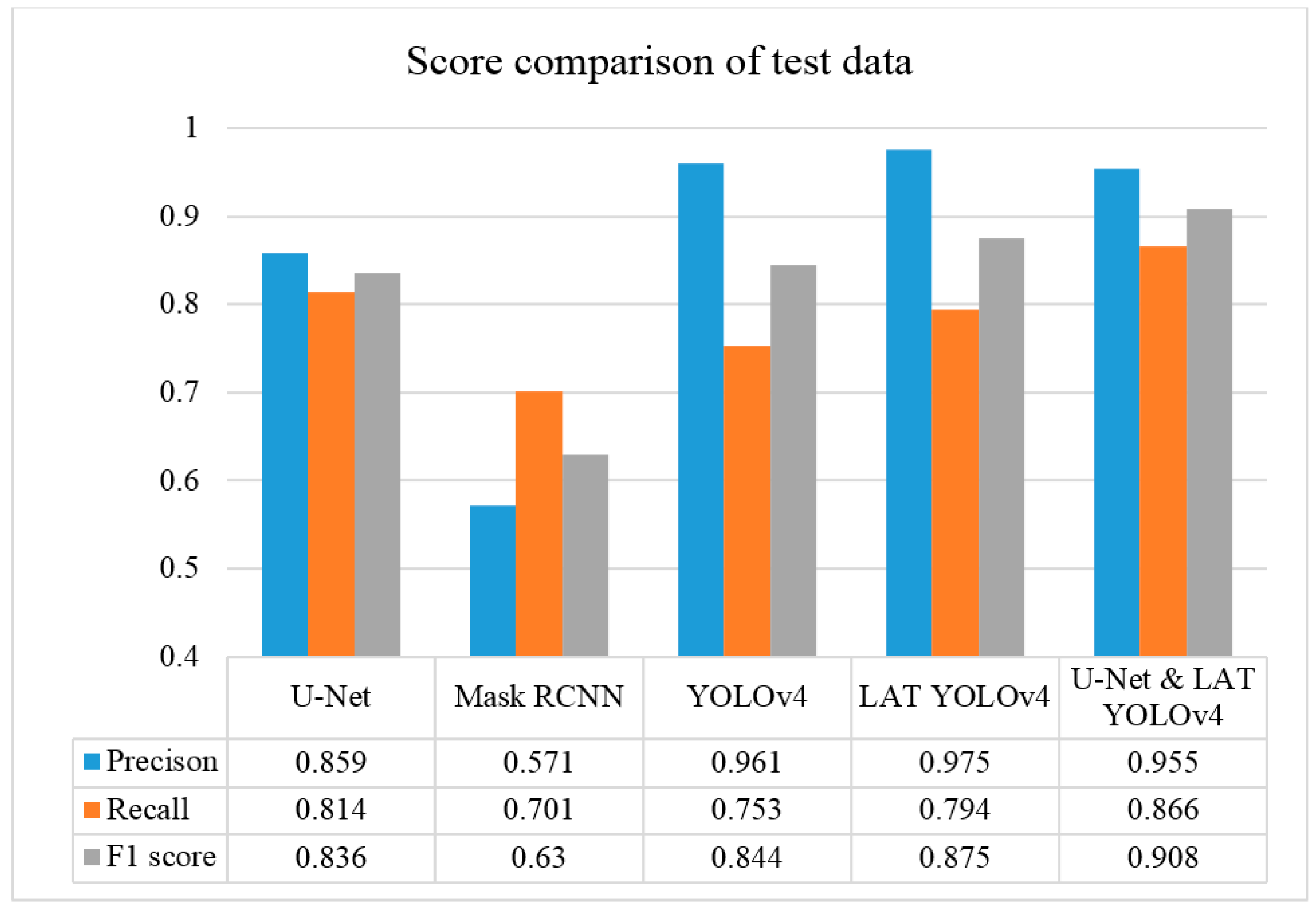

3.3. Comparison of Deep Learning Modules

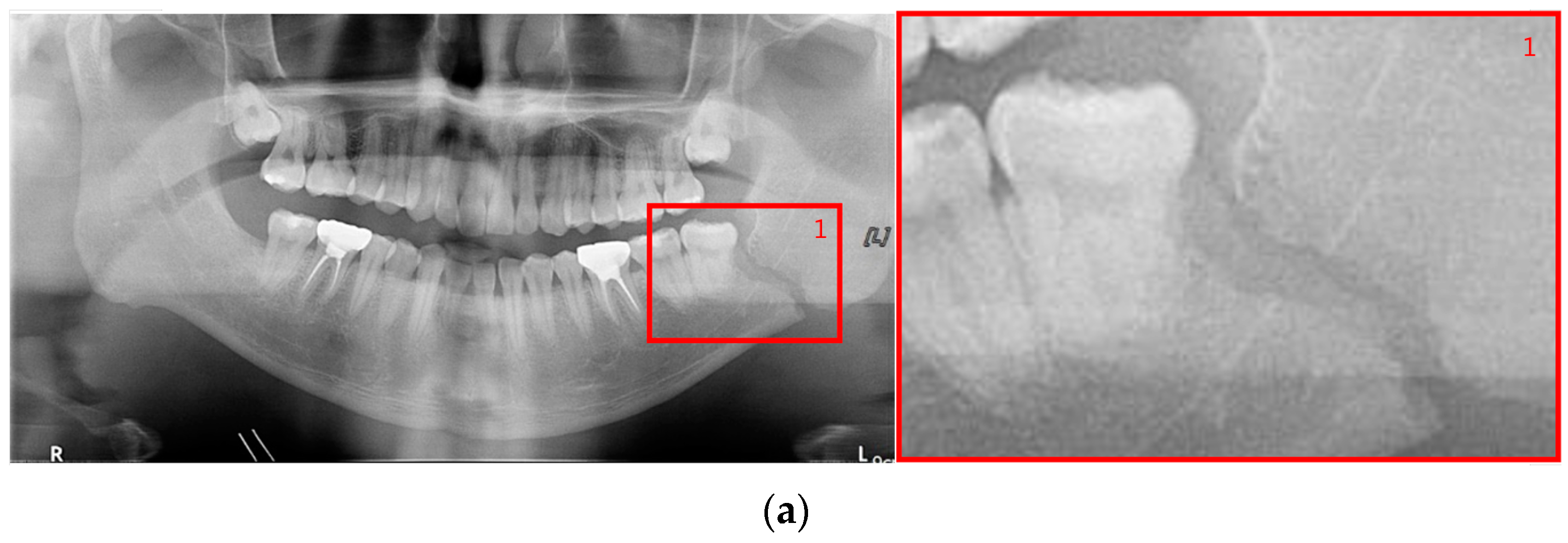

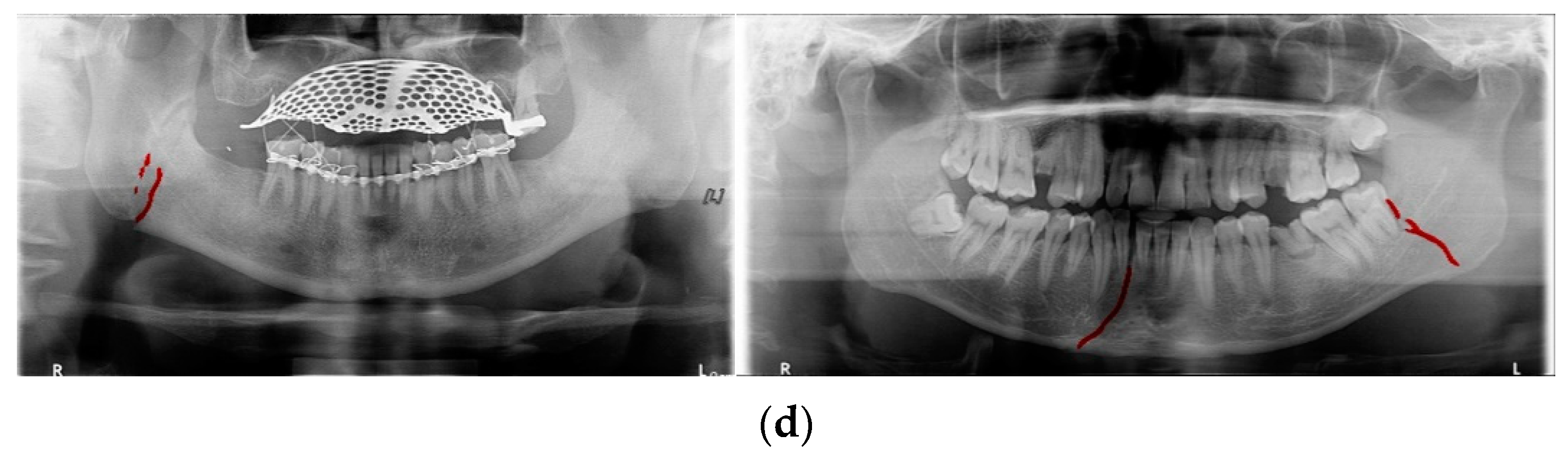

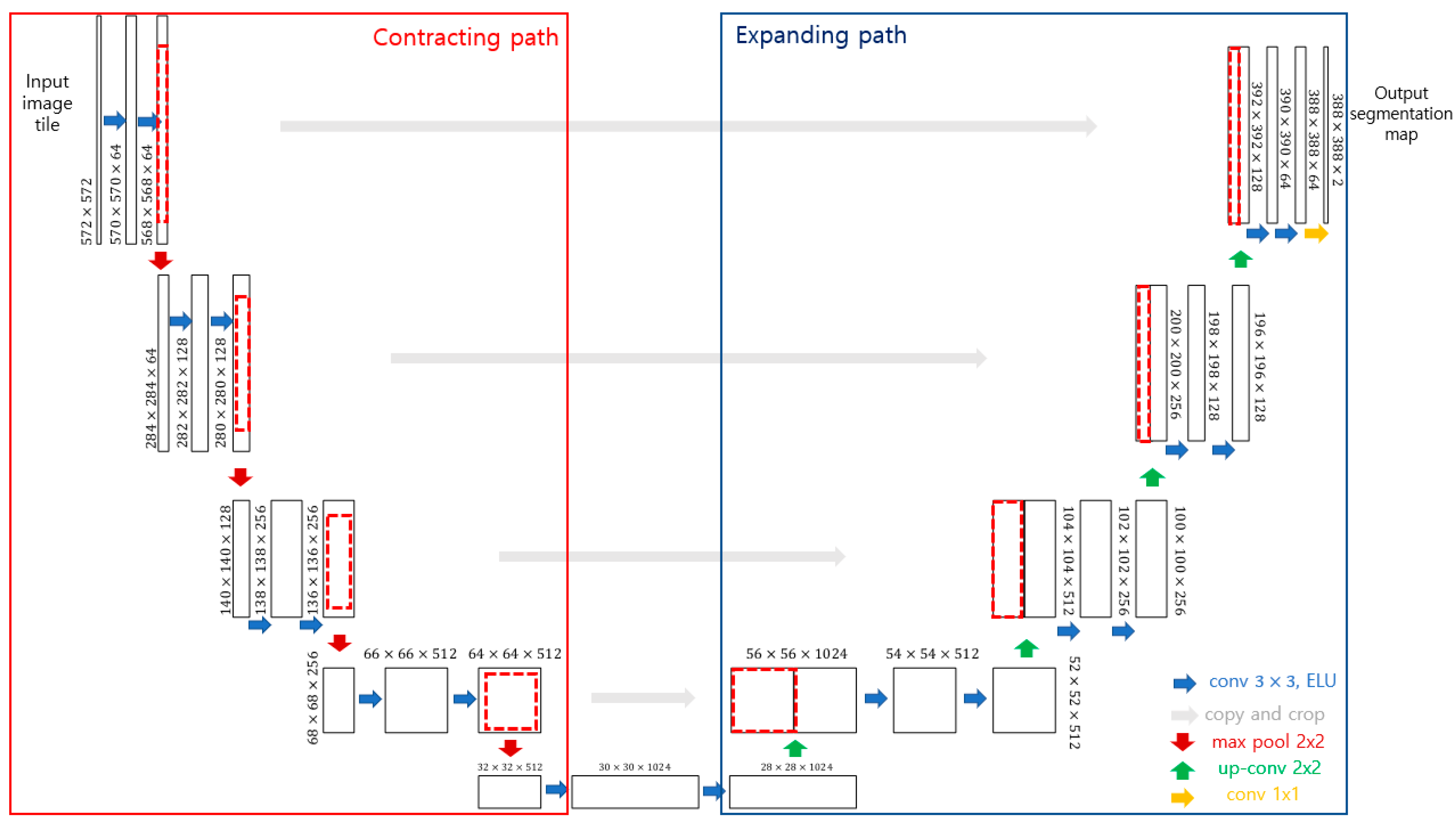

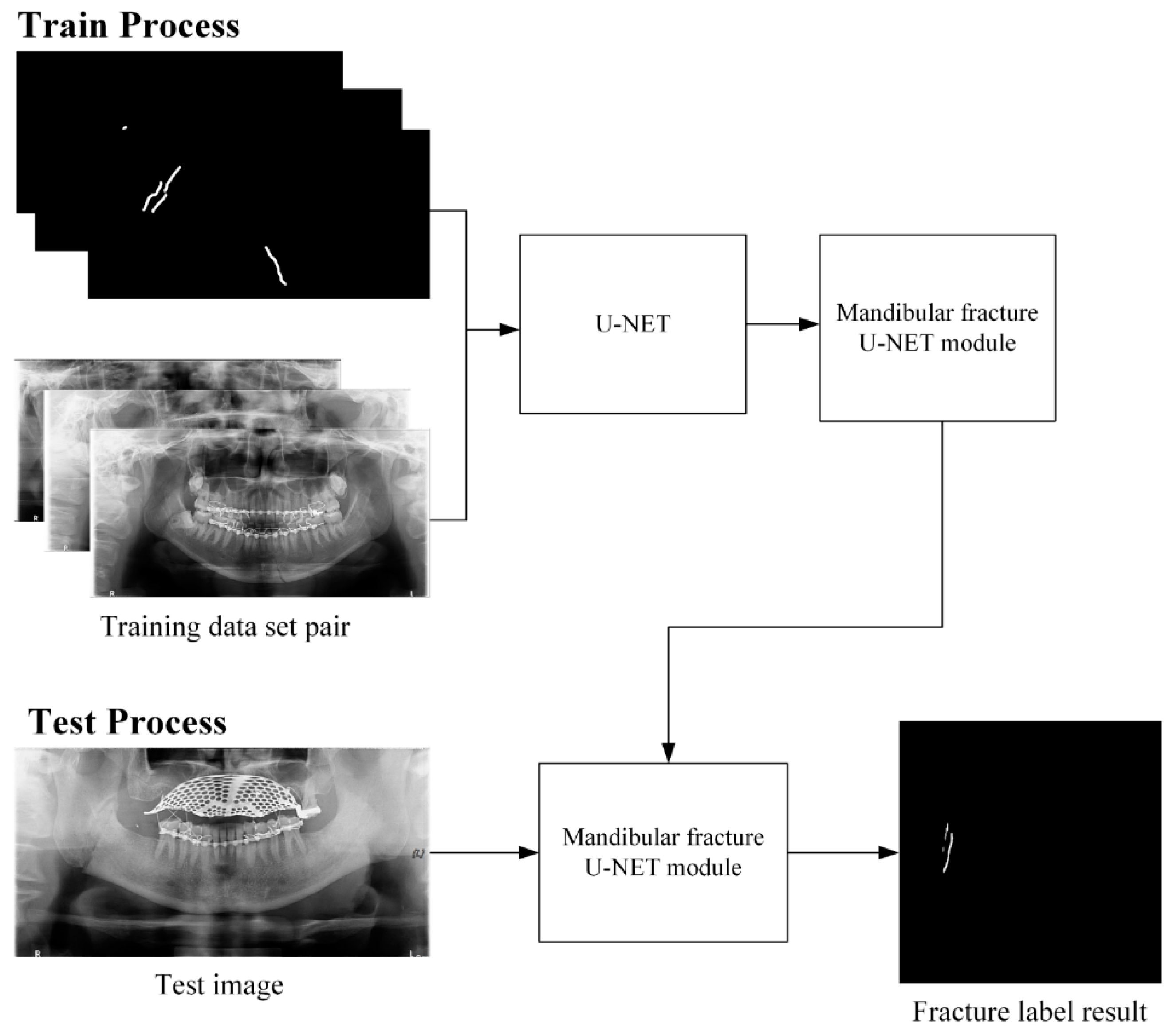

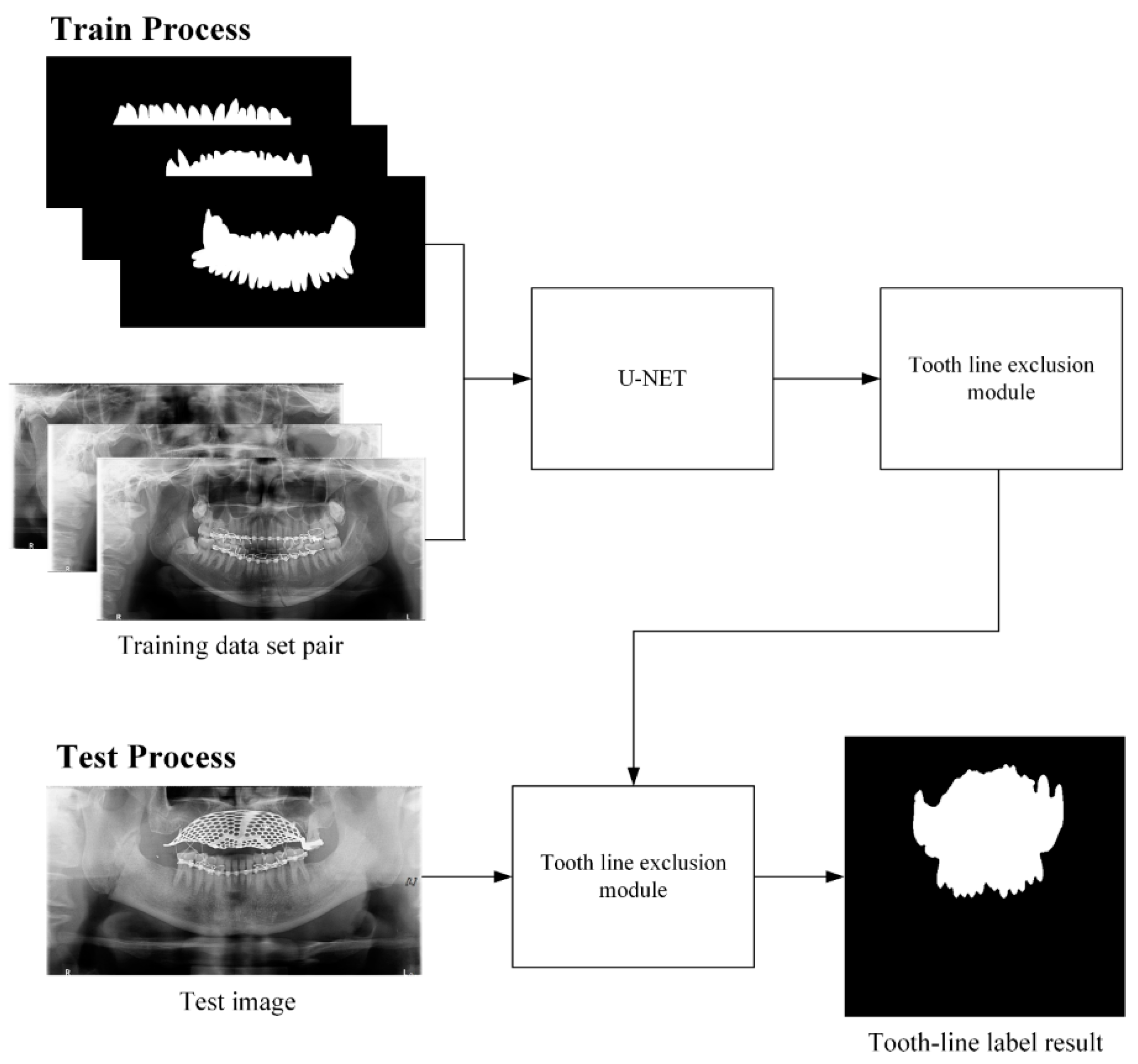

We compared the fracture detection capabilities of Mask R-CNN, YOLOv4, U-Net, LAT YOLOv4, and U-Net with LAT YOLOv4 using the same 360 training images and 60 test images, and showed that the proposed U-Net with LAT YOLOv4 module detects mandibular fractures better than the other modules. For the Mask R-CNN module, fractures were classified into six anatomical classes (symphysis, body, angle, ramus, condyle, and coronoid), and each fracture region was outlined as a polygon. Because many condyle fractures are dislocated, they could not be outlined as polygons and were marked with boxes instead. Mask R-CNN was trained with only its basic augmentation, without any additional data augmentation. The training data for the YOLOv4 module were classified into the same six classes as for Mask R-CNN, and the module was trained with the basic YOLOv4 augmentation. The U-Net module was trained without class labels, with the mandibular fractures labeled on the same 360 training panoramic images. The LAT YOLOv4 module combines the MLAT and SLAT modules: YOLOv4 was trained on MLAT-processed, SLAT-processed, and gamma-corrected versions of the training images, and each of the MLAT and SLAT modules was trained with 1080 training panoramic images. The U-Net with LAT YOLOv4 module combines the U-Net and LAT YOLOv4 modules, and in its U-Net the original activation function was replaced with ELU. Because condyle fractures are often dislocated, this U-Net was trained intensively on the remaining fracture regions only, excluding the condyle and coronoid, that is, on the middle fracture regions. This module was also trained on the same 360 training panoramic images as the previous modules. The final proposed mandibular fracture detection module is therefore the combination of the LAT YOLOv4 and U-Net modules.
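The text states only that the U-Net activation function was changed to ELU. For reference, the sketch below compares ELU with ReLU, the activation used in the original U-Net, in plain Python. The value α = 1.0 is an assumption (it is also the default of PyTorch's `torch.nn.ELU`), since the value used in this study is not reported. In a PyTorch U-Net, the change would typically amount to replacing each `nn.ReLU` in the convolution blocks with `nn.ELU`.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit, the activation used in the original U-Net."""
    return max(0.0, x)


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: x for x > 0, alpha * (exp(x) - 1) otherwise.

    alpha = 1.0 is an assumed value; math.expm1 computes exp(x) - 1 accurately.
    """
    return x if x > 0 else alpha * math.expm1(x)


for x in (-3.0, -1.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  elu={elu(x):.3f}")
```

Unlike ReLU, ELU gives a small negative output for negative inputs, saturating at -alpha, so units do not become completely inactive for negative pre-activations.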

In

Table 6, the parameters used for training are indicated, and in

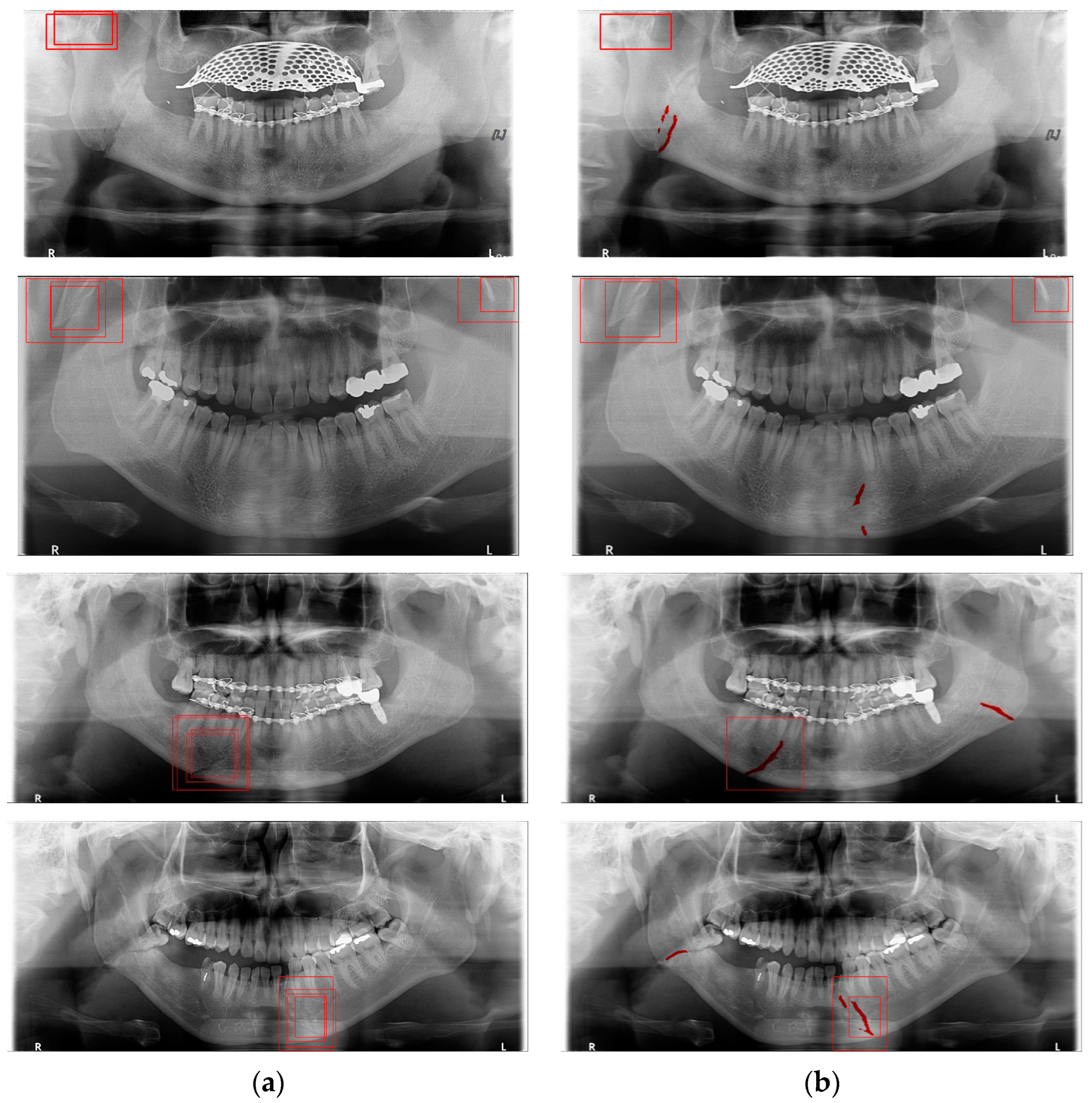

Figure 11,

Figure 12 and

Figure 13, the results of the doctor’s diagnosis, Mask R-CNN, YOLOv4, U-Net, LAT YOLOv4, and U-Net withYOLOv4 are compared.

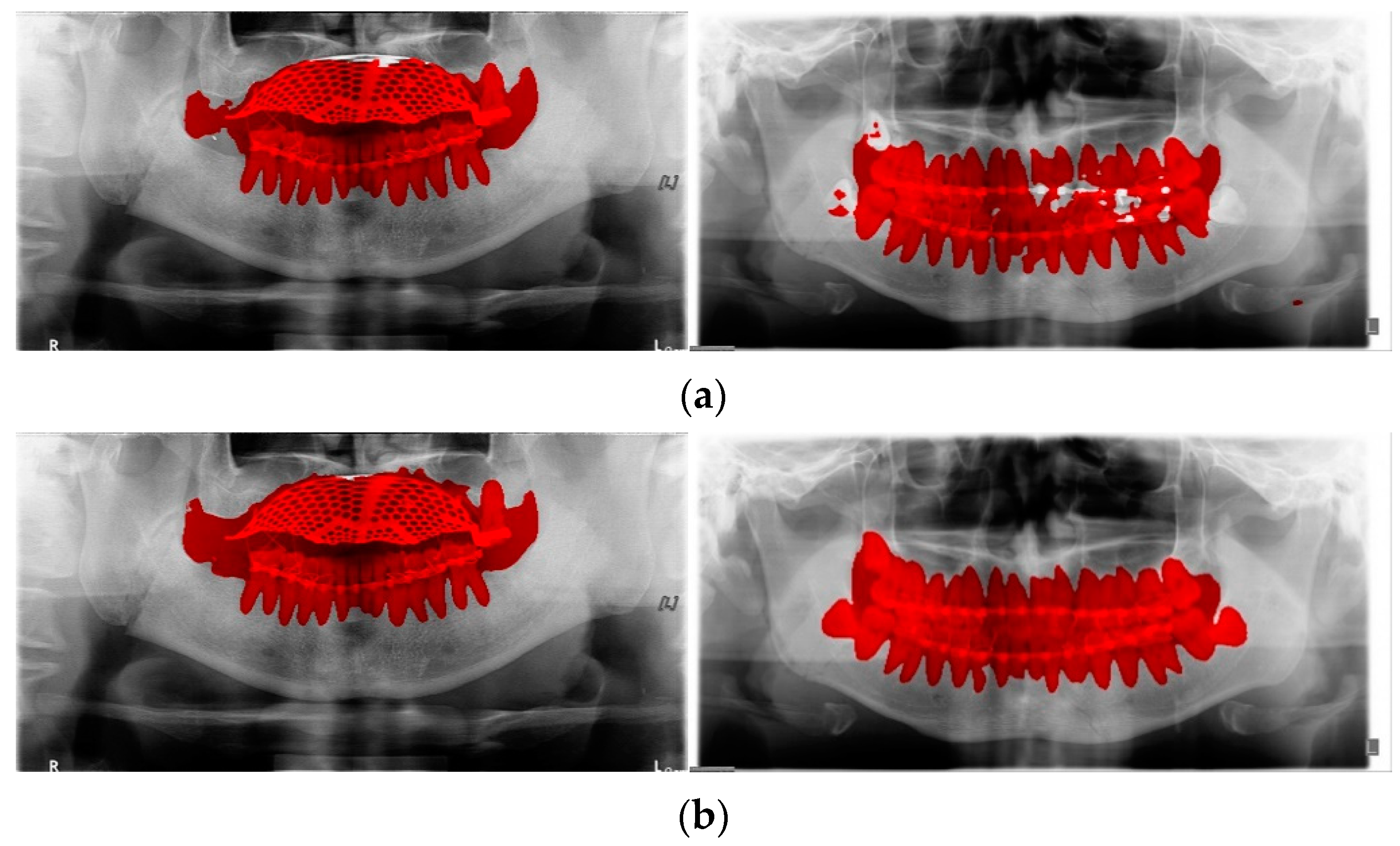

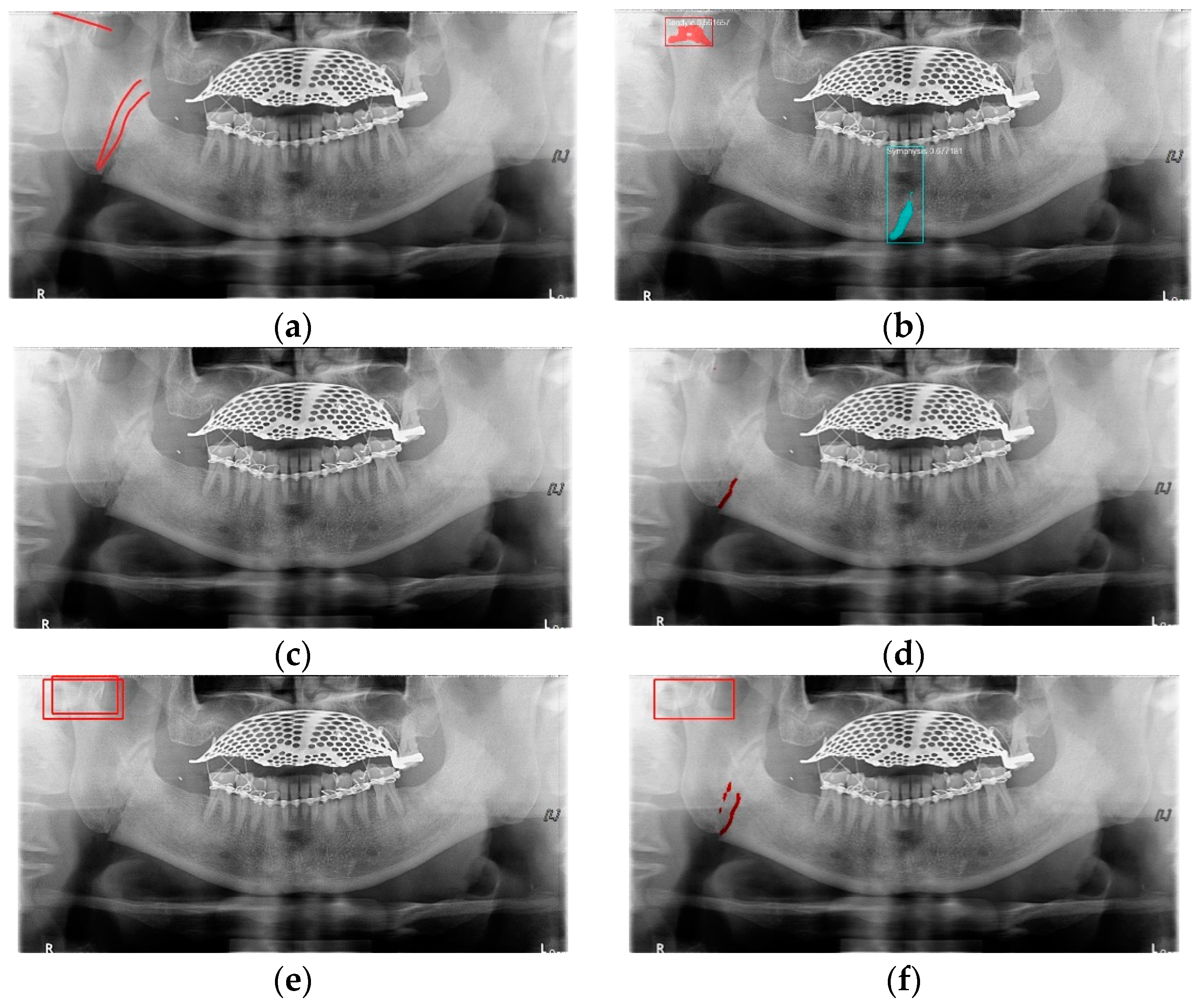

Figure 11 illustrates fractures in the angle and condylar regions, and in Mask R-CNN, the symphysis is misdiagnosed as a fracture. In

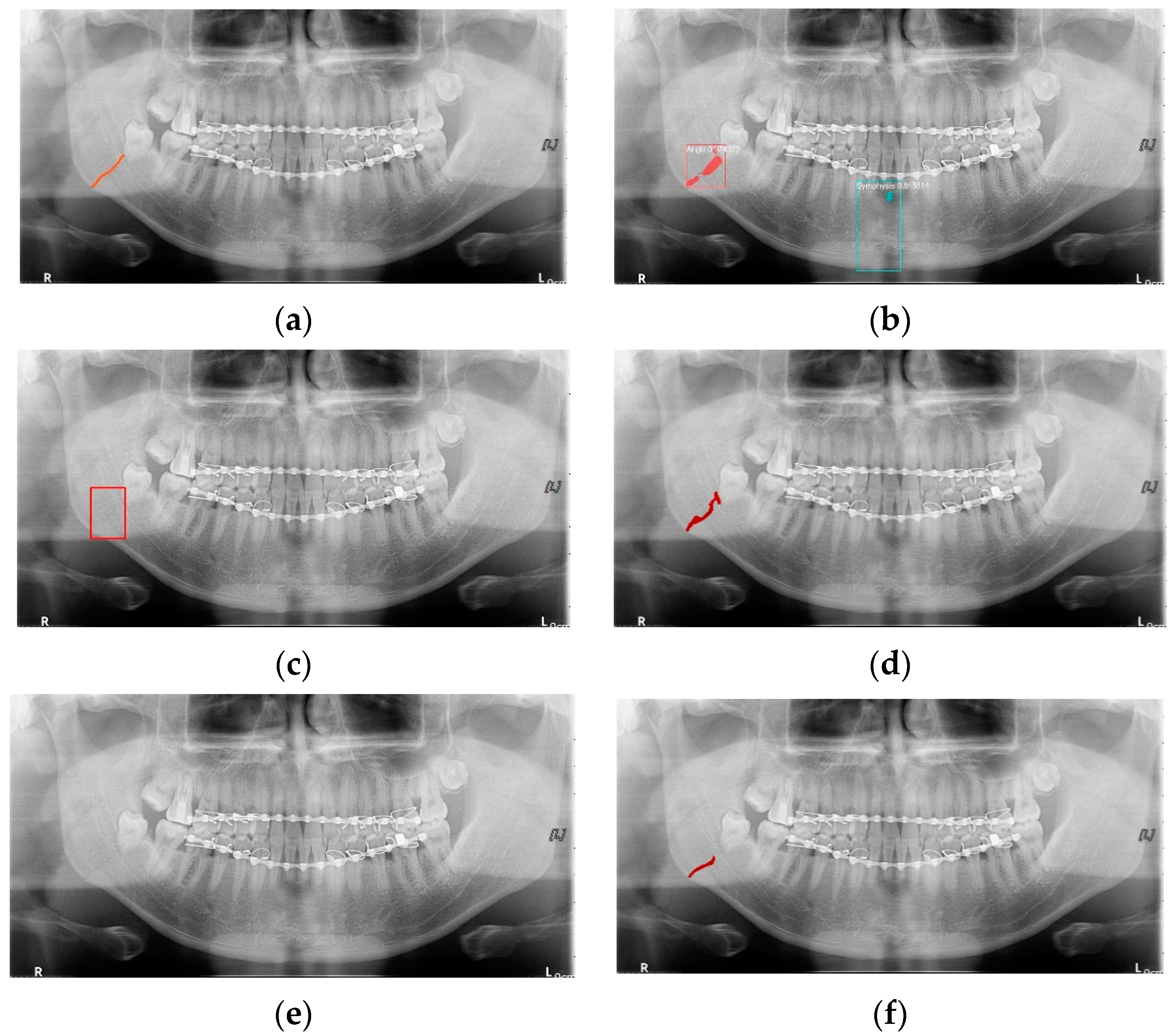

Figure 12, the Mask R-CNN misdiagnoses the symphysis as a fracture, the result of the fact that the YOLOv4 module has better performance detecting angle fractures rather than does the LAT YOLOv4, as shown in

Figure 12c,e. In short, the LAT-processed image does not always have the advantage of better revealing fractures compared with the normal panoramic radiograph.

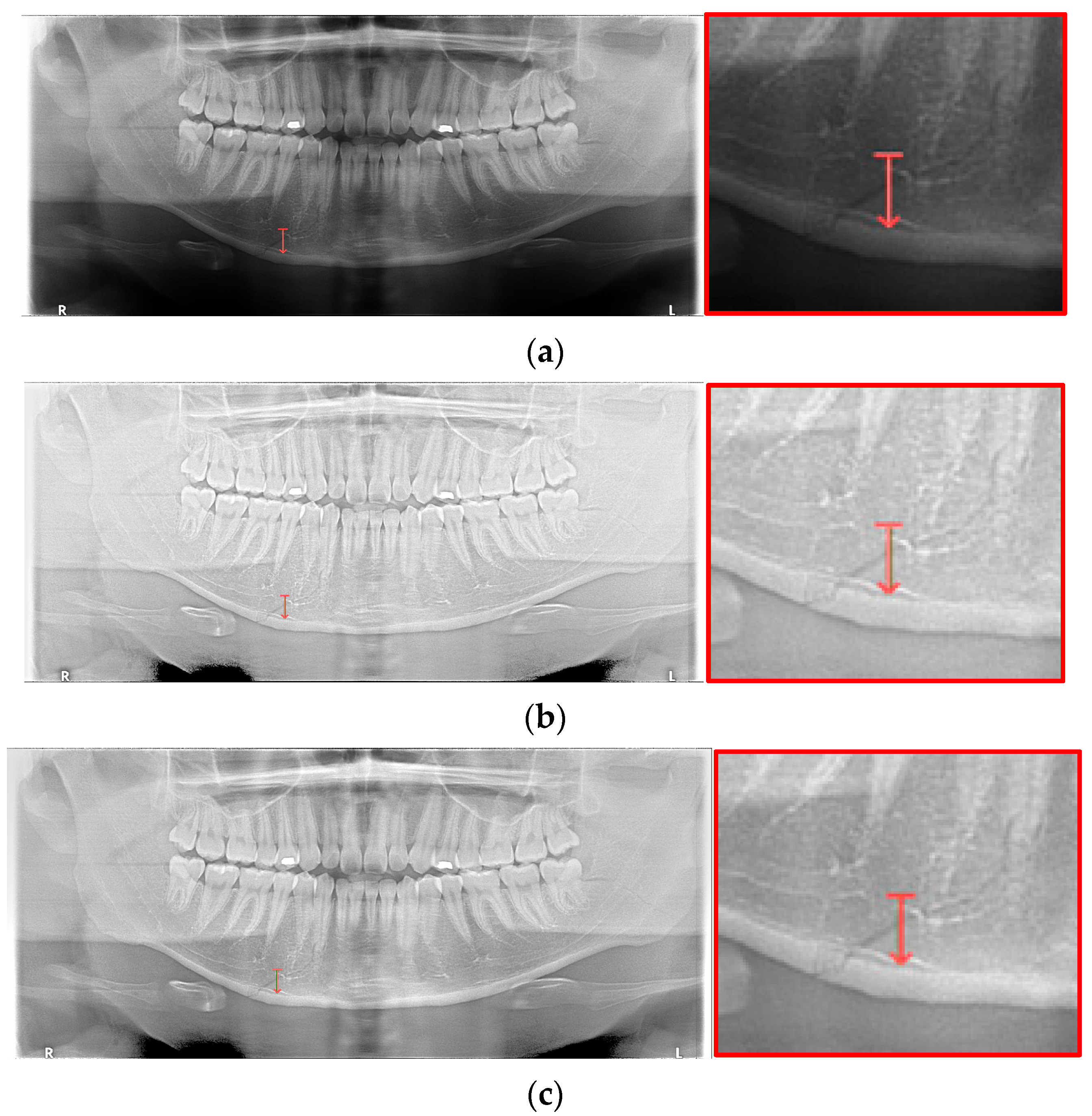

For the Figure 12 images, the amount of local contrast improvement can be checked from line profiles. To compare the changes in pixel brightness near the angle fracture in the normal, SLAT, and MLAT panoramic radiographs, the line profiles are shown in Figure 14 and Table 7. Comparing the maximum, minimum, and average pixel brightness and the standard deviation along the line profile of the straight arrow near the angle fracture site, the normal panoramic radiograph has the largest standard deviation, 5.6. This follows from the characteristics of LAT processing: contrast increases in dark areas, but in bright areas it is maintained or slightly lowered.
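A line-profile comparison like the one in Figure 14 and Table 7 can be sketched with NumPy: sample the pixel values along a straight segment and summarize them. This sketch assumes nearest-neighbour sampling and the population standard deviation; the study does not state which sampling or standard-deviation convention was used, and the function names `line_profile` and `profile_stats` and the synthetic test image are hypothetical.

```python
import numpy as np


def line_profile(image, start, end, num=None):
    """Sample pixel values along the segment from start to end.

    start, end: (row, col) pixel coordinates. Nearest-neighbour sampling.
    num: number of samples; defaults to about one sample per pixel of length.
    """
    (r0, c0), (r1, c1) = start, end
    if num is None:
        num = int(np.hypot(r1 - r0, c1 - c0)) + 1
    rows = np.rint(np.linspace(r0, r1, num)).astype(int)
    cols = np.rint(np.linspace(c0, c1, num)).astype(int)
    return image[rows, cols]


def profile_stats(profile):
    """Maximum, minimum, mean and population standard deviation of a profile."""
    p = np.asarray(profile, dtype=np.float64)
    return {"max": p.max(), "min": p.min(), "mean": p.mean(), "std": p.std()}


# Hypothetical synthetic image: uniform grey (40) with a dark vertical line (20)
# standing in for a fracture; this is not real radiograph data.
image = np.full((50, 50), 40, dtype=np.uint8)
image[:, 25] = 20
profile = line_profile(image, start=(10, 0), end=(10, 49))
print(profile_stats(profile))
```

Applying the same segment to the normal, SLAT, and MLAT versions of a radiograph gives directly comparable statistics. scikit-image's `skimage.measure.profile_line` offers interpolated sampling with an adjustable line width if nearest-neighbour sampling is too coarse.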

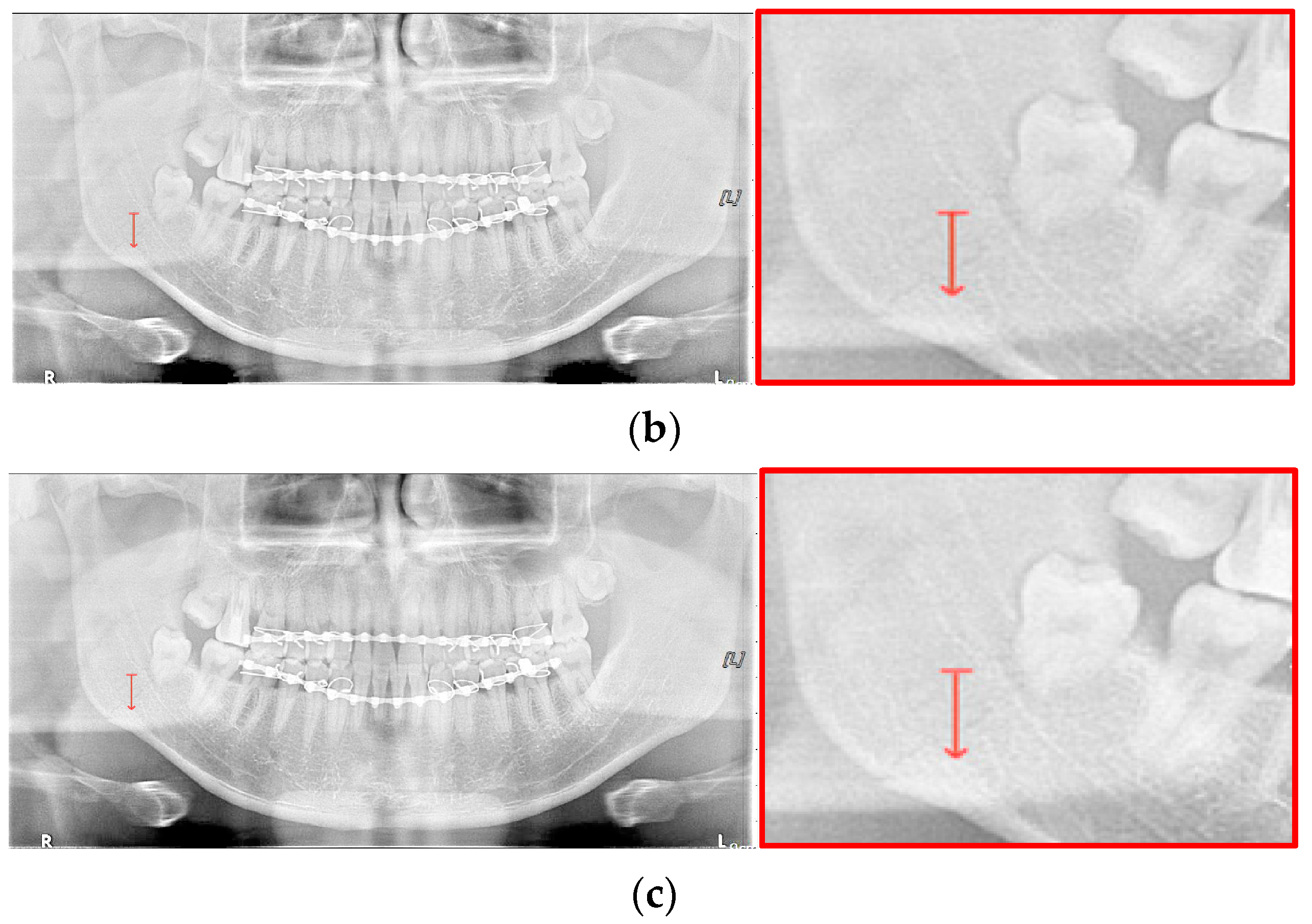

However, apart from a few bright radiographs, the effect of LAT processing is evident in most of the darker radiographs. As shown in Figure 15, most LAT-processed radiographs have a higher standard deviation than the corresponding normal radiographs. In Table 7, the LAT-processed panoramic radiographs show standard deviations of 14.6 and 15.2, considerably higher than the 9.3 of the normal panoramic radiograph.

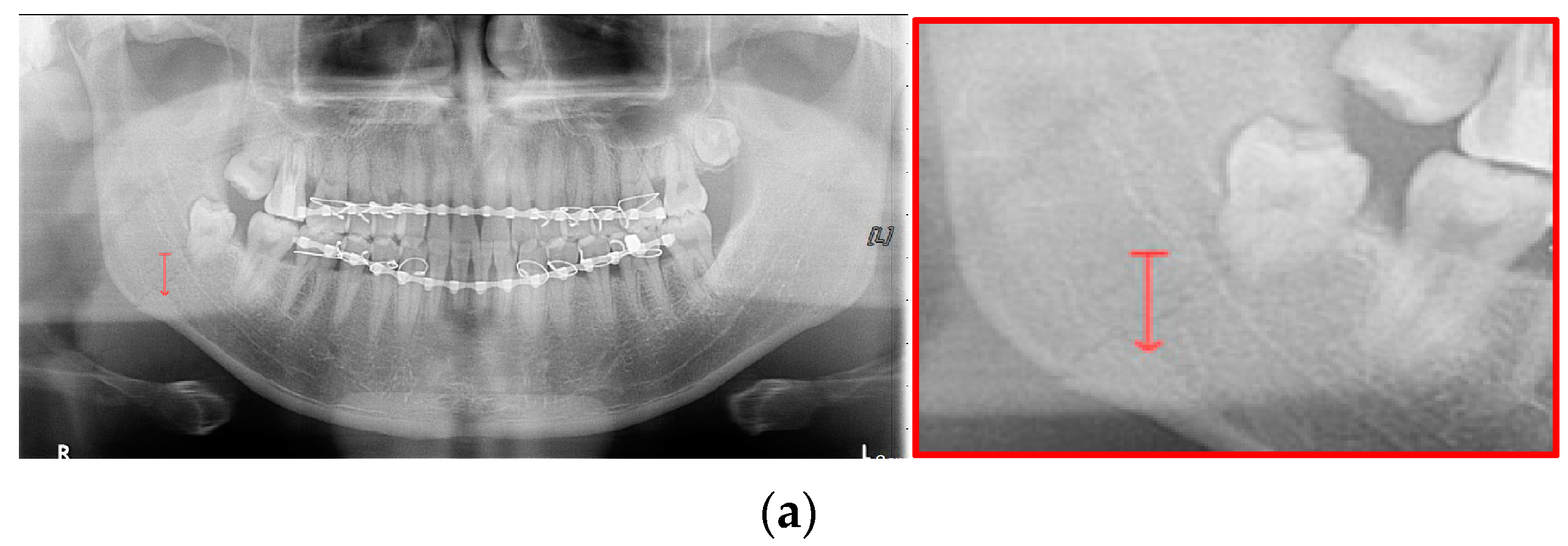

In Figure 13, the ramus is misdiagnosed as a fracture. Mask R-CNN therefore had the lowest precision score, because its misdiagnosis rate was higher than that of the other modules (Figure 16). The YOLO modules have a low misdiagnosis rate but a high ‘undiagnosis’ (missed fracture) rate, which lowers their recall and therefore their F1 score. As shown in Figure 11, Figure 12, and Figure 13, YOLOv4 and LAT YOLOv4 rely strongly on location information; they therefore tend to detect fractures well in the condyle region, that is, side fractures, but poorly in the symphysis, body, and angle regions, where location information is ambiguous.

Unlike Mask R-CNN and YOLO, which are detection-based networks, U-Net is an image segmentation network that labels the mandibular fractures themselves. During training, U-Net labels mark fractures as lines; however, dislocated fractures such as condyle fractures are difficult to label this way (Figure 11). As a result, the U-Net module either missed or misdiagnosed side fractures, and its precision and recall scores were lower than those of the YOLO modules. We therefore judged that using the two networks together would let each compensate for the shortcomings of the other and improve mandibular fracture detection. In the proposed module, duplicate boxes produced by LAT YOLOv4 were removed before its output was merged with that of U-Net. In the proposed U-Net with LAT YOLOv4, the precision score decreased, but many ‘undiagnoses’ were eliminated, so the recall score increased and the overall F1 score rose to more than 90%.
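The text states that duplicate LAT YOLOv4 boxes were removed before merging with U-Net, but not how. The Python sketch below shows one plausible reading under explicit assumptions: duplicates are boxes whose intersection over union (IoU) with a higher-scoring box reaches a threshold (0.5 here, hypothetical), U-Net fracture regions have already been converted to bounding boxes, and a U-Net box is added only when it does not overlap a kept YOLO box. The function names and data are hypothetical; this is not the authors' implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def remove_duplicates(boxes: List[Tuple[Box, float]], thr: float = 0.5) -> List[Tuple[Box, float]]:
    """Greedy duplicate removal: keep higher-score boxes, drop boxes overlapping them (IoU >= thr)."""
    kept: List[Tuple[Box, float]] = []
    for box, score in sorted(boxes, key=lambda bs: bs[1], reverse=True):
        if all(iou(box, k) < thr for k, _ in kept):
            kept.append((box, score))
    return kept


def merge(yolo_boxes: List[Tuple[Box, float]], unet_boxes: List[Box], thr: float = 0.5) -> List[Box]:
    """Deduplicated YOLO boxes plus U-Net boxes that do not overlap any of them."""
    final = [b for b, _ in remove_duplicates(yolo_boxes, thr)]
    for ub in unet_boxes:
        if all(iou(ub, fb) < thr for fb in final):
            final.append(ub)
    return final


# Hypothetical detections (box, confidence) and U-Net boxes for illustration.
yolo = [((10, 10, 50, 50), 0.9), ((12, 12, 52, 52), 0.8), ((100, 100, 140, 140), 0.7)]
unet = [(11, 11, 49, 49), (200, 200, 230, 230)]
result = merge(yolo, unet)
print(result)
```

Adding non-overlapping U-Net detections can only increase the number of detections, which is consistent with the pattern reported above: recall rises while precision may fall.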