Abstract

This paper is devoted to the development and improvement of the mechanisms of an automated system that corrects the points of the planned tool trajectory of a laser robotic welding complex at the pre-processing stage. The points of the planned trajectory are corrected in two stages: in the first stage, the focus of the laser radiation is corrected; in the second stage, the position of the tool is corrected. The laser focus is corrected together with automated focusing of the camera, which is achieved by moving the tool of the industrial complex along its own axis. The position correction mechanisms are based on methods for recognizing the edges of the gap to be welded in the image obtained from a charge-coupled device (CCD) camera. The edges of the gap are segmented in the image by threshold selection, whose boundaries are the extreme values of the brightness distribution of all pixels of the image. To recognize the desired edges unambiguously from the segmentation result, the features that define them as a pair of continuous, conditionally parallel lines are formalized. From the recognized pair of edges, the correct position of the planned trajectory point relative to the current position of the welding head is determined. To transfer this position, we formalized a model for mapping an arbitrary point of the flat image into the workspace of the laser robotic welding complex, considering the orientation of the tool and the position of the camera. The results made it possible to develop a correction system and to test it successfully on the industrial complex.

1. Introduction

The technological process of laser welding is one of the most promising high-tech methods for obtaining permanent joints of metal structures [1]. The use of compact fiber lasers for obtaining welded joints of metal products has considerable advantages [2] and significantly expands the design and technological capabilities of enterprises [3,4].

Robotic manipulators are used to deliver focused radiation to the treatment area. Combining technological and auxiliary welding equipment with a robotic arm forms a laser robotic welding complex (LRC-W).

However, the implementation of the LRC-W in small-scale and piece production is difficult. First of all, this is due to the high labor intensity of the production changeover and debugging of the technological solution [5]. As a result, the final cost of production increases significantly [6] and the introduction of laser welding into production becomes less expedient [7].

One of the ways to increase the efficiency of small-scale production is to increase the level of automation [8] of production at LRC-W [9]. Currently, enterprises with small-scale production are adapting the approaches of technological preparation used in large-scale production. However, the adaptation does not provide an effective reduction in the complexity of production changeover and debugging of technological solutions.

Technological preparation of robotic industrial complexes (process design, operation planning, selection of optimal equipment, creation of control programs, etc.) [10] is carried out in specialized computer-aided manufacturing (CAM) [11] and computer-aided process planning (CAPP) systems based on three-dimensional models [12]. The tool trajectory is transferred from the model space to the working space of the industrial LRC-W by using mathematical methods of coordinate transformation [13].

In contrast to ideal objects, that is, three-dimensional geometric models built with computer-aided design (CAD), real parts may have geometric deviations [14]. Therefore, a laser beam trajectory built on a three-dimensional model can miss the joint of the parts, even if the coordinate systems used to create the robot's control program are perfectly aligned.

Because laser welding creates harsh conditions for monitoring and measuring equipment, correcting trajectory points on the fly during execution is less preferable.

The most promising way to eliminate mismatches of the planned trajectory points is a software and hardware correction complex that operates at the pre-processing stage and automatically adapts the motion control program to the workpieces installed in the tooling.

2. Problem Formulation

Deviation of the position of focused laser radiation by a fraction of a millimeter during laser welding can significantly affect the quality of the result (Figure 1).

Figure 1.

Positioning of the LRC-W tool in the process of planning a laser tack with an inaccurately located point of the trajectory relative to the gap between workpieces.

Today, software and hardware systems for correcting the points of the LRC-W tool trajectory are based on detecting the weld line [15]. In most cases, detection and recognition are performed using the following tools:

- (1) structured light tracking systems that project laser stripes onto the inspected area and detect and process the surface-distorted pattern [16] (for example, Meta Vision, Servo Robot, Scout, and Scansonic solutions);

- (2) software and hardware complexes for processing images obtained from CCD cameras of various configurations and spectral ranges.

Structured light tracking systems detect the junction lines of workpieces with a significant gap well but are poorly suited to small gaps [15], which are the gaps that the LRC-W welds in most cases. The fixed sensor mounting additionally restricts the position and orientation of the tool at the points of the planned trajectory during the preliminary pass. In addition, tracking devices are more expensive than solutions based on computer vision.

Software and hardware complexes that process CCD images do not have all the disadvantages of structured light tracking systems. However, to operate stably, they require a high-quality image in which the geometric primitives and outlines of interest can be reliably recognized.

The first problem that arises during recognition is a blurred image caused by the deviation of the LRC-W tool from its planned position. This deviation defocuses the CCD camera at the points of the trajectory transferred from the CAM system to the LRC-W workspace. Blurring can be eliminated by automated focusing, that is, by moving the tool along its own orientation axis (in our case, the axis of the laser beam). In other words, the problem is solved by an autofocus system for the CCD camera that uses the movement of the industrial complex tool as the focusing mechanism.

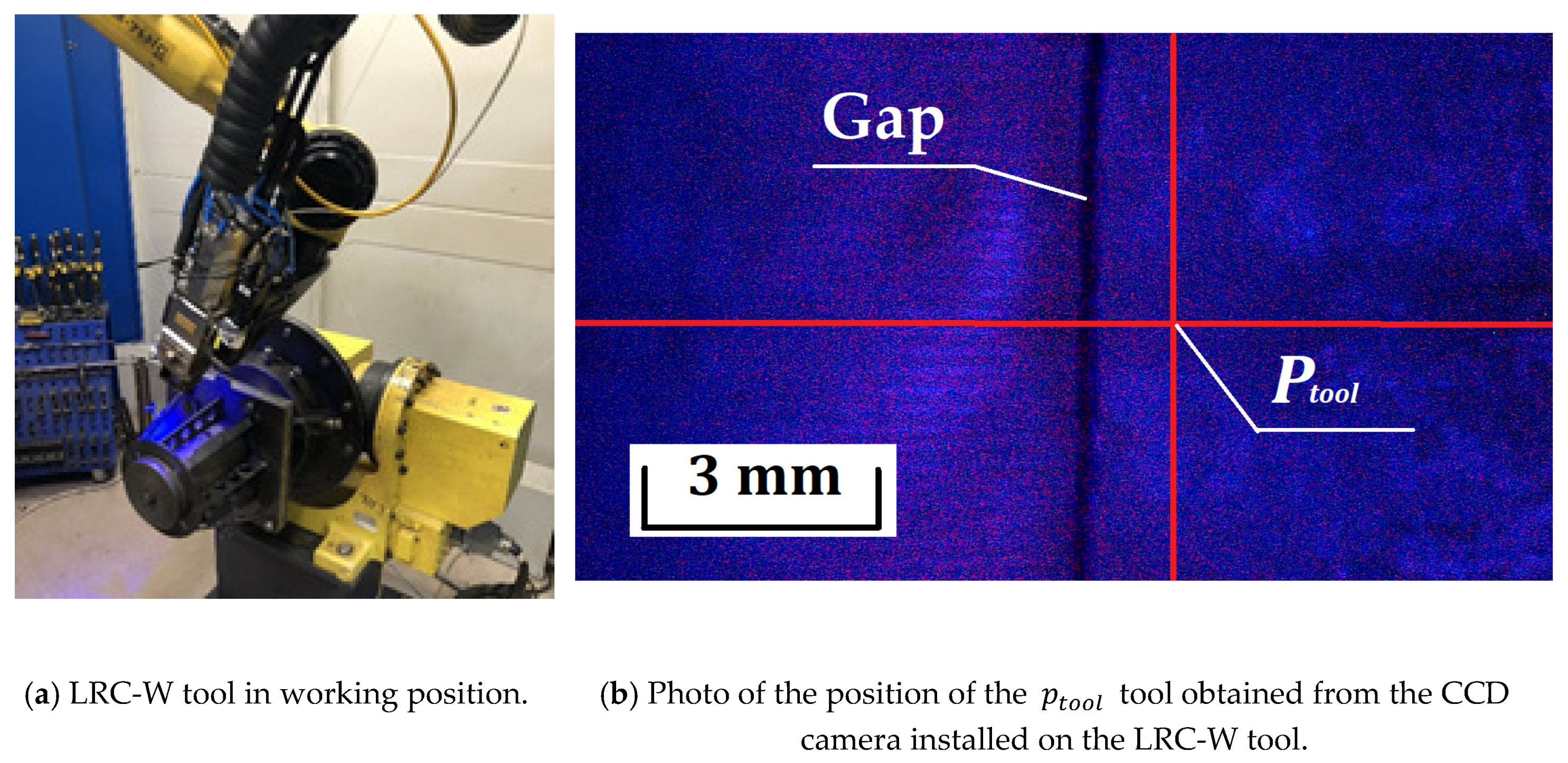

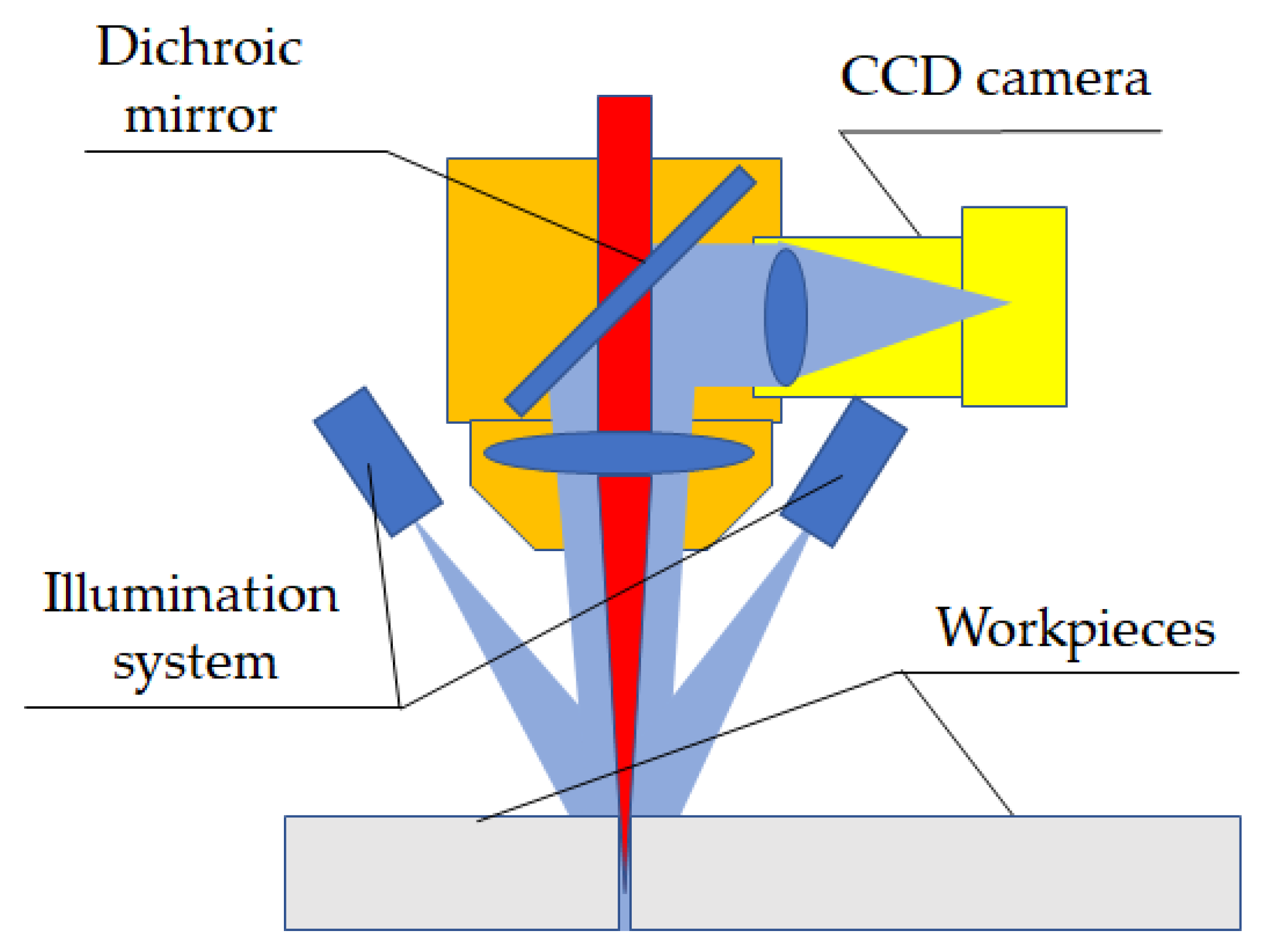

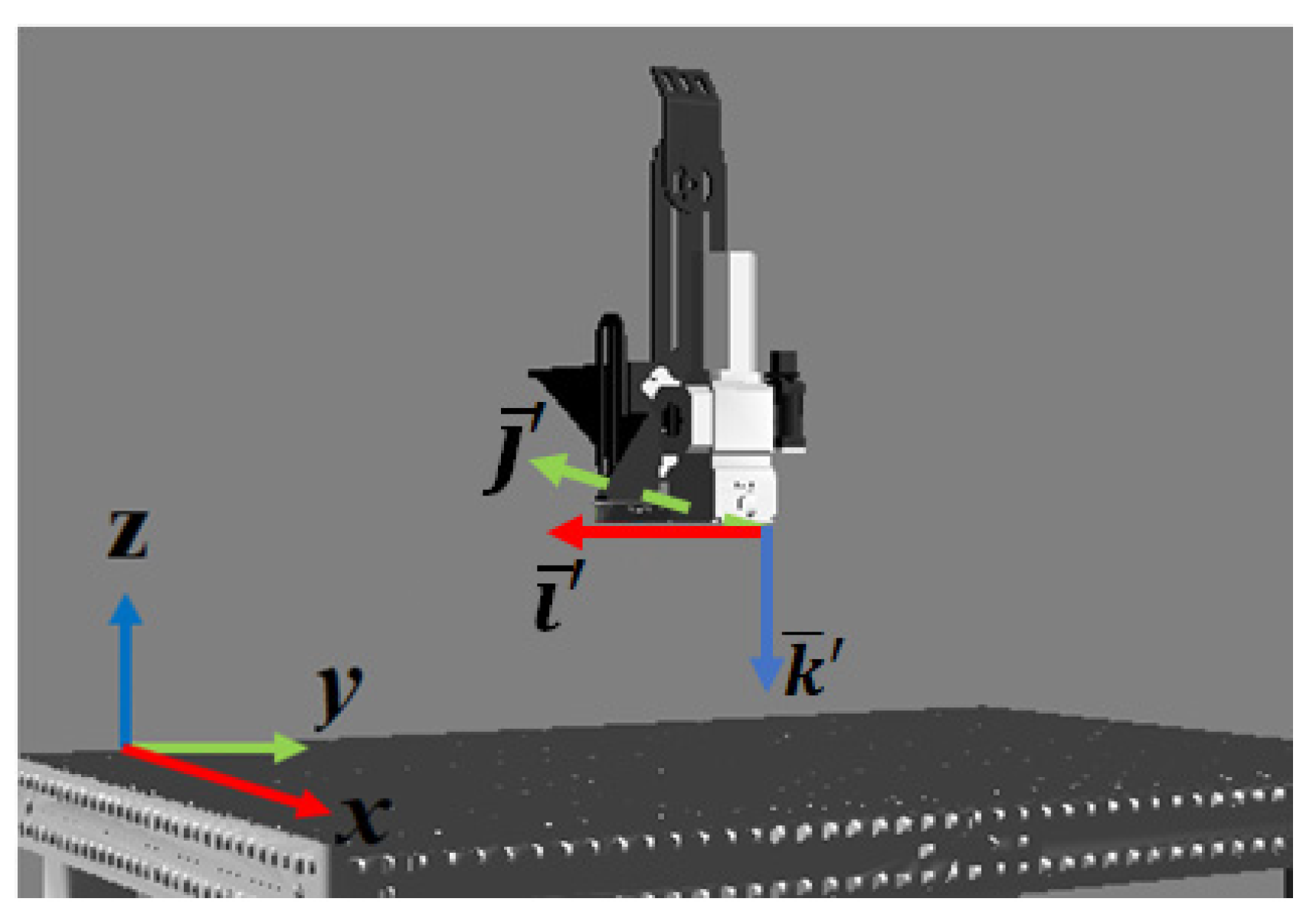

The second problem in obtaining a high-quality image is noise. To address it, the inspected area is illuminated with LEDs. For this purpose, a remote [17] bilateral LED illumination system was installed on the LRC-W tool (Figure 2).

Figure 2.

Scheme of the LRC-W tool.

To make it possible to correct the points of the planned trajectory relative to the geometric primitives and outlines of the processed part, the design data structure is extended with the required orientation of the LRC-W tool at each correction point, which places the CCD camera suitably over the inspected area. In other words, while designing the technological process in CAM, the process engineer additionally designs the tool position to be taken during correction, one that is sufficient both for recognizing the geometric primitives and outlines and for correcting the tool position. Designing the tool position at the trajectory points makes the recognition methods applicable to non-trivial joints (corner, lap, T-joints, and others).

Various solutions for highlighting the gap in the image obtained from a CCD camera were identified, but most of them have disadvantages that prevent their use as ready-made components of our correction system. Therefore, existing methods and algorithms need to be developed and improved.

In [18,19], the gap is segmented by applying a median filter with a transformation kernel of a given size. However, these studies do not specify the limitations and range of applicability of the proposed segmentation method. A similar conclusion can be drawn from [20], in which a threshold transformation is performed to select the gap, but the threshold value is not explicitly defined.

In [21,22], the gap is treated as a region of pixels with a lower gray level, which allows the desired area to be uniquely identified and segmented. However, these works do not provide a method for choosing the threshold that selects the line unambiguously. In [23], the gap is detected in a similar way: the desired line is selected by lightening the entire image until the main background becomes white, so that only the gap remains in the image. How to choose a lightening coefficient sufficient for contrast selection remains an open question in that work.

In [24], the gap between workpieces is recognized during welding. The desired object is made visible by two-way illumination, which darkens the gap between the workpieces as the tool moves. The algorithm proposed in that paper consists of several consecutive operations. The input data are a restricted region of interest containing the gap ahead of the welding point. The region of interest is segmented with the Canny operator. A Hough transform is applied to the binarized image to produce candidate lines, after which false gaps are filtered out with a Kalman filter. However, given the specifics of the Canny operator [25], the proposed algorithm cannot be used adaptively: for different surfaces of the welded workpieces, the configuration parameters of the Canny operator must be readjusted, which makes the algorithm difficult to use in the developed automated LRC-W tool correction system.

In [26], a method for determining control points of correction is considered, but its applicability is limited to a small neighborhood of the current position of the LRC-W tool, since it implies a local approximation of the trajectory by a mathematical function.

Detecting the gap by contrast brightness segmentation under good illumination of the workpiece surface is the most promising way to highlight the desired object in the image. Given the basic requirement of laser welding that the focused radiation be positioned at the center of the gap [27], detecting the gap as a set of the darkest pixels under sufficient illumination can significantly relax the requirements for the edges to be recognized: the boundaries of the dark area of the gap can serve as the edges, and in most cases they coincide with the actual gap edges. This effect can be achieved with bilateral LED illumination, which casts a shadow in the gap. In other words, segmentation of dark pixels does not require the joint edges to be prepared for recognition, and the requirements for the surface texture of the workpieces can also be relaxed. Recognizing the dark line that represents the gap and determining its center point make it possible to ignore specular reflections and surface scratches, unless there are serious defects comparable in depth and length to the recognized gap. However, the question remains of how to unambiguously identify the desired object in an image that contains areas falling within the brightness threshold range that are not gaps.

In addition, the analyzed works leave open the question of determining the correct position on the line relative to the current position of the LRC-W tool. The transfer of the corrected tool position from the two-dimensional image space to the LRC-W workspace, considering a tool orientation that deviates from the neutral position, also remains to be formalized.

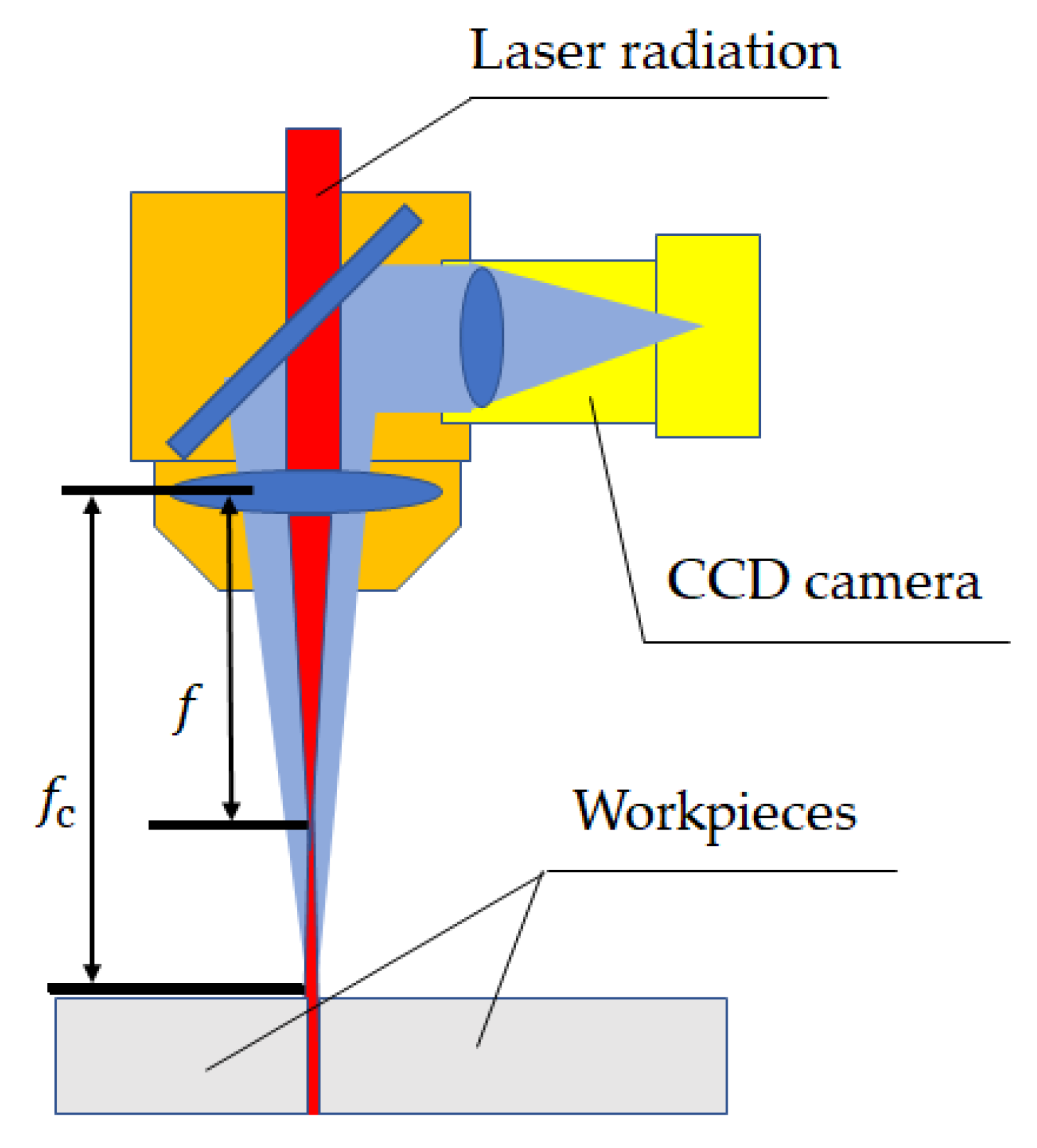

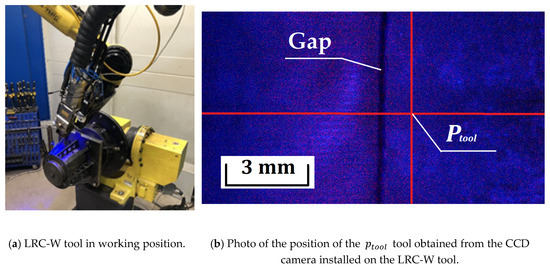

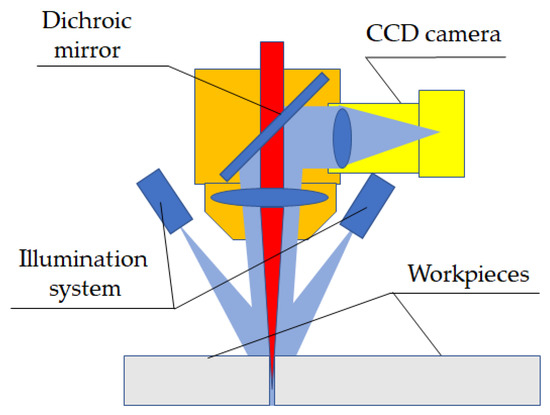

Correcting the trajectory points in preparation for laser welding requires correcting not only the tool position but also the focal length of the laser radiation. Automated correction of the laser focal length can be combined with automated focusing of the CCD camera, determined by the mm difference (Figure 3).

Figure 3.

Scheme for determining the focal length of laser radiation and CCD camera.

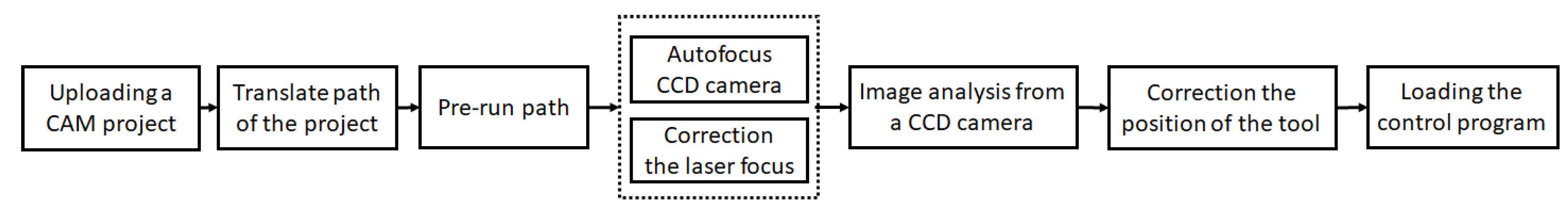

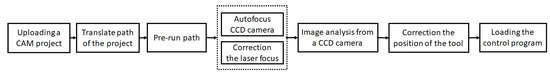

Based on the analysis of the current state of the methods of automated correction of the LRC-W tool, the sequence of operations of the correction system is represented by the scheme shown in Figure 4.

Figure 4.

Scheme of the sequence of operations of the system for correcting the points of the planned trajectory in the preprocessing of laser welding using computer vision systems.

According to [28], the maximum joint misalignment that still yields a high-quality result is mm. Designing the tool orientation at the points of the planned trajectory for the correction process, combined with bilateral illumination, allows the gap to be recognized when the joint misalignment does not exceed mm.

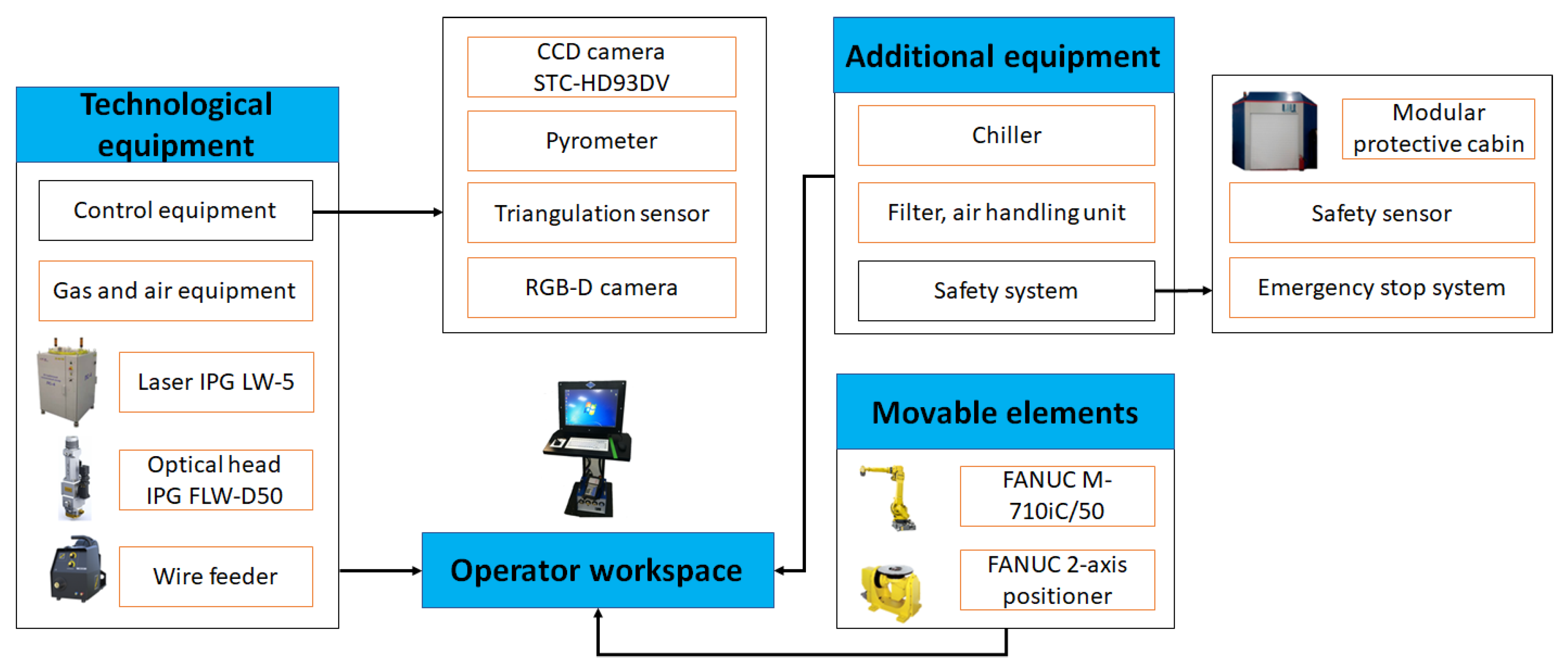

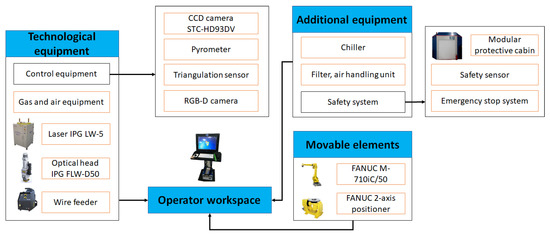

The structural scheme of the equipment used in the LRC-W is shown in Figure 5. The laser equipment used makes it possible to achieve a focused beam diameter of mm with a Rayleigh length of mm and to provide welding of plates up to mm thick with a gap width of up to mm [29].

Figure 5.

The structural scheme of a laser robotic welding complex (© 2020 IEEE [30]).

Preliminary research has shown that when the size of the area captured by the camera is mm, it is possible to fully observe the darkened gap line with a width of mm. The maximum deviation of the tool position from the gap line is estimated at 6 mm.

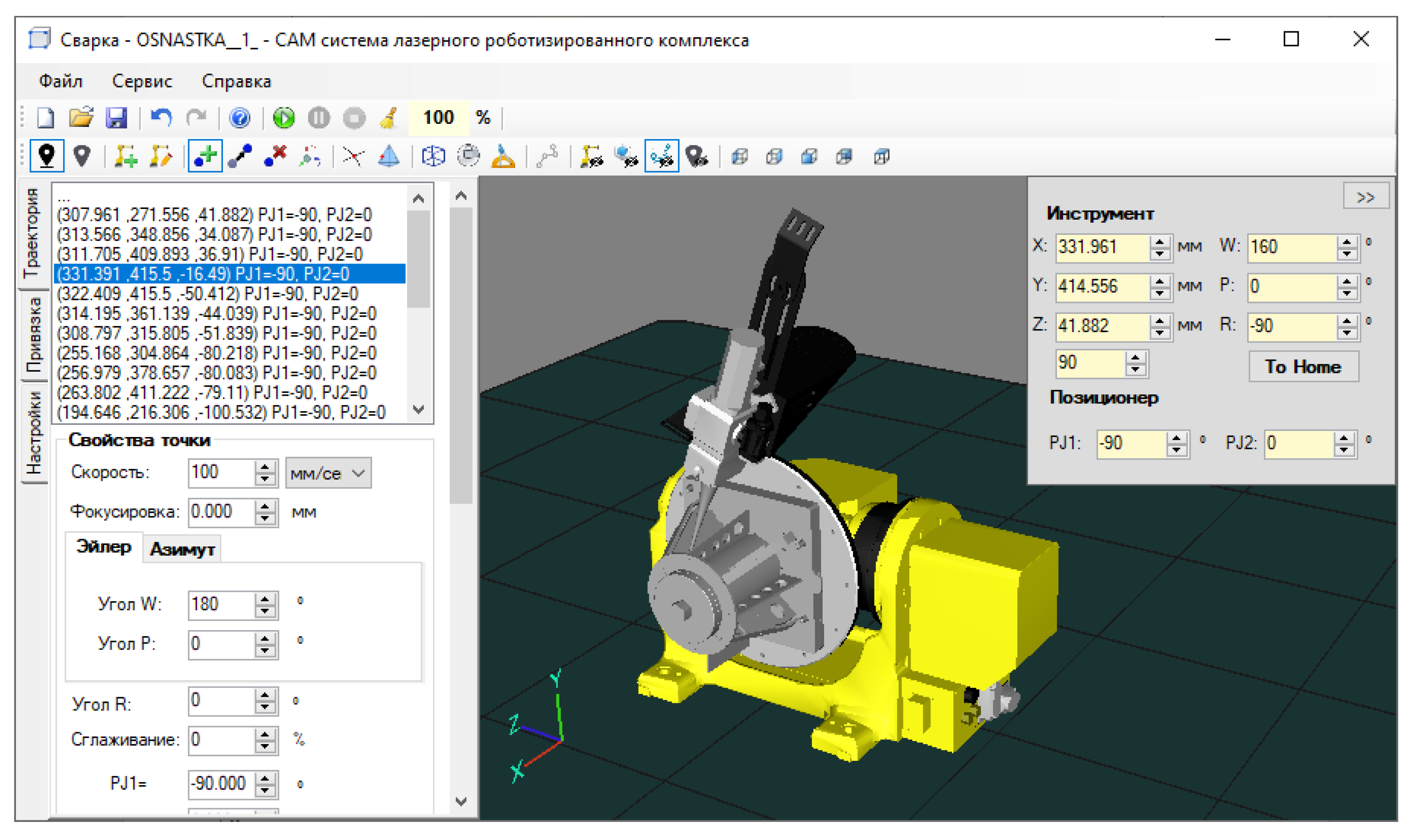

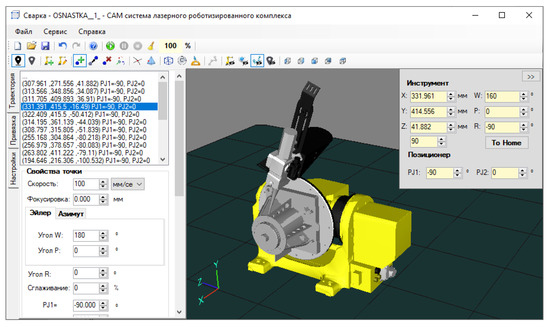

The authors' own system [31], which provides technological preparation of the laser robotic complex, is used as the CAM/CAPP system (Figure 6).

Figure 6.

The authors’ system for the technological preparation of a laser robotic complex.

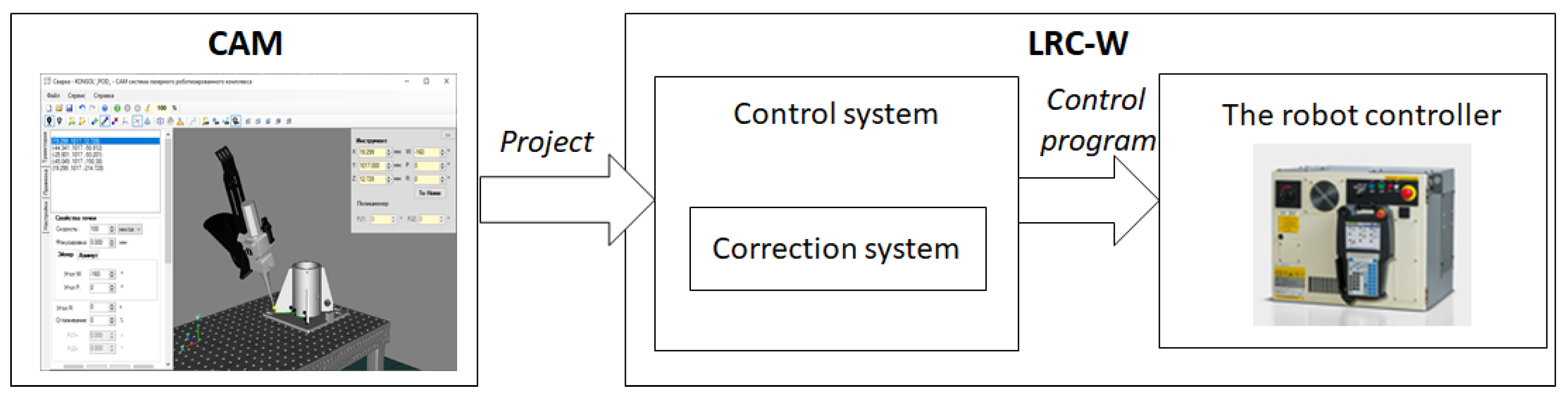

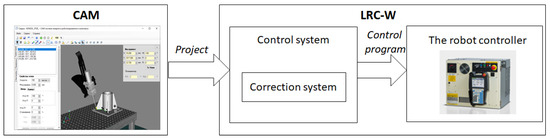

The technological project developed in CAM is transferred to the correction system, a module of the industrial LRC-W control system that interacts with the technical equipment. Within the correction system, at the pre-processing stage of laser welding, a preliminary pass along the planned trajectory is performed and the trajectory is corrected. The control system then sends the corrected control program to the robot controller (Figure 7).

Figure 7.

Scheme of the process of transferring the project from CAM to the LRC-W industrial complex using the trajectory correction system.

Accordingly, the aim of this research was to develop mechanisms for automating the correction of the focal length and position of the industrial LRC-W tool at the pre-processing stage of laser welding by processing images obtained from a CCD camera, so that an automated system for correcting the points of the planned trajectory could be created and implemented on an industrial LRC-W operating in small-scale and piece production.

To achieve this goal, the following tasks were formulated; their solutions constitute the contribution of this paper:

- Develop a method for jointly correcting the focus of laser radiation at the point of the planned trajectory and automating the focus of the CCD camera by moving the LRC-W tool along its own orientation axis;

- Determine the boundaries of the brightness range for threshold selection of gap pixels in the image, allowing unambiguous segmentation of the edges to be welded in the image obtained from the CCD camera on the LRC-W tool;

- Formalize the recognition of the edges of the workpieces to be welded from the segmentation result, considering that the image may contain regions that fall within the brightness threshold range but are not the gap;

- Based on the result of edge recognition, determine the correct position of the point of the planned trajectory of the LRC-W tool;

- Formalize the transfer of the corrected point value of the planned trajectory from the flat image space to the working space of the LRC-W, considering the current orientation of the tool.

3. Joint Correction of the Focal Length of Laser Radiation and Focusing of the CCD Camera

The focal length of the laser radiation can be corrected by focusing the image of the CCD camera. Based on the scheme shown in Figure 3 and an assessment of image clarity as the tool moves along its orientation axis, the focused image, and therefore the focused position of the laser, can be determined.

Various methods for evaluating the clarity of images obtained from a CCD camera are considered in [32]. Among them, gradient-based methods are the most promising for the correction system. Their main advantages are high computation speed and effective performance in a small image capture area that has contrasting transitions in the focused position.

The sharpness value used to evaluate image clarity is computed by the following sequence of actions:

- Gaussian blurring of the original image;

- Calculating the gradient (grad) of the blurred image;

- Summing the pixel brightness of the gradient of the blurred image in the Hue, Saturation, Value (HSV) space, where the brightness is taken from the functional brightness matrix of the flat image.
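The three steps above can be sketched in dependency-free Python. This is an illustration under stated assumptions, not the authors' implementation: the 3 × 3 Gaussian kernel, the central-difference gradient, and the function names are hypothetical choices, and the input is assumed to be the brightness (V) channel already extracted as a 2D list of numbers.

```python
import math

# Hypothetical 3 x 3 Gaussian kernel (weights sum to 16); the kernel used in the paper is not stated.
GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def gaussian_blur(img):
    """Blur a 2D list of brightness values with the 3 x 3 kernel, replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += GAUSS_3X3[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc / 16.0
    return out


def gradient_magnitude(img):
    """Central-difference gradient magnitude, replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = (img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]) / 2.0
            gy = (img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]) / 2.0
            out[y][x] = math.hypot(gx, gy)
    return out


def sharpness(brightness):
    """Sum of gradient magnitudes of the blurred brightness image; larger means sharper."""
    return sum(sum(row) for row in gradient_magnitude(gaussian_blur(brightness)))
```

A plain sum of gradient magnitudes mainly responds to fine, non-monotonic detail such as thin lines and texture; a single monotone step edge contributes roughly the same total regardless of blur, which is why squared-gradient variants such as Tenengrad are a common alternative.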

As images are acquired from the CCD camera, the focused image is identified by the extreme value of this sharpness measure (for a gradient-based measure, its maximum).

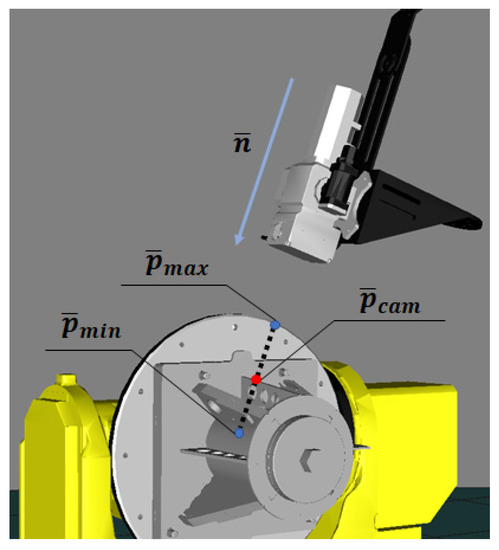

The focused image is determined while the LRC-W tool moves through the localization area, starting from the position and ending at , where the position , , is the position of the LRC-W tool relative to the camera focus point, is the length of the localization area of the focused image, and is the normalized vector describing the orientation of the LRC-W tool (Figure 8).

Figure 8.

Scheme of tool movement in the process of automated detection of the focused position of the CCD camera.

When the time needed to calculate the sharpness value is taken into account, the tool speed that gives the minimum absolute error is estimated as:

Let the position of the tool relative to the focus of the CCD camera be the focused position of the observed objects with focus ; then, the focused position of the tool relative to the laser radiation with focus f is defined as:

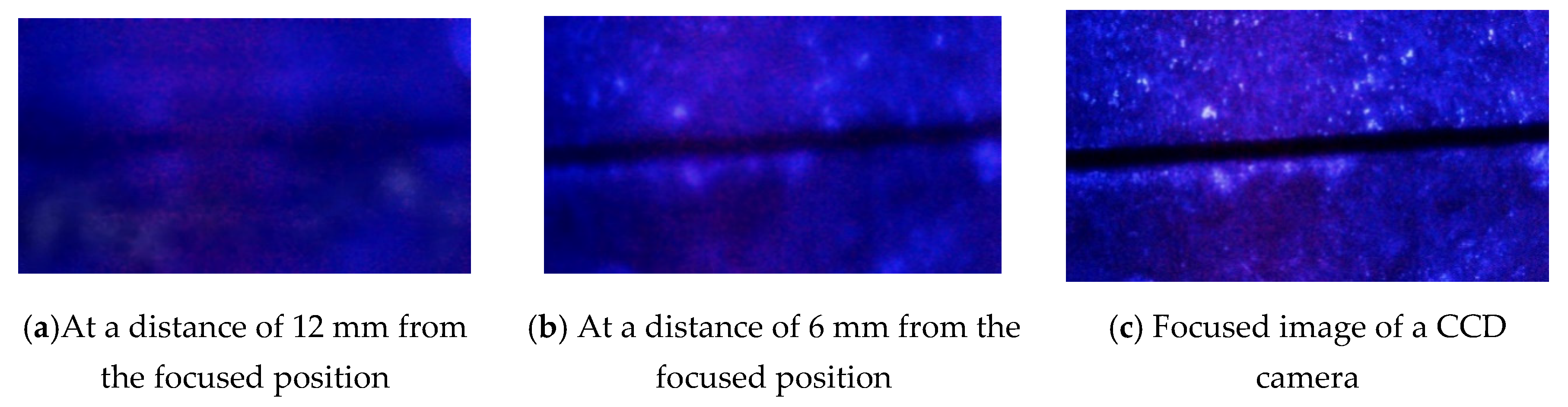

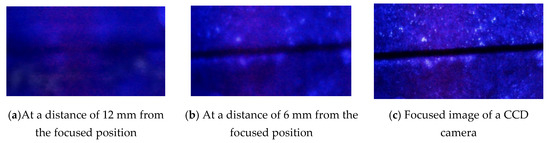

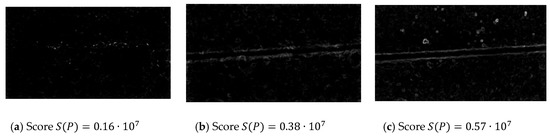
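The focusing procedure of this section can be sketched as a scan along the tool axis. The sketch rests on several assumptions that are not the paper's own formulas: the tool moves symmetrically from p0 - l·n to p0 + l·n; `measure` is a hypothetical callback that moves the robot, grabs a frame, and returns a sharpness value; both focal distances are measured from the same tool reference point along the unit axis n pointing toward the workpiece; and the scan speed is bounded by the permitted error divided by the time of one sharpness evaluation.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def autofocus(p0, axis, half_length, step, measure):
    """Scan the tool along its axis and return the position with the highest sharpness.

    Assumption: the scan runs from p0 - half_length * n to p0 + half_length * n.
    `measure(position)` is a hypothetical callback that moves the tool there,
    grabs a frame, and returns its sharpness value.
    """
    n = normalize(axis)
    steps = int(round(2 * half_length / step))
    best_pos, best_val = None, -math.inf
    for k in range(steps + 1):
        t = -half_length + k * step
        pos = tuple(p + t * c for p, c in zip(p0, n))
        val = measure(pos)
        if val > best_val:
            best_pos, best_val = pos, val
    return best_pos


def laser_focus_position(camera_focused_pos, axis, f_camera, f_laser):
    """Shift the camera-focused tool position so that the surface lies at the laser focus.

    Assumption: the surface point is camera_focused_pos + f_camera * n, so the
    laser-focused tool position is camera_focused_pos + (f_camera - f_laser) * n.
    """
    n = normalize(axis)
    shift = f_camera - f_laser
    return tuple(p + shift * c for p, c in zip(camera_focused_pos, n))


def max_scan_speed(allowed_error, eval_time):
    """Assumed bound: the tool moves at most `allowed_error` during one sharpness evaluation."""
    return allowed_error / eval_time
```

For a hypothetical permitted error of 0.05 mm and 0.02 s per evaluation, `max_scan_speed` gives 2.5 mm/s.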

Images from a CCD camera captured during autofocus are shown in Figure 9. The results of the image definition assessment are shown in Figure 10.

Figure 9.

Images captured from a CCD camera during autofocus.

Figure 10.

Result of image blur estimation.

Thus, a method was developed for jointly correcting the focus of laser radiation at the point of the planned trajectory and automating the focus of the CCD camera by moving the LRC-W tool along its own orientation axis.

4. Segmentation and Recognition of the Gap to Be Welded

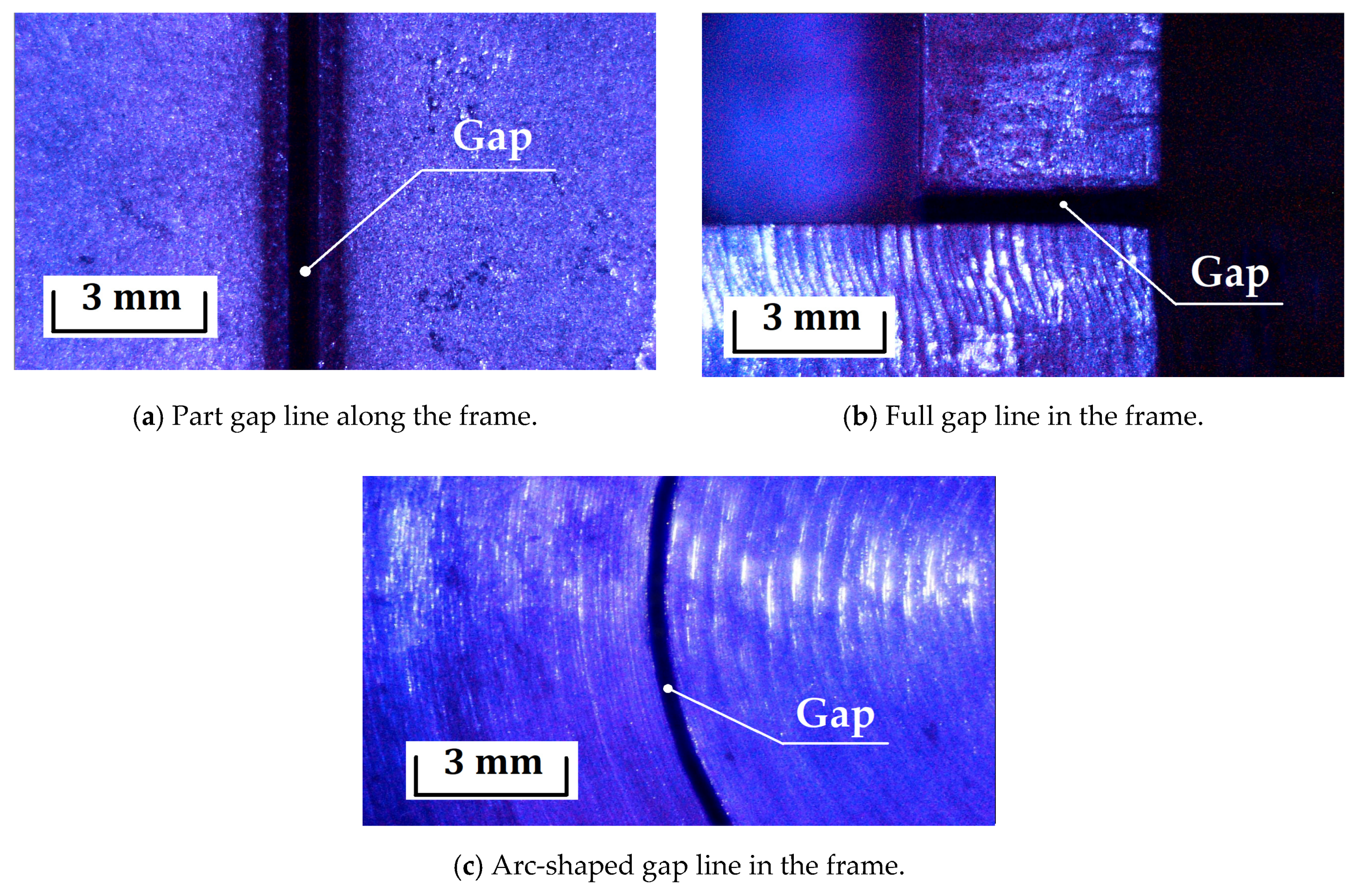

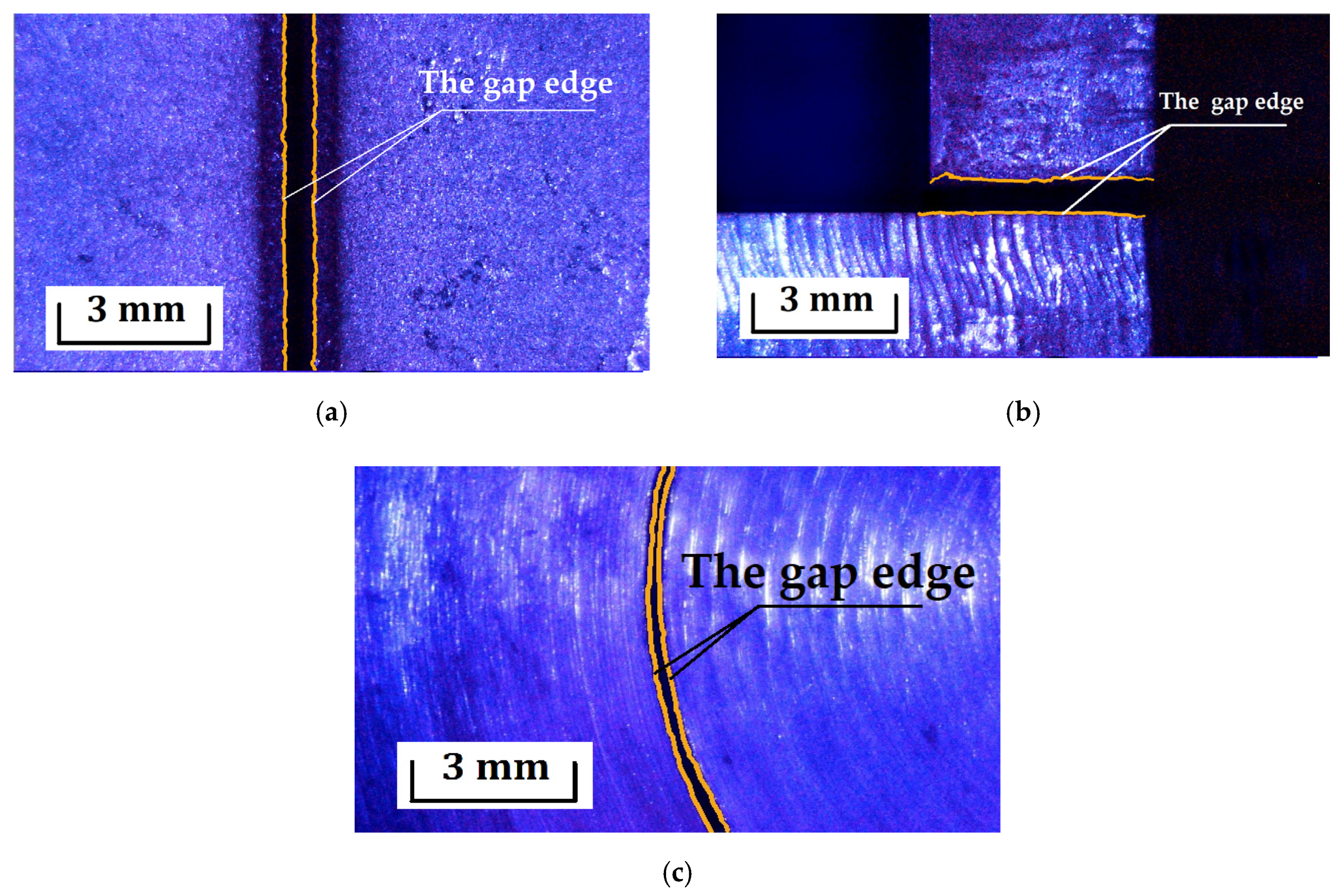

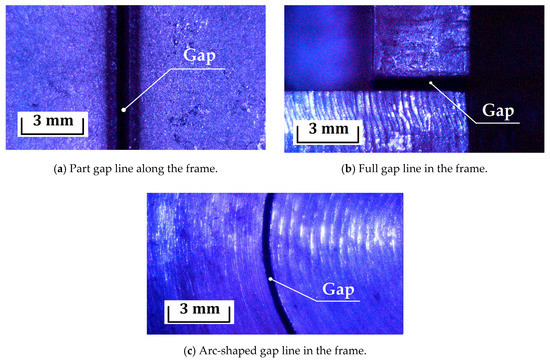

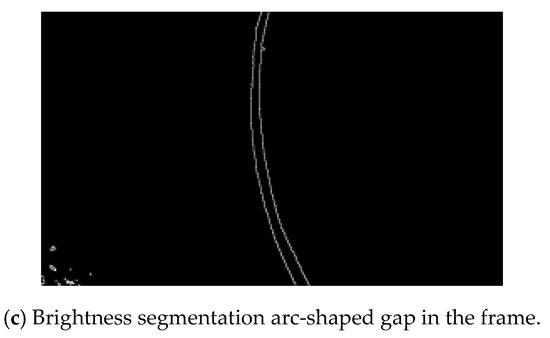

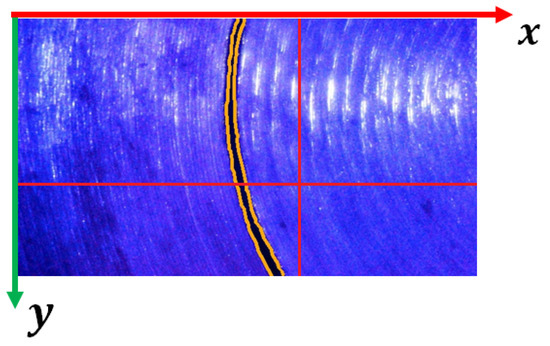

Assessment of gaps between welded workpieces is performed based on the result of brightness segmentation of the image. To do this, one can take advantage of the fact that when there is sufficient light in the image, the gap is represented by a continuous dark stripe (Figure 11).

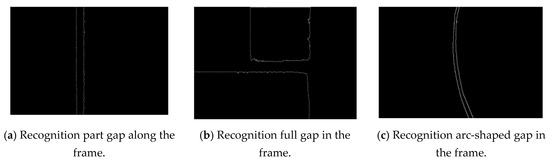

Figure 11.

Images of gap lines between welded workpieces with the LRC-W complex obtained from a CCD camera of HD resolution.

The images of the gap presented in Figure 11 were obtained in HD resolution from an STC-HD93DV CCD camera mounted on the LRC-W tool according to the scheme shown in Figure 2. The LED illumination system described in [20] was used. Figure 11a shows an image containing part of the gap (the line continues beyond the frame), Figure 11b shows an image containing the entire gap between the workpieces being welded (including the ends of the line), and Figure 11c shows an arc-shaped gap.

For line segmentation, the brightness range is determined and threshold selection is performed. However, welded metal surfaces illuminated by LEDs to reduce image noise may have different reflection coefficients depending on the product and, as a result, require a different pixel selection range.

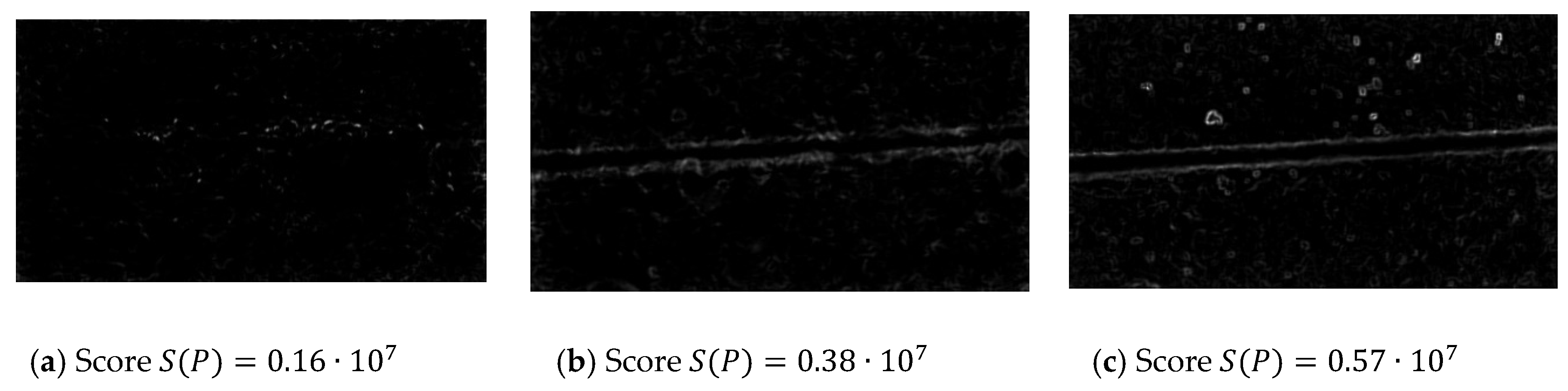

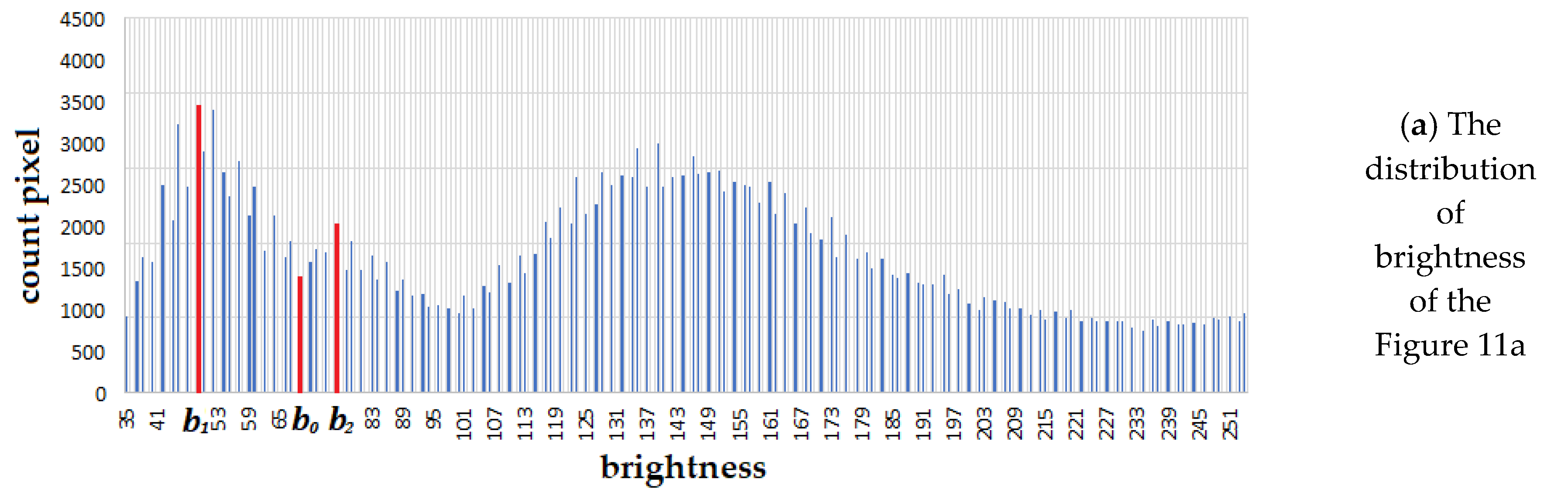

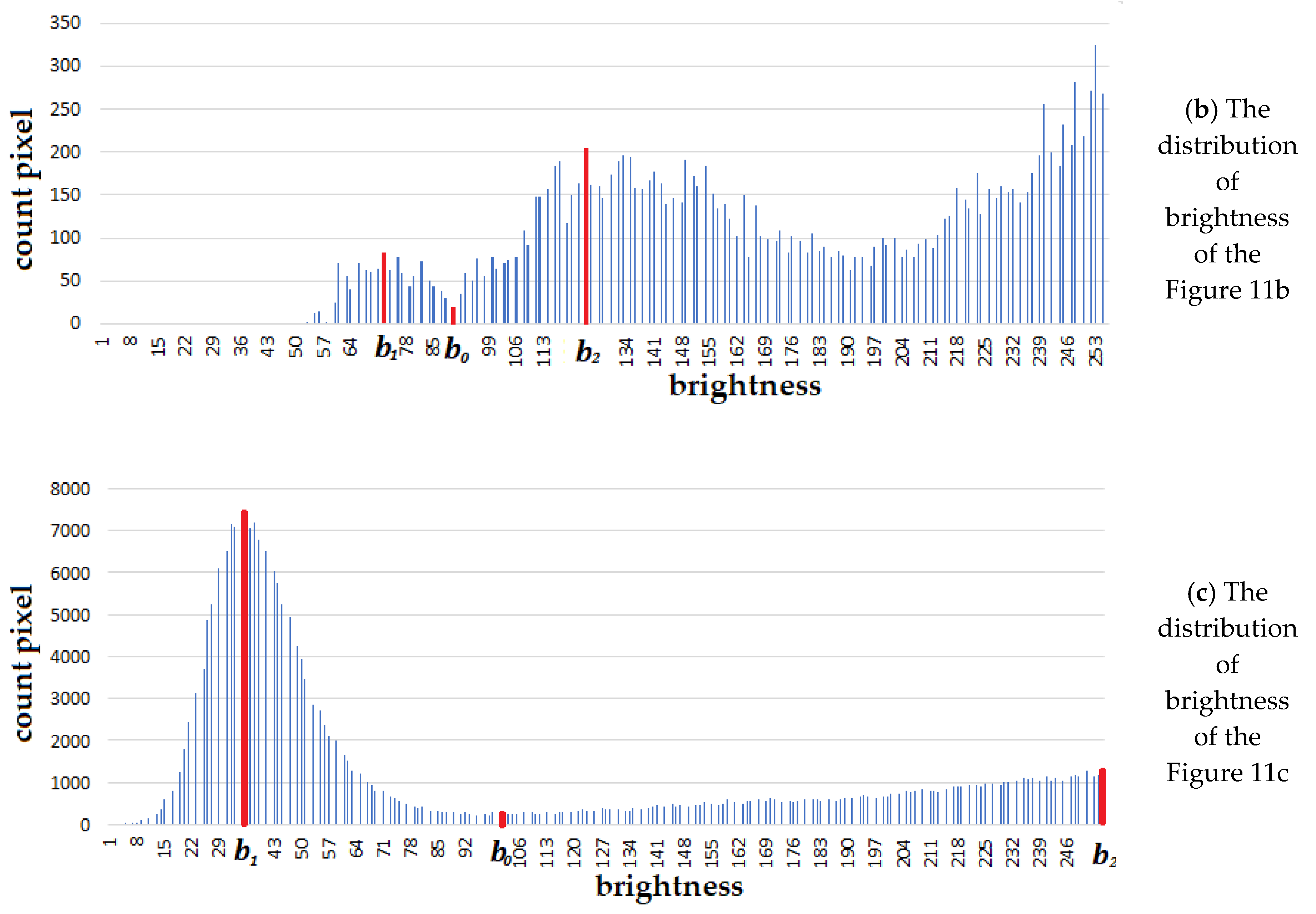

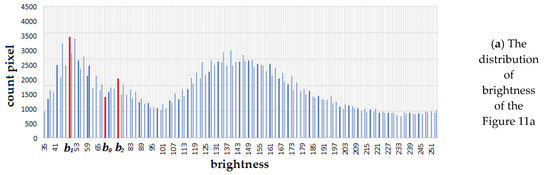

To determine the range, the distribution of image pixels by brightness value in the HSV [33] space is calculated (Figure 12), where brightness is represented by a value from 0 to 255 (0 is low brightness, and 255 is high brightness).

Figure 12.

Histograms of the brightness distribution of pixels in the HSV space of the images in Figure 11 respectively.

The distribution graph shows that the images have several local maxima; the two relevant ones characterize the dominant brightness of the gap and of the metal surface of the welded workpieces, respectively. Gap pixels are those whose brightness lies in the segment from 0 to the point of the local minimum of the distribution on the interval between these two maxima. If there are many local maxima, the first two, that is, those with the lowest brightness values, are used.

Analysis of the histograms showed that the vast majority of pixels have the maximum brightness of 255 in the HSV space. Since pixels with maximum brightness are of no interest for this study, the value 255 is excluded from the distribution.
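The threshold rule described above can be sketched as follows, assuming integer brightness values in 0..255. The function names are hypothetical. Real histograms are noisy, so some smoothing before peak detection would likely be needed in practice; that step is omitted here.

```python
def brightness_histogram(values):
    """256-bin histogram of integer brightness values with the saturated bin (255) removed."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    hist[255] = 0  # saturated pixels are of no interest and are cut out
    return hist


def gap_threshold(values):
    """Upper brightness bound t such that gap pixels are those with brightness in [0, t].

    The two lowest-brightness local maxima are taken as the gap and surface peaks,
    and t is the (first) minimum of the histogram between them.
    """
    hist = brightness_histogram(values)
    padded = [0] + hist + [0]  # padded[i + 1] == hist[i]
    peaks = [i for i in range(256)
             if hist[i] > 0 and padded[i + 1] >= padded[i] and padded[i + 1] > padded[i + 2]]
    if len(peaks) < 2:
        raise ValueError("expected at least two local maxima (gap and surface)")
    m1, m2 = peaks[0], peaks[1]
    return min(range(m1, m2 + 1), key=lambda i: hist[i])
```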

Segmentation of the gap in the original image obtained from the CCD camera consists of the following sequence of actions:

- Conversion of the original image to grayscale (brightness) in the HSV color space;

- Brightening the image by a factor of 1.5;

- Threshold selection (with binarization) of the image by brightness from 0 to the local-minimum threshold defined above;

- Applying the morphological closing transformation (dilation followed by erosion) with a 3 × 3 kernel;

- Calculating the gradient with a 2 × 2 kernel.
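The segmentation steps above can be sketched without external libraries on a 2D list of brightness values (with OpenCV, the same steps map naturally onto thresholding and `cv2.morphologyEx`). Assumptions: the threshold `t` is applied after brightening, out-of-image pixels are ignored by the morphological operators, and the 2 × 2 "gradient" is read as a morphological gradient (dilation minus erosion), which the text does not state explicitly.

```python
def brighten(img, factor=1.5):
    """Scale brightness by `factor`, clipping to 255."""
    return [[min(255, int(v * factor)) for v in row] for row in img]


def threshold(img, t):
    """Binarize: 1 where brightness is in [0, t] (candidate gap pixels), else 0."""
    return [[1 if v <= t else 0 for v in row] for row in img]


def _morph(mask, k, op):
    """Apply `op` (max = dilation, min = erosion) over a k x k window; out-of-image pixels are ignored."""
    h, w = len(mask), len(mask[0])
    offs = range(-(k // 2), k - k // 2)
    return [[op(mask[y + dy][x + dx]
                for dy in offs for dx in offs
                if 0 <= y + dy < h and 0 <= x + dx < w)
             for x in range(w)]
            for y in range(h)]


def close(mask, k=3):
    """Morphological closing: dilation followed by erosion; fills small holes in the gap."""
    return _morph(_morph(mask, k, max), k, min)


def morph_gradient(mask, k=2):
    """Morphological gradient: dilation minus erosion, leaving the outline of the gap region."""
    dil, ero = _morph(mask, k, max), _morph(mask, k, min)
    return [[a - b for a, b in zip(row_d, row_e)] for row_d, row_e in zip(dil, ero)]


def segment_gap(gray, t, factor=1.5):
    """Brighten, threshold, close (3 x 3), then take the 2 x 2 morphological gradient."""
    return morph_gradient(close(threshold(brighten(gray, factor), t)))
```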

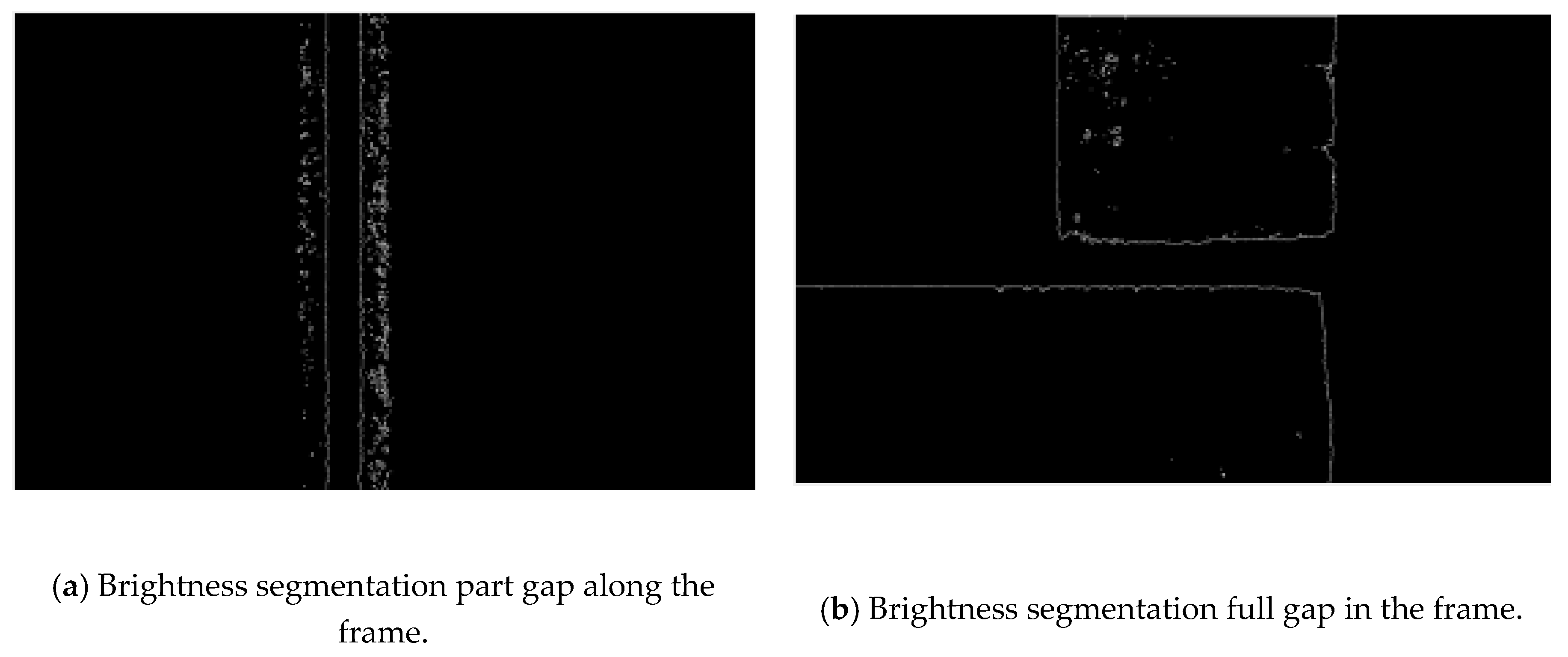

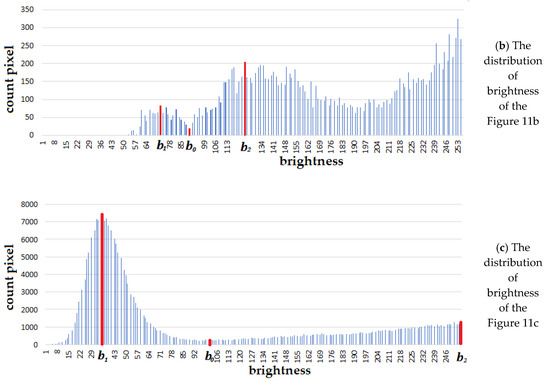

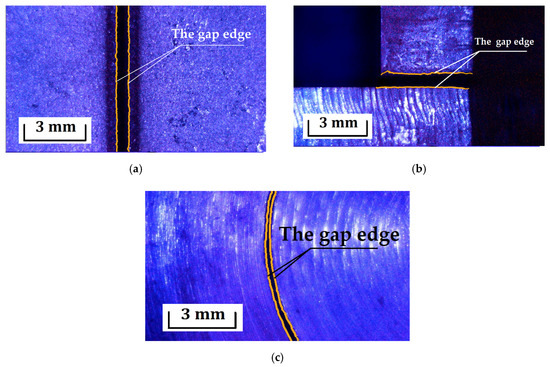

Figure 13.

The result of brightness segmentation of the gap of the welded workpieces.

Thus, the boundaries of the threshold range for segmenting the gap between the workpieces to be welded were determined from the extreme values of the brightness distribution of all image pixels.

The edges of the gap between the welded workpieces are characterized by two conditionally parallel lines. Their recognition, based on segmentation results, is performed using contour analysis tools.

In computer vision, a contour is an external boundary that describes the outline of a geometric object in the image [34]. All contours of an image can be obtained with standard computer vision libraries (for example, OpenCV). The result is an array of contours, each represented by a set of segments, and each segment is defined by two points on the plane.

To determine the pairs of contours that characterize the edge lines of the gap between the workpieces to be welded, the following conditions were used:

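where the lengths of the contours are used in Equations (1) and (2) and their Hu moments in Equation (3); the Hu moment of the second contour is calculated in the same way as that of the first.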

Equation (1) defines the proximity of the two contours in terms of their lengths, with a proximity coefficient. Equation (2) selects the contours of the pair by length from among all contours, with a selection coefficient. Equation (3), based on Hu moments, determines the statistical similarity of the contours, with a similarity parameter.

If or , where | | denotes the cardinality of a set, the segmentation result contains strong interference or false geometric outlines similar to the joint edges.
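Because the exact form of Equations (1) to (3) is not spelled out in the text, the following is one plausible reading, not the authors' exact conditions. It treats each contour as an open polyline, uses hypothetical coefficients `k_prox`, `k_sel`, and `k_sim` in place of the proximity, selection, and similarity coefficients, and compares shapes with Hu moments computed from the contour points (OpenCV's `cv2.moments` integrates over the contour polygon instead), combined with the log-scaled I1 distance that OpenCV's `cv2.matchShapes` uses for `CONTOURS_MATCH_I1`.

```python
import math


def contour_length(points):
    """Length of an open polyline given as a list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def hu_moments(points):
    """Seven Hu invariants of a point set, each contour point treated as unit mass."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n

    def eta(p, q):  # normalized central moment
        mu = sum((x - cx) ** p * (y - cy) ** q for x, y in points)
        return mu / n ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return [
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ]


def _signed_log(h):
    return (1.0 if h > 0 else -1.0) * math.log10(abs(h))


def hu_distance(h1, h2, eps=1e-5):
    """Sum of |1/mA - 1/mB| over log-scaled Hu moments, skipping near-zero moments."""
    d = 0.0
    for a, b in zip(h1, h2):
        if abs(a) > eps and abs(b) > eps:
            la, lb = _signed_log(a), _signed_log(b)
            if la != 0 and lb != 0:
                d += abs(1 / la - 1 / lb)
    return d


def select_edge_pairs(contours, k_prox=0.2, k_sel=0.5, k_sim=0.1):
    """Index pairs (i, j) of contours that are long, similar in length, and similar in shape."""
    lengths = [contour_length(c) for c in contours]
    hus = [hu_moments(c) for c in contours]
    l_max = max(lengths)
    pairs = []
    for i in range(len(contours)):
        for j in range(i + 1, len(contours)):
            li, lj = lengths[i], lengths[j]
            close_in_length = abs(li - lj) <= k_prox * max(li, lj)  # assumed form of Eq. (1)
            long_enough = min(li, lj) >= k_sel * l_max              # assumed form of Eq. (2)
            similar = hu_distance(hus[i], hus[j]) <= k_sim          # assumed form of Eq. (3)
            if close_in_length and long_enough and similar:
                pairs.append((i, j))
    return pairs
```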

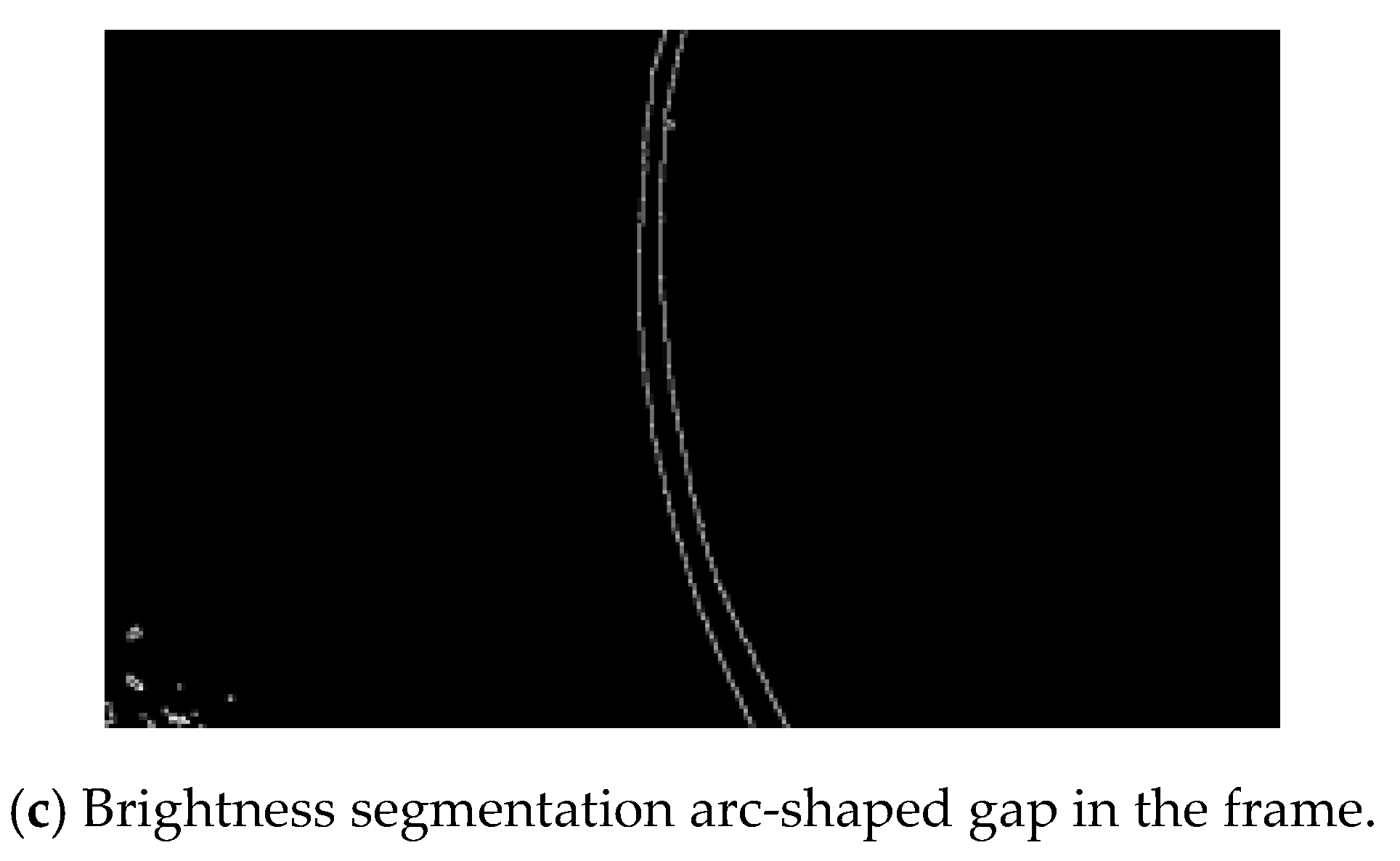

The recognition problem must be extended to the case in which the gap edges in the image merge into the outer borders of the workpieces, because such a transition point can be the start or end point of laser welding (Figure 14).

Figure 14.

Result of recognition of the gap based on segmentation results.

The sections that represent the joint edges are identified by a characteristic attribute: locally, they are conditionally parallel contours (a slight angular deviation of the segments relative to each other is permitted while still treating them as parallel).

Since the contours can consist of a large number of segments, they are simplified with the Douglas-Peucker algorithm [35] to reduce the amount of computation.
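The Douglas-Peucker simplification mentioned above can be sketched in a few lines. This version measures the deviation of intermediate points from the chord segment between the endpoints and recursively keeps the point with the largest deviation whenever it exceeds `epsilon`. The tolerance used by the authors is not stated, so `epsilon` is an assumed parameter; in OpenCV, `cv2.approxPolyDP` provides this kind of simplification.

```python
import math


def _point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def douglas_peucker(points, epsilon):
    """Simplify a polyline, keeping only points that deviate more than epsilon."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _point_segment_distance(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= epsilon:
        return [a, b]
    left = douglas_peucker(points[: idx + 1], epsilon)
    right = douglas_peucker(points[idx:], epsilon)
    return left[:-1] + right  # drop the duplicated split point
```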

Segments belonging to the joint edges of the welded workpieces are determined by iterating over the segments of the two contours and comparing them pairwise. Two segments form the joint edges if the following conditions are met:

where is the angle between the segments, , ( and similarly), is defined as , and is the coefficient that characterizes the width of the gap.
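Since the exact conditions are not reproduced here, the pairwise comparison can be sketched under an assumed reading: two segments, one from each simplified contour, form joint edges if the angle between them does not exceed a tolerance and the midpoint of one lies within a gap-width bound of the line through the other. `max_angle_deg` and `max_width` are hypothetical stand-ins for the angle threshold and the gap-width coefficient.

```python
import math


def segment_angle(s1, s2):
    """Unsigned angle in degrees (0..90) between the directions of two segments."""
    (ax, ay), (bx, by) = s1
    (cx, cy), (dx, dy) = s2
    a1 = math.atan2(by - ay, bx - ax)
    a2 = math.atan2(dy - cy, dx - cx)
    diff = abs(a1 - a2) % math.pi  # direction reversal does not matter
    return math.degrees(min(diff, math.pi - diff))


def midpoint_line_distance(s1, s2):
    """Distance from the midpoint of s1 to the infinite line through s2."""
    (ax, ay), (bx, by) = s1
    (cx, cy), (dx, dy) = s2
    mx, my = (ax + bx) / 2, (ay + by) / 2
    ux, uy = dx - cx, dy - cy
    return abs(ux * (my - cy) - uy * (mx - cx)) / math.hypot(ux, uy)


def edge_pairs(contour_a, contour_b, max_angle_deg, max_width):
    """Index pairs (i, j) of conditionally parallel segments lying within max_width."""
    segs_a = list(zip(contour_a, contour_a[1:]))
    segs_b = list(zip(contour_b, contour_b[1:]))
    return [(i, j)
            for i, sa in enumerate(segs_a)
            for j, sb in enumerate(segs_b)
            if segment_angle(sa, sb) <= max_angle_deg
            and midpoint_line_distance(sa, sb) <= max_width]
```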

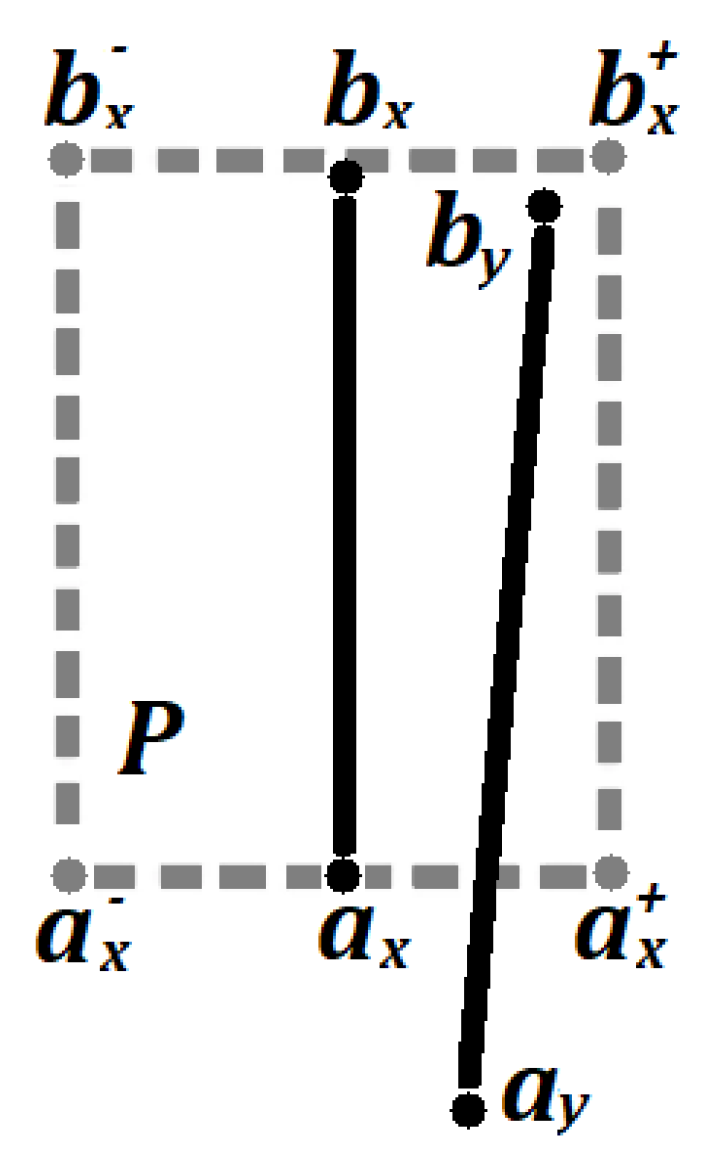

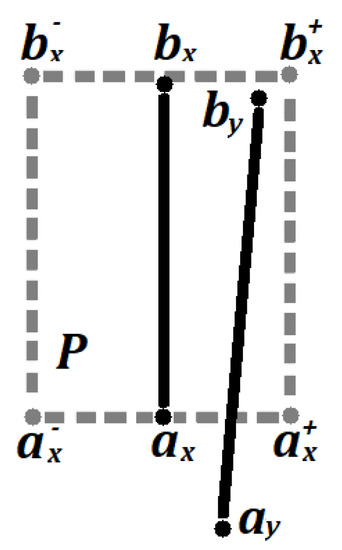

If the set defined by Equation (5) is not empty, membership is verified via or , where is a closed region bounded by the segments (Figure 15).
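One way to perform a membership check against a closed region bounded by two edge segments is an even-odd (ray casting) point-in-polygon test. This is an assumed interpretation of the check; the helper names are hypothetical, and the region is built by joining the endpoints of two segments that are assumed to point in the same direction.

```python
def region_from_segments(seg_a, seg_b):
    """Closed quadrilateral bounded by two segments assumed to point the same way (hypothetical helper)."""
    return [seg_a[0], seg_a[1], seg_b[1], seg_b[0]]


def point_in_polygon(point, polygon):
    """Even-odd (ray casting) test; points exactly on the boundary may go either way."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through the point
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside
```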

Figure 15.

Scheme for determining the segments that belong to the edges of the joint and the parts to be welded (© 2020 IEEE [5]).

It is worth noting that the values of , , , and are two-dimensional vectors of the flat image space.

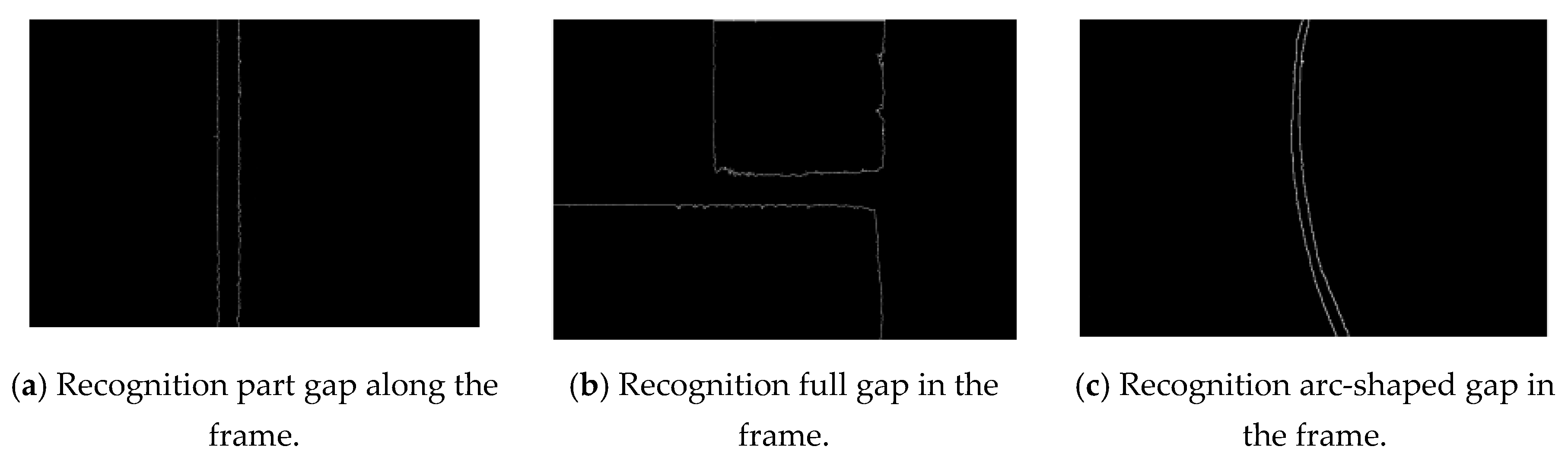

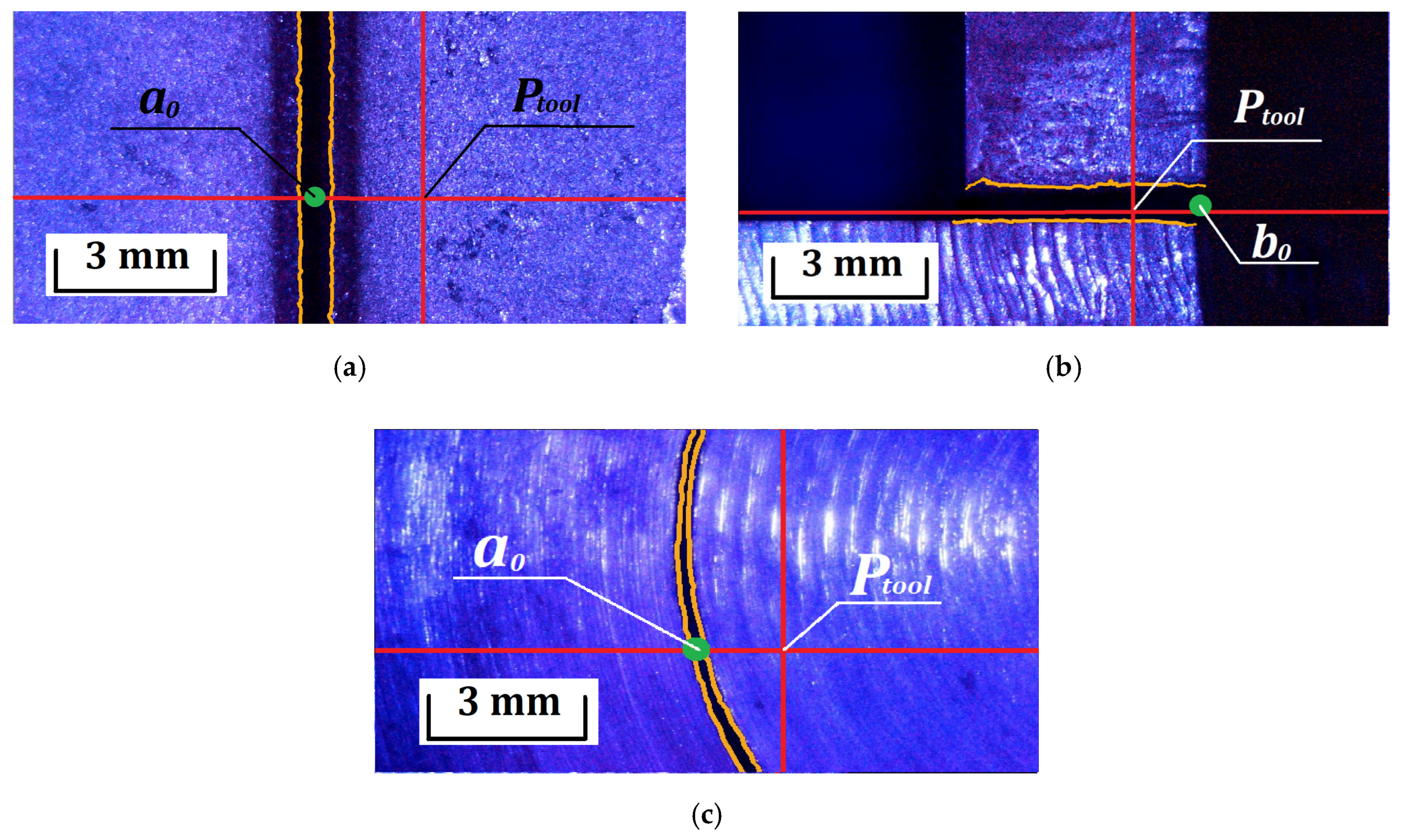

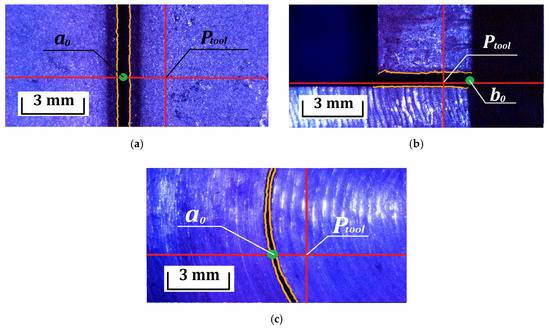

The resulting sets of segments and represent the edges of the workpiece gap to be welded. An example of the recognition result is shown in Figure 16.

Figure 16.

The result of recognizing the gap between the welded workpieces in the image obtained from the CCD camera of the LRC-W, with the recognition parameter values used: (a) w = 65; (b) w = 55; (c) w = 30.

In this way, the rules and parameters for recognizing the edges to be welded from the segmentation result, as a pair of continuous, conditionally parallel lines, were formalized.

5. Determining the Correct Position of the LRC-W Tool and Translation of the Coordinates from the Image Space to the LRC-W Workspace

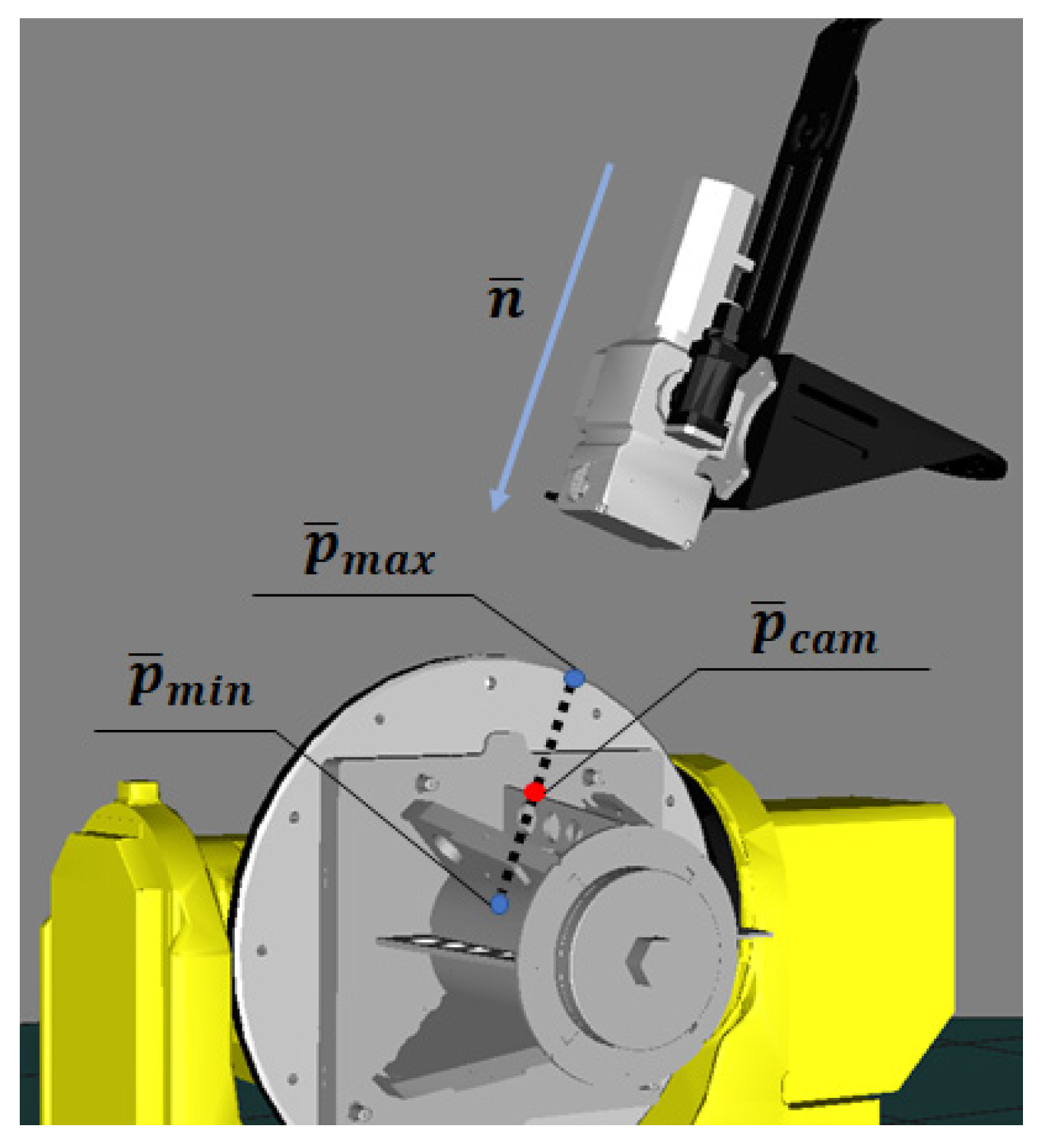

The position of the LRC-W tool at a point of the motion path should be corrected when the current position of the tool is not located inside the gap between the workpieces to be welded.

The main candidates for the correct position of points relative to the gap are:

- The starting/ending point of welding , defined by the expression , where points and are the extreme positions of the contours and (not counting the intersection with the image borders);

- The closest point of the gap, defined by the expression , where the line is a straight line on the plane passing through the point in the direction of (here, ).

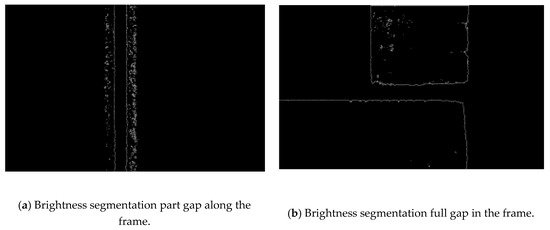

The correct position of the tool is taken as the welding start/end point if one is present in the image, and as the closest point of the gap in all other cases. The result of applying the correction point calculation method is shown in Figure 17.
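The selection rule above can be sketched as follows. The formulas are assumptions rather than the paper's expressions: the start/end point is taken as the midpoint of the two extreme contour points, and the closest point is the orthogonal projection of the current tool point onto a center line running midway between two locally parallel edge segments.

```python
def midpoint(p, q):
    """Midpoint of two image points."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def project_onto_line(point, origin, direction):
    """Orthogonal projection of `point` onto the line origin + s * direction."""
    dx, dy = direction
    s = ((point[0] - origin[0]) * dx + (point[1] - origin[1]) * dy) / (dx * dx + dy * dy)
    return (origin[0] + s * dx, origin[1] + s * dy)


def correction_point(tool, edge_a, edge_b, start_end=None):
    """Pick the corrected trajectory point in image coordinates.

    tool: current tool point in the image; edge_a, edge_b: locally parallel edge
    segments ((x0, y0), (x1, y1)) assumed to point the same way; start_end: optional
    pair of extreme contour points when the weld start/end is visible in the frame.
    """
    if start_end is not None:
        return midpoint(*start_end)  # start/end point of welding
    origin = midpoint(edge_a[0], edge_b[0])  # a point on the gap center line
    direction = (edge_a[1][0] - edge_a[0][0], edge_a[1][1] - edge_a[0][1])
    return project_onto_line(tool, origin, direction)  # closest point of the gap
```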

Figure 17.

The result of determining the correction point in the case of: (a) part of the gap in the frame; (b) the full gap in the frame; (c) an arc-shaped gap in the frame.

Relative to the correct start and end points of welding, sections of acceleration and deceleration of the tool are designed to ensure that the target speed is reached at the edges of the processing zone in order to reduce edge defects [36].

In this way, candidate correct positions of the LRC-W tool relative to the recognized edges of the gap between the workpieces were identified.

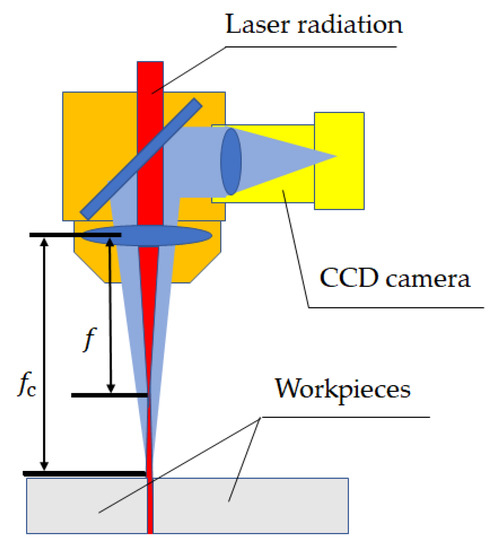

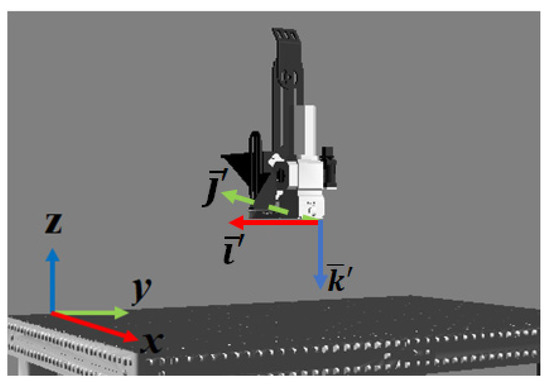

The position of the LRC-W tool in the workspace is described by the position of the laser focus and the orientation of the laser beam, represented by Euler angles [37].

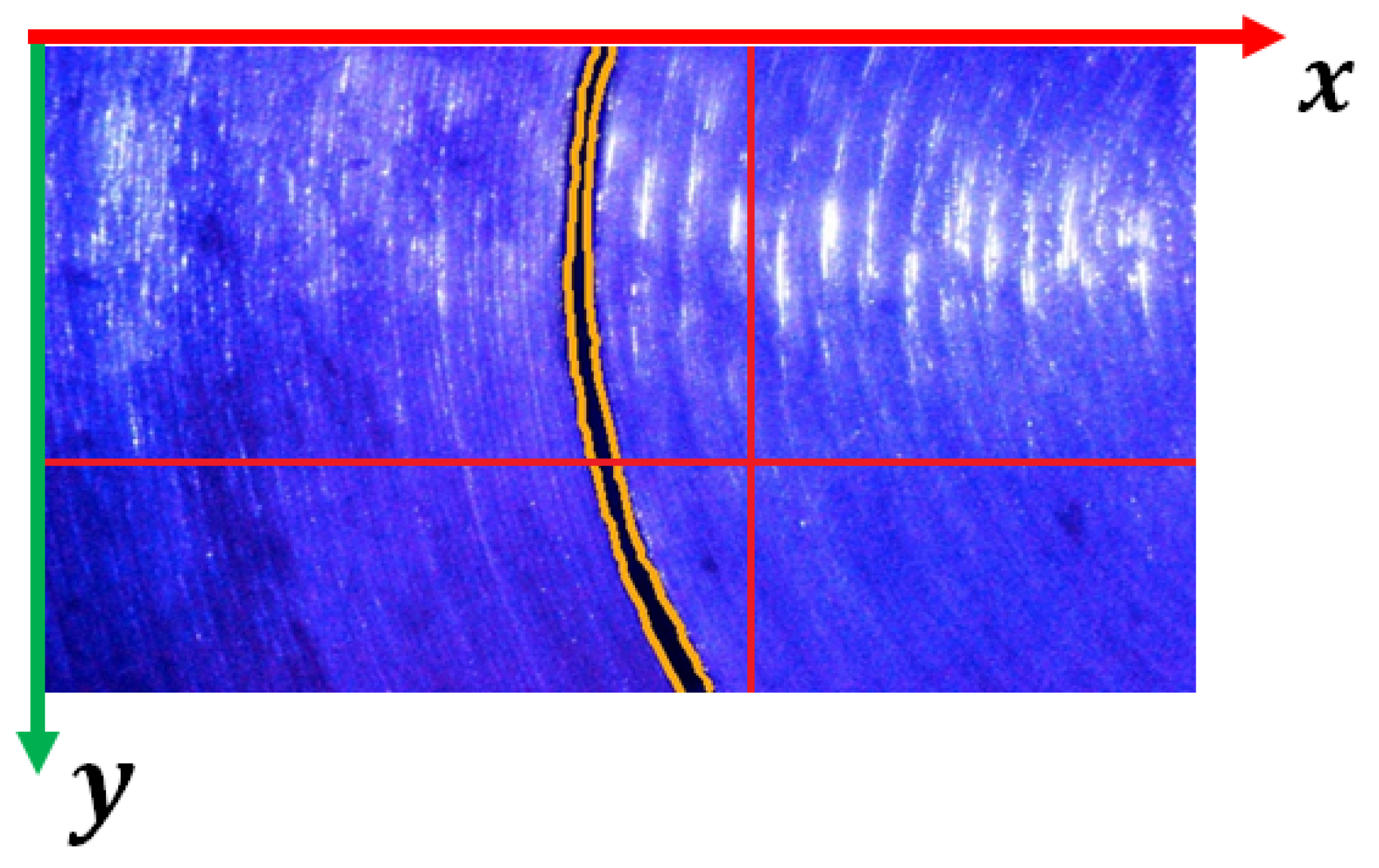

Image pixel coordinates are measured from the upper-left corner of the image, with the axes directed along the image edges (Figure 18).

Figure 18.

Image coordinate system.

The position of an arbitrary point of a flat image is translated into the space of an industrial complex by the transformation:

where is the scale factor and is a transformation matrix defined as:

Figure 19.

Location of the image base relative to the base of the LRC-W workspace.

Here, , , and are the basis vectors of the LRC-W workspace; , , and are the rotation matrices by the angle about , , and , respectively; is the angle vector that characterizes the location of the CCD camera's field of view in the LRC-W space at the neutral orientation , and determines the deviation of the tool at the current orientation from the neutral .
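The transformation can be sketched with plain rotation matrices. Everything beyond the general structure is an assumption: the Z-Y-X composition order of the Euler angles, mapping pixel offsets from a reference pixel `center_px` (assumed to image the world point `origin`) with a uniform scale `scale` in mm per pixel, and composing the camera's neutral orientation with the tool deviation by matrix multiplication. Because the image v axis points down, the handedness of the resulting frame has to be matched to the actual camera mounting.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3 x 3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def euler_to_matrix(angles):
    """R = Rz(gz) @ Ry(gy) @ Rx(gx); this Z-Y-X order is an assumption."""
    gx, gy, gz = angles
    return matmul(rot_z(gz), matmul(rot_y(gy), rot_x(gx)))


def pixel_to_workspace(pixel, center_px, scale, origin, camera_angles, tool_deviation):
    """Map an image pixel (u, v) to a 3D workspace point.

    Assumptions: `center_px` images the world point `origin`; `scale` is a uniform
    mm-per-pixel factor; the image plane is the local XY plane; the tool deviation
    is applied after the camera's neutral rotation.
    """
    u, v = pixel
    local = (scale * (u - center_px[0]), scale * (v - center_px[1]), 0.0)
    m = matmul(euler_to_matrix(tool_deviation), euler_to_matrix(camera_angles))
    return tuple(origin[i] + sum(m[i][k] * local[k] for k in range(3)) for i in range(3))
```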

In this way, the calculation model for transferring the correct position from the flat image space to the LRC-W workspace was formalized, considering the orientation of the tool and the camera position.

6. Discussion

The results of the study show that the developed mechanisms make it possible to automatically correct the points of the planned trajectory of the industrial LRC-W tool at the pre-processing stage by calculating the correct position relative to the edges of the gap between workpieces, recognized in the image obtained from the CCD camera installed on the LRC-W tool.

To make the gap recognizable in the image obtained from the CCD camera, the inspected area is illuminated by LEDs, which reduces the noise level. The bilateral placement of the LEDs additionally darkens the gap, creating an area of dark pixels.

Deviations of the LRC-W tool position at the points of the planned trajectory, resulting from the transfer from the CAM model space, produce a blurred image in which the objects of interest cannot be recognized. The problem is solved by automatically focusing the CCD camera, using the movement of the tool along its own orientation axis as the focusing mechanism. During focusing, image clarity is evaluated with the gradient method, and the most focused image is selected. From the result of focusing the CCD camera and the known difference between the camera focus and the laser focus, the current focal length of the laser radiation at the point of the planned trajectory is determined and corrected.

Recognition of the gap edges is preceded by contrast segmentation of the desired object by brightness. The boundaries of the threshold range of pixel brightness that characterizes the desired area are the extreme values of the brightness distribution of all image pixels. On the one hand, this method makes it possible to determine the desired gap and make the necessary corrections relative to it; on the other hand, it imposes additional requirements on the illumination of the inspected area, so that the line forms the area of the darkest pixels in the image. Our research has shown that a well-illuminated image area ensures segmentation of the desired line.

As the intermediate results of the study show, segmentation alone does not determine the desired edges of the gap unambiguously, since the image may contain other elements whose brightness falls within the threshold selection range. This limitation has also been noted by other researchers. Therefore, the segmentation results serve as input for a separate step that recognizes the edges of the gap to be welded. The features characterizing the desired edges are formalized as a set of rules that select those segments in the image that are conditionally parallel and lie at a distance from each other within the specified gap width.
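The edge-selection rules can be expressed as a pairwise filter over line segments extracted from the segmented mask. In this sketch, which is not the authors' implementation, segments are given as endpoint pairs in pixels, "conditionally parallel" is modeled as an angular difference below a hypothetical tolerance `max_angle_deg`, and the separation is measured as the perpendicular distance from one segment's midpoint to the line through the other. The width limits `w_min` and `w_max` stand in for the specified gap width.

```python
import math
from itertools import combinations
from typing import List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def direction(seg: Segment) -> float:
    """Undirected segment angle in [0, pi)."""
    (x1, y1), (x2, y2) = seg
    return math.atan2(y2 - y1, x2 - x1) % math.pi


def angle_between(a: Segment, b: Segment) -> float:
    """Smallest angle between two undirected segments."""
    d = abs(direction(a) - direction(b))
    return min(d, math.pi - d)


def distance_to_line(p: Point, seg: Segment) -> float:
    """Perpendicular distance from point p to the infinite line through seg."""
    (x1, y1), (x2, y2) = seg
    length = math.hypot(x2 - x1, y2 - y1)
    if length == 0:
        raise ValueError("degenerate segment")
    return abs((x2 - x1) * (y1 - p[1]) - (x1 - p[0]) * (y2 - y1)) / length


def midpoint(seg: Segment) -> Point:
    (x1, y1), (x2, y2) = seg
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def edge_pairs(segments: List[Segment], w_min: float, w_max: float,
               max_angle_deg: float = 5.0) -> List[Tuple[int, int]]:
    """Index pairs of segments that are near-parallel and spaced like a gap."""
    tol = math.radians(max_angle_deg)
    pairs = []
    for i, j in combinations(range(len(segments)), 2):
        a, b = segments[i], segments[j]
        if angle_between(a, b) > tol:
            continue  # not conditionally parallel
        gap = distance_to_line(midpoint(b), a)
        if w_min <= gap <= w_max:
            pairs.append((i, j))
    return pairs
```

For example, with segments along y = 0, y ≈ 3, y = 50, and x = 0, and a gap width of 1 to 5 pixels, only the first two segments form a pair; the distant parallel line and the perpendicular line are rejected by the width and angle rules, respectively.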

The candidates for the correct position of the planned trajectory point relative to the current tool position are the closest point and the two edge points of the gap. Owing to the technological solutions adopted in the project, the image in most cases contains a single gap, relative to which the correct position is determined. However, multiple lines may still appear. The developed methods can recognize several gaps in a single image, but the methods used to select candidates for the correct tool position do not ensure a technologically correct position in that case. Developing rules for determining the correct position when the image contains several gaps with different relative positions therefore remains an open issue and a direction for the authors' further work.
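One way to derive the candidate points from a recognized edge pair is sketched below. It assumes that the current tool position projects to a known pixel `tool_px` (for example, the image centre), builds the gap centre line by averaging the two edges, and returns the point on that line nearest to `tool_px` together with the line's two end points. Reading "two edge points" as these end points is our interpretation, and all names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def _midpoint(p: Point, q: Point) -> Point:
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def centre_line(edge_a: Segment, edge_b: Segment) -> Segment:
    """Average two roughly parallel edges into the gap centre line."""
    a1, a2 = edge_a
    b1, b2 = edge_b
    # Orient edge_b like edge_a so that matching end points are averaged.
    if math.dist(a1, b1) + math.dist(a2, b2) > math.dist(a1, b2) + math.dist(a2, b1):
        b1, b2 = b2, b1
    return _midpoint(a1, b1), _midpoint(a2, b2)


def closest_point_on_segment(p: Point, seg: Segment) -> Point:
    """Orthogonal projection of p onto seg, clamped to the segment."""
    (x1, y1), (x2, y2) = seg
    dx, dy = x2 - x1, y2 - y1
    denom = dx * dx + dy * dy
    t = 0.0 if denom == 0 else ((p[0] - x1) * dx + (p[1] - y1) * dy) / denom
    t = max(0.0, min(1.0, t))  # stay within the visible part of the gap
    return (x1 + t * dx, y1 + t * dy)


def candidate_points(edge_a: Segment, edge_b: Segment,
                     tool_px: Point) -> Tuple[Point, Point, Point]:
    """Return (nearest, start, end) candidate points on the gap centre line."""
    start, end = centre_line(edge_a, edge_b)
    return closest_point_on_segment(tool_px, (start, end)), start, end
```

For edges along y = 0 and y = 4 (given in opposite directions) and a tool projection at (30, 10), the centre line runs from (0, 2) to (100, 2), and the nearest candidate is (30, 2).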

The coordinates of the correct position are transferred from the flat image space into the three-dimensional workspace of the industrial LRC-W using the developed calculation model. The model takes into account the position of the LRC-W tool and the location of the CCD camera in space, which allows the correct position of the robotic arm in world space to be determined unambiguously at a given focal length.
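The image-to-workspace transfer can be illustrated with a standard pinhole-camera chain of homogeneous transforms. This sketch is not the authors' calculation model: it assumes a calibrated, distortion-free camera with hypothetical intrinsics `(fx, fy, cx, cy)` in pixels, a known distance `depth` along the optical axis (for instance, the distance found during autofocusing), a camera-to-tool transform from hand-eye calibration, and a tool pose reported by the robot controller, all as 4×4 matrices.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous transform
Vec3 = Tuple[float, float, float]


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform_point(t: Matrix, p: Vec3) -> Vec3:
    """Apply a homogeneous transform to a 3-D point."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3]
                 for i in range(3))


def pixel_to_camera(u: float, v: float, depth: float,
                    fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Back-project pixel (u, v) to the camera frame at the given depth."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def pixel_to_world(u: float, v: float, depth: float,
                   intrinsics: Sequence[float],
                   t_world_tool: Matrix, t_tool_camera: Matrix) -> Vec3:
    """Map an image point to world coordinates via tool pose and camera mounting."""
    fx, fy, cx, cy = intrinsics
    p_cam = pixel_to_camera(u, v, depth, fx, fy, cx, cy)
    t_world_camera = matmul4(t_world_tool, t_tool_camera)
    return transform_point(t_world_camera, p_cam)
```

With the tool at (500, 100, 300) mm and rotated 180° about X (pointing down), a camera offset of 50 mm along the tool X axis, intrinsics (1000, 1000, 320, 240), and depth 200 mm, pixel (420, 240) maps to (570, 100, 100) mm. A real system would also correct lens distortion before back-projection.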

The results were applied in the authors’ automated laser welding control system [38] to correct the points of the tool trajectory transferred from the CAM system [15] to the workspace of the LRC-W. The solution was implemented as an automated subsystem that operates at the preprocessing stage of laser welding: it performs a preliminary pass along the trajectory and corrects its points relative to the gap.

Initial experimental studies of the automated subsystem showed that the correction subsystem functioned successfully; some of the results are shown in the figures of this paper. The authors now plan to develop a methodology for full-scale testing to determine the limiting conditions under which the developed methods can operate, which is a further task of their research.

Author Contributions

Project administration, L.A.; conceptualization and methodology, R.D. and P.V.; software, R.D.; writing, R.D.; review and editing, R.D. and L.A.; validation, R.D., L.A., and P.V.; experimental research, L.A. and R.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The research was carried out at the Scientific and Educational Center for the Introduction of Laser Technologies, Vladimir State University named after Alexander and Nikolay Stoletovs.

Conflicts of Interest

The authors declare no conflict of interest.

Patents

The results of this work were applied in the automated process control system for laser robotic processing, which received a certificate of registration (Patent RF No. 2020661002, 2020).

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CAD | computer-aided design |
| CAM | computer-aided manufacturing |
| CAPP | computer-aided process planning |
| CCD | charge-coupled device |
| HSV | hue, saturation, value |
| LED | light-emitting diode |
| LRC-W | laser robotic complex-welding |
| TCP | tool center point |

References

- Svenungsson, J.; Choquet, I.; Kaplan, A.F.H. Laser welding process – A review of keyhole welding modelling. Phys. Procedia 2015, 78, 182–191.

- Grigoryants, A.; Shiganov, I.; Misyurov, A. Technological Processes of Laser Processing; Bauman Moscow State Technical University Publishing House: Moscow, Russia, 2006.

- Kovács, A. Integrated task sequencing and path planning for robotic remote laser welding. Int. J. Prod. Res. 2016, 54, 1210–1224.

- Papacharalampopoulos, A.; Stavropoulos, P.; Stavridis, J. Adaptive control of thermal processes: Laser welding and additive manufacturing paradigms. Procedia CIRP 2018, 67, 233–237.

- Pan, Z.; Polden, J.; Larkin, N.; Van Duin, S.; Norrish, J. Recent progress on programming methods for industrial robots. Robot. Comput. Integr. Manuf. 2012, 28, 87–94.

- Shen, W.; Hu, T.; Zhang, C.; Ye, Y.; Li, Z. A welding task data model for intelligent process planning of robotic welding. Robot. Comput. Integr. Manuf. 2020, 64, 101934.

- Maiolino, P.; Woolley, R.; Branson, D.; Benardos, P.; Popov, A.; Ratchev, S. Flexible robot sealant dispensing cell using RGB-D sensor and off-line programming. Robot. Comput. Integr. Manuf. 2017, 48, 188–195.

- Yang, J.Z.; Zhang, Y.; Chen, J.H.; Wang, W.Q.; Liu, Y. Off-line programming system of multi-axis platform for dual beam laser welding. In Proceedings of the 2015 International Conference on Applied Mechanics, Mechatronics and Intelligent Systems (AMMIS2015), World Scientific, Nanjing, China, 19–20 June 2015.

- Polden, J. Automated Offline Programming for Low Volume Robotic Manufacturing. Ph.D. Thesis, Faculty of Engineering, University of Wollongong, Wollongong, Australia, 2014.

- Erdős, G.; Kardos, C.; Kemény, Z.; Kovács, A.; Váncza, J. Process planning and offline programming for robotic remote laser welding systems. Int. J. Comput. Integr. Manuf. 2016, 29, 1287–1306.

- Rong, Z. The analysis and research of welding new technology. J. Eng. Mech. Mach. 2017, 2, 35–39.

- Rodionov, D.; Lyukhter, A.; Prokoshev, V. The mechanisms of constructing trajectories of a laser robotic complex for 3D polygonal models. J. Phys. Conf. Ser. 2018, 132, 012007.

- Lyukhter, A.B.; Zvyagin, M.Y.; Golubev, A.S.; Rodionov, D.V. Perenos koordinat trekhmernykh CAD-modeley v prostranstvo obrabatyvayemykh detaley v robotizirovannykh stanochnykh kompleksakh [Transfer of the coordinates of three-dimensional CAD models into the space of workpieces in robotic machine-tool complexes]. Din. Slozhnykh Sist. XXI Veka 2017, 11, 40–46.

- Njaastad, E.B.; Egeland, O. Automatic touch-up of welding paths using 3D vision. IFAC-PapersOnLine 2016, 49, 73–78.

- Zeng, J.; Chang, B.; Du, D.; Peng, G.; Chang, S.; Hong, Y.; Shan, J. A vision-aided 3D path teaching method before narrow butt joint welding. Sensors 2017, 17, 1099.

- Regaard, B.; Kaierle, S.; Poprawe, R. Seam-tracking for high precision laser welding applications – Methods, restrictions and enhanced concepts. J. Laser Appl. 2009, 21, 183–195.

- Chkalov, R.V.; Kochuev, D.A.; Rodionov, D.V.; Prokoshev, V.G.; Lyukhter, A.B. Laser powder cladding automated control method based on advanced monitoring system of processing area by CCD-camera. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; Volume 896, p. 012128.

- Mitchell, D.; Fang, G. Autonomous weld seam identification and localisation using eye-in-hand stereo vision for robotic arc welding. Robot. Comput. Integr. Manuf. 2013, 29, 288–301.

- Mitchell, D.; Fang, G. Weld seam detection using computer vision for robotic arc welding. In Proceedings of the IEEE International Conference on Automation Science and Engineering (CASE), Seoul, Korea, 20–24 August 2012; IEEE: New York, NY, USA, 2012.

- Shah, H.N.M.; Sulaiman, M.; Shukor, A.Z.; Kamis, Z. An experiment of detection and localization in tooth saw shape for butt joint using KUKA welding robot. Int. J. Adv. Manuf. Technol. 2018, 97, 3153–3162.

- Chen, H.; Lin, T.; Chen, S. Seam tracking and dynamic process control for high precision arc welding. In Robotic Welding, Intelligence and Automation; Springer: Berlin/Heidelberg, Germany, 2011; pp. 193–201.

- Shi, F.; Zhou, L.; Lin, T.; Chen, S. Efficient weld seam detection for robotic welding from a single image. In Robotic Welding, Intelligence and Automation; Springer: Berlin/Heidelberg, Germany, 2007; pp. 289–294.

- Cieszyński, W.; Zięba, M.; Reiner, J. Real time trajectory correction system of optical head in laser welding. Acta Mech. Autom. 2015, 9, 265–269.

- Nilsen, M.; Sikström, F.; Christiansson, A.K.; Ancona, A. In-process monitoring and control of robotized laser beam welding of closed square butt joints. Procedia Manuf. 2018, 25, 511–516.

- Huo, Y.K.; Wei, G.; Zhang, Y.D.; Wu, L.N. An adaptive threshold for the Canny operator of edge detection. In Proceedings of the 2010 International Conference on Image Analysis and Signal Processing, Zhejiang, China, 9–11 April 2010; IEEE: New York, NY, USA, 2010; pp. 371–374.

- Golubev, A.; Vlasov, I. Technological control of joints in the process of laser welding by means of machine vision. Tech. Sci. Theory Pract. 2015, 10, 46.

- Jeng, J.Y.; Mau, T.F.; Leu, S.M. Prediction of laser butt joint welding parameters using back propagation and learning vector quantization networks. J. Mater. Process. Technol. 2000, 99, 207–218.

- ISO. ISO 13919-2:2001 Welding – Electron and Laser Beam Welded Joints; ISO: Geneva, Switzerland, 2001.

- Evtihiev, N.N.; Ochin, O.F.; Begunov, I.A. Lazernye Tekhnologii: Uchebnoe Posobie [Laser Technologies: A Textbook]; MFTI: Moscow, Russia, 2020; Volume 240, ISBN 978-5-91559-281-9.

- Rodionov, D.; Lyukhter, A.; Prokoshev, V. Methods of automatic correction of technological trajectory of laser welding complex by means of computer vision. In Proceedings of the 2020 International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM), Sochi, Russia, 18–22 May 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Rodionov, D.; Lyukhter, A.; Prokoshev, V. Automated System of Technological Preparation of Production on Laser Robotic Complexes. Patent RF No. 2020619863, 2020.

- Vatamanyuk, I.V.; Ronzhin, A.L. Primenenie metodov ocenivaniya razmytosti cifrovyh izobrazhenij v zadache audiovizual’nogo monitoringa [Application of methods for estimating the blurriness of digital images in the task of audiovisual monitoring]. Inf. Upr. Sist. 2014, 4, 16–23.

- Cardani, D. Adventures in HSV Space; Laboratorio de Robótica, Instituto Tecnológico Autónomo de Mexico: Mexico City, Mexico, 2001.

- Marengoni, M.; Stringhini, D. High level computer vision using OpenCV. In Proceedings of the 2011 24th SIBGRAPI Conference on Graphics, Patterns, and Images Tutorials, Alagoas, Brazil, 28–30 August 2011; IEEE: New York, NY, USA, 2011; pp. 11–24.

- Wu, S.T.; Marquez, M.R.G. A non-self-intersection Douglas-Peucker algorithm. In Proceedings of the 16th Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI 2003), Sao Carlos, Brazil, 12–15 October 2003; IEEE: New York, NY, USA, 2003; pp. 60–66.

- Rodionov, D. Upravlenie lazernym robotizirovannym kompleksom v kraevyh uchastkah zony obrabotki [Control of a laser robotic complex in the edge areas of the processing zone]. Din. Slozhnykh Sist. XXI Veka 2019, 13, 5–13.

- Rodionov, D.; Lyukhter, A.; Prokoshev, V. Calculation of the points of the technological trajectory of the laser robotic complex relative to the tool and tooling in the CAM. St. Petersburg State Polytech. Univ. J. Comput. Sci. Telecommun. Control Syst. 2020, 12, 123–135.

- Rodionov, D.; Lyukhter, A.; Prokoshev, V. Automated Process Control System of the Laser Robotic Complex. Patent RF No. 2020661002, 2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).