Predicting Vehicle-Engine-Radiated Noise Based on Bench Test and Machine Learning

Abstract

1. Introduction

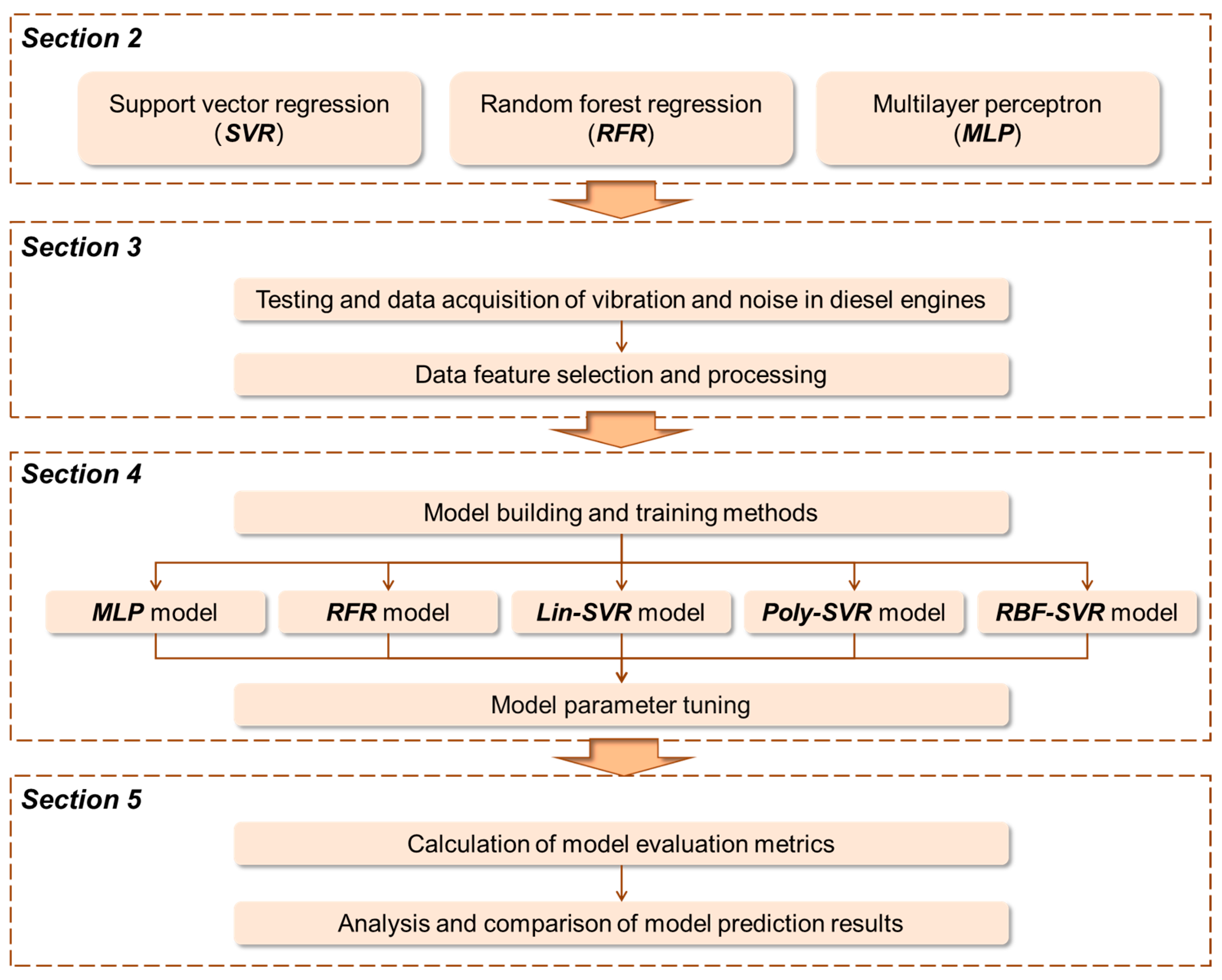

2. Analysis and Comparison Methods

2.1. Support Vector Regression

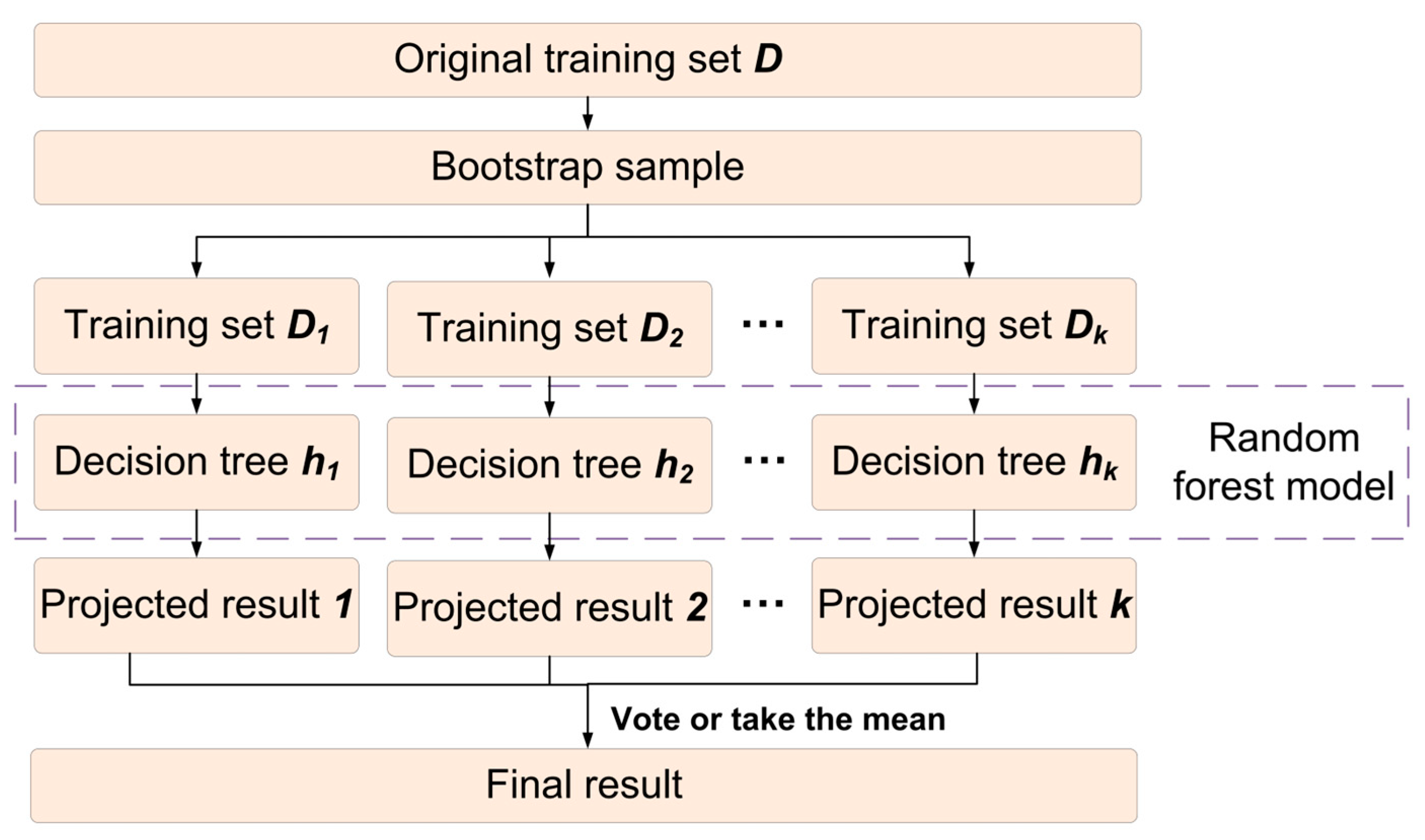

2.2. Random Forest Regression

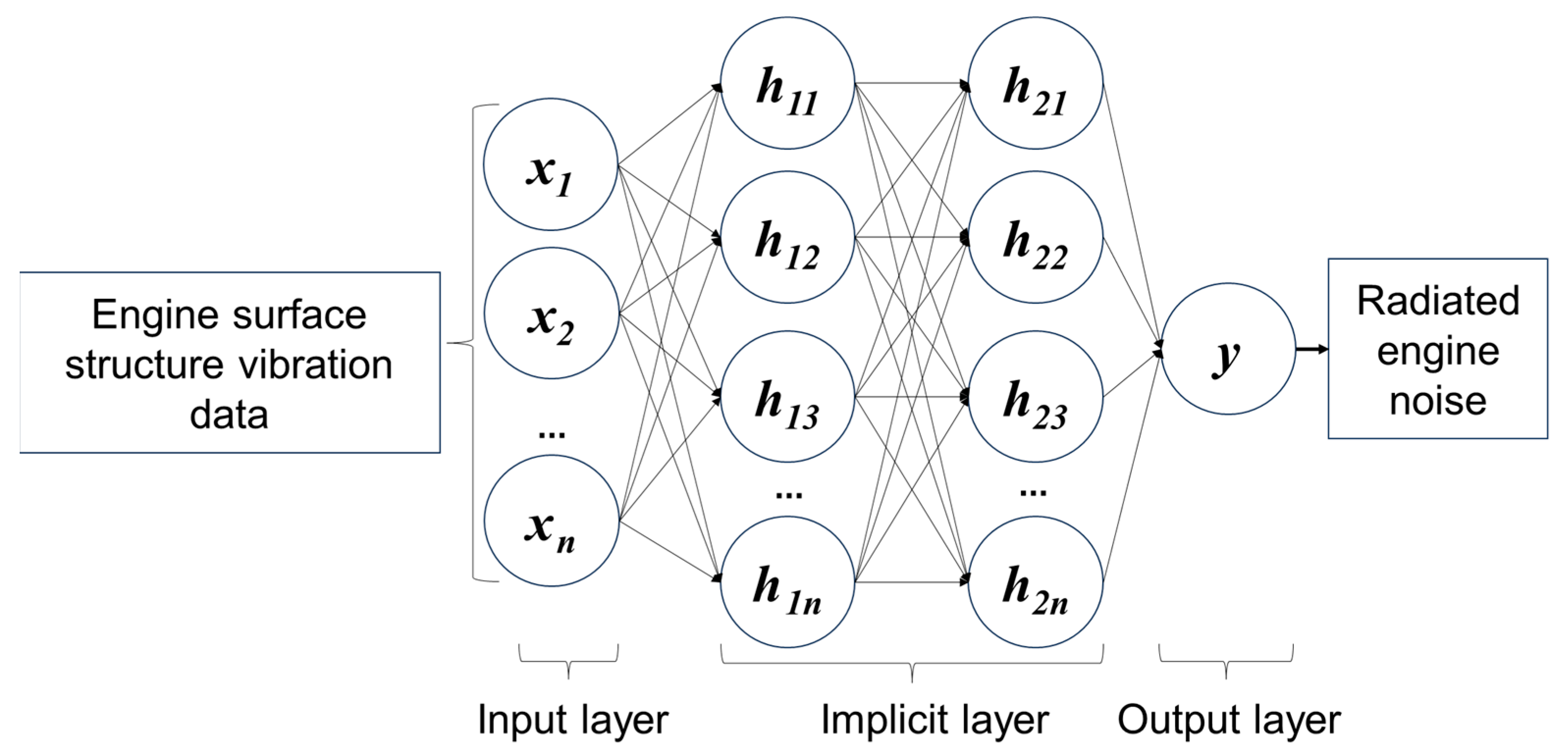

2.3. Multilayer Perceptron

3. Experiment and Data Collection

3.1. Engine Vibration and Noise Test

3.2. Feature Selection and Data Processing

4. Development of Prediction Models

4.1. Training Methodology

4.2. Parameter Tuning

5. Results and Discussion

5.1. Model Evaluation Metrics

5.2. Analysis and Discussion

5.3. Transferability and Limitations

6. Conclusions

- Radiated noise prediction models were constructed from time-domain vibration data measured on the engine surface. The test-set results indicate that all algorithms can predict engine radiated noise effectively. The Poly-SVR model gave the best overall performance (MAE: 0.1 dB, MaxAE: 0.24 dB, MedAE: 0.08 dB), and the MLP model gave the poorest (MAE: 0.26 dB, MaxAE: 0.6 dB, MedAE: 0.19 dB). This suggests that Poly-SVR is particularly well suited to capturing the temporal dynamics of the vibration signals; in the time-domain case it achieved up to 16% better accuracy than the linear models.

- A radiated noise prediction model was also developed from frequency-domain vibration data measured on the engine surface. On the test set, the Lin-SVR and Poly-SVR models achieved the best prediction performance. In this study, the optimal degree of the Poly-SVR kernel was always 1. A degree-1 polynomial kernel is a scaled linear kernel (when its independent term is zero), so the tuned Poly-SVR and Lin-SVR decision functions coincide and their results were identical (MAE: 0.18 dB, MaxAE: 0.42 dB, MedAE: 0.13 dB). Both clearly outperformed the worst-performing model, RBF-SVR (MAE: 0.89 dB, MaxAE: 2.08 dB, MedAE: 0.63 dB). These findings highlight the effectiveness of linear and polynomial kernels for spectral inputs, likely because they model the harmonic components retained after PCA dimensionality reduction more effectively, whereas RBF-SVR may overfit in high-dimensional frequency spaces.

- When the best algorithm at each measurement point is compared using MaxAE, MAE, and MedAE, the measurement point between cylinders 3 and 4 on the engine top surface yields the best prediction performance (MAE: 0.15 dB, MaxAE: 0.48 dB, MedAE: 0.1 dB). Because this location is close to critical engine components, it likely captures the most representative vibration modes, which reduces the prediction error by 13% relative to the other measurement points.
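
The second conclusion attributes the strength of the linear and polynomial kernels to the harmonic content retained after PCA. The sketch below illustrates that kind of preprocessing under stated assumptions: single-sided amplitude spectra from NumPy's FFT, followed by PCA computed with an SVD. The synthetic signals, the 2048 Hz sampling rate, and the helper names `magnitude_spectra` and `pca_project` are hypothetical; the authors' actual windowing, frequency range, and number of retained components are not given here.

```python
import numpy as np

def magnitude_spectra(signals, fs):
    """Single-sided amplitude spectrum of each row of `signals` (n_records x n_points)."""
    n = signals.shape[1]
    spec = np.abs(np.fft.rfft(signals, axis=1)) / n
    # Double every bin except DC (and Nyquist when n is even) to fold in negative frequencies.
    spec[:, 1:(n + 1) // 2] *= 2.0
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, spec

def pca_project(features, n_components):
    """Center the features and project them onto the leading principal components (via SVD)."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained_ratio = s**2 / np.sum(s**2)
    return centered @ vt[:n_components].T, explained_ratio[:n_components]

# Hypothetical data: 20 one-second vibration records dominated by a 100 Hz harmonic
# whose amplitude varies from record to record, plus a little broadband noise.
fs = 2048
t = np.arange(fs) / fs
rng = np.random.default_rng(0)
amps = rng.uniform(0.5, 2.0, size=20)
signals = amps[:, None] * np.sin(2 * np.pi * 100 * t) + 0.01 * rng.standard_normal((20, fs))

freqs, spec = magnitude_spectra(signals, fs)
scores, ratio = pca_project(spec, n_components=3)
print(scores.shape, ratio.round(3))
```

In this toy case nearly all of the variance falls on the first component, because the records differ mainly in the amplitude of a single harmonic; with real engine spectra several orders would likely share the variance, and the number of components kept becomes a tuning choice.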

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SVR | Support vector regression |
| RFR | Random forest regression |
| MLP | Multilayer perceptron |
| RMS | Root mean square |
| PCA | Principal component analysis |
| Lin-SVR | Linear kernel support vector regression |
| Poly-SVR | Polynomial kernel support vector regression |
| RBF-SVR | Radial basis function kernel support vector regression |
| MaxAE | Maximum absolute error |
| MAE | Mean absolute error |
| MedAE | Median absolute error |
| LR | Linear regression |
| CI | Confidence interval |


| Kernel Function Name | Displayed Formula | Parameter Range |
|---|---|---|
| Linear kernel | | |
| Polynomial kernel | | |
| Radial basis function kernel | | |
| Sigmoid kernel | | |
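
The kernel formulas in this table did not survive extraction. For reference, here is a sketch of the four kernels in the forms commonly used by SVR implementations such as scikit-learn (an assumption; the paper's exact parameterization may differ): linear x·x′, polynomial (γ x·x′ + r)^d, RBF exp(−γ‖x − x′‖²), and sigmoid tanh(γ x·x′ + r). The final lines check the observation in the conclusions that a degree-1 polynomial kernel reproduces the linear one: with r = 0 it is exactly γ times the linear kernel. The data matrix `X` is random and purely illustrative.

```python
import numpy as np

def linear_kernel(X, Y):
    """K(x, y) = x . y"""
    return X @ Y.T

def polynomial_kernel(X, Y, gamma, coef0, degree):
    """K(x, y) = (gamma * x . y + coef0) ** degree"""
    return (gamma * (X @ Y.T) + coef0) ** degree

def rbf_kernel(X, Y, gamma):
    """K(x, y) = exp(-gamma * ||x - y||^2)"""
    # Squared Euclidean distances via ||x||^2 + ||y||^2 - 2 x . y, clipped at 0 for round-off.
    sq = np.sum(X**2, axis=1)[:, None] + np.sum(Y**2, axis=1)[None, :] - 2.0 * (X @ Y.T)
    return np.exp(-gamma * np.maximum(sq, 0.0))

def sigmoid_kernel(X, Y, gamma, coef0):
    """K(x, y) = tanh(gamma * x . y + coef0)"""
    return np.tanh(gamma * (X @ Y.T) + coef0)

rng = np.random.default_rng(1)
X = rng.standard_normal((5, 3))  # 5 illustrative feature vectors

K_lin = linear_kernel(X, X)
K_poly1 = polynomial_kernel(X, X, gamma=0.1, coef0=0.0, degree=1)
print(np.allclose(K_poly1, 0.1 * K_lin))  # True
```

Because a constant kernel scale can be absorbed into the regularization parameter (a Poly-SVR with degree 1, r = 0, and parameters (C, γ) has the same decision function as a Lin-SVR with C′ = γC), identical Lin-SVR and Poly-SVR errors are what one would expect whenever the tuned degree is 1.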

| Speed (r·min⁻¹) | Duty | Maximum Cylinder Pressure (bar) | Power (kW) | Torque (N·m) |
|---|---|---|---|---|
| 1600 | 100% | 104.6 | 45.8 | 273.1 |
| 1800 | 100% | 127.1 | 55.8 | 296.2 |
| 2000 | 100% | 147.0 | 61.0 | 291.3 |
| 2200 | 100% | 155.4 | 67.6 | 293.3 |
| 2400 | 100% | 155.5 | 70.9 | 282.1 |
| 2600 | 100% | 156.7 | 74.5 | 273.5 |
| 2800 | 100% | 152.7 | 76.3 | 260.0 |
| 3000 | 100% | 147.9 | 75.7 | 241.1 |
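
The power and torque columns of the operating-point table can be cross-checked with the shaft-power relation P = T·ω, where ω = 2πn/60 rad/s and n is the speed in r·min⁻¹. A short check in Python follows (the helper name `shaft_power_kw` is illustrative): every row agrees with the tabulated power to within 0.1 kW, which suggests the two columns are mutually consistent up to rounding.

```python
import math

# (speed in r/min, torque in N·m, tabulated power in kW), copied from the full-load table
operating_points = [
    (1600, 273.1, 45.8), (1800, 296.2, 55.8), (2000, 291.3, 61.0), (2200, 293.3, 67.6),
    (2400, 282.1, 70.9), (2600, 273.5, 74.5), (2800, 260.0, 76.3), (3000, 241.1, 75.7),
]

def shaft_power_kw(speed_rpm, torque_nm):
    """Shaft power P = T * omega with omega = 2*pi*n/60 in rad/s, returned in kW."""
    omega = 2.0 * math.pi * speed_rpm / 60.0
    return torque_nm * omega / 1000.0

for n, torque, tabulated in operating_points:
    computed = shaft_power_kw(n, torque)
    print(f"{n} r/min: computed {computed:.2f} kW, tabulated {tabulated} kW")
```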

| Sensor Type | Sensor Model | Range | Temperature Range | Sensitivity |
|---|---|---|---|---|
| Triaxial accelerometer | PCB 357A67 | ±50 g | −54–+121 °C | 100 mV/g |
| Microphone | PCB 378B02 | 15–146 dB | −40–+80 °C | 50 mV/Pa |
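
The sensitivities in the sensor table are what convert recorded voltages into physical quantities. A minimal sketch follows, assuming linear sensors at the nominal sensitivities listed (100 mV/g and 50 mV/Pa), standard gravity, and the usual 20 µPa reference pressure for sound pressure level in air. The helper names and the 1 kHz test tone are hypothetical, and the paper's calibration and signal-conditioning chain is not described here.

```python
import math

ACCEL_SENSITIVITY_V_PER_G = 0.100  # PCB 357A67 nominal sensitivity: 100 mV/g
MIC_SENSITIVITY_V_PER_PA = 0.050   # PCB 378B02 nominal sensitivity: 50 mV/Pa
G = 9.80665                        # standard gravity, m/s^2
P_REF = 20e-6                      # reference sound pressure in air, Pa

def volts_to_acceleration(v):
    """Accelerometer output voltage -> acceleration in m/s^2."""
    return v / ACCEL_SENSITIVITY_V_PER_G * G

def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def mic_volts_to_spl_db(volt_samples):
    """Microphone voltage samples -> sound pressure level in dB re 20 µPa."""
    p_rms = rms(volt_samples) / MIC_SENSITIVITY_V_PER_PA
    return 20.0 * math.log10(p_rms / P_REF)

# The ±50 g accelerometer range corresponds to ±5 V at 100 mV/g.
print(50 * ACCEL_SENSITIVITY_V_PER_G, "V full scale")

# Hypothetical 1 kHz tone of 0.05 V RMS (i.e., 1 Pa RMS), sampled for one second.
fs = 48_000
tone = [0.05 * math.sqrt(2) * math.sin(2 * math.pi * 1000 * k / fs) for k in range(fs)]
spl = mic_volts_to_spl_db(tone)
print(f"{spl:.2f} dB SPL")
```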

| Algorithm | Hyperparameter | Search Space |
|---|---|---|
| Lin-SVR | Regularization parameter C | [1, 10, 100, 1000] |
| RBF-SVR | Regularization parameter C | [1, 10, 100, 1000] |
| | RBF kernel coefficient γ | [0.01, 0.1, 1, 10, 100] |
| Poly-SVR | Regularization parameter C | [1, 10, 100, 1000] |
| | Polynomial kernel coefficient γ | [0.01, 0.1, 1, 10, 100] |
| | Highest power (degree) | [1, 2, 3, 4, 5] |
| MLP | Learning rate | [0.00001, 0.0001, 0.001, 0.01, 0.1] |
| | Number of neurons | [10, 25, 50, 100] |
| | Weight optimizer | [Adam, L-BFGS, SGD] |
| RFR | Maximum number of features | [None, 1, 2, 4, 8] |
| | Number of weak learners | [10, 25, 50, 75, 100, 125] |
| | Maximum depth of decision tree | [None, 1, 2, …, 10] |
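
These search spaces define exhaustive grids. The sketch below transcribes them and enumerates every combination. As assumptions, the keys use scikit-learn parameter names (`C`, `gamma`, `degree`, `learning_rate_init`, `hidden_layer_sizes`, `solver`, `max_features`, `n_estimators`, `max_depth`), the weight optimizers are mapped to scikit-learn's `adam`, `lbfgs`, and `sgd` solvers, and "Number of neurons" is taken to mean a single hidden layer. `grid_search` and the toy scoring function are hypothetical; in practice `cv_score` would return a cross-validated error such as MAE.

```python
import math
from itertools import product

# Search spaces transcribed from the hyperparameter table (scikit-learn-style keys assumed).
param_grids = {
    "Lin-SVR": {"C": [1, 10, 100, 1000]},
    "RBF-SVR": {"C": [1, 10, 100, 1000], "gamma": [0.01, 0.1, 1, 10, 100]},
    "Poly-SVR": {"C": [1, 10, 100, 1000], "gamma": [0.01, 0.1, 1, 10, 100],
                 "degree": [1, 2, 3, 4, 5]},
    "MLP": {"learning_rate_init": [1e-5, 1e-4, 1e-3, 1e-2, 1e-1],
            "hidden_layer_sizes": [(10,), (25,), (50,), (100,)],  # one hidden layer assumed
            "solver": ["adam", "lbfgs", "sgd"]},
    "RFR": {"max_features": [None, 1, 2, 4, 8],
            "n_estimators": [10, 25, 50, 75, 100, 125],
            "max_depth": [None] + list(range(1, 11))},
}

def expand_grid(grid):
    """Yield every hyperparameter combination of a grid as a dict (exhaustive search)."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))

def grid_search(grid, cv_score):
    """Return the combination with the lowest error reported by `cv_score` (hypothetical)."""
    return min(expand_grid(grid), key=cv_score)

counts = {name: len(list(expand_grid(grid))) for name, grid in param_grids.items()}
print(counts)

# Toy scoring function standing in for a cross-validated MAE: lowest at C = 100.
best = grid_search(param_grids["Lin-SVR"], cv_score=lambda p: abs(math.log10(p["C"]) - 2.0))
print(best)
```

The counts show the cost of exhaustive search: the Poly-SVR grid alone has 100 combinations and the RFR grid 330, each requiring a full cross-validation run. In scikit-learn, the same dictionaries could be passed as `param_grid` to `GridSearchCV`.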

| Measurement Point | Lin-SVR MaxAE (dB) | Lin-SVR MAE (dB) | Lin-SVR MedAE (dB) | Poly-SVR MaxAE (dB) | Poly-SVR MAE (dB) | Poly-SVR MedAE (dB) | RBF-SVR MaxAE (dB) | RBF-SVR MAE (dB) | RBF-SVR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 0.42 | 0.18 | 0.13 | 0.42 | 0.18 | 0.13 | 2.08 | 0.89 | 0.63 |
| P2 | 0.42 | 0.17 | 0.11 | 0.42 | 0.17 | 0.11 | 2.26 | 0.81 | 0.31 |
| P3 | 0.58 | 0.16 | 0.12 | 0.58 | 0.16 | 0.12 | 2.03 | 0.72 | 0.29 |
| P4 | 0.89 | 0.38 | 0.31 | 0.89 | 0.38 | 0.31 | 2.42 | 0.71 | 0.22 |
| P5 | 0.5 | 0.16 | 0.12 | 0.5 | 0.16 | 0.12 | 2.49 | 0.63 | 0.15 |
| P6 | 0.53 | 0.15 | 0.09 | 0.53 | 0.15 | 0.09 | 2.03 | 0.47 | 0.18 |
| P7 | 0.65 | 0.23 | 0.18 | 0.65 | 0.23 | 0.18 | 2.4 | 0.97 | 0.6 |

| Measurement Point | MLP MaxAE (dB) | MLP MAE (dB) | MLP MedAE (dB) | RFR MaxAE (dB) | RFR MAE (dB) | RFR MedAE (dB) | LR MaxAE (dB) | LR MAE (dB) | LR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 0.68 | 0.25 | 0.18 | 1.28 | 0.27 | 0.19 | 1.84 | 0.79 | 0.57 |
| P2 | 1.65 | 0.23 | 0.12 | 0.57 | 0.18 | 0.13 | 2.00 | 0.72 | 0.28 |
| P3 | 0.92 | 0.21 | 0.13 | 0.49 | 0.14 | 0.1 | 1.80 | 0.64 | 0.26 |
| P4 | 2.45 | 0.31 | 0.13 | 1.13 | 0.28 | 0.18 | 1.87 | 0.63 | 0.20 |
| P5 | 2.73 | 0.3 | 0.12 | 0.51 | 0.15 | 0.12 | 1.73 | 0.56 | 0.14 |
| P6 | 1.31 | 0.2 | 0.08 | 0.59 | 0.16 | 0.11 | 1.80 | 0.42 | 0.16 |
| P7 | 2.07 | 0.36 | 0.29 | 0.95 | 0.29 | 0.2 | 2.12 | 0.86 | 0.54 |

| Measurement Point | Lin-SVR MaxAE (dB) | Lin-SVR MAE (dB) | Lin-SVR MedAE (dB) | Poly-SVR MaxAE (dB) | Poly-SVR MAE (dB) | Poly-SVR MedAE (dB) | RBF-SVR MaxAE (dB) | RBF-SVR MAE (dB) | RBF-SVR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 0.45 | 0.2 | 0.2 | 0.45 | 0.2 | 0.2 | 2.42 | 0.7 | 0.19 |
| P2 | 0.62 | 0.19 | 0.17 | 0.62 | 0.19 | 0.17 | 2.37 | 0.76 | 0.24 |
| P3 | 0.57 | 0.19 | 0.14 | 0.57 | 0.19 | 0.14 | 2.34 | 0.71 | 0.22 |
| P4 | 0.46 | 0.23 | 0.22 | 0.46 | 0.23 | 0.22 | 2.4 | 0.58 | 0.18 |
| P5 | 0.59 | 0.15 | 0.13 | 0.59 | 0.15 | 0.13 | 2.44 | 0.63 | 0.19 |
| P6 | 0.44 | 0.14 | 0.1 | 0.44 | 0.14 | 0.1 | 2.43 | 0.63 | 0.19 |
| P7 | 0.53 | 0.18 | 0.14 | 0.53 | 0.18 | 0.14 | 2.27 | 0.77 | 0.32 |

| Measurement Point | MLP MaxAE (dB) | MLP MAE (dB) | MLP MedAE (dB) | RFR MaxAE (dB) | RFR MAE (dB) | RFR MedAE (dB) | LR MaxAE (dB) | LR MAE (dB) | LR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 2.81 | 0.26 | 0.09 | 0.52 | 0.16 | 0.12 | 2.35 | 0.78 | 0.26 |
| P2 | 2.53 | 0.24 | 0.08 | 0.84 | 0.2 | 0.11 | 2.12 | 0.72 | 0.23 |
| P3 | 2.4 | 0.24 | 0.08 | 1.61 | 0.22 | 0.14 | 2.01 | 0.72 | 0.23 |
| P4 | 2.33 | 0.23 | 0.07 | 0.71 | 0.19 | 0.13 | 1.95 | 0.69 | 0.20 |
| P5 | 2.6 | 0.21 | 0.04 | 0.58 | 0.17 | 0.13 | 2.17 | 0.63 | 0.12 |
| P6 | 2.62 | 0.26 | 0.09 | 0.53 | 0.15 | 0.11 | 2.19 | 0.78 | 0.26 |
| P7 | 2.45 | 0.26 | 0.11 | 0.65 | 0.18 | 0.12 | 2.05 | 0.78 | 0.32 |

| Measurement Point | Lin-SVR MaxAE (dB) | Lin-SVR MAE (dB) | Lin-SVR MedAE (dB) | Poly-SVR MaxAE (dB) | Poly-SVR MAE (dB) | Poly-SVR MedAE (dB) | RBF-SVR MaxAE (dB) | RBF-SVR MAE (dB) | RBF-SVR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 0.49 | 0.18 | 0.17 | 0.49 | 0.18 | 0.17 | 2.16 | 0.74 | 0.29 |
| P2 | 0.54 | 0.17 | 0.15 | 0.54 | 0.17 | 0.15 | 2.25 | 0.79 | 0.36 |
| P3 | 0.53 | 0.17 | 0.14 | 0.53 | 0.17 | 0.14 | 2.13 | 0.73 | 0.27 |
| P4 | 0.72 | 0.24 | 0.2 | 0.72 | 0.24 | 0.2 | 2.53 | 0.63 | 0.17 |
| P5 | 0.48 | 0.15 | 0.1 | 0.48 | 0.15 | 0.1 | 2.23 | 0.66 | 0.23 |
| P6 | 0.46 | 0.16 | 0.12 | 0.46 | 0.16 | 0.12 | 2.26 | 0.64 | 0.18 |
| P7 | 0.58 | 0.21 | 0.18 | 0.58 | 0.21 | 0.18 | 2.43 | 0.93 | 0.57 |

| Measurement Point | MLP MaxAE (dB) | MLP MAE (dB) | MLP MedAE (dB) | RFR MaxAE (dB) | RFR MAE (dB) | RFR MedAE (dB) | LR MaxAE (dB) | LR MAE (dB) | LR MedAE (dB) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 1.68 | 0.22 | 0.12 | 0.99 | 0.21 | 0.15 | 2.03 | 0.68 | 0.19 |
| P2 | 1.98 | 0.24 | 0.11 | 0.44 | 0.13 | 0.1 | 2.39 | 0.74 | 0.17 |
| P3 | 1.65 | 0.23 | 0.14 | 1.08 | 0.21 | 0.12 | 1.99 | 0.71 | 0.22 |
| P4 | 2.51 | 0.24 | 0.09 | 0.61 | 0.21 | 0.13 | 3.03 | 0.74 | 0.14 |
| P5 | 1.63 | 0.19 | 0.1 | 0.49 | 0.16 | 0.14 | 1.97 | 0.59 | 0.16 |
| P6 | 1.57 | 0.19 | 0.08 | 0.44 | 0.15 | 0.12 | 1.90 | 0.59 | 0.13 |
| P7 | 1.63 | 0.29 | 0.18 | 0.57 | 0.16 | 0.1 | 1.97 | 0.90 | 0.29 |
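
The results tables report three error statistics per model and measurement point. The sketch below shows how MaxAE, MAE, and MedAE can be computed from measured and predicted noise levels, then ranks the six models at P1 using the MAE values copied from the first pair of results tables (Lin-SVR/Poly-SVR/RBF-SVR and MLP/RFR/LR). The measured and predicted levels are made-up numbers for illustration, and the helper name `error_metrics` is hypothetical.

```python
import statistics

def error_metrics(y_true, y_pred):
    """Return MaxAE, MAE and MedAE between measured and predicted values (same unit, e.g. dB)."""
    abs_err = [abs(t - p) for t, p in zip(y_true, y_pred)]
    return {
        "MaxAE": max(abs_err),
        "MAE": sum(abs_err) / len(abs_err),
        "MedAE": statistics.median(abs_err),
    }

# Made-up measured vs. predicted radiated noise levels in dB (illustration only).
measured = [92.4, 93.1, 94.0, 95.2, 96.3]
predicted = [92.5, 92.9, 94.3, 95.1, 96.3]
metrics = error_metrics(measured, predicted)
print({name: round(value, 3) for name, value in metrics.items()})

# MAE (dB) at P1, copied from the first pair of results tables.
mae_p1 = {"Lin-SVR": 0.18, "Poly-SVR": 0.18, "RBF-SVR": 0.89,
          "MLP": 0.25, "RFR": 0.27, "LR": 0.79}
ranking = sorted(mae_p1.items(), key=lambda item: item[1])  # lowest MAE first
for name, mae in ranking:
    print(f"{name:9s} {mae:.2f} dB")
```

At P1 this ranking puts Lin-SVR and Poly-SVR first (0.18 dB) and RBF-SVR last (0.89 dB), in line with the second conclusion. MedAE is less sensitive than MAE to a few large misses, while MaxAE exposes exactly those misses, which is why the three are reported together.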

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, R.; Yin, Y.; Peng, Y.; Zheng, X. Predicting Vehicle-Engine-Radiated Noise Based on Bench Test and Machine Learning. Machines 2025, 13, 724. https://doi.org/10.3390/machines13080724

Liu R, Yin Y, Peng Y, Zheng X. Predicting Vehicle-Engine-Radiated Noise Based on Bench Test and Machine Learning. Machines. 2025; 13(8):724. https://doi.org/10.3390/machines13080724

Chicago/Turabian Style: Liu, Ruijun, Yingqi Yin, Yuming Peng, and Xu Zheng. 2025. "Predicting Vehicle-Engine-Radiated Noise Based on Bench Test and Machine Learning" Machines 13, no. 8: 724. https://doi.org/10.3390/machines13080724

APA Style: Liu, R., Yin, Y., Peng, Y., & Zheng, X. (2025). Predicting Vehicle-Engine-Radiated Noise Based on Bench Test and Machine Learning. Machines, 13(8), 724. https://doi.org/10.3390/machines13080724