Abstract

To address the shortcomings of existing robot collision detection algorithms that use six-dimensional force sensors, this paper proposes a force compensation algorithm based on Kane’s dynamics, together with a collision detection algorithm that combines the six-dimensional force sensor data with the robot’s outer surface equations to derive the coordinates of the collision point on the robot body. First, a collision detection model for a joint-type collaborative robot is presented. Second, based on Kane’s dynamics equations, a force compensation model for the joint-type collaborative robot is established and the corresponding force compensation algorithm is derived. Third, a collision detection algorithm is derived, and the collision point is solved for an example joint robot whose links have cylindrical outer surfaces. The collisions are categorized into nine cases, and the coordinates of the collision point are solved for each case. Finally, force compensation and collision detection experiments are conducted on an AUBO-I5 joint-type collaborative robot. The force compensation results show that the comparison curves for forces/torques in three directions are consistent, and the relative error is below 5.6%. The collision detection results indicate that the computed collision positions match the actual collision positions, thus verifying the correctness of the theoretical analysis of the force compensation and collision detection algorithms. The research results provide a theoretical basis for ensuring safety in human–robot collaboration.

1. Introduction

In today’s global economic environment, innovation has become a key driver of economic growth and competitiveness. With rapid advancements in technology, countries around the world are seeking new breakthroughs in the field of robotics. Collaborative robots have gained increasing recognition and adoption globally, demonstrating an indispensable role in both industrial and non-industrial sectors [1,2,3,4]. The original design intention of collaborative robots is to interact and collaborate with humans in the workplace, improving both work efficiency and safety. In a work scenario, collaborative robots directly cooperate with humans, making safety concerns of paramount importance [5,6,7]. To ensure the safety of human–robot collaboration, collision point detection in robots has become a crucial issue that needs to be addressed.

Collision point detection is not only an important means to ensure safety but also a critical basis for timely repairs of the robot body after a collision [8,9,10,11]. In the context of robot safety, operators can analyze the cause of a collision based on the specific coordinates of the collision point, preventing secondary collisions. During the later repair process, force/torque analysis can be conducted at the specific collision location, allowing for timely repairs and preventing more severe damage during subsequent operations.

In order to ensure that the robot can interact with the external environment and provide feedback with important information in a timely manner during collision point detection, the use of sensors becomes a crucial component in collision detection [12,13,14,15,16,17]. Sensors assist the robot in perceiving the external environment and help the robot make correct behavioral decisions. Vision sensors analyze and interpret the captured environmental images, converting them into symbols, enabling the robot to identify object positions and various states. However, their stability and reliability in complex environments are questionable, and the maintenance cost is relatively high. Infrared sensors detect the presence of objects by sensing certain forms of thermal radiation emitted by surrounding environmental objects. These sensors are easily interfered with by various heat sources and sunlight, and their sensitivity to detection can be influenced by the ambient temperature.

The six-dimensional force sensor converts multidimensional force/torque signals into electrical signals and can be used to monitor changes in the magnitude and direction of forces/torques in three spatial directions. It represents a new branch of force sensors [18,19,20,21,22,23,24,25,26]. Installing the six-dimensional force sensor on the robot’s base to ensure safety in human–robot interaction not only reduces costs but also effectively reduces the system’s computational load, improving the system’s response speed. When the six-dimensional force sensor provides force/torque feedback to the robot’s control system and outputs the data to the upper-level computer, the operator can respond immediately and formulate the next task, achieving better compliance control during the robot’s operations and human–robot interaction [18,27,28].

In safety studies of robots using six-dimensional force sensors, the robot’s own gravity and dynamic forces generated by inertia can cause the six-dimensional force sensor’s detection results to be non-zero even when no external force is applied, which greatly increases the error in collision detection. Therefore, before collision detection, force compensation control should be applied to the robot, ensuring that the six-dimensional force sensor’s detection result is consistently zero under no external force, providing the foundation for the collision detection algorithm. In the area of force compensation using six-dimensional force sensors, reference [29] conducted a force-following algorithm study after compensating for gravity, allowing the robot to adjust its end-effector pose in real-time according to the feedback from the force sensor, achieving compliance with external forces. However, the study did not address dynamic force compensation. Reference [30] divides force compensation into gravity compensation and dynamic force compensation, with dynamic force compensation using the more traditional Newton–Euler method that requires more calculations. This algorithm is relatively complex, and dividing force compensation into two parts will reduce the accuracy of the algorithm.

In terms of collision point detection using six-dimensional force sensors, reference [31] projects the external collision force vector into the optimal plane for solution, obtaining two coordinates. The actual collision point is near these two points, but the exact position cannot be calculated, and the collision point can only be approximated as the midpoint of the two points, leading to a significant error between the algorithm’s collision point and the actual collision point. Further exploration of the optimal solution is needed. Reference [32] used a similar method, but the intersection of the projection lines can only fall near the real contact point’s projection, and a decision factor must be introduced to search for the optimal contact point.

In response to the shortcomings of existing collision point detection algorithms for robots using six-dimensional force sensors, this paper proposes a force compensation algorithm and a collision detection algorithm. The force compensation algorithm is based on Kane’s dynamics, converting dynamic forces into the generalized resultant force to be compensated. It unifies the derivation of force compensation and compensates the sensor’s zero position value in real-time when the robot is not subjected to external forces, addressing the issues of complexity and insufficient accuracy in previous force compensation algorithms. This provides the foundation for improving the robot collision detection algorithm. The collision detection algorithm utilizes the six-dimensional force sensor installed on the base of a joint robot and combines the robot’s outer surface equations to derive the collision point coordinates on the robot body after a collision, enabling the detection of the robot body’s collision point.

The remainder of this paper is organized as follows: Section 2 presents the derivation of the force compensation algorithm and the collision detection algorithm for articulated collaborative robots, and solves the collision point for an example articulated robot with a cylindrical outer surface. Section 3 verifies the force compensation algorithm and the collision detection algorithm and conducts an error analysis of the experimental results. Section 4 summarizes the work and outlines future development.

2. Theoretical Analysis

2.1. Collision Detection Model

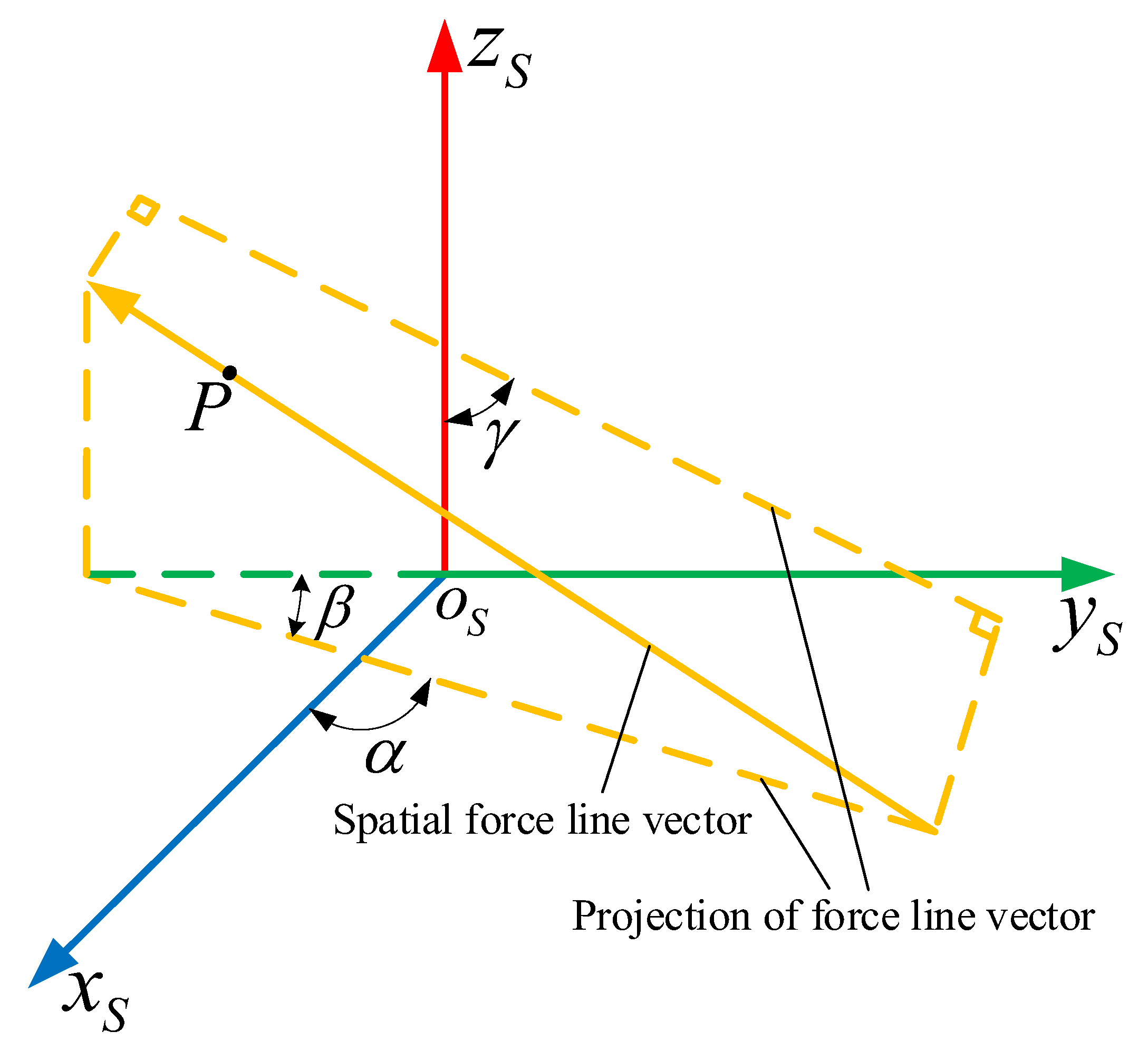

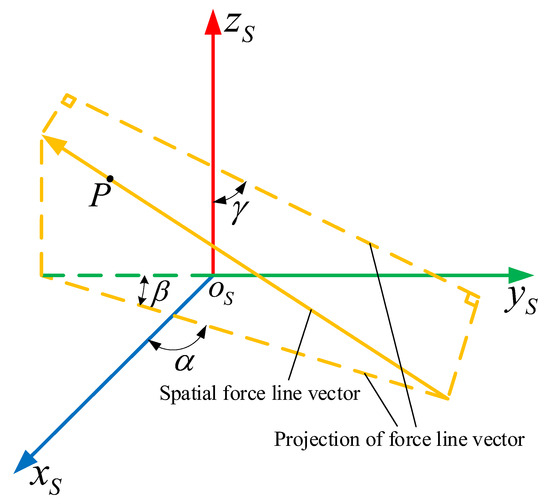

In the detection results of the six-dimensional force sensor, the force vector belongs to the generalized six-dimensional external force, allowing the sensor to detect the force’s magnitude, direction, and line of action. By analyzing the changes in the generalized force vector and torque vector expressions of the six-dimensional force sensor at the base, it can be concluded that

In the equation, F is the force vector and M is the torque vector detected by the sensor. From these detection results, the direction vector and a point in space can be solved to obtain the representation of the spatial force vector in the sensor coordinate system. Therefore, the magnitude, direction vector, and direction cosines of the calculated resultant force are

Among them, the point that the spatial force vector passes through can be represented by Equations (5) and (6).

The final expression of the spatial force vector obtained in the sensor coordinate system is

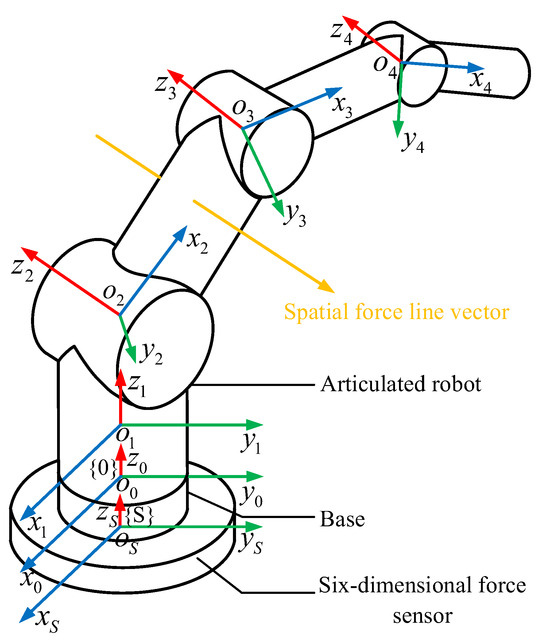

Figure 1 illustrates a schematic diagram of spatial force line vectors.

Figure 1. Schematic diagram of the spatial force line vector.
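The construction of the spatial force line from a sensor reading can be sketched in a few lines. This is a minimal illustration, not the paper's exact formulas: it assumes the measured torque is produced by the contact force alone (no pure couple), and it picks the point of the line closest to the sensor origin, which may differ from the point chosen in Equations (5) and (6). The function name `force_line` and the numerical values are hypothetical.

```python
import numpy as np

def force_line(F, M):
    """Return magnitude, unit direction and one point of the line of action.

    F, M: force and torque (3-vectors) measured in the sensor frame.
    Assumes the torque is produced by F alone (no pure couple).
    """
    F = np.asarray(F, dtype=float)
    M = np.asarray(M, dtype=float)
    magnitude = np.linalg.norm(F)
    if magnitude == 0.0:
        raise ValueError("zero force: the line of action is undefined")
    direction = F / magnitude               # components are the direction cosines
    point = np.cross(F, M) / magnitude**2   # point of the line closest to the origin
    return magnitude, direction, point

# Hypothetical check: a 10 N push along -z applied at r = (0.3, 0.2, 0.5) m
r = np.array([0.3, 0.2, 0.5])
F = np.array([0.0, 0.0, -10.0])
M = np.cross(r, F)                          # torque that the sensor would see
magnitude, direction, point = force_line(F, M)
```

The recovered point is not the contact point itself; it only fixes the line, since any point along the line of action produces the same torque. Locating the contact point requires the surface equations introduced later.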

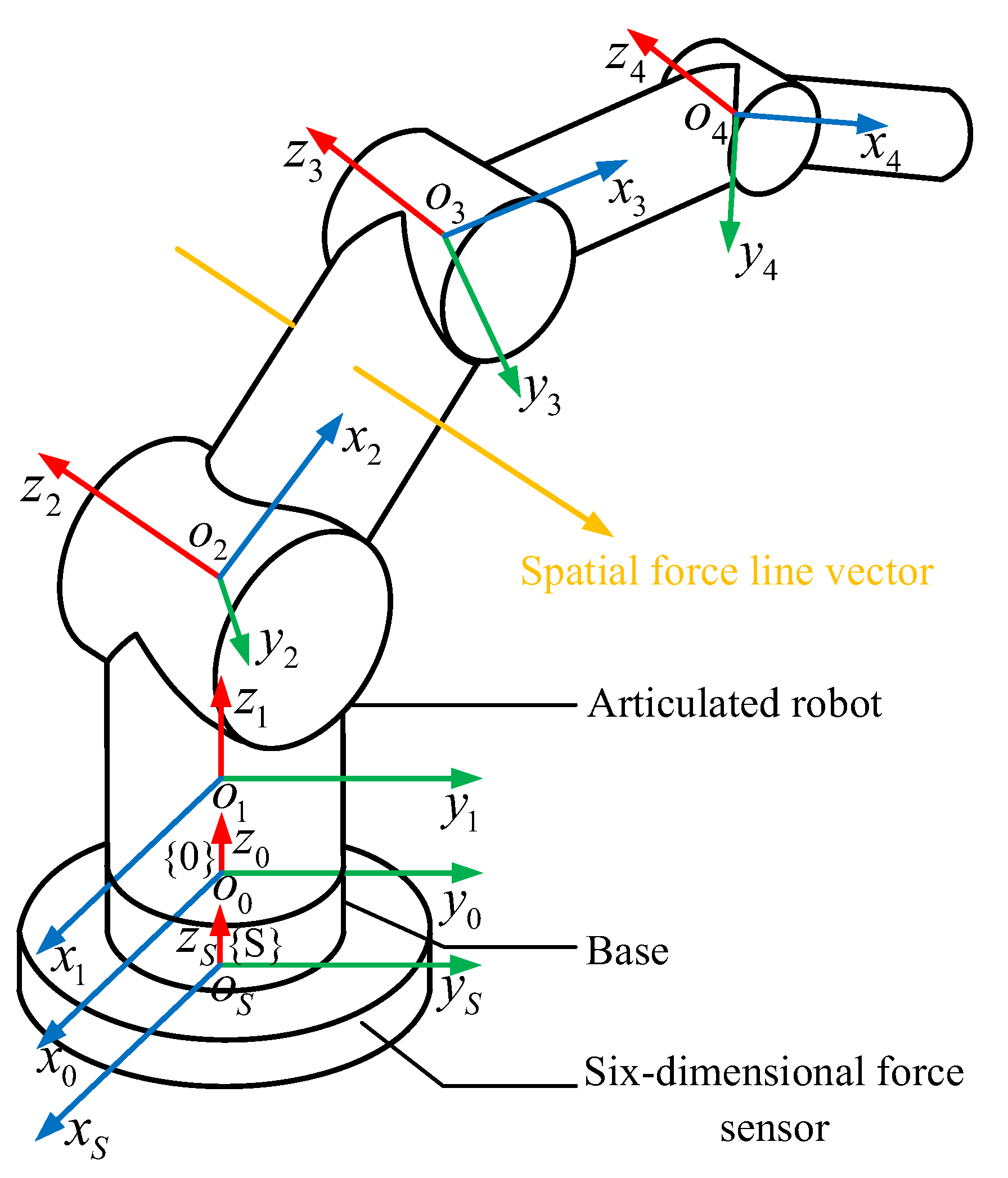

Based on the known model of the robot, the D-H parameter method is applied to establish the coordinate system of each joint of the robot. Figure 2 shows the collision detection model of the articulated robot, in which the coordinate system of each joint and the ray of the spatial force line vector are displayed. When the ray of the force line vector passes through the robot body, the robot has collided.

Figure 2. Collision detection model of the articulated robot.

The general expression for the homogeneous transformation matrix of a connecting rod is

In Equation (8), c and s are the abbreviations for cos and sin, respectively.
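A link transformation of this kind can be sketched as follows. The sketch assumes the standard (distal) D-H convention with parameters `theta`, `d`, `a`, and `alpha`; the paper may use the modified convention instead, in which case the matrix entries differ. The function names and the numerical values are illustrative only.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """Homogeneous transform of one link, standard D-H convention (assumed)."""
    c_t, s_t = np.cos(theta), np.sin(theta)
    c_a, s_a = np.cos(alpha), np.sin(alpha)
    return np.array([
        [c_t, -s_t * c_a,  s_t * s_a, a * c_t],
        [s_t,  c_t * c_a, -c_t * s_a, a * s_t],
        [0.0,  s_a,        c_a,       d],
        [0.0,  0.0,        0.0,       1.0],
    ])

def forward_chain(dh_rows):
    """Multiply the link transforms from the base outward."""
    T = np.eye(4)
    for theta, d, a, alpha in dh_rows:
        T = T @ dh_transform(theta, d, a, alpha)
    return T

# Hypothetical parameters, not the AUBO-I5 values
T_example = dh_transform(np.pi / 2, 0.1, 0.2, 0.0)
T_two_links = forward_chain([(np.pi / 2, 0.0, 1.0, 0.0),
                             (-np.pi / 2, 0.0, 1.0, 0.0)])
```

Chaining the per-link matrices gives the pose of any link frame relative to the base, which is what the later steps use to move force lines and collision points between frames.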

2.2. Force Compensation Algorithm

Robot dynamics studies the relationship between robot motion and force/torque, providing the mathematical models and physical laws for robot design, control, and optimization. The main methods for dynamic modeling include the Newton–Euler method, the Lagrange method, and the Kane method. The Newton–Euler method uses recursive calculations, divided into a forward recursion and a backward recursion, while the Lagrange method requires constructing the kinetic and potential energy of the system to obtain the equations of motion. The Kane method is suitable for both holonomic and nonholonomic systems. For complex systems with multiple degrees of freedom, such as robotic mechanisms, applying the Kane method can reduce the number of computational steps and improve computational efficiency [33]. The Kane method also has an advantage in modeling accuracy [34,35]. As Table 1 shows, the Kane method has the advantage in computational complexity and efficiency.

Table 1. The computational scale of the robot dynamics modeling methods.

Joint robots can be seen as a system composed of n rigid bodies, each of which can be treated as a mass point located at its centroid, where i = 1, 2, ..., n. Kane’s dynamics equation of the rigid-body system is

In Equation (9),

—active force vector acting on mass point i;

—the mass of mass point i;

—the acceleration vector of mass point i;

—the position vector of mass point i in the reference coordinate system;

—differential displacement of mass point i.

In Kane’s dynamics, the partial velocity and the partial angular velocity of the center of mass point i are defined as

Among them, and are the system velocity and angular velocity of connecting rod i, respectively, and is the centroid position coordinate of connecting rod i, where j = 1, 2, , n.

The differential displacement of mass point i can be expressed as

By using Equations (9)–(12), the Kane’s dynamics equation can be rewritten as

In Equation (13), represents the generalized active force of connecting rod i, while denotes the generalized inertial force of connecting rod i. Additionally, represents the centroid acceleration of connecting rod i.
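The step from Equation (9) to Equation (13) can be restated compactly for the translational part. The symbol names $F_i$, $m_i$, $a_i$, $\delta r_i$, $v_i$, $q_j$, $F_j$, and $F_j^{*}$ are assumed here for illustration and may differ from the paper's notation; the rotational terms involving the partial angular velocity are omitted for brevity.

```latex
\begin{align}
  \sum_{i=1}^{n} \left( F_i - m_i a_i \right) \cdot \delta r_i &= 0, \\
  \delta r_i &= \sum_{j=1}^{n} \frac{\partial v_i}{\partial \dot{q}_j}\, \delta q_j, \\
  \sum_{j=1}^{n} \Bigg[
     \underbrace{\sum_{i=1}^{n} F_i \cdot \frac{\partial v_i}{\partial \dot{q}_j}}_{F_j}
     + \underbrace{\sum_{i=1}^{n} \left( -m_i a_i \right) \cdot \frac{\partial v_i}{\partial \dot{q}_j}}_{F_j^{*}}
  \Bigg] \delta q_j &= 0
  \quad\Longrightarrow\quad F_j + F_j^{*} = 0, \qquad j = 1, 2, \ldots, n.
\end{align}
```

The last implication uses the independence of the variations $\delta q_j$, which holds when the generalized coordinates are independent: each bracketed coefficient must then vanish separately, so the generalized active force and the generalized inertial force balance for every generalized coordinate.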

The generalized joint torques are defined as , and they are expressed as

In Equation (14), represents the angular acceleration of connecting rod i, and denotes the inertia tensor of connecting rod i, given by

The elements in the matrix are

In Equation (16), the rigid body consists of unit body dv and the density of the unit body is .

The joint torque of each connecting rod can be decomposed into three directions of torque components, that is

The articulated robot has n rotating joints, denoted as , with a distance of between the joints. The distance from the centroid of connecting rod i to joint is . The joint angle is considered the generalized coordinate, and the rotational speed is taken as the generalized velocity. The recursive algorithm for its velocity, acceleration, and partial velocity is as follows

In Equation (18), is the rotation matrix from coordinate system to coordinate system , represents the acceleration of connecting rod i, denotes the joint deflection velocity of connecting rod i, and is the unit vector of the rotational angular velocity of connecting rod i, which corresponds to the unit vector in the Z direction of coordinate system , where .
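One outward step of such a recursion can be sketched as follows. This is an assumption-laden illustration rather than the paper's Equation (18): it follows the common textbook form for revolute joints with the joint axis along the local z-axis, it does not compute the partial velocities, and the name `propagate` and all argument names are hypothetical.

```python
import numpy as np

Z = np.array([0.0, 0.0, 1.0])  # joint axis in the link's own frame

def propagate(R, p, p_c, w, wd, vd, qd, qdd):
    """One outward step from link i to link i+1 (revolute joint).

    R   : rotation of frame i+1 expressed in frame i
    p   : origin of frame i+1 expressed in frame i
    p_c : centroid of link i+1 expressed in frame i+1
    w, wd, vd : angular velocity, angular acceleration and linear
                acceleration of frame i, expressed in frame i
    qd, qdd   : velocity and acceleration of joint i+1
    """
    Rt = R.T
    w_next = Rt @ w + qd * Z
    wd_next = Rt @ wd + np.cross(Rt @ w, qd * Z) + qdd * Z
    vd_next = Rt @ (np.cross(wd, p) + np.cross(w, np.cross(w, p)) + vd)
    vcd_next = (np.cross(wd_next, p_c)
                + np.cross(w_next, np.cross(w_next, p_c))
                + vd_next)
    return w_next, wd_next, vd_next, vcd_next

# Hypothetical single link spinning at 2 rad/s with its centroid 0.5 m out
zero = np.zeros(3)
w1, wd1, vd1, ac1 = propagate(np.eye(3), zero, np.array([0.5, 0.0, 0.0]),
                              zero, zero, zero, qd=2.0, qdd=0.0)
```

In the example the only acceleration of the centroid is centripetal, pointing back toward the joint axis with magnitude 2² × 0.5 = 2 m/s².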

Subsequently, the relationship between the position, velocity, acceleration, and torque of the joints in the multi-rigid-body system is analyzed using Kane’s dynamics equation. Based on Equation (18), the center-of-mass and angular velocities of each connecting rod are derived and then combined with Kane’s dynamics equation to establish the recursive formulas for the driving force and torque of each joint actuator. Finally, the dynamic model of the multi-rigid-body system is summarized.

Kane’s dynamics algorithm is divided into two parts: the generalized active force and the generalized inertial force. The generalized inertial force arises from the robot’s tendency to maintain its state of motion during starting and stopping, variable-speed motion, or under external force. The joint torque generated by the inertial force of the robot can be derived from Equations (14)–(17).
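The inertial force and torque of a single link can be illustrated with the standard rigid-body expressions. The sketch assumes that all quantities are expressed in a frame located at the link's centroid and that the inertial terms carry a negative sign (d'Alembert form); the paper's sign convention and frame choice in Equations (14)–(17) may differ, and the function name `inertial_wrench` is hypothetical.

```python
import numpy as np

def inertial_wrench(mass, inertia_c, a_c, omega, alpha):
    """Inertial force and torque of one rigid link about its centroid.

    mass      : link mass
    inertia_c : 3x3 inertia tensor about the centroid
    a_c       : centroid acceleration
    omega     : angular velocity of the link
    alpha     : angular acceleration of the link
    """
    inertia_c = np.asarray(inertia_c, dtype=float)
    a_c = np.asarray(a_c, dtype=float)
    omega = np.asarray(omega, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    force = -mass * a_c
    torque = -(inertia_c @ alpha + np.cross(omega, inertia_c @ omega))
    return force, torque

# Hypothetical link: 2 kg, principal moments 1, 2, 3 kg·m²
f_star, t_star = inertial_wrench(2.0, np.diag([1.0, 2.0, 3.0]),
                                 a_c=[1.0, 0.0, 0.0],
                                 omega=[0.0, 0.0, 1.0],
                                 alpha=[0.0, 0.0, 2.0])
```

Here the link rotates about a principal axis, so the gyroscopic term vanishes and the inertial torque reduces to the moment of inertia times the angular acceleration, with opposite sign.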

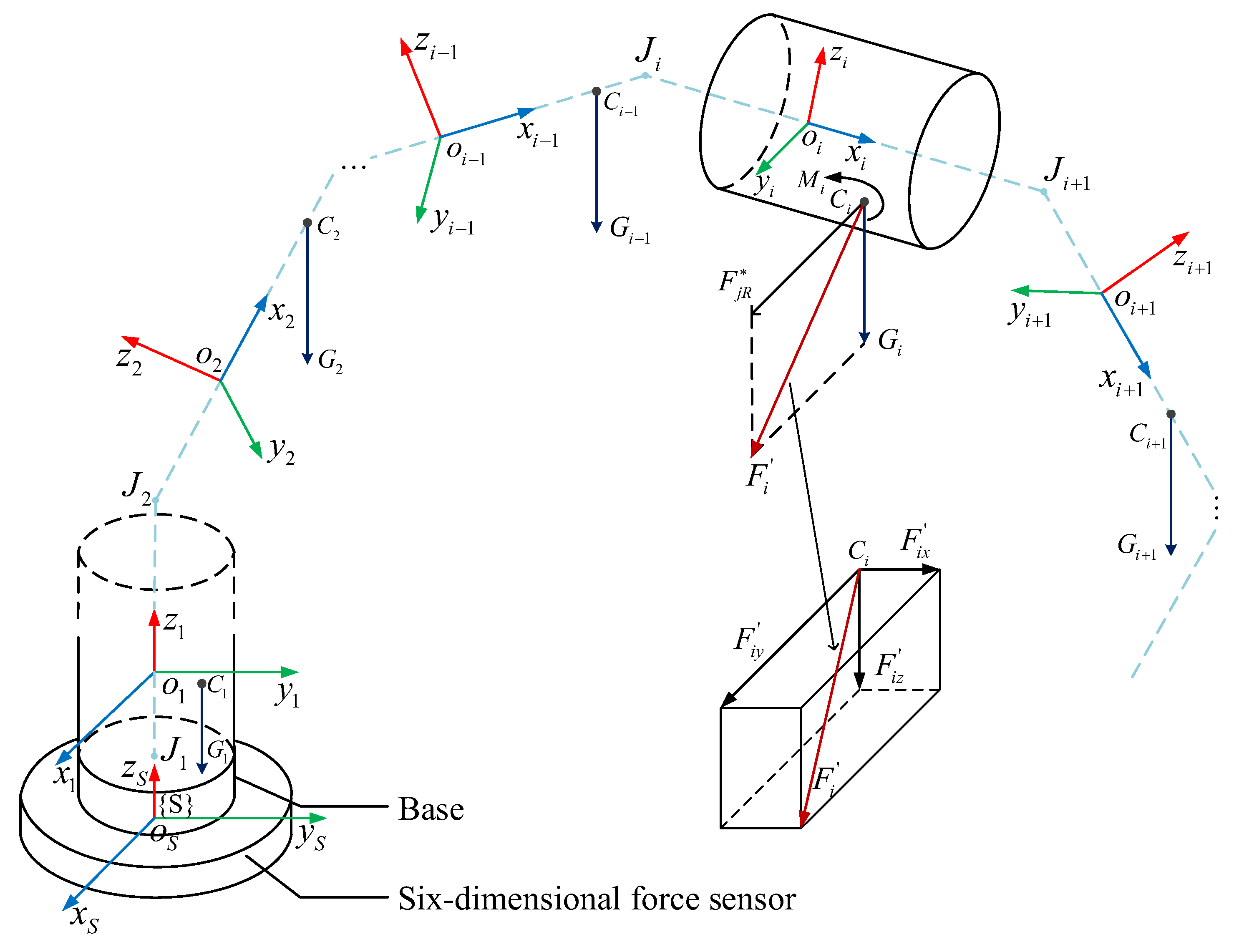

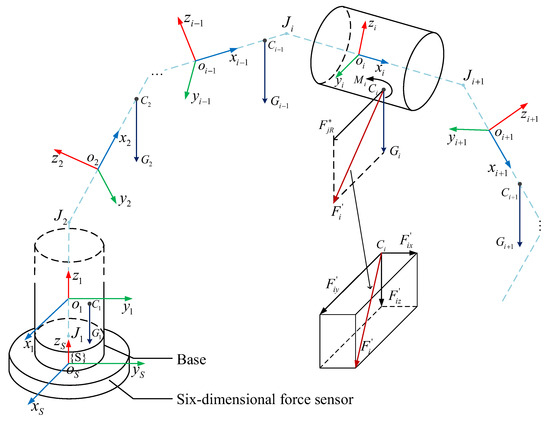

According to Kane’s dynamics equation, the force compensation model of the joint-type collaborative robot can be established, as shown in Figure 3. In the figure, the dashed lines represent the links of the robot, and the coordinate system and gravity of each connecting rod are marked. The generalized compensating force of connecting rod i is also shown.

Figure 3. Force compensation model of the joint-type collaborative robot.

According to the force compensation model, the force compensation algorithm must express the generalized compensating force and joint torque experienced by each connecting rod, assuming no external force, in the sensor coordinate system. The generalized compensating resultant force is the sum of the three directional components of the generalized inertial force and the connecting rod’s own gravity, expressed as

In the equation, i is the unit vector in the positive x-axis direction, j is the unit vector in the positive y-axis direction, k is the unit vector in the positive z-axis direction, is the resultant force of the three directional components of the generalized inertial force, is the gravitational force of the connecting rod itself, and is the generalized compensating resultant force.

The inertial force analysis of robot linkages must be based on the center of mass, as the center of mass is the natural reference point for rigid-body motion. The direction vector w of the generalized compensating resultant force is derived based on the position vector of the centroid , expressed as follows

Then, the components of the generalized compensating force in three directions can be obtained, allowing the generalized compensating force to be uniformly represented as

From Equations (19)–(26), it can be concluded that the generalized force/torque vector that needs to be compensated on connecting rod i is

According to the Cartesian coordinate transformation, it is represented in the coordinate system , as follows

In Equation (28), the transformation matrix is

In Equation (29), is the rotation matrix from coordinate system to coordinate system , and represents the origin of the coordinate system based on the position vector matrix operator of the origin of the coordinate system , expressed as

Then, the generalized force/torque vectors of each connecting rod can be obtained in the form of a summary in the coordinate system , as follows

The final expression for the force compensation of the six-dimensional force sensor at the base can be obtained, namely and .

When the robot is in a certain pose, the detection results of the six-dimensional force sensor on the base for force/torque are and , respectively, expressed as . Therefore, the force/torque information after force compensation is

After force compensation, the compensated detection result of the robot’s six-dimensional force sensor remains zero during motion in the absence of external force, so a non-zero compensated result indicates an accidental collision.
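The compensation step can be sketched as a frame transformation followed by a subtraction. This is a simplified illustration under stated assumptions: each link contributes one force/torque pair expressed in its own frame, the sensor is taken to read the load itself rather than its reaction, and a detection threshold on the residual force is introduced for illustration (the paper does not specify one). The names `skew`, `wrench_transform`, `compensate`, and all numbers are hypothetical.

```python
import numpy as np

def skew(p):
    """Cross-product (skew-symmetric) matrix of a 3-vector."""
    x, y, z = p
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def wrench_transform(R, p):
    """6x6 map taking a wrench [F, M] from a link frame to the sensor frame.

    R: rotation of the link frame expressed in the sensor frame
    p: origin of the link frame expressed in the sensor frame
    """
    T = np.zeros((6, 6))
    T[:3, :3] = R
    T[3:, :3] = skew(p) @ R
    T[3:, 3:] = R
    return T

def compensate(measured, link_wrenches, link_frames, threshold):
    """Subtract the predicted no-contact wrench from the sensor reading."""
    predicted = np.zeros(6)
    for wrench, (R, p) in zip(link_wrenches, link_frames):
        predicted += wrench_transform(R, p) @ np.asarray(wrench, dtype=float)
    residual = np.asarray(measured, dtype=float) - predicted
    collided = bool(np.linalg.norm(residual[:3]) > threshold)
    return residual, collided

# Hypothetical single link: 20 N of weight acting 0.5 m from the sensor along x
frames = [(np.eye(3), np.array([0.5, 0.0, 0.0]))]
wrenches = [np.array([0.0, 0.0, -20.0, 0.0, 0.0, 0.0])]
no_contact = np.array([0.0, 0.0, -20.0, 0.0, 10.0, 0.0])
free_residual, free_hit = compensate(no_contact, wrenches, frames, threshold=2.0)
push = no_contact + np.array([5.0, 0.0, 0.0, 0.0, 0.0, 0.0])
push_residual, push_hit = compensate(push, wrenches, frames, threshold=2.0)
```

With no contact the residual is zero; adding a 5 N external push leaves exactly that push in the residual, which is the quantity passed on to collision point detection.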

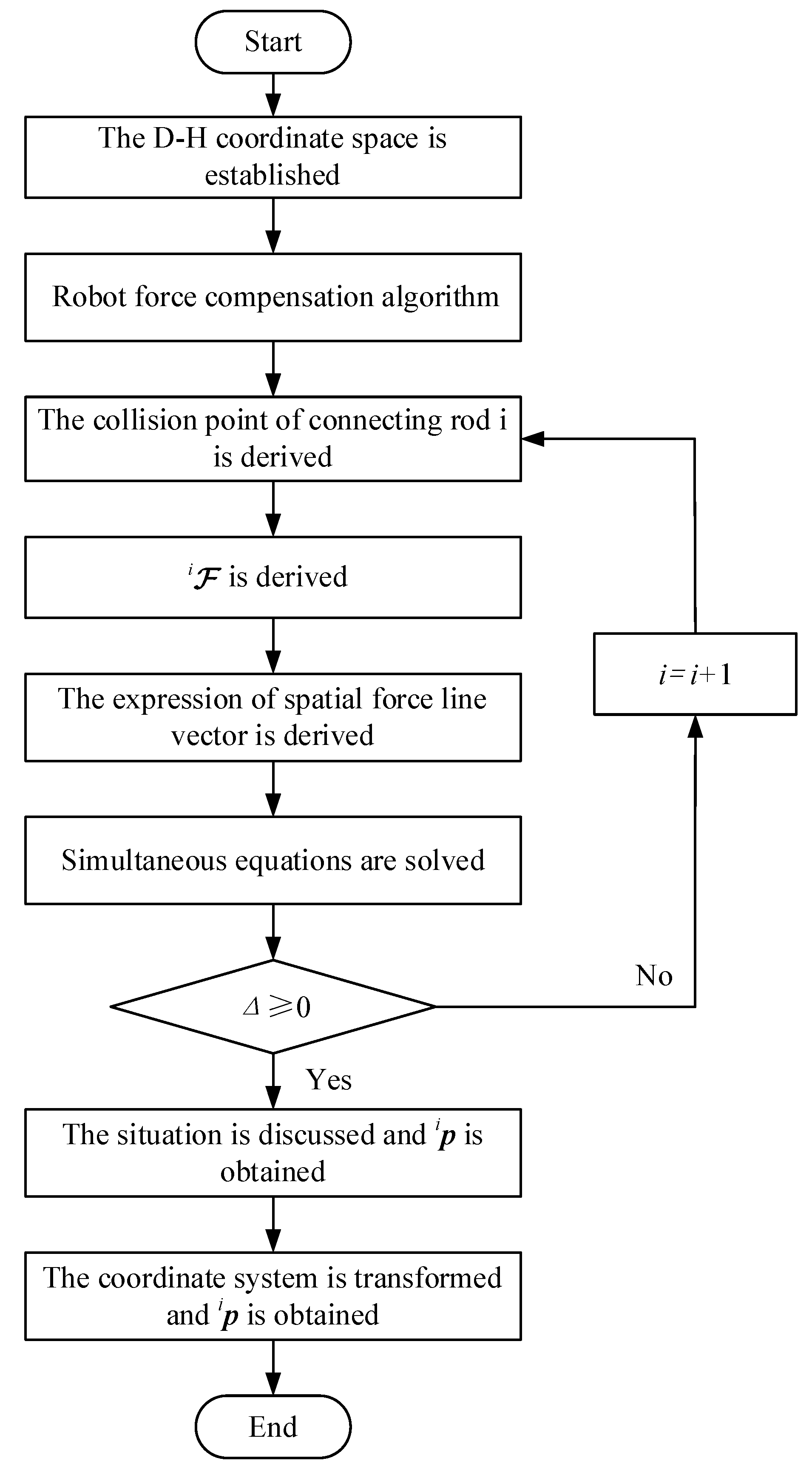

2.3. Collision Detection Algorithm

When discussing the existence of collision points for robot connecting rod i, Cartesian transformation is required to represent the generalized force vector and torque vector represented in the sensor coordinate system within the coordinate system , as follows

Furthermore, the representation of the spatial force vector equation on the robot connecting rod i in the coordinate system can be obtained, expressed as

By converting Equation (34) into a parametric equation, the following can be derived

In Equation (35), is the generalized coordinate of the collision point position, represents a point on the coordinate system through which the spatial force vector passes, and h denotes the distance between possible collision points and .

By combining Equation (35) with the known outer surface equation of robot connecting rod i, a quadratic equation in the unknown h can be formulated. Because the outer surface equation differs from one connecting rod to another, this paper discusses the sign of the discriminant Δ of this quadratic equation.

In the equation, a is the coefficient of the quadratic term; b is the coefficient of the first-order term; and c is a constant term.

By substituting the detection results into the equation, if , the force vector equation intersects with the robot connecting rod i at , where is the position vector of the collision point existing in the coordinate system . The specific situation of the collision point can be discussed and solved in 9 different ways. If , meaning no collision point exists on connecting rod i, the same method should be applied to continue discussing the robot connecting rod . After obtaining , it needs to be converted to the base coordinate system, where the resulting position vector is the final collision point , expressed as follows

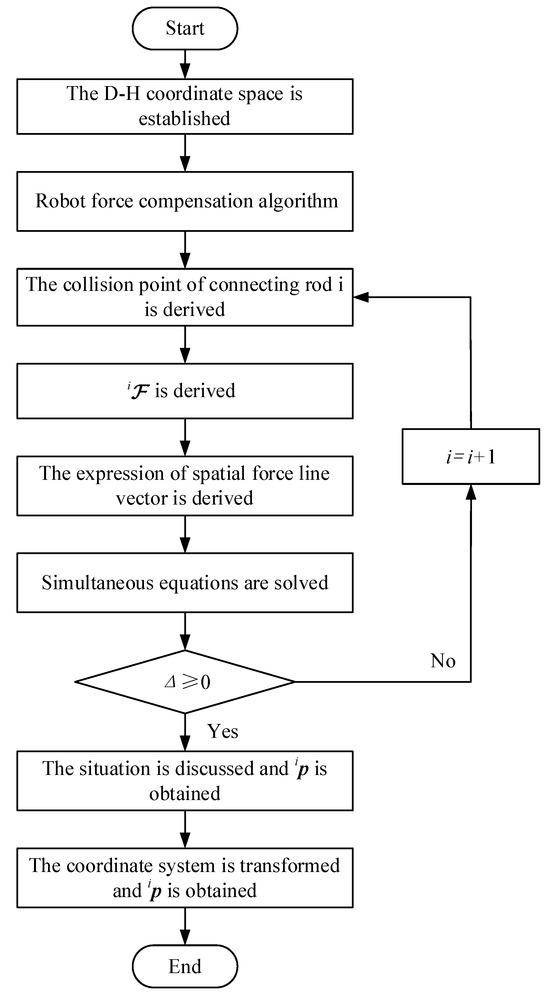

Figure 4 shows the flowchart of the solution process for the collision point.

Figure 4. Flow chart for solving collision points.
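The link-by-link search summarized in the flow chart can be sketched as a loop. The sketch makes several assumptions that are not from the paper: the force line is already available as a point and a direction in the sensor frame, each link supplies its own intersection routine, a sphere centred at the link origin stands in for the real outer surface, and every intersection point is returned as a candidate rather than selecting one. All names are hypothetical.

```python
import numpy as np

def sphere_hits(p, d, radius):
    """Stand-in surface: sphere centred at the link origin (illustration only)."""
    a = d @ d
    b = 2.0 * (p @ d)
    c = p @ p - radius**2
    delta = b * b - 4.0 * a * c          # discriminant of the quadratic in h
    if delta < 0.0:
        return []                        # the line misses this link
    root = np.sqrt(delta)
    roots = sorted({(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)})
    return [p + h * d for h in roots]

def find_collision(point_s, dir_s, links):
    """Try each link in turn; return its index and candidate points in the base frame.

    links: list of (T_link_from_sensor, T_base_from_link, intersect) tuples,
           where the transforms are 4x4 homogeneous matrices.
    """
    for index, (T_ls, T_bl, intersect) in enumerate(links):
        p = (T_ls @ np.append(point_s, 1.0))[:3]   # line point in the link frame
        d = T_ls[:3, :3] @ dir_s                   # line direction in the link frame
        hits = intersect(p, d)
        if hits:
            return index, [(T_bl @ np.append(h, 1.0))[:3] for h in hits]
    return None, []

def translation(x, y, z):
    T = np.eye(4)
    T[:3, 3] = (x, y, z)
    return T

# Two hypothetical links; the second frame sits 1 m along x from the sensor
links = [
    (np.eye(4), np.eye(4), lambda p, d: sphere_hits(p, d, 0.1)),
    (translation(-1, 0, 0), translation(1, 0, 0), lambda p, d: sphere_hits(p, d, 0.1)),
]
index, points = find_collision(np.array([1.0, 0.0, 5.0]),
                               np.array([0.0, 0.0, -1.0]), links)
```

In this example the line misses the first link (negative discriminant) and crosses the second, so the search moves on exactly as the flow chart describes and reports the candidates in base coordinates.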

2.4. Solving Examples

Taking a joint robot with a cylindrical outer surface as an example, numerical verification is performed. By using Equations (33)–(35), the representation of the spatial force vector on connecting rod i can be obtained.

The cylindrical surface equation of the robot connecting rod i is given as follows

In Equation (39), is the radius of connecting rod i; is the length of connecting rod i; is the distance from the bottom of the circle to the origin of the coordinate system; and is the distance from the top of the circle to the origin of the coordinate system.

By combining Equations (35) and (39), we obtain

The algebraic expression for Δ can be obtained from Equation (36) as follows

By discussing the value of Δ, we can obtain the algebraic expressions of the intersection points in three different situations.

1. The situation of Δ > 0

When Δ > 0, the intersection point may appear on the cylindrical surface or on the bottom/top circular surface.

(1) Appears on a cylindrical surface

According to Equation (37), it can be concluded that

When , substituting into the force vector parameter equation in Equation (35) provides the following:

Similarly, when , the following is obtained:

(2) Appears on the bottom/top circular surface

When the intersection point appears on the bottom circle, substituting into the force vector parameter equation yields

Substituting again and solving for y, z, the following is obtained:

When the intersection point appears on the top circle, substituting into the force vector parameter equation yields

Substituting again and solving for y, z, the following is obtained:

2. The situation of Δ = 0

When Δ = 0, there is a unique solution for h, given by

By substituting h into the parameter equation of the force line vector, the result can be obtained, as follows

3. The situation of Δ < 0

When Δ < 0, h does not exist, indicating that the collision point is not on the robot connecting rod in that segment.

According to the range of values of Δ and x, collisions are classified into nine possible scenarios. Using Equations (42)–(50), the coordinates of the collision point are calculated for each scenario. According to Equation (38), the obtained collision point is then expressed in the base coordinate system, as shown in Table 2. In Table 2, the yellow vector represents the spatial force line vector, and the dashed cylinder represents the extension of the connecting rod beyond its actual length range along the x-axis, which is not part of the robot body.

Table 2. Collision situation for connecting rods.
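The cylindrical example can be sketched numerically. The sketch assumes the link axis lies along the x-axis of the link frame, with the side surface y² + z² = r² between a bottom and a top cap; it treats the caps independently of the discriminant and returns every candidate point instead of reproducing the nine-case classification. The function name `cylinder_hits` and the dimensions are hypothetical.

```python
import numpy as np

def cylinder_hits(p0, d, radius, x_bottom, x_top, tol=1e-12):
    """Intersections of the line p0 + h*d with a capped cylinder along x."""
    p0 = np.asarray(p0, dtype=float)
    d = np.asarray(d, dtype=float)
    hits = []

    # Side surface: substitute the line into y^2 + z^2 = r^2, quadratic in h
    a = d[1]**2 + d[2]**2
    b = 2.0 * (p0[1] * d[1] + p0[2] * d[2])
    c = p0[1]**2 + p0[2]**2 - radius**2
    if a > tol:
        delta = b * b - 4.0 * a * c
        if delta >= 0.0:
            root = np.sqrt(delta)
            for h in sorted({(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)}):
                point = p0 + h * d
                if x_bottom <= point[0] <= x_top:   # inside the link's length range
                    hits.append(point)

    # Bottom and top circular surfaces: fix x, then check the radius
    if abs(d[0]) > tol:
        for x_cap in (x_bottom, x_top):
            h = (x_cap - p0[0]) / d[0]
            point = p0 + h * d
            if point[1]**2 + point[2]**2 <= radius**2:
                hits.append(point)
    return hits

# Hypothetical link: radius 0.05 m, spanning x = 0 to 0.3 m
side = cylinder_hits([0.1, 0.0, 1.0], [0.0, 0.0, -1.0], 0.05, 0.0, 0.3)
caps = cylinder_hits([-1.0, 0.01, 0.0], [1.0, 0.0, 0.0], 0.05, 0.0, 0.3)
miss = cylinder_hits([0.1, 0.2, 1.0], [0.0, 0.0, -1.0], 0.05, 0.0, 0.3)
```

The three calls cover a line crossing the side surface, a line running parallel to the axis through both caps, and a line that misses the link entirely (negative discriminant).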

3. Algorithm Verification

3.1. Verification of Force Compensation Algorithm

3.1.1. Simulation Verification

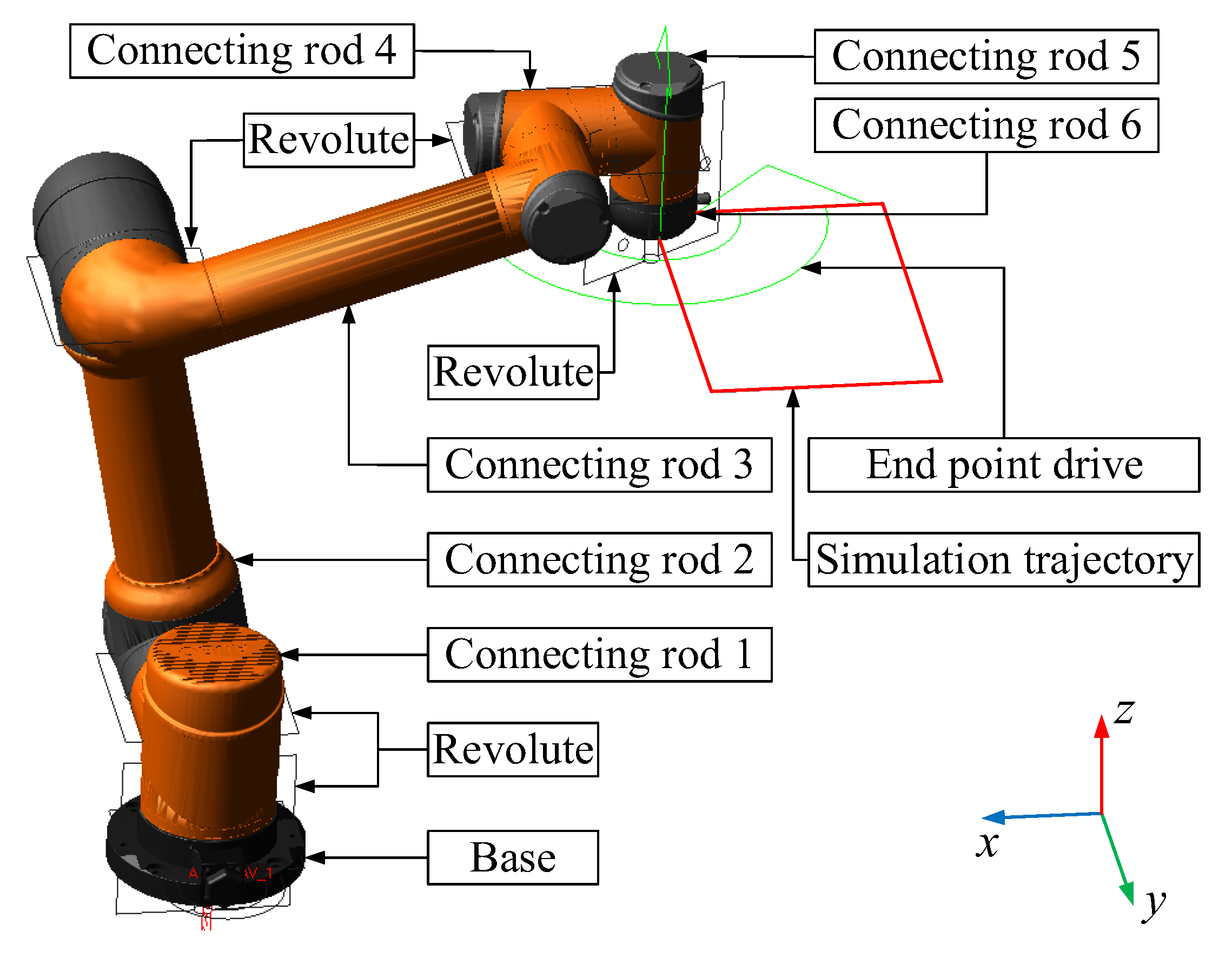

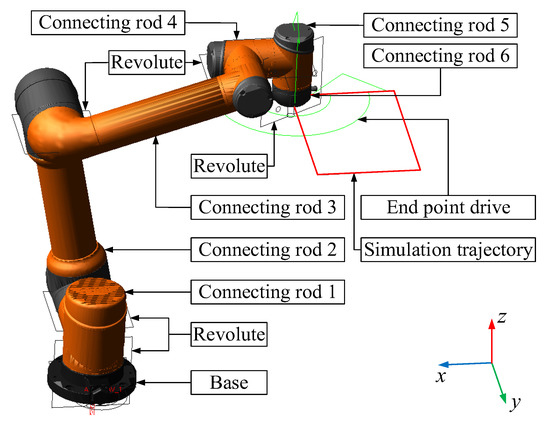

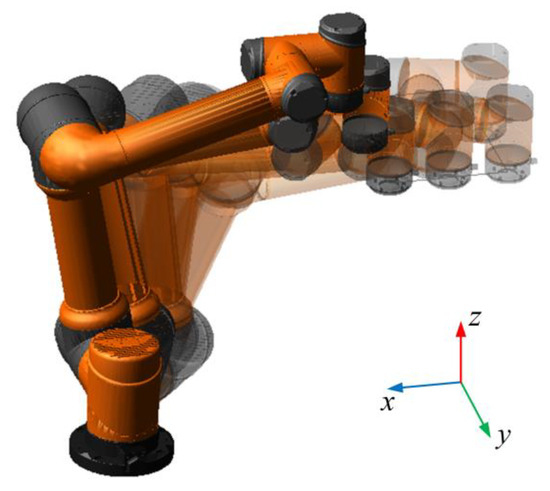

To verify the correctness and feasibility of the Kane’s dynamics-based force compensation algorithm proposed in this paper, the model of the AUBO-I5 articulated collaborative robot was imported into ADAMS (2020) for simulation verification. Kinematic pair constraints were added to each joint, and structural parameters such as the mass and inertia tensor of each connecting rod were set. A point motion was applied at the end of the robot so that it traced a rectangular trajectory of 200 mm along the x-axis and 300 mm along the y-axis. To simulate variable-speed movements, such as an emergency stop and start, the robot was run at three different speeds and accelerations: fast, medium, and slow. The simulation model is shown in Figure 5.

Figure 5. Simulation model of the AUBO-I5 robot.

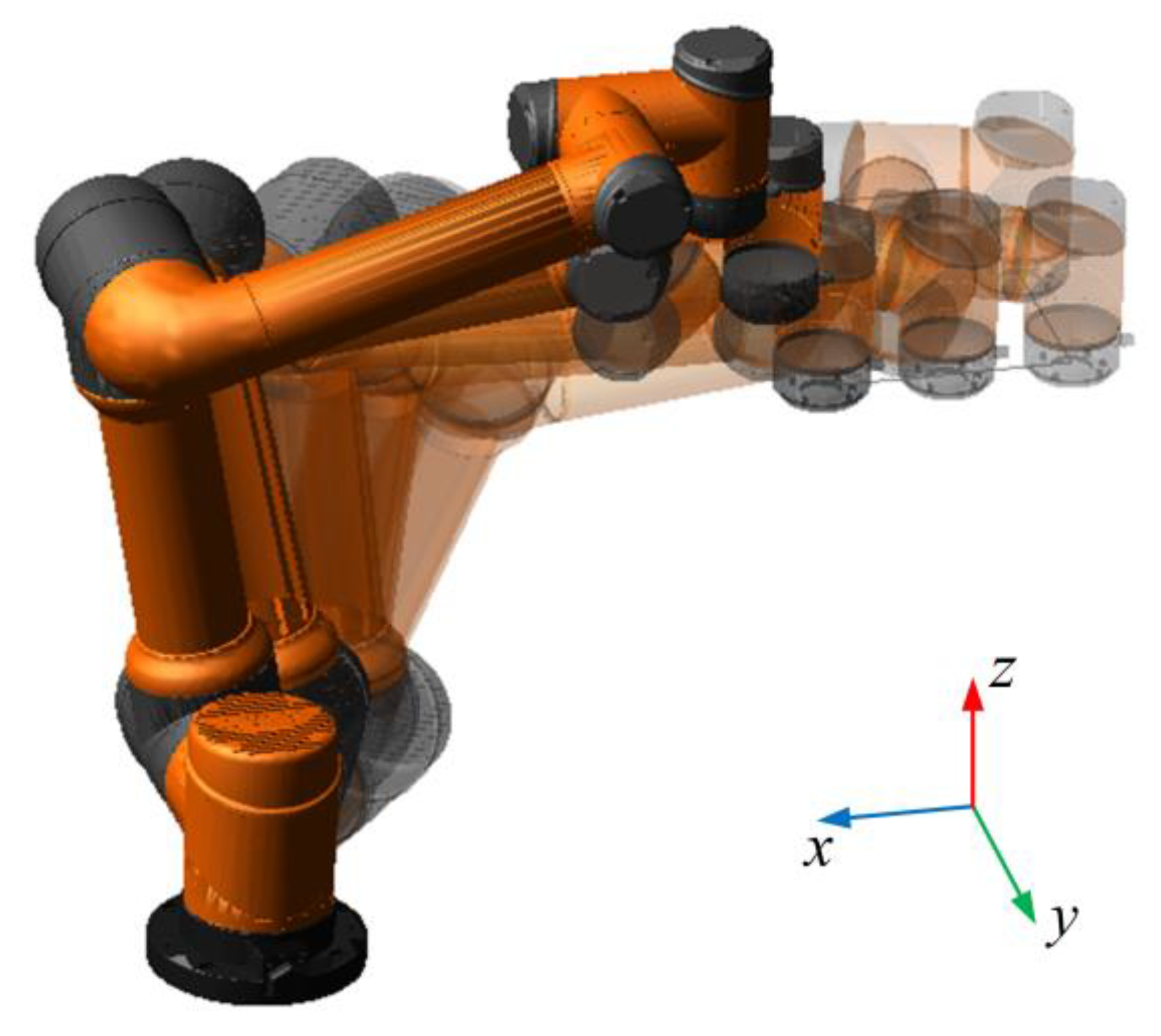

The simulation motion has a termination time of 8.0 s and 400 steps. The residual image of the simulation motion is presented in Figure 6, while the point driving function at the end is provided in Table 3.

Figure 6. Simulated motion residual image.

Table 3. End point driving function.

With the sampling frequency set to 1000 Hz, the forces and torques acting on the robot base in the three directions are recorded in real time during the simulated motion, giving the simulation results.

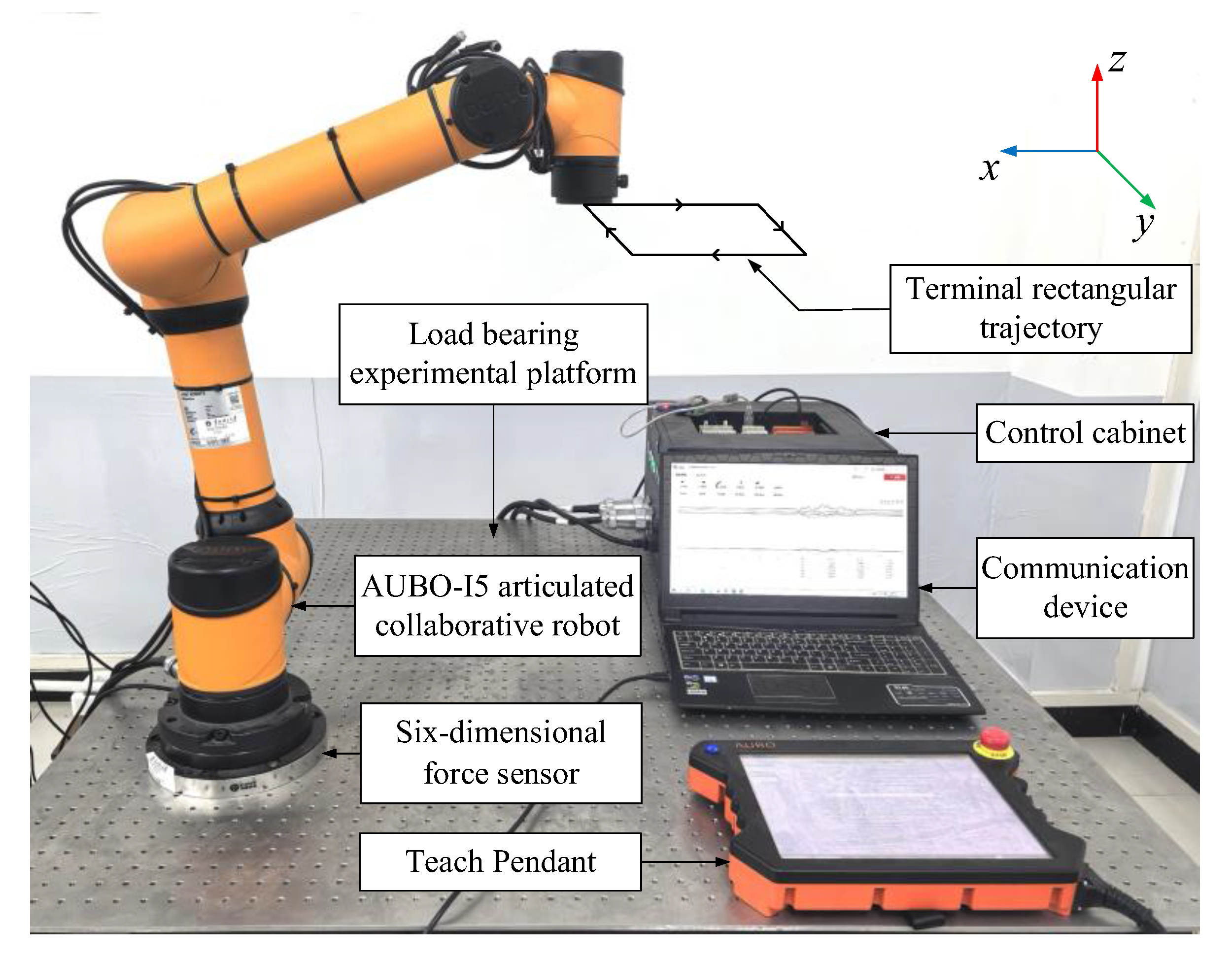

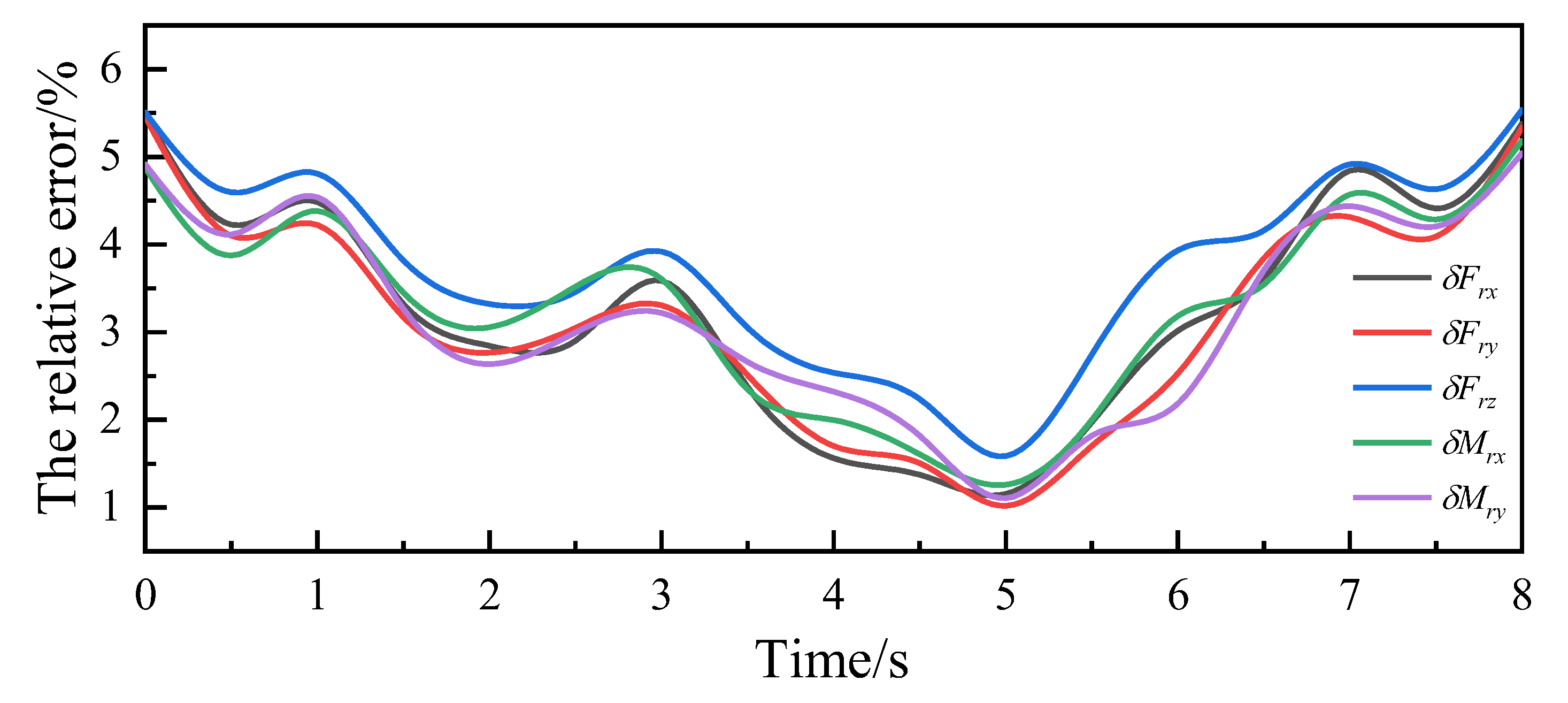

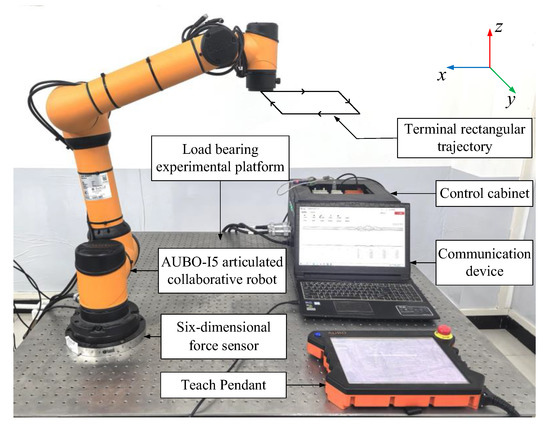

3.1.2. Experimental Verification

To verify the correctness of the force compensation algorithm and compare it with the simulation experiment results, the AUBO-I5 articulated collaborative robot (Aobo Intelligent Technology Co., Ltd., Beijing, China) was used for force compensation experiments. The robot has 6 degrees of freedom, a maximum working radius of 886.5 mm, a mass of 24 kg, and a repeat positioning accuracy of 0.02 mm. A KWR200X six-dimensional force sensor (Changzhou Kunwei Sensing Technology Co., Ltd., Beijing, China) was installed on the robot base and connected to communication equipment to facilitate interaction between the robot and the external environment. The sensor is made of alloy steel, with a diameter of 200 mm, a height of 25.5 mm, a resolution of 0.03% F.S., and an accuracy of 0.5% F.S.; the sampling frequency is 1000 Hz.

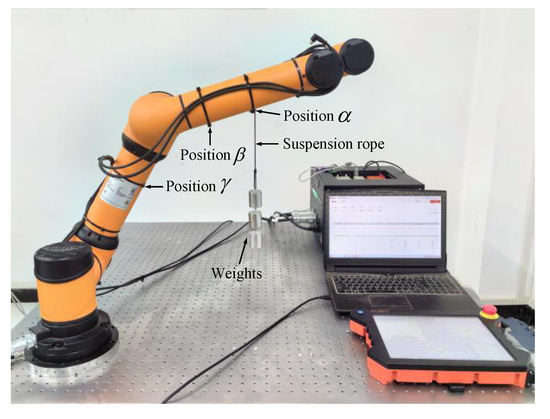

For comparative experiments, the robot was programmed to run the same rectangular trajectory as in the simulation experiment using a teaching pendant, and the three directions of force and torque acting on the base of the robot during operation were monitored in real time through the six-dimensional force sensor. The experiment is shown in Figure 7.

Figure 7. Experimental diagram of the AUBO-I5 robot force compensation.

As the robot runs along the planned path, the forces and torques acting on the base are monitored in real time through the sensor data acquisition software, giving the experimental results.

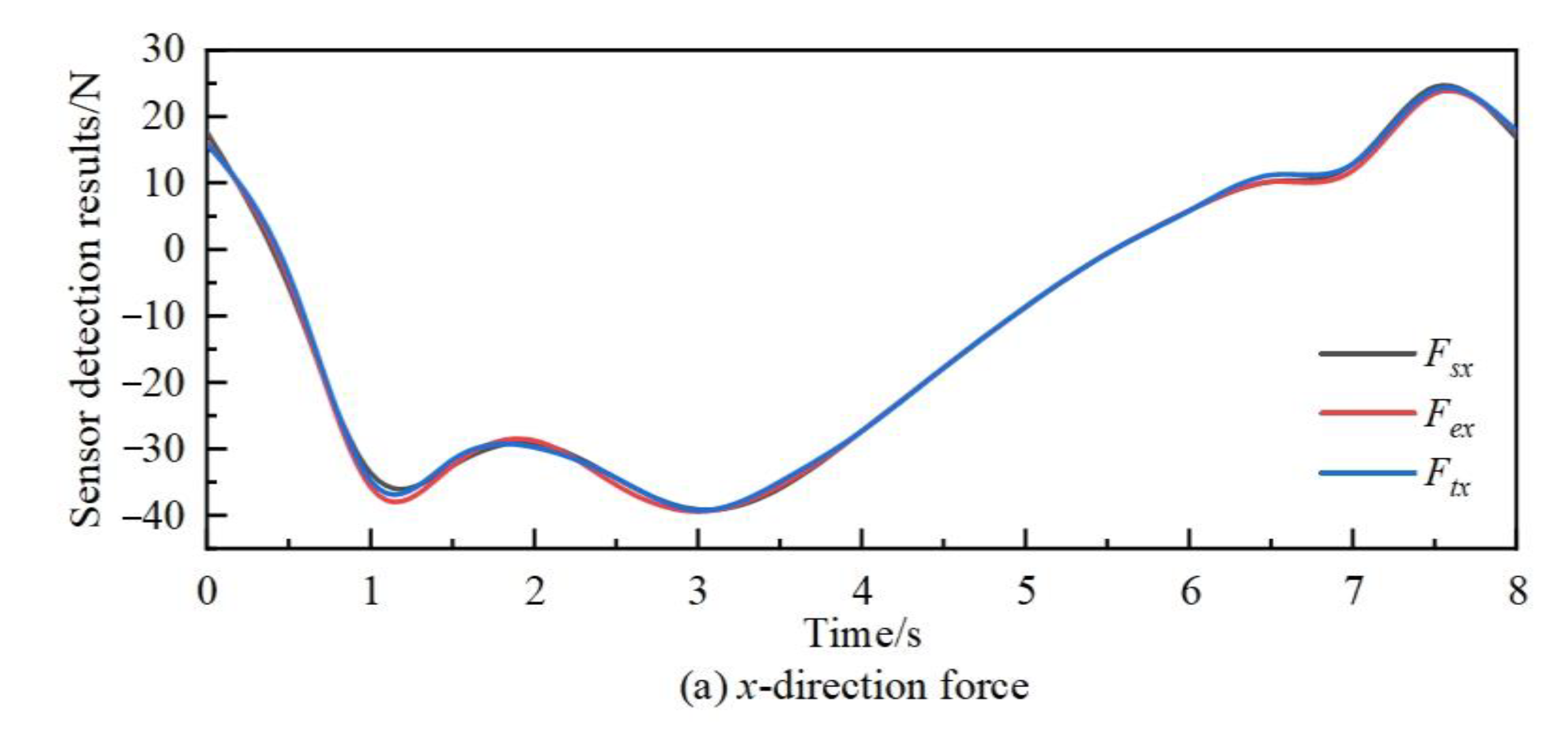

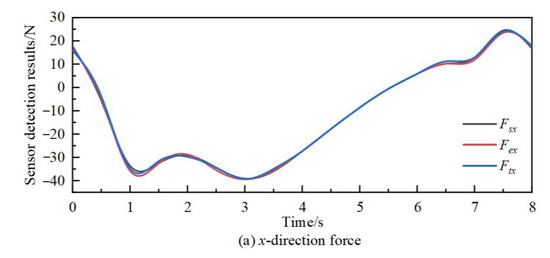

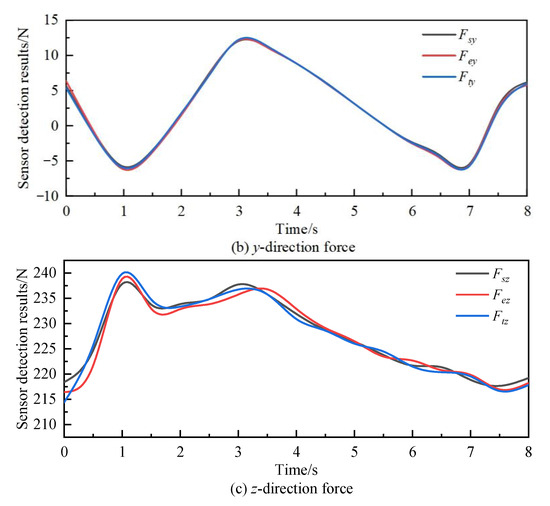

3.1.3. Comparative Verification

According to Equations (14)–(17) and (19)–(32), the theoretical results for robot force compensation can be obtained. The velocity and acceleration during each time period can be obtained from the simulated velocity curve. Based on Equations (14)–(17), the velocity and acceleration at the end can be iterated onto each connecting rod, and the force and torque experienced by each connecting rod can be represented on the sensor coordinate system as theoretical values, expressed as , , , , , and . Similarly, the data obtained through simulation can be represented as , , , , , and . The data obtained through experiments can be represented as , , , , , and .

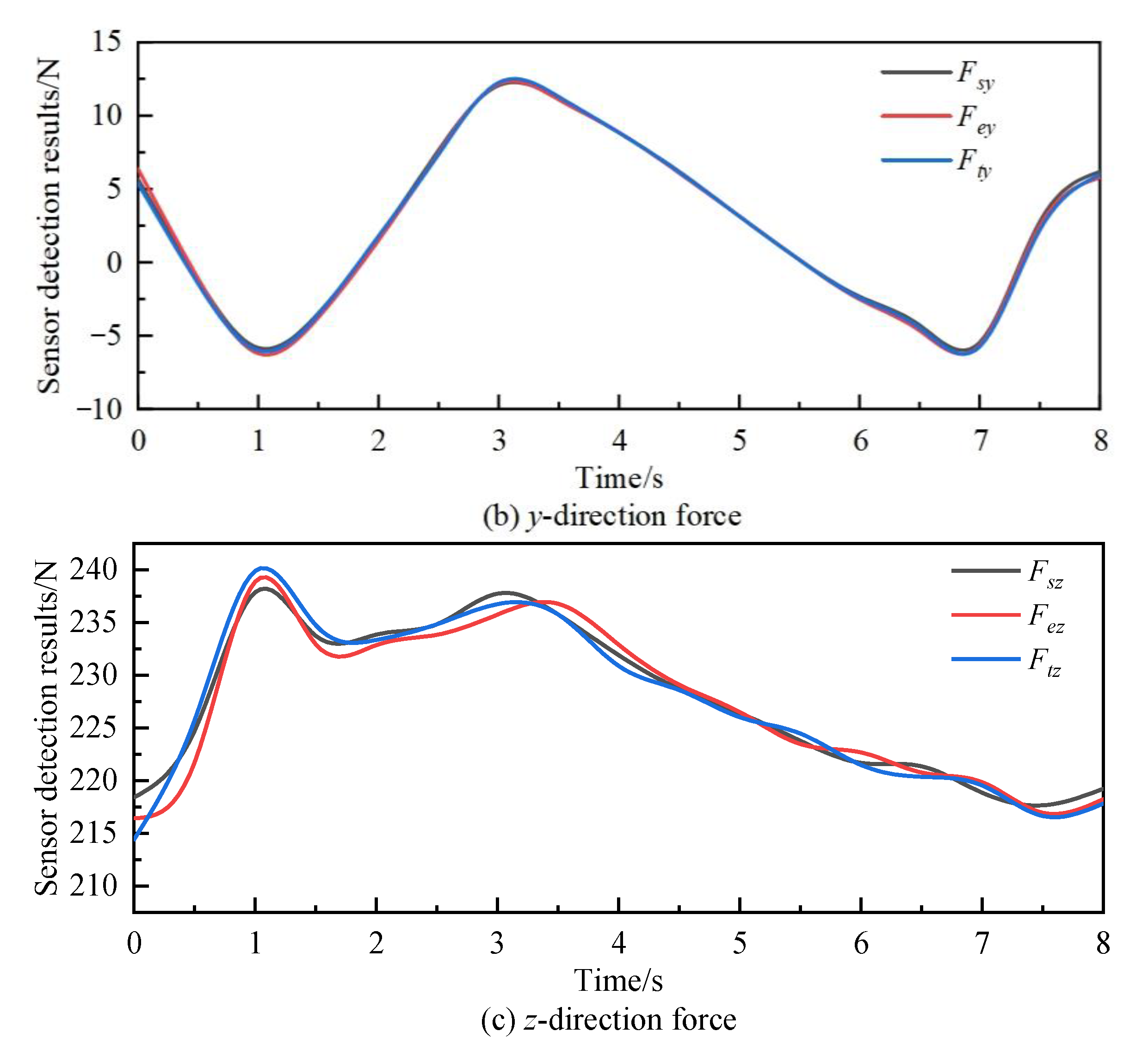

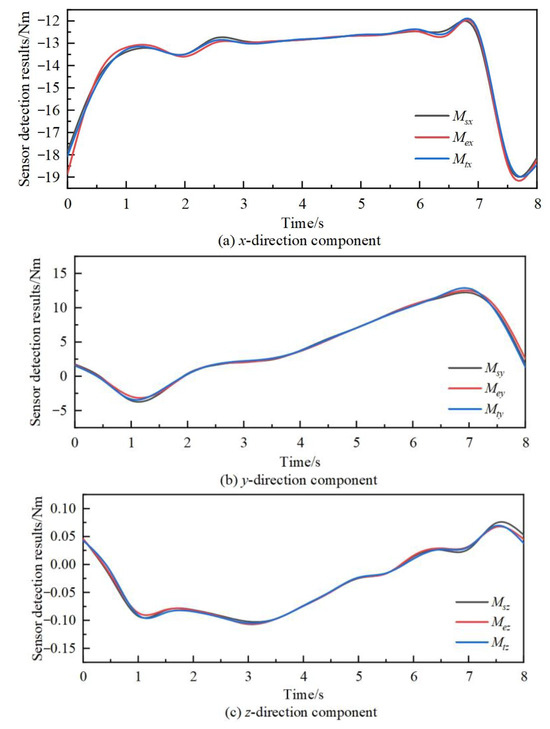

We compare the theoretical results, simulation results, and experimental results obtained from the calculation to verify the correctness of the theoretical analysis. The comparison of the component forces in three directions and the component moments in three directions is shown in Figure 8 and Figure 9.

Figure 8. Force comparison chart.

Figure 9. Torque comparison chart.

In Figure 8 and Figure 9, the curves represent the simulation results, experimental results, and theoretical results of forces and moments in the x, y, and z directions.

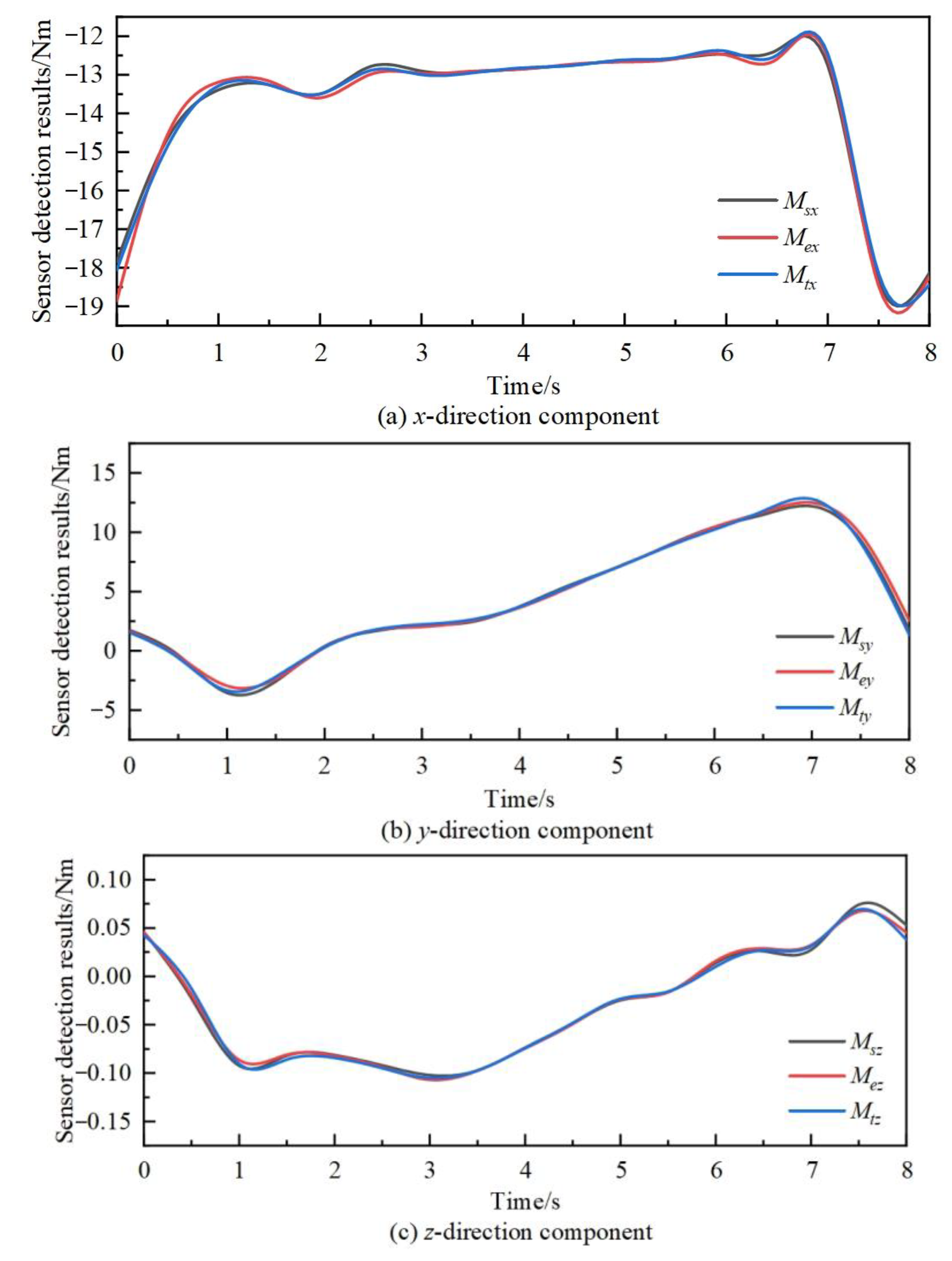

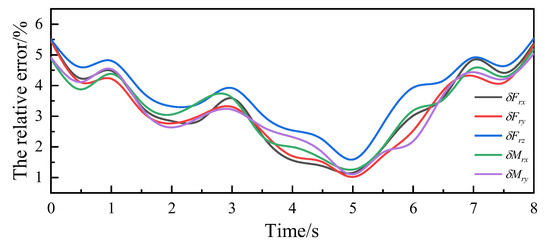

To further verify the accuracy of the force compensation algorithm, an error analysis was performed using theoretical results, simulation results, and experimental results. The relative error calculation formula for the three directional forces is given as follows

The relative error is constructed to normalize the total deviation of the simulation and experimental results from the theoretical value by the combined magnitude of the two, which prevents a single result with a large magnitude from dominating the error.

In the equation, is the force data in the simulation results, is the force data in the experimental results, and is the force data in the theoretical results; is the absolute error between simulation force and theoretical force, and is the absolute error between experiment force and theoretical force.

Similarly, the relative error of the torque in the three directions is computed from the absolute error between the simulation torque and the theoretical torque and the absolute error between the experimental torque and the theoretical torque. Because the magnitude of the moment in the z-direction is small, its relative error is disproportionately large and of little reference value, so it is ignored. The relative error of the force/torque of the robot in theory, simulation, and experiment is shown in Figure 10, and the maximum value of the relative error is shown in Table 4.

Figure 10. Relative error of force/torque.

Table 4. The maximum value of relative error.

From Figures 8–10 and Table 4, it can be observed that the comparison curves of force/torque in the three directions remain consistent, with the maximum relative error occurring in the z-direction component. Because the gravity and dynamic forces exerted by the robot itself are largest in this direction, the overall error in the comparison is relatively high there. However, the relative error does not exceed 5.6%, confirming the correctness of the theoretical analysis of the force compensation algorithm. When the robot runs from 4 s to 6 s, the relative error of the force/torque in the three directions is at its minimum. During this period, the end of the robot moves in the positive x-direction, and the acceleration of the robot links is at its minimum. It can be concluded that, during operation, a smaller rotation amplitude of each joint leads to smoother movements and more stable changes in force/torque, and therefore to more accurate force/torque compensation values. The force compensation algorithm thus compensates for the zero position value of the sensor in real time when no external force is applied, with the relative error staying within the 5.6% bound.

3.2. Verification of Collision Detection Algorithm

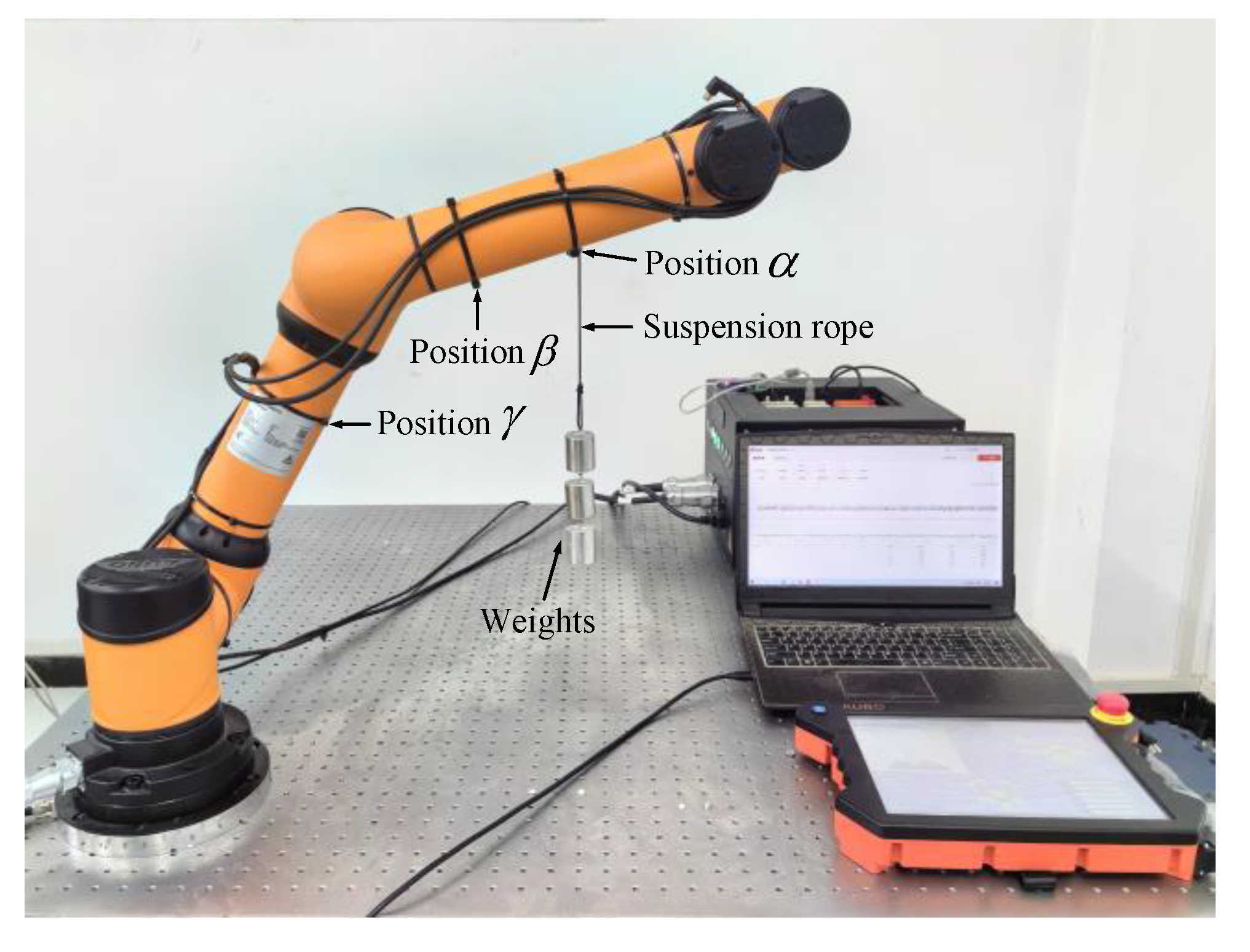

To verify the effectiveness and accuracy of the collision point detection algorithm proposed in this paper, collision point detection experiments were conducted using the AUBO-I5 robot. The robot’s working pose was set through the control cabinet and teaching pendant.

To simulate collision forces of known magnitude and direction, weights are suspended on the robot connecting rod and the suspension rope is cut to simulate the collision force. The position of the simulated collision force is known, and the experimental result is based on the sensor coordinate system.

The pose of the simulated robot in operation was set as follows: . Weights were hung at different positions (position α, position β, and position γ) on various connecting rods of the robot for collision testing. The specific coordinates of the collision location are shown in Table 5.

Table 5. Collision position coordinates.

The detection results recorded by the data collection software at the moment of cutting were input into the proposed collision point detection algorithm. The experiment is shown in Figure 11.

Figure 11. Experimental diagram of robot collision point detection.

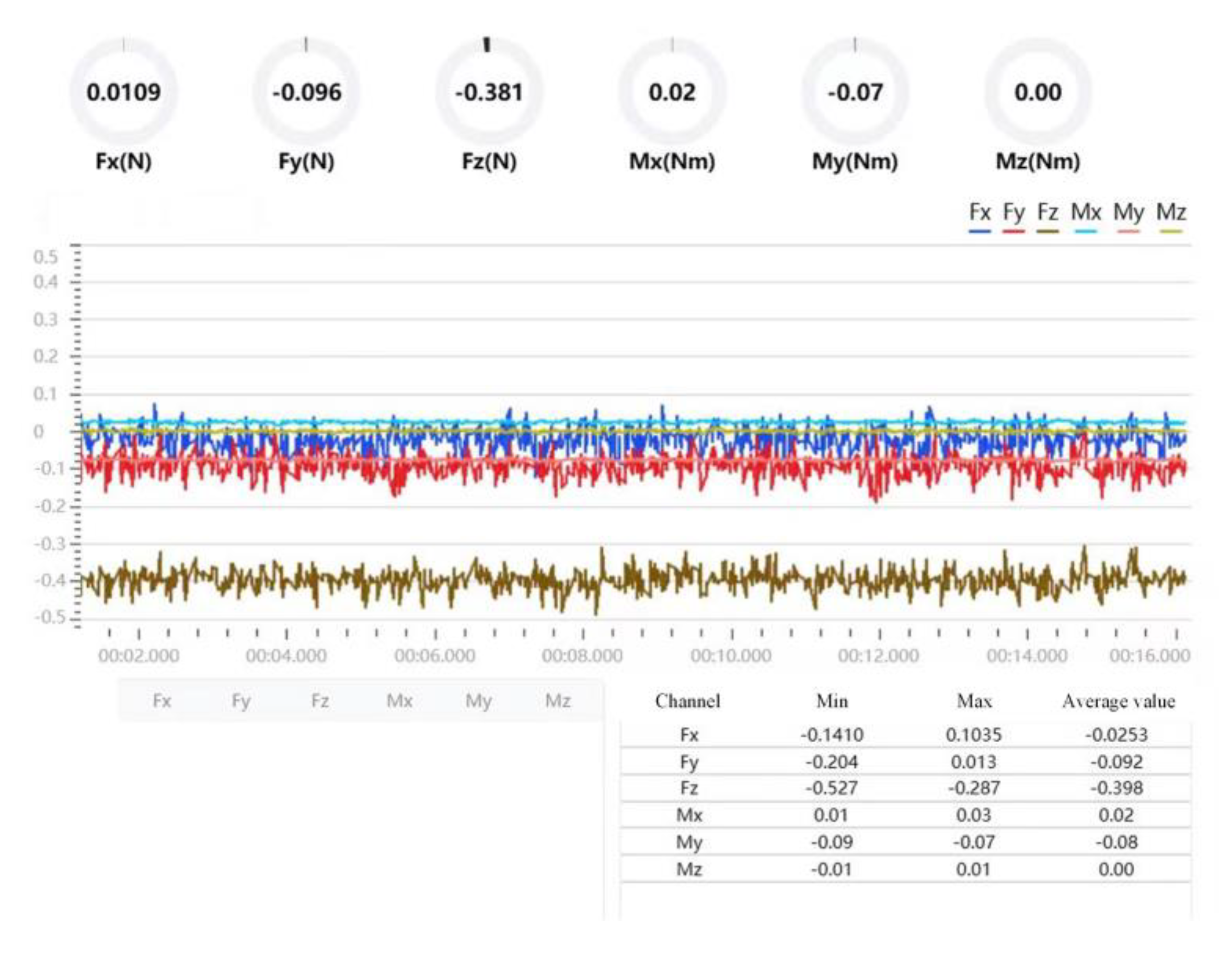

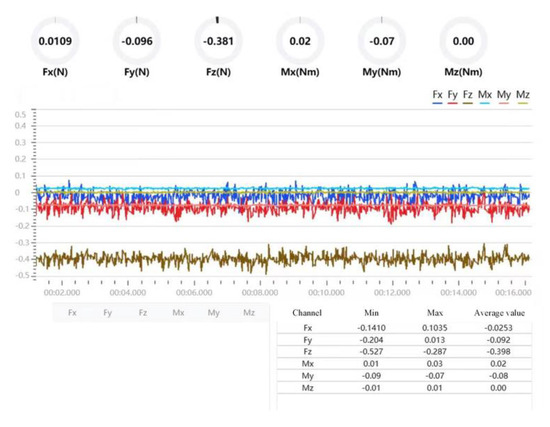

The display interface of the sensor data acquisition software is shown in Figure 12.

Figure 12. Display interface of sensor data acquisition software.

To verify the accuracy of the proposed algorithm, an error analysis was conducted on the experimental results.

The relative error calculation equation for each direction of a position is

In the equation, represent the absolute errors in calculating the position; denote the calculated collision position coordinates; and the coordinates of the actual collision position are represented by .
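A per-direction error of this kind can be sketched as follows. The sketch assumes that the absolute error is the magnitude of the difference between the calculated and actual coordinates and that the relative error is this difference divided by the magnitude of the actual coordinate, expressed in percent; the coordinates below are hypothetical and are not taken from the experiments.

```python
import numpy as np

def position_errors(calculated, actual):
    """Absolute and relative (percent) position error in each direction."""
    calculated = np.asarray(calculated, dtype=float)
    actual = np.asarray(actual, dtype=float)
    absolute = np.abs(calculated - actual)
    relative = 100.0 * absolute / np.abs(actual)   # undefined where actual is 0
    return absolute, relative

# Hypothetical coordinates in mm
absolute, relative = position_errors([102.0, -196.0, 305.0],
                                     [100.0, -200.0, 300.0])
```

Under this definition a coordinate whose actual value is close to zero produces a very large relative error, so the absolute error is the more informative figure in that direction.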

The experimental results of position α are shown in Table 6.

Table 6. Experimental results of collision detection at position α.

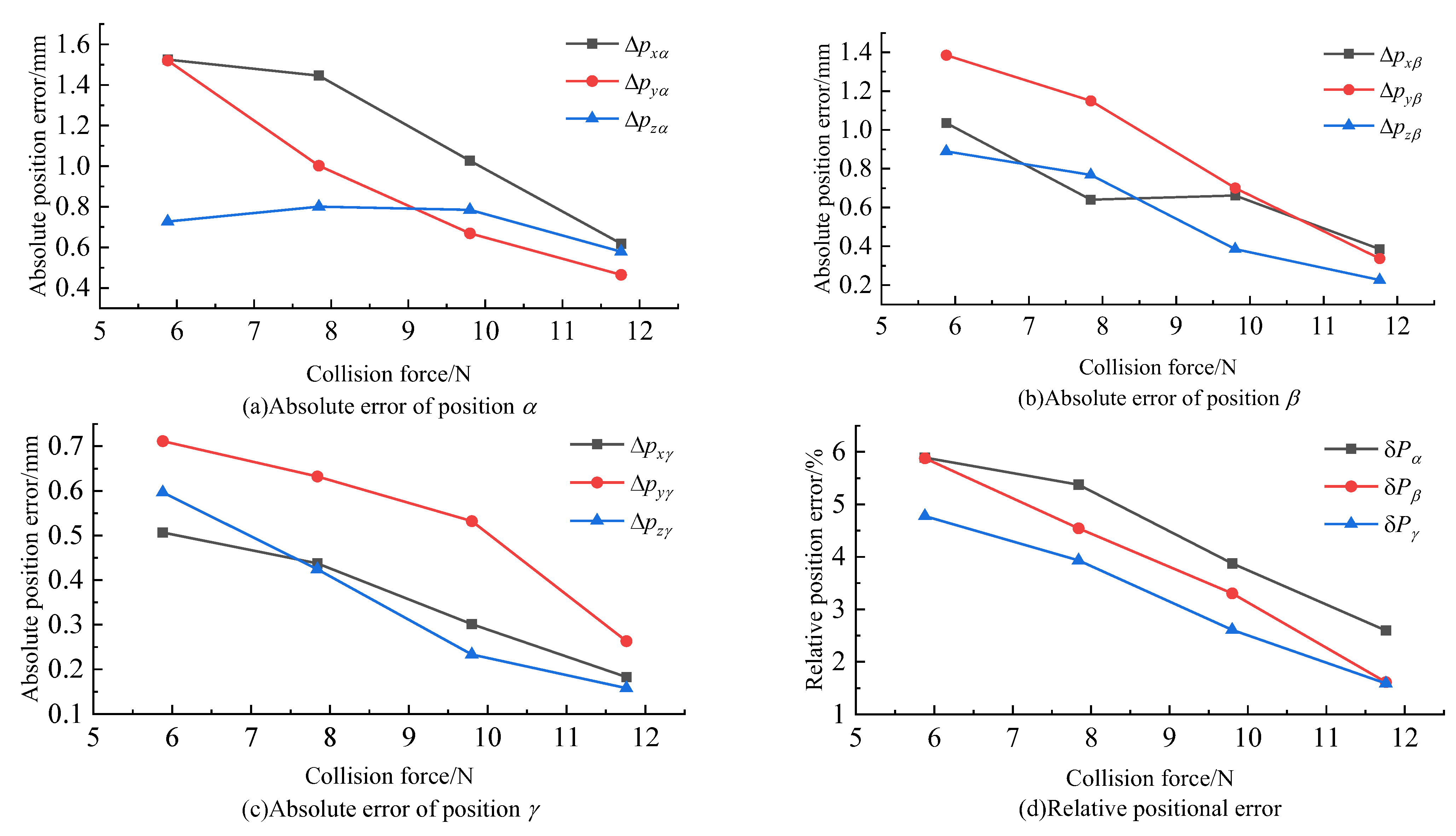

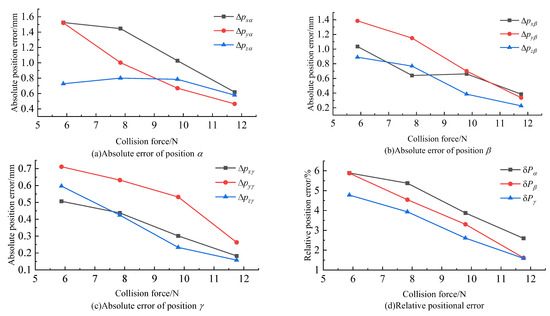

When the robot is subjected to different collision forces at different positions, its absolute and relative position errors are shown in Figure 13.

Figure 13.

Experimental results.

From Figure 13, it can be observed that the relative error of the collision point position obtained with this algorithm decreases as the applied external force increases, and the relative error remains below 5.8%. Because position γ is closer to the base sensor than positions α and β, the absolute and relative position errors in all directions at position γ are generally smaller than those at positions α and β. This suggests that the accuracy of the detected collision point position improves as the distance between the collision point and the sensor decreases. Overall, the collision detection algorithm yields small errors and effectively identifies the coordinates of collision points.
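A first-order argument suggests why both trends are plausible. It is offered here as a sketch under simplifying assumptions, not as part of the paper's derivation: a single contact force $\mathbf{F}$ acts at a point $\mathbf{r}$ measured from the sensor origin, so that $\mathbf{M} = \mathbf{r} \times \mathbf{F}$, and the sensor readings carry small errors $\delta\mathbf{F}$ and $\delta\mathbf{M}$ (all symbols are introduced for this illustration).

```latex
\begin{aligned}
(\mathbf{r} + \delta\mathbf{r}) \times (\mathbf{F} + \delta\mathbf{F})
  &= \mathbf{M} + \delta\mathbf{M} \\
\Rightarrow\quad \delta\mathbf{r} \times \mathbf{F}
  &\approx \delta\mathbf{M} - \mathbf{r} \times \delta\mathbf{F}
  \qquad \text{(first order)} \\
\Rightarrow\quad \|\delta\mathbf{r}_{\perp}\|
  &\lesssim \frac{\|\delta\mathbf{M}\| + \|\mathbf{r}\|\,\|\delta\mathbf{F}\|}{\|\mathbf{F}\|}
\end{aligned}
```

Here $\delta\mathbf{r}_{\perp}$ is the component of the position error perpendicular to $\mathbf{F}$. For a fixed level of sensor error, the bound shrinks as $\|\mathbf{F}\|$ grows and grows with the distance $\|\mathbf{r}\|$ from the sensor, which is consistent with the smaller errors observed for larger forces and for position γ.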

4. Conclusions

Collision detection plays a crucial role in safety research on collaborative robots and underpins both reliable human–robot collaboration and the continued advancement of intelligent automation. Force compensation is its prerequisite: by calibrating the robot’s force perception system, it removes the influence of gravity and inertial forces so that the external collision force acting on the robot can be sensed accurately. To address the limitations of traditional collision detection algorithms for robots using six-dimensional force sensors, this paper proposed a force compensation algorithm based on Kane’s dynamics and a collision detection algorithm that combines the six-dimensional force sensor’s measurements with the robot’s outer surface equations to derive the coordinates of the collision point on the robot body. Force compensation and collision detection experiments were carried out on the AUBO-I5 joint-type collaborative robot. In the force compensation experiment, simulation, experimental, and theoretical results were compared and analyzed for error, yielding force compensation comparison curves and error analysis results during the robot’s motion. The comparison curves for forces/torques in three directions were consistent, with relative errors below 5.6%. In the collision detection experiment, the readings of the six-dimensional force sensor at the base were recorded and input into the proposed collision point detection algorithm. The computed collision positions were consistent with the actual collision positions, and the relative position error was below 5.8%. Both sets of experiments validated the correctness and feasibility of the theoretical analysis of the force compensation and collision detection algorithms.

The force compensation and collision detection algorithms proposed in this paper leave room for future work. A small number of robots have complex shapes, for which the outer surface equations become complicated, and this may reduce the efficiency of solving for the collision point coordinates. In the future, we will continue to optimize the collision detection algorithm, improve its generality, and attempt to develop a complete system that detects in real time whether a collision has occurred, computes the coordinates of the collision point on the robot’s outer surface, and displays them within the system. With such a system, the robot could be controlled in real time when a collision occurs to ensure safe operation during its work.

Author Contributions

Conceptualization, Y.W.; Methodology, Y.W.; Validation, Y.W.; Data curation, Y.W.; Writing—original draft, Y.W.; Writing—review & editing, Y.W. and Z.W.; Visualization, Y.X.; Supervision, Y.F.; Project administration, Z.W.; Funding acquisition, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [No. 51505124], the Science and Technology Project of Hebei Education Department [ZD2020151], the Hebei Province Innovation Capability Enhancement Plan Project [225676144H], the Tangshan Science and Technology Innovation Team Training Plan Project [21130208D], and the Key Research Project of North China University of Science and Technology [ZD-ST-202305-23].

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zafar, H.M.; Langås, F.E.; Sanfilippo, F. Exploring the synergies between collaborative robotics, digital twins, augmentation, and industry 5.0 for smart manufacturing: A state-of-the-art review. Robot. Comput. Integr. Manuf. 2024, 89, 102769.

- Dimitropoulos, N.; Michalos, G.; Arkouli, Z.; Kokotinis, G.; Makris, S. Industrial collaborative environments integrating AI, Big Data and Robotics for smart manufacturing. Procedia CIRP 2024, 128, 858–863.

- Singh, A.B.; Himanshu, P. Collaborative robots for industrial tasks: A review. Mater. Today Proc. 2022, 52, 500–504.

- Montini, E.; Cutrona, V.; Dell’Oca, S.; Landolfi, G.; Bettoni, A.; Rocco, P.; Carpanzano, E. A Framework for Human-aware Collaborative Robotics Systems Development. Procedia CIRP 2023, 120, 1083–1088.

- Salgado, K.K.; Dimitropoulos, N.; Michalos, G.; Makris, S. Suitability assessment method for safe robot tooling design in Human-Robot Collaborative applications. Procedia CIRP 2024, 128, 770–775.

- Wang, S.L.; Zhang, J.Q.; Wang, P.; Law, J.; Calinescu, R.; Mihaylova, L. A deep learning-enhanced Digital Twin framework for improving safety and reliability in human–robot collaborative manufacturing. Robot. Comput. Integr. Manuf. 2024, 85, 102608.

- Luca, C.; Emmanuel, F. A Safety 4.0 Approach for Collaborative Robotics in the Factories of the Future. Procedia Comput. Sci. 2023, 217, 1784–1793.

- Park, J.M.; Kim, T.; Gu, C.Y.; Kang, Y.; Cheong, J. Dynamic collision estimator for collaborative robots: A dynamic Bayesian network with Markov model for highly reliable collision detection. Robot. Comput. Integr. Manuf. 2024, 86, 102.

- Wu, Y.; Jia, X.H.; Li, T.J.; Liu, J.Y. A real-time collision avoidance method for redundant dual-arm robots in an open operational environment. Robot. Comput. Integr. Manuf. 2025, 92, 102.

- Xu, X.M.; Gan, Y.H.; Xu, C.; Dai, X.Z. Robot Collision Detection Based on Dynamic Model. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 6578–6582.

- Li, W.; Han, Y.; Wu, J.; Xiong, Z.H. Collision Detection of Robots Based on a Force/Torque Sensor at the Bedplate. IEEE ASME Trans. Mechatron. 2020, 25, 2565–2573.

- Svarny, P.; Rozlivek, J.; Rustler, L.; Sramek, M.; Deli, O.; Zillich, M.; Hoffmann, M. Effect of active and passive protective soft skins on collision forces in human–robot collaboration. Robot. Comput. Integr. Manuf. 2022, 78, 102363.

- Xu, T.; Tuo, H.; Fang, Q.Q.; Shan, D.B.; Jin, H.Z.; Fan, J.Z.; Zhu, Y.H.; Zhao, J. A novel collision detection method based on current residuals for robots without joint torque sensors: A case study on UR10 robot. Robot. Comput. Integr. Manuf. 2024, 89, 102777.

- Lv, H.; Liu, L.Y.; Gao, Y.M.; Zhao, S.; Yang, P.P.; Mu, Z.G. A compound planning algorithm considering both collision detection and obstacle avoidance for intelligent demolition robots. Rob. Auton. Syst. 2024, 181, 104781.

- Mu, Z.G.; Liu, L.Y.; Jia, L.H.; Zhang, L.Y.; Ding, N.; Wang, C.J. Intelligent demolition robot: Structural statics, collision detection, and dynamic control. Autom. Constr. 2022, 142, 104490.

- Sun, T.; Sun, J.R.; Lian, B.B.; Li, Q. Sensorless admittance control of 6-DoF parallel robot in human-robot collaborative assembly. Robot. Comput. Integr. Manuf. 2024, 88, 102742.

- Li, Z.J.; Ye, J.H.; Wu, H.B. A Virtual Sensor for Collision Detection and Distinction with Conventional Industrial Robots. Sensors 2019, 19, 2368.

- Zhou, C.B.; Xia, M.Y.; Xu, Z.B. A six dimensional dynamic force/moment measurement platform based on matrix sensors designed for large equipment. Sens. Actuators A Phys. 2023, 349, 114085.

- He, Z.X.; Liu, T. Design of a three-dimensional capacitor-based six-axis force sensor for human-robot interaction. Sens. Actuators A Phys. 2021, 331, 112939.

- Zhang, Z.J.; Chen, Y.P.; Zhang, D.L.; Xie, J.M.; Liu, M.Y. A six-dimensional traction force sensor used for human-robot collaboration. Mechatronics 2019, 57, 164–172.

- Li, Y.J.; Wang, G.C.; Zhang, J.; Jia, Z.Y. Dynamic characteristics of piezoelectric six-dimensional heavy force/moment sensor for large-load robotic manipulator. Measurement 2012, 45, 1114–1125.

- Pu, M.H.; Luo, C.X.; Wang, Y.Z.; Yang, K.Y.; Zhao, R.D.; Tian, L.X. A configuration design approach to the capacitive six-axis force/torque sensor utilizing the compliant parallel mechanism. Measurement 2024, 237, 115205.

- Sun, Y.J.; Liu, Y.W.; Zou, T.; Jing, M.H.; Liu, H. Design and optimization of a novel six-axis force/torque sensor for space robot. Measurement 2015, 65, 135–148.

- Li, Y.J.; Sun, B.Y.; Zhang, J.; Qian, M.; Jia, Z.Y. A novel parallel piezoelectric six-axis heavy force/torque sensor. Measurement 2008, 42, 730–736.

- Chen, D.F.; Song, A.G.; Li, A. Design and Calibration of a Six-axis Force/torque Sensor with Large Measurement Range Used for the Space Manipulator. Procedia Eng. 2015, 99, 1164–1170.

- Wang, Z.J.; Li, Z.X.; He, J.; Yao, J.T.; Zhao, Y.S. Optimal design and experiment research of a fully pre-stressed six-axis force/torque sensor. Measurement 2013, 46, 2013–2021.

- Templeman, J.O.; Sheil, B.B.; Sun, T. Multi-axis force sensors: A state-of-the-art review. Sens. Actuators A Phys. 2020, 304, 111772.

- Cai, D.J.; Yao, J.T.; Gao, W.H.; Zhang, H.Y.; Li, Z.Y. Design and analysis of parallel six-dimensional force sensor based on near-singular characteristics. Meas. Sci. Technol. 2024, 35, 045116.

- Park, J.Y.; Lee, J.Y.; Kim, S.H.; Kim, S.R. Optimal Design of Passive Gravity Compensation System for Articulated Robots. Trans. Korean Soc. Mech. Eng. A 2012, 36, 103–108.

- Duan, J.J.; Liu, Z.C.; Bin, Y.M.; Cui, K.K.; Dai, Z.D. Payload Identification and Gravity/Inertial Compensation for Six-Dimensional Force/Torque Sensor with a Fast and Robust Trajectory Design Approach. Sensors 2022, 22, 439.

- Wang, Z.J.; Lu, L.; Yan, W.K.; He, J.; Cui, B.Y.; Li, Z.X. Research on Collision Point Identification Based on Six-Axis Force/Torque Sensor. J. Sens. 2020, 2020, 881263.

- Konstantinos, K.; Haninger, K.; Gkrizis, C.; Dimitropoulos, N.; Kruger, J.; Michalos, G.; Makris, S. Collision detection for collaborative assembly operations on high-payload robots. Robot. Comput. Integr. Manuf. 2024, 87, 102708.

- Shi, W.; Xi, A.M.; Zhang, Y.B. Robot Dynamics Modeling and Simulation Based on Kane. Microcomput. Inf. 2008, 29, 222–223. (In Chinese)

- Chen, Z.G.; Ruan, X.G.; Li, Y. Dynamic Modeling of a Self-balancing Cubical Robot. J. Beijing Univ. Technol. 2018, 44, 376–381. (In Chinese)

- Zuo, B.; Liu, J.G.; Li, Y.M. Dynamic Modeling on the Parallel Active Vibration Isolation System of Space Station Science Experimental Rack. Mach. Des. Manuf. 2014, 3, 199–203. (In Chinese)

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).