Abstract

The Olfati-Saber flocking (OSF) algorithm is widely used in multi-agent flocking control because of its simplicity and effectiveness. However, in environments with multiple obstacles, this algorithm is prone to trapping agents in dynamic equilibrium, a key technical issue that must be addressed in multi-agent flocking control. To overcome this problem, we propose a dynamic equilibrium judgment rule and design a fuzzy flocking control (FFC) algorithm. In this algorithm, the expected velocity is divided into a fuzzy expected velocity and a projected expected velocity. The fuzzy expected velocity is designed to help the agent escape from dynamic equilibrium, and the projected expected velocity is designed to steer the agent around the obstacles. Meanwhile, the region within the sensing radius of the agent is divided into four subregions, and a nonnegative subsection function is designed to adjust the attractive/repulsive potentials in these subregions. In addition, a virtual leader is designed to guide the agents toward the group goal. Finally, the experimental results show that, with the proposed algorithm, the agents escape from dynamic equilibrium and bypass obstacles faster, and the minimum distance between the agents and surrounding objects remains greater than the minimum safe distance in complex environments.

1. Introduction

A flock is a group of many agents whose individual behaviors enable the system to achieve overall coordination [1,2,3]. To date, many studies have addressed the control of coordinated behaviors generated by agents, including flocking control [4,5], consensus control [6,7], and formation control [8,9].

Flocking control is the basis for investigating consensus control and formation control. Olfati-Saber [10] proposed a flocking control algorithm in which collisions between agents, and between agents and obstacles, are avoided by virtual attractive/repulsive potentials, velocity matching between agents is achieved by a velocity consensus term, and goal following is realized by virtual leaders. Flocking control has also been extensively investigated by numerous other researchers [11,12,13]. From the perspective of virtual leaders, current research on flocking control falls into three categories: every agent has virtual-leader information [14,15,16], only some agents have virtual-leader information [17,18], and agents receive information from multiple virtual leaders [19]. These studies have advanced virtual-leader-based multi-agent flocking control. However, it has been noted in [20,21,22] that some agents fail to follow the virtual leader in environments with multiple obstacles. The reason is that the virtual forces acting on these agents reach an equilibrium in which their acceleration and velocity are near zero; this state is referred to as dynamic equilibrium. Therefore, the virtual force problem of multi-agents in multiple-obstacle environments is a critical issue in flocking control research [23].

At present, the methods for solving the virtual force problem mainly include geometric filling methods [24,25] and intelligent decision-making methods [26,27,28]. Geometric filling methods generally assume that the agent can perceive global obstacle information. To change the virtual force acting on the agent among multiple obstacles so that it can follow the goal, non-convex obstacles are transformed into convex ones via geometric filling rules, and virtual agents are then generated on their surfaces. In intelligent decision-making methods, the agent is first trained in multiple-obstacle environments to learn obstacle avoidance rules. The obstacle information perceived by the agent is then matched against the trained model to produce the virtual force for obstacle avoidance and goal following. Overall, both types of methods enable multi-agents to escape from dynamic equilibrium. However, the geometric filling method requires global obstacle information, which limits its practical application, and the intelligent decision-making method involves a complex training structure whose real-time performance requires further improvement. In addition, both methods suffer from the multi-attribute decision-making problem.

Fuzzy control is an effective method for solving the multi-attribute decision-making problem [29,30]. In [31], the attractive/repulsive function is designed using fuzzy control, yielding a multi-agent flocking control algorithm with obstacle avoidance and low computational complexity. However, an agent may still become trapped in dynamic equilibrium when it perceives multiple obstacles. Motivated by these studies, this paper calculates a fuzzy expected velocity from established membership functions and fuzzy rules, which enables the agent to escape from dynamic equilibrium. The contributions of this paper are summarized as follows:

- (1) A dynamic equilibrium judgment rule is proposed to determine whether the agent is trapped in dynamic equilibrium. If the agent is trapped, the fuzzy expected velocity is calculated by the established membership functions and fuzzy rules; otherwise, the projected expected velocity is obtained by the obstacle projection method.

- (2) The region within the sensing radius of the agent is divided into four subregions, and a nonnegative subsection function is designed to adjust the attractive/repulsive potentials in these subregions.

- (3) A fuzzy flocking control (FFC) algorithm is developed for multi-agents trapped in dynamic equilibrium among multiple obstacles. In this algorithm, the interaction term between agents is reconstructed using the fuzzy expected velocity, the projected expected velocity, and the nonnegative subsection function.

The remainder of this paper is organized as follows. Section 2 introduces the preliminary knowledge. Section 3 presents the FFC algorithm. Section 4 analyzes the properties of the FFC algorithm. Section 5 reports the numerical experiments and discussion. Section 6 concludes the paper.

2. Preliminaries

2.1. Agent-Based Representation

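Consider a group of N α-agents moving in the n-dimensional Euclidean space $\mathbb{R}^n$. Denote $q_i, p_i, u_i \in \mathbb{R}^n$ as the position, velocity, and control input (or acceleration) acting on α-agent i, $i = 1, \dots, N$, at the current time. Then, the second-order dynamic model of α-agent i can be formulated as:

$$\dot{q}_i = p_i, \qquad \dot{p}_i = u_i, \tag{1}$$

where $v_i^{\max}$ represents the upper velocity limit of α-agent i: if $\|p_i\| > v_i^{\max}$, the velocity is rescaled to $v_i^{\max} p_i / \|p_i\|$; otherwise, it is left unchanged. Here, $\dot{(\cdot)}$ denotes the first-order time derivative of a vector, and $\|\cdot\|$ denotes the Euclidean norm. Furthermore, a virtual γ-agent is introduced to achieve group goal following (e.g., in multi-drone precision firefighting and multi-robot rescue). Denote $q_\gamma, p_\gamma, u_\gamma \in \mathbb{R}^n$ as the position, velocity, and control input acting on the γ-agent at the current time. Then, the second-order dynamic model of the γ-agent can be described as:

$$\dot{q}_\gamma = p_\gamma, \qquad \dot{p}_\gamma = u_\gamma. \tag{2}$$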
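A minimal discrete-time sketch of model (1) is shown below. It is not the authors' MATLAB implementation: it assumes a semi-implicit Euler discretization with step `dt`, and it assumes the velocity limit acts by rescaling the velocity to norm `v_max` whenever that limit is exceeded. The function names `saturate` and `euler_step` are hypothetical.

```python
import math


def saturate(p, v_max):
    """Rescale velocity p so that its Euclidean norm does not exceed v_max."""
    speed = math.hypot(*p)
    if speed > v_max:
        return [v_max * c / speed for c in p]
    return list(p)


def euler_step(q, p, u, dt, v_max):
    """Semi-implicit Euler step of dq/dt = p, dp/dt = u with a velocity limit.

    The velocity is updated and saturated first; the position then moves
    with the saturated velocity."""
    p_next = saturate([pi + dt * ui for pi, ui in zip(p, u)], v_max)
    q_next = [qi + dt * pi for qi, pi in zip(q, p_next)]
    return q_next, p_next
```

For example, `euler_step([0, 0], [3, 4], [0, 0], 0.1, 2.0)` rescales the speed from 5 to 2, giving a velocity of (1.2, 1.6) and a new position of (0.12, 0.16).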

2.2. Proximity Net of α-Agents and β-Agents

The flock composed of N α-agents can be described by an undirected graph whose node set is the set of α-agents and whose edge set depends on the configuration of all α-agents. To avoid collisions between α-agents and obstacles, a number of virtual β-agents are generated at the boundary points of obstacles. The β-agents and their links to the α-agents can be described by a directed bipartite graph consisting of the set of β-agent nodes and the corresponding set of edges. Then, the proximity net of α-agents and β-agents can be described by

Let r be the sensing radius. The set of neighboring α-agents of α-agent i consists of the α-agents within distance r of α-agent i, and the set of neighboring β-agents of α-agent i consists of the β-agents whose positions lie within distance r of α-agent i.
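The neighbor sets can be computed directly from pairwise distances. The sketch below assumes a strict inequality against the single sensing radius r for both neighbor types and represents each β-agent by its projected position on the obstacle boundary; the helper names are illustrative.

```python
import math


def alpha_neighbors(positions, i, r):
    """Indices j != i of alpha-agents strictly closer than r to alpha-agent i."""
    q_i = positions[i]
    return [j for j, q_j in enumerate(positions) if j != i and math.dist(q_i, q_j) < r]


def beta_neighbors(q_i, beta_positions, r):
    """Indices k of beta-agents (projected obstacle points) strictly closer than r to q_i."""
    return [k for k, q_k in enumerate(beta_positions) if math.dist(q_i, q_k) < r]
```

The direct loop costs O(N) per agent; for large flocks a spatial grid or k-d tree would be the usual replacement.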

2.3. Collective Potential Functions

A lattice-type structure can be used to capture the geometry among α-agents and between α-agents and β-agents. In this structure, each α-agent is at the same desired distance from all of its neighboring α-agents, and likewise at the same desired distance from all of its neighboring β-agents. Thus, the positions of α-agents and β-agents are represented by the following constraints:

To obtain the solutions of these constraints, two smooth collective potential functions must be constructed whose local minima correspond to the solutions of Constraints (6) and (7), respectively. The construction procedure is described below:

First, the bump function is introduced as

Second, the pairwise attractive/repulsive potential between α-agents and the pairwise attractive/repulsive potential between an α-agent and a β-agent are defined as

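Note that the interaction between two agents has a finite cut-off at the sensing range, because the bump function vanishes for arguments of 1 and above. In addition, the parameters must be chosen to guarantee that both pairwise potentials have a minimum at the desired distance. Finally, the collective potential functions are defined by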
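For reference, the sketch below implements the standard σ-norm, bump function, and action function of the OSF algorithm [10], on which the potentials above build. It is an assumption that this paper uses exactly these forms: Formulas (8)–(10) are not reproduced here, and in standard OSF the pairwise potential stays bounded at zero distance, whereas the proof of Theorem 2 relies on potentials that grow without bound at collision, so the paper's potentials may differ in that respect. The parameter defaults (ε = 0.1, h = 0.2, a = b = 5, d = 7, r = 1.2d) are illustrative only.

```python
import math


def sigma_norm(z, eps=0.1):
    """Sigma-norm of a distance z >= 0: (sqrt(1 + eps*z^2) - 1) / eps."""
    return (math.sqrt(1.0 + eps * z * z) - 1.0) / eps


def bump(z, h=0.2):
    """Bump rho_h: 1 on [0, h), cosine roll-off on [h, 1], 0 beyond 1."""
    if 0.0 <= z < h:
        return 1.0
    if h <= z <= 1.0:
        return 0.5 * (1.0 + math.cos(math.pi * (z - h) / (1.0 - h)))
    return 0.0


def phi(z, a=5.0, b=5.0):
    """Uneven sigmoid; with 0 < a <= b and c = |a-b|/sqrt(4ab), phi(0) = 0."""
    c = abs(a - b) / math.sqrt(4.0 * a * b)
    s = (z + c) / math.sqrt(1.0 + (z + c) ** 2)
    return 0.5 * ((a + b) * s + (a - b))


def phi_alpha(z, r=8.4, d=7.0, eps=0.1, h=0.2, a=5.0, b=5.0):
    """Action function at sigma-distance z: repulsive below d, attractive up to r, zero beyond r."""
    r_a, d_a = sigma_norm(r, eps), sigma_norm(d, eps)
    return bump(z / r_a, h) * phi(z - d_a, a, b)
```

The condition φ(0) = 0 is what places the minimum of the pairwise potential exactly at the desired distance d.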

It can be observed that the two collective potential functions are minimized at configurations satisfying Constraints (6) and (7), respectively.

2.4. A Previous Algorithm for Evaluating the Control Input

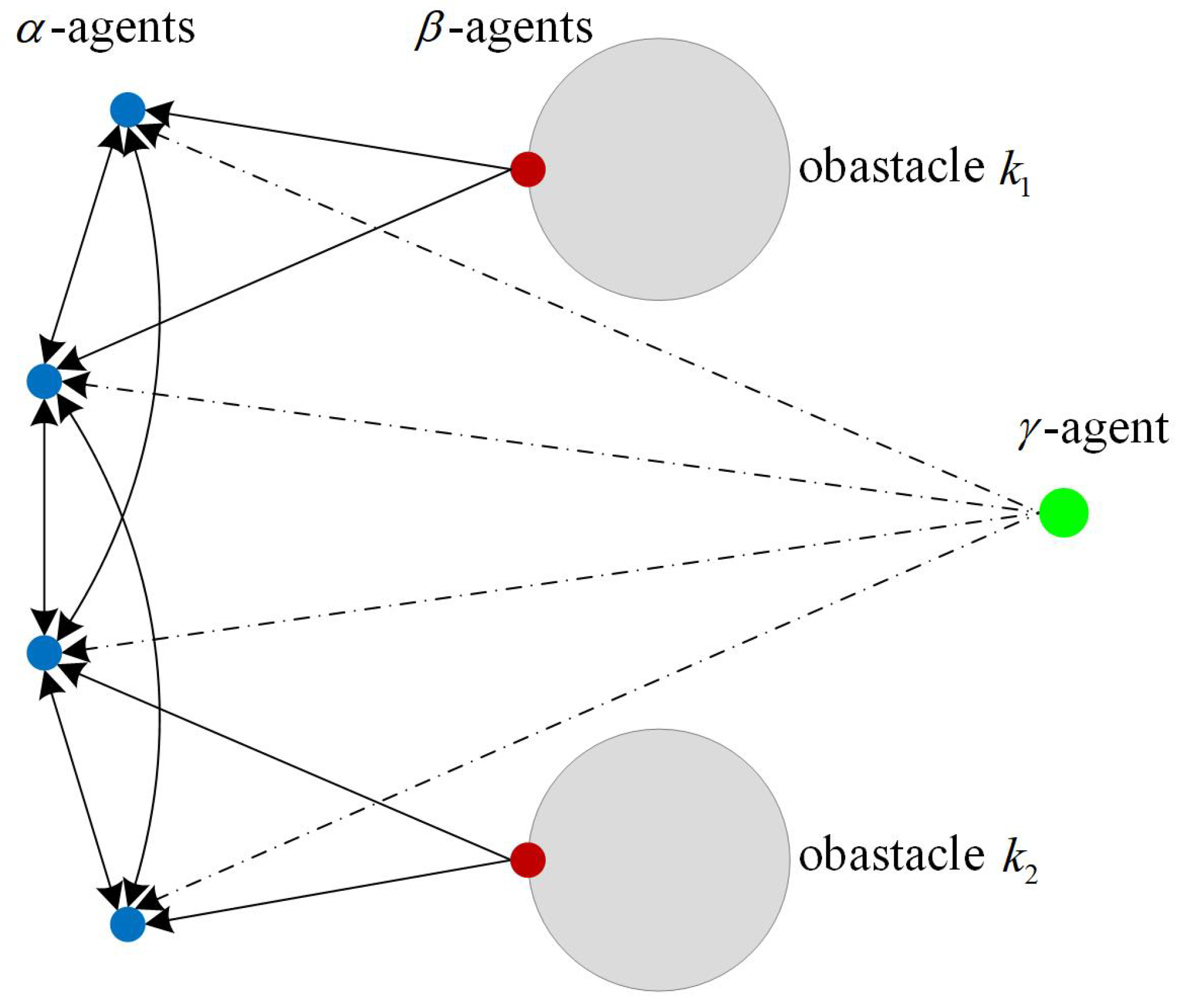

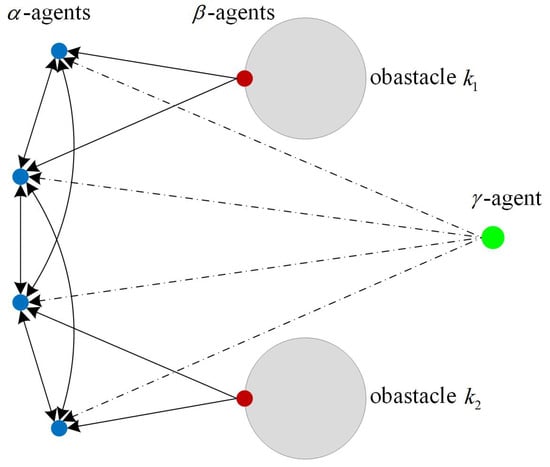

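In the Olfati-Saber flocking (OSF) algorithm [10], the interaction framework among α-agents, the γ-agent, and obstacles is shown in Figure 1. The control input of α-agent i is evaluated by

$$u_i = u_i^{\alpha} + u_i^{\beta} + u_i^{\gamma}, \tag{13}$$

where $u_i^{\alpha}$ is the interaction term between α-agent i and its neighboring α-agents, $u_i^{\beta}$ is the interaction term between α-agent i and its neighboring β-agents, and $u_i^{\gamma}$ is the interaction term between α-agent i and the γ-agent. These interaction terms can be evaluated independently as follows: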

Figure 1.

The interaction framework among α-agents, the γ-agent, and obstacles.

2.4.1. Evaluate the Interaction Term with β-Agents

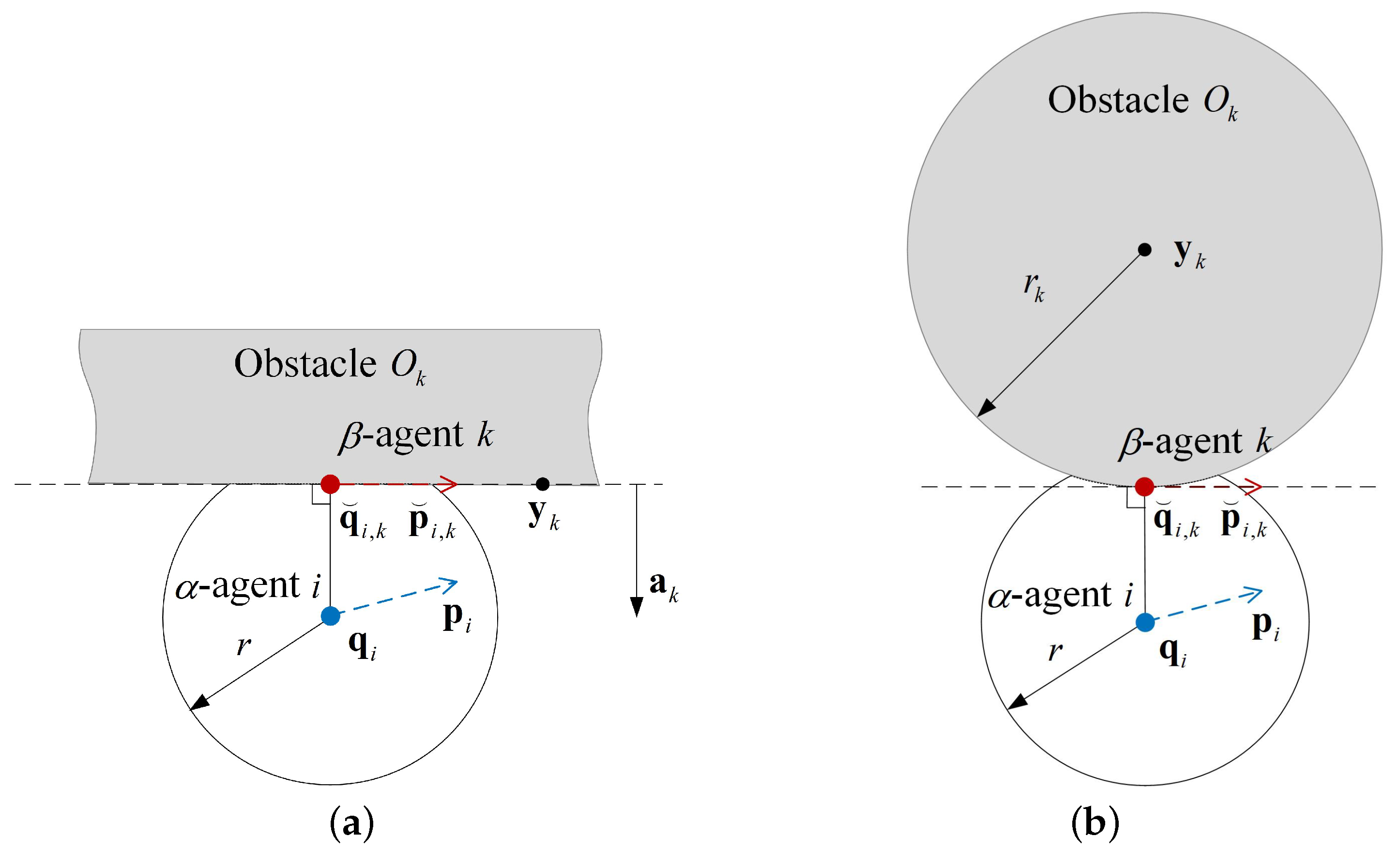

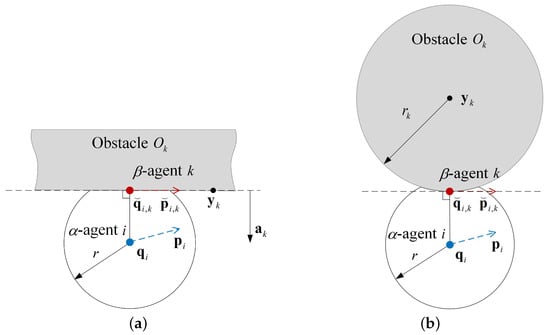

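When α-agent i perceives obstacle k within its sensing radius r, a virtual β-agent k is generated at the projection point of α-agent i on the boundary of obstacle k. The position and velocity of β-agent k are then determined using the obstacle projection method: the position of β-agent k is the point on the obstacle boundary closest to α-agent i, and its velocity is the projection of the velocity of α-agent i onto the tangent direction of the obstacle surface, as illustrated in Figure 2. Let $\hat{q}_{i,k}$ and $\hat{p}_{i,k}$ be the position and velocity of β-agent k. For an infinite wall obstacle, the following formulas hold:

$$P = I - a_k a_k^{\top}, \qquad \hat{q}_{i,k} = P q_i + (I - P)\, y_k, \qquad \hat{p}_{i,k} = P p_i, \tag{14}$$

Figure 2.

The position and velocity of β-agents in the Olfati-Saber flocking (OSF) algorithm. (a) Infinite wall obstacle. (b) Spherical obstacle.

where P is the projection matrix, $a_k$ is the unit normal vector of the wall, $y_k$ is a point through which the wall boundary passes, and I is the identity matrix. For a spherical obstacle, the following formulas are defined:

$$\mu = \frac{R_k}{\|q_i - y_k\|}, \quad a_k = \frac{q_i - y_k}{\|q_i - y_k\|}, \quad P = I - a_k a_k^{\top}, \quad \hat{q}_{i,k} = \mu q_i + (1 - \mu)\, y_k, \quad \hat{p}_{i,k} = \mu P p_i, \tag{15}$$

where $y_k$ is the center of the spherical obstacle, $a_k$ is the unit vector pointing from the center toward α-agent i, and $R_k$ is the radius of the spherical obstacle. With the position and velocity of β-agent k, the interaction term with β-agents is constructed as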

where the two coefficients are feedback gains, the first term is built from the gradient of the pairwise attractive/repulsive potential between α-agent i and β-agent k at the position of β-agent k, and the second term is weighted by the heterogeneous adjacency between α-agent i and β-agent k.
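The obstacle projection method can be sketched in two dimensions as follows. The sketch assumes the standard OSF forms from [10] (P = I − a aᵀ for a wall; μ = R/‖q − y‖ for a sphere) and that α-agent i lies outside the obstacle; the function names are hypothetical.

```python
import math


def project_wall(q, p, a, y):
    """beta-agent induced by an infinite wall (a line in 2-D).

    a is the unit normal of the wall and y any point on it. Equivalent to
    P = I - a a^T, q_hat = P q + (I - P) y, p_hat = P p."""
    dq = (q[0] - y[0]) * a[0] + (q[1] - y[1]) * a[1]  # signed distance of q from the wall
    q_hat = (q[0] - dq * a[0], q[1] - dq * a[1])     # foot of the perpendicular
    dp = p[0] * a[0] + p[1] * a[1]                    # normal velocity component
    p_hat = (p[0] - dp * a[0], p[1] - dp * a[1])     # tangential velocity component
    return q_hat, p_hat


def project_sphere(q, p, y, R):
    """beta-agent induced by a spherical obstacle (a disk in 2-D), center y, radius R.

    mu = R / ||q - y||, a = (q - y) / ||q - y||, P = I - a a^T,
    q_hat = mu q + (1 - mu) y, p_hat = mu P p. Assumes q lies outside the obstacle."""
    dist = math.dist(q, y)
    mu = R / dist
    a = ((q[0] - y[0]) / dist, (q[1] - y[1]) / dist)
    q_hat = (mu * q[0] + (1 - mu) * y[0], mu * q[1] + (1 - mu) * y[1])
    dp = p[0] * a[0] + p[1] * a[1]
    p_hat = (mu * (p[0] - dp * a[0]), mu * (p[1] - dp * a[1]))
    return q_hat, p_hat
```

In both cases the β-agent's velocity has no component along the obstacle normal, so the β-agent slides along the obstacle surface.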

2.4.2. Evaluate the Interaction Term Between α-Agents

The interaction term between α-agents is constructed as

where the two coefficients are feedback gains, the first term is built from the gradient of the pairwise attractive/repulsive potential between α-agents i and j at the position of α-agent j, and the second term is weighted by the homogeneous adjacency between α-agents i and j.

Here, the first term in Formulas (16) and (18) is referred to as the gradient-based term, and the second term as the consensus term.
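An OSF-style sketch of the gradient-based and consensus terms for one α-agent in two dimensions is given below. It assumes the σ-gradient direction n_ij = (q_j − q_i)/√(1 + ε‖q_j − q_i‖²) and the bump-function adjacency a_ij = ρ_h(‖q_j − q_i‖_σ / r_α) from [10]; the action function is passed in as a parameter because the pairwise potential used in this paper is not reproduced here. `EPS`, `H`, and the function names are illustrative assumptions.

```python
import math

EPS, H = 0.1, 0.2  # illustrative sigma-norm and bump parameters (assumed)


def sigma_norm(dx, dy):
    """Sigma-norm of the 2-D vector (dx, dy)."""
    return (math.sqrt(1.0 + EPS * (dx * dx + dy * dy)) - 1.0) / EPS


def bump(z):
    """Bump rho_h on z >= 0: 1 below H, cosine roll-off up to 1, then 0."""
    if z < H:
        return 1.0
    if z <= 1.0:
        return 0.5 * (1.0 + math.cos(math.pi * (z - H) / (1.0 - H)))
    return 0.0


def alpha_term(i, q, p, action, r_alpha, c1=1.0, c2=1.0):
    """Gradient-based term plus consensus term for alpha-agent i (OSF-style, 2-D).

    action(z) is the action function at sigma-distance z; r_alpha is the
    interaction range expressed as a sigma-norm."""
    ux = uy = 0.0
    for j in range(len(q)):
        if j == i:
            continue
        dx, dy = q[j][0] - q[i][0], q[j][1] - q[i][1]
        z = sigma_norm(dx, dy)
        if z >= r_alpha:  # outside the interaction range: not a neighbor
            continue
        scale = math.sqrt(1.0 + EPS * (dx * dx + dy * dy))
        nx, ny = dx / scale, dy / scale  # sigma-gradient direction n_ij
        a_ij = bump(z / r_alpha)         # spatial adjacency a_ij
        g = action(z)
        ux += c1 * g * nx + c2 * a_ij * (p[j][0] - p[i][0])
        uy += c1 * g * ny + c2 * a_ij * (p[j][1] - p[i][1])
    return ux, uy
```

With a zero action function only the consensus term remains, which pulls the velocity of agent i toward those of its neighbors.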

2.4.3. Evaluate the Interaction Term with the γ-Agent

The interaction term with the γ-agent is constructed as

where the two coefficients are feedback gains.
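The γ-agent interaction term in [10] is a proportional-derivative navigational feedback toward the virtual leader. The sketch below assumes that form with gains `c1` and `c2`; because a common variant passes the position error through the bounded map σ1(z) = z/√(1 + ‖z‖²), that option is exposed as a flag. Names and defaults are illustrative.

```python
import math


def gamma_term(q, p, q_gamma, p_gamma, c1=1.0, c2=1.0, bounded=False):
    """Navigational feedback toward the gamma-agent (PD form).

    If bounded is True, the position error is passed through
    sigma_1(z) = z / sqrt(1 + ||z||^2), which keeps its norm below 1."""
    ex = [qi - gi for qi, gi in zip(q, q_gamma)]
    if bounded:
        s = math.sqrt(1.0 + sum(e * e for e in ex))
        ex = [e / s for e in ex]
    return [-c1 * e - c2 * (pi - pg) for e, pi, pg in zip(ex, p, p_gamma)]
```

The bounded variant limits the pull of a distant leader, which helps keep the velocity of α-agents away from saturation.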

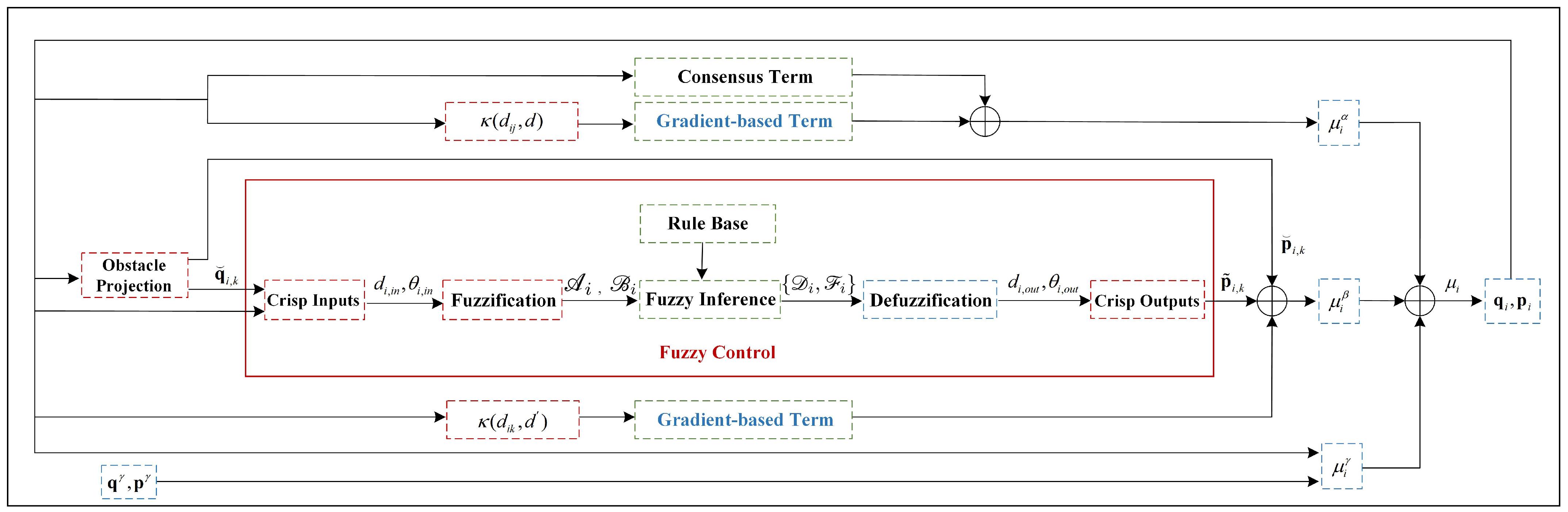

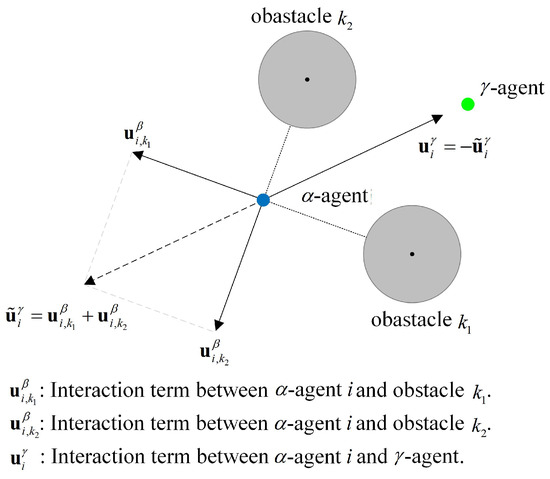

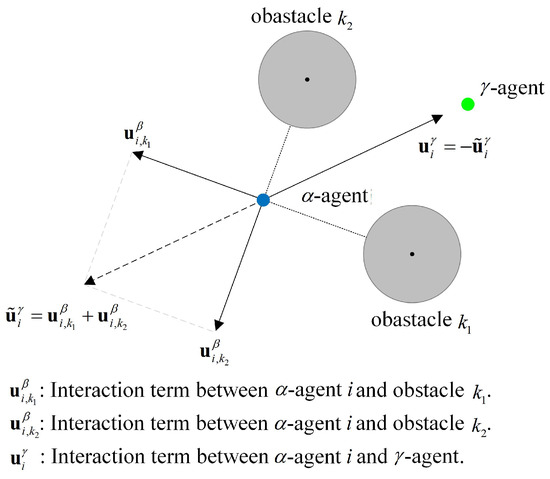

Definition 1.

α-agent i is said to be trapped in dynamic equilibrium when it perceives obstacles that prevent it from following the γ-agent and both its acceleration and velocity remain near zero for a period of time. This scenario is shown in Figure 3: the virtual forces exerted on α-agent i by the γ-agent and the two obstacles reach equilibrium.

Figure 3.

The scenario of α-agent i being trapped in dynamic equilibrium.

Problem 1.

In this paper, each α-agent must avoid collisions with β-agents and other α-agents while following the γ-agent. Therefore, each α-agent evaluates the interaction terms (16), (18), and (20). Under the influence of these interaction terms, α-agent i is considered to be in dynamic equilibrium when it has little or no displacement over time, that is, when its displacement over the last m steps, each of step time Δt, is smaller than a small constant λ (Condition (21)).

In dynamic equilibrium, α-agent i remains stationary or oscillates; hence, it cannot bypass the obstacles and follow the group goal. Owing to its complexity, the problem of multi-agents being trapped in dynamic equilibrium is difficult to solve.
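Condition (21) can be monitored online with a short position history. The sketch assumes the condition compares the current position with the position m steps earlier against the threshold λ (the exact expression is not reproduced here), and `EquilibriumDetector` is a hypothetical name. An agent that oscillates with a period dividing m also triggers the test, which is consistent with the stationary-or-oscillating behavior described above.

```python
import math
from collections import deque


class EquilibriumDetector:
    """Flags dynamic equilibrium when the displacement over the last m steps is below lam.

    Assumed form of Condition (21): ||q_i(t) - q_i(t - m*dt)|| < lam."""

    def __init__(self, m, lam):
        self.lam = lam
        self.history = deque(maxlen=m + 1)  # positions at t - m*dt, ..., t

    def update(self, q):
        """Record the newest position and report whether the agent is trapped."""
        self.history.append(tuple(q))
        if len(self.history) < self.history.maxlen:
            return False  # not enough samples yet
        return math.dist(self.history[0], self.history[-1]) < self.lam
```

Each α-agent would hold its own detector and call `update` once per control step.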

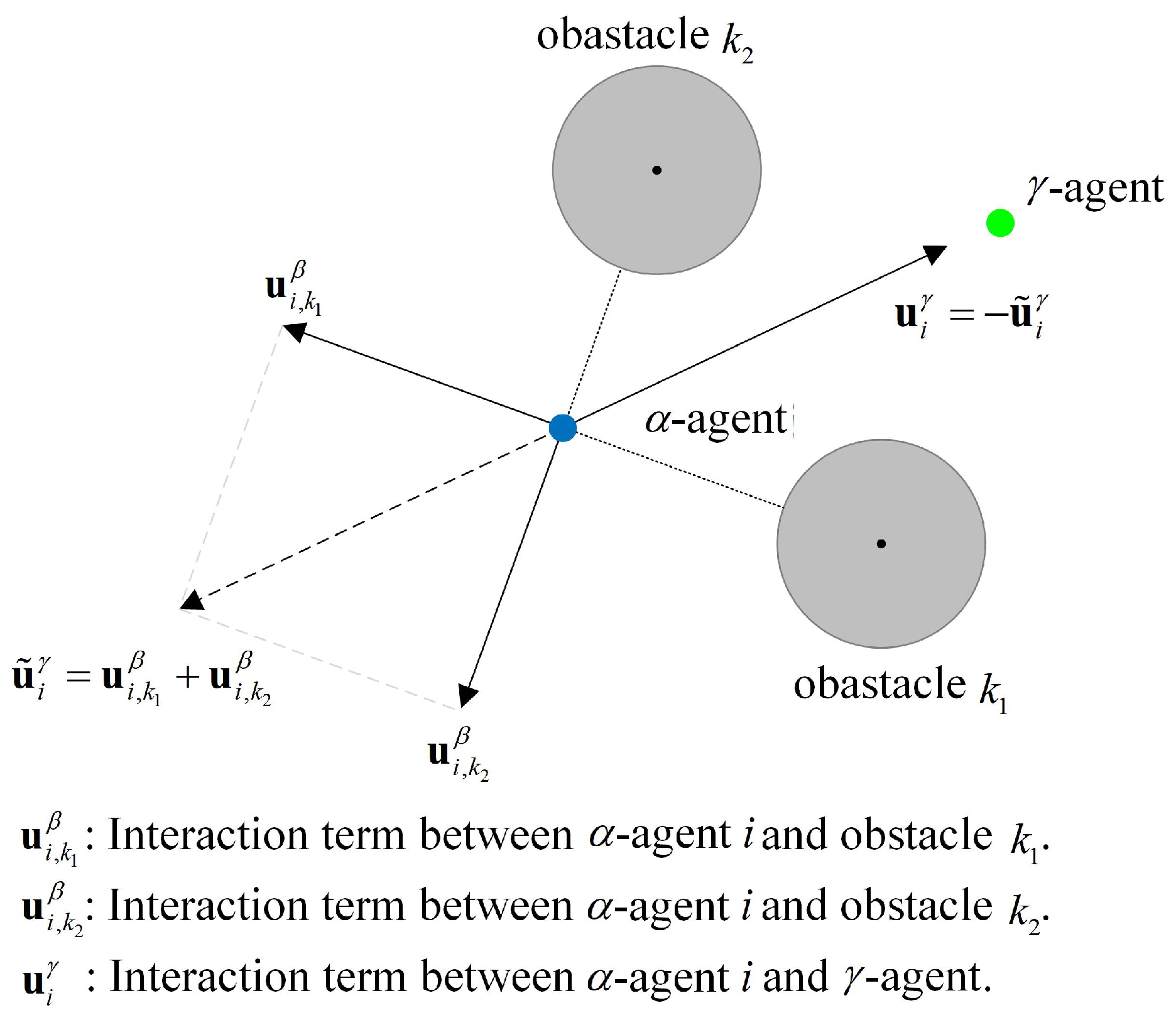

3. Proposed Algorithm

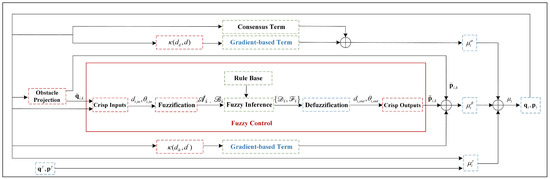

To solve Problem 1, a fuzzy flocking control (FFC) algorithm is proposed. In this algorithm, the three interaction terms are reconstructed so that α-agents can escape from dynamic equilibrium, bypass obstacles, and avoid collisions. The structure of this algorithm is shown in Figure 4, and the details are described below:

Figure 4.

Structure of fuzzy flocking control (FFC) algorithm.

3.1. Evaluate the Interaction Term with β-Agents

The interaction term with β-agents is reconstructed by calculating the fuzzy expected velocity of α-agent i for β-agent k and designing a nonnegative subsection function. When α-agent i is trapped in dynamic equilibrium, the fuzzy expected velocity of α-agent i for β-agent k is obtained by the fuzzy control method, which enables α-agent i to escape from dynamic equilibrium. When α-agent i is not trapped, the projected expected velocity of α-agent i for β-agent k is obtained by Formula (14) or (15), which enables α-agent i to bypass the obstacles. Meanwhile, the region within the sensing radius of α-agent i is divided into four subregions, and a nonnegative subsection function is designed to adjust the attractive/repulsive potentials in these subregions, which reduces the possibility of collisions between α-agents and obstacles.

3.1.1. Fuzzy Expected Velocity Calculated by Fuzzy Control

When α-agent i perceives obstacles that prevent it from following the γ-agent, it checks whether its position satisfies Condition (21). If Condition (21) holds, α-agent i is trapped in dynamic equilibrium, and the fuzzy expected velocity of α-agent i for β-agent k is calculated by the fuzzy control method. The core idea of this method is to encode experience-based knowledge in the form of fuzzy rules. First, crisp inputs with precise values are converted into fuzzy variable sets, which is referred to as fuzzification. Then, these fuzzy variable sets are transformed into new fuzzy variable sets by fuzzy inference based on the fuzzy rules. Finally, the new fuzzy variable sets are converted back into crisp outputs, which is referred to as defuzzification. The details of this method are as follows:

Crisp inputs: The fuzzy expected velocity of α-agent i for β-agent k equals the displacement from the current position of α-agent i to its expected position at the next time step, divided by the step time Δt. Thus, determining the expected position of α-agent i is crucial for obtaining the fuzzy expected velocity. Since the expected position of α-agent i can be calculated from a phase and a distance, the crisp inputs to the fuzzy controller are defined as a phase and a distance. To simplify the handling of phases, the reference coordinate system is rotated into the agent coordinate system when α-agent i perceives the obstacles. In the agent coordinate system, the origin is the position of α-agent i, and the x-axis points toward the γ-agent. Next, the relative phase between α-agent i and each obstacle boundary point k is obtained in the agent coordinate system. These relative phases form the relative phase set of α-agent i. The numbers of positive and negative elements in this set are counted, and the obstacle boundary points corresponding to the maximum and minimum phases in the set are identified. Depending on how the numbers of positive and negative elements compare, the input relative phase and the input distance are taken from either the maximum-phase or the minimum-phase boundary point. The input relative phase and the input distance are the crisp inputs to the fuzzy controller.
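The phase computation described above can be sketched in two dimensions as follows. The sketch wraps phases with `atan2` into the interval [−π, π] and returns the counts and the indices of the extreme-phase points; the rule that chooses between the maximum- and minimum-phase points is not reproduced here, so that choice is left to the caller. The function name is hypothetical.

```python
import math


def relative_phases(q_i, q_gamma, boundary_points):
    """Phases of obstacle boundary points in the agent frame (x-axis toward the gamma-agent).

    Returns the wrapped phases, the counts of positive and negative phases,
    and the indices of the maximum- and minimum-phase points.
    Assumes at least one boundary point."""
    heading = math.atan2(q_gamma[1] - q_i[1], q_gamma[0] - q_i[0])
    phases = []
    for bx, by in boundary_points:
        theta = math.atan2(by - q_i[1], bx - q_i[0]) - heading  # rotate into agent frame
        theta = math.atan2(math.sin(theta), math.cos(theta))    # wrap to [-pi, pi]
        phases.append(theta)
    n_pos = sum(1 for t in phases if t > 0)
    n_neg = sum(1 for t in phases if t < 0)
    k_max = max(range(len(phases)), key=phases.__getitem__)
    k_min = min(range(len(phases)), key=phases.__getitem__)
    return phases, n_pos, n_neg, k_max, k_min
```

A positive phase means the boundary point lies to the left of the line from α-agent i to the γ-agent, and a negative phase means it lies to the right.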

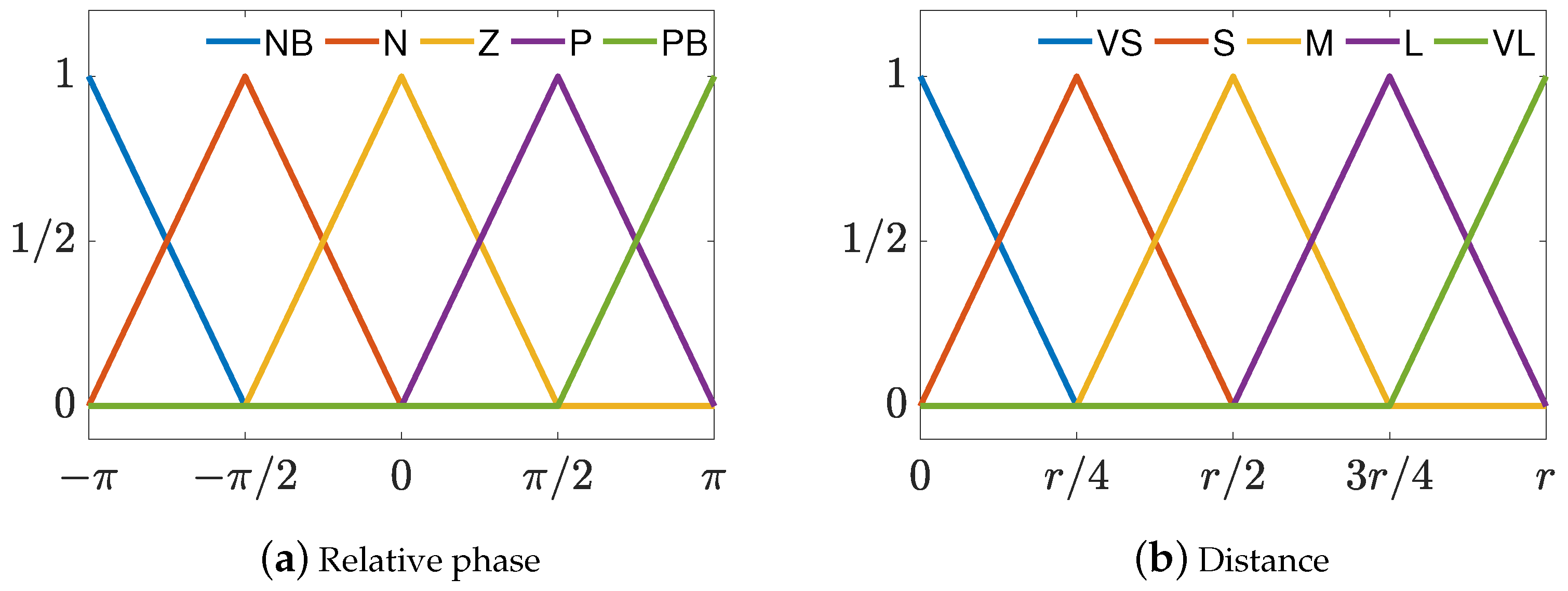

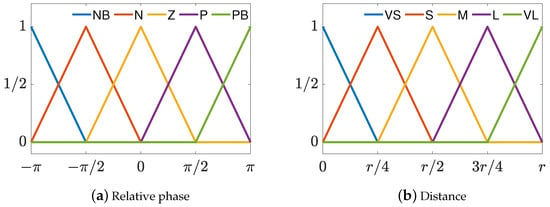

Fuzzification: The fuzzy variable set of the input relative phase consists of five linguistic variables, namely negative big, negative, zero, positive, and positive big. Likewise, the fuzzy variable set of the input distance consists of very short, short, medium, long, and very long. The triangular membership functions shown in Figure 5a,b are used to obtain the membership degree of each linguistic variable in these two fuzzy variable sets, respectively. Thus, the input relative phase and the input distance are converted into the input fuzzy variable sets, which are given by

Figure 5.

Two membership functions of fuzzy variables.
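The fuzzification step can be sketched with triangular membership functions. Figure 5 is not reproduced here, so the breakpoints below are assumptions: five evenly spaced triangles over [−π, π] for the phase and over [0, r] for the distance, with shoulder sets at both ends. The label abbreviations are likewise hypothetical.

```python
import math

PHASE_LABELS = ("NB", "N", "Z", "P", "PB")  # hypothetical abbreviations
DIST_LABELS = ("VS", "S", "M", "L", "VL")
PHASE_RANGE = (-math.pi, math.pi)           # assumed universe of the input phase


def trimf(x, a, b, c):
    """Triangular membership with feet a, c and peak b (a <= b <= c); a == b or b == c gives a shoulder."""
    if x < a or x > c:
        return 0.0
    if x == b:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def uniform_partition(lo, hi, labels):
    """Evenly spaced triangles over [lo, hi]; the end sets are shoulders (assumed layout)."""
    step = (hi - lo) / (len(labels) - 1)
    peaks = [lo + k * step for k in range(len(labels))]
    return {lab: (max(lo, pk - step), pk, min(hi, pk + step)) for lab, pk in zip(labels, peaks)}


def fuzzify(x, partition):
    """Membership degree of the crisp value x in every linguistic variable."""
    return {lab: trimf(x, *abc) for lab, abc in partition.items()}
```

For instance, with an assumed sensing radius of 8, an input distance of 3 belongs to "short" and "medium" with degree 0.5 each.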

Fuzzy inference: With the input fuzzy variable sets, fuzzy inference can be performed. The fuzzy inference procedure uses fuzzy rules and the Mamdani algorithm [32] to map the input fuzzy variable sets to the output fuzzy variable sets. Based on experience and intuition, the rule base is defined in Table 1, whose outputs are the fuzzy variable sets of the output relative phase and the output distance. For example, the first fuzzy rule in Table 1 is

where the two antecedents are fuzzy variables of the input relative phase and the input distance, and the two consequents are fuzzy variables of the output relative phase and the output distance. The Mamdani algorithm consists of two steps: (i) the firing degree of the lth fuzzy rule is calculated by

where l indexes the fuzzy rules in the rule base and ∧ denotes the “min” operation; and (ii) the degree of the lth fuzzy rule in the output fuzzy subset is given by

Then, the output fuzzy variable sets of the output relative phase and the output distance are synthesized over all fuzzy rules by

where ∨ denotes the “max” operation.
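A minimal sketch of Mamdani inference (min for the firing degree, min implication, max aggregation) on a sampled output universe follows. Because Table 1 is not reproduced here, the rule list is supplied by the caller, and each rule carries the consequent for one output variable, so the function would be called once for the output phase and once for the output distance. All names and the example rules are hypothetical.

```python
def trimf(x, a, b, c):
    """Triangular membership with feet a, c and peak b (a <= b <= c)."""
    if x < a or x > c:
        return 0.0
    if x == b:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def mamdani(mu_phase, mu_dist, rules, out_sets, universe):
    """Aggregated output membership sampled at each point of `universe`.

    mu_phase, mu_dist: dicts label -> membership degree of the crisp inputs.
    rules: list of (phase_label, dist_label, out_label).
    out_sets: dict out_label -> (a, b, c) triangle on the output universe."""
    agg = [0.0] * len(universe)
    for ph, di, out in rules:
        w = min(mu_phase.get(ph, 0.0), mu_dist.get(di, 0.0))  # firing degree (min)
        if w == 0.0:
            continue
        for n, y in enumerate(universe):
            clipped = min(w, trimf(y, *out_sets[out]))  # implication (min)
            agg[n] = max(agg[n], clipped)               # aggregation (max)
    return agg
```

With inputs `{"P": 0.6, "Z": 0.4}` and `{"S": 1.0}`, the hypothetical rules `[("P", "S", "PB"), ("Z", "S", "P")]`, and output triangles `P = (0, 1, 2)` and `PB = (1, 2, 3)` sampled at 0, 1, 2, 3, the aggregated set is `[0.0, 0.4, 0.6, 0.0]`.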

Table 1.

The rule base for fuzzy control.

Defuzzification: The output fuzzy variable sets of the output relative phase and the output distance are converted into a series of subregions using the corresponding membership functions. For the output relative phase, each subregion g is represented by the phase at its center; similarly, for the output distance, each subregion g is represented by the distance at its center. The output relative phase and the output distance are then obtained by calculating the center of gravity as follows:
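The center-of-gravity step reduces to a weighted average of the subregion centers. The sketch below assumes that the aggregated degree of each subregion has already been computed (for instance, by sampling the aggregated output set at the subregion centers); the function name is hypothetical.

```python
def center_of_gravity(centers, degrees):
    """Discrete centroid sum(mu_g * x_g) / sum(mu_g); returns None if no rule fired."""
    total = sum(degrees)
    if total == 0.0:
        return None
    return sum(x * m for x, m in zip(centers, degrees)) / total
```

For centers 0, 1, 2, 3 with degrees 0, 0.4, 0.6, 0, the crisp output is (0.4 + 1.2)/1.0 = 1.6. Returning `None` makes the no-rule-fired case explicit instead of dividing by zero.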

Crisp outputs: The expected position of α-agent i is calculated by

where

and the two angles are the relative azimuth and the relative elevation, and ⊙ denotes the Hadamard product. Note that the relative elevation is zero when α-agent i moves in two-dimensional Euclidean space. Thus, the fuzzy expected velocity of α-agent i for β-agent k is calculated by

Substituting Formula (29) into Formula (34), we have
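In two dimensions (zero relative elevation), the crisp outputs can be turned into a fuzzy expected velocity as sketched below. The sketch assumes that the output phase is measured in the agent coordinate system, so it is rotated back by the heading toward the γ-agent, and it uses plain trigonometry in place of the azimuth/elevation and Hadamard-product notation; the function name is hypothetical.

```python
import math


def fuzzy_expected_velocity(q_i, q_gamma, theta_out, d_out, dt):
    """Expected position d_out away from q_i at agent-frame phase theta_out,
    mapped back to the reference frame; velocity = displacement / dt (2-D)."""
    heading = math.atan2(q_gamma[1] - q_i[1], q_gamma[0] - q_i[0])  # agent-frame x-axis
    angle = heading + theta_out
    q_exp = (q_i[0] + d_out * math.cos(angle), q_i[1] + d_out * math.sin(angle))
    v = ((q_exp[0] - q_i[0]) / dt, (q_exp[1] - q_i[1]) / dt)
    return q_exp, v
```

For example, with the γ-agent straight "north" of α-agent i, an output phase of −π/2 and an output distance of 2 place the expected position 2 units "east", and a step time of 0.5 gives a velocity of (4, 0).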

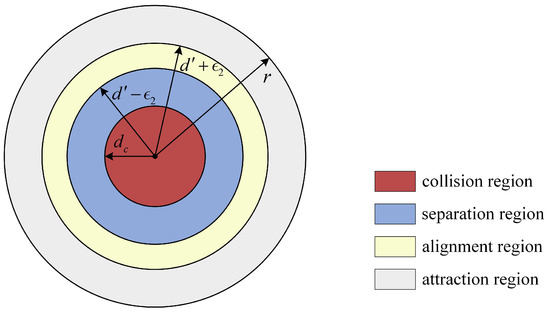

3.1.2. Nonnegative Subsection Function

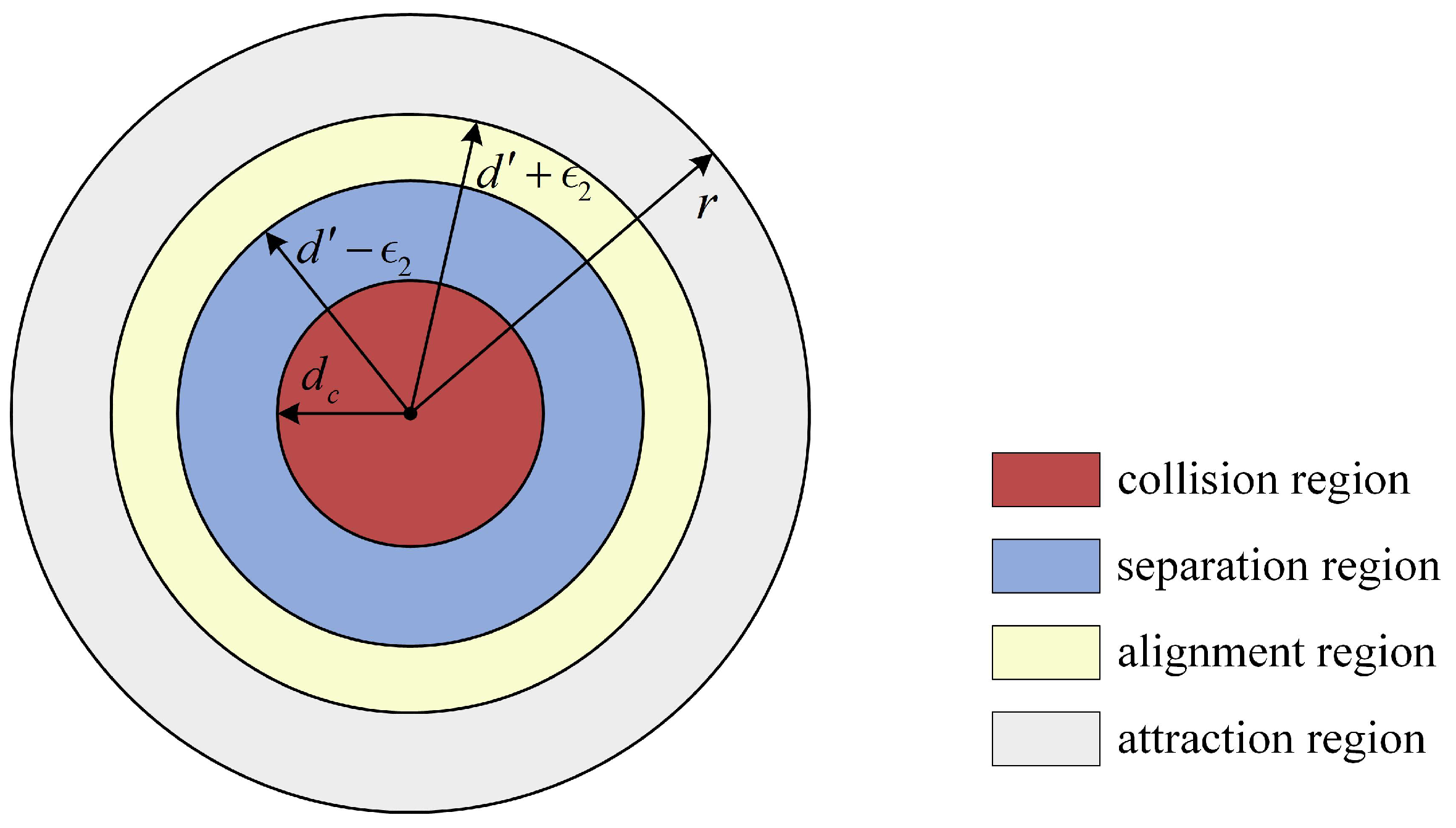

When the distance between α-agent i and β-agent k is less than the desired distance, repulsion separates them. However, a continued reduction in their distance poses a collision risk, which early warning can help reduce [33]. Hence, we define a minimum early-warning distance, and define the collision region as the area within this distance from α-agent i. Furthermore, maintaining an exact distance between α-agent i and β-agent k is difficult in practice. To address this problem, we define a disturbance factor, and define the alignment region as the annular region around α-agent i bounded by a lower and an upper distance, which are set by the desired distance and the disturbance factor. Thus, the region within the sensing radius of α-agent i is divided into four subregions, as shown in Figure 6. The attractive/repulsive potential can achieve separation, alignment, and attraction between α-agent i and β-agent k [10]. Here, a nonnegative subsection function is designed to adjust the attractive/repulsive potential in these four subregions, given by

where the four coefficients are weights. The function also involves the minimum safe distance for avoiding collisions, which must be accounted for because the size of α-agent i cannot be ignored in practice, a constant, and the desired distance for collision avoidance.

Figure 6.

Four subregions within the sensing radius of α-agent i.
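The four-subregion partition can be illustrated as follows. The exact form of the nonnegative subsection function is not reproduced here, so the sketch only classifies a distance into collision, separation, alignment, and attraction subregions and returns one hypothetical nonnegative weight per subregion. It assumes the alignment band is the desired distance plus or minus the disturbance factor; the weights, region names, and function names are placeholders, not the paper's values.

```python
def subregion(dist, d_warn, d_des, delta, r):
    """Classify a distance into the four subregions (assumed additive band d_des +/- delta)."""
    if dist < d_warn:
        return "collision"
    if dist < d_des - delta:
        return "separation"
    if dist <= d_des + delta:
        return "alignment"
    if dist <= r:
        return "attraction"
    return "outside"


# Hypothetical nonnegative weights, one per subregion (NOT the paper's values or form)
WEIGHTS = {"collision": 4.0, "separation": 1.0, "alignment": 0.0, "attraction": 1.0, "outside": 0.0}


def subsection_weight(dist, d_warn, d_des, delta, r):
    """Piecewise-constant placeholder for the nonnegative subsection function."""
    return WEIGHTS[subregion(dist, d_warn, d_des, delta, r)]
```

A large weight in the collision region mimics the early-warning idea, and a zero weight in the alignment band leaves small deviations from the desired distance uncorrected.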

With the fuzzy expected velocity of α-agent i for β-agent k and the nonnegative subsection function, the interaction term with β-agents is reconstructed as

where the coefficient is a feedback gain; the distance between α-agent i and β-agent k, the projected expected position of α-agent i for β-agent k, and the projected expected velocity of α-agent i for β-agent k are also used; and a switching indicator selects the fuzzy expected velocity when α-agent i is trapped in dynamic equilibrium and the projected expected velocity otherwise. Here, the projected expected position and projected expected velocity are calculated by Formulas (14) and (15).

3.2. Evaluate the Interaction Term Between α-Agents

With the nonnegative subsection function, the interaction term between α-agents is reconstructed as

where the distance between α-agents i and j is measured by the Euclidean norm.

3.3. Evaluate the Interaction Term with the γ-Agent

To strengthen the attraction of the γ-agent on α-agent i, the interaction term with the γ-agent is reconstructed as

Using Formulas (37)–(39) and (13), the control input of α-agent i can be obtained. The details of the FFC algorithm are summarized in Algorithm 1.

Algorithm 1.

FFC algorithm.

4. Properties Analysis of FFC Algorithm

Consider the total potential energy among the α-agents, the total potential energy between the α-agents and the β-agents, the total potential energy between the α-agents and the γ-agent, and the kinetic energy of the α-agents relative to the γ-agent. Then, the total energy can be defined by

where

Theorem 1.

Consider a group of N α-agents with motion model (1), whose control inputs are obtained by Formulas (13) and (37)–(39). Assume that the γ-agent is static, i.e., its velocity is zero, and that the initial total energy is finite. Then, the total energy never exceeds its initial value for all t ≥ 0.

Proof.

Calculating the first-order time derivative of the total energy, we obtain

where

Formulas (13), (37)–(39) and (45) can be rewritten as

Stack the corresponding quantities of all α-agents into column vectors. In addition, define a diagonal matrix and an adjacency matrix whose elements are given by the corresponding adjacency weights, together with the Laplacian matrix formed from them, as well as two further pairs of diagonal and adjacency matrices for the remaining interaction terms. These matrices form the following matrix:

Formula (50) can be equivalently reformulated as

where the identity matrices and the zero matrix have appropriate dimensions, and ⊗ denotes the Kronecker product.

Since the constituent matrices are positive semidefinite, the combined matrix is also positive semidefinite. Hence, the time derivative of the total energy is nonpositive, so the total energy is a nonincreasing function and never exceeds its initial value for all t ≥ 0. Combining this with Formula (40) yields the bound stated in Theorem 1 for all t ≥ 0.

□
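The positive semidefiniteness invoked in the proof rests on a standard Laplacian identity. Assuming, as is usual for undirected proximity graphs, that the adjacency matrix $A = [a_{ij}]$ is symmetric with nonnegative entries and that the Laplacian is $L = D - A$ with degree entries $d_{ii} = \sum_j a_{ij}$ (generic symbols, not the paper's notation), for any $x \in \mathbb{R}^N$:

$$
x^{\top} L x = \sum_{i} d_{ii}\, x_i^{2} - \sum_{i,j} a_{ij}\, x_i x_j
= \frac{1}{2}\sum_{i,j} a_{ij}\left(x_i^{2} + x_j^{2}\right) - \sum_{i,j} a_{ij}\, x_i x_j
= \frac{1}{2}\sum_{i,j} a_{ij}\,(x_i - x_j)^{2} \ge 0 .
$$

The second equality uses $\sum_i d_{ii} x_i^2 = \sum_{i,j} a_{ij} x_i^2$ together with the symmetry $a_{ij} = a_{ji}$. Since the eigenvalues of $L \otimes I_n$ are those of $L$, each repeated $n$ times, $L \otimes I_n$ is positive semidefinite as well, and adding diagonal matrices with nonnegative diagonal elements preserves this property.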

Theorem 2.

Consider a group of N α-agents satisfying the conditions of Theorem 1. Then, no α-agent collides with any object (i.e., another α-agent or an obstacle).

Proof.

Suppose, to the contrary, that a collision between α-agents, or between an α-agent and an obstacle, occurs at some time t ≥ 0. Then, we have

From Formulas (9) and (10), the corresponding pairwise attractive/repulsive potential then tends to infinity, so the total energy becomes unbounded. This contradicts Theorem 1, which shows that the total energy is nonincreasing and bounded by its finite initial value. Hence, no collision occurs between α-agents and objects. □

5. Experimental Results and Discussion

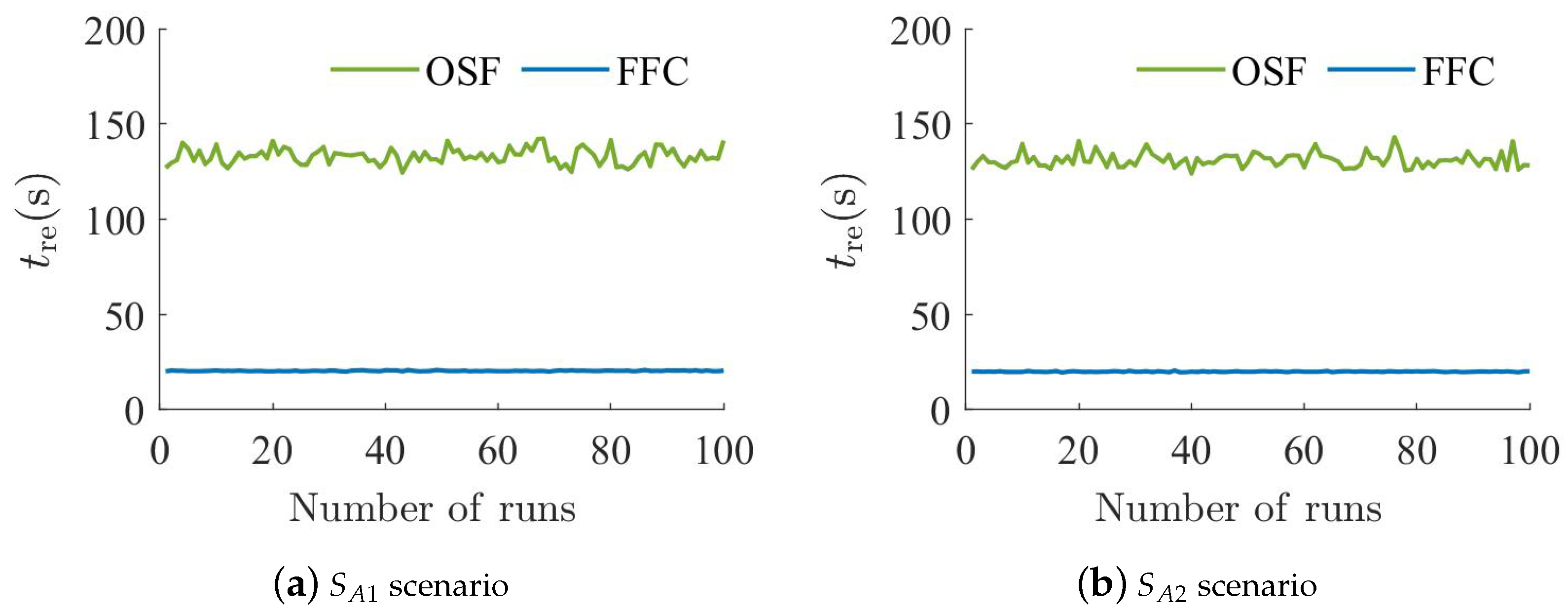

In this section, three experiments are conducted to demonstrate the effectiveness of the proposed FFC algorithm: a spherical or wall obstacle scenario experiment, a spherical and wall obstacle combination scenario experiment, and a complex obstacle scenario experiment. All experiments are carried out in MATLAB R2022b on a DELL PC with an Intel Core i9-14900K CPU and 32 GB of memory. The OSF algorithm [10] is selected for comparison with the FFC algorithm. Three quantities are used to evaluate the performance of the OSF and FFC algorithms: the distance between the center of the α-agents and the γ-agent, the minimum distance between α-agents and objects, and the time taken for all α-agents to bypass obstacles and re-aggregate near the γ-agent.
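The three evaluation quantities can be computed from logged trajectories roughly as follows. This is a sketch under two assumptions not stated in the text: obstacles are represented by sampled boundary points, and "re-aggregated" means that the centroid distance stays below a chosen threshold until the end of the run. The function names are hypothetical.

```python
import math


def centroid_distance(alpha_positions, q_gamma):
    """Distance between the center of the alpha-agents and the gamma-agent."""
    n = len(alpha_positions)
    cx = sum(q[0] for q in alpha_positions) / n
    cy = sum(q[1] for q in alpha_positions) / n
    return math.dist((cx, cy), q_gamma)


def min_object_distance(alpha_positions, obstacle_points):
    """Minimum distance from any alpha-agent to another alpha-agent or a sampled obstacle point."""
    best = math.inf
    for i, qi in enumerate(alpha_positions):
        for qj in alpha_positions[i + 1:]:
            best = min(best, math.dist(qi, qj))
        for ob in obstacle_points:
            best = min(best, math.dist(qi, ob))
    return best


def regroup_time(centroid_distances, dt, threshold):
    """First time after which the centroid distance stays below threshold; None if never."""
    for n in range(len(centroid_distances)):
        if all(d < threshold for d in centroid_distances[n:]):
            return n * dt
    return None
```

Evaluating `min_object_distance` at every step and taking the minimum over time gives the quantity that is compared against the minimum safe distance.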

The parameters of the OSF and FFC algorithms are listed in Table 2. Specifically, the feedback gains are subject to several relations. Choosing identical values for these gains better captures the combined effects of the three interaction terms, whereas large gain values may drive the velocity of the α-agents into saturation. Likewise, three of the weights satisfy a joint relation, and the weight associated with early warning is crucial and must satisfy a separate constraint.

Table 2.

Experimental parameters.

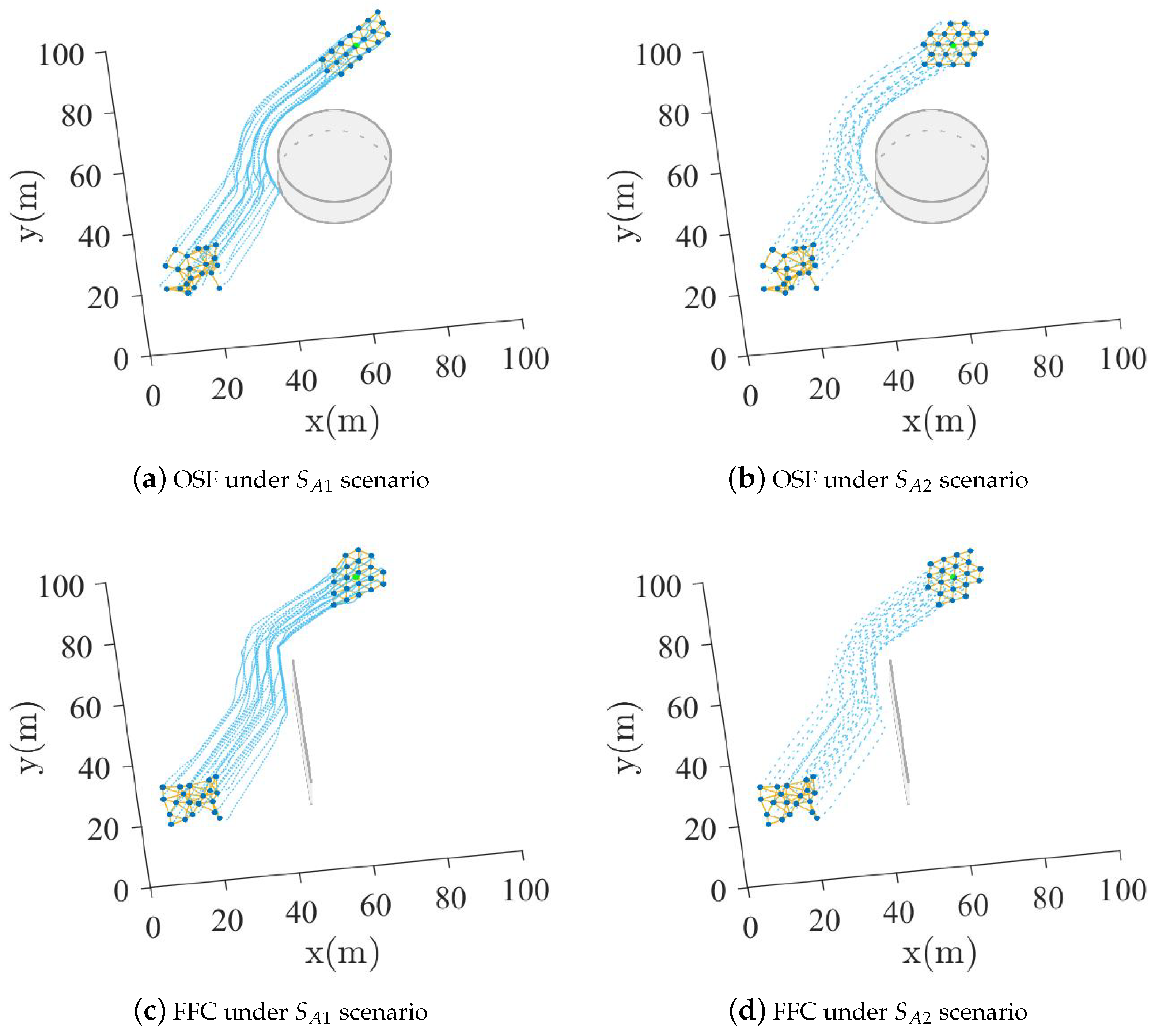

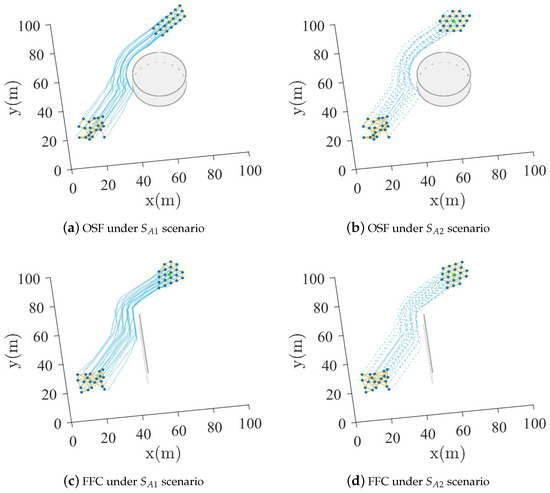

5.1. Spherical or Wall Obstacle Scenario Experiment

In this experiment, two obstacle scenarios are selected as follows:

- There is a spherical obstacle of radius 15.

- There is a wall obstacle of length 30.

The initial positions and velocities of the α-agents are selected randomly from prescribed ranges, and the virtual γ-agent starts from a prescribed initial position with zero initial velocity.

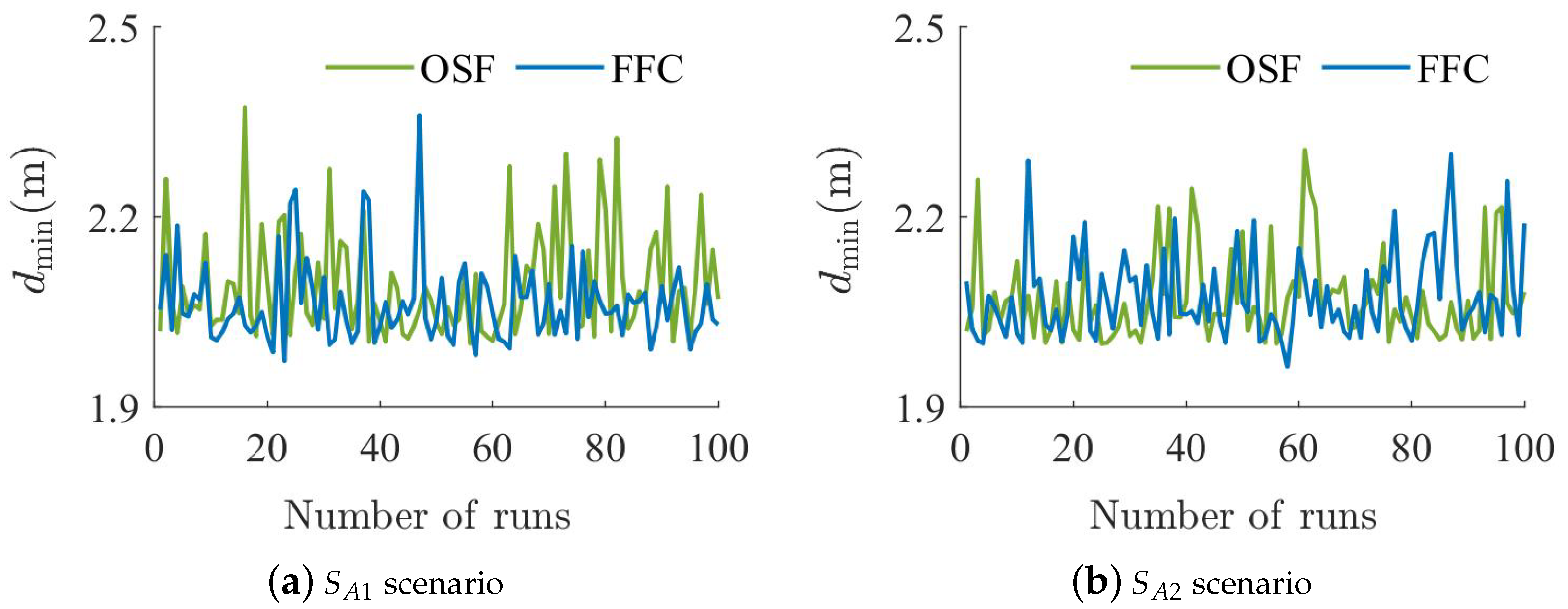

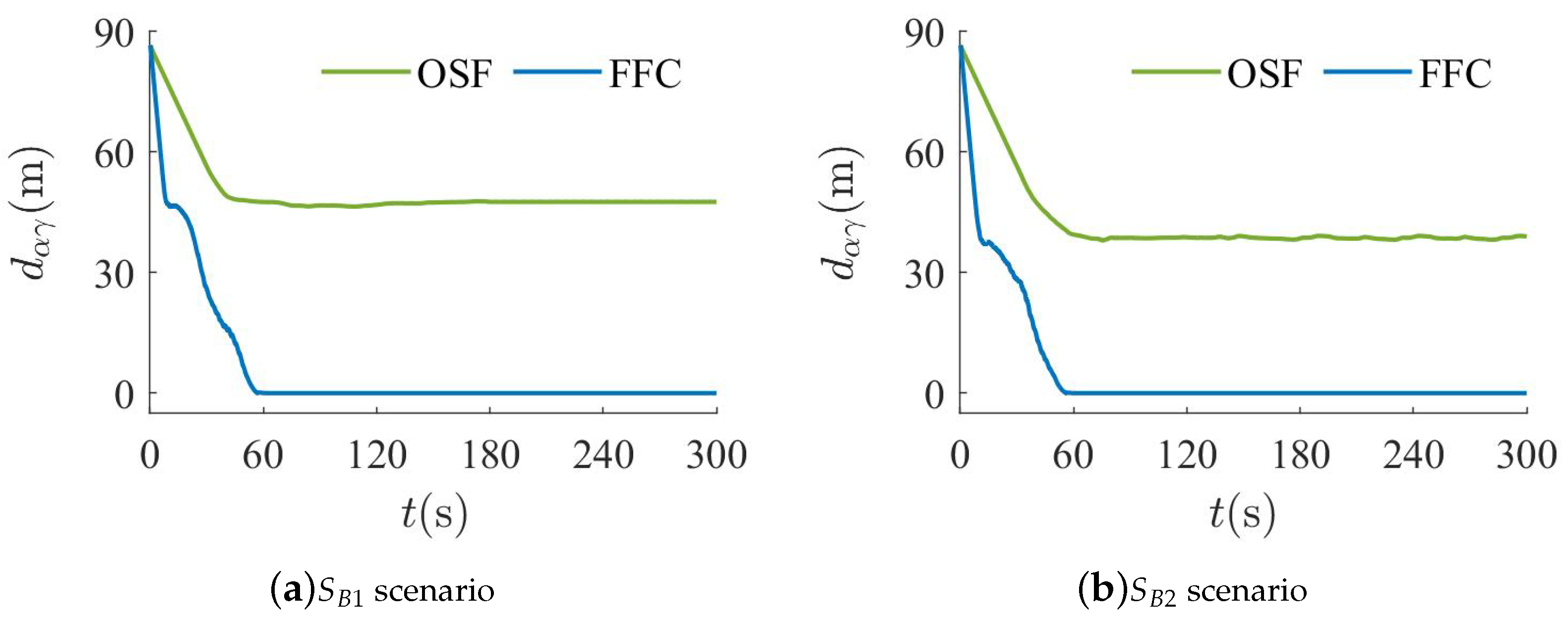

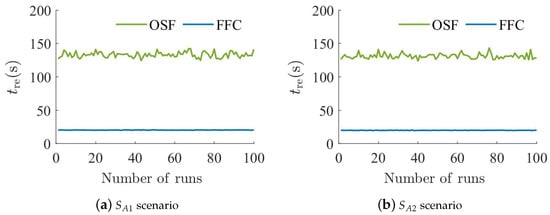

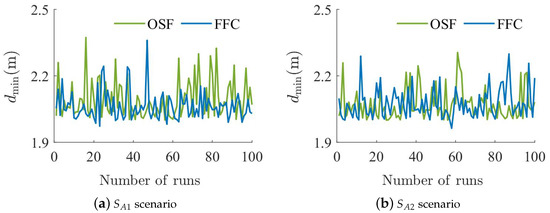

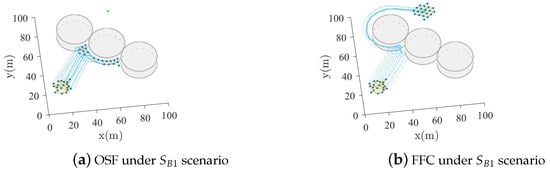

The visual comparison of the two algorithms is shown in Figure 7. Both algorithms enable all α-agents to bypass the spherical or wall obstacle and follow the γ-agent. The quantitative comparison of the two algorithms over 100 runs is shown in Figures 8 and 9. Figure 8 shows that, compared with the OSF algorithm, the FFC algorithm reduces the time taken for all α-agents to bypass obstacles and re-aggregate near the γ-agent, which indicates that the FFC algorithm enables α-agents to bypass obstacles faster. Figure 9 shows that, for both algorithms, the minimum distance between α-agents and objects always remains greater than the minimum safe distance, which indicates that no collisions occur between α-agents and objects [34].

Figure 7.

Motion process of the two algorithms in the spherical or wall obstacle scenario. The blue dots are the α-agents, the yellow solid lines between blue dots indicate the neighborhood relationships between α-agents, the blue dotted lines are the motion trajectories of α-agents, and the green dot is the γ-agent.

Figure 8.

The time taken for all α-agents to bypass obstacles and re-aggregate near the γ-agent over 100 runs in the spherical or wall obstacle scenario.

Figure 9.

Minimum distance between α-agents and objects over 100 runs in the spherical or wall obstacle scenario.

5.2. Spherical and Wall Obstacle Combination Scenario Experiment

In this experiment, four obstacle scenarios are selected as follows:

- There are three spherical obstacles of radius 15.

- There are six wall obstacles of length 20.

- There are two spherical obstacles of radius 15 and two wall obstacles of length 24.

- There is one spherical obstacle of radius 15 and there are four wall obstacles of length 20.

The initial positions and velocities of the α-agents are selected randomly from prescribed ranges, and the virtual γ-agent starts from a prescribed initial position with zero initial velocity.

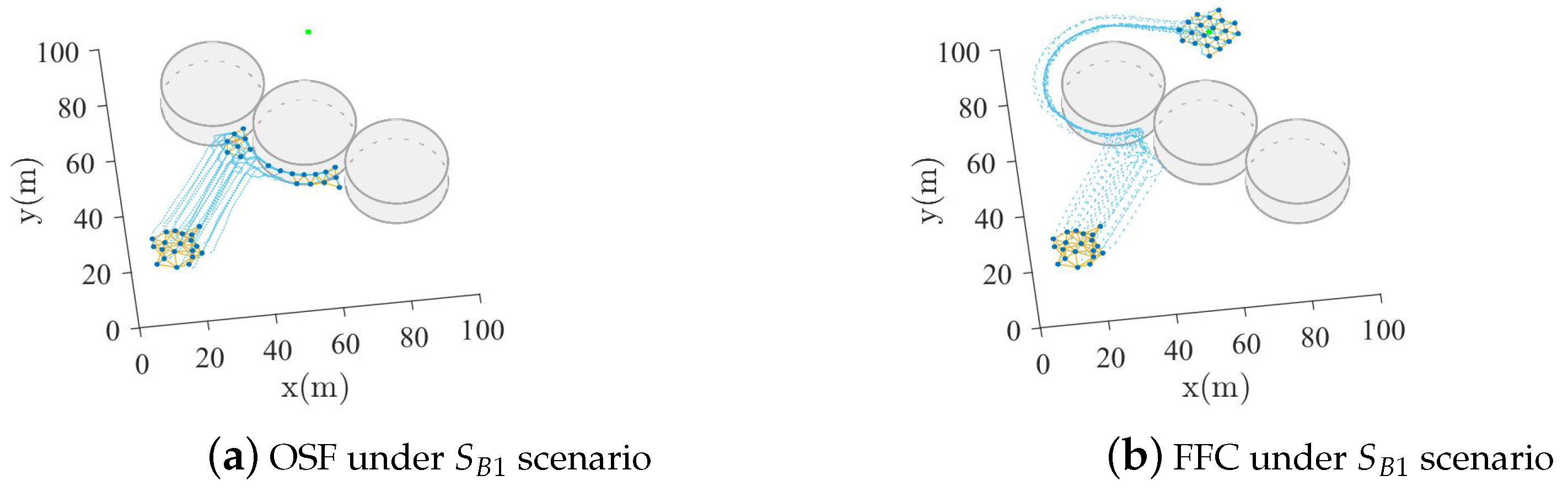

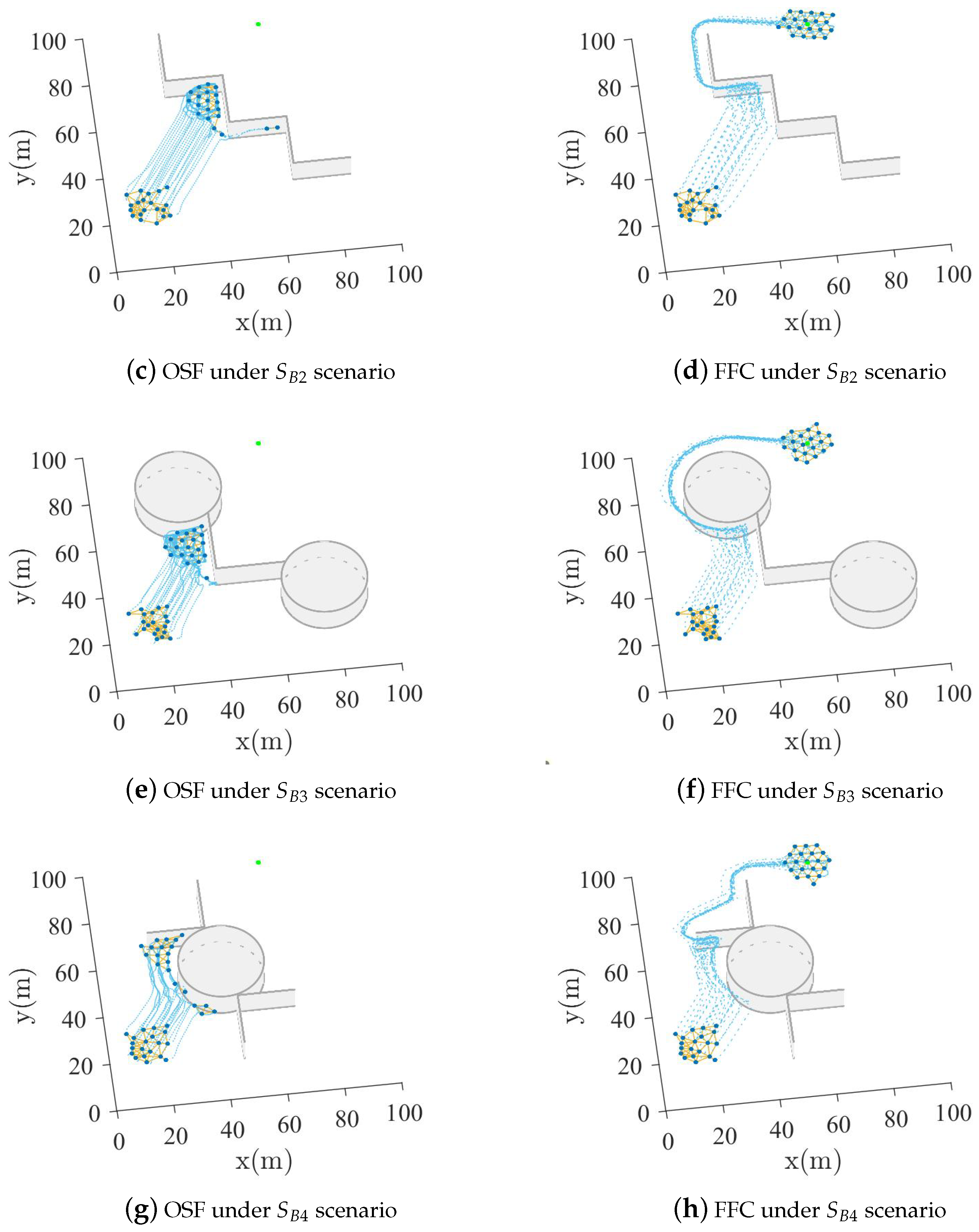

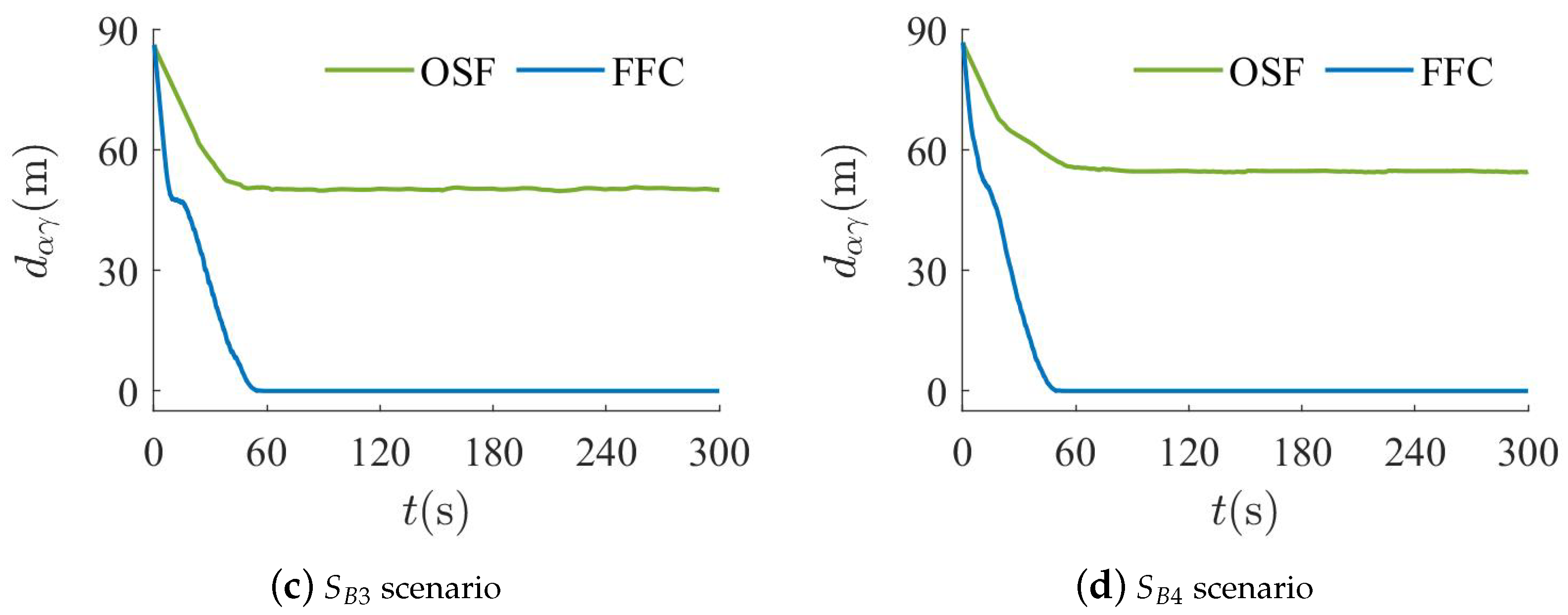

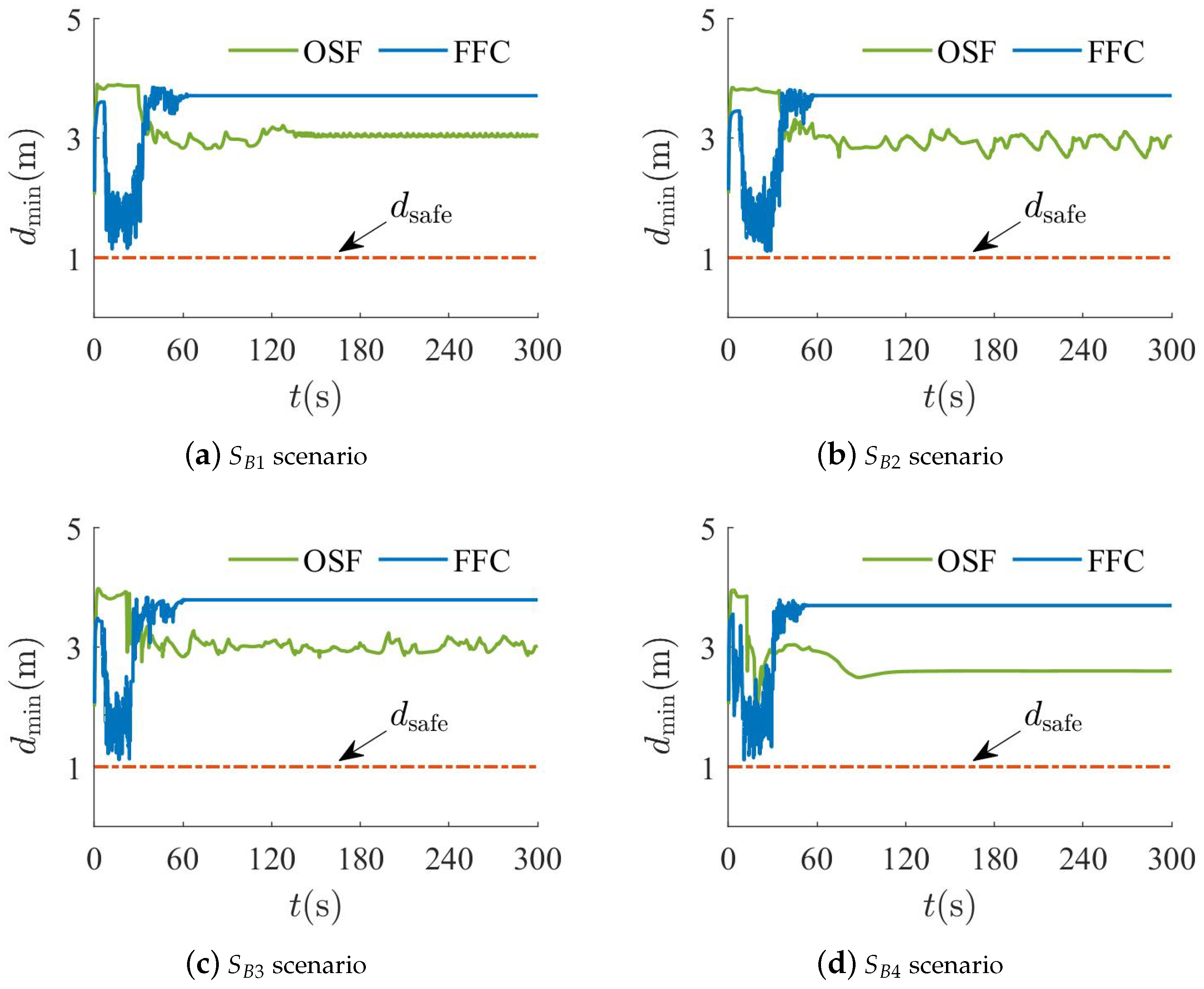

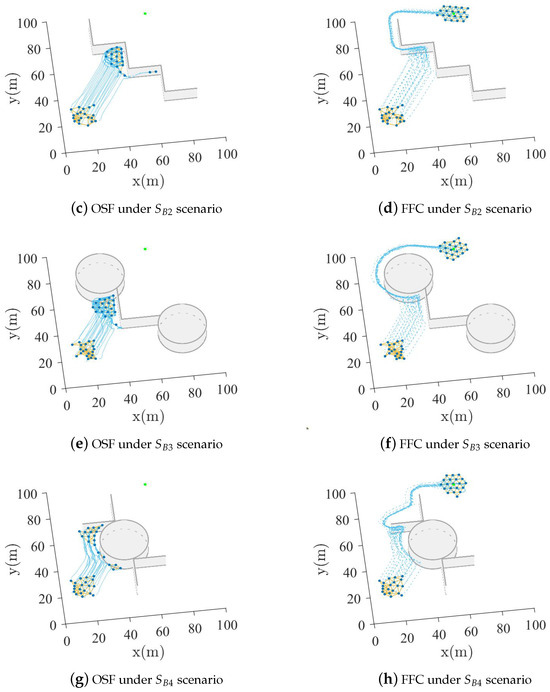

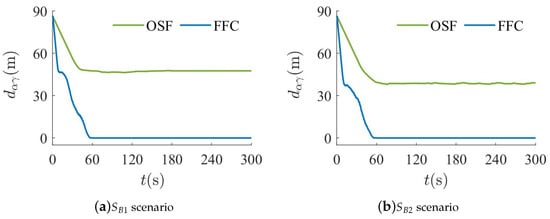

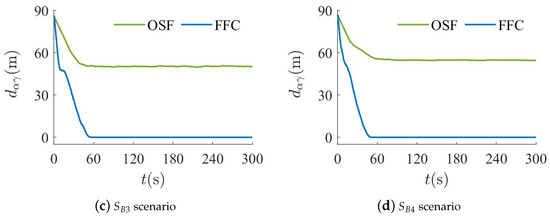

Visual and quantitative comparisons of the two algorithms are shown in Figures 10–12. Figure 10 shows that, under the OSF algorithm, almost all α-agents remain stationary or oscillate near the location where multiple obstacles meet. Figure 11 further shows that α-agents under the OSF algorithm cannot re-aggregate near the γ-agent, because they are trapped in dynamic equilibrium and cannot bypass the obstacles. In contrast, the FFC algorithm enables all α-agents to bypass the obstacles and follow the γ-agent, which indicates that the FFC algorithm allows α-agents to escape from dynamic equilibrium by altering the balance of virtual forces. Figure 12 shows that, under the FFC algorithm, the minimum distance between α-agents and objects decreases near the location where multiple obstacles meet; nevertheless, it remains greater than the minimum safe distance.

Figure 10.

Motion process of the two algorithms in the spherical and wall obstacle combination scenario. The blue dots are the α-agents, the yellow solid lines between blue dots indicate the neighborhood relationships between α-agents, the blue dotted lines are the motion trajectories of α-agents, and the green dot is the γ-agent.

Figure 11.

Distance between the center of the α-agents and the γ-agent in the spherical and wall obstacle combination scenario.

Figure 12.

Minimum distance between α-agents and objects in the spherical and wall obstacle combination scenario.

5.3. Complex Obstacle Scenario Experiment

In this experiment, a complex obstacle scenario is selected as follows:

- There are four spherical obstacles of radius 15 and two spherical obstacles of radius 10. Additionally, there are seven wall obstacles of length 10, seven wall obstacles of length 20, and two further groups of two and four wall obstacles, respectively.

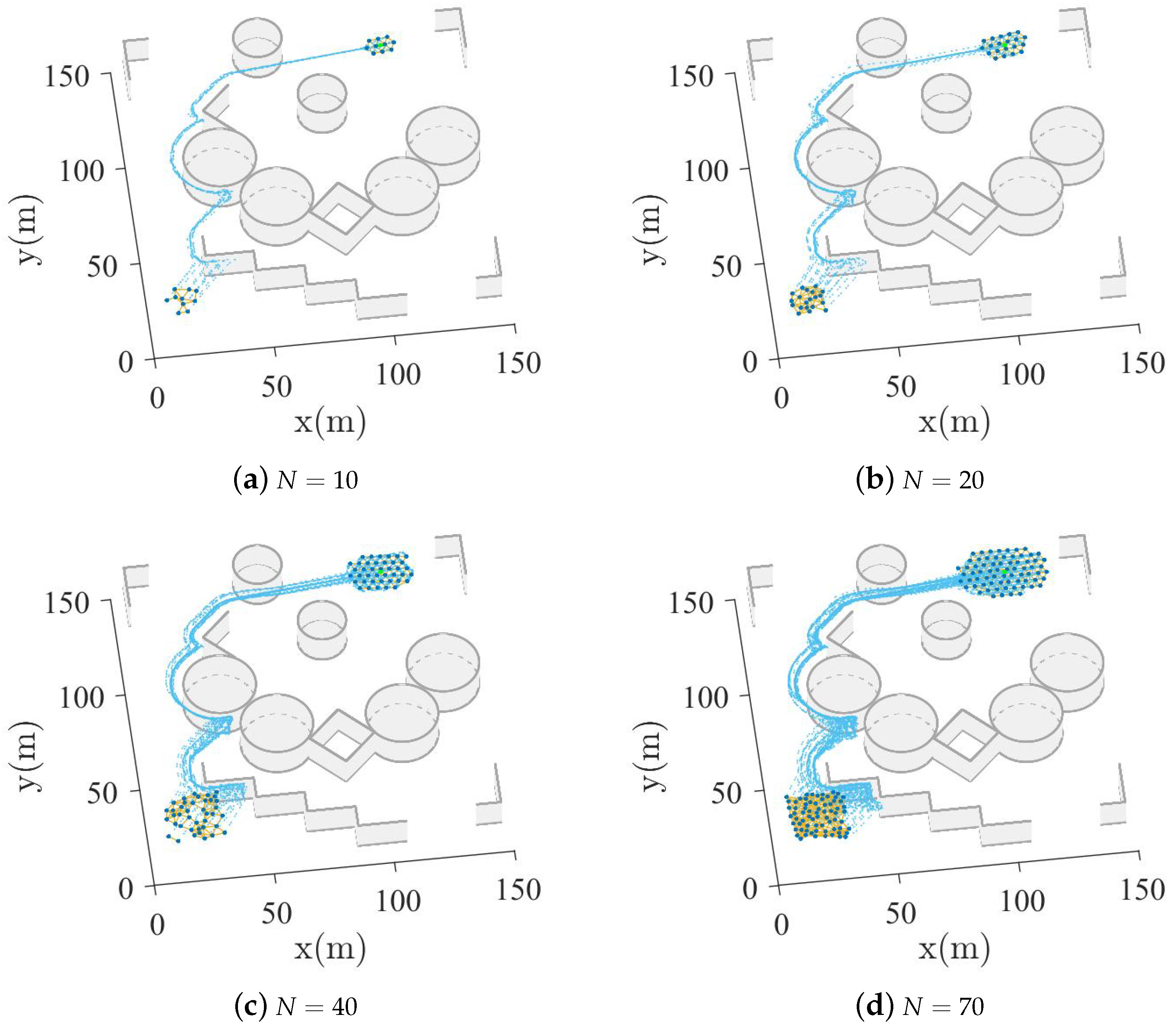

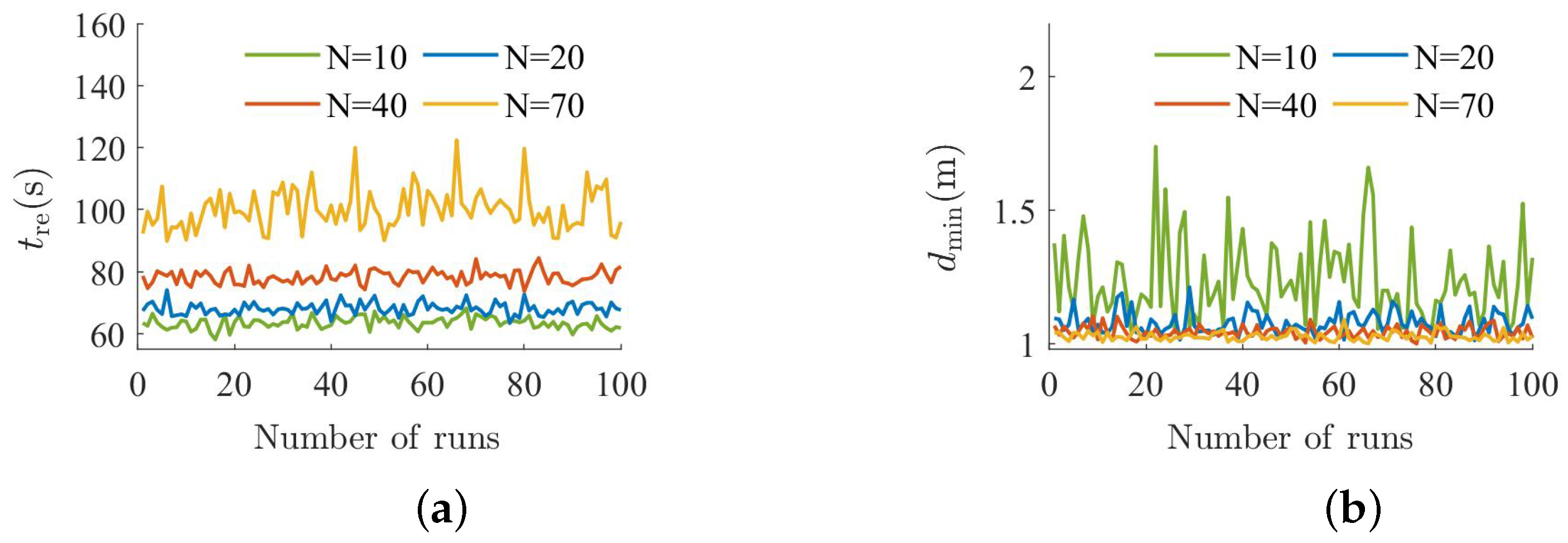

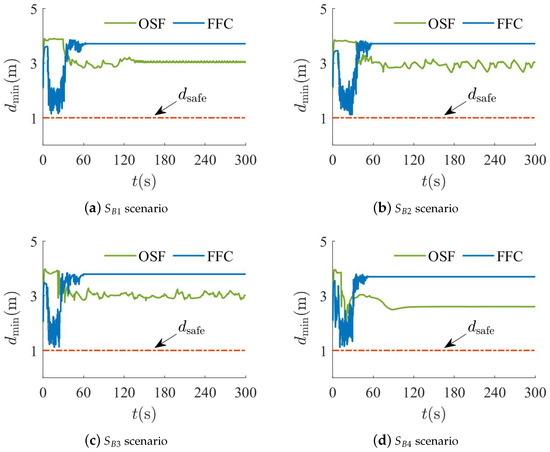

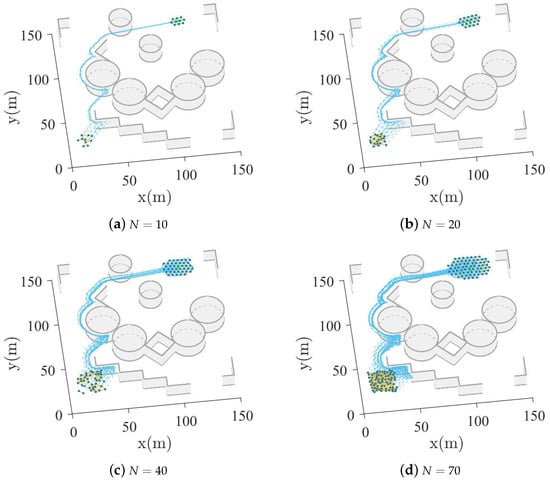

The initial positions and velocities of the α-agents are selected randomly from prescribed ranges, and the virtual γ-agent starts from a prescribed initial position with zero initial velocity.

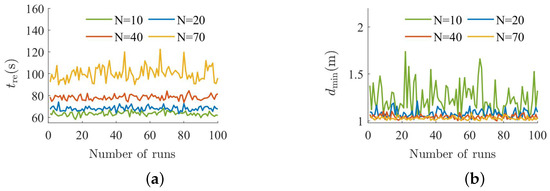

The visual results of the FFC algorithm in the complex obstacle scenario are shown in Figure 13. All α-agents under the FFC algorithm bypass the complex obstacles and follow the γ-agent. The quantitative results of the FFC algorithm over 100 runs are shown in Figure 14: as the number of α-agents increases, all α-agents still follow the γ-agent without colliding with objects. In summary, the FFC algorithm is suitable for obstacle avoidance in complex obstacle environments.

Figure 13.

Motion process of the FFC algorithm in the complex obstacle scenario. The blue dots are the α-agents, the yellow solid lines between blue dots indicate the neighborhood relationships between α-agents, the blue dotted lines are the motion trajectories of α-agents, and the green dot is the γ-agent.

Figure 14.

The quantitative results of the FFC algorithm over 100 runs in the complex obstacle scenario. (a) The time taken for all α-agents to bypass obstacles and re-aggregate near the γ-agent. (b) Minimum distance between α-agents and objects.

6. Conclusions

In this paper, we proposed an FFC algorithm to solve the problem of multi-agents being trapped in dynamic equilibrium among multiple obstacles. When an agent is trapped in dynamic equilibrium, the FFC algorithm calculates the fuzzy expected velocity using the established membership functions and fuzzy rules. Furthermore, the FFC algorithm divides the region within the sensing radius of the agent into four subregions and designs a nonnegative subsection function to adjust the attractive/repulsive potentials in these subregions. The property analysis shows that multi-agents achieve collision-free goal-reaching motion when the sufficient conditions in Theorems 1 and 2 are satisfied. The experimental results verify that the proposed algorithm enables multi-agents to escape from dynamic equilibrium and is suitable for obstacle avoidance in complex obstacle environments. However, the time taken for all agents to bypass obstacles and re-aggregate near the goal position increases with the number of agents. To overcome this problem, future work will focus on reducing the computational complexity and improving the scalability of the flocking control algorithm, including optimizing the pairwise attractive/repulsive potential and defining multi-agent motion rules. In addition, we will focus on improving the robustness of the flocking control algorithm, for example, to sensor noise.

Author Contributions

Conceptualization, methodology, software, investigation, and writing—original draft preparation, W.L.; conceptualization, formal analysis, investigation, writing—review and editing, and funding acquisition, X.S.; writing—review and editing, supervision, project administration, and funding acquisition, Y.J.; validation, data curation, and visualization, X.L.; resources and writing—review and editing, J.W.; data curation and visualization, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Foundation from the Guangxi Zhuang Autonomous Region under Grants AA23062038 and 2024GXNSFAA010270, and in part by the National Natural Science Foundation of China under Grants U23A20280 and 62161007.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Yan, C.; Wang, C.; Xiang, X.; Low, K.H.; Wang, X.; Xu, X.; Shen, L. Collision-Avoiding Flocking with Multiple Fixed-Wing UAVs in Obstacle-Cluttered Environments: A Task-Specific Curriculum-Based MADRL Approach. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 10894–10908.

2. Tran, V.P.; Garratt, M.A.; Kasmarik, K.; Anavatti, S.G.; Leong, A.S.; Zamani, M. Multi-gas source localization and mapping by flocking robots. Inf. Fusion 2023, 91, 665–680.

3. Shi, L.; Cheng, Y.; Shao, J.; Sheng, H.; Liu, Q. Cucker-Smale flocking over cooperation-competition networks. Automatica 2022, 135, 109988.

4. Li, J.; Zhang, W.; Su, H.; Yang, Y. Flocking of partially-informed multi-agent systems avoiding obstacles with arbitrary shape. Auton. Agents Multi-Agent Syst. 2015, 29, 943–972.

5. Lyu, Y.; Hu, J.; Chen, B.M.; Zhao, C.; Pan, Q. Multivehicle Flocking with Collision Avoidance via Distributed Model Predictive Control. IEEE Trans. Cybern. 2021, 51, 2651–2662.

6. Pignotti, C.; Vallejo, I.R. Flocking estimates for the Cucker–Smale model with time lag and hierarchical leadership. J. Math. Anal. Appl. 2018, 464, 1313–1332.

7. Jiang, W.; Liu, K.; Charalambous, T. Multi-agent consensus with heterogeneous time-varying input and communication delays in digraphs. Automatica 2021, 135, 109950.

8. Zou, Y.; An, Q.; Miao, S.; Chen, S.; Wang, X.; Su, H. Flocking of uncertain nonlinear multi-agent systems via distributed adaptive event-triggered control. Neurocomputing 2021, 465, 503–513.

9. Yu, Z.; Jiang, H.; Hu, C. Second-order consensus for multiagent systems via intermittent sampled data control. IEEE Trans. Syst. Man Cybern. Syst. 2017, 48, 1986–2002.

10. Olfati-Saber, R. Flocking for multi-agent dynamic systems: Algorithms and theory. IEEE Trans. Autom. Control 2006, 51, 401–420.

11. Sakai, D.; Fukushima, H.; Matsuno, F. Flocking for Multirobots Without Distinguishing Robots and Obstacles. IEEE Trans. Control Syst. Technol. 2017, 25, 1019–1027.

12. Jia, Y.; Wang, L. Leader–Follower Flocking of Multiple Robotic Fish. IEEE/ASME Trans. Mechatron. 2015, 20, 1372–1383.

13. Wu, J.; Yu, Y.; Ma, J.; Wu, J.; Han, G.; Shi, J.; Gao, L. Autonomous Cooperative Flocking for Heterogeneous Unmanned Aerial Vehicle Group. IEEE Trans. Veh. Technol. 2021, 70, 12477–12490.

14. Su, H.; Wang, X.; Lin, Z. Flocking of Multi-Agents with a Virtual Leader. IEEE Trans. Autom. Control 2009, 54, 293–307.

15. Zhou, Z.; Ouyang, C.; Hu, L.; Xie, Y.; Chen, Y.; Gan, Z. A framework for dynamical distributed flocking control in dense environments. Expert Syst. Appl. 2024, 241, 122694.

16. Yan, T.; Xu, X.; Li, Z.; Li, E. Flocking of multi-agent systems with unknown nonlinear dynamics and heterogeneous virtual leader. Int. J. Control Autom. Syst. 2021, 19, 2931–2939.

17. Sun, Z.; Xu, B. A flocking algorithm of multi-agent systems to optimize the configuration. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 7680–7684.

18. Zhao, W.; Chu, H.; Zhang, M.; Sun, T.; Guo, L. Flocking Control of Fixed-Wing UAVs with Cooperative Obstacle Avoidance Capability. IEEE Access 2019, 7, 17798–17808.

19. Qiu, Z.; Zhao, X.; Li, S.; Xie, Y.; Chen, C.; Gui, W. Finite-time formation of multiple agents based on multiple virtual leaders. Int. J. Syst. Sci. 2018, 49, 3448–3458.

20. Duhé, J.F.; Victor, S.; Melchior, P. Contributions on artificial potential field method for effective obstacle avoidance. Fract. Calc. Appl. Anal. 2021, 24, 421–446.

21. Azzabi, A.; Nouri, K. An advanced potential field method proposed for mobile robot path planning. Trans. Inst. Meas. Control 2019, 41, 3132–3144.

22. Semnani, S.H.; Liu, H.; Everett, M.; De Ruiter, A.; How, J.P. Multi-agent motion planning for dense and dynamic environments via deep reinforcement learning. IEEE Rob. Autom. Lett. 2020, 5, 3221–3226.

23. Beaver, L.E.; Malikopoulos, A.A. An overview on optimal flocking. Annu. Rev. Control 2021, 51, 88–99.

24. Szczepanski, R.; Bereit, A.; Tarczewski, T. Efficient local path planning algorithm using artificial potential field supported by augmented reality. Energies 2021, 14, 6642.

25. Song, J.; Hao, C.; Su, J. Path planning for unmanned surface vehicle based on predictive artificial potential field. Int. J. Adv. Rob. Syst. 2020, 17, 1729881420918461.

26. Bayat, F.; Najafinia, S.; Aliyari, M. Mobile robots path planning: Electrostatic potential field approach. Expert Syst. Appl. 2018, 100, 68–78.

27. He, Z.; Chu, X.; Liu, C.; Wu, W. A novel model predictive artificial potential field based ship motion planning method considering COLREGs for complex encounter scenarios. ISA Trans. 2023, 134, 58–73.

28. Szczepanski, R. Safe artificial potential field-novel local path planning algorithm maintaining safe distance from obstacles. IEEE Rob. Autom. Lett. 2023, 8, 4823–4830.

29. Mao, X.; Zhang, H.; Wang, Y. Flocking of quad-rotor UAVs with fuzzy control. ISA Trans. 2018, 74, 185–193.

30. Ntakolia, C.; Moustakidis, S.; Siouras, A. Autonomous path planning with obstacle avoidance for smart assistive systems. Expert Syst. Appl. 2023, 213, 119049.

31. Yu, H.; Zhang, T.; Jian, J. Flocking with obstacle avoidance based on fuzzy logic. In Proceedings of the IEEE ICCA 2010, Xiamen, China, 9–11 June 2010; pp. 1876–1881.

32. Sharma, A.K.; Singh, D.; Singh, V.; Verma, N.K. Aerodynamic Modeling of ATTAS Aircraft Using Mamdani Fuzzy Inference Network. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 3566–3576.

33. Song, W.; Yang, Y.; Fu, M.; Qiu, F.; Wang, M. Real-Time Obstacles Detection and Status Classification for Collision Warning in a Vehicle Active Safety System. IEEE Trans. Intell. Transp. Syst. 2018, 19, 758–773.

34. Wu, J.; Ji, Y.; Sun, X.; Liang, W. Obstacle Boundary Point and Expected Velocity-Based Flocking of Multiagents with Obstacle Avoidance. Int. J. Intell. Syst. 2023, 2023, 1493299.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).