1. Introduction

Advances in marine exploration, security operations, ecological surveillance, and subsea resource exploitation are driving growing demand for technologies capable of identifying submerged objects. Aquatic conditions, however, present substantial difficulties: the rapid attenuation and scattering of light in water create formidable obstacles for conventional detection approaches. Consequently, detecting targets accurately and efficiently in complex underwater environments has become a critical technical problem that urgently needs to be addressed. To this end, we introduce multimodal fusion and the MSCA attention mechanism.

In complex environments, underwater object recognition faces numerous challenges, particularly in low-visibility or dynamic scenes, where single-modality images frequently fail to capture the complete scene. Traditional single-modality images, such as visible-light images, are susceptible to varying illumination and weather, which sharply degrades detection performance in challenging conditions characterized by low light, smoke, rain, or snow. Infrared images adapt better than visible-light images to low-visibility conditions, providing clearer scene information at night or in harsh weather. As a result, the integration of multimodal imaging has become pivotal in advancing the capabilities of detection systems [1]. Compared to single-modal data, multimodal data offers more comprehensive scene information, allowing individual modalities to compensate for each other’s limitations.

As the technology advances, multimodal object detection faces new challenges. How to effectively fuse image information from different modalities, how to handle data heterogeneity between sensors, and how to achieve real-time processing at scale remain active research topics [2]. Recent advances in deep neural networks have introduced new approaches to integrating multimodal visual data.

Current multimodal object detection techniques based on convolutional neural networks and other deep learning models have achieved significant progress in multiple domains. To tackle the challenges posed by intricate underwater conditions and scarce sample data, Zeng et al. [3] incorporated an adversarial occlusion mechanism into the R-CNN framework, achieving a 2.6% enhancement in mean average precision. Addressing the heterogeneity and difficulty of underwater passive target classification, Qiao et al. [4] developed a real-time, accurate classifier based on Local Wavelet Acoustic Patterns and a Multilayer Perceptron neural network. Lin et al. [5] addressed the problems of overlap, occlusion, and blur of underwater targets and constructed a generalizable model. Liu et al. [6] introduced the parameter-free attention mechanism SimAM into the YOLOv8 model, which infers and assigns three-dimensional attention weights without increasing network parameters; the improved model reaches an mAP@0.5 of 91.8%. To enhance computational focus on target regions, Wen et al. [7] developed an enhanced YOLOv5s-CA architecture through the integration of Coordinate Attention and Squeeze-and-Excitation modules, achieving a 2.4% improvement in mean average precision. Meanwhile, Zhang et al. [8] incorporated a global attention mechanism into YOLOv5 to strengthen feature extraction in critical areas, while implementing a multi-branch re-parameterization design to boost multi-scale feature integration. Lei et al. [9] adopted Swin Transformer as YOLOv5’s backbone and optimized both the PANet feature fusion strategy and the confidence loss function, improving detection precision and model robustness. In parallel, Zhou et al. [10] created a specialized underwater detection framework incorporating a cross-stage multi-branch architecture and large-kernel spatial pyramid modules to handle diverse target scales. Similarly, Gao et al. [11] developed a large-kernel convolutional network leveraging self-attention mechanisms for long-range dependency modeling, reducing both false alarms and missed detections while strengthening small-object identification.

Although significant advances have been achieved in detection accuracy, existing approaches remain primarily concentrated on single-modal object detection and show considerable limitations when applied to underwater target recognition in complex environments. To bridge this gap, a novel YOLOv8-FUSED framework is introduced. The core contributions of this study are as follows:

- (1) An intermediate (feature-level) fusion strategy is employed to integrate features from infrared and visible-light images. This approach yields richer feature representations, thereby enhancing the model’s robustness in complex environments.

- (2) By introducing MSCA, the network can focus more precisely on significant target regions and information, leading to improved detection accuracy.

- (3) To address the lightweight requirement of the model, an ESC_Head was designed, reducing the model’s parameter count and enhancing computational efficiency.

2. Method

YOLOv8 comprises three components: the Backbone, Neck, and Head. Compared with other YOLO series models, it incorporates several enhancements in network architecture, training process, and feature extraction, resulting in faster processing speed and improved accuracy. This paper focuses on refining the network structure of YOLOv8n.

All computational experiments were executed on a Windows 10 platform equipped with an NVIDIA GeForce RTX 4070 GPU, 12 GB memory, and an Intel Core i5-12400F processor. The implementation was based on PyTorch 1.13.1, with Python 3.7 and CUDA 11.7 providing the underlying computational environment.

2.1. YOLOv8-FUSED Object Detection Algorithm

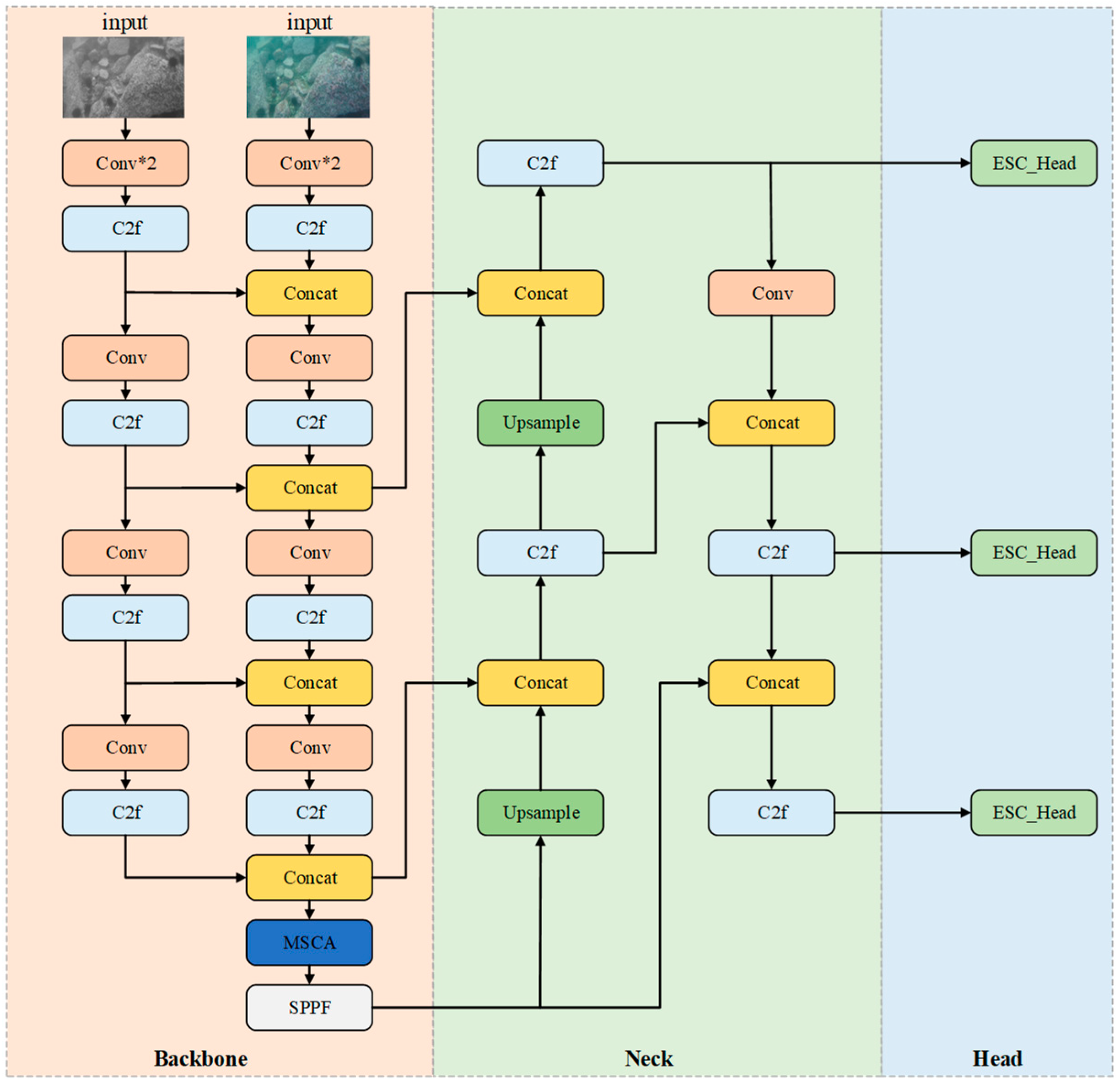

Building upon the YOLOv8 architecture and incorporating a multimodal fusion strategy, this research introduces a novel underwater detection framework termed YOLOv8-FUSED. The method first employs intermediate fusion to combine visible and infrared imaging features, generating more comprehensive representations that improve environmental adaptability. Subsequently, a multi-scale cross-axis attention mechanism (MSCA) is embedded into the backbone network, enabling refined focus on critical regions and consequently raising detection precision. Furthermore, to address lightweight deployment needs, an ESC_Head component is implemented, reducing both parameter count and computational cost. The overall architecture of the enhanced YOLOv8-FUSED system is illustrated in Figure 1.

2.2. Visible Light and Infrared Light Fusion Model

The process of underwater image acquisition fundamentally differs from terrestrial scenarios due to water’s selective absorption and scattering of light wavelengths, target distance variations, and illumination source characteristics. Given the superior propagation capacity of shorter-wavelength blue light, subaquatic scenes generally display a dominant blue-green cast. Although artificial illumination can expand observable ranges, it simultaneously creates localized overexposure and intensifies light diffusion through suspended particulates. These compounded physical phenomena result in characteristic visual degradations including diminished contrast, uneven illumination, loss of acuity, highlight artifacts, and elevated sensor noise.

Driven by continuous progress in multisensor fusion, multimodal object detection has attracted growing research interest and is increasingly applied across computer vision. Multimodal target detection uses multiple data modalities obtained from different sensors, such as visible-light images, infrared images, LiDAR point clouds, and radar images; fusing this information enables efficient target detection and recognition. Compared to single-modality data, multimodal data provides more comprehensive environmental information. Multimodal fusion offers significant advantages in underwater fish detection, particularly when visible-light and infrared images are used as input. The underwater environment is characterized by severely limited visibility, significant optical distortion, and high concentrations of suspended particulates that cause turbidity. These conditions impede accurate detection from single-modality images, particularly visible-light images. Infrared images can help address these challenges by offering supplementary information, particularly in low-light conditions. Consequently, fusing multimodal images can substantially enhance both detection accuracy and robustness [12].

Multimodal fusion strategies are classified according to the stage at which integration occurs within the detection pipeline: early, intermediate (feature-level), and late fusion. Early fusion integrates multisource data at the preprocessing stage, where the infrared and visible inputs are combined before being processed by the detection architecture. This allows the model to process all input data jointly during initial feature extraction, thereby capturing complex relationships between the data sources [13]. Intermediate fusion extracts features independently from the infrared and visible images and then integrates these feature representations inside the detection architecture. This not only allows independent feature extraction from each source but also captures higher-level interactions at the feature level [14]. Late fusion integrates the outputs at the final decision level, after each data source has been processed by an independent model.

An intermediate fusion approach is adopted, as illustrated in Figure 2. Initially, both visible-light and infrared images are fed into the model. After two downsampling operations in each branch, the two feature maps are concatenated and fused for the first time. At this shallow stage, the features are fundamental ones such as edges, textures, and shapes. Further downsampling and convolution operations are then applied to both types of feature maps, extracting primarily high-level features, and these high-level features from both modalities are again concatenated and fused. The process then continues to a stage where still more advanced features are extracted. At this point, the information within the feature maps becomes increasingly abstract, enabling the description of the target’s overall shape and structure. By fusing images from the two modalities, the model can leverage the strengths of both, yielding richer feature representations and addressing the inherent limitations of a single modality [15].
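The data flow of the intermediate fusion scheme can be sketched with simple shape bookkeeping. The snippet below is an illustration only: the 640 × 640 input size, the channel widths, and the helper names `downsample` and `concat_fuse` are assumptions and are not taken from the paper's implementation. It shows that, after two stride-2 downsampling steps per branch, channel-wise concatenation keeps the spatial size and adds the channel counts.

```python
from typing import NamedTuple


class FeatureShape(NamedTuple):
    channels: int
    height: int
    width: int


def downsample(shape: FeatureShape, out_channels: int, stride: int = 2) -> FeatureShape:
    """Shape after a strided convolution with 'same'-style padding (assumed)."""
    # Ceil division matches the output size of a padded strided convolution.
    return FeatureShape(out_channels,
                        -(-shape.height // stride),
                        -(-shape.width // stride))


def concat_fuse(a: FeatureShape, b: FeatureShape) -> FeatureShape:
    """Channel-wise concatenation: spatial sizes must match, channels add up."""
    if (a.height, a.width) != (b.height, b.width):
        raise ValueError("feature maps must share the same spatial size")
    return FeatureShape(a.channels + b.channels, a.height, a.width)


# Hypothetical inputs: a 3-channel visible image and a 1-channel infrared image.
visible = FeatureShape(3, 640, 640)
infrared = FeatureShape(1, 640, 640)

# Two downsampling steps per modality (channel widths 16 and 32 are assumed).
for out_ch in (16, 32):
    visible = downsample(visible, out_ch)
    infrared = downsample(infrared, out_ch)

# First fusion point: concatenate the two branches along the channel axis.
fused = concat_fuse(visible, infrared)
print(fused)
```

In a real network the concatenated map would typically be passed through a further convolution to mix the two modalities; that step is omitted here.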

2.3. ESC_Head

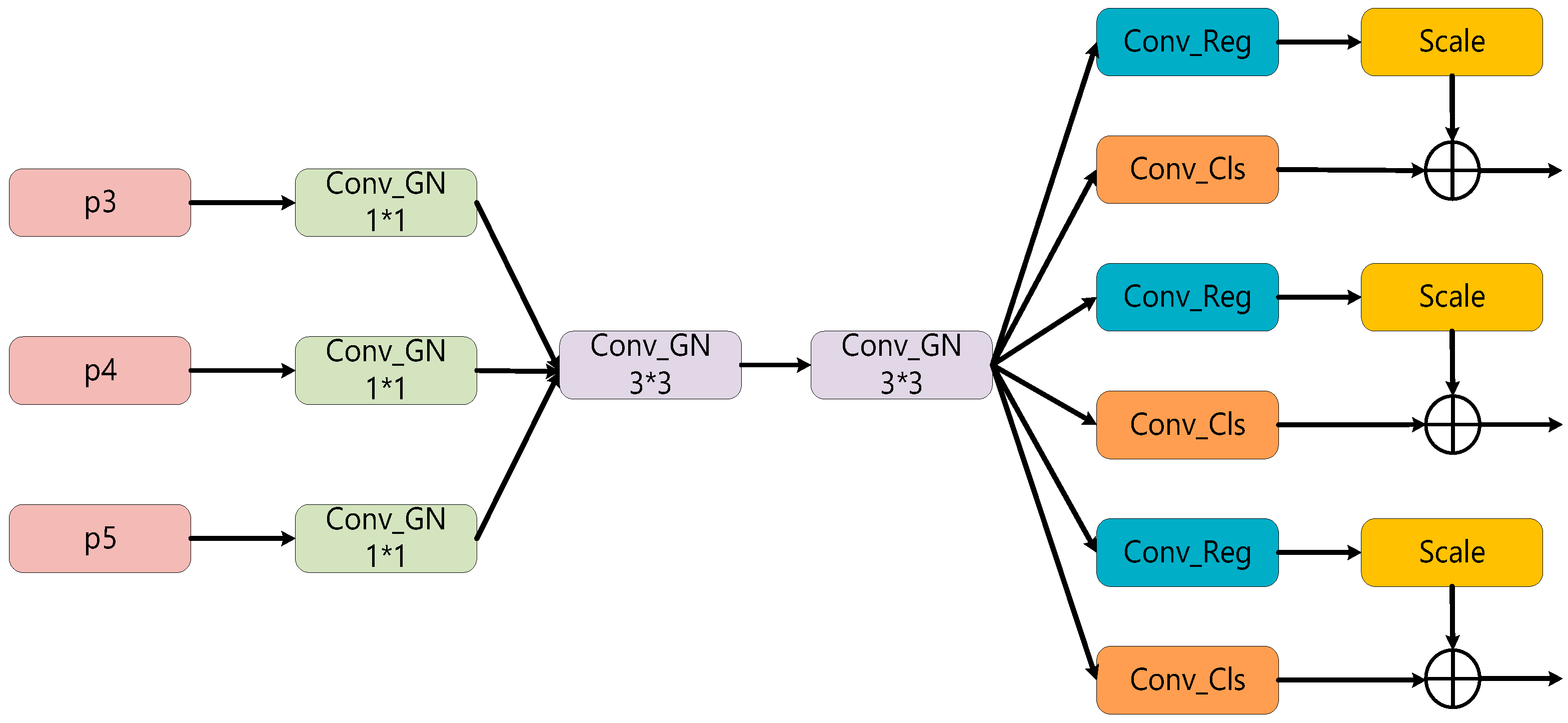

YOLOv8 uses a dual-branch decoupled head, in which separate branches compute the classification and bounding-box regression losses. This design incorporates two convolutional modules per branch, which significantly increases the parameter count and computational cost. To address this, we designed the ESC_Head, whose structure is presented in Figure 3.

A shared convolutional approach was implemented to minimize the parameter count while increasing processing speed. For underwater target recognition, particularly under computational budgets that require real-time performance, both inference speed and memory footprint are critical considerations. With a parameter sharing mechanism, the weights of the convolutional kernels are reused rather than duplicated, which significantly reduces the number of parameters and lowers the computational burden. These changes increase inference speed and alleviate overfitting caused by parameter redundancy, making the approach especially appropriate for large-scale scene analysis in underwater imagery.

A dedicated scaling module (the Scale layer) was integrated into the architecture to handle the inherent multi-scale variations in underwater imagery. This component enables dynamic rescaling of hierarchical features, improving the framework’s capability to address multi-scale detection challenges. Underwater targets often vary in size and distance, making it difficult for traditional object detection models to handle scale inconsistency. The Scale layer enables the model to adjust its response to targets of varying scales more precisely. This is particularly important in underwater images, where targets often present diverse scales and clarity issues due to factors such as water currents, fluctuating lighting, or inherent image quality problems. The Scale layer can alleviate these difficulties and enhance detection accuracy.

The use of shared convolutions not only reduces the number of model parameters but also enables the model to learn general features of underwater images more efficiently, such as low-level edges and textures of underwater targets. This capability is essential in underwater target recognition, where imagery frequently suffers from degraded visual information due to optical conditions and water turbidity. Through shared convolutions, the model can extract similar features at different positions, improving the learning efficiency of local features and consequently raising detection accuracy.

The detection process proceeds as follows. First, the feature maps P3, P4, and P5 output by different levels of the Feature Pyramid Network (FPN) are unified in channel count using 1 × 1 convolutional layers, and normalization is then applied using GroupNorm. This operation helps ensure the stability of training and provides high-quality feature maps for subsequent convolutional operations. Next, the feature maps are passed through two consecutive 3 × 3 convolutional layers with shared weights, which significantly reduces the model’s computational burden while enhancing its capability to extract underwater image features. Finally, the resulting feature maps enter the regression branch and the classification branch. The regression branch (Conv_Reg) outputs the bounding-box regression predictions for underwater targets, typically as coordinate offsets, while the classification branch (Conv_Cls) outputs the category predictions, such as foreground (fish and other underwater organisms) versus background, or multiclass probabilities. In underwater images, distinguishing targets from the background can be challenging due to factors such as water quality and lighting. With this branching structure, the model can attend to localization and classification simultaneously, improving detection accuracy. Using a Scale layer in the regression branch balances the prediction scales of feature maps at different levels, adapting to the detection requirements of multi-scale underwater targets, whose sizes and positions vary significantly. The Scale layer makes the model more adaptable to object detection at different scales and improves detection of distant underwater targets, thereby enhancing multi-scale detection performance.
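The parameter saving from weight sharing can be illustrated with a short count. This is a sketch under stated assumptions, not the paper's implementation: it assumes the two 3 × 3 convolutions are shared across the P3, P4, and P5 levels (one reading of the description above), that they have no bias, and that the unified channel width is 64; `conv_params` and `head_params` are hypothetical helper names.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Number of learnable weights in a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def head_params(channels: int, levels: int, shared: bool) -> int:
    """Parameters of two stacked 3 x 3 convolutions over `levels` pyramid levels."""
    per_stack = 2 * conv_params(channels, channels, 3, bias=False)
    # A shared stack is stored once; an unshared design keeps one copy per level.
    return per_stack if shared else per_stack * levels


CHANNELS = 64  # assumed unified channel width after the 1 x 1 convolutions
LEVELS = 3     # P3, P4, P5

unshared = head_params(CHANNELS, LEVELS, shared=False)
shared = head_params(CHANNELS, LEVELS, shared=True)
print(f"unshared: {unshared}, shared: {shared}, ratio: {unshared / shared:.1f}")
```

Under these assumptions the shared stack stores one third of the weights of a per-level design. The per-level Scale layer adds only one learnable scalar per level, so it does not materially change this count.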

2.4. MSCA Attention Mechanism

In underwater object recognition, attention mechanisms play an essential role by emulating the selective focus of the human visual system. This enables models to concentrate on salient regions while filtering out irrelevant background content, ultimately enhancing detection precision. To mitigate excessive computational complexity when processing high-resolution images, previous object detection research proposed using attention mechanisms to establish long-range dependencies. Generally speaking, attention mechanisms can be categorized into channel attention and spatial attention, particularly in semantic segmentation [16].

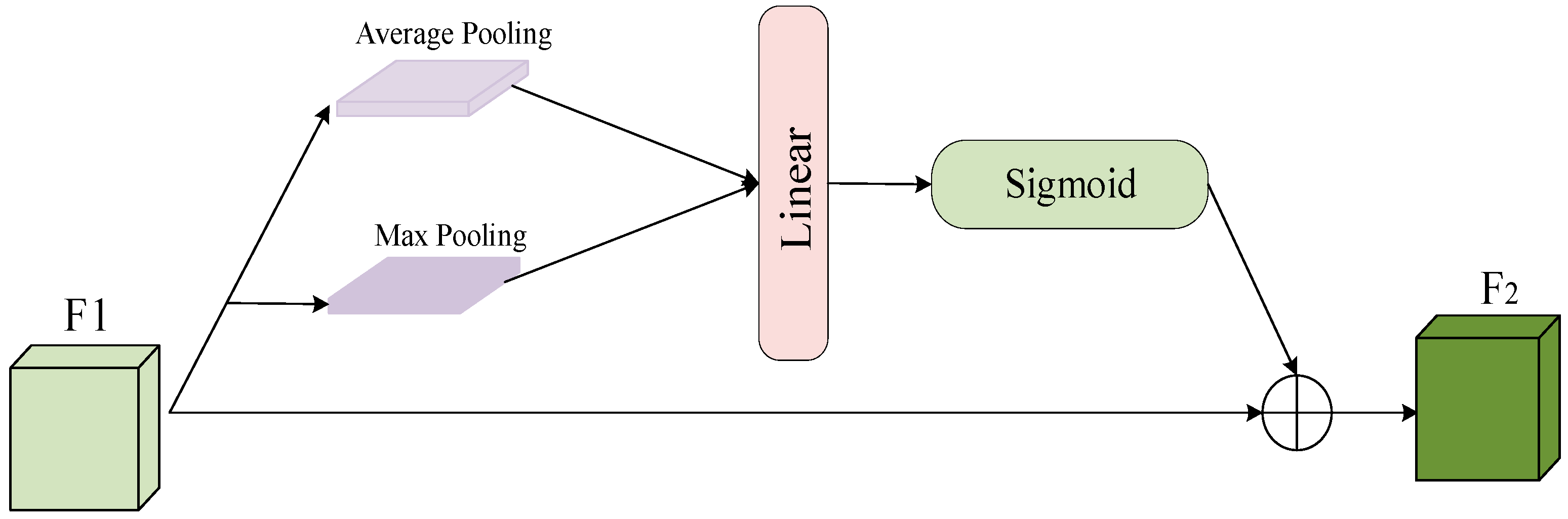

As illustrated in Figure 4, the channel attention module assigns adaptive weights to input features based on inter-channel dependencies. The module first compresses the feature maps through parallel global average pooling and global max pooling, producing representations with dimensions (batch_size, channels, 1, 1). These aggregated features are then fed into a two-layer multilayer perceptron to derive a weighting coefficient for each channel. The learned weights are passed through a Sigmoid activation function to output attention weights ranging from 0 to 1. The resulting channel attention weights are multiplied element-wise with the input feature map, thereby enhancing the response of important channels and suppressing the influence of unimportant ones [17].
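The channel attention steps above can be sketched in plain Python on a single small feature map. This is a minimal illustration, not the authors' code: the tiny weights `w1` and `w2` are made up, and summing the MLP outputs of the two pooled descriptors before the Sigmoid is an assumption borrowed from the common CBAM-style formulation.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def mlp(vec, w1, w2):
    """Two-layer perceptron with ReLU: w1 is (hidden x C), w2 is (C x hidden)."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(x, w1, w2):
    """Reweight each channel of x (indexed [channel][row][col]) by a gate in (0, 1)."""
    flat = [[v for row in ch for v in row] for ch in x]
    avg_pool = [sum(ch) / len(ch) for ch in flat]  # global average pooling
    max_pool = [max(ch) for ch in flat]            # global max pooling
    # The same MLP processes both descriptors; the outputs are summed (assumed).
    logits = [a + m for a, m in zip(mlp(avg_pool, w1, w2), mlp(max_pool, w1, w2))]
    gates = [sigmoid(z) for z in logits]
    out = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, x)]
    return out, gates


# Toy input: 2 channels of size 2 x 2, with made-up MLP weights (hidden size 1).
x = [[[1.0, 2.0], [3.0, 4.0]],
     [[0.0, 0.0], [0.0, 1.0]]]
w1 = [[1.0, -1.0]]
w2 = [[1.0], [-1.0]]

out, gates = channel_attention(x, w1, w2)
print(gates)
```

With these toy weights the first channel receives a gate close to 1 and the second a gate close to 0, so the first channel is kept almost unchanged while the second is suppressed.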

As shown in Figure 5, the spatial attention module weights the input feature map according to the significance of each spatial location. A spatial attention map is generated through a 7 × 7 convolution without a bias term to capture local spatial information. The map is passed through a Sigmoid activation function to produce weights representing the significance of each spatial location. This attention map is then multiplied by the input feature map, enhancing the information at important locations and suppressing the influence of unimportant ones [18].

However, the channel and spatial attention mechanisms described above rarely account for target properties such as size and shape. How to effectively encode global context while taking into account the different shapes and sizes of targets therefore remains a problem worth investigating. To enhance the model’s response to important features, especially in cases of blur, low contrast, or heavy occlusion, and to help the model better recognize targets, this paper designs MSCA based on an effective axial attention mechanism. Its principle is shown in Figure 6, where “d, k1 × k2” denotes a depthwise convolution (d) with a kernel size of k1 × k2. Convolutions are used to extract multi-scale features, which then serve as attention weights to re-weight the input of MSCA. MSCA contains three parts: a depthwise convolution for aggregating local information, multi-branch depthwise strip convolutions for capturing multi-scale context, and a 1 × 1 convolution for modeling relations between different channels. The output of the 1 × 1 convolution is used directly as the attention weights that re-weight the input of MSCA. Stacking a series of building blocks produces a convolutional encoder, named MSCAN. For MSCAN, we adopt a common hierarchy of four stages with spatial resolutions of H/4 × W/4, H/8 × W/8, H/16 × W/16, and H/32 × W/32, respectively, where H and W are the height and width of the input image. Each stage contains a downsampling block followed by a stack of building blocks, where the downsampling block consists of a convolution with a stride of 2 and a kernel size of 3 × 3, followed by a batch normalization layer. Notably, each building block of MSCAN uses Batch Normalization instead of Layer Normalization, because Batch Normalization provided a greater performance gain in our experiments.

Mathematically, MSCA can be written as:

$$\mathrm{Att} = \mathrm{Conv}_{1 \times 1}\left(\sum_{i=0}^{3} \mathrm{Scale}_i\big(\mathrm{DW\text{-}Conv}(F)\big)\right)$$

$$\mathrm{Out} = \mathrm{Att} \otimes F$$

where F represents the input feature, Att and Out are the attention map and the output, respectively, and ⊗ is the element-wise matrix multiplication operation. DW-Conv represents depthwise convolution, and Scale_i, i ∈ {0, 1, 2, 3}, represents the i-th branch in Figure 6b. In each branch, two depthwise strip convolutions are used to approximate a standard depthwise convolution with a large kernel. The kernel sizes of the strip-convolution branches are set to 7, 11, and 21, respectively. There are two reasons for selecting depthwise strip convolutions. On one hand, strip convolution is lightweight: to approximate a standard 2D convolution with a kernel size of 7 × 7, only a pair of 7 × 1 and 1 × 7 convolutions is needed. On the other hand, strip convolutions complement grid (square-kernel) convolutions and help extract strip-like features. Finally, the MSCA attention mechanism is integrated into the backbone of the YOLOv8 model.
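The "lightweight" argument for strip convolutions can be checked with a short count of depthwise weights per channel. The sketch below only does this arithmetic for the three kernel sizes named above; the helper names are hypothetical and biases are ignored.

```python
def depthwise_params(channels: int, kh: int, kw: int) -> int:
    """Weights of a depthwise convolution: one kh x kw kernel per channel."""
    return channels * kh * kw


def strip_pair_params(channels: int, k: int) -> int:
    """Weights of a 1 x k depthwise convolution followed by a k x 1 one."""
    return depthwise_params(channels, 1, k) + depthwise_params(channels, k, 1)


# Per-channel comparison for the three branch kernel sizes.
comparison = {}
for k in (7, 11, 21):
    full = depthwise_params(1, k, k)   # k * k weights
    strips = strip_pair_params(1, k)   # 2 * k weights
    comparison[k] = (full, strips)
    print(f"k={k}: full {full}, strip pair {strips}")
```

The strip pair covers the same k × k receptive field with 2k weights per channel instead of k², and the saving grows with the kernel size.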

3. Experimental Results and Analysis

3.1. Experimental Environment

The software versions and computing platform used in the experiments are the same as those specified in Section 2. The experimental dataset consists of 6034 valid underwater images of four distinct marine species (sea cucumbers, sea urchins, scallops, and starfish) obtained from a public dataset. The URPC2021 dataset was acquired in the waters of the Changshan Islands, Dalian, by the Underwater Robot Professional Contest in 2021 and features bounding box annotations in Pascal VOC format. The images typically have a resolution of 3840 × 2160, and the scenes encompass near-seabed pastures and sandy substrates. The dataset is diverse in target scale, pose, distribution density, and seabed environment. Significantly, it includes samples with challenging imaging conditions, such as blur, color cast, and uneven illumination, thus providing a comprehensive benchmark for evaluating and improving model robustness. For model training and evaluation, the dataset was randomly partitioned into training and test sets at a 7:3 ratio: the training set consisted of 4224 images and the test set of 1810 images.

3.2. Evaluation Metrics

This study employs precision, recall, mean average precision (mAP), and parameter count as key indicators to comprehensively assess the detection capability of the proposed model. The detection metrics are calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

Here, True Positives (TP), False Positives (FP), and False Negatives (FN) are the three fundamental counts, AP is the area under the precision-recall curve of a single category, and N is the number of object categories. Mean Average Precision (mAP) serves as the primary performance indicator, computed by averaging the AP values across all object categories. Two specific mAP variants are used: mAP@0.5, with a fixed IoU threshold of 0.5, and mAP@0.5:0.95, which averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.
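These metrics can be computed with a few lines of Python. The snippet is a sketch rather than the evaluation code used in the experiments: the all-point interpolation used for AP is one common convention (an assumption here), the function names are hypothetical, and the sample numbers are made up.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls, precisions) -> float:
    """Area under the PR curve (all-point interpolation, recalls ascending)."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Made-up example: 8 correct detections, 2 false alarms, 2 missed objects.
p_val = precision(tp=8, fp=2)
r_val = recall(tp=8, fn=2)

# Made-up PR points for one class, then a two-class mAP.
ap_a = average_precision([0.5, 1.0], [1.0, 0.5])
m_ap = mean_average_precision([ap_a, 0.25])
print(p_val, r_val, ap_a, m_ap)
```

mAP@0.5:0.95 would repeat the AP computation with matches determined at each IoU threshold from 0.5 to 0.95 and average the results.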

3.3. Performance Comparison and Result Analysis

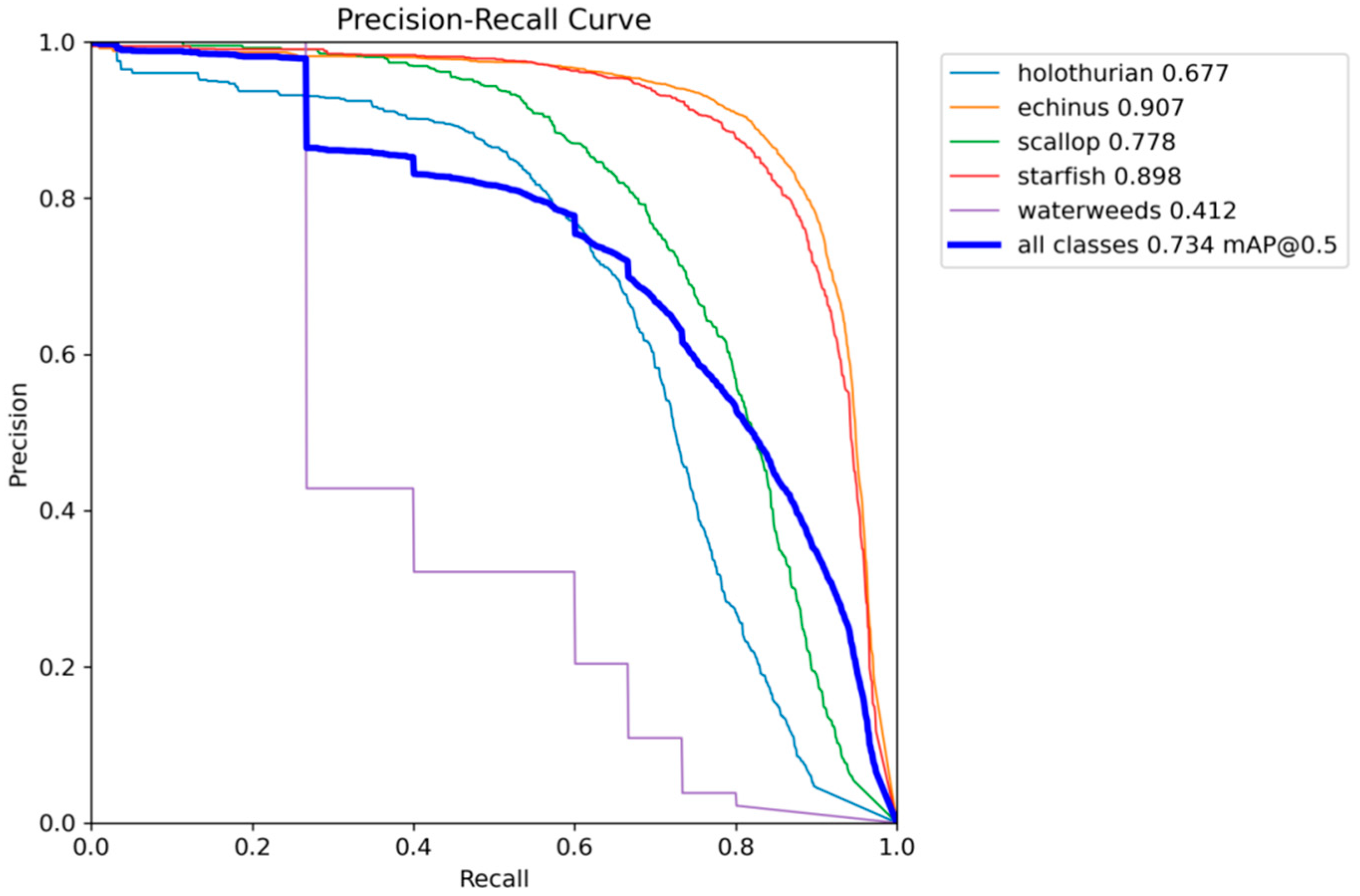

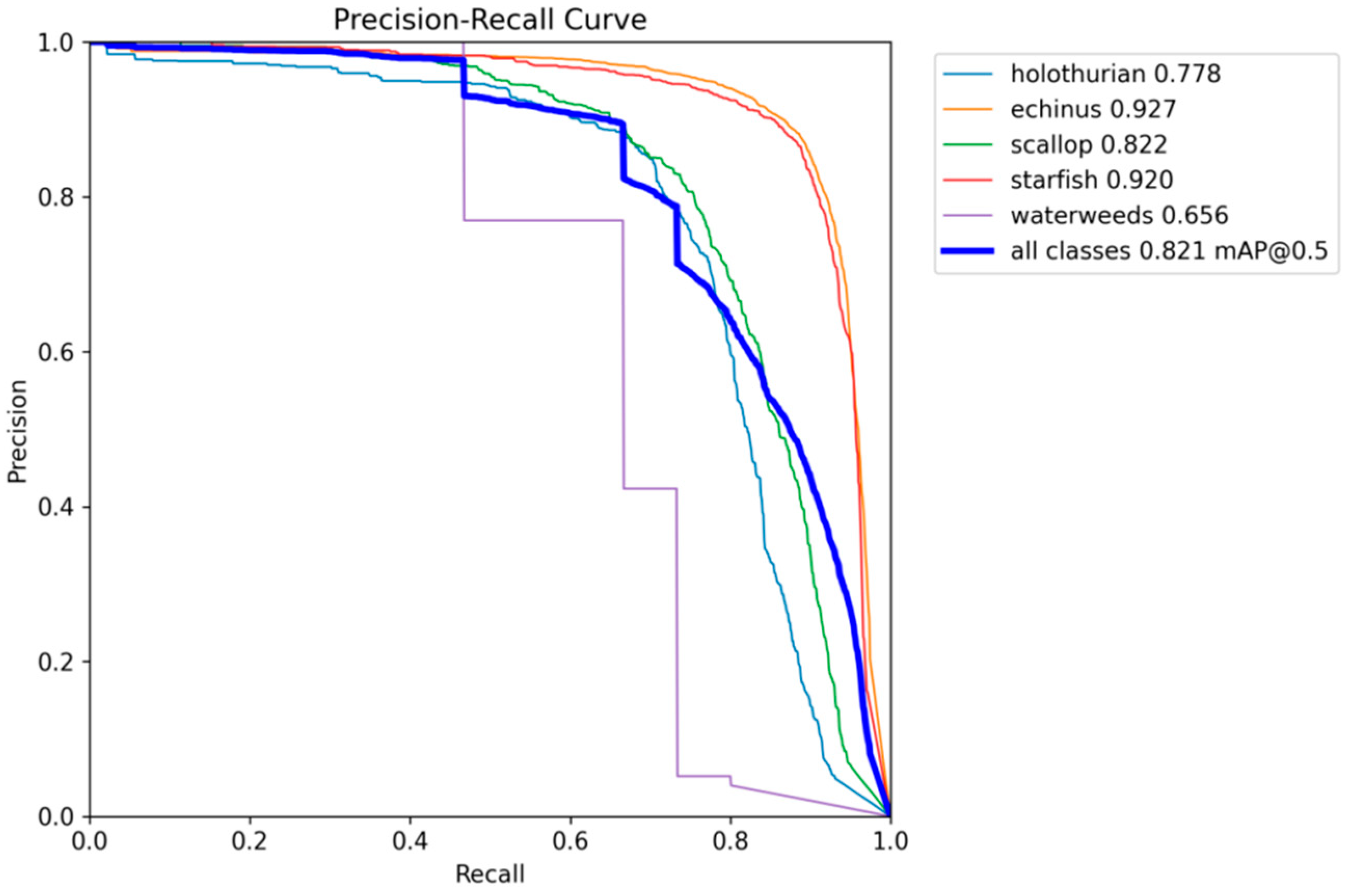

The improved multimodal underwater object detection model YOLOv8-FUSED was trained and validated on the URPC2021 dataset, as shown in Figure 7 and Figure 8. YOLOv8-FUSED achieves strong detection performance for all object categories, with an mAP@0.5 of 82.1%, an 8.7% improvement over the original YOLOv8n model. The improvement is further evidenced by the blue precision-recall curve, which moves clearly toward the optimal region. This reflects a better balance between precision and recall, accompanied by stronger target localization and classification. In addition, the precision for each category improved compared to the original YOLOv8n model. Specifically, holothurian improved by 10%, echinus by 2%, scallop by approximately 5%, and starfish by 2%. Notably, waterweeds improved by approximately 25%, indicating that YOLOv8-FUSED is considerably better at detecting such difficult-to-recognize targets.

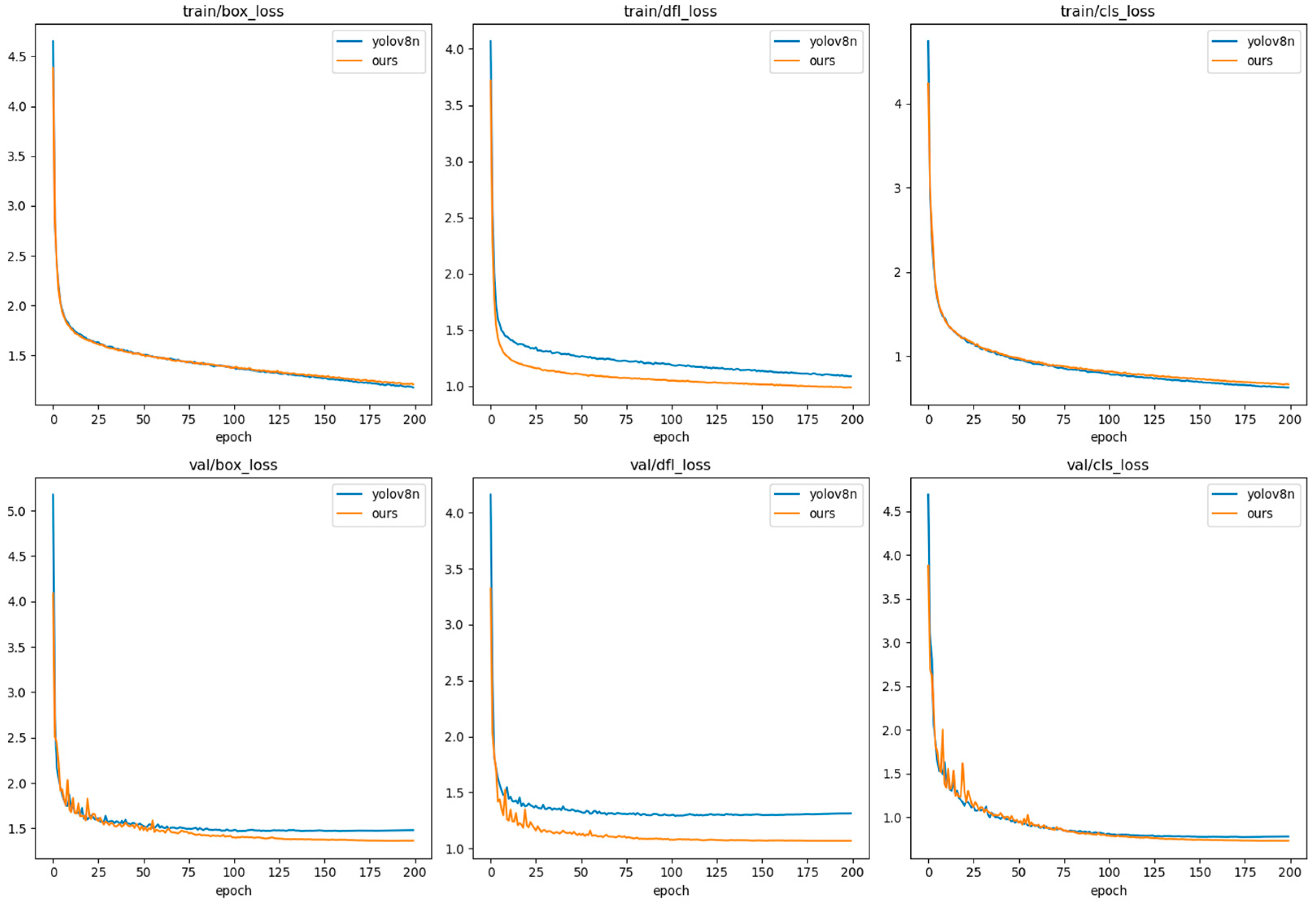

A comparison of the training procedures of the baseline YOLOv8n and the enhanced multimodal YOLOv8-FUSED framework reveals consistent convergence behavior, with Figure 9 showing a rapid decline in loss for both architectures as training advances. The loss curve flattens as the loss value approaches 1.4, and full convergence is achieved when the number of training epochs reaches 200. At 200 epochs, however, the YOLOv8-FUSED model exhibits a lower loss value. This suggests that its training process is more stable, that it generalizes better for target detection, and that it carries a lower risk of overfitting. As shown in Figure 10, the enhanced architecture is clearly superior to the baseline in Precision, Recall, and mAP, validating the efficacy of the proposed modifications.

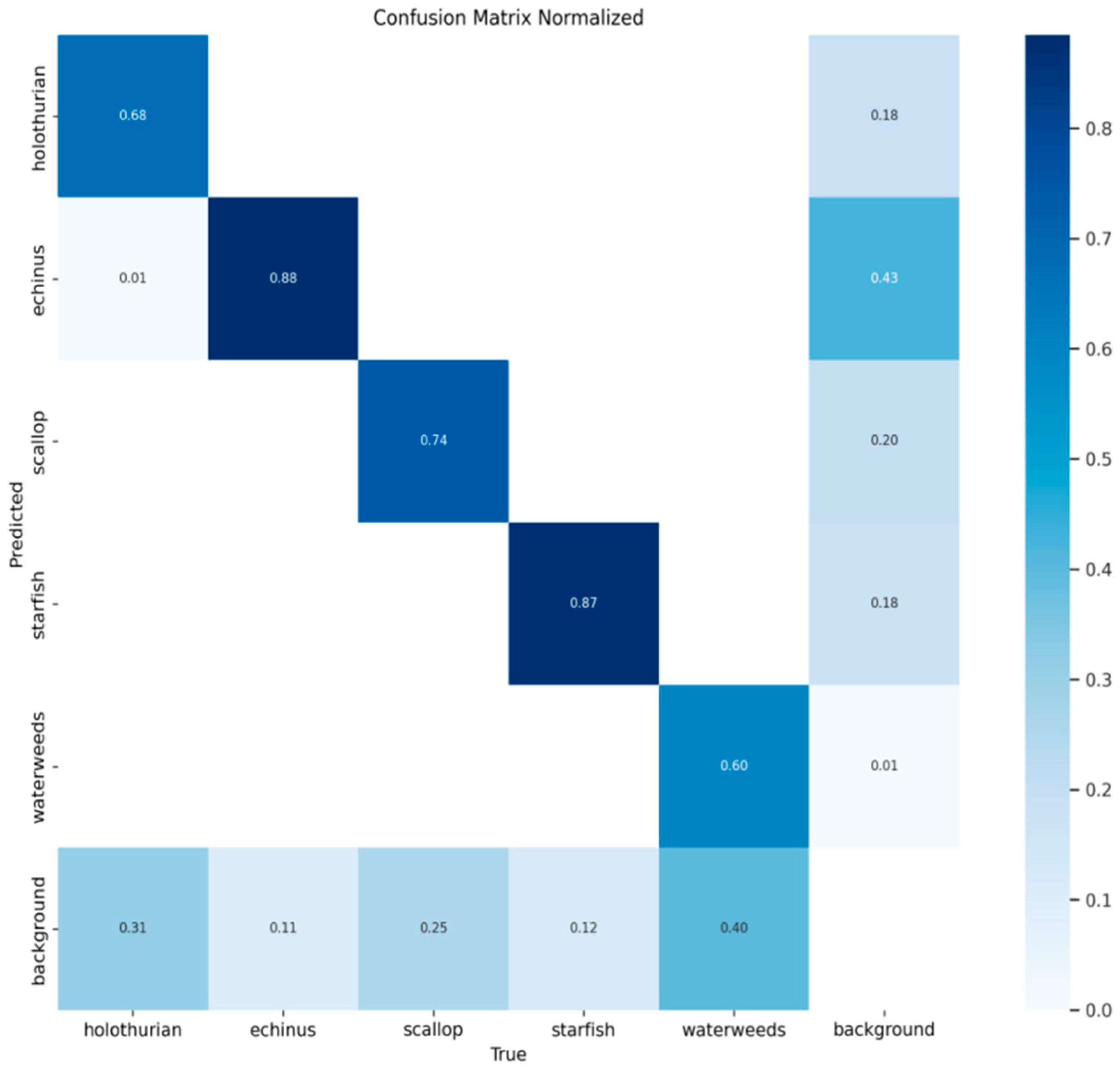

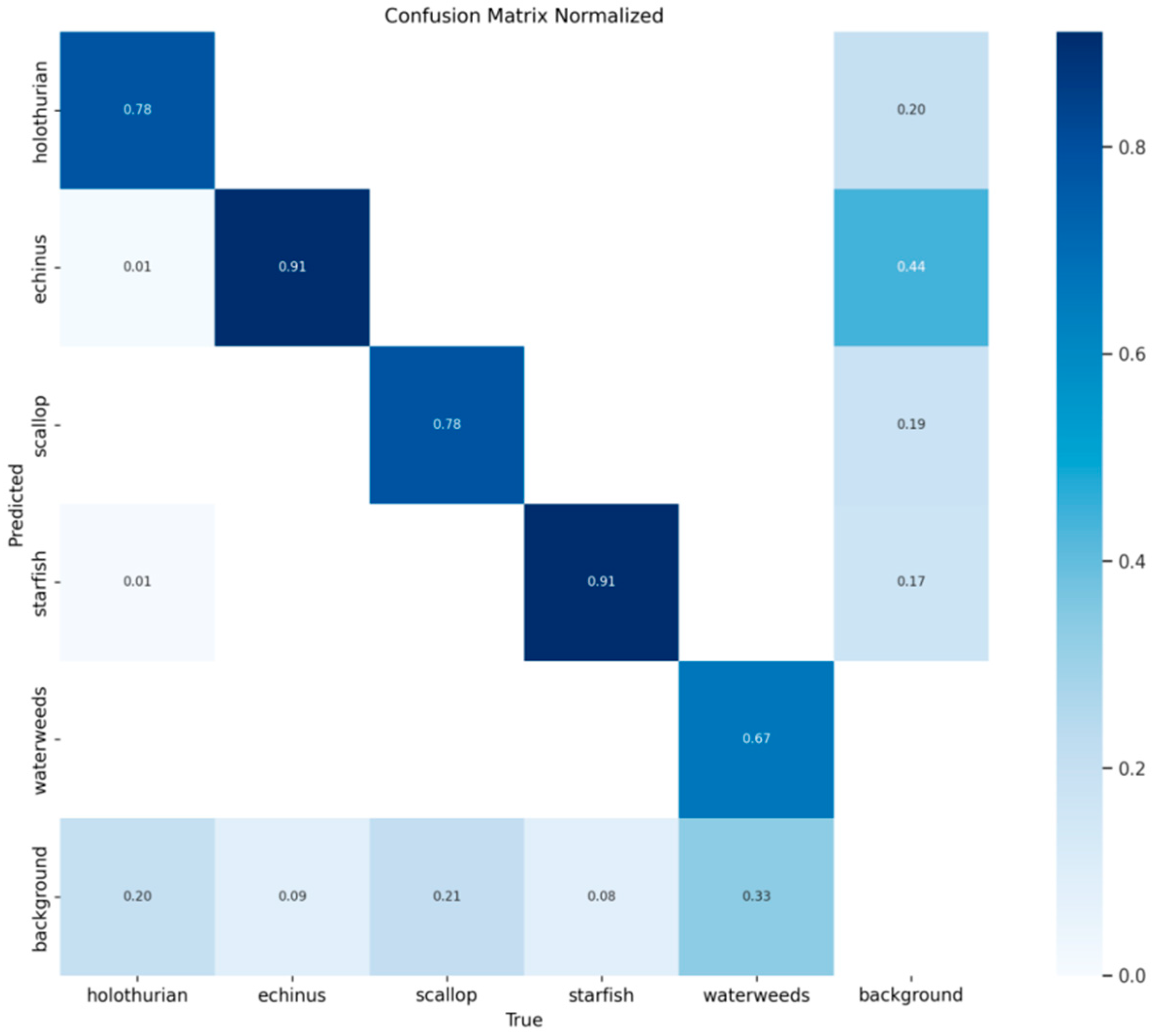

A confusion matrix was generated from the validation dataset to provide a comprehensive evaluation of the enhanced model’s performance, with columnar entries indicating predicted classifications and row-wise annotations corresponding to actual categories.

A comparison of the confusion matrices of the baseline YOLOv8n and the enhanced multimodal framework YOLOv8-FUSED, illustrated in Figure 11 and Figure 12, shows substantial improvements in both recognition precision and robustness across all object categories. Specifically, for the “holothurian” category, the recall of the improved model increased from the baseline’s 0.68 to 0.78, while its false detection rate decreased markedly. For the “echinus” and “scallop” categories, the improved model achieved recall rates of 0.91 and 0.78, respectively, with a notable decrease in false alarm rate relative to the baseline and better discrimination under substantial background interference. Similarly, for the “starfish” and “waterweeds” categories, the enhanced model significantly improved detection accuracy, with recall rates reaching 0.91 and 0.67, respectively. Notably, within the “waterweeds” category, the refined model reduced both false negatives and the false positive rate. Regarding background classification, the improved model also reduced background false positives and better separated background elements from targets. By concentrating on relevant areas while suppressing disruptive background elements, the refined framework is more robust and adaptable in challenging underwater conditions, which results in higher detection accuracy and considerable potential for practical application.
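Per-class recall values such as those quoted above are read from the confusion matrix by dividing each diagonal entry by its row total, given the stated convention that rows are actual categories and columns are predictions. The sketch below illustrates this on a made-up two-class matrix; the counts, class names, and the function name are hypothetical and unrelated to the reported results, and a real detection confusion matrix would also include a background class.

```python
def per_class_recall(matrix, labels):
    """Recall per class for a matrix with actual classes as rows."""
    result = {}
    for i, (label, row) in enumerate(zip(labels, matrix)):
        total = sum(row)  # all ground-truth instances of this class
        result[label] = row[i] / total if total else 0.0
    return result


# Made-up counts: rows are actual classes, columns are predicted classes.
labels = ["class_a", "class_b"]
matrix = [
    [8, 2],  # 8 of 10 actual class_a instances predicted as class_a
    [1, 9],  # 9 of 10 actual class_b instances predicted as class_b
]

recalls = per_class_recall(matrix, labels)
print(recalls)
```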

To verify the effectiveness of the model improvement more intuitively, Figure 13 presents a comparison of the visual detection results of the baseline YOLOv8n model and the improved multimodal YOLOv8-FUSED model on the URPC2021 dataset. As Figure 13 shows, the improved model achieves clear gains in both detection accuracy and robustness. Although the baseline architecture can identify multiple objects, its output still contains substantial false detections and omissions, which undermines operational reliability.

For instance, in the first image, the baseline model erroneously detected certain background regions as targets, with low confidence scores for these detections, highlighting its limitations in complex environments. The enhanced framework showed marked improvement in both detection precision and stability, recognizing targets while suppressing background disturbances. This improvement is particularly evident in the detection confidence: in the “holothurian” category, the confidence score increased from 0.85 for the baseline model to 0.89. The improved model also localizes targets more accurately in low-contrast and complex backgrounds; in the “starfish” category, for example, it reduced missed detections and improved detection accuracy. Overall, by optimizing feature extraction and enhancing robustness, the improved model significantly improved target detection performance and demonstrated stronger adaptability, especially when processing diverse targets in complex underwater environments.

Heatmap visualizations, presented in Figure 14, were generated to better illustrate the advantages of the improved model, with red regions marking areas where the model pays greater attention to features. Comparing the heatmaps of YOLOv8-FUSED with those of the original YOLOv8n model shows a clear improvement in detection accuracy and focusing ability. In the heatmap of the original YOLOv8n model, the heat distribution within the target region is scattered and less concentrated. This suggests that the model encounters errors and uncertainty during object recognition, resulting in suboptimal performance in complex backgrounds and low-contrast areas. In contrast, the improved model exhibits more concentrated heat regions, leading to more precise object recognition. These heat regions clearly delineate the target, reducing interference from background noise, and demonstrate the improved model’s stronger target localization. The refined framework is thus more robust in complex aquatic settings, concentrating more precisely on relevant regions and thereby improving both detection consistency and precision.

4. Ablation Experiments and Comparative Experiments

A comprehensive set of modular ablation studies was performed on the URPC2021 benchmark to evaluate the efficacy of the individual enhancement components. Each group of experiments was conducted under identical conditions, with the same hyperparameters and the same training strategy. The experimental results are presented in Table 1, where A, B, C, and D denote the baseline model YOLOv8n, the multimodal fusion strategy, ESC_Head, and the MSCA attention mechanism, respectively.

First, Table 1 shows that the original model YOLOv8n exhibits stable performance across most categories. However, its mAP@0.5 and mAP@0.5:0.95 are relatively low, particularly demonstrating poor detection accuracy under low-IoU conditions.

The introduction of the multimodal fusion strategy (A + B) led to a significant improvement in the model’s precision and recall. With the integration of ESC_Head (A + C), a slight decrease in precision was observed, though recall improved from 0.692 to 0.706, and the parameter count was reduced from 3.2M to 2.83M. Incorporating the MSCA attention mechanism (A + D) substantially enhanced the model’s ability to focus on target regions, resulting in notable gains in the detection of the “echinus” and “starfish” categories. When the model lacked the MSCA attention mechanism (A + B + C), a precision of only 0.723 was observed, which is lower than the 0.769 achieved by the original YOLOv8n model. Conversely, with the incorporation of MSCA (A + B + D), precision increased to 0.807. These findings indicate that the Multi-Scale Cross-Axis Attention (MSCA) module significantly improves the model’s capacity to concentrate on critical image regions while mitigating background interference, which in turn improves feature extraction quality.

Finally, the A + B + C + D (OURS) model delivered the best overall performance. Relative to the baseline YOLOv8n, precision and recall improved by 3.8 and 9.1 percentage points, respectively, and mAP@0.5 and mAP@0.5-0.95 increased by 8.6 and 8.3 percentage points, respectively. Compared to A + B + D, the model size was reduced from 4.62M to 3.86M. The ablation experiments demonstrate that combining the visible and infrared multimodal fusion strategy, ESC_Head, and the MSCA attention mechanism enhances the accuracy of underwater object detection while reducing model size relative to the variant without ESC_Head. This combination also adapts better to complex environmental factors and diverse objects.
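As a quick consistency check on the figures quoted in this discussion, the short sketch below recomputes the final precision and recall from the baseline values plus the stated percentage-point gains, and expresses the two size reductions in relative terms. It uses only numbers that appear in the text; the helper names are illustrative, and Table 1 itself is not reproduced.

```python
# Figures quoted in the ablation discussion (metrics on a 0-1 scale).
BASELINE_PRECISION = 0.769   # A (YOLOv8n)
BASELINE_RECALL = 0.692      # A (YOLOv8n)
PRECISION_GAIN_PP = 3.8      # A + B + C + D versus A, in percentage points
RECALL_GAIN_PP = 9.1


def add_points(value, points):
    """Add a gain expressed in percentage points to a 0-1 metric."""
    return round(value + points / 100.0, 3)


def relative_reduction(before, after):
    """Relative reduction in percent, rounded to one decimal place."""
    return round(100.0 * (before - after) / before, 1)


final_precision = add_points(BASELINE_PRECISION, PRECISION_GAIN_PP)
final_recall = add_points(BASELINE_RECALL, RECALL_GAIN_PP)
head_only_reduction = relative_reduction(3.2, 2.83)    # A -> A + C
full_model_reduction = relative_reduction(4.62, 3.86)  # A + B + D -> A + B + C + D

print(final_precision, final_recall)
print(head_only_reduction, full_model_reduction)
```

The recomputed precision and recall (0.807 and 0.783) agree with the values reported for the full model in the comparison with other detectors, and the two size reductions correspond to roughly 11.6% and 16.5%.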

A comparative analysis was performed to validate the improved YOLOv8-FUSED framework, with detailed results given in Table 2. The proposed framework outperforms established detection architectures, including YOLOv5, YOLOv9, YOLOv10, YOLOv11, RTDETR, and MAMBAYOLO, across multiple evaluation metrics. Specifically, it attained precision and recall of 80.7% and 78.3%, respectively, striking a better balance between detection accuracy and coverage than the other models. In contrast, YOLOv5 and RTDETR showed lower precision and recall, suggesting more missed detections and false positives. On mAP@0.5, our model achieved 82.1%, significantly outperforming YOLOv5 (71.4%) and other YOLO-series models such as YOLOv9 (73.3%) and YOLOv10 (71.8%).

Our model also performed well across individual categories, particularly “holothurian,” “echinus,” and “scallop,” where its mAP@0.5 values surpassed those of the other models. In the more challenging “waterweeds” category, it achieved an mAP@0.5 of 65.6%, a significant improvement over models such as YOLOv5 (31.8%) and RTDETR (28.8%). This demonstrates its enhanced adaptability under challenging conditions such as low contrast, complex backgrounds, and object occlusion.

Overall, by fusing multimodal data, incorporating ESC_Head, and employing the MSCA attention mechanism, our model significantly enhanced the accuracy, robustness, and computational efficiency of underwater object detection. This is particularly evident in complex environments, where it detects multiclass targets more precisely. The model achieves a good balance between detection accuracy and speed and offers significant practical value compared to existing models.

Figure 15 presents how the accuracy metrics evolve during the training of the various models. A detailed comparison of the curves indicates that the improved model (OURS) consistently outperformed the other models on all key metrics. Across precision, recall, mAP@0.5, and mAP@0.5-0.95, the OURS model maintained high scores throughout training and continued to improve steadily in the later stages, underscoring its strong performance in object detection, particularly under stringent IoU requirements. In contrast, while YOLOv10 and YOLOv11 also performed well in terms of precision and recall, they improved more slowly, and their detection capabilities were notably weaker under more stringent IoU standards. RT-DETR and mambaYOLO consistently showed inferior results across multiple metrics, particularly at elevated IoU thresholds, where their detection performance improved only sluggishly, indicating limited optimization potential. Overall, by leveraging an optimized architectural design and training strategy, the OURS model improves the accuracy, robustness, and adaptability of object detection and shows clear advantages and application potential, particularly in complex environments.
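To make "stringent IoU requirements" concrete, the sketch below computes the intersection over union (IoU) of two boxes and lists which thresholds a prediction passes. It assumes the common convention that mAP@0.5-0.95 averages over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05; the boxes and the helper `thresholds_passed` are hypothetical and serve only as an illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Assumed convention: thresholds 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, truth):
    """IoU thresholds at which pred would still count as a match for truth."""
    score = iou(pred, truth)
    return [t for t in THRESHOLDS if score >= t]


truth = (0, 0, 100, 100)
pred = (10, 10, 110, 110)  # hypothetical prediction, shifted by 10 px

print(round(iou(pred, truth), 3))
print(thresholds_passed(pred, truth))
```

In this toy case a shift of only 10 px leaves the prediction valid at the four lowest thresholds and invalid at the remaining six, which is why imprecise localization lowers mAP@0.5-0.95 much more than mAP@0.5.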

5. Conclusions

This paper proposes YOLOv8-FUSED, a multimodal underwater object detection model that fuses visible and infrared light images. It is designed and trained on the basis of the YOLOv8 model and integrates multimodal image fusion technology. First, by adopting an intermediate fusion strategy to integrate features from the infrared and visible imaging modalities, the model acquires more comprehensive feature representations and becomes substantially more robust in challenging environments. Second, we propose the Multi-Scale Cross-Axis Attention mechanism (MSCA), which helps the model concentrate on salient regions of the input and thereby raises recognition precision. Finally, to meet the lightweight requirement, we design the Efficient Shared Convolution Head (ESC_Head), which reduces the model’s parameter count while improving computational efficiency. Overall, a series of comparative experiments has demonstrated that the improved model significantly enhances the accuracy, robustness, and computational efficiency of underwater object detection. It is particularly effective in complex environments, where it detects multiclass targets more accurately. Compared to existing models, it strikes a good balance between detection accuracy and speed and thus offers significant practical application value.

While this work has made some progress, several limitations remain to be addressed in the future. For example, light decays exponentially with depth in water, resulting in low overall image luminance and poor contrast, which affects the generalization ability of the model. Meanwhile, water surface undulation and local light sources can cause irregular light spots and shadows that severely obscure target features, making it difficult for the model to extract discriminative texture and contour information. In addition, suspended particles cause severe visual blur and color distortion: before the light reaches the camera sensor, much of it is scattered, so the image appears to be covered with a layer of “haze” and target edges become blurred. Moreover, light scattered back toward the camera by these particles (backscattering) introduces a large amount of noise into the image, further submerging the target signal.
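The effects described above can be illustrated with the widely used simplified underwater image formation model, in which the observed intensity is the scene signal attenuated by a Beer-Lambert transmission term plus a backscatter (veiling light) term. The sketch below is not part of the proposed method. The attenuation coefficient, veiling light, and intensities are hypothetical values chosen for illustration, and the model is applied along the light path between target and camera.

```python
import math


def transmission(path_m, beta_per_m):
    """Beer-Lambert transmission: fraction of light surviving path_m metres."""
    return math.exp(-beta_per_m * path_m)


def observed(scene, path_m, beta_per_m, veiling_light):
    """Simplified image formation: attenuated scene signal plus backscatter."""
    t = transmission(path_m, beta_per_m)
    return scene * t + veiling_light * (1.0 - t)


def contrast(target, background, path_m, beta_per_m, veiling_light):
    """Absolute target/background intensity difference at the sensor."""
    return abs(
        observed(target, path_m, beta_per_m, veiling_light)
        - observed(background, path_m, beta_per_m, veiling_light)
    )


# Hypothetical values: intensities in [0, 1], attenuation in 1/m.
BETA = 0.2
VEIL = 0.5
for path in (0.0, 2.0, 5.0, 10.0):
    t = transmission(path, BETA)
    c = contrast(0.9, 0.3, path, BETA, VEIL)
    print(f"path={path:4.1f} m  transmission={t:.3f}  contrast={c:.3f}")
```

Under this model the target/background contrast shrinks by the same exponential factor as the transmission, while the observed intensity drifts toward the veiling light, which matches the haze and blurred edges described above.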

Looking ahead, underwater object detection remains a promising area of research with considerable room for advancement. This includes the construction of more comprehensive underwater datasets, encompassing a wider variety of marine life and artificial objects, to thoroughly evaluate and enhance the generalization capabilities of models. Furthermore, embedded deployment optimization will be conducted to achieve end-to-end real-time performance verification.