1. Introduction

Optimization algorithms play a pivotal role in resolving complex engineering challenges by identifying optimal solutions under specific constraints [1,2]. In recent years, metaheuristic algorithms have garnered significant attention for their capacity to explore vast solution spaces efficiently without requiring gradient information [3]. These algorithms, inspired by natural phenomena, physical laws, and swarm intelligence, have been successfully applied across diverse domains, including forecasting, control systems, and mechanical engineering. However, in the specific application domain of electric winch operation on petroleum drilling rigs, traditional optimization methods encounter significant challenges. As the power core of the derrick hoisting system, the electric winch must balance operational efficiency against safety. During field operations, operator deviations in controlling the winch’s hoisting/lowering speed can readily trigger severe safety incidents such as ‘top bumping’ or ‘bottom slamming’. Such incidents not only directly threaten personnel safety and equipment structural integrity but also precipitate a cascade of consequences, including drilling interruptions and drill string damage. This underscores the critical impact of winch speed control precision on drilling safety and continuity.

This class of electric winch trajectory planning problems constitutes a typical complex multi-objective optimization problem. Its core characteristic is the simultaneous optimization of multiple conflicting objectives, where improving any single objective often degrades others; consequently, a Pareto-optimal solution set must be sought [4,5]. Resolving this engineering challenge requires an integrated solution that combines ‘algorithm performance enhancement with engineering scenario adaptation’. Practical engineering problems such as electric winch trajectory planning exhibit complex constraints, high dimensionality, and strong nonlinearity [6,7]. These characteristics render traditional mathematical optimization techniques, which rely on gradient information and have weak constraint-handling capabilities, ineffective: such approaches frequently converge to local optima and struggle to identify balanced solutions amid conflicting objectives.

Against this backdrop, metaheuristic algorithms have garnered significant attention and developed rapidly owing to their freedom from gradient information, strong global search ability, and high flexibility [8]. Early metaheuristic algorithms fall primarily into three categories: evolutionary, physics-inspired, and swarm intelligence-based [3]. Representative examples include genetic algorithms (GAs) [9], simulated annealing (SA) [10], and particle swarm optimization (PSO) [11]. Subsequent developments yielded newer algorithms such as grey wolf optimization (GWO) [12] and the whale optimization algorithm (WOA) [13]. Today, the classification system has become more systematic, with new categories emerging, such as chemical reaction-based algorithms [14], human behavior-inspired algorithms such as Teaching-Learning-Based Optimization (TLBO) [15], gradient-based optimization algorithms (GSO) [16], and the Portia Spider Algorithm (PSA) [17]. Current research priorities have shifted from ‘proposing entirely novel algorithms’ to ‘enhancing the performance of existing algorithms’. Mainstream approaches include introducing adaptive mechanisms, integrating chaotic strategies, or combining machine learning to construct hybrid models. Crucially, evolutionary algorithms demonstrate distinct advantages when addressing multi-objective problems of this kind [18,19]. Through population-based iteration, they can search for multiple Pareto-optimal solutions simultaneously while remaining robust on high-dimensional, strongly nonlinear problems. They can therefore handle the variable coupling and dynamic constraints of winch trajectory planning, overcoming the main shortcomings of traditional mathematical optimization techniques, namely high computational cost and susceptibility to local optima.

Among multi-objective optimization algorithms, the second-generation non-dominated sorting genetic algorithm (NSGA-II) has become a cornerstone method, owing to its fast non-dominated sorting and crowding distance mechanisms, which improve the convergence and distribution of solutions [20,21]. However, NSGA-II exhibits notable limitations on complex problems such as electric winch trajectory optimization. These include susceptibility to premature convergence caused by diminished population diversity [22], slow convergence on high-dimensional problems [23], and degraded effectiveness of the crowding distance metric as the number of objectives grows [23]. To overcome these limitations, researchers have proposed various enhancement strategies. For instance, Lu et al. enhanced population diversity by introducing chaotic mappings [24], and Pires et al. simultaneously improved convergence accuracy and distribution uniformity through local search strategies [25]. Other studies integrated specialized operators such as Lévy walks [26] or simulated annealing [27] to strengthen global search or to better balance ‘exploration’ and ‘exploitation’. However, these improvements predominantly targeted a single aspect; few studies have integrated ‘global exploration, local exploitation, and parameter adaptation’ into a synergistic mechanism.

Regarding electric winch trajectory planning, existing research has yet to integrate trajectory planning models for the hoisting and lowering phases with high-performance multi-objective algorithms. To address this gap, this study constructs an enhanced algorithm, NSGA-II-Levy-SA, based on an improved NSGA-II framework. The algorithm combines Lévy flight with simulated annealing and is applied to optimize the velocity trajectory of electric winches on oil drilling rigs.

The main contributions are summarized as follows:

Introduction of the Lévy flight mutation operator to broaden the global search scope and circumvent local optima traps;

Integration of the simulated annealing local search mechanism to enhance late-stage optimization capabilities;

Design of an adaptive parameter control strategy to dynamically coordinate the balance between exploration and exploitation.

The remainder of this paper is organized as follows:

Section 2 details the architecture and mechanics of the proposed NSGA-II-Levy-SA algorithm;

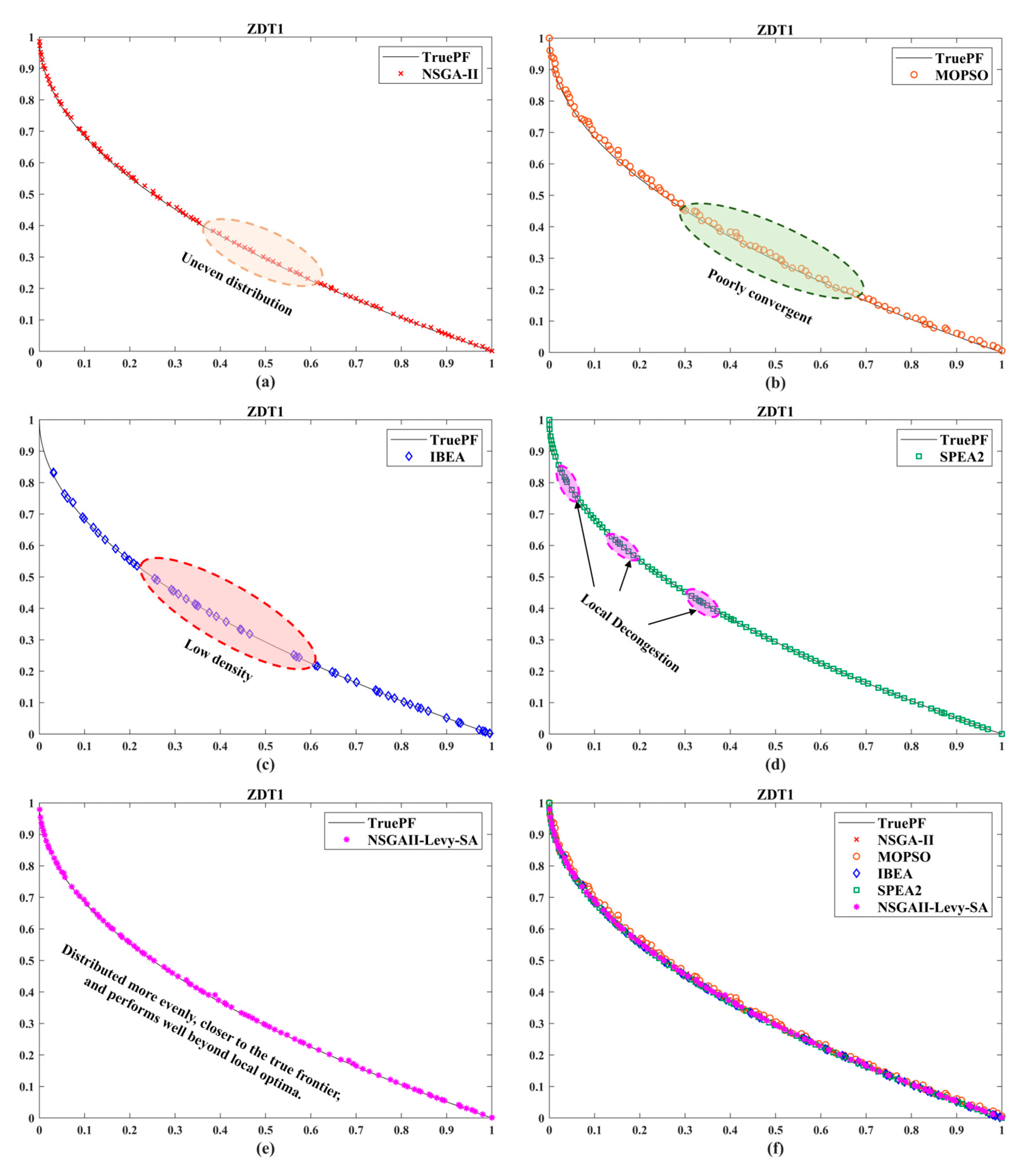

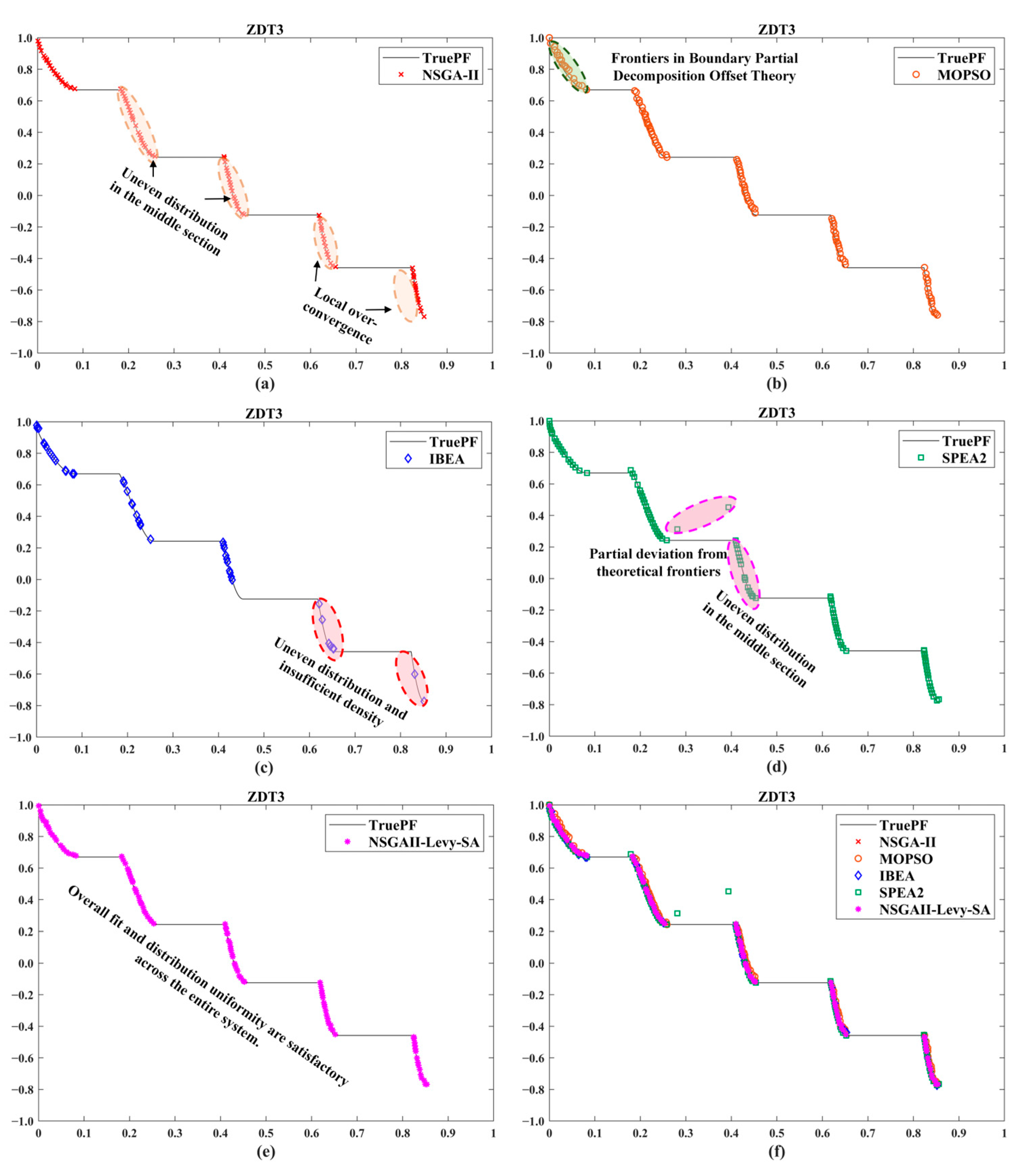

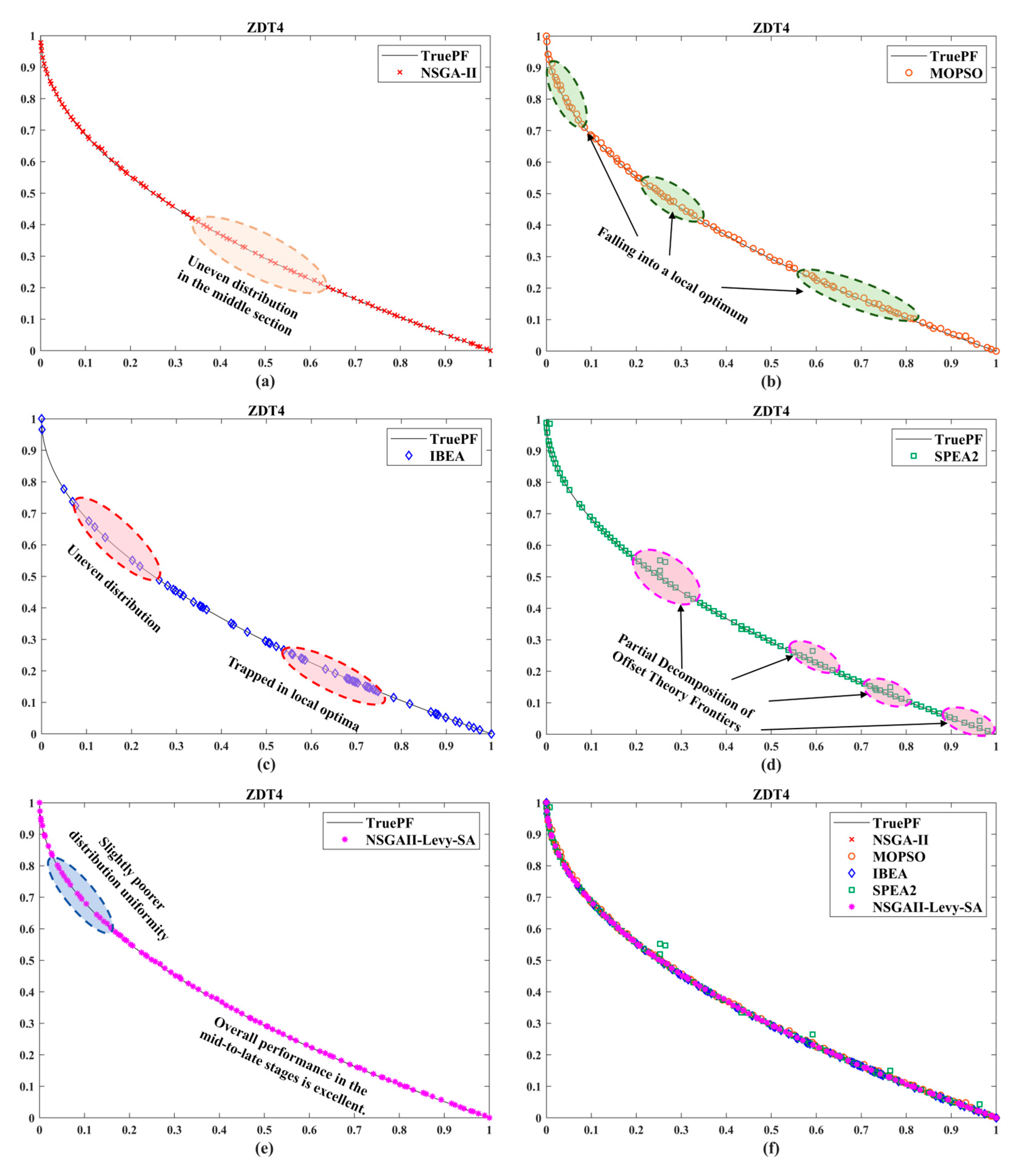

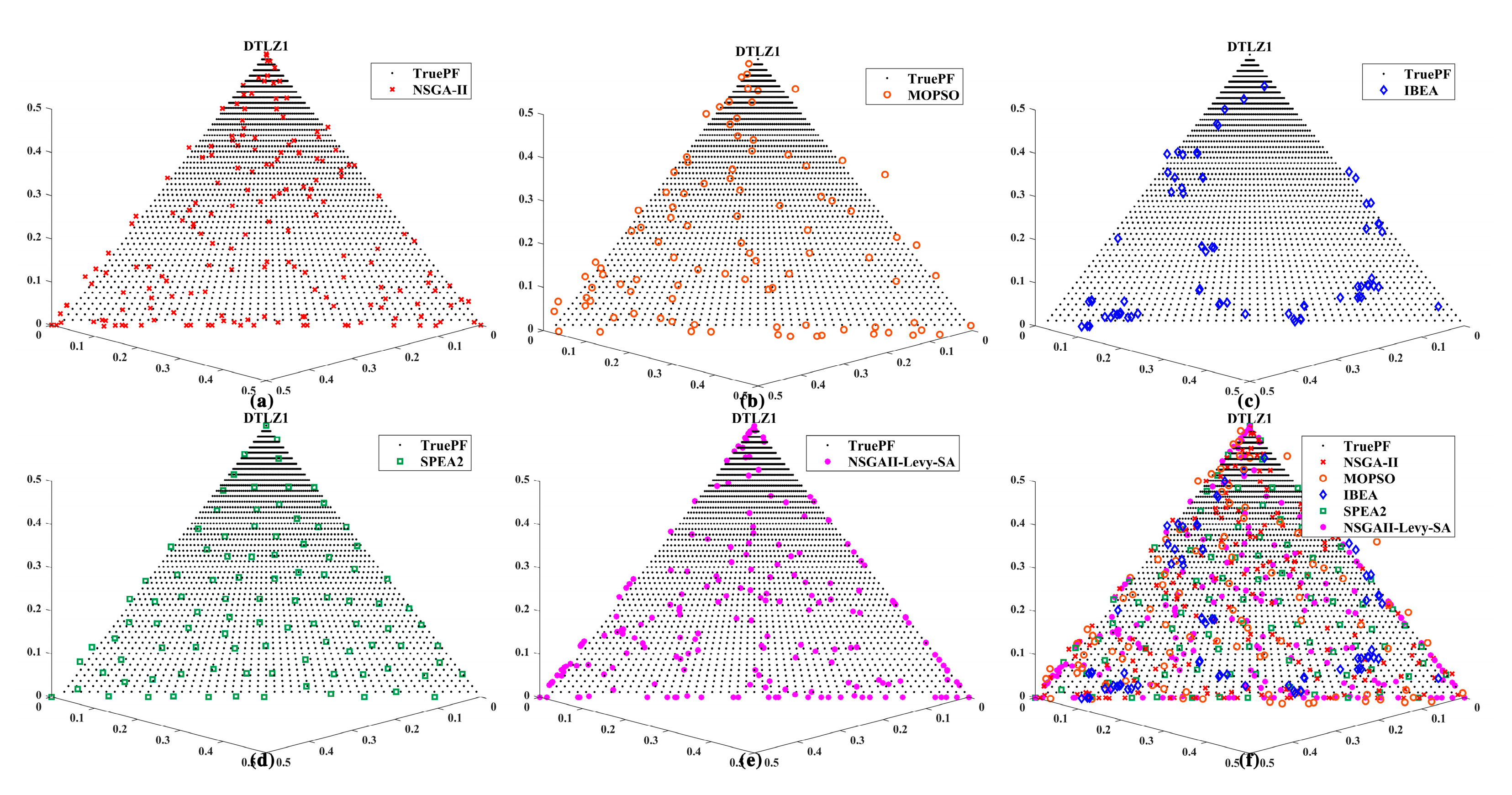

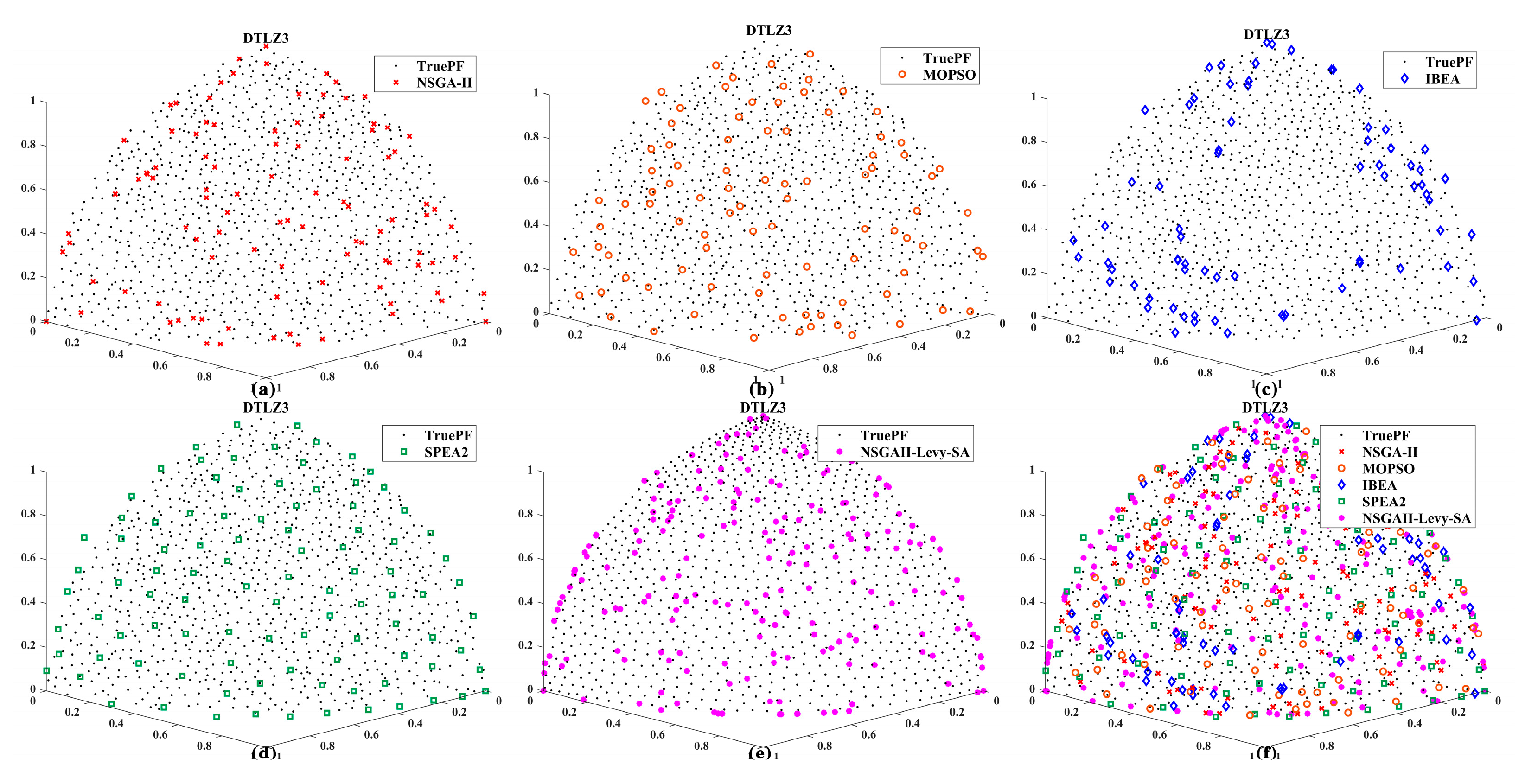

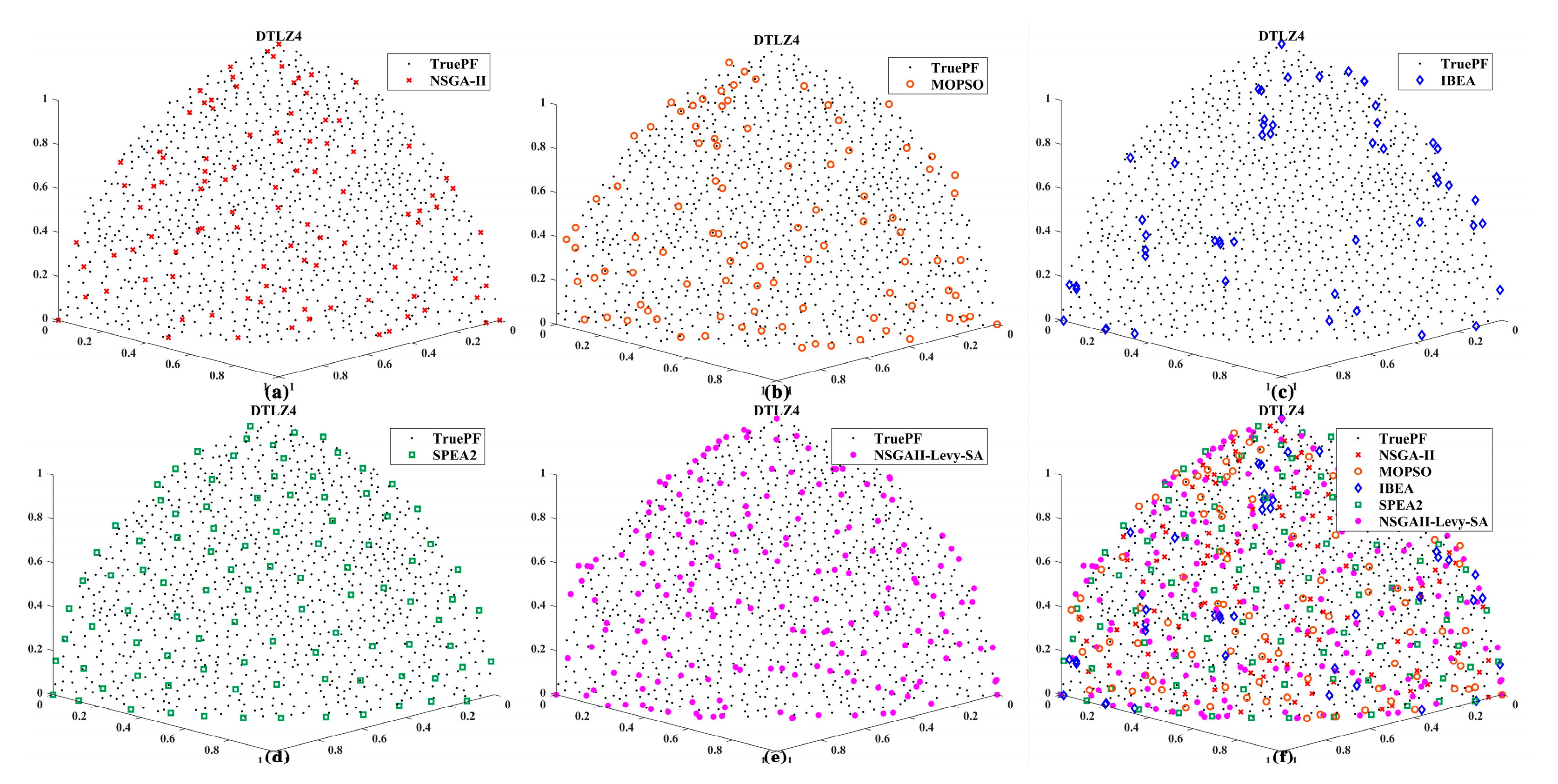

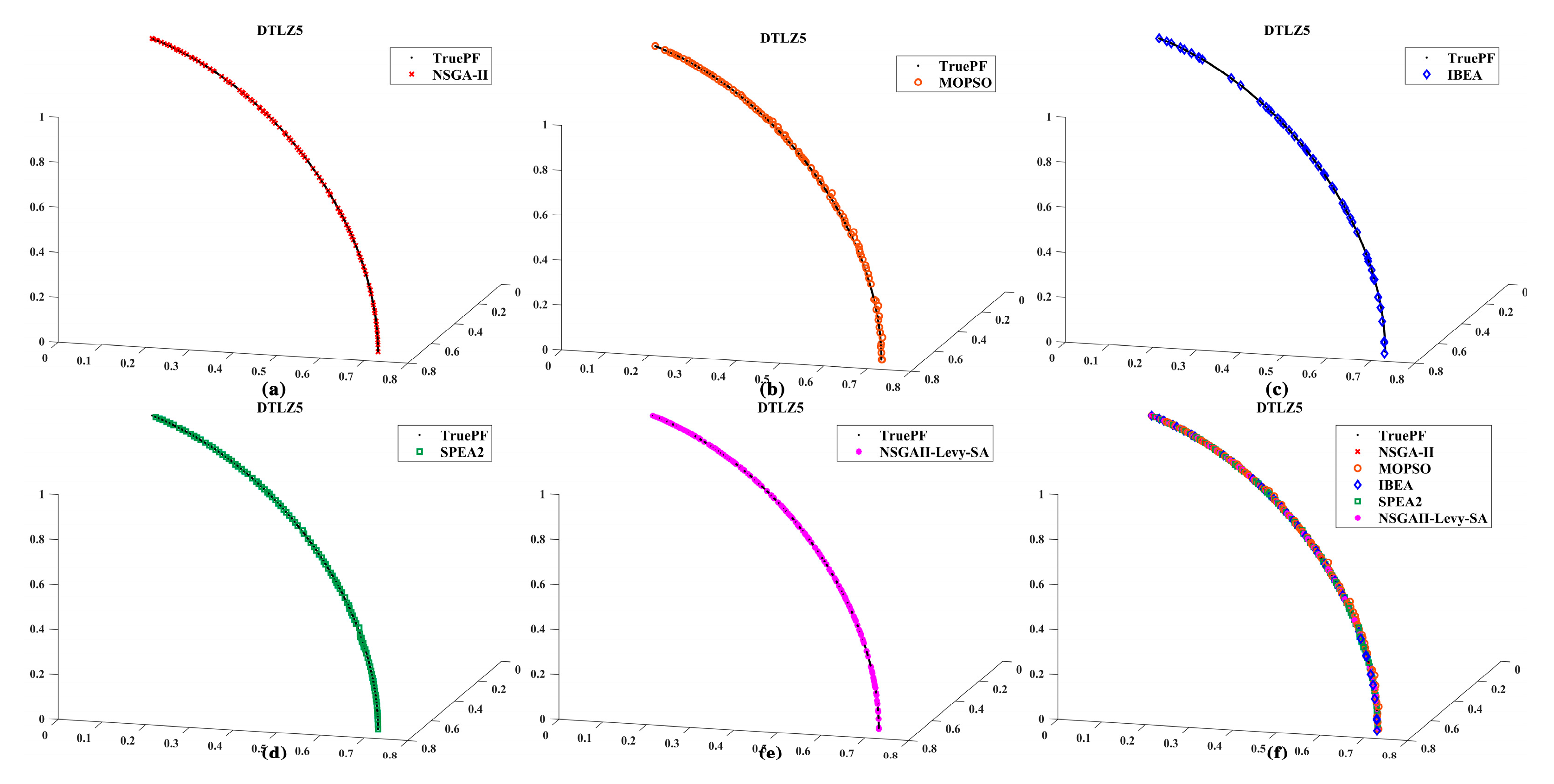

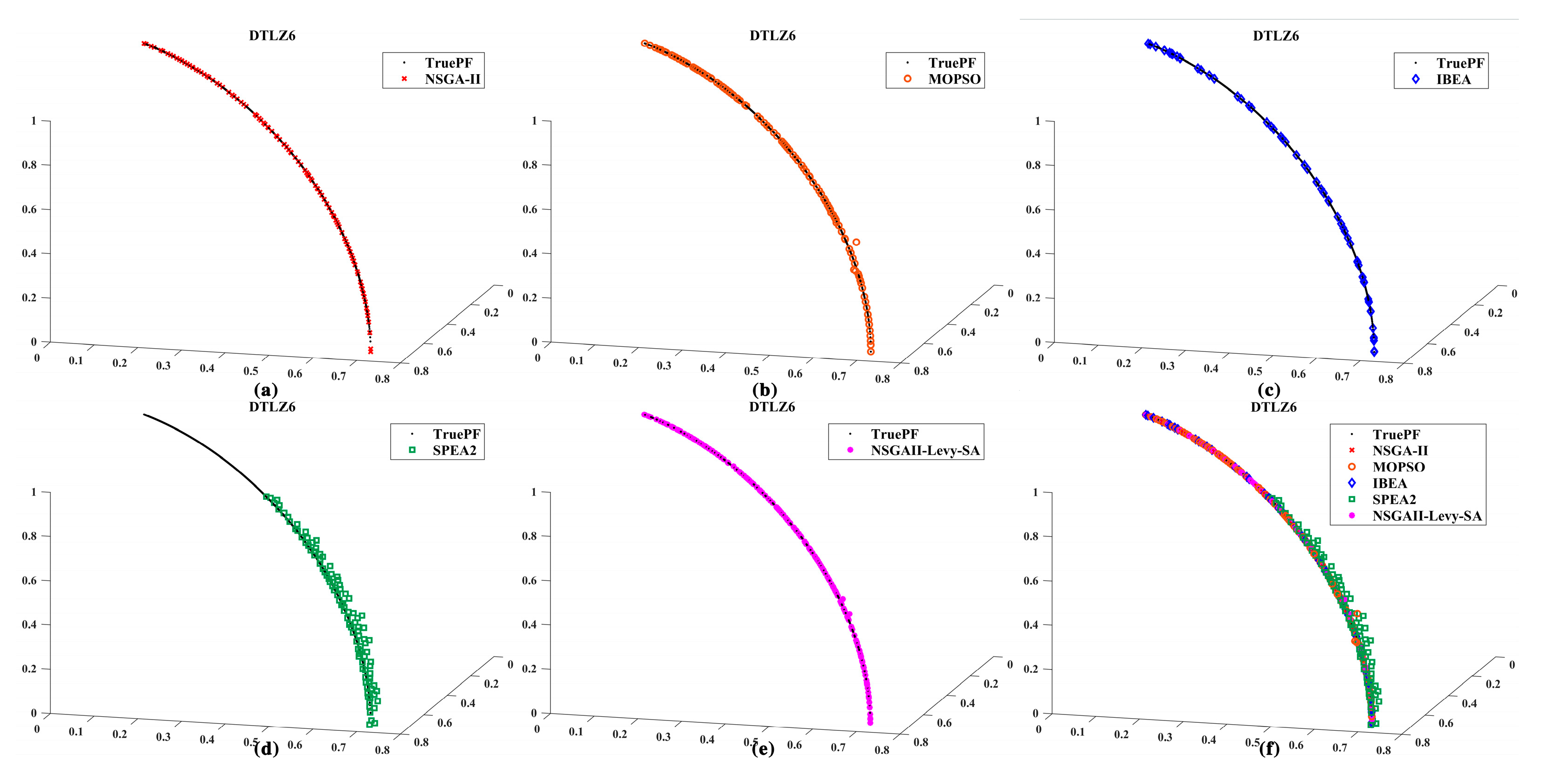

Section 3 presents a comparative analysis of the algorithm’s performance on standard test functions (ZDT and DTLZ series);

Section 4 demonstrates the application of the proposed algorithm to the velocity trajectory optimization of electric winches in oil drilling rigs; and finally,

Section 5 summarizes the principal findings and outlines prospective directions for future research.

2. An Improved NSGA-II Algorithm Integrating Lévy Flight and Simulated Annealing

The Non-dominated Sorting Genetic Algorithm II (NSGA-II), proposed by Deb et al. in 2002, is a classic multi-objective evolutionary algorithm [20]. Its core mechanisms, fast non-dominated sorting and crowding distance computation, maintain population convergence and diversity. This section focuses on the proposed enhancements to NSGA-II and does not describe the foundational algorithm in detail.

2.1. Analysis of Core Innovation Points

2.1.1. Global Exploration Variation Strategy Based on Lévy Flight

The mutation operators in traditional genetic algorithms typically generate small step sizes. This often causes the algorithm to become trapped in local optima when confronted with multimodal, complex optimization problems, thereby hindering effective exploration of the entire search space. To overcome this limitation, the proposed algorithm incorporates a mutation operator based on the Lévy flight mechanism, which is characterized by a heavy-tailed distribution of step lengths. This property enables the operator to frequently make small, localized steps for intensive search while occasionally generating large, long-range jumps, thus facilitating escape from local optima and enhancing global explorative capability.

Lévy flight is a specialized random walk in which the step size $s$ follows a Lévy distribution. It is characterized by frequent short-distance movements interspersed with occasional long-distance jumps. This heavy-tailed property facilitates an efficient global search strategy, enabling the algorithm to escape local optima and explore vast regions of the search space effectively. The probability density function of the Lévy distribution is provided in Equation (1):

$$
f(s;\mu,\gamma)=\sqrt{\frac{\gamma}{2\pi}}\;\frac{1}{(s-\mu)^{3/2}}\exp\!\left[-\frac{\gamma}{2(s-\mu)}\right],\qquad s>\mu \tag{1}
$$

where $\mu$ is the location parameter, which shifts the distribution curve horizontally so that the distribution range becomes $(\mu,\infty)$, and $\gamma>0$ is the scale parameter.

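The stride $s$ for the Lévy walk can be generated using the Mantegna algorithm, as shown in Formula (2):

$$
s=\frac{u}{|v|^{1/\beta}} \tag{2}
$$

where $u\sim N(0,\sigma_u^2)$ and $v\sim N(0,\sigma_v^2)$ with $\sigma_v=1$; $\sigma_u$ is given by Equation (3):

$$
\sigma_u=\left[\frac{\Gamma(1+\beta)\,\sin\!\left(\frac{\pi\beta}{2}\right)}{\Gamma\!\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}}\right]^{1/\beta} \tag{3}
$$

The value of $\beta$ is typically set to 1.5.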
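As an illustration of the Mantegna algorithm described above, the stride generation can be sketched with the Python standard library alone. This is a minimal sketch assuming $\sigma_v = 1$, the usual choice in Mantegna's method; the function names `mantegna_sigma_u` and `levy_step` are hypothetical, not taken from the authors' implementation.

```python
import math
import random
from typing import Optional


def mantegna_sigma_u(beta: float) -> float:
    """Standard deviation sigma_u of u in Mantegna's algorithm (sigma_v = 1)."""
    numerator = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    denominator = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (numerator / denominator) ** (1.0 / beta)


def levy_step(beta: float = 1.5, rng: Optional[random.Random] = None) -> float:
    """Draw one Lévy-distributed stride s = u / |v|**(1/beta)."""
    if rng is None:
        rng = random.Random()
    u = rng.gauss(0.0, mantegna_sigma_u(beta))  # u ~ N(0, sigma_u^2)
    v = rng.gauss(0.0, 1.0)                     # v ~ N(0, 1)
    return u / abs(v) ** (1.0 / beta)


if __name__ == "__main__":
    rng = random.Random(0)
    print(round(mantegna_sigma_u(1.5), 4))  # approx. 0.6966 for beta = 1.5
    print([round(levy_step(1.5, rng), 3) for _ in range(5)])
```

Most draws are small, while the occasional draw with $|v|$ close to zero produces the long jumps that give Lévy flight its heavy tail.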

In the algorithm, the update of individual $x_i$ in generation $t$ is expressed as Equation (4):

$$
x_i^{t+1}=x_i^{t}+\alpha(t)\,s\odot\left(x_{ub}-x_{lb}\right) \tag{4}
$$

where $x_{ub}$ and $x_{lb}$ represent the upper and lower bound vectors of the decision variables, respectively; $s$ denotes the random stride generated from the Lévy distribution; and $\alpha(t)$ is the stride scaling factor, which adapts to the iteration count $t$.
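A per-individual version of this update can be sketched as follows. The sketch assumes Equation (4) has the additive form $x_i^{t+1} = x_i^t + \alpha(t)\,s\,(x_{ub}-x_{lb})$, draws an independent stride for each dimension, and clips out-of-range values back to the bounds; the clipping rule and the name `levy_mutate` are assumptions of this sketch, not details stated in the text.

```python
import math
import random
from typing import List, Optional, Sequence


def _levy_step(beta: float, rng: random.Random) -> float:
    """Mantegna stride s = u / |v|**(1/beta) with sigma_v = 1."""
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    return rng.gauss(0.0, sigma_u) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)


def levy_mutate(
    x: Sequence[float],
    lb: Sequence[float],
    ub: Sequence[float],
    alpha: float,
    beta: float = 1.5,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Lévy-flight mutation x' = x + alpha * s * (ub - lb), clipped to [lb, ub]."""
    if rng is None:
        rng = random.Random()
    child = []
    for xj, lo, hi in zip(x, lb, ub):
        s = _levy_step(beta, rng)               # independent stride per dimension
        xj_new = xj + alpha * s * (hi - lo)     # stride scaled by the variable range
        child.append(min(max(xj_new, lo), hi))  # clip to bounds (assumed repair rule)
    return child


if __name__ == "__main__":
    print(levy_mutate([0.5, 2.0], [0.0, 1.0], [1.0, 3.0], alpha=0.05, rng=random.Random(7)))
```

Scaling by the variable range keeps the stride meaningful when decision variables have very different units, which is relevant for interval durations of unequal magnitude.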

By incorporating a Lévy flight operator, whose steps mix frequent short moves with occasional long jumps, the algorithm stochastically updates individual positions within the population. The frequent short steps support local search around current solutions, while the occasional long-range jumps allow individuals to escape the basins of attraction of local optima and reach novel, potentially more promising regions of the search space. This mechanism increases population diversity, mitigates the risk of premature convergence, and thereby strengthens the algorithm’s global exploration capability.

2.1.2. Local Exploitation and Elite Retention via Simulated Annealing

As the algorithm progresses into later iterations, a majority of the solutions converge toward the Pareto-optimal front. At this stage, the primary focus of the algorithm shifts from global exploration to the refinement of high-quality, non-dominated solutions—a process termed local exploitation. To facilitate this refinement process, a simulated annealing mechanism is incorporated.

Simulated annealing is a stochastic optimization algorithm inspired by the physical annealing process. Its core principle is to accept not only better solutions but also, with a certain probability at a given “temperature” $T$, inferior ones, thereby enabling escape from shallow local minima. The acceptance probability follows the Metropolis criterion. For a current solution $x_{current}$ and a new solution $x_{neighbor}$ generated within its neighborhood, their energies (fitness values) are denoted $E_{current}$ and $E_{neighbor}$, respectively. The energy function $E$ is defined as the sum of the objective function values, i.e., $E(x)=\sum_{m=1}^{M} f_m(x)$, where $M$ denotes the total number of objectives and $f_m(x)$ is the value of the $m$th objective function.

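The acceptance probability $P$ of the new solution is defined by Formula (5):

$$
P=\begin{cases}1, & \Delta E\le 0\\[4pt] \exp\!\left(-\dfrac{\Delta E}{T}\right), & \Delta E>0\end{cases} \tag{5}
$$

where $\Delta E=E_{neighbor}-E_{current}$.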
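The energy definition and the Metropolis rule can be sketched directly. This is a minimal illustration assuming a strictly positive temperature; the function names are hypothetical.

```python
import math
import random
from typing import Optional, Sequence


def energy(objective_values: Sequence[float]) -> float:
    """Scalar energy E(x): the sum of the M objective values f_m(x)."""
    return float(sum(objective_values))


def acceptance_probability(e_current: float, e_neighbor: float, temperature: float) -> float:
    """Metropolis criterion: 1 for non-worsening moves, exp(-dE/T) otherwise (T > 0)."""
    delta = e_neighbor - e_current
    if delta <= 0.0:
        return 1.0
    return math.exp(-delta / temperature)


def metropolis_accept(
    e_current: float,
    e_neighbor: float,
    temperature: float,
    rng: Optional[random.Random] = None,
) -> bool:
    """Randomly accept the neighbor with the Metropolis probability."""
    if rng is None:
        rng = random.Random()
    return rng.random() < acceptance_probability(e_current, e_neighbor, temperature)
```

Because the objectives are summed without weights, objectives on very different scales would dominate the energy; normalizing them first is a common remedy, although the text does not say whether that is done here.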

This algorithm employs a dual-temperature cooling strategy to achieve a more refined search-control mechanism:

- (1)

Intergenerational Temperature Adjustment: Before SA optimization is performed on the $t$th-generation population, an initial temperature $T_{init}(t)$ is set according to the global iteration progress, as shown in Equation (6),

where $T_0$ is the global initial temperature and $t_{\max}$ is the total number of iterations.

- (2)

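Internal Iterative Cooling: Within the inner loop, $K$ local searches are performed on a given solution, and the temperature $T$ decays after each iteration according to Equation (7):

$$
T_{k+1}=\eta\,T_k,\qquad k=0,1,\dots,K-1,\quad 0<\eta<1 \tag{7}
$$

where $\eta$ is the cooling rate.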

This dual cooling schedule applies different search intensities at different evolutionary stages. The inter-generational adjustment ties the starting temperature to the global progress, promoting stability in the later phases of evolution, while the intra-generational cooling makes each local search progress from tolerant acceptance to strict refinement. Together, these mechanisms improve convergence precision and algorithmic stability.
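One SA refinement call with intra-generational cooling can be sketched as below. Because the exact form of Equation (6) is not reproduced in the text, the starting temperature `t_init` is simply passed in; the neighbor generator is supplied by the caller. Returning the best solution seen, rather than the last accepted one, is an assumption that reflects the elite-retention idea in this subsection's title. All names are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]


def sa_refine(
    x0: Sequence[float],
    objectives: Callable[[Sequence[float]], Sequence[float]],
    neighbor: Callable[[Vector, random.Random], Vector],
    t_init: float,
    eta: float = 0.9,
    k_steps: int = 20,
    rng: Optional[random.Random] = None,
) -> Tuple[Vector, float]:
    """K Metropolis steps with geometric cooling T <- eta * T.

    The energy is the sum of the objective values. The best solution seen is
    returned (elite retention), so it is never worse than x0.
    """
    if rng is None:
        rng = random.Random()
    current = list(x0)
    e_current = sum(objectives(current))
    best, e_best = list(current), e_current
    temperature = t_init
    for _ in range(k_steps):
        candidate = neighbor(current, rng)
        e_candidate = sum(objectives(candidate))
        delta = e_candidate - e_current
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, e_current = candidate, e_candidate
            if e_current < e_best:
                best, e_best = list(current), e_current
        temperature *= eta  # intra-generational geometric cooling
    return best, e_best
```

In the framework described in Phase 3, `neighbor` would apply a Lévy stride; a Gaussian perturbation is enough to exercise the loop in isolation.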

2.1.3. Adaptive Parameter Control Strategy

To enable the algorithm to intelligently balance exploration and exploitation throughout the entire evolutionary process, an adaptive parameter control strategy was designed.

- (1)

Adaptive adjustment of the Lévy flight step scaling factor $\alpha(t)$, as shown in Equation (8):

$$
\alpha(t)=C\left(1-\frac{t}{t_{\max}}\right) \tag{8}
$$

where $C$ is a constant and $t_{\max}$ is the total number of iterations. In the early stages of evolution, $\alpha(t)$ is large; combined with the decision variable range $(x_{ub}-x_{lb})$, this enables the Lévy walk to generate large strides, promoting global exploration. As $t$ increases, $\alpha(t)$ decreases linearly, shortening the mutation stride, and the algorithm shifts toward more refined local search.

- (2)

Dynamic Switching of the Lévy Flight Index β:

During the initial phase, the algorithm uses a larger value of β to stabilize the step-size distribution. In the later phase, it switches to a smaller value of β, which makes the tail of the Lévy distribution heavier. This raises the probability of long-distance jumps, helping to break potential stagnation in the later stages of the search and to escape deep local optima.

- (3)

Dynamic Control of Simulated Annealing Temperature T:

The simulated annealing (SA) component utilizes a dual cooling schedule that integrates inter-generational adjustment with intra-generational cooling. This strategy ensures a gradual transition from extensive global exploration in the initial phases to intensive local exploitation in the final phases.

The adaptive parameter control mechanism allows the algorithm to dynamically modulate its search strategy in response to the varying requirements of different evolutionary stages. This capability facilitates a seamless transition and maintains a dynamic balance between global exploration and local exploitation, thus significantly enhancing the algorithm’s robustness and overall optimization performance.
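The step-factor and index schedules can be sketched as two small functions. The sketch assumes the linear form $\alpha(t) = C(1 - t/t_{\max})$ implied by the description of a linear decrease; the switch point and both β values are hypothetical placeholders, since the text does not state them, apart from 1.5 being the typical choice.

```python
def levy_alpha(t: int, t_max: int, c: float = 0.1) -> float:
    """Step scaling factor alpha(t) = C * (1 - t / t_max), decreasing linearly."""
    return c * (1.0 - t / t_max)


def levy_beta(
    t: int,
    t_max: int,
    switch_fraction: float = 0.5,  # hypothetical switch point
    beta_early: float = 1.5,       # larger beta: lighter tail
    beta_late: float = 1.2,        # smaller beta: heavier tail (hypothetical value)
) -> float:
    """Two-phase Lévy index: larger beta early, smaller beta late."""
    return beta_early if t < switch_fraction * t_max else beta_late


if __name__ == "__main__":
    for t in (0, 150, 299):
        print(t, round(levy_alpha(t, 300), 4), levy_beta(t, 300))
```

The two schedules pull in opposite directions late in the run: α(t) shrinks the typical stride, while the smaller β makes rare long jumps more likely, which is how the design keeps some escape capacity during refinement.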

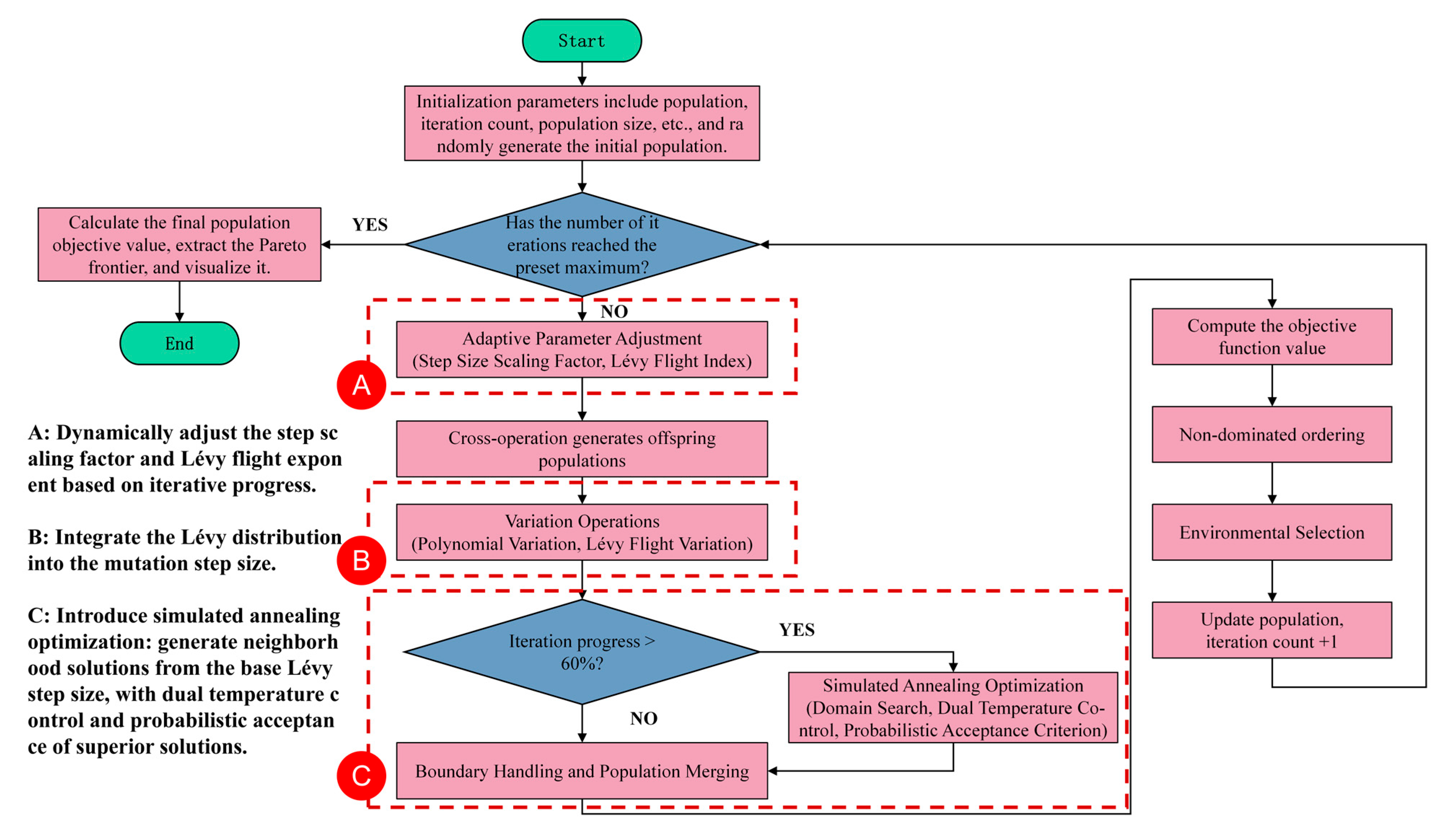

2.2. Operational Framework of the NSGA-II-Levy-SA Algorithm

The operational framework of the NSGA-II-Levy-SA algorithm consists of five sequential phases:

Phase 1: Initialization. The initial population is randomly generated, and key parameters (e.g., the maximum number of iterations and variable boundaries) are configured. The objective function values for all individuals are subsequently evaluated.

Phase 2: Adaptive Variation. Algorithmic parameters, including the step scaling factor and Lévy flight index, are adaptively adjusted. Offspring are then generated through simulated binary crossover (SBX), followed by individual updating via polynomial mutation integrated with Lévy flight mutation.

Phase 3: Local Intensification. A simulated annealing (SA) procedure is activated in the later stages of evolution (typically when iteration progress exceeds 60%). New candidate solutions are generated based on Lévy strides, and their acceptance is governed by a dual-temperature control mechanism following a probabilistic criterion.

Phase 4: Environmental Selection. Fast non-dominated sorting is applied to the merged population (parents and offspring) to identify Pareto fronts. Individuals are then selected for the next generation based on their Pareto rank and crowding distance.

Phase 5: Termination and Output. The iterative process terminates upon reaching the predefined maximum number of iterations. The Pareto-optimal front is extracted from the final population, and the optimization results are output (e.g., for visualization and analysis).

The overall workflow of the NSGA-II-Levy-SA algorithm is schematically illustrated in Figure 1.
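Phase 4 reuses the standard NSGA-II environmental selection of Deb et al. [20]. For readers unfamiliar with it, a minimal, unoptimized sketch of that step is given here (all objectives minimized, O(MN²) dominance checks). It illustrates the standard procedure and is not the authors' code.

```python
from typing import Dict, List, Sequence

Objectives = Sequence[float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs: Sequence[Objectives]) -> List[List[int]]:
    """Split indices into Pareto fronts F1, F2, ..."""
    n = len(objs)
    dominated = [[] for _ in range(n)]  # indices that p dominates
    counts = [0] * n                    # number of solutions dominating p
    fronts: List[List[int]] = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(objs[p], objs[q]):
                dominated[p].append(q)
            elif dominates(objs[q], objs[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        next_front = []
        for p in fronts[i]:
            for q in dominated[p]:
                counts[q] -= 1
                if counts[q] == 0:
                    next_front.append(q)
        fronts.append(next_front)
        i += 1
    return fronts[:-1]  # the last front is always empty


def crowding_distance(objs: Sequence[Objectives], front: List[int]) -> Dict[int, float]:
    """Normalized crowding distance of each index in one front."""
    dist = {i: 0.0 for i in front}
    for k in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][k])
        lo, hi = objs[ordered[0]][k], objs[ordered[-1]][k]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")  # keep boundary points
        if hi == lo:
            continue
        for j in range(1, len(ordered) - 1):
            gap = objs[ordered[j + 1]][k] - objs[ordered[j - 1]][k]
            dist[ordered[j]] += gap / (hi - lo)
    return dist


def environmental_selection(objs: Sequence[Objectives], n_select: int) -> List[int]:
    """Pick n_select indices by Pareto rank, then by crowding distance."""
    selected: List[int] = []
    for front in fast_non_dominated_sort(objs):
        if len(selected) + len(front) <= n_select:
            selected.extend(front)
        else:
            dist = crowding_distance(objs, front)
            ranked = sorted(front, key=lambda i: dist[i], reverse=True)
            selected.extend(ranked[: n_select - len(selected)])
            break
    return selected
```

For example, with objective vectors `[(1, 5), (2, 3), (3, 1), (2, 4), (4, 4), (5, 5)]`, the fronts are `[[0, 1, 2], [3], [4], [5]]`, and selecting two survivors keeps the boundary points 0 and 2.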

4. Application of NSGA-II-Levy-SA Algorithm in Optimizing Electric Winch Trajectories for Oil Drilling Rigs

In oil drilling field operations, deviations in the rig operator’s control of electric winch hoisting/lowering speeds can easily trigger safety incidents such as “top-out” or “crashing into the drill floor” in the traveling block system. This not only directly threatens personnel safety and the structural integrity of core equipment but also causes chain reactions including drilling operation interruptions and drill string damage, highlighting the critical impact of winch speed control precision on drilling safety and continuity.

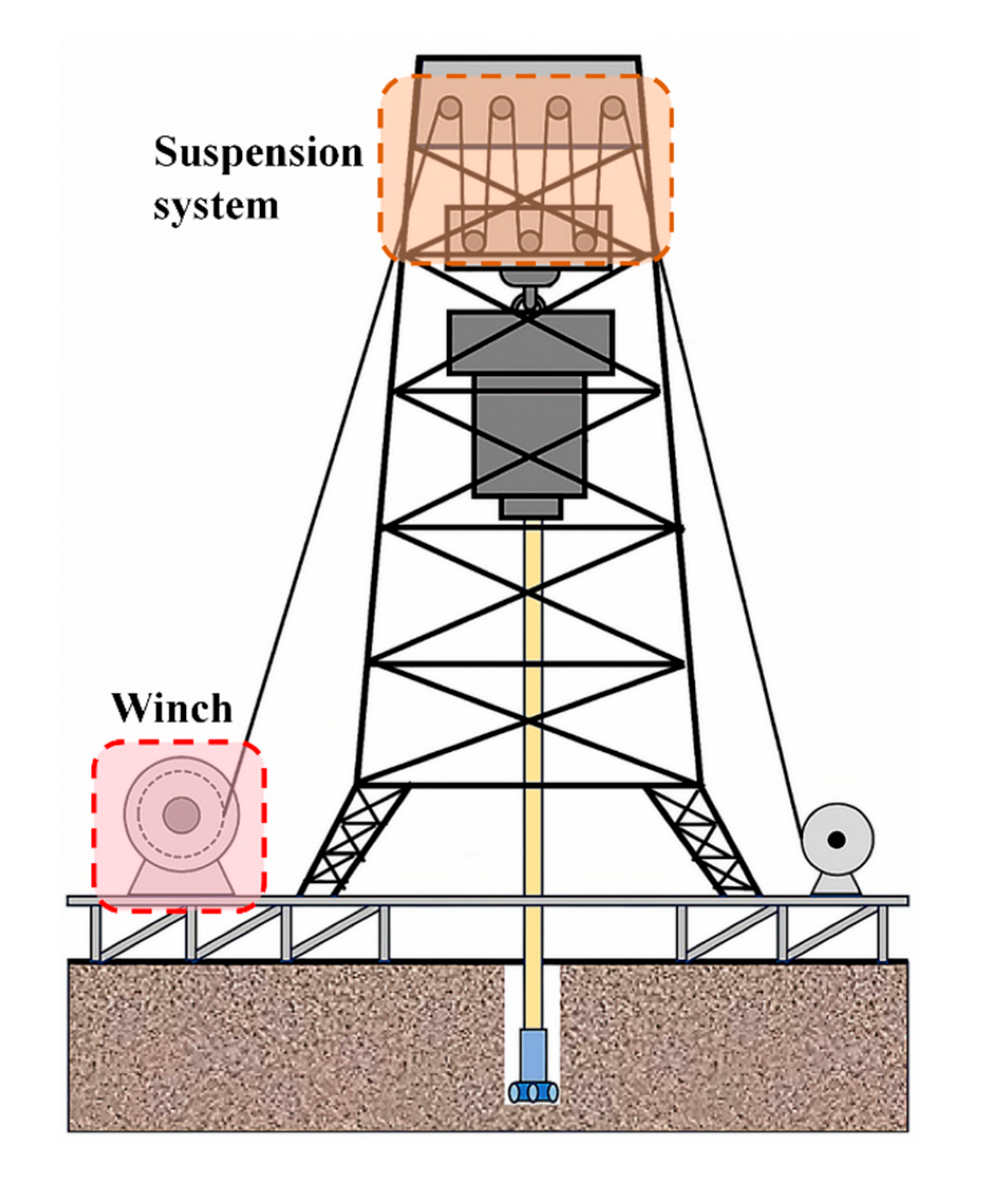

Figure 12 shows a schematic diagram of the drilling system [33].

As the power core of the derrick hoist system, the electric winch of an oil drilling rig must balance operational efficiency with operational safety. It must meet the required lifting speed of drilling tools to shorten the operational cycle while ensuring a smooth lifting process to avoid system impacts caused by speed fluctuations. Therefore, scientific speed planning is a core element for safe and efficient drilling operations.

Based on field requirements, the speed and position response curves of electric winches must meet three core technical specifications:

- (1)

Smoothness Requirements: Avoid residual vibrations caused by “hard impacts” and “soft impacts” during high-speed lifting. Ensure that acceleration is differentiable, and that displacement, velocity, and acceleration curves are continuously differentiable throughout the entire range. Displacement and velocity curves must be smooth without inflection points to suppress vibrations at their source.

- (2)

Operational Efficiency Requirements: Optimize the acceleration and deceleration times within the smoothness constraints to shorten drill string hoisting cycles, ensuring rapid and precise positioning of the drill string at target locations and meeting controllable operational cycle demands.

- (3)

Energy Efficiency Requirements: Minimize total energy consumption of the winch drive motor while satisfying smoothness and efficiency requirements.

In constructing the motion control curve, a single-segment cubic polynomial acceleration function is applied to the acceleration and deceleration intervals during hoisting, paired with a quintic polynomial displacement curve. This design ensures that the displacement, velocity, acceleration, and jerk curves are all continuously differentiable, meeting the smoothness requirements. Detailed mathematical derivations are omitted here for brevity.

To enhance speed planning performance, this paper employs a quintic polynomial to refine the acceleration segment of the traditional S-shaped speed curve. Based on this refinement, a multi-objective trajectory optimization model for the winch is established, with time, energy consumption, and impact as the three optimization objectives. Specifically, the proposed quintic polynomial curve fits the displacement variation during motion. By adjusting the polynomial coefficients, it achieves continuous and smooth transitions in displacement, velocity, acceleration, and jerk, thereby reducing system impact and improving motion smoothness.

A multi-objective trajectory optimization model for the winch was then constructed based on this curve and solved using the NSGA-II-Levy-SA algorithm to obtain a Pareto-optimal solution set. The analysis assumes ideal conditions, with rigid connections and no transmission vibration, external disturbances, or load mass variations, so as to focus on the performance of the trajectory planning algorithm itself.

4.1. Establishing the Winch Speed Response Curve

The winch speed response curve consists of three segments: the acceleration interval [0, T1], the constant velocity interval [T1, T2], and the deceleration interval [T2, T3]. The acceleration curve a(t) for intervals [0, T1] and [T2, T3] is a cubic polynomial, while the velocity curve for interval [T1, T2] is a horizontal straight line representing the constant velocity segment.

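The hoist’s displacement, velocity, acceleration, and jerk response curves during the acceleration segment are defined by Formula (11):

$$
\begin{aligned}
s(t)&=a_0+a_1t+a_2t^2+a_3t^3+a_4t^4+a_5t^5\\
v(t)&=a_1+2a_2t+3a_3t^2+4a_4t^3+5a_5t^4\\
a(t)&=2a_2+6a_3t+12a_4t^2+20a_5t^3\\
j(t)&=6a_3+24a_4t+60a_5t^2
\end{aligned}
\qquad t\in[0,T_1] \tag{11}
$$

where $a_0$, $a_1$, $a_2$, $a_3$, $a_4$, and $a_5$ are the undetermined coefficients of the quintic polynomial, which can be determined from the boundary conditions.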
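Evaluating the four response curves for a given coefficient set is a direct application of the quintic displacement and its first three derivatives. The sketch assumes Formula (11) is the standard quintic $s(t)=\sum_{k=0}^{5} a_k t^k$ with velocity, acceleration, and jerk obtained by differentiation; `quintic_profile` is a hypothetical name.

```python
from typing import Sequence, Tuple


def quintic_profile(coeffs: Sequence[float], t: float) -> Tuple[float, float, float, float]:
    """Return (s, v, a, j) of s(t) = a0 + a1 t + ... + a5 t^5 at time t."""
    a0, a1, a2, a3, a4, a5 = coeffs
    s = a0 + a1 * t + a2 * t**2 + a3 * t**3 + a4 * t**4 + a5 * t**5
    v = a1 + 2 * a2 * t + 3 * a3 * t**2 + 4 * a4 * t**3 + 5 * a5 * t**4  # ds/dt
    a = 2 * a2 + 6 * a3 * t + 12 * a4 * t**2 + 20 * a5 * t**3            # dv/dt, cubic
    j = 6 * a3 + 24 * a4 * t + 60 * a5 * t**2                             # da/dt, jerk
    return s, v, a, j


if __name__ == "__main__":
    print(quintic_profile([0, 0, 0, 1, 0, 0], 2.0))
```

The cubic acceleration and quadratic jerk are both continuous on the segment, which is the property the smoothness requirement relies on.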

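The displacement, velocity, acceleration, and jerk response curves of the winch during the uniform motion segment are given by Formula (12):

$$
\begin{aligned}
s(t)&=s(T_1)+v_{\max}\,(t-T_1)\\
v(t)&=v_{\max}\\
a(t)&=0\\
j(t)&=0
\end{aligned}
\qquad t\in[T_1,T_2] \tag{12}
$$

where $v_{\max}$ is the maximum (constant) speed reached at the end of the acceleration segment.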

The displacement, velocity, acceleration, and jerk response curve equations for the winch during the deceleration phase are given by Formula (13):

To enhance operational efficiency, the durations of acceleration and deceleration phases must be minimized. Therefore, the maximum acceleration values during these phases should approach the hoist’s maximum achievable acceleration as closely as possible. Combining this requirement with initial velocity and acceleration conditions, the boundary conditions for Equations (11) and (13) are established as Equations (14) and (15).

where $a_{\max}$ denotes the maximum acceleration of the acceleration segment, $d_{\max}$ denotes the maximum deceleration of the deceleration segment, $v_{\max}$ denotes the maximum speed, $t_1\in[0,T_1]$, and $t_2\in[T_2,T_3]$.

Solving Equations (11) and (14) simultaneously yields Equation (16):

For the deceleration segment, define the local time variable $\tau=t-T_2$, so that $\tau=0$ when $t=T_2$ and $\tau=T$ when $t=T_3$, where $T=T_3-T_2$. This maps the time interval $[T_2,T_3]$ to $[0,T]$, so the calculation for the deceleration segment is identical to that for the acceleration segment. Thus, Equation (13) can be rewritten as Equation (17):
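Equation (14) is not reproduced in the text, so the following sketch assumes a common alternative set of six boundary conditions: displacement, velocity, and acceleration prescribed at both ends of a segment of length $T$. These give a 6×6 linear system for $a_0$ to $a_5$ of Formula (11). The function name and the chosen conditions are illustrative assumptions, not the paper's Equation (16). Because the deceleration segment is mapped to $[0,T]$, the same solver applies to it in the local time variable.

```python
import numpy as np


def quintic_coefficients(
    T: float, s0: float, v0: float, acc0: float, s1: float, v1: float, acc1: float
) -> np.ndarray:
    """Coefficients [a0..a5] with s, v, a prescribed at t = 0 and t = T."""
    A = np.array(
        [
            [1, 0, 0, 0, 0, 0],                           # s(0)
            [0, 1, 0, 0, 0, 0],                           # v(0)
            [0, 0, 2, 0, 0, 0],                           # a(0)
            [1, T, T**2, T**3, T**4, T**5],               # s(T)
            [0, 1, 2 * T, 3 * T**2, 4 * T**3, 5 * T**4],  # v(T)
            [0, 0, 2, 6 * T, 12 * T**2, 20 * T**3],       # a(T)
        ],
        dtype=float,
    )
    b = np.array([s0, v0, acc0, s1, v1, acc1], dtype=float)
    return np.linalg.solve(A, b)


if __name__ == "__main__":
    # Rest-to-cruise ramp: v goes 0 -> 2 over T = 3 with s(T) = v*T/2.
    print(quintic_coefficients(3.0, 0.0, 0.0, 0.0, 3.0, 2.0, 0.0))
```

For a rest-to-cruise ramp with $s(T)=v_{\max}T/2$, the solution is $a_3=v_{\max}/T^2$, $a_4=-v_{\max}/(2T^3)$, and $a_5=0$. The resulting acceleration peaks at $1.5\,v_{\max}/T$ at the segment midpoint, so under this particular assumption, driving the peak to the hoist's maximum acceleration would give $T=1.5\,v_{\max}/a_{\max}$.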

When the control system specifies the maximum speed $v_{\max}$ and the target displacement, the time parameters $T_1$, $T_2$, and $T_3$ can be calculated using Equations (16) and (17).
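Since Equations (16) and (17) are not shown, the timing logic can only be illustrated under an assumption. The sketch assumes that each ramp covers a distance of $v_{\max}\times\text{duration}/2$, which holds whenever the velocity ramp is point-symmetric about its midpoint. The ramp durations are inputs, and `segment_times` is a hypothetical name.

```python
from typing import Tuple


def segment_times(
    total_disp: float, v_max: float, t_acc: float, t_dec: float
) -> Tuple[float, float, float]:
    """Return (T1, T2, T3) for an accelerate-cruise-decelerate profile.

    Assumes each ramp covers v_max * duration / 2 (symmetric velocity ramp).
    """
    s_acc = 0.5 * v_max * t_acc
    s_dec = 0.5 * v_max * t_dec
    s_cruise = total_disp - s_acc - s_dec
    if s_cruise < 0:
        raise ValueError("target displacement too short to reach v_max")
    t1 = t_acc
    t2 = t1 + s_cruise / v_max  # constant-speed segment
    t3 = t2 + t_dec
    return t1, t2, t3


if __name__ == "__main__":
    print(segment_times(10.0, 1.0, 2.0, 2.0))
```

When the target displacement is too short for the ramps alone, the profile never reaches $v_{\max}$; the sketch rejects that case rather than computing a reduced peak speed.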

4.2. Optimal Trajectory Planning Based on NSGA-II-Levy-SA

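The optimization problem can be formulated as in Equation (19):

$$
\begin{aligned}
\min\;& F(x)=\left[f_1(x),f_2(x),\dots,f_M(x)\right]^{\mathrm T}\\
\text{s.t.}\;& g_j(x)\le 0,\quad j=1,\dots,p\\
& h_k(x)=0,\quad k=1,\dots,q\\
& x=(x_1,x_2,\dots,x_n)
\end{aligned}
\tag{19}
$$

where $F(x)$ is the objective function vector; $g_j(x)$ and $h_k(x)$ are the inequality and equality constraint functions, respectively; and $x$ is the $n$-dimensional decision variable.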

The decision variables for multi-objective trajectory optimization are the time lengths of the interpolation intervals, denoted as $x=(x_1,x_2,\dots,x_n)$, where $x_i=t_{i+1}-t_i$, $i=1,2,\dots,n$, and $t_i$ represents the time sequence of trajectory points. The time of the first point is $t_1$, typically set to $t_1=0$. The objective functions and constraints are defined in Equation (20).

Here, $f_1$ is the time objective function, where $N$ denotes the number of interpolation intervals; $f_2$ is the energy objective function, defined in terms of the power of each interval, the motor shaft rotational inertia $I$, and the acceleration; and $f_3$ is the impact objective function, defined in terms of the torque of each interval, the motor shaft rotational inertia $I$, and the acceleration.
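The later discussion of Table 14 states that total time is the sum of the intervals and that total energy and total impact are obtained by integrating the squared power and squared torque. A discretized sketch of the three objectives follows. It assumes torque $\tau_i = I\,a_i$, with $a_i$ the motor-shaft angular acceleration in interval $i$; power $P_i = \tau_i\,\omega_i$, with $\omega_i$ the shaft angular speed; and a rectangle rule over each interval. These relations and the function name are assumptions, not the exact Equation (20).

```python
from typing import Sequence, Tuple


def trajectory_objectives(
    dt: Sequence[float],     # interval durations x_i = t_{i+1} - t_i
    accel: Sequence[float],  # representative shaft angular acceleration per interval
    omega: Sequence[float],  # representative shaft angular speed per interval
    inertia: float,          # motor shaft rotational inertia I
) -> Tuple[float, float, float]:
    """Discretized f1 (time), f2 (energy), f3 (impact) over N intervals."""
    f1 = sum(dt)
    torque = [inertia * a for a in accel]             # tau_i = I * a_i (assumed)
    power = [tq * w for tq, w in zip(torque, omega)]  # P_i = tau_i * omega_i (assumed)
    f2 = sum(p**2 * h for p, h in zip(power, dt))     # integral of squared power
    f3 = sum(tq**2 * h for tq, h in zip(torque, dt))  # integral of squared torque
    return f1, f2, f3


if __name__ == "__main__":
    print(trajectory_objectives([1.0, 2.0], [1.0, 0.0], [2.0, 3.0], inertia=0.5))
```

Squaring power and torque penalizes peaks more heavily than a plain sum would, which is consistent with the reported emphasis on suppressing sharp acceleration spikes.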

The constraint condition is given by Equation (21):

In this formula, the hoist’s operating speed is bounded by its maximum allowable speed, the acceleration during the acceleration phase by the maximum allowable acceleration, and the deceleration during the deceleration phase by the maximum allowable deceleration.
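A feasibility check for these three bounds can be sketched on sampled trajectory values. The sample arrays, the small tolerance, and the name `is_feasible` are assumptions. The text does not say whether infeasible candidates are handled by penalties or by constrained domination inside NSGA-II-Levy-SA.

```python
from typing import Sequence


def is_feasible(
    speeds: Sequence[float],
    accel_phase: Sequence[float],
    decel_phase: Sequence[float],
    v_max: float,
    a_max: float,
    d_max: float,
    tol: float = 1e-9,
) -> bool:
    """Check |v| <= v_max, |a| <= a_max (acceleration phase), |d| <= d_max (deceleration phase)."""
    return (
        all(abs(v) <= v_max + tol for v in speeds)
        and all(abs(a) <= a_max + tol for a in accel_phase)
        and all(abs(d) <= d_max + tol for d in decel_phase)
    )
```

Absolute values are used so that deceleration samples can be stored with either sign.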

The parameters for a single drill string hoist, namely the total displacement, velocity, acceleration during the acceleration phase, deceleration during the deceleration phase, and rotational inertia of the winch, are assumed as shown in Table 12:

Calculations were performed with the parameters listed in Table 12, and MATLAB (R2023b) was used to simulate the variations in the motion parameters of the electric winch of an oil drilling rig, as shown in Figure 13.

The decision variable takes values within a prescribed range, with the velocity and acceleration at the winch’s starting and ending points set to zero. The constraint condition is given by Equation (22):

The NSGA-II-Levy-SA algorithm was employed for multi-objective optimization of the winch, with a maximum of 300 iterations and a population size of 100, producing the Pareto front shown in Figure 14.

The distribution of the Pareto front reveals a clear trade-off between duration on the one hand and energy consumption and impact intensity on the other: extending the duration reduces both energy consumption and impact severity, whereas shorter durations entail higher energy consumption and more pronounced impacts. Engineering applications must therefore select a solution based on practical operational constraints. This study prioritizes energy consumption and impact mitigation; the selected parameters are shown in Table 13, and the optimized curve is depicted in Figure 15.

Simulation results demonstrate that the optimized trajectory is smooth, continuous, and free of abrupt changes. A comparison of the motion curves before (Figure 13) and after (Figure 15) optimization reveals a significant improvement in smoothness: the optimized acceleration and jerk profiles exhibit markedly reduced oscillations and more gradual transitions, eliminating the sharp peaks present in the pre-optimization curves. Throughout the operation, both velocity and acceleration remain within the specified constraint limits. The quantitative metrics in Table 14 were computed by numerical simulation from the objective functions defined in Equation (20): the total operating time is the sum of all time intervals, while the total energy consumption and total impact were obtained by integrating the squared power and the squared torque, respectively, over the complete motion trajectory using the optimized parameters. Table 14 compares the total operating time, total energy consumption, and total impact force before and after optimization.

Compared with the pre-optimization baseline, the total operating time of the winch decreased by 6%, energy consumption by 17.99%, and overall impact by 27.4%. The multi-objective optimized trajectory therefore improves efficiency while ensuring stable operation and substantially lowering both energy consumption and impact.

The optimized performance in time, energy consumption, and impact holds clear value for industrial applications. The reduction in cycle time directly enhances tripping operation efficiency and equipment throughput. The decrease in energy consumption translates to significant operational cost savings and a reduced environmental footprint. The effective suppression of mechanical impact is crucial for extending the service life of key components such as wire ropes and gear transmission systems, thereby reducing maintenance frequency and the risk of downtime. Collectively, these improvements demonstrate that the proposed optimized trajectory not only enhances the economy and safety of a single operation but also contributes to the efficient, reliable, and long-term stable operation of the drilling rig at a systemic level.

4.3. Discussion on Practical Robustness

It is important to acknowledge that the present study and its promising results were obtained under idealized simulation conditions, which assumed rigid connections and neglected practical disturbances such as load mass variations, transmission vibrations, and external perturbations. This focus allowed for a clear evaluation of the trajectory planning algorithm’s core performance. In real-world industrial applications, these factors could influence the system’s dynamic response. However, the smooth and continuously differentiable nature of the quintic polynomial-based trajectories generated by the proposed method provides inherent robustness to a degree. By minimizing high-frequency components and avoiding abrupt changes in acceleration, the planned motion is less likely to excite detrimental vibrations even under parameter variations. Furthermore, this optimized trajectory serves as a high-quality reference input for downstream closed-loop controllers (e.g., PID or MPC), which are responsible for disturbance rejection. The superior smoothness of the reference facilitates more stable and precise tracking control, thereby enhancing the overall system’s resilience to disturbances. Investigating the algorithm’s performance under a wider range of operational uncertainties and conducting hardware-in-the-loop tests constitute key directions for our future work.

6. Conclusions

The proposed hybrid NSGA-II algorithm, integrating Lévy flight and simulated annealing, enhances traditional multi-objective evolutionary algorithms by combining three complementary strategies: Lévy flight provides robust global exploration, simulated annealing provides accurate local convergence, and adaptive parameter control coordinates these two behaviors. This hybrid framework enables the algorithm to generate better-converged and more uniformly distributed Pareto-optimal solution sets on multi-objective optimization problems with complex characteristics. Comparative evaluations against NSGA-II, MOPSO, IBEA, and SPEA2 on two- and three-objective benchmark functions demonstrate that NSGA-II-Levy-SA achieves superior convergence speed, solution diversity, and distribution uniformity. In the engineering application to electric winch trajectory optimization on oil drilling rigs, the algorithm reduced operating time by 6%, energy consumption by 17.99%, and impact by 27.4%, validating its practical effectiveness.

The current limitations of this paper and future research directions include:

The algorithm’s effectiveness has only been validated for optimizing electric winch trajectories in oil drilling rigs and has not yet been tested in other engineering domains.

Benchmark evaluations are limited to at most three objectives, leaving scalability to problems with more objectives unvalidated. These constraints highlight key research directions for developing multi-objective optimization algorithms.

Algorithmic performance remains somewhat sensitive to parameter tuning. Despite the introduction of adaptive strategies, hyperparameters such as the step size scaling factor of the Lévy walk and the initial temperature of simulated annealing still require adjustment for specific problems, which reduces the algorithm’s “out-of-the-box” convenience.

The algorithm exhibits relatively high computational complexity, and the computational overhead introduced by mixed strategies may render it unsuitable for online optimization scenarios with stringent real-time requirements.

Integration with Surrogate Models: For computationally expensive simulations, a promising direction is to hybridize NSGAII-Levy-SA with surrogate models (e.g., Gaussian processes, neural networks). The key research problem is how to intelligently manage the interaction between the global search of Lévy flight and the uncertainty prediction of the surrogate to maximize convergence speed without sacrificing solution quality.