A Dual-Task Improved Transformer Framework for Decoding Lower Limb Sit-to-Stand Movement from sEMG and IMU Data †

Abstract

1. Introduction

- (1)

- A dual-task learning framework is proposed for recognizing lower limb motion intentions during sit-to-stand (STS) movements based on the fusion of sEMG signals and kinematic data, enabling the efficient concurrent learning of various tasks through a shared feature representation.

- (2)

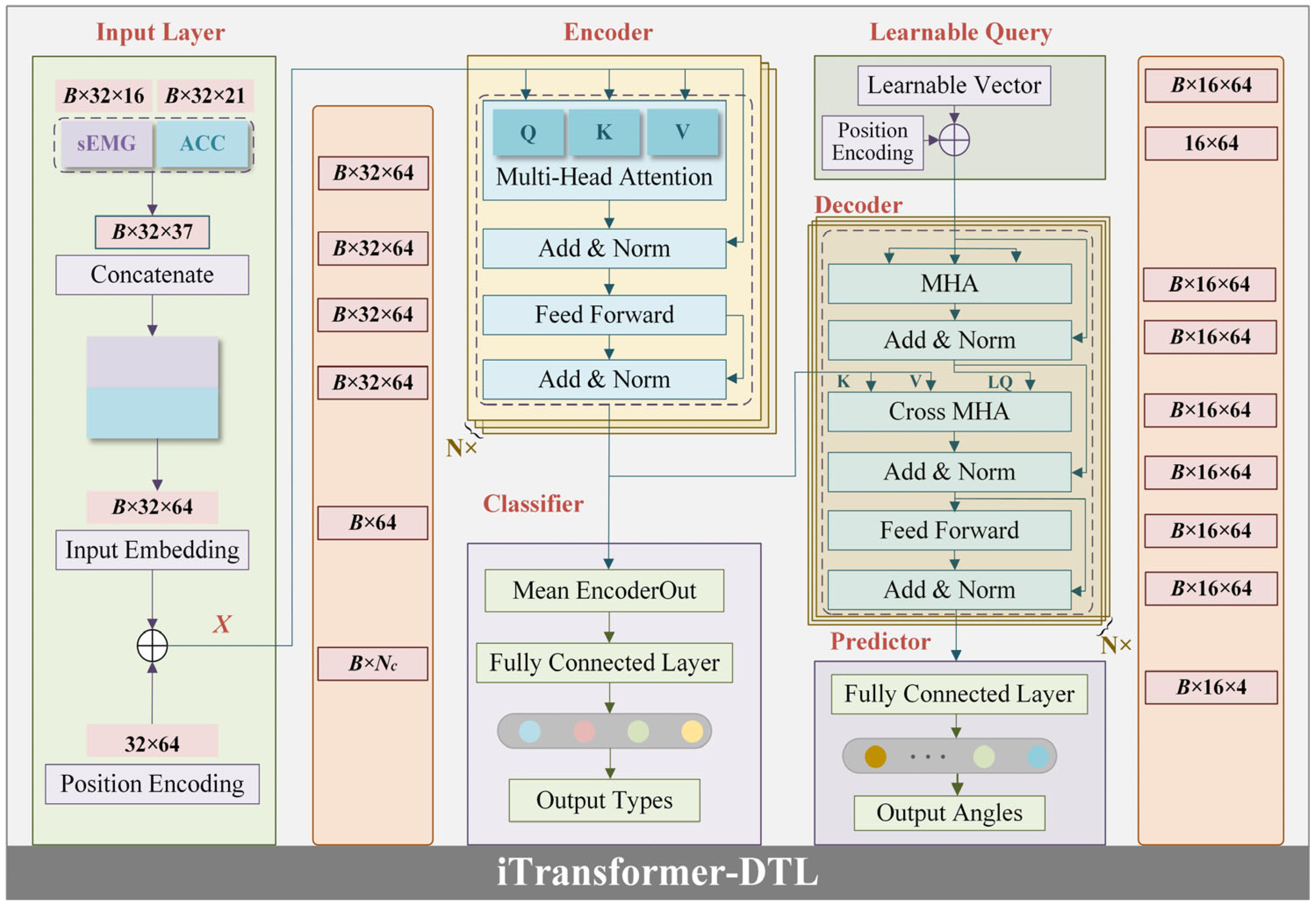

- A dual-task learning framework with an improved Transformer (iTransformer-DTL) architecture is created to carry out both classification and prediction tasks simultaneously. The framework incorporates a learnable query mechanism to effectively extract information from the contextual representations and directly decodes the entire sequence at once, significantly improving generation efficiency.

- (3)

- The model underwent effective experimental validation on a lower limb sit-to-stand transfer movement dataset collected from healthy individuals and stroke patients.
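The learnable query idea in contribution (2) can be illustrated with a minimal sketch. It assumes a single attention head with no learned projections, and the names `cross_attend` and `decode_all_at_once` are hypothetical; the queries are random here, whereas in a trained model they would be learned parameters. It is not the authors' implementation, only an illustration of how a fixed set of queries can read the encoder output and produce every output step in one pass.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, memory):
    """Scaled dot-product attention: each query row attends over all memory rows."""
    d = len(memory[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in memory]
        weights = softmax(scores)
        # Weighted average of the memory rows (memory serves as both keys and values).
        out.append([sum(w * v[j] for w, v in zip(weights, memory)) for j in range(d)])
    return out


def decode_all_at_once(memory, learnable_queries):
    """All output positions are computed in one pass; no earlier output is fed back."""
    return cross_attend(learnable_queries, memory)


random.seed(0)
d_model, src_len, pred_len = 8, 32, 16  # d_model is an illustrative value
memory = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(src_len)]
queries = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(pred_len)]
decoded = decode_all_at_once(memory, queries)
print(len(decoded), len(decoded[0]))
```

Because the number of queries fixes the number of output steps, the cost of decoding does not depend on feeding earlier predictions back into the decoder, which is the usual source of latency in step-by-step generation.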

2. Theoretical Background

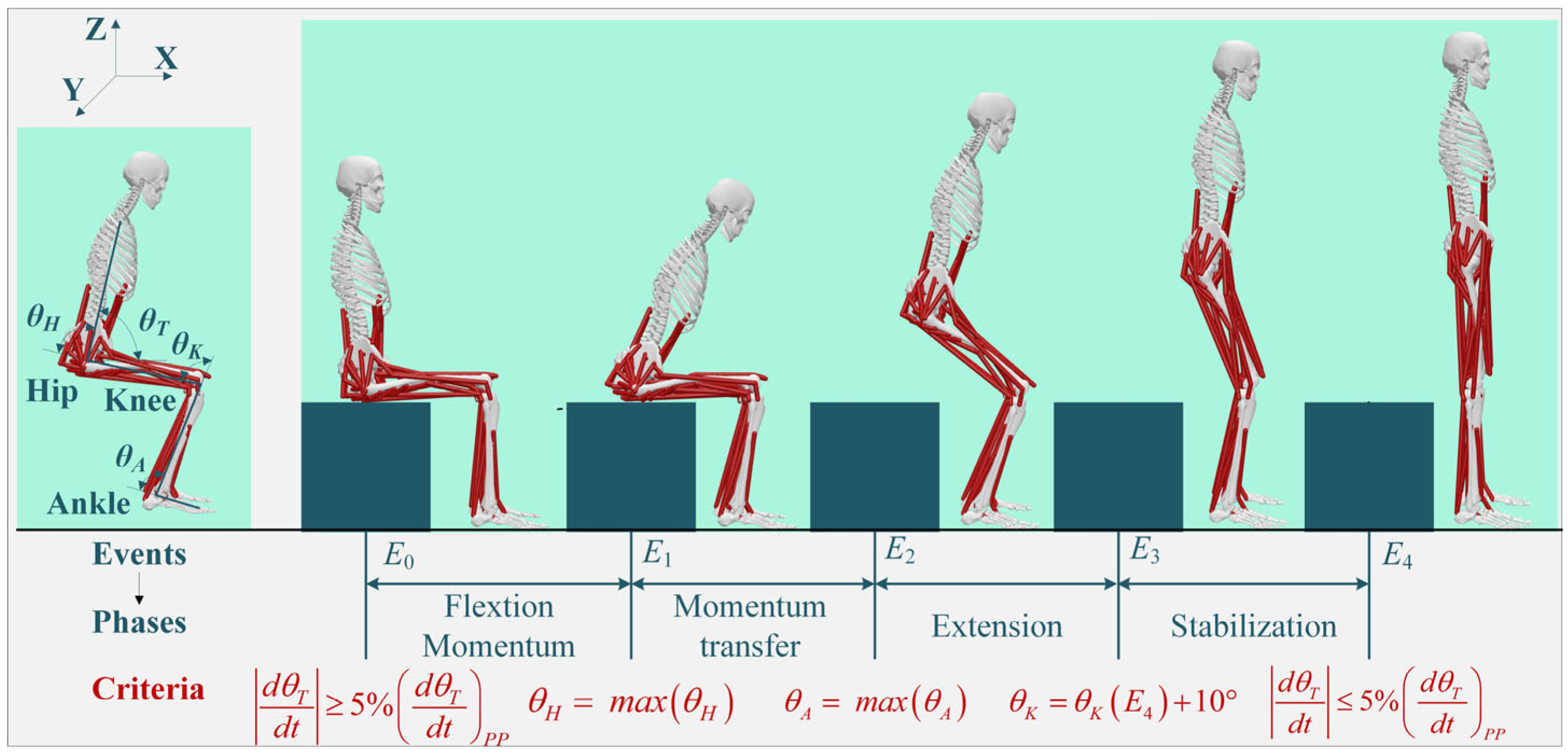

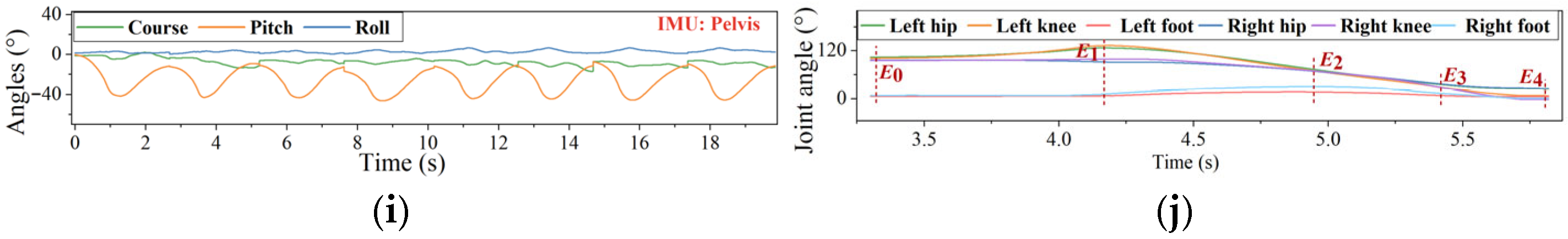

2.1. STS Movement Segmentation

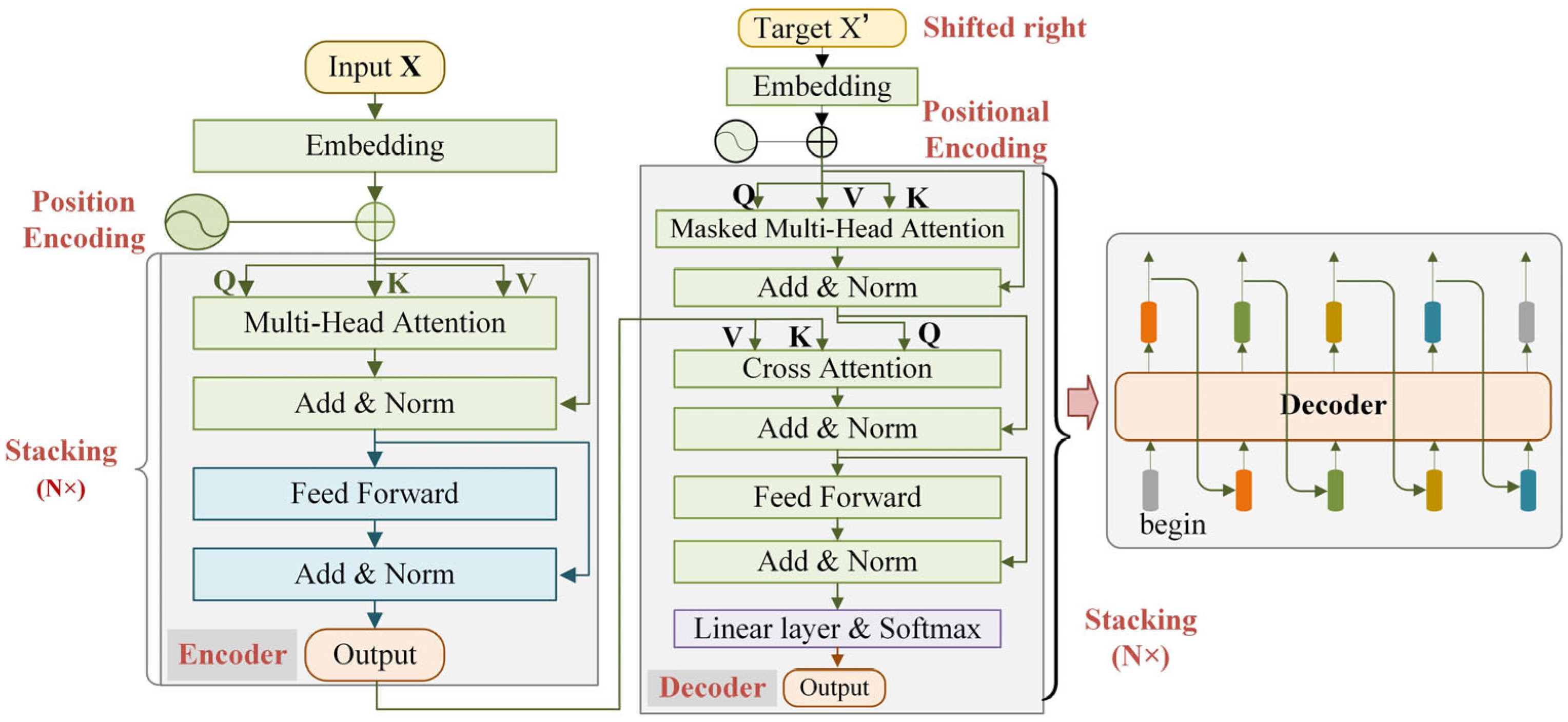

2.2. Traditional Transformer Networks

3. Methods

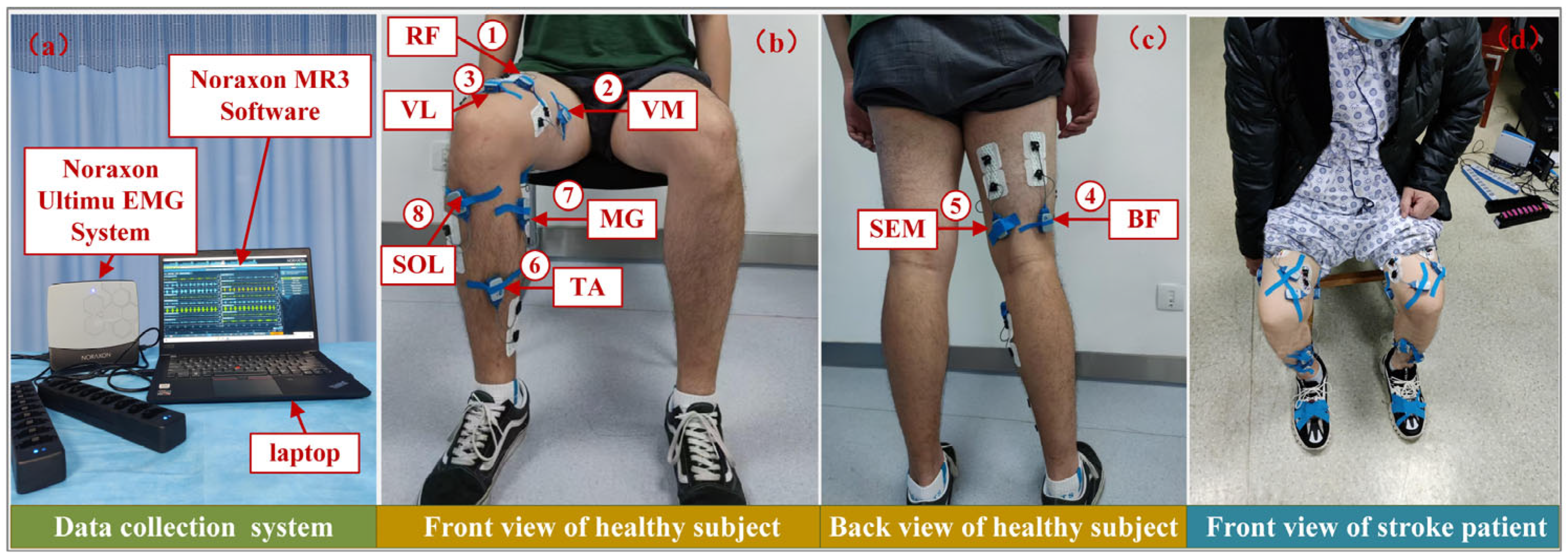

3.1. Dataset

3.2. Data Preprocessing

3.2.1. Resampling and Filtering

3.2.2. Data Normalization

3.2.3. Sample Segmentation

3.2.4. Dataset Partitioning

3.3. Network Structure Design

- (1) Input layer

- (2) Encoder

- (3) Decoder

- (4) Learnable Query (LQ)

- (5) Classifier

- (6) Predictor

| Parameter Description | Settings | Parameter Description | Settings |
|---|---|---|---|
| Sequence length/predicted length | 32/16 | Dropout | 0.2 |
| Output categories | 4 | Decoder layers | 2 |
| Total channels | 37 | Decoder input dim | 64 |
| Encoder layers | 2 | Decoder MHA output dim | 16 |
| Encoder input dim | 64 | Decoder MHA heads | 4 |
| Encoder MHA output dim | 16 | Decoder cross MHA output dim | 16 |
| Encoder MHA heads | 4 | Decoder cross MHA heads | 4 |
| Encoder last linear middle dim | 128 | Decoder last linear middle dim | 128 |
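The settings in the table above can be collected into a single configuration object, sketched below. The class name `ITransformerDTLConfig` and its field names are hypothetical. The consistency check assumes that each "MHA output dim" is a per-head width, so that heads × head width equals the layer input dimension (4 × 16 = 64); the table is consistent with that reading but does not state it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ITransformerDTLConfig:
    # Values copied from the parameter table; field names are illustrative.
    seq_len: int = 32          # input window length
    pred_len: int = 16         # predicted sequence length
    num_classes: int = 4       # output categories
    num_channels: int = 37     # total input channels
    dropout: float = 0.2
    enc_layers: int = 2
    enc_dim: int = 64
    enc_head_dim: int = 16     # assumed to be the per-head width
    enc_heads: int = 4
    enc_ffn_dim: int = 128     # "last linear middle dim"
    dec_layers: int = 2
    dec_dim: int = 64
    dec_head_dim: int = 16
    dec_heads: int = 4
    dec_cross_head_dim: int = 16
    dec_cross_heads: int = 4
    dec_ffn_dim: int = 128

    def check(self) -> None:
        """Verify that the heads concatenate back to the layer width (assumption)."""
        assert self.enc_heads * self.enc_head_dim == self.enc_dim
        assert self.dec_heads * self.dec_head_dim == self.dec_dim
        assert self.dec_cross_heads * self.dec_cross_head_dim == self.dec_dim

    def input_shape(self) -> tuple:
        """Shape of one input sample: (time steps, channels)."""
        return (self.seq_len, self.num_channels)


cfg = ITransformerDTLConfig()
cfg.check()
print(cfg.input_shape())
```

Keeping the values in one frozen dataclass makes it easy to vary a single setting, such as the window length, while the check guards against head and width combinations that no longer match.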

3.4. Loss Functions

3.5. Training Settings and Evaluation Metrics

4. Results and Discussion

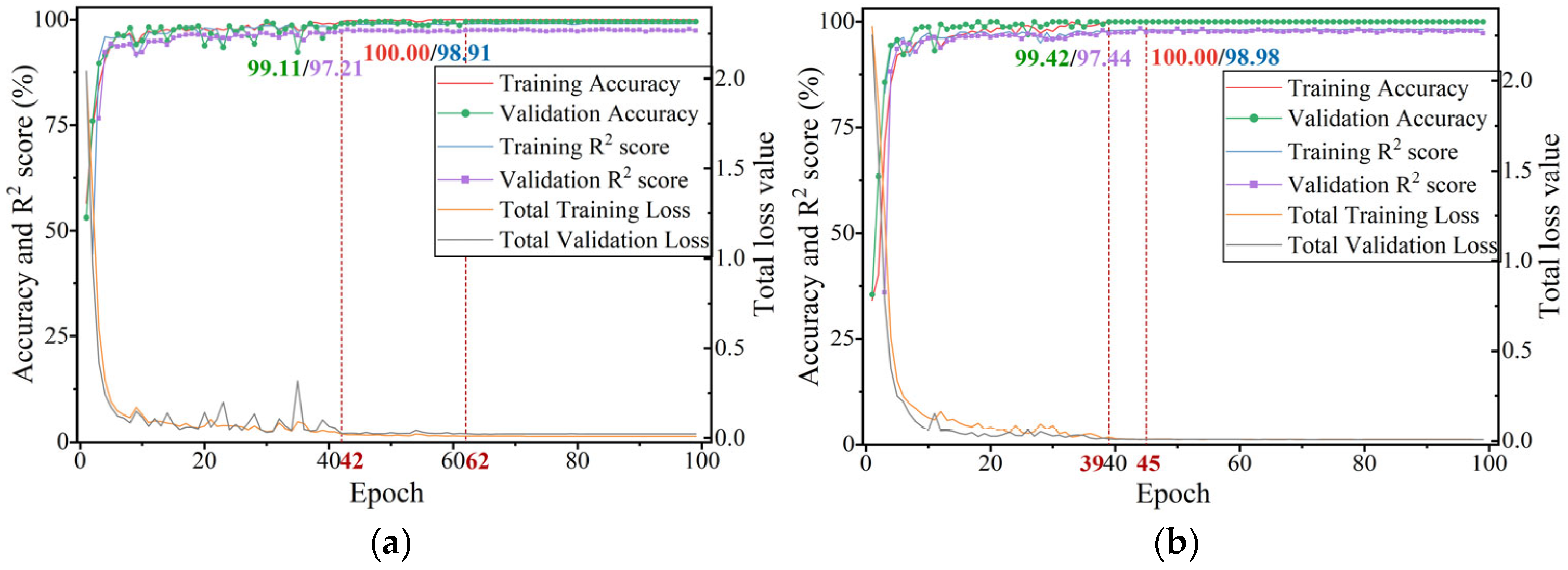

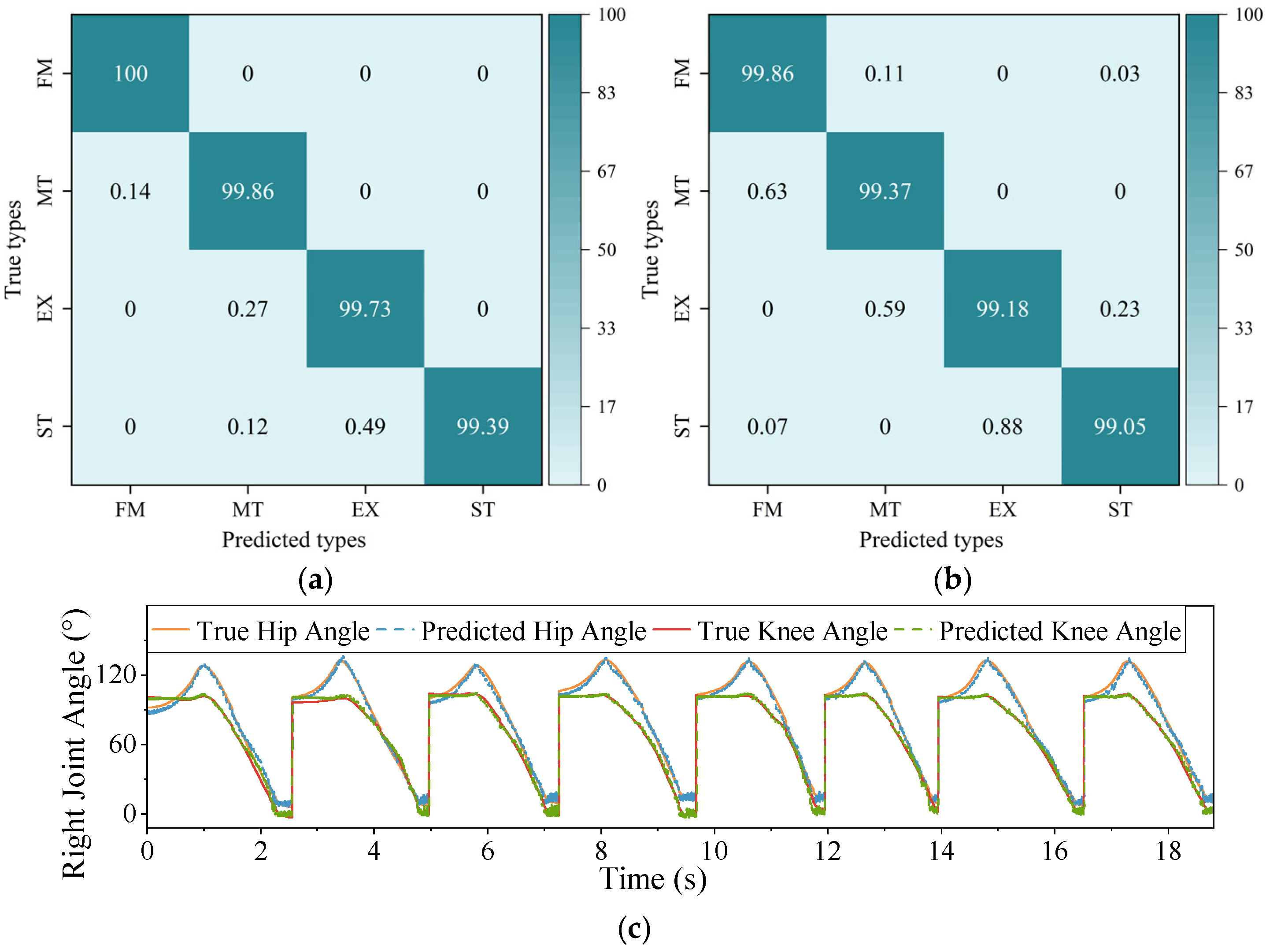

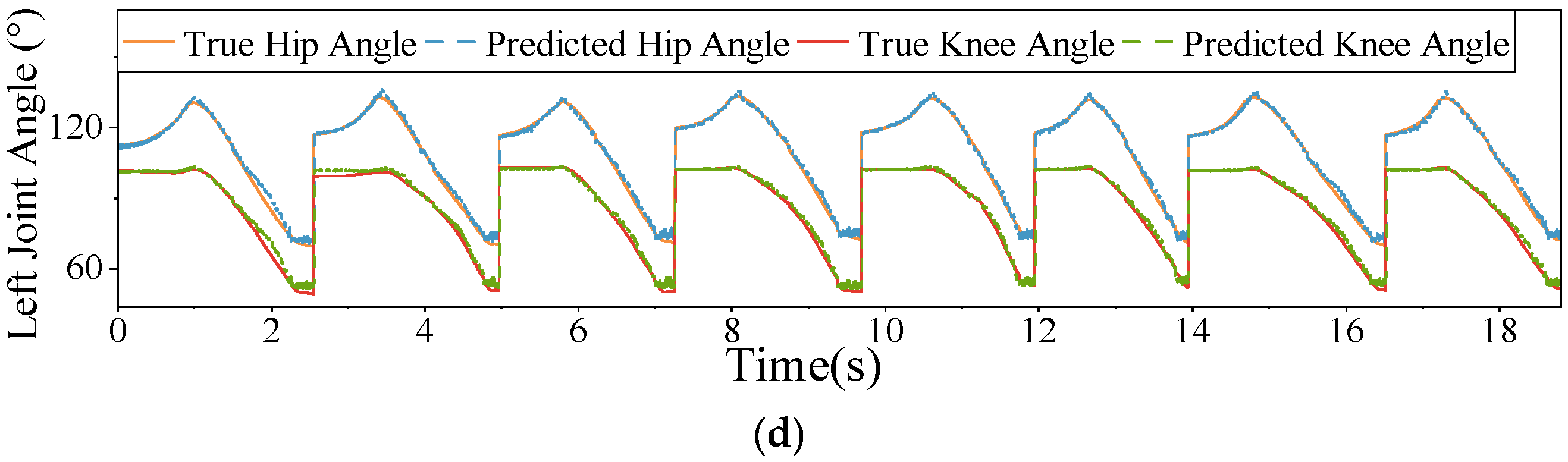

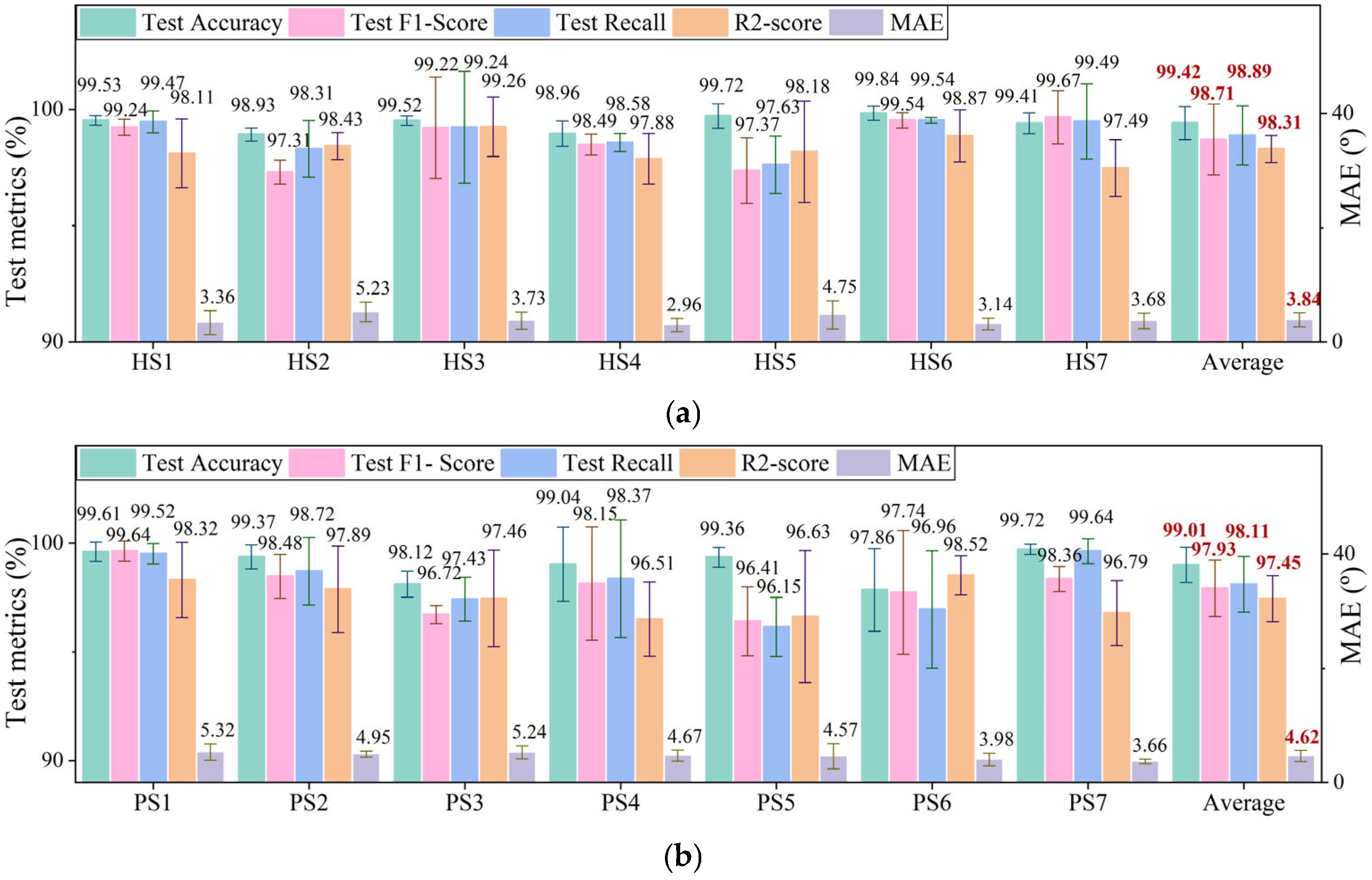

4.1. Recognition Results

4.2. Ablation Study

4.3. Comparison with State-of-the-Art Methods

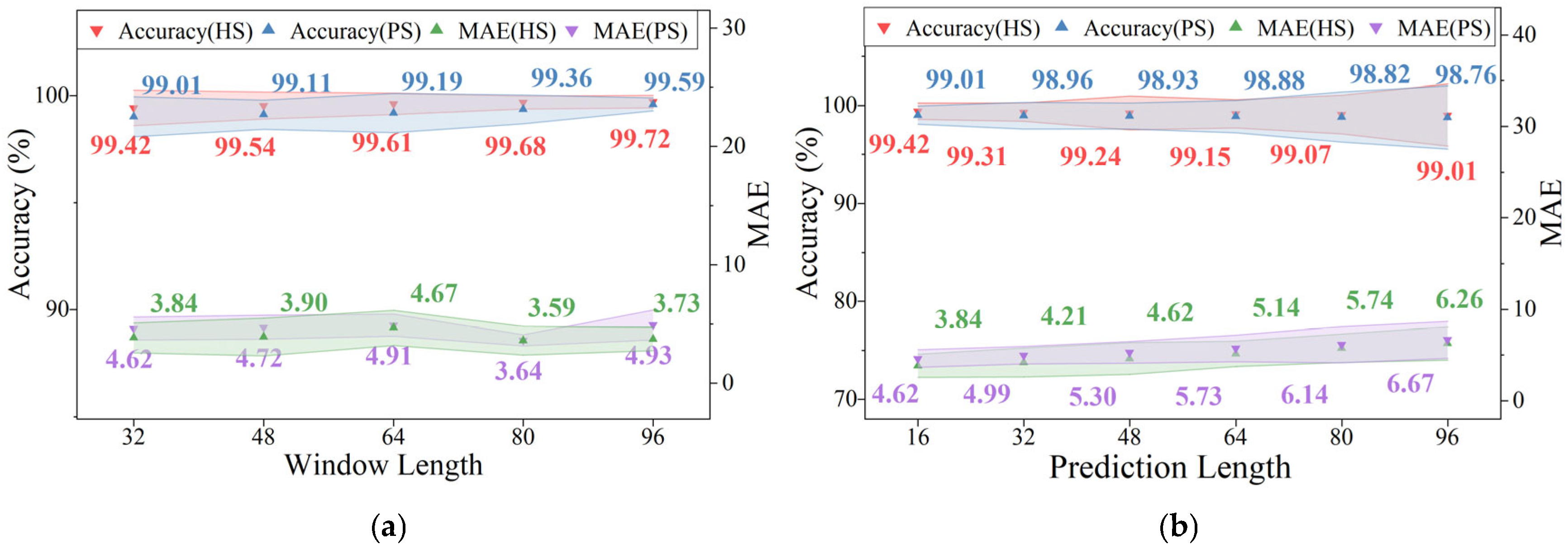

4.4. Effect of Window Length on Model Performance

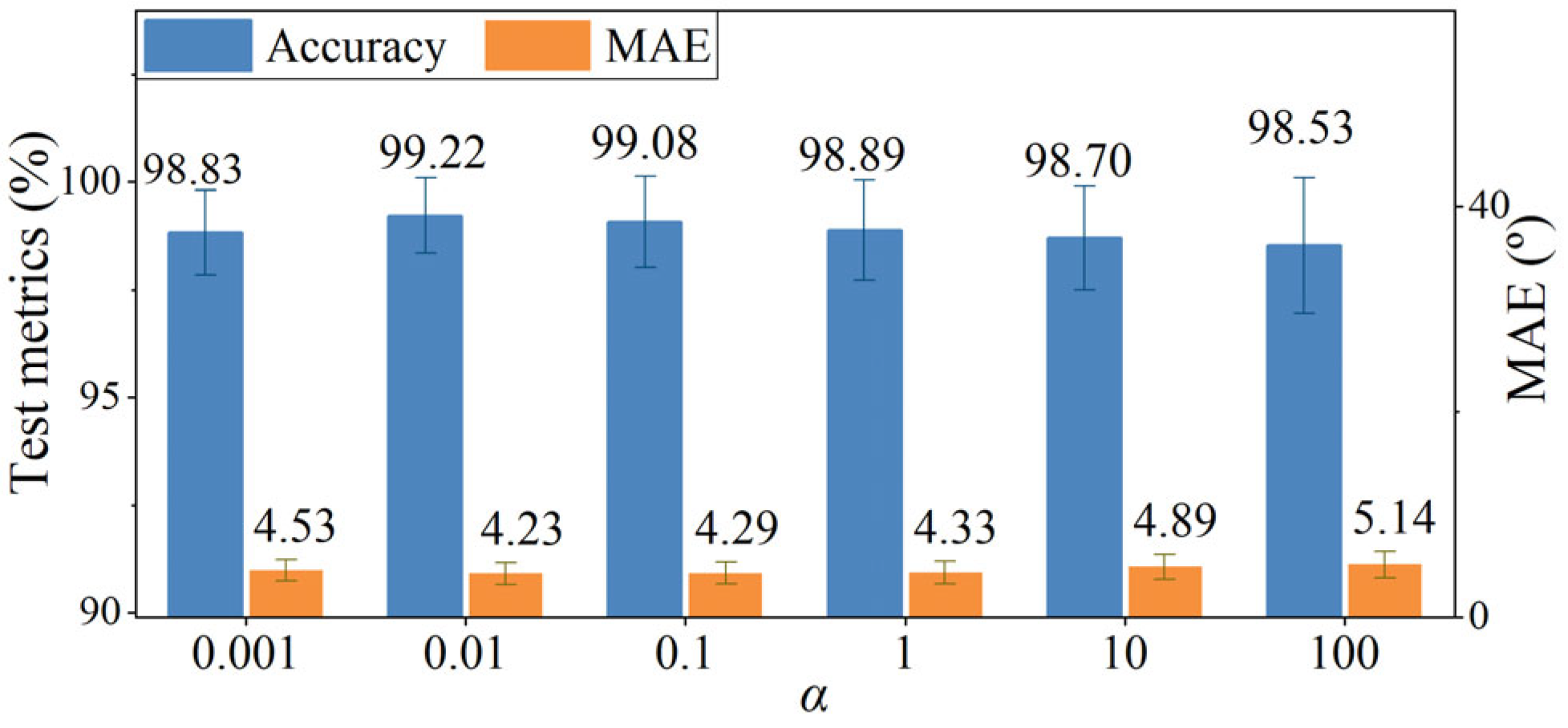

4.5. Effect of Scaling Factor α in the Loss Function

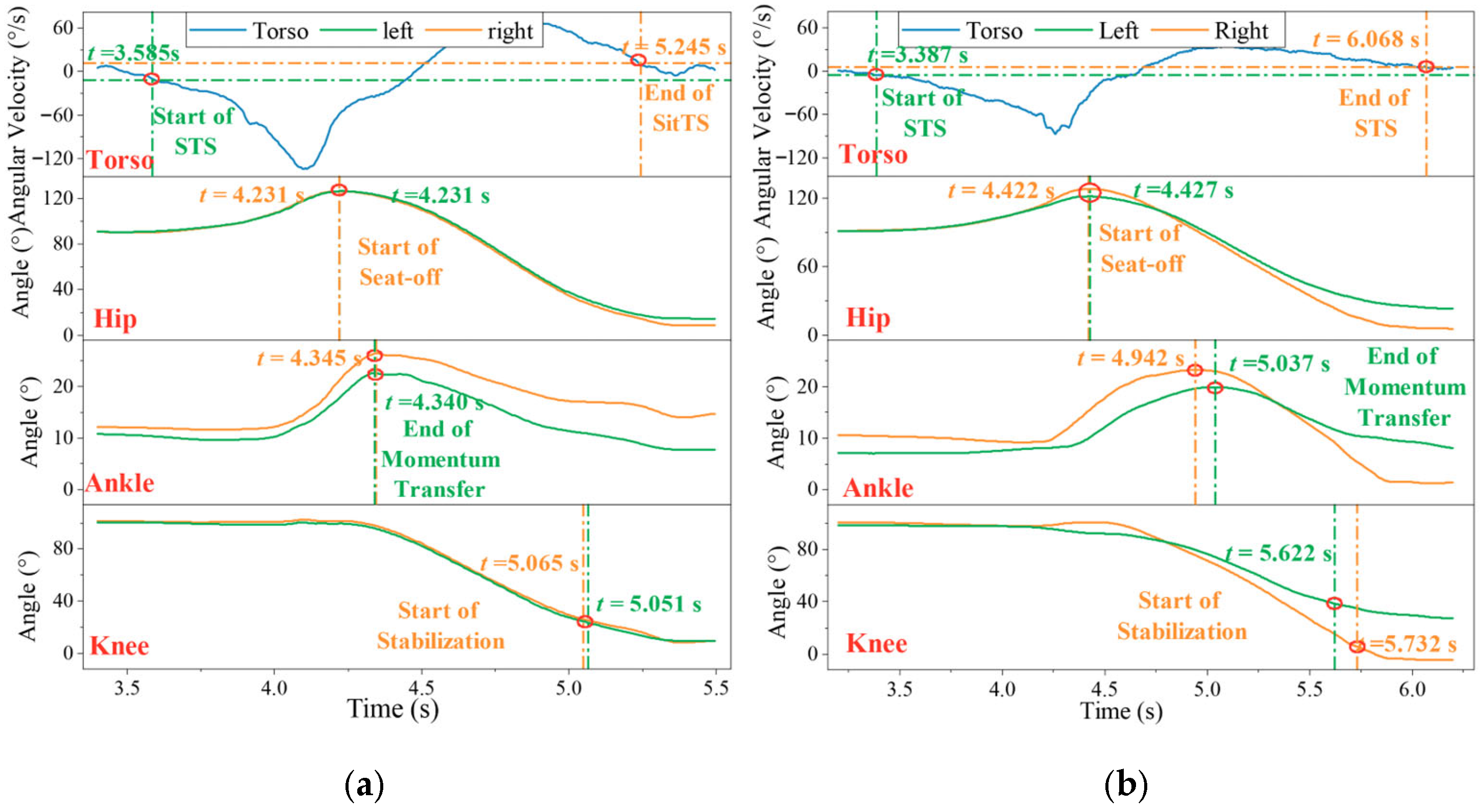

4.6. Healthy vs. Abnormal Subjects in STS Motion

5. Conclusions

- (1) The learnable queries are currently initialized randomly, which may not be optimal for all tasks or subjects. Future work will explore task-informed or anatomy-guided initialization strategies to enhance convergence and performance.

- (2) The framework was evaluated only in an intra-subject setting, so its generalizability across individuals, especially those with diverse impairment levels, remains limited. We plan to integrate cross-subject transfer learning or domain adaptation techniques to improve robustness in real-world clinical deployment.

- (3) The system relies on a relatively high number of sEMG channels, which increases hardware complexity and cost. Ongoing efforts will focus on channel selection or sensor reduction methods to develop a lightweight, cost-effective version suitable for practical rehabilitation settings.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sub | M/F | Age (years) | Height (cm) | Weight (kg) | Sub | M/F | Age (years) | Height (cm) | Weight (kg) | Paretic Side | FMA-LE Score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HS1 | M | 26 | 181 | 75 | PS1 | M | 45 | 175 | 70 | R | 14 |
| HS2 | M | 22 | 183 | 72 | PS2 | F | 54 | 160 | 60 | R | 10 |
| HS3 | M | 23 | 180 | 85 | PS3 | M | 53 | 176 | 73 | R | 14 |
| HS4 | M | 21 | 178 | 72 | PS4 | M | 49 | 173 | 66 | L | 12 |
| HS5 | M | 21 | 185 | 80 | PS5 | M | 47 | 170 | 64 | R | 12 |
| HS6 | M | 20 | 175 | 72 | PS6 | M | 45 | 168 | 62 | L | 16 |
| HS7 | M | 24 | 168 | 62 | PS7 | F | 33 | 160 | 40 | R | 12 |
| Mean ± Std | / | 22.4 ± 1.9 | 178.6 ± 5.3 | 74.0 ± 6.1 | Mean ± Std | / | 46.6 ± 6.5 | 168.9 ± 6.2 | 62.1 ± 9.9 | / | 12.9 ± 1.8 |

| Model | Accuracy (%) | F1-Score (%) | Recall (%) | R² Score (%) | MAE (°) | NPs | MACs | IT (ms) |
|---|---|---|---|---|---|---|---|---|
| CNN | 97.48 ± 0.81 | 96.15 ± 1.89 | 96.49 ± 1.69 | 91.79 ± 2.84 | 6.05 ± 1.29 | 2,387,140 | 332,037,120 | 34.13 |
| MobileNet | 97.33 ± 0.83 | 95.75 ± 1.64 | 96.22 ± 1.52 | 90.98 ± 2.79 | 6.39 ± 1.57 | 2,370,830 | 226,246,656 | 32.71 |
| LSTM | 97.01 ± 1.17 | 95.13 ± 2.74 | 95.61 ± 2.45 | 94.12 ± 2.55 | 5.873 ± 1.37 | 259,396 | 170,278,912 | 31.20 |
| GRU | 97.23 ± 0.65 | 95.73 ± 1.73 | 96.13 ± 1.54 | 94.31 ± 2.45 | 5.54 ± 1.13 | 204,996 | 150,307,968 | 29.91 |
| BERT | 96.78 ± 1.49 | 95.21 ± 2.50 | 95.49 ± 2.69 | 94.01 ± 2.37 | 5.63 ± 1.34 | 268,214 | 141,582,592 | 38.83 |
| Transformer | 97.21 ± 0.84 | 95.97 ± 1.56 | 96.13 ± 1.48 | 94.76 ± 2.09 | 5.16 ± 1.15 | 312,190 | 353,863,552 | 43.64 |
| iTransformer-DTL | 99.22 ± 0.76 | 98.17 ± 1.41 | 98.50 ± 1.28 | 97.88 ± 2.21 | 4.23 ± 1.11 | 170,376 | 130,228,224 | 29.61 |
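The two regression metrics in the table above follow standard definitions, sketched here in plain Python. The sample values are made up for illustration and are not taken from the dataset; the predicted quantity is assumed to be an angle, since the table reports MAE in degrees.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values only (degrees).
angles_true = [10.0, 20.0, 30.0, 40.0]
angles_pred = [12.0, 18.0, 33.0, 39.0]

mae = mean_absolute_error(angles_true, angles_pred)  # (2 + 2 + 3 + 1) / 4 = 2.0
r2 = r2_score(angles_true, angles_pred)              # 1 - 18 / 500 = 0.964
print(f"MAE = {mae:.2f} deg, R2 = {100 * r2:.1f} %")
```

The table expresses R² as a percentage, so a value of 0.964 from this function corresponds to 96.4 in that column.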

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Zhang, C.; Yu, Z.; Liu, Y.; Deng, C. A Dual-Task Improved Transformer Framework for Decoding Lower Limb Sit-to-Stand Movement from sEMG and IMU Data. Machines 2025, 13, 953. https://doi.org/10.3390/machines13100953
