1. Introduction

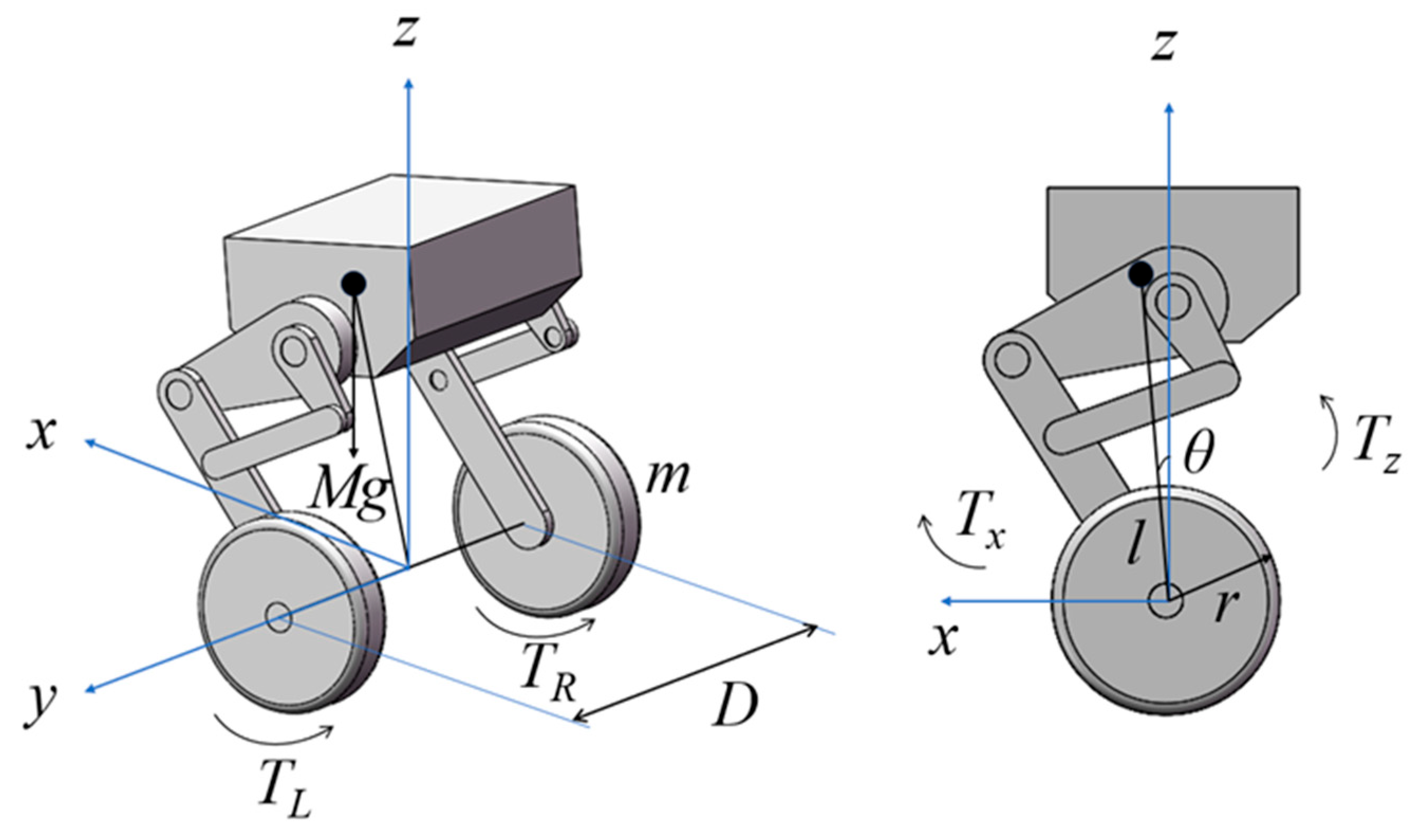

In the field of mobile robotics, wheeled bipedal robots (WBRs) combine the advantages of humanoid and wheeled robots. They use leg structures to isolate torso vibrations caused by terrain irregularities, while employing wheels for balance and dynamic motion, making them well-suited for human working environments [

1]. However, in typical working environments, WBRs are frequently subject to various types of disturbances, leading to issues such as slow control response, insufficient vibration suppression, overshoot, or oscillations, which negatively affect their performance [

2]. Therefore, it is essential to design an anti-disturbance balance control algorithm that ensures control precision under limited computational resources, further enhancing the anti-disturbance performance of WBRs.

For WBRs, balance capability and anti-disturbance performance are fundamental for executing other actions. Traditional balance control methods can achieve basic balance control for WBRs, such as Proportional–Integral–Derivative (PID), Linear Quadratic Regulator (LQR), and Zero-Moment Point (ZMP) methods. These methods typically simplify the WBR to a Linear Inverted Pendulum (LIP) by ignoring leg dynamics to achieve balance control [

3]. Appropriate control algorithms can also be obtained through model reduction methods. A reduced-order model based on the Cuckoo Search Algorithm can reduce the computational burden of balance control [

4]. In nonlinear domains that cannot be simplified, balance control can be achieved using the State-Dependent Riccati Equation (SDRE) control algorithm [

5]. For WBRs with complex structures and state-space equations that are difficult to solve, Reinforcement Learning (RL) and Adaptive Dynamic Programming (ADP) can be employed to provide adaptive optimal control solutions based on learning [

6]. For WBRs with known state-space equations, LQR can effectively achieve balance control with highly efficient performance [

7]. However, the gain coefficients of the aforementioned control algorithms are mostly fixed, resulting in limited real-time responsiveness when the robot’s external environment changes.

When the system model is known and the main control device has sufficient computational power, Model Predictive Control (MPC) can be effectively used for state tracking of the model. Thanks to the “online” feature of MPC, the algorithm can update the planned trajectory within the feedback loop at a certain frequency, providing the robot with enhanced robustness against disturbances [

8]. Additionally, MPC can incorporate various inequality constraints, enhancing the system’s insensitivity to external disturbances [

9].

The original MPC method can effectively achieve anti-disturbance balance control for WBRs. A control algorithm is designed based on the robot’s state-space model and formulated as a quadratic programming problem, yielding optimal outputs for given prediction and control horizons. In certain scenarios, this method achieves minimal control efforts superior to those of the LQR algorithm [

10]. Shahida Khatoon et al. [

11] applied MPC with Linear Quadratic Gaussian Controller (LQG) to a wheeled inverted pendulum system and conducted impact tests, with simulation results showing that MPC exhibits strong robustness. Yu Jianqiao et al. [

12] applied MPC to the posture control of a WBR, ensuring that the robot tracks the posture trajectory with minimal error and maintains balance. Niloufar Minouchehr et al. [

13] treated a two-wheeled inverted pendulum as an underactuated nonlinear system and designed an MPC algorithm to achieve anti-disturbance control. Marco Kanneworff et al. [

14] developed an Intrinsically Stable MPC (IS-MPC) algorithm, achieving stable control of an arm-equipped wheeled inverted pendulum robot under explicit constraints. Cao Haixin et al. [

15] designed a constrained MPC algorithm to achieve balance control for a WBR on sloped terrains and enhanced the robustness of the control algorithm by developing an Extended State Observer (ESO). These methods applied MPC to WBRs and achieved balance control; however, they did not constrain system states and control inputs based on the robot’s hardware performance. This oversight could lead to inaccuracies in the MPC’s computed results, rendering the robot uncontrollable due to hardware power limitations. In particular, when the robot is subjected to disturbances, the driving wheel motors often need to output large torques within a short time to maintain balance. However, if the motors operate beyond their rated torque for extended periods, issues such as control delays, overheating, and performance degradation may occur. Unlike hard constraints, which may render the optimization problem infeasible under large disturbances, soft constraints introduce a penalty mechanism that tolerates minor violations. This design ensures the continuous feasibility of the controller while balancing constraint satisfaction and control performance, thereby enhancing robustness and practicality in real-world applications. By introducing soft constraints into the MPC framework, the range of control inputs can be effectively limited, ensuring that the control signals remain within the rated torque and below the peak torque. This not only protects the hardware from overload but also enhances the feasibility and anti-disturbance performance of the control algorithm. To enhance the effectiveness of MPC in practical applications, researchers have proposed various methods to optimize control performance. Daniel C. Fernández et al. [

16] conducted simulation experiments with multiple prediction horizons, achieving the control objectives of reducing control errors and ensuring computational speed. Li Xingjia et al. [

17] employed the Transient Search Optimization (TSO) algorithm to optimize the parameters of the MPC objective function, reducing the overshoot and steady-state error of the controlled robot. A. K. Kashyap et al. [

18] combined the Ant Colony Optimization (ACO) algorithm with MPC to optimize the robot’s position-solving problem in obstacle scenarios. Chen Zhenbin et al. [

19] applied the Proximal Policy Optimization (PPO) learning method to adaptively adjust the prediction range within the MPC framework, achieving stable trajectory tracking. Jin Mengtao et al. [

20] used the Chaos Particle Swarm Optimization (CPSO) algorithm to optimize the solving capability of MPC, effectively improving trajectory tracking performance. These methods optimized MPC parameters for various target scenarios applied to robots, improving the tracking performance of target state variables. However, the optimized parameters were relatively limited, and the considered scenarios did not comprehensively account for disturbances. For WBRs with high real-time response requirements, the sizes of the MPC’s prediction and control horizons affect both the computation of control outputs and the main control device’s computation time [

21]. Among common heuristic optimization algorithms, the Dung Beetle Optimizer (DBO) algorithm proposed by Xue Jiankai et al. [

22] can achieve multi-objective optimization and solutions. Yang Pei et al. [

23] applied DBO to solve the optimization model for lightweight objectives, obtaining a global optimal solution with fewer iterations. Li Yanhui et al. [

24] applied DBO to optimize wind models, obtaining an optimal set of model parameters. Due to its powerful solution space exploration capability, DBO outperforms classic optimization algorithms such as PSO [

25].

Therefore, this paper proposes a soft-constrained MPC algorithm tailored to the hardware specifications of WBRs and optimizes the combination of prediction and control horizons for different disturbance scenarios, further enhancing the robot’s stability under disturbances. The main contributions of this paper are as follows:

- (1)

To address the problem of anti-disturbance control for WBRs, a soft-constrained MPC algorithm considering hardware power limitations is proposed to optimize the feasible domain of control outputs.

- (2)

The domain parameters of the MPC algorithm are optimized using the heuristic DBO algorithm, reducing the computation time of the main control device while ensuring the robot’s anti-disturbance performance.

- (3)

An SRobo110-II experimental platform and disturbance equipment are constructed to validate the proposed method through comparisons with the LQR balance control algorithm and others.

Besides this section, the structure of this paper is as follows:

Section 2 establishes the dynamic model of the WBR through Newtonian mechanics analysis.

Section 3 designs the MPC algorithm, sets reasonable control constraints based on the robot’s hardware, and optimizes the sizes of the MPC’s prediction and control horizons using the heuristic DBO algorithm.

Section 4 constructs the experimental platform for the WBR and conducts anti-disturbance experiments with multiple parameter sets, analyzing the anti-disturbance performance under different horizon sizes and control algorithms.

Section 5 provides a conclusion of the research presented in this paper.

3. MPC Algorithm Design for WBRs

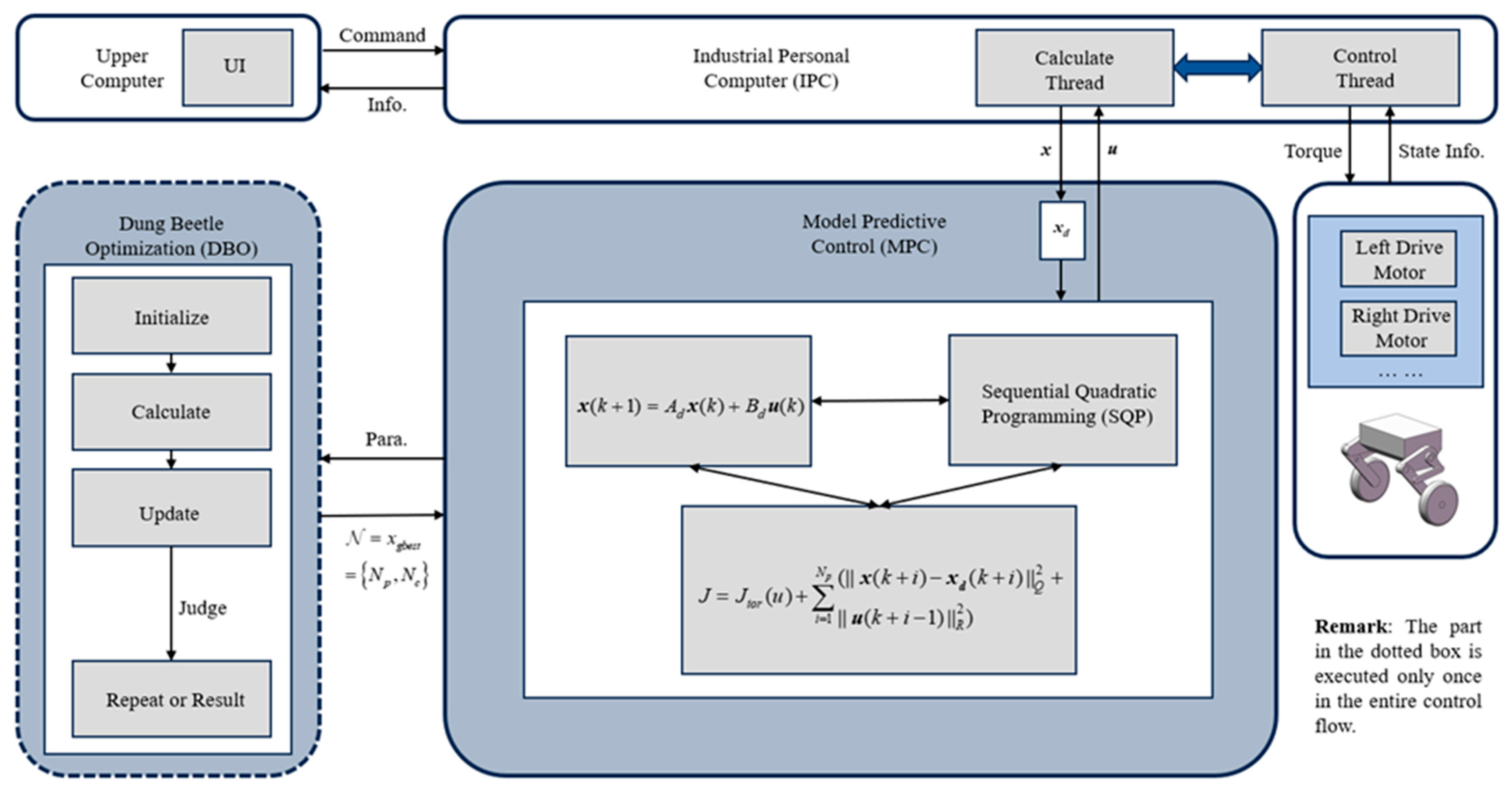

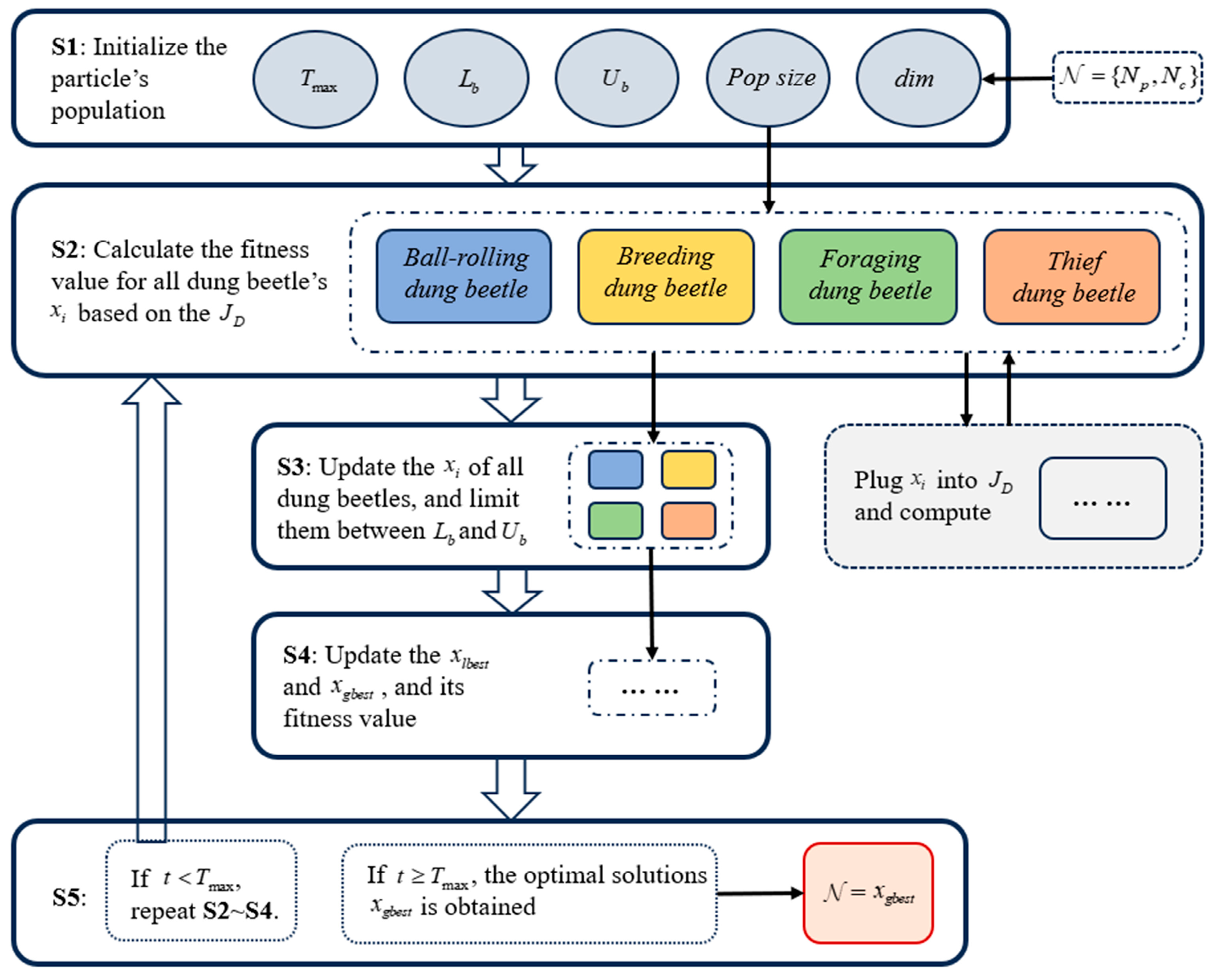

As discussed in

Section 2, under wheeled motion, the WBR can be equivalently simplified to a wheeled inverted pendulum system. The wheeled inverted pendulum system is highly unstable. Without a control algorithm, the system will gradually diverge over time, making it difficult to restore balance. Therefore, a proper control algorithm is required to ensure the system’s controllability and allow it to converge over time, maintaining a balanced posture. MPC is widely used in the industrial field, and in the past decade, extensive research has been conducted on MPC’s technical selection, variable control, and performance estimation [

30]. Therefore, this paper adopts MPC as the balance control algorithm for the WBR and optimizes the horizon parameters of the MPC with anti-disturbance as the control objective. The control algorithm proposed in this paper, along with the control framework applied to the robot, is shown in

Figure 3.

In

Section 3.1, the state-space equation is discretized, and a soft-constrained MPCler is formulated. Subsequently,

Section 3.2 analyzes the stability of the wheeled inverted pendulum system under the MPC framework. In

Section 3.3 and

Section 3.4, the horizon parameters of MPC are embedded into the dung beetle optimization algorithm, and a cost function is devised to iteratively refine these parameters.

3.1. Soft-Constrained MPC Algorithm

In practical applications, the system state variables are obtained through discrete sampling during the control cycles. By discretizing Equation (5), the discrete state-space equation used for MPC calculations is obtained:

The discretization method is as follows:

where

and

represent the state vector and control input at time step

,

represents the state vector at time step

,

is the discretization period,

and

are the discretized system matrix and input matrix, respectively.

The objective of MPC is to solve an optimization problem at each sampling instant, based on the given prediction horizon

and control horizon

, generating a sequence of future control inputs

such that the system’s behavior within the prediction horizon meets certain performance criteria. At time step

, which serves as the initial sampling instant of the system, a time series

is selected, yielding the system state expression with a step length of

:

By rearranging Equation (11) into matrix form, it can be rewritten as:

where

and

are recursive matrices composed of

and

, respectively;

is the stacked vector of future predicted states, and

is the stacked vector of future control inputs, expressed as:

The following cost function is defined for MPC:

where

and

are the weight matrices for the state error and control input, respectively.

is the desired state vector, and for anti-disturbance balance control,

holds at any time step in the time series

.

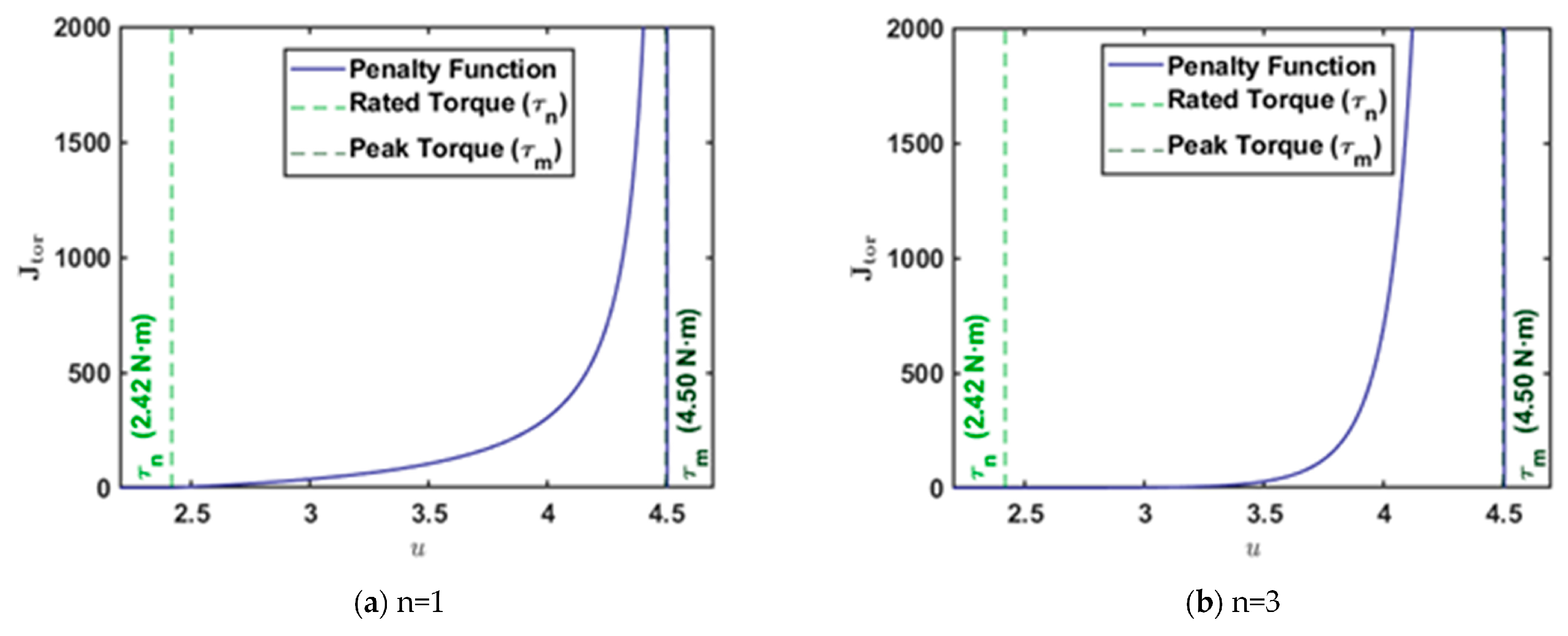

In the control framework of WBRs, the system’s control frequency is typically in the microsecond range to meet real-time response requirements. When the robot is subjected to disturbances, the hub motors need to respond quickly and produce large control torques. However, if the motors operate beyond their rated torque for an extended period, issues such as control lag, overheating, and reduced control precision may arise. Therefore, soft constraints need to be designed to limit the solution domain obtained through MPC, ensuring that the control inputs do not exceed the rated torque for extended periods and always remain below the peak torque.

The designed soft constraint is expressed in the form of a penalty function. Let

be the constraint penalty weight and

be the nonlinear acceleration factor, both of which control the magnitude of the penalty function. The value of

needs to be adjusted according to the motor characteristics. From the motor characteristic curve, it is known that the heating power

of the motor is proportional to the square of the current:

where

is the motor current, and

is the resistance of the motor windings. Let the motor’s temperature rise be denoted as

:

where

is the temperature rise coefficient of the motor, obtained from the motor characteristic curve. Let

be the torque increment corresponding to the temperature rise

. Since the current is proportional to the torque increment, the temperature rise-torque coefficient is defined as:

Based on the motor’s insulation class, the allowable temperature rise range

can be selected, yielding the constraint penalty weight:

where

and

represent the rated torque and peak torque of the hub motor, respectively.

As for the nonlinear acceleration factor

, its magnitude determines the degree of nonlinearity of the penalty function. For the hub motor of the WBR, it is necessary to provide short-term torque that exceeds the rated torque. To allow for short-term overload, the ideal range for

should be between

. Based on the above definitions, the following nonlinear penalty function is designed:

The reference curve of the function in Equation (19) is shown in

Figure 4.

By incorporating the designed penalty function from Equation (19) into Equation (14), the final cost function of the MPC is obtained:

Using the Sequential Quadratic Programming (SQP) algorithm, Equation (20) is solved iteratively by constructing and solving a series of quadratic programming subproblems to gradually approach the optimal solution, yielding the optimal system control input for the corresponding state vector.

3.2. Stability Analysis

System stability is one of the core issues in control design, that is, whether the system can remain controllable or gradually return to the equilibrium point after being subjected to initial perturbations or external disturbances. To verify the stabilizing effect of the proposed soft-constrained MPC controller on the wheeled biped robot and its equivalent wheeled inverted pendulum system, this study conducts an eigenvalue-based stability analysis for the systems with and without the incorporation of the soft-constrained MPC controller.

In the stability analysis, an eigenvalue discrimination method based on state-space theory is adopted. This method requires only the eigenvalue calculation of the system state matrix , which enables a rapid determination of stability while avoiding complex computations. Let the eigenvalues of the continuous-time system state matrix in Equation (5) be denoted as . The following criteria apply:

If the real parts of all eigenvalues satisfy , the system is asymptotically stable.

If the real parts of all eigenvalues satisfy , and for those with , the corresponding Jacobian matrix has no repeated roots, the system is marginally stable.

If there exists any eigenvalue such that , the system is unstable.

First, in the absence of the soft-constrained MPC controller, the main parameters of the robot, such as body mass and moment of inertia, are substituted into matrix

, yielding the following numerical expression:

The eigenvalue calculation gives:

Since , the system is determined to be unstable. This indicates that, without control, the wheeled inverted pendulum system cannot maintain stability, and thus an appropriate controller must be introduced to ensure state convergence.

After incorporating the MPC controller, the closed-loop system matrix becomes

, where

is the equivalent feedback matrix given by:

Thus, the closed-loop state matrix is expressed as:

The eigenvalues of

are calculated as:

The results show that all eigenvalues have negative real parts, indicating that the system achieves asymptotic stability under the soft-constrained MPC controller. Furthermore, the controllability matrix of the closed-loop system is full rank, confirming that the system remains controllable. Therefore, this analysis not only validates the effectiveness of the controller in stabilizing the wheeled inverted pendulum system but also highlights the necessity of the soft-constrained MPC in ensuring both stability and controllability.

3.3. DBO Algorithm Iteration Method

In the process of solving MPC, in addition to setting appropriate constraints, the sizes of the prediction horizon and control horizon are equally critical to computational complexity and control performance. The optimization problem in MPC essentially involves large-scale matrix computations and the iterative solution of quadratic programming problems. Proper selection of prediction and control intervals can balance computational cost and control precision. When designing the MPC algorithm for wheeled biped robots, it is necessary to determine the combination of prediction and control horizons based on the given weight matrices and . This ensures that real-time performance and anti-disturbance capability are achieved while avoiding excessive computational burden.

The Dung Beetle Optimizer (DBO) is a heuristic multi-population optimization algorithm proposed by Xue Jiankai et al. [

20]. This algorithm avoids local optimal solutions through various iterative strategies and is suitable for solving multi-objective optimization problems such as

in MPC. In the iterative computation process, DBO seeks the optimal solution to multi-objective optimization problems by simulating the behavior of dung beetles, generating four subpopulations: ball-rolling, breeding, foraging, and thief. Beetles in different subpopulations update their positions from the initial location according to predefined strategies. During each iteration, they calculate the cost function at their current positions based on the optimization objective, and the final position is taken as the solution to the optimization problem. The position update strategies for the four types of dung beetles are as follows:

- (1)

Ball-Rolling Dung Beetle: Performs the most basic iterative optimization, simulating the process of beetles navigating using sunlight. Random numbers

and obstacle decision number

are defined to determine whether the ball-rolling dung beetle encounters an obstacle during movement. The position update equation for the obstacle-free mode is as follows:

where

is the current iteration count, and

represents the position of the

dung beetle at the

iteration.

is the natural coefficient, determined to be either 1 or −1 based on probabilistic distribution, representing whether the beetle deviates from its original direction.

denotes the regular deflection coefficient, and

denotes the sunlight deflection coefficient.

is the light intensity variation, determined by the current position

and the globally worst position

from the previous iteration.

When the ball-rolling dung beetle encounters an obstacle, it updates its direction through a dance. The position update equation for the obstacle mode is as follows:

where

determines the degree of deviation between the new direction and the original direction.

- (2)

Breeding Dung Beetle: Uses a boundary selection strategy to simulate the dung beetle’s choice of the brooding ball region. Based on the current local optimal solution, it expands both inward and outward to generate two new solutions, thereby searching for a better solution near the local optimum. The position update equation is as follows:

where

is the position of the

brooding ball at the

iteration.

and

represent two independent random vectors of size

.

determines the extent of the brooding ball region expansion, where

is the maximum number of iterations.

and

are the lower and upper bounds of the brooding ball region, ensuring that the region dynamically adjusts with the number of iterations.

and

represent the lower and upper bounds of the optimization problem, respectively.

is the local optimal position at the

iteration.

- (3)

Foraging Dung Beetle: Similarly to the breeding dung beetle, it simulates the selection of a foraging area by young dung beetles. Based on the current global optimal solution, it generates two new solutions to search for a better solution near the global optimum. The position update equation is as follows:

where

and

represent the lower and upper bounds of the foraging area, respectively.

is a normally distributed random number.

is a random vector following a uniform distribution.

is the global optimal position at the

iteration. The definitions of the remaining parameters are the same as those for the breeding dung beetle.

- (4)

Thief Dung Beetle: It searches for a potentially better solution by mimicking the features of the current global optimal solution and introducing random perturbations. The position update equation is as follows:

where

is a constant that determines the magnitude of the random perturbation.

represents a random vector of size

following a normal distribution. The definitions of the remaining parameters are the same as those for the breeding dung beetle and the foraging dung beetle.

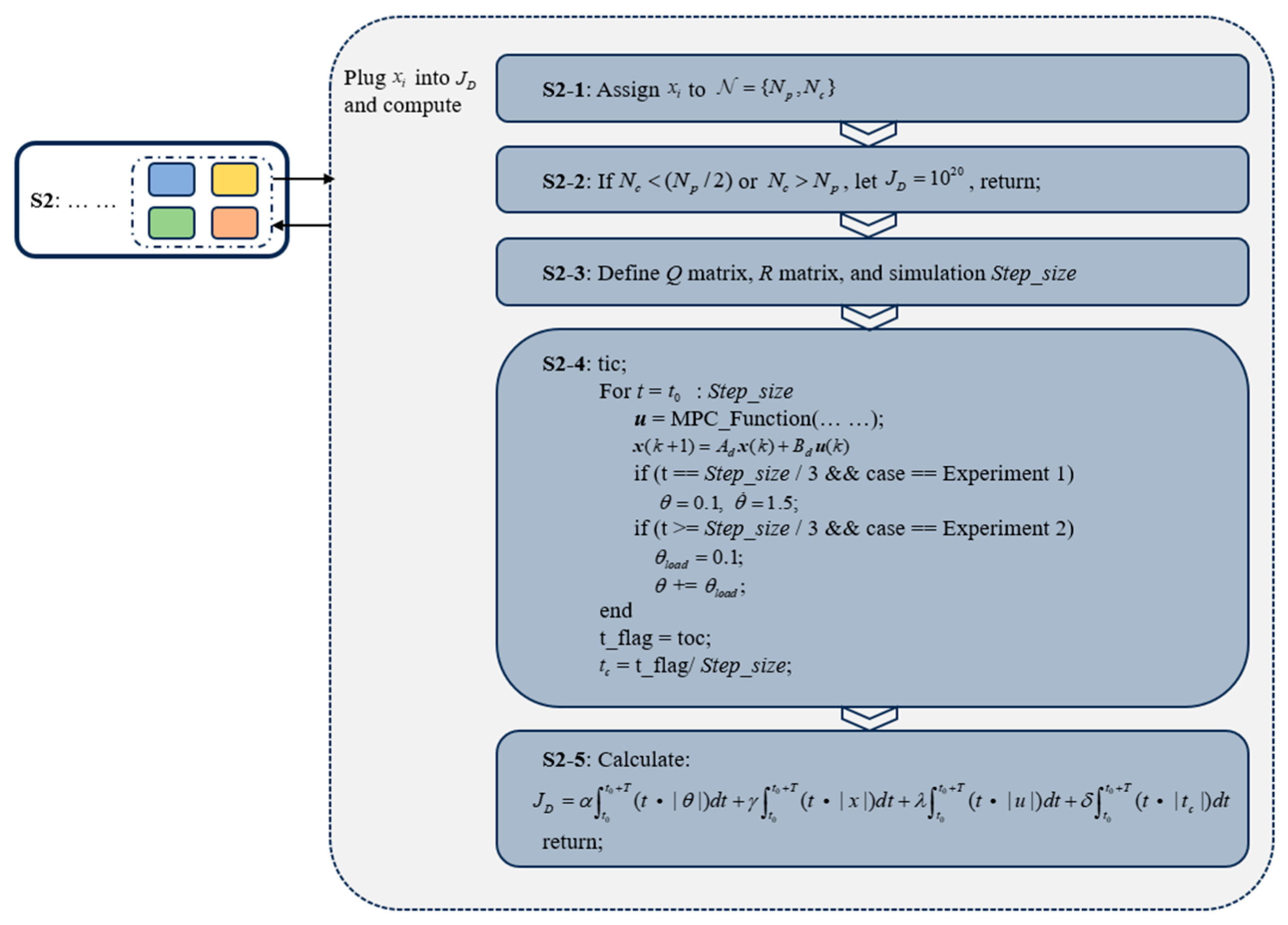

3.4. MPC Optimization Using the DBO Algorithm

In the optimization process of DBO, the design of the cost function

affects the characteristics of the final result. For anti-disturbance scenarios, we aim for the robot to quickly return to a balanced position after being disturbed, minimizing the amplitude of body oscillation and overall displacement, while ensuring the required torque and computation time. This paper is based on the classical Integral of Time-weighted Absolute Error (ITAE) cost function, which fully accounts for the accumulated absolute values of state variables over a certain time period [

31].

is defined as:

where

and

represent the start and duration of the experiment, respectively, and

is the computation time of a single MPC calculation.

,

,

and

are the weighting parameters for the tilt angle of the center of mass, displacement, average torque, and computation time, respectively, with

. The remaining parameters are defined in Equation (5).

By setting the initial values for feasible solutions and specifying the population size and number of iterations, the cost function can be iteratively optimized according to Equation (26). The main optimization process is shown in

Figure 5.

When calculating the position information for each dung beetle, the corresponding horizon parameter combination is transmitted into the state-space equations of the wheeled biped robot, and different types of disturbance simulations are conducted. Based on the simulation results, the cost function is calculated, updating the current optimal position and the position of each dung beetle in the next iteration. Among the four position update methods for dung beetles, the ball-rolling dung beetle serves as the basis for iterative optimization to obtain the optimal solution, with the solution given by Equations (21) and (22). Additionally, the breeding, foraging, and thief dung beetles perform extended iterative optimization around the optimal solution, with the solutions given by Equations (23)–(25), further improving the optimization efficiency.

During disturbance simulations, when

is much smaller than

, MPC may fail to capture key features of the system response, thereby affecting control performance. When

exceeds

, the insufficient prediction horizon may result in model distortion. Therefore, a larger cost function value should be assigned for these two scenarios. Moreover, during each simulation, the single computation time

obtained through the tic and toc methods may vary slightly due to system frequency changes. Thus, the mean value of

over the simulation

should be calculated before computing the cost function. The disturbance simulation and cost function calculation process are shown in

Figure 6.

In summary, by reasonably assigning the weight parameters in , the DBO can be used to obtain an MPC algorithm that balances computational cost and control precision, enabling the WBR to achieve better anti-disturbance performance within a shorter computation time.

4. Anti-Disturbance Experiments for the WBR

This section will experimentally validate the ability of the wheeled biped robot using the MPC algorithm to restore balance under disturbance scenarios and compare it with the commonly used LQR algorithm. Additionally, it will analyze the impact of prediction horizon and control horizon on anti-disturbance performance under DBO, PSO, and non-optimized conditions.

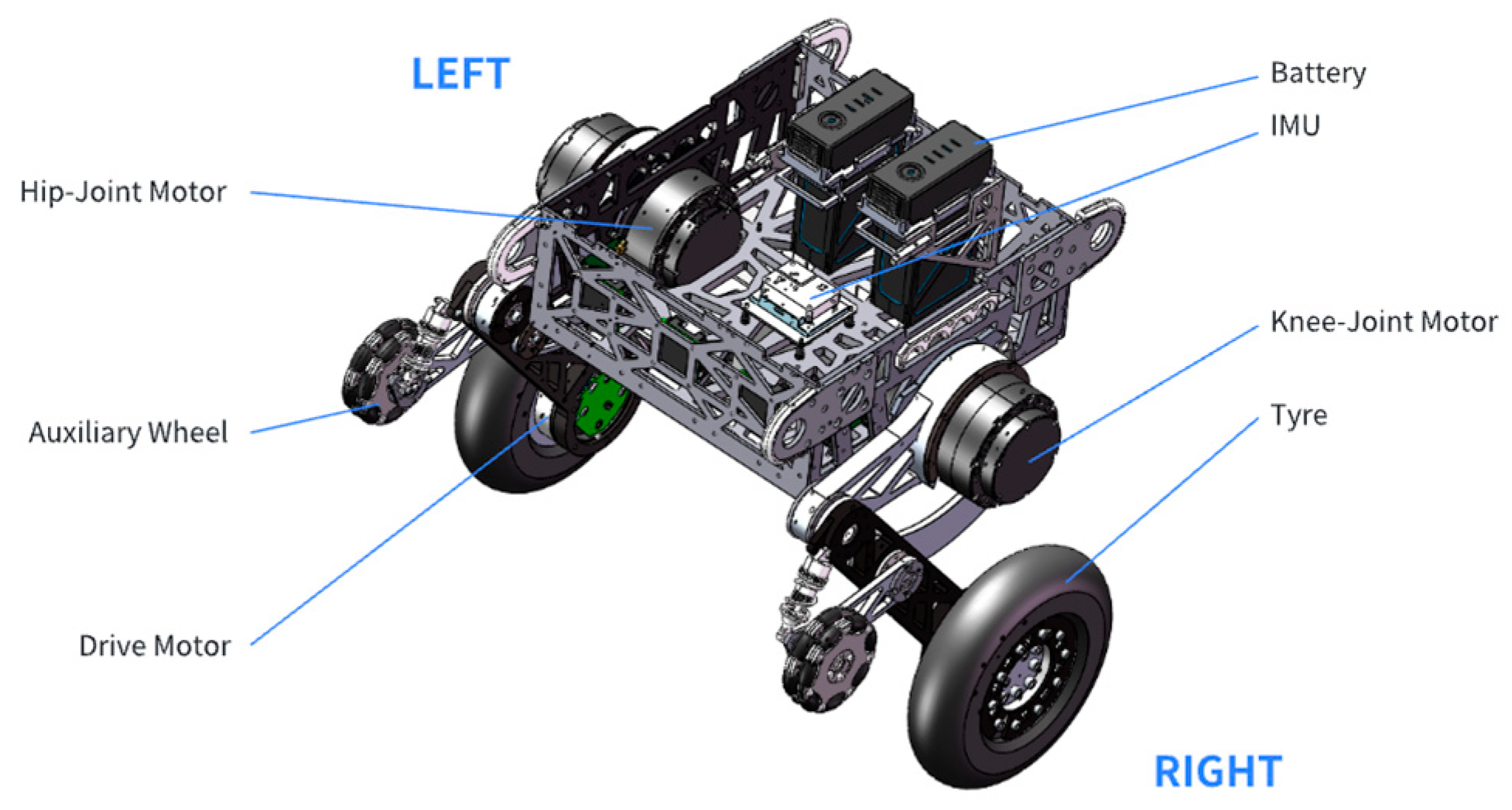

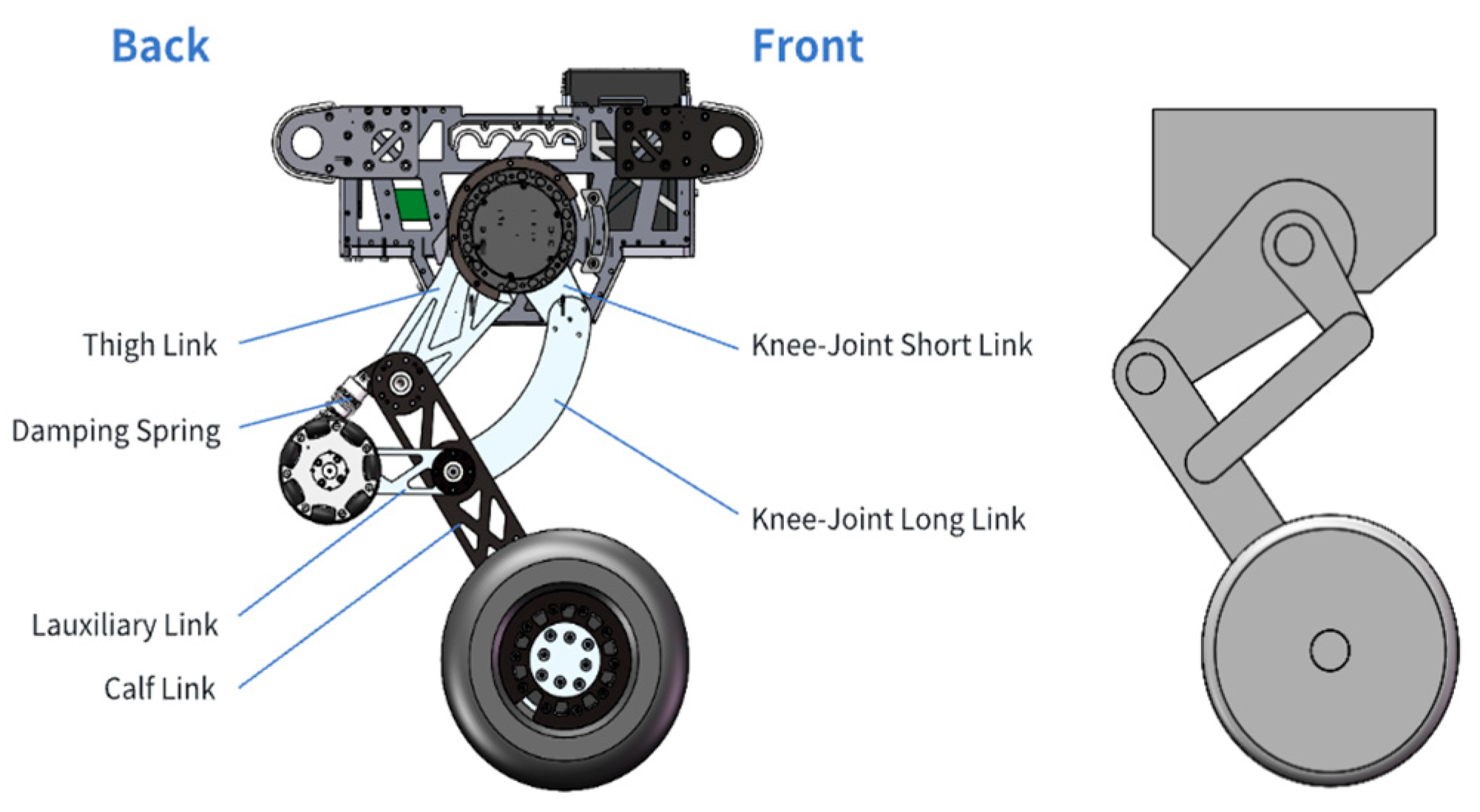

4.1. Experimental Platform Setup

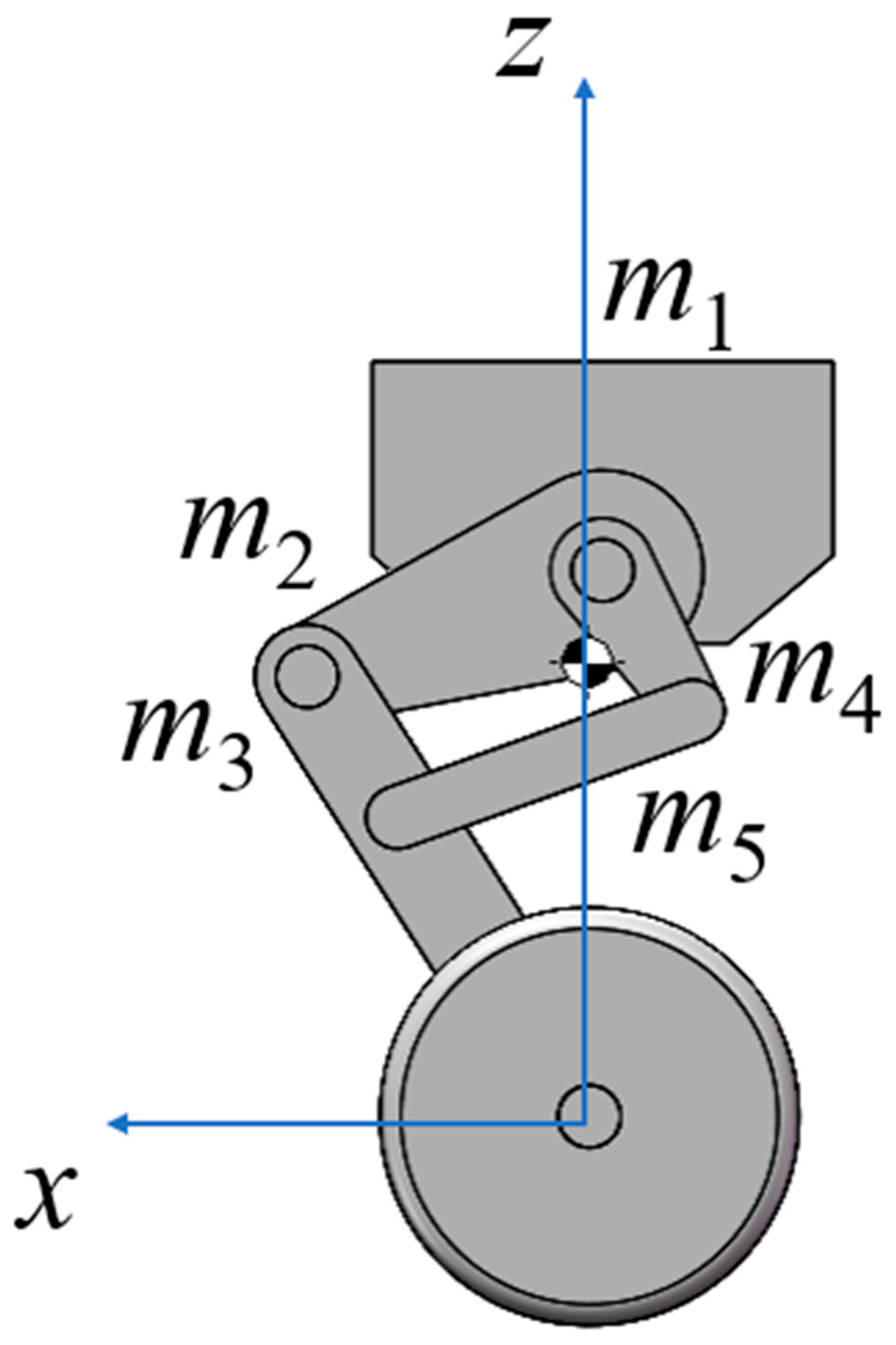

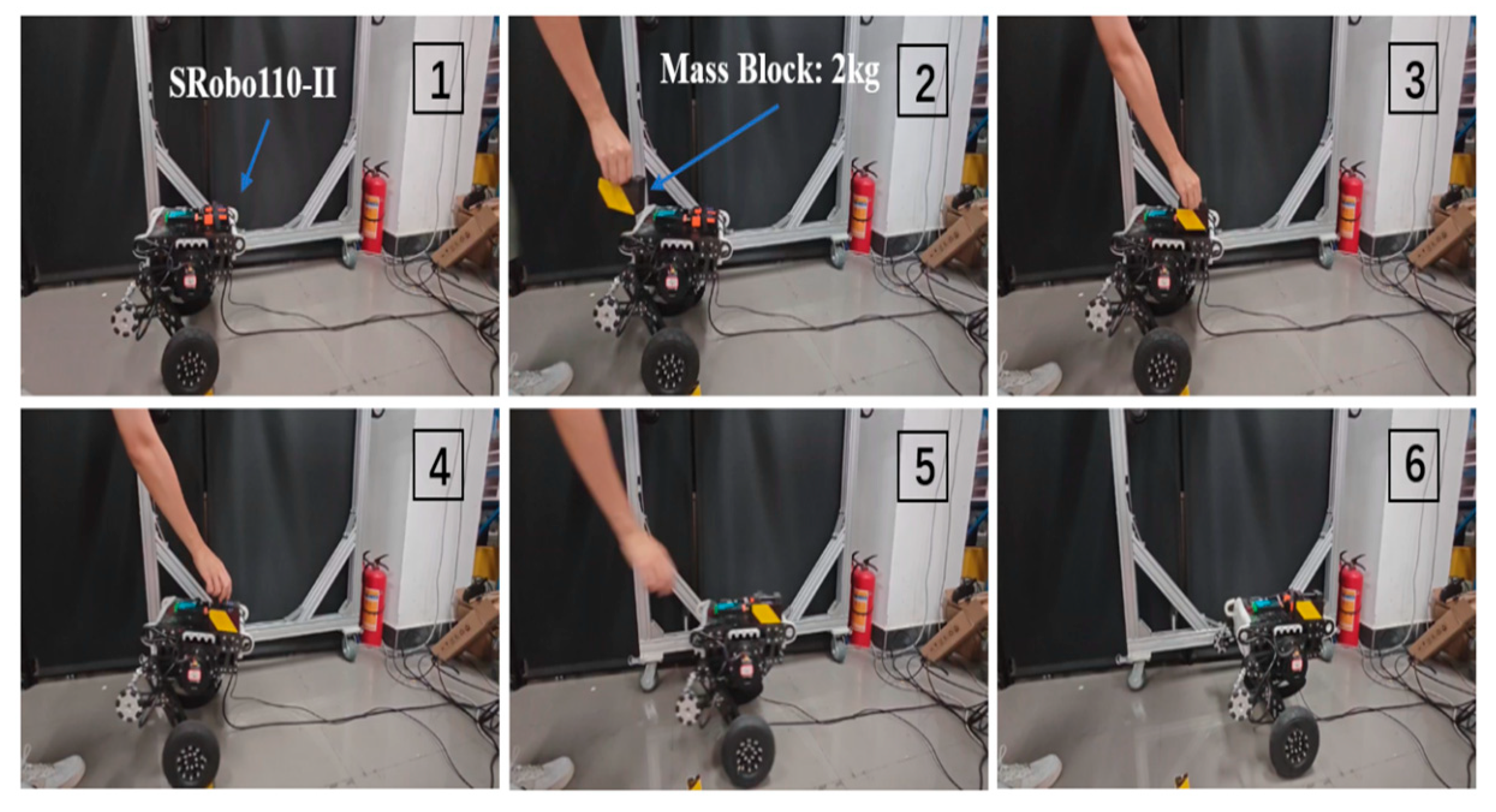

To validate the control algorithm proposed in this paper, a serial-structure wheeled bipedal robot (WBR) named SRobo110-II, independently designed and constructed by our laboratory, is used as the experimental platform [

32]. The complete structure of SRobo110-II is shown in

Figure 7. Its main hardware components include a TB48S battery, an N100 nine-axis Inertial Measurement Unit (IMU), HT-8115 joint motors, MF-9025 hub motors, and an Intel i5-10400H industrial computer connected externally. The IMU transmits data such as the angles and angular velocities of each axis to the industrial computer. The auxiliary wheels allow the robot to perform steering movements when powered off. Two batteries are connected in series to provide a 45.6 V power supply. The joint motors and hub motors respond to commands issued by the industrial computer, driving the links and wheels to achieve posture adjustment and movement. They also return status information such as torque and encoder positions. The serial structure of the robot is shown in

Figure 8. The detailed structure and hardware parameters of the robot are listed in

Table 1.

4.2. Disturbance Experiment Setup

In practical working environments, wheeled biped robots are often exposed to disturbances such as unstructured terrain, external impacts, and vertical loads. To comprehensively validate the effectiveness and superiority of the MPC horizon parameter optimization method based on DBO, as well as to evaluate the disturbance rejection capability of SRobo110-II, three types of experiments are designed in this study. First, in Experiment I, DBO is compared with PSO and ACO [

33] on a simulation platform for optimizing the prediction and control horizons of MPC, and the control performance of different optimization algorithms under disturbance conditions is analyzed. Second, in Experiment II, a pendulum device is constructed using a rope and a 2 kg metal ball. The ball is pulled 1 m horizontally to the front of the robot and then released, so that it collides with the robot’s chest region—while in a balanced state—at the lowest point of its free swing. The impact velocity is determined by the pendulum length and release height, simulating sudden external forces encountered by the robot during locomotion. Finally, in Experiment III, additional mass blocks are gradually mounted onto the robot body to generate vertical load disturbances, in order to observe its posture adjustment process under load. The setups and procedures of the three experiments are illustrated in

Figure 9 and

Figure 10.

4.3. Expt. 1 (Simulation): Process and Result Analysis

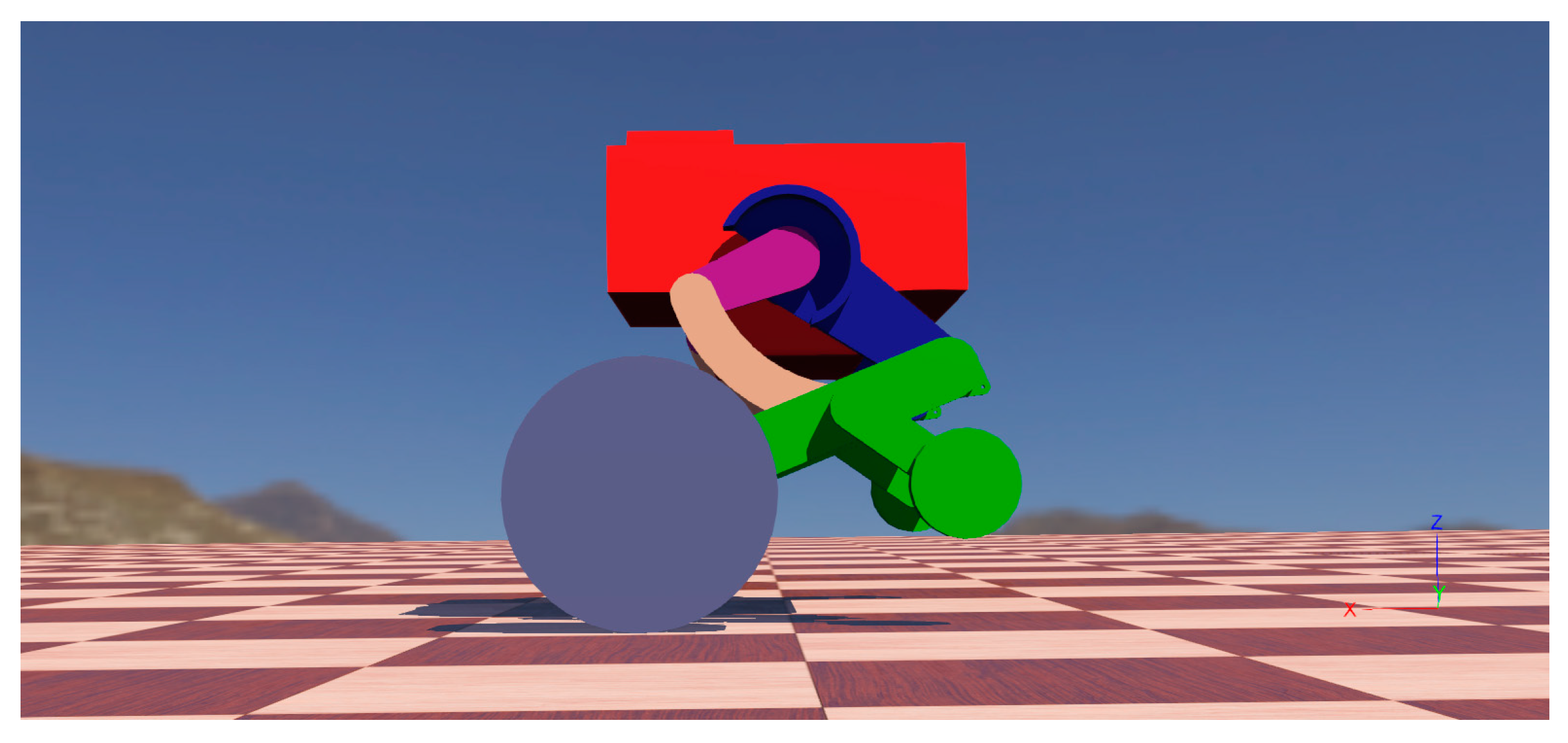

To validate the performance of the DBO algorithm in MPC horizon parameter optimization and to systematically assess the applicability of its optimization results for disturbance rejection control of wheeled biped robots, preliminary experiments were conducted in the Webots simulation environment. As an open-source 3D robotics platform, Webots is equipped with a high-precision physics engine capable of accurately simulating essential physical effects such as gravity, friction, collision, and inertia, thus providing a reliable virtual testing environment for control algorithm verification.

The simulation experiments were carried out on a hardware platform equipped with an AMD Ryzen 7 5800H CPU (base frequency 3.20 GHz). In the Webots project, a standard floor was set as the primary interaction environment for the robot, with physical parameters carefully configured to ensure compliance with the rolling friction model assumptions between the driving wheels and the ground. Based on the wheeled biped robot platform described in

Section 4.2, its simplified model was imported into Webots via the Unified Robot Description Format (URDF), with precise configuration of physical properties such as mass and inertia tensors. The structure of the imported simplified model is shown in

Figure 11.

Webots also provides a comprehensive controller interface that supports co-simulation with the Visual Studio development environment. In addition to the 3D model, virtual IMU sensors, joint motors, and wheel-driving motors were configured at key positions on the robot’s body and joints. The IMU sensor continuously captured the robot’s pitch angle, which was used as a key state variable for the wheeled inverted pendulum system. Through the Visual Studio controller project, the device driver functions and state feedback functions provided by Webots enabled closed-loop interaction between robot motion control and sensor data acquisition.

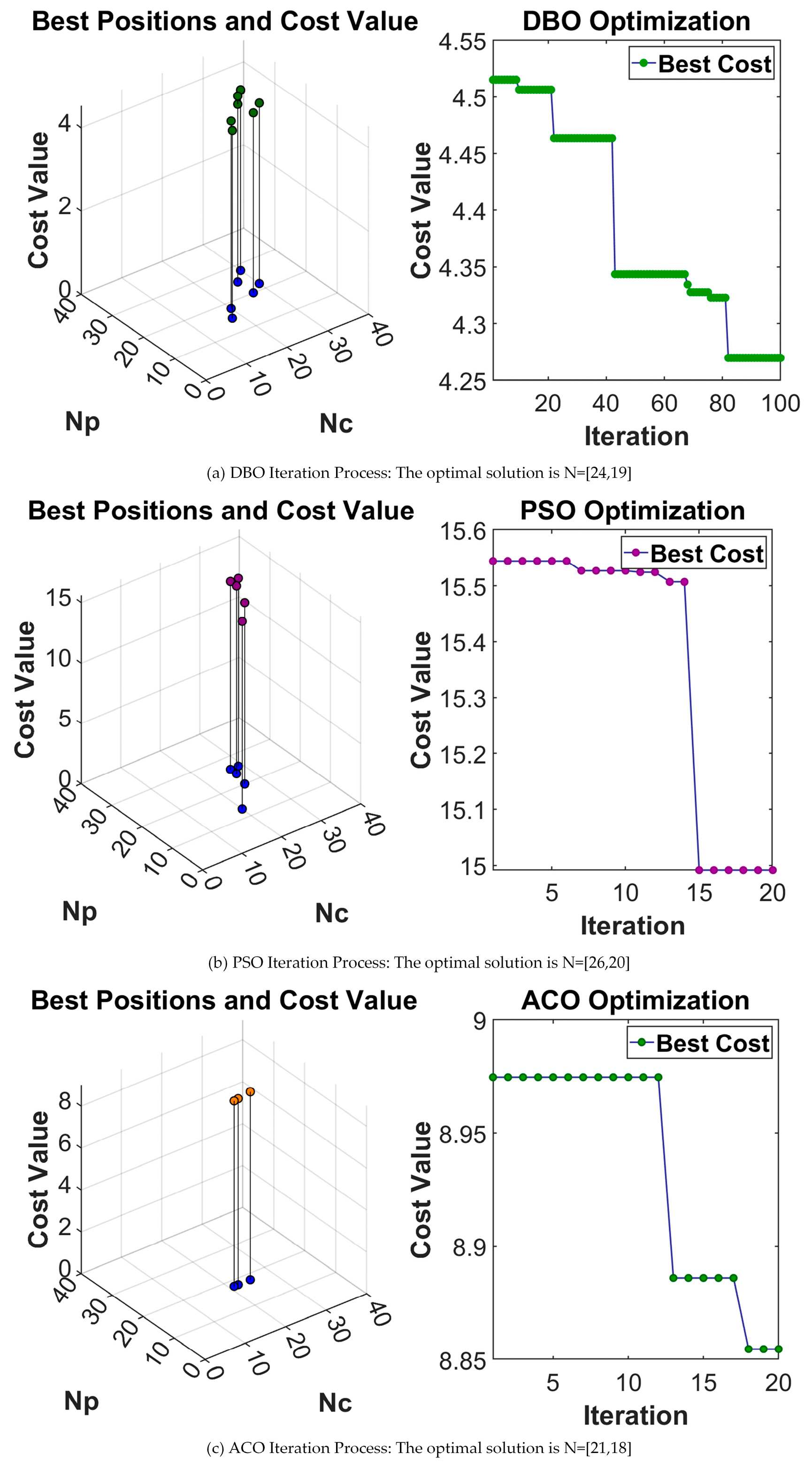

To comprehensively evaluate the optimization efficiency of DBO, PSO and ACO were selected as benchmark heuristic algorithms for comparison. All three algorithms were executed under identical initial conditions, with parameter settings summarized in

Table 2. To highlight the advantage of heuristic algorithms in reducing computational complexity, the maximum search count was set to 1/50 of the theoretical exhaustive count and then converted into the maximum number of iterations according to the population size. During the simulation, the step size was set to

, and once the simulation time reached

, the system parameter

was dynamically adjusted to continuously simulate load disturbances. The final optimization results and performance comparisons are presented in

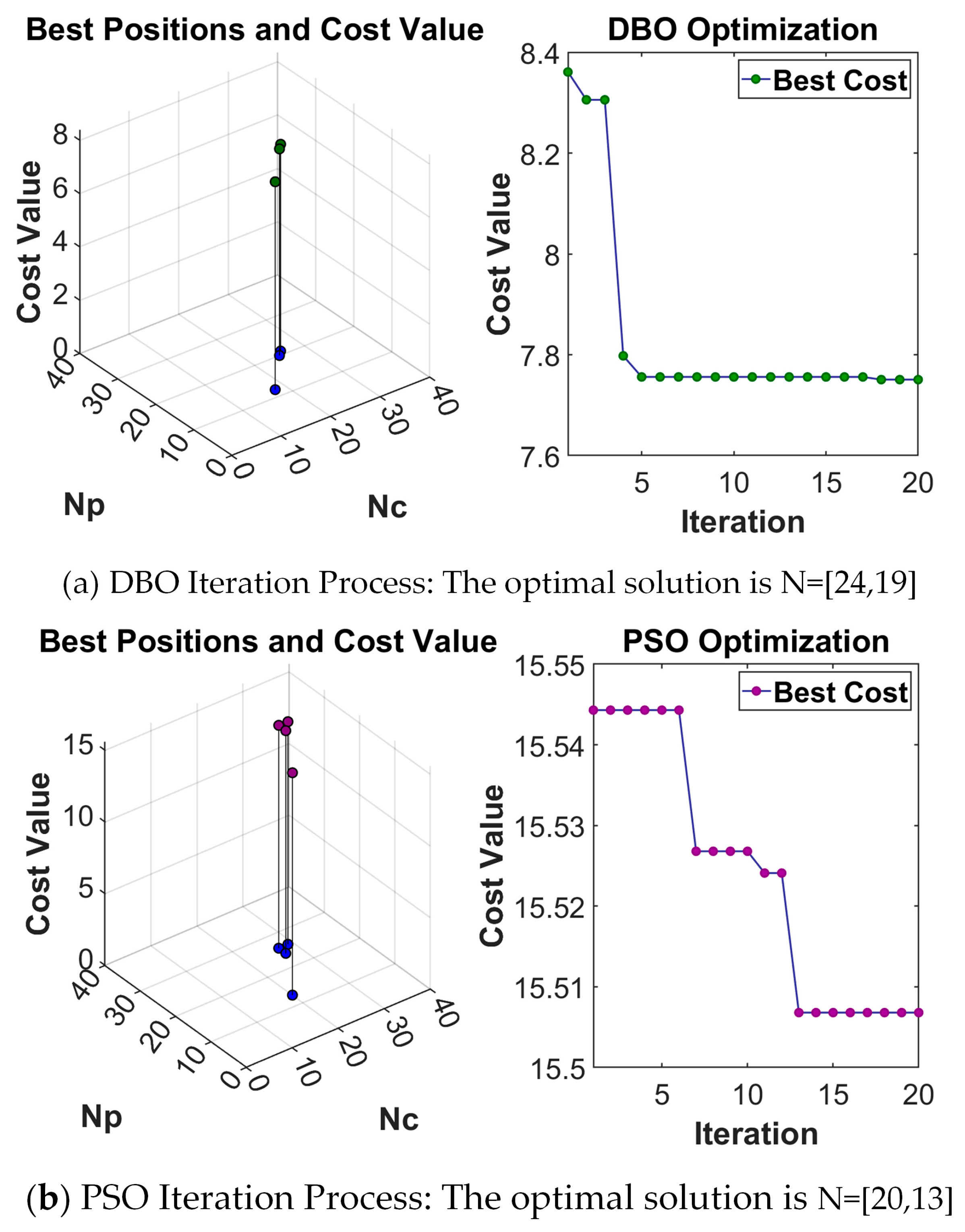

Figure 12.

From

Figure 12, it can be seen that all three methods achieve a certain degree of iterative optimization. After 20 iterations, the optimal solution obtained by DBO yields a lower cost function value compared to PSO and ACO. The experimental results indicate that when the initial conditions are similar, the number of iterations is limited, and the computational time is comparable, DBO demonstrates superior exploration capability of the solution space. This advantage comes from its use of multiple subpopulations to perform extended iterative optimization around the optimal solution region. As a result, within a limited number of iterations, DBO exhibits better spatial search ability and is more suitable for multi-objective optimization tasks in solving MPC domain parameter combinations.

4.4. Expt. 2 (Impact): Process and Result Analysis

After completing the simulation verification in Experiment 1, further tests were conducted on the scenario where the robot is subjected to external impacts. The controller was iteratively optimized using DBO and the commonly used heuristic optimization algorithm PSO, and their control performances under impact disturbances were compared experimentally. The pseudocode of the specific implementation process is shown in

Figure 6. The detailed parameter settings of the two optimization algorithms are consistent with those in Experiment 1, and the final optimization results are presented in

Figure 13 and

Table 3.

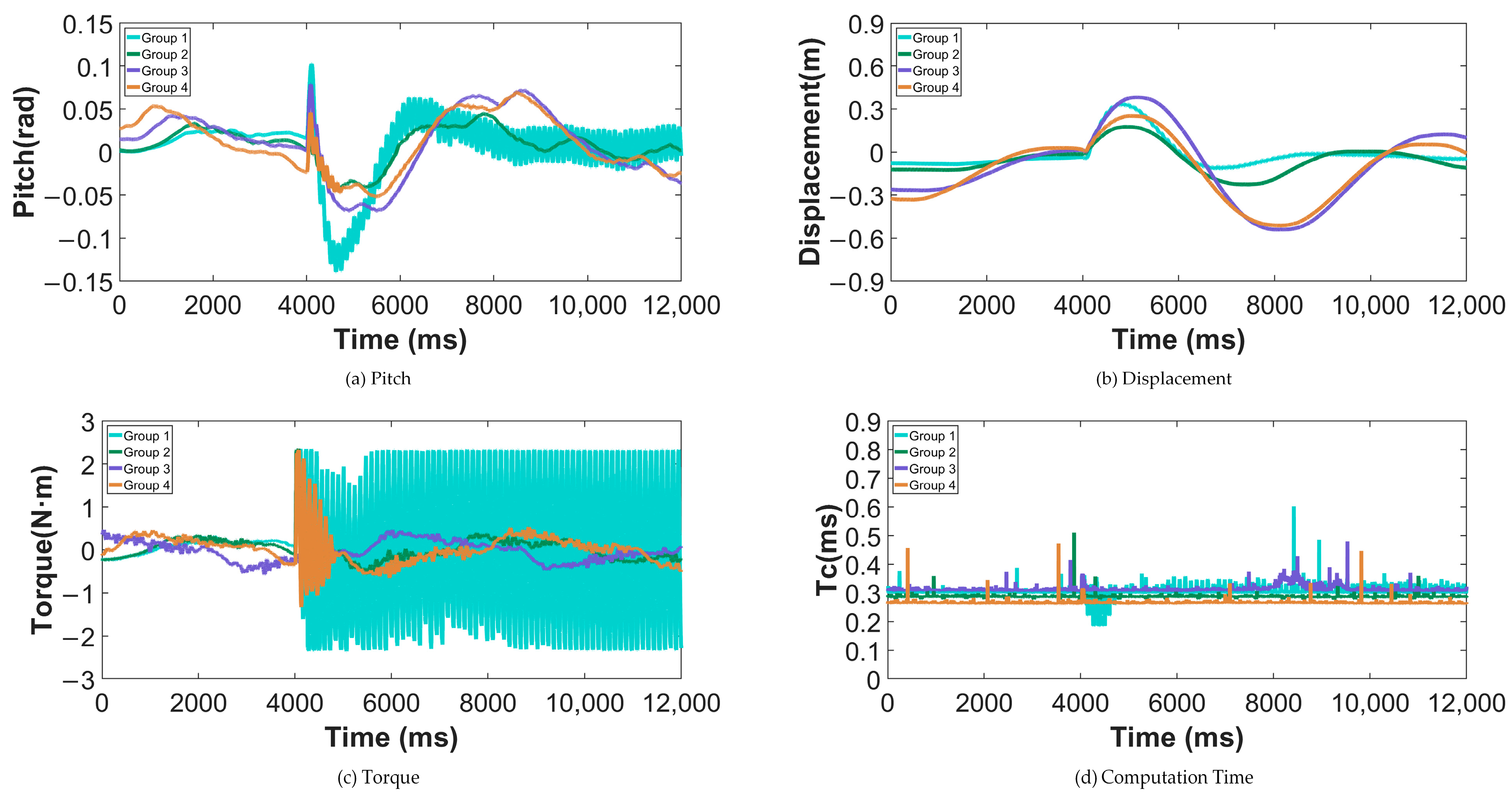

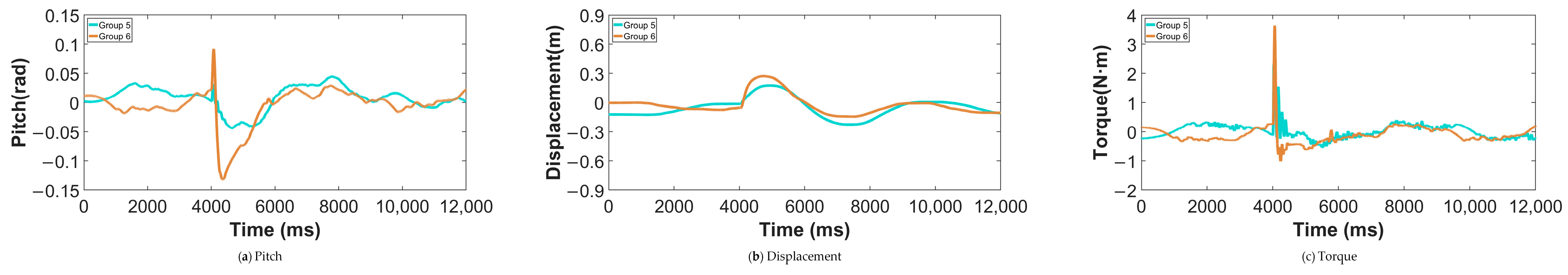

Additionally, two sets of non-optimized parameters are provided—one greater than the maximum optimized value and one smaller than the minimum optimized value—to expand the experimental control groups. The four parameter combinations from

Table 3 are applied to MPC for the impact experiments. The weight matrix is configured as:

By comparing the above experimental results, it can be seen that in all four groups of experiments, the robot was subjected to a horizontal impact from a pendulum mass at a specified moment, and data were analyzed over an 8 s interval following the disturbance. The results show that Group 1 (MPC optimized by DBO) achieved smaller first overshoots in both pitch angle and displacement compared with the other three groups, indicating a stronger capability in suppressing sudden attitude deviations and positional shifts. However, its peak torque and computation time were slightly higher. During the dynamic recovery process, Group 1 achieved the shortest adjustment time for pitch angle, while the displacement adjustment time remained at a normal level, and the torque adjustment time was slightly longer.

Further analysis suggests that this performance difference primarily stems from DBO’s multi-subpopulation cooperative search mechanism, which effectively prevents premature convergence to local optima within limited iterations and enables the discovery of superior control parameter combinations on a global scale. This allows MPC to more proactively correct disturbance errors during prediction and constraint scheduling, thereby ensuring faster convergence of pitch angle and displacement. Although this mechanism leads to an increase in peak torque and some computational overhead, both remain within a reasonable range, demonstrating that MPC optimized by DBO exhibits remarkable advantages in enhancing dynamic performance and impact disturbance rejection.

After comparing the MPC performance optimized by different heuristic algorithms, it can be observed that MPC optimized by DBO shows certain advantages in disturbance suppression. However, to further verify its superiority over traditional controllers, this study also designs an LQR-based control algorithm and conducts comparative experiments against the MPC algorithm using the Group 1 parameters. The cost function is as follows:

The results of the two sets of experiments show that, after being subjected to a horizontal impact at time s, the robot controlled by MPC optimized with DBO exhibits significantly smaller overshoot in pitch angle, displacement, and torque compared with the robot controlled by LQR. Moreover, its pitch angle adjustment time is shorter, while the adjustment times for displacement and torque are slightly longer. The main reason for this difference lies in the rolling optimization mechanism of MPC, which explicitly considers future states and constraints during the control process, enabling the controller to plan ahead and suppress deviations caused by disturbances. In contrast, LQR relies on fixed feedback gains and can only provide a linear response to the current state, lacking foresight for future disturbances, which results in larger overshoot under strong perturbations. Furthermore, the DBO optimization process enables MPC to search for more suitable prediction and control horizons, as well as weight matrices, thereby achieving a better balance between rapid pitch recovery and smooth displacement adjustment, ultimately demonstrating superior disturbance rejection capability.

4.5. Expt. 3 (Load): Process and Result Analysis

Similarly to the process in Expt. 2, simulations and iterative optimizations are conducted for the robot under load disturbance using DBO and PSO. The pseudocode for the process is shown in

Figure 6, and the initial values for both optimization algorithms are the same as in Expt. 1. In the cost function calculation, the simulation

is also set to 1500, and

is continuously adjusted at all times after

to simulate load disturbance. The final optimization results are shown in

Figure 16 and

Table 8.

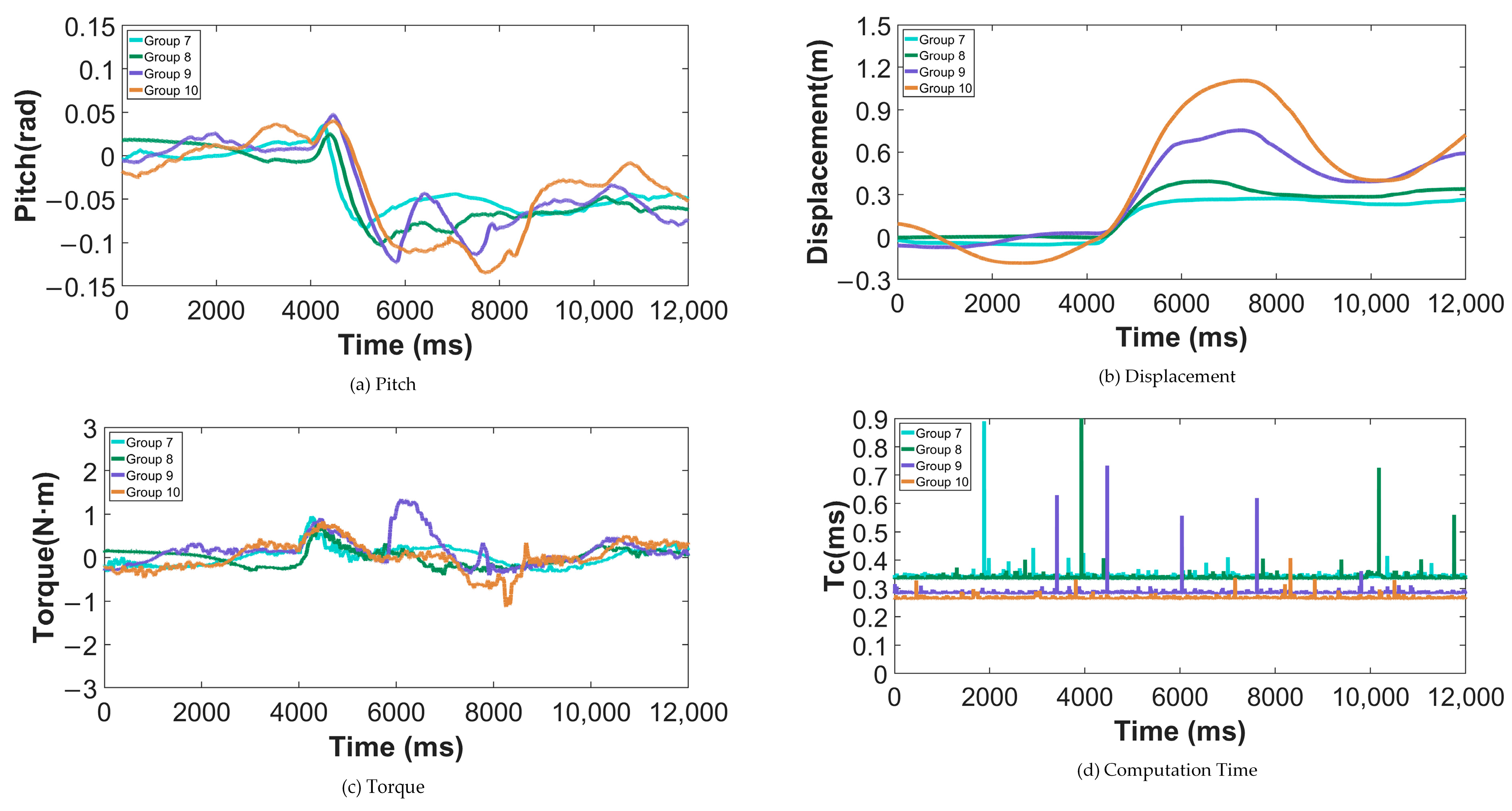

Similarly, two sets of non-optimized parameters are provided—one greater than the maximum optimized value and one smaller than the minimum optimized value—to expand the experimental control groups. The four parameter combinations from

Table 8 are applied to the MPC algorithm for the load disturbance experiments. The weight matrix configuration is provided in Equation (23). The experimental results are shown in

Figure 17 and

Table 9 and

Table 10.

The results of the four load disturbance experiments show that MPC optimized with DBO (Group 7) outperforms the other three groups in terms of both the first overshoot and adjustment time of the pitch angle, demonstrating stronger posture recovery capability. The displacement index remains at a normal level, while the torque exhibits certain overshoot despite having a relatively better adjustment time, with computation time being slightly higher. Overall, the remarkable advantage of DBO-optimized MPC in pitch angle control stems from its subpopulation parallel search and global–local balance mechanism, which enable parameter configurations to better reconcile prediction accuracy and control stability, thereby effectively suppressing posture deviations caused by load disturbances. In contrast, the displacement and torque indicators show relatively neutral performance, reflecting that under complex coupled disturbances, the optimization mainly concentrates its performance gains on the posture dimension to ensure overall balance and safety of the robot when subjected to vertical loads.

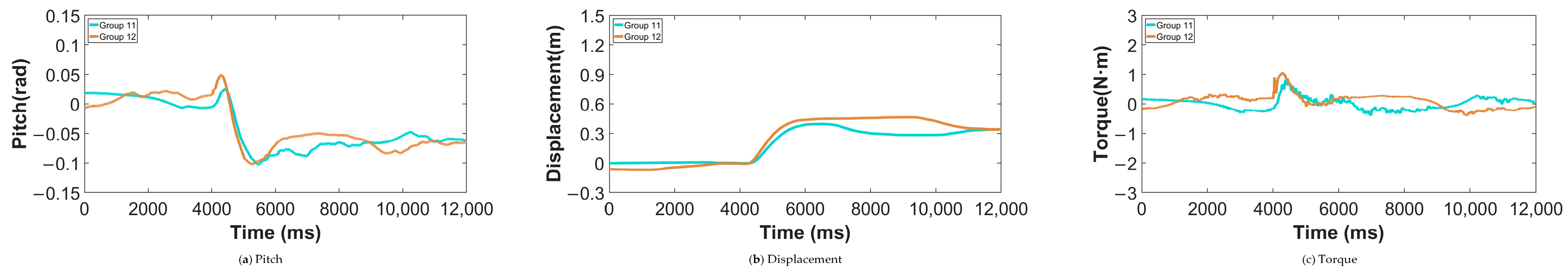

Similarly, a comparative experiment is conducted using the designed LQR control algorithm and the MPC algorithm with the aforementioned seven-parameter configuration, with the experimental results shown in

Figure 18 and

Table 11 and

Table 12.

The comparison results of the two load disturbance experiments show that MPC significantly outperforms LQR in terms of overshoot of pitch angle and displacement, effectively reducing transient deviations caused by load disturbances, though its adjustment time is slightly longer; the torque adjustment time, however, performs well. This is because MPC optimizes future states in advance based on a predictive model, suppressing abrupt changes in attitude and displacement during the initial phase of the disturbance, whereas LQR, as a linear feedback control, struggles to timely compensate for transient deviations caused by nonlinear disturbances, though it has certain advantages in convergence speed. This indicates that MPC is more suitable for maintaining robot attitude stability under complex load disturbances, while LQR has relative advantages in rapid convergence.

5. Conclusions

To address the trade-off between computational cost and control precision, while enhancing the anti-disturbance capability of wheeled bipedal robots, this paper proposes a constrained Model Predictive Control (MPC) algorithm tailored to the hardware of the robot, with parameter optimization and experimental validation. The full text is summarized as follows:

- (1)

For the wheeled motion of the wheeled bipedal robot, it is equivalently simplified to a wheeled inverted pendulum model with a variable pendulum length. A dynamic model is constructed to derive the system’s state-space model, with the balanced posture defined.

- (2)

Based on the hardware characteristics of the robot’s drive motors, a soft-constrained MPC algorithm is proposed to prevent long-term control inputs from exceeding the rated torque, ensuring that the control input remains below the peak torque.

- (3)

The DBO algorithm is used to appropriately select the prediction and control horizons. A cost function is defined with the goal of balancing the computational cost and control precision of the MPC, and the optimal combination for anti-disturbance is obtained through iterative optimization.

- (4)

The SRobo-II experimental platform is constructed, and impact and load disturbance experiments are conducted on the wheeled bipedal robot. Parameters obtained through different optimization methods and control algorithms are compared and analyzed.

The experimental results show that the MPC algorithm optimized with DBO achieves smaller overshoot and faster adjustment time under both impact and load disturbances, with overall performance superior to PSO, ACO, the non-optimized version, and the LQR control algorithm. It should be noted that the MPC algorithm designed and optimized in this work is still based on a simplified equivalent first-order wheeled inverted pendulum model. When the robot’s height or posture changes significantly, the model needs to be reconstructed or adjusted. Therefore, future research will focus on further refining the dynamic model, fully accounting for the influence of structural variations such as the torso, and exploring hierarchical control architectures to achieve stable and disturbance-resistant control under varying pose conditions.