A Framework for Integrating Vision Transformers with Digital Twins in Industry 5.0 Context

Abstract

1. Introduction

2. Background

2.1. Digital Twins

2.2. Vision Transformers

2.3. Industry 5.0 Requirements

2.4. Integration

2.5. Introduction to a New Framework

3. Methods

4. Proposed Framework

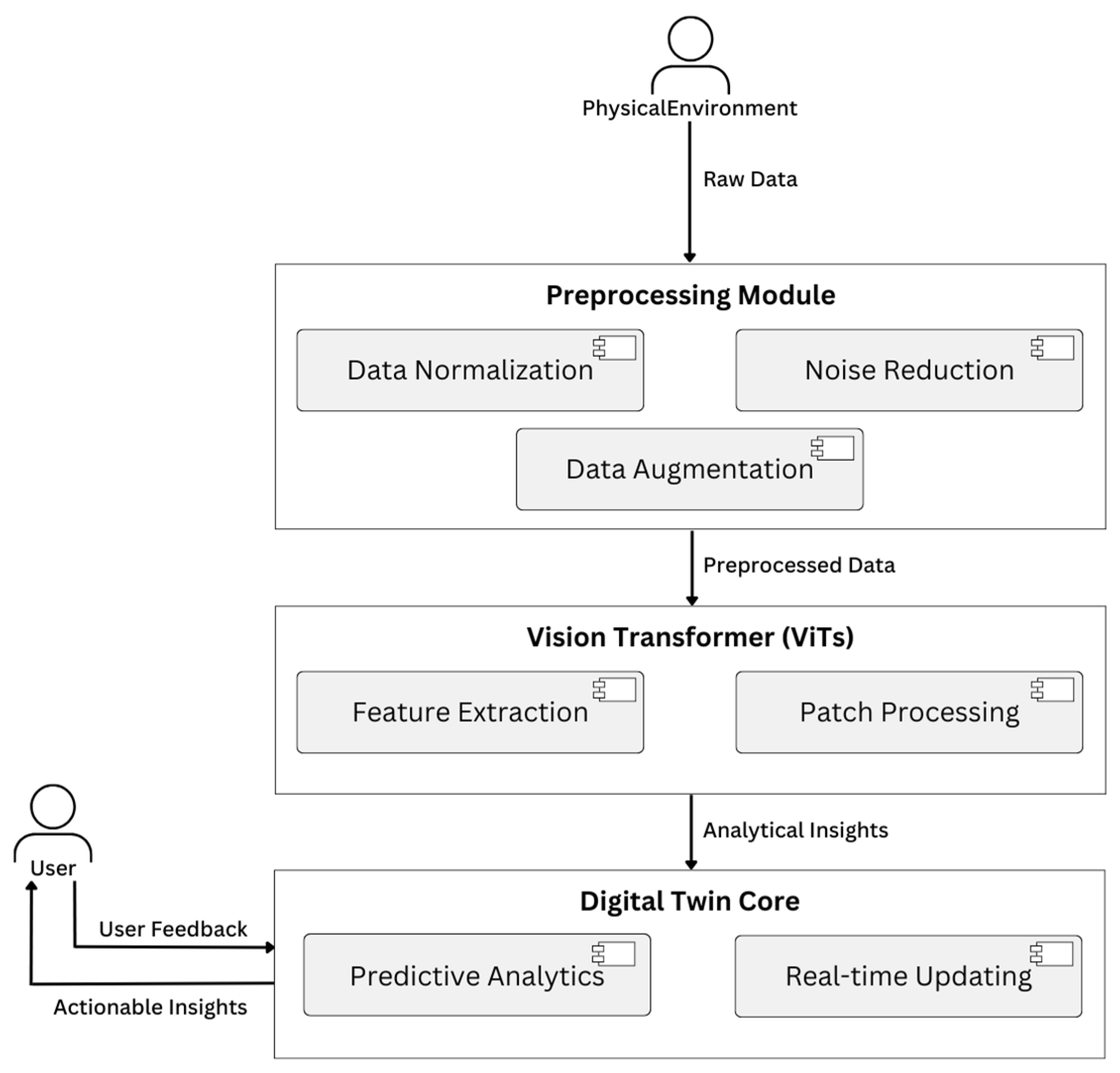

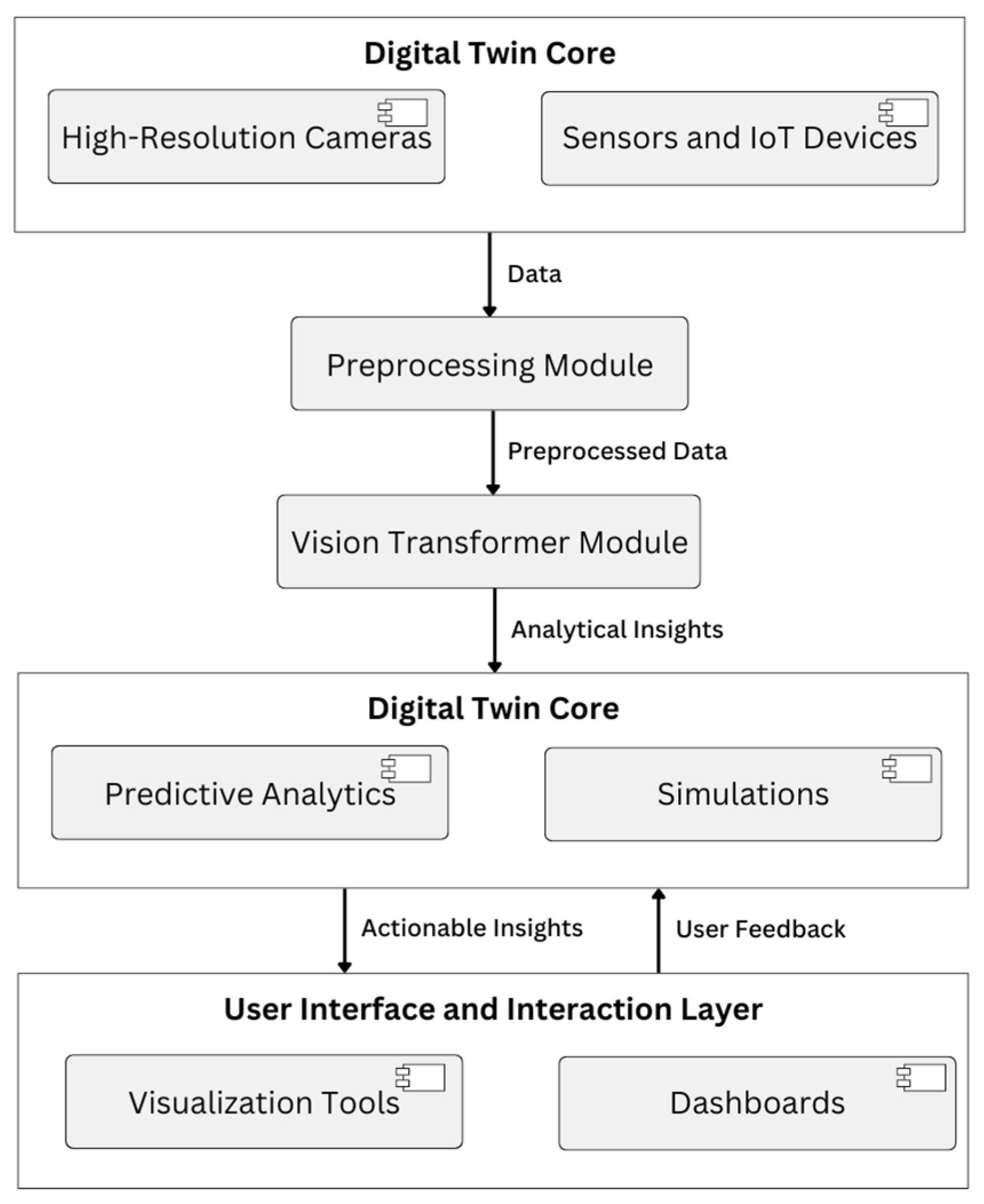

4.1. Architecture Overview

- Data acquisition layer;

- Preprocessing module;

- Vision Transformer integration;

- Digital twin core;

- User interface and interaction layer.

4.2. Components Description

4.2.1. Data Acquisition Layer

4.2.2. Preprocessing Module

4.2.3. Vision Transformer Integration

4.2.4. Digital Twin Core

4.2.5. User Interface and Interaction Layer

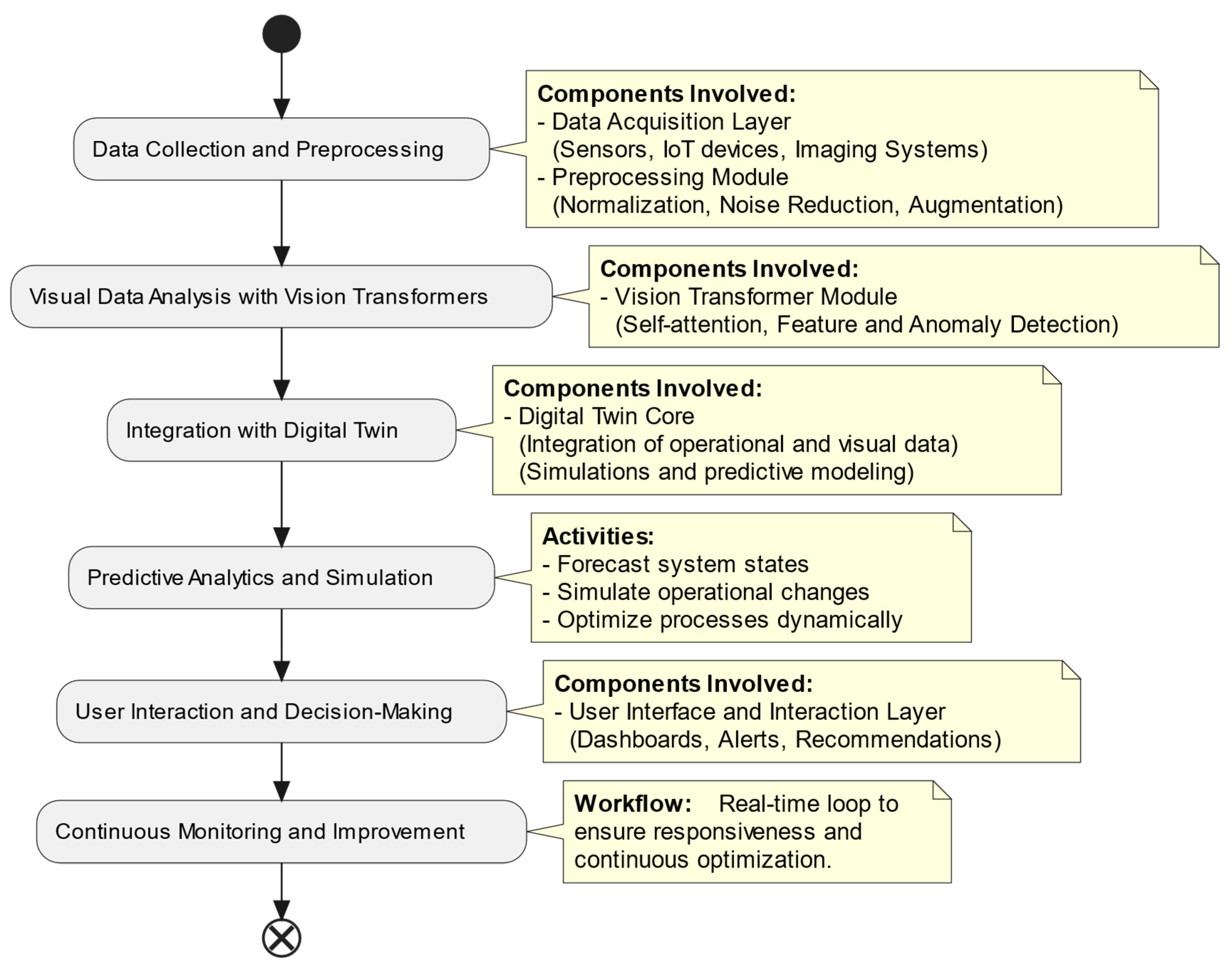

4.3. General Workflow Explanation for the Proposed Framework

- Data collection and preprocessing;

- Visual data analysis with ViTs;

- Integration with the digital twin;

- Predictive analytics and simulation;

- User interaction and decision making;

- Continuous monitoring and improvement.

4.3.1. Data Collection and Preprocessing

4.3.2. Visual Data Analysis with Vision Transformers

4.3.3. Integration with the Digital Twin

4.3.4. Predictive Analytics and Simulation

4.3.5. User Interaction and Decision Making

4.3.6. Continuous Monitoring and Improvement

4.4. Implementation Strategy and Technological Details

- Operational data: A network of Internet of Things sensors continuously tracks important physical system parameters like vibration, temperature, pressure, machine health, and energy usage. Process optimization and real-time diagnostics depend on these data. In order to reduce latency and facilitate quick on-site decision making, edge computing devices are strategically placed to preprocess the data locally.

- Visual data: Real-time visual data are captured by high-resolution cameras and imaging systems, giving granular insights into machinery and industrial processes. Comprehensive situational awareness is ensured by multi-angle imaging. By identifying abnormalities like overheating or problems with heat dissipation, specialized systems such as thermal imaging and infrared sensors provide an additional layer of detail.

- Process and product data: The system incorporates static data sources like technical blueprints, manufacturing specifications, and computer-aided design (CAD) files. To ensure adherence to technical standards and early detection of discrepancies, these static models enable comparisons between expected designs and real-world deviations.

- Data normalization and cleaning: To address variations in units and scales, the gathered data are standardized. Synchronization methods guarantee consistent temporal alignment for sensor time-series data. A high-quality dataset is ensured by employing statistical error-handling techniques to identify and eliminate anomalous or incomplete data points.

- Data augmentation for visual analysis: Techniques such as noise injection, color jittering, rotation, and random cropping are applied to the visual data to improve the generalization and robustness of the ViTs. Images are then divided into smaller, fixed-size patches, the input format ViTs operate on, so that the spatial and temporal features needed for further analysis can be captured.
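The normalization and patching steps above can be illustrated with a minimal sketch. It assumes z-score standardization as the normalization method and a grayscale image stored as a list of rows. The function names `zscore` and `split_into_patches` and the patch size are illustrative choices, not part of the framework's specification.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize readings to zero mean and unit variance (assumed method)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant signal carries no scale information.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def split_into_patches(image, patch):
    """Split a 2-D image into non-overlapping patch x patch tiles.

    Tiles are returned in row-major order, each flattened to a list of pixels.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [
                image[row][col]
                for row in range(top, top + patch)
                for col in range(left, left + patch)
            ]
            patches.append(tile)
    return patches


# Hypothetical 4 x 4 grayscale image with pixel values 0..15.
demo_image = [[row * 4 + col for col in range(4)] for row in range(4)]
demo_patches = split_into_patches(demo_image, 2)
demo_scaled = zscore([20.0, 22.0, 24.0])
```

For a 4 × 4 image and a patch size of 2, `split_into_patches` yields four flattened patches of four pixels each, which is the kind of token sequence a ViT embedding layer would then project.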

- Layered multi-source data fusion: Data streams from operational, visual, and process sources are merged in successive layers.

- Feature-level fusion: Visual inputs are integrated directly with raw sensor data to find connections between observed patterns and physical conditions.

- Decision-level fusion: The results of several analytical models, such as anomaly detection and defect classification, are combined to build a comprehensive view of the system.
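Decision-level fusion can be sketched as follows. The source does not specify the combination rule, so this example assumes each model emits a score in [0, 1] and that a weighted average is acceptable. The model names and weights are hypothetical.

```python
def fuse_decisions(scores, weights):
    """Combine per-model scores into one score by weighted average.

    scores  -- mapping of model name to a score in [0, 1]
    weights -- mapping of model name to a non-negative weight
    """
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("at least one model must have a positive weight")
    weighted_sum = sum(scores[name] * weights[name] for name in scores)
    return weighted_sum / total_weight


# Hypothetical outputs of two analytical models for the same machine.
model_scores = {"anomaly_detector": 0.8, "defect_classifier": 0.4}
model_weights = {"anomaly_detector": 3.0, "defect_classifier": 1.0}
fused_score = fuse_decisions(model_scores, model_weights)
```

A weighted average is only one option; majority voting or a learned meta-model would fit the same description.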

- Multi-stage feature extraction: The Vision Transformer module processes image patches layer by layer. Initial layers detect fine-grained details such as micro-defects, while deeper layers capture higher-level patterns such as system-wide malfunctions or operational trends.

- Cross-sensor correlation: ViTs create links between sensor readings and visual abnormalities. To verify equipment health issues, for example, visual indicators of wear found in images are correlated with spikes in vibration or temperature data.

- Hierarchical anomaly detection: The analysis distinguishes critical system-wide disruptions from minor deviations, such as small defects or slight operational fluctuations, so that responses can be precise and prioritized.
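The cross-sensor correlation and severity tiers described above can be approximated with a short sketch. It assumes the ViT output has already been reduced to a per-frame wear score, that Pearson correlation is an adequate measure of association, and that two fixed thresholds separate the severity levels. All names and threshold values are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / sqrt(var_x * var_y)


def classify_severity(score, minor_threshold=0.3, critical_threshold=0.7):
    """Map an anomaly score in [0, 1] to a severity tier (assumed thresholds)."""
    if score >= critical_threshold:
        return "critical"
    if score >= minor_threshold:
        return "minor"
    return "normal"


# Hypothetical visual wear scores and vibration amplitudes for four time steps.
wear_scores = [0.1, 0.2, 0.4, 0.8]
vibration_mm_s = [1.0, 2.0, 4.0, 8.0]
wear_vibration_corr = pearson(wear_scores, vibration_mm_s)
```

A correlation near 1 would support the reading that visible wear and rising vibration describe the same underlying fault, and the severity tier then decides how urgently it is escalated.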

- Continuous synchronization: The digital twin is updated in real time to reflect the most recent operational states and visual observations of the physical system, which keeps the virtual representation accurate and dynamic.

- Deep data fusion: The integration creates a unified model for improved simulations by combining ViT-driven visual insights (like defect localization) with operational metrics (like temperature and vibration).

- Personalization and use-case adaptation: The system enables operators to modify simulations according to particular equipment configurations or production settings. This flexibility accommodates a range of industrial settings and product iterations.
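One way to picture the synchronization and fusion described above is a twin state that accepts timestamped updates from both sensors and the ViT module and ignores stale ones. This is a minimal sketch under that assumption. The `TwinState` class and the signal names are hypothetical and do not come from the source.

```python
from dataclasses import dataclass, field


@dataclass
class TwinState:
    """Latest known value and timestamp for each tracked signal."""

    values: dict = field(default_factory=dict)
    timestamps: dict = field(default_factory=dict)

    def apply(self, signal, value, timestamp):
        """Apply an update unless an equally recent or newer one was already seen.

        Returns True when the state changed and False when the update was stale.
        """
        if timestamp <= self.timestamps.get(signal, float("-inf")):
            return False
        self.values[signal] = value
        self.timestamps[signal] = timestamp
        return True


twin = TwinState()
applied_new = twin.apply("temperature_c", 71.5, timestamp=10.0)   # sensor reading
applied_stale = twin.apply("temperature_c", 70.0, timestamp=9.0)  # arrives late
twin.apply("defect_count", 2, timestamp=11.0)                     # ViT-derived value
```

Keeping sensor readings and ViT-derived values in one state object is what allows a simulation to read a single consistent snapshot.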

- Predictive maintenance: Long-term forecasting anticipates future trends to optimize maintenance schedules and cut costs, while short-term analyses pinpoint urgent maintenance requirements based on observed degradation.

- Scenario simulation: In order to enable proactive adjustments and ensure system resilience, the digital twin simulates “what-if” scenarios, such as equipment failures or environmental changes.

- Multi-objective optimization: The system can concurrently optimize resource consumption, quality, and efficiency thanks to real-time data feeds. Alignment with Industry 5.0 objectives is ensured by dynamic adjustments to production lines or machine parameters.
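The short-term side of predictive maintenance can be illustrated with a simple trend extrapolation. This sketch assumes a linear degradation trend and a known failure threshold, neither of which is stated in the source. The function name and the example values are hypothetical.

```python
def time_to_threshold(times, readings, threshold):
    """Estimate the time remaining until a rising signal reaches a threshold.

    Fits a least-squares line through the samples and extrapolates it.
    Returns None when the fitted trend is flat or falling.
    """
    n = len(times)
    if n != len(readings) or n < 2:
        raise ValueError("need at least two samples of equal length")
    mean_t = sum(times) / n
    mean_y = sum(readings) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("sample times must not all be identical")
    slope = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, readings)) / var_t
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_t
    crossing_time = (threshold - intercept) / slope
    # Clamp at zero when the threshold has already been reached.
    return max(0.0, crossing_time - times[-1])


# Hypothetical vibration readings sampled once per hour.
hours = [0, 1, 2, 3]
vibration = [1.0, 2.0, 3.0, 4.0]
remaining_hours = time_to_threshold(hours, vibration, threshold=10.0)
```

Long-term forecasting would normally call for a richer model than a straight line; the linear fit is used here only because it is easy to verify by hand.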

- Interactive dashboards: Operators can view real-time system performance, predictive analytics, and alert visualizations, facilitating prompt and well-informed decision making.

- AI-assisted recommendations: By utilizing its analytical capabilities, the system produces practical recommendations, like process modifications or maintenance scheduling.

- Augmented reality (AR) integration: AR tools enhance operator efficiency and skill development by superimposing visual guides for maintenance tasks or training simulations.

- Adaptive machine learning: Models are retrained using new data, increasing the accuracy of process optimization, anomaly detection, and predictive analytics.

- Self-healing mechanisms: To ensure fewer interruptions and increased resilience, the system automatically adapts to deviations by rerouting tasks or adjusting machine settings.

- Scalability and future integration: The modular architecture supports scaling across various manufacturing environments and can accommodate emerging technologies such as 6G, quantum computing, or next-generation AI models.
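The task rerouting mentioned under self-healing mechanisms can be sketched with a greedy rule that moves each task from a failed machine to the healthy machine with the fewest tasks. The source does not specify a rerouting policy, so the greedy rule, the `reroute_tasks` function, and the machine names are all assumptions.

```python
def reroute_tasks(assignments, failed_machine):
    """Redistribute the failed machine's tasks to the least-loaded healthy machines.

    assignments -- mapping of machine name to its list of task identifiers
    Returns a new mapping that no longer contains the failed machine.
    """
    healthy = {
        machine: list(tasks)
        for machine, tasks in assignments.items()
        if machine != failed_machine
    }
    if not healthy:
        raise ValueError("no healthy machine is available for rerouting")
    for task in assignments.get(failed_machine, []):
        # Ties are broken by the mapping's insertion order.
        target = min(healthy, key=lambda machine: len(healthy[machine]))
        healthy[target].append(task)
    return healthy


# Hypothetical shop-floor state before press_a fails.
current = {"press_a": ["t1", "t2"], "press_b": ["t3"], "press_c": []}
rerouted = reroute_tasks(current, "press_a")
```

A production scheduler would also weigh task duration, machine capability, and changeover cost; task count is used here only to keep the example small.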

5. Use Case Scenarios

5.1. Predictive Maintenance Scenario

- Efficiency: Detecting and fixing problems early prevents unscheduled downtime and reduces repair costs. Optimal maintenance scheduling makes more efficient use of resources and reduces waste.

- Accuracy: Through fusion of visual and operational data, the framework improves anomaly detection and failure prediction by decreasing false positives and false negatives.

- Decision making: Real-time simulations and AI-enabled insights equip operators with actionable suggestions, allowing them to make informed decisions that enhance system reliability and productivity.

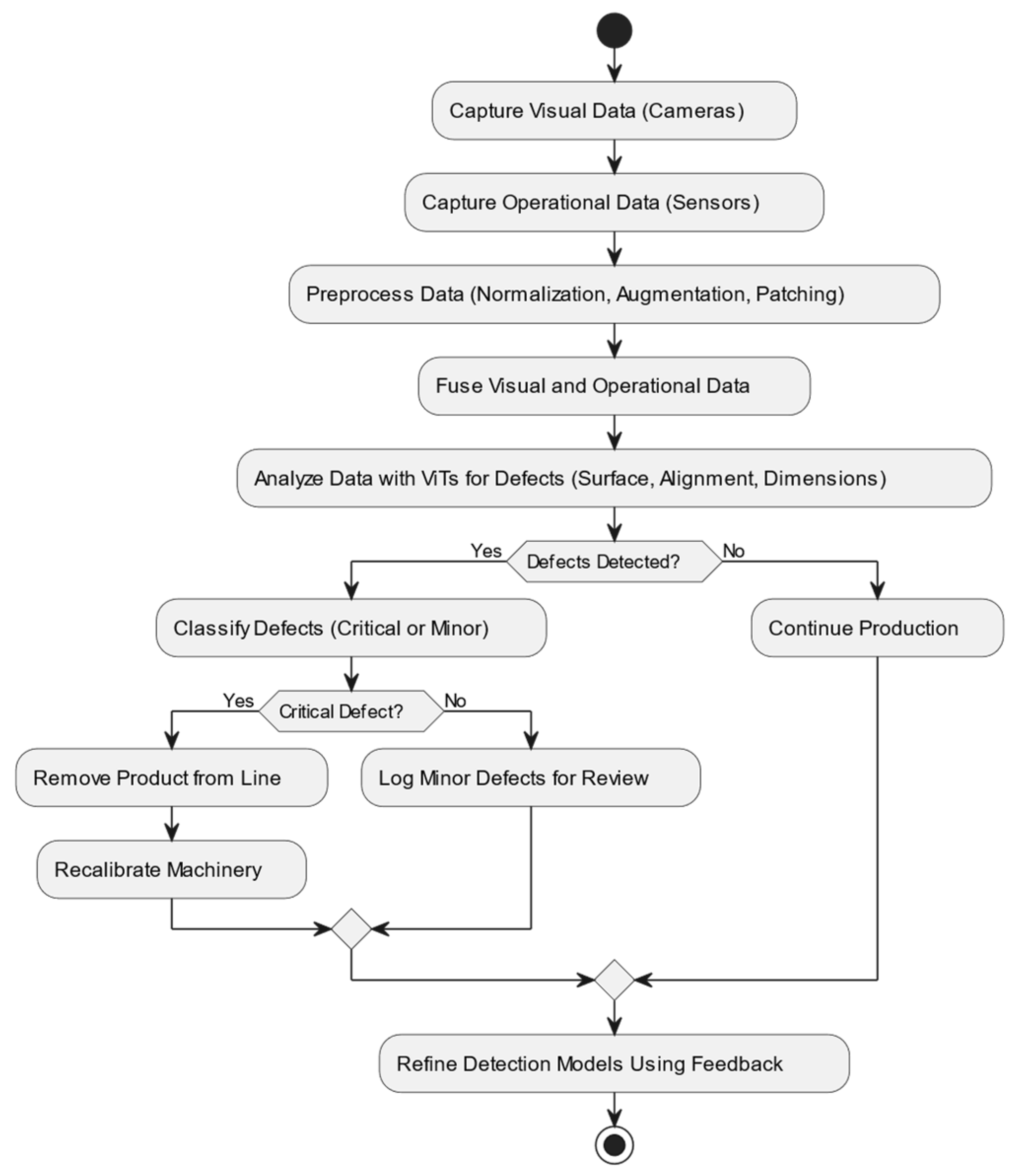

5.2. Quality Control Scenario

- Enhanced defect detection: The state-of-the-art performance of ViTs enables accurate detection of both visible and subtle defects, e.g., scratches, misalignments, or structural flaws.

- Improved efficiency: Automated defect detection and classification accelerate the quality control workflow by minimizing manual inspection work and improving decision-making speed.

- Optimized production: Real-time adaptation keeps production quality high, minimizes waste, and maximizes output.

- Informed decision making: Integration with digital twin simulation provides practical information on the causes and consequences of defects, enabling better-informed process adjustments.

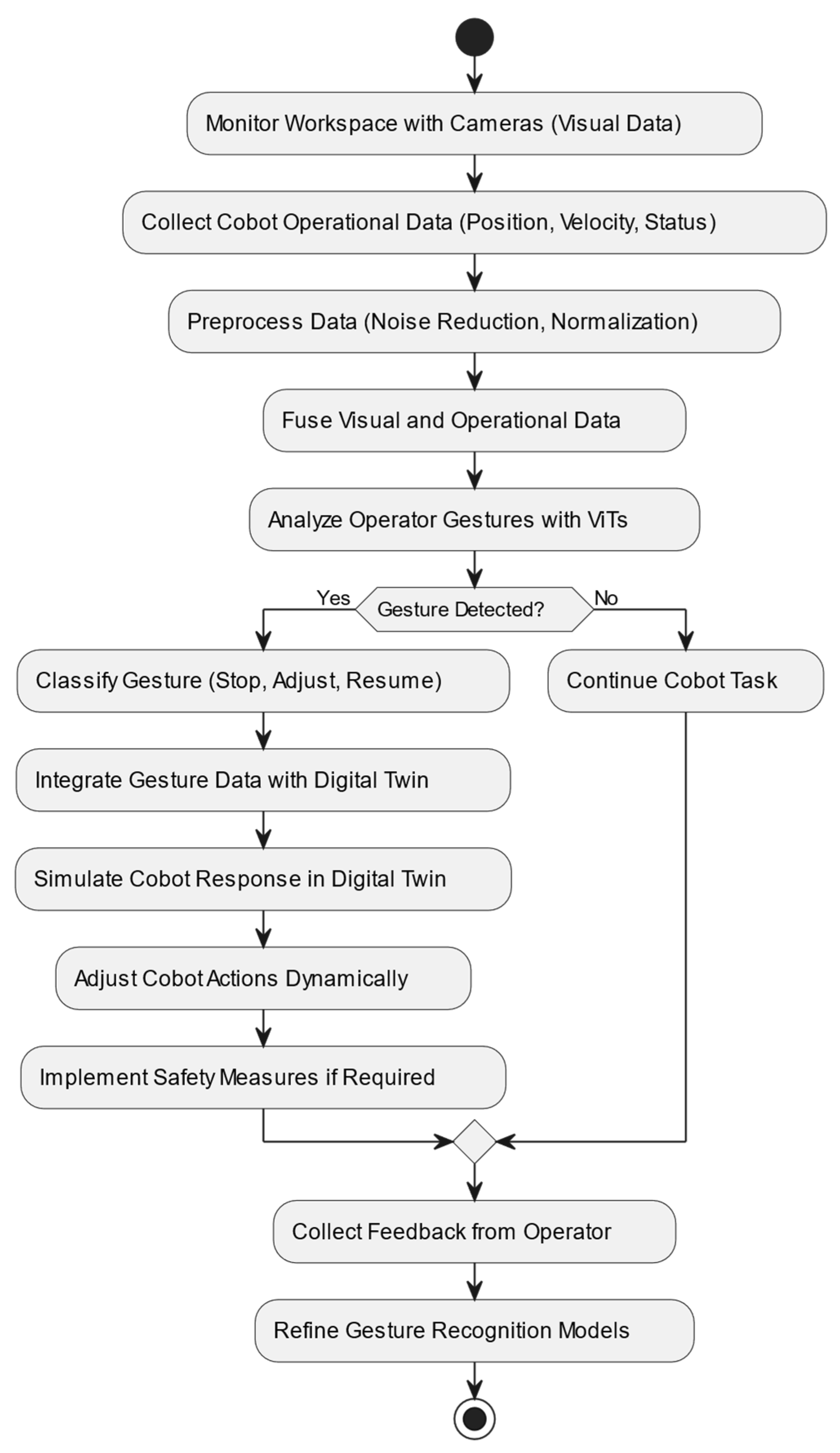

5.3. Human–Robot Collaboration Scenario

- Enhanced safety: Proactive monitoring of workspace dynamics keeps operators and cobots at safe distances, thereby helping to avoid accidents.

- Improved efficiency: Real-time gesture recognition and cobot task adaptation reduce latency and improve cooperative workflows.

- Operator empowerment: Intuitive gesture-based controls let operators concentrate on higher-level tasks while cobots handle routine or physically taxing activities.

6. Advantages of the Framework

6.1. Enhanced Data Analysis

6.2. Real-Time Processing Is at the Forefront

6.3. Scalability and Flexibility

7. Challenges and Considerations

7.1. Technical Challenges

7.2. Ethical and Social Implications

8. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kovari, A. A Framework for Integrating Vision Transformers with Digital Twins in Industry 5.0 Context. Machines 2025, 13, 36. https://doi.org/10.3390/machines13010036