Detecting Anomalies in Hydraulically Adjusted Servomotors Based on a Multi-Scale One-Dimensional Residual Neural Network and GA-SVDD

Abstract

1. Introduction

2. Materials and Methods

2.1. Theoretical Background

2.1.1. M1D_ResNet

- (1) The convolution layer and the pooling layer

- (2) The fully connected layer

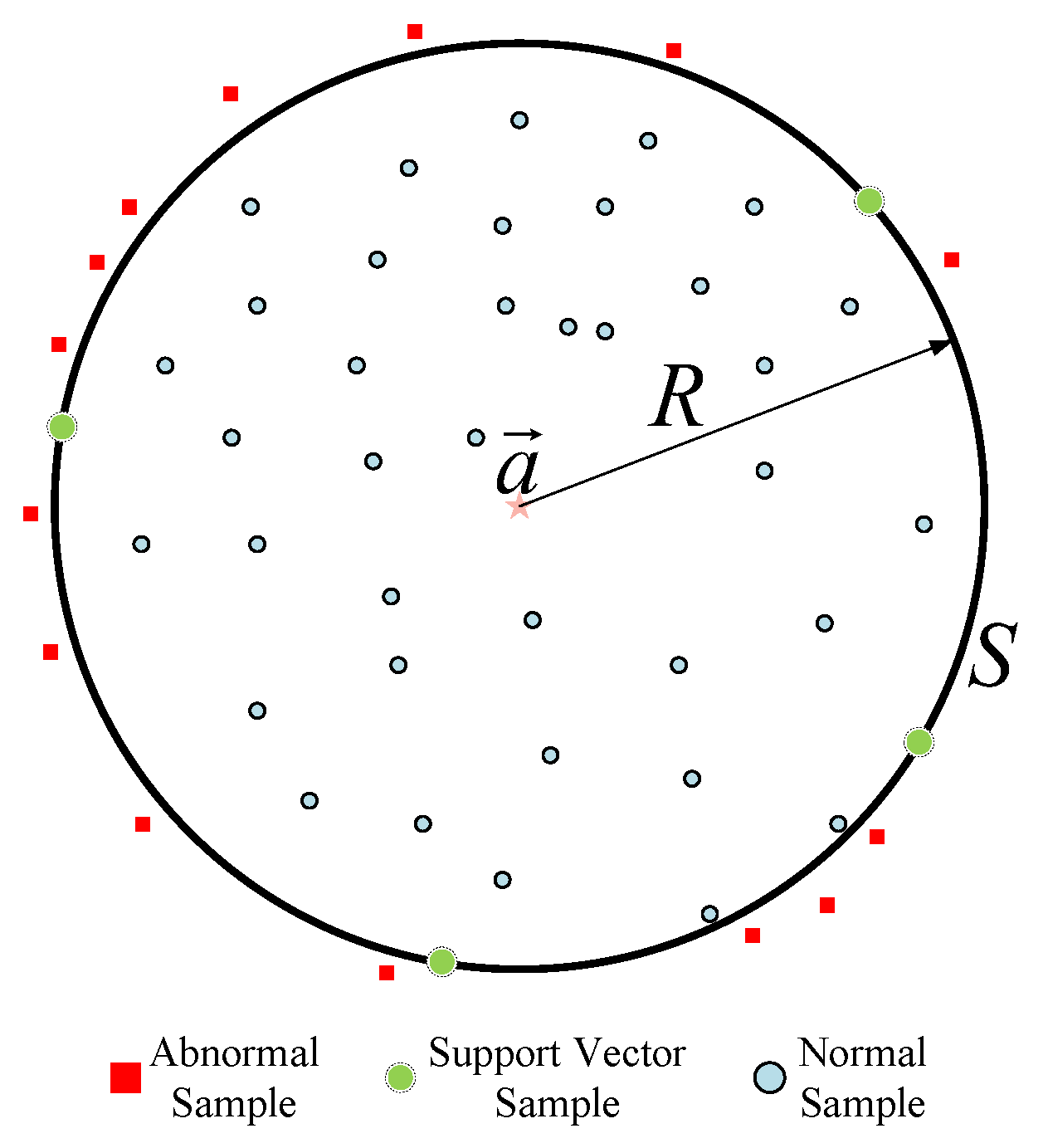
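The two layer types listed above can be illustrated with a minimal, single-channel forward pass. The sketch is not the M1D_ResNet configuration used in this study. The kernel sizes (3, 5 and 7), the random weights, the pooling size and the helper names (`conv1d_same`, `residual_block`, `multi_scale_features`) are all hypothetical, and the fully connected layer is omitted. It only shows how parallel residual branches with different kernel sizes can extract features at several scales from the same 1D signal.

```python
import math
import random


def conv1d_same(x, kernel):
    """Single-channel 1D cross-correlation, stride 1, zero padding that keeps the length."""
    k = len(kernel)
    left = k // 2
    padded = [0.0] * left + list(x) + [0.0] * (k - 1 - left)
    return [sum(padded[i + j] * kernel[j] for j in range(k)) for i in range(len(x))]


def relu(x):
    return [max(0.0, v) for v in x]


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def residual_block(x, k1, k2):
    """y = ReLU(F(x) + x), with F = conv -> ReLU -> conv and an identity shortcut."""
    f = conv1d_same(relu(conv1d_same(x, k1)), k2)
    return relu([a + b for a, b in zip(f, x)])


def multi_scale_features(signal, kernel_sizes=(3, 5, 7), seed=0):
    """One residual branch per kernel size; each branch is pooled, then all are concatenated."""
    rng = random.Random(seed)  # hypothetical random weights stand in for trained ones
    features = []
    for k in kernel_sizes:
        k1 = [rng.uniform(-0.5, 0.5) for _ in range(k)]
        k2 = [rng.uniform(-0.5, 0.5) for _ in range(k)]
        features.extend(max_pool1d(residual_block(signal, k1, k2)))
    return features


# Usage: a short synthetic signal stands in for a measured sample (the study uses length 1024).
signal = [math.sin(0.3 * t) for t in range(16)]
features = multi_scale_features(signal)
print(len(features))  # 3 branches x (16 / 2) = 24
```

Concatenating the pooled branch outputs is one common way to fuse multi-scale features; a trained network would then pass them to the fully connected layer.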

2.1.2. Principle of the SVDD Algorithm

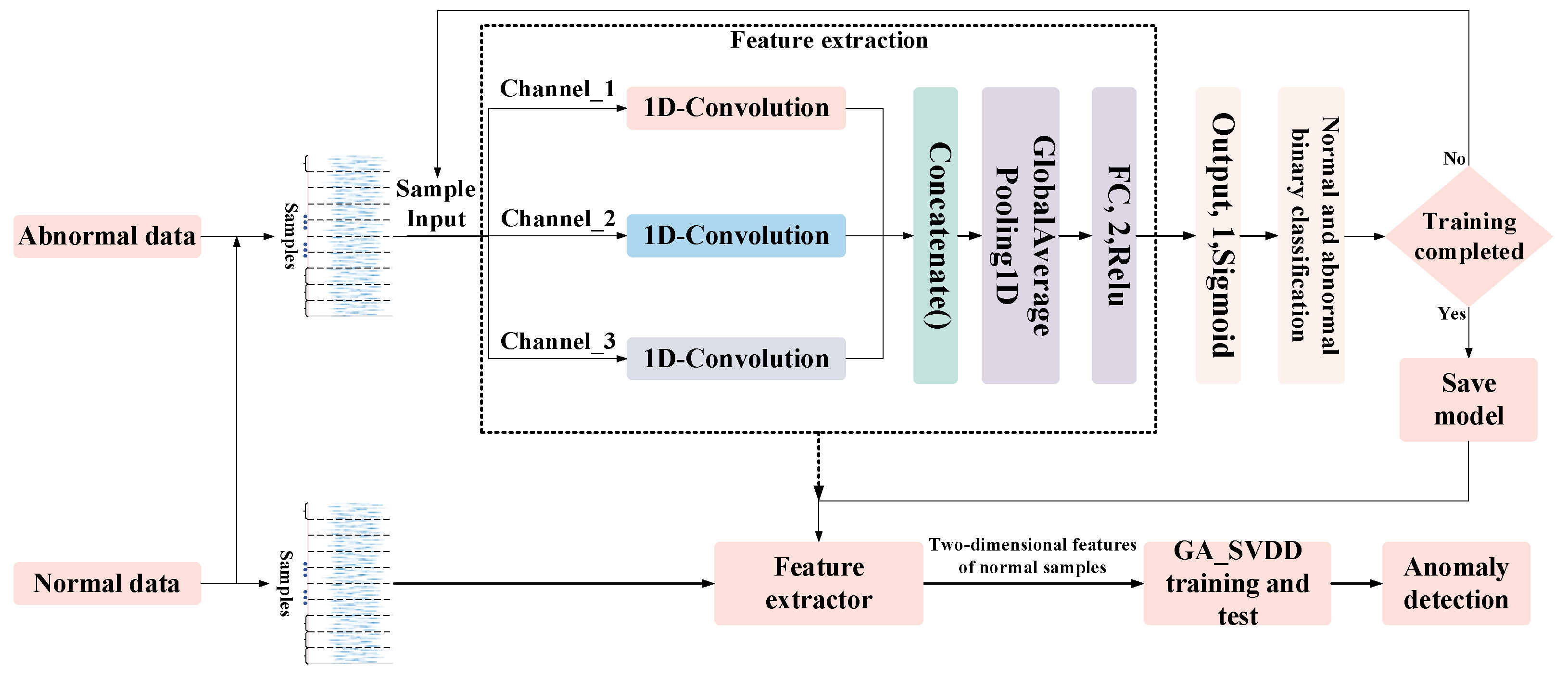
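SVDD describes the normal class by the smallest hypersphere (center $a$, radius $R$) that encloses the training samples in kernel feature space; a new sample $z$ is accepted as normal if its distance to the center does not exceed $R$. With a kernel $K$ and dual coefficients $\alpha_i$, that distance is computed without the explicit feature map $\phi$:

$$\lVert \phi(z) - a \rVert^2 = K(z, z) - 2\sum_i \alpha_i K(z, x_i) + \sum_i \sum_j \alpha_i \alpha_j K(x_i, x_j)$$

The Python sketch below evaluates this decision rule with an RBF kernel, assuming the convention $K(x, y) = \exp(-\gamma \lVert x - y \rVert^2)$. Solving the dual quadratic program is not shown; the two support vectors, their weights and `gamma` are toy values for illustration, not values from this study.

```python
import math


def rbf(x, y, gamma):
    """Gaussian (RBF) kernel K(x, y) = exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def squared_distance_to_center(z, svs, alphas, gamma):
    """||phi(z) - a||^2 with center a = sum_i alpha_i * phi(x_i), expanded via the kernel trick."""
    cross = sum(a * rbf(z, sv, gamma) for a, sv in zip(alphas, svs))
    center_norm = sum(ai * aj * rbf(si, sj, gamma)
                      for ai, si in zip(alphas, svs)
                      for aj, sj in zip(alphas, svs))
    return rbf(z, z, gamma) - 2.0 * cross + center_norm


def is_normal(z, svs, alphas, gamma, r2):
    """Accept z as normal when it lies inside or on the hypersphere of squared radius r2."""
    return squared_distance_to_center(z, svs, alphas, gamma) <= r2


# Toy, hypothetical dual solution: two support vectors with equal weights (assuming C > 0.5).
svs = [(0.0, 0.0), (2.0, 0.0)]
alphas = [0.5, 0.5]  # the dual requires sum(alphas) == 1 and 0 <= alpha_i <= C
gamma = 0.5
# R^2 is the squared distance from any unbounded support vector (0 < alpha_i < C) to the center.
r2 = squared_distance_to_center(svs[0], svs, alphas, gamma)

print(is_normal((1.0, 0.0), svs, alphas, gamma, r2))    # True
print(is_normal((10.0, 10.0), svs, alphas, gamma, r2))  # False
```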

2.2. A Method of Detecting Anomalies Based on M1D_ResNet and GA-SVDD

2.2.1. Method Workflow of This Study

2.2.2. The M1D_ResNet Model’s Structure and Parameter Settings

2.2.3. GA Optimization of the SVDD Process (Including Specific Parameter Settings)
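The GA settings used in this study are not reproduced here. As a hedged illustration of the general idea, the sketch below runs a real-coded genetic algorithm (tournament selection, blend crossover, Gaussian mutation, elitism) over the SVDD penalty factor $C$ and the RBF kernel parameter $\gamma$, both searched in log10 space. The bounds, population size, rates and especially `placeholder_fitness` are hypothetical; in practice the fitness would be a validation score of an SVDD trained with each candidate pair.

```python
import random

# Hypothetical search bounds in log10 space: C in [1e-2, 1], gamma in [1e-4, 1].
BOUNDS = [(-2.0, 0.0), (-4.0, 0.0)]


def placeholder_fitness(log_c, log_gamma):
    """Hypothetical stand-in with a known optimum at C = 0.1, gamma = 0.01.

    In GA-SVDD this would be replaced by, e.g., the detection accuracy of an SVDD
    trained with (10**log_c, 10**log_gamma) and scored on a validation set.
    """
    return -((log_c + 1.0) ** 2 + (log_gamma + 2.0) ** 2)


def tournament(pop, scores, rng, k=3):
    """Return the fittest of k randomly drawn individuals."""
    winner = max(rng.sample(range(len(pop)), k), key=lambda i: scores[i])
    return pop[winner]


def genetic_search(fitness, pop_size=30, generations=60, p_cx=0.8, p_mut=0.2, seed=1):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(*ind) for ind in pop]
        best = pop[max(range(pop_size), key=lambda i: scores[i])]
        children = [list(best)]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            p1 = tournament(pop, scores, rng)
            p2 = tournament(pop, scores, rng)
            if rng.random() < p_cx:  # arithmetic (blend) crossover stays inside the bounds
                w = rng.random()
                child = [w * a + (1.0 - w) * b for a, b in zip(p1, p2)]
            else:
                child = list(p1)
            for g, (lo, hi) in enumerate(BOUNDS):  # Gaussian mutation, clipped to the bounds
                if rng.random() < p_mut:
                    child[g] = min(hi, max(lo, child[g] + rng.gauss(0.0, 0.1 * (hi - lo))))
            children.append(child)
        pop = children
    scores = [fitness(*ind) for ind in pop]
    i_best = max(range(pop_size), key=lambda i: scores[i])
    return 10 ** pop[i_best][0], 10 ** pop[i_best][1], scores[i_best]


c_best, gamma_best, score = genetic_search(placeholder_fitness)
print(f"C = {c_best:.4f}, gamma = {gamma_best:.6f}, fitness = {score:.6f}")
```

Searching in log space lets the GA cover several orders of magnitude of $\gamma$ evenly; the `seed` argument only makes the run reproducible.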

3. Experimental Verification

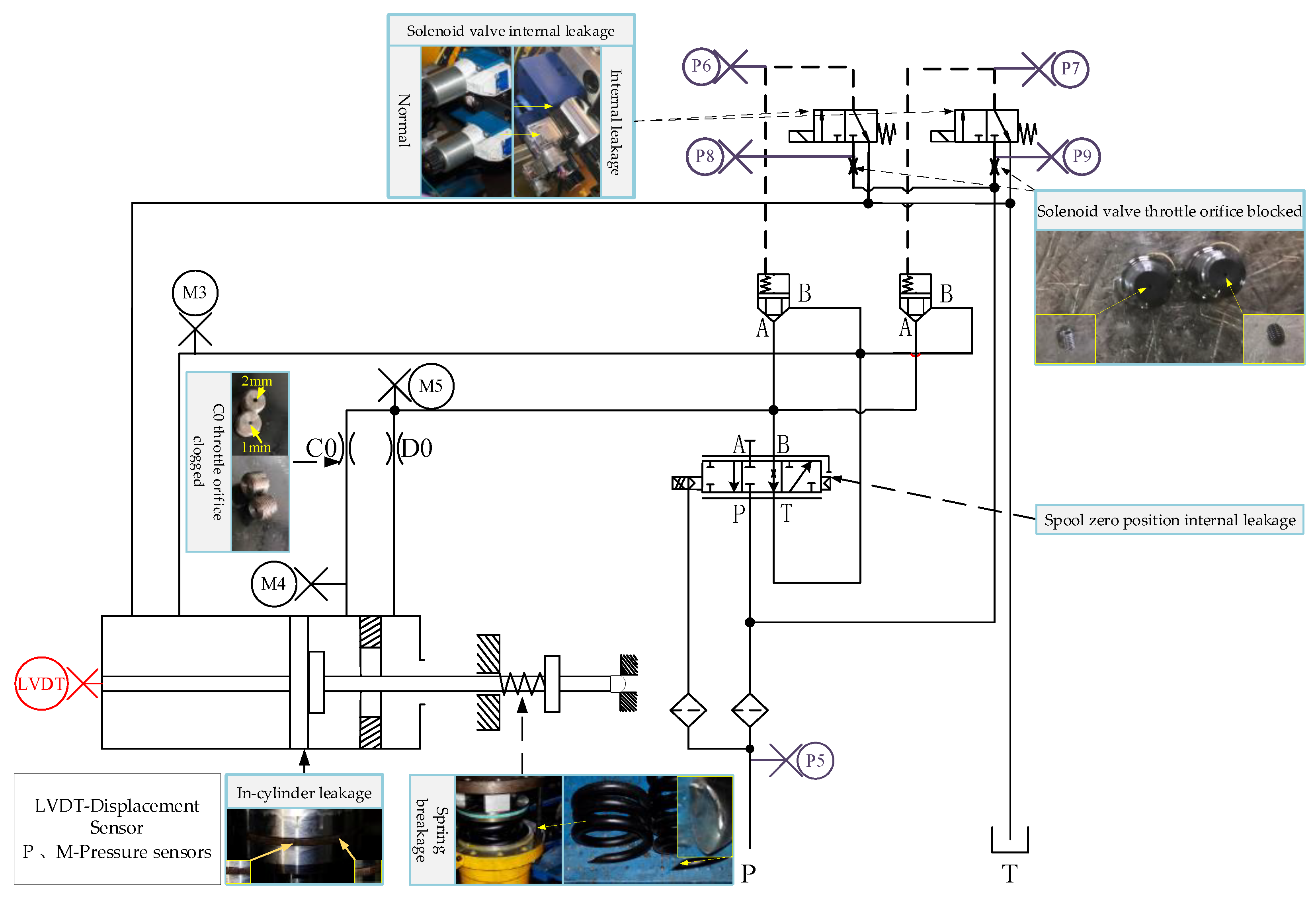

3.1. Signal Acquisition

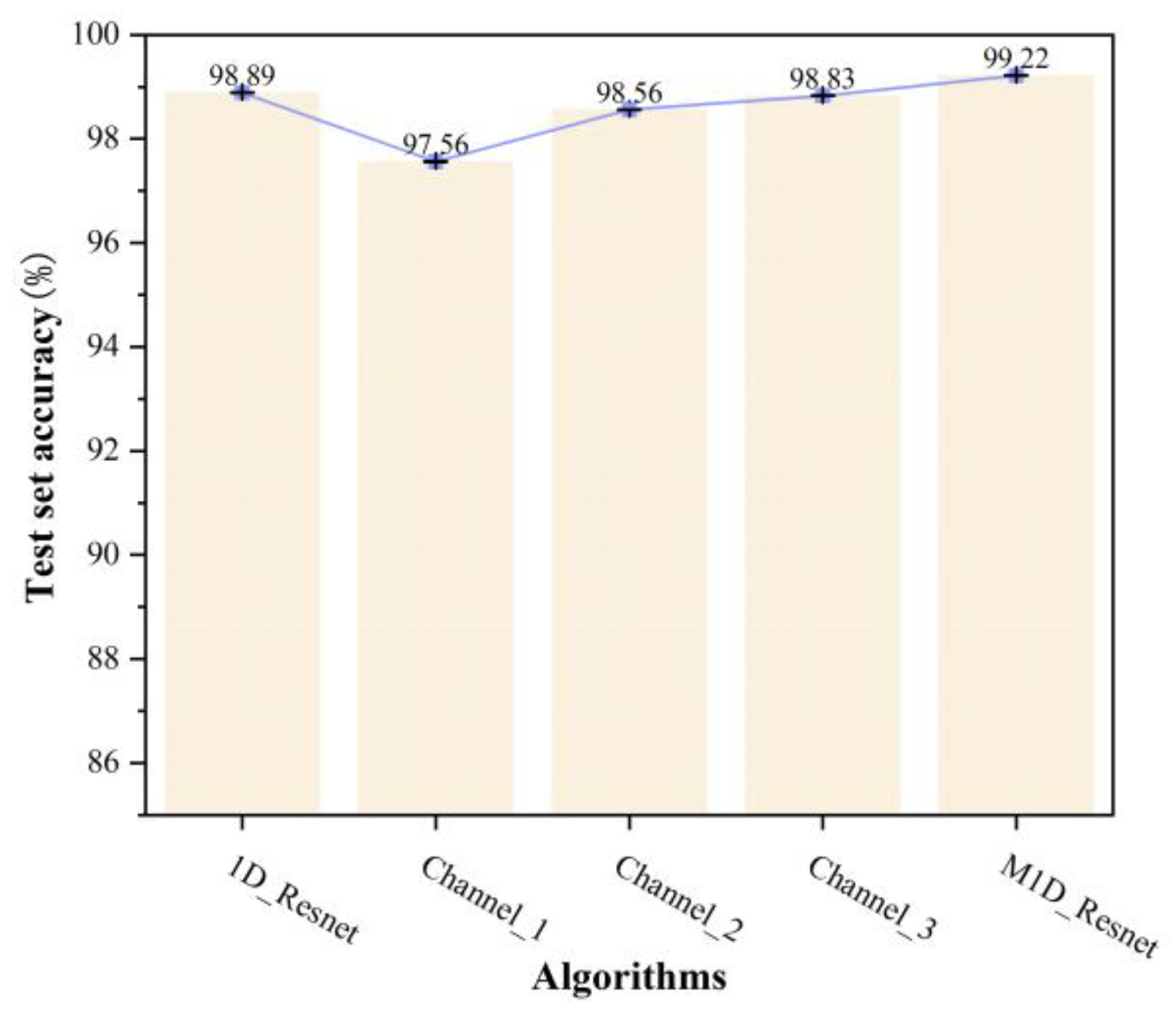

3.2. Ablation Experiment

3.2.1. Settings of the Ablation Hyperparameters

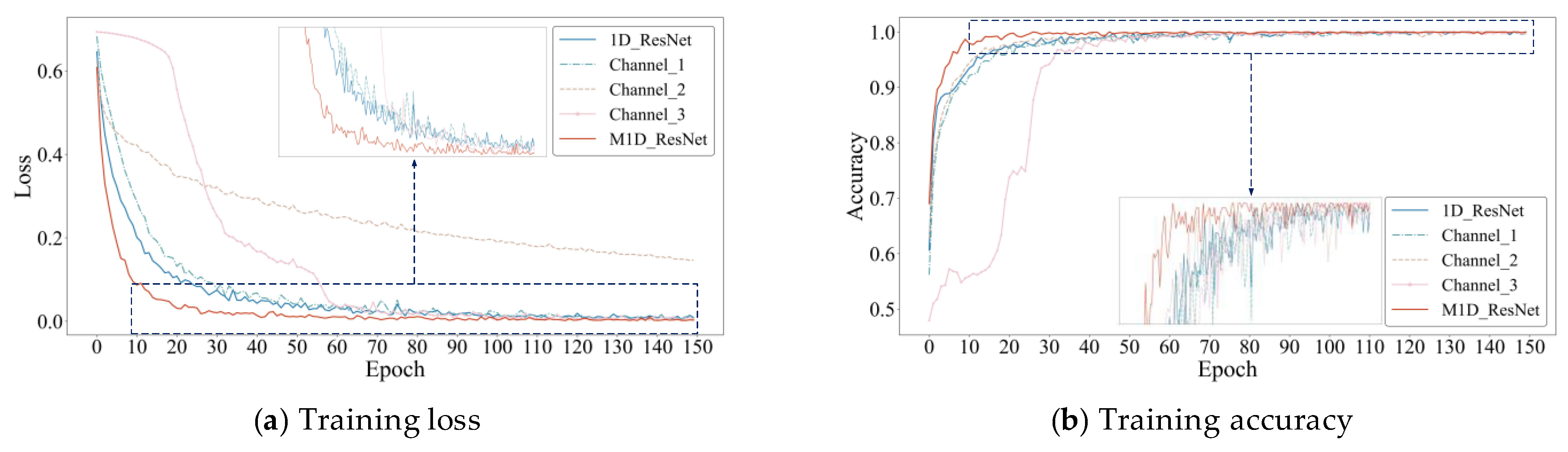

3.2.2. Analysis of the Results of the Ablation Experiment

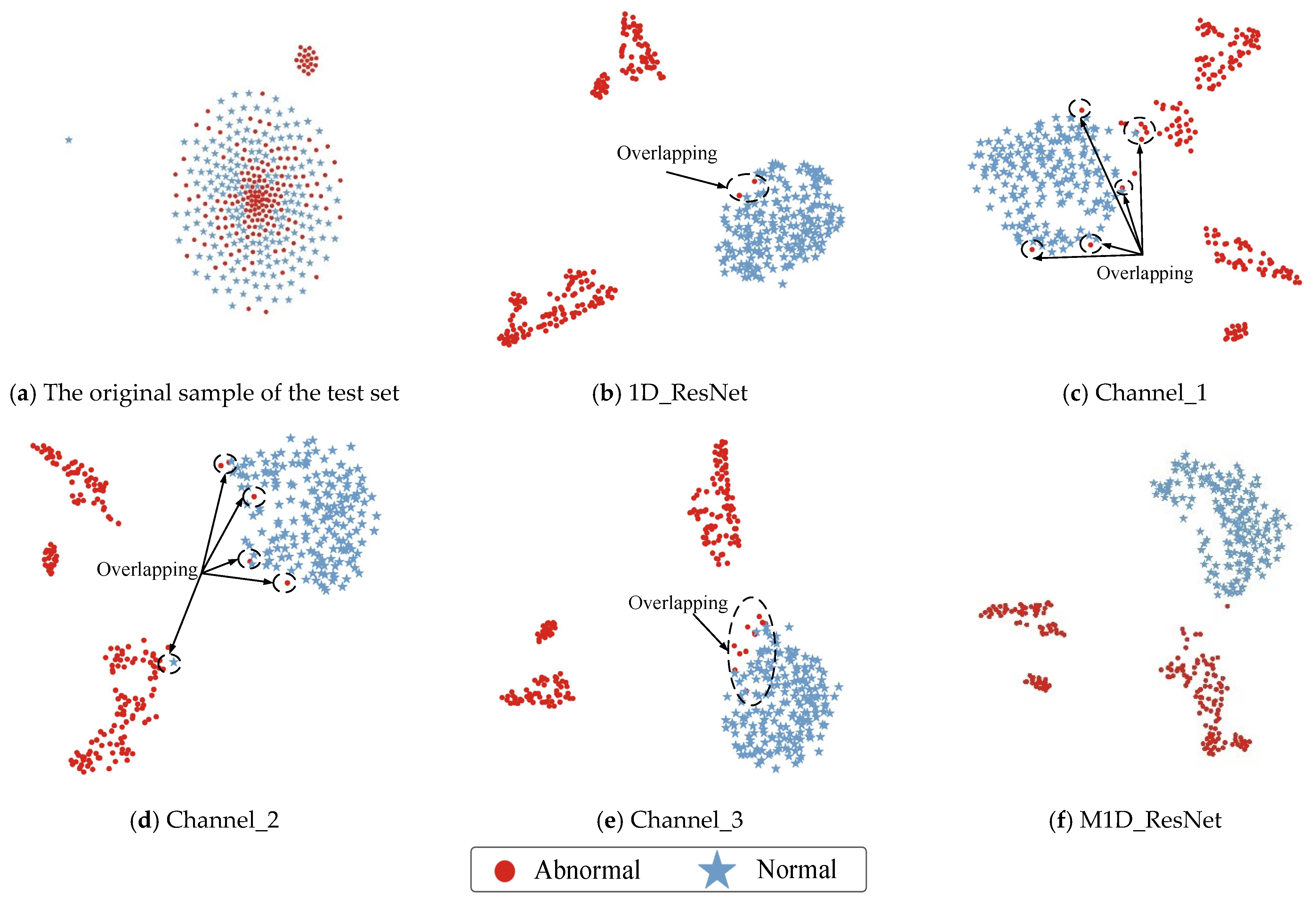

3.2.3. Comparison of the Capabilities of Different Algorithms for Feature Extraction

3.3. Experimental Analysis of GA-SVDD

3.3.1. Arrangement of the Experimental Data of GA-SVDD

3.3.2. Analysis of the Hyperparameter Optimization Process of GA-SVDD

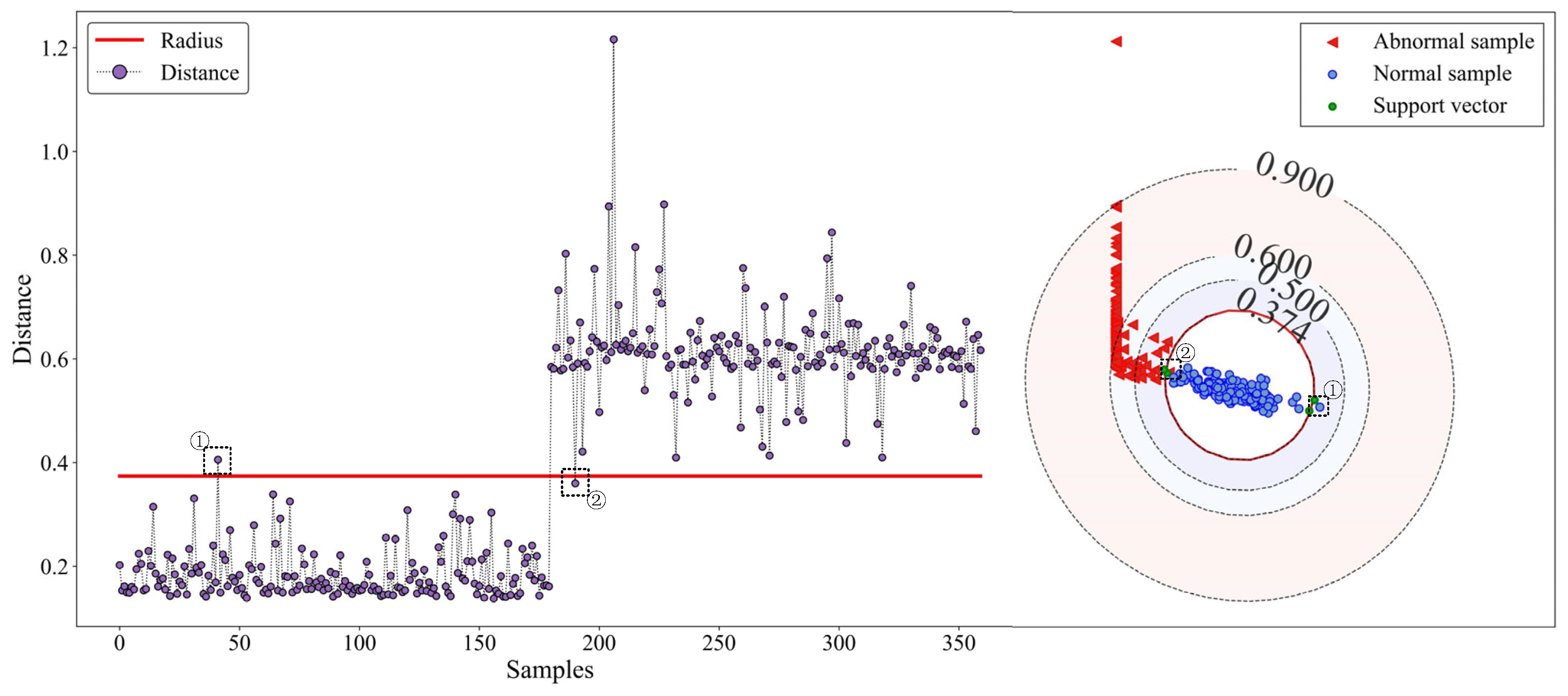

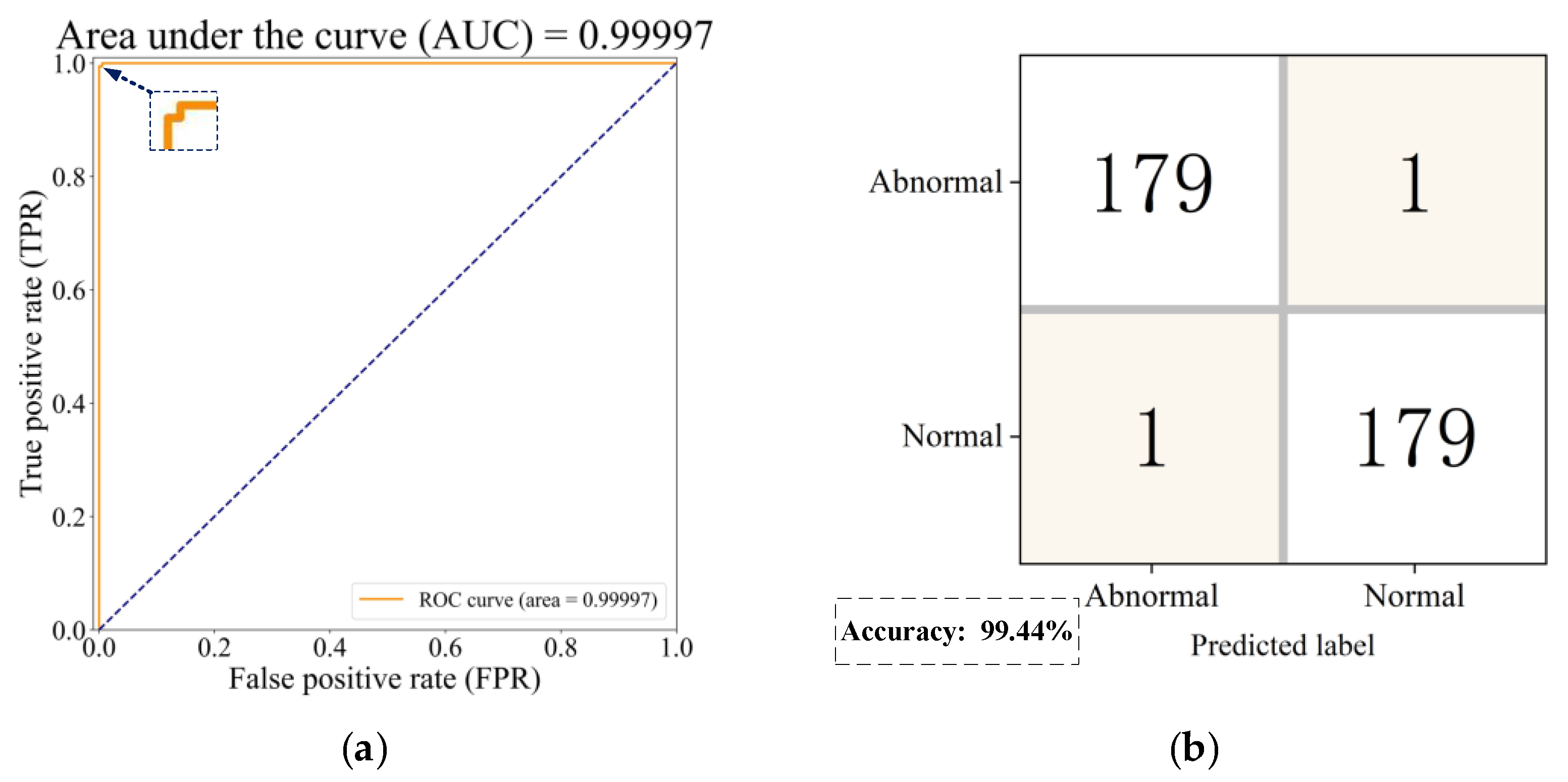

3.3.3. Analysis of SVDD’s Effect on Detecting Anomalies

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Status Type | Label | Number of Samples | Length of the Sample |
|---|---|---|---|
| Normal | 1 | 900 | 1024 |
| In-cylinder leakage, light | 0 | 100 | 1024 |
| In-cylinder leakage, heavy | 0 | 100 | 1024 |
| Breakage of the spring | 0 | 100 | 1024 |
| Blockage of the solenoid valve’s throttle orifice | 0 | 100 | 1024 |
| Internal leakage of the servo valve’s spool at zero position | 0 | 100 | 1024 |
| Internal leakage of the solenoid valve, light | 0 | 100 | 1024 |
| Internal leakage of the solenoid valve, heavy | 0 | 100 | 1024 |
| Clogging of the C0 throttle orifice, light | 0 | 100 | 1024 |
| Clogging of the C0 throttle orifice, heavy | 0 | 100 | 1024 |

| Parameter | Optimizer | Learning Rate | Loss Function | Metric | Epochs | Batch Size |
|---|---|---|---|---|---|---|
| Value | SGD | 0.001 | binary_crossentropy | accuracy | 150 | 32 |
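For reference, the `binary_crossentropy` loss in the table has the standard form below, where $y_n \in \{0, 1\}$ is the sample label (1 = normal, 0 = fault state), $\hat{p}_n$ is the predicted probability of the normal class (assuming a single sigmoid output) and $\mathcal{B}$ is a mini-batch. The update rule assumes plain SGD without momentum, which the table does not specify.

$$
\mathcal{L}_{\mathcal{B}} = -\frac{1}{|\mathcal{B}|}\sum_{n \in \mathcal{B}}\left[y_n \log \hat{p}_n + (1 - y_n)\log\left(1 - \hat{p}_n\right)\right],
\qquad
\boldsymbol{\theta} \leftarrow \boldsymbol{\theta} - \eta\,\nabla_{\boldsymbol{\theta}}\mathcal{L}_{\mathcal{B}},
\quad \eta = 0.001,\; |\mathcal{B}| = 32
$$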

| Model | Params (M) | FLOPs (M) | Average Processing Time per Sample (ms) |
|---|---|---|---|
| 1D_ResNet | 3.849 | 349.365 | 1.134 |
| Channel_1 | 3.849 | 349.104 | 1.179 |
| Channel_2 | 6.291 | 571.533 | 1.308 |
| Channel_3 | 8.732 | 793.962 | 1.300 |
| M1D_ResNet | 18.872 | 1714.598 | 3.275 |
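As a consistency check on the table, the M1D_ResNet parameter count and FLOPs equal the sums of the three single-channel rows to within the three-decimal rounding. This is what one would expect if the multi-scale model essentially runs the three branches in parallel, but that reading is inferred from the numbers rather than stated in the table.

```python
# Values copied from the complexity table: (Params in M, FLOPs in M).
branches = {
    "Channel_1": (3.849, 349.104),
    "Channel_2": (6.291, 571.533),
    "Channel_3": (8.732, 793.962),
}
m1d_resnet = (18.872, 1714.598)

params_sum = sum(p for p, _ in branches.values())
flops_sum = sum(f for _, f in branches.values())

# Each entry is rounded to 3 decimals, so a sum of three entries can drift by up to 0.0015.
tolerance = 3 * 0.0005
print(f"Params: {params_sum:.3f} M vs {m1d_resnet[0]} M")
print(f"FLOPs:  {flops_sum:.3f} M vs {m1d_resnet[1]} M")
print(abs(params_sum - m1d_resnet[0]) <= tolerance and abs(flops_sum - m1d_resnet[1]) <= tolerance)
```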

| Dataset | Status | Label | Number of Samples | Length of the Sample |
|---|---|---|---|---|
| Training set | Normal | 1 | 540 | 2 |
| Validation set | Normal | 1 | 180 | 2 |
| Test set | Normal | 1 | 180 | 2 |
| | In-cylinder leakage, light | −1 | 20 | 2 |
| | In-cylinder leakage, heavy | −1 | 20 | 2 |
| | Breakage of the spring | −1 | 20 | 2 |
| | Blockage of the solenoid valve’s throttle orifice | −1 | 20 | 2 |
| | Internal leakage of the servo valve’s spool at zero position | −1 | 20 | 2 |
| | Internal leakage of the solenoid valve, light | −1 | 20 | 2 |
| | Internal leakage of the solenoid valve, heavy | −1 | 20 | 2 |
| | Clogging of the C0 throttle orifice, light | −1 | 20 | 2 |
| | Clogging of the C0 throttle orifice, heavy | −1 | 20 | 2 |
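The split in the table can be reproduced with the sketch below: the 900 normal samples are divided 60/20/20 into training, validation and test sets, and 20 samples of each of the nine fault states are added to the test set with label −1, giving 180 normal and 180 abnormal test samples. How the 20 fault samples per state were chosen is not stated, so drawing them at random is an assumption, as are the dummy 2-D feature vectors and the hypothetical helper name `split_for_svdd`.

```python
import random

FAULT_STATES = [
    "In-cylinder leakage, light",
    "In-cylinder leakage, heavy",
    "Breakage of the spring",
    "Blockage of the solenoid valve's throttle orifice",
    "Internal leakage of the servo valve's spool at zero position",
    "Internal leakage of the solenoid valve, light",
    "Internal leakage of the solenoid valve, heavy",
    "Clogging of the C0 throttle orifice, light",
    "Clogging of the C0 throttle orifice, heavy",
]


def split_for_svdd(normal, faults, n_fault_test=20, seed=0):
    """Normal-only 60/20/20 split; n_fault_test random samples per fault state join the test set.

    Labels follow the table: +1 = normal, -1 = abnormal.
    """
    rng = random.Random(seed)
    normal = list(normal)
    rng.shuffle(normal)
    n_train, n_val = len(normal) * 3 // 5, len(normal) // 5
    train = [(x, 1) for x in normal[:n_train]]
    val = [(x, 1) for x in normal[n_train:n_train + n_val]]
    test = [(x, 1) for x in normal[n_train + n_val:]]
    for state in FAULT_STATES:
        test.extend((x, -1) for x in rng.sample(faults[state], n_fault_test))
    return train, val, test


# Dummy 2-D feature vectors stand in for the extracted features (900 normal, 100 per fault state).
gen = random.Random(42)
normal = [(gen.random(), gen.random()) for _ in range(900)]
faults = {s: [(gen.random(), gen.random()) for _ in range(100)] for s in FAULT_STATES}
train, val, test = split_for_svdd(normal, faults)
print(len(train), len(val), len(test))  # 540 180 360
```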

| Parameter Name | Penalty Factor | Radial Kernel Parameter Gamma | Support Vector Count | Percentage of Support Vectors | Radius of the Hypersphere |
|---|---|---|---|---|---|
| Parameter value | 0.47879115 | 0.00203236 | 4 | 0.5556% | 0.3739 |
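Two quick checks on the reported SVDD solution. First, 4 support vectors correspond to 0.5556% only if the denominator is 720 samples, which matches the 540 training plus 180 validation normal samples in the dataset table; that this is how the percentage was computed is an inference, not something the table states. Second, under the standard SVDD dual ($\sum_i \alpha_i = 1$, $0 \le \alpha_i \le C$) at least $\lceil 1/C \rceil$ coefficients must be non-zero, so the reported penalty factor implies at least 3 support vectors, consistent with the 4 reported.

```python
import math

n_sv = 4            # support vector count from the table
C = 0.47879115      # penalty factor from the table
n_fit = 540 + 180   # assumption: training + validation normal samples

sv_percentage = 100 * n_sv / n_fit
# Standard SVDD dual: sum(alpha_i) = 1 and 0 <= alpha_i <= C, so at least ceil(1 / C) alphas are non-zero.
min_sv = math.ceil(1 / C)

print(f"{sv_percentage:.4f}%")  # 0.5556%
print(min_sv, n_sv >= min_sv)   # 3 True
```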

| Feature Dimension | Class | Test Samples | Mean Testing Time per Sample (ms) | Min. Testing Time per Sample (ms) | Max. Testing Time per Sample (ms) |
|---|---|---|---|---|---|
| 2 | Normal | 180 | 0.0174 | 0.01253 | 0.0223 |
| | Abnormal | 180 | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, X.; Jiang, A.; Jiang, W.; Zhao, Y.; Tang, E.; Qi, Z. Detecting Anomalies in Hydraulically Adjusted Servomotors Based on a Multi-Scale One-Dimensional Residual Neural Network and GA-SVDD. Machines 2024, 12, 599. https://doi.org/10.3390/machines12090599
