Abstract

The upsurge of autonomous vehicles in the automobile industry will lead to better driving experiences while also enabling the users to solve challenging navigation problems. Reaching such capabilities will require significant technological attention and the flawless execution of various complex tasks, one of which is ensuring robust localization and mapping. Recent surveys have not provided a meaningful and comprehensive description of the current approaches in this field. Accordingly, this review is intended to provide adequate coverage of the problems affecting autonomous vehicles in this area, by examining the most recent methods for mapping and localization as well as related feature extraction and data security problems. First, a discussion of the contemporary methods of extracting relevant features from equipped sensors and their categorization as semantic, non-semantic, and deep learning methods is presented. We conclude that representativeness, low cost, and accessibility are crucial constraints in the choice of the methods to be adopted for localization and mapping tasks. Second, the survey focuses on methods to build a vehicle’s environment map, considering both the commercial and the academic solutions available. The analysis proposes a difference between two types of environment, known and unknown, and develops solutions in each case. Third, the survey explores different approaches to vehicle localization and also classifies them according to their mathematical characteristics and priorities. Each section concludes by presenting the related challenges and some future directions. The article also highlights the security problems likely to be encountered in self-driving vehicles, with an assessment of possible defense mechanisms that could prevent security attacks in vehicles. 
Finally, the article ends with a debate on the potential impacts of autonomous driving, spanning energy consumption and emission reduction, sound and light pollution, integration into smart cities, infrastructure optimization, and software refinement. This thorough investigation aims to foster a comprehensive understanding of the diverse implications of autonomous driving across various domains.

1. Introduction

In the last decade, self-driving vehicles have shown impressive progress, with many researchers working in different laboratories and companies and experimenting in various environmental scenarios. Some of the questions in the minds of potential users relate to the advantages offered by this technology and whether it can be relied upon. To respond to these questions, one needs to look at the numbers of accidents and deaths registered daily for non-autonomous vehicles. According to the World Health Organization’s official website, approximately 1.3 million people, mainly children and young adults, die yearly in vehicle crashes. Most of these accidents (93%) are known to occur in low- and middle-income countries [1]. Human behaviors, including traffic offenses, driving while under the influence of alcohol or psychoactive substances, and late reactions, are the major causes of crashes. Consequently, self-driving technology has been conceived and developed to replace human–vehicle interaction. Despite some of the challenges of self-driving vehicles, the surveys in [2] indicate that about 84.4% of people are in favor of using self-driving vehicles in the future. Sales statistics also show that the market for these vehicles was worth USD 54 billion in 2021 [3]. This outcome demonstrates the growing confidence in the usage of this technology. It has also been suggested that this technology is capable of reducing pollution, energy and time consumption, and accident rates, while also ensuring the safety and comfort of its users [4].

The Society of Automotive Engineers (SAE) divides the technical revolution resulting from this technology into five levels. The first two levels do not provide many automatic services and, therefore, have a low risk potential. The challenge starts from level three, where the system is prepared to avoid collisions and has assisted steering, braking, etc. Moreover, humans must be prudent with respect to any hard decision or alert from the system. Levels four and five refer to vehicles that are required to take responsibility for driving without any human interaction. Hence, these levels are more complex, especially if one considers how dynamic and unpredictable the environment can be, where there may be a chance of collision from anywhere. Ref. [4] affirms that no vehicle has achieved robust results in these levels at the time of writing this paper.

Creating a self-driving vehicle is a complicated task, where it is necessary to perform predefined steps consistently and achieve the stated goals in a robust manner. The principal question that should be answered is: how can vehicles work effectively without human interaction? Vehicles need to continuously sense the environment; hence, they should be equipped with accurate sensors, like Global Navigation Satellite System (GNSS), Inertial Measurement Unit (IMU), wheel odometry, LiDAR, radar, cameras, etc. Moreover, a high-performance computer is needed to handle the immense volume of data collected and accurately extract relevant information. This information helps the vehicles make better decisions and execute them using specific units prepared for this purpose.

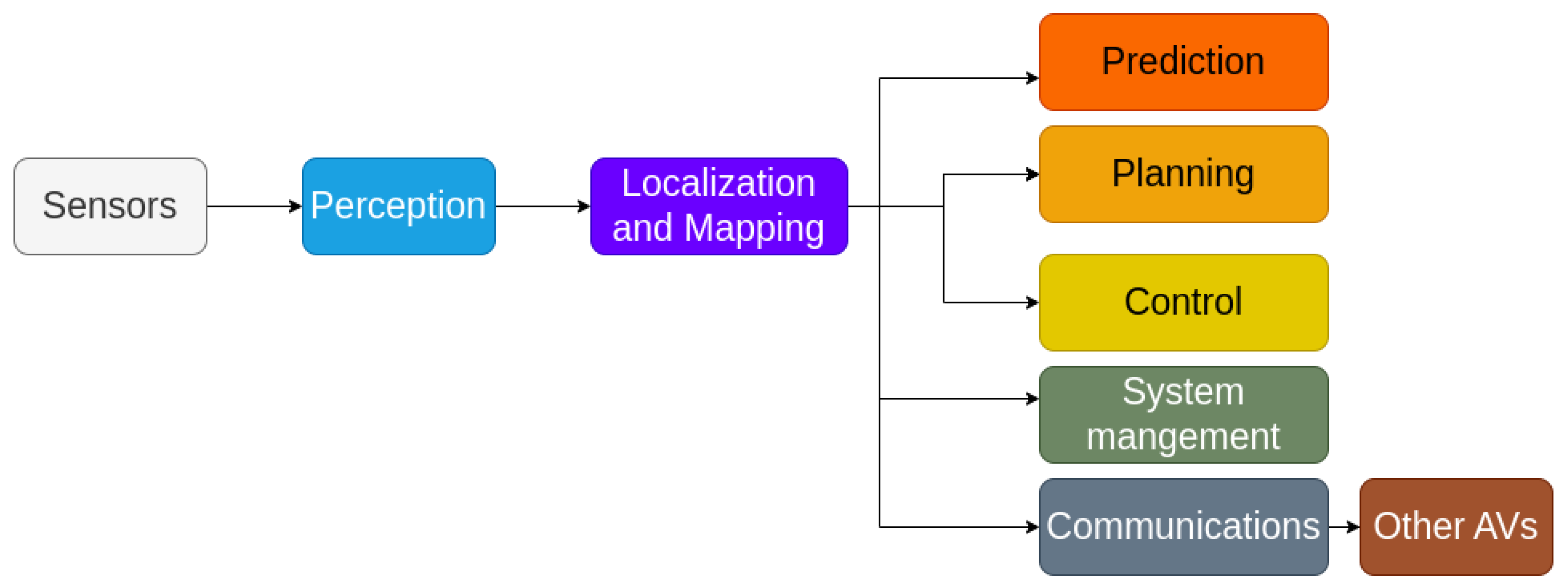

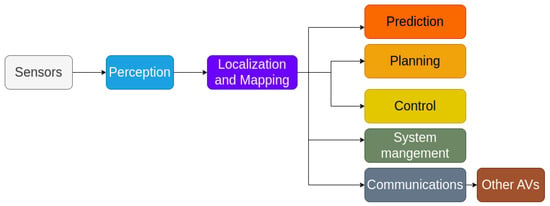

To achieve autonomous driving, Ref. [5] proposed an architecture that combines localization, perception, planning, control, and system management. Localization is deemed an indispensable step in the operation of autonomous systems, since accurate information about the vehicle's location is needed. Figure 1 illustrates the importance of the localization and mapping steps in the deployment of other self-driving tasks, like path planning (e.g., finding the shortest path to a certain destination), ensuring reliable communications with other AVs, and performing necessary tasks at the right moment, such as overtaking vehicles, braking, or accelerating. A small localization error can lead to significant issues, like traffic perturbation, collisions, accidents, etc.

Figure 1.

Steps to follow to achieve a self-driving vehicle.

The vehicle position can be represented by a 3D vector with lateral, longitudinal, and orientation components, the last of which is called the ”heading”:

$$\mathbf{x}_t = (x_t,\; y_t,\; \theta_t)^{\top}$$

where $x_t$ and $y_t$ are the Cartesian coordinates of the vehicle and $\theta_t$ is its rotation. There exist other representations using speed, acceleration, etc.; see [6] for more details. However, we use the above representation most of the time, where the height is generally equal to 0, as there is no rotation about the x-axis and y-axis. This vector is denoted by the state vector $\mathbf{x}_t$.

One of the classical methods is based on the Global Navigation Satellite System (GNSS). This technique uses trilateration, which allows a position to be determined anywhere in the world from the measured distances to at least three satellites (in practice, a fourth satellite is needed to resolve the receiver clock bias). Moreover, this is a cheap solution. However, GNSS-based methods may suffer from signal blockage, and they are not preferred in some environments, especially when an obstacle cuts the line of sight. In addition, positioning errors can exceed 3 m. All these issues can make driving unreliable and unsafe. Several papers in the literature have attempted to address this problem, for example with D-GPS or RTK-based technologies [7].
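To make the trilateration principle concrete, the following is a minimal sketch in a simplified planar setting. It assumes known transmitter positions and noise-free ranges, and it ignores the receiver clock bias that a real GNSS solver must also estimate; the function name `trilaterate` is hypothetical and chosen only for illustration.

```python
import numpy as np


def trilaterate(anchors, ranges):
    """Least-squares position from anchor positions and measured ranges.

    anchors: (n, d) array of known transmitter positions, with n >= d + 1.
    ranges:  (n,) array of measured distances to each anchor.
    """
    anchors = np.asarray(anchors, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    p0, r0 = anchors[0], ranges[0]
    # Each range gives ||p - a_i||^2 = r_i^2. Subtracting the first equation
    # from the others cancels ||p||^2 and leaves a linear system A p = b.
    A = 2.0 * (anchors[1:] - p0)
    b = (r0**2 - ranges[1:]**2
         + np.sum(anchors[1:]**2, axis=1) - np.sum(p0**2))
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position
```

With more anchors than unknowns, the same least-squares formulation averages out part of the range noise, which is one reason additional satellites in view improve the fix.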

Localization based on wheel odometry is another approach, in which the pose is estimated from a known starting point and the distance traveled. This method can be applied without external references and is used in other autonomous systems, not only in self-driving vehicles. However, it suffers from cumulative errors caused by wheel slip [7].
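The accumulation of odometry error can be illustrated with a short dead-reckoning sketch. It assumes a planar pose (x, y, heading) and per-step measurements of traveled distance and heading change; both function names are hypothetical, and the midpoint-heading update is one common simplification rather than the model of any cited work.

```python
import math


def integrate_odometry(pose, distance, heading_change):
    """Advance a planar pose (x, y, theta) by one odometry step."""
    x, y, theta = pose
    # Midpoint heading: assume the turn is spread evenly over the step.
    mid = theta + 0.5 * heading_change
    x += distance * math.cos(mid)
    y += distance * math.sin(mid)
    # Wrap the new heading into [-pi, pi).
    theta = (theta + heading_change + math.pi) % (2.0 * math.pi) - math.pi
    return (x, y, theta)


def dead_reckon(start, steps):
    """Chain odometry steps, given as (distance, heading_change) pairs."""
    pose = start
    for distance, heading_change in steps:
        pose = integrate_odometry(pose, distance, heading_change)
    return pose
```

Because each step is added to the previous estimate, a constant 2% over-reading of distance caused by wheel slip grows linearly with the distance traveled, and nothing in the method itself can correct it.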

An Inertial Navigation System (INS) uses the data provided by the IMU sensor, including accelerometers, gyroscopes, and magnetometers, together with dead reckoning to localize the vehicle without any external references. However, this approach remains very limited because of cumulative errors [7].

The weakness of the traditional methods is that they work with only one source of information, and their performance depends on the structure of the environment. Due to these limitations, researchers have attempted to combine information from various sensors in order to exploit the advantages provided by each of them and tackle the above-mentioned issues. The subject of localization and mapping has been widely treated and surveyed in previous articles in the literature. Table 1 presents our effort to put together the previous surveys that investigated the same subject. We have identified some concepts that must be investigated in relation to localization and mapping in autonomous vehicles, which include feature extraction, mapping, ego-localization (vehicle localization based on its own sensors), co-localization (vehicle localization based on its own sensors and nearby vehicles), simultaneous localization and mapping (SLAM), security of autonomous vehicles, and environmental impact. Finally, we present the challenges and future directions in the area.

Table 1.

Comparison of previous surveys on localization and mapping.

The surveys [8,9] studied visual-based localization methods, investigating several methods of data representation and feature extraction. They discussed in depth the localization techniques as well as the challenges that these techniques may face. However, Light Detection and Ranging (LiDAR) methods are neglected in these two surveys. Grigorescu et al. [10] restricted their survey to deep learning methods. Similarly, Refs. [11,12] surveyed 3D object detection methods and feature extraction methods for point cloud datasets. On the other hand, many articles [13,14,15,16] have tackled the problem of Simultaneous Localization and Mapping (SLAM) and have listed recent works in this field. These surveys lack information on feature extraction, and they do not present much information about the existing types of maps. An interesting survey was performed in [17], which analyzed different methods that can be applied to Vehicular Ad-hoc Network (VANET) localization, but without tackling either feature extraction or mapping methods. Like the survey we present here, Kuutti et al. [18] investigated the positioning methods according to each sensor. Moreover, they emphasised the importance of cooperative localization by reviewing Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) localization. However, their survey did not present any feature extraction methods. Badue et al. [19] extensively surveyed the methods that aim to solve the localization and mapping problem in self-driving cars. However, issues related to the protection and security of autonomous vehicles were not investigated. The survey in [20] covered the localization and mapping problem for autonomous racing vehicles. Based on these limitations of the previous surveys in the literature, we put forward here a survey with the following main contributions:

- Comprehensively reviewing the feature extraction methods in the field of autonomous driving.

- Providing clear details on the solutions available to create a map for autonomous vehicles, by investigating the solutions that use pre-built maps, which can be pre-existing commercial maps or maps generated by academic solutions, and the online map solution, which creates a map at the same time as the positioning process.

- Providing necessary background information and demonstrating many existing solutions to solve the localization and mapping tasks.

- Reviewing and analysing localization approaches that exist in the literature together with the related solutions to ensure data security.

- Exploring the environmental footprint and impact of self-driving vehicles in relation to localization and mapping techniques and unveiling solutions for this.

The rest of this paper is organized as follows: Section 2 presents different methods to extract relevant features, specifically from LiDAR and camera sensors. Section 3 describes how to generate an accurate map. Section 4 addresses the recent localization approaches in the literature. Section 5 highlights the levels of possible attacks and some defense mechanisms for localization security. Section 6 discusses the environmental impact of self-driving vehicles. Section 7 draws a synthetic conclusion and provides a perspective for future work in the area.

2. Feature Extraction

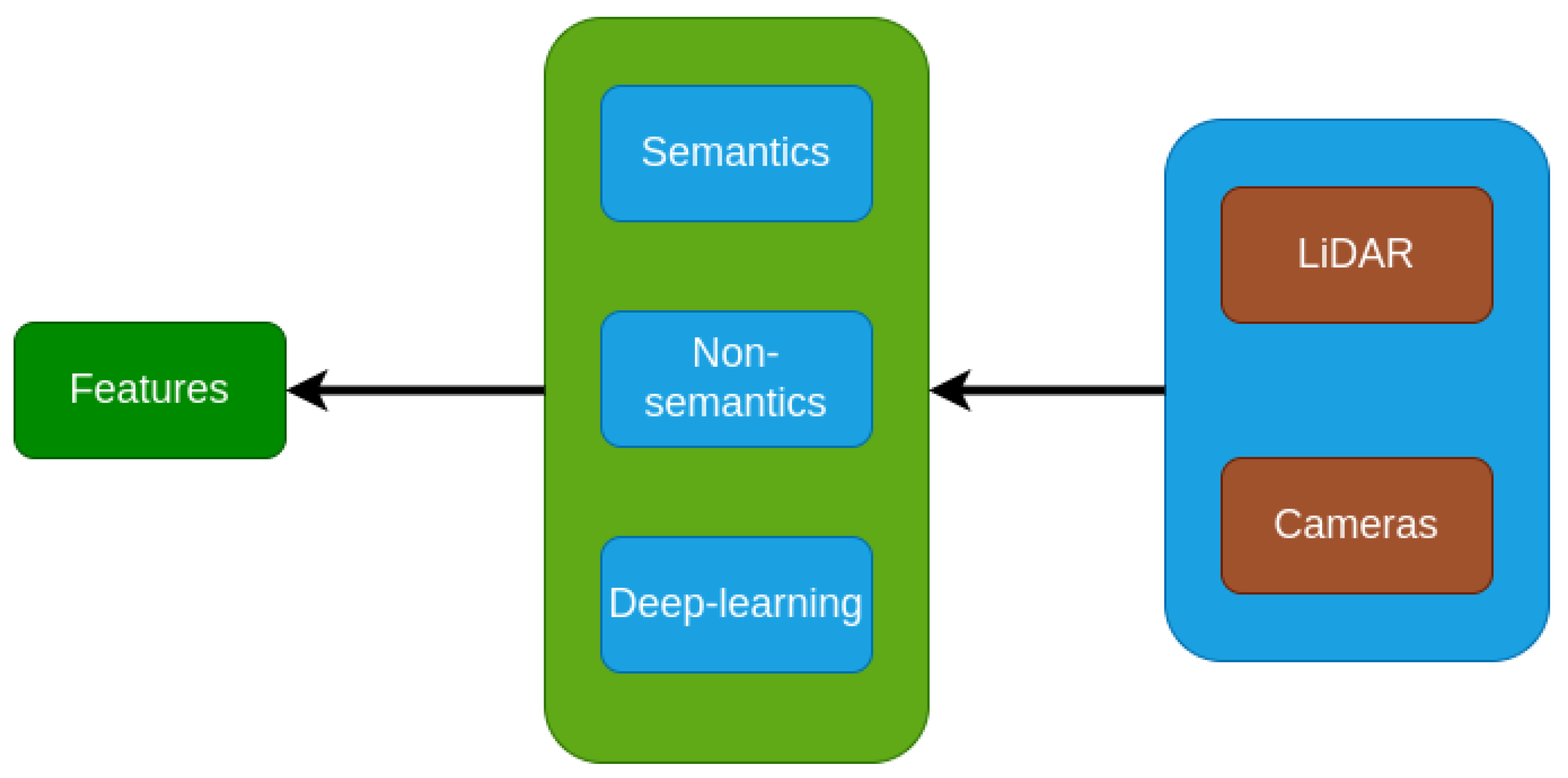

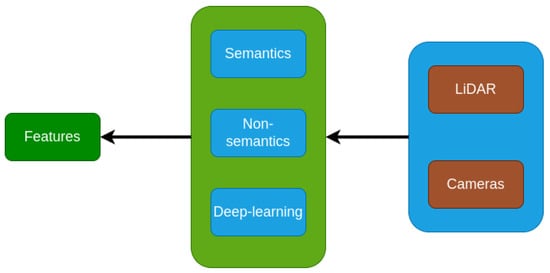

Feature extraction is the process of providing a relevant representation of the raw data. In other words, it manipulates the data so that it is easier to learn from and generalizes more robustly. The same holds for sensor data: extracting suitable information reduces cost and energy and helps the models to be fast and accurate in execution. In this section, we give an overview of what was done previously in the area. However, we limit the discussion to two sensors, namely LiDAR and cameras, because we believe that they are the most suitable and useful in practice. Figure 2 provides a flowchart of these features.

Figure 2.

Flowchart of feature extraction.

2.1. Semantic Features

Semantic features essentially deal with extracting a known target (poles, facades, curbs, etc.) from the raw data, in order to localize the vehicle more accurately.

LiDAR features: Lane markings can be extracted using specialized methods that differentiate lanes from the surrounding environment. These techniques analyze how LiDAR beams reflect off surfaces and consider variations in elevation to accurately separate lane boundaries. In [21], the authors extracted lane markings as features to localize the vehicle, using the Radon transform to approximate the polylines of the lane. They then applied the Douglas-Peucker algorithm to refine the shape points that estimate the road center lines. Im et al. [22] detected two kinds of features. The first kind was produced using the LiDAR reflectivity from layers 17 to 32 to extract road markings: the extracted ground plane was binarized with the Otsu thresholding method [23], and the Hough transform was then used to infer the road marking lines. The second kind focuses on building walls, because they resemble lines in the 2D horizontal plane; all these features were projected into a 2D probabilistic map. However, pole-like objects such as street trees affect the creation of the line map. To solve this problem, unnecessary structures were eliminated by applying the Iterative-End-Point-Fit (IEPF) algorithm, which is considered one of the most reliable algorithms for line fitting and gives the best accuracy in a short calculation time. Zhang et al. [24] exploited the height of curbs (10–15 cm), together with the smoothness and continuity of the point cloud around the curbs, to propose a curb-tracking method. This approach is also used in [25] with some modifications. Since curbs exist on almost every road, they can be regarded as very useful features. 
The authors of [26] combined information from LiDAR and cameras to extract pole-like objects such as traffic signs, trees, and street lamps, since they are more representative for localization purposes. For each layer, the authors projected the point cloud onto a 2D grid map after removing the ground plane, and they treated connected grid cells containing points at the same height as a candidate object. To ensure the reliability of this method, they fitted a cylinder to each landmark candidate to form the pole shape. From the camera, they computed a dense disparity map and applied pattern recognition to it to detect landmarks. Kummerle et al. [27] adopted the same idea as [26], extracting poles and facades from the LiDAR scans; they also detected road markings with a stereo camera. In [28], the authors extracted building facades, traffic poles, and trees as a first kind of feature by calculating the variance of each voxel and comparing it against a fixed threshold to assess the closeness of the points. Those voxels are grouped and arranged in the same vertical direction; hence, each cluster whose voxels all respect the variance condition is considered a landmark. The second kind of feature is the reflectivity of the ground, which is extracted from the LiDAR intensity with the RANSAC algorithm. An efficient algorithm to detect poles was proposed in [29]. First, a 3D voxel map was built from the point cloud, and cells with few points were eliminated. Thereafter, the cluster boundaries were determined by calculating the intensity of points in each pole-shape candidate to deduce its highest and lowest parts. Finally, the points in the entire core of each candidate were checked against a density condition to decide whether it should be retained as a pole. Ref. 
[30] implemented a method with three steps: voxelization, horizontal clustering, and vertical clustering. Voxelization is a preprocessing step intended to remove the ground plane and group the point cloud into voxels, keeping only the voxels whose number of points exceeds a fixed threshold. The authors also exploited the characteristics of poles in urban areas, such as their isolation and their distinguishability from the surrounding area, in order to extract horizontal and vertical features. Ref. [31] is an interesting article that provides highly accurate results for the detection of pole landmarks. The authors used a 3D probabilistic occupancy grid map based mainly on the Beta distribution applied to each layer. They then calculated the difference between the average occupancy value inside the pole and the maximum occupancy value of the surrounding poles; since occupancy values lie in [0, 1], this difference is a value between -1 and 1. A greater value means a higher probability that the candidate is a pole. A GitHub link for the method was provided in [31]. Ref. [32] is another method that uses pole landmarks, providing high-precision localization for self-driving vehicles. The method assumes five conditions for robust landmarks: common urban objects, time-invariant location, time-invariant appearance, viewpoint-invariant observability, and high frequency of occurrence. First, the ground plane was removed using the M-estimator Sample Consensus (MSAC). Second, an occupancy grid map was built, which isolates the empty spaces. Afterwards, clustering with connected component analysis (CCA) was performed to group the connected occupied cells into objects. Finally, PCA was used to infer the position of the poles. The idea is to test the three eigenvalues obtained against three conditions that involve a fixed threshold.

These conditions precisely characterize the dimensions of the poles. Ref. [33] detected three kinds of features: first, planar features, such as walls, building facades, fences, and other planar surfaces; second, pole features, including streetlights, traffic signs, and tree trunks; third, curb shapes. After removing the ground plane using the Cloth Simulation Filter, the authors observed that all landmarks are vertical, rise above the ground plane, and contain more points than their surroundings, and they filtered out the cells that do not respect these conditions. Planar features and curb features are treated as lines that can be approximated with a RANSAC algorithm or a Hough transform. This research focuses on the use of pole-like structures as essential landmarks for accurate localization of autonomous vehicles in urban and suburban areas. A novel approach is to create a detailed map of the poles, taking into account both geometric and semantic information. Ref. [34] improves the localization of autonomous vehicles in urban areas using bollard-like landmarks. A novel approach integrates geometric and semantic data to create detailed maps of bollards using a mask-rank transformation network. The proposed semantic particle filtering improves localization accuracy and is validated on the Semantic KITTI dataset. Integrating semantics into maps is essential for robust localization of autonomous vehicles in uncertain urban environments. Ref. [35] introduces a novel method for accurate localization of mobile autonomous systems in urban environments, utilizing pole-like objects as landmarks. Range images from 3D LiDAR scans are used for efficient pole extraction, enhancing reliability. The approach stands out for its improved pole extraction, efficient processing, and open-source implementation with a dataset, surpassing existing methods in various environments without requiring GPU support.
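As an illustration of how a PCA-based shape test on a clustered object might look, the sketch below flags a cluster as pole-like when one eigenvalue of its covariance matrix dominates and the corresponding eigenvector is close to vertical. The exact eigenvalue conditions of [32] are not reproduced here; the ratio test, the tilt test, and both default thresholds are assumptions made only for this example, as is the function name `is_pole_like`.

```python
import numpy as np


def is_pole_like(points, ratio_threshold=10.0, max_tilt_deg=15.0):
    """Heuristic pole test on an (n, 3) cluster of x, y, z points.

    Assumed criteria (not taken from the cited work):
      * the largest eigenvalue exceeds ratio_threshold times the second one,
      * the principal axis deviates from vertical by at most max_tilt_deg.
    """
    points = np.asarray(points, dtype=float)
    centered = points - points.mean(axis=0)
    covariance = centered.T @ centered / len(points)
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    second, largest = eigenvalues[1], eigenvalues[2]
    principal_axis = eigenvectors[:, 2]
    elongated = largest > ratio_threshold * max(second, 1e-12)
    tilt_deg = np.degrees(np.arccos(min(1.0, abs(principal_axis[2]))))
    return bool(elongated and tilt_deg <= max_tilt_deg)
```

Under these assumptions a wall is rejected because its two largest eigenvalues are comparable, and a horizontal bar is rejected because its principal axis is far from vertical.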

Camera features. Due to its low cost and light weight, the camera is an important sensor widely used by researchers and automotive companies. Ref. [13] divided cameras into four principal types: monocular camera, stereo camera, RGB-D camera, and event camera. Extracting features from the camera sensor is another approach that should be investigated. Ref. [8] is one of the best works in visual-based localization, where the authors gave an overall picture of the recent trends in this field; they divided features into local and global features. Local features aim to capture precise information from a local region of the image, which makes them more robust for generalization and more stable under image variations. Global features, in contrast, are extracted from the whole image. These features may or may not be semantic. Most researchers working with semantics try to extract contours, line segments, and objects in general. In [36], the authors extracted two types of linear features, edges and ridges, by using a Hough transform. Similarly, the authors of [37] used a monocular camera to extract lane markings and pedestrian crossing lines, which are approximated by polylines. On the other hand, Ref. [38] performed visual localization using cameras, extracting edges from environment scenes as input features. Each input image is processed as follows: the first step consists of solving the radial distortion issue, in which straight lines bend into circular arcs or curves [39]. This problem can be solved by radial distortion correction methods and a projective transformation into the bird’s eye view. Moreover, they calculated the gradient magnitude, which creates another image, called the gradient image, split into several intersecting regions. After that, a Hough transform is applied to refine the edges of the line segments. 
Finally, a set of conditions depending on the geometry and connectivity of the segments was checked to find the final representation of the edge polylines. Localization of mobile devices was investigated in [40] by using features that satisfy certain characteristics: permanent (static), informative (distinguishable from others), and widely available. Examples of such features include alignment trees, Autolib stations, water fountains, street lights, and traffic lights. Ref. [41] introduced MonoLiG, a framework for monocular 3D object detection that integrates LiDAR-guided semi-supervised active learning (SSAL). The approach optimizes model development using all data modalities, employing LiDAR to guide the training without inference overhead. The authors utilize a cross-modal framework with a LiDAR teacher and a monocular student to distill information from unlabeled data, augmented by a noise-based weighting mechanism to handle sensor differences. They propose a sensor consistency-based selection score to choose samples for labeling, which consistently outperforms active learning baselines in labeling cost savings. Extensive experiments on the KITTI and Waymo datasets confirm the framework’s effectiveness, achieving top rankings in official benchmarks and outperforming existing active learning approaches.
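Several of the works above rely on the Hough transform to recover line segments from edge pixels. The sketch below shows the basic voting scheme for straight lines on a binary edge image, using the normal form rho = x cos(theta) + y sin(theta). It is a simplified illustration with a one-degree angular resolution and integer rho bins, not the pipeline of any cited work; both function names are hypothetical.

```python
import numpy as np


def hough_lines(edges, n_theta=180):
    """Accumulate Hough votes for a binary edge image.

    Returns the accumulator (rho bins x theta bins), the theta values in
    radians, and the offset that maps rho to a row index.
    """
    edges = np.asarray(edges, dtype=bool)
    height, width = edges.shape
    diag = int(np.ceil(np.hypot(height, width)))
    thetas = np.deg2rad(np.arange(n_theta) * (180.0 / n_theta))
    accumulator = np.zeros((2 * diag + 1, n_theta), dtype=np.int64)
    ys, xs = np.nonzero(edges)
    for j, theta in enumerate(thetas):
        # Every edge pixel votes for the line through it at this angle.
        rho = np.round(xs * np.cos(theta) + ys * np.sin(theta)).astype(int)
        accumulator[:, j] = np.bincount(rho + diag, minlength=2 * diag + 1)
    return accumulator, thetas, diag


def strongest_line(edges):
    """Return (rho, theta in degrees, votes) of the most voted line."""
    accumulator, thetas, diag = hough_lines(edges)
    i, j = np.unravel_index(np.argmax(accumulator), accumulator.shape)
    return i - diag, np.rad2deg(thetas[j]), accumulator[i, j]
```

In practice the accumulator is usually thresholded to keep several peaks rather than a single maximum, and the detected lines are then clipped to segments using the supporting pixels.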

2.2. Non-Semantic Features

Non-semantic features, unlike semantic ones, do not carry any meaning in their content. They provide an abstract scan without relying on any meaningful structure like poles, buildings, etc. This gives a more general representation of the environment and reduces execution time, since there is no need to search for a specific element that may not exist everywhere.

LiDAR features. In [42], the method consists of four main steps. It starts with pre-processing, which aligns each local neighborhood to a local reference frame using Principal Component Analysis (PCA); the smallest principal component is taken as the normal (perpendicular to the surface). Second, in pattern generation, the remaining local neighborhood points are transformed from 3D into 2D within a grid map in which each cell contains the maximum reflectivity value. Third, the descriptor is calculated with the DAISY descriptor, which works by convolving the intensity pattern with a Gaussian kernel. After that, the gradient of the intensities is calculated for eight radial orientations, and smoothing is performed using Gaussian kernels of three different standard deviations. Finally, a normalization step is applied to maintain the value of the gradients within the descriptor. ’GRAIL’ [42] is able to compare the query input with twelve distinctive shapes that can be used as relevant features for localization purposes. Hungar et al. [43] used a non-semantic approach in which the execution time is reduced by keeping only the points whose local neighborhood, a sphere of radius r, contains a number of points exceeding a fixed threshold. After that, they separated the remaining patterns into curved and non-curved ones by using k-medoids clustering. The authors used DBSCAN clustering to aggregate similar groups and infer features. Lastly, the creation of the key features and map features relied on different criteria, including distinctiveness, uniqueness, spatial diversity, tracking stability, and persistence. A 6D pose estimation was performed in [44], where the authors described the vehicle’s roadside and considered it a useful feature for the estimation model. To achieve this, they proposed preprocessing the point cloud through ROI (Region of Interest) filtering in order to remove long-distance background points. 
After that, a RANSAC algorithm was used to find the equation of the plane fitting the road points. The authors then applied the Radius Outlier Removal filter to reduce the noise by removing isolated points and limiting their interference. Meanwhile, the shape of the vehicles was approximated with the help of the Euclidean clustering algorithm presented in [45]. Charroud et al. [46,47] removed the ground plane from all LiDAR scans to discard a large number of points, and they used a Fuzzy K-means clustering technique to extract relevant features from the LiDAR scans. An extension of this work [48] adds a downsampling method to speed up the calculation process of the Fuzzy K-means algorithm.
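Ground removal by plane fitting appears repeatedly in the works above. The following is a minimal RANSAC sketch for that step, assuming a point cloud in which the ground is the dominant planar structure. The function name `ransac_plane` and the default threshold and iteration count are illustrative choices, not parameters from the cited papers.

```python
import numpy as np


def ransac_plane(points, distance_threshold=0.1, iterations=200, seed=0):
    """Fit a plane n . p + d = 0 to an (n, 3) point cloud with RANSAC.

    Returns ((normal, d), inlier_mask); the plane is None if every
    random sample was degenerate.
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_plane = None
    for _ in range(iterations):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        # Points closer to the plane than the threshold support the model.
        inliers = np.abs(points @ normal + d) < distance_threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_plane = inliers, (normal, d)
    return best_plane, best_inliers
```

The non-ground points are then simply `points[~inlier_mask]`, which is the reduced cloud passed on to clustering or feature extraction.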

Descriptor-based methods are an interesting option, since they are widely used to extract meaningful knowledge directly from a point cloud. These methods make each feature point distinguishable despite the perturbation caused by noise, varying density, and changes in the appearance of the local region [49]. The authors of [49] also stated that four main criteria should be considered when describing features: descriptiveness, robustness, efficiency, and compactness. These criteria concern reliability, representativity, time cost, and storage space. These descriptors can be used in point-pair-based approaches, which exploit a relationship between two points, such as the distance or the angle between their normals (the lines perpendicular to the surface at each point), boundary-to-boundary relations, or relations between two lines [50]. Among these methods, we can cite CoSPAIR [51], PPFH [52], HoPPF [53], and PPTFH [54]. Alternatively, descriptors can be used to extract local features, for example, TOLDI [55], BRoPH [49], and 3DHoPD [56].
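To illustrate the kind of relationship a point-pair approach exploits, the sketch below computes one common four-dimensional point pair feature: the distance between two points and three angles involving their normals. This is a generic formulation given as an assumption for illustration; it is not claimed to be the exact feature used by any of the descriptors cited above, and it assumes two distinct points with non-zero normals.

```python
import numpy as np


def angle_between(u, v):
    """Angle in radians between two non-zero vectors."""
    u = u / np.linalg.norm(u)
    v = v / np.linalg.norm(v)
    return float(np.arccos(np.clip(u @ v, -1.0, 1.0)))


def point_pair_feature(p1, n1, p2, n2):
    """Return (distance, angle(n1, d), angle(n2, d), angle(n1, n2)).

    p1, p2 are distinct 3D points, n1, n2 their surface normals,
    and d = p2 - p1 is the vector joining the two points.
    """
    p1, n1, p2, n2 = (np.asarray(a, dtype=float) for a in (p1, n1, p2, n2))
    d = p2 - p1
    return (float(np.linalg.norm(d)),
            angle_between(n1, d),
            angle_between(n2, d),
            angle_between(n1, n2))
```

Because the feature depends only on relative geometry, it is unchanged when the same rigid rotation and translation are applied to both points and normals, which is what makes it useful for matching between scans.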

Camera features. The extraction of non-semantic features from the camera sensor is widely treated in the literature. However, this article focuses on methods that perform pose estimation. Because ORB is faster than other descriptors like SIFT or SURF, many researchers have adopted it to represent image features. For this purpose, Ref. [57] extracted key features by using an Oriented FAST and Rotated BRIEF (ORB) descriptor, to achieve an accurate matching between the extracted map and the original map. Another feature-matching method was proposed in [58], where the authors sought to extract holistic features from front-view images by using the ORB layer and BRIEF layer descriptor to find a good candidate node, while local features from downward-view images were detected using FAST-9, which is fast enough to cope with this operation. Gengyu et al. [59] extracted ORB features and converted them to visual words based on the DBoW3 approach [60]. Ref. [61] used ImageNet-trained Convolutional Neural Network (CNN) features (more details in the next sub-section) to extract object landmarks from images, as they are considered to be more powerful for localization tasks. Then, they applied the Scale-Invariant Feature Transform (SIFT) to further improve the features.
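Part of the speed advantage of binary descriptors such as ORB and BRIEF comes from matching with the Hamming distance, which reduces to an XOR followed by a bit count. The sketch below shows brute-force matching with a mutual-nearest-neighbor check. It assumes descriptors stored as rows of `uint8` bytes (32 bytes for a 256-bit descriptor); the function name and the distance cutoff are illustrative assumptions.

```python
import numpy as np


def hamming_match(query, train, max_distance=64):
    """Match binary descriptors by Hamming distance with cross-checking.

    query, train: (n, n_bytes) uint8 arrays of packed binary descriptors.
    Returns a list of (query_index, train_index, distance) tuples.
    """
    query = np.asarray(query, dtype=np.uint8)
    train = np.asarray(train, dtype=np.uint8)
    # XOR marks the differing bits; unpacking and summing counts them.
    xor = query[:, None, :] ^ train[None, :, :]
    distances = np.unpackbits(xor, axis=2).sum(axis=2)
    nearest = distances.argmin(axis=1)
    matches = []
    for i, j in enumerate(nearest):
        # Keep the pair only if each descriptor is the other's best match.
        mutual = distances[:, j].argmin() == i
        if mutual and distances[i, j] <= max_distance:
            matches.append((i, int(j), int(distances[i, j])))
    return matches
```

The full distance matrix is acceptable for a few thousand descriptors per image; larger maps typically use an index such as a vocabulary tree instead, which is the role played by bag-of-words approaches.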

2.3. Deep Learning Features

It is worth mentioning the advantage of working with deep learning methods, which try to imitate the working process of the human brain. Deep learning (DL) is widely encountered in various application domains, such as medicine, finance, molecular biology, etc. It can be used for different tasks, such as object detection and recognition, segmentation, classification, etc. In the following, we survey recent papers that use DL for vehicle localization and mapping.

LiDAR and/or Camera features. One of the most interesting articles in this field is [11], where the authors provided an overall picture of object detection with LiDAR sensors using deep learning. This survey divides the state-of-the-art algorithms into three parts: projection-based methods, which project the point cloud onto a 2D map with respect to a specific viewpoint; voxel-based methods, which make the data more structured and easier to use by discretizing the space into a fixed voxel grid map; and point-based methods, which work directly on the point cloud. Our literature investigation concludes that most of the methods are based on the CNN architecture with different modifications in the preprocessing stage.

- MixedCNN-based Methods: The Convolutional Neural Network (CNN) is one of the most common methods used in computer vision. These types of methods use a mathematical operation called ’convolution’ to extract relevant features [62]. VeloFCN [63] is a projection-based method and one of the earliest methods that uses a CNN for 3D vehicle detection. The authors used three convolutional layers to down-sample the input front-view map, then up-sampled it with a deconvolution layer. The output of this procedure is fed into a regression branch to create a 3D box for each pixel. Meanwhile, the same output is fed to a classification branch to check whether the corresponding pixel belongs to a vehicle or not. Finally, they grouped all candidate boxes and filtered them with a Non-Maximum Suppression (NMS) approach. In the same vein, LMNet [64] enlarged the detection zone to find road objects by taking into consideration five types of features: reflectance, range, distance, side, and height. Moreover, they replaced the classical convolutions with dilated ones. The VoxelNet [65] method begins by voxelizing the point cloud and passing it through the VFE network (explained below) to obtain robust features. After that, a 3D convolutional neural network is applied to group the voxel features into a 2D map. Finally, a probability score is calculated using an RPN (Region Proposal Network). The VFE network aims to learn point features by using a multi-layer perceptron (MLP) and a max-pooling architecture to obtain point-wise features. This architecture concatenates the features from the MLP output and the MLP + max-pooling output. This process is repeated several times to facilitate the learning. The last iteration is fed to an FCN to extract the final features. BirdNet [66] generates a three-channel bird’s eye view image, which encodes the height, intensity, and density information. 
After that, a normalization was performed to deal with the inconsistency of the laser beams of the LiDAR devices. BirdNet uses a VGG16 architecture to extract features, and they adopt a Fast-RCNN to perform object detection and orientation. BirdNet+ [67] is an extension of the last work, where they attempted to predict the height and vertical position of the centroid object in addition to the processing of the source (BirdNet) method. This field is also approached by transfer learning, like in Complex-YOLO [68], and YOLO3D [69]. Other CNN-based method include regularized graph CNN (RGCNN) [70], Pointwise-CNN [71], PointCNN [72], Geo-CNN [73], Dynamic Graph-CNN [74] and SpiderCNN [75].

- Other Methods: These techniques are based on different approaches. Ref. [76] is a machine learning-based method in which the authors voxelize the point cloud into 3D grid cells and extract features only from the non-empty cells. These features form a vector of six components: the mean and variance of the reflectance, three shape factors, and a binary occupancy. The authors proposed an algorithm that computes the classification score from the weights of a trained SVM classifier and the features; a voting procedure is then used to find the scores. Finally, non-maximum suppression (NMS) is used to remove duplicate detections. Interesting work is done in PointNet [77], which presents a new learning architecture that directly extracts local and global features from the point cloud. The 3D object detection process is independent of the form of the point cloud. PointNet shows powerful results in different situations. PointNet++ [78] extended PointNet by means of the Furthest Point Sampling (FPS) method. The authors created local regions by clustering neighboring points and then applied PointNet to each cluster region to extract local features. Ref. [79] introduces an approach that uses LiDAR range images for efficient pole extraction, combining geometric features and deep learning. This method enhances vehicle localization accuracy in urban environments, outperforming existing approaches and reducing processing time. Publicly released datasets support further research and evaluation. PointCLIP [80] aligns CLIP-encoded point clouds with 3D category texts to improve 3D recognition. By projecting point clouds onto multi-view depth maps, knowledge from the 2D domain is transferred to the 3D domain. An inter-view adapter improves feature extraction, resulting in better few-shot performance after fine-tuning.
By combining PointCLIP with supervised 3D networks, it outperforms existing models on datasets such as ModelNet10, ModelNet40, and ScanObjectNN, demonstrating the potential for efficient 3D point cloud understanding using CLIP. PointCLIP V2 [81] enhances CLIP for 3D point clouds, using realistic shape projection and GPT-3 for prompts. It outperforms PointCLIP [80] in zero-shot 3D classification accuracy, and it extends to few-shot tasks and object detection with strong generalization. Code and prompt details are provided. Ref. [82] presents a system for generating 3D point clouds from complex prompts and proposes an accelerated approach to 3D object generation using text-conditional models. While recent methods demand extensive computational resources to generate 3D samples, this approach reduces the time to 1–2 min per sample on a single GPU. By leveraging a two-step diffusion model, it generates synthetic views and then transforms them into 3D point clouds. Although the method sacrifices some sample quality, it offers a practical trade-off for scenarios that prioritize speed over sample fidelity. The authors provide their pre-trained models and code for evaluation, enhancing the accessibility of this technique in text-conditional 3D object generation. Researchers have developed 3DALL-E [83], an add-on that integrates DALL-E, GPT-3, and CLIP into CAD software, enabling users to generate image-text references relevant to their design tasks. In a study with 13 designers, the researchers found that 3DALL-E has potential applications for reference images, renderings, materials, and design considerations. The study revealed query patterns and identified cases where text-to-image AI aids design. Bibliographies were also proposed to distinguish human from AI contributions, address ownership and intellectual property issues, and improve design history.
These advances in textual referencing can reshape creative workflows and offer users faster ways to explore design ideas through language modeling. The results of the study show that there is great enthusiasm for text-to-image tools in 3D workflows and provide guidelines for the seamless integration of AI-assisted design and existing generative design approaches. The paper [84] introduces SDS Complete, an approach for completing incomplete point-cloud data using text-guided image generation. Developed by Yoni Kasten, Ohad Rahamim, and Gal Chechik, this method leverages text semantics to reconstruct surfaces of objects from incomplete point clouds. SDS Complete outperforms existing approaches on objects not well-represented in training datasets, demonstrating its efficacy in handling incomplete real-world data. Paper [85] presents CLIP2Scene, a framework that transfers knowledge from pre-trained 2D image-text models to a 3D point cloud network. Using a semantics-based multimodal contrastive learning framework, the authors achieve annotation-free 3D semantic segmentation with significant mIoU scores on multiple datasets, even with limited labeled data. The work highlights the benefits of CLIP knowledge for understanding 3D scenes and introduces solutions to the challenges of unsupervised distillation of cross-modal knowledge.
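The voxel-based feature extraction described above for [76] can be illustrated with a minimal sketch. It assumes points given as `(x, y, z, reflectance)` tuples and a hypothetical function name `voxel_features`; it computes only the reflectance mean, the reflectance variance, and the binary occupancy of each non-empty cell, and omits the three shape factors, so it is an illustration of the idea rather than the method of [76].

```python
from collections import defaultdict
from statistics import fmean, pvariance


def voxel_features(points, voxel_size=0.5):
    """Group (x, y, z, reflectance) points into voxels and summarize each non-empty voxel.

    Returns {voxel_index: (mean_reflectance, reflectance_variance, occupancy)}.
    Empty voxels are never created, so they do not appear in the result.
    """
    cells = defaultdict(list)
    for x, y, z, reflectance in points:
        # Integer grid index of the voxel that contains the point.
        index = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        cells[index].append(reflectance)
    return {
        index: (fmean(values), pvariance(values), 1)
        for index, values in cells.items()
    }


# Three points: two fall into the same voxel, one into a different voxel.
cloud = [(0.1, 0.1, 0.1, 0.2), (0.2, 0.3, 0.4, 0.4), (1.2, 0.1, 0.1, 0.9)]
features = voxel_features(cloud, voxel_size=0.5)
```

Storing only the non-empty voxels in a dictionary keeps memory proportional to the number of occupied cells, which matters because LiDAR point clouds are sparse.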

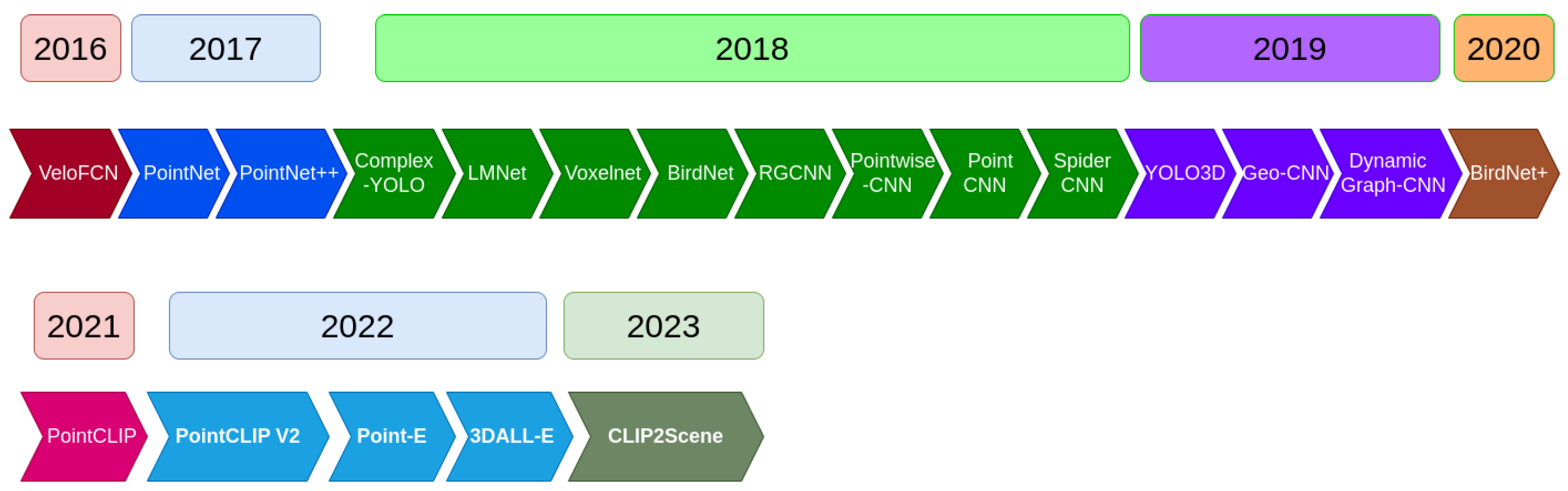

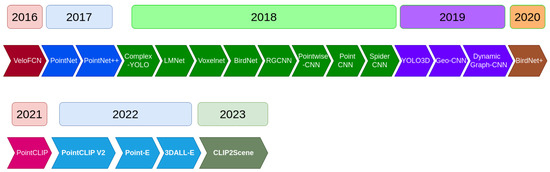

Figure 3 represents a timeline of the most popular 3D object detection algorithms.

Figure 3. Timeline of 3D object detection algorithms [11].

2.4. Discussion

Table 2 provides a categorization of the papers surveyed in this section. The papers are grouped according to the extracted features. We noticed that, in the semantic case, the papers extract three kinds of object features from the environment: vertical, horizontal, or road curve features. Papers have also used non-semantic and deep learning methods to represent all kinds of objects that exist in the environment, or only a part of them. Moreover, the table lists some methods and concepts used to extract the features. We have analyzed the robustness of the extracted features for localization and mapping tasks using three criteria deduced from our state-of-the-art investigation.

- Time and energy cost: being easy to detect and easy to use in terms of compilation and execution.

- Representativeness: detecting features that frequently exist in the environment to ensure the matching process.

- Accessibility: being easy to distinguish from the environment.

We use the column ’Robustness’ as a score given to each cluster of papers. The score is based on the three criteria above and on the analysis of the experiments in the papers. According to the same table, non-semantic features have the highest robustness score, owing to their ability to represent the environment even with little texture, i.e., when there are few objects in the environment, as in the desert. This ability is due to the way the features are extracted: those methods do not limit themselves to extracting one type of object. However, the map created from those features carries no semantic meaning; the features are only helpful reference points for the localization process.

On the other hand, semantic features get a passable score for localization tasks, since they consume more time and energy to extract because most of the time they are not isolated in the environment. In addition, they cannot be found in every environment, which severely affects the localization process. Despite these drawbacks, these techniques effectively reduce the huge amount of point data (LiDAR or camera) compared with the non-semantic ones. Also, those features can be used for other perception tasks.

Deep learning methods also get a passable score regarding their efficiency to represent the environment. Like the non-semantic techniques, the DL approaches ensure the representativeness of the features in all environments. However, the methods consume a lot of time and computational resources to be executed.

2.5. Challenges and Future Directions

In order to localize itself within the environment, the vehicle needs to exploit the information received from the sensors. However, the huge amount of data received cannot be used directly for real-time localization on the vehicle, since the vehicle needs to interact instantly with the environment, e.g., accelerating, braking, and steering. That is why the on-board systems need effective feature extraction methods that distinguish the relevant features for a better execution of the localization process.

After surveying and analyzing related papers, the following considerations for effective localization were identified:

- Features should be robust against external effects such as weather changes and moving objects, e.g., trees that move in the wind.

- Features should be reusable in other tasks.

- The detection system should be capable of extracting features even when there are few objects in the environment.

- The feature extraction algorithms should not penalize the system by requiring a long execution time.

- One issue that should be taken into consideration is the distinction between safe and dangerous features. Each feature must provide a degree of safety (expressed as a percentage), which helps to determine the nature of the feature and where it belongs, e.g., a feature belonging to the road is safer than one on the walls.

Table 2. Categorization of the state-of-the-art methods that extract relevant features for localization and mapping purposes.


| Paper | Features Type | Concept | Methods | Features Extracted | Time and Energy Cost | Representativeness | Accessibility | Robustness |
|---|---|---|---|---|---|---|---|---|
| [21,22], [33,36], [37,38] | Semantic | General | Radon transform; Douglas-Peucker algorithm; Binarization; Hough transform; Iterative End-Point Fit (IEPF); RANSAC | Road lanes; lines; ridges; edges; pedestrian crossing lines | Consume a lot | High | Hard | Passable |
| [24,25], [33] | Semantic | General | The height of curbs between 10 cm and 15 cm; RANSAC | Curves | Consume a lot | High | Hard | Passable |
| [26,27], [28,29], [30,31], [32,33], [40] | Semantic | Probabilistic; General | Probabilistic calculation; Voxelisation | Building facades; Poles | Consume a lot | Middle | Hard | Low |
| [42,43], [44,46], [47,48], [51,52], [53,54], [49,55], [56,57], [58,59], [60] | Non-Semantic | General | PCA; DAISY; Gaussian kernel; K-medoids; K-means; DBSCAN; RANSAC; Radius Outlier Removal filter; ORB; BRIEF; FAST-9 | All the environment | Consume less | High | Easy | High |
| [61,62], [63,64], [65,66], [67,68], [69,70], [71,72], [73,74], [75,76], [77,78] | Deep-learning | Probabilistic; Optimization; General | CNN; SVM; Non-Maximum Suppression; Region Proposal Network; Multi-Layer Perceptron; Max-pooling; Fast-RCNN; Transfer learning | All the environment | Consume a lot | High | Easy | High |

3. Mapping

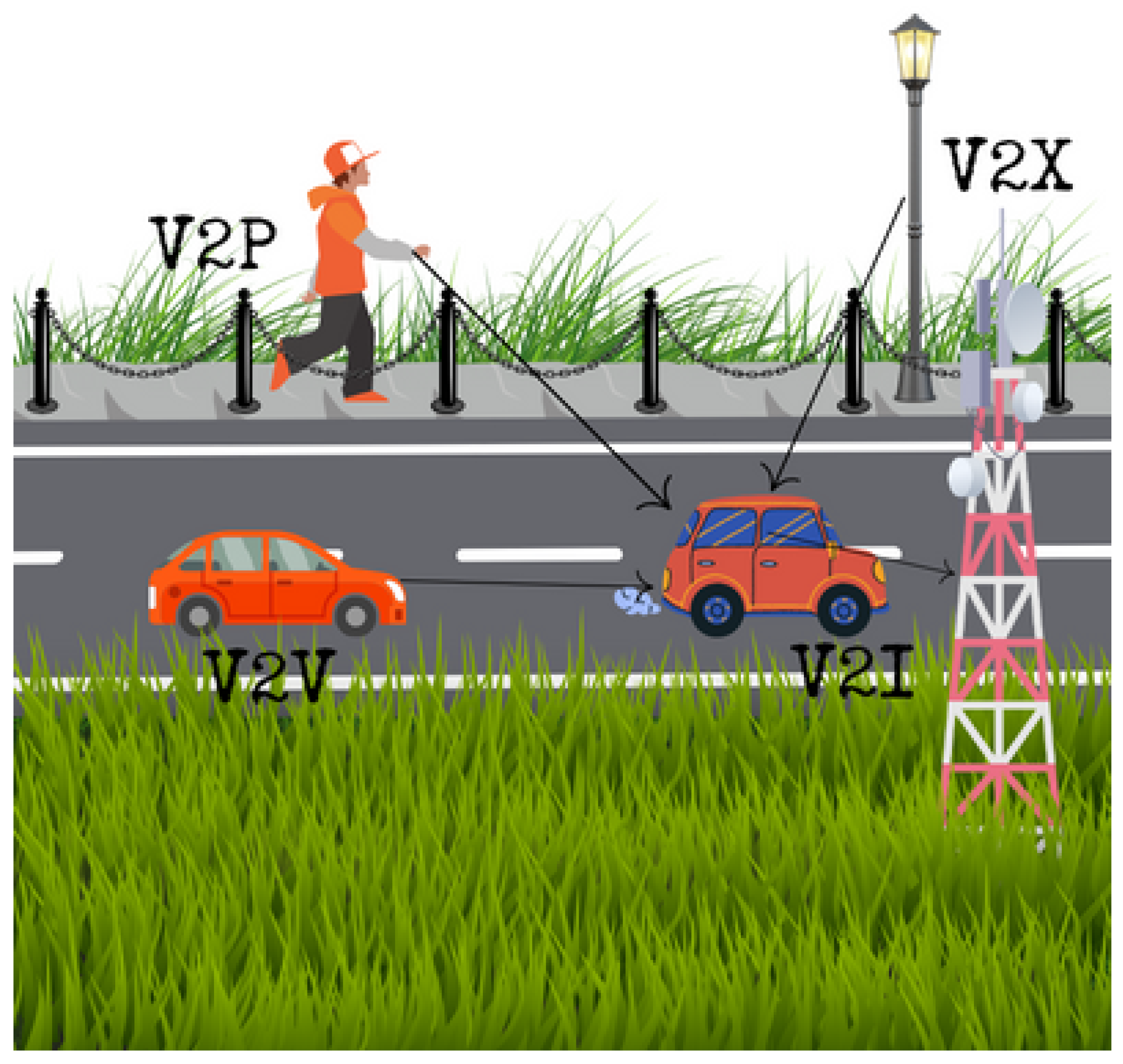

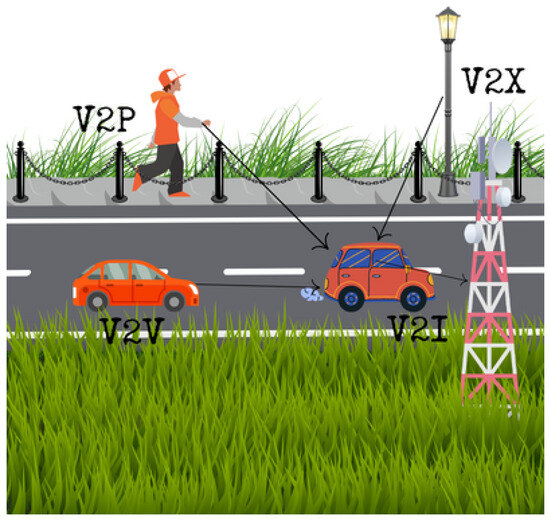

Mapping is the task of finding a relevant representation of the vehicle’s surroundings. This representation can be generated according to different criteria. For example, in autonomous driving systems, maps play an indispensable role in providing highly precise information about the surroundings of the vehicle, which supports vehicle localization, vehicle control, motion planning, perception, and system management. Mapping also helps in better understanding the environment. Maps draw on information from different sensors, which are divided into three groups. The first group employs Global Navigation Satellite System (GNSS) sensors with an HD map that relies on the layers of a Geographic Information System (GIS) [18] (a GIS is a framework for analyzing spatial and geographic data). The second group is based on range and vision sensors such as LiDAR, cameras, and RADAR, which help to create a point cloud map. The third group uses cooperative approaches: each vehicle generates its local map, and the local maps are then assembled through Vehicle-to-Vehicle (V2V) communication into a global map, which is lower in cost, more flexible, and more robust. Data received from these sensors are classified into two types. Static objects are stable in time, i.e., immovable, such as buildings, traffic lights, bridges, curbs, pavements, traffic signs, lanes, and pole landmarks. This information is essential to change lanes, avoid obstacles, and respect road traffic rules in general. Dynamic objects change coordinates over time, like vehicles, pedestrians, and cyclists. This information is helpful in the context of V2V or V2I communications; otherwise, mapping these objects is not recommended. Wong et al. [86] added another class named temporary objects, which are features that exist only for a period of time, like parked vehicles, temporary road works, and traffic cones.
Maps are prone to inaccuracy due to sensor measurement errors, which can lead to failures in positioning objects on the map and make driving impossible. An accuracy of 10 cm is recommended by Seif and Hu [87]. Moreover, the massive amount of data received from the different sensors needs large storage space and powerful computational processing units. Fortunately, this can be covered by the new generation of computing hardware, like NVIDIA cards. Ref. [88] discussed the criteria required for a robust map with respect to three aspects: storage efficiency, usability, and centimeter-level accuracy.

Our literature review found that maps for autonomous driving systems can be divided into two categories: offline maps and online maps. We will discuss each type of maps in more detail below.

3.1. Offline Maps

Offline maps (also called a priori or pre-built maps) are generated in advance to help autonomous driving systems with navigation and localization tasks. According to [89], the turnover resulting from their usage increased to USD 1.4 billion in 2021 and is expected to reach USD 16.9 billion by 2030. Investment in this field keeps growing, and the competition between map manufacturers is intense. These companies use different technologies and strategies of partnership and collaboration. In the last few years, major auto manufacturers have accelerated their efforts to produce new generations of advanced driver assistance systems (ADAS). Consequently, developing such high-quality maps is a must for these vehicles.

According to [90], three types of maps can be identified: digital maps, enhanced digital maps, and high-definition (HD) maps. Digital maps (cartography) are topometric maps that encode street elements and depict major road structures, such as Google Maps and OpenStreetMap. These maps also make it possible to find the distance from one place to another. However, they are unsuitable for autonomous vehicles due to the limited information provided, the low update frequency, and the lack of connectivity, which means they cannot be accessed from external devices. The enhanced digital map is somewhat more developed than the first type: from what we identified, these maps are characterized by additional information, including pole landmarks, road curvature, lane-level data, and metal barriers. The most important type is the HD map, a concept developed by the Mercedes-Benz research planning workshop [91]. This map consists of a 3D representation of the environment broken down into five layers, i.e., the base map layer, geometric map layer, semantic map layer, map priors layer, and real-time knowledge layer, as clearly explained in [90,92].

To build an HD map, four main principles mentioned in [90,92] should be taken into consideration. First, mapping is a precomputation that facilitates the real-time work of the autonomous driving system by solving some problems, partially or completely, in the offline stage and in a highly accurate manner. The second principle is that mapping improves safety: providing accurate information about the surrounding objects is important to ensure safety, especially for level four and five autonomous vehicles, which need more maintenance and surveillance. In this sense, a map is another tool used at the moment of driving. The third principle is that a map should be robust against the dynamic objects that cause run-time trouble. Finally, a map is a globally shared state maintained as a team effort, where each AV system provides its information. This idea can solve the problems related to large memory usage and reduce energy consumption. Maps should be underpinned by hardware and software components. As mentioned above, hardware components are the data source of the map, while the software components are intended to analyze and manipulate the data, and even power the hardware [93].

Maps are prone to errors from various sources, including changes in reality, localization errors, inaccurate maps, and map update errors. We can define an accuracy metric to measure how accurately the map represents the real world. According to [94], two accuracy metrics are defined:

- Global accuracy: (Absolute accuracy) position of features according to the face of the earth.

- Local accuracy: (Relative accuracy) position of features according to the surrounding elements on the road.

In Table 3, we detail some companies that are developing digital map solutions for autonomous systems.

However, these maps are usually not publicly available and need to be regenerated frequently, which gives rise to the need for in-house solutions that can be created with vehicle sensors and with the help of academic solutions. All the feature extraction algorithms we have already discussed are therefore candidates for solving localization and mapping tasks. Features that are widely used for creating a map are lanes [21,22,36], buildings [22,28,33], curbs [24,25,33], poles [26,27,28,29,30,32,33], or a combination of these. These maps are referred to as feature maps. There are many other types of maps in the literature, like the probabilistic occupancy map, which is a lightweight, low-cost map that gives the probability that a cell is occupied, as in the work in [31]. The voxel grid map is another type of map, based on a 3D voxelization of the environment; each voxel is the equivalent of a pixel in a 2D environment. These maps are used most of the time to discretize 3D point clouds, which reduces the workload to voxels and offers more opportunities to employ relevant algorithms [76]. Schreier [95] adds more map representations at various levels of abstraction, including parametric free space maps, interval maps, elevation maps, the stixel world, multi-level surface maps, and raw sensor data. Note that it is possible to combine the information from several maps to gain a better understanding of the environment scene.
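To make the idea of a probabilistic occupancy map concrete, the following minimal sketch updates the occupancy probability of grid cells from repeated observations. It uses the common log-odds formulation, which is an assumption of this example and not necessarily the method of [31]; the sensor probabilities `p_hit` and `p_miss` and all function names are hypothetical.

```python
import math


def logit(p):
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


def update_cell(log_odds, hit, p_hit=0.7, p_miss=0.4):
    """Fuse one observation of a cell into its log-odds occupancy value.

    hit=True means the sensor reported the cell as occupied.
    p_hit and p_miss are assumed inverse sensor model probabilities.
    """
    return log_odds + logit(p_hit if hit else p_miss)


def probability(log_odds):
    """Convert log-odds back into an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


# A 1D grid of five cells, all starting at probability 0.5 (log-odds 0).
grid = [0.0] * 5
for cell, hit in [(2, True), (2, True), (1, False)]:
    grid[cell] = update_cell(grid[cell], hit)
occupancy = [probability(value) for value in grid]
```

Working in log-odds turns each update into a single addition per cell, which is one reason this representation is considered lightweight.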

3.2. Online Maps

The localization and mapping problems were first treated separately, and good results were reported. In recent years, simultaneous localization and mapping (SLAM) has emerged as a significant advancement that addresses cases where no map exists, as in indoor environments, or where the map of the environment is not accessible. This problem is more difficult because the map and the position of the vehicle must be estimated at the same time; for this reason, we call the result an online map. In reality, there are many unknown environments, especially for in-house robots, so the robot creates its own map [96]. Let us look at the problem from a mathematical perspective, mainly from a probability and optimization viewpoint. We denote the motion control by $u_k$ (vehicle motion information from an IMU, wheel odometry, etc.), the measurement by $z_k$ (a description of the environment from LiDAR, camera, etc.), and the state by $x_k$ (the vehicle position). We can then distinguish two main forms of the SLAM problem, both equally important.

The first one is the online SLAM, which estimates only the pose $x_k$ at time $k$ and the map $m$. Mathematically, we search:

$$p(x_k, m \mid z_{1:k}, u_{1:k})$$

The second type is the full SLAM, which approximates the entire trajectory $x_{1:k}$ and the map $m$, expressed by:

$$p(x_{1:k}, m \mid z_{1:k}, u_{1:k})$$

The online SLAM is just an integration of all past poses from the full SLAM:

$$p(x_k, m \mid z_{1:k}, u_{1:k}) = \int \cdots \int p(x_{1:k}, m \mid z_{1:k}, u_{1:k}) \, dx_1 \, dx_2 \cdots dx_{k-1}$$

The idea behind the online SLAM is to use the Markov assumption (i.e., the current state depends only on the previous state and on the most recent control and measurement). With this assumption, SLAM methods are also based on the Bayes theorem, so that we can write [96,97]:

$$p(a \mid b) = \frac{p(b \mid a) \, p(a)}{p(b)}$$

We then apply this theorem to the belief and identify each term (namely, the posterior, the likelihood, the prior, and the marginal likelihood) with its precise definition. This process is referred to as the Measurement_Update:

$$p(x_k, m \mid z_{1:k}, u_{1:k}) = \frac{p(z_k \mid x_k, m) \, p(x_k, m \mid z_{1:k-1}, u_{1:k})}{p(z_k \mid z_{1:k-1}, u_{1:k})}$$

where:

- $p(z_k \mid x_k, m)$ gives the probability of making an observation $z_k$ when the vehicle position $x_k$ and the set of landmarks $m$ are known. It is called the observation_model in the literature [97].

- $p(x_k, m \mid z_{1:k-1}, u_{1:k}) = \int p(x_k \mid x_{k-1}, u_k) \, p(x_{k-1}, m \mid z_{1:k-1}, u_{1:k-1}) \, dx_{k-1}$. This calculation of the prior is called the Prediction_Update, which represents the best prediction of the state $x_k$ before the new measurement is incorporated. The term $p(x_k \mid x_{k-1}, u_k)$ is called the motion_model [14,16,97].

- $p(z_k \mid z_{1:k-1}, u_{1:k})$ is a normalization term which depends only on the measurement and can be calculated as given below [97]:

$$p(z_k \mid z_{1:k-1}, u_{1:k}) = \iint p(z_k \mid x_k, m) \, p(x_k, m \mid z_{1:k-1}, u_{1:k}) \, dx_k \, dm$$

Finally, substituting the prior into the Measurement_Update, and noting that the marginal likelihood depends only on the measurements so that it can be written as a constant $\eta = 1 / p(z_k \mid z_{1:k-1}, u_{1:k})$, we deduce this formulation [14,16]:

$$p(x_k, m \mid z_{1:k}, u_{1:k}) = \eta \, p(z_k \mid x_k, m) \int p(x_k \mid x_{k-1}, u_k) \, p(x_{k-1}, m \mid z_{1:k-1}, u_{1:k-1}) \, dx_{k-1}$$
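The alternation of Prediction_Update and Measurement_Update can be sketched with a discrete (histogram) Bayes filter. To keep the example short, it assumes that the map is already known and fixed, so only the vehicle position on a circular corridor of five cells is estimated; this is therefore a localization toy example and not a full SLAM solver. The corridor, the sensor accuracy of 0.8, the motion probabilities, and all function names are assumptions made for illustration.

```python
def predict(belief, motion_model):
    """Prediction_Update: sum over previous cells of motion probability times belief."""
    n = len(belief)
    prior = [0.0] * n
    for prev, weight in enumerate(belief):
        for shift, prob in motion_model.items():
            prior[(prev + shift) % n] += prob * weight
    return prior


def measurement_update(prior, likelihood):
    """Measurement_Update: multiply the prior by the likelihood, then normalize."""
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    marginal = sum(unnormalized)  # plays the role of the marginal likelihood
    return [value / marginal for value in unnormalized]


# Known map: cells 0 and 3 of the circular corridor contain a landmark.
landmarks = [1, 0, 0, 1, 0]


def observation_likelihood(z):
    """Observation model: the landmark detector is assumed correct 80% of the time."""
    return [0.8 if cell == z else 0.2 for cell in landmarks]


# Motion model for the command "move one cell forward" (with under/overshoot).
motion_model = {1: 0.8, 0: 0.1, 2: 0.1}

belief = [0.2] * 5  # uniform initial belief
belief = measurement_update(belief, observation_likelihood(1))
for z in [0, 0, 1]:  # measurements received after each forward move
    belief = predict(belief, motion_model)
    belief = measurement_update(belief, observation_likelihood(z))
most_likely_cell = belief.index(max(belief))
```

In this run the vehicle sees a landmark, moves three times, and sees a landmark again only after the third move, so the belief concentrates on cell 3.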

Table 3. Commercial companies developing digital mapping solutions for self-driving cars.


| Map | Country of Origin | Description/Key Features |
|---|---|---|
| HERE | Netherlands | HERE is one of the companies that provide HD map solutions. It promises its clients safety and driver trust by providing relevant information that gives vehicles more options and lets them decide comfortably. HERE uses machine learning to validate map data against the real world in real time; this technology achieves an accuracy of around 10–20 cm in identifying vehicles and their surroundings. The map contains three main layers: Road Model, HD Lane Model, and HD Localisation Model [98,99]. |
| TomTom | Netherlands | Supports many ADAS applications such as hands-free driving, advanced lane guidance, lane split, and curve speed warning. It also provides highly precise vehicle positioning, with an accuracy of 1 m or better compared to reality and 15 cm in relative accuracy. TomTom also takes advantage of RoadDNA, which converts the 3D point cloud into a 2D raster image, making it much easier to implement in vehicles. The TomTom map consists of three layers: Navigation data, Planning data, and RoadDNA [100,101]. |
| Sanborn | USA | Exploits the data received from different sensors (cameras, LiDAR) to generate a highly precise 3D base map. This map attains an absolute accuracy of 7–10 cm [102]. |
| Ushr | USA/Japan | Ushr uses only stereo camera imaging techniques to reduce the cost of acquiring data, and uses advanced machine vision and machine learning to achieve 90% automation of data processing. The map covers over 200,000 miles at an absolute accuracy better than 10 cm [103]. |
| NVIDIA | USA | The NVIDIA map detects semantic road features and provides information about the vehicle position with robustness and centimeter-level accuracy. NVIDIA also offers the possibility to build and update a map using the sensors available on the car [104]. |
| Waymo | USA | Waymo affirms that a map for AVs contains much more information than a traditional one and deserves continuous maintenance and updating, due to its complexity and the huge amount of data received. Waymo extracts relevant road features from the LiDAR sensor, which helps to accurately find the vehicle position by matching the real-time features with the pre-built map features. This map attains an accuracy of 10 cm without using GPS [99]. |
| Zenrin | Japan | Zenrin is one of the leading companies in the creation of map solutions in Japan. It was founded in 1948 and has subsidiaries worldwide in Europe, America, and Asia. The company adopts the Original Equipment Manufacturer (OEM) model for the automobile industry. Zenrin offers 3D digital maps containing road networks, POI addresses, image content, pedestrian networks, etc. [105]. |
| NavInfo | China | Explores the fusion of three modules, HD-GNSS, DR engine, and Map fusion, to provide highly accurate positioning results. It also allows parking the vehicle with a one-click parking system. NavInfo is based on a self-developed HD map engine and provides a scenario library based on the HD map, a simulation test platform, and other techniques [106]. |
| Lvl5 | USA | Lvl5 believes that vehicles do not need a LiDAR sensor, unlike Waymo. The company focuses on camera sensors and computer vision algorithms to analyze the captured videos and convert them into a 3D map. The HD maps created are updated multiple times a day, which is a big advantage compared with other companies. Lvl5 achieves an absolute accuracy in the range of 5–50 cm [86,107]. |
| Atlatec | Germany | To obtain a highly accurate map, Atlatec uses camera sensors to collect data. After that, loop closure is applied to get a consistent result. The company uses a combination of artificial intelligence (AI) and manual work to get a detailed map of the road objects. This map reaches 5 cm in relative accuracy [91,108]. |

The SLAM problem, as we have noticed above, can be solved by following two steps: the Measurement_Update and the Prediction_Update. Any solution to this problem should therefore provide a good representation of the observation_model and the motion_model, which gives the best estimation in the two aforementioned steps. The above explanation concerned the probabilistic representation of the SLAM problem [16]. Now, let us change the focus to optimization and formalize the problem. Optimization is involved in resolving SLAM problems by minimizing a cost function which depends on the poses $x$ and the map $m$, subject to constraints. In the case of Graph SLAM, the cost function can be [14,109]:

$$F(x, m) = \sum_{i,j} e_{ij}(x, m)^{\top} \, \Omega_{ij} \, e_{ij}(x, m)$$

where $x$ is the set of vehicle poses, $m$ is the set of landmarks that constitute the map, $e_{ij}$ is the error function which calculates the distance between the predicted and the real observation at the nodes $i$, $j$, and $\Omega_{ij}$ is the information matrix.
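A minimal sketch of this kind of cost minimization is given below for a one-dimensional pose graph without landmarks. It assumes scalar poses, scalar information values, and a linear error of the form (x_j - x_i) - z_ij, so the minimum is obtained by solving the normal equations once; real Graph SLAM back ends iterate this step on nonlinear errors. The function names and the example measurements are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def optimize_pose_graph(num_poses, edges):
    """Minimize the sum of omega * ((x_j - x_i) - z)^2 over 1D poses.

    edges holds tuples (i, j, z, omega). Pose 0 is fixed at 0 to anchor the graph.
    """
    n = num_poses - 1  # unknowns are x_1 .. x_{num_poses - 1}
    h = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for i, j, z, omega in edges:
        # Jacobian of the error: -1 with respect to x_i, +1 with respect to x_j.
        jacobian = ((i, -1.0), (j, 1.0))
        for row, j_row in jacobian:
            if row == 0:
                continue  # the anchored pose is not an unknown
            b[row - 1] += j_row * omega * z
            for col, j_col in jacobian:
                if col == 0:
                    continue
                h[row - 1][col - 1] += j_row * omega * j_col
    return [0.0] + solve_linear(h, b)


# Two odometry edges of 1.0 each and one direct measurement of 2.2 from pose 0 to pose 2.
edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (0, 2, 2.2, 1.0)]
poses = optimize_pose_graph(3, edges)
```

The optimizer spreads the 0.2 disagreement between the odometry chain and the direct measurement over all three edges instead of trusting any single one.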

3.3. Challenges

- Data storage is a big challenge for AVs. We need to find the minimum information required to run the localization algorithm.

- Maps must be updated frequently, so an update system is needed to apply and maintain the changes. The vehicle connectivity system should also support this task and propagate the changes to other vehicles.

- Preserving the information on vehicle localization and ensuring privacy is also a challenge.

4. Localization

Localization is a crucial task in the development of any autonomous system. This task is indispensable: it tells the vehicle where it is at each moment in time. Without this information, the vehicle cannot avoid collisions properly and cannot drive in the correct lane. We have seen in Section 2 how to extract relevant features from sensor measurements, and we have investigated different approaches to find the best representation of the environment (Section 3), which means that we have discussed the steps needed before localizing the vehicle. In the remainder of this section, we describe recent localization approaches.

4.1. Dead Reckoning

Dead Reckoning (DR) is one of the oldest methods for determining the position of an object based on three known pieces of information: the courses that have been traveled, the distance covered (which can be calculated from the speed of the object along the trajectory and the time spent), and a reference point, i.e., the departure point and the estimated drift, if any. This method does not use any celestial reference, and it is helpful in marine navigation, air navigation, and terrestrial navigation [110,111]. The coordinates can be found mathematically by:

$$x_n = x_0 + \sum_{i=1}^{n} d_i \cos\theta_i, \qquad y_n = y_0 + \sum_{i=1}^{n} d_i \sin\theta_i$$

where $(x_0, y_0)$ is the initial position and $d_i$, $\theta_i$ are, respectively, the shortest distance and the angle between the current and the last position.
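The accumulation of displacements described above can be sketched as follows. The sketch assumes planar motion, headings in radians measured from the x axis, and a hypothetical function name `dead_reckoning`; it ignores drift, which in practice grows with every step because errors are never corrected by an external reference.

```python
import math


def dead_reckoning(start, steps):
    """Accumulate (distance, heading) steps from a known start position.

    start is (x0, y0); each step is (distance, heading in radians).
    Returns the list of positions, including the start.
    """
    x, y = start
    track = [(x, y)]
    for distance, heading in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
        track.append((x, y))
    return track


# Drive 10 m along the x axis, then 5 m along the y axis.
track = dead_reckoning((0.0, 0.0), [(10.0, 0.0), (5.0, math.pi / 2)])
```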

4.2. Triangulation

Triangulation strives to estimate vehicles’ position based on geometrical properties. The principal idea is that if we have two known points, we can deduce the coordinate of the third point. For an accurate estimation of vehicles’ positions, we should use at least three satellites. To solve this estimation, the procedure requires two steps as detailed below [110,112].

- Distance estimation:

This is approximately the distance between the transmitter (satellite) and the receiver (vehicle). In the literature, many methods have been implemented to estimate this distance. One of them is based on the Time of Arrival (ToA), which is the time a signal takes to arrive at the receiver. Given the signal propagation speed, the distance can be estimated as speed × time. One of the problems here is that the clocks of the sender and the receiver should be synchronized, which is difficult. An extension of this method is the Time Difference of Arrival (TDoA), which calculates the time difference between two signals with different propagation speeds, from which we can deduce the position. Other methods include the Received Signal Strength Indicator (RSSI), hop-based estimation, signal attenuation, and interferometry [110,112].

- Position estimation:

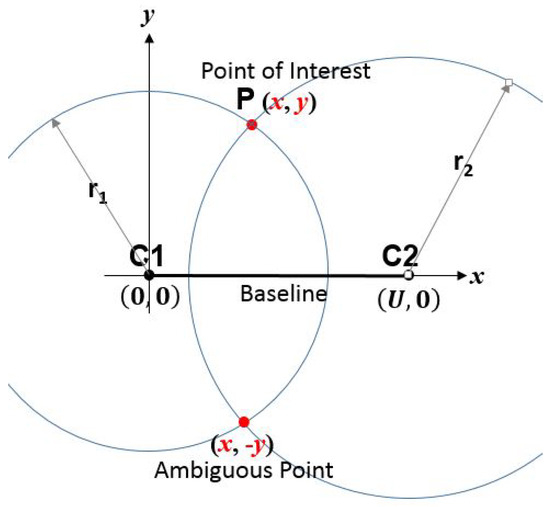

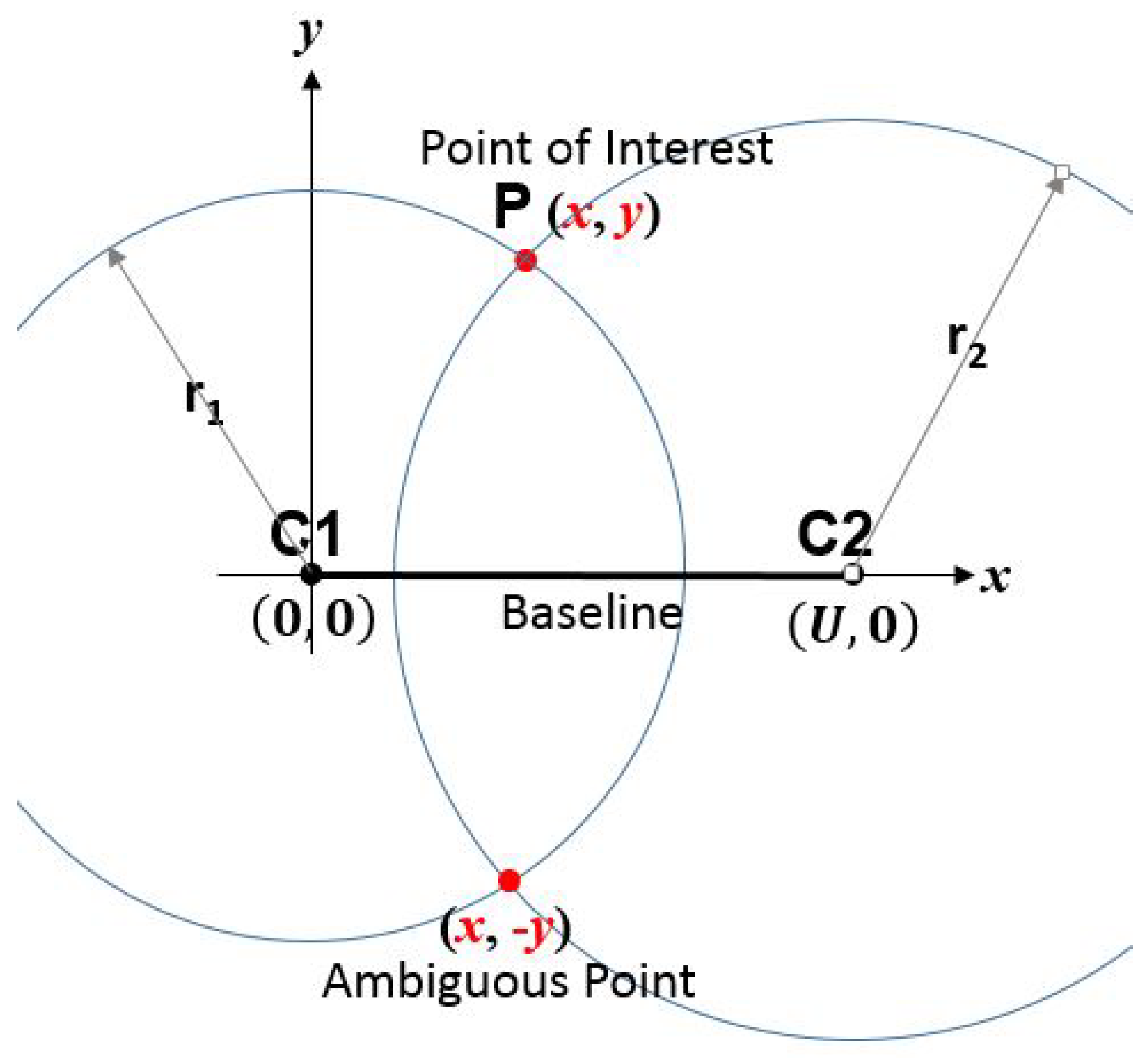

The position of the vehicles can be found based on the geometric properties and based on the distance estimated above. The calculation complexity depends on the dimensionality sought (2D or 3D) and the type of coordinates (Cartesian or spherical). Let us take a small example in 2D Cartesian coordinate system; see Figure 4 [113].

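Based on Pythagoras’s theorem, with the satellites located at $C_1 = (x_1, y_1)$ and $C_2 = (x_2, y_2)$:

$$(x - x_1)^2 + (y - y_1)^2 = r_1^2, \qquad (x - x_2)^2 + (y - y_2)^2 = r_2^2$$

We conclude that the remaining coordinates $(x, y)$ can be found based on the distances $r_1$ and $r_2$ to the satellites.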

Figure 4. Cartesian 2D example scenario. $C_1$ and $C_2$ are the centers of the circles (satellites). $P$ is the point of interest (vehicle) with coordinates $(x, y)$, and $r_1$, $r_2$ are the distances from the centers $C_1$ and $C_2$, respectively, to the desired point [113].
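The two steps of the procedure, distance estimation and position estimation, can be sketched for the 2D case as follows. The sketch assumes perfectly synchronized clocks for the ToA distance and noise-free ranges, and the function names are hypothetical. With only two transmitters the circles intersect in two candidate points, which is why a third range is needed to select the actual position.

```python
import math


def toa_distance(travel_time, speed=299_792_458.0):
    """Distance from Time of Arrival: speed * time (default speed: light in vacuum, m/s)."""
    return speed * travel_time


def circle_intersections(c1, r1, c2, r2):
    """Return the intersection points of two circles, or [] if they do not intersect."""
    (x1, y1), (x2, y2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    if d == 0 or d > r1 + r2 or d < abs(r1 - r2):
        return []
    # Distance from c1 to the foot of the chord, along the line c1 -> c2.
    a = (r1 ** 2 - r2 ** 2 + d ** 2) / (2 * d)
    # Half-length of the chord (Pythagoras in the triangle c1, foot, P).
    h = math.sqrt(max(r1 ** 2 - a ** 2, 0.0))
    foot_x = x1 + a * (x2 - x1) / d
    foot_y = y1 + a * (y2 - y1) / d
    offset_x = h * (y2 - y1) / d
    offset_y = h * (x2 - x1) / d
    return [(foot_x + offset_x, foot_y - offset_y),
            (foot_x - offset_x, foot_y + offset_y)]


# Transmitters at (0, 0) and (6, 0), both at range 5 from the receiver.
candidates = circle_intersections((0.0, 0.0), 5.0, (6.0, 0.0), 5.0)
```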


4.3. Motion Sensors

A motion sensor relies on three principal components, namely the accelerometer, the gyroscope, and the magnetometer, to detect object motion. Motion can be a translation along the x, y, or z axis, calculated by integrating the acceleration twice and computing the difference between the current and last positions. Motion can also be a rotation, which is calculated from the gyroscope that provides the orientation as roll, pitch, and yaw [110]. Much research has used motion sensors such as the IMU and wheel odometry to localize vehicles, and machine learning algorithms have shown good performance in this field. Various architectures have been proposed. An Input Delay Neural Network (IDNN) was used by Noureldin et al. [114] to learn error patterns during GPS outages. A comparative study was performed in [115], which sought the best estimate of the INS during GPS outages by testing different methods, including the Radial Basis Function Neural Network (RBFNN), propagation neural network, Higher-Order Neural Networks (HONN), Full Counter Propagation Neural network (Full CPN), back-propagation neural network, Adaptive Resonance Theory-Counter Propagation Neural network (ART-CPN), and the IDNN. Recent work by Dai et al. [116] used Recurrent Neural Networks (RNN) to learn the INS drift error, an approach that is more robust and performs well because RNNs are designed for time-series problems. Onyekpe et al. [117] noted that a slight change in tire diameter or pressure can lead to a displacement error in the odometry. LSTM-based methods have also been used to learn the uncertainties in the wheel speed measurements that appear during vehicle displacement, and they proved more effective than the INS solutions. The same authors extended their work to obtain computational efficiency and robustness against different GPS outages. The Wheel Odometry Neural Network (WhONet) framework justifies the use of the RNN by examining various networks, including IDNN, GRU, RNN, and LSTM [118].
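As a minimal sketch of the translation and rotation computations described at the start of this subsection, the following Python snippet propagates a planar pose from a forward acceleration and a gyroscope yaw rate. The function name `dead_reckon`, the constant sampling period `dt`, and the simple Euler integration are assumptions made for illustration and are not taken from the cited works.

```python
import numpy as np

def dead_reckon(accel, yaw_rate, dt, v0=0.0):
    """Propagate a planar pose (x, y, yaw) by Euler integration.

    accel    : forward accelerations in m/s^2 (body frame), shape (N,)
    yaw_rate : gyroscope yaw rates in rad/s, shape (N,)
    dt       : sampling period in seconds (assumed constant)
    """
    x, y, yaw, v = 0.0, 0.0, 0.0, v0
    poses = [(x, y, yaw)]
    for a, w in zip(accel, yaw_rate):
        v += a * dt                  # first integration: acceleration -> speed
        yaw += w * dt                # gyroscope rate -> heading
        x += v * np.cos(yaw) * dt    # second integration: speed -> position
        y += v * np.sin(yaw) * dt
        poses.append((x, y, yaw))
    return np.array(poses)

# Constant 1 m/s^2 acceleration on a straight line for 10 s.
n, dt = 1000, 0.01
poses = dead_reckon(np.ones(n), np.zeros(n), dt)
```

Because the position is obtained by integrating twice, a constant accelerometer bias produces a position error that grows quadratically with time, which is the kind of drift that the learning-based methods above try to compensate for.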

4.4. Matching Data

Data matching is the task of matching data recorded by a sensor, such as signals, point clouds, and images, with real-world reference data in order to extract the autonomous vehicle’s location. Some preliminaries are presented below to enable further discussion in this subsection. The rigid transform matrix has the following form:

$$
\begin{bmatrix} R & T \\ 0 & 1 \end{bmatrix}
$$

where $R$ is the rotation matrix, which is an orthogonal matrix with a determinant of $+1$ (i.e., $R^\top R = I$ and $\det R = 1$), and $T$ is the translation vector. In the rest of this subsection, we present some approaches that are based on the matching process.
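To make the rigid transform concrete, the sketch below builds a homogeneous matrix from a rotation and a translation and applies it to a point. The helper names `rigid_transform` and `apply_transform`, and the restriction to a rotation about the z axis, are illustrative assumptions rather than part of the surveyed methods.

```python
import numpy as np

def rigid_transform(yaw, translation):
    """Build a 4x4 homogeneous rigid transform from a yaw angle and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    R = np.array([[c, -s, 0.0],
                  [s,  c, 0.0],
                  [0.0, 0.0, 1.0]])   # rotation about the z axis
    H = np.eye(4)
    H[:3, :3] = R                     # upper-left block: rotation
    H[:3, 3] = translation            # last column: translation
    return H

def apply_transform(H, points):
    """Apply the rigid transform H to an (N, 3) array of points."""
    return points @ H[:3, :3].T + H[:3, 3]

H = rigid_transform(np.pi / 2, [1.0, 0.0, 0.0])
R = H[:3, :3]
moved = apply_transform(H, np.array([[1.0, 0.0, 0.0]]))
```

The rotation block can be checked numerically: `R.T @ R` should be the identity and `np.linalg.det(R)` should equal 1, which is what distinguishes a rotation from a reflection.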

4.4.1. Fingerprint

This method localizes a vehicle using matching techniques. The process is to create a database using a reference car equipped with different sensors that record various environmental changes. After that, a “query” car travels through the environment and registers its fingerprint, which is compared with the database, and the position with the best match is selected [110,119].
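The database lookup described above can be sketched as a nearest-neighbor search. The fingerprint vectors, the positions, and the use of the Euclidean distance as the matching score are all hypothetical choices made for this example; the cited works may use different sensors and similarity measures.

```python
import numpy as np

def locate_by_fingerprint(database_fp, database_pos, query_fp):
    """Return the stored position whose fingerprint is closest to the query."""
    dists = np.linalg.norm(database_fp - query_fp, axis=1)
    best = int(np.argmin(dists))          # index of the best match
    return database_pos[best], dists[best]

# Hypothetical database recorded by the reference car:
# three positions, each with a 4-dimensional fingerprint.
database_fp = np.array([[-40.0, -70.0, -55.0, -80.0],
                        [-60.0, -45.0, -75.0, -50.0],
                        [-80.0, -60.0, -42.0, -65.0]])
database_pos = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 10.0]])

# Fingerprint registered by the query car.
query_fp = np.array([-61.0, -47.0, -73.0, -52.0])
position, distance = locate_by_fingerprint(database_fp, database_pos, query_fp)
```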

4.4.2. Point Cloud Matching

3D point cloud registration provides a highly accurate source of information, which allows it to be employed in various domains, including surface reconstruction, 3D object recognition, and localization and mapping in self-driving cars, which is our focus. Scan registration can provide sufficient information for vehicle localization by aligning multiple scans in a single coordinate system and matching similar parts of the scans. In the literature, several methods have been proposed to solve the scan matching problem. The Iterative Closest Point (ICP) algorithm is one of the earliest, proposed in 1992 by Besl and McKay [120]. Its precision makes it usable for both positioning and mapping tasks. The idea is to iteratively find a rotation matrix $R$ and a translation vector $T$ that align the query scan with the reference (target) scan. The aim is to minimize the distance between the points of the target scan and their nearest neighbors in the query scan [121]. With $M$ the number of points in a scan, $p_i$ a point of the target scan, and $q_i$ its nearest neighbor in the query scan, the objective is:

$$
(R^{*}, T^{*}) = \arg\min_{R,\,T} \frac{1}{M} \sum_{i=1}^{M} \left\| p_i - (R\, q_i + T) \right\|^2
$$

Note that, in each iteration, we store the new value of the query scan, $q_i \leftarrow R\, q_i + T$.
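The iteration between nearest-neighbor matching and transform estimation can be sketched as follows. This is a simplified point-to-point variant under stated assumptions: brute-force nearest neighbors, a closed-form SVD solution for each alignment step, a fixed number of iterations, and a small initial misalignment. The function names `best_rigid_fit` and `icp` are chosen for this example only.

```python
import numpy as np

def best_rigid_fit(src, dst):
    """Least-squares R, T such that R @ src_i + T ~ dst_i (SVD solution)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)              # cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(src.shape[1])
    D[-1, -1] = np.sign(np.linalg.det(Vt.T @ U.T))   # avoid reflections
    R = Vt.T @ D @ U.T
    T = dst_c - R @ src_c
    return R, T

def icp(query, reference, iterations=30):
    """Point-to-point ICP with brute-force nearest neighbours."""
    dim = query.shape[1]
    R_total, T_total = np.eye(dim), np.zeros(dim)
    current = query.copy()
    for _ in range(iterations):
        # nearest reference point for every point of the current query scan
        d2 = ((current[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
        matched = reference[d2.argmin(axis=1)]
        R, T = best_rigid_fit(current, matched)
        current = current @ R.T + T                  # store the updated query scan
        R_total, T_total = R @ R_total, R @ T_total + T
    return R_total, T_total, current

# Synthetic test: the query scan is the reference scan after a small motion.
rng = np.random.default_rng(0)
reference = rng.uniform(-5.0, 5.0, size=(50, 3))
angle = 0.02
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
T_true = np.array([0.05, -0.03, 0.02])
query = reference @ R_true.T + T_true
R_est, T_est, aligned = icp(query, reference)
```

The example starts from a small misalignment on purpose. With a poor initial guess, the nearest-neighbor step can pair the wrong points and the iteration may settle in a local minimum.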

However, this method suffers from some serious limitations and assumptions that can affect the convergence of the algorithm, including the need for a good initialization, the assumption that one surface is a subset of the other, and a high computational cost [122]. As a consequence of these issues, many methods have been proposed to improve the ICP algorithm. The article [122] divides these extensions into five stages, depending mainly on the sampling selection and the feature metric used, such as point-to-point or point-to-plane methods, which effectively reduce the number of iterations. In recent years, the Normal Distributions Transform (NDT) [123] emerged to address measurement error and the lack of an explicit correspondence relationship. The idea is to break down the point cloud into a 3D voxel representation and assign a probability distribution to each voxel. Thus, even if a measurement deviates slightly (e.g., by a millimeter) from the true value, the NDT algorithm can still match the points based on the probability distribution [124]. As a result, some studies have combined the NDT process with the standard ICP algorithm, as in [125]. Another interesting approach, Robust Point Matching (RPM), has been proposed to overcome the initialization problem and to replace the traditional correspondence with a correspondence matrix. Let $m_i$ be the $i$-th point in $M$ and $s_j$ be the $j$-th point in $S$; the correspondence matrix $\mu$ is then defined by:

$$
\mu_{ij} =
\begin{cases}
1 & \text{if } m_i \text{ corresponds to } s_j \\
0 & \text{otherwise}
\end{cases}
$$

Thus, according to this method, the problem is to find the affine transformation and the match matrix that give the best fit. The transformation can be decomposed as:

$$
f(m) = A\, m + T
$$

where $T$ is a translation vector, $m$ is a point from the scan, and $A$ is a matrix that depends on some parameters to be estimated based on a cost function [121,126]. An extension of this method is the thin plate spline robust point matching (TPS-RPM) algorithm, which adds the capability to handle non-rigid registration [127].
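A relaxed version of the RPM correspondence matrix can be sketched with alternating row and column normalization. The snippet below is an assumption-laden illustration: it uses a fixed sharpness parameter `beta`, skips the transformation update, and omits outlier handling, all of which a full RPM implementation would need. The name `soft_correspondence` is hypothetical.

```python
import numpy as np

def soft_correspondence(M_pts, S_pts, beta=50.0, sinkhorn_iters=50):
    """Soft correspondence matrix between two point sets.

    Entry (i, j) is close to 1 when M_pts[i] matches S_pts[j]. Rows and
    columns are alternately normalised so that the matrix approaches a
    doubly stochastic one.
    """
    d2 = ((M_pts[:, None, :] - S_pts[None, :, :]) ** 2).sum(axis=2)
    mu = np.exp(-beta * d2)                       # closer pairs get larger weights
    for _ in range(sinkhorn_iters):
        mu /= mu.sum(axis=1, keepdims=True)       # each row sums to 1
        mu /= mu.sum(axis=0, keepdims=True)       # each column sums to 1
    return mu

M_pts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
S_pts = M_pts[[2, 0, 3, 1]] + 0.02    # same points, shuffled and slightly shifted
mu = soft_correspondence(M_pts, S_pts)
matches = mu.argmax(axis=1)           # hard assignment read off the soft matrix
```

Working with a real-valued matrix instead of a binary one is what lets the correspondence and the transformation be refined together, instead of committing to a nearest-neighbor pairing at the first iteration.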

The Gaussian mixture model (GMM) family also has its place in this field, as evidenced in the literature. GMMReg [128] is one example, in which the two point clouds are each represented by a GMM in order to be robust to noise and outliers. Instead of aligning points, which is prone to error, the distributions are aligned, e.g., by taking the intersection of the two distributions. Then, a standard minimization of the Euclidean distance between the two new point clouds is performed to refine the transformation. DeepGMR [129] uses deep learning to learn the correspondence between the GMM components and the transformation. Other deep learning-based methods include relativeNet [130], PointNetLK [131], and Deep Closest Point (DCP) [132]. A complete survey of point cloud registration methods can be found in [133].

4.4.3. Image Matching

Images contain plenty of information that can be exploited for many tasks, such as object tracking, segmentation, and object detection. For this reason, vision-based localization is supported by geospatial information. It is now possible to estimate the pose of a vehicle by tracking the movement of a point (a pixel or a feature) from one image to another. In the literature, vision-based localization is divided into local methods (also called indirect or feature-based) and global methods (also called direct, appearance-based, or featureless) [134]. Local methods consider only the part of the local region that characterizes the images in the database and the query image. Global (direct) methods use the change in intensity of each pixel without a pre-processing step such as feature extraction.

Based on [135], image-based localization can be divided into three groups. First, video surveillance-based methods track vehicles using cameras mounted on road infrastructure and determine their position from the camera calibration after detection. Second, similarity search methods look for the similarity between the query image and a pre-built database. After feature extraction (Section 2), a massive number of features is obtained, which must be reduced so that similar images can be searched quickly. Typically, we want to exploit these features as much as possible without losing generalization. Quantization is one solution. It is the process of creating an index that makes it easy to search images by query. It adopts a concept from text retrieval: each image is expressed as a vector of features, a dictionary is built from a large set of features extracted from visual documents, and a clustering method is then applied to reduce the size of the dictionary, each cluster centroid being called a visual word. We can then calculate the frequency of each visual word in a visual document, which is called the bag of features (BoF) [136]. Another method, called k nearest visual words, was used in [137].
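The quantization step can be illustrated with a short sketch that maps the descriptors of one image to their nearest visual words and counts the word frequencies. The vocabulary is assumed to have been obtained beforehand by clustering (for instance with k-means); the toy 2D descriptors and the function name `bag_of_features` are hypothetical.

```python
import numpy as np

def bag_of_features(descriptors, vocabulary):
    """Histogram of visual-word frequencies for one image.

    descriptors : (N, D) local feature descriptors of the image
    vocabulary  : (K, D) cluster centroids, i.e. the visual words
    """
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    words = d2.argmin(axis=1)                     # quantization: nearest visual word
    hist = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return hist / hist.sum()                      # normalised frequencies

# Toy vocabulary of three visual words and four descriptors from one image.
vocabulary = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
descriptors = np.array([[0.1, -0.1], [0.9, 1.2], [1.1, 0.8], [4.8, 5.1]])
bof = bag_of_features(descriptors, vocabulary)
```

Each image is then summarized by a vector whose length equals the vocabulary size, regardless of how many local features were extracted, which is what makes the database search tractable.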

The similarity can be obtained by calculating the L2 norm between the identified features. However, this method does not work well when there are many descriptors, so a robust algorithm is needed to search for similarity efficiently. Studies such as [138,139] turn this problem into an SVM classification task. Ref. [140] presents a new architecture for associating similar features, called Multi-Task Learning (MTL). To improve the similarity process, a ranking step is added, which supports the retrieved results by ordering them into a list of candidates. For further information, the reader is referred to [8].

The last group is the visual odometry (VO) based methods, where the idea is to track the movement between two consecutive frames by matching the overlapping area. This method is widely used in the robotics field.

Let $I_1$ and $I_2$ be the image matrices of images 1 and 2, respectively. The problem is to find a vector $t$ such that:

$$
I_1(p_1) = I_2(p_2), \qquad p_2 = p_1 + t
$$

where $p_1$ and $p_2$ are points from images 1 and 2, respectively. The position of the vehicle can be calculated by minimizing the photometric error. The advantage of this method is that it can be applied even in low-texture environments. However, it suffers from a high computational cost.

In the literature, several cost functions can be used to find the best transformation vector $t$, such as minimizing the sum of squared differences (SSD) between image $I_1$ and image $I_2$ shifted by $t$, summed over the pixels $p$ of the overlapping area:

$$
\mathrm{SSD}(t) = \sum_{p} \left[ I_2(p + t) - I_1(p) \right]^2
$$

Or minimizing the sum of absolute differences (SAD):

$$
\mathrm{SAD}(t) = \sum_{p} \left| I_2(p + t) - I_1(p) \right|
$$

Alternatively, maximizing the sum of cross-correlation coefficients.

When the environmental conditions change (e.g., illumination), a normalized metric must be used instead:

$$
\mathrm{NCC}(t) = \frac{\sum_{p} \left[ I_1(p) - \bar{I}_1 \right] \left[ I_2(p + t) - \bar{I}_2 \right]}{\sqrt{\sum_{p} \left[ I_1(p) - \bar{I}_1 \right]^2 \, \sum_{p} \left[ I_2(p + t) - \bar{I}_2 \right]^2}}
$$

where $\bar{I}_1$, $\bar{I}_2$ are the mean intensities in each image. More information can be found in [134]. Moreover, the survey [8] divides the set of direct methods into three approaches. The first class is direct vision-based localization (VBL) with a prior, a group of methods built on the assumption that a prior exists (from GPS or a magnetic compass). Among the methods that hold this assumption is [141], which uses an initialization from the GPS and compass embedded in a smartphone to refine the global GPS coordinates. A coarse GPS initialization was used in [142] to perform particle filter localization, and [143] used an indirect method as a pre-processing step to refine the pose estimate. The second class is feature-to-point matching, which finds correspondences between 2D image features and 3D point clouds, as in [144]. The third class is pose regression approaches, which learn to regress the visual input data to the corresponding pose, mainly using regression forests and CNNs, as in [145,146].
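The photometric cost functions introduced earlier in this subsection (SSD, SAD, and a normalized correlation) can be compared on a toy problem. The sketch below searches exhaustively over integer shifts, which is an assumption made for clarity; practical direct methods estimate richer motion models with iterative optimization. The function names and the synthetic images are hypothetical.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences."""
    return float(((a - b) ** 2).sum())

def sad(a, b):
    """Sum of absolute differences."""
    return float(np.abs(a - b).sum())

def ncc(a, b):
    """Normalised cross-correlation (insensitive to gain and offset changes)."""
    a0, b0 = a - a.mean(), b - b.mean()
    return float((a0 * b0).sum() / np.sqrt((a0 ** 2).sum() * (b0 ** 2).sum()))

def best_shift(img1, img2, max_shift=3, cost=ssd, maximize=False):
    """Exhaustive search of the integer shift t = (dy, dx) aligning img2 with img1."""
    h, w = img1.shape
    m = max_shift
    ref = img1[m:h - m, m:w - m]                  # central window of image 1
    best, best_t = None, None
    for dy in range(-m, m + 1):
        for dx in range(-m, m + 1):
            win = img2[m + dy:h - m + dy, m + dx:w - m + dx]
            c = cost(ref, win)
            if best is None or (c > best if maximize else c < best):
                best, best_t = c, (dy, dx)
    return best_t

rng = np.random.default_rng(0)
img1 = rng.random((32, 32))
img2 = np.roll(img1, shift=(2, -1), axis=(0, 1))  # image 2 is image 1 moved by (2, -1)
t_ssd = best_shift(img1, img2, cost=ssd)
t_sad = best_shift(img1, img2, cost=sad)
# Simulated illumination change: gain 0.5 and offset 0.2 applied to image 2.
t_ncc = best_shift(img1, 0.5 * img2 + 0.2, cost=ncc, maximize=True)
```

The nested loops over all candidate shifts and all pixels also make the computational cost noted above visible: the work grows with both the image size and the size of the search range.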

4.5. Optimization-Based Approaches

Optimization is the task of finding the best estimate that minimizes or maximizes a cost function subject to defined constraints. Such problems arise in various domains, including operations research, economics, computer science, and engineering. In our case, we use it to solve the localization and mapping problem, particularly the SLAM problem. From an optimization perspective, solving the SLAM problem is divided into front-end and back-end steps. The front-end processes the data by extracting features, performing data association, checking loop closure, etc., in order to build a highly accurate map. The back-end is responsible for finding the best estimate of the vehicle’s locations by minimizing (or maximizing) a cost function [147].

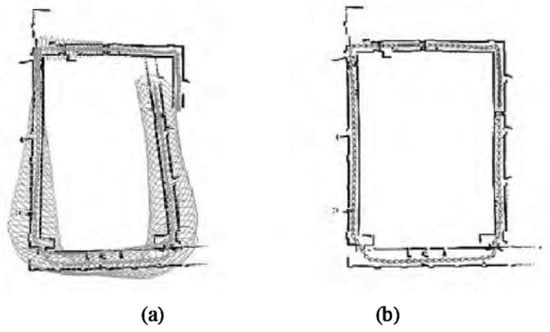

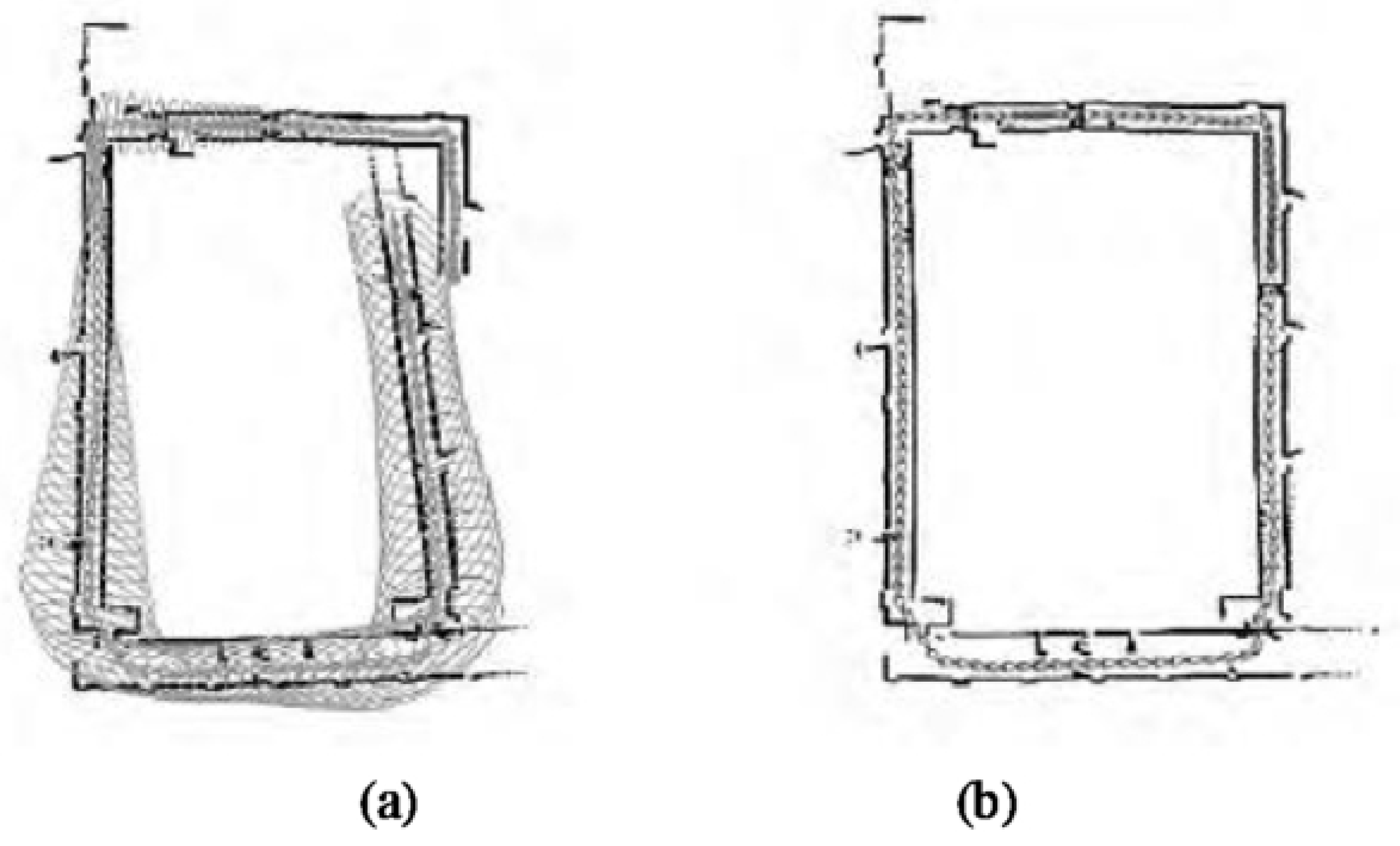

One question arises here: what is the difference between loop closure and re-localization? Loop closure is performed to address sensor measurement error, because a measurement taken from any sensor never matches reality exactly. Also, vehicles are prone to issues such as drift, acceleration changes, and weather conditions, which can affect the reliability and credibility of these measurements. Loop closure helps detect whether the vehicle has revisited the same location. If this is the case, the loop is closed. See the example in Figure 5.
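The effect of a loop closure on the back-end estimate can be illustrated with a one-dimensional pose graph solved by linear least squares. This is a deliberately simplified sketch: real SLAM back-ends work with 2D or 3D poses, nonlinear constraints, and weighted residuals. The function name `optimise_poses` and the measurement values are hypothetical.

```python
import numpy as np

def optimise_poses(odometry, loop_closures, n_poses):
    """Linear least-squares back-end for a 1D pose graph.

    odometry      : list of (i, j, measured x_j - x_i) between consecutive poses
    loop_closures : list of (i, j, measured x_j - x_i) between revisited places
    The first pose is anchored at 0 to fix the global reference.
    """
    constraints = list(odometry) + list(loop_closures)
    A = np.zeros((len(constraints) + 1, n_poses))
    b = np.zeros(len(constraints) + 1)
    for row, (i, j, z) in enumerate(constraints):
        A[row, i], A[row, j], b[row] = -1.0, 1.0, z
    A[-1, 0] = 1.0                                # prior: x_0 = 0
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

# The vehicle drives out and back (true poses 0, 1, 2, 1, 0), but every
# odometry step carries a +0.1 bias, so the dead-reckoned estimate drifts.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, -0.9), (3, 4, -0.9)]
dead_reckoned = np.concatenate([[0.0], np.cumsum([z for _, _, z in odometry])])

# Loop closure: pose 4 is recognised as the same place as pose 0.
loop = [(0, 4, 0.0)]
optimised = optimise_poses(odometry, loop, n_poses=5)
```

Without the loop closure, the final pose is off by 0.4. Adding the single extra constraint spreads the accumulated error over the whole trajectory and reduces the final error to 0.08 in this example.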

Re-localization is performed when the system fails to determine its position in the map. This problem often appears when the matching process is inadequate. We then ‘re-localize’ through place recognition.

Figure 5.

In a loop closure system, the recognition of a return to a previously visited location enables the correction of any accumulated errors in the system’s map or position estimate. Figure (a) shows an example before applying the loop closure. Figure (b) shows an example after applying the loop closure [148].


4.5.1. Bundle Adjustment Based Methods