Development of Tools for the Automatic Processing of Advanced Driver Assistance System Test Data

Abstract

1. Introduction

2. Materials and Methods

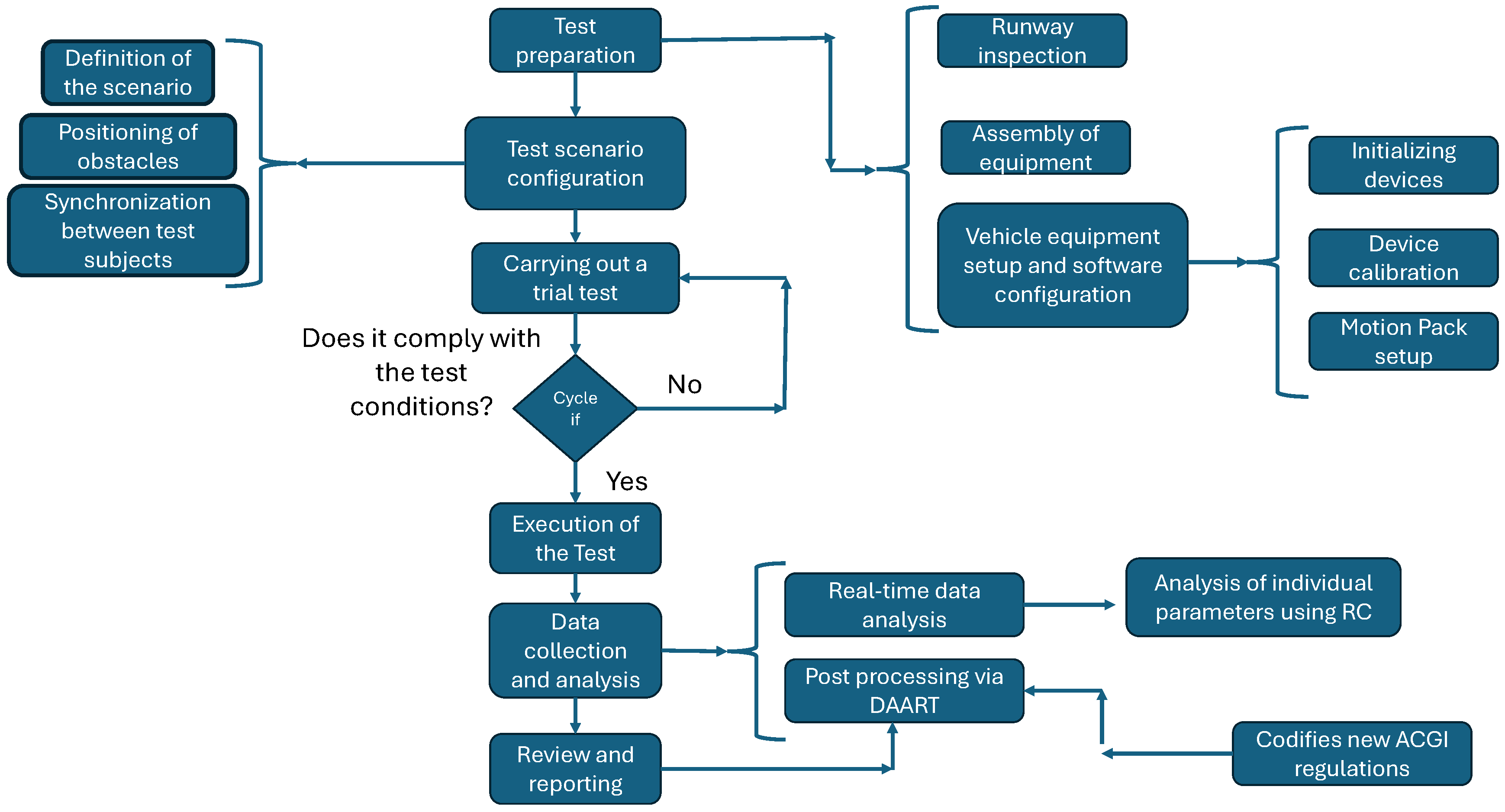

- Vehicle Instrumentation: The initial phase of the data analysis process involves equipping the vehicle with sensors and mechatronic devices to monitor parameters such as speed, acceleration, braking distance, and the responsiveness of the automatic emergency braking (AEB) system. Automating the driving maneuver is crucial for the repeatability and reliability of the tests, which a human driver cannot guarantee. The setup must comply with specific technical standards for both sensors and data collection methods, as detailed in Section 2.2.

- Test Scenario Preparation: The next step is preparing the test scenario, which simulates real-world driving situations. The test track must replicate potential road crash scenarios, such as sudden obstacles or abrupt braking, in strict accordance with the applicable regulations. Correct placement of the obstacles and simulated vehicles is essential for the reproducibility and reliability of the collected data.

- Test Execution and Data Collection: The test is then carried out on the prepared track, simulating emergency situations that activate the AEB system. During the trial, data are recorded in real time, including the approach speed, AEB activation time, vehicle reaction time, and braking distance. Data collection must be precise and synchronized, since errors or inaccuracies could compromise the interpretation of the test. The raw data are then processed in the post-processing phase, as detailed in Section 2.3.4.

- Data Analysis using DAART and ACGI Tools: After data collection, the post-processing phase is managed by two tools introduced and explored in this study: DAART (Data Analysis and Automated Report Tool) (Figure 1) and ACGI (Automatic Code Generation Intelligence) (Figure 2). Both tools were developed by the authors in MATLAB R2022b (The MathWorks Inc., Natick, MA, USA) [14], in collaboration with NTC Porsche Engineering, with the aim of improving the efficiency and reliability of AEB test analysis. Working together, they process large volumes of raw data and turn them into usable information by encoding the relevant regulations and test parameters. Their modular architecture allows new functionalities and updates to be integrated, providing the flexibility needed to adapt to regulatory changes. A key feature is the ability to analyze test parameters in depth and generate detailed final reports in a short time, keeping data management efficient and organized. This allows engineers to monitor AEB system performance, identify potential issues or areas for improvement, and verify compliance with regulatory requirements. The two tools serve different functions, and their integration makes the analysis fast and fully automated. Their development forms the core of the present research project and is examined in detail in Sections 2.3.4 and 2.3.5.

2.1. Autonomous Emergency Braking (AEB) System

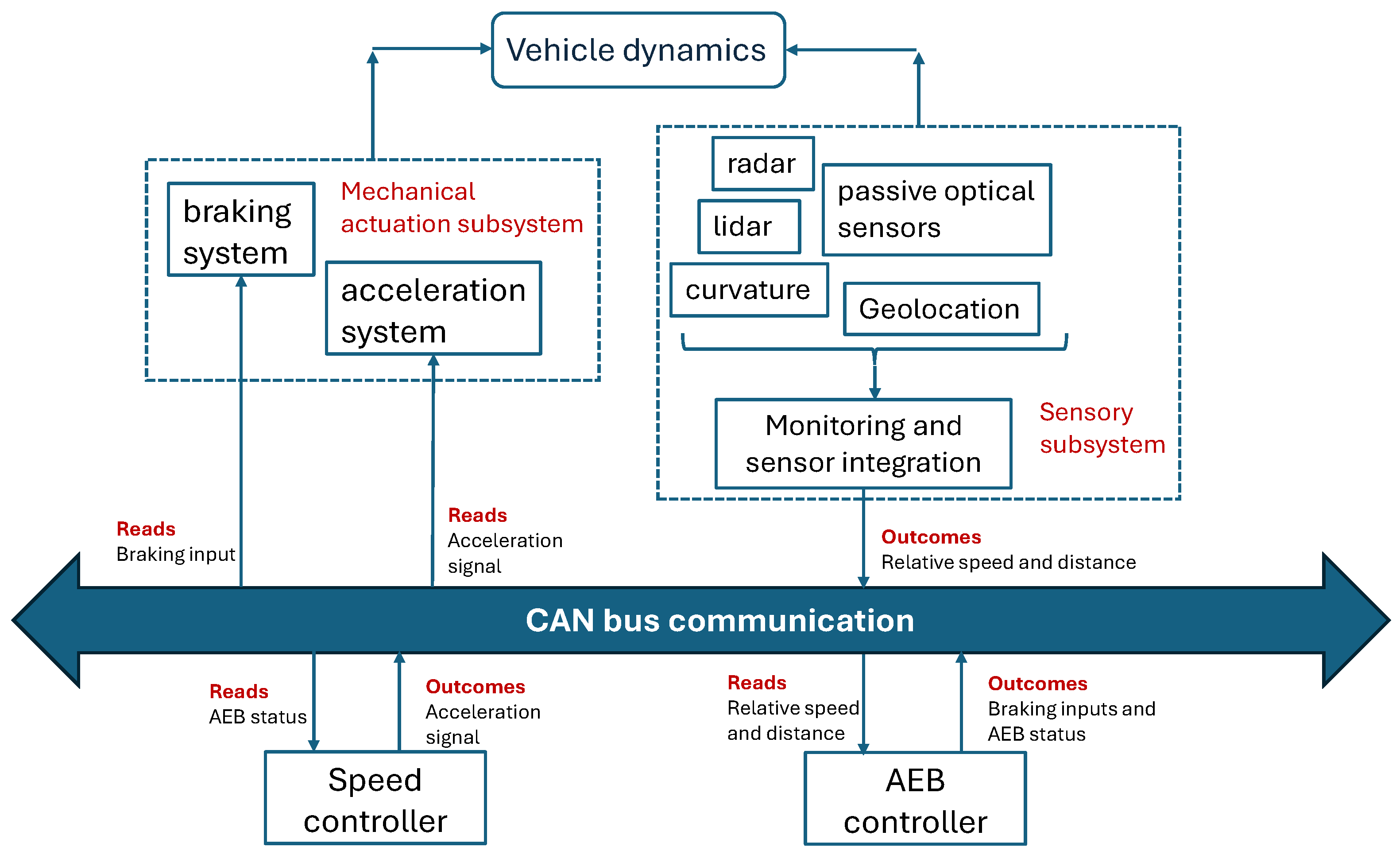

- Perception State: The vehicle uses sensors (radar, cameras, etc.) to detect the surrounding environment, identify obstacles, and measure their distance. Using multiple sensor types helps reduce false alarms and improves obstacle detection.

- Decision-Making State: The system processes the sensor data to calculate the Time To Collision (TTC), which measures the time remaining before impact if neither vehicle changes speed (Equation (1)). This parameter determines when the system must intervene: a vehicle management logic is defined on its instantaneous value, and a low TTC indicates an imminent impact that requires rapid intervention. Equation (1) is the generic formulation used by the AEB function; during the tests, however, different versions of the calculation are used depending on the type of test and the applicable regulation. These variants require specific code in the post-processing, which ACGI automates through a dedicated page in its GUI (Figure 4). This page displays all the equations required by the current regulations and also allows new equations to be inserted, fully automating the process.

- Execution State: If a collision risk is confirmed, the AEB system activates the brakes. Braking can be partial or full, depending on the severity of the situation, and the vehicle acceleration can reach −10 m/s².
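As a minimal sketch of the generic TTC calculation used in the Decision-Making State, assuming the common constant-velocity form in which the TTC is the relative distance divided by the closing speed, TTC = d_rel / (v_SV − v_target), the computation could be written as follows. The function name and the convention of returning infinity when the subject vehicle is not closing on the target are our own choices, not those of DAART or ACGI; regulation-specific variants would replace this formula.

```python
import math


def time_to_collision(rel_distance_m: float, v_sv_mps: float, v_target_mps: float) -> float:
    """Constant-velocity TTC: remaining gap divided by closing speed.

    Returns math.inf when the subject vehicle is not closing on the target
    (closing speed <= 0), because no collision is predicted in that case.
    """
    closing_speed = v_sv_mps - v_target_mps
    if closing_speed <= 0.0:
        return math.inf
    return rel_distance_m / closing_speed


# Example: SV at 50 km/h approaching a stationary target 20 m ahead.
v_sv = 50 / 3.6  # km/h -> m/s
print(round(time_to_collision(20.0, v_sv, 0.0), 2))  # prints 1.44
```

Returning infinity rather than a negative value keeps "no collision predicted" distinguishable from "collision imminent" when the TTC trace is plotted or compared against thresholds.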

- Forward Collision Warning (FCW): This function continuously monitors the area in front of the vehicle and alerts the driver to a potential frontal collision. The alerts can be auditory, visual, or tactile.

- Moderate Deceleration: If the driver does not respond, the system initiates a moderate deceleration to further attract the driver's attention, without yet resorting to emergency braking.

- Automatic Braking: When a collision is imminent and the driver has not responded, the system applies automatic braking in three stages:

- Partial Braking (AEB-P1): Moderate braking action.

- Intermediate Braking (AEB-P2): Increased braking force.

- Full Braking (AEB-FB): Full braking to prevent impact.
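The escalation described above (FCW, moderate deceleration, then AEB-P1, AEB-P2, and AEB-FB) can be pictured as a mapping from the current TTC to an intervention stage. The sketch below assumes fixed TTC thresholds; the numeric values are hypothetical placeholders, and production systems use proprietary, speed-dependent logic that also accounts for driver inputs, which this sketch ignores.

```python
from enum import Enum


class Stage(Enum):
    NONE = "no intervention"
    FCW = "forward collision warning"
    MODERATE_DECEL = "moderate deceleration"
    AEB_P1 = "partial braking"
    AEB_P2 = "intermediate braking"
    AEB_FB = "full braking"


# Hypothetical TTC thresholds in seconds, ordered from most to least urgent.
# These are illustrative placeholders, not values from any regulation or vehicle.
THRESHOLDS = [
    (0.6, Stage.AEB_FB),
    (1.0, Stage.AEB_P2),
    (1.4, Stage.AEB_P1),
    (1.8, Stage.MODERATE_DECEL),
    (2.6, Stage.FCW),
]


def select_stage(ttc_s: float) -> Stage:
    """Return the most urgent stage whose TTC threshold has been reached."""
    for limit, stage in THRESHOLDS:
        if ttc_s <= limit:
            return stage
    return Stage.NONE


print(select_stage(1.2).value)  # prints "partial braking"
```

Ordering the table from most to least urgent means the first match is always the strongest applicable response, so adding a stage only requires inserting one row.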

2.2. Vehicle Equipment for Performing an AEB Test

- Automotive Dynamic Motion Analyzer (ADMA): The ADMA is a high-precision inertial system used to monitor the vehicle's movements in real time, measuring accelerations, velocities, positions, and angles. It employs fiber-optic gyroscopes and accelerometers, supported by a GPS receiver for position updates [17]. It interfaces with the Robot Controller, the power supply, the GPS antenna, and the RTK modem.

- Global Positioning System (GPS): The GPS antenna provides precise positioning by receiving satellite signals, from which the vehicle's location is calculated by trilateration; this is essential for monitoring AEB tests. The GPS system is integrated with the ADMA to synchronize movement data with geographical coordinates.

- Radio Modem (Real-Time Kinematic, RTK): RTK improves GPS accuracy to centimeter level by combining a fixed reference station with the GPS receiver on the vehicle, which is crucial for advanced tests such as autonomous braking and trajectory management.

- Robot Controller (RC): The Robot Controller manages all steering, braking, and acceleration actuators, ensuring repeatable and reproducible maneuvers. It also provides real-time feedback to engineers, enabling them to monitor and adjust the vehicle's behavior during testing.

- Steering Robot (SR) and Combined Brake and Accelerator Robot (CBAR): These mechatronic devices control, respectively, the steering and the combined braking and acceleration, ensuring precise and consistent execution of test maneuvers, particularly in complex traffic scenarios.
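Because the ADMA/GPS channels and the robot-controller channels are not necessarily logged at the same rate, comparing them requires a common time base. The following is a minimal sketch of linear resampling in plain Python, assuming each channel comes with its own monotonically increasing timestamps; the sample rates and the speed values in the example are hypothetical, and real acquisition chains often align channels with hardware (GPS) timestamps before any interpolation.

```python
from bisect import bisect_right


def resample_linear(t_src, y_src, t_new):
    """Linearly interpolate samples (t_src, y_src) at the instants t_new.

    t_src must be strictly increasing. Instants outside the source range are
    clamped to the first/last sample (no extrapolation).
    """
    if len(t_src) != len(y_src) or len(t_src) < 2:
        raise ValueError("need at least two matching samples")
    out = []
    for t in t_new:
        if t <= t_src[0]:
            out.append(y_src[0])
            continue
        if t >= t_src[-1]:
            out.append(y_src[-1])
            continue
        i = bisect_right(t_src, t)  # guarantees t_src[i-1] <= t < t_src[i]
        t0, t1 = t_src[i - 1], t_src[i]
        y0, y1 = y_src[i - 1], y_src[i]
        out.append(y0 + (y1 - y0) * (t - t0) / (t1 - t0))
    return out


# Hypothetical example: a 10 Hz speed channel resampled onto a 20 Hz grid.
t_10hz = [0.0, 0.1, 0.2, 0.3]
speed_mps = [13.9, 13.8, 13.6, 13.3]
t_20hz = [k * 0.05 for k in range(7)]
print([round(v, 3) for v in resample_linear(t_10hz, speed_mps, t_20hz)])
```

Clamping at the edges avoids inventing data outside the recorded interval; a stricter pipeline might instead reject instants that fall outside it.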

2.3. Type of AEB Test to Be Performed According to the Regulations

2.3.1. Car-to-Car AEB Test

- Trajectory and Initial Positioning: The subject vehicle (SV) must travel in a straight line, with the GVT either stationary (CCRs) or moving (CCRm) in the same direction. Regulations define the correct initial positioning and timing, which may vary across standards. Some regulations also require monitoring of the SV's lateral deviation from the target axis.

- Test Start and Execution: Upon test initiation, both the SV and the GVT must move at constant speeds within regulation-defined tolerances. The SV's speed must exceed the GVT's to create a collision risk, with speed values and tolerances differing between regulations.

- Test Outcome: The test concludes when one of the following occurs: the SV collides with the GVT and stops shortly after impact; the SV avoids the collision by stopping before impact; or the SV fails to detect the GVT and collides, requiring manual intervention to stop the vehicle.
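The three possible outcomes can be recognized automatically from the logged signals. The sketch below assumes that the longitudinal gap between the SV front and the GVT rear is available on the same time base as both speeds, that a gap of zero or less marks contact, and that a separate flag (hypothetical here) records whether the SV had to be stopped manually. It reports the relative speed at first contact; the regulations define their own impact-speed conventions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Outcome:
    collided: bool
    impact_rel_speed_mps: Optional[float]  # SV minus GVT speed at first contact
    label: str


def classify_outcome(rel_distance_m: Sequence[float],
                     v_sv_mps: Sequence[float],
                     v_gvt_mps: Sequence[float],
                     manual_stop: bool = False) -> Outcome:
    """Map a car-to-car run onto the three outcomes of the test.

    rel_distance_m: longitudinal gap between the SV front and the GVT rear,
    sampled on the same time base as the speeds; a value <= 0 means contact.
    manual_stop: hypothetical flag set when the SV had to be stopped manually.
    """
    for gap, v_sv, v_gvt in zip(rel_distance_m, v_sv_mps, v_gvt_mps):
        if gap <= 0.0:  # first sample at or past contact
            label = ("not detected: collision, manual stop"
                     if manual_stop else "collision: SV stopped after impact")
            return Outcome(True, v_sv - v_gvt, label)
    return Outcome(False, None, "collision avoided")


run = classify_outcome([12.0, 6.0, 1.5, 0.4], [13.9, 9.0, 3.0, 0.0], [0.0] * 4)
print(run.collided, run.label)  # prints "False collision avoided"
```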

2.3.2. Car-to-Pedestrian AEB Test

- State of the Pedestrian: The pedestrian may be static (stationary) or dynamic (moving across the road), with speed and initial position specified.

- Crossing Side: The pedestrian may cross from the near side or far side or move along the vehicle’s axis.

- Age of the Pedestrian: Tests use dummies representing adults (A) or children (C).

- Percentage of Vehicle Width Impacted: Tests assess impacts at 25%, 50%, or 75% of the vehicle’s width. The Euro NCAP standard defines the reference points for impact speed based on a virtual box around the pedestrian target (EPT) and a corresponding reference line across the front of the vehicle (Figure 7).
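For a 25%, 50%, or 75% impact, the pedestrian target must reach the chosen point on the vehicle front at the same moment as the SV. Under constant speeds, this is simple kinematics: the pedestrian's travel time to the impact point fixes how far the SV must be from the pedestrian's path when the pedestrian starts. The sketch below is illustrative only. It assumes the percentage is measured from the vehicle edge on the pedestrian's starting side, and it ignores the acceleration phase and reference-point details that the Euro NCAP protocol defines; all names and values are hypothetical.

```python
def impact_offset_m(vehicle_width_m: float, fraction: float) -> float:
    """Lateral position of the impact point, measured from the vehicle edge on
    the pedestrian's starting side (a convention assumed for this sketch)."""
    if fraction not in (0.25, 0.50, 0.75):
        raise ValueError("expected 25%, 50% or 75% of vehicle width")
    return vehicle_width_m * fraction


def sv_distance_at_pedestrian_start(v_sv_mps: float, v_ped_mps: float,
                                    ped_lateral_start_m: float,
                                    vehicle_width_m: float,
                                    fraction: float) -> float:
    """Longitudinal distance between the SV front and the pedestrian's path at
    the moment the pedestrian starts, so that both reach the impact point
    together. Constant speeds are assumed (no acceleration phase).

    ped_lateral_start_m: lateral distance from the pedestrian's start position
    to the vehicle edge on the starting side.
    """
    lateral_travel = ped_lateral_start_m + impact_offset_m(vehicle_width_m, fraction)
    time_to_impact = lateral_travel / v_ped_mps
    return v_sv_mps * time_to_impact


# Hypothetical case: 1.8 m wide SV at 40 km/h, adult walking at 5 km/h,
# starting 4.0 m from the vehicle edge, 50% impact point.
d = sv_distance_at_pedestrian_start(40 / 3.6, 5 / 3.6, 4.0, 1.8, 0.50)
print(round(d, 2))  # prints 39.2
```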

2.3.3. Car-to-Cyclist AEB Test

2.3.4. Post Processing of AEB Tests (DAART)

- Common File Group: This group contains 22 MATLAB function files referenced by the regulation codes. These files are used in the analysis of every regulation, as they form the foundational structure of the analysis: they contain the control parameters, formulas, calculations, and checks needed to establish the validity of a test.

- Utility File Group: This group consists of 33 functions called within the main interface and the Main File Group. These functions support the extraction of final results or implement key calculation steps.

- Regulation File Group: The Regulation File Group includes a dedicated code for each regulation, which calls the codes in the Common File Group to perform a detailed, step-by-step analysis. The application includes encoded regulation files for the UNR152, Euro NCAP, KMVSS, NHTSA, GBT, and IIHS standards; each standard is integrated together with its updates and the specific versions currently in force. The functions in this group are, in turn, called by a specific code within the Main File Group, which provides all the information needed for the data analysis.

- Plot File Group: This group contains functions that define the layout of the final PDF report. The number of plot files depends on the number of encoded standards; each regulation typically has a separate plot file that includes the characteristic data required by its protocol.

- Main File Group: The Main File Group performs the final processing of results and manages the entire post-processing procedure by invoking each regulation function and plot. It includes a total of 30 function files, generally activated from the main interface.
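The split into Common, Regulation, Plot, and Main file groups resembles a plug-in design: each regulation module reuses shared checks and registers an analysis routine together with its report routine, and the main routine only dispatches. The following Python sketch illustrates that pattern with a dictionary registry. Every name, tolerance, and the text "report" are hypothetical stand-ins, not DAART's MATLAB code.

```python
from typing import Callable, Dict, List, Tuple

Check = Tuple[str, bool]


# "Common" group: a reusable check shared by all regulations (hypothetical).
def check_range(name: str, values: List[float], lo: float, hi: float) -> Check:
    return name, all(lo <= v <= hi for v in values)


# Registry filled by the "Regulation" and "Plot" groups.
REGISTRY: Dict[str, Tuple[Callable, Callable]] = {}


def register(regulation: str, plot: Callable):
    """Decorator that registers an analysis function with its report function."""
    def wrap(analysis: Callable) -> Callable:
        REGISTRY[regulation] = (analysis, plot)
        return analysis
    return wrap


def plot_text(results: List[Check]) -> str:
    # Stand-in for a PDF layout routine: one line summarising every check.
    return "; ".join(f"{name}: {'OK' if ok else 'FAIL'}" for name, ok in results)


@register("EXAMPLE_REG", plot=plot_text)
def analyse_example(data: Dict[str, List[float]]) -> List[Check]:
    # Placeholder tolerance, not taken from any regulation.
    return [check_range("sv_speed_kph", data["sv_speed_kph"], 49.0, 51.0)]


# "Main" group: dispatch to the registered regulation and build the report.
def run(regulation: str, data: Dict[str, List[float]]) -> str:
    analysis, plot = REGISTRY[regulation]
    return plot(analysis(data))


print(run("EXAMPLE_REG", {"sv_speed_kph": [49.8, 50.1, 50.3]}))  # prints "sv_speed_kph: OK"
```

With this layout, supporting a new regulation means adding one decorated function and one report function, while the main routine stays unchanged.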

- The run file extracted from the robot controller, containing all the data from the executed test;

- The final directory for saving the analysis results and the final report.

- A display showing the ID of the executed test;

- A display showing the name of the test, which allows the identification of the relevant standard and scenario used;

- A display listing the vehicles for analysis present in the database, with their respective geometric measurements. A button is also available to add a new vehicle with all its associated geometric information.

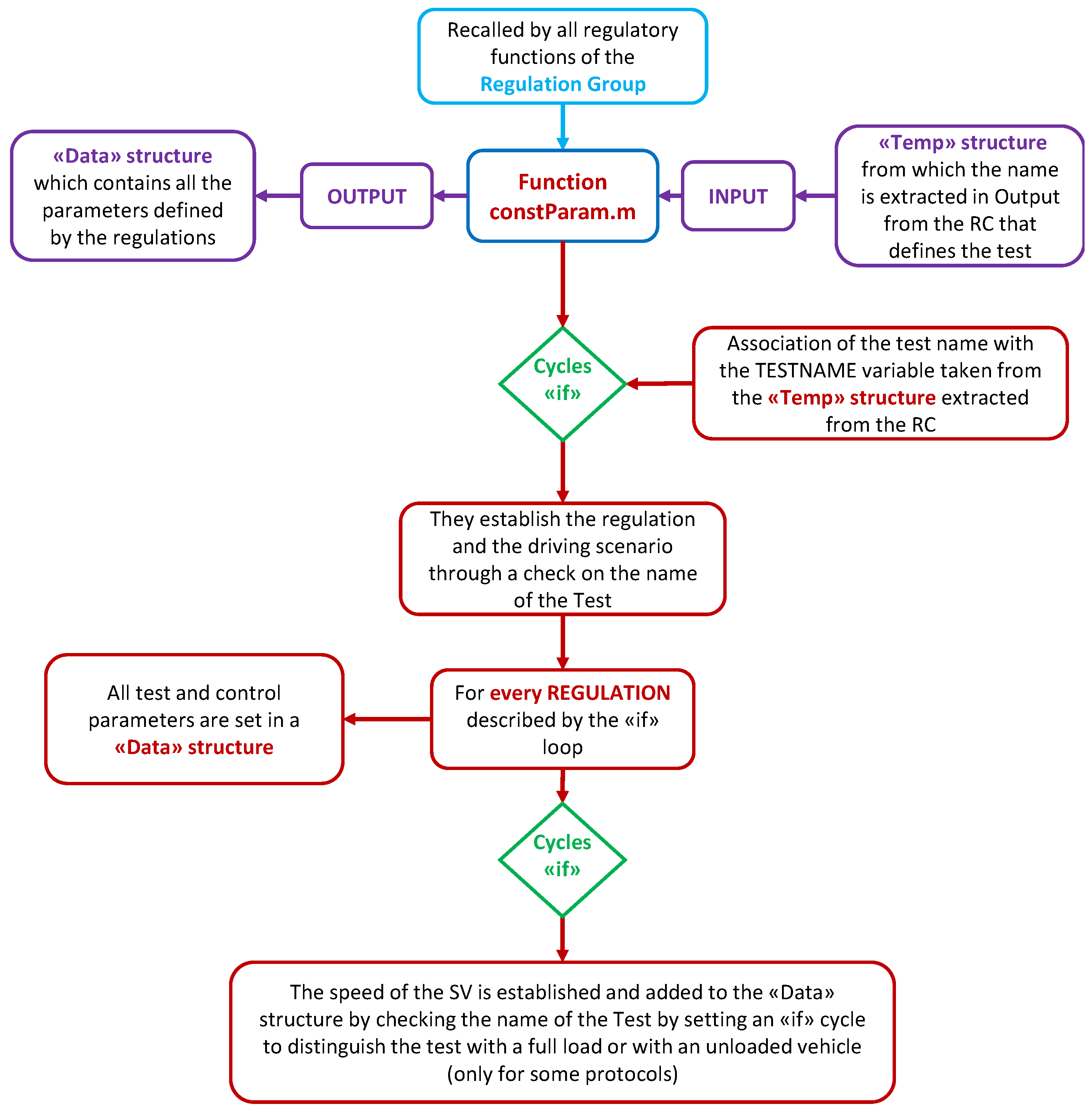

- Sets a variable to which the test name and driving scenario are assigned;

- Defines a series of conditional instructions to identify the various regulations and executed tests. The code uses these conditional branches to determine the regulation by analyzing the test code and searching for specific keywords in the protocol name;

- Within each conditional branch, extracts the subject vehicle (SV) speed from the test name using a basic MATLAB function;

- Within each regulation branch, establishes all fundamental properties by providing a list of relevant test parameters, each associated with the value specified by the regulation.
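The steps above, which DAART performs in MATLAB with conditional branches, can be sketched in Python as follows. The test-name convention (for example `EuroNCAP_CCRs_50kph_run03`), the keyword table, and the parameter values are hypothetical placeholders, not taken from DAART or from any regulation. A lookup table replaces the if/elseif chain, so supporting a new regulation means adding an entry rather than a branch.

```python
import re
from typing import Dict, Optional

# Hypothetical keyword -> regulation mapping; the first match wins.
KEYWORDS = [
    ("UNR152", "UNR152"),
    ("EURONCAP", "Euro NCAP"),
    ("NHTSA", "NHTSA"),
    ("KMVSS", "KMVSS"),
    ("IIHS", "IIHS"),
    ("GBT", "GBT"),
]

# Hypothetical per-regulation parameters (placeholder values only).
PARAMS: Dict[str, Dict[str, float]] = {
    "UNR152": {"speed_tol_kph": 1.0, "lateral_tol_m": 0.2},
    "Euro NCAP": {"speed_tol_kph": 1.0, "lateral_tol_m": 0.1},
}

# SV speed written in the test name as e.g. "50kph" or "20.5 kph".
SPEED_RE = re.compile(r"(\d+(?:\.\d+)?)\s*kph", re.IGNORECASE)


def parse_test_name(test_name: str) -> Dict[str, object]:
    """Identify the regulation, extract the SV speed, and attach parameters."""
    key = test_name.upper().replace(" ", "").replace("-", "")
    regulation: Optional[str] = next((reg for kw, reg in KEYWORDS if kw in key), None)
    if regulation is None:
        raise ValueError(f"no known regulation in test name: {test_name!r}")
    match = SPEED_RE.search(test_name)
    if match is None:
        raise ValueError(f"no SV speed found in test name: {test_name!r}")
    return {
        "regulation": regulation,
        "sv_speed_kph": float(match.group(1)),
        "params": PARAMS.get(regulation, {}),
    }


print(parse_test_name("EuroNCAP_CCRs_50kph_run03"))
```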

2.3.5. Automatic and Intelligent Compilation of Codes (ACGI)

- Main File Group: A group of 15 primary functions called by the graphical interface to introduce new regulations or test scenarios.

- Utility File Group: A group comprising 22 utility functions that enhance efficiency by reducing code redundancy.

- Main Graphical Interface: The component with which users interact to introduce new regulations, guiding them through the necessary steps without requiring programming skills.

- Auxiliary Graphical Interfaces: A set of 9 interfaces that support specific functions without overwhelming the main graphical display, also offering customizations for reports.

- Specifications related to the regulation to be coded. To facilitate input, the interface offers several TextBoxes where the user can enter the name of the regulation, the speed associated with the subject vehicle, the vehicle load conditions (distinguishing between tests conducted with a fully loaded or unloaded vehicle), and the type of test (differentiated according to the actors involved, as described in the previous sections).

- The directory containing all the functions from the Common File of the DAART code, which need to be modified to implement the new regulation.

- A light indicator (LED) located at the bottom right of the interface. This LED is red if the compilation is incomplete and gradually changes color to green when all fields have been filled out and the code has been correctly compiled.

- A text box, situated next to the LED, that provides detailed information about the status of the compilation.

- A button marked with a question mark, which leads to an auxiliary interface. This interface asks the user whether they would like guidance that explains the interface in Italian or English. Once the language is selected, a PowerPoint presentation will automatically open, explaining step by step how to complete the interface.

- Select the relevant parameters from the pre-set CheckBoxes corresponding to the regulation being coded, and enter their values in the associated TextBoxes.

- Next, two conditions may arise:

    - 2.1 All required variables are available: if all parameters are pre-configured, the user can simply click the "compile" button to generate the code.

    - 2.2 Some parameters are missing: if the regulation requires additional variables not present in the CheckBoxes, the user can add them via the "automatic insertion" feature at the bottom left of the interface. The process is intuitive, even for users without prior MATLAB experience, and follows these steps:

        - 2.2.1 Write the names of the new variables, one per line, in the TextBox of the display;

        - 2.2.2 Click the SET button, which makes all the newly introduced variables appear on the right side of the interface;

        - 2.2.3 Select the new variables and enter the value associated with each in the provided TextBoxes;

        - 2.2.4 Once all variables are selected and their values entered, compile the code.

- Add new code sections if the regulation introduces parameters not present in any previous regulation. The new sections are integrated at appropriate positions in the code using utility functions.

- Associate the new regulation with an existing one if a match is found between the new parameters and those already coded.
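ACGI turns the user's selections into MATLAB code. A minimal sketch of that idea, assuming a simple template, is shown below: the regulation name and a parameter dictionary (pre-set CheckBox parameters plus any user-added variables) are rendered as the text of a MATLAB function returning a struct. A helper also finds an already-coded regulation with exactly the same parameter names, mirroring the "associate with an existing regulation" step. The template, the `params_<name>` naming, and the matching criterion are assumptions for illustration, not ACGI's actual output.

```python
import re
from typing import Dict, Optional

MATLAB_IDENT = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def generate_matlab_params(regulation: str, params: Dict[str, float]) -> str:
    """Return MATLAB source for a function that returns the parameters as a struct."""
    func = "params_" + "".join(c if c.isalnum() else "_" for c in regulation)
    lines = [f"function p = {func}()",
             f"% Auto-generated parameter set for {regulation}"]
    for name, value in params.items():
        if not MATLAB_IDENT.fullmatch(name):  # MATLAB names start with a letter
            raise ValueError(f"invalid variable name: {name!r}")
        lines.append(f"    p.{name} = {value!r};")
    lines.append("end")
    return "\n".join(lines) + "\n"


def find_matching_regulation(new_params: Dict[str, float],
                             coded: Dict[str, Dict[str, float]]) -> Optional[str]:
    """Return an already-coded regulation with the same parameter names, if any."""
    for name, params in coded.items():
        if set(params) == set(new_params):
            return name
    return None


src = generate_matlab_params("New Reg", {"sv_speed_kph": 60, "speed_tol_kph": 1.0})
print(src)
```

Validating names before emitting code keeps a typo in a TextBox from producing a MATLAB file that fails only later, at run time.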

- Parameter Extraction Tab: This tab will allow for the extraction of parameters to be verified.

- Parameter Selection Tab: In this tab, it will be possible to select the parameters extracted and coded in the previous tab on which a filtering action is desired. It will also be possible to define the parameters associated with the selected digital filter, such as the cut-off frequency and the number of poles of the filter.

- Equation Definition Tab: This tab will allow for the definition of the equation used to calculate the TTC. Section 2.1 introduced the general TTC formula, but each regulation provides its own version, which can be selected from the GUI if it matches one already coded, or coded from scratch via an auxiliary interface (Figure 4).

- Temporal Values Selection Tab: Users will be able to choose the values of the parameters necessary to define the start time of the test, the activation time of the FCW (Forward Collision Warning) function, and the activation time of the safety function.

- Regulatory Technical Verifications Tab: This tab will allow for the selection of the technical regulatory verifications to be performed and to associate the relevant limits with each of them.

- Weather Verifications Tab: Users will be able to define the weather verifications imposed by the reference regulations and specify the associated value for each.

- Final Report Modeling Tab: Finally, this tab will allow for the generation of a final graphic report that summarizes the results. It will be possible not only to generate the report including all necessary graphs for analysis but also to model each of them individually by calling auxiliary development interfaces. This allows for the completion of the coding process, enabling a thorough customization of the final report.
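The Parameter Selection Tab lets the user choose a cut-off frequency and a number of poles for the digital filter. As a dependency-free sketch of such a filter, the code below cascades `poles` identical single-pole low-pass sections and runs them forward and then backward, which cancels the phase lag. This is a simpler technique than a Butterworth design (such as MATLAB's `butter` with `filtfilt`), which a real implementation would more likely use. Here the cut-off is only approximate, and the cascade's −3 dB point falls below `fc_hz` as poles are added.

```python
import math
from typing import List, Sequence


def _one_pole(x: Sequence[float], alpha: float) -> List[float]:
    """Single-pole IIR low-pass: y[k] = y[k-1] + alpha * (x[k] - y[k-1])."""
    y = [x[0]]  # start at the first sample to avoid a start-up transient
    for v in x[1:]:
        y.append(y[-1] + alpha * (v - y[-1]))
    return y


def lowpass(x: Sequence[float], fs_hz: float, fc_hz: float, poles: int = 2) -> List[float]:
    """Zero-phase low-pass: `poles` cascaded one-pole sections applied forward,
    then backward, so the phase lag of the two passes cancels out."""
    if not 0 < fc_hz < fs_hz / 2:
        raise ValueError("cut-off must lie between 0 and the Nyquist frequency")
    if poles < 1:
        raise ValueError("need at least one pole")
    dt = 1.0 / fs_hz
    rc = 1.0 / (2.0 * math.pi * fc_hz)  # RC time constant for the cut-off
    alpha = dt / (rc + dt)  # discrete smoothing factor
    y = list(x)
    for _ in range(poles):  # forward pass
        y = _one_pole(y, alpha)
    y.reverse()
    for _ in range(poles):  # backward pass
        y = _one_pole(y, alpha)
    y.reverse()
    return y


# Hypothetical use: smooth a 100 Hz acceleration trace with a 5 Hz cut-off.
raw = [0.0, 0.2, -0.1, 0.3, 0.1, -0.2, 0.0, 0.1]
print([round(v, 3) for v in lowpass(raw, fs_hz=100.0, fc_hz=5.0, poles=2)])
```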

3. Results

4. Discussion

- Graph Event: This graph displays the activation sequence of the FCW audio and visual signals, followed by the AEB function. It clearly shows that the auditory signal is triggered first, followed by the visual signal and then by the AEB activation.

- Time to Collision: This graph shows the evolution of the TTC between the subject vehicle and the target vehicle during the test. The TTC decreases continuously until the safety function activates; from that point, the braking of the SV makes the vehicles begin to move apart. TTC is crucial for assessing the effectiveness of the safety system and the correct execution of the test. The T0 marker, highlighted in green, indicates the start of the functional phase of the test, from which the TTC is defined.

- Yaw Rate of the Vehicle: This graph is used to verify the vehicle's stability during braking. The yaw rate is limited by the regulation and remains within the acceptable limits during the functional phase, as indicated by the green boundary box.

- Axial Alignment Between Vehicles: This graph verifies that the subject vehicle and the target vehicle remain aligned during the test. It confirms that the alignment stays within the limits indicated by the boundary box.

- SV Acceleration: This graph shows how the acceleration varies during braking. The acceleration remains stable until AEB activation, after which it decreases rapidly as the vehicle decelerates.

- Deceleration Requested by the System: This graph shows the deceleration requested by the AEB system; although not specifically regulated, it is important for understanding the system's effectiveness.

- Steering Velocity: The graph shows that the steering velocity remains stable until AEB activation, at which point it increases. The green boundary box confirms that it stays within the acceptable range.

- Relative Distance Between Vehicles: Similar to the TTC, this graph tracks the distance between the vehicles; its continuous decrease shows that the vehicles approached each other during the test.

- Target Vehicle (POV) Speed: This graph tracks the speed of the target vehicle, which must stay within regulatory limits. The speed approaches the upper limit of the acceptable range, with some critical deviations at certain points. Tuning the POV speed controller could improve test execution.

- Subject Vehicle (SV) Speed: This graph shows the speed of the subject vehicle throughout the test. This parameter is essential for test evaluation and is required by all regulations, albeit with tolerances that vary with the protocol applied. In this specific case, the test execution shows a problem: the SV speed falls below the minimum limit of the boundary box. This indicates a test setup error that produced a speed outside the regulatory limits. Unlike the POV speed, whose deviations stayed within acceptable margins, this deviation is too large to be corrected by tuning the speed-control PID and instead requires the set speed itself to be corrected. Therefore, the test cannot be considered valid and must be excluded from the final evaluation.
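Most of the verifications above reduce to checking that a signal stays inside a boundary box during the functional phase (from T0 to the activation of the safety function), as in the SV speed case that invalidates the test. A minimal sketch of such a check is given below, assuming the time window and limits are already known for the regulation at hand. The example values are hypothetical and are not taken from the test discussed here.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class BoxResult:
    passed: bool
    first_violation_s: Optional[float]  # time of the first out-of-range sample
    worst_excess: float  # largest distance outside [lo, hi]; 0.0 if passed


def check_boundary_box(t: Sequence[float], y: Sequence[float],
                       t_start: float, t_end: float,
                       lo: float, hi: float) -> BoxResult:
    """Check that y stays within [lo, hi] for all samples with t_start <= t <= t_end."""
    first = None
    worst = 0.0
    for ti, yi in zip(t, y):
        if not (t_start <= ti <= t_end):
            continue  # only the functional phase is evaluated
        excess = max(lo - yi, yi - hi, 0.0)
        if excess > 0.0:
            if first is None:
                first = ti
            worst = max(worst, excess)
    return BoxResult(first is None, first, worst)


# Hypothetical SV speed trace (km/h) against a 50 +/- 1 km/h box over 0-3 s.
res = check_boundary_box([0.0, 1.0, 2.0, 3.0], [50.2, 49.5, 48.6, 48.8],
                         t_start=0.0, t_end=3.0, lo=49.0, hi=51.0)
print(res.passed, res.first_violation_s)  # prints "False 2.0"
```

A run would then be kept only if every regulated signal passes its box, which matches the exclusion of the test described above.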

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, J.; Zhang, L.; Huang, Y.; Zhao, J. Safety of autonomous vehicle. J. Adv. Transp. 2020, 2020, 8867757.

- Lomartire, S.; Giannoccaro, N.I.; Toma, A. A smart procedure for data analysis relative to a vehicle ‘Coast-down’ test. Int. J. Veh. Syst. Model. Test. 2021, 10, 122–140.

- Colosio, M.; de Andrade, A.; dos Santos, J. Overview of Automotive Component Failures; SAE Technical Paper; Society of Automotive Engineers: Warrendale, PA, USA, 2000.

- Favarò, F.M.; Nader, N.; Eurich, S.O.; Tripp, M.; Varadaraju, N. Examining accident reports involving autonomous vehicles in California. PLoS ONE 2017, 12, e0184952.

- Suarez-Bertoa, R.; Valverde, V.; Clairotte, M.; Pavlovic, J.; Giechaskiel, B.; Franco, V.; Kregar, Z.; Astorga, C. On-road emissions of passenger cars beyond the boundary conditions of the real-driving emissions test. Environ. Res. 2019, 176, 108572.

- Mandpe, S.; Kadlaskar, S.; Degen, W.; Keppeler, S. On Road Testing of Advanced Common Rail Diesel Vehicles with Biodiesel from the Jatropha Curcas Plant; SAE Technical Paper; Society of Automotive Engineers: Warrendale, PA, USA, 2005.

- Ling, J.; Li, Y.; Li, J.; Yan, Y. Research on production vehicle evaluation method of China VI OBD for light-duty vehicles. In Proceedings of the 4th International Conference on Material Science and Technology, Wuhan, China, 22–23 January 2020; Volume 774, pp. 1–8.

- Chen, Y.; Sun, Y.; Ding, N.; Chung, W.K.; Qian, H.; Xu, G.; Xu, Y. A real-time vehicle safety system. In Proceedings of the 2012 IEEE/SICE International Symposium on System Integration, Fukuoka, Japan, 16–18 December 2012; pp. 957–962.

- Kim, J.J.H.; Lee, J.H.; Choi, J.H.; Jeon, W.J. AEB Simulation Method Based on Vehicle Test Data for Accident Analysis of ADAS Vehicles. Int. J. Automot. Technol. 2024, 25, 261–277.

- European NEW CAR ASSESSMENT PROGRAMME (Euro NCAP) TEST PROTOCOL—AEB Car-to-Car Systems. Available online: https://www.euroncap.com/media/79864/euro-ncap-aeb-c2c-test-protocol-v43.pdf (accessed on 8 September 2024).

- UN Regulation No 152—Uniform Provisions Concerning the Approval of Motor Vehicles with Regard to the Advanced Emergency Braking System (AEBS) for M1 and N1 Vehicles. Available online: http://data.europa.eu/eli/reg/2020/1597/oj (accessed on 1 September 2024).

- Department of Transportation, National Highway Traffic Safety Administration. Available online: https://www.nhtsa.gov/sites/nhtsa.gov/files/2024-04/final-rule-automatic-emergency-braking-systems-light-vehicles_web-version.pdf (accessed on 20 June 2024).

- Korean Vehicle Framework Regulation (KMVSS), KMVSS Regulations to Require Vehicle Category M1/N1 to be Equipped with AEBS (2023). Available online: https://www.safetywissen.com/object/B04/B04.1bb738181qddmw5yymi37488w50eux63778789488/safetywissen?prev=%2Fnews%2FSAFETYNEWS%2F (accessed on 10 October 2024).

- The MathWorks Inc. Optimization Toolbox; Version 9.4 (R2022b); The MathWorks Inc.: Natick, MA, USA, 2024; Available online: https://www.mathworks.com (accessed on 3 May 2024).

- Zhang, S.; Lei, B.; Chen, S.; Sha, L. Research on subjective evaluation method of automatic emergency braking (AEB) for passenger car. E3S Web Conf. 2021, 268, 01037.

- Dollorenzo, M.; Dodde, V.; Giannoccaro, N.I.; Palermo, D. Simulation and Post-Processing for Advanced Driver Assistance System (ADAS). Machines 2022, 10, 867.

- AB Dynamics. Available online: https://www.abdynamics.com/en/products/tracktesting/adas-targets/synchro (accessed on 3 May 2024).

- Albrecht, H.; Barickman, F.; Schnelle, S. Advanced Test Tools for ADAS and ADS (Report No. DOT HS 813 083); National Highway Traffic Safety Administration: Washington, DC, USA, 2021.

- Euro NCAP AEB Car-to-Car. Available online: https://www.euroncap.com/en/car-safety/the-ratings-explained/safety-assist/aeb-car-to-car/ (accessed on 23 August 2024).

- Automobile Soft. Available online: https://automobilesoft.net/mycar/automobile-safety/active-safety/aeb/ (accessed on 29 August 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Licci, P.; Giannoccaro, N.I.; Palermo, D.; Dollorenzo, M.; Lomartire, S.; Dodde, V. Development of Tools for the Automatic Processing of Advanced Driver Assistance System Test Data. Machines 2024, 12, 896. https://doi.org/10.3390/machines12120896