Thermal Error Transfer Prediction Modeling of Machine Tool Spindle with Self-Attention Mechanism-Based Feature Fusion

Abstract

1. Introduction

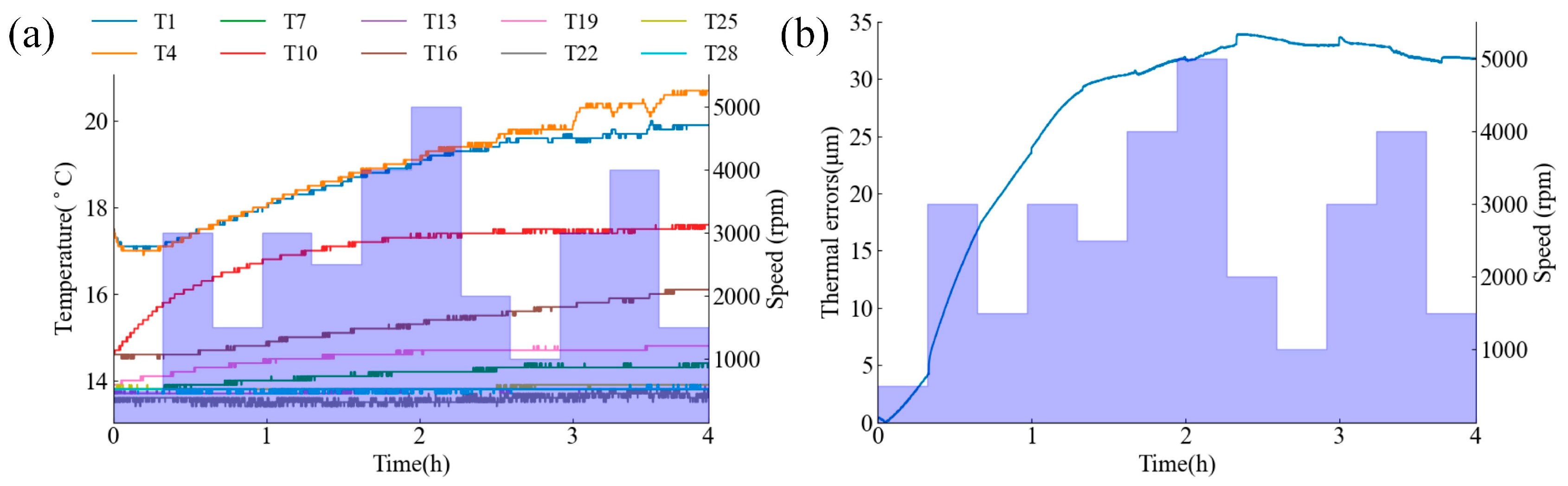

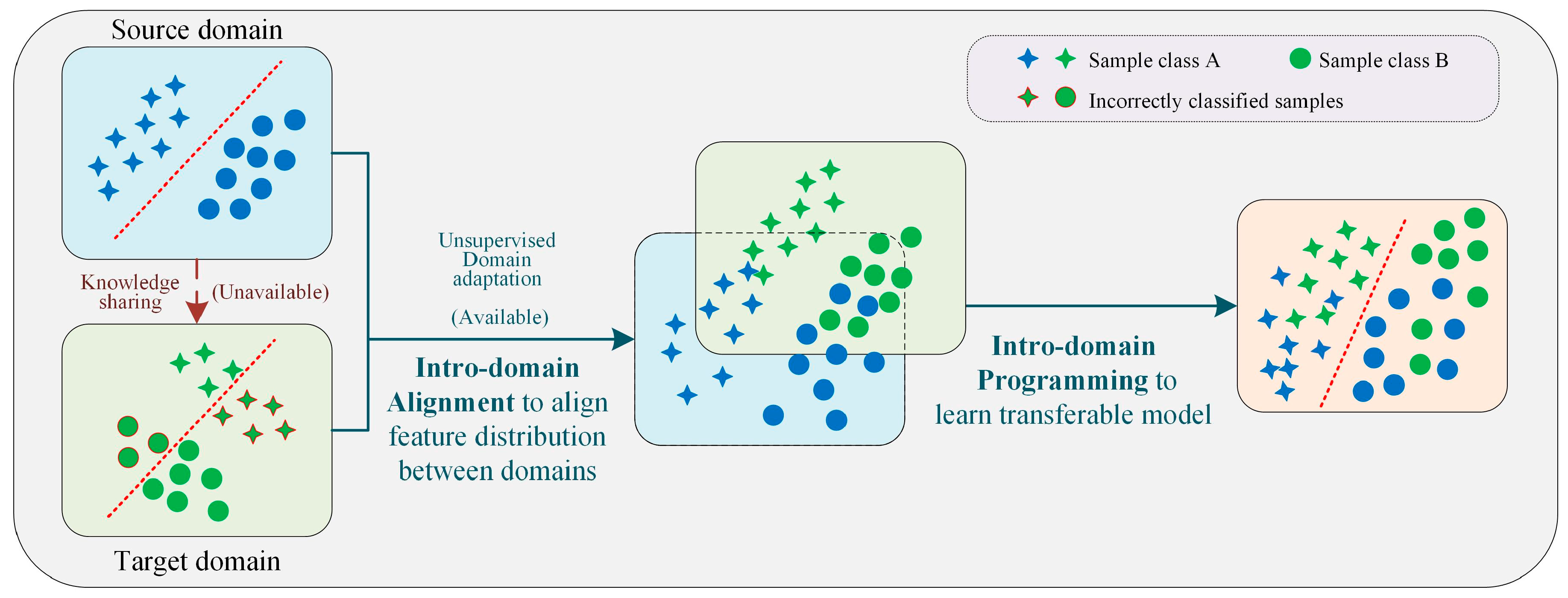

2. Experimental Data of Spindle Thermal Errors

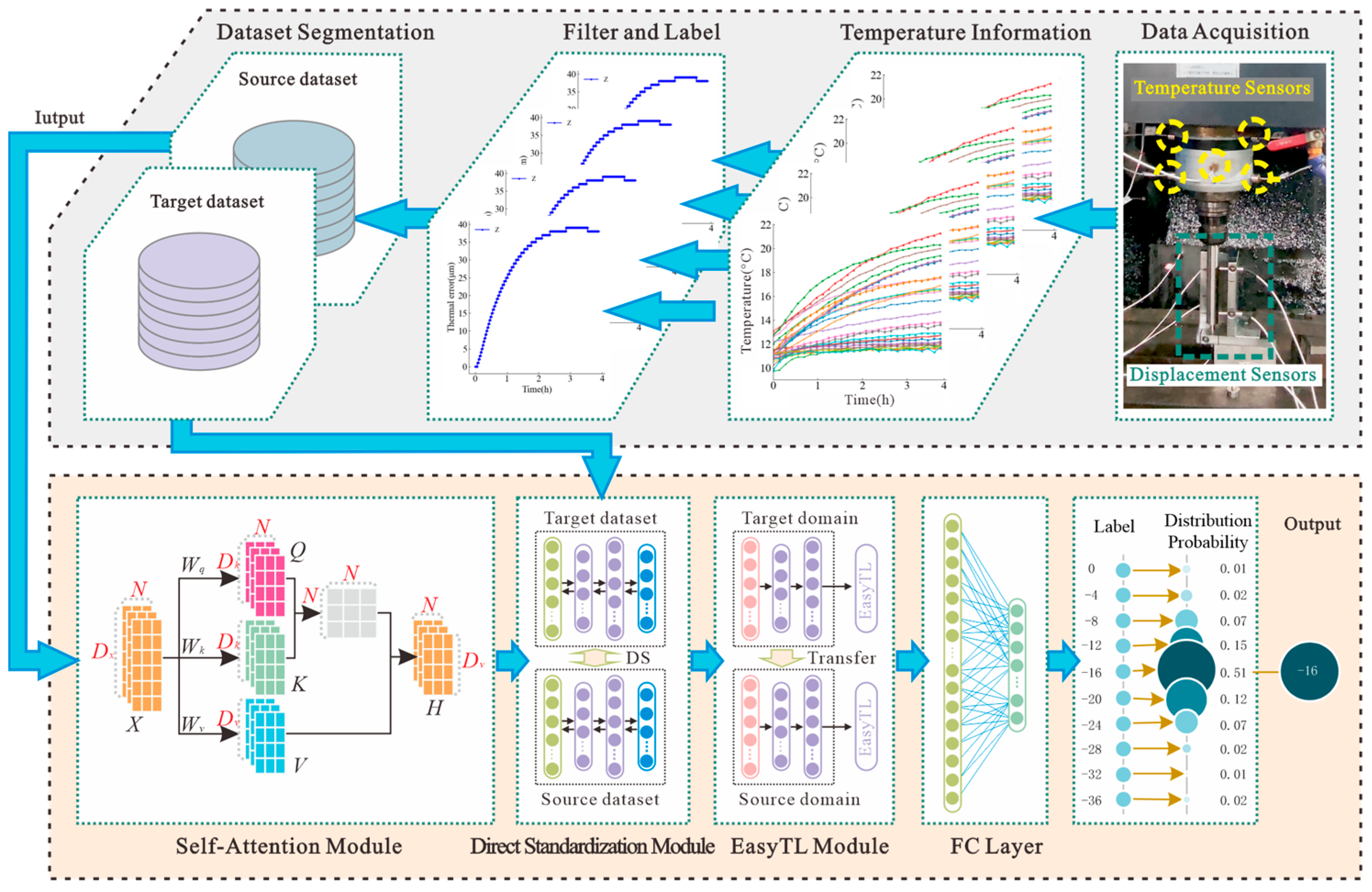

3. SA-DS-EasyTL Error Prediction Model

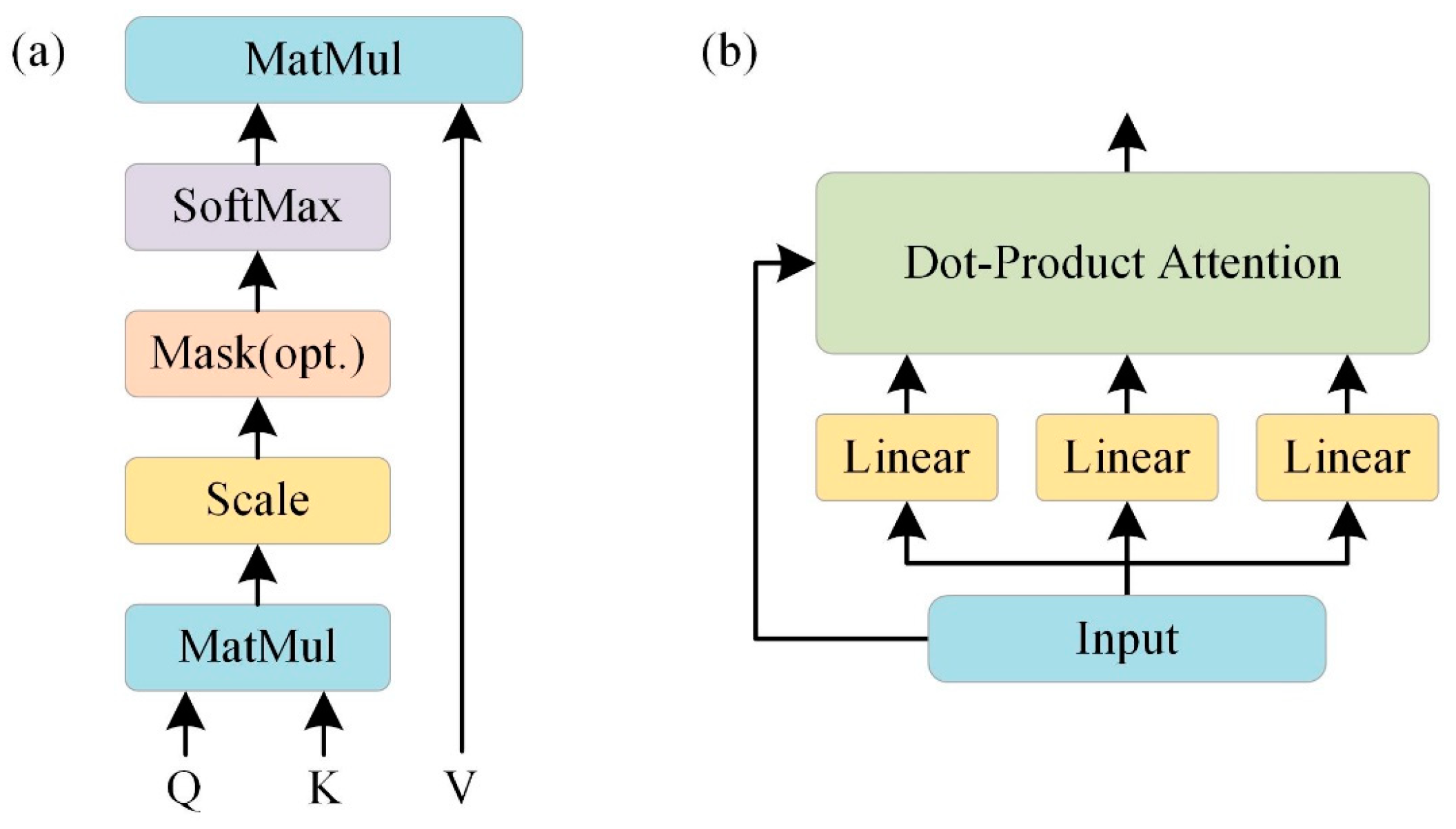

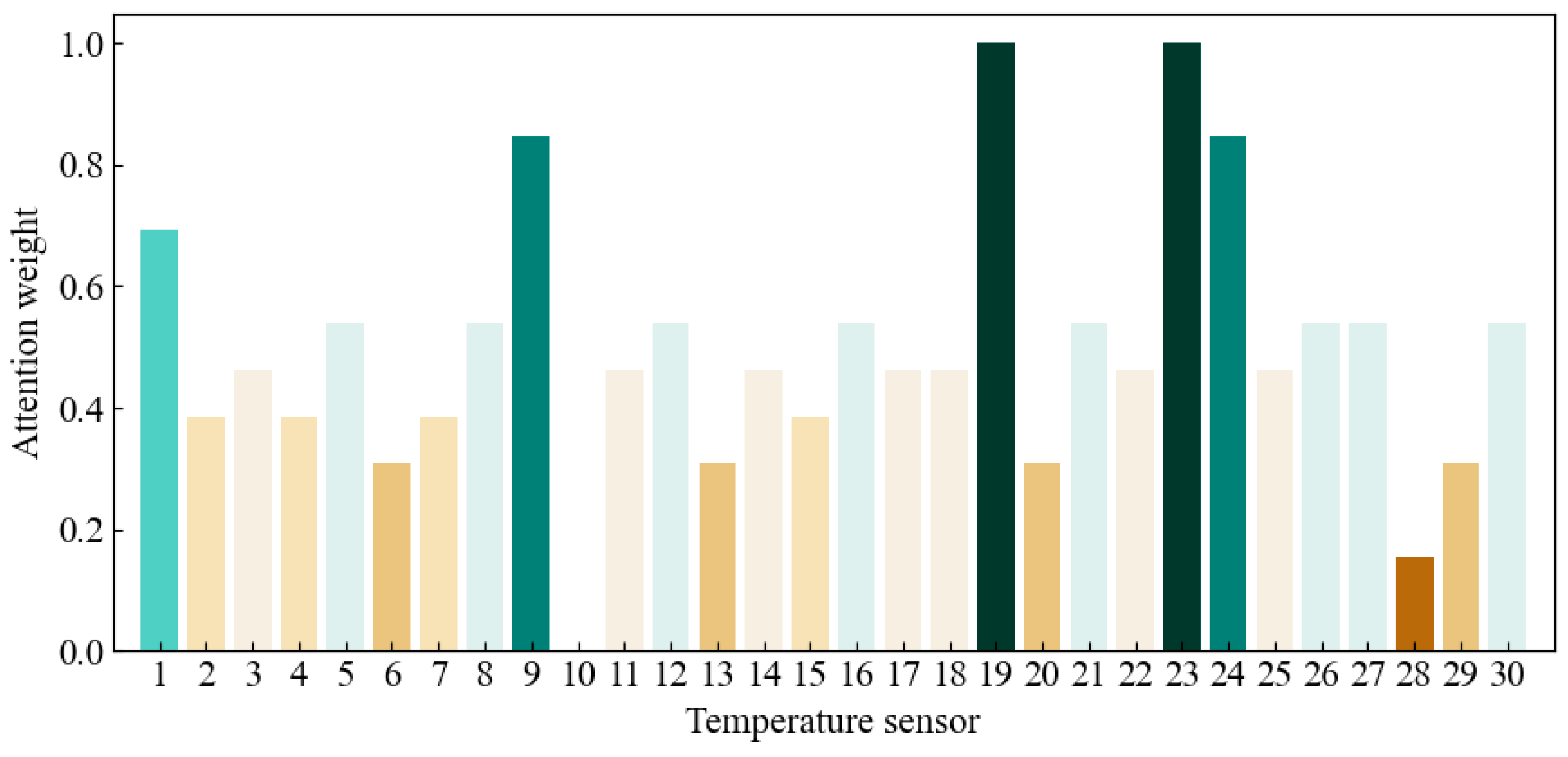

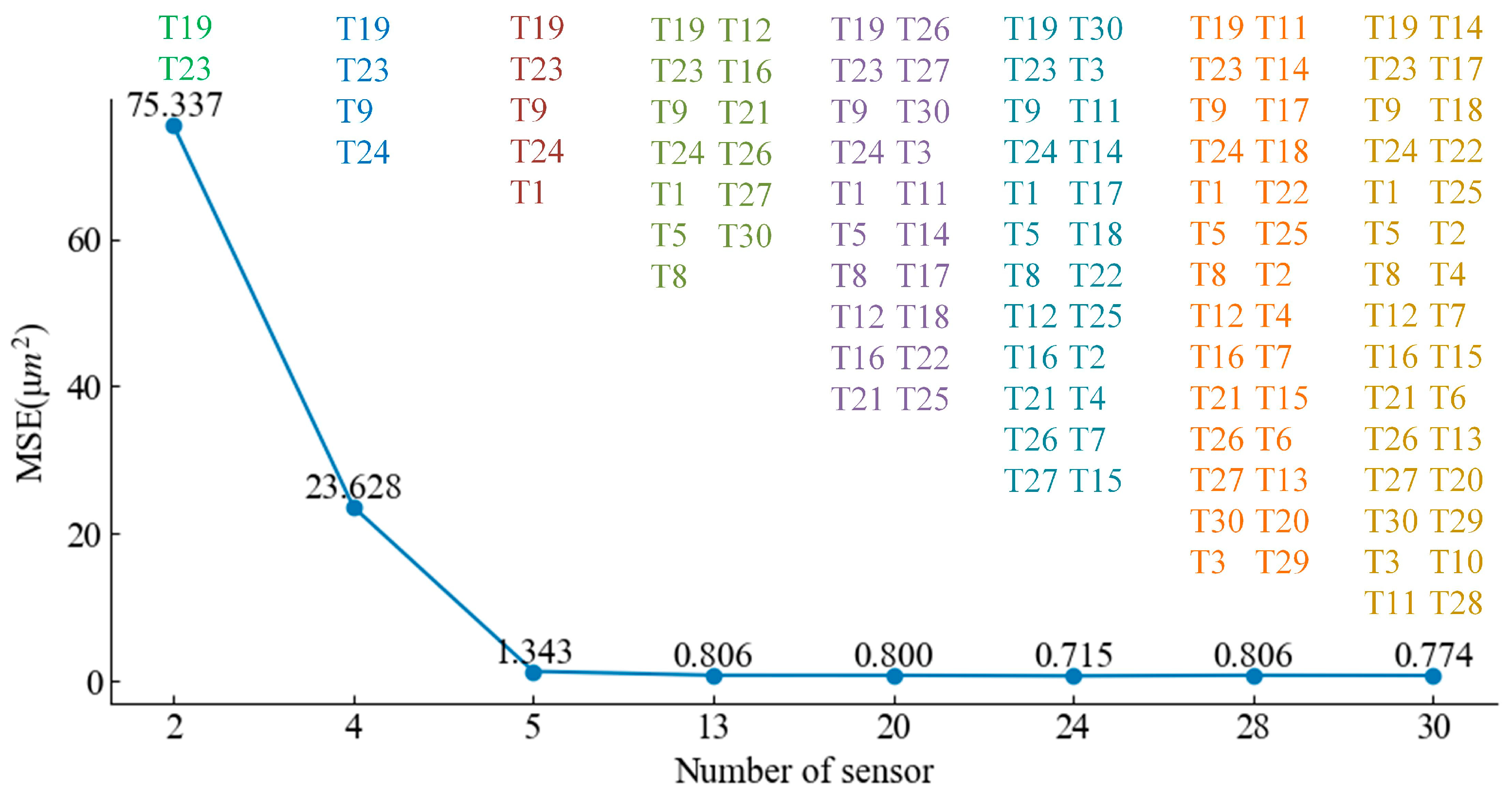

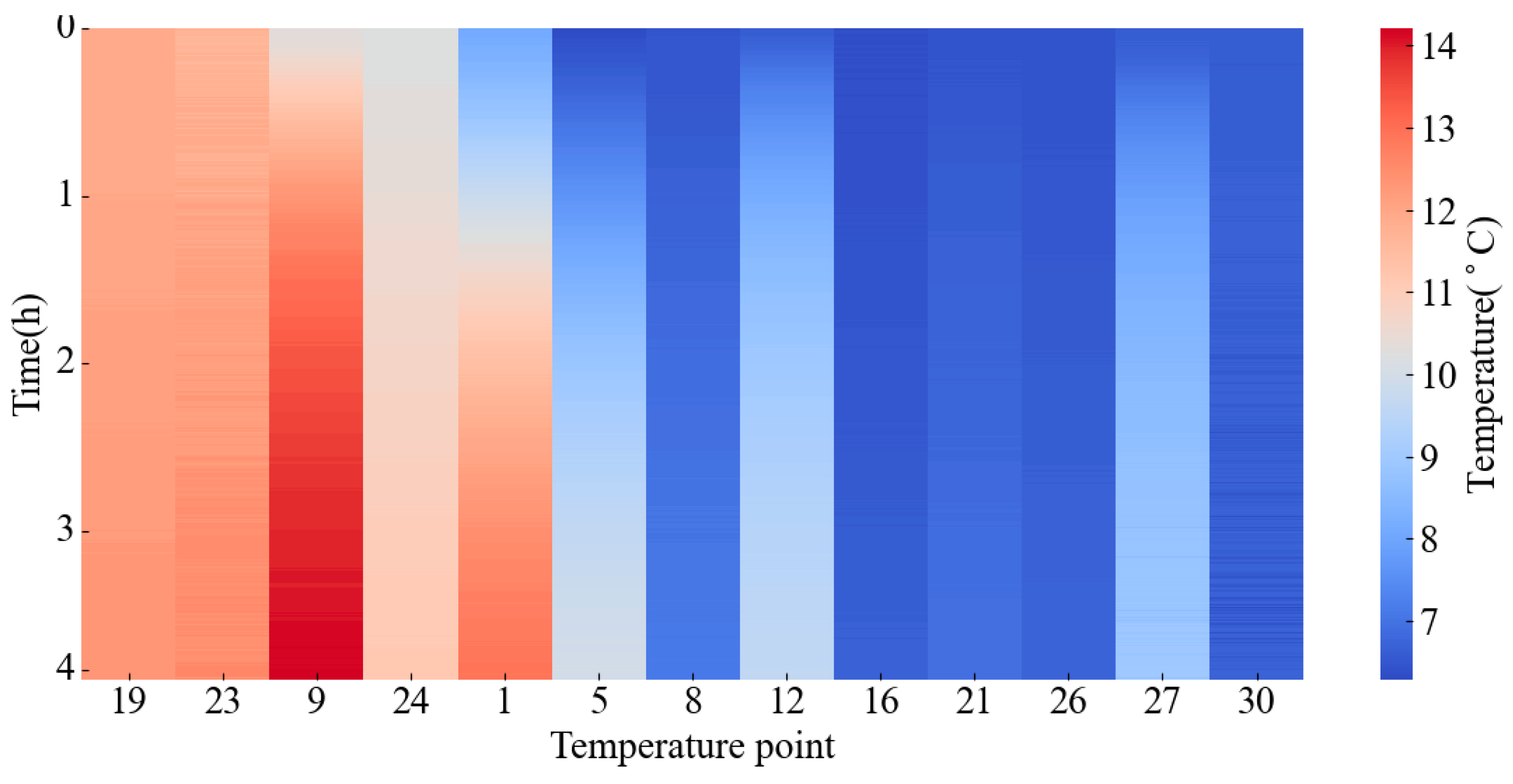

3.1. Temperature Feature Fusion

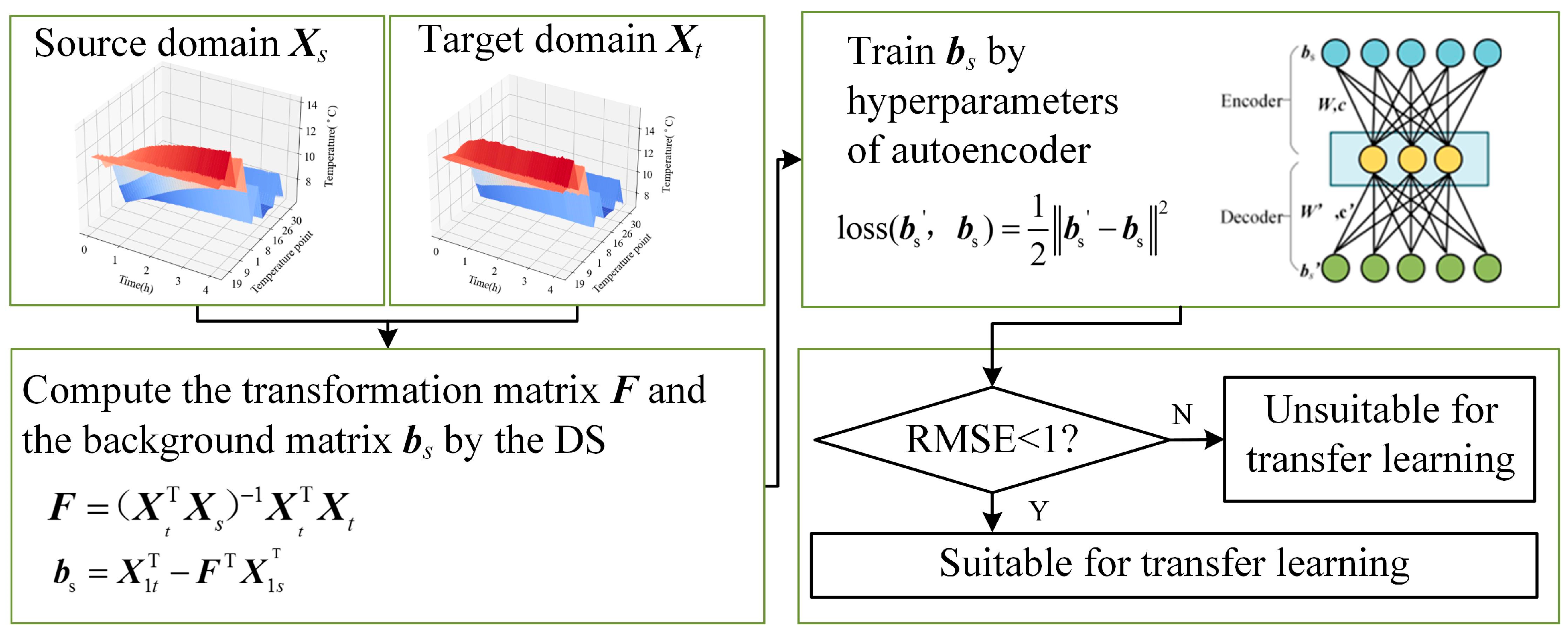
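As a rough illustration of the kind of fusion this section's title refers to, the following is a minimal sketch of scaled dot-product self-attention over a set of temperature feature vectors. It assumes the simplest form, with queries, keys, and values all equal to the inputs and no learned projection matrices; the function names and the sample readings are hypothetical and are not taken from the paper.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens.

    tokens: list of equal-length feature vectors (one per sensor, assumed).
    Returns (fused, weights), where fused[i] is the attention-weighted
    mix of all tokens as seen from token i, and weights[i] sums to 1.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    fused, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in tokens]
        row = softmax(scores)
        weights.append(row)
        # Weighted sum of the value vectors (here, the tokens themselves).
        fused.append([sum(w * value[i] for w, value in zip(row, tokens))
                      for i in range(dim)])
    return fused, weights


if __name__ == "__main__":
    # Made-up, normalized readings for three hypothetical sensors.
    readings = [[0.2, 0.4], [0.1, 0.9], [0.3, 0.5]]
    fused, weights = self_attention(readings)
    for row in weights:
        print([round(w, 3) for w in row])
```

In a trained model, learned projections would normally precede the dot products; this sketch leaves them out to keep the weighting step visible.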

3.2. Spatial Correction with Direct Standardization

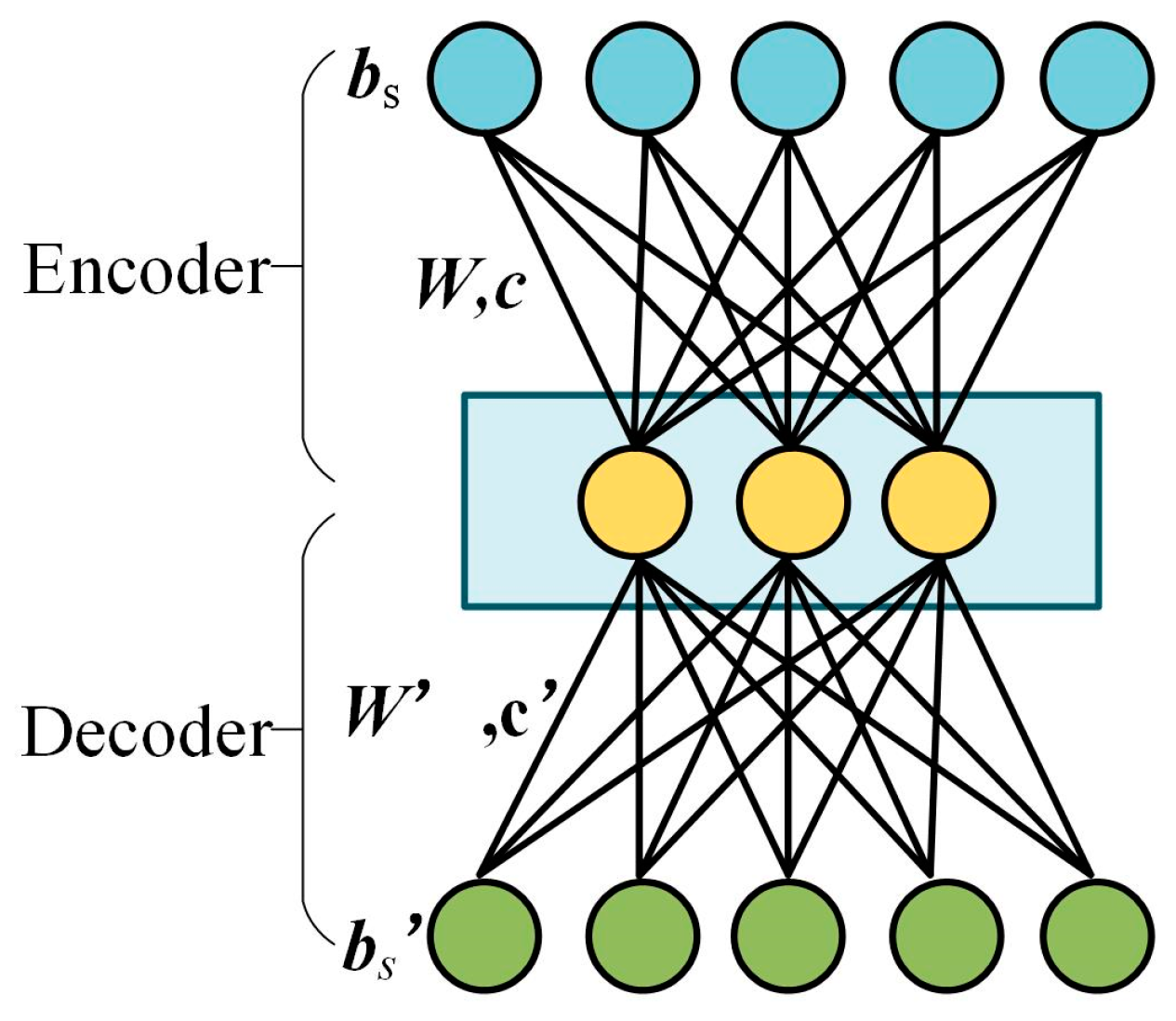
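For orientation, direct standardization (DS) in its general textbook form can be written as below. The symbols are assumptions chosen for illustration and are not the paper's notation: $X_m \in \mathbb{R}^{n \times p}$ holds $n$ paired samples of $p$ features in the reference space, $X_s \in \mathbb{R}^{n \times p}$ holds the same samples in the space to be corrected, and $x_s$ is a new row vector from the uncorrected space.

$$
F = X_s^{+} X_m, \qquad \hat{x}_m = x_s F
$$

Here $X_s^{+}$ denotes the Moore-Penrose pseudoinverse, so $F$ is the least-squares solution of $X_s F \approx X_m$. Which dataset takes the role of $X_m$ and which of $X_s$ depends on the transfer direction chosen.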

3.3. Transfer Learning with EasyTL

4. Validation of the Transfer Model

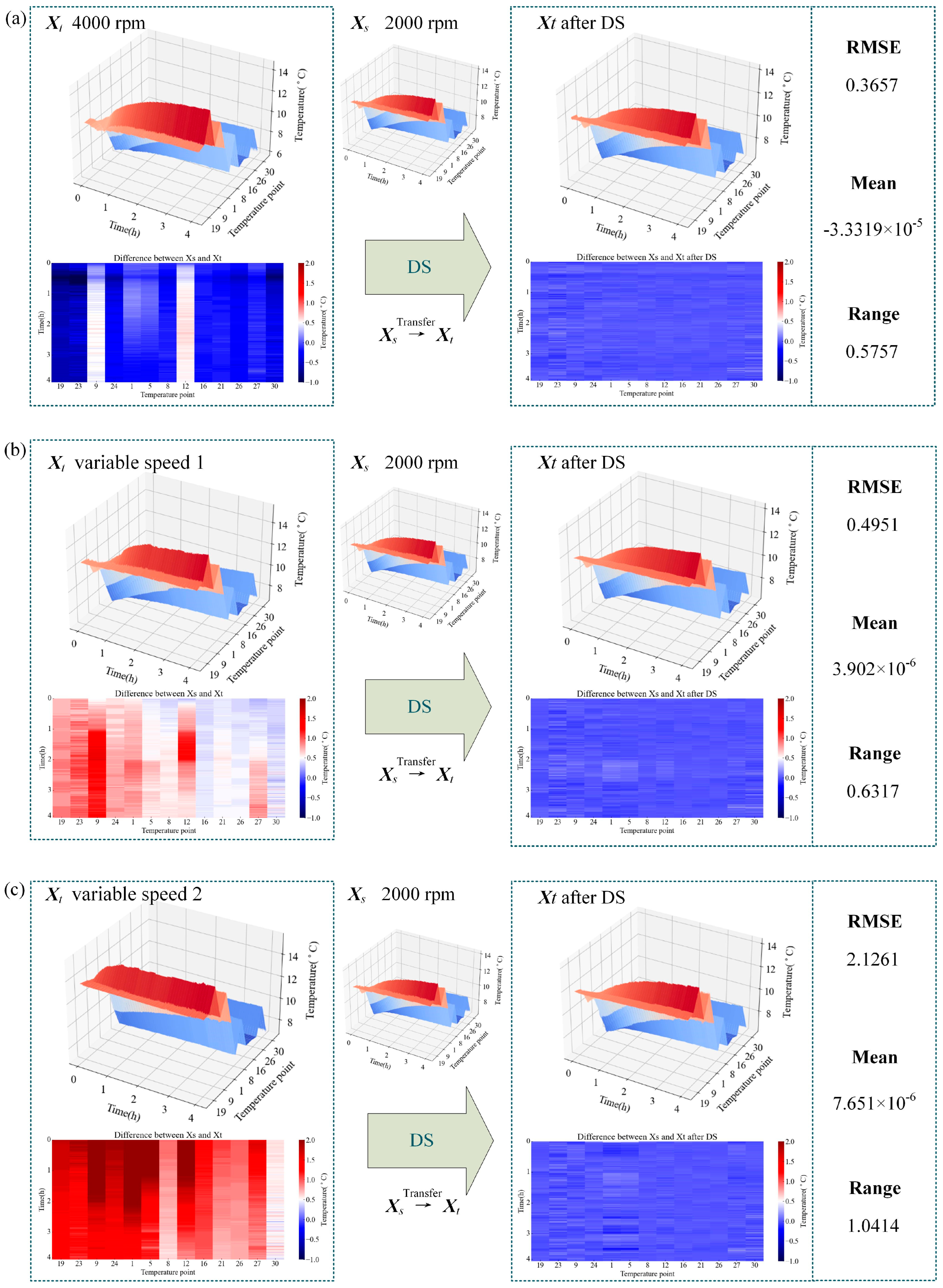

4.1. DS Correction Process

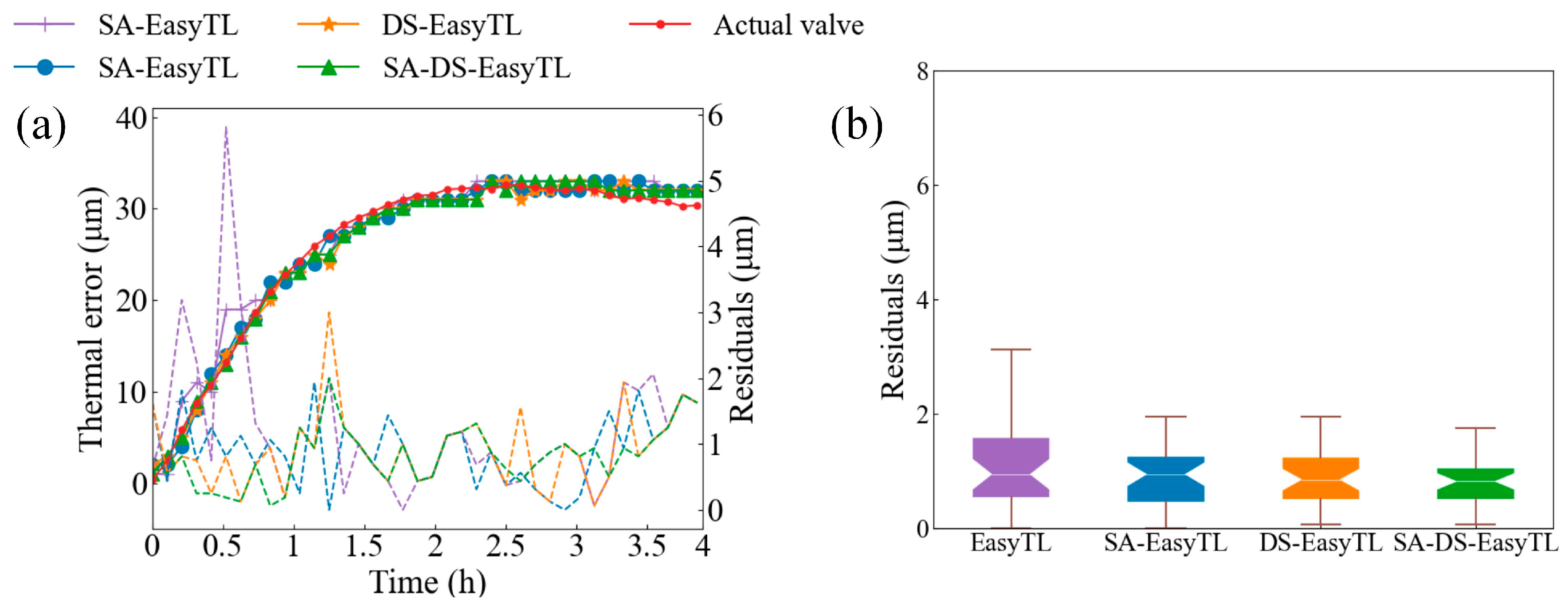

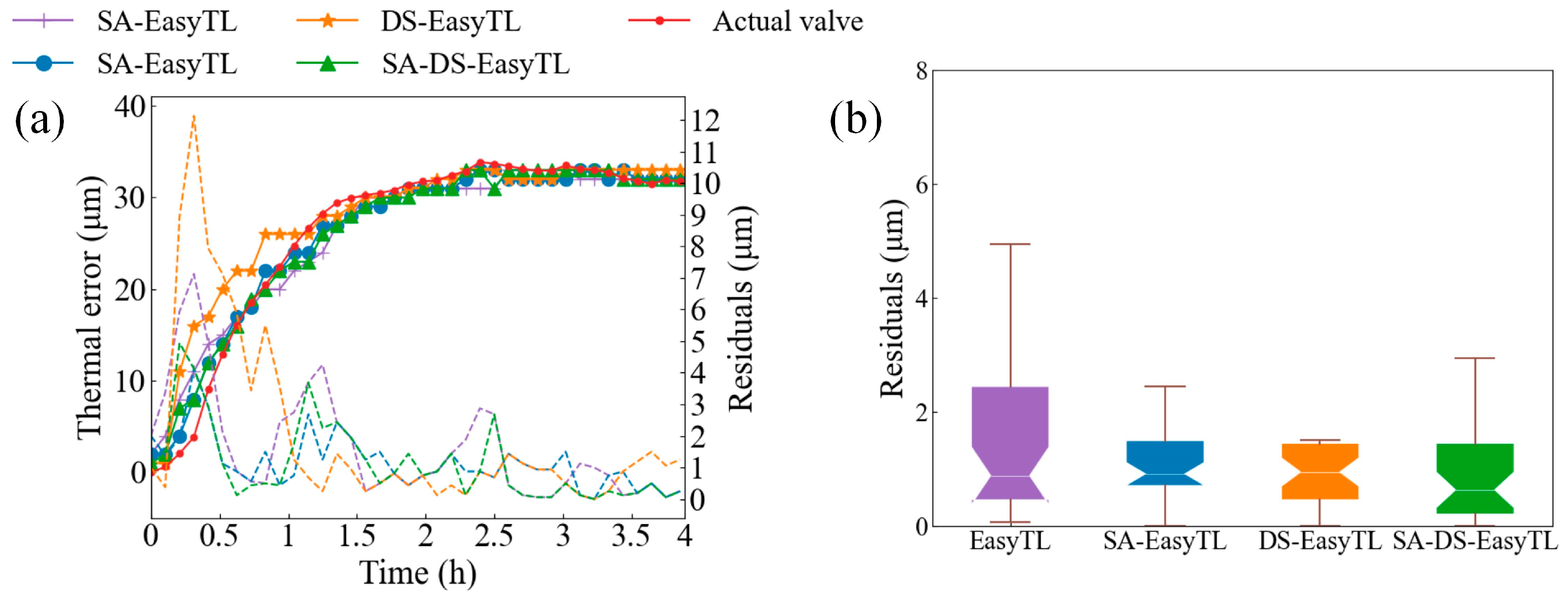

4.2. Validation of the Proposed Method

4.3. Validation of the Transfer Feasibility

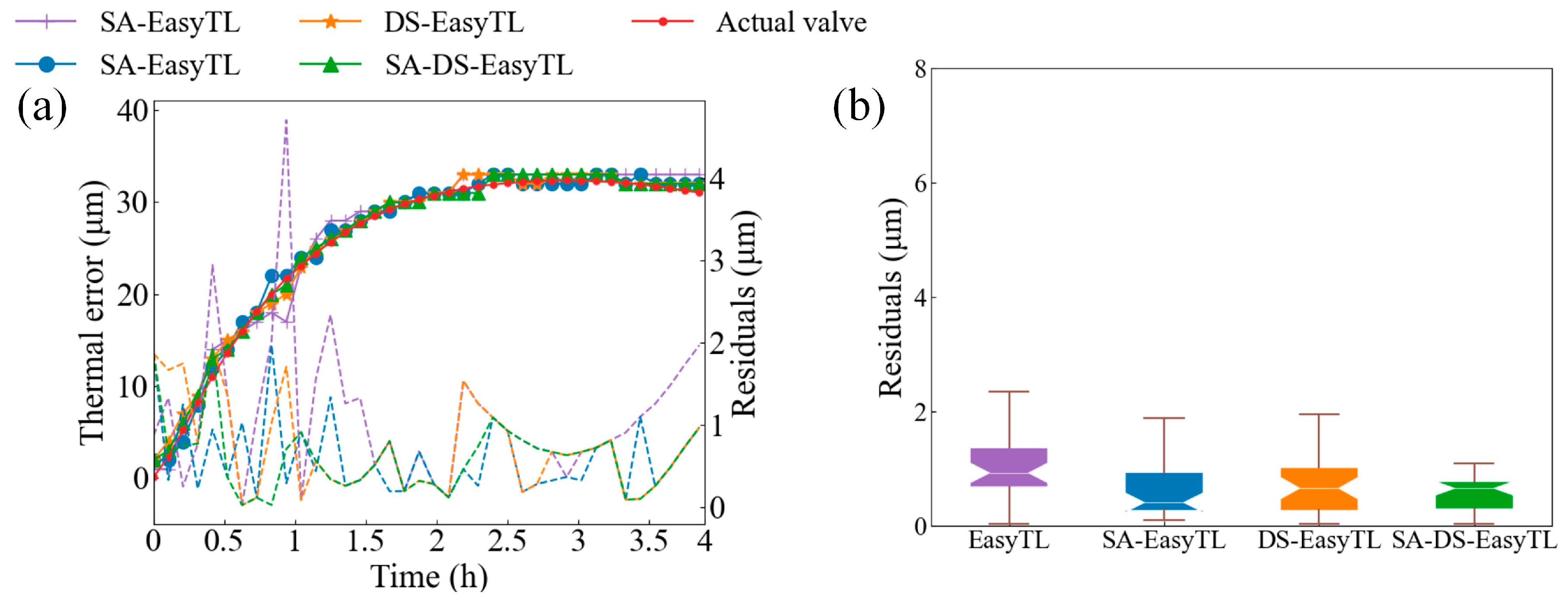

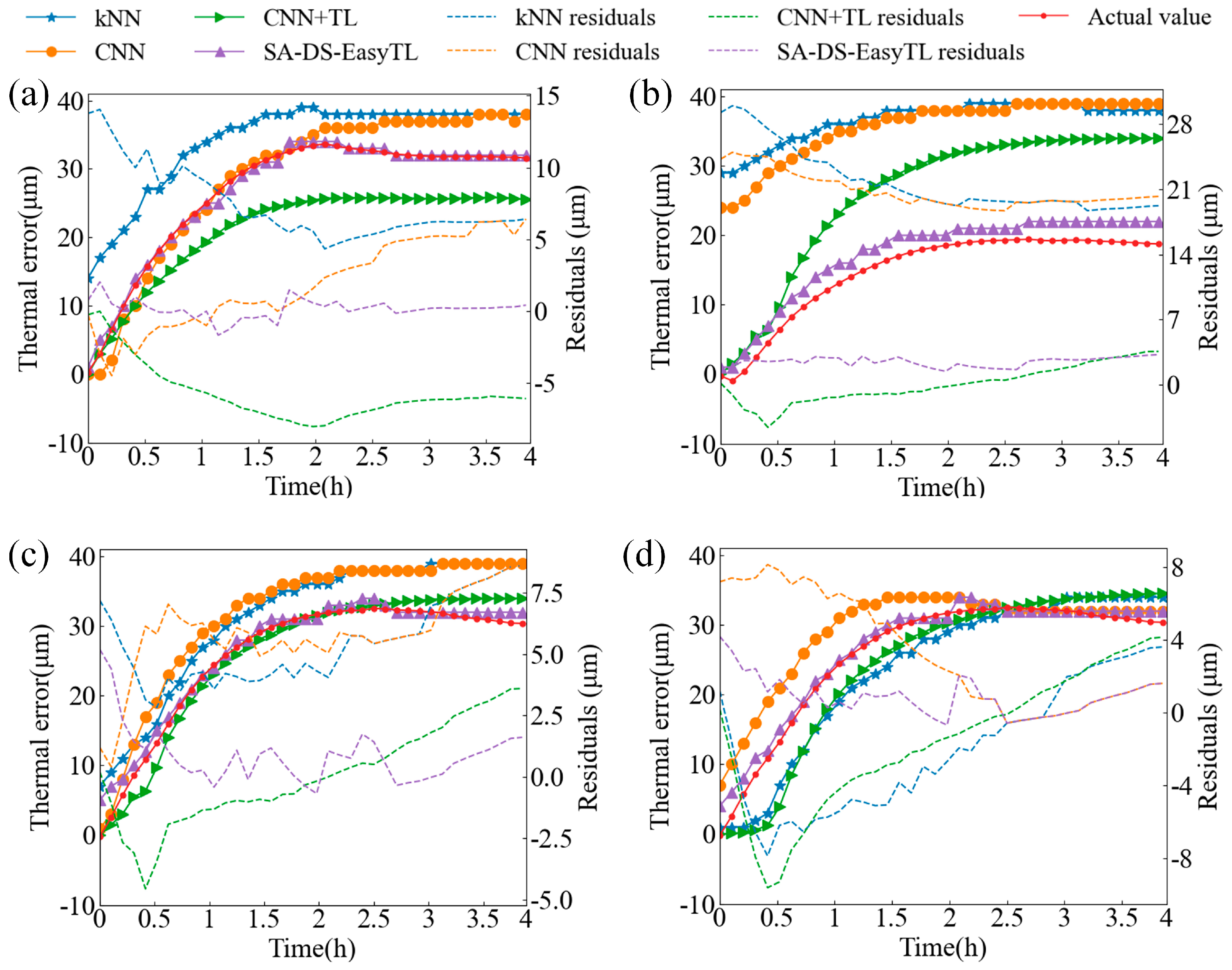

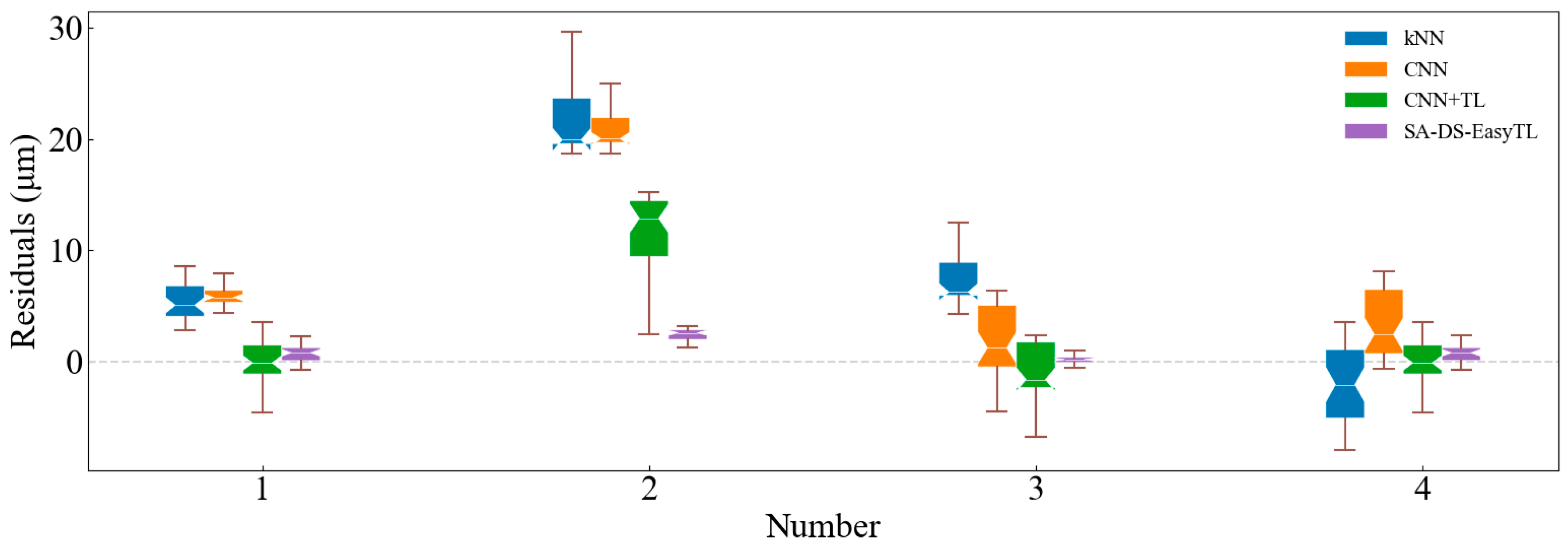

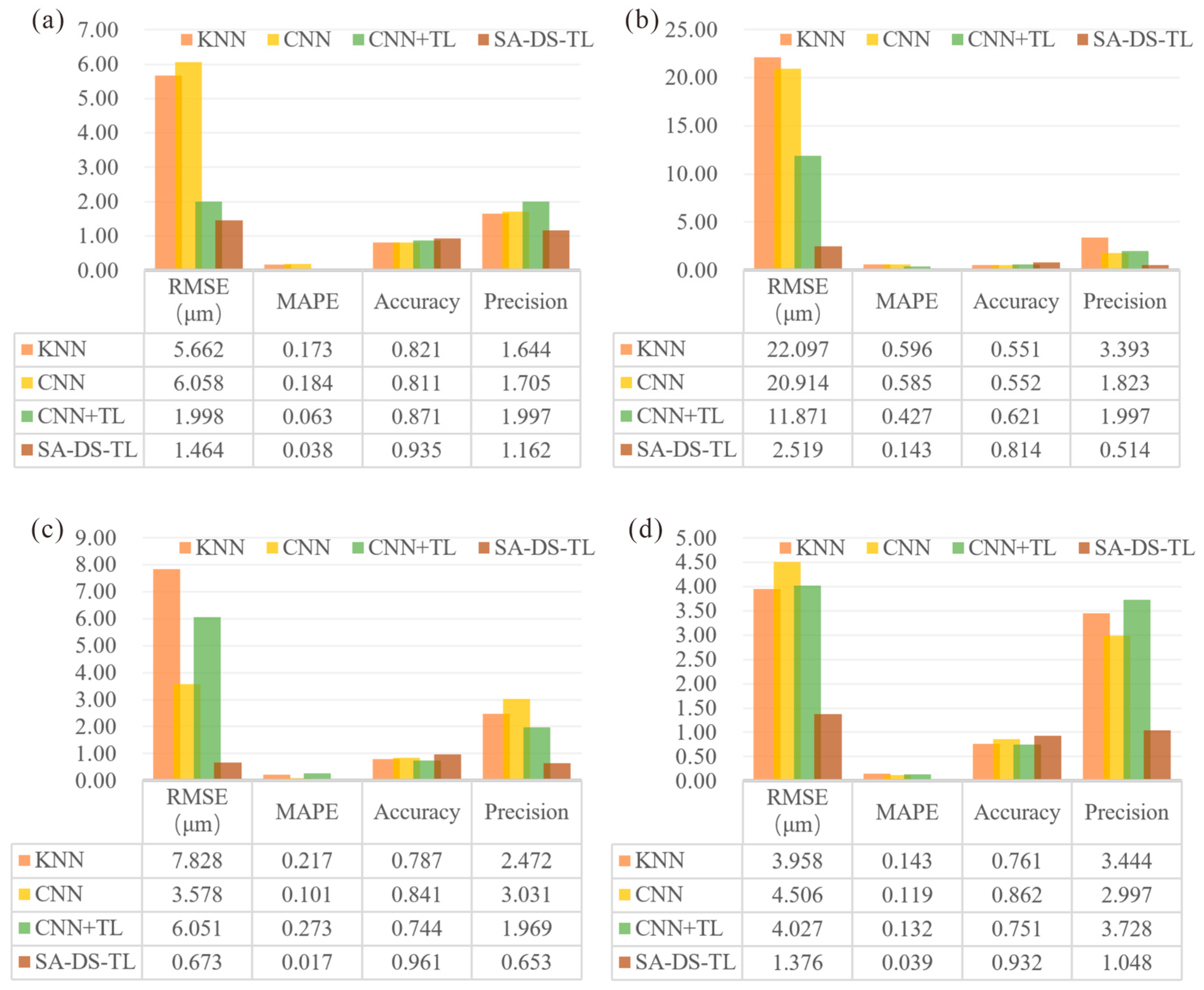

5. Robust Validation of Transfer Model

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Number | Dataset | Speed (rpm) | Working Condition |
|---|---|---|---|
| 1 | Training dataset | 2000 | Cold machine |
| | Test dataset | 4000 | Cold machine |
| 2 | Training dataset | 2000 | Cold machine |
| | Test dataset | 4000 | Not cold machine |
| 3 | Training dataset | 2000 | Cold machine |
| | Test dataset | Variable speed 1 | Cold machine |
| 4 | Training dataset | Variable speed 1 | Cold machine |
| | Test dataset | 4000 | Cold machine |

| Model | KNN | CNN | CNN+TL | SA-DS-EasyTL |
|---|---|---|---|---|
| Time (s) | 1.885 | 131.932 | 68.773 | 10.335 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, Y.; Fu, G.; Mu, S.; Lu, C.; Wang, X.; Wang, T. Thermal Error Transfer Prediction Modeling of Machine Tool Spindle with Self-Attention Mechanism-Based Feature Fusion. Machines 2024, 12, 728. https://doi.org/10.3390/machines12100728
