A Control Architecture for Developing Reactive Hybrid Remotely Operated Underwater Vehicles

Abstract

1. Introduction

2. Materials and Methods

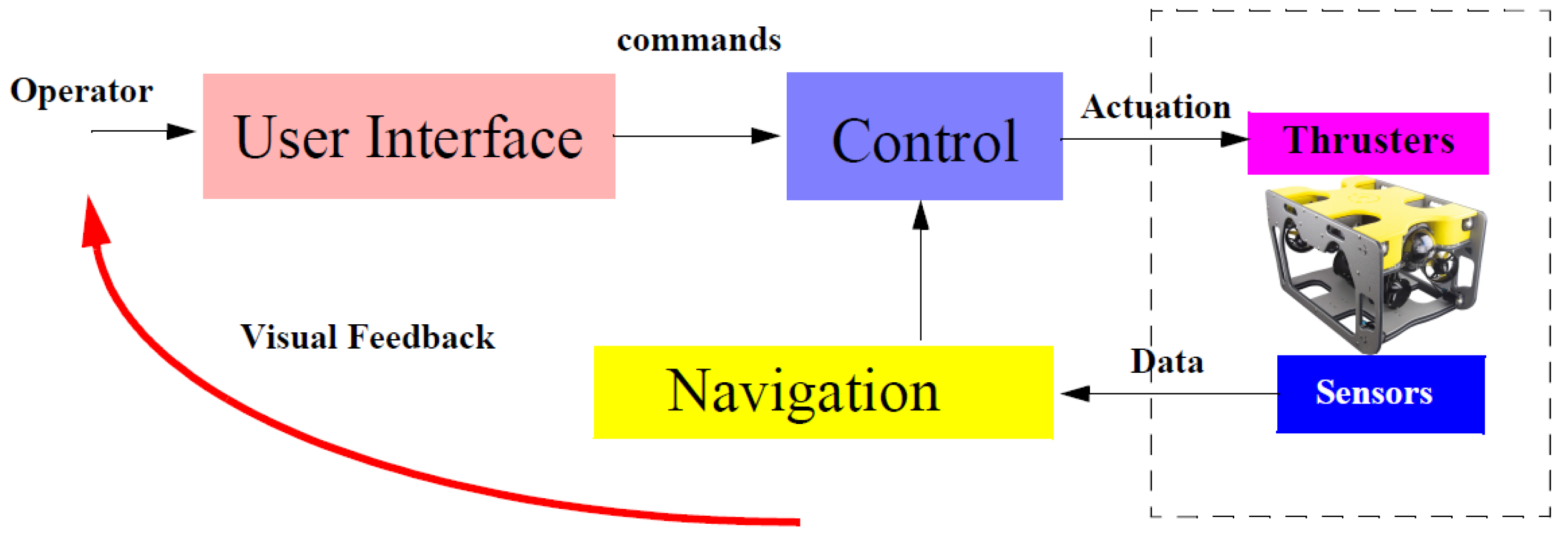

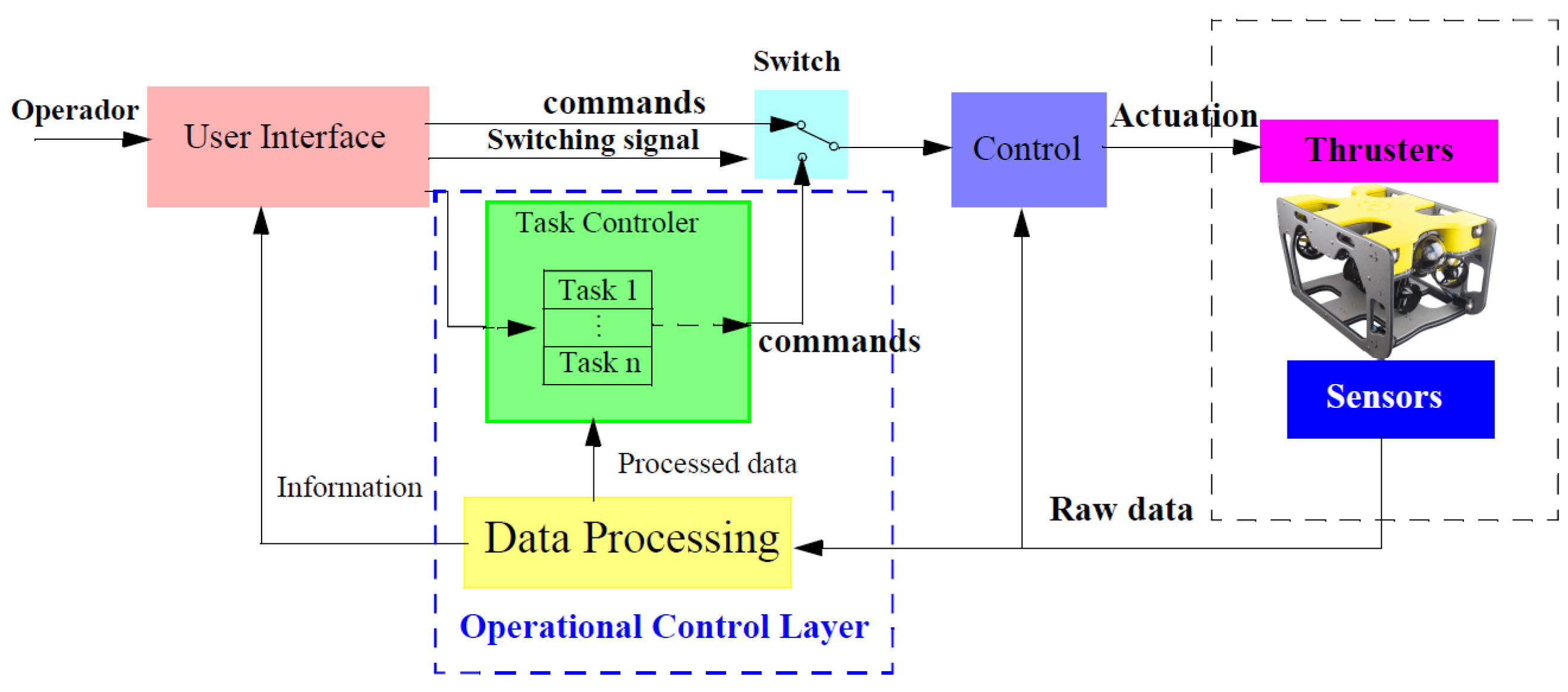

2.1. Transforming an ROV into a Reactive Hybrid ROV

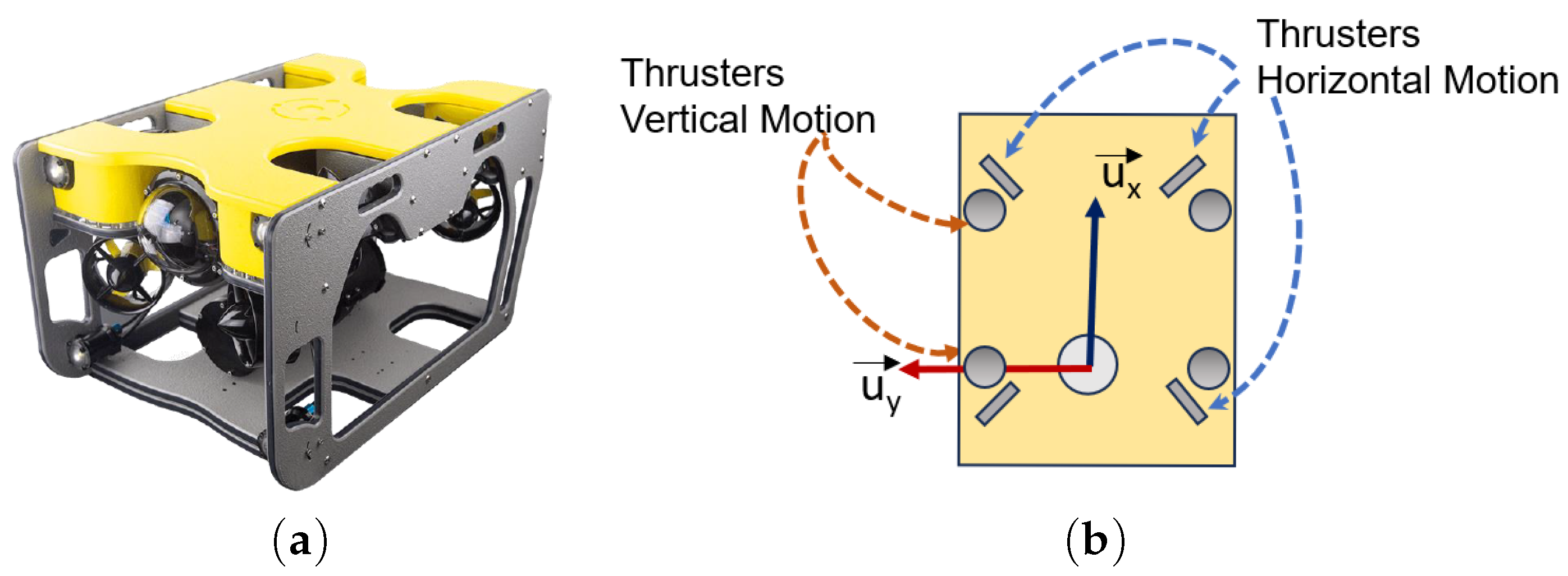

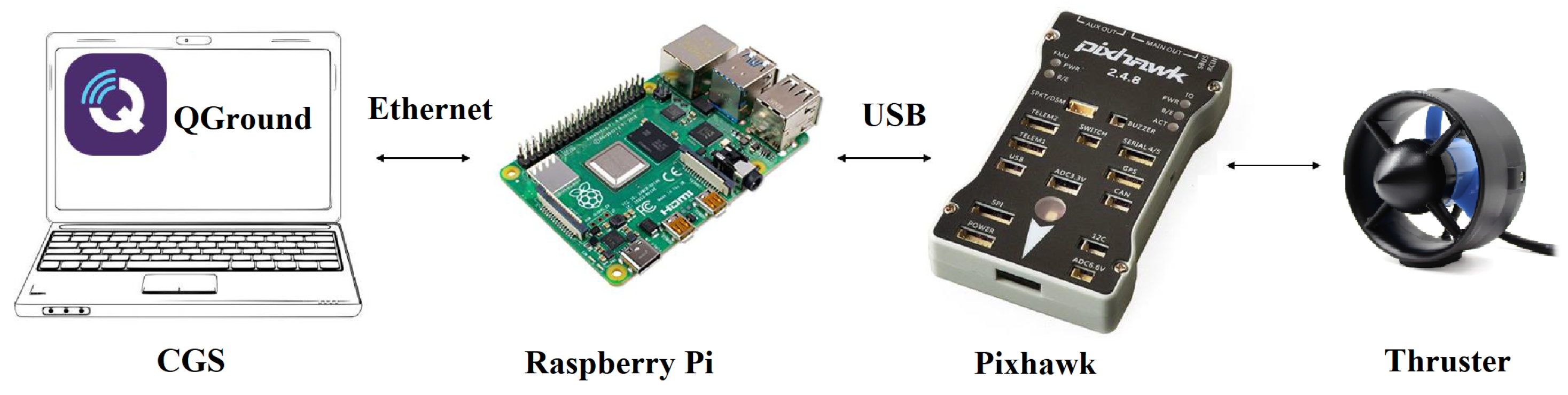

2.1.1. Sibiu PRO: A Small-Scale Commercial ROV

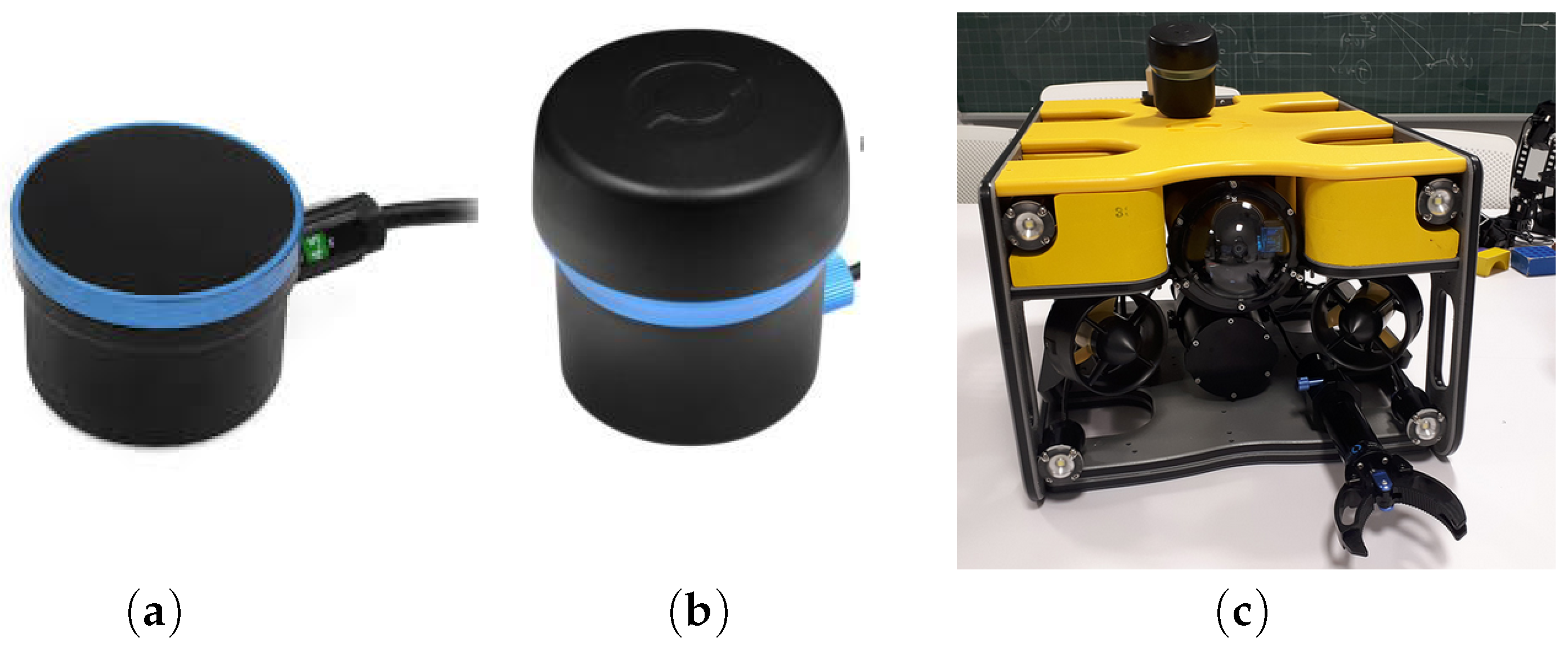

2.1.2. From an ROV to an HROV

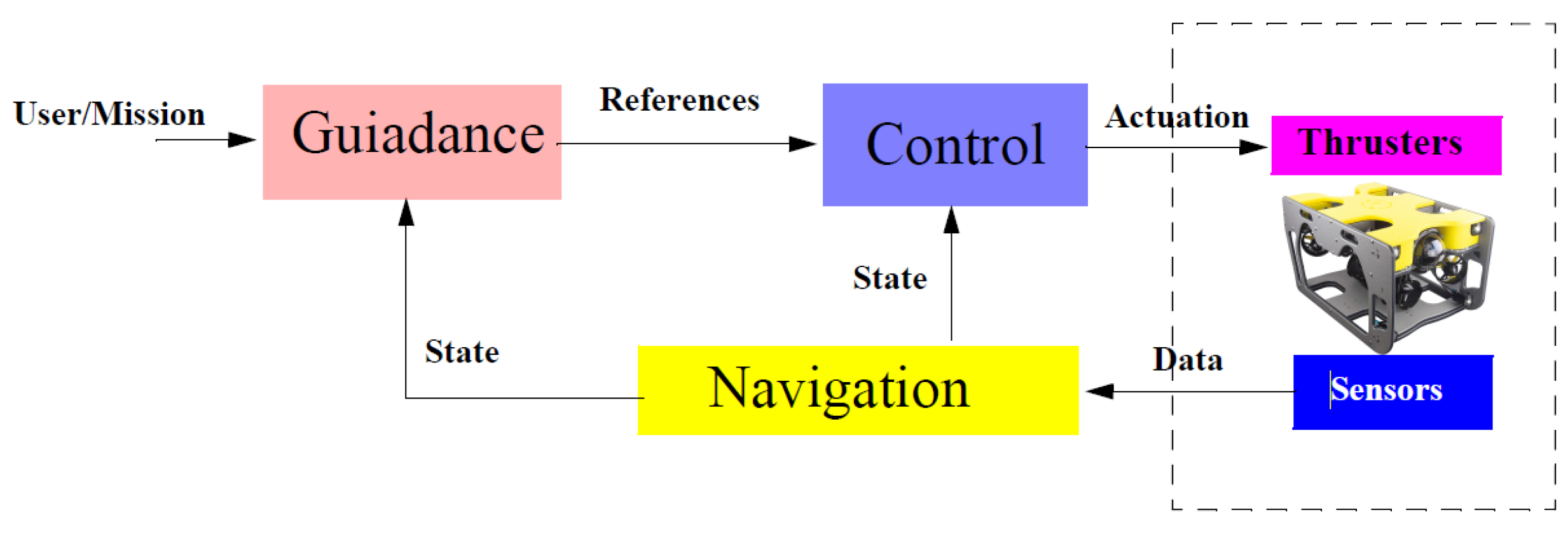

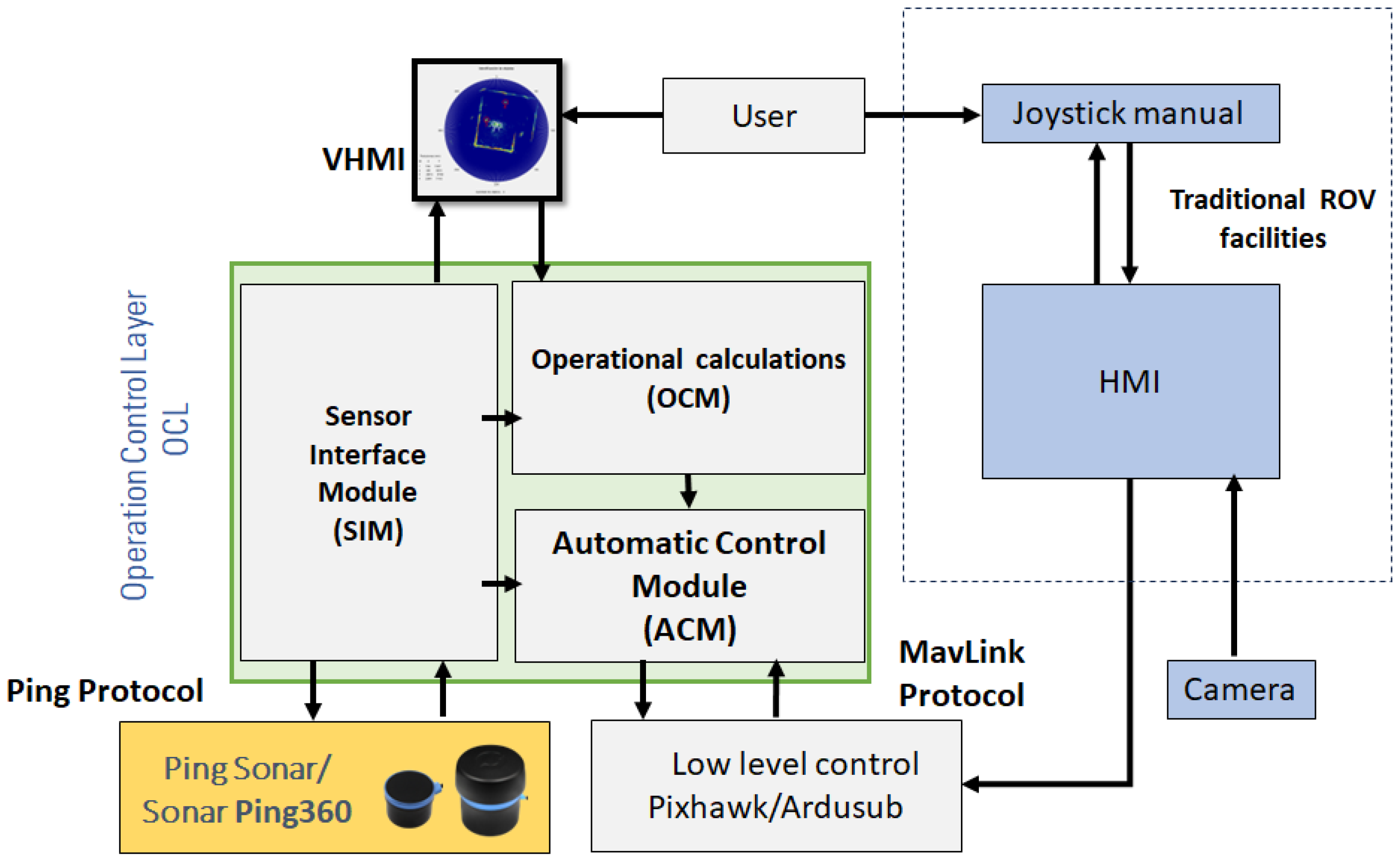

2.2. HROV Control Architecture: The Operational Control Layer

2.3. Sensor Interface Module

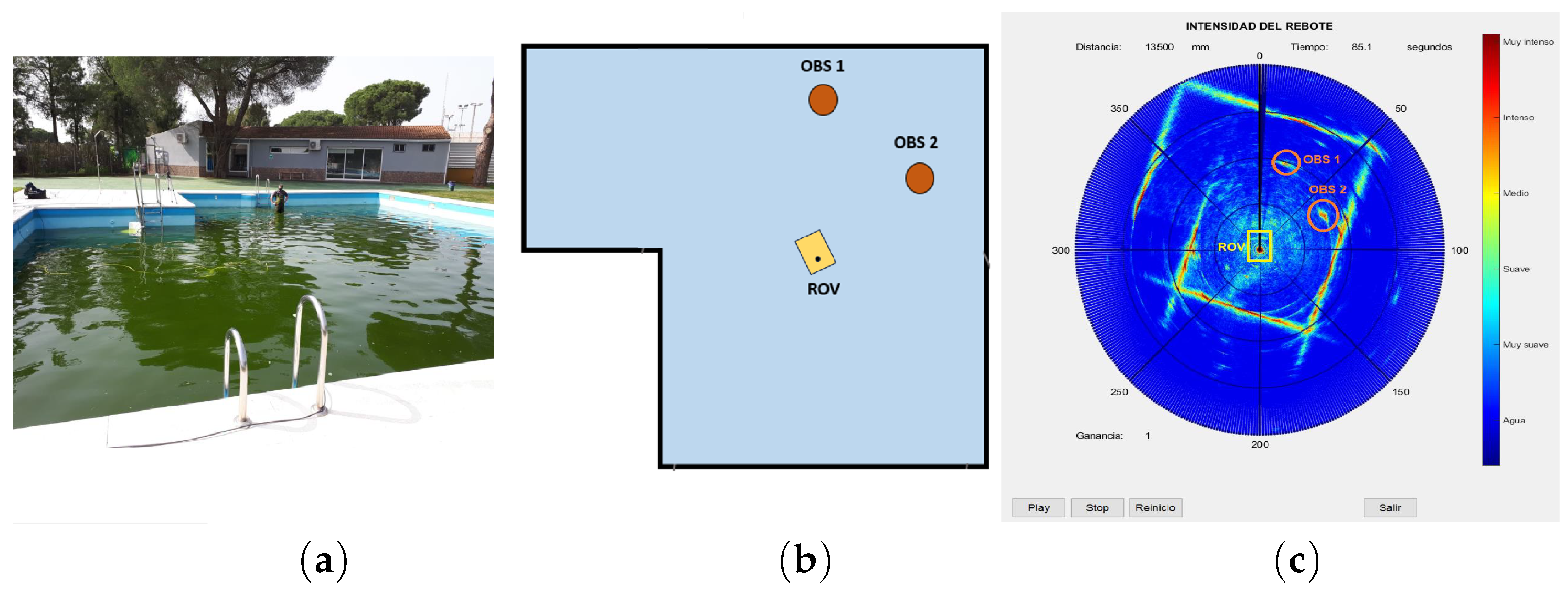

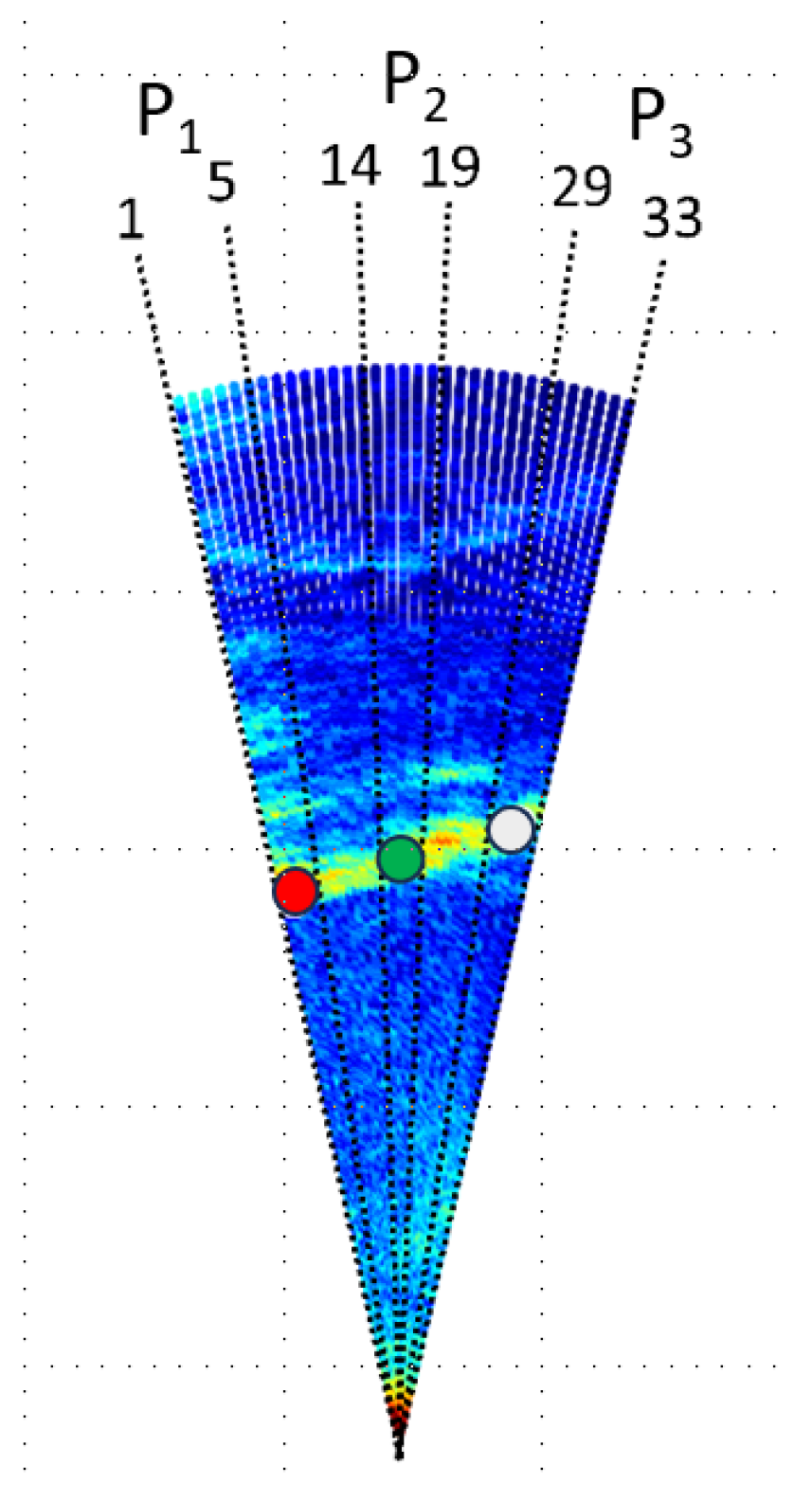

2.3.1. Object Detection

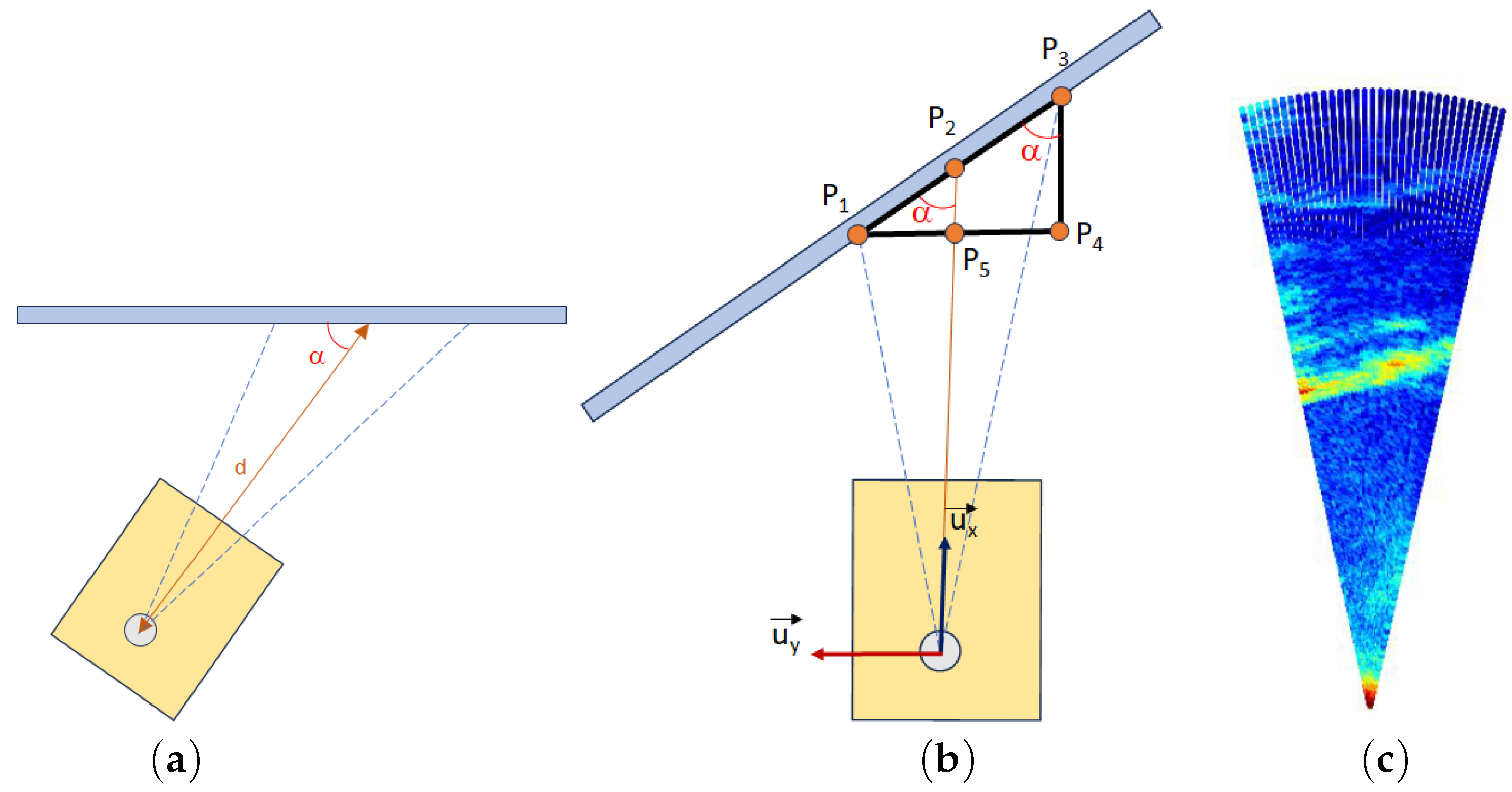

2.3.2. Estimating Orientation and Distance to a Wall
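
A line fit is one common way to estimate a wall's distance and relative orientation from a scanning sonar. The sketch below is an illustrative assumption, not necessarily the procedure used in this work. It takes hypothetical (range, bearing) echoes that are assumed to be already segmented as belonging to a single planar wall, converts them to vehicle-frame points (x forward, y to the left), and fits a line by total least squares (2-D principal component analysis). It then reports the perpendicular distance to the wall and the bearing of the wall normal. The function names `polar_to_xy` and `estimate_wall` are hypothetical.

```python
import math
from typing import Iterable, Tuple


def polar_to_xy(range_m: float, bearing_rad: float) -> Tuple[float, float]:
    """Convert one sonar return to vehicle-frame coordinates (x forward, y to the left)."""
    return range_m * math.cos(bearing_rad), range_m * math.sin(bearing_rad)


def estimate_wall(returns: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fit a line to wall echoes and return (distance_m, normal_bearing_rad).

    returns: (range_m, bearing_rad) pairs assumed to lie on one planar wall.
    distance_m: perpendicular distance from the vehicle origin to the fitted line.
    normal_bearing_rad: angle from the forward axis to the wall normal pointing
    toward the wall (0 means the vehicle faces the wall squarely).
    """
    pts = [polar_to_xy(r, b) for r, b in returns]
    n = len(pts)
    if n < 2:
        raise ValueError("at least two returns are needed to fit a line")
    # Centroid of the echoes; the fitted line passes through it.
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments of the point cloud.
    sxx = sum((x - cx) ** 2 for x, _ in pts)
    syy = sum((y - cy) ** 2 for _, y in pts)
    sxy = sum((x - cx) * (y - cy) for x, y in pts)
    # Direction of the principal axis (total least squares line fit).
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    nx, ny = -math.sin(theta), math.cos(theta)  # unit normal to the fitted line
    dist = nx * cx + ny * cy
    if dist < 0.0:
        # Flip the normal so it points from the vehicle toward the wall.
        nx, ny, dist = -nx, -ny, -dist
    return dist, math.atan2(ny, nx)
```

The returned angle is zero when the vehicle faces the wall squarely, so it could serve directly as an orientation error. Total least squares is used rather than regressing y on x because it treats both coordinates symmetrically and stays well defined when the wall is perpendicular to the forward axis.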

2.4. Automatic Control Module

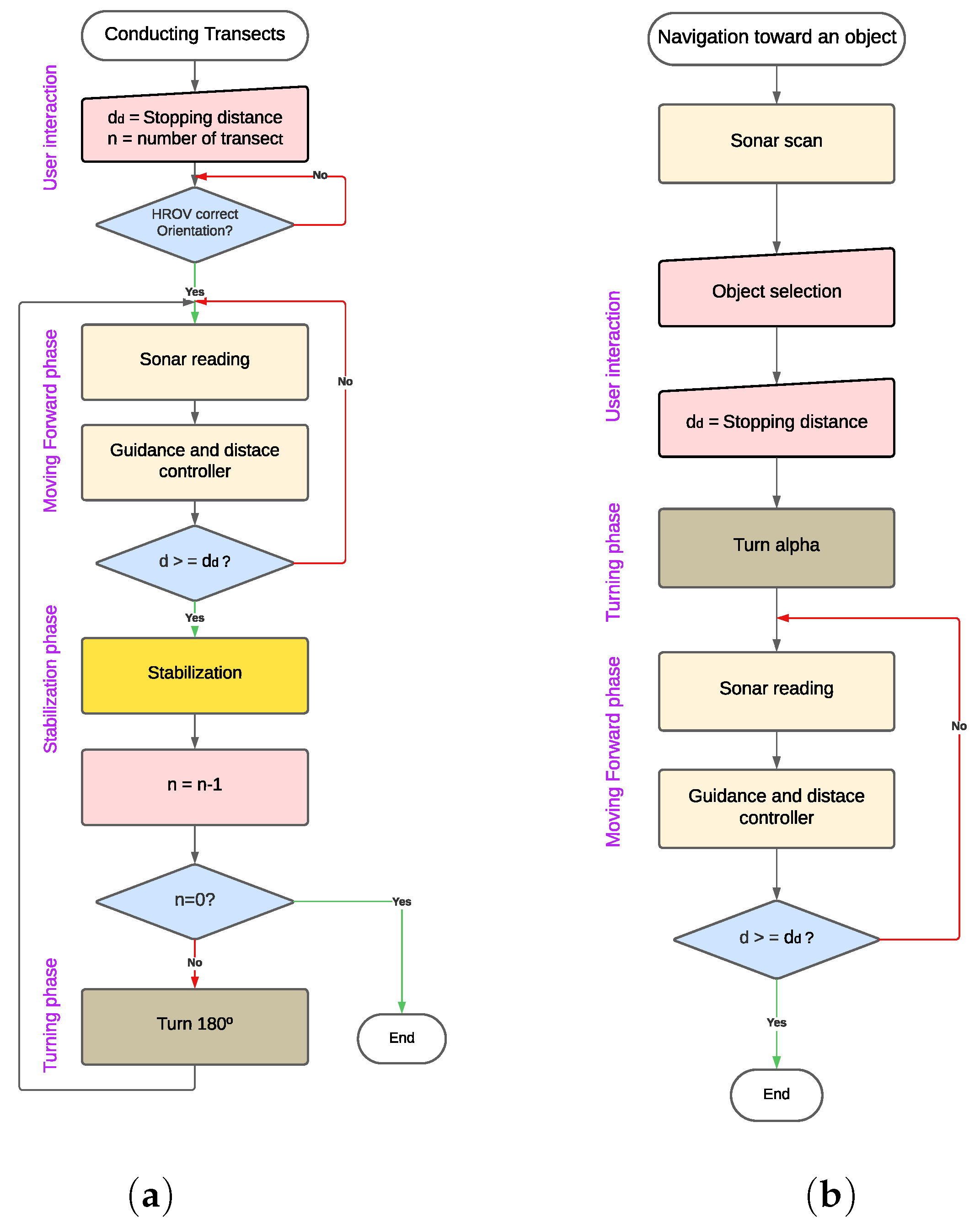

2.4.1. Automatic Task Execution

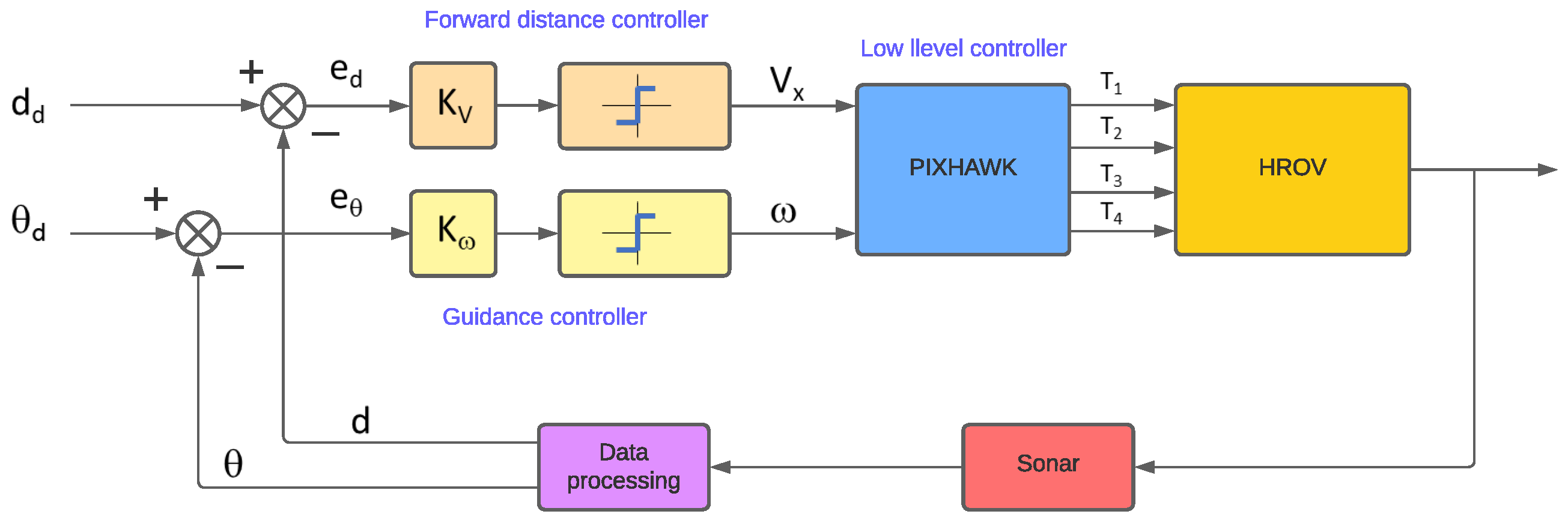

2.4.2. Guidance and Forward Distance Controller
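
Forward distance regulation is commonly built around a PID loop. The following minimal sketch rests on stated assumptions and is not the controller reported in this work. It compares a distance measurement (assumed here to come from a sensor such as an echosounder) with a desired stand-off distance. It then produces a normalised surge command clamped to ±`u_max`. The class name, gains and normalisation are hypothetical. Conditional integration is included as a simple anti-windup measure.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ForwardDistancePID:
    """Hypothetical discrete PID regulating the forward distance to a target.

    The output is a normalised surge command in [-u_max, u_max];
    positive values mean "move forward".
    """

    kp: float
    ki: float
    kd: float
    setpoint_m: float
    u_max: float = 1.0
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, distance_m: float, dt: float) -> float:
        """Return the surge command for a distance measured dt seconds after the previous one."""
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        # Positive error: farther than desired, so the vehicle should advance.
        error = distance_m - self.setpoint_m
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        candidate = self._integral + error * dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        if abs(u) <= self.u_max:
            # Unsaturated: accept the updated integral term.
            self._integral = candidate
            return u
        # Saturated: freeze the integral (anti-windup) and clamp the command.
        return max(-self.u_max, min(self.u_max, u))
```

For example, `ForwardDistancePID(kp=0.5, ki=0.05, kd=0.1, setpoint_m=1.0)` could be called at each sensor update with the measured distance and the elapsed time. The command would then shrink as the vehicle approaches a point 1 m from the target. These gain values are placeholders, not tuned values.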

3. Results

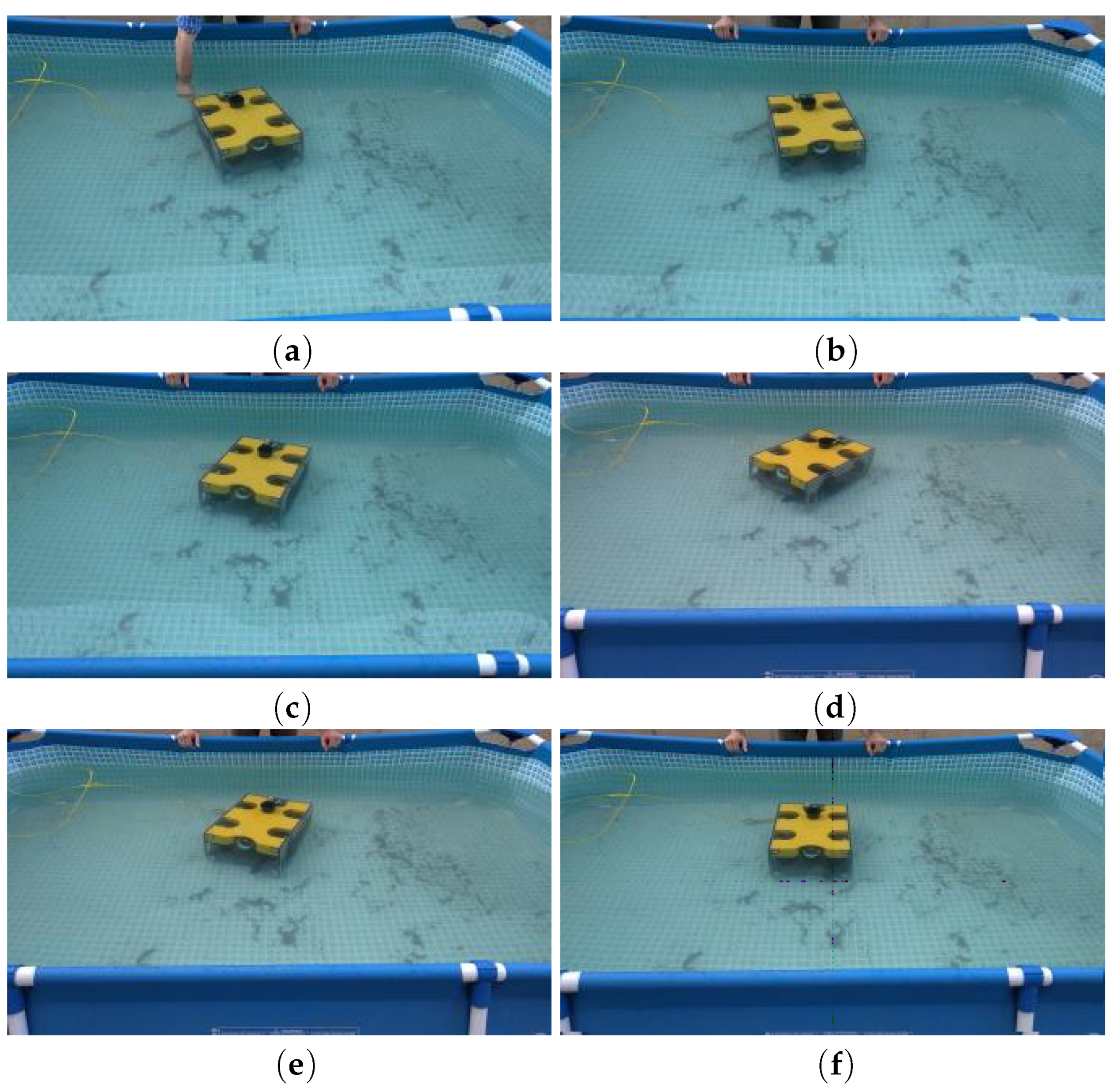

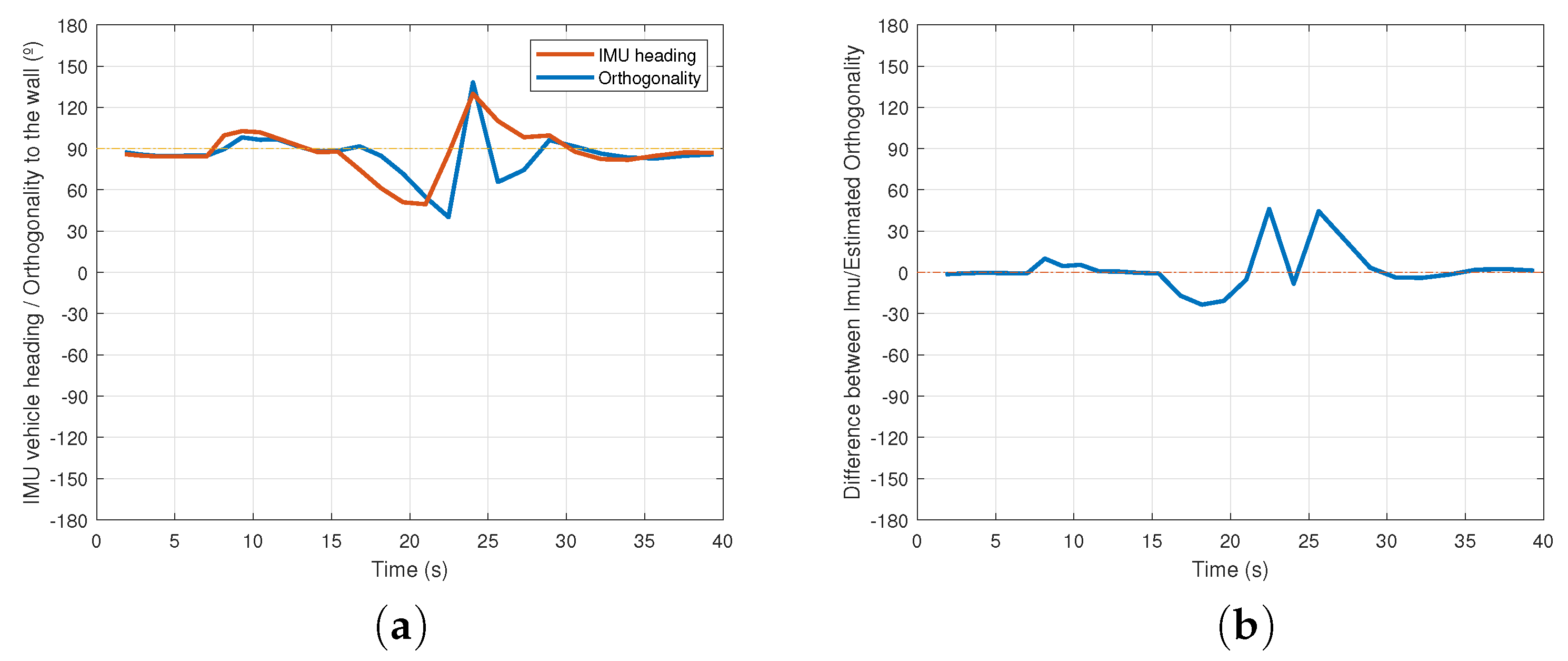

3.1. Controlling Vehicle Orientation
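
Orientation control has to handle angle wrap-around. A vehicle heading at 350° that is asked to reach 10° should turn 20° rather than 340°. The sketch below shows how the heading error can be wrapped before a yaw command is computed. It assumes a simple saturated proportional law and uses hypothetical function names. It is illustrative only and is not the orientation controller evaluated in these experiments.

```python
import math


def wrap_to_pi(angle_rad: float) -> float:
    """Map any angle to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def yaw_command(heading_rad: float, target_rad: float, kp: float, u_max: float = 1.0) -> float:
    """Saturated proportional yaw command that turns the shortest way toward the target.

    Heading and target must use the same angle convention; a positive command
    means "increase the heading angle".
    """
    error = wrap_to_pi(target_rad - heading_rad)
    return max(-u_max, min(u_max, kp * error))
```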

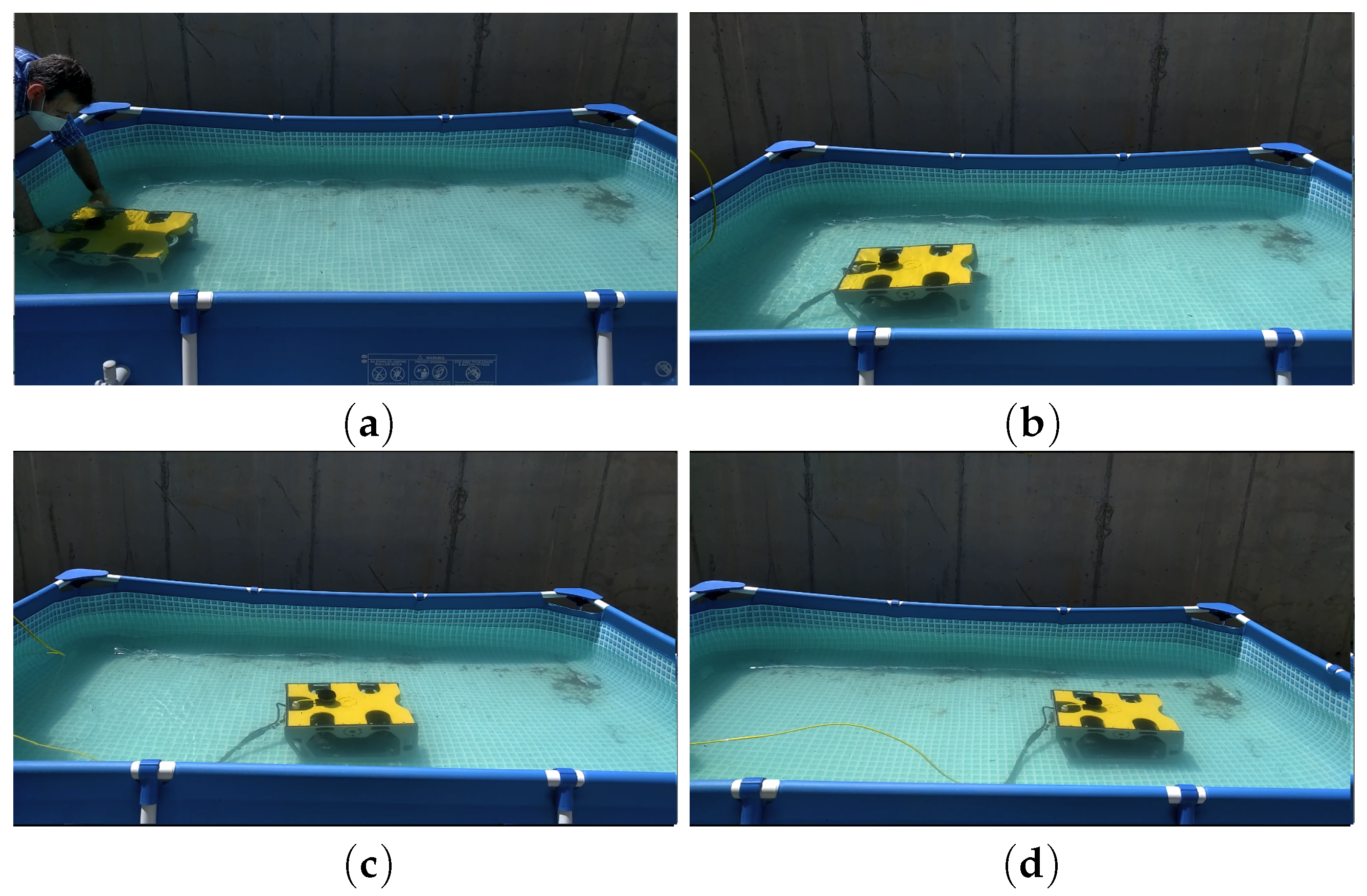

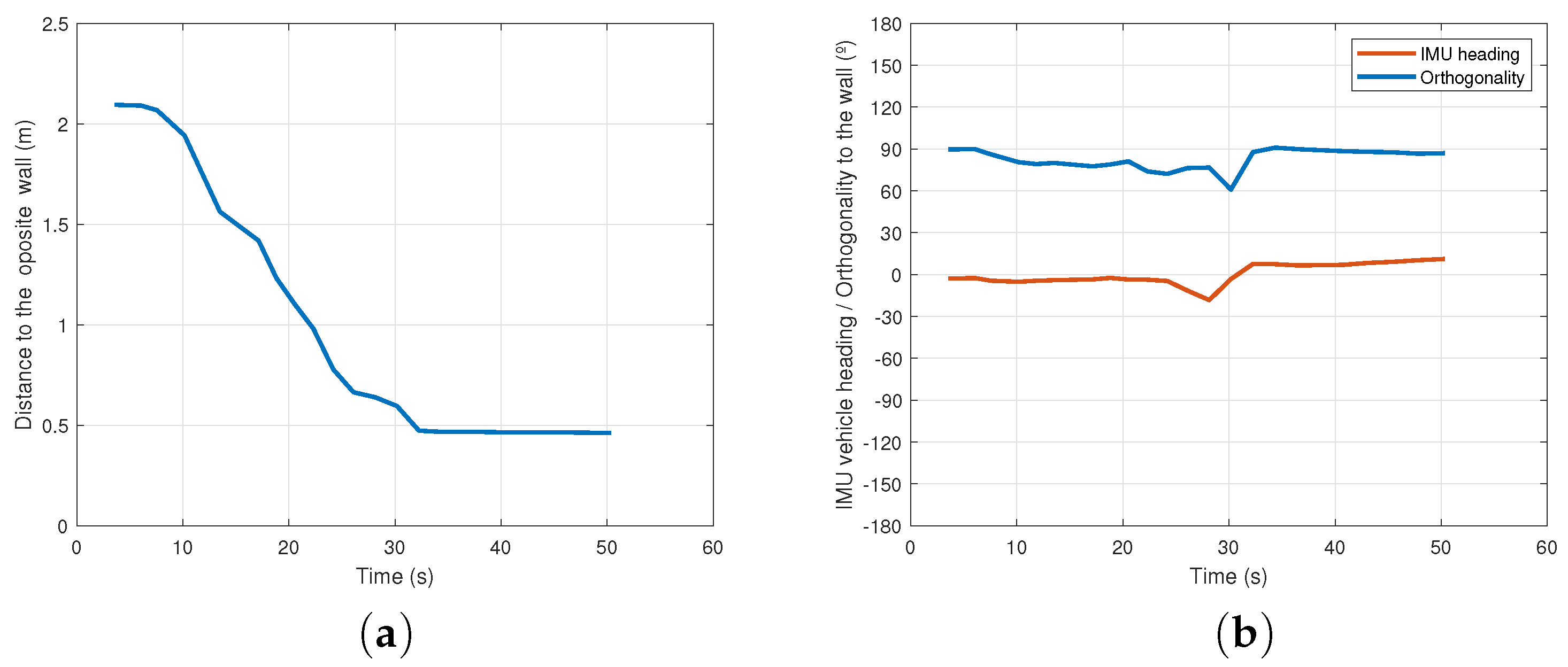

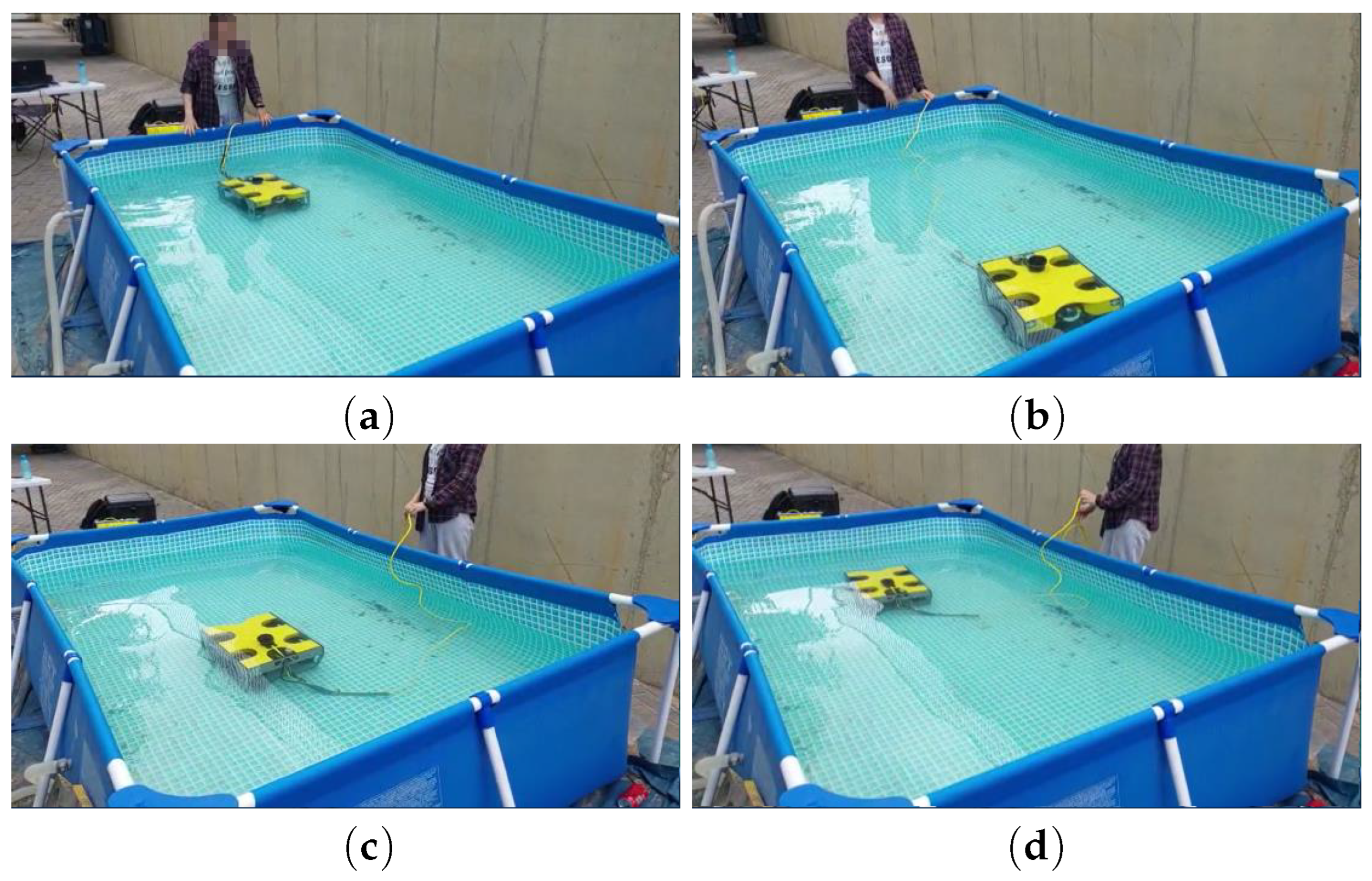

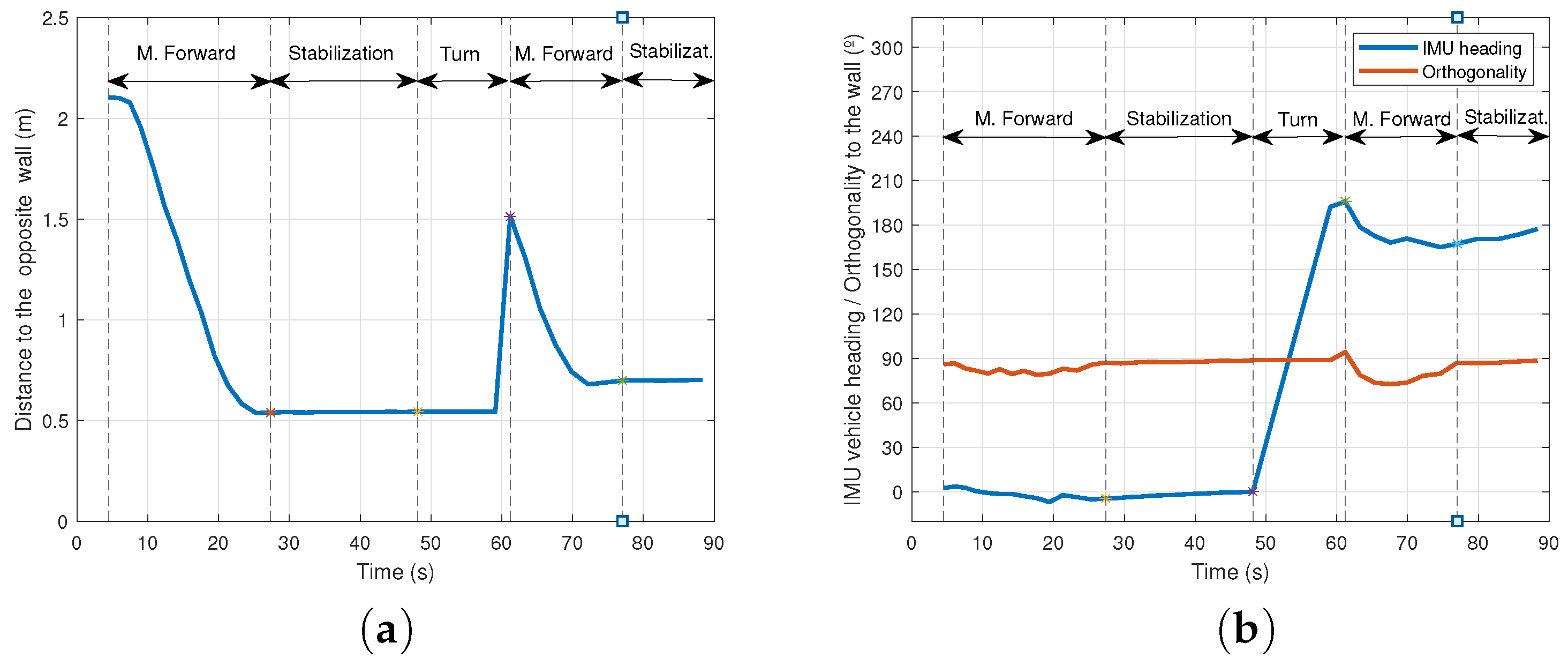

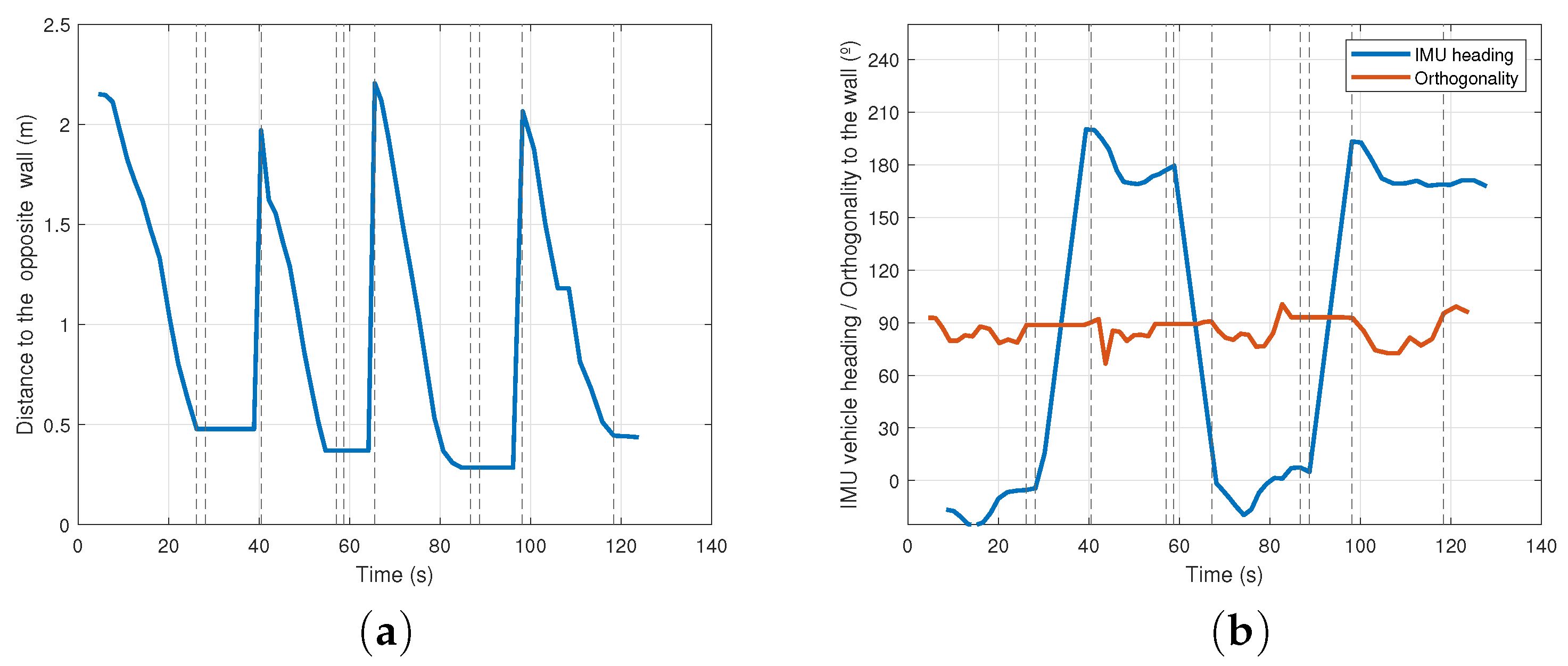

3.2. Conducting Transects

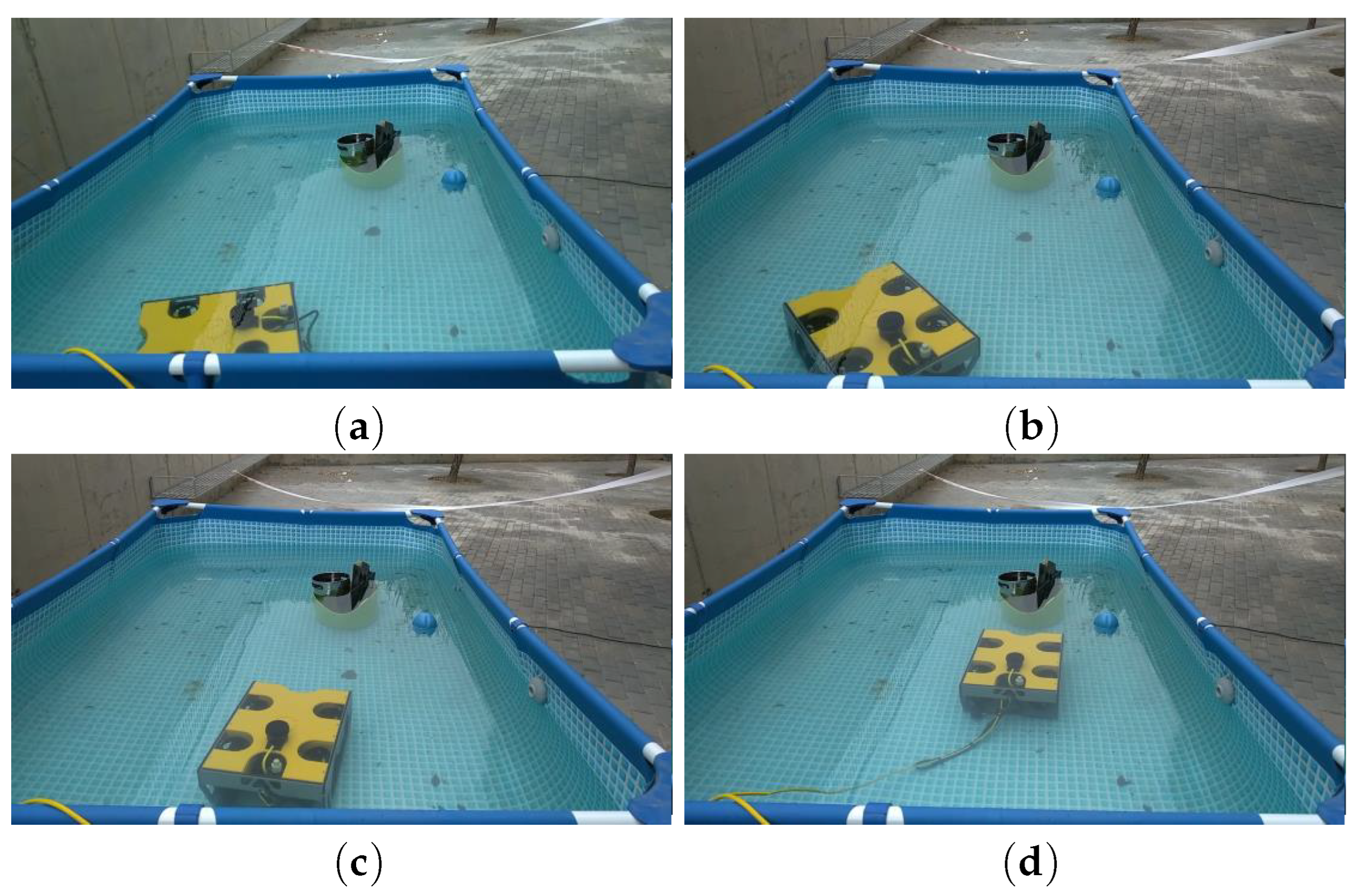

3.3. Navigation toward a Selected Object

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Feature | Value |
|---|---|
| Weight | 16 kg |
| Size | 0.52 × 0.39 × 0.29 m |
| Maximum depth | 300 m |
| Maximum speed | 3 knots (1.54 m/s) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gómez-Bravo, F.; Garrocho-Cruz, A.; Marín-Cañas, O.; Pulido-Calvo, I.; Gutierrez-Estrada, J.C.; Peregrín-Rubio, A. A Control Architecture for Developing Reactive Hybrid Remotely Operated Underwater Vehicles. Machines 2024, 12, 1. https://doi.org/10.3390/machines12010001

Gómez-Bravo F, Garrocho-Cruz A, Marín-Cañas O, Pulido-Calvo I, Gutierrez-Estrada JC, Peregrín-Rubio A. A Control Architecture for Developing Reactive Hybrid Remotely Operated Underwater Vehicles. Machines. 2024; 12(1):1. https://doi.org/10.3390/machines12010001

Chicago/Turabian Style: Gómez-Bravo, Fernando, Alejandro Garrocho-Cruz, Olga Marín-Cañas, Inmaculada Pulido-Calvo, Juan Carlos Gutierrez-Estrada, and Antonio Peregrín-Rubio. 2024. "A Control Architecture for Developing Reactive Hybrid Remotely Operated Underwater Vehicles" Machines 12, no. 1: 1. https://doi.org/10.3390/machines12010001

APA Style: Gómez-Bravo, F., Garrocho-Cruz, A., Marín-Cañas, O., Pulido-Calvo, I., Gutierrez-Estrada, J. C., & Peregrín-Rubio, A. (2024). A Control Architecture for Developing Reactive Hybrid Remotely Operated Underwater Vehicles. Machines, 12(1), 1. https://doi.org/10.3390/machines12010001