1. Introduction

Within machining processes, cutting tools are subjected to different stresses, which cause tool wear and deformation. This wear degrades the quality of the parts produced through poor surface finishes and problems with dimensional accuracy, which negatively impacts producers by raising the cost of production. Tools account for up to 25% of the total cost and up to 20% of machinery downtime. Additionally, it is estimated that cutting tools are used for only 70 to 80% of their useful life [1], which increases the need for systems to accurately evaluate cutting tool wear, as costs can be reduced by 10–40% by maximizing tool utilization [2]. These wear monitoring and evaluation systems have been developed for decades, using a variety of physical properties and sensors to obtain information that indicates the condition of the tools used, together with different methodologies and analysis techniques for extracting information from the measurements taken. However, despite the proposed systems, there is still a need for new methodologies that provide processes and machines with options for detecting or analyzing the evolution of tool wear according to the diverse needs of the processes.

Over the years, several physical properties have been used for the detection and analysis of wear on cutting tools in computer numerical control (CNC) machinery, such as current, vibrations, forces, acoustic emissions (AE), sound and temperature, as well as the use of artificial vision systems, roughness or finish analyses and, recently, the use of magnetic stray flux. Obtaining information on the condition of the tool through machine elements is ideal due to the relationship between the variables measured on the machine and the evolution of wear; in this sense, the spindle motor current is commonly analyzed in motor current signature analyses (MCSA). Zou et al. [3] employed an MCSA to generate an online system from the spindle motor current with a bispectrum signal modulation (BSM) algorithm by identifying the magnitude and phase of the BSM to distinguish between the effects of three levels of wear upon changes in the depth of cut and workpiece diameter of an A3 steel piece, although only one of the obtained features could identify the wear for each depth of cut. On the other hand, Marani et al. [4] implemented a feature extraction of current signals where the root mean square (RMS) values of the tests were obtained and a network with a long short-term memory (LSTM) model was used to predict the evolution of tool wear on a coated carbide tool used for the turning of steel alloy, comparing the accuracy of 30 different architectures to obtain the best suited for the proposed experiment.
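As a rough illustration of the RMS feature mentioned above, the following Python sketch computes one RMS value per non-overlapping window of a current-like signal. The function name `windowed_rms`, the window length and the synthetic 60 Hz signal are assumptions made for this example; they are not taken from the cited works.

```python
import math


def windowed_rms(signal, window):
    """Return the RMS value of each consecutive, non-overlapping window."""
    if window <= 0:
        raise ValueError("window must be positive")
    values = []
    for start in range(0, len(signal) - window + 1, window):
        chunk = signal[start:start + window]
        values.append(math.sqrt(sum(x * x for x in chunk) / window))
    return values


# Synthetic stand-in for a motor current: a 60 Hz sine sampled at 6 kHz
# whose amplitude steps up every 1000 samples (1.0, 1.5, 2.0).
fs, f0 = 6000, 60
signal = [
    (1.0 + 0.5 * (n // 1000)) * math.sin(2 * math.pi * f0 * n / fs)
    for n in range(3000)
]

rms = windowed_rms(signal, 1000)
print([round(v, 4) for v in rms])
```

Each window spans a whole number of periods, so every RMS value is close to the window amplitude divided by the square root of two. A trend in such values over successive cuts is the kind of feature a predictive model could take as input.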

Likewise, vibration signals are one of the most exploited measurements for wear detection. Tabaszewski et al. [5] used triaxial accelerometers to distinguish between two tool states at different cutting speeds during turning of EN-GJL-250 with carbide cutting inserts using different intelligent techniques and selecting the most appropriate one, which was a classification and regression tree (CART) with a 2.06% error. Patange et al. [6] used an accelerometer and machine learning (ML) techniques based on trees to classify six types of wear under fixed machining parameters while turning a stainless steel workpiece on a manual lathe; the authors performed a statistical feature extraction, selection and classification, obtaining the best results with a random forest (RF) model, with 92.6% accuracy. In both cases, the placement of the sensor was close to the cutting tool, which is quite invasive of the process.

Regarding the use of force signals, the work of Bombiński et al. [7] shows the use of triaxial sensors placed in the vicinity of the carbide tools employed to cut NC10 steel and 40 HNM steel, which were able to detect accelerated wear using waveform comparison algorithms automatically online based on the operator’s consideration of when the tool life ends during training. As an example of a work using AE and sound, Salodkar [8] placed a sensor on the tool shank and used a fuzzy neural network (FNN) to predict wear online for the machining of En31 alloy steel. Considering the machining parameters used, positive results were achieved for the prediction of seven flank wear states. Casal-Guisande et al. [9] used sound and process variables for risk assessment in the use of two cutting tools for machining aluminum, with favorable results when compared with experts’ opinions, although the methodology requires further validation and optimization.

Another variable used is temperature; in the work presented by Rakkiyannan et al. [10], a sensor was designed and placed on a high-speed steel cutting tool and the changes in temperature and deformation provided information on the state of the tool while cutting mild steel. The results were corroborated with thermographic images, and three levels were successfully detected with a span of 1.2 mm due to the sensor’s degradation. On the other hand, Brili et al. [11] made use of thermographs of a tool within the work area after different durations of cutting to assess whether the tool was in a condition to continue operating. They combined computer vision and deep learning (DL) to obtain 96% accuracy, identifying the most appropriate time interval for image acquisition.

Similarly, machine vision and image analysis systems have grown in popularity; in the work presented by Sawangsri et al. [12], a charge-coupled device (CCD) camera was used in the working area to evaluate the progressive wear of the tools when machining SCM440 alloy steel by comparing the number of pixels of the tool before and after being used. The estimations were validated against SMr2 (valley material portion) values measured with a microscope. Bagga et al. [13] employed an artificial neural network (ANN) considering the machining parameters for the turning of AISI 4140 steel and used images captured inside the working area of the carbide tools at specific time intervals. The worn area was obtained by processing the images by filtering, enhancement, thresholding and calculating the number of pixels in the area, and the remaining useful life was determined with two activation functions, sigmoid and rectified linear unit (ReLU), achieving an accuracy of 86.5% and 93.3%, respectively.
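The thresholding and pixel-counting step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the worn region is taken to be brighter than the background, and the 4×4 image, the threshold of 128 and the pixel size of 0.01 mm are hypothetical values. It does not reproduce the filtering or enhancement steps of the cited work.

```python
def worn_area_pixels(image, threshold):
    """Count pixels whose gray level is strictly above the threshold."""
    return sum(1 for row in image for px in row if px > threshold)


# Hypothetical 4x4 grayscale image (0-255) with a bright 2x2 "worn" patch.
image = [
    [10, 12, 11, 10],
    [10, 200, 210, 11],
    [12, 220, 205, 10],
    [11, 10, 12, 10],
]

PIXEL_SIZE_MM = 0.01  # assumed side length of one pixel, in millimetres

count = worn_area_pixels(image, threshold=128)
area_mm2 = count * PIXEL_SIZE_MM ** 2
print(count, area_mm2)
```

In practice the threshold and the pixel-to-millimetre scale would come from camera calibration. Comparing the count before and after machining gives a simple wear estimate in pixel units.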

Regarding surface finish analyses, this task can be performed with specialized equipment; however, Shen and Kiow [14] used image analysis of the machined part to extract features and predict tool wear for the turning of AISI 1045 carbon steel using coated carbide inserts with a recurrent neural network (RNN). The surface roughness was predicted with a 92.75% effectiveness from the average gray level value, standard deviation and entropy, and the surface roughness was predicted with 64.59% accuracy.

Likewise, the fusion of information from different sensors to obtain better features has grown in popularity within these systems, allowing the creation of more complex and efficient systems. In this sense, Kou et al. [15] used vibration and current signals to generate RGB images that were combined with infrared images of the tool to distinguish between six levels of wear under variation of the machining parameters using information related to the tool and the machine to train a convolutional neural network (CNN). The method was able to classify the wear with 91% accuracy, but with a longer training time in comparison with other methods and with lower accuracy. Bagga et al. [16] employed force and vibration signals to predict the level of wear in tools with variations in cutting parameters for the turning of EN8 carbon steel using an ANN, and validated this method by comparing the predicted value with the direct measurement of wear for all experiments, obtaining a mean percentage difference of 3.48%.

Kuntoğlu and Sağlam [17] used force, vibration, AE, temperature and current signals to predict tool wear and breakage to design an online monitoring system for the turning of AISI 5140 carbon steel with coated carbide tools. They reported that the ability of temperature and AE to detect wear was 74% effective, and force, AE and vibrations were able to predict breakage. Current had a low contribution to predictions in their experiments. Similarly, the results reported by Hoang et al. [18] indicated the ability of AE and vibrations to predict wear and roughness in the machining of SCM440 steel, combining a Gaussian process regression and adaptive neuro-fuzzy inference system (GPR-ANFIS) algorithms to process RMS values of the signals, achieving an average prediction accuracy of 97.57% in online monitoring with the proposed methodology.

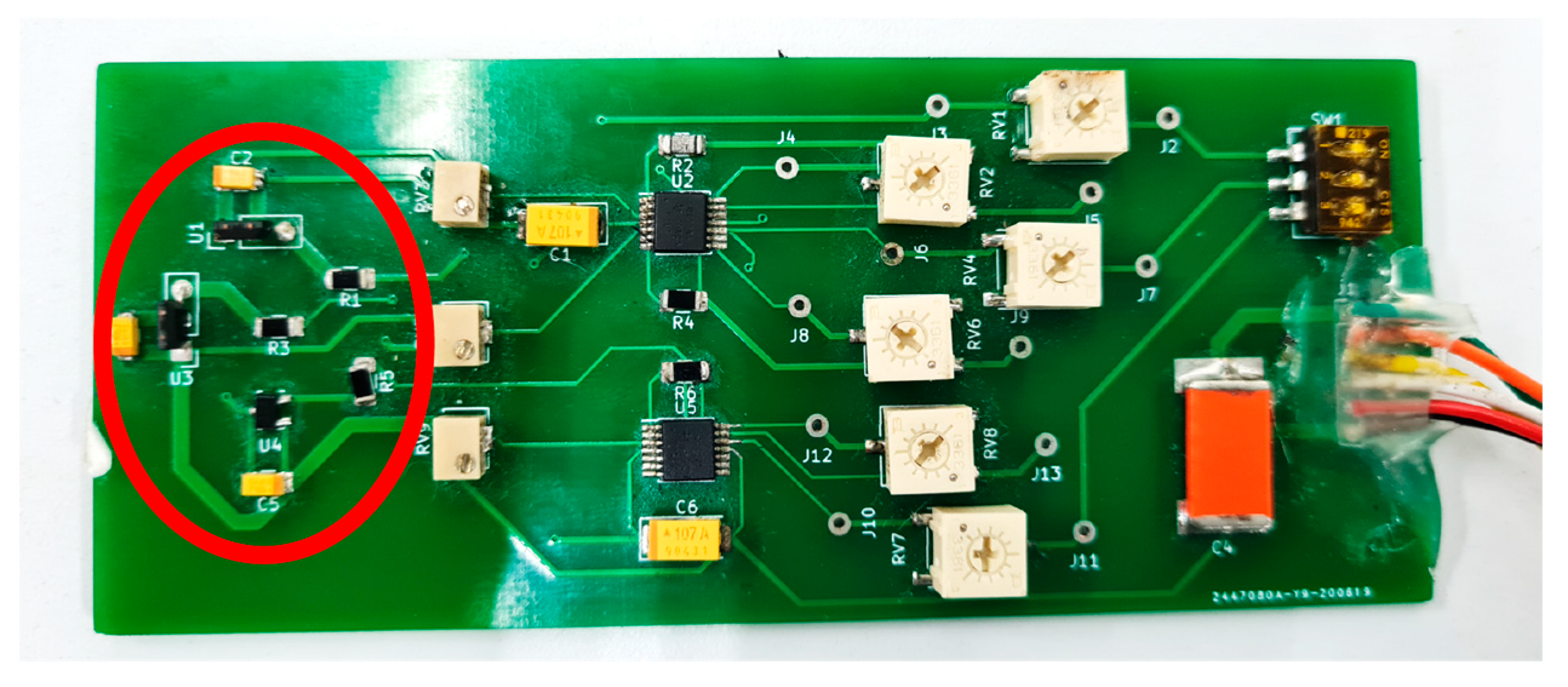

Jaen-Cuellar et al. [

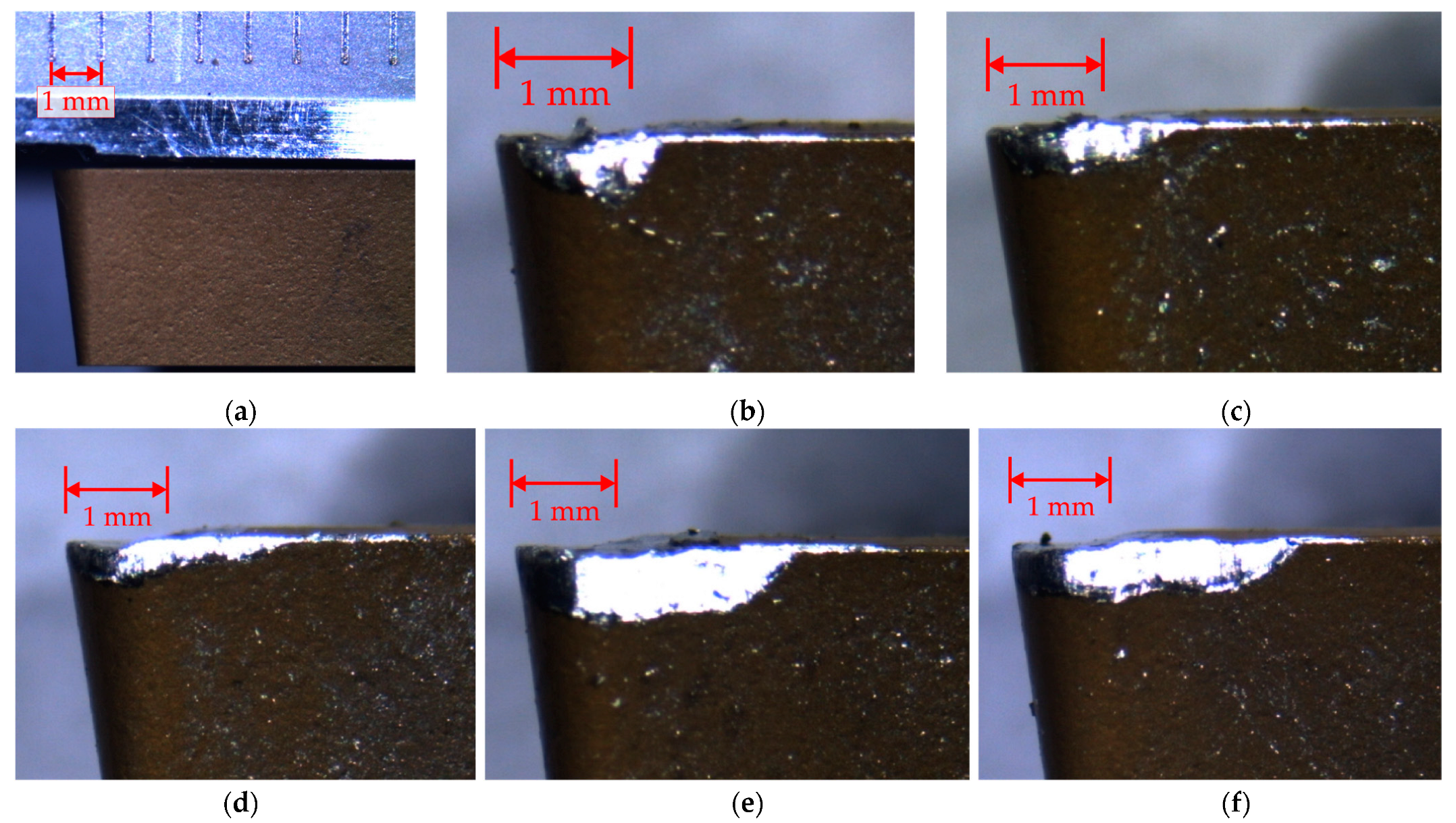

19] reported the use of stray flux and current for the detection of three levels of wear with individual variation of two machining parameters for aluminum 6061 turning with coated carbide tools by means of feature extraction, dimensionality reduction and classification with an ANN, achieving a top performance of 94.4%. Diaz-Saldaña et al. [

20] employed stray flux signals and image analyses of the surface finish to identify three levels of wear on coated carbide tools with variation in cutting speed in aluminum 6061 turning, obtaining a good differentiation between all the conditions when applying histogram peak counts to the images and feature extraction on the stray flux signals.
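One possible reading of a histogram peak count on a surface image is sketched below in Python. The exact definition used in the cited work is not given here, so this example assumes that a peak is a histogram bin strictly higher than both neighbours and at least `min_height` tall. The function names and the tiny 8-level image are hypothetical.

```python
def gray_histogram(image, bins):
    """Histogram of integer gray levels in the range 0..bins-1."""
    hist = [0] * bins
    for row in image:
        for px in row:
            hist[px] += 1
    return hist


def count_peaks(hist, min_height=1):
    """Count interior bins that are strict local maxima and >= min_height."""
    peaks = 0
    for i in range(1, len(hist) - 1):
        if hist[i] >= min_height and hist[i - 1] < hist[i] > hist[i + 1]:
            peaks += 1
    return peaks


# Hypothetical 4x4 image quantized to 8 gray levels.
image = [
    [1, 1, 1, 2],
    [5, 5, 5, 5],
    [1, 4, 6, 5],
    [0, 2, 6, 5],
]

hist = gray_histogram(image, bins=8)
peaks = count_peaks(hist, min_height=2)
print(hist, peaks)
```

Here the histogram has two dominant gray levels, so two peaks are counted. On real surface images, the number of peaks would be expected to change as the groove pattern left by the tool changes.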

It is important to highlight the invasive nature of the sensors for image and sound capture that must be placed in the work area. Temperature, vibration or force sensors must also be placed in the proximity of the cutting tool, an aspect that greatly limits their implementation due to the necessary adaptations to the work area and cutting tasks for an adequate measurement of wear.

On the other hand, the techniques used for processing the information obtained for the identification, evaluation or prediction of cutting tool wear are varied. Several literature reviews have identified the main techniques, tools and trends for processing [21,22,23,24]. Firstly, signals are processed directly in the time domain [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18] or techniques or transforms are used for their analyses in the frequency [3,5,18,19,20] or time-frequency [19,20] domains. From here, several techniques are used to obtain features that allow the analysis to be carried out in a better way, such as the use of statistical indicators [3,4,5,6,8,14,18,19,20], time or time-frequency transforms for direct feature extraction [3,4,5,19,20] and, in some cases, methods for the selection of the most appropriate features or dimensionality reduction such as heuristic techniques [5,6,22], linear discriminant analysis (LDA) [19] or principal component analysis (PCA). Subsequently, classification or decision-making techniques tend to use intelligent systems such as different types of neural networks [8,13,14,16,19,25], support vector machines (SVM) [21,22,23,24], hidden Markov models (HMM) [21,22,23,24], fuzzy systems [8,9,17,18] and DL systems [4,11,14,15].
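To make the move from the time domain to the frequency domain concrete, the following Python sketch finds the dominant frequency of a signal with a naive discrete Fourier transform. The naive DFT is used only to keep the example dependency-free; an FFT routine would give the same spectrum faster. The sampling rate and the two-tone test signal are assumptions for illustration.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Single-sided magnitude spectrum via a naive O(N^2) DFT."""
    n = len(signal)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(signal)
        )
        mags.append(abs(acc) / n)
    return mags


def dominant_frequency(signal, fs):
    """Frequency in Hz of the largest non-DC spectral bin."""
    mags = dft_magnitudes(signal)
    k = max(range(1, len(mags)), key=mags.__getitem__)
    return k * fs / len(signal)


# Synthetic signal: a strong 50 Hz tone plus a weaker 120 Hz tone,
# sampled at 1 kHz for 200 samples (5 Hz bin spacing).
fs, n = 1000, 200
signal = [
    math.sin(2 * math.pi * 50 * i / fs) + 0.3 * math.sin(2 * math.pi * 120 * i / fs)
    for i in range(n)
]

peak_hz = dominant_frequency(signal, fs)
print(peak_hz)
```

Spectral magnitudes at selected bins, or statistics computed over the spectrum, are the kind of frequency-domain features that the approaches above then pass to feature selection and classification.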

Based on the above, it is possible to note that some of the current research on tool wear makes use of a single variable as the source of information, while others follow the trend towards the use of multiple sensors and the fusion of their information, which in some cases comes from the same source (i.e., part of the machine, tool or workpiece). In both cases, there is a trend towards the use of intelligent systems that allow automatic handling of information for the identification, classification or prediction of tool wear, and process the information in the time and/or frequency domain to obtain characteristics that help to gain a better understanding of the phenomena. The use of intelligent techniques is of great value when processing large amounts of information generated by fused sensors, allowing a more adequate manipulation and interpretation of the data. Another point to note is the implementation of the methodologies, since they can be performed online, allowing process monitoring [3,4,5,7,8,9,10,11,12,14,15,16,17,18,19], or offline once the machining of a part has been carried out [6,13,20,26]. This is an important aspect, since in methodologies that use vision systems or images, the machining process must be stopped for the acquisition of images of the tool or they may be taken after the process; however, this depends on the needs and planning of the process.

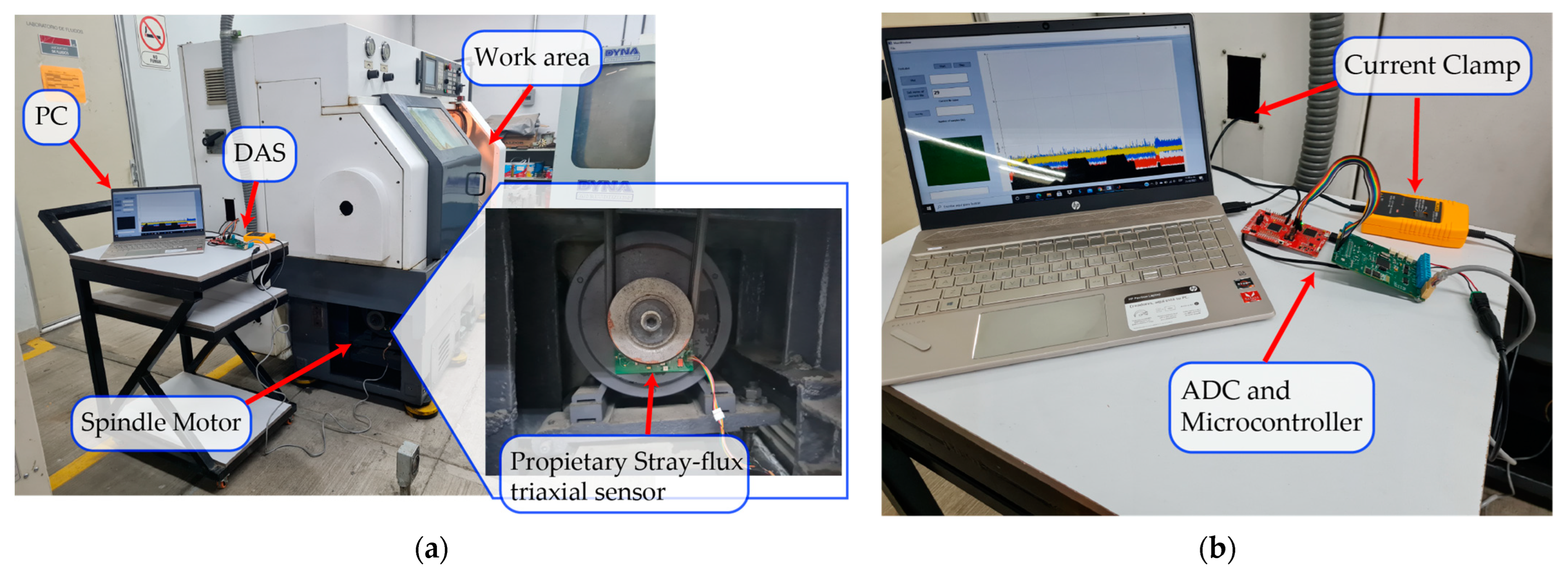

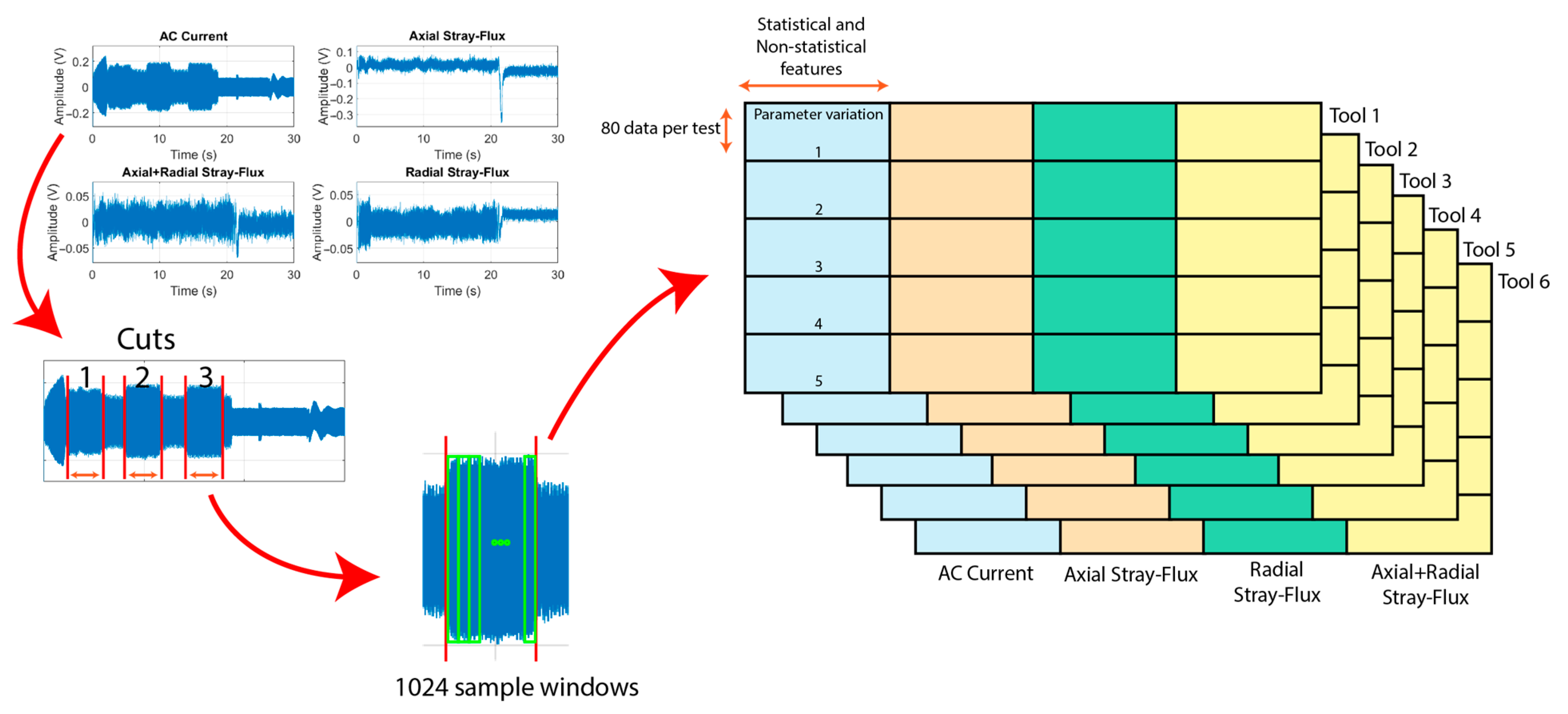

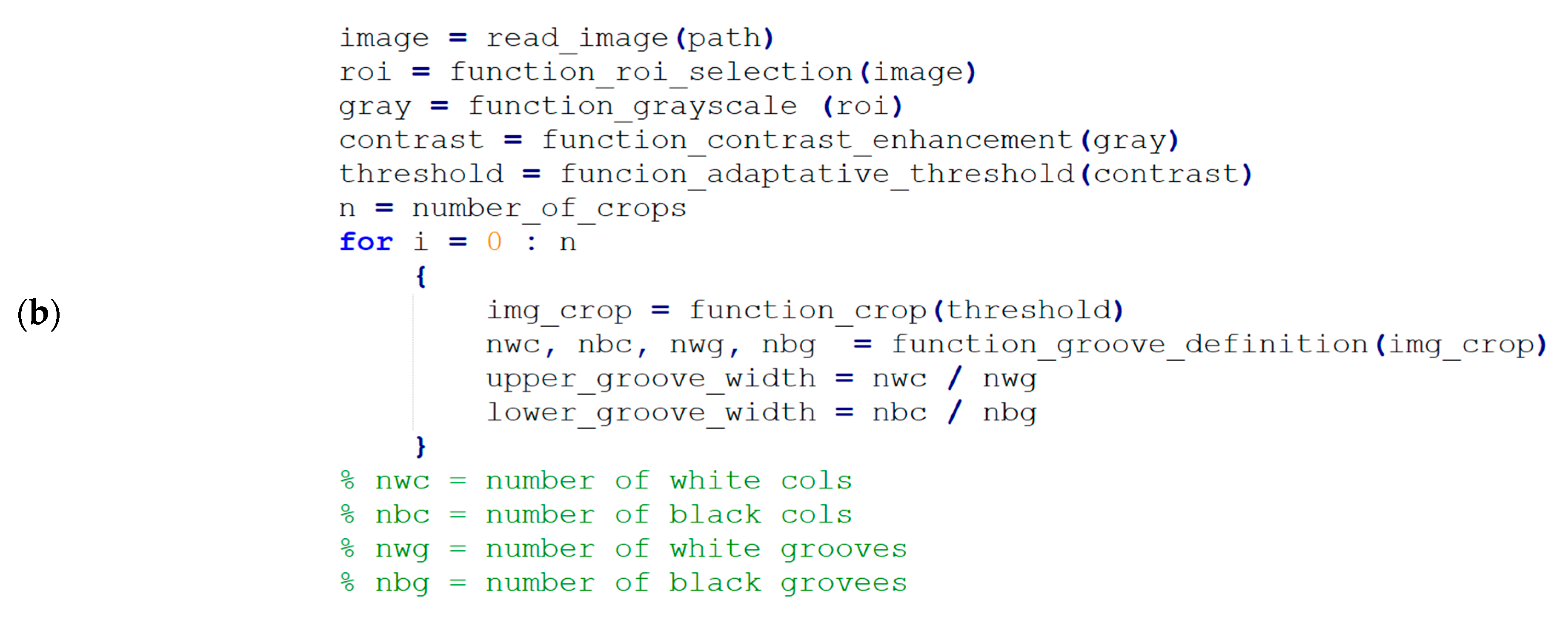

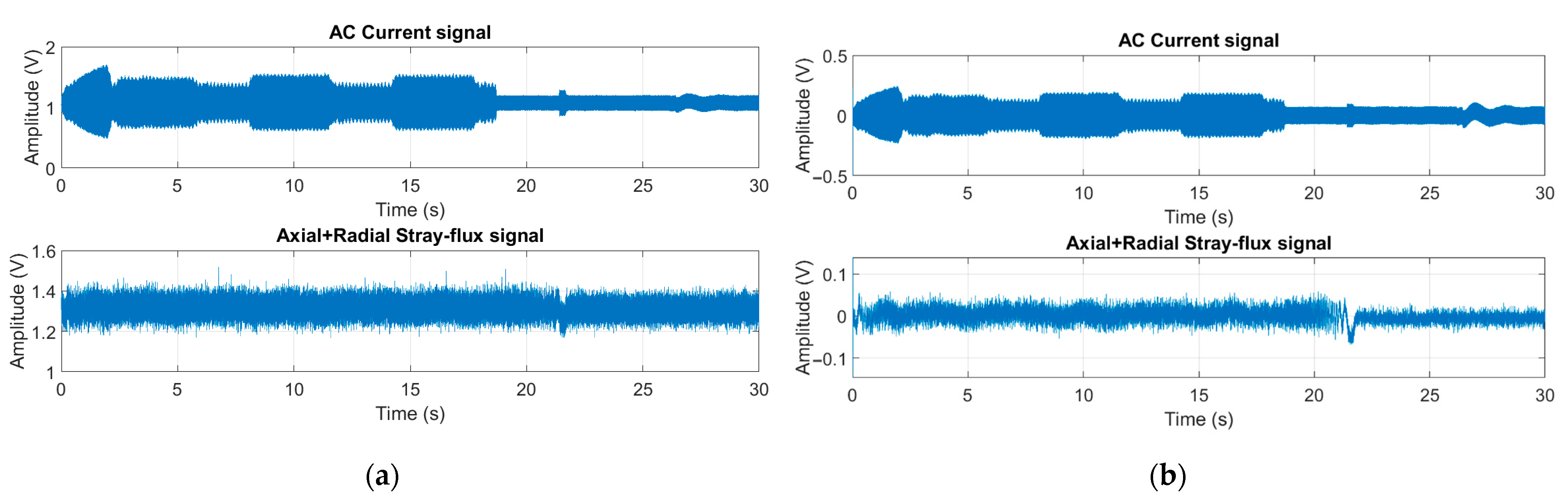

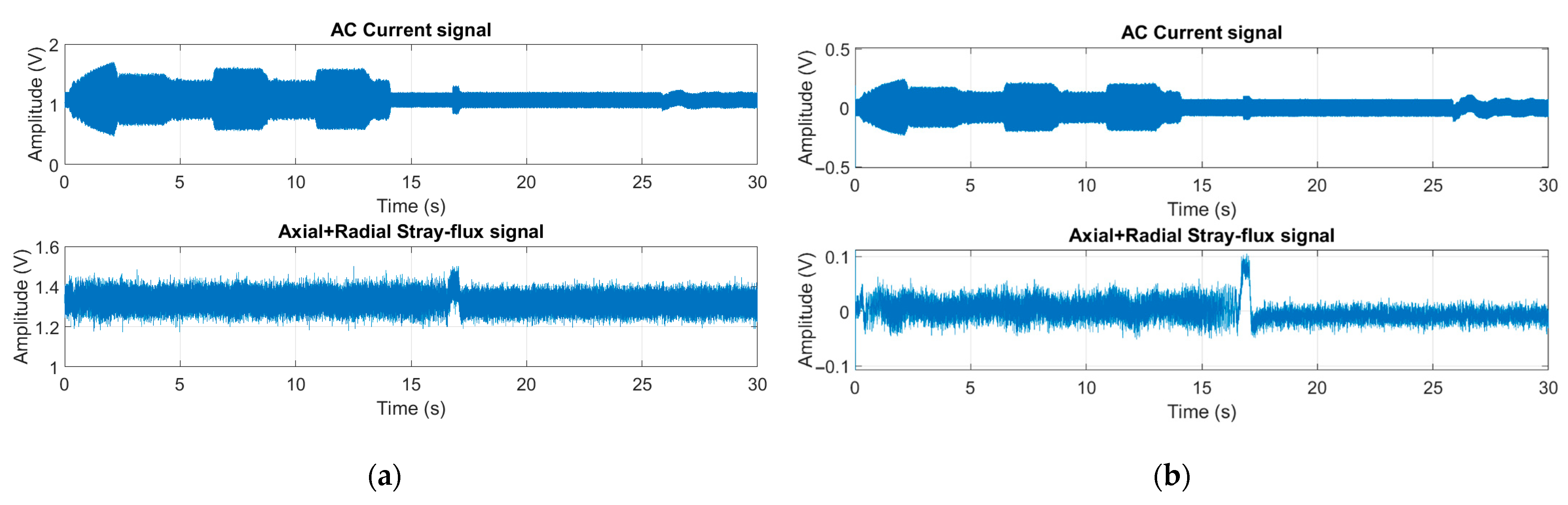

Considering the foregoing, the main contribution of this research is the development of a system for detecting the level of wear in cutting tools used in a CNC lathe by analyzing information obtained directly from the spindle motor of the machine using non-invasive current and stray flux sensors, as well as from images of the surface finish of the machined parts. The proposed system analyzes the signals individually and together, so it can be adapted to the user’s needs to evaluate the wear online or after the process is finished. The processing relies on the fast Fourier transform (FFT), the discrete wavelet transform (DWT), statistical and non-statistical indicators, dimensionality reduction by linear discriminant analysis (LDA) and a feed-forward neural network (FFNN) for wear classification under individual variations of the cutting speed and the feed rate of the tool.
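The feature-extraction part of such a pipeline can be sketched as follows in Python. This is only an illustration under stated assumptions: a single-level Haar wavelet stands in for the DWT (the mother wavelet and decomposition level are not specified in this section), the indicators are limited to mean, RMS and standard deviation, and fusion is shown as simple concatenation of per-signal feature vectors. The LDA and FFNN stages are omitted, and the sample values are made up.

```python
import math


def haar_dwt(signal):
    """One level of the Haar DWT: (approximation, detail) coefficients."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def indicators(values):
    """Mean, RMS and population standard deviation of a sequence."""
    n = len(values)
    mean = sum(values) / n
    rms = math.sqrt(sum(v * v for v in values) / n)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [mean, rms, std]


def feature_vector(signal):
    """Indicators of both DWT sub-bands, concatenated."""
    approx, detail = haar_dwt(signal)
    return indicators(approx) + indicators(detail)


# Hypothetical short records standing in for current and stray flux signals.
current = [1.0, 3.0, 2.0, 2.0, 4.0, 0.0, 1.0, 1.0]
flux = [0.5, 0.1, 0.4, 0.2, 0.3, 0.3, 0.6, 0.0]

# Assumed feature-level fusion: concatenate the per-signal feature vectors.
fused = feature_vector(current) + feature_vector(flux)
print(len(fused))
```

The fused vector is what a dimensionality reduction step and a classifier would receive. Dropping one of the two `feature_vector` terms corresponds to analyzing a single source on its own.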

5. Discussion

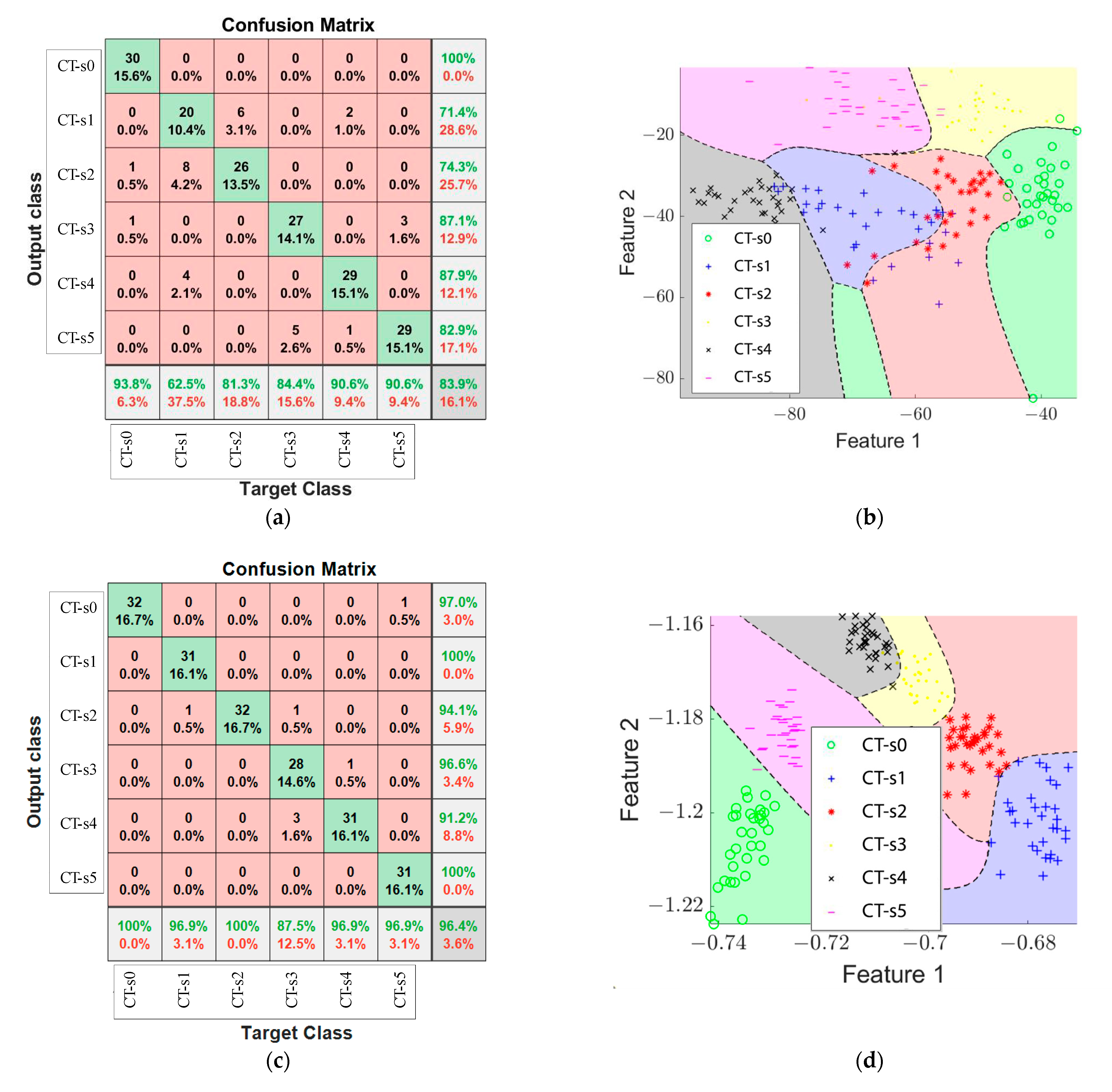

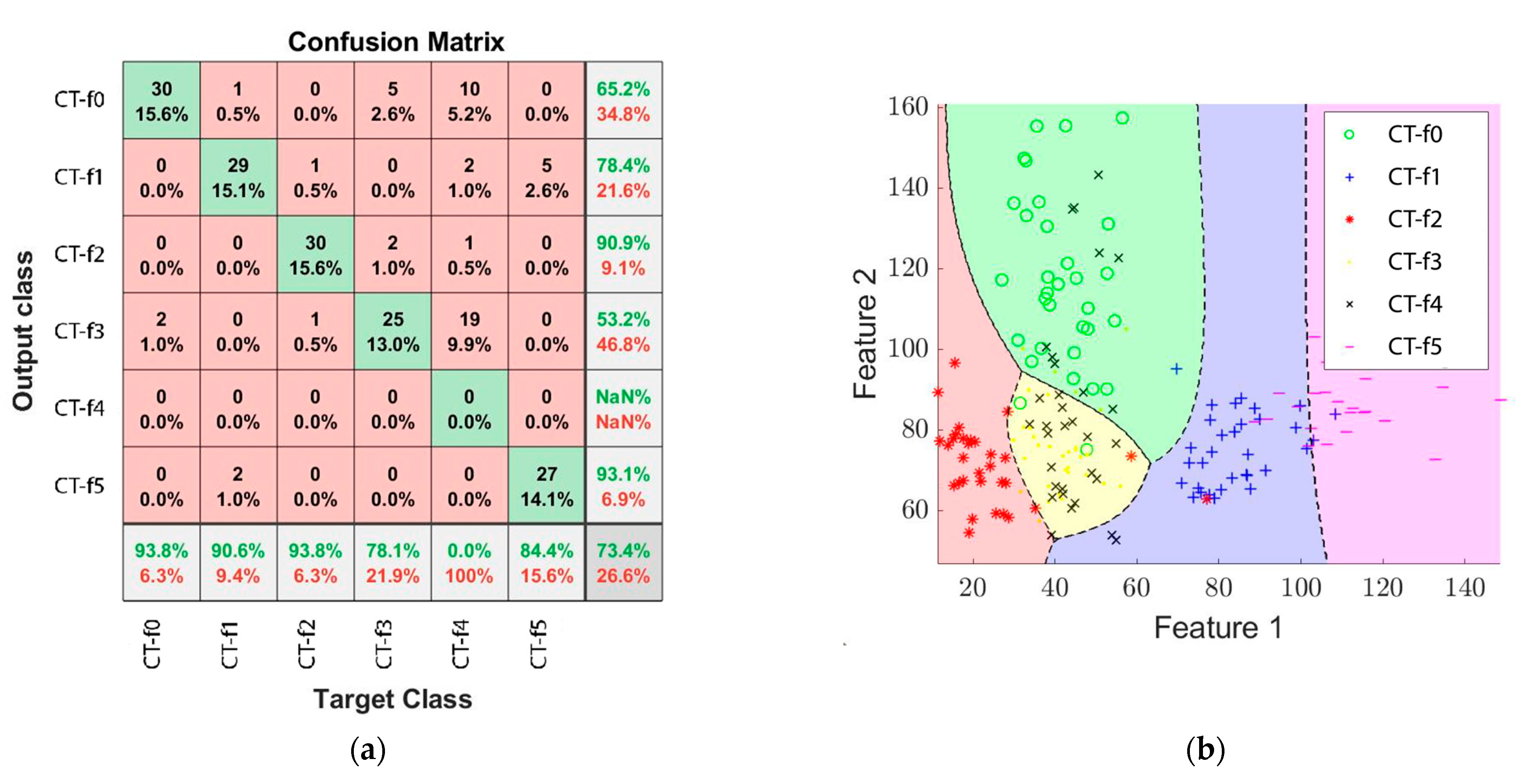

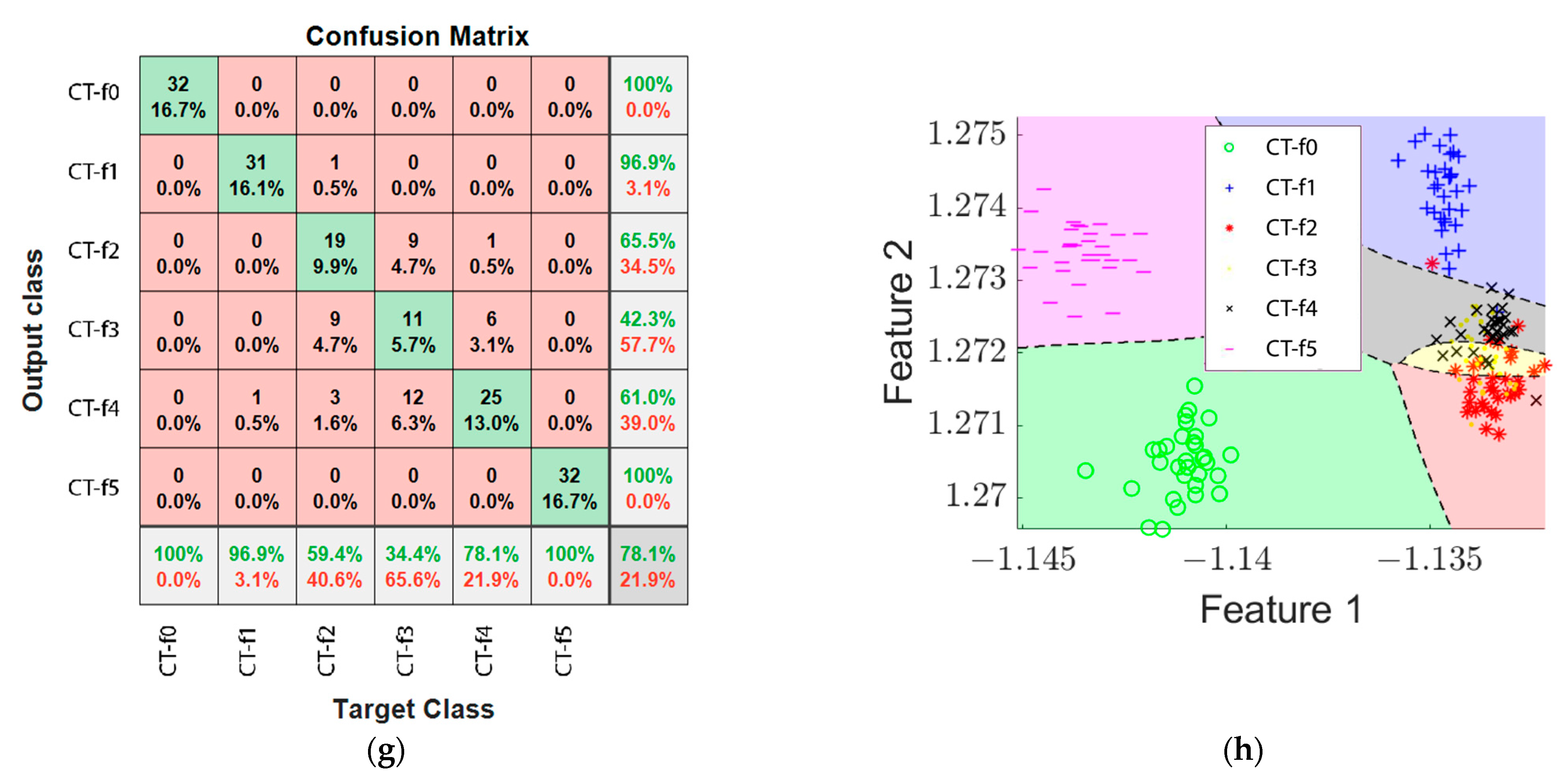

The results shown in Table 6 provide the opportunity to analyze the effectiveness of wear identification in the cutting speed variation experiments by processing information from different sources with the proposed methodology. For this case study, the current signal leads to only small improvements in the identification of wear: accuracy increased by 7% when current was combined with the information from images and by less than 2.5% when it was fused with the stray flux. The accuracy decreased by 0.8% when current signals were fused with images and stray flux information compared to when current data were not included. In contrast, the magnetic stray flux has a greater influence on the correct identification of wear, achieving the best results for all the conditions when compared with the analysis of the signals individually for these experiments.

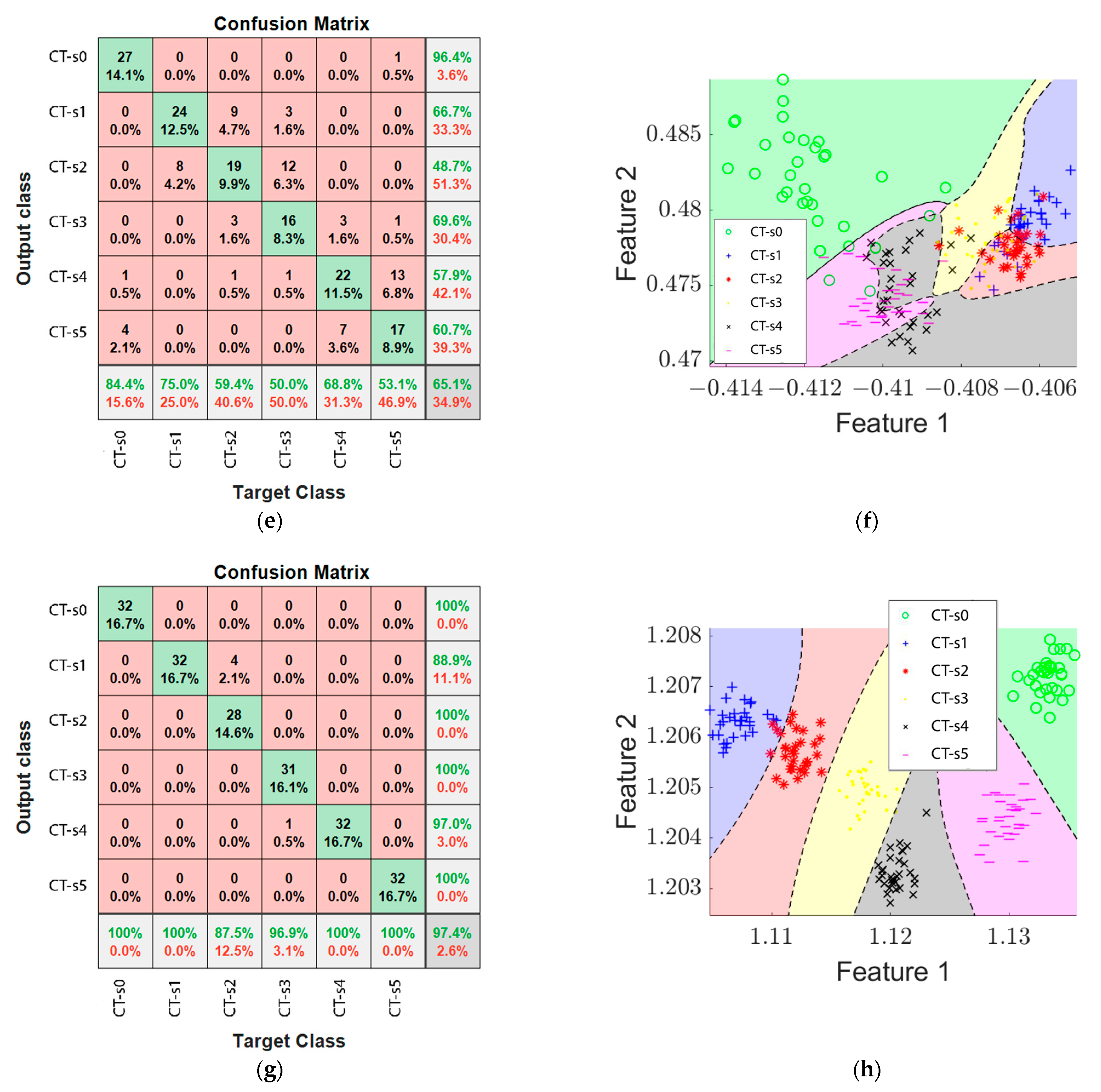

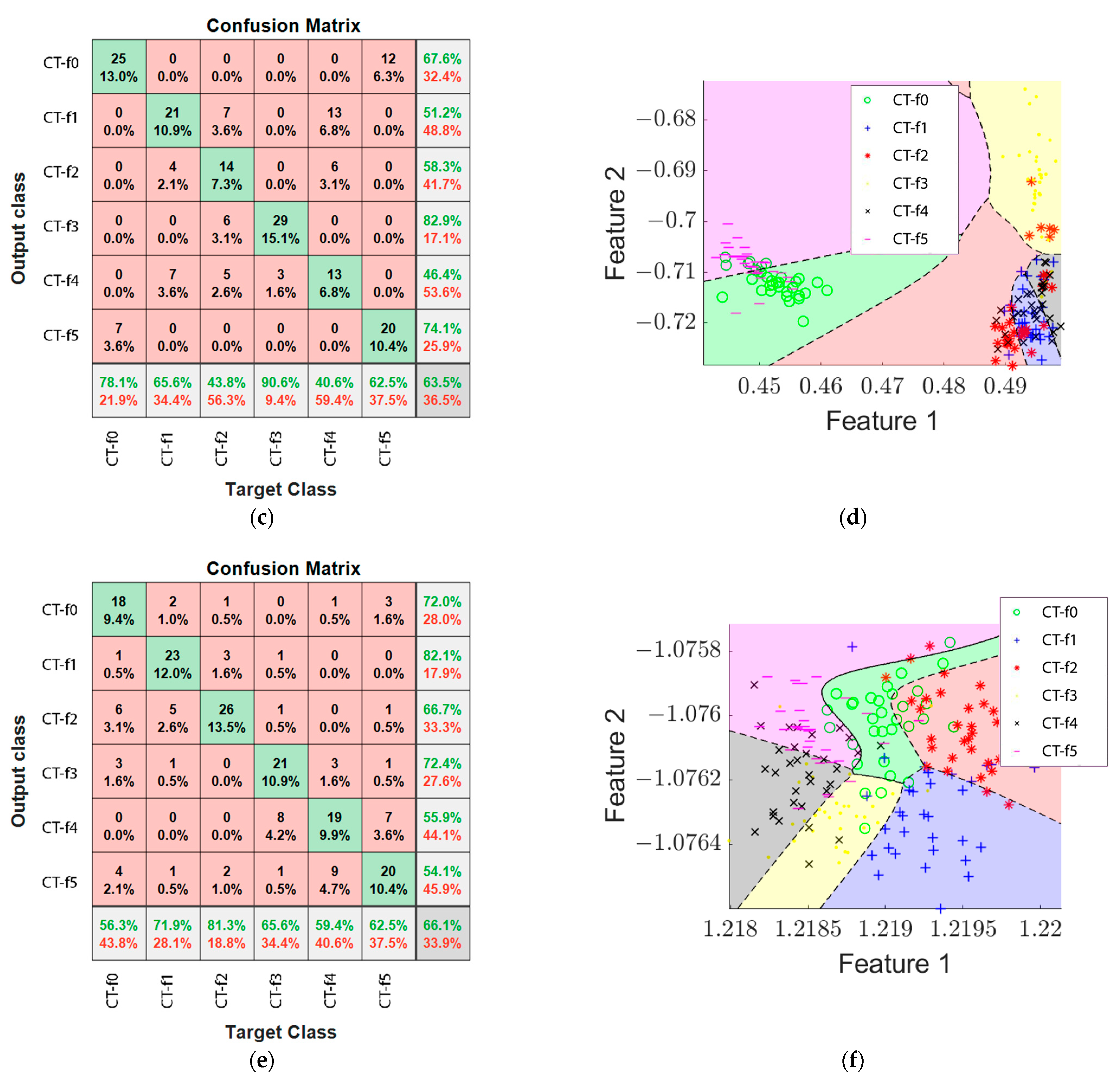

On the other hand, Table 7 presents the analysis results of the feed rate tests. With these results, it is possible to observe that for the tool feed rate experiments, the lowest contribution to wear identification accuracy is from the magnetic stray flux signal, adding 3.32% to the surface images accuracy and 4.48% when merged with the current data, but reducing the effectiveness by 2.8% when fused with image and current data. On the contrary, the image analysis presented the highest accuracy for identification of tool wear for the feed rate variations in contrast to the other signals.

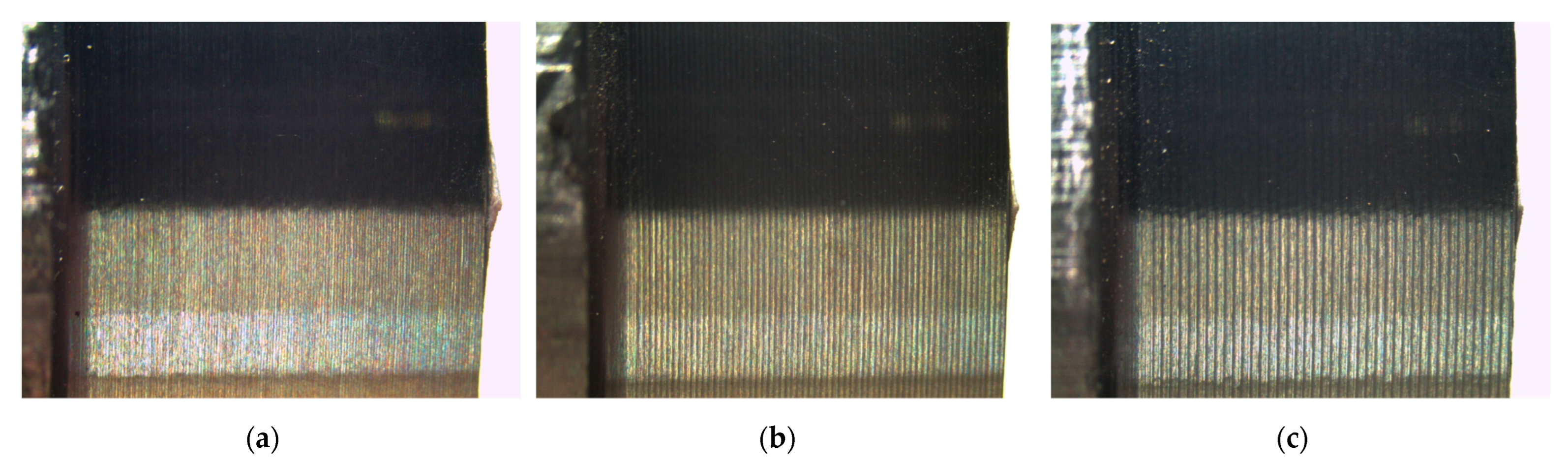

When comparing the results obtained for both case studies, it is possible to observe an overall reduction in classification performance in the tool feed rate tests. This could be caused by the effect of varying this parameter on the cutting process, as it changes the speed at which the tool travels along the workpiece and alters the spacing of the cuts and the appearance of the grooves, in addition to the effect of tool wear. In contrast, in the cutting speed case, the feed rate is constant and the changes in the grooves are caused only by tool wear.

Table 8 presents a brief comparison of the results of the proposed methodology with some previously reported in the literature, where some of the sensors are invasive. The main differences between our work and most of the reported works are the non-invasive nature of the sensors used, the possibility to choose the signal to analyze during the machining process or after it is finished and the quality of the pieces that are evaluated. In addition, the number of variations for each of the machining parameters was individually modified to evaluate the effect they have on the detection of wear of cutting tools with the objective of generating a system that can correctly identify wear regardless of the changes in the parameters.

These results show the potential of this methodology, as the effectiveness of the identification of tool wear through the fusion of different signals was increased. In addition, it is a non-invasive methodology for the machining process as it uses signals captured during the machining operations without the need to interrupt them and images of the surface finish of the parts are captured once operations are finished.

6. Conclusions

This paper proposes a non-invasive methodology for tool wear identification based on the fusion of signals from the spindle motor of a CNC lathe and the analysis of the surface finish of the machined parts. The resulting system identifies wear under the individual variation of two important machining parameters, with the possibility to analyze a single source or a combination of them according to the user’s needs and preferences without interrupting the process.

For the variation in cutting speed, the proposed methodology was able to identify the six levels of tool wear with an accuracy of 77.50% after analysis of the workpiece surface, 89.78% after analysis of stray flux signals and 73.02% after AC current signal analysis, with a peak efficiency of 95.02% after analysis of the fusion of surface images and stray flux signals.

For the variation in tool feed rate, the proposed system achieved a 76.34% effectiveness when using image analysis, 63.12% when analyzing stray flux signals and 73.0% when analyzing AC current signals, achieving a peak accuracy of 82.84% when analyzing both surface images and current signals.
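The gain from fusion over the best single source can be worked out directly from the accuracies reported above. The short Python sketch below does this arithmetic; the dictionary layout and the function name `fusion_gain` are choices made for this example, while the numbers are the ones stated in the two preceding paragraphs.

```python
# Accuracies (%) as reported in the text for each case study.
reported = {
    "cutting speed": {
        "images": 77.50, "stray flux": 89.78, "current": 73.02,
        "best fusion": 95.02,  # surface images + stray flux
    },
    "feed rate": {
        "images": 76.34, "stray flux": 63.12, "current": 73.0,
        "best fusion": 82.84,  # surface images + current
    },
}


def fusion_gain(acc):
    """Return (best single source, gain of best fusion in percentage points)."""
    singles = {k: v for k, v in acc.items() if k != "best fusion"}
    best = max(singles, key=singles.get)
    return best, round(acc["best fusion"] - singles[best], 2)


gains = {case: fusion_gain(acc) for case, acc in reported.items()}
for case, (source, gain) in gains.items():
    print(f"{case}: best single source = {source}, fusion gain = {gain} points")
```

Under these figures, fusion adds about 5.2 percentage points over stray flux alone in the cutting speed tests and 6.5 points over image analysis alone in the feed rate tests.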

For each of the case studies, it was possible to observe a different contribution of the signals for wear classification, achieving better results by including the magnetic stray flux within the information to be analyzed for the case of cutting speed tests and a better classification by including image analysis for the tool feed rate tests.

Additionally, it was noticed that the variation in the tool feed rate affects the formation of grooves when removing material from the workpiece, on top of the effect of tool wear, which makes it more difficult for the proposed system to correctly identify and classify tool wear in the tools used.

In future work, other non-invasive sensors, such as sound or AE, could be used to increase the number of information sources for a more robust analysis. Information processing could also be improved by applying other indicators and extracting more information or features from the surface images, or by applying different classification techniques backed by the literature, in order to mitigate the effect of varying cutting parameters on the identification of tool wear. Additionally, with the knowledge of the effect of the tool feed rate on groove formation, a new approach could be developed to improve wear identification while varying this parameter.