Online Hand Gesture Detection and Recognition for UAV Motion Planning

Abstract

1. Introduction

- (i) poor adaptability of hand gesture design can lead to a high rate of nonrecognition;

- (ii) unsatisfactory hand gesture detection and recognition results affect the real-time performance and stability of the system;

- (iii) complex UAV flight tasks assisted by hand gesture interaction in unknown and cluttered environments have not been adequately considered.

- To improve the adaptability of hand gestures, an IMU data glove with a high signal-to-noise ratio and a high transmission rate was developed, and a public hand gesture set was designed for gesture-based interaction with the UAV.

- To enhance the effectiveness and robustness of the system, a new asynchronous hand gesture detection and recognition method was proposed that cascades two high-precision classifiers.

- To handle complex UAV flight tasks in unknown and cluttered environments, the online hand gesture detection and recognition method was applied to UAV motion planning, so that complex flight tasks can be carried out asynchronously.
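The cascade named in the second contribution can be pictured as a detector that gates a recognizer. The comparison table later in this document lists the model as GNB + RF, with separate accuracies for gesture versus non-gesture and for the 10 hand gestures, which suggests a detection stage followed by a recognition stage. The sketch below only illustrates that control flow; `make_cascade`, `toy_detector`, and `toy_recognizer` are hypothetical names, and the toy rules stand in for trained classifiers rather than reproducing the authors' models.

```python
from typing import Callable, Optional, Sequence

Features = Sequence[float]


def make_cascade(
    is_gesture: Callable[[Features], bool],
    recognize: Callable[[Features], str],
) -> Callable[[Features], Optional[str]]:
    """Chain a gesture/non-gesture detector with a gesture recognizer."""

    def classify(window: Features) -> Optional[str]:
        # Stage 1: reject windows that do not contain a gesture.
        if not is_gesture(window):
            return None
        # Stage 2: only accepted windows reach the multi-class recognizer.
        return recognize(window)

    return classify


# Hypothetical stand-ins for the two trained classifiers (assumptions).
def toy_detector(window: Features) -> bool:
    return max(abs(x) for x in window) > 0.5


def toy_recognizer(window: Features) -> str:
    return "LD" if sum(window) < 0 else "RD"


classify = make_cascade(toy_detector, toy_recognizer)
```

Because the second stage never sees rejected windows, idle hand motion costs only one cheap decision per window under this arrangement.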

2. Related Work

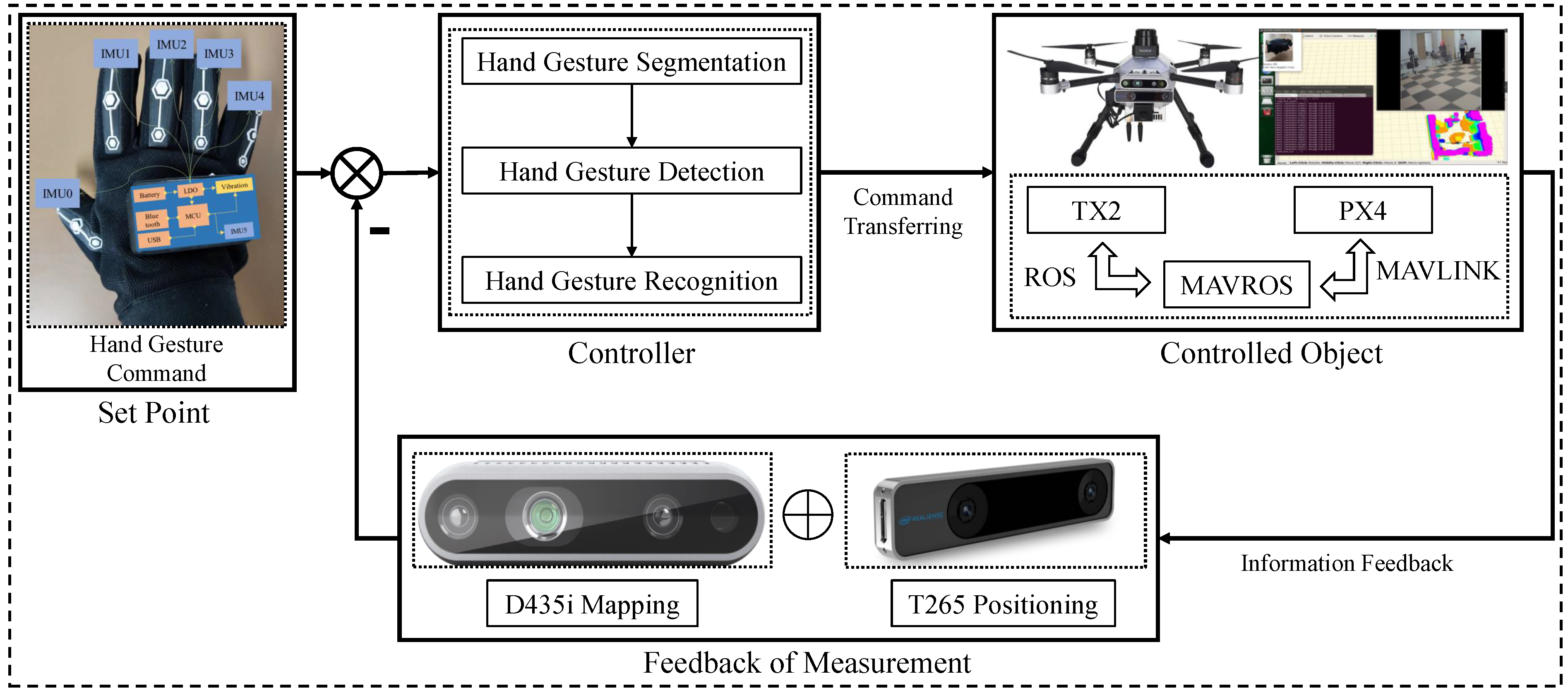

3. Materials and Methods

3.1. IMU Data Glove

3.1.1. Central Control and Wireless Transmission Module

3.1.2. Distributed Multi-Node IMU Module

3.1.3. Vibration Motor Module

3.2. Hand Gesture Set Design

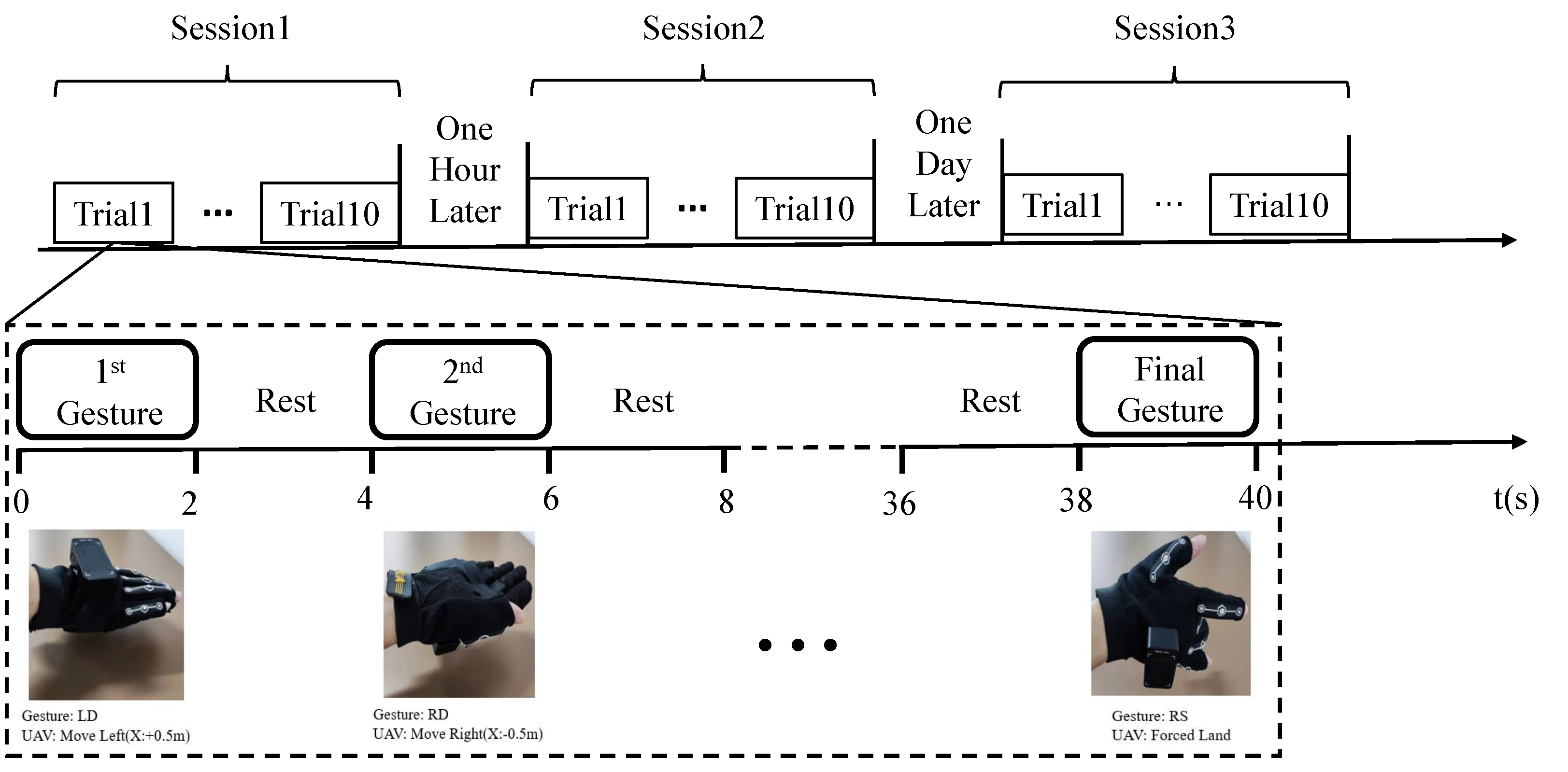

3.3. Dataset Generation

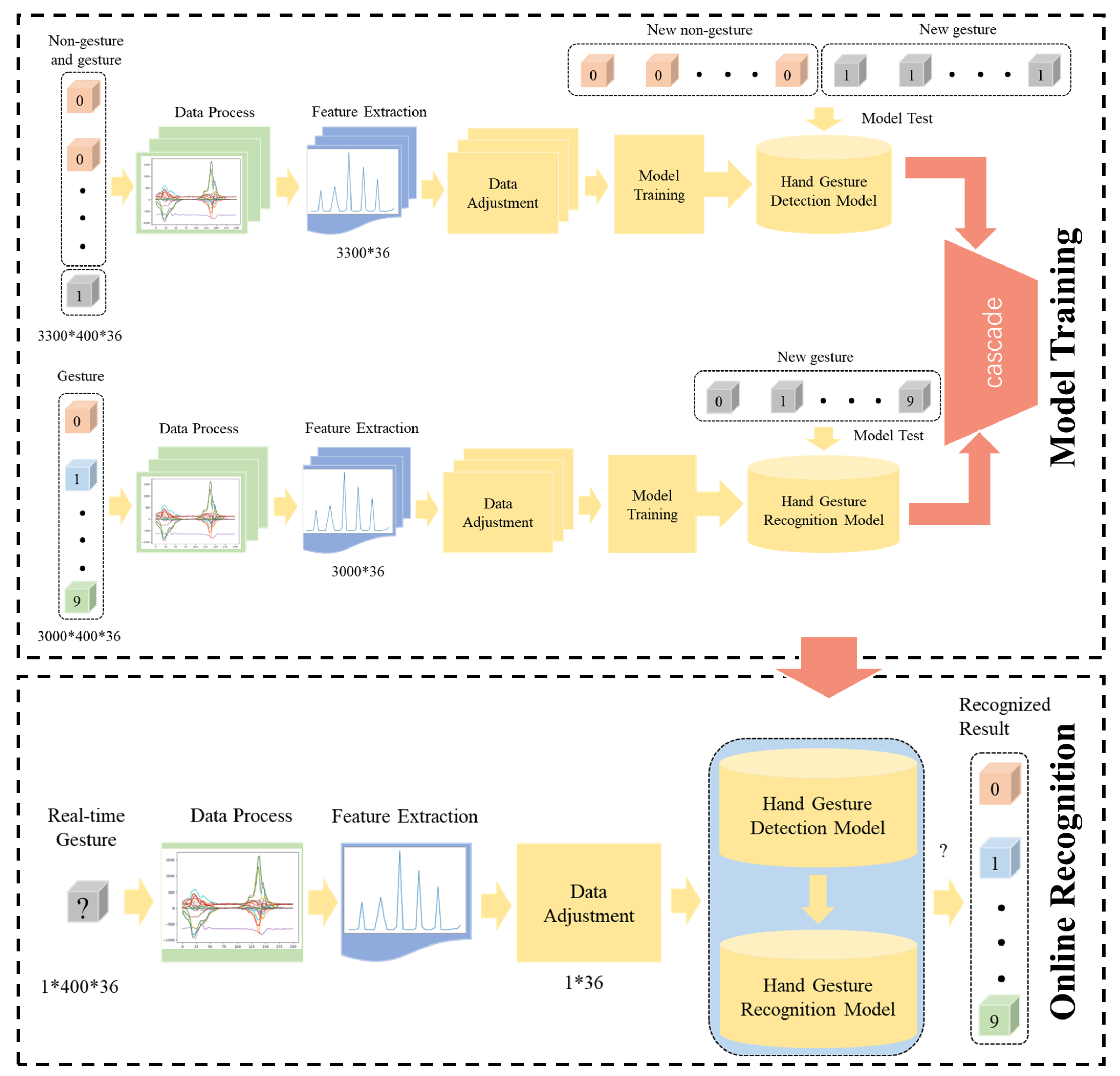

3.4. Hand Gesture Detection and Recognition

Algorithm 1. Online Hand Gesture Detection and Recognition

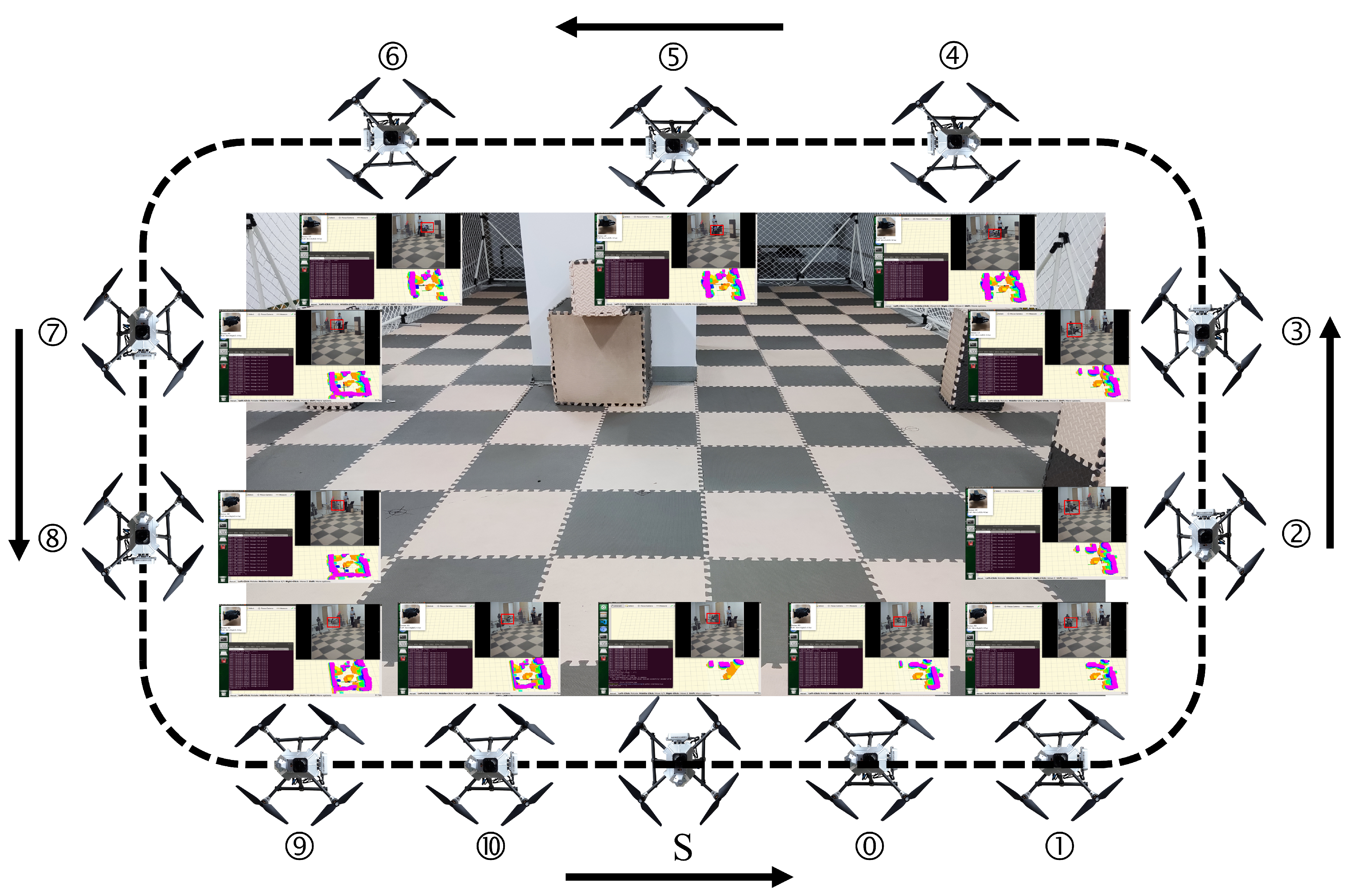
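As a rough illustration of the detection step, which the comparison table later in this document summarizes as "threshold detection and interval of inactivity", a minimal sketch follows. It is not the authors' Algorithm 1: the function name `segment_stream`, the parameters `threshold` and `quiet_len`, and the use of a single scalar motion-energy value per sample are all assumptions made for the example.

```python
from typing import Iterable, Iterator, List


def segment_stream(
    energy: Iterable[float], threshold: float, quiet_len: int
) -> Iterator[List[float]]:
    """Yield gesture segments from a stream of motion-energy samples.

    A segment starts at the first sample above `threshold` and ends once
    `quiet_len` consecutive samples stay at or below it (assumed rule).
    """
    segment: List[float] = []
    quiet = 0  # consecutive low-energy samples seen inside a segment
    for value in energy:
        if value > threshold:
            segment.append(value)
            quiet = 0
        elif segment:
            segment.append(value)
            quiet += 1
            if quiet >= quiet_len:
                # Drop the trailing quiet samples before emitting.
                yield segment[:-quiet]
                segment, quiet = [], 0
    # A segment still open when the stream ends is not emitted, because
    # its interval of inactivity has not been observed yet.


stream = [0, 0, 5, 6, 0, 7, 0, 0, 0, 4, 0, 0]
segments = list(segment_stream(stream, threshold=1, quiet_len=2))
```

Short dips below the threshold, such as the single 0 between 6 and 7, stay inside the segment, which is the point of requiring a full interval of inactivity before closing it.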

4. Experimental Results and Analysis

4.1. Comparison of Classification Accuracy for Different Classifiers and Hand Gestures

4.2. Comparison of Online Hand Gesture Recognition Performance under Different Hand Gesture Detection Methods

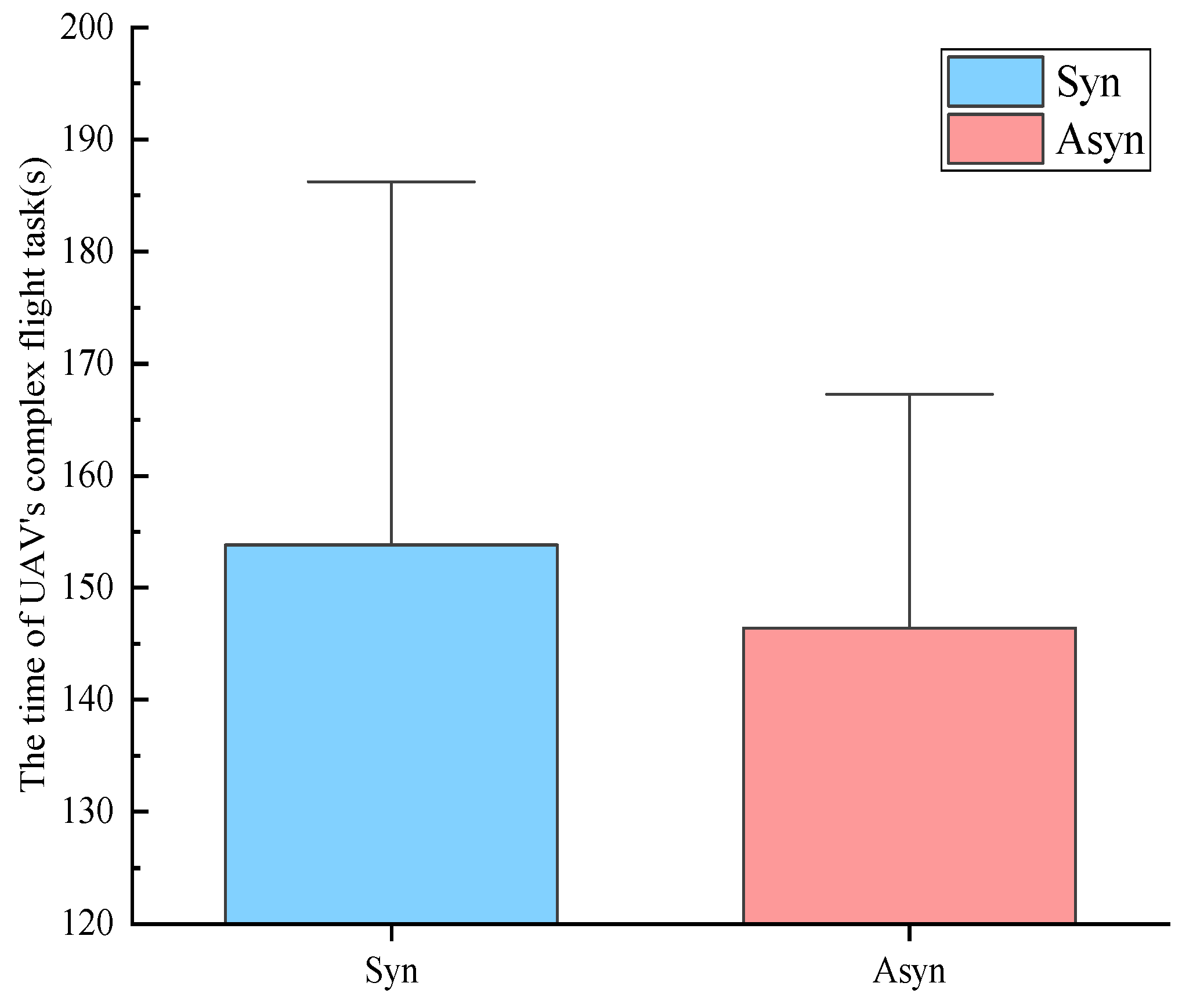

4.3. Comparison of Online Interaction Performance under Different Hand Gesture Detection Methods

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Oneata, D.; Cucu, H. Kite: Automatic speech recognition for unmanned aerial vehicles. arXiv 2019, arXiv:1907.01195.

- Smolyanskiy, N.; Gonzalez-Franco, M. Stereoscopic first person view system for drone navigation. Front. Robot. AI 2017, 4, 11.

- How, D.N.T.; Ibrahim, W.Z.F.B.W.; Sahari, K.S.M. A Dataglove Hardware Design and Real-Time Sign Gesture Interpretation. In Proceedings of the 2018 Joint 10th International Conference on Soft Computing and Intelligent Systems (SCIS) and 19th International Symposium on Advanced Intelligent Systems (ISIS), Toyama, Japan, 5–8 December 2018; pp. 946–949.

- Ilyina, I.A.; Eltikova, E.A.; Uvarova, K.A.; Chelysheva, S.D. Metaverse-Death to Offline Communication or Empowerment of Interaction? In Proceedings of the 2022 Communication Strategies in Digital Society Seminar (ComSDS), Saint Petersburg, Russia, 13 April 2022; pp. 117–119.

- Serpiva, V.; Karmanova, E.; Fedoseev, A.; Perminov, S.; Tsetserukou, D. DronePaint: Swarm Light Painting with DNN-based Gesture Recognition. In Proceedings of the ACM SIGGRAPH 2021 Emerging Technologies, Virtual Event, USA, 9–13 August 2021; pp. 1–4.

- Liu, C.; Szirányi, T. Real-time human detection and gesture recognition for on-board UAV rescue. Sensors 2021, 21, 2180.

- Lu, Z.; Chen, X.; Li, Q.; Zhang, X.; Zhou, P. A hand gesture recognition framework and wearable gesture-based interaction prototype for mobile devices. IEEE Trans. Hum. Mach. Syst. 2014, 44, 293–299.

- Zhou, S.; Zhang, G.; Chung, R.; Liou, J.Y.; Li, W.J. Real-time hand-writing tracking and recognition by integrated micro motion and vision sensors platform. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1747–1752.

- Yang, K.; Zhang, Z. Real-time pattern recognition for hand gesture based on ANN and surface EMG. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 24–26 May 2019; pp. 799–802.

- Krisandria, K.N.; Dewantara, B.S.B.; Pramadihanto, D. HOG-based Hand Gesture Recognition Using Kinect. In Proceedings of the 2019 International Electronics Symposium (IES), Surabaya, Indonesia, 27–28 September 2019; pp. 254–259.

- Li, J.; Liu, X.; Wang, Z.; Zhang, T.; Qiu, S.; Zhao, H.; Zhou, X.; Cai, H.; Ni, R.; Cangelosi, A. Real-Time Hand Gesture Tracking for Human–Computer Interface Based on Multi-Sensor Data Fusion. IEEE Sens. J. 2021, 21, 26642–26654.

- Mummadi, C.K.; Philips Peter Leo, F.; Deep Verma, K.; Kasireddy, S.; Scholl, P.M.; Kempfle, J.; Van Laerhoven, K. Real-time and embedded detection of hand gestures with an IMU-based glove. Proc. Inform. 2018, 5, 28.

- Makaussov, O.; Krassavin, M.; Zhabinets, M.; Fazli, S. A low-cost, IMU-based real-time on device gesture recognition glove. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3346–3351.

- Zhang, X.; Yang, Z.; Chen, T.; Chen, D.; Huang, M.C. Cooperative sensing and wearable computing for sequential hand gesture recognition. IEEE Sens. J. 2019, 19, 5775–5783.

- Jiang, S.; Lv, B.; Guo, W.; Zhang, C.; Wang, H.; Sheng, X.; Shull, P.B. Feasibility of wrist-worn, real-time hand, and surface gesture recognition via sEMG and IMU sensing. IEEE Trans. Ind. Inform. 2017, 14, 3376–3385.

- Neto, P.; Pereira, D.; Pires, J.N.; Moreira, A.P. Real-time and continuous hand gesture spotting: An approach based on artificial neural networks. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 178–183.

- Simão, M.A.; Neto, P.; Gibaru, O. Unsupervised gesture segmentation by motion detection of a real-time data stream. IEEE Trans. Ind. Inform. 2016, 13, 473–481.

- Li, Q.; Huang, C.; Yao, Z.; Chen, Y.; Ma, L. Continuous dynamic gesture spotting algorithm based on Dempster–Shafer Theory in the augmented reality human computer interaction. Int. J. Med. Robot. Comput. Assist. Surg. 2018, 14, e1931.

- Lee, M.; Bae, J. Deep learning based real-time recognition of dynamic finger gestures using a data glove. IEEE Access 2020, 8, 219923–219933.

- Choi, Y.; Hwang, I.; Oh, S. Wearable gesture control of agile micro quadrotors. In Proceedings of the 2017 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Daegu, Republic of Korea, 16–18 November 2017; pp. 266–271.

- Yu, Y.; Wang, X.; Zhong, Z.; Zhang, Y. ROS-based UAV control using hand gesture recognition. In Proceedings of the 2017 29th Chinese Control and Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 6795–6799.

- Yu, C.; Fan, S.; Liu, Y.; Shu, Y. End-Side Gesture Recognition Method for UAV Control. IEEE Sens. J. 2022, 22, 24526–24540.

- Zhou, B.; Gao, F.; Wang, L.; Liu, C.; Shen, S. Robust and efficient quadrotor trajectory generation for fast autonomous flight. IEEE Robot. Autom. Lett. 2019, 4, 3529–3536.

- Zhou, X.; Wang, Z.; Ye, H.; Xu, C.; Gao, F. EGO-Planner: An ESDF-free gradient-based local planner for quadrotors. IEEE Robot. Autom. Lett. 2020, 6, 478–485.

- Han, H.; Yoon, S.W. Gyroscope-based continuous human hand gesture recognition for multi-modal wearable input device for human machine interaction. Sensors 2019, 19, 2562.

- Chen, W.; Zhang, Z. Hand gesture recognition using sEMG signals based on support vector machine. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 24–26 May 2019; pp. 230–234.

- Chen, Y.; Luo, B.; Chen, Y.L.; Liang, G.; Wu, X. A real-time dynamic hand gesture recognition system using Kinect sensor. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 2026–2030.

- Lian, K.Y.; Chiu, C.C.; Hong, Y.J.; Sung, W.T. Wearable armband for real time hand gesture recognition. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 2992–2995.

- Wang, N.; Chen, Y.; Zhang, X. The recognition of multi-finger prehensile postures using LDA. Biomed. Signal Process. Control 2013, 8, 706–712.

- Joshi, A.; Monnier, C.; Betke, M.; Sclaroff, S. A random forest approach to segmenting and classifying gestures. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 1, pp. 1–7.

- Jia, R.; Yang, L.; Li, Y.; Xin, Z. Gestures recognition of sEMG signal based on Random Forest. In Proceedings of the 2021 IEEE 16th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 August 2021; pp. 1673–1678.

- Liang, X.; Ghannam, R.; Heidari, H. Wrist-worn gesture sensing with wearable intelligence. IEEE Sens. J. 2018, 19, 1082–1090.

- Dellacasa Bellingegni, A.; Gruppioni, E.; Colazzo, G.; Davalli, A.; Sacchetti, R.; Guglielmelli, E.; Zollo, L. NLR, MLP, SVM, and LDA: A comparative analysis on EMG data from people with trans-radial amputation. J. Neuroeng. Rehabil. 2017, 14, 1–16.

- Zhang, S.; Liu, X.; Yu, J.; Zhang, L.; Zhou, X. Research on Multi-modal Interactive Control for Quadrotor UAV. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, AB, Canada, 9–11 May 2019; pp. 329–334.

- Lin, B.S.; Hsiao, P.C.; Yang, S.Y.; Su, C.S.; Lee, I.J. Data glove system embedded with inertial measurement units for hand function evaluation in stroke patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 2204–2213.

- Xu, P.F.; Liu, Z.X.; Li, F.; Wang, H.P. A Low-Cost Wearable Hand Gesture Detecting System Based on IMU and Convolutional Neural Network. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 6999–7002.

- Hu, B.; Wang, J. Deep learning based hand gesture recognition and UAV flight controls. Int. J. Autom. Comput. 2020, 17, 17–29.

| ID | Hand Gesture | Comment | ID | Hand Gesture | Comment |
|---|---|---|---|---|---|
| S1 | LD |  | S2 | RD |  |
| S3 | UM |  | S4 | DM |  |
| S5 | HF |  | S6 | CF |  |
| S7 | TU |  | S8 | OS |  |
| S9 | LF |  | S10 | RS |  |

| ID | Hand Gesture | Comment |
|---|---|---|
| C1 | HF+LD |  |
| C2 | HF+RD |  |
| C3 | HF+UM |  |
| C4 | HF+DM |  |

| ID | Hand Gesture | Flight Motion |
|---|---|---|
| S1 | LD | Move Left |
| S2 | RD | Move Right |
| S3 | UM | Move Up |
| S4 | DM | Move Down |
| S5 | HF | Wait for Combination |
| S6 | CF | Disarm |
| S7 | TU | Take off on High |
| S8 | OS | Arm |
| S9 | LF | Hover |
| S10 | RS | Forced Land |
| C1 | HF+LD | Move Forward |
| C2 | HF+RD | Move Backward |
| C3 | HF+UM | Turn Left |
| C4 | HF+DM | Turn Right |
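The mapping in the table above can be sketched as a small lookup that treats HF as a prefix for the combined commands C1 to C4. This is an illustration only: the names `SINGLE`, `COMBINED`, and `to_commands` are hypothetical, and the rule that a gesture following HF which does not form a listed combination falls back to its single-gesture meaning is an assumption, since the table does not say what happens in that case.

```python
from typing import Dict, Iterable, List

# Single gestures S1-S10 from the table (HF is handled as a prefix).
SINGLE: Dict[str, str] = {
    "LD": "Move Left", "RD": "Move Right",
    "UM": "Move Up", "DM": "Move Down",
    "CF": "Disarm", "TU": "Take off on High",
    "OS": "Arm", "LF": "Hover", "RS": "Forced Land",
}

# Combined gestures C1-C4: HF followed by one of these gestures.
COMBINED: Dict[str, str] = {
    "LD": "Move Forward", "RD": "Move Backward",
    "UM": "Turn Left", "DM": "Turn Right",
}


def to_commands(gestures: Iterable[str]) -> List[str]:
    """Translate a recognized gesture sequence into flight motions."""
    commands: List[str] = []
    waiting = False  # True right after HF ("Wait for Combination")
    for gesture in gestures:
        if waiting:
            waiting = False
            if gesture in COMBINED:
                commands.append(COMBINED[gesture])
                continue
            # Assumption: otherwise interpret the gesture on its own.
        if gesture == "HF":
            waiting = True
            continue
        commands.append(SINGLE[gesture])
    return commands


flight = to_commands(["OS", "TU", "HF", "LD", "LD", "HF", "UM", "RS"])
```

Keeping the combination state in one flag makes the fallback rule easy to change if a different policy, such as discarding an unmatched HF sequence, is preferred.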

| Subject | GNB (%) | RF (%) | SVM (%) | KNN (%) | LDA (%) |
|---|---|---|---|---|---|
| Subject 1 | 100.00 | 100.00 | 99.14 | 100.00 | 99.14 |
| Subject 2 | 96.55 | 90.83 | 84.91 | 100.00 | 55.39 |
| Subject 3 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Subject 4 | 99.14 | 82.50 | 80.39 | 99.14 | 96.55 |
| Subject 5 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Subject 6 | 92.24 | 91.67 | 72.41 | 88.58 | 93.10 |
| Subject 7 | 100.00 | 100.00 | 100.00 | 100.00 | 99.14 |
| Subject 8 | 98.28 | 100.00 | 98.28 | 98.28 | 97.41 |
| Subject 9 | 98.28 | 98.33 | 93.75 | 98.28 | 96.55 |
| Subject 10 | 100.00 | 98.33 | 100.00 | 100.00 | 100.00 |
| Avg. | 98.45 | 96.17 | 92.89 | 98.43 | 93.73 |

| Subject | RF (%) | SVM (%) | KNN (%) | LDA (%) | GNB (%) |
|---|---|---|---|---|---|
| Subject 1 | 98.33 | 93.33 | 71.67 | 93.33 | 88.33 |
| Subject 2 | 85.00 | 91.67 | 91.67 | 88.33 | 83.33 |
| Subject 3 | 100.00 | 100.00 | 96.67 | 96.67 | 100.00 |
| Subject 4 | 95.00 | 86.67 | 83.33 | 90.00 | 90.00 |
| Subject 5 | 98.33 | 98.33 | 98.33 | 95.00 | 98.33 |
| Subject 6 | 93.33 | 81.67 | 86.67 | 86.67 | 80.00 |
| Subject 7 | 98.33 | 95.00 | 90.00 | 95.00 | 95.00 |
| Subject 8 | 100.00 | 95.00 | 88.33 | 96.67 | 95.00 |
| Subject 9 | 96.67 | 90.00 | 83.33 | 78.33 | 78.33 |
| Subject 10 | 93.33 | 93.33 | 90.00 | 96.67 | 91.67 |
| Avg. | 95.83 | 92.50 | 88.00 | 91.67 | 90.00 |

| Detection Method | Subject | Recognition Accuracy (%) | Recognition Time (ms) | Total Time (s) |
|---|---|---|---|---|
| Syn | Subject 1 | 87 | 8.0 | 3.0488 |
| Syn | Subject 2 | 87 | 7.9 | 3.0533 |
| Syn | Subject 3 | 100 | 8.0 | 3.0485 |
| Syn | Subject 4 | 63 | 7.9 | 3.0485 |
| Syn | Subject 5 | 80 | 7.3 | 3.0119 |
| Syn | Avg. | 83 | 7.8 | 3.0422 |
| Asyn | Subject 1 | 83 | 7.6 | 3.5267 |
| Asyn | Subject 2 | 77 | 7.5 | 2.4560 |
| Asyn | Subject 3 | 100 | 8.5 | 2.8597 |
| Asyn | Subject 4 | 100 | 6.8 | 3.1599 |
| Asyn | Subject 5 | 100 | 7.2 | 2.8525 |
| Asyn | Avg. | 92 | 7.5 | 2.9710 |
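The Avg. rows of the table above can be recomputed from the per-subject values, which is a quick way to confirm the gap between the synchronous (Syn) and asynchronous (Asyn) methods. The sketch below copies the numbers from the table; the names `rows`, `column_means`, and `averages` are introduced here for illustration only.

```python
from statistics import fmean
from typing import Dict, List, Tuple

# (accuracy %, recognition time ms, total time s) per subject, from the table.
rows: Dict[str, List[Tuple[float, float, float]]] = {
    "Syn": [
        (87, 8.0, 3.0488), (87, 7.9, 3.0533), (100, 8.0, 3.0485),
        (63, 7.9, 3.0485), (80, 7.3, 3.0119),
    ],
    "Asyn": [
        (83, 7.6, 3.5267), (77, 7.5, 2.4560), (100, 8.5, 2.8597),
        (100, 6.8, 3.1599), (100, 7.2, 2.8525),
    ],
}


def column_means(records: List[Tuple[float, float, float]]) -> Tuple[int, float, float]:
    """Average each column, rounded to the precision used in the table."""
    accuracy, rec_ms, total_s = zip(*records)
    return round(fmean(accuracy)), round(fmean(rec_ms), 1), round(fmean(total_s), 4)


averages = {method: column_means(records) for method, records in rows.items()}
accuracy_gain = averages["Asyn"][0] - averages["Syn"][0]  # percentage points
```

With these inputs the recomputed means match the Avg. rows, and the asynchronous method comes out 9 percentage points higher in average recognition accuracy.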

| Subject | Efficiency of Synchronous Interaction | Efficiency of Asynchronous Interaction |
|---|---|---|
| Subject 1 | 0.4323 | 0.4027 |
| Subject 2 | 0.4655 | 0.5037 |
| Subject 3 | 0.5038 | 0.5043 |
| Subject 4 | 0.3771 | 0.4314 |
| Subject 5 | 0.5036 | 0.5039 |
| Avg. | 0.4564 | 0.4692 |

| Research | [35] | [12] | [13] | [36] | This Work |
|---|---|---|---|---|---|
| Components | MSP430, 27 IMUs, Bluetooth | Intel Edison, 5 IMUs, Bluetooth and WiFi | Arduino Nano 33BLE, 5 IMUs, Bluetooth and USB | STM32F103RCT6, 15 IMUs, Bluetooth | STM32L151CCT, 5 IMUs, USB and Bluetooth |
| Model | k-means clustering | Naïve Bayes, MLP, RF | RNN | CNN | GNB + RF |
| Offline average recognition accuracy | 70.22% for three tasks from 15 healthy subjects and 15 stroke patients | 92% for 22 distinct hand gestures from 57 participants | 95% for an 8-class decoding task from 3 subjects | 98.79% for 53 hand gestures from 22 subjects | 98.45% for gesture and non-gesture; 95.83% for 10 hand gestures from 10 subjects |

| Research | [16] | [17] | [18] | [19] | This Work |
|---|---|---|---|---|---|
| Hand gesture segmentation | analyze each frame from the glove sensors | unsupervised threshold-based segmentation | based on Dempster–Shafer theory | detect the start/end of a hand gesture sequence by estimating a scalar value | threshold detection and interval of inactivity |
| Hand gesture recognition | two ANNs in series |  | HMM | based on two LSTM layers | GNB cascading with RF |
| Real-time performance | computational time is about 9 min for 10 hand gestures | average segmentation delay is 263 ms | based on the evidence reasoning, the delay between spotting and recognition is eliminated | no more than 12 ms to recognize the completed hand gesture in real time | about 7.8 ms to recognize the completed hand gesture |
| Segmentation or recognition accuracy | over 99% for a library of 10 gestures and over 96% for a library of 30 gestures | segmentation accuracy can rise to 100% at a window size of 24 frames and average oversegmentation error is 2.70% | the recognition accuracy (95.2%) after spotting is higher than the accuracy (96.7%) of simultaneous recognition with spotting | offline recognition accuracy is 100% | online recognition accuracy up to 92% |

| Research | [20] | [21] | [37] | [22] | This Work |
|---|---|---|---|---|---|
| Hand gesture command | forward, backward, left, right, ascent, descent, hovering, rotating clockwise, rotating anticlockwise | take off, land, height down, hover, height up, pilot | move forward, move backward, turn left, turn right, move up, move down, turn clockwise, turn anticlockwise, special movement 1, special movement 2 | basic commands: throttle up, throttle down, pitch down, pitch up, roll left, roll right, yaw left, yaw right, flag, no command; mode switching commands: arm, disarm, position mode, hold mode, return mode | move left, move right, move up, move down, wait for combination, disarm, take off on high, hover, forced land, move forward, move backward, turn left, turn right |
| UAV flight | basic action flight | basic action flight | basic action flight | simple flight mission, artificial collision avoidance | complex flight task, automatic collision avoidance |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, C.; Zhang, H.; Pei, Y.; Xie, L.; Yan, Y.; Yin, E.; Jin, J. Online Hand Gesture Detection and Recognition for UAV Motion Planning. Machines 2023, 11, 210. https://doi.org/10.3390/machines11020210
