A SLAM-Based Localization and Navigation System for Social Robots: The Pepper Robot Case

Abstract

1. Introduction

- Its review of the recently developed Pepper robot navigation systems for indoor environments.

- Its development of a method for generating useful 2D metric maps directly from the data collected by Pepper’s onboard sensors, which overcomes the limitations in the current ROS-based map production systems.

- Its development of efficient navigation and localization systems for navigating complex environments, using Pepper’s limited sensor suite.

- Its presentation of a set of efficiency metrics to assess the developed systems.

- Its assessment of user acceptability for the developed navigation system.

2. Related Works

- Navigation method: the methods and algorithms employed in the developed application. These can be geometric-based, semantic-based, or hybrid approaches.

- Employed sensors: different types of sensors can be integrated into the navigation task, with varying levels of computational complexity.

- Development environment: Pepper robot applications can be developed using either Choregraphe or ROS. Each environment has its own advantages and drawbacks.

- System’s efficiency: the efficiency of the developed navigation system, analyzed in terms of success rate, absolute trajectory error (ATE), localization accuracy, map production, and user acceptability.

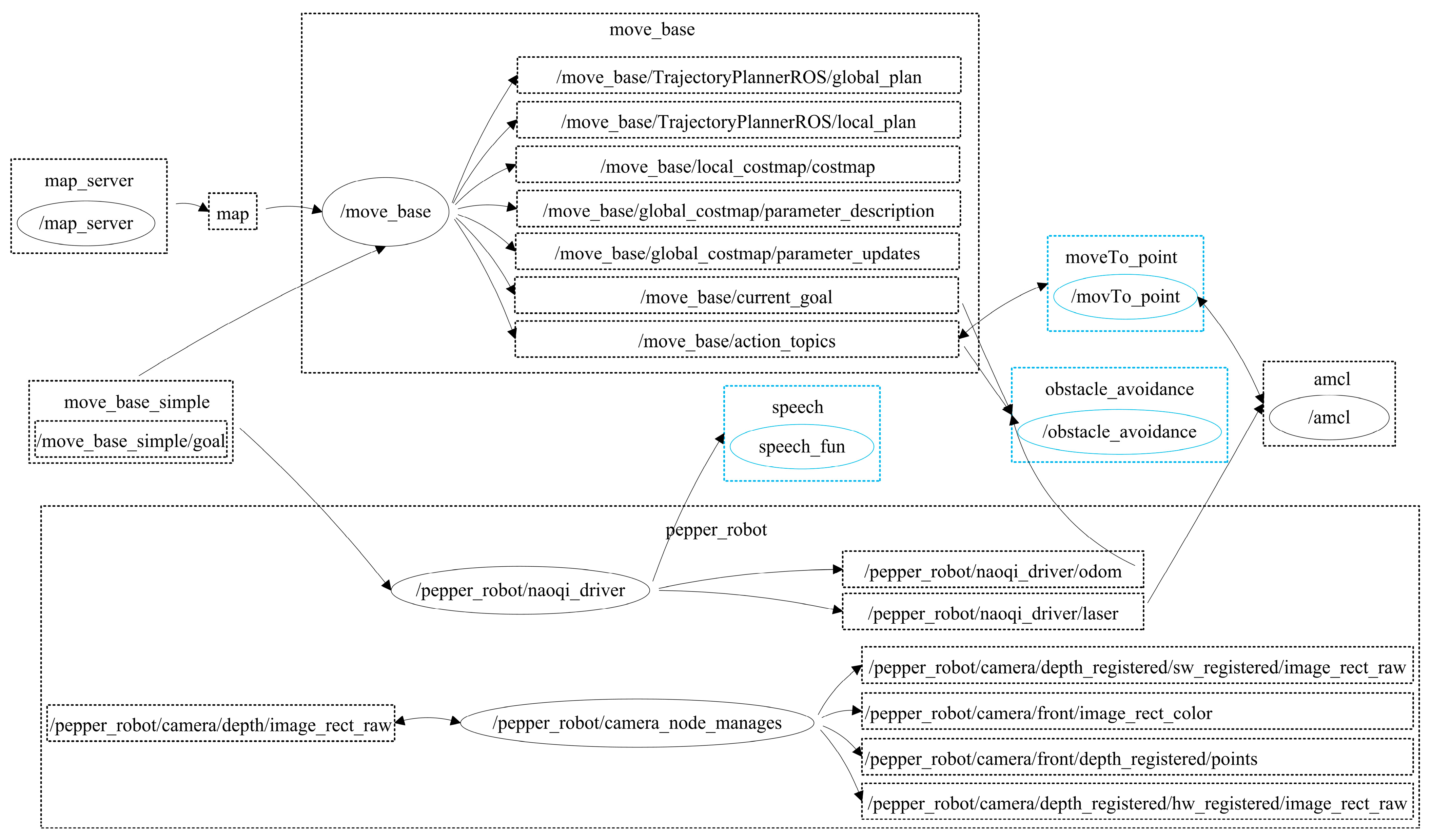

3. Proposed Pepper Navigation System

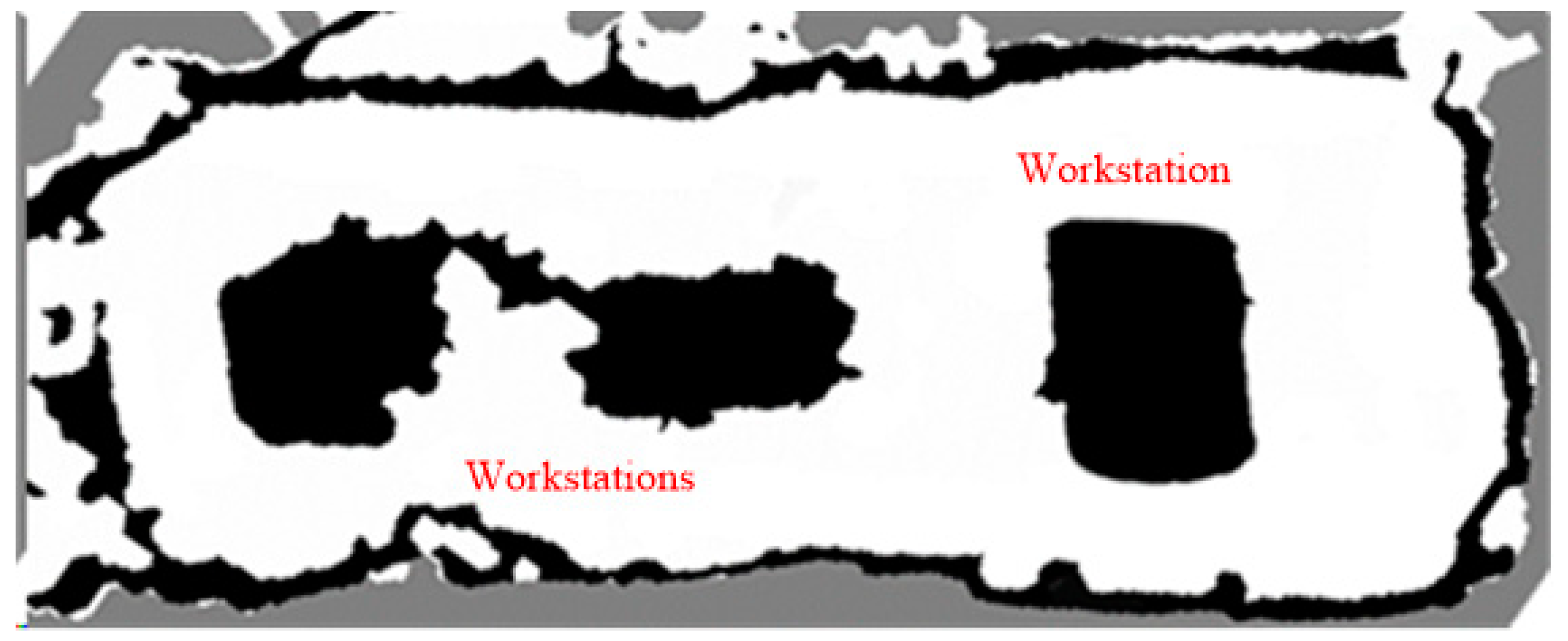

3.1. Map Production Function

3.2. Navigation and Localization Functions

3.3. Human–Robot Interaction Function

4. Experiments and Results

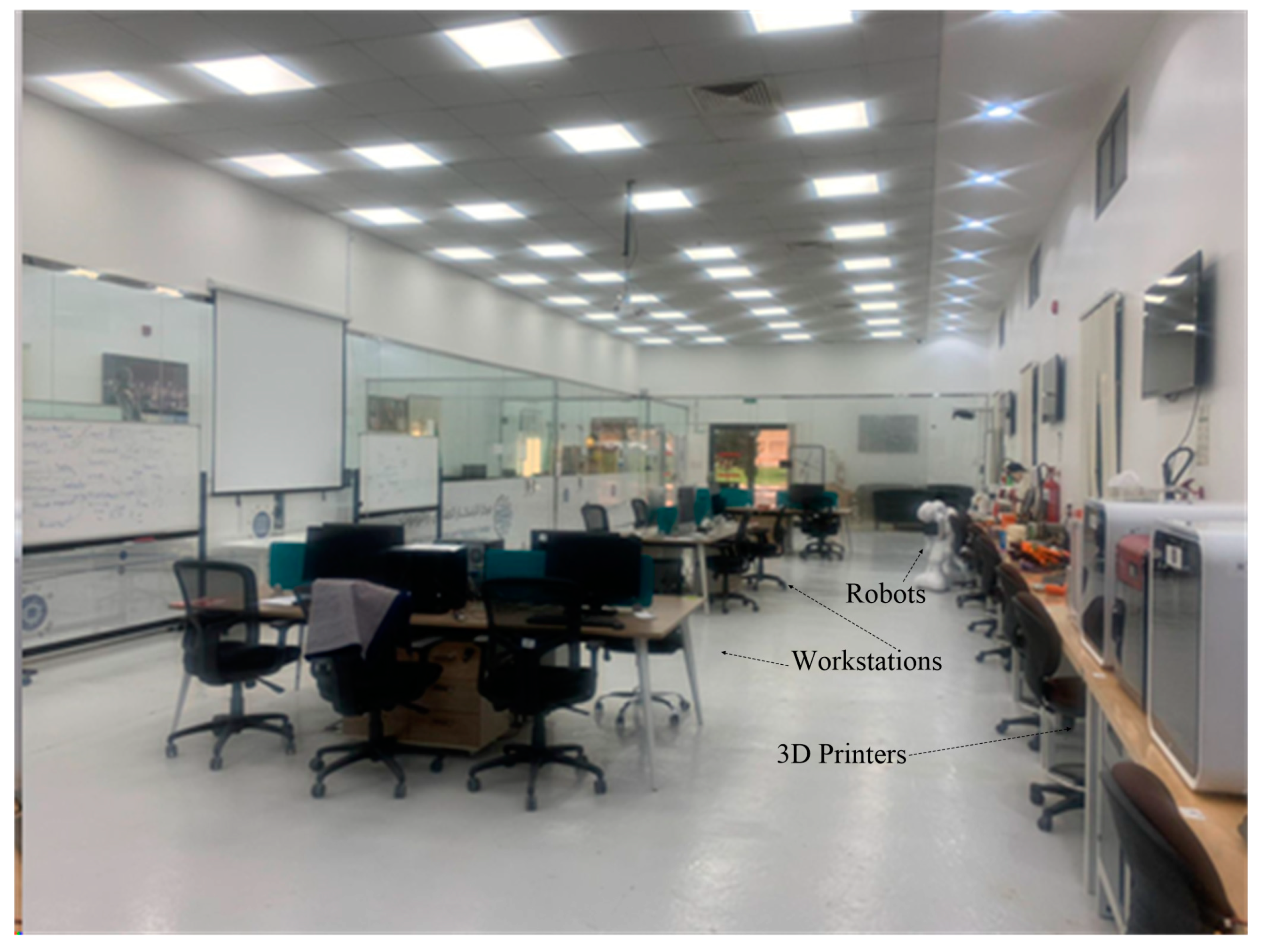

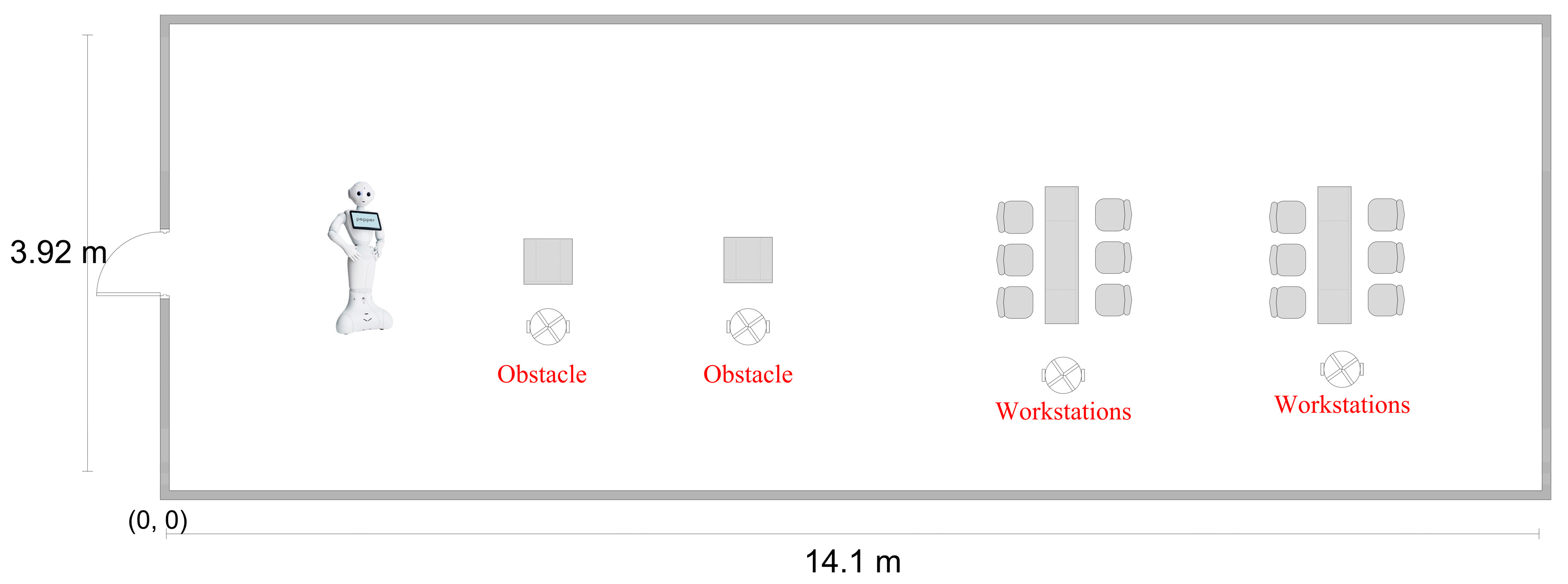

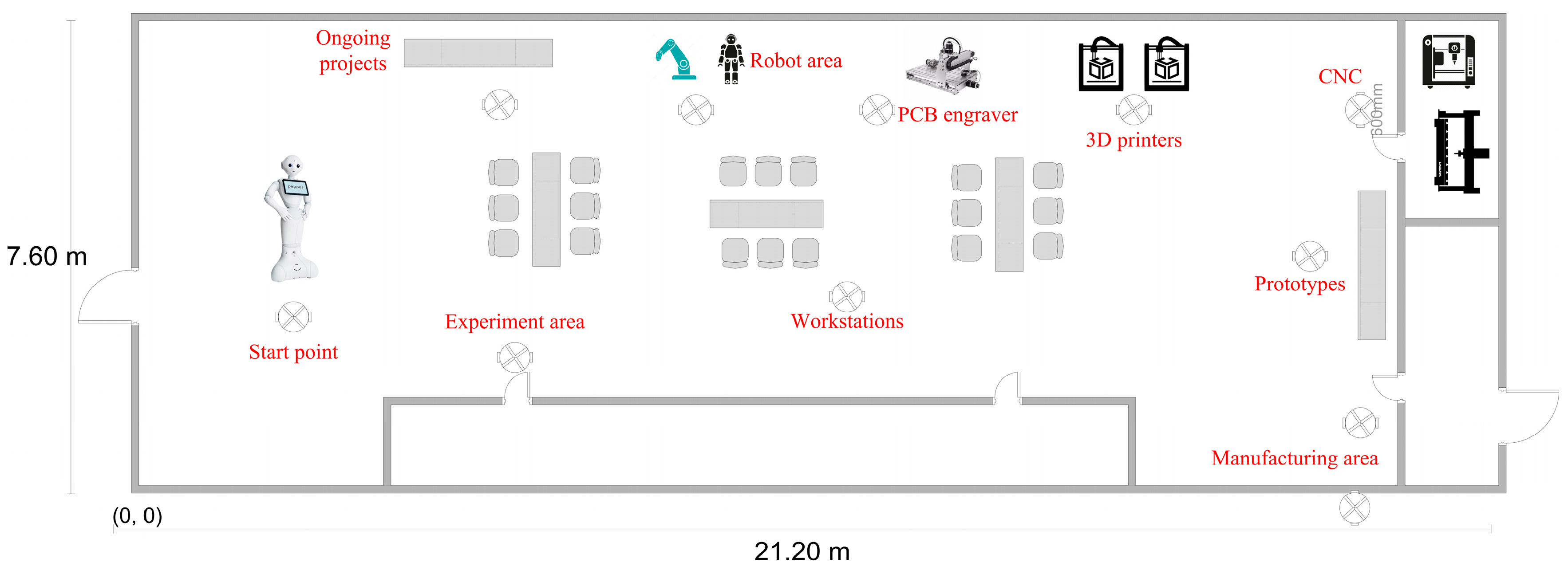

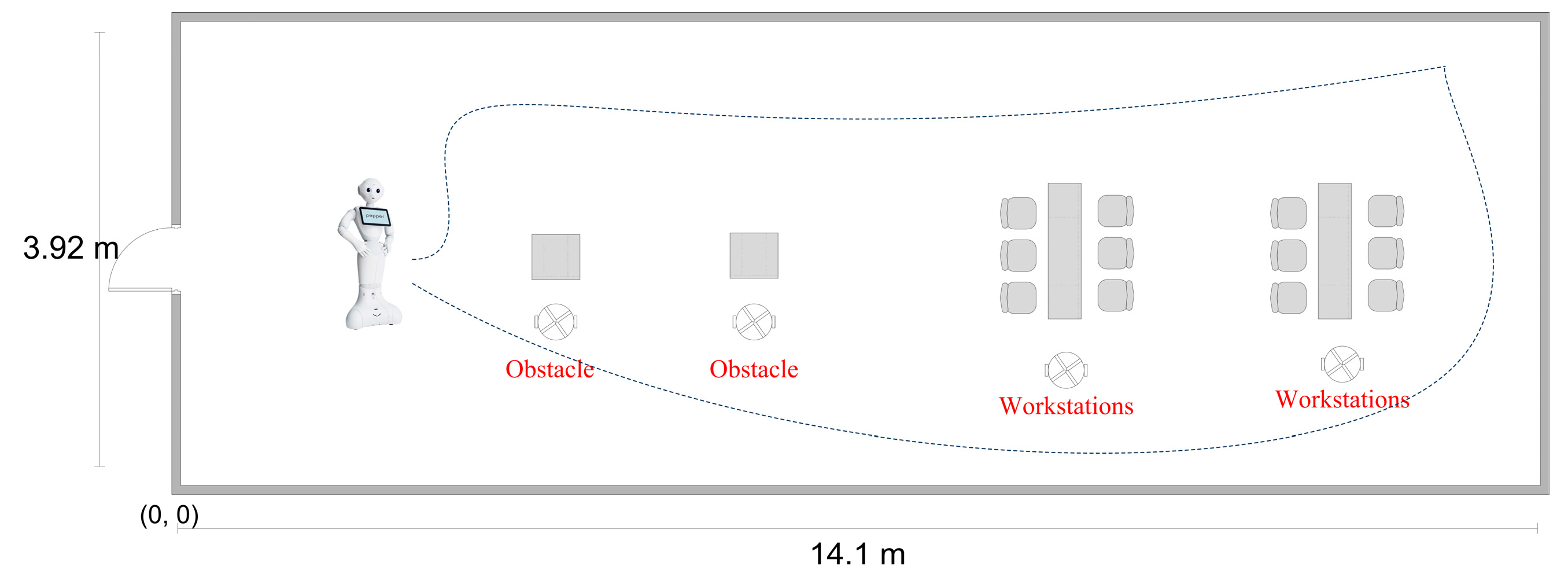

4.1. Experimental Setup

4.2. Results

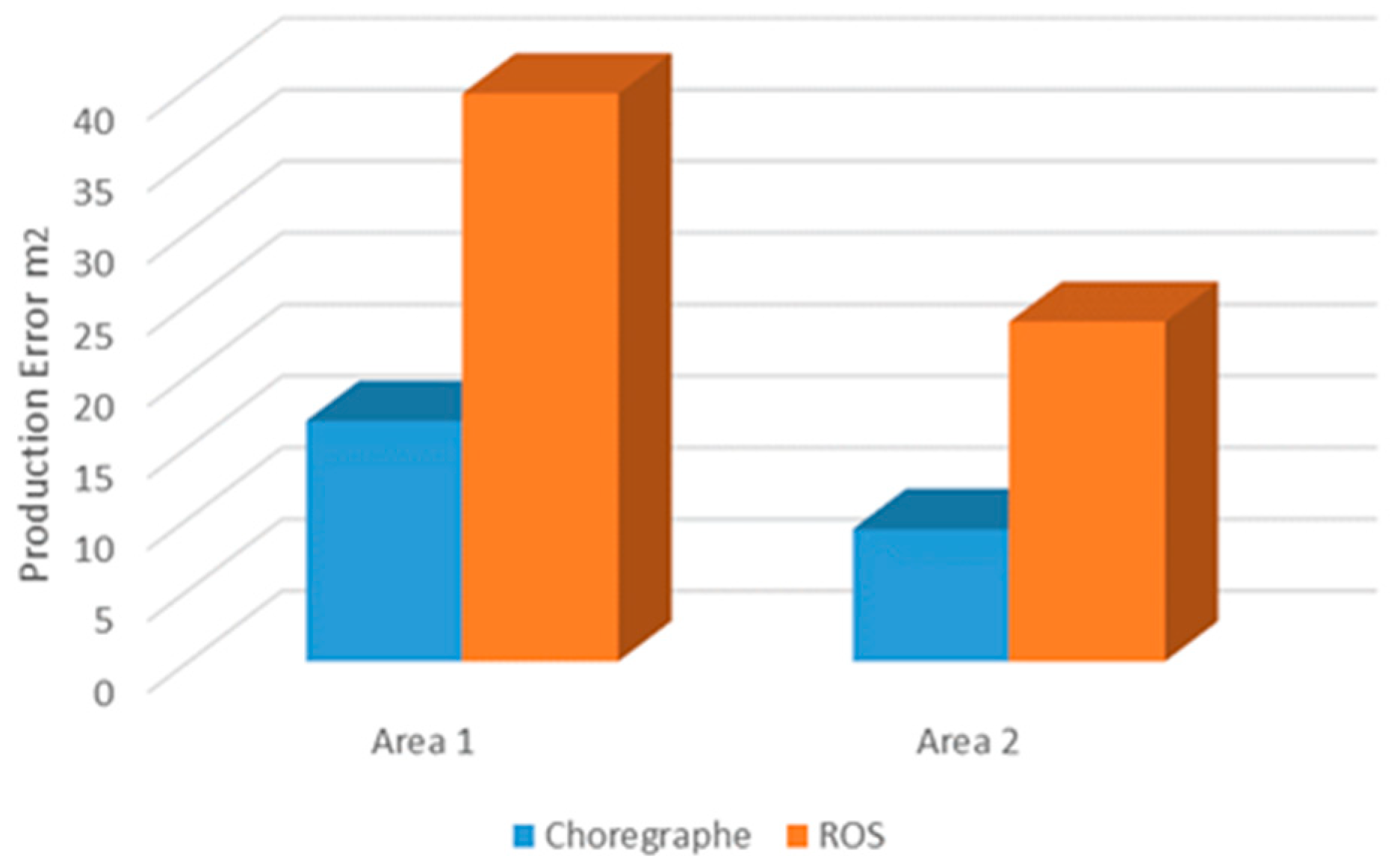

- Map production task: this assesses the accuracy of the produced map for the area of interest.

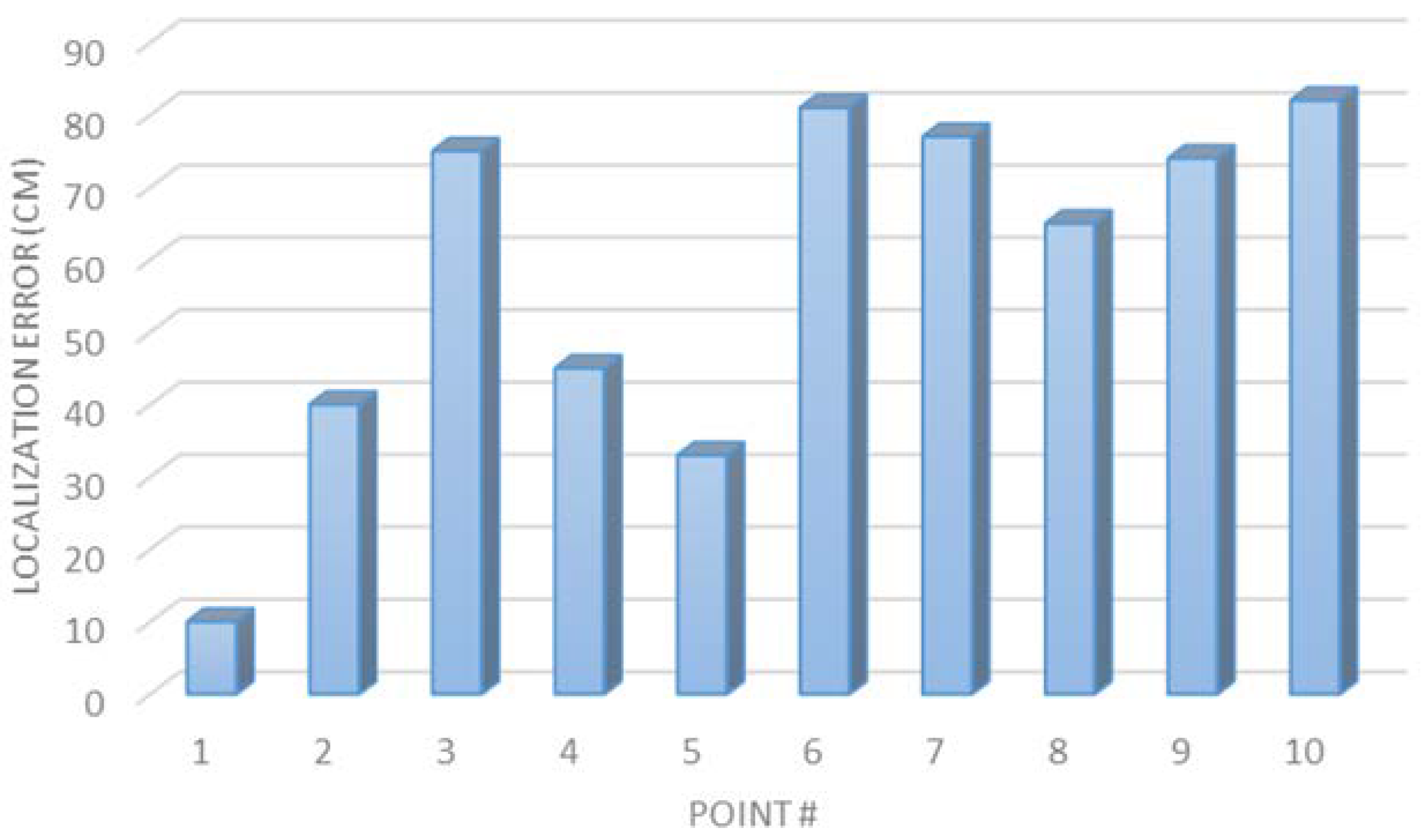

- Robot localization error: this estimates the difference between the robot’s actual position and its estimated position.

- Absolute trajectory error (ATE): this shows the average trajectory error for the robot when traveling in the area of interest.

- Success rate: This measures the robot’s ability to reach its goal. In addition, the success rate involves the number of collisions and timeouts.

- Robot path: this shows Pepper’s safe path to navigate from one reference point to another in the area of interest.

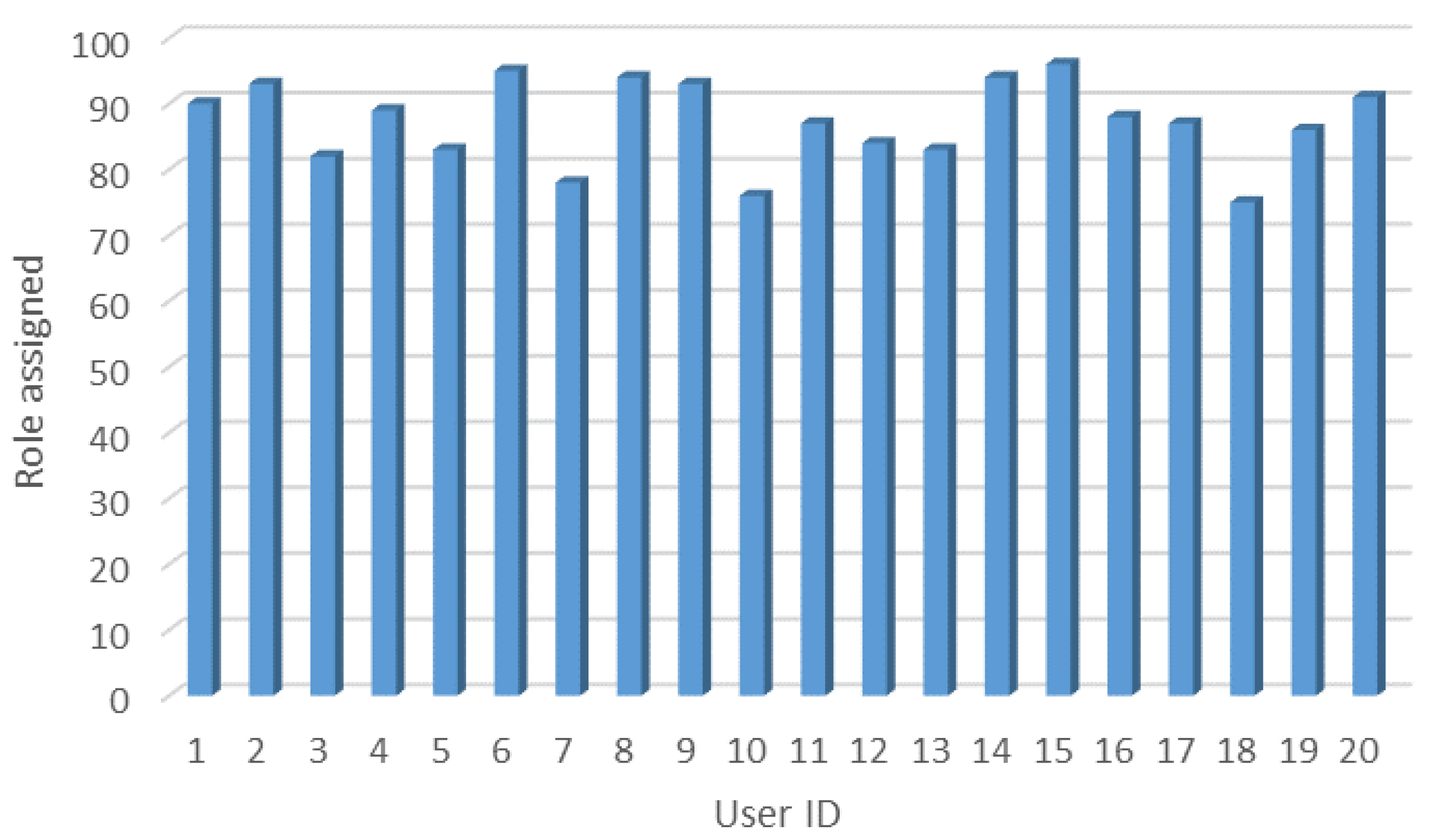

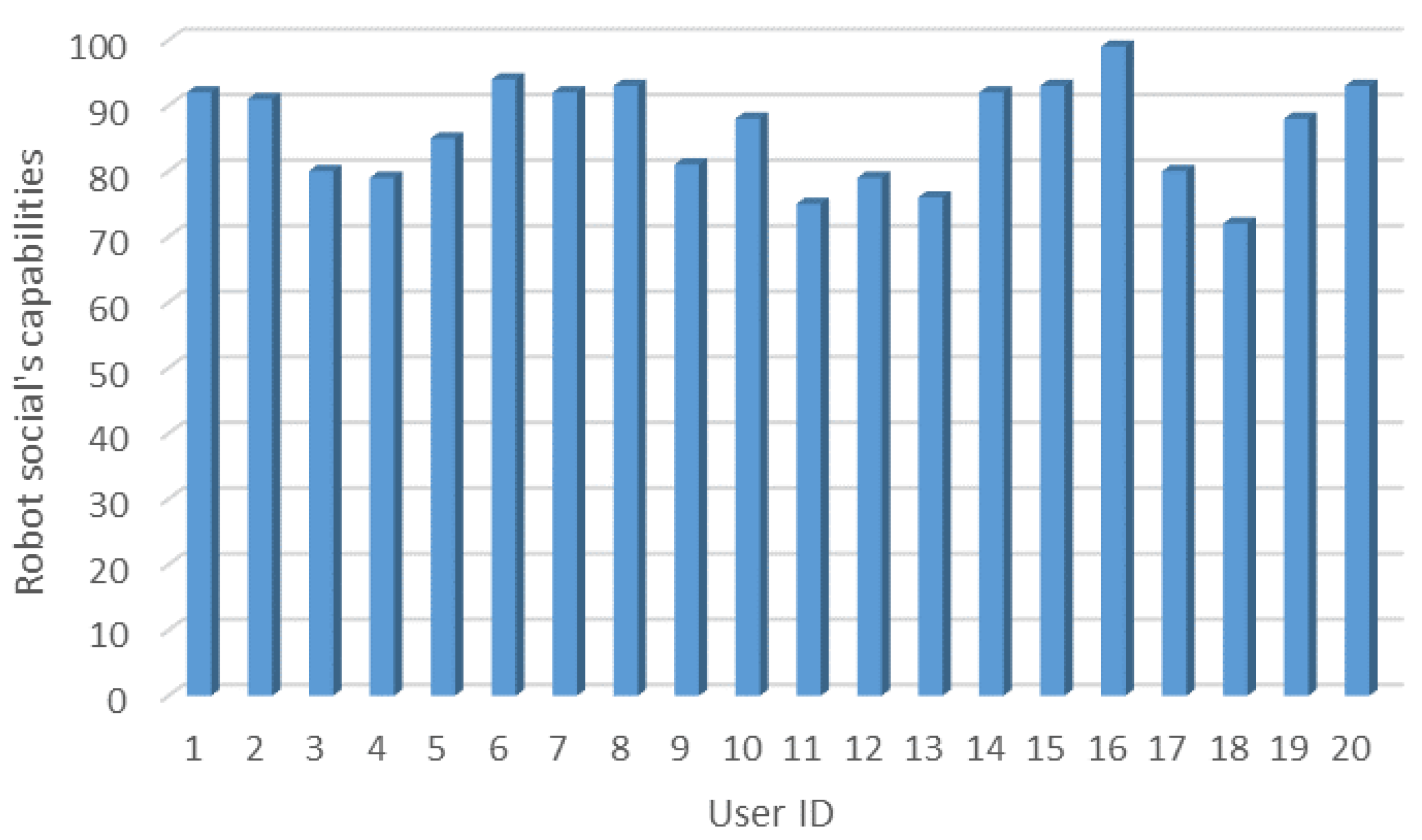

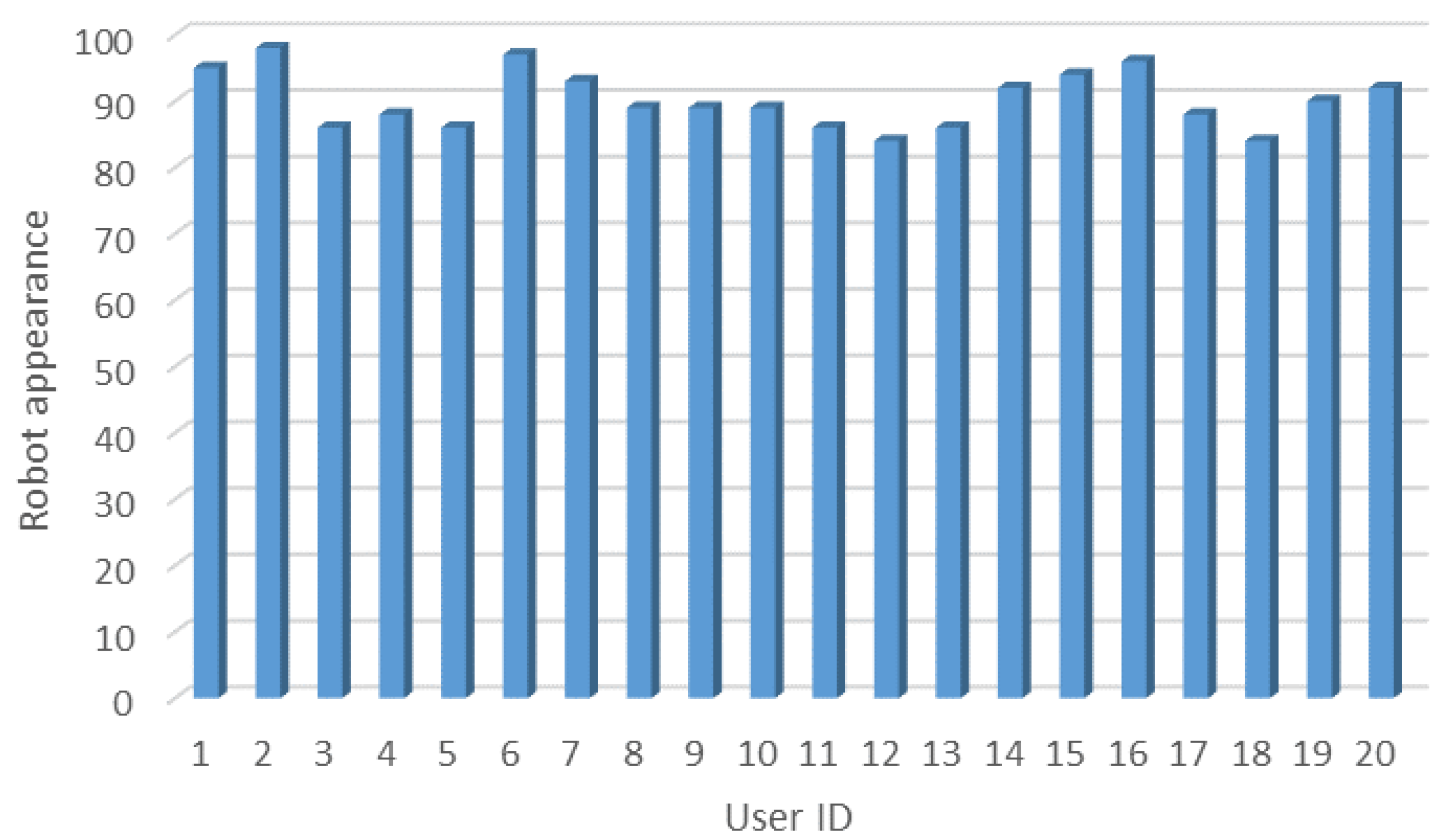

- User acceptability: this refers to the overall acceptability of the social robot navigation system by its users.
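The localization error and ATE metrics listed above can be illustrated with a short sketch. The text here does not give the exact formulas, so the code below is an assumption: it treats the localization error as the Euclidean distance between the actual and estimated positions, computes a per-axis mean absolute error (matching the "average" wording), and also shows the RMSE form commonly used in SLAM benchmarks. The function names are hypothetical, and the trajectories are assumed to be time-aligned and expressed in the same frame and units.

```python
import math


def localization_error(actual, estimated):
    """Euclidean distance between an actual and an estimated (x, y) position."""
    return math.hypot(actual[0] - estimated[0], actual[1] - estimated[1])


def _check_paired(ground_truth, estimated):
    """Both trajectories must be non-empty and sampled at the same instants."""
    if not ground_truth or len(ground_truth) != len(estimated):
        raise ValueError("trajectories must be non-empty and of equal length")


def per_axis_ate(ground_truth, estimated):
    """Mean absolute error along x and along y (assumed 'average' form)."""
    _check_paired(ground_truth, estimated)
    n = len(ground_truth)
    ate_x = sum(abs(g[0] - e[0]) for g, e in zip(ground_truth, estimated)) / n
    ate_y = sum(abs(g[1] - e[1]) for g, e in zip(ground_truth, estimated)) / n
    return ate_x, ate_y


def rmse_ate(ground_truth, estimated):
    """Root-mean-square of the per-sample translational error."""
    _check_paired(ground_truth, estimated)
    n = len(ground_truth)
    squared = sum(localization_error(g, e) ** 2
                  for g, e in zip(ground_truth, estimated))
    return math.sqrt(squared / n)


if __name__ == "__main__":
    # Illustrative values only; not data from the experiments.
    truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    estimate = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
    print(per_axis_ate(truth, estimate))
    print(rmse_ate(truth, estimate))
```

Reporting the error per axis, as in this sketch, makes it easier to see whether drift is dominated by one direction of travel, while the RMSE form gives a single figure that penalizes large deviations more heavily.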

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Krägeloh, C.U.; Bharatharaj, J.; Kutty, S.K.S.; Nirmala, P.R.; Huang, L. Questionnaires to Measure Acceptability of Social Robots: A Critical Review. Robotics 2019, 8, 88.

- Kim, P.; Chen, J.; Kim, J.; Cho, Y.K. SLAM-driven intelligent autonomous mobile robot navigation for construction applications. In Workshop of the European Group for Intelligent Computing in Engineering; Springer: Cham, Switzerland, 2018; pp. 254–269.

- Ross, R.; Hoque, R. Augmenting GPS with Geolocated Fiducials to Improve Accuracy for Mobile Robot Applications. Appl. Sci. 2019, 10, 146.

- Alhmiedat, T.A.; Abutaleb, A.; Samara, G. A Prototype Navigation System for Guiding Blind People Indoors using NXT Mindstorms. Int. J. Online Biomed. Eng. (iJOE) 2013, 9, 52.

- Alamri, S.; Alshehri, S.; Alshehri, W.; Alamri, H.; Alaklabi, A.; Alhmiedat, T. Autonomous maze solving robotics: Algorithms and systems. Int. J. Mech. Eng. Robot. Res. 2021, 10, 12.

- Efstratiou, R.; Karatsioras, C.; Papadopoulou, M.; Papadopoulou, C.; Lytridis, C.; Bazinas, C.; Papakostas, G.A.; Kaburlasos, V.G. Teaching Daily Life Skills in Autism Spectrum Disorder (ASD) Interventions Using the Social Robot Pepper. In Proceedings of the International Conference on Robotics in Education (RiE); Springer: Cham, Switzerland, 2020; pp. 86–97.

- De Jong, M.; Zhang, K.; Roth, A.M.; Rhodes, T.; Schmucker, R.; Zhou, C.; Ferreira, S.; Cartucho, J.; Veloso, M. Towards a robust interactive and learning social robot. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 883–891.

- Schrum, M.; Park, C.H.; Howard, A. Humanoid therapy robot for encouraging exercise in dementia patients. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; IEEE: Manhattan, NY, USA, 2019; pp. 564–565.

- Corallo, F.; Maresca, G.; Formica, C.; Bonanno, L.; Bramanti, A.; Parasporo, N.; Giambò, F.M.; De Cola, M.C.; Buono, V.L. Humanoid Robot Use in Cognitive Rehabilitation of Patients with Severe Brain Injury: A Pilot Study. J. Clin. Med. 2022, 11, 2940.

- Ziouzios, D.; Rammos, D.; Bratitsis, T.; Dasygenis, M. Utilizing Educational Robotics for Environmental Empathy Cultivation in Primary Schools. Electronics 2021, 10, 2389.

- Getson, C.; Nejat, G. Socially Assistive Robots Helping Older Adults through the Pandemic and Life after COVID-19. Robotics 2021, 10, 106.

- Gómez, C.; Mattamala, M.; Resink, T.; Ruiz-Del-Solar, J. Visual SLAM-Based Localization and Navigation for Service Robots: The Pepper Case. In Robot World Cup; Springer: Cham, Switzerland, 2018; pp. 32–44.

- Suddrey, G.; Jacobson, A.; Ward, B. Enabling a pepper robot to provide automated and interactive tours of a robotics laboratory. arXiv 2018, arXiv:1804.03288.

- Nussey, S. EXCLUSIVE SoftBank Shrinks Robotics Business, Stops Pepper Production—Sources. Reuters, 29 June 2021. Available online: https://www.reuters.com/technology/exclusive-softbank-shrinks-robotics-business-stops-pepper-production-sources-2021-06-28/ (accessed on 16 January 2023).

- Ravankar, A.; Ravankar, A.A.; Kobayashi, Y.; Hoshino, Y.; Peng, C.-C. Path Smoothing Techniques in Robot Navigation: State-of-the-Art, Current and Future Challenges. Sensors 2018, 18, 3170.

- Gul, F.; Rahiman, W.; Alhady, S.S.N.; Chen, K. A comprehensive study for robot navigation techniques. Cogent Eng. 2019, 6, 1632046.

- Alenzi, Z.; Alenzi, E.; Alqasir, M.; Alruwaili, M.; Alhmiedat, T.; Alia, O.M. A Semantic Classification Approach for Indoor Robot Navigation. Electronics 2022, 11, 2063.

- Crespo, J.; Castillo, J.C.; Mozos, O.M.; Barber, R. Semantic Information for Robot Navigation: A Survey. Appl. Sci. 2020, 10, 497.

- Joo, S.-H.; Manzoor, S.; Rocha, Y.G.; Bae, S.-H.; Lee, K.-H.; Kuc, T.-Y.; Kim, M. Autonomous Navigation Framework for Intelligent Robots Based on a Semantic Environment Modeling. Appl. Sci. 2020, 10, 3219.

- Shi, Y.; Zhang, W.; Yao, Z.; Li, M.; Liang, Z.; Cao, Z.; Zhang, H.; Huang, Q. Design of a Hybrid Indoor Location System Based on Multi-Sensor Fusion for Robot Navigation. Sensors 2018, 18, 3581.

- Moezzi, R.; Krcmarik, D.; Hlava, J.; Cýrus, J. Hybrid SLAM modelling of autonomous robot with augmented reality device. Mater. Today Proc. 2020, 32, 103–107.

- Bista, S.R.; Ward, B.; Corke, P. Image-Based Indoor Topological Navigation with Collision Avoidance for Resource-Constrained Mobile Robots. J. Intell. Robot. Syst. 2021, 102, 1–24.

- Silva, J.R.; Simão, M.; Mendes, N.; Neto, P. Navigation and obstacle avoidance: A case study using Pepper robot. In Proceedings of the IECON 2019-45th Annual Conference of the IEEE Industrial Electronics Society, Lisbon, Portugal, 14–17 October 2019; IEEE: Manhattan, NY, USA, 2019; Volume 1, pp. 5263–5268.

- Nardi, F.; Lázaro, M.T.; Iocchi, L.; Grisetti, G. Generation of Laser-Quality 2D Navigation Maps from RGB-D Sensors. In Robot World Cup; Springer: Cham, Switzerland, 2019; pp. 238–250.

- Perera, V.; Pereira, T.; Connell, J.; Veloso, M. Setting up pepper for autonomous navigation and personalized interaction with users. arXiv 2017, arXiv:1704.04797.

- Bera, A.; Randhavane, T.; Prinja, R.; Kapsaskis, K.; Wang, A.; Gray, K.; Manocha, D. The emotionally intelligent robot: Improving social navigation in crowded environments. arXiv 2019, arXiv:1903.03217.

- Chen, D.; Ge, Y. Multi-Objective Navigation Strategy for Guide Robot Based on Machine Emotion. Electronics 2022, 11, 2482.

- Allegra, D.; Alessandro, F.; Santoro, C.; Stanco, F. Experiences in Using the Pepper Robotic Platform for Museum Assistance Applications. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Manhattan, NY, USA, 2018; pp. 1033–1037.

- Lázaro, M.T.; Grisetti, G.; Iocchi, L.; Fentanes, J.P.; Hanheide, M. A Lightweight Navigation System for Mobile Robots. In Proceedings of the Iberian Robotics Conference; Springer: Cham, Switzerland, 2018; pp. 295–306.

- Dugas, D.; Nieto, J.; Siegwart, R.; Chung, J.J. NavRep: Unsupervised Representations for Reinforcement Learning of Robot Navigation in Dynamic Human Environments. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Manhattan, NY, USA, 2021; pp. 7829–7835.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. ICRA Workshop on Open Source Software; IEEE: Kobe, Japan, 2009; Volume 3, p. 5.

- Alhmiedat, T.; Aborokbah, M. Social Distance Monitoring Approach Using Wearable Smart Tags. Electronics 2021, 10, 2435.

- Gao, Y.; Huang, C.-M. Evaluation of Socially-Aware Robot Navigation. Front. Robot. AI 2022, 8, 420.

- Nishimura, M.; Yonetani, R. L2B: Learning to Balance the Safety-Efficiency Trade-off in Interactive Crowd-aware Robot Navigation. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Manhattan, NY, USA, 2020; pp. 11004–11010.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; IEEE: Manhattan, NY, USA, 2012; pp. 573–580.

- Chanseau, A.; Dautenhahn, K.; Walters, M.L.; Koay, K.L.; Lakatos, G.; Salem, M. Does the Appearance of a Robot Influence People’s Perception of Task Criticality? In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; IEEE: Manhattan, NY, USA, 2018; pp. 1057–1062.

- Onyeulo, E.B.; Gandhi, V. What Makes a Social Robot Good at Interacting with Humans? Information 2020, 11, 43.

- Groot, R. Autonomous Exploration and Navigation with the Pepper Robot. Master’s Thesis, Utrecht University, Utrecht, The Netherlands, 2018.

- Ghiță, A.; Gavril, A.F.; Nan, M.; Hoteit, B.; Awada, I.A.; Sorici, A.; Mocanu, I.G.; Florea, A.M. The AMIRO Social Robotics Framework: Deployment and Evaluation on the Pepper Robot. Sensors 2020, 20, 7271.

| Research Work | Navigation Method | Employed Sensors | Development Environment | System’s Efficiency |
|---|---|---|---|---|
| [12] | ORB-SLAM | LiDAR, RGB-Depth, and odometry | ROS | ATE: 0.4095 m |
| [13] | SLAM | LiDAR | ROS/NAOQi | NA |
| [22] | IBVS | RGB-Depth camera | ROS | Success rate: 80% |
| [23] | SLAM | LiDAR and odometry | Choregraphe | Success rate: 70% |
| [24] | SLAM | RGB-Depth camera | ROS | Translated error: 0.115 m |
| [25] | SLAM | LiDAR and odometry sensors | ROS + IBM service | NA |
| [26] | NAOQi | LiDAR | Choregraphe | Emotion detection accuracy: 85.33% |
| [27] | SLAM | LiDAR | Choregraphe | NA |
| [28] | SLAM | LiDAR | Choregraphe | NA |
| [29] | Monte Carlo localization and Dijkstra | Odometry and laser scanner | C++-based environment | NA |
| [30] | Reinforcement learning | LiDAR | NavRepSim environment | Success rate: 76% |

| Function | Input | Output |
|---|---|---|
| Obstacle avoidance | Rangefinder sensor data | New route through move_base |
| Move-to-point | Area ID from the list of stations | The area’s coordinates are sent to move_base |
| Speech | User interface function | Short speech |
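The move-to-point row above (an area ID is looked up in the list of stations and its coordinates are sent to move_base) can be sketched as follows. This is a minimal, ROS-free illustration under stated assumptions: the station names, their coordinates, and the `build_goal` helper are hypothetical and do not come from the paper. The returned dictionary mirrors the fields of the pose that a `move_base` goal carries (a frame ID, a position, and an orientation quaternion); in a ROS node these values would be copied into a `move_base_msgs/MoveBaseGoal` and sent through an action client.

```python
import math

# Hypothetical station list: area ID -> (x [m], y [m], yaw [rad]) in the map frame.
STATIONS = {
    "reception": (1.0, 2.0, 0.0),
    "lab": (4.5, -1.0, math.pi / 2),
}


def yaw_to_quaternion(yaw):
    """Convert a planar heading (rotation about z) to a quaternion (x, y, z, w)."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def build_goal(area_id, stations=STATIONS, frame_id="map"):
    """Look up a station and return a goal pose laid out like a stamped pose."""
    if area_id not in stations:
        raise KeyError(f"unknown area ID: {area_id!r}")
    x, y, yaw = stations[area_id]
    qx, qy, qz, qw = yaw_to_quaternion(yaw)
    return {
        "frame_id": frame_id,
        "position": {"x": x, "y": y, "z": 0.0},
        "orientation": {"x": qx, "y": qy, "z": qz, "w": qw},
    }


if __name__ == "__main__":
    print(build_goal("lab"))
```

Keeping the station table separate from the goal construction means new areas can be added without touching the navigation code, and an unknown area ID fails early instead of sending the robot to a default pose.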

| Experiment Testbed | ATE (x Coordinate) | ATE (y Coordinate) |
|---|---|---|
| Area 1 | 54 | 31 |
| Area 2 | 66 | 51 |

| Experiment Testbed | Success Rate | Collision Rate | Timeout |
|---|---|---|---|
| Area 1 | 0.92 | 0.01 | 0.001 |
| Area 2 | 0.89 | 0.18 | 0.115 |
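As a rough illustration of how rates like those in the table above could be derived from logged trials, the sketch below counts outcome labels and divides by the number of trials. It is an assumption, not the paper's procedure: the function name and the label strings are hypothetical, and each trial is assumed to carry exactly one label. The paper may count collisions and timeouts differently (for example, per event rather than per trial), in which case the three rates need not sum to one.

```python
from collections import Counter

OUTCOMES = ("success", "collision", "timeout")


def navigation_rates(trial_outcomes):
    """Return the fraction of trials ending in each outcome label."""
    if not trial_outcomes:
        raise ValueError("at least one trial is required")
    unknown = set(trial_outcomes) - set(OUTCOMES)
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    counts = Counter(trial_outcomes)
    total = len(trial_outcomes)
    return {label: counts[label] / total for label in OUTCOMES}


if __name__ == "__main__":
    # Illustrative log of ten trials; not data from the experiments.
    log = ["success"] * 8 + ["collision"] + ["timeout"]
    print(navigation_rates(log))
```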


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alhmiedat, T.; Marei, A.M.; Messoudi, W.; Albelwi, S.; Bushnag, A.; Bassfar, Z.; Alnajjar, F.; Elfaki, A.O. A SLAM-Based Localization and Navigation System for Social Robots: The Pepper Robot Case. Machines 2023, 11, 158. https://doi.org/10.3390/machines11020158
