Online Condition Monitoring of Industrial Loads Using AutoGMM and Decision Trees

Abstract

1. Introduction

- The conception of a novel data-driven algorithm combining AutoGMM and a decision tree (DTree).

- The application of the proposed AutoGMM-DTree algorithm to the condition monitoring of real industrial loads characterized by a daily periodic working cycle.

- The procedure to train the proposed algorithm in a real industrial context and its subsequent validation.

- By leveraging the benefits of both the AutoGMM and the DTree, the proposed approach enables (i) the online clustering and time allocation of nominal operating conditions; (ii) the online identification of already-classified and new anomalous conditions; and (iii) the online acknowledgment of new operating modes of the monitored industrial asset.

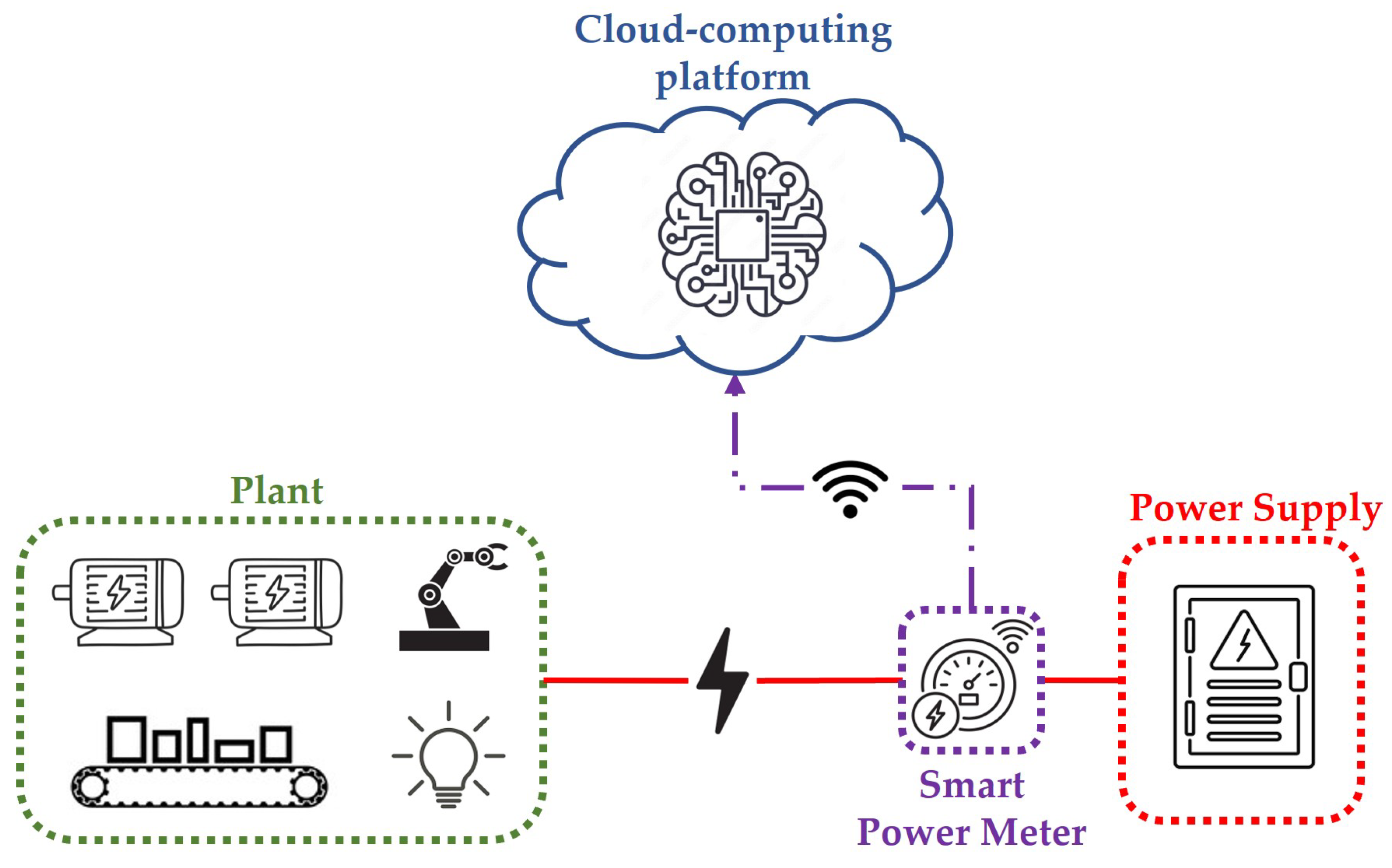

2. Objective of the Work and Its Industrial Application

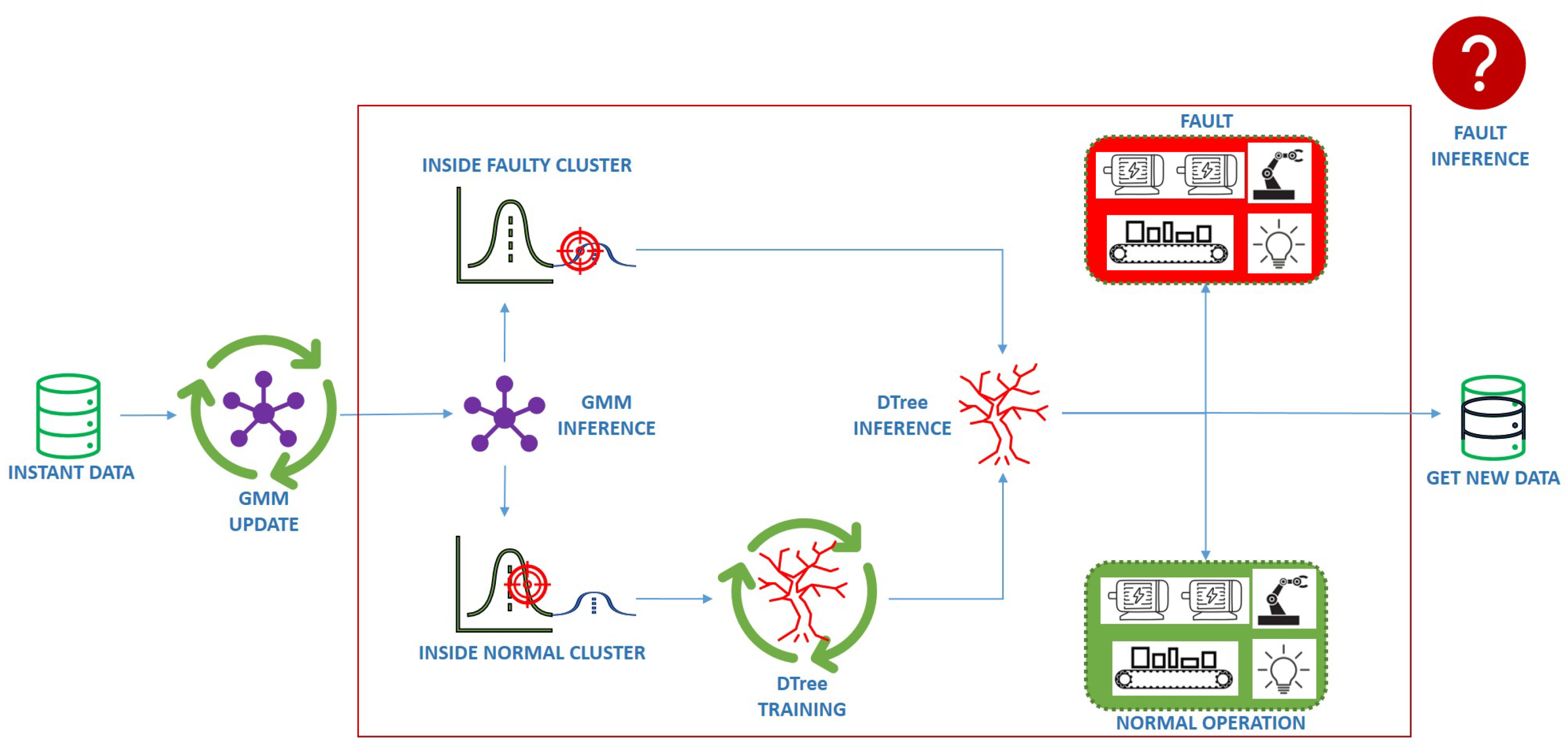

3. The Proposed AutoGMM-DTree Methodology

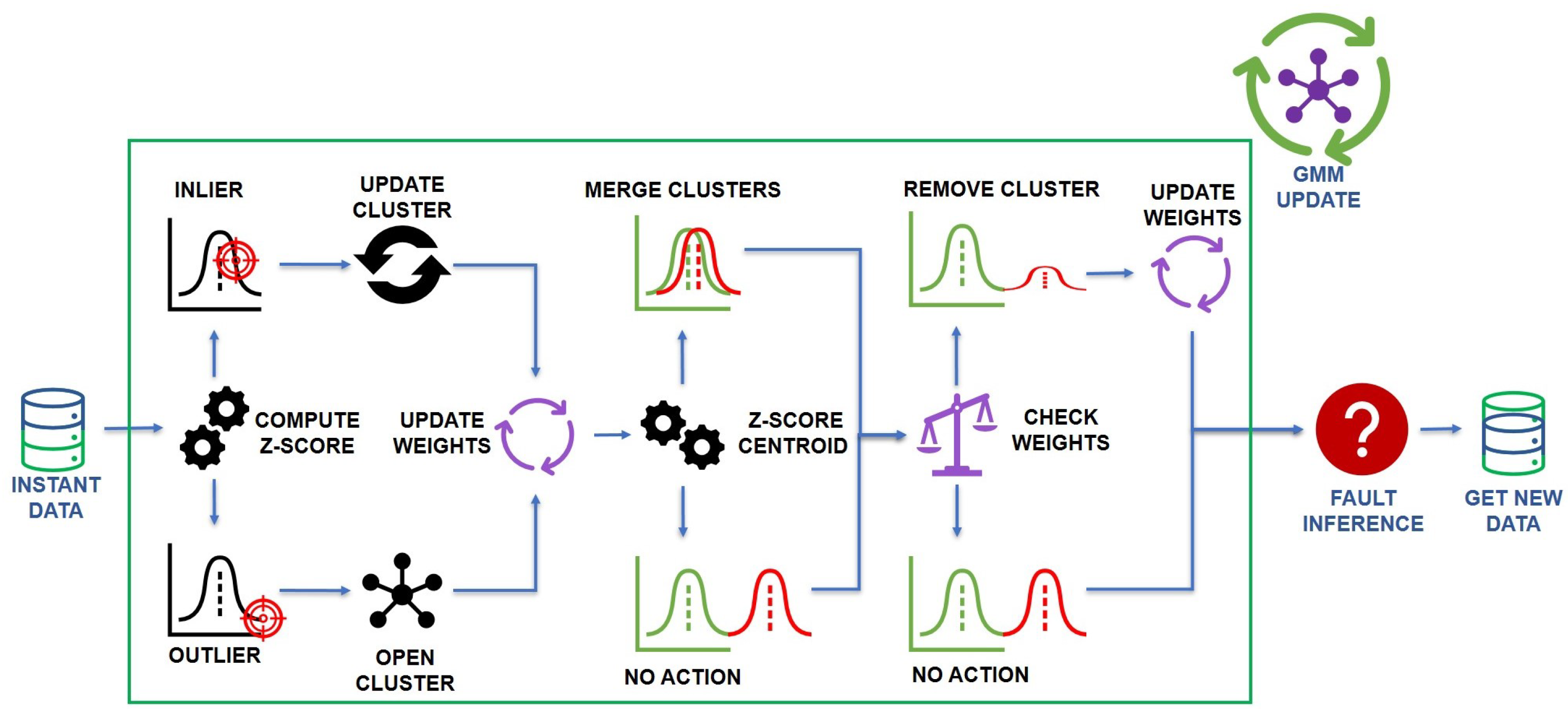

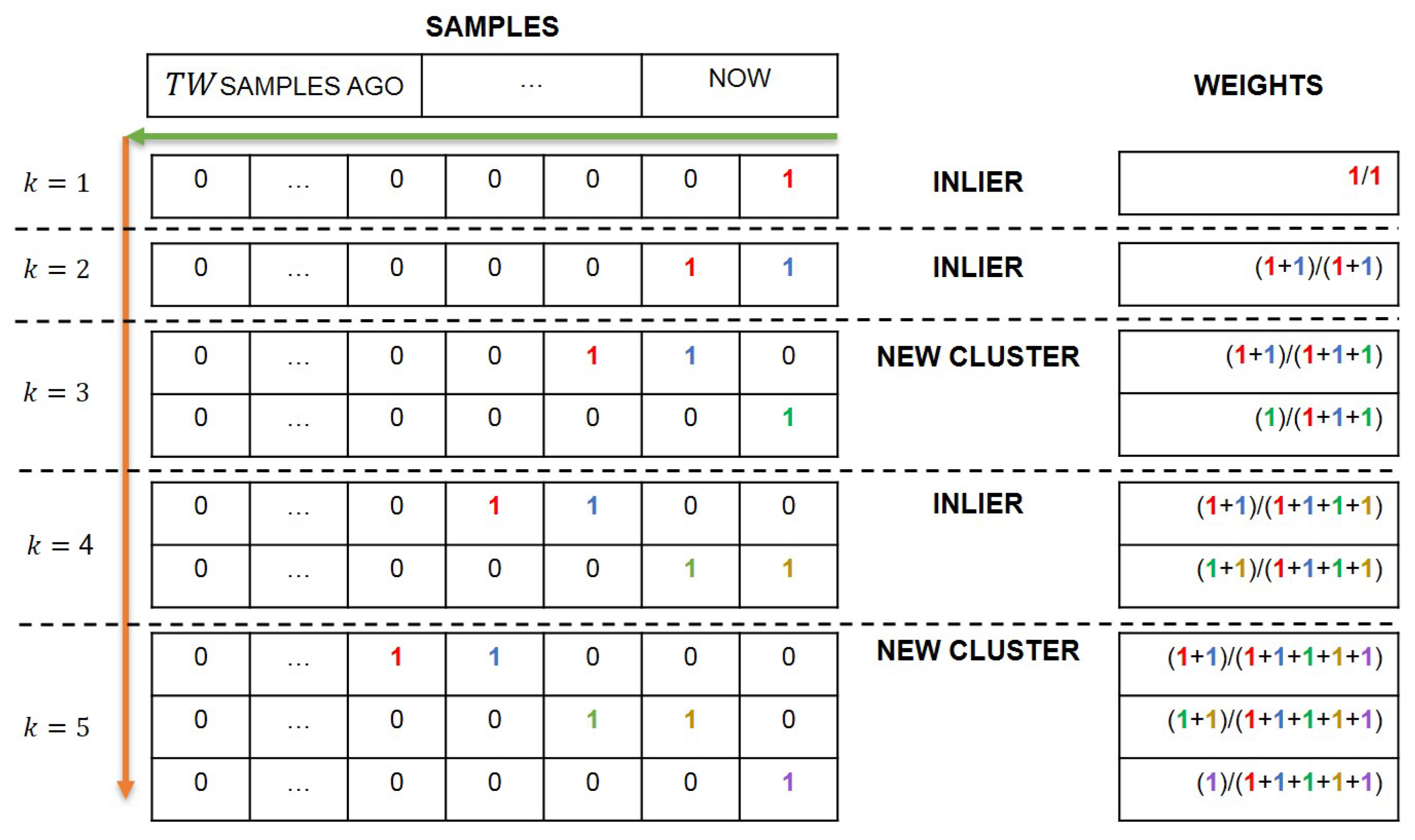

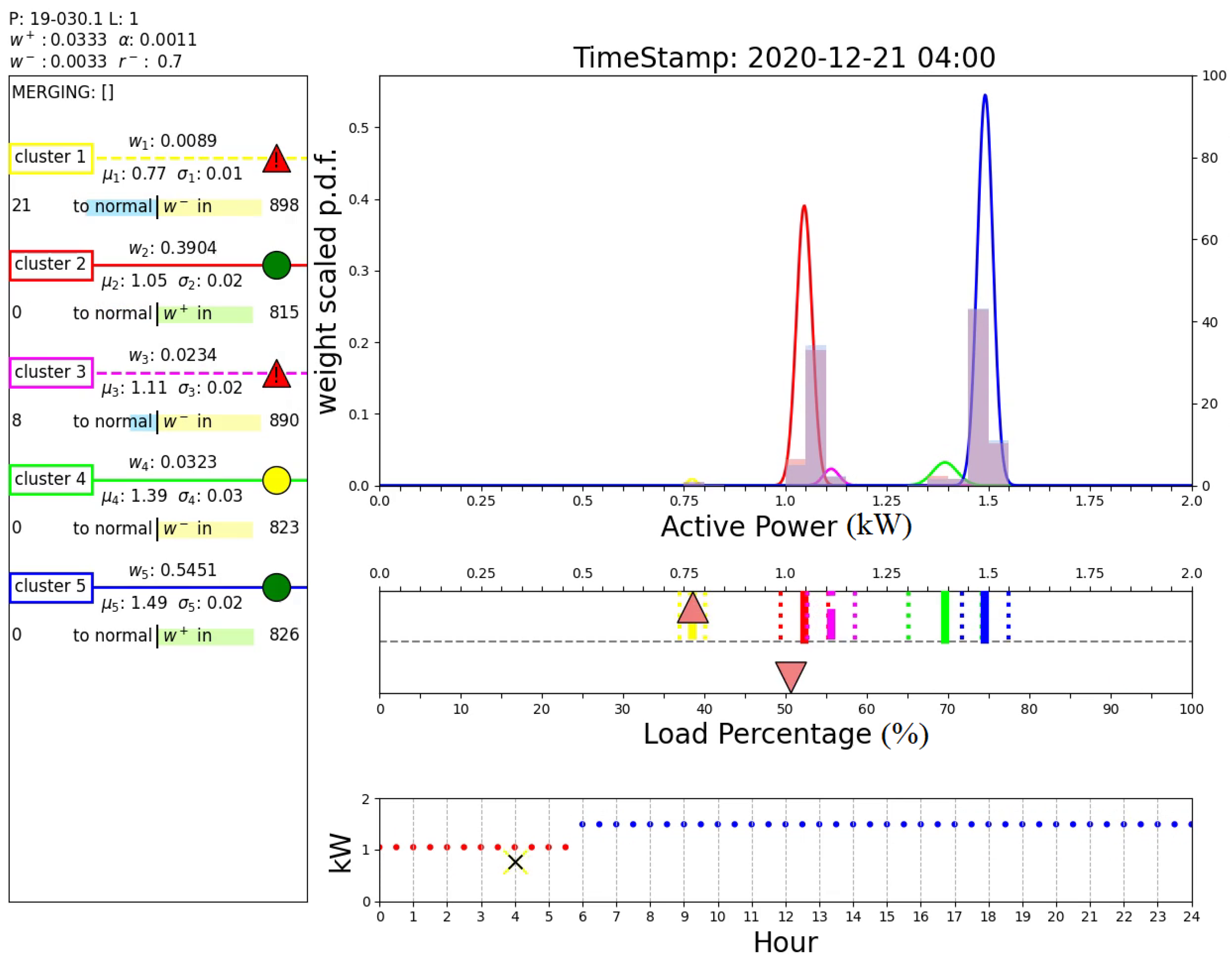

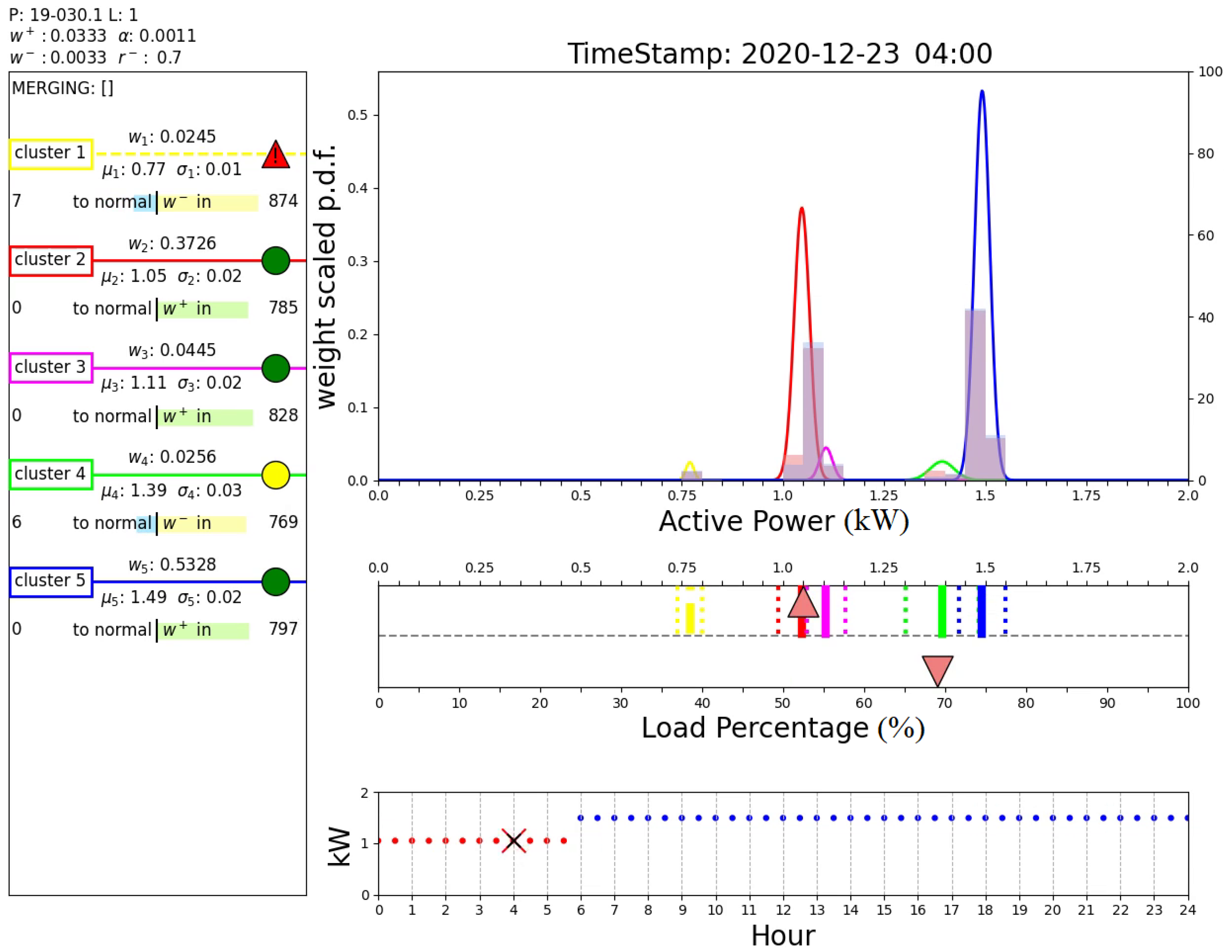

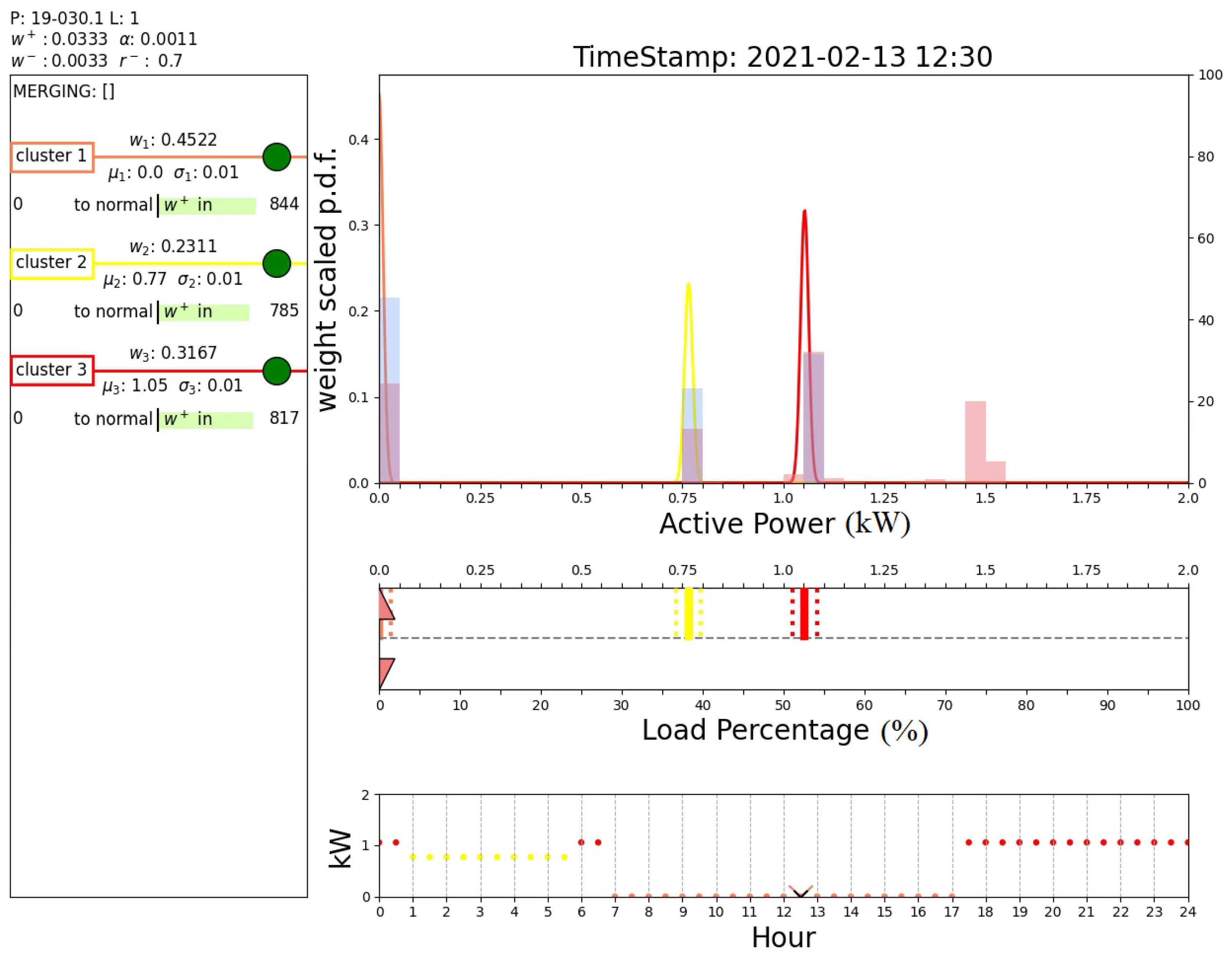

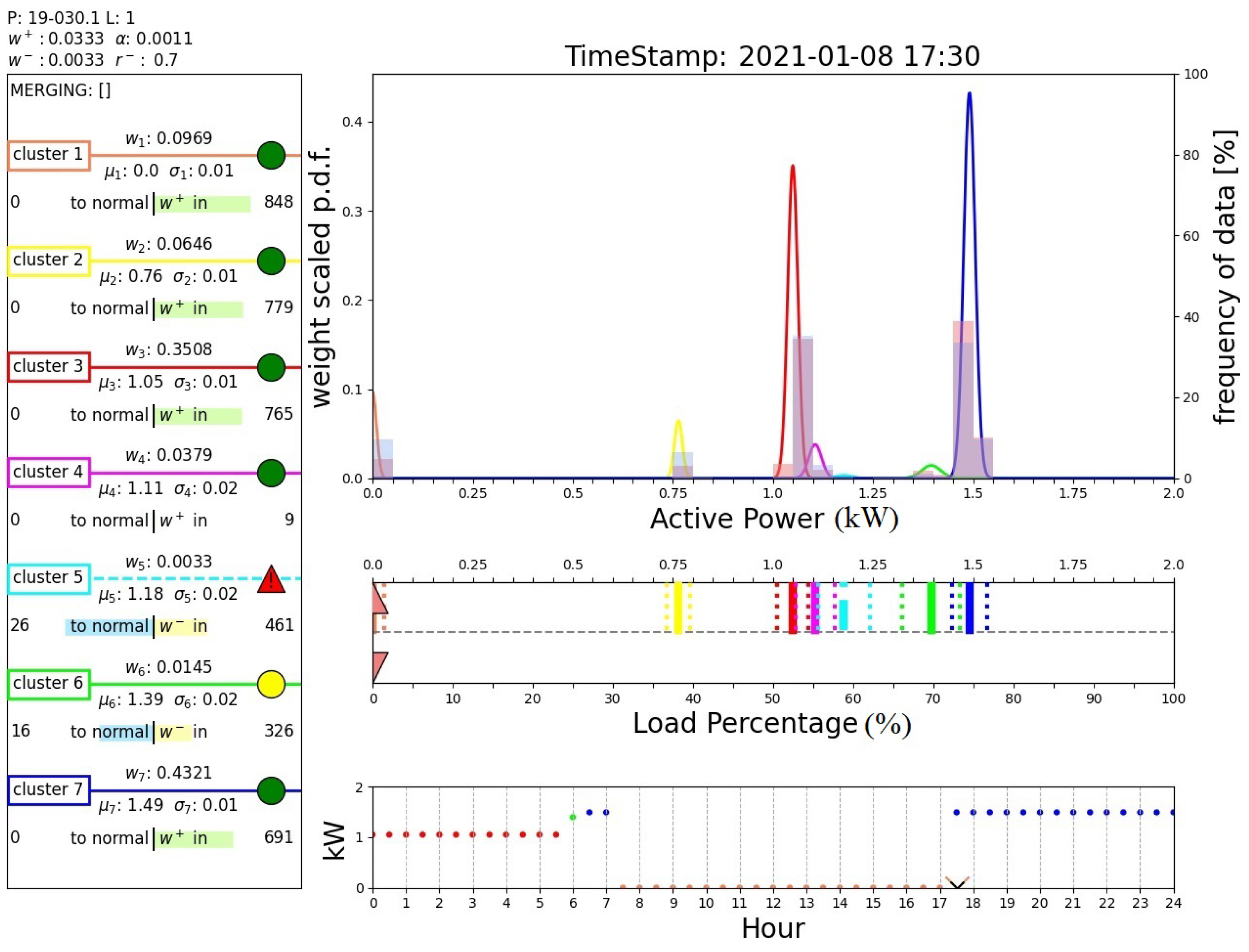

3.1. AutoGMM-Based Method for Operating Mode Clustering

- Mean: It represents the center of the Gaussian distribution and defines the location of the peak of the cluster.

- Variance: It defines the width of the Gaussian distribution.

- Weight: It sets the relative importance of the Gaussian distribution within the mixture. It represents the probability of a data point belonging to the i-th cluster.
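The role of the three parameters above can be illustrated with a minimal sketch, assuming one-dimensional Gaussian components. The function names and the numeric values are hypothetical and chosen only for illustration; this is not the AutoGMM procedure itself, only the standard mixture density and the Bayes-rule cluster probability that the parameters define.

```python
import math

def gaussian_pdf(x, mean, variance):
    """Density at x of a 1-D Gaussian with the given mean and variance."""
    norm = math.sqrt(2.0 * math.pi * variance)
    return math.exp(-((x - mean) ** 2) / (2.0 * variance)) / norm

def mixture_pdf(x, components):
    """Weighted sum of component densities.

    components is a list of (weight, mean, variance) tuples whose
    weights are assumed to sum to 1.
    """
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in components)

def responsibilities(x, components):
    """Probability that x belongs to each cluster, obtained via Bayes' rule."""
    joint = [w * gaussian_pdf(x, m, v) for w, m, v in components]
    total = sum(joint)
    return [j / total for j in joint]

# Hypothetical load with two operating modes (illustrative values only).
components = [(0.7, 10.0, 4.0), (0.3, 50.0, 9.0)]
post = responsibilities(12.0, components)
```

Here a sample at 12.0 is assigned almost entirely to the first cluster, because it lies one standard deviation from that cluster's mean and far from the second one; the weights only shift the balance when the component densities are comparable.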

3.1.1. Procedure for the Cluster Update

3.1.2. Procedure for Cluster Removal and Mergers

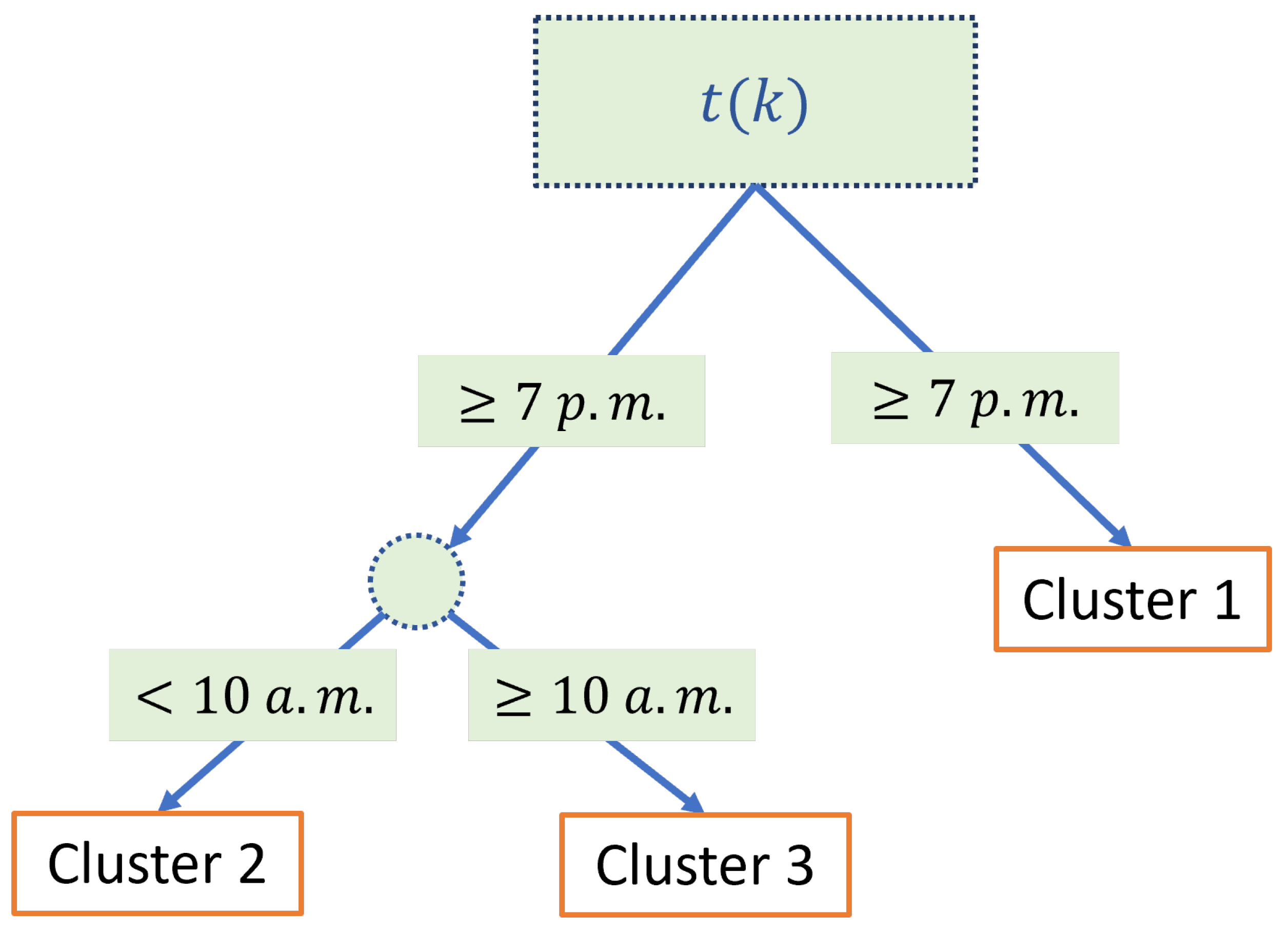

3.2. Anomaly and Novelty Detection Based on DTree

4. Experiments and Results

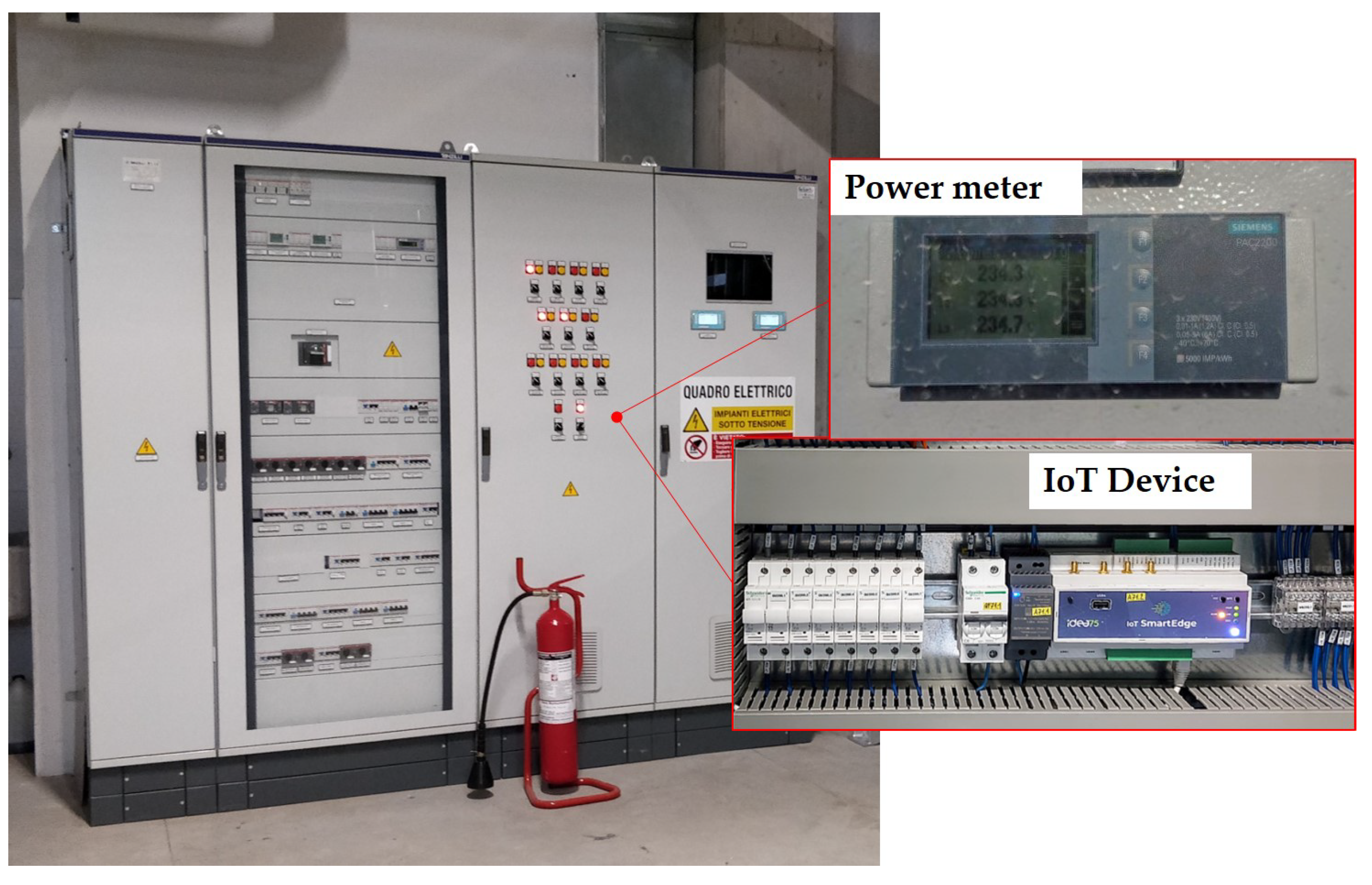

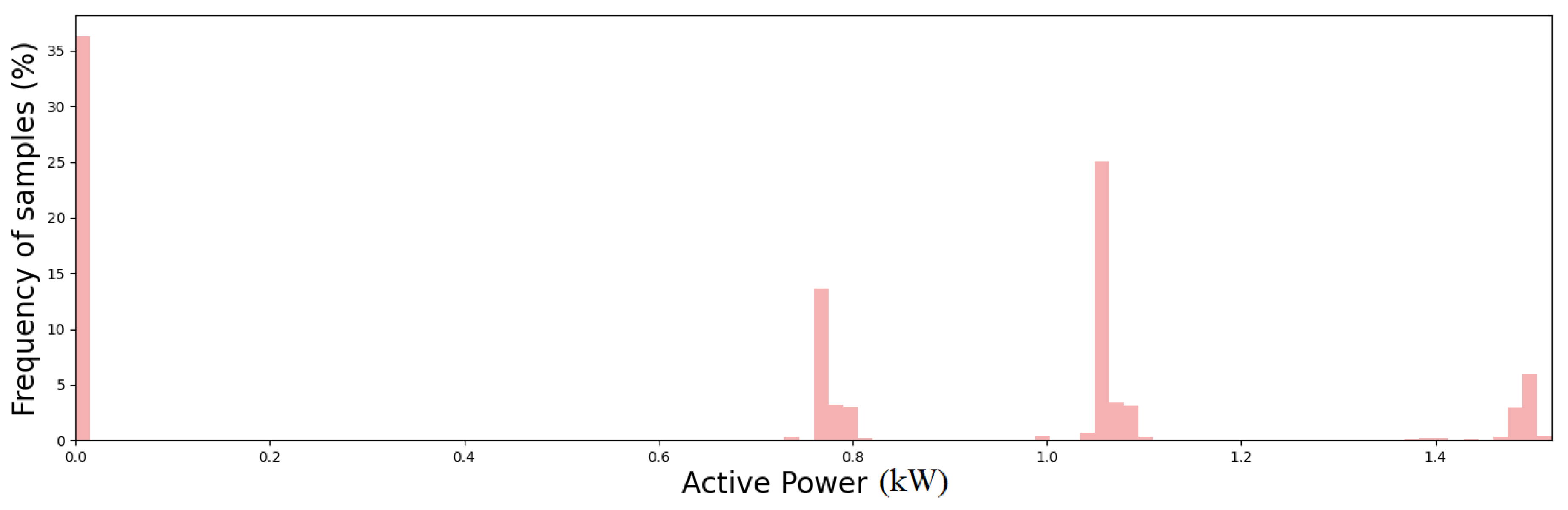

4.1. Case Study and Experimental Setup

4.2. Performance Comparison with a Conventional 2D GMM

4.3. Results on the Experimental Setup

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CM | Condition monitoring |
| AutoGMM | Automated Gaussian mixture model |
| GMM | Gaussian mixture model |
| DTree | Decision tree |
| ML | Machine learning |
| NILM | Non-intrusive load monitoring |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brescia, E.; Vergallo, P.; Serafino, P.; Tipaldi, M.; Cascella, D.; Cascella, G.L.; Romano, F.; Polichetti, A. Online Condition Monitoring of Industrial Loads Using AutoGMM and Decision Trees. Machines 2023, 11, 1082. https://doi.org/10.3390/machines11121082