An Augmented Reality-Assisted Disassembly Approach for End-of-Life Vehicle Power Batteries

Abstract

1. Introduction

- (1) The design of an instance segmentation-based AR approach for disassembly scenes, which improves the scene perception capability of the AR-assisted disassembly system by identifying and segmenting each stage of the disassembly task.

- (2) The analysis of the AR-assisted disassembly approach from the perspectives of scene awareness and AR-aided guidance. The proposed approach enables the automatic updating of disassembly instructions, improving disassembly efficiency and reducing the operational burden on workers.

- (3) The validation of the feasibility of the proposed method through a prototype system applied to case study products for industrial application, with experiments carried out in various practical scenarios.

2. Related Work

2.1. AR and Its Applications in Manufacturing

2.2. Deep Learning Approach for Recycling and Disassembly

3. Methodology

3.1. Overview of the Proposed Method

- (1) Manual and semi-automatic limitations: human involvement introduces inconsistencies into disassembly. Manual and semi-automated disassembly are limited in terms of scalability, throughput, and safety.

- (2) Imminent need for automation: the increasing amount of waste power batteries necessitates a swift transition to automated processes. The demand for efficient and effective approaches is driven by environmental concerns and economic considerations.

- (3) Variability in power battery types: end-of-life power batteries come in a wide range of sizes and configurations due to their diverse applications. This variability adds complexity to the disassembly process, making a standardised approach challenging.

- (4) Flexibility requirement: a practical disassembly method should be adaptable to the wide range of retired power batteries, in terms of both their types and their conditions. Overlaying AR visualisations onto the disassembly workspace could provide step-by-step instructions to the operator and thereby improve the efficiency of the disassembly process.

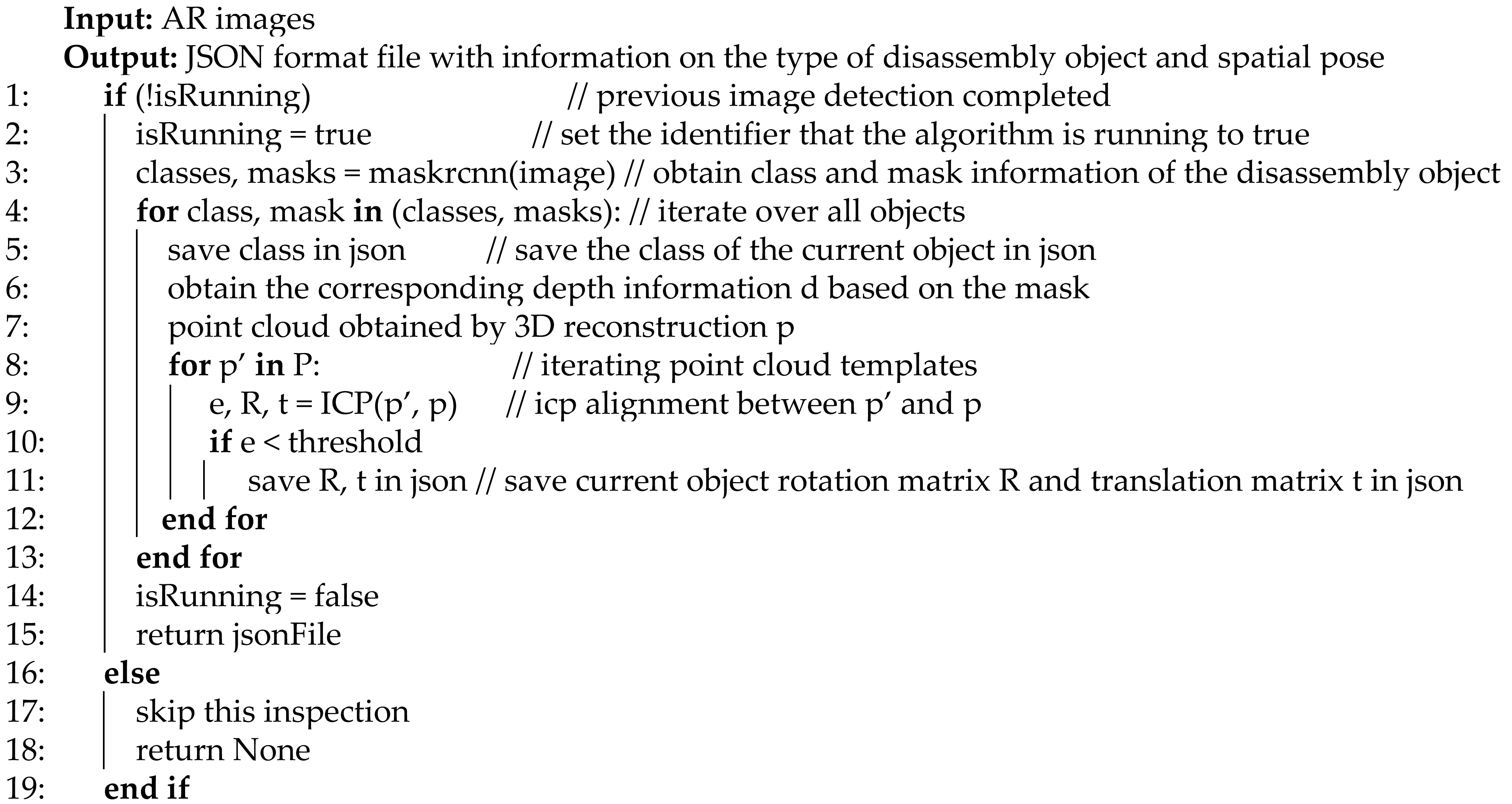

Algorithm 1. Scene perception based on instance segmentation.
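As an illustrative sketch only (not the authors' Algorithm 1), the idea of scene perception from instance segmentation can be expressed as follows: detected part instances are filtered by confidence and mask area, and the current disassembly stage is inferred from which parts remain visible. The part names in `STAGE_ORDER`, the `Instance` structure, the thresholds, and the function `perceive_scene` are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Instance:
    """One segmented instance (hypothetical structure for this sketch)."""
    label: str        # assumed part class, e.g. "cover"
    score: float      # detector confidence in [0, 1]
    mask: np.ndarray  # boolean mask of shape (H, W)


# Assumed disassembly order: a stage is pending while its part is still visible.
STAGE_ORDER = ["cover", "busbar", "module"]


def perceive_scene(instances, min_score=0.5, min_area=50):
    """Return (current_stage, visible_parts) from segmentation output.

    visible_parts maps each accepted label to its total mask area in pixels.
    """
    visible = {}
    for inst in instances:
        area = int(inst.mask.sum())
        # Discard low-confidence or tiny detections as likely noise.
        if inst.score < min_score or area < min_area:
            continue
        visible[inst.label] = visible.get(inst.label, 0) + area

    # The first part in the assumed order that is still visible
    # defines the stage for which instructions should be shown.
    for part in STAGE_ORDER:
        if part in visible:
            return part, visible
    return "done", visible
```

In such a scheme, the AR layer would re-run `perceive_scene` on each new frame and switch the displayed instruction whenever the returned stage changes, which is one simple way the automatic updating of disassembly instructions could be driven.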

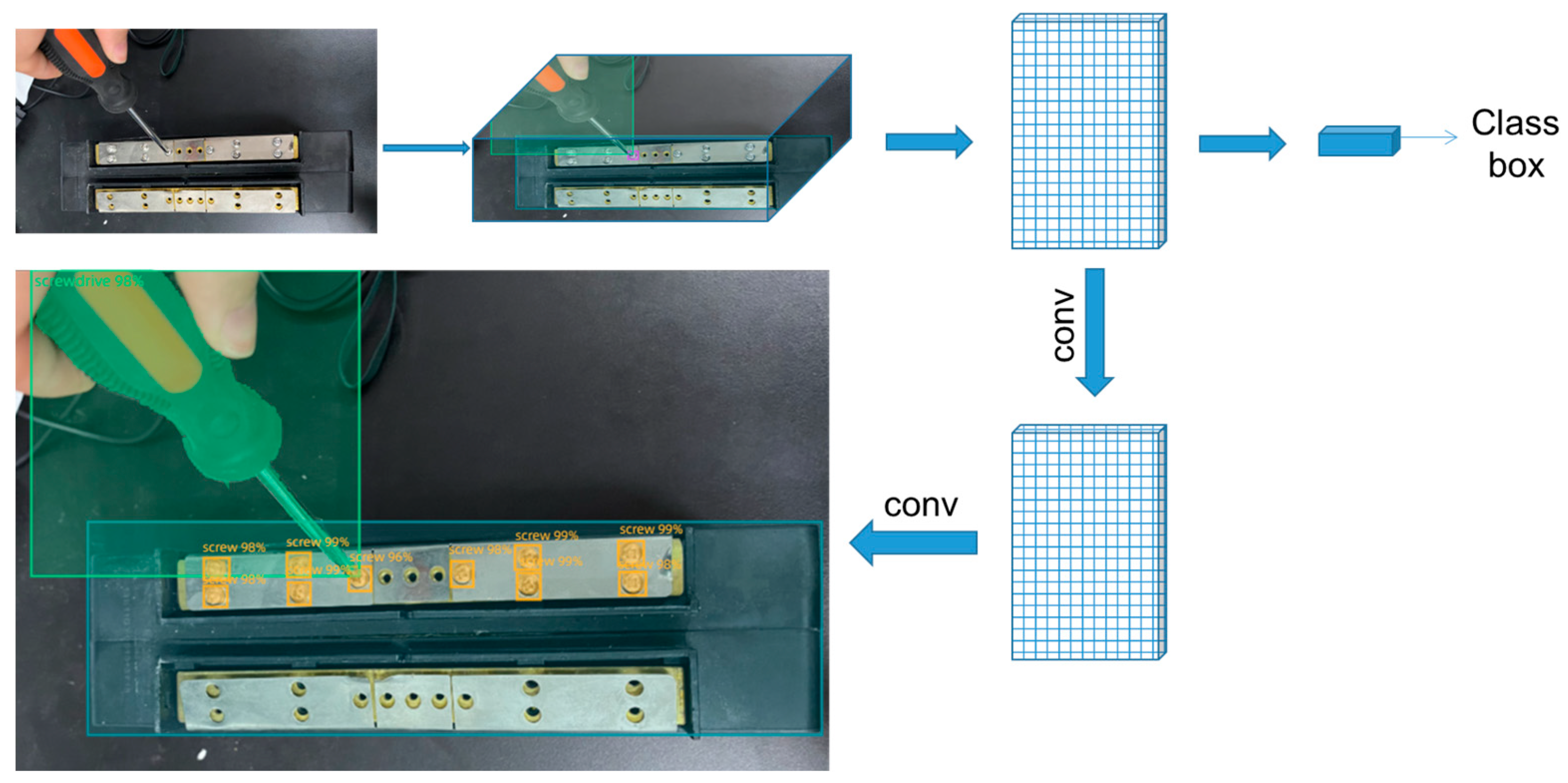

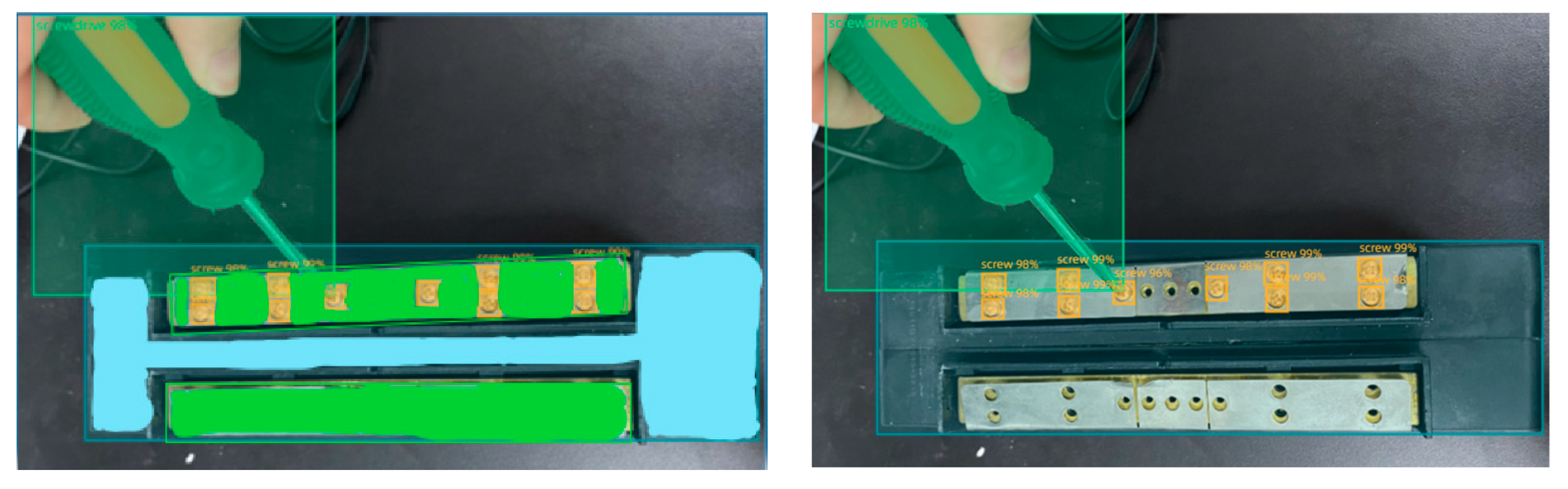

3.2. Instance Segmentation and Pose Estimation of Target Disassembly Parts

3.2.1. Instance Segmentation of Disassembly Parts

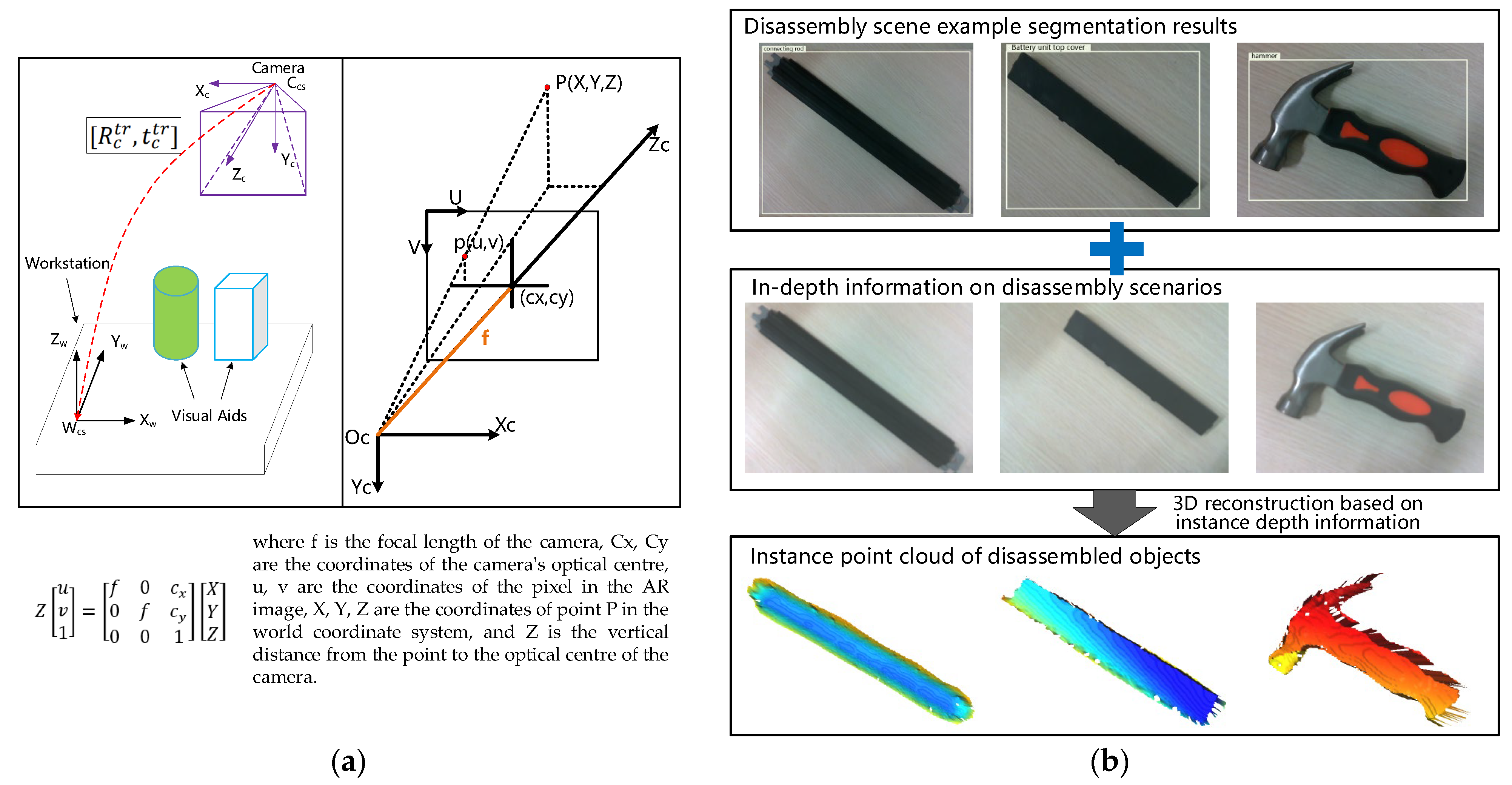

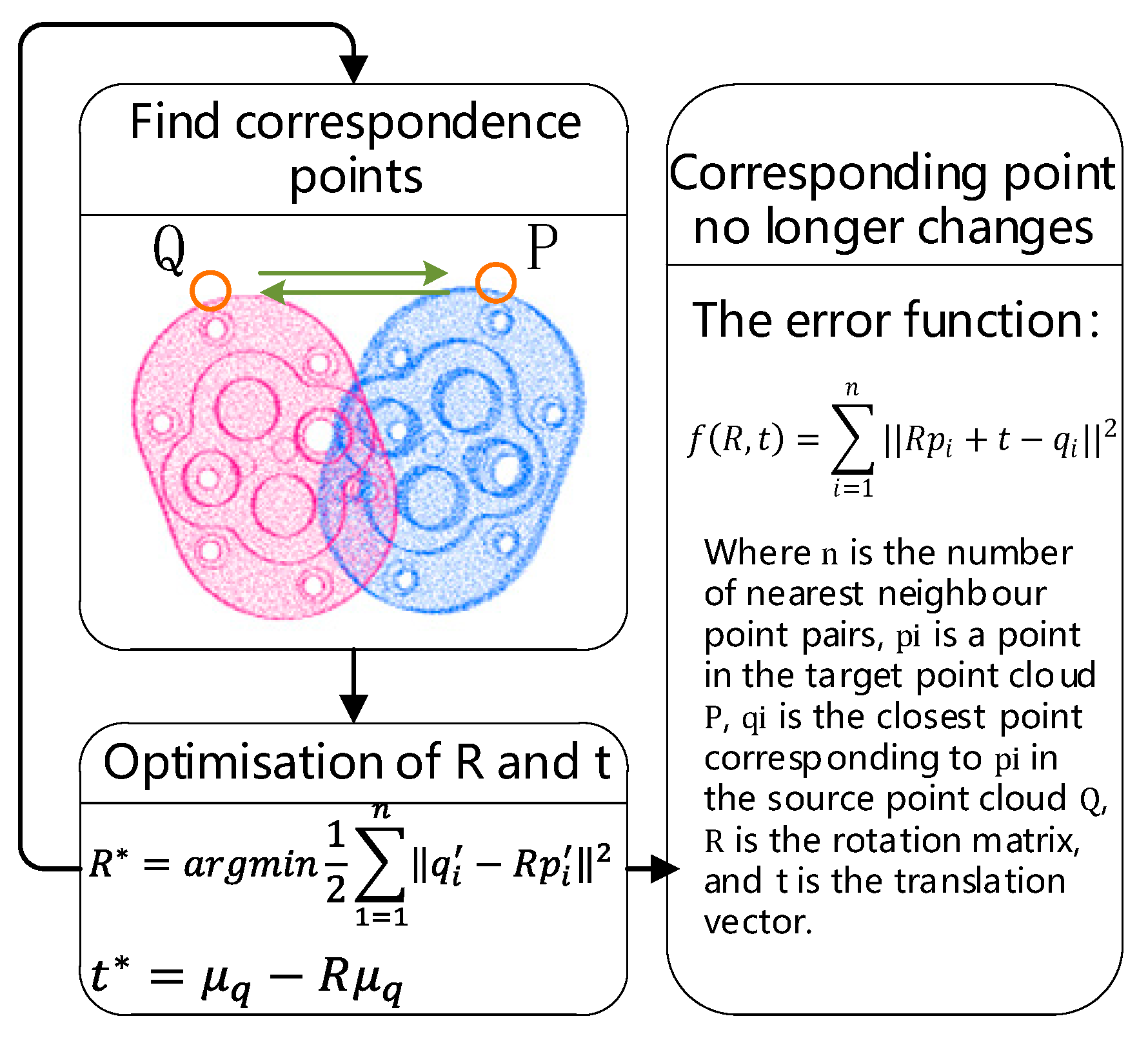

3.2.2. Attitude Estimation of Disassembly Parts

3.3. Disassembly Instructions

3.3.1. Scene Awareness in the Disassembly Process

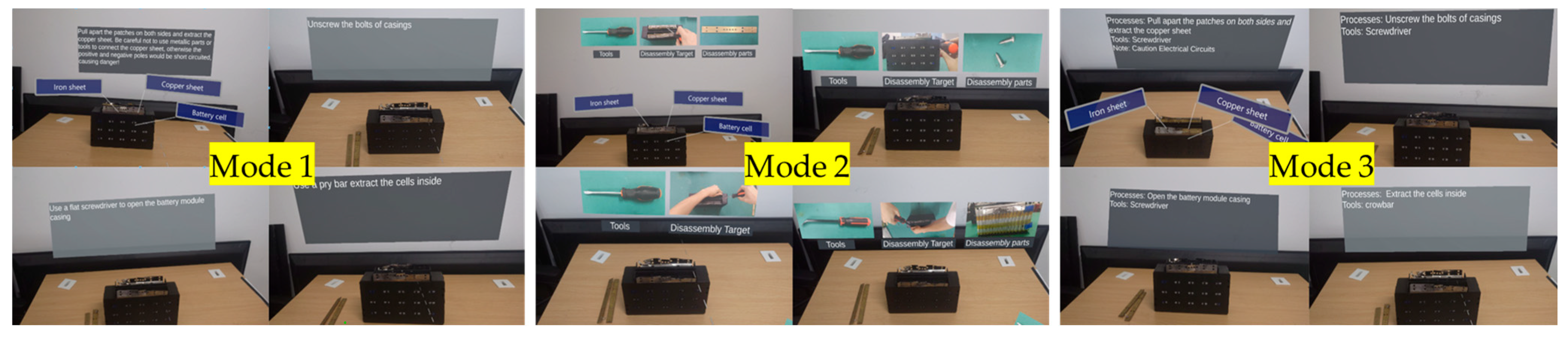

3.3.2. AR-Assisted Disassembly Processes

4. Experimental Results and Discussion

4.1. Disassembly System Prototype

4.2. Case Validation

4.2.1. Disassembly Scene Perception

4.2.2. AR Disassembly System Evaluation

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. List of the Questionnaire

| Group | First Round (s) | Second Round (s) | Third Round (s) | Average Time (s) |
|---|---|---|---|---|
| Group A | 325 | 316 | 307 | 316 |
| Group B | 277 | 271 | 268 | 272 |
| Group C | 261 | 260 | 276 | 265 |
| Group D | 267 | 263 | 260 | 263 |
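The averages in the table can be recomputed from the three rounds, and a relative time reduction can be derived from them. The short sketch below does this; using Group A as the reference is an assumption made only for illustration, since the table itself does not state what distinguishes the groups, and the helper name `summarize` is hypothetical.

```python
from statistics import mean

# Completion times in seconds, copied from the table above.
times = {
    "Group A": [325, 316, 307],
    "Group B": [277, 271, 268],
    "Group C": [261, 260, 276],
    "Group D": [267, 263, 260],
}

# Average times as reported in the table.
reported = {"Group A": 316, "Group B": 272, "Group C": 265, "Group D": 263}


def summarize(times, baseline="Group A"):
    """Return {group: (mean_time, percent_reduction_vs_baseline)}."""
    base = mean(times[baseline])
    return {
        group: (mean(rounds), 100.0 * (base - mean(rounds)) / base)
        for group, rounds in times.items()
    }


summary = summarize(times)
for group, (avg, reduction) in summary.items():
    print(f"{group}: mean {avg:.1f} s, {reduction:.1f}% shorter than Group A")
```

The recomputed means agree with the reported averages to within one second.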

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Liu, B.; Duan, L.; Bao, J. An Augmented Reality-Assisted Disassembly Approach for End-of-Life Vehicle Power Batteries. Machines 2023, 11, 1041. https://doi.org/10.3390/machines11121041