1. Introduction

The number of hungry people has been mounting due to the COVID-19 pandemic, and surpassed 80 million in 2021, as reported by World Health Organization (WHO) [

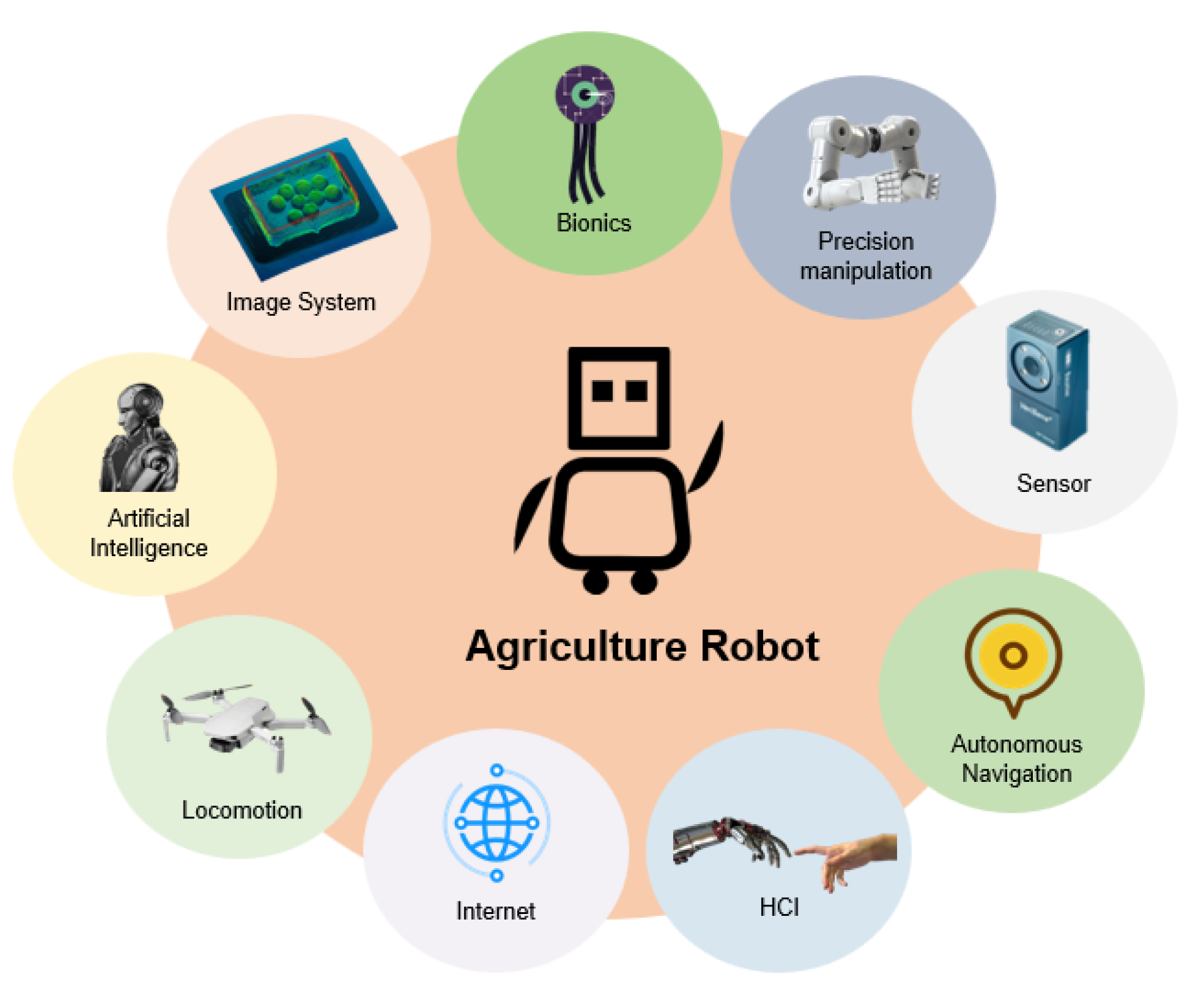

1]. Moreover, to handle the challenges of the aggravation of population ageing and acceleration of the pace of life, traditional labour-intensive and risky farm work should be empowered by more automated control work for promising outcomes. In the aspect of academic study, many researchers have dedicated significant efforts to studying agricultural robots, especially during the COVID-19 pandemic, as shown in

Figure 1. Therefore, it is reasonable to explore agriculture further using advanced technology in order to keep promoting the current status. It should be mentioned that agriculture robots and intelligent automatic systems are usually equipped with versatile sensing and fast-learning units [

2], which provide encouraging capabilities. In addition, much effort has been put towards achieving complete automation and improving the operating efficiency of agriculture robots.

Agriculture robots generally refer to machines designed for farming production use [3]. As an integral member of the robot family, they usually possess advanced perception, autonomous decision-making, control, and precise execution abilities. Furthermore, they can achieve accurate and efficient production goals even in complex, harsh, and dangerous environments. In Ref. [4], the authors proposed a mechanism that accounts for the coupled effect of temperature and pressure and investigated the accuracy of the resulting flow characteristics, which is helpful in the development of agriculture robots.

Rovira et al. [5] developed perception-based navigation algorithms, perception being a critical technology for navigation. A UAV for agriculture designed by Alsalam et al. [6] implemented a configuration approach to fulfil decision-making. In terms of control, Zhang et al. [7] developed high-precision control to enable field robots to achieve efficient phenotyping. Due to their soft texture, tomatoes must be picked carefully; therefore, Wang et al. [8] designed a flexible end effector to pick tomatoes, with a success rate of 86%. These advancements in agriculture robots have inspired progress in other types of robots, such as industrial robots. For example, in [9] the authors proposed a new method for quantifying the energy consumption of pneumatic systems that combines air pressure, volume, and temperature.

Due to the practical requirements for labour-saving and efficient agricultural production, the categories of agriculture robots have been continuously expanding, and their application scenarios have become more diversified. According to their target objects, agricultural robots are usually divided into field robots [10], fruit and vegetable robots [11], and animal husbandry robots [12]. Furthermore, based on an analysis of the relevant literature, research on agricultural robots mainly involves field robots and fruit and vegetable robots, especially in the harvesting domain. Although different agriculture robots are characterized by their respective application scenarios, they share a number of core technologies. For example, a stable mobile platform, multi-sensor collaboration, advanced visual image processing technology, sophisticated algorithms, and flexible locomotion control are usually indispensable in constituting an agricultural robot. Other related techniques are presented together in Figure 2.

The rest of this manuscript is structured as follows. An overall introduction to the implementation of agricultural robots is provided in Section 2. In Sections 3, 4, and 5, field robots, fruit and vegetable robots, and animal husbandry robots are introduced in terms of their features, functions, categories, and applications. Section 6 provides a comparative review of agriculture robots based on several criteria. Section 7 discusses the legislative aspects of agricultural robots. In Section 8, a detailed discussion is offered to clarify the existing challenges and recent noteworthy advancements in agricultural robots. Finally, Section 9 draws a concise conclusion and offers an outlook for the future of agricultural robots.

2. Implementation of Agricultural Robots

With the rapid evolution of robotics, innovation in agricultural robots continues, and they are widely used in diverse agricultural production areas. In general, agriculture robots can be categorised into three types based on their application scenarios: fields, orchards, and farms. Moreover, agricultural production is a long-term cycle: seeding, planting, nurturing, harvesting, and processing are crucial steps towards agricultural industrialization. Therefore, agricultural robots can also be classified along the industrial chain (Figure 3).

The conditions for agricultural production are diverse and complex, which requires agriculture robots to have outstanding adaptability, precise navigation, and obstacle avoidance ability. Therefore, they are mainly built from four parts to conduct their assignments: a vision system, a control system, mechanical actuators, and a mobile platform, as shown in Figure 4.

Accordingly, each of these four parts exerts its own influence on agricultural production. First, the vision system acquires images through various cameras, such as thermal, RGB-D, ToF, and multispectral cameras. Thermal images are conducive to detecting hidden vegetables, as demonstrated by Hespeler et al. [20]. Second, the control system is the brain of the robot, playing an instrumental role in decision-making and motion planning. Third, advanced mechanical actuators are a prerequisite for precise operation, especially for tender fruits and vegetables. Lastly, mobile platforms enable robots to navigate, avoid obstacles, perform detection, and carry out tasks [21,22,23,24,25] (Figure 5).
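To make the division of labour between the four parts concrete, the following is a minimal sense-decide-act sketch. It is a hypothetical illustration, not the design of any robot cited here: the class names, the (x, y) target representation, and the "nearest target first" decision rule are all assumptions chosen for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]  # hypothetical (x, y) position in metres in a field frame

@dataclass
class VisionSystem:
    """Stand-in for the camera pipeline: reports the target positions it 'sees'."""
    scene: List[Point]

    def capture(self) -> List[Point]:
        return list(self.scene)

@dataclass
class MobilePlatform:
    """Moves the robot; a real platform would also plan around obstacles."""
    position: Point = (0.0, 0.0)

    def move_to(self, goal: Point) -> None:
        self.position = goal

@dataclass
class Actuator:
    """Performs the operation (pick, spray, seed) and records where it acted."""
    done: List[Point] = field(default_factory=list)

    def operate(self, target: Point) -> None:
        self.done.append(target)

@dataclass
class ControlSystem:
    """The 'brain': decides what to do next and sequences the other three parts."""
    vision: VisionSystem
    platform: MobilePlatform
    actuator: Actuator

    def step(self) -> bool:
        pending = [t for t in self.vision.capture() if t not in self.actuator.done]
        if not pending:
            return False  # nothing left to do
        px, py = self.platform.position
        # Hypothetical decision rule: visit the nearest unfinished target first
        goal = min(pending, key=lambda t: (t[0] - px) ** 2 + (t[1] - py) ** 2)
        self.platform.move_to(goal)
        self.actuator.operate(goal)
        return True

robot = ControlSystem(VisionSystem([(3.0, 0.0), (1.0, 0.0), (2.0, 0.0)]),
                      MobilePlatform(), Actuator())
while robot.step():
    pass
print(robot.actuator.done)  # prints [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
```

Keeping perception, decision-making, locomotion, and actuation behind separate interfaces means, for example, that a thermal camera pipeline could replace an RGB-D one without touching the control logic.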

3. Field Robots

Field robots usually refer to autonomous, decision-making, mechatronic, and mobile operation devices that can accomplish various crop production tasks semi-automatically or automatically. In this section, 35 publications are reviewed, covering 25 robots and their respective modes of locomotion. Most field robots are designed to move on wheels; caterpillar tracks and drones are rarely used. Interestingly, drones are mainly used for crop protection via pesticide spraying. Typical assignments include tilling, seeding, crop protection, information collection, and harvesting [31].

3.1. Tillage Robots

Tillage robots are intelligent machines used to cultivate the land. As is well known, tillage is a monotonous and labour-intensive task. Tillage robots can free farmers from heavy labour while enhancing the efficiency and quality of cultivation, and they play an instrumental role in digital agriculture.

The machinery of tillage robots is relatively mature owing to extensive earlier development. As a result, recent advancements in tillage robot technology mainly lie in updating robot systems. Owing to severe population ageing, Japan is particularly concerned with automating agricultural production. As early as 2013, Tamaki et al. [32] developed a robotic system with three robots for large-scale paddy farming. In this automated system, the first element is a tillage robot, navigated by RTK-GNSS and an inertial measurement unit (IMU) or a GPS compass, that can move between paddy fields. In a way, this invention previewed the promising future of agricultural robots in Japan. In 2021, Panarin [33] further developed existing software for tilling robots, partly aiming to ensure compatibility between software systems and manufactured robots. Furthermore, customer requirements were fully satisfied by using ROS (Robot Operating System) [33] and adapting digital robots to their surroundings. In addition to typical tillage robots, robotic tractors contribute to tilling operations to a great extent [34,35]. Recently, John Deere rolled out an electric robot tractor called Sesam 2, which can produce 300 kW (400 hp) of power and play a key role in both tilling and harvesting. Moreover, it is able to work in synergy with several other robots [36].
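Navigation that combines GNSS with an IMU, as in the tillage robot of [32], typically fuses a drift-free but noisy absolute heading with a smooth but drifting gyro rate. The sketch below shows one common way to do this, a complementary filter; it is an assumption for illustration, not the fusion method reported in [32], and the blending factor `alpha` and the simulated bias are hypothetical values.

```python
import math

def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

def fuse_heading(prev: float, gyro_rate: float, gnss_heading: float,
                 dt: float, alpha: float = 0.98) -> float:
    """One complementary-filter step for vehicle heading (radians).

    Predict with the IMU yaw rate (smooth but drifting), then pull the
    estimate toward the GNSS-derived heading (noisy but drift-free).
    alpha close to 1 trusts the gyro more between GNSS corrections.
    """
    predicted = prev + gyro_rate * dt
    # Wrap the innovation so that 359 deg vs 1 deg counts as 2 deg, not 358 deg
    innovation = wrap_angle(gnss_heading - predicted)
    return wrap_angle(predicted + (1.0 - alpha) * innovation)

# Simulated run: the true heading is a constant 0.5 rad, but the gyro has a
# hypothetical 0.01 rad/s bias; the GNSS heading is assumed perfect for clarity.
dt, bias, true_heading = 0.1, 0.01, 0.5
fused = integrated = true_heading
for _ in range(1000):
    integrated += bias * dt  # gyro-only dead reckoning accumulates the bias
    fused = fuse_heading(fused, bias, true_heading, dt)
print(round(integrated - true_heading, 3), round(fused - true_heading, 3))
```

After 100 s the gyro-only estimate has drifted by about 1 rad, whereas the fused estimate settles at a small bounded error (about 0.05 rad with these values), because each GNSS correction removes a fraction of the accumulated drift.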

3.2. Seeding Robots

Sowing is the primary process in field production. Seed-sowing robots place seeds in exact positions, saving both time and cost for farmers.

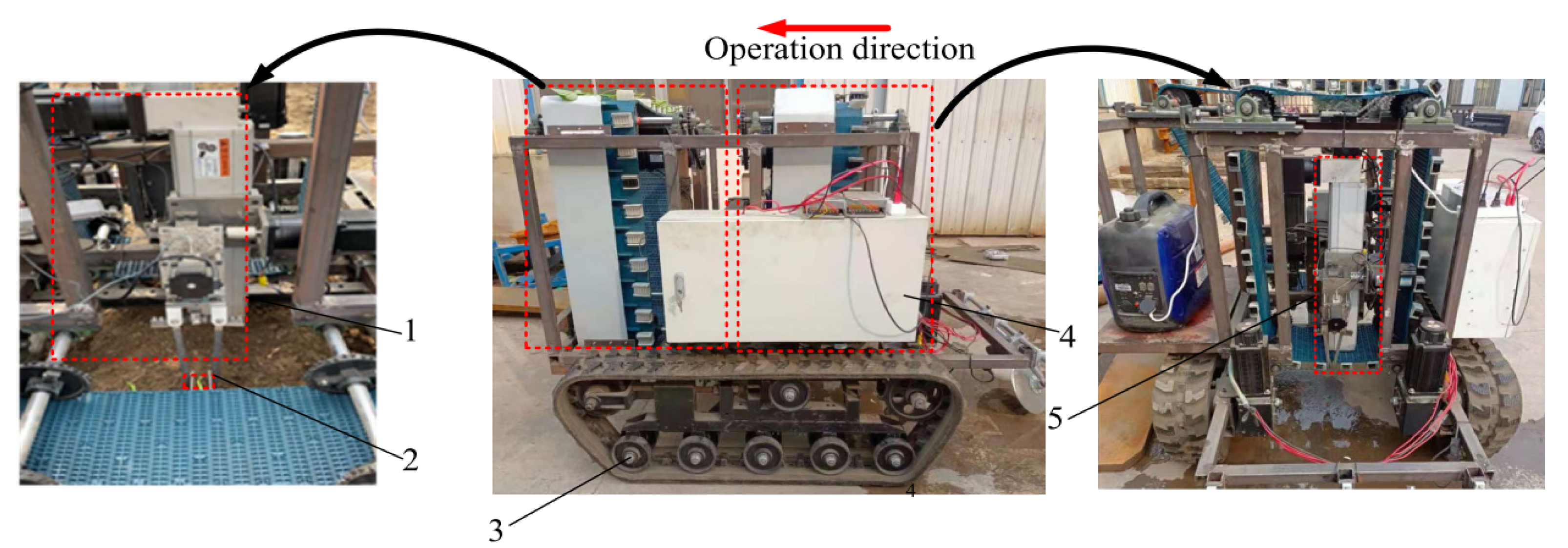

To date, many functional seeding robots have been invented and put into extensive practice. The precision seeding robot for wheat shown in Figure 6 [37] was designed with four wheels, servo motors, and stepper motors. According to the trial results, its seeding rate surpassed 93% at typical sowing speeds. In [18], the authors proposed a seeding robot that can dig soil, plant seeds, and cover them with soil; fertilising and watering functions are available as well. In 2019, Raj et al. [38] designed and tested an automatic robot for seeding and microdose fertilising. The robot was intended to plant different seeds, and the trials showed outstanding prototype performance. Kumar et al. [28] developed an intelligent seed-sowing robot controlled by an IoT system that was able to achieve complete seeding automation. Stepper motors and DC motors were used to power the robot.

3.3. Crop Protection Robots

Traditional crop protection generally involves spraying toxic pesticides manually, which adversely affects farmers' health. In order to reduce exposure to pesticides, an intelligent robotic system [39] was developed to spray pesticides automatically, based on a navigation control algorithm and a high-efficiency trajectory calculation algorithm. Deshmukh et al. [40] studied and analyzed a multi-purpose pesticide spraying robotic system using fuzzy control; it was able to locate infected plants and then spray the appropriate pesticide.

However, ordinary crop protection tends to incur overuse of pesticides, which raises production expenses and does harm to the environment [

41]. More precise crop protection methods are expected to cope with this issue. One well-known piece of equipment is the Yamaha R-MAX, developed by the Japanese, which is a leading platform in aerial pesticide spraying [

42]. Ghafar et al. [

43] developed a cost-saving spraying robot to satisfy the need for spraying pesticides and fertilizers, shown in

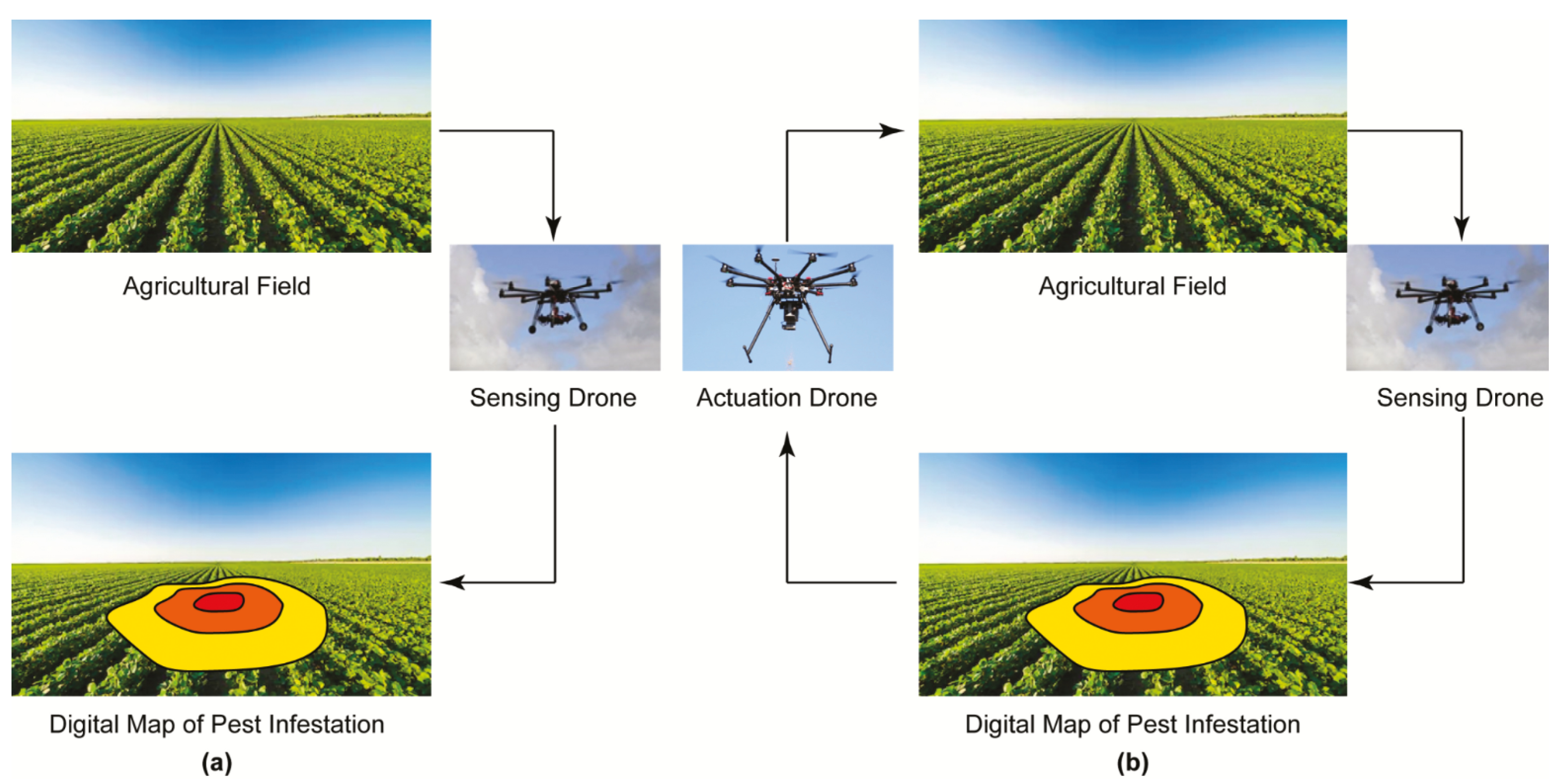

Figure 7. As shown in

Figure 8, Iost et al. [

44] introduced novel technologies for small drones, including sensing and actuation drones. By collaborating with each other, the intelligent system provides sustainable pest control. A new modular system for precision farming was put forward in [

45] based on the technology of individual nozzles and computer vision. The application of these machines can both protect the environment and save farming expenses.

Apart from pest control, overall environmental management pertains to crop protection as well. Martini et al. [46] proposed an automated gardening robot that can plant automatically and monitor soil and water. Similarly, based on dual-tone multi-frequency (DTMF) signalling, Srivastava et al. [47] developed a multi-function field robot that can measure soil moisture, irrigate, and spray pesticides, and that can be controlled remotely. Sori et al. [13] developed a robot for weeding in paddy fields. This robot, equipped with two wheels, touch sensors, and a turning azimuth sensor, controls weeds by stirring up the soil and blocking sunlight, and experimental results suggest that it could improve crop yield.

Several advanced techniques have been applied to crop protection robots, such as ant colony algorithms, trajectory methods, and optimized robot systems. An et al. [48] proposed a more precise and efficient ant colony algorithm for plant protection robots, aiming to improve the reliability and accuracy of path planning. For crop planting in western China, a region characterized by fragmented cropland, Ma et al. [49] determined a novel trajectory method by analyzing the arrangement of crops using the ant colony algorithm. As shown in Figure 9, Nascimento et al. [50] optimized an autonomous pesticide spraying robot system, enabling the sprayer to detect rows and activate nozzles.
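The ant colony algorithm used for path planning in [48,49] can be illustrated with a basic version that orders a set of spray waypoints into a short path. This is the textbook algorithm, not the improved variants of An et al. or Ma et al.; the waypoint coordinates and all parameter values (`alpha`, `beta`, `rho`, number of ants and iterations) are hypothetical.

```python
import math
import random

def path_length(order, pts):
    """Length of an open path visiting pts in the given order."""
    return sum(math.dist(pts[a], pts[b]) for a, b in zip(order, order[1:]))

def aco_path(pts, n_ants=20, n_iter=100, alpha=1.0, beta=3.0,
             rho=0.5, q=1.0, seed=0):
    """Basic ant colony optimisation for an open path starting at pts[0]."""
    rng = random.Random(seed)
    n = len(pts)
    tau = [[1.0] * n for _ in range(n)]  # pheromone level on each edge
    best_order, best_len = None, math.inf
    for _ in range(n_iter):
        tours = []
        for _ in range(n_ants):
            order, unvisited = [0], set(range(1, n))
            while unvisited:
                i = order[-1]
                cand = sorted(unvisited)
                # Edge attractiveness: pheromone^alpha * (1 / distance)^beta
                weights = [tau[i][j] ** alpha *
                           (1.0 / max(math.dist(pts[i], pts[j]), 1e-9)) ** beta
                           for j in cand]
                j = rng.choices(cand, weights=weights)[0]
                order.append(j)
                unvisited.remove(j)
            length = path_length(order, pts)
            tours.append((order, length))
            if length < best_len:
                best_order, best_len = order, length
        # Evaporation lets poor early choices fade ...
        for row in tau:
            for j in range(n):
                row[j] *= 1.0 - rho
        # ... and deposits reinforce edges used by short paths
        for order, length in tours:
            deposit = q / max(length, 1e-9)
            for a, b in zip(order, order[1:]):
                tau[a][b] += deposit
                tau[b][a] += deposit
    return best_order, best_len

# Hypothetical spray waypoints along a row, listed out of order
waypoints = [(0, 0), (3, 0), (1, 0), (2, 0), (4, 0)]
order, length = aco_path(waypoints)
print(order, length)
```

On this toy input the ants quickly converge on visiting the waypoints in increasing x (indices 0, 2, 3, 1, 4, length 4.0). The `beta` term biases ants towards nearby waypoints, while pheromone accumulation shares good partial paths across iterations.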

3.4. Field Information-Collecting Robots

Although collecting information in the field can be laborious and gruelling, the resulting data help farmers make informed decisions. In light of this, field information-collecting robots have been developed to accomplish this task. At the University of Saskatchewan, Bayati et al. [51] developed, implemented, and verified a field-based high-throughput plant phenotyping mobile robotic platform to monitor canola plants. The platform can automatically gather and analyze wide-range images of plant canopies. This innovation has been shown to improve farm productivity while decreasing costs in the long run. As shown in Figure 10, Cubero [52] developed a field robot called Robhortic for detecting pests and diseases in horticultural crops. After three trials in carrot fields, it achieved detection rates of 66.4% and 59.8% in the laboratory and field, respectively. An ROS-driven mobile robot [53] was specially designed to navigate the area and collect phenotyping data, with error rates of only 6.6% and 4% for plot volume and canopy height, respectively. Ultimately, this work has helped to accelerate the evolution of agricultural robots, especially in phenotype monitoring.

The technological development of information-collecting robots has included breakthroughs in neural network algorithms and visual navigation. Gu et al. [54] enhanced the convolutional neural network algorithm of a field information-collecting robot and refined its path tracking to ensure stable movement, minor deviation, and human–machine separation.

3.5. Crop Harvesting Robots

As is well known, rice harvesting machines have been available for many years. Based on existing mechanical frameworks [55,56,57], many algorithms have been developed to automate such harvesters. In 2022, Geng et al. [16] developed an automatic corn harvester system able to fulfil trial requirements with a deviation rate of 95.4% at normal harvester speeds. Notably, these advancements represent a benchmark for improving the automatic row alignment process. Li et al. [58] developed and applied a deep-learning algorithm based on ICNet to assist a robotic harvester with accurate real-time obstacle detection, as shown in Figure 11. This automatic harvester, equipped with a pruned model, achieved collision avoidance with a success rate of 96.6% at an average travelling speed. Considering the deficiencies of current navigation algorithms used in harvester robots, Li et al. [59] developed an enhanced detection algorithm that reached a success rate of 94.6%, higher than that of the least squares method. However, precise corner detection was hard to attain. Building on an improved PSO algorithm, Pooranam [60] invented a robotic swarm harvester to help farmers with large-scale reaping, threshing, and cleaning. Using simple mathematical operations, they were able to optimize the course of harvesting. Considering the large overshoot and long convergence time caused by large initial heading errors, Wang et al. [61] explored a novel trajectory planning algorithm for harvesting robots that could enhance stability, thereby improving operational performance.
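The least squares method that serves as the baseline in [59] is commonly used to fit a navigation line through detected crop-row points. The sketch below shows that baseline under stated assumptions; it is not the enhanced algorithm of Li et al. The robot-frame convention, the sample points, and the reduction to heading error plus lateral offset are hypothetical.

```python
import math

def fit_row_line(points):
    """Least-squares fit of x = a*y + b to detected crop-row points.

    Points are (x, y) in a hypothetical robot frame: y points forward and
    x is the lateral offset, both in metres. Regressing x on y keeps the fit
    well-conditioned because rows run roughly parallel to the direction of travel.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_yy = sum((y - mean_y) ** 2 for _, y in points)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = s_xy / s_yy          # slope: lateral drift per metre travelled
    b = mean_x - a * mean_y  # row's lateral offset at the robot (y = 0)
    return a, b

def guidance(a, b):
    """Heading error (rad) and lateral offset (m) to feed a steering controller."""
    return math.atan(a), b

# Hypothetical plant centres detected along a row that drifts slightly sideways
row = [(0.21, 0.0), (0.29, 1.0), (0.41, 2.0), (0.49, 3.0), (0.61, 4.0)]
a, b = fit_row_line(row)
heading_err, offset = guidance(a, b)
print(f"slope={a:.3f} offset={b:.3f} m heading_err={math.degrees(heading_err):.2f} deg")
```

Because every point contributes equally to the fit, a single weed or misdetected plant far from the row can tilt the line noticeably, which is one reason more robust detection algorithms can outperform this baseline.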

4. Fruit and Vegetable Robots

Manpower cannot fully meet the rapidly growing demands of the agricultural products market. Smart robotics, combined with changes in cultivation, preservation, and processing technology, can be an efficient way to expand planting areas to supply these markets. In this section, five major types of fruit and vegetable robots are introduced: transplanting robots, patrolling robots, pesticide spraying robots, gardening robots, and picking robots.

4.1. Transplanting Robots

With regard to transplanting performance, accuracy and stability are two critical indicators. Therefore, Jin et al. [62] proposed an advanced manipulator control approach for hydraulic transplanting robots, improving the control accuracy and stability of transplanting. Yang et al. [63] developed a transplanting robot with three degrees of freedom. A subsequent trial showed that the robot could achieve a success rate of 95.3% even at an acceleration of 30 m/s². Han et al. [64] constructed and evaluated a multi-task transplanting robot that could reach a success rate of 90% even at a speed of 960 plants/min per gripper. Future research that integrates agronomic and mechanical requirements is expected, as is the design of less expensive products for smallholder farmers. As shown in Figure 12, Liu et al. [65] designed an advanced transplanting robot for sweet potatoes, distinguished by two-degree-of-freedom path control. Notably, this machine can automatically apply different transplanting strategies according to terrain type. The minimum qualified rates of seedling erection angle and planting depth were 94.7% and 94.8%, respectively, satisfying the practical requirements of mechanical sweet potato transplanting.

4.2. Fruit and Vegetable Patrolling Robots

Fruit and vegetable patrolling robots usually navigate autonomously, collect various information, and finally transmit the gathered information to farmers as feedback. The data they gather include fruit and vegetable maturity, environmental parameters, and pest information. Based on colour proportion analysis, Zhou et al. [66] improved a scouting robot to detect tomatoes and measure their maturity using YOLOv4. It is worth mentioning that the identification accuracy is high, reaching 95%, and the detection speed exceeds 5 frames/s in a natural greenhouse environment. Iida et al. [67] designed an information-collecting robot to gather environmental information, such as CO2 content, temperature, and humidity, and validated its usefulness with a prototype.
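Colour proportion analysis, as used for tomato maturity in [66], can be sketched as measuring what share of the fruit's pixels is red once a detector (YOLOv4 in [66]) has located the fruit. The sketch below assumes the pixels inside a bounding box are already available as RGB triples; the hue window, saturation and brightness cut-offs, and maturity thresholds are hypothetical and are not taken from [66].

```python
import colorsys

def red_proportion(pixels, min_sat=0.3, min_val=0.2):
    """Share of sufficiently coloured pixels whose hue is red.

    pixels: iterable of (r, g, b) tuples with 0-255 channels, e.g. the
    contents of a detected bounding box.
    """
    red = counted = 0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        if s < min_sat or v < min_val:
            continue  # skip grey, washed-out, or dark background pixels
        counted += 1
        # Red hue wraps around 0: accept roughly 0-20 deg and 340-360 deg
        if h <= 20 / 360 or h >= 340 / 360:
            red += 1
    return red / counted if counted else 0.0

def maturity_stage(p):
    """Map a red proportion to a stage (hypothetical thresholds)."""
    if p >= 0.7:
        return "ripe"
    if p >= 0.3:
        return "turning"
    return "green"

# Hypothetical box: 8 red pixels, 2 green pixels, 5 grey background pixels
box = [(220, 30, 30)] * 8 + [(40, 180, 40)] * 2 + [(128, 128, 128)] * 5
p = red_proportion(box)
print(p, maturity_stage(p))
```

Working in HSV rather than RGB makes the red test largely independent of brightness, which matters under the variable lighting of a greenhouse.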

Wang et al. [

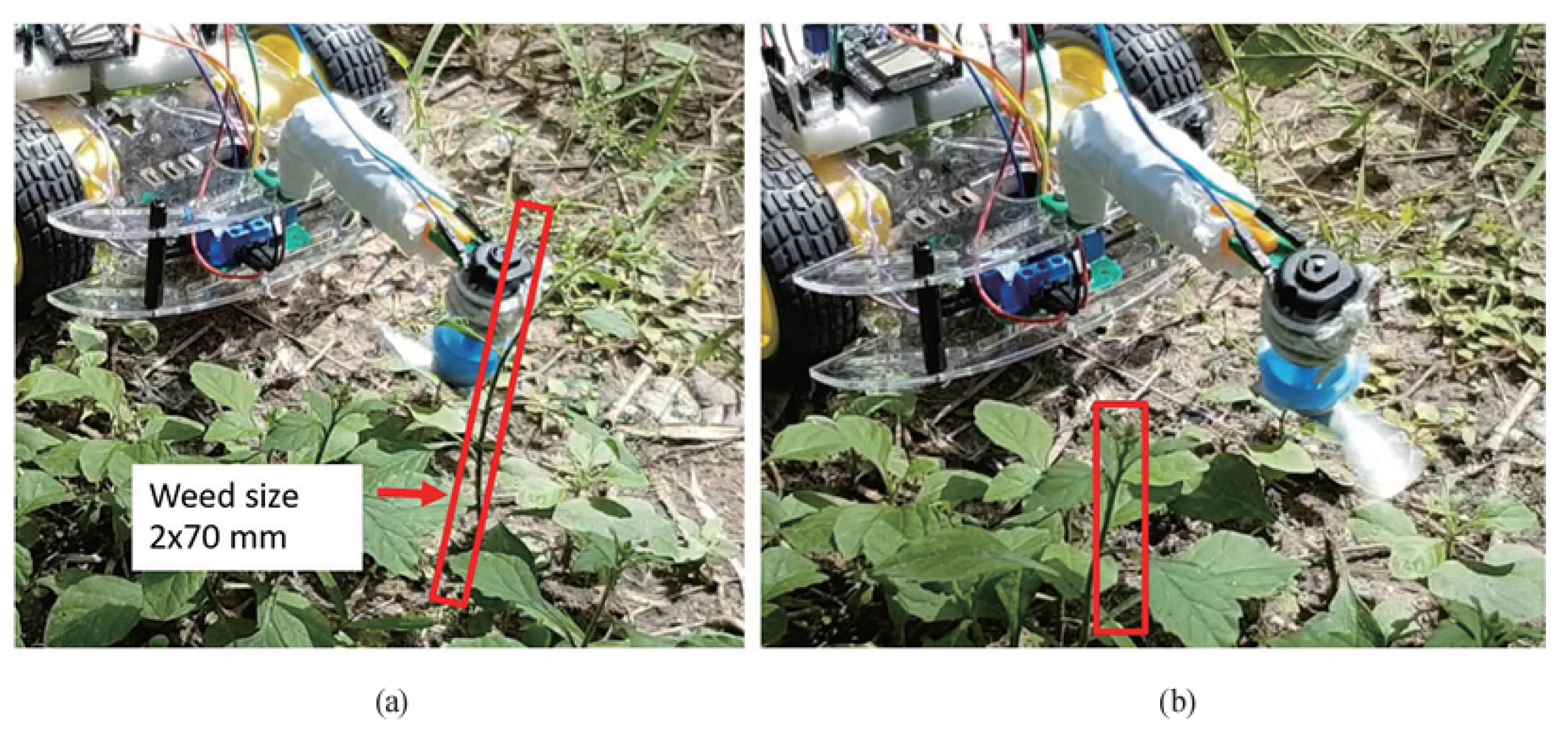

68] developed a patrolling robot on the basis of the Web of Things, which can send processed warning information to users, thus instructing farmers to plant scientifically. Introducing the Web of Things to agricultural production is beneficial in intelligent planting applications. To detect early pests, Martin et al. [

69] developed an ROS-based architecture dubbed Robotframework (

Figure 13) that successfully combines various robotic skills, such as navigation and manipulation. These innovative solutions enable the possibility of new mobile robotic manipulators.

4.3. Pesticide Spraying Robots

As with field crops, spraying pesticides on fruits and vegetables burdens the environment because the spraying range is excessive. Therefore, many pesticide spraying robots have been designed to achieve more precise spraying via various methods, such as servo-controlled nozzles, flow control systems, and ultrasonic sensors. A great deal of research effort and attention has been focused on the area of pesticide spraying robots.

Cantelli et al. [30] invented an autonomous spraying robot consisting of two parts, a vehicle and a spraying control system. Experimental tests then showed that synergy between the two parts could achieve a safer and more precise spraying operation. Bhat et al. [70] designed a semi-autonomous robot able to climb areca nut trees and then spray pesticides using servo-controlled nozzles. In this way, higher quality and output can be attained, and the limitations of human labour are overcome. An autonomous pesticide sprayer [71] was developed and implemented to spray pesticides precisely while avoiding obstacles. Moreover, it can be applied to different crops, including pineapples, tomatoes, rock melons, and more. Further work was considered in terms of spraying pressure, waterproof structure, and upgrading of the monitoring system.

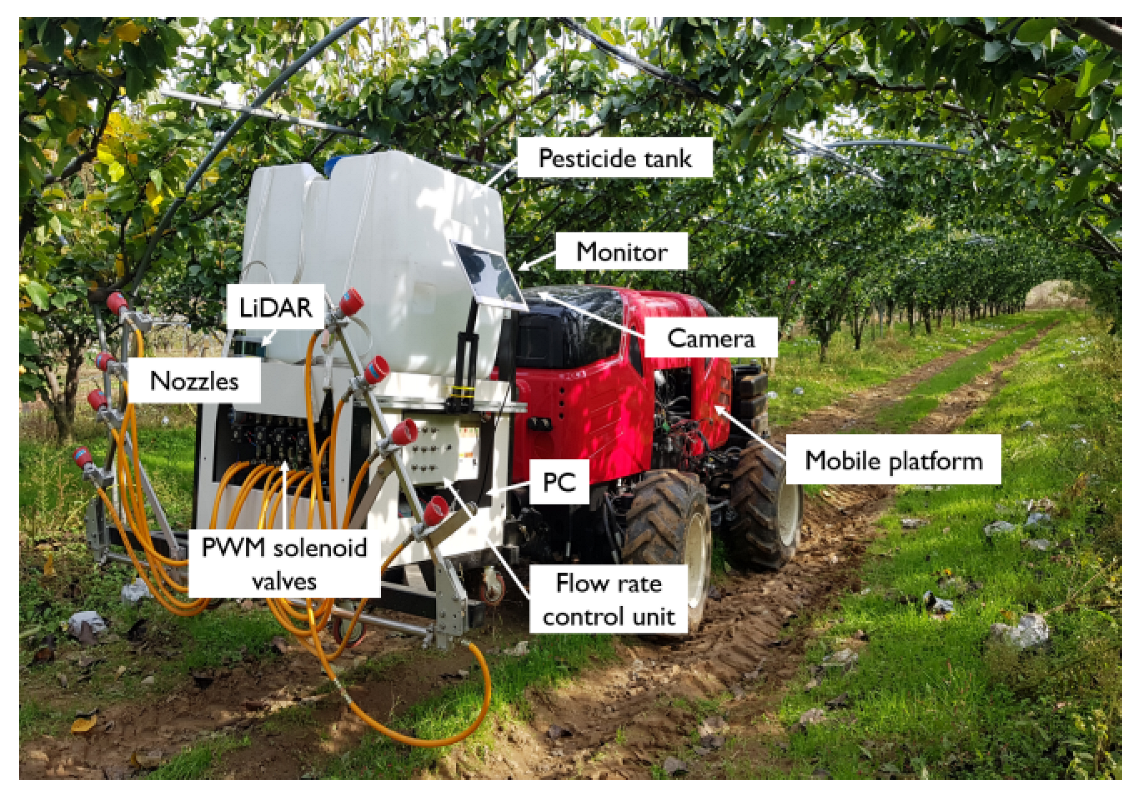

Oberti et al. [14] developed a modular agricultural robot in the CROPS project [14] to achieve autonomous disease detection and selective pesticide spraying, which could mitigate pesticide overuse. In addition, they designed the first fully automatic selective pesticide spraying system geared towards specialty crops. As shown in Figure 14, Seol et al. [72] proposed a flow control system for a smart spraying robot using semantic segmentation. Comparative field experiments then demonstrated that the proposed system outperformed existing control approaches. Tewari et al. [73] developed a robotic selective sprayer using sensitive ultrasonic sensors. Based on ultrasonic sensing, the nozzles spray exclusively toward the tree canopy, reducing pesticide usage in orchards by 26%. A robotic spraying system based on the SegNet model was proposed in [74] to spray pesticides in orchards; it comprises a hardware configuration, semantic segmentation, and depth data fused with trained RGB data. In field experiments, this environmentally friendly spraying robot showed satisfactory performance.
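Canopy-gated spraying with ultrasonic sensors, as in [73], rests on a simple principle: each sensor measures the round-trip time of an echo, converts it to a distance, and the matching nozzle opens only when foliage is close enough. The sketch below illustrates that principle only; the one-sensor-per-nozzle layout, the 1.5 m range threshold, and the readings are hypothetical, and it does not reproduce the controller or the 26% figure of [73].

```python
SPEED_OF_SOUND = 343.0  # m/s in dry air at about 20 deg C

def echo_to_distance(echo_time_s):
    """Convert a round-trip ultrasonic echo time to a one-way distance in metres."""
    return SPEED_OF_SOUND * echo_time_s / 2.0

def nozzle_states(echo_times, max_range_m=1.5):
    """Open each nozzle only if its sensor detects canopy within max_range_m.

    echo_times holds one reading per nozzle; None means no echo (for example
    a gap between trees), so that nozzle stays closed.
    """
    return [t is not None and echo_to_distance(t) <= max_range_m
            for t in echo_times]

def spray_saving(history):
    """Fraction of nozzle-time saved compared with spraying continuously."""
    total = sum(len(states) for states in history)
    on = sum(sum(states) for states in history)
    return 1.0 - on / total

# Hypothetical readings for three nozzles: canopy at ~0.86 m, far object, gap
states = nozzle_states([0.005, 0.02, None])
print(states)  # prints [True, False, False]
```

Accumulating these states over a pass, for example `spray_saving([[True, False], [True, True]])`, gives the share of nozzle-time avoided (0.25 in that toy case), which is one simple way such savings could be estimated.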

Compared with typical pesticide-spraying robots, the "X-Bot" designed by Ozgul and Celik [75] is much smaller, as shown in Figure 15. This semi-automatic mobile robot offers potential energy savings and can spray pesticides and repel insects without any human assistance.

It is worth mentioning that remote control can be achieved through Bluetooth communication. Mane et al. [76] proposed a pesticide spraying robot with an interface controller for convenient remote control. Moreover, they fabricated and tested a prototype that was able to satisfy all major requirements.

4.4. Gardening Robots

Because of the dynamic conditions created by seasonal changes and plant growth, gardens, each with unique characteristics, are challenging for autonomous gardening robot systems [77,78,79,80,81,82]. When a gardening robot cuts hedges, their appearance and geometry change, which affects the garden map used for robot navigation. Hence, navigation techniques should consider the existence of pitches and the planned movements of the gardening robot. A great deal of research attention has been focused on this area.

In the TrimBot 2020 project, a robotic lawn mower was proposed, which was the first outdoor robot intended to trim bushes and prune roses. Strisciuglio et al. [83] pioneered a prototype using innovative path planning and visual servoing systems. A robotic irrigation system [84] was specially developed to irrigate indoor gardens: an Arduino microcontroller increases the water flow when a moisture sensor detects dry soil. Moreover, an automatic fertilizer sprayer was developed to compensate for one deficiency of this work.
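The moisture-triggered watering described for [84] can be sketched as a threshold controller. The version below is written in Python for readability rather than as Arduino firmware, it simplifies "increasing the water flow" to switching a pump on or off, and its two thresholds (in percent volumetric moisture) are hypothetical. Using two thresholds (hysteresis) rather than one is a common design choice that stops the pump from rapidly toggling when the reading hovers around a single set-point.

```python
class IrrigationController:
    """On/off watering with hysteresis (hypothetical thresholds in percent moisture)."""

    def __init__(self, dry=30.0, wet=45.0):
        if dry >= wet:
            raise ValueError("dry threshold must be below wet threshold")
        self.dry, self.wet = dry, wet
        self.pump_on = False

    def update(self, moisture_pct):
        """Feed one sensor reading and return whether the pump should run."""
        if moisture_pct < self.dry:
            self.pump_on = True    # soil is dry: start watering
        elif moisture_pct > self.wet:
            self.pump_on = False   # soil is wet enough: stop watering
        # Between the thresholds the previous state is kept
        return self.pump_on

ctrl = IrrigationController()
readings = [50, 35, 29, 32, 40, 46, 38]
print([ctrl.update(r) for r in readings])
# prints [False, False, True, True, True, False, False]
```

Note how the readings of 32 and 40 keep the pump running after it was switched on at 29, and the reading of 38 keeps it off after it was switched off at 46.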

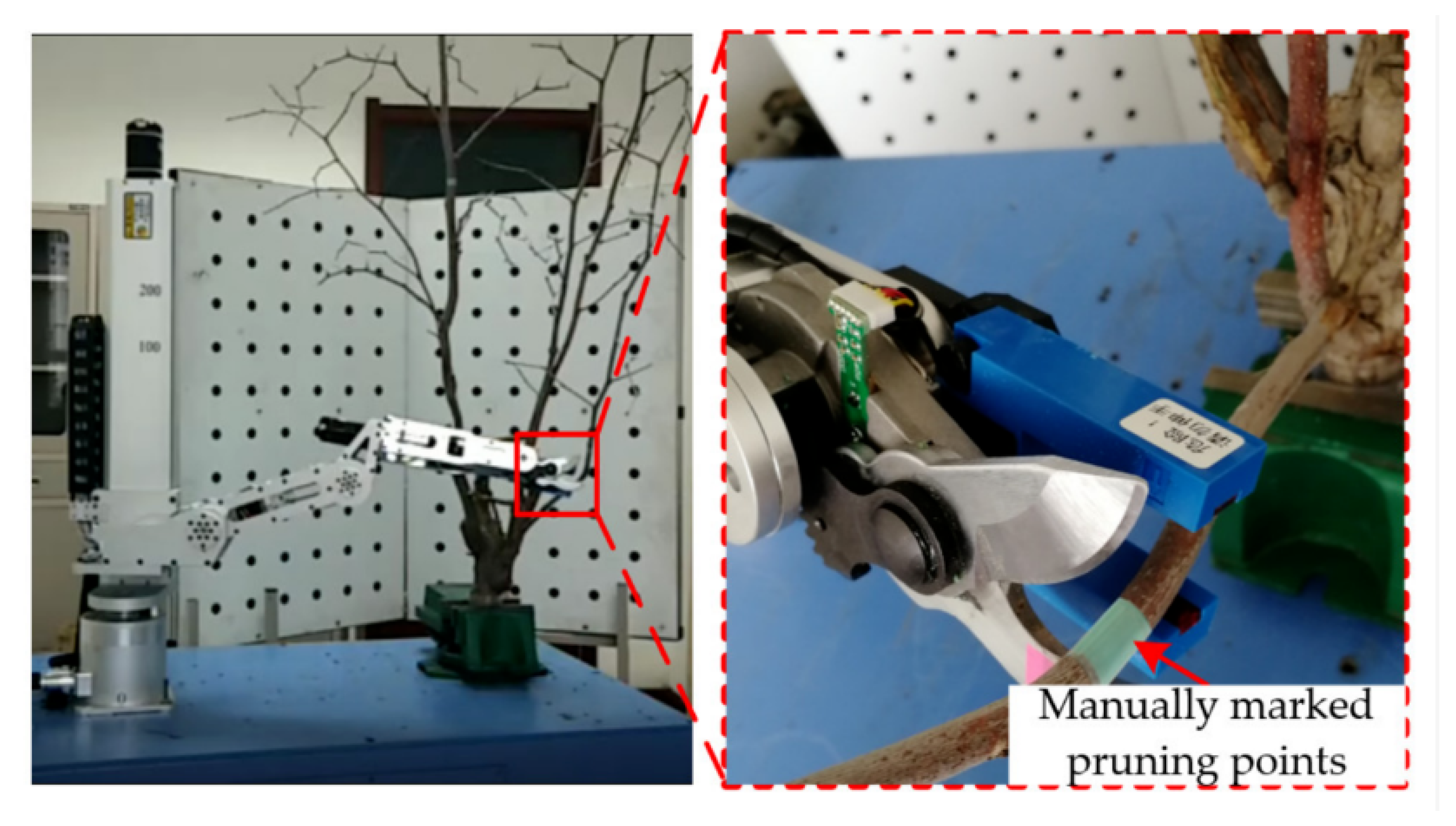

In [85], a pruning manipulator with five degrees of freedom was designed for jujube trees, as shown in Figure 16. A subsequent performance test verified the excellent properties of the automatic equipment, with minimal positioning error and an average success rate higher than 85.16%.

Based on an automatic irrigation system, small-sized gardening robots [86] have been designed and tested to assist people in growing plants, as shown in Figure 17. Thanks to sprinkler controllers and moisture sensors, plants grew as much as 20% faster under the care of these gardening robots. In future work, more sensors are expected to be integrated, and image-based machine learning may be emphasized. Islam et al. [87] designed a multi-functional gardening system that can be deployed on rooftops and in nurseries. This semi-autonomous assistance system can supply water and detect leaf disease. However, the gardening robot is limited by its solar charging system, which cannot operate with insufficient daylight. Cheung et al. [88] at the City University of Hong Kong designed an automatic mobile gardening system made up of four parts, namely, a monitoring kit, an artificial intelligence classifier, a mobile application, and cloud storage, with the aim of increasing planting efficiency.

4.5. Fruit and Vegetable Picking Robots

Fruit and vegetable picking robots usually refer to automatic machines designed for large-scale detection and picking of fruits and vegetables in modern agriculture [89,90,91,92]. Robotic harvesters are classified into bulk and selective robotic harvesters [93], and include kiwifruit-picking robots, apple-picking robots, strawberry-picking robots, tomato-picking robots, and more [11]. Numerous examples show that fruit and vegetable picking robots have become a prevalent topic in agricultural robotics.

Williams et al. [27] developed a kiwifruit-picking robot consisting of a machine vision system, end effectors, and four harvesting arms. Specifically, the robot employs a convolutional neural network (CNN) that performs semantic segmentation on images of the canopy. However, due to obstructions and fruit loss, only 51% of the kiwifruit in the test orchard were successfully picked by this novel robotic harvesting system.

With regard to apple-harvesting robots, Kuznetsova et al. [

94] developed a machine vision system based on a YOLOv3 algorithm with pre- and post-processing for detecting apples. By employing pre-and post-processing, the fruit detection rate increased from 9.1% to 90.8% compared with standard YOLOv3. Notably, only 19ms was required to detect each apple; objects mistaken for apples accounted for 7.8%, while 9.2% of apples were unrecognized. A complete and totally autonomous picking robot [

26] was implemented by the agricultural R&D-company Octinion to detect and pick ripe fruits without damaging them. The efficiency of the prototype was high, picking strawberries in only 4 s. Based on RGB-D, Li et al. [

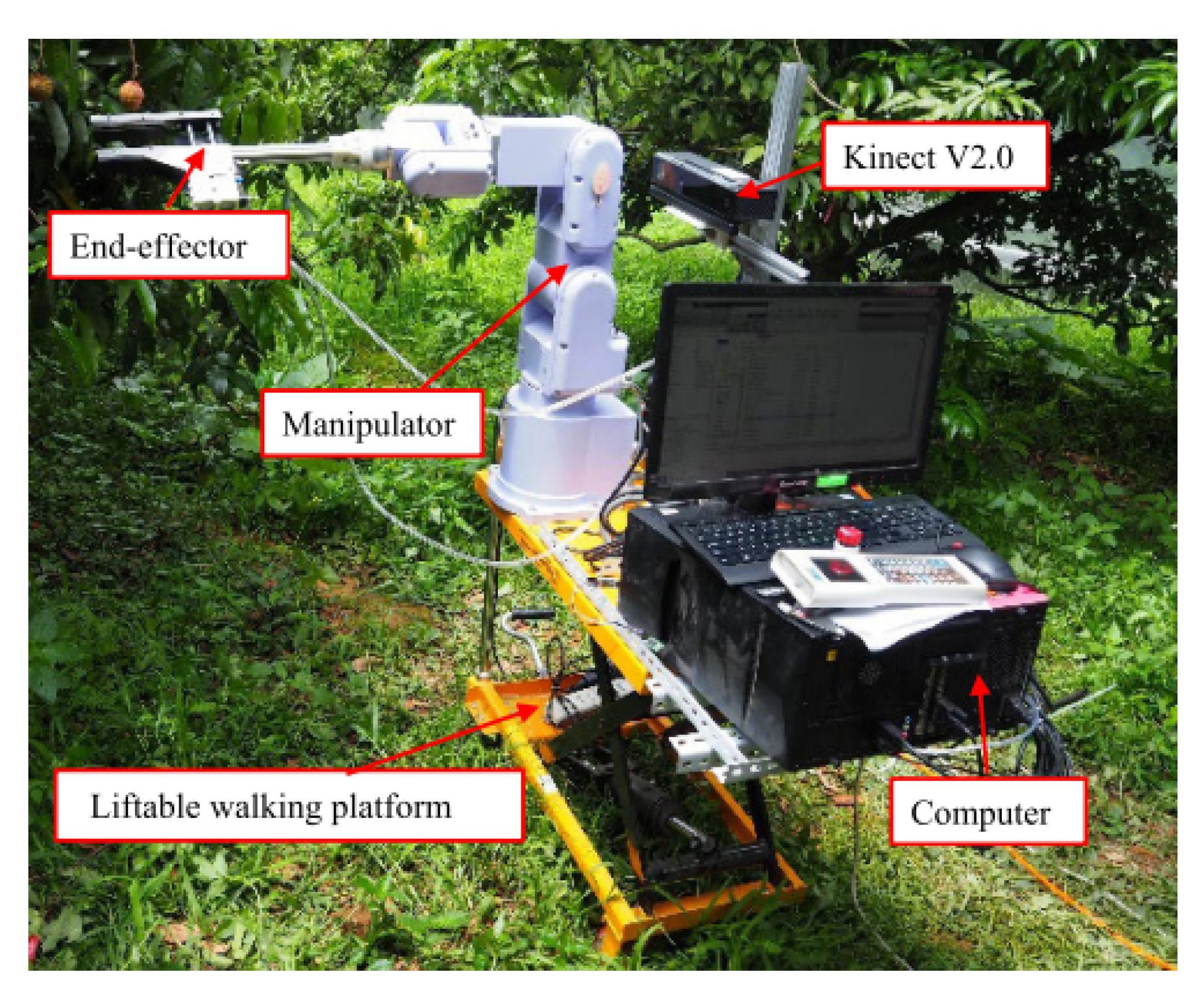

95] invented a trustworthy algorithm for harvesting robots (

Figure 18) to automatically locate lychee clusters, facilitating collection in large-scale environments. In field experiments, only 0.464 s was required to deal with a single lychee string.

To cover the overall procedure for harvesting sweet peppers, Lehnert [96] developed a new robotic harvester. Using a vision-based algorithm, a 3D localisation and grasp selection method, and an end effector, it obtained a harvesting success rate of 58%, a grasping success rate of 81%, and a detachment success rate of 90% for sweet peppers, which represents a breakthrough. Although these results cannot yet satisfy commercial needs, they point to a promising future for autonomous sweet pepper harvesting. Based on deep learning, thermal images were used in [20] to detect chili peppers in complex environments, proving more efficient than RGB images. Thermal cameras can make the harvesting process more efficient, and this study opens up new possibilities for harvesting in low-light environments.

Unlike rigid grippers, flexible soft grippers can interact gently with objects. Peng et al. [97] summarized the advancements and relative advantages of soft robotic grippers in vegetable and fruit picking, as well as their robustness in adapting to different requirements. They also briefly introduced the concept and current status of soft robotic grippers. They concluded that progress in developing soft grippers has been made in materials, chemistry, and other multidisciplinary areas, while challenges remain in manipulation methods, controllability, and mechanical design.

5. Animal Husbandry Robots

Due to lengthy investment cycles and high risks, animal husbandry is prone to severe crises. When a crisis occurs, meat and dairy yields decline accordingly, increasing production costs. Therefore, the need for a more intelligent strategy that can manage farms efficiently has begun to catch the public's eye.

5.1. Breeding Robots

Poultry and livestock breeding is an essential and profitable part of agricultural production. Therefore, enhancing poultry and livestock breeding is highly significant. Among the various methods used to improve breeding, disinfection is the most fundamental, valuable, and comprehensive, and it has attracted extensive attention.

To deal with the labour-intensive work of disinfection, Feng et al. [98] designed an efficient disinfecting robot. A subsequent experiment to test its performance showed that it satisfied the basic requirements very well. In 2021, Feng et al. [99] upgraded a disinfectant-spraying robot for poultry houses that can be controlled automatically and remotely. This research serves as technical support for intelligent production.

Based on the Internet of Things, Li et al. [100] developed an intelligent device to monitor the environment of enclosed henhouses, seeking the relationship between the production environment and laying rate. In general, they found that suitable temperature and increased ventilation are two key factors for laying hens. Li et al. [101] applied internet technology to chicken breeding. Their intelligent, remotely controlled system can monitor the chickens and update real-time information through various sensors. Hence, a chicken house in the woods can become an integrated whole through such a network.

5.2. Animal Feeding Robots

Feeding livestock and poultry on time is another labour-intensive task, and it is difficult to determine fodder quantities accurately. Automating animal feeding can reduce the costs of both feed and labour while eliminating feed waste. In light of this, robotization has become the general direction in animal feeding.

Peng et al. [102] proposed and designed a robotic pig-feeding system to decrease the demand for manual labour and guarantee a pleasant environment for pig breeding, greatly improving production efficiency. In Nepal, Karn et al. [103] introduced a feeding system for cattle that followed a pre-determined trajectory and placed the feed by the fence. The developed robotic vehicle operated successfully in the designed work environment. On the basis of force feedback, Rumba et al. [104] proposed an iterative pile-pushing algorithm to estimate the path of a feed-pushing robot on dairy cattle farms. Notably, this work can contribute to intelligent dairy farms.

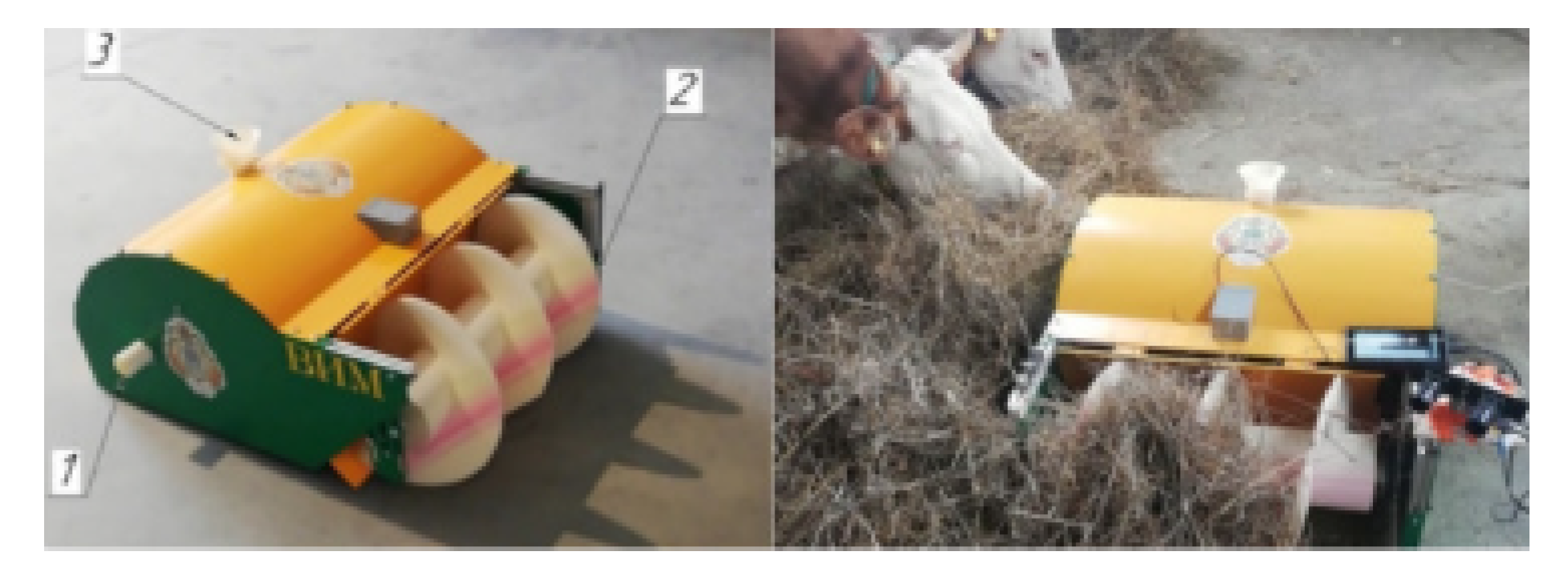

As shown in Figure 19, Pavkin et al. [105] conceived a robotic feed pusher to modernize feeding. Later, they developed an experimental model to test their simulation model; the results showed that the automatic robot can significantly facilitate feeding by taking over labour-intensive operations. However, the animals at the feed table posed a challenge for the accuracy of the vision system. As shown in Figure 20, Tian et al. [106] designed a pusher robot able to navigate automatically using a 3D lidar system. Moreover, they proposed an advanced obstacle avoidance method to handle complicated open environments. These developments contribute to intelligent dairy farming.

5.3. Milking Robots

Conventionally, milking is performed in fixed sessions, and cows cannot choose when they are milked. The advent of automatic milking systems (AMS) has revolutionized this process, and indeed the whole dairy industry, helping farmers to obtain considerably higher returns. Milking robots are now available throughout the day, and farm management has become more organized. Consequently, many researchers have taken an interest in this topic.

Sitkowska et al. [107] developed an automatic milking system (AMS) that allows farmers to monitor cow performance traits. By analyzing the data collected through the AMS, the researchers identified relationships between performance traits and milk yield, helping to improve milk yield and economic benefit. They reported that the optimal milking plan corresponded to a milking frequency of 2.6 to 2.8 milkings per day and a milking speed of 2.6 kg/min. Iweka [108] developed a near-infrared spectroscopy (NIRS) sensing system for a milking robot to assess the quality of non-homogenized milk in terms of somatic cell count (SCC) and three main milk constituents. Such a system provides farmers with feedback for quality control, contributing to high-quality milk and precision dairy farming.
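To make the reported optimum concrete, the sketch below computes each cow's milking frequency (milkings per day) and mean milking speed (kg/min) from AMS visit records and compares them with the 2.6 to 2.8 milkings per day and 2.6 kg/min reported by Sitkowska et al. [107]. The record layout (`MilkingRecord`), the function `summarize`, and the example data are hypothetical; actual AMS data exports differ between systems.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

# Values reported by Sitkowska et al. [107] as the optimal milking plan.
TARGET_FREQ_RANGE = (2.6, 2.8)  # milkings per day
TARGET_SPEED = 2.6              # kg/min


@dataclass
class MilkingRecord:
    """One AMS milking visit (hypothetical layout)."""
    cow_id: str
    yield_kg: float
    duration_min: float


def summarize(records: Iterable[MilkingRecord], n_days: int) -> Dict[str, dict]:
    """Per-cow milking frequency (per day) and mean milking speed (kg/min)."""
    totals = defaultdict(lambda: [0, 0.0, 0.0])  # [milkings, kg, minutes]
    for r in records:
        t = totals[r.cow_id]
        t[0] += 1
        t[1] += r.yield_kg
        t[2] += r.duration_min
    report = {}
    for cow, (n, kg, minutes) in totals.items():
        freq = n / n_days
        speed = kg / minutes  # total yield over total milking time
        report[cow] = {
            "freq_per_day": freq,
            "speed_kg_min": speed,
            "freq_in_target": TARGET_FREQ_RANGE[0] <= freq <= TARGET_FREQ_RANGE[1],
            "speed_gap_kg_min": speed - TARGET_SPEED,
        }
    return report


# Hypothetical 10-day log for two cows.
log = [MilkingRecord("A", 10.4, 4.0) for _ in range(27)]
log += [MilkingRecord("B", 9.0, 4.5) for _ in range(20)]
herd = summarize(log, n_days=10)
```

Here cow A is milked 2.7 times per day at 2.6 kg/min, inside the reported optimum, whereas cow B is milked only 2.0 times per day at 2.0 kg/min.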

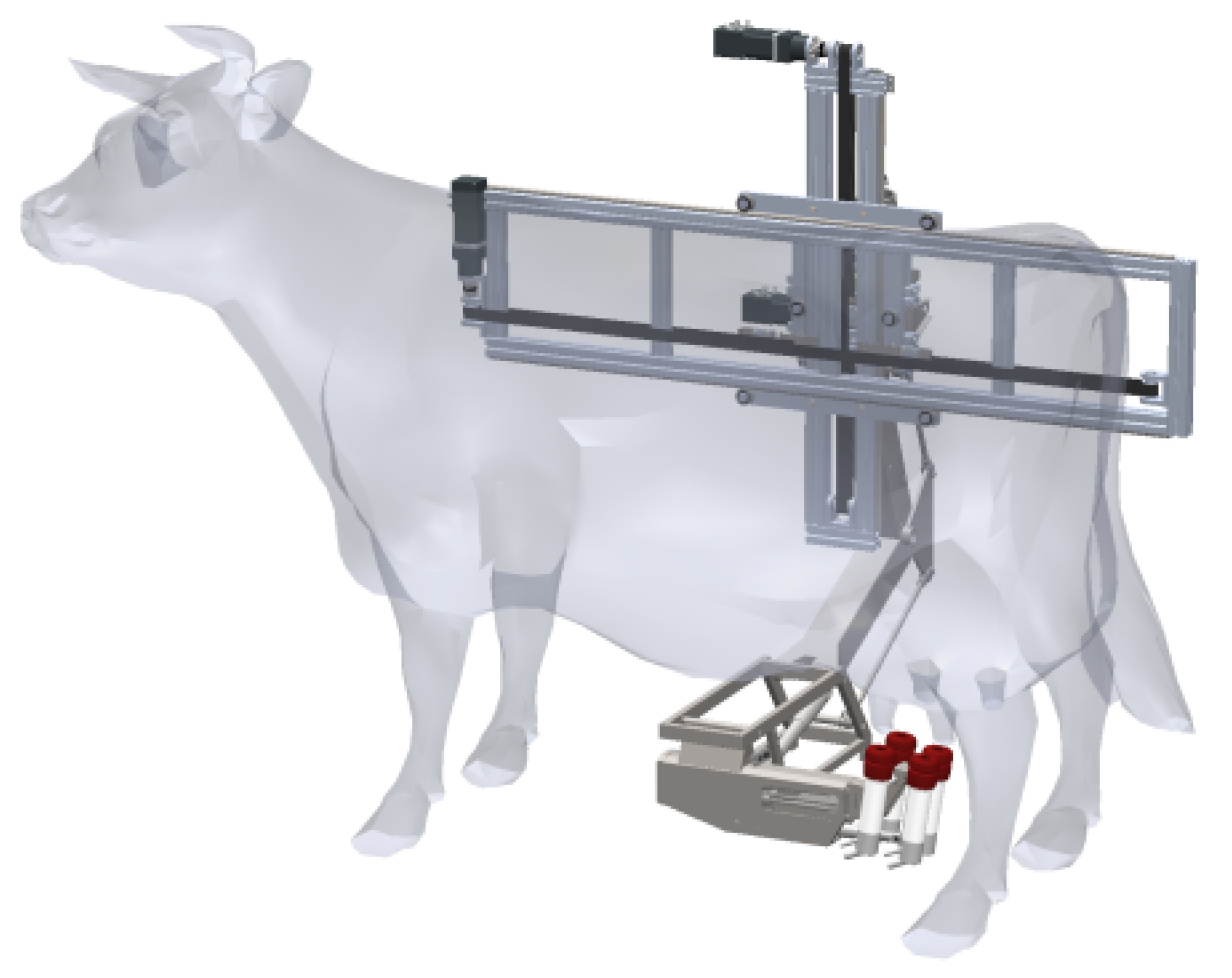

The vision system plays a paramount role in automatic milking. A novel milking robot [109] (Figure 21) equipped with RGB-D cameras and image segmentation algorithms was designed to achieve automatic milking. Although preliminary, the approach proved feasible in initial experiments; nonetheless, further engineering improvements and validation in real scenarios are still required. Akhloufi [110] developed a new 3D vision system that locates the milking cups precisely, improving the performance of milking robots. Pal et al. [111] conceived an intelligent vision system for milking that uses RGB-D imaging, thermal imaging, and time-of-flight (ToF) camera imaging; the intended application scenarios of this detection system include sheds and carousel parlors.

5.4. Egg Collecting Robots

Collecting eggs in large-scale poultry houses is dirty and monotonous work, and autonomous equipment can considerably improve this situation. As shown in Figure 22, Vroegindeweij et al. [112] developed and evaluated a mobile robot that can navigate autonomously, monitor the poultry, avoid obstacles, and collect eggs, pointing to a promising future for intelligent poultry houses.

6. A Comparative Review of Agricultural Robots Based on Several Criteria

In this section, a comparative review of agriculture robots is provided on the basis of several criteria, including the mode of locomotion (wheels, caterpillars, drones), the size of robots (small, medium, large), application scenarios (indoor, outdoor, or both), sensors employed (GPS, RGB, IR, LiDAR, etc.), autonomy (autonomous, semi-autonomous, manual), and the distinction between research prototypes and commercial products.
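The six criteria above map naturally onto a small record type, and summary tables such as Tables 1 to 6 then reduce to counts over a list of such records. The sketch below is one possible encoding, assuming each robot has exactly one value per criterion except sensors, which form a set; the class names, the `tally` helpers, and the three example entries are hypothetical placeholders, not data from this review.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable, List


class Locomotion(Enum):
    WHEELS = "wheels"
    CATERPILLAR = "caterpillar"
    DRONE = "drone"


class Size(Enum):
    SMALL = "small"
    MEDIUM = "medium"
    LARGE = "large"


class Scenario(Enum):
    INDOOR = "indoor"
    OUTDOOR = "outdoor"
    BOTH = "both"


class Autonomy(Enum):
    AUTONOMOUS = "autonomous"
    SEMI_AUTONOMOUS = "semi-autonomous"
    MANUAL = "manual"


@dataclass(frozen=True)
class RobotEntry:
    """One reviewed robot, classified by the six comparison criteria."""
    name: str
    locomotion: Locomotion
    size: Size
    scenario: Scenario
    sensors: FrozenSet[str]  # e.g. {"GPS", "RGB", "IR", "LiDAR"}
    autonomy: Autonomy
    commercial: bool         # False = research prototype


def tally(entries: Iterable[RobotEntry], criterion: str) -> Counter:
    """Count entries per value of a single-valued criterion (e.g. 'locomotion')."""
    return Counter(getattr(e, criterion) for e in entries)


def sensor_tally(entries: Iterable[RobotEntry]) -> Counter:
    """Count how many entries use each sensor (a robot may use several)."""
    return Counter(s for e in entries for s in e.sensors)


# Placeholder entries for illustration only; not the classifications in Tables 1-6.
EXAMPLE: List[RobotEntry] = [
    RobotEntry("robot-A", Locomotion.WHEELS, Size.SMALL, Scenario.INDOOR,
               frozenset({"RGB"}), Autonomy.AUTONOMOUS, False),
    RobotEntry("robot-B", Locomotion.WHEELS, Size.LARGE, Scenario.OUTDOOR,
               frozenset({"GPS", "LiDAR"}), Autonomy.AUTONOMOUS, True),
    RobotEntry("robot-C", Locomotion.DRONE, Size.SMALL, Scenario.OUTDOOR,
               frozenset({"RGB", "GPS"}), Autonomy.SEMI_AUTONOMOUS, False),
]
```

Sensors are modelled as a set rather than a single enum value because many robots combine several sensors, so sensor counts can exceed the number of robots.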

Our analysis shows that most agricultural robots are designed to move on wheels, whereas caterpillar tracks and drones are rarely used, as shown in Table 1.

Our review also shows that small robots have received the most attention from researchers (Table 2). For example, in [86], Pikulkaew Tangtisanon introduced a small gardening robot with a decision-making watering system. Nevertheless, larger robots have also been studied by many researchers.

Indoor agriculture has become a popular research trend in recent years, and indoor robots have consequently received a great deal of attention (Table 3). In [120], Marsela Polic et al. proposed a robotic system for indoor organic farming. A networked autonomous gardening system with applications in urban/indoor precision agriculture was described by Nikolaus Correll et al. in [121].

All robots perceive their environment through sensors, with RGB (red-green-blue) cameras, other vision sensors, and GNSS receivers being the most common (Table 4). Other sensors that allow robots to identify their surroundings include light detection and ranging (LiDAR) sensors, multispectral sensors, and infrared (IR) sensors.

Most of the agricultural robots described in this review are autonomous (Table 5). The broad use of fully autonomous agricultural robots reduces labour costs and greatly increases the efficiency of farm work. Semi-autonomous robots nevertheless remain an important category of agricultural robots. For instance, in [75], Ege Ozgul and Ugur Celik presented a semi-autonomous robot named “X-Bot” to improve efficiency and precision in agricultural tasks. Manually operated robots, by contrast, were rarely found in our review.

Last but not least, the distinction between research prototypes and commercial products is worth noting. The commercialization of agricultural robots is currently limited and needs further exploration (Table 6). Our review found many different research prototypes. For instance, in [33], Roman N. Panarin and Lubov A. Khvorova focused on developing software for robots intended for spot mechanical tillage. In addition, there has been considerable research on the use of commercial robotic products in agriculture [31, 43, 135].

7. Legislative Aspects of Agriculture Robots

Across the reviewed publications on agricultural robots, we have observed the rapid evolution of technologies and the potential economic benefits that result. However, widespread application is hampered by inconsistent and nonstandard legislation, a problem that stems from dynamic and complicated operating environments, the variety of objects being manipulated, and differences among countries [135, 136, 137]. This has led to considerable controversy [138]. To regulate agricultural robots and promote large-scale applications, efforts are being made to update existing laws [139]. Further work in this area is therefore encouraged, with the aim of safeguarding effective operation in all cases.

8. Discussion

This section summarizes and discusses recent advances in agricultural robots, as well as the challenges and current trends in related research. Agricultural robots are developing rapidly thanks to theoretical innovations and various cutting-edge technologies that underpin their multi-modal perception, decision-making, control, and execution abilities. These advances point to a promising future for precision agriculture and intelligent farming.

However, because the agricultural environment is complicated and dynamic, many agricultural robots, including the pesticide spraying robots, gardening robots, and strawberry picking robots described in this review [26, 67, 83], are either used only in small-scale applications or remain at the prototype stage. Moreover, other problems have barely been explored or remain at a nascent stage, such as energy consumption, GNSS accuracy, the cost of robot fabrication, and the maintenance of robots and robotic systems [25, 140, 141, 142, 143, 144]. For instance, the vision system is widely regarded as the “eyes” of an agricultural robot, and, as Khan et al. [113] noted, there is a considerable trade-off between the cost and the quality of cameras for agricultural use. Furthermore, agricultural land in most developing countries is usually farmed less intensively than the large-scale farmland of developed countries, and farmers may lack sufficient capital to purchase efficient agricultural robots. There is therefore a clear need for more affordable, high-quality agricultural robots.

Beyond autonomous machinery, advanced control systems, and socioeconomic factors, interaction with the agri-environment also deserves consideration. New approaches are required to enable more natural and user-friendly interaction in agricultural production. Breakthroughs have been made in human–robot interaction, including modelling, semantic action recognition, and risk-averse optimization approaches [145, 146, 147, 148, 149]. Nevertheless, further exploration is needed because the objects and environments involved are unstructured and uncontrollable. Moreover, based on the publications reviewed here, future efforts should be devoted to agronomics, sensors, and the realization of full automation.

9. Conclusions

This study has reviewed the current status and applications of agricultural robots by grouping them into three primary types: field robots, fruit and vegetable robots, and animal husbandry robots. About fourteen kinds of robots have been described in detail in terms of their features, functions, and applications. In addition, we have discussed the challenges accompanying the advancement of agricultural robots. We hope this review will help researchers identify future trends in the study of agricultural robots, which include but are not limited to human–robot interaction, agronomics, sensors, and the realization of full automation.

Author Contributions

Conceptualization, C.C., J.F., H.S. and L.R.; methodology, C.C.; software, C.C.; validation, C.C., J.F., H.S. and L.R.; formal analysis, C.C., J.F., H.S. and L.R.; investigation, C.C., J.F., H.S. and L.R.; resources, C.C., J.F., H.S. and L.R.; data curation, C.C., J.F., H.S. and L.R.; writing—original draft preparation, C.C., J.F., H.S. and L.R.; writing—review and editing, C.C., J.F., H.S. and L.R.; visualization, C.C., J.F., H.S. and L.R.; supervision, C.C., J.F., H.S. and L.R.; project administration, C.C., J.F., H.S. and L.R.; funding acquisition, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported in part by the National Natural Science Foundation of China under Grant No. 32101624, in part by the China Postdoctoral Science Foundation under Grant No. 2021M701393, and in part by the Shandong Provincial Natural Science Foundation under Grant No. ZR2021QE248.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank their lab members for their participation and for the valuable time they contributed to proofreading.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- The Latest State of Food Security and Nutrition Report Shows the World Is Moving Backwards in Efforts to Eliminate Hunger and Malnutrition. Available online: https://www.who.int/news/item/06-07-2022-un-report–global-hunger-numbers-rose-to-as-many-as-828-million-in-2021/ (accessed on 28 October 2022).

- Hoffmann, M.; Simanek, J. The merits of passive compliant joints in legged locomotion: Fast learning, superior energy efficiency and versatile sensing in a quadruped robot. J. Bionic Eng. 2017, 14, 1–14.

- Reddy, N.V.; Reddy, A.; Pranavadithya, S.; Kumar, J.J. A critical review on agricultural robots. Int. J. Mech. Eng. Technol. 2016, 7, 183–188.

- Shi, Y.; Chang, J.; Zhang, Q.; Liu, L.; Wang, Y.; Shi, Z. Gas Flow Measurement Method with Temperature Compensation for a Quasi-Isothermal Cavity. Machines 2022, 10, 178.

- Rovira-Más, F.; Saiz-Rubio, V.; Cuenca-Cuenca, A. Augmented perception for agricultural robots navigation. IEEE Sens. J. 2020, 21, 11712–11727.

- Alsalam, B.H.Y.; Morton, K.; Campbell, D.; Gonzalez, F. Autonomous UAV with vision based on-board decision making for remote sensing and precision agriculture. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12.

- Zhang, Z.; Kayacan, E.; Thompson, B.; Chowdhary, G. High precision control and deep learning-based corn stand counting algorithms for agricultural robot. Auton. Robot. 2020, 44, 1289–1302.

- Wang, G.; Yu, Y.; Feng, Q. Design of end-effector for tomato robotic harvesting. IFAC-PapersOnLine 2016, 49, 190–193.

- Shi, Y.; Cai, M.; Xu, W.; Wang, Y. Methods to evaluate and measure power of pneumatic system and their applications. Chin. J. Mech. Eng. 2019, 32, 1–11.

- Kayacan, E.; Zhang, Z.Z.; Chowdhary, G. Embedded High Precision Control and Corn Stand Counting Algorithms for an Ultra-Compact 3D Printed Field Robot. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 14, p. 9.

- Wang, Z.; Xun, Y.; Wang, Y.; Yang, Q. Review of smart robots for fruit and vegetable picking in agriculture. Int. J. Agric. Biol. Eng. 2022, 15, 33–54.

- Skvortsov, E.; Bykova, O.; Mymrin, V.; Skvortsova, E.; Neverova, O.; Nabokov, V.; Kosilov, V. Determination of the applicability of robotics in animal husbandry. Turk. Online J. Des. Art Commun. 2018, 8, 291–299.

- Sori, H.; Inoue, H.; Hatta, H.; Ando, Y. Effect for a paddy weeding robot in wet rice culture. J. Robot. Mechatronics 2018, 30, 198–205.

- Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Tona, E.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C.; et al. Selective spraying of grapevines for disease control using a modular agricultural robot. Biosyst. Eng. 2016, 146, 203–215.

- Pribadi, W.; Prasetyo, Y.; Juliando, D.E. Design of fish feeder robot based on arduino-android with fuzzy logic controller. Int. Res. J. Adv. Eng. Sci 2020, 5, 47–50.

- Geng, A.; Hu, X.; Liu, J.; Mei, Z.; Zhang, Z.; Yu, W. Development and Testing of Automatic Row Alignment System for Corn Harvesters. Appl. Sci. 2022, 12, 6221.

- Wagner, H.J.; Alvarez, M.; Kyjanek, O.; Bhiri, Z.; Buck, M.; Menges, A. Flexible and transportable robotic timber construction platform–TIM. Autom. Constr. 2020, 120, 103400.

- Raj, R.; Aravind, A.; Akshay, V.; Chandy, M.; Sharun, N. A seed planting robot with two control variables. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; pp. 1025–1028.

- Katzschmann, R.K.; DelPreto, J.; MacCurdy, R.; Rus, D. Exploration of underwater life with an acoustically controlled soft robotic fish. Sci. Robot. 2018, 3, eaar3449.

- Hespeler, S.C.; Nemati, H.; Dehghan-Niri, E. Non-destructive thermal imaging for object detection via advanced deep learning for robotic inspection and harvesting of chili peppers. Artif. Intell. Agric. 2021, 5, 102–117.

- Guevara, D.J.; Gené-Mola, J.; Gregorio, E.; Torres-Torriti, M.; Reina, G.; Cheein, F.A. Comparison of 3D scan matching techniques for autonomous robot navigation in urban and agricultural environments. J. Appl. Remote Sens. 2021, 15, 24508.

- Guevara, L.; Michałek, M.M.; Cheein, F.A. Collision risk reduction of N-trailer agricultural machinery by off-track minimization. Comput. Electron. Agric. 2020, 178, 105757.

- Auat Cheein, F.; Torres-Torriti, M.; Hopfenblatt, N.B.; Prado, Á.J.; Calabi, D. Agricultural service unit motion planning under harvesting scheduling and terrain constraints. J. Field Robot. 2017, 34, 1531–1542.

- Prado, Á.J.; Torres-Torriti, M.; Yuz, J.; Cheein, F.A. Tube-based nonlinear model predictive control for autonomous skid-steer mobile robots with tire–terrain interactions. Control Eng. Pract. 2020, 101, 104451.

- Guevara, J.; Cheein, F.A.A.; Gené-Mola, J.; Rosell-Polo, J.R.; Gregorio, E. Analyzing and overcoming the effects of GNSS error on LiDAR based orchard parameters estimation. Comput. Electron. Agric. 2020, 170, 105255.

- De Preter, A.; Anthonis, J.; De Baerdemaeker, J. Development of a robot for harvesting strawberries. IFAC-PapersOnLine 2018, 51, 14–19.

- Williams, H.A.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

- Kumar, P.; Ashok, G. Design and fabrication of smart seed sowing robot. Mater. Today Proc. 2021, 39, 354–358.

- Viegas, C.; Chehreh, B.; Andrade, J.; Lourenço, J. Tethered UAV with combined multi-rotor and water jet propulsion for forest fire fighting. J. Intell. Robot. Syst. 2022, 104, 1–13.

- Cantelli, L.; Bonaccorso, F.; Longo, D.; Melita, C.D.; Schillaci, G.; Muscato, G. A small versatile electrical robot for autonomous spraying in agriculture. AgriEngineering 2019, 1, 391–402.

- Lowenberg-DeBoer, J.; Huang, I.Y.; Grigoriadis, V.; Blackmore, S. Economics of robots and automation in field crop production. Precis. Agric. 2020, 21, 278–299.

- Tamaki, K.; Nagasaka, Y.; Nishiwaki, K.; Saito, M.; Kikuchi, Y.; Motobayashi, K. A robot system for paddy field farming in Japan. IFAC Proc. Vol. 2013, 46, 143–147.

- Panarin, R.N.; Khvorova, L.A. Software Development for Agricultural Tillage Robot Based on Technologies of Machine Intelligence. In Proceedings of the International Conference on High-Performance Computing Systems and Technologies in Scientific Research, Automation of Control and Production, Barnaul, Russia, 15–16 May 2020; Springer: Cham, Switzerland, 2021; pp. 354–367.

- Backman, J.; Linkolehto, R.; Lemsalu, M.; Kaivosoja, J. Building a Robot Tractor Using Commercial Components and Widely Used Standards. IFAC-PapersOnLine 2022, 55, 6–11.

- Jeon, C.W.; Kim, H.J.; Yun, C.; Han, X.; Kim, J.H. Design and validation testing of a complete paddy field-coverage path planner for a fully autonomous tillage tractor. Biosyst. Eng. 2021, 208, 79–97.

- Heidrich, J.; Gaulke, M.; Golling, M.; Alaydin, B.O.; Barh, A.; Keller, U. 324-fs Pulses From a SESAM Modelocked Backside-Cooled 2-μm VECSEL. IEEE Photonics Technol. Lett. 2022, 34, 337–340.

- Lin, H.; Dong, S.; Liu, Z.; Yi, C. Study and experiment on a wheat precision seeding robot. J. Robot. 2015, 2015.

- Bhimanpallewar, R.N.; Narasingarao, M.R. AgriRobot: Implementation and evaluation of an automatic robot for seeding and fertiliser microdosing in precision agriculture. Int. J. Agric. Resour. Gov. Ecol. 2020, 16, 33–50.

- Meshram, A.T.; Vanalkar, A.V.; Kalambe, K.B.; Badar, A.M. Pesticide spraying robot for precision agriculture: A categorical literature review and future trends. J. Field Robot. 2022, 39, 153–171.

- Deshmukh, D.; Pratihar, D.K.; Deb, A.K.; Ray, H.; Bhattacharyya, N. Design and Development of Intelligent Pesticide Spraying System for Agricultural Robot. In Proceedings of the International Conference on Hybrid Intelligent Systems, online, 14–16 December 2020; Springer: Cham, Switzerland, 2020; pp. 157–170.

- Shang, Y.; Hasan, M.K.; Ahammed, G.J.; Li, M.; Yin, H.; Zhou, J. Applications of nanotechnology in plant growth and crop protection: A review. Molecules 2019, 24, 2558. [Google Scholar] [CrossRef]

- Cheng, R.P.; Tischler, M.B.; Schulein, G.J. R-MAX Helicopter State-Space Model Identification for Hover and Forward-Flight. J. Am. Helicopter Soc. 2006, 51, 202–210. [Google Scholar] [CrossRef]

- Ghafar, A.S.A.; Hajjaj, S.S.H.; Gsangaya, K.R.; Sultan, M.T.H.; Mail, M.F.; Hua, L.S. Design and development of a robot for spraying fertilizers and pesticides for agriculture. Mater. Today Proc. 2021. [Google Scholar] [CrossRef]

- Iost Filho, F.H.; Heldens, W.B.; Kong, Z.; de Lange, E.S. Drones: Innovative technology for use in precision pest management. J. Econ. Entomol. 2020, 113, 1–25. [Google Scholar] [CrossRef]

- Terra, F.P.; Nascimento, G.H.d.; Duarte, G.A.; Drews, P.L., Jr. Autonomous agricultural sprayer using machine vision and nozzle control. J. Intell. Robot. Syst. 2021, 102, 1–18. [Google Scholar] [CrossRef]

- Martini, N.P.D.A.; Tamami, N.; Alasiry, A.H. Design and Development of Automatic Plant Robots with Scheduling System. In Proceedings of the 2020 International Electronics Symposium (IES), Surabaya, Indonesia, 29–30 September 2020; pp. 302–307. [Google Scholar]

- Srivastava, A.; Vijay, S.; Negi, A.; Shrivastava, P.; Singh, A. DTMF based intelligent farming robotic vehicle: An ease to farmers. In Proceedings of the 2014 International Conference on Embedded Systems (ICES), Coimbatore, India, 3–5 July 2014; pp. 206–210. [Google Scholar]

- An, Z.; Wang, C.; Raj, B.; Eswaran, S.; Raffik, R.; Debnath, S.; Rahin, S.A. Application of New Technology of Intelligent Robot Plant Protection in Ecological Agriculture. J. Food Qual. 2022, 2022, 1257015. [Google Scholar] [CrossRef]

- Jiayi, M.; Bugong, S. The Exploration of the trajectory planning of plant protection robot for small planting crops in western mountainous areas. In Proceedings of the 2018 IEEE International Conference of Intelligent Robotic and Control Engineering (IRCE), Lanzhou, China, 24–27 August 2018; pp. 42–45. [Google Scholar]

- do Nascimento, G.H.; Weber, F.; Almeida, G.; Terra, F.; Drews, P.L.J. A perception system for an autonomous pesticide boom sprayer. In Proceedings of the 2019 Latin American Robotics Symposium (LARS), 2019 Brazilian Symposium on Robotics (SBR) and 2019 Workshop on Robotics in Education (WRE), Rio Grande, Brazil, 23–25 October 2019; pp. 86–91. [Google Scholar]

- Bayati, M.; Fotouhi, R. A mobile robotic platform for crop monitoring. Adv. Robot. Autom. 2018, 7, 1000186. [Google Scholar] [CrossRef]

- Cubero, S.; Marco-Noales, E.; Aleixos, N.; Barbé, S.; Blasco, J. Robhortic: A field robot to detect pests and diseases in horticultural crops by proximal sensing. Agriculture 2020, 10, 276. [Google Scholar] [CrossRef]

- Iqbal, J.; Xu, R.; Sun, S.; Li, C. Simulation of an autonomous mobile robot for LiDAR-based in-field phenotyping and navigation. Robotics 2020, 9, 46. [Google Scholar] [CrossRef]

- Gu, Y.; Li, Z.; Zhang, Z.; Li, J.; Chen, L. Path tracking control of field information-collecting robot based on improved convolutional neural network algorithm. Sensors 2020, 20, 797. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Qiao, H. Motor-cortex-like recurrent neural network and multi-tasks learning for the control of musculoskeletal systems. IEEE Trans. Cogn. Dev. Syst. 2020, 14, 424–436. [Google Scholar] [CrossRef]

- Qi, W.; Liu, X.; Zhang, L.; Wu, L.; Zang, W.; Su, H. Adaptive sensor fusion labeling framework for hand pose recognition in robot teleoperation. Assem. Autom. 2021, 41, 393–400. [Google Scholar] [CrossRef]

- Qi, W.; Su, H.; Aliverti, A. A smartphone-based adaptive recognition and real-time monitoring system for human activities. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 414–423. [Google Scholar] [CrossRef]

- Li, Y.; Iida, M.; Suyama, T.; Suguri, M.; Masuda, R. Implementation of deep-learning algorithm for obstacle detection and collision avoidance for robotic harvester. Comput. Electron. Agric. 2020, 174, 105499. [Google Scholar] [CrossRef]

- Li, B.; Yang, Y.; Qin, C.; Bai, X.; Wang, L. Improved random sampling consensus algorithm for vision navigation of intelligent harvester robot. Ind. Robot. Int. J. Robot. Res. Appl. 2020, 47, 881–887. [Google Scholar] [CrossRef]

- Pooranam, N.; Vignesh, T. A Swarm Robot for Harvesting a Paddy Field. In Nature-Inspired Algorithms Applications; Wiley: Hoboken, NJ, USA, 2021; pp. 137–156. [Google Scholar]

- Wang, L.; Liu, M. Path tracking control for autonomous harvesting robots based on improved double arc path planning algorithm. J. Intell. Robot. Syst. 2020, 100, 899–909. [Google Scholar] [CrossRef]

- Jin, X.; Chen, K.; Zhao, Y.; Ji, J.; Jing, P. Simulation of hydraulic transplanting robot control system based on fuzzy PID controller. Measurement 2020, 164, 108023. [Google Scholar] [CrossRef]

- Yang, Q.; Jia, C.; Sun, M.; Zhao, X.; He, M.; Mao, H.; Hu, J.; Addy, M. Trajectory planning and dynamics analysis of greenhouse parallel transplanting robot. Int. Agric. Eng. J. 2020, 29, 64–76. [Google Scholar]

- Han, L.; Mao, H.; Kumi, F.; Hu, J. Development of a multi-task robotic transplanting workcell for greenhouse seedlings. Appl. Eng. Agric. 2018, 34, 335–342. [Google Scholar] [CrossRef]

- Liu, Z.; Lv, Z.; Zheng, W.; Wang, X. Trajectory Control of Two-Degree-of-Freedom Sweet Potato Transplanting Robot Arm. IEEE Access 2022, 10, 26294–26306. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, P.; Dai, G.; Yan, J.; Yang, Z. Tomato Fruit Maturity Detection Method Based on YOLOV4 and Statistical Color Model. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Jiaxing, China, 27–31 July 2021; pp. 904–908. [Google Scholar]

- Iida, K.; Nakamura, S. Mobile Robot for Environmental Measurement in Greenhouse. J. Inst. Ind. Appl. Eng. Vol 2020, 8, 33–38. [Google Scholar] [CrossRef][Green Version]

- Wang, L.; Jia, L.; Wang, J.; Zhou, Q. The early-warning and inspection system for intelligent greenhouse based on internet of things. J. Phys. Conf. Ser. 2021, 1757, 12151. [Google Scholar] [CrossRef]

- Martin, J.; Ansuategi, A.; Maurtua, I.; Gutierrez, A.; Obregón, D.; Casquero, O.; Marcos, M. A Generic ROS-Based Control Architecture for Pest Inspection and Treatment in Greenhouses Using a Mobile Manipulator. IEEE Access 2021, 9, 94981–94995. [Google Scholar] [CrossRef]

- Bhat, A.G. Arecanut tree-climbing and pesticide spraying robot using servo controlled nozzle. In Proceedings of the 2019 Global Conference for Advancement in Technology (GCAT), Bangalore, India, 18–20 October 2019; pp. 1–4. [Google Scholar]

- Kassim, A.; Termezai, M.; Jaya, A.; Azahar, A.; Sivarao, S.; Jafar, F.; Jaafar, H.; Aras, M. Design and development of autonomous pesticide sprayer robot for fertigation farm. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 545–551. [Google Scholar] [CrossRef]

- Seol, J.; Kim, J.; Son, H.I. Field evaluations of a deep learning-based intelligent spraying robot with flow control for pear orchards. Precis. Agric. 2022, 23, 712–732. [Google Scholar] [CrossRef]

- Tewari, V.; Chandel, A.K.; Nare, B.; Kumar, S. Sonar sensing predicated automatic spraying technology for orchards. Curr. Sci. 2018, 115, 1115–1123. [Google Scholar] [CrossRef]

- Kim, J.; Seol, J.; Lee, S.; Hong, S.W.; Son, H.I. An intelligent spraying system with deep learning-based semantic segmentation of fruit trees in orchards. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3923–3929. [Google Scholar]

- Ozgul, E.; Celik, U. Design and implementation of semi-autonomous anti-pesticide spraying and insect repellent mobile robot for agricultural applications. In Proceedings of the 2018 5th International Conference on Electrical and Electronic Engineering (ICEEE), Istanbul, Turkey, 3–5 May 2018; pp. 233–237. [Google Scholar]

- Mane, S.S.; Pawar, N.N.; Patil, S.A.; Shirsath, D. Automatic farmer friendly pesticide spraying robot with camera surveillance system. Int. Res. J. Eng. Technol. (IRJET) 2020, 7, 5347–5349. [Google Scholar]

- Qi, W.; Su, H. A cybertwin based multimodal network for ecg patterns monitoring using deep learning. IEEE Trans. Ind. Informatics 2022, 18, 6663–6670. [Google Scholar] [CrossRef]

- Su, H.; Zhang, J.; Fu, J.; Ovur, S.E.; Qi, W.; Li, G.; Hu, Y.; Li, Z. Sensor fusion-based anthropomorphic control of under-actuated bionic hand in dynamic environment. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 2722–2727. [Google Scholar]

- Huang, J.; Li, G.; Su, H.; Li, Z. Development and continuous control of an intelligent upper-limb neuroprosthesis for reach and grasp motions using biological signals. IEEE Trans. Syst. Man, Cybern. Syst. 2021, 52, 3431–3441. [Google Scholar] [CrossRef]

- Chen, J.; Qiao, H. Muscle-synergies-based neuromuscular control for motion learning and generalization of a musculoskeletal system. IEEE Trans. Syst. Man, Cybern. Syst. 2020, 51, 3993–4006. [Google Scholar] [CrossRef]

- Su, H.; Qi, W.; Li, Z.; Chen, Z.; Ferrigno, G.; De Momi, E. Deep neural network approach in EMG-based force estimation for human–robot interaction. IEEE Trans. Artif. Intell. 2021, 2, 404–412. [Google Scholar] [CrossRef]

- Qi, W.; Su, H.; Chen, F.; Zhou, X.; Shi, Y.; Ferrigno, G.; De Momi, E. Depth Vision Guided Human Activity Recognition in Surgical Procedure using Wearable Multisensor. In Proceedings of the 2020 5th International Conference on Advanced Robotics and Mechatronics (ICARM), Shenzhen, China, 18–21 December 2020; pp. 431–436. [Google Scholar]

- Strisciuglio, N.; Tylecek, R.; Blaich, M.; Petkov, N.; Biber, P.; Hemming, J.; van Henten, E.; Sattler, T.; Pollefeys, M.; Gevers, T.; et al. Trimbot2020: An outdoor robot for automatic gardening. In Proceedings of the ISR 2018; 50th International Symposium on Robotics, Munich, Germany, 20–21 June 2018; pp. 1–6. [Google Scholar]

- Lamsen, F.C.; Favi, J.C.; Castillo, B.H.F. Indoor Gardening with Automatic Irrigation System using Arduino Microcontroller. ASEAN Multidiscip. Res. J. 2022, 10, 131–148. [Google Scholar]

- Zhang, B.; Chen, X.; Zhang, H.; Shen, C.; Fu, W. Design and Performance Test of a Jujube Pruning Manipulator. Agriculture 2022, 12, 552. [Google Scholar] [CrossRef]

- Tangtisanon, P. Small gardening robot with decision-making watering system. Sens. Mater. 2019, 31, 1905–1916. [Google Scholar] [CrossRef]

- Islam, A.; Saha, P.; Rana, M.; Adnan, M.M.; Pathik, B.B. Smart gardening assistance system with the capability of detecting leaf disease in MATLAB. In Proceedings of the 2019 IEEE Pune Section International Conference (PuneCon), Pune, India, 18–20 December 2019; pp. 1–6. [Google Scholar]

- Cheung, C.S. The Automated Gardening System with an Artificial Intelligent Classifier to Detect Growth Stages of Lettuce. Ph.D. Thesis, City University of Hong Kong, Hong Kong, China, 2020. [Google Scholar]

- Villacrés, J.F.; Auat Cheein, F. Detection and characterization of cherries: A deep learning usability case study in Chile. Agronomy 2020, 10, 835. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Cheein, F.A. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348. [Google Scholar] [CrossRef]

- Gené-Mola, J.; Gregorio, E.; Cheein, F.A.; Guevara, J.; Llorens, J.; Sanz-Cortiella, R.; Escolà, A.; Rosell-Polo, J.R. Fruit detection, yield prediction and canopy geometric characterization using LiDAR with forced air flow. Comput. Electron. Agric. 2020, 168, 105121. [Google Scholar] [CrossRef]

- Gené-Mola, J.; Gregorio, E.; Guevara, J.; Auat, F.; Sanz-Cortiella, R.; Escolà, A.; Llorens, J.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; et al. Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosyst. Eng. 2019, 187, 171–184. [Google Scholar] [CrossRef]

- Fountas, S.; Mylonas, N.; Malounas, I.; Rodias, E.; Hellmann Santos, C.; Pekkeriet, E. Agricultural robotics for field operations. Sensors 2020, 20, 2672. [Google Scholar] [CrossRef]

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Using YOLOv3 algorithm with pre- and post-processing for apple detection in fruit-harvesting robot. Agronomy 2020, 10, 1016.

- Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of fruit-bearing branches and localization of litchi clusters for vision-based harvesting robots. IEEE Access 2020, 8, 117746–117758.

- Lehnert, C.; English, A.; McCool, C.; Tow, A.W.; Perez, T. Autonomous sweet pepper harvesting for protected cropping systems. IEEE Robot. Autom. Lett. 2017, 2, 872–879.

- Peng, Y.; Liu, Y.; Yang, Y.; Yang, Y.; Liu, N.; Sun, Y. Research progress on application of soft robotic gripper in fruit and vegetable picking. Trans. Chin. Soc. Agric. Eng. 2018, 34, 11–20.

- Feng, Q.C.; Wang, X. Design of disinfection robot for livestock breeding. Procedia Comput. Sci. 2020, 166, 310–314.

- Feng, Q.; Wang, B.; Zhang, W.; Li, X. Development and Test of Spraying Robot for Anti-epidemic and Disinfection in Animal Housing. In Proceedings of the 2021 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 19 August 2021; pp. 24–29.

- Li, H.; Li, M.; Li, J.; Zhan, K.; Liu, X. The Environment Intelligent Monitoring and Analysis for Enclosed Layer House with Four Overlap Tiers Cages in Winter. In Proceedings of the International Conference on Computer and Computing Technologies in Agriculture; Springer: Berlin/Heidelberg, Germany, 2017; pp. 292–300.

- Li, W.; Cao, Y.; Cui, J.; Li, D. Design of Automatic Breeding System for Chickens under the Forest. In Proceedings of the 8th International Conference on Management and Computer Science (ICMCS 2018), Shenyang, China, 10–12 August 2018; Atlantis Press: Dordrecht, The Netherlands, 2018; pp. 657–661.

- Peng, L.; Jiang, Z. Intelligent automatic pig feeding system based on PLC. Rev. Científica Fac. Cienc. Vet. 2020, 30, 2479–2490.

- Karn, P.; Sitikhu, P.; Somai, N. Automatic cattle feeding system. In Proceedings of the 2nd International Conference on Engineering and Technology, KEC Conference 2019, Lalitpur, Nepal, 26 September 2019.

- Rumba, R.; Nikitenko, A. Development of free-flowing pile pushing algorithm for autonomous mobile feed-pushing robots in cattle farms. Eng. Rural. Dev. 2018, 958–963.

- Pavkin, D.Y.; Shilin, D.V.; Nikitin, E.A.; Kiryushin, I.A. Designing and Simulating the Control Process of a Feed Pusher Robot Used on a Dairy Farm. Appl. Sci. 2021, 11, 10665.

- Tian, F.; Wang, X.; Yu, S.; Wang, R.; Song, Z.; Yan, Y.; Li, F.; Wang, Z.; Yu, Z. Research on Navigation Path Extraction and Obstacle Avoidance Strategy for Pusher Robot in Dairy Farm. Agriculture 2022, 12, 1008.

- Sitkowska, B.; Piwczyński, D.; Aerts, J.; Waśkowicz, M. Changes in milking parameters with robotic milking. Arch. Anim. Breed. 2015, 58, 137–143.

- Iweka, P.; Kawamura, S.; Mitani, T.; Koseki, S. Non-destructive online real-time milk quality determination in a milking robot using near-infrared spectroscopic sensing system. Arid Zone J. Eng. Technol. Environ. 2018, 14, 121–128.

- Borla, N.; Kuster, F.; Langenegger, J.; Ribera, J.; Honegger, M.; Toffetti, G. Teat Pose Estimation via RGBD Segmentation for Automated Milking. arXiv 2021, arXiv:2105.09843.

- Akhloufi, M. 3D vision system for intelligent milking robot automation. In Proceedings of the Intelligent Robots and Computer Vision XXXI: Algorithms and Techniques, Burlingame, CA, USA, 23–24 January 2012; SPIE: Bellingham, WA, USA, 2014; Volume 9025, pp. 168–177.

- Pal, A.; Rastogi, A.; Myongseok, S.; Ryuh, B.S. Algorithm design for teat detection system methodology using TOF, RGBD and thermal imaging in next generation milking robot system. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Republic of Korea, 28 June–1 July 2017; pp. 895–896.

- Vroegindeweij, B.A.; Blaauw, S.K.; IJsselmuiden, J.M.; van Henten, E.J. Evaluation of the performance of PoultryBot, an autonomous mobile robotic platform for poultry houses. Biosyst. Eng. 2018, 174, 295–315.

- Khan, N.; Medlock, G.; Graves, S.; Anwar, S. GPS Guided Autonomous Navigation of a Small Agricultural Robot with Automated Fertilizing System; Technical Report, SAE Technical Paper; SAE: Warrendale, PA, USA, 2018.

- Hayashi, S.; Ganno, K.; Ishii, Y.; Tanaka, I. Robotic harvesting system for eggplants. Jpn. Agric. Res. Q. JARQ 2002, 36, 163–168.

- Onosato, M.; Tadokoro, S.; Nakanishi, H.; Nonami, K.; Kawabata, K.; Hada, Y.; Asama, H.; Takemura, F.; Maeda, K.; Miura, K.; et al. Disaster information gathering aerial robot systems. In Rescue Robotics; Springer: Cham, Switzerland, 2009; pp. 33–55.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations. Part 2: Operations and systems. Biosyst. Eng. 2017, 153, 110–128.

- Chetan Kumar S, T.; Nandeesh, P.; Naveen, M.; Vineet K, G. Multi Purpose Agricultural Robot. Ph.D. Thesis, Visvesvaraya Technological University, Karnataka, India, 2015.

- Miermeister, P.; Lächele, M.; Boss, R.; Masone, C.; Schenk, C.; Tesch, J.; Kerger, M.; Teufel, H.; Pott, A.; Bülthoff, H.H. The cablerobot simulator large scale motion platform based on cable robot technology. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 3024–3029.

- Chesser, P.C.; Post, B.K.; Roschli, A.C.; Lind, R.F.; Boulger, A.M.; Love, L.J.; Gaul, K.T. Fieldable Platform for Large-Scale Deposition of Concrete Structures; Technical Report; Oak Ridge National Lab. (ORNL): Oak Ridge, TN, USA, 2018.

- Polic, M.; Ivanovic, A.; Maric, B.; Arbanas, B.; Tabak, J.; Orsag, M. Structured ecological cultivation with autonomous robots in indoor agriculture. In Proceedings of the 2021 16th International Conference on Telecommunications (ConTEL), Zagreb, Croatia, 30 June–2 July 2021; pp. 189–195.

- Correll, N.; Arechiga, N.; Bolger, A.; Bollini, M.; Charrow, B.; Clayton, A.; Dominguez, F.; Donahue, K.; Dyar, S.; Johnson, L.; et al. Indoor robot gardening: Design and implementation. Intell. Serv. Robot. 2010, 3, 219–232.

- Ivanovic, A.; Polic, M.; Tabak, J.; Orsag, M. Render-in-the-loop aerial robotics simulator: Case Study on Yield Estimation in Indoor Agriculture. arXiv 2022, arXiv:2203.00490.

- Conceição, T.; Neves dos Santos, F.; Costa, P.; Moreira, A.P. Robot localization system in a hard outdoor environment. In Proceedings of the Iberian Robotics Conference, Sevilla, Spain, 22–24 November 2017; Springer: Cham, Switzerland, 2018; pp. 215–227.

- Dong, F.; Petzold, O.; Heinemann, W.; Kasper, R. Time-optimal guidance control for an agricultural robot with orientation constraints. Comput. Electron. Agric. 2013, 99, 124–131.

- Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-time fruit recognition and grasping estimation for robotic apple harvesting. Sensors 2020, 20, 5670.

- Weiss, U.; Biber, P. Plant detection and mapping for agricultural robots using a 3D LIDAR sensor. Robot. Auton. Syst. 2011, 59, 265–273.

- Hu, Y.; Wang, L.; Xiang, L.; Wu, Q.; Jiang, H. Automatic non-destructive growth measurement of leafy vegetables based on kinect. Sensors 2018, 18, 806.

- Wei, X.; Jia, K.; Lan, J.; Li, Y.; Zeng, Y.; Wang, C. Automatic method of fruit object extraction under complex agricultural background for vision system of fruit picking robot. Optik 2014, 125, 5684–5689.

- Yang, C.; Everitt, J.; Bradford, J.; Murden, D. Comparison of airborne multispectral and hyperspectral imagery for estimating grain sorghum yield. Trans. ASABE 2009, 52, 641–649.

- Sa, I.; Popović, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A large-scale semantic weed mapping framework using aerial multispectral imaging and deep neural network for precision farming. Remote Sens. 2018, 10, 1423.

- Adamides, G.; Katsanos, C.; Constantinou, I.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. Design and development of a semi-autonomous agricultural vineyard sprayer: Human–robot interaction aspects. J. Field Robot. 2017, 34, 1407–1426.

- Adamides, G. User interfaces for human-robot interaction: Application on a semi-autonomous agricultural robot sprayer. Ph.D. Thesis, Open University of Cyprus, Latsia, Cyprus, 2016.

- Lytridis, C.; Kaburlasos, V.G.; Pachidis, T.; Manios, M.; Vrochidou, E.; Kalampokas, T.; Chatzistamatis, S. An Overview of Cooperative Robotics in Agriculture. Agronomy 2021, 11, 1818.

- Benos, L.; Bechar, A.; Bochtis, D. Safety and ergonomics in human-robot interactive agricultural operations. Biosyst. Eng. 2020, 200, 55–72.

- McCracken, C. Old MacDonald Had a Trust: How Market Consolidation in the Agricultural Industry, Spurred on by a Lack of Antitrust Law Enforcement, Is Destroying Small Agricultural Producers. Wm. Mary Bus. L. Rev. 2021, 13, 575.

- Rahmadian, R.; Widyartono, M. Autonomous Robotic in Agriculture: A Review. In Proceedings of the 2020 Third International Conference on Vocational Education and Electrical Engineering (ICVEE), Surabaya, Indonesia, 3–4 October 2020; pp. 1–6.

- Poonguzhali, S.; Gomathi, T. Design and implementation of ploughing and seeding of agriculture robot using IOT. In Soft Computing Techniques and Applications; Springer: Cham, Switzerland, 2021; pp. 643–650.

- Benos, L.; Sørensen, C.G.; Bochtis, D. Field Deployment of Robotic Systems for Agriculture in Light of Key Safety, Labor, Ethics and Legislation Issues. Curr. Robot. Rep. 2022, 3, 49–56.

- Wisse, M.; Chiang, T.C.; van der Hoorn, G. D1.14: Best Practices in Developing Open Platforms for Agri-Food Robotics–Updated Final Version. 2020. Available online: https://agrobofood.eu/wp-content/uploads/2022/06/D1.14-Best-Practices-in-developing-open-platforms-updated-final-version_PU.pdf (accessed on 28 October 2022).

- Villacrés, J.; Cheein, F.A. In-field piecewise regression based prognosis of the IPC in electrically powered agricultural machinery. Comput. Electron. Agric. 2022, 202, 107324.

- Schmidt, J.R.; Cheein, F.A. Prognosis of the energy and instantaneous power consumption in electric vehicles enhanced by visual terrain classification. Comput. Electr. Eng. 2019, 78, 120–131.

- Schmidt, J.R.; Cheein, F.A. Assessment of power consumption of electric machinery in agricultural tasks for enhancing the route planning problem. Comput. Electron. Agric. 2019, 163, 104868.

- Pablo Vasconez, J.; Carvajal, D.; Auat Cheein, F. On the design of a human-robot interaction strategy for commercial vehicle driving based on human cognitive parameters. Adv. Mech. Eng. 2019, 11, 168781401986271.

- Cheein, F.A.; Torres-Torriti, M.; Rosell-Polo, J.R. Usability analysis of scan matching techniques for localization of field machinery in avocado groves. Comput. Electron. Agric. 2019, 162, 941–950.

- Seyyedhasani, H.; Peng, C.; Jang, W.j.; Vougioukas, S.G. Collaboration of human pickers and crop-transporting robots during harvesting–Part I: Model and simulator development. Comput. Electron. Agric. 2020, 172, 105324.

- Guevara, L.; Rocha, R.P.; Cheein, F.A. Improving the manual harvesting operation efficiency by coordinating a fleet of N-trailer vehicles. Comput. Electron. Agric. 2021, 185, 106103.

- Vasconez, J.P.; Admoni, H.; Cheein, F.A. A methodology for semantic action recognition based on pose and human-object interaction in avocado harvesting processes. Comput. Electron. Agric. 2021, 184, 106057.

- Vásconez, J.P.; Cheein, F.A.A. Workload and production assessment in the avocado harvesting process using human-robot collaborative strategies. Biosyst. Eng. 2022, 223, 56–77.

- Rysz, M.; Mehta, S.S. A risk-averse optimization approach to human-robot collaboration in robotic fruit harvesting. Comput. Electron. Agric. 2021, 182, 106018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).