1. Introduction

Defect detection is an important part of a product’s quality control [1,2]. Manufacturers are paying increasing attention to the surface quality of their products and are imposing more stringent requirements. The purpose of surface defect detection is to find defects on product surfaces that affect the function or the aesthetics of the product. Defects reduce product yield, which lowers production efficiency and wastes raw materials. In addition, surface defects affect users’ first impression of a product. Defects in critical facilities, such as vehicles, buildings, and precision equipment, may lead to severe economic losses and casualties. Good product quality is an essential prerequisite for manufacturers to achieve a high market share. Many enterprises still rely on human inspectors for product surface quality inspection. Manual inspection not only places an economic burden on enterprises, but also cannot guarantee efficiency or accuracy, and the heavy workload can harm workers’ health. Therefore, it is of great significance to develop a scheme that can replace manual inspection and perform defect inspection automatically and intelligently [3].

With the rapid development of information technology, machine vision has been applied in many industrial production and inspection fields, including those of fabrics [4], glass [5], multicrystalline solar wafers [6], and steel [7]. Researchers have proposed detection methods from various perspectives. Song, K. et al. proposed a feature descriptor template named AECLBPs in a steel surface inspection system [7]. K. L. Mak proposed a novel defect detection scheme based on morphological filters for automated defect detection in woven fabrics [8]. X. Kang et al. proposed a universal and adaptive fabric defect detection algorithm based on sparse dictionary learning [9]. Lucia, B. et al. proposed a feature extraction phase using Gabor filters and PCA for texture defect feature detection [10]. These defect feature extractors were designed by hand, and researchers selected them according to their experience. As a result, defect detection performance depends heavily on manually defined defect feature representations, and changes in the morphology of defects lead to a reduction in recognition performance [11].

In the last decade, due to the rapid development of the Internet, big data, and computing hardware, convolutional neural networks (CNNs) have become a new trend for machine-vision-related tasks [12]. Thanks to modern computing power, CNNs can be trained on large amounts of data. A CNN removes the need for hand-designed feature extractors and automatically learns a large number of useful detailed and semantic features, so a network model can obtain good generalization performance. CNNs have been applied to many research fields, such as object detection [13,14], human posture recognition [15], super-resolution [16], and autonomous driving [17].

These findings have inspired researchers to apply CNNs to vision-based industrial inspection tasks. CNN-based defect inspection tasks can be divided into two categories: defect classification and defect localization.

(1) Defect classification approaches: Yi Li et al. proposed an end-to-end surface defect recognition system that was constructed by using the symmetric surround saliency map and a CNN [18]. Fu et al. proposed a defect classification CNN model, which emphasized the training of low-level features and incorporated multiple receptive fields to achieve fast and accurate steel surface defect classification [11]. T. Benbarrad et al. studied the impact of image compression on the classification performance of steel surface defects with a CNN [19]. Myeongso Kim et al. proposed a CNN-based transfer learning method for defect classification in semiconductor manufacturing [20].

(2) Defect localization approaches: Unlike defect classification, defect localization provides the specific location of defects in the inspected target, which requires higher identification accuracy. Ren et al. presented a CNN model for performing surface defect inspection tasks, and feature transfer from pre-trained models was used in the model [21]. T. Wang et al. proposed a CNN model for product quality control [22]. Mei Zhang et al. proposed a one-class classifier for image defect detection [23]. Yibin Huang et al. proposed a compact CNN model for surface defect inspection, and the model consisted of a lightweight (LW) bottleneck and a decoder [24]. D. Tabernik et al. proposed a segmentation-based CNN model for segmenting surface anomalies [25]. Saliency detection aims to detect the most interesting object in an image [26,27,28,29,30], which is close to the goal of defect inspection. Yibin Huang et al. proposed a deep hierarchical structured convolutional network for detecting magnetic tile surface defect saliency [31].

In the construction of a defect localization model, obtaining the location of defects requires combining an encoder and a decoder and fusing low-level features in the decoding process to generate a higher-resolution feature map. Designing a more effective feature extraction and fusion architecture is an active research direction for defect localization. U-Net is a widely used encoder-decoder architecture [32], and it was first applied to biomedical image segmentation. A typical U-Net structure consists of a contracting path to capture semantic context information and a symmetric expanding path that enables precise localization. Inspired by this structure, many researchers have designed similar architectures for the defect inspection task.

Semantic information, which relates to the physical meaning of the target, is crucial in object recognition tasks, including defect localization. Semantic information is richer in the deeper layers of a convolutional neural network, whereas the shallow layers carry weaker semantic information. Some existing methods have built models by designing decoder structures based on high-level semantic features [24,25]. On this basis, some researchers used these semantic features at individual scales to create a decoder structure. Researchers have paid little attention to the features with weaker semantic information at each scale. It is worth noting that these features contain more detailed information, and they are helpful clues for capturing accurate defect locations. In addition, some existing defect localization CNN models do not make good use of both high-level semantic features and detailed low-level features.

To address these problems, we propose a novel CNN model for fast and accurate pixel-level defect localization. Our model is built on a pre-trained lightweight SqueezeNet model. We propose three techniques for the effective extraction, fusion, and supervision of semantic and detailed information. The proposed model is applied to our defect inspection system. To verify the validity of this model, a pixel-level defect dataset, USB-SG, was collected and labeled in this paper; it contains a total of 683 images with five defect types. Comparative experiments verify the effectiveness and superiority of the proposed model. The proposed model runs at over 30 FPS on an NVIDIA 1080Ti GPU, which satisfies the demand of a real-time defect inspection task. The main contributions of this paper can be summarized as follows:

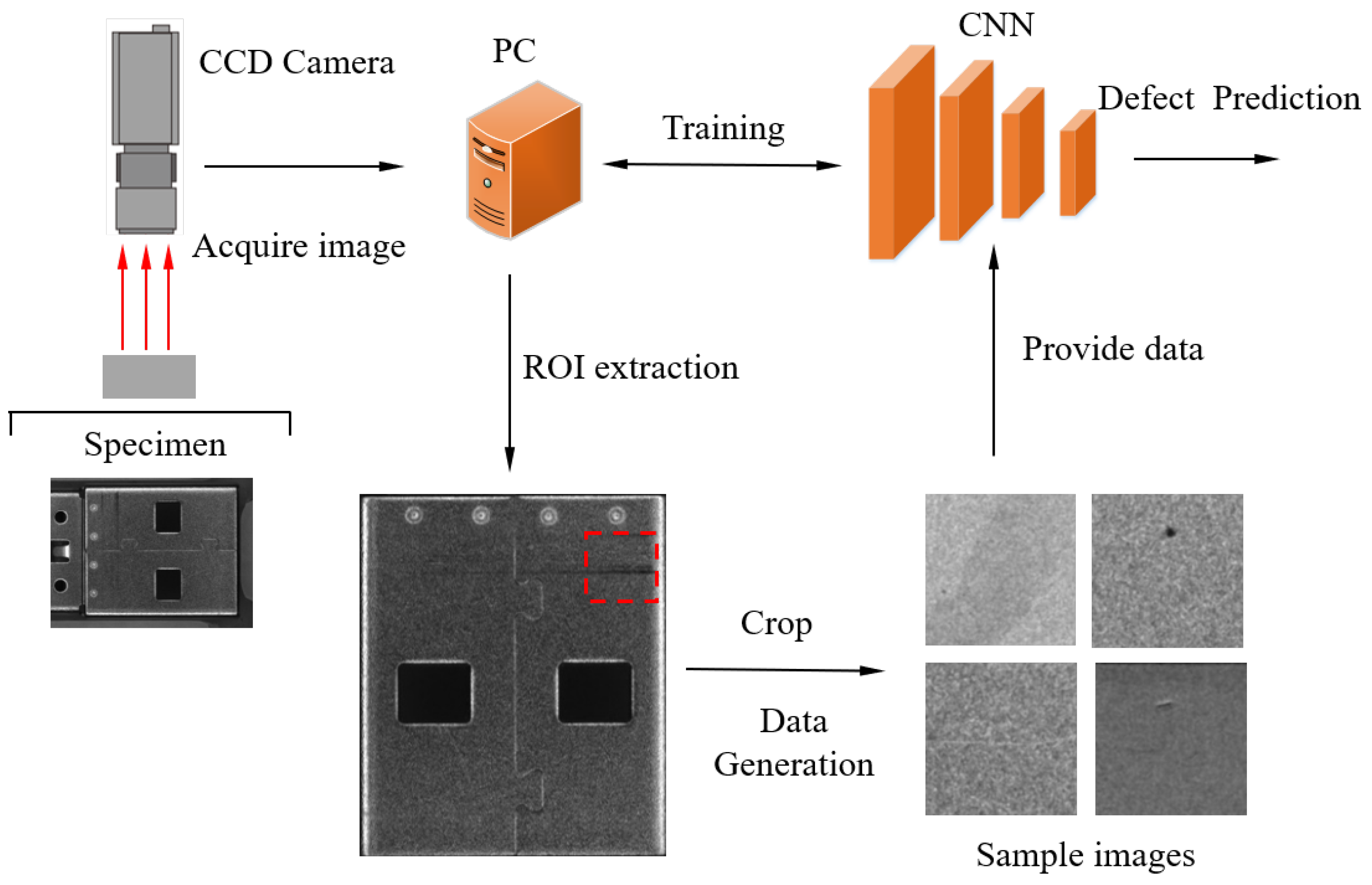

To improve the automation and intelligence levels of defect inspection, this paper proposes a defect inspection system based on a CNN. The proposed model is built on a lightweight SqueezeNet model.

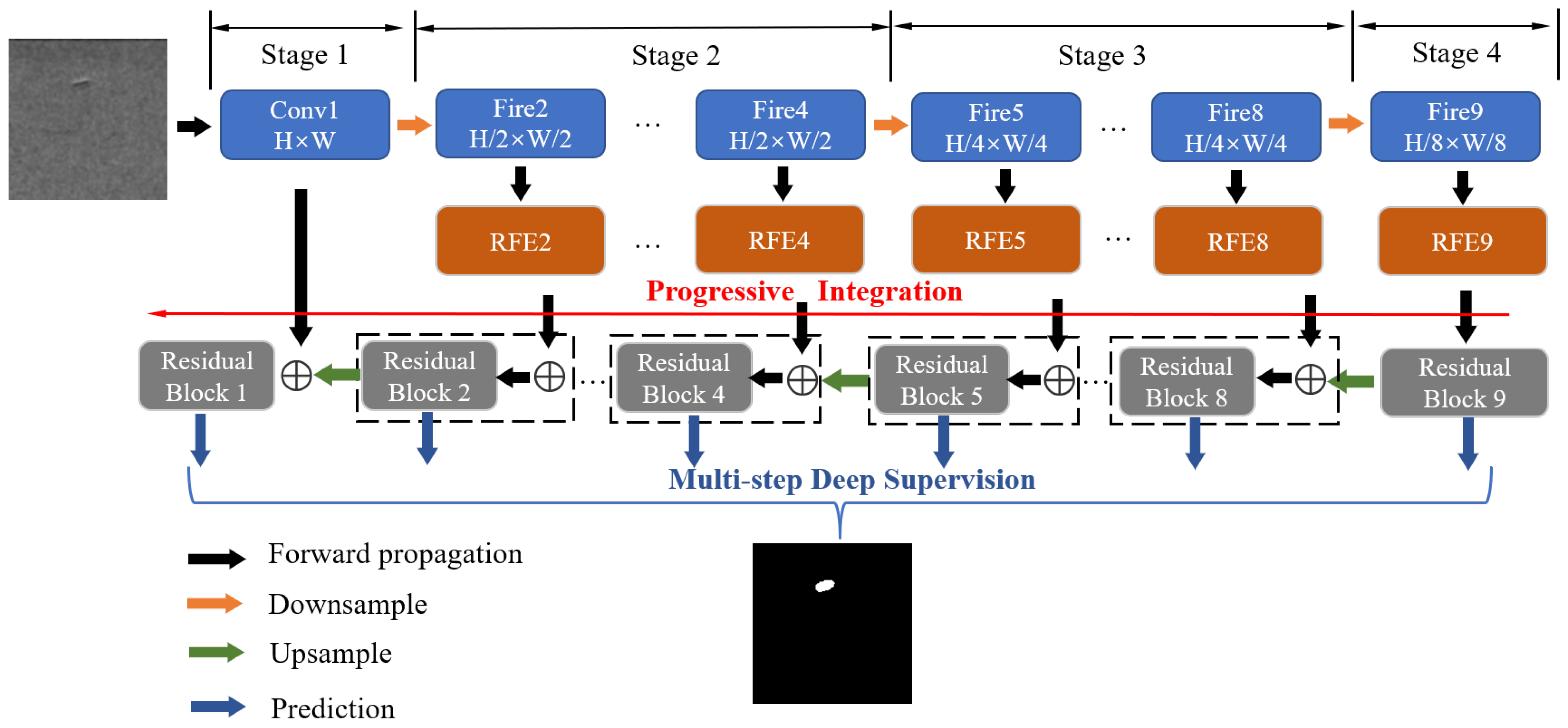

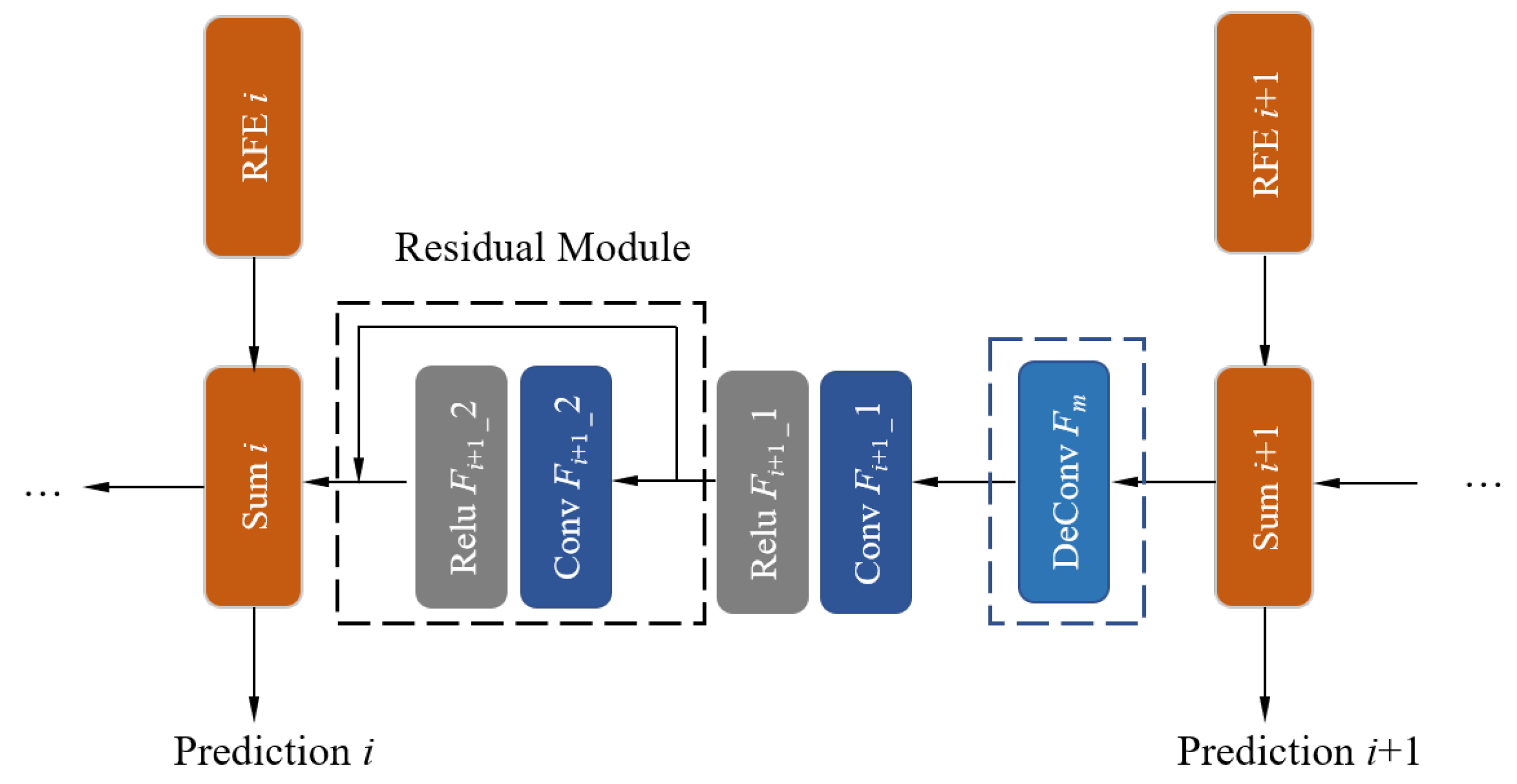

We design three techniques to improve the performance of the proposed model. First, rich feature extraction blocks are used to capture both semantic and detailed information at different scales. Then, a residual-based progressive feature fusion structure is used to fuse the extracted features at different scales. Finally, the fusion results of defects are supervised in multiple fusion steps.

To verify the effectiveness of the proposed model, we manually labeled a pixel-level defect dataset, USB-SG, which included markings of the defect locations. The dataset included five categories (dents, scratches, spots, stains, and normal) with a total of 684 images.

Our approach obtained higher detection accuracy than other machine-learning-based and deep-learning-based methods. The model runs in real time, and it has wide application prospects in industrial inspection tasks.

The rest of this paper is organized as follows. In Section 2, the defect inspection system and proposed dataset are introduced. The proposed method is introduced in Section 3. The implementation details, ablation study, and experimental comparisons of our proposed model are presented in Section 4. Finally, Section 5 concludes the paper.

4. Experiments

This section first introduces the details of the experimental setup and the evaluation indexes. An ablation study was then conducted to verify the effectiveness of the proposed optimization strategies. Finally, quantitative comparison experiments under identical settings were organized to compare the proposed model with other existing detection models and to demonstrate its advantages.

4.1. Implementation Details

The dataset used in the experiment was USB-SG; detailed information on the training set and test set is shown in Table 1, and no data augmentation techniques were used. All of the experiments were carried out with the same dataset settings. The experiments were implemented on the publicly available Caffe deep learning platform [39]. We chose the stochastic gradient descent (SGD) policy to train the model. The weight decay was set to

, and the momentum was set to

. The batch size was set to 16, so 16 sample images were processed per iteration. The base learning rate was set to 0.01. The experiments were run on an Intel i7 CPU and an NVIDIA 1080Ti GPU.
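To illustrate how the learning rate, momentum, and weight decay interact during training, the following is a minimal NumPy sketch of a single SGD step with momentum and L2 weight decay. It is not the Caffe implementation used in the experiments. The function name `sgd_momentum_step` is hypothetical, the learning rate of 0.01 is taken from the text, and the momentum and weight-decay defaults are placeholder values chosen only for illustration; they are not the values used in the paper.

```python
import numpy as np


def sgd_momentum_step(weights, grad, velocity,
                      lr=0.01,             # base learning rate stated in the text
                      momentum=0.9,        # placeholder value, NOT from the paper
                      weight_decay=5e-4):  # placeholder value, NOT from the paper
    """Perform one SGD update with momentum and L2 weight decay.

    Returns the updated weights and the updated velocity.
    """
    # L2 weight decay adds a term proportional to the weights to the gradient.
    grad = grad + weight_decay * weights
    # The velocity accumulates a decaying history of past gradient steps.
    velocity = momentum * velocity - lr * grad
    return weights + velocity, velocity


if __name__ == "__main__":
    w = np.array([1.0, -2.0])
    v = np.zeros_like(w)
    g = np.array([0.5, 0.5])
    w, v = sgd_momentum_step(w, g, v)
    print(w, v)
```

With a batch size of 16, `grad` would be the gradient of the loss averaged over the 16 images of the current iteration.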

4.2. Evaluation Index

To comprehensively evaluate the performance of the defect location model, four indexes were used to analyze the detection results of the model. They were the precision, recall,

, mean absolute error (MAE), and Dice coefficient [

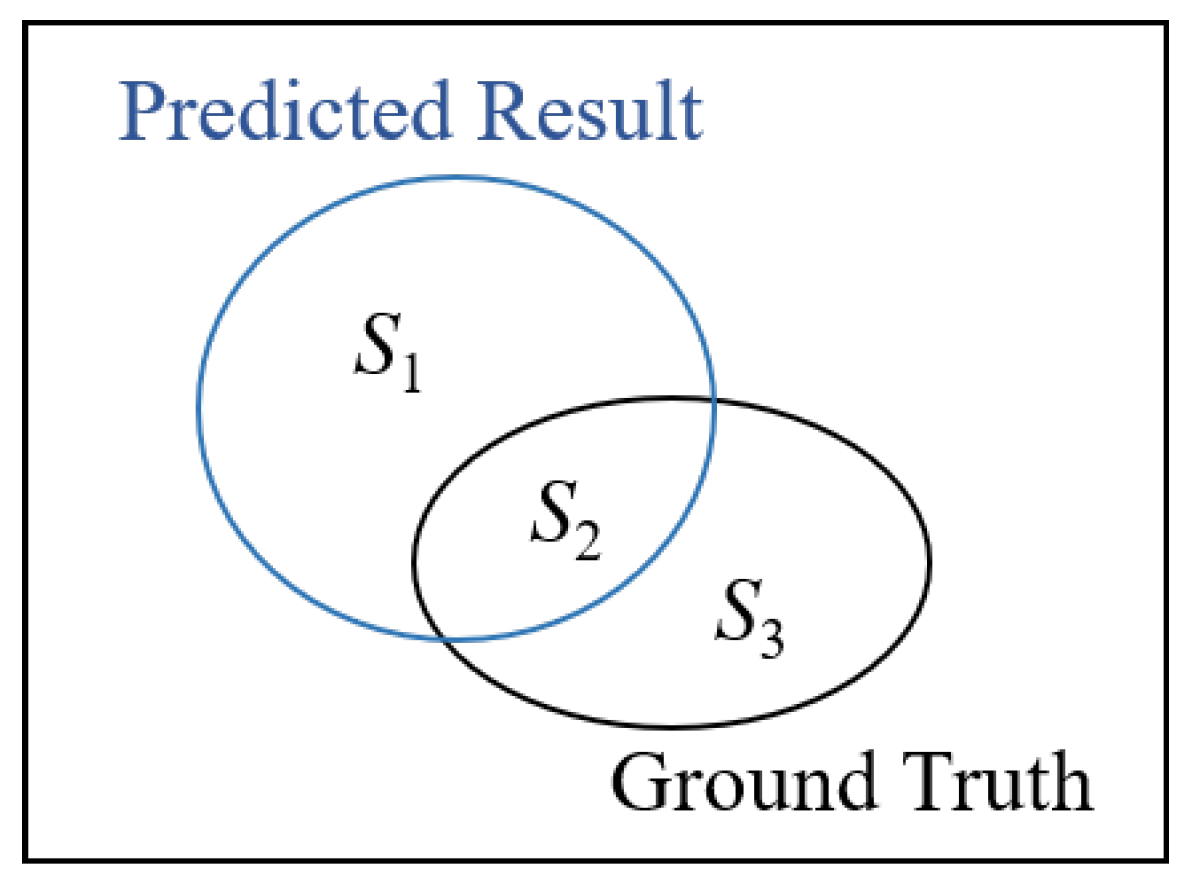

40]. To better illustrate the calculation process for these indexes, a diagram of the predicted results and ground truth is shown in

Figure 7.

was included in the predicted region, but not included in the ground truth;

was included in both the predicted region and the ground truth;

was included in the ground truth, but not included in the predicted region. The Dice coefficient was defined as

The precision and recall were calculated as

Because precision and recall are inversely correlated, precision is generally more important than recall.

was used to keep a balance between precision and recall, and it was defined as

was set to 0.3 in our experiment. The mean absolute error (MAE) was used to measure the difference between the predicted result and the ground truth, and it was defined as

It is noted that the model with the highest and lowest MAE had the best performance.
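The evaluation indexes described above can be sketched in a few lines of NumPy. This is an illustrative implementation under stated assumptions, not the authors' evaluation code: the function name `segmentation_metrics` is hypothetical, the prediction is assumed to be a map with values in [0, 1] that is binarized at a threshold of 0.5 before counting pixels, and the MAE is assumed to be computed on the non-binarized map.

```python
import numpy as np


def segmentation_metrics(pred, gt, beta_sq=0.3, threshold=0.5, eps=1e-12):
    """Compute precision, recall, F-beta, Dice, and MAE for one image.

    pred: array of predicted defect probabilities in [0, 1].
    gt:   array of the same shape, 1 for defect pixels and 0 otherwise.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    pred_mask = pred >= threshold   # assumed binarization rule
    gt_mask = gt > 0.5

    tp = np.sum(pred_mask & gt_mask)    # predicted and in ground truth
    fp = np.sum(pred_mask & ~gt_mask)   # predicted but not in ground truth
    fn = np.sum(~pred_mask & gt_mask)   # in ground truth but not predicted

    precision = tp / (tp + fp + eps)
    recall = tp / (tp + fn + eps)
    f_beta = (1 + beta_sq) * precision * recall / (beta_sq * precision + recall + eps)
    dice = 2 * tp / (2 * tp + fp + fn + eps)
    mae = float(np.mean(np.abs(pred - gt)))
    return {"precision": precision, "recall": recall,
            "f_beta": f_beta, "dice": dice, "mae": mae}


if __name__ == "__main__":
    prediction = np.array([[1.0, 1.0], [0.0, 0.0]])
    ground_truth = np.array([[1.0, 0.0], [1.0, 0.0]])
    print(segmentation_metrics(prediction, ground_truth))
```

The small constant `eps` only guards against division by zero for images without any predicted or labeled defect pixels; dataset-level scores would typically be obtained by averaging the per-image values.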

4.3. Ablation Study

In this part, we discuss the three proposed model optimization strategies in detail and organize systematic experiments to verify their effectiveness.

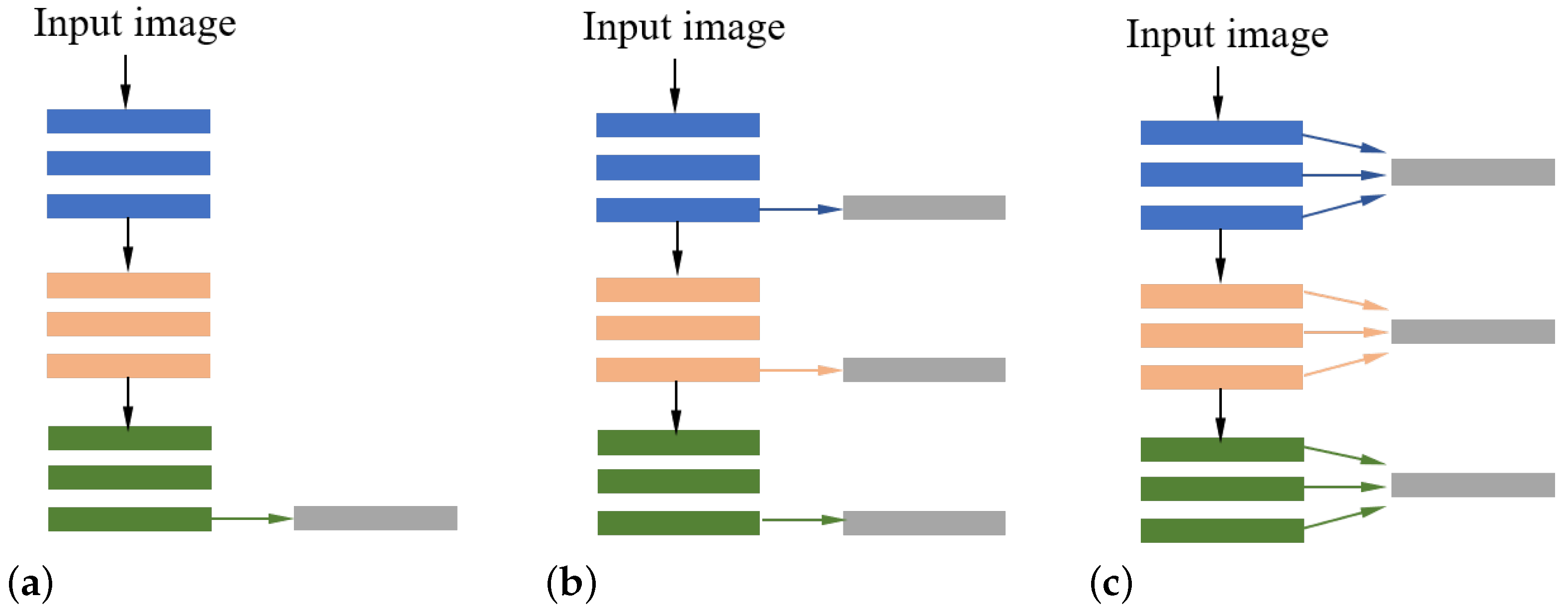

4.3.1. Rich Feature Extraction

The proposed RFE block extracts semantic and detailed information at all stages, which helps to capture defects accurately. In Section 3.1, we explained the differences between the proposed method and two existing schemes. The experimental comparisons are shown in Table 2. For a fair comparison, all of the models were constructed with the SqueezeNet backbone. The experimental results show that the proposed RFE had a higher $F_\beta$/Dice coefficient and lower MAE-1/MAE-2 than the other two schemes (SQ-a and SQ-b). It can be concluded that the proposed RFE block was more effective than the two existing schemes.

4.3.2. Residual-Based Progressive Integration

To explore the effectiveness of RPI, two pairs of comparisons were conducted. The experimental comparisons are shown in Table 3. First, a widely used feature fusion method, U-Net [32] (SQ-Unet), was compared with our SQ-RPI. The experimental results showed that RPI achieved higher values of $F_\beta$ (0.6781 vs. 0.6340) and the Dice coefficient (0.6515 vs. 0.6057) and a lower MAE (0.0170 vs. 0.0174, 0.0090 vs. 0.0094). In the second pair, adding RPI to the proposed SQ-RFE model improved $F_\beta$ (0.6862 vs. 0.6702) and the Dice coefficient (0.6640 vs. 0.6484) and decreased the MAE (0.0158 vs. 0.0167, 0.0084 vs. 0.0088). From the comparative experiments on the two different baseline models (SQ-Unet and SQ-RFE), it could be concluded that the proposed RPI was effective and robust. In addition, the results suggest that the RPI structure generalizes well and can be applied to similar tasks.

4.3.3. Multi-Step Deep Supervision

In order to analyze the influence of MsDS on the model performance and find the optimal amount of supervision, a series of comparative experiments were organized; the results are shown in Table 4. First, the performance of SQ-RFE-RPI without MsDS was compared with that of SQ-RFE-RPI-MsDS. Then, SQ-RFE-RPI-MsDS was compared across different amounts of supervision $M$.

It was observed that applying MsDS to SQ-RFE-RPI improved the performance regardless of the amount of supervision. In more detail, the model performed best when the amount of supervision was $M = 5$. As $M$ increased further, the performance tended to deteriorate. It should be noted that the features of the last predictions (predictions 9, 8, and 7) contained less detailed information; thus, they differed more from the ground truth. Paying more attention to these coarse predictions in the training process degrades the performance of the other predictions.
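The role of the amount of supervision $M$ can be made concrete with a small NumPy sketch of a deeply supervised loss. It is only an illustration under several assumptions that the text does not confirm: the predictions are ordered from Prediction 1 (finest) to the coarsest, every prediction has already been resized to the ground-truth resolution, each supervised prediction contributes an equally weighted cross-entropy term, and the function names are hypothetical.

```python
import numpy as np


def binary_cross_entropy(pred, gt, eps=1e-7):
    """Mean pixel-wise binary cross-entropy between a probability map and a mask."""
    pred = np.clip(np.asarray(pred, dtype=np.float64), eps, 1.0 - eps)
    gt = np.asarray(gt, dtype=np.float64)
    return float(-np.mean(gt * np.log(pred) + (1.0 - gt) * np.log(1.0 - pred)))


def multi_step_supervised_loss(predictions, gt, num_supervised):
    """Sum the cross-entropy of the first `num_supervised` predictions.

    predictions: list of probability maps, assumed ordered finest to coarsest.
    num_supervised: the amount of supervision M (1 <= M <= len(predictions)).
    """
    if not 1 <= num_supervised <= len(predictions):
        raise ValueError("num_supervised must be between 1 and len(predictions)")
    return sum(binary_cross_entropy(p, gt) for p in predictions[:num_supervised])


if __name__ == "__main__":
    gt = np.array([[1.0, 0.0], [0.0, 1.0]])
    fine = np.array([[0.9, 0.1], [0.1, 0.9]])
    coarse = np.array([[0.6, 0.4], [0.4, 0.6]])
    print(multi_step_supervised_loss([fine, coarse], gt, num_supervised=1))
    print(multi_step_supervised_loss([fine, coarse], gt, num_supervised=2))
```

In this sketch, increasing `num_supervised` adds the error of coarser predictions to the total loss, which is one way to read the observation that supervising too many coarse predictions can hurt the finer ones.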

The above experimental results confirmed that the performance was best when there were five supervisions in the model. In order to analyze the relationship between the different predictions, the five predictions were evaluated separately, as shown in Table 5. It was observed that from Prediction 5 to Prediction 1, $F_\beta$ and the Dice coefficient increased, and the MAE decreased. This was because Prediction 1 had the most abundant semantic information and the most detailed information, which indicated that the model learned defect features from coarse to refined.

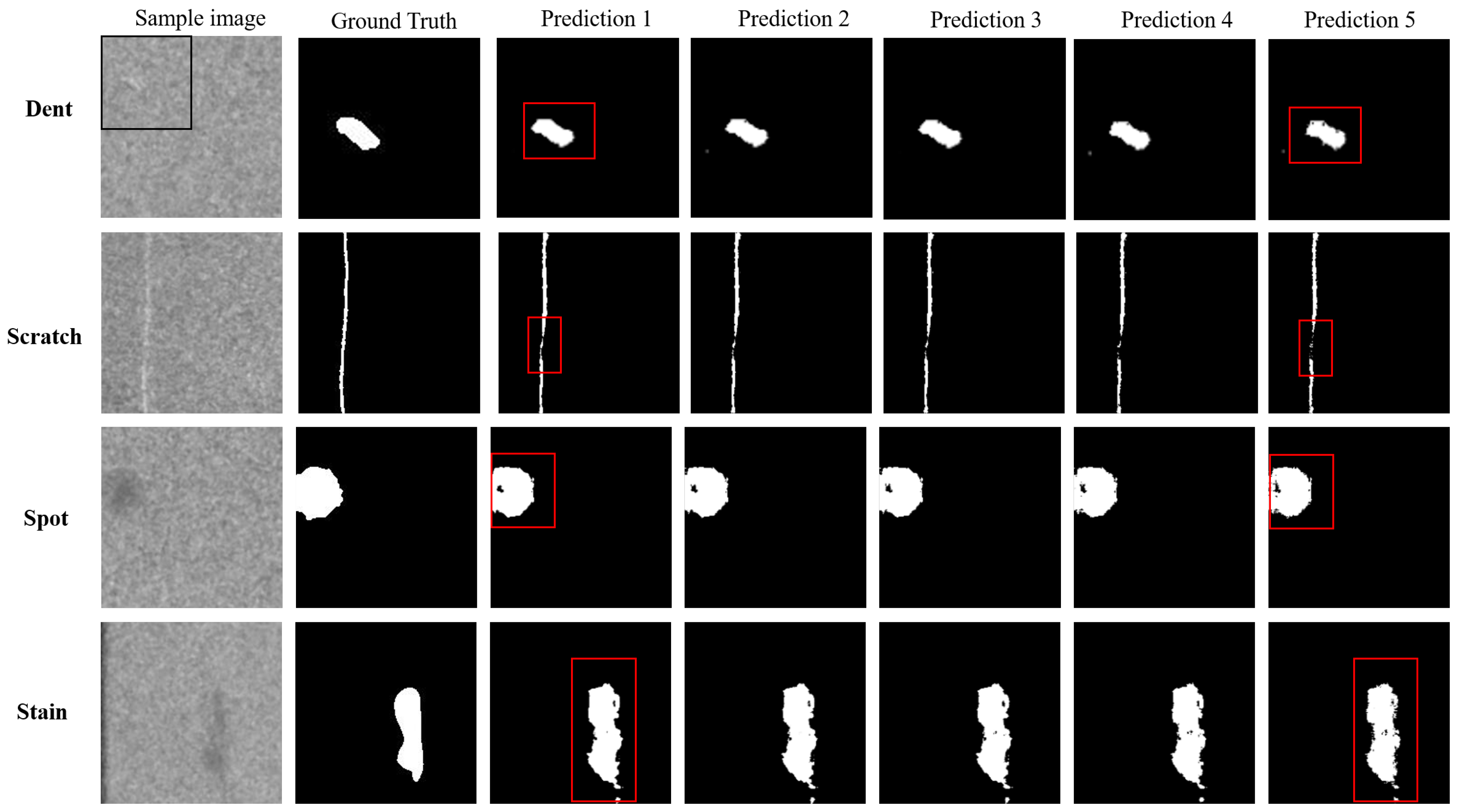

In addition to the quantitative analysis, visual comparison experiments for different predictions were conducted, as shown in Figure 8. All four defect types were compared to make the experiments more comprehensive. The first row contains dent-type defects. It was observed that from Prediction 5 to Prediction 1, the defective regions became more complete, the holes were reduced, and the edges became smoother. A similar situation can be observed in the third and fourth rows. The second row contains scratch-type defects, whose characteristic morphology is slender stripes. From Prediction 5 to Prediction 1, the disconnected parts were gradually connected so that the defect region became complete. In conclusion, the proposed model could provide more accurate predictions by using RPI to integrate semantic and detailed information.

4.3.4. Loss Function

In our model, we chose the commonly used cross-entropy as our loss function. Loss functions have also been an active research topic in recent years. Xiaoya Li et al. proposed Dice loss to associate training examples with dynamically adjusted weights to de-emphasize easy-negative examples [41]. The Dice loss is defined as

where b indicates the weights, and it is usually set to 1; the rest of the variables were mentioned in Section 3.3.

T.-Y. Lin et al. proposed focal loss [42]. When an image contains a large number of negative data samples, most of the loss and gradient will be dominated by negative samples. Focal loss prevents the vast number of easy negatives from overwhelming the detector during training. The focal loss is defined as

where $\alpha$ and $\gamma$ are the weights, and the rest of the variables were mentioned in Section 3.3.

To explore the influence of the above two loss functions on the proposed model, we conducted comparative experiments using different loss functions. The setup was the same for all experiments, except for the loss function. The experimental results are shown in Table 6. Cross-entropy was adopted as the loss function in the proposed model due to its stability and trainability. Dice loss was implemented with the default parameter, and focal loss was evaluated with two sets of weight parameters. The performance when using Dice loss was slightly lower than that when using cross-entropy. Focal loss achieved better performance than cross-entropy with one parameter setting and worse performance with the other. This was because an appropriate choice of weights in focal loss could suppress the loss of negative samples and enhance the learning effect of positive samples. In the future, focal loss can be applied to the proposed model to boost the defect detection performance.
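For reference, the three loss functions compared in this subsection can be sketched in NumPy as follows. These are common textbook formulations and may differ in detail from the exact forms used in [41], [42], and Section 3.3. The function names are hypothetical, `smooth=1.0` is an assumed smoothing constant for the Dice loss, and `alpha=0.25` and `gamma=2.0` are the defaults suggested in the original focal loss paper, not necessarily the values tested here.

```python
import numpy as np


def cross_entropy_loss(pred, gt, eps=1e-7):
    """Mean pixel-wise binary cross-entropy."""
    pred = np.clip(np.asarray(pred, dtype=np.float64), eps, 1.0 - eps)
    gt = np.asarray(gt, dtype=np.float64)
    return float(-np.mean(gt * np.log(pred) + (1.0 - gt) * np.log(1.0 - pred)))


def dice_loss(pred, gt, smooth=1.0):
    """Soft Dice loss: 1 - (2*|P.G| + s) / (|P| + |G| + s)."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    intersection = np.sum(pred * gt)
    return float(1.0 - (2.0 * intersection + smooth) / (np.sum(pred) + np.sum(gt) + smooth))


def focal_loss(pred, gt, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss: -alpha_t * (1 - p_t)^gamma * log(p_t), averaged over pixels."""
    pred = np.clip(np.asarray(pred, dtype=np.float64), eps, 1.0 - eps)
    gt = np.asarray(gt, dtype=np.float64)
    # p_t is the probability assigned to the true class of each pixel.
    p_t = np.where(gt > 0.5, pred, 1.0 - pred)
    alpha_t = np.where(gt > 0.5, alpha, 1.0 - alpha)
    # The modulating factor (1 - p_t)^gamma down-weights well-classified pixels.
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


if __name__ == "__main__":
    p = np.array([0.9, 0.2, 0.7, 0.1])
    g = np.array([1.0, 0.0, 1.0, 0.0])
    print(cross_entropy_loss(p, g), dice_loss(p, g), focal_loss(p, g))
```

With `gamma=0` and `alpha=0.5`, the focal loss reduces to half of the cross-entropy, which makes it clear that the two weights only rescale the contribution of easy and hard pixels.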

4.4. Comparisons with Other Models

To verify the effectiveness of the proposed model, we compared our model with other defect/object localization models. To make the experimental comparison more comprehensive, traditional non-deep learning (DL)-based and DL-based methods were included. The non-DL-based methods included AC [27], BMS [28], FT [43], GMR [44], HC [29], LC [45], MBP [46], MSS [47], PHOT [48], RC [29], Rudinac [49], SF [30], and SR [50]. The deep-learning-based methods included the widely used U-Net [32], Rec [51], SegNet [52], and LPBF-Net [53]. All of the experimental results were either reproduced from the source code provided by the authors or implemented based on the original article. A comparative evaluation of the different defect/object localization models on the USB-SG defect dataset is shown in Table 7.

The non-DL-based methods used hand-crafted feature extractors to realize object detection/localization tasks. Among these hand-crafted methods, Rudinac, SF, and SR were the three best, as they had the highest $F_\beta$ and the lowest MAE. It was observed that the $F_\beta$ values of almost all non-DL-based methods were around 0.2, and the MAE values were around . It could be concluded that most of these detection methods performed poorly and were not adequate for the task of defect inspection. The reason was that these methods were based on hand-crafted feature extractors, which are inherently subjective. As a result, the generalization ability and robustness of the models were limited.

The deep-learning-based methods (U-Net, Rec, SegNet, and LPBF-Net) performed better than the methods mentioned above. U-Net is a very influential model that has been widely used in many fields and scenes. The experimental comparison showed that the model proposed in this paper had higher $F_\beta$ values (0.7057 vs. 0.6388 vs. 0.6883 vs. 0.6862 vs. 0.6968), lower MAE values (0.0151 vs. 0.0183 vs. 0.0162 vs. 0.0160 vs. 0.0157, 0.0080 vs. 0.0098 vs. 0.0085 vs. 0.0084 vs. 0.0082), and higher Dice coefficient values (0.6854 vs. 0.6217 vs. 0.6789 vs. 0.6797 vs. 0.6806) than the other four deep learning models. This was because this paper designed a proper defect feature extraction, integration, and supervision scheme that can accomplish high-precision defect detection tasks.

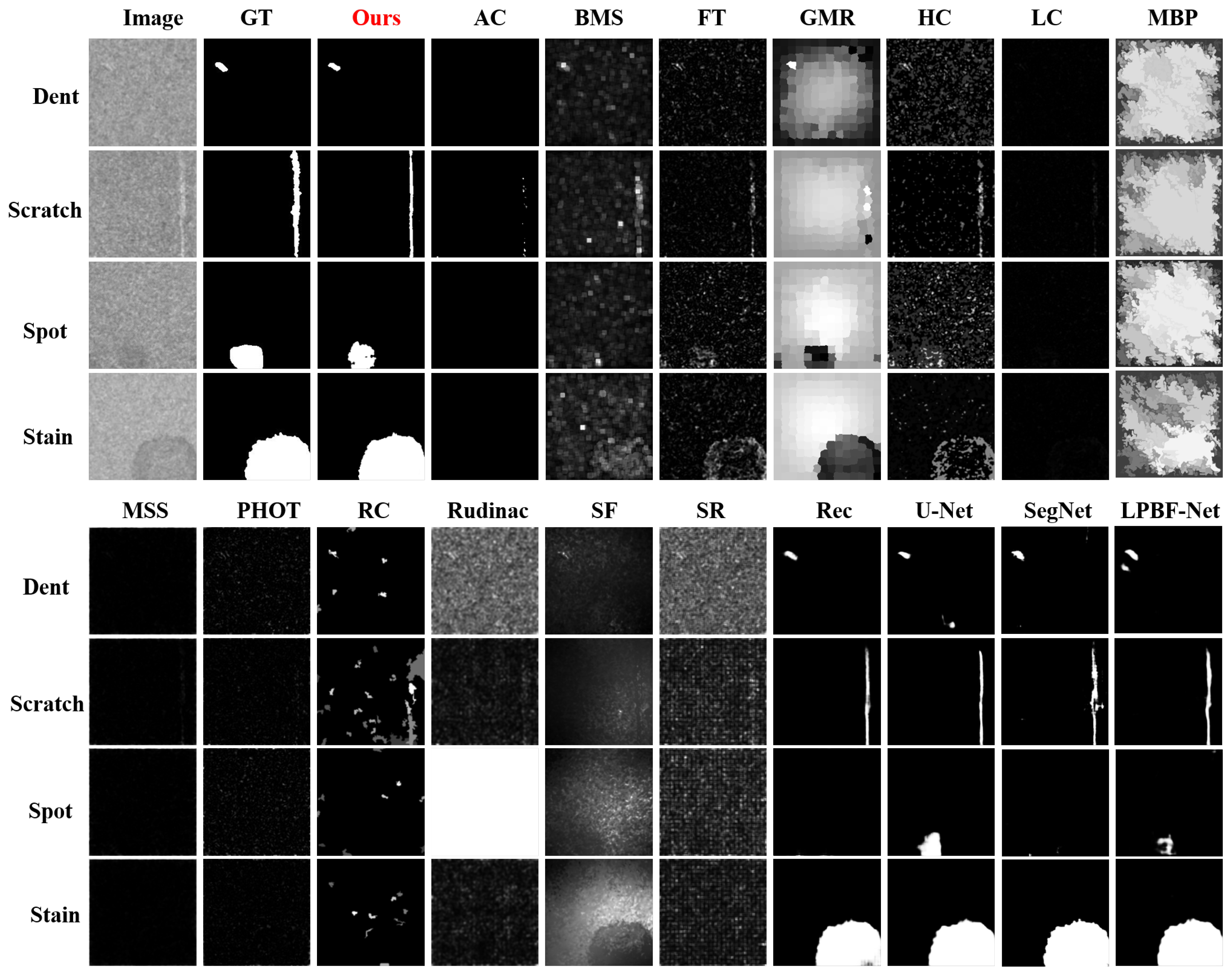

In addition to the quantitative comparison of $F_\beta$, the MAE, and the Dice coefficient, visual results are also compared in this paper to make the experimental evaluation more comprehensive. The experimental results are shown in Figure 9, where the four defect types are compared. The first column of the upper half of the figure is the input image, the second is the annotated ground truth, the third is the result of the proposed model, and the remaining part shows the results of other methods. The detection results of many methods, such as AC, LC, MSS, and PHOT, were pure black, which indicated that these methods failed to find defective areas. Other methods, such as BMS, FT, HC, and RC, found partial defect information, but they could not suppress non-defective areas. In addition, some methods, such as GMR, Rudinac, and SF, failed to correctly distinguish defective and non-defective areas, misidentifying non-defective areas as defective. The proposed USB-SG defect dataset is characterized by large differences in defect size. For example, the size of the dents was less than 0.5 mm, and the size of the stains was usually more than 5 mm. The traditional hand-crafted feature-extractor-based methods could not adapt to multi-scale features. In addition, there were difficulties such as weak defect features and unclear edges, which also affected the detection performance.

Compared to traditional methods, deep-learning-based approaches performed better. In the detection of dent-type defects, our model could completely detect the defective areas and suppress the non-defective areas, whereas U-Net and LPBF-Net misidentified the features of the non-defective areas as defective. For scratches, the defect regions detected by the proposed model were more complete than those detected by Rec, U-Net, and SegNet. For spots, Rec, SegNet, and LPBF-Net failed to identify defective areas, and the results of U-Net were less complete than ours. For stains, Rec could not find defective regions completely, and some defective areas were misjudged as non-defective regions. Based on the above comparison, it can be concluded that the proposed method had better detection results than the other methods and could accurately distinguish defective from non-defective areas. However, the method proposed in this paper also has room for further improvement. For example, the completeness of the spot sample detection results was not satisfactory.

In order to test the computational efficiency of the model, the model was tested on a computer equipped with an NVIDIA 1080Ti GPU. Averaged over multiple runs, the model could process more than 40 images per second, thus achieving real-time speed. The processing speed of this model was much faster than that of manual inspection, and the model has broad application prospects in the field of industrial surface defect inspection.
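A throughput figure of this kind is typically obtained by timing repeated forward passes and averaging. The following is a minimal sketch of such a measurement using only the Python standard library. It is not the authors' benchmarking script: `measure_fps` and `dummy_inference` are hypothetical names, and the dummy function merely stands in for a model's forward pass. When timing a real GPU model, the device would also need to be synchronized before the timer is read.

```python
import time


def measure_fps(infer, sample, warmup=5, runs=50):
    """Return the average number of calls to `infer(sample)` per second."""
    # Warm-up calls are excluded so one-time initialization does not skew the result.
    for _ in range(warmup):
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    elapsed = time.perf_counter() - start
    return runs / elapsed


def dummy_inference(image):
    """Stand-in for a model forward pass; sums the pixel values of a nested list."""
    return sum(sum(row) for row in image)


if __name__ == "__main__":
    image = [[1] * 64 for _ in range(64)]
    print(f"{measure_fps(dummy_inference, image):.1f} images per second")
```

A model is usually considered real-time for inspection when the measured rate exceeds the frame rate of the camera feeding it.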

5. Conclusions

To improve the automation level of defect detection and promote the application of intelligent detection methods in product quality monitoring, this paper proposed a defect inspection system for USB connectors. A defect dataset, USB-SG, which contained pixel-level defect locations, was collected and manually labeled. We proposed a defect inspection model that included three model optimization techniques. First, a rich feature extraction block was designed to extract semantic and detailed information. Then, the feature fusion performance and the generalization ability of the model were improved through residual-based progressive integration. Finally, multi-step deep supervision was applied to improve the final prediction performance. The experimental results showed that the proposed model had better detection performance than the other models: $F_\beta$ reached 0.7057, and the MAE was as low as 0.008. In addition, our model could achieve real-time processing speeds (>40 FPS) on an NVIDIA 1080Ti GPU. Our proposed defect inspection system has a wide range of application prospects in industrial inspection tasks and can serve as a technical reference for researchers working on related problems.

In the future, we plan to extend this work in two directions. First, because annotating pixel-level ground truth is labor-intensive, we plan to study a weakly supervised method to reduce the demand for manual annotations. Second, we intend to investigate more general inspection systems that can be adapted to defect inspection tasks for a variety of products.