Deep Reinforcement Learning Based on Social Spatial–Temporal Graph Convolution Network for Crowd Navigation

Abstract

1. Introduction

2. Literature Review

2.1. Crowd Navigation

2.2. Relational Reasoning

2.3. Model-Based Reinforcement Learning

3. Methods

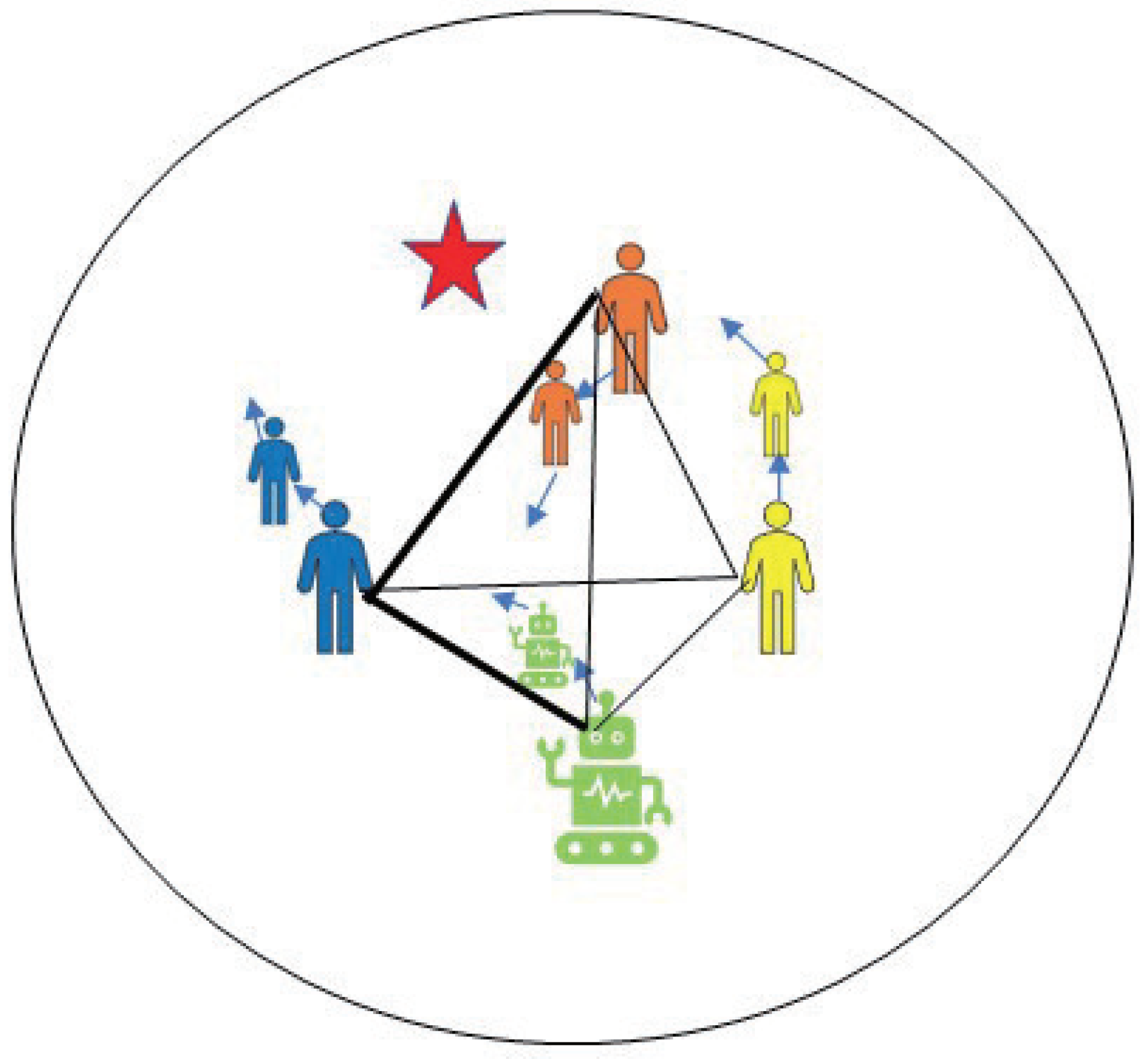

3.1. Collision Avoidance with Deep Reinforcement Learning

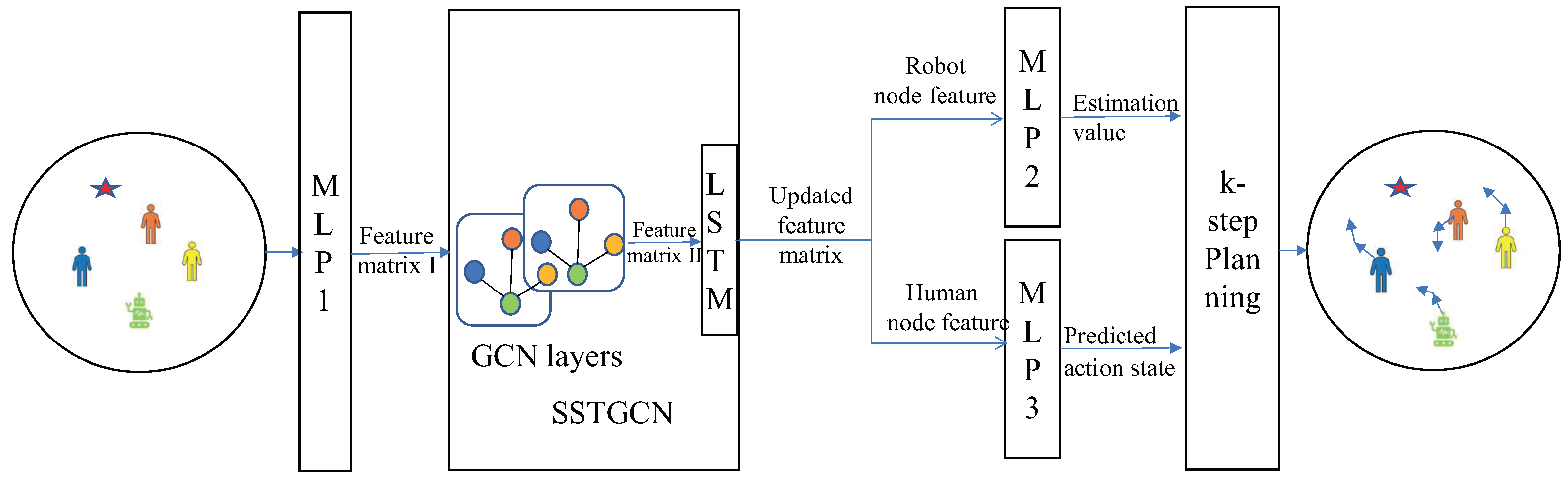

3.2. Graph-Based Crowd Representation and Learning

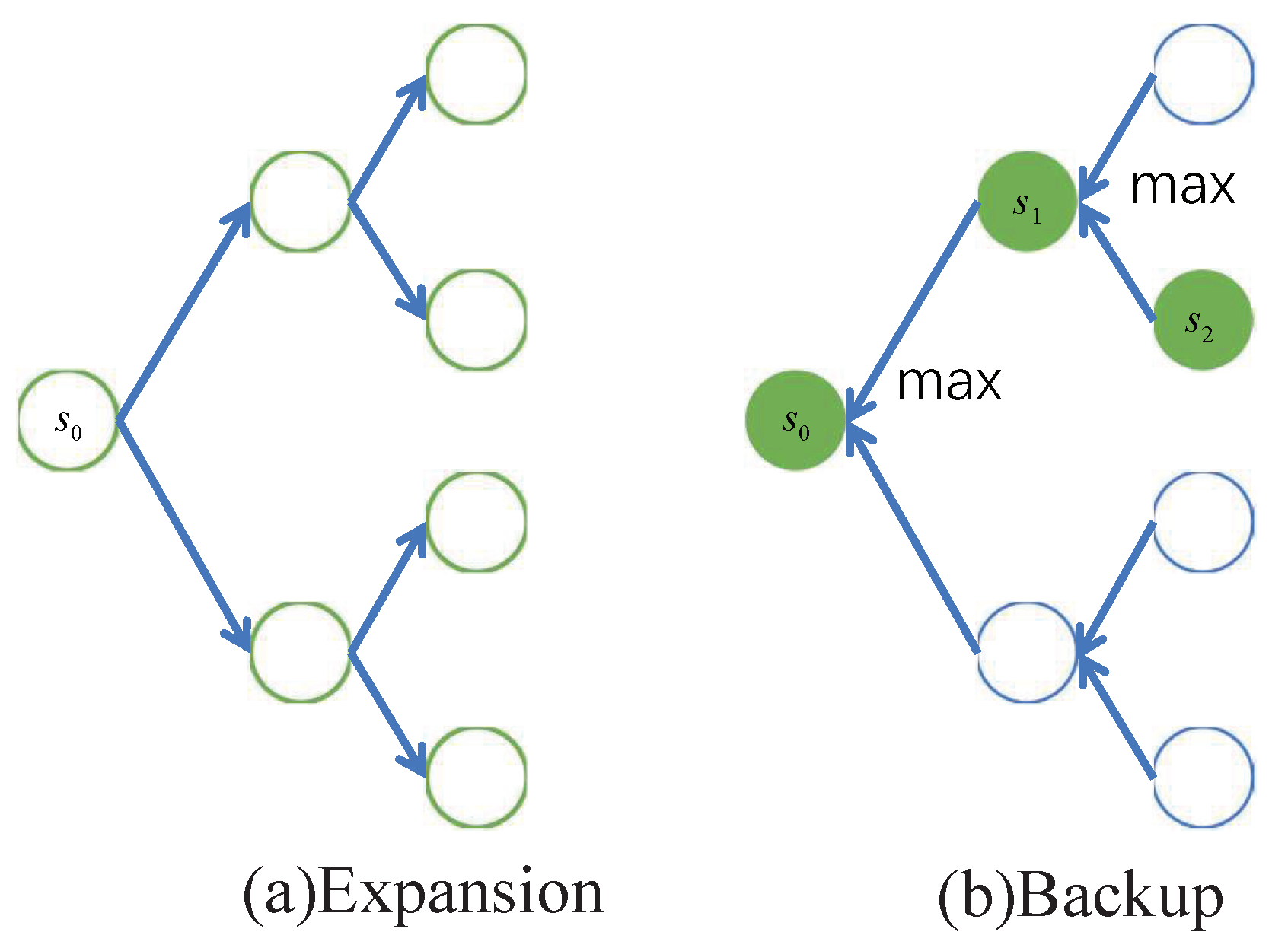

3.3. Robot Forward Planning and Learning

3.4. Joint Value Estimation and State Human Learning

| Algorithm 1. Training process of the algorithm, comprising imitation learning and reinforcement learning |
|---|
| Deep learning for and |
| /* Imitation learning */ |
| 1: Input: collected state-value pair |
| 2: for epoch ← 1 to num of epochs do |
| 3: |
| 4: e = MSE |
| 5: w = backprop |
| 6: end for |
| /* Reinforcement learning */ |
| 7: for episode ← 1 to num of episodes do |
| 8: while not reached goal, collided, or timed out do |
| 9: where |
| 10: Get and after execute |
| 11: Store transition in B |
| 12: Sample random minibatch of transitions in B |
| 13: Transfer the state matrix to SSTGCN for value estimation |
| 14: |
| 15: Minimizing for updating |
| 16: Minimizing for updating |
| 17: Update target value network |
| 18: end for |
| 19: return , |
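The two phases listed in Algorithm 1 can be illustrated with a small Python sketch. It is not the authors' implementation: a linear value function stands in for the SSTGCN value network, the one-dimensional corridor environment, the reward values, the demonstration values, and every name and hyperparameter (`LinearValue`, `env_step`, `imitation_phase`, `rl_phase`, `GOAL`, `eps`, `sync_every`, and so on) are hypothetical, and only a single value loss is minimized, so the second minimization step of the listing is not modeled. The sketch only shows the overall flow: regression on state-value pairs, then epsilon-greedy rollouts with a replay memory and a periodically synchronized target network.

```python
import random
from collections import deque

GOAL = 5            # hypothetical corridor length
ACTIONS = (-1, 1)   # step left / step right


class LinearValue:
    """Toy value function V(s) = w . s + b (stand-in for a value network)."""

    def __init__(self, dim):
        self.w, self.b = [0.0] * dim, 0.0

    def __call__(self, s):
        return sum(wi * si for wi, si in zip(self.w, s)) + self.b

    def sgd_step(self, s, y, lr):
        """One gradient step on 0.5 * (V(s) - y)^2; returns the squared error."""
        err = self(s) - y
        self.w = [wi - lr * err * si for wi, si in zip(self.w, s)]
        self.b -= lr * err
        return err * err

    def copy_from(self, other):
        self.w, self.b = list(other.w), other.b


def features(x):
    return [x / GOAL]


def env_step(x, a):
    """Toy dynamics: returns (next_x, reward, done)."""
    nx = x + a
    if nx >= GOAL:
        return GOAL, 1.0, True     # goal reached
    if nx < 0:
        return 0, -0.25, True      # ran into the wall ("collision")
    return nx, 0.0, False


def imitation_phase(model, demos, epochs, lr=0.1):
    """Fit V to demonstrated (state, value) pairs by minimizing the MSE."""
    mse = 0.0
    for _ in range(epochs):
        mse = sum(model.sgd_step(s, v, lr) for s, v in demos) / len(demos)
    return mse  # running MSE over the last epoch


def rl_phase(model, target, episodes, gamma=0.9, eps=0.1, batch=8, lr=0.05,
             sync_every=5, max_steps=30, capacity=500, seed=0):
    rng = random.Random(seed)
    buffer = deque(maxlen=capacity)          # replay memory B
    successes = 0
    for ep in range(episodes):
        x = 0
        for _ in range(max_steps):           # timeout
            def lookahead(a, x=x):
                nx, r, done = env_step(x, a)
                return r + (0.0 if done else gamma * model(features(nx)))
            # epsilon-greedy choice over one-step lookahead values
            if rng.random() < eps:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lookahead)
            nx, r, done = env_step(x, a)
            buffer.append((x, r, nx, done))  # store transition
            k = min(batch, len(buffer))
            for s, rew, s2, d in rng.sample(list(buffer), k):
                y = rew + (0.0 if d else gamma * target(features(s2)))
                model.sgd_step(features(s), y, lr)
            x = nx
            if done:
                successes += int(r > 0)
                break
        if (ep + 1) % sync_every == 0:
            target.copy_from(model)          # update target value network
    return successes, len(buffer)


# Hypothetical demonstrations: discounted value of walking straight to the goal.
demos = [(features(x), 0.9 ** (GOAL - x)) for x in range(GOAL + 1)]
model, target = LinearValue(1), LinearValue(1)
final_mse = imitation_phase(model, demos, epochs=200)
target.copy_from(model)
successes, stored = rl_phase(model, target, episodes=20)
```

Initializing the target network from the imitation-trained model before the reinforcement learning phase means early rollouts already follow a reasonable policy, which is the usual motivation for running imitation learning first.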

4. Experiments

4.1. Simulation Environment and Experimental Parameter Setting

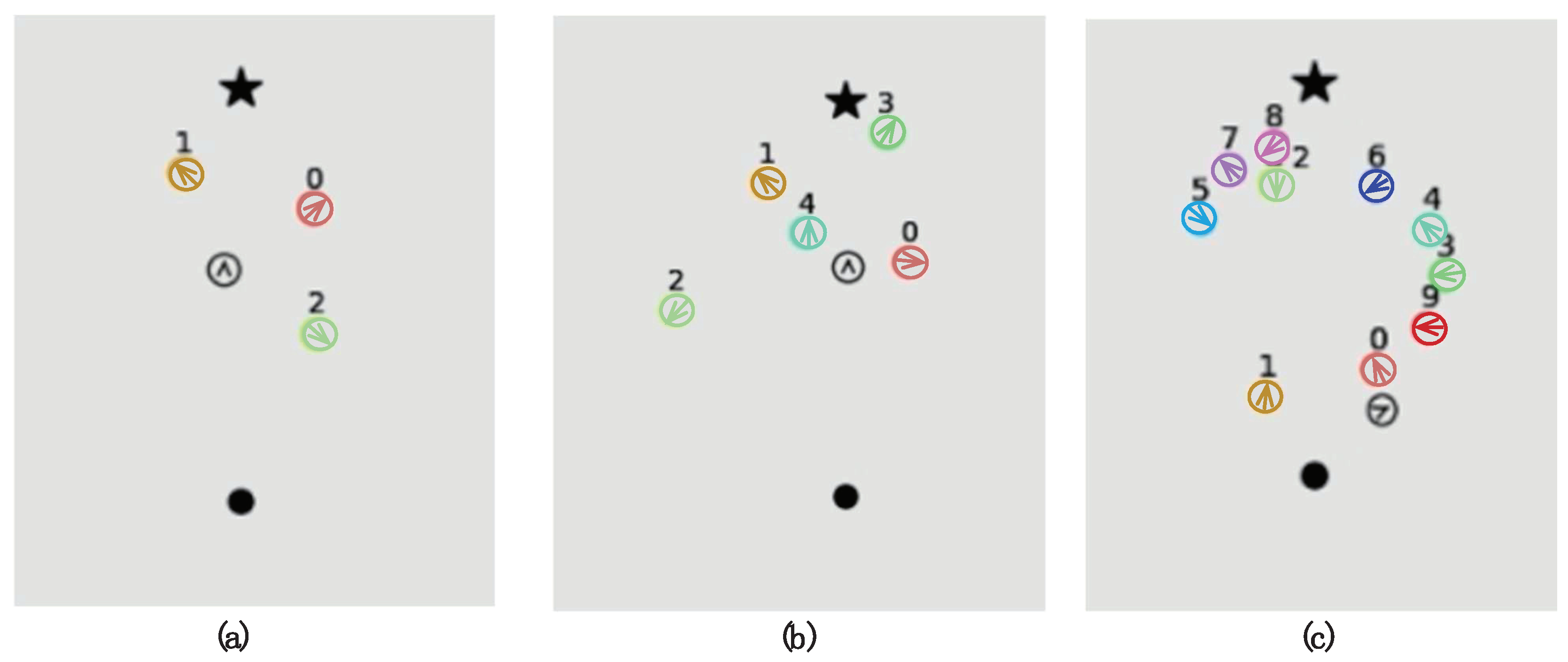

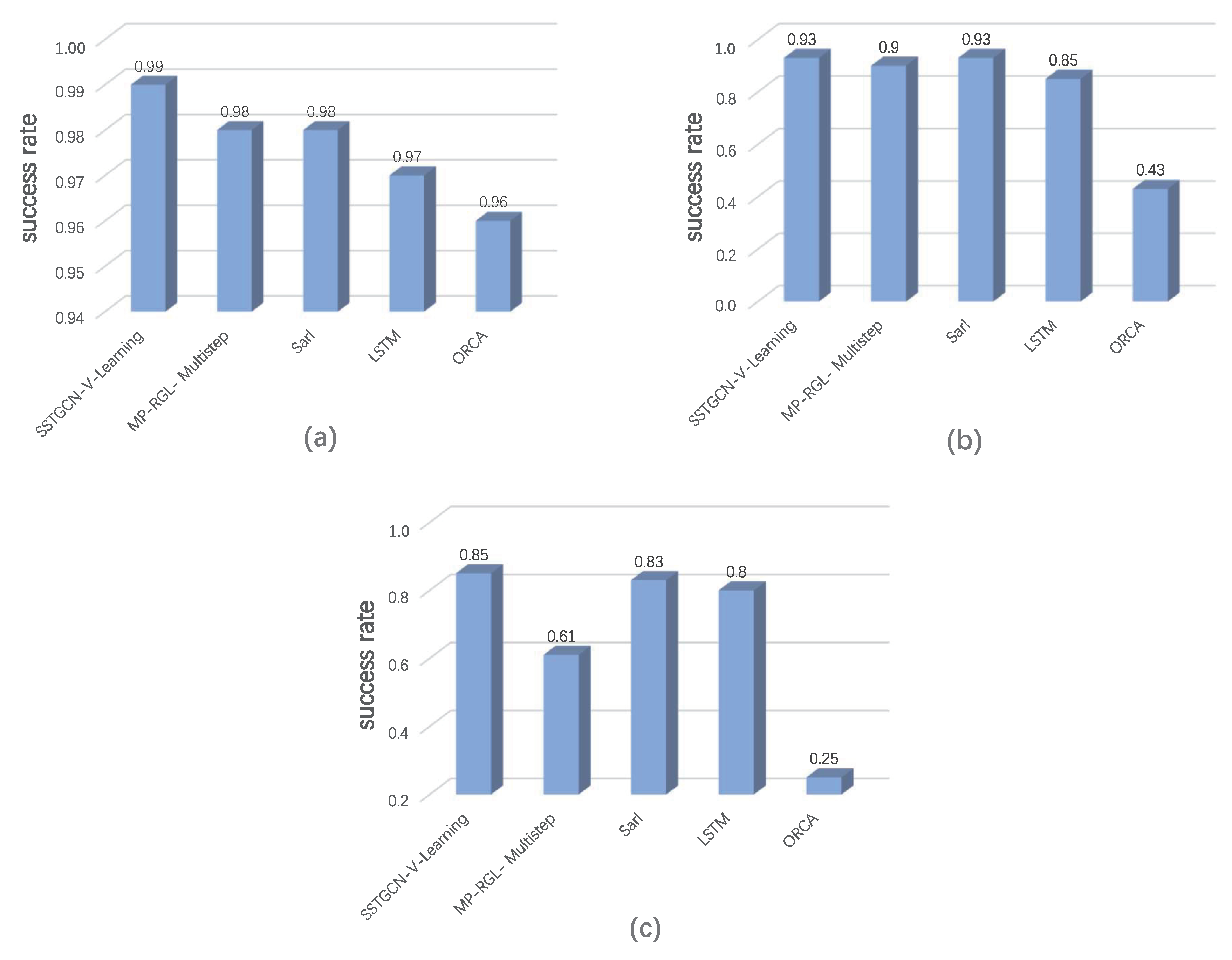

4.2. Navigation Obstacle Avoidance Performance Test

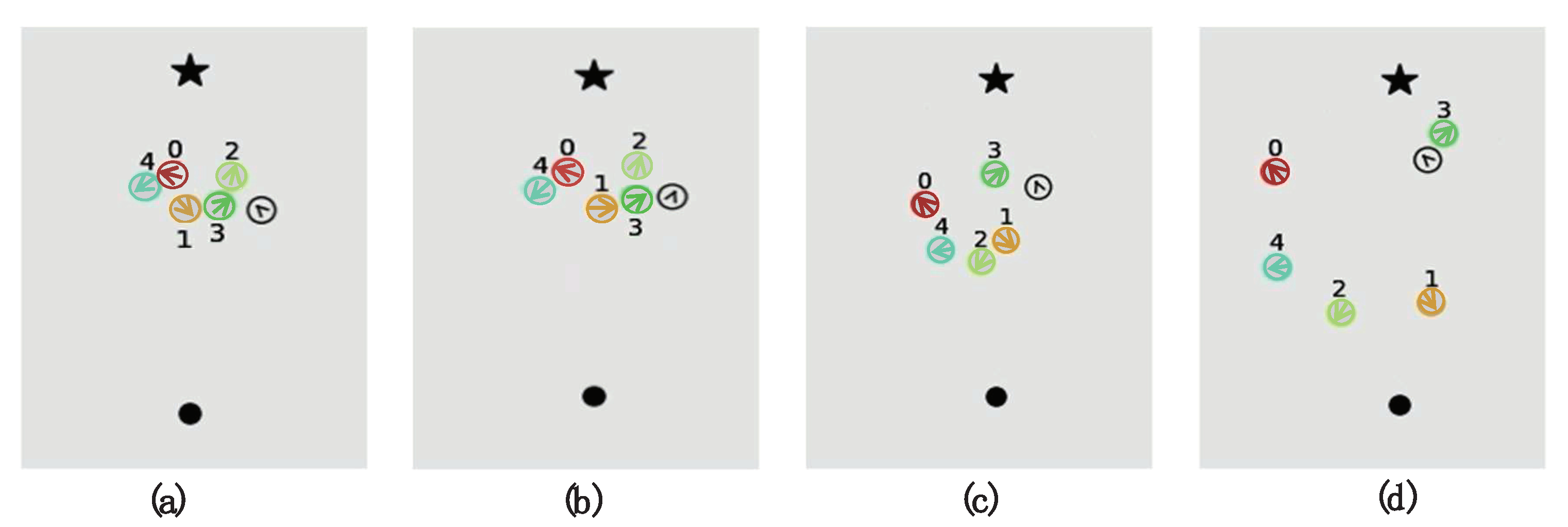

4.3. Qualitative Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Hardware and Software | Parameter |
|---|---|
| RAM | 32 GB |
| CPU | Intel Xeon Silver 4210 |
| GPU | NVIDIA GeForce RTX 3080 with 10 GB |
| Operating System | Ubuntu 18.04 |

| Method | Human Number | Success Rate | Collision Rate | Navigation Time (s) | Total Reward |
|---|---|---|---|---|---|
| SSTGCN-V-Learning | 3 | 0.99 | 0.01 | 10.12 | 0.3305 |
| SSTGCN-V-Learning | 5 | 0.93 | 0.03 | 11.49 | 0.3017 |
| SSTGCN-V-Learning | 10 | 0.85 | 0.13 | 14.01 | 0.1150 |
| MP-RGL-Multistep | 3 | 0.98 | 0.02 | 10.67 | 0.3104 |
| MP-RGL-Multistep | 5 | 0.90 | 0.05 | 11.05 | 0.2920 |
| MP-RGL-Multistep | 10 | 0.61 | 0.07 | 16.44 | 0.0754 |
| SARL | 3 | 0.98 | 0.01 | 10.51 | 0.3148 |
| SARL | 5 | 0.93 | 0.05 | 12.48 | 0.2203 |
| SARL | 10 | 0.83 | 0.10 | 14.38 | 0.1348 |
| LSTM | 3 | 0.97 | 0.02 | 10.91 | 0.3065 |
| LSTM | 5 | 0.85 | 0.14 | 10.98 | 0.1895 |
| LSTM | 10 | 0.80 | 0.19 | 13.38 | 0.1044 |
| ORCA | 3 | 0.96 | 0.03 | 10.87 | 0.3127 |
| ORCA | 5 | 0.43 | 0.57 | 10.93 | 0.0615 |
| ORCA | 10 | 0.25 | 0.30 | 17.66 | −0.1499 |

| Human Number | Depth | Success Rate | Navigation Time (s) | Training Time (h) |
|---|---|---|---|---|
| 3 | 1 | 0.99 | 10.12 | 19 |
| 3 | 2 | 0.99 | 9.79 | 30 |
| 5 | 1 | 0.93 | 11.49 | 22.8 |
| 5 | 2 | 0.96 | 11.28 | 37 |
| 10 | 1 | 0.85 | 14.01 | 29.25 |
| 10 | 2 | 0.87 | 13.86 | 49 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, Y.; Ruan, X.; Huang, J. Deep Reinforcement Learning Based on Social Spatial–Temporal Graph Convolution Network for Crowd Navigation. Machines 2022, 10, 703. https://doi.org/10.3390/machines10080703