A Lightweight Two-End Feature Fusion Network for Object 6D Pose Estimation

Abstract

1. Introduction

- (1) A two-end feature fusion pose estimation network is proposed. It fuses RGB and point cloud features to estimate object pose and can handle pose estimation under occlusion.

- (2) Depthwise separable convolution is integrated into the 6D pose estimation network, which reduces the model storage space and speeds up inference while still achieving good results.

- (3) On average, the network achieves better 6D pose estimation performance than the compared methods on the Linemod and Occlusion Linemod datasets.
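To illustrate why the depthwise separable convolution in contribution (2) shrinks a model, the sketch below compares the weight counts of a standard convolution and a depthwise separable one. The layer sizes (3 × 3 kernel, 128 input channels, 256 output channels) and the function names are hypothetical, chosen only for illustration and not taken from the proposed network; bias terms are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only.
K, C_IN, C_OUT = 3, 128, 256

standard = standard_conv_params(K, C_IN, C_OUT)
separable = depthwise_separable_params(K, C_IN, C_OUT)
ratio = separable / standard  # analytically 1 / C_OUT + 1 / K**2

print(f"standard:  {standard} weights")
print(f"separable: {separable} weights")
print(f"ratio:     {ratio:.3f}")
```

For this hypothetical layer the separable variant needs roughly one ninth of the weights, since the ratio is 1/C_OUT + 1/K², which is dominated by the 1/K² term when the output channel count is large.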

2. Related Work

2.1. Template-Based Methods

2.2. Correspondence-Based Methods

2.3. RGBD-Based Methods

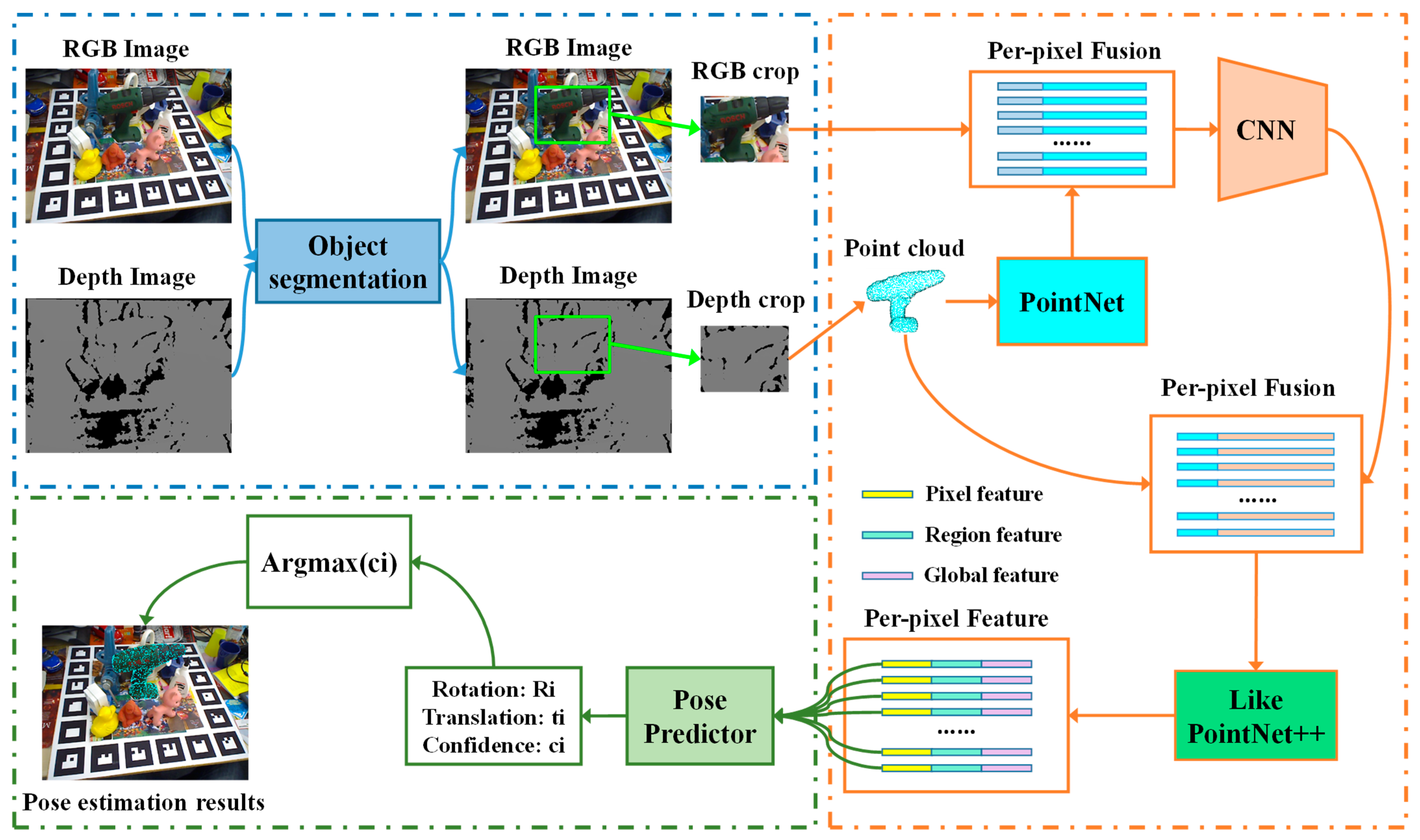

3. Methodology

3.1. Semantic Segmentation

3.2. Image Feature Extraction and Feature Fusion

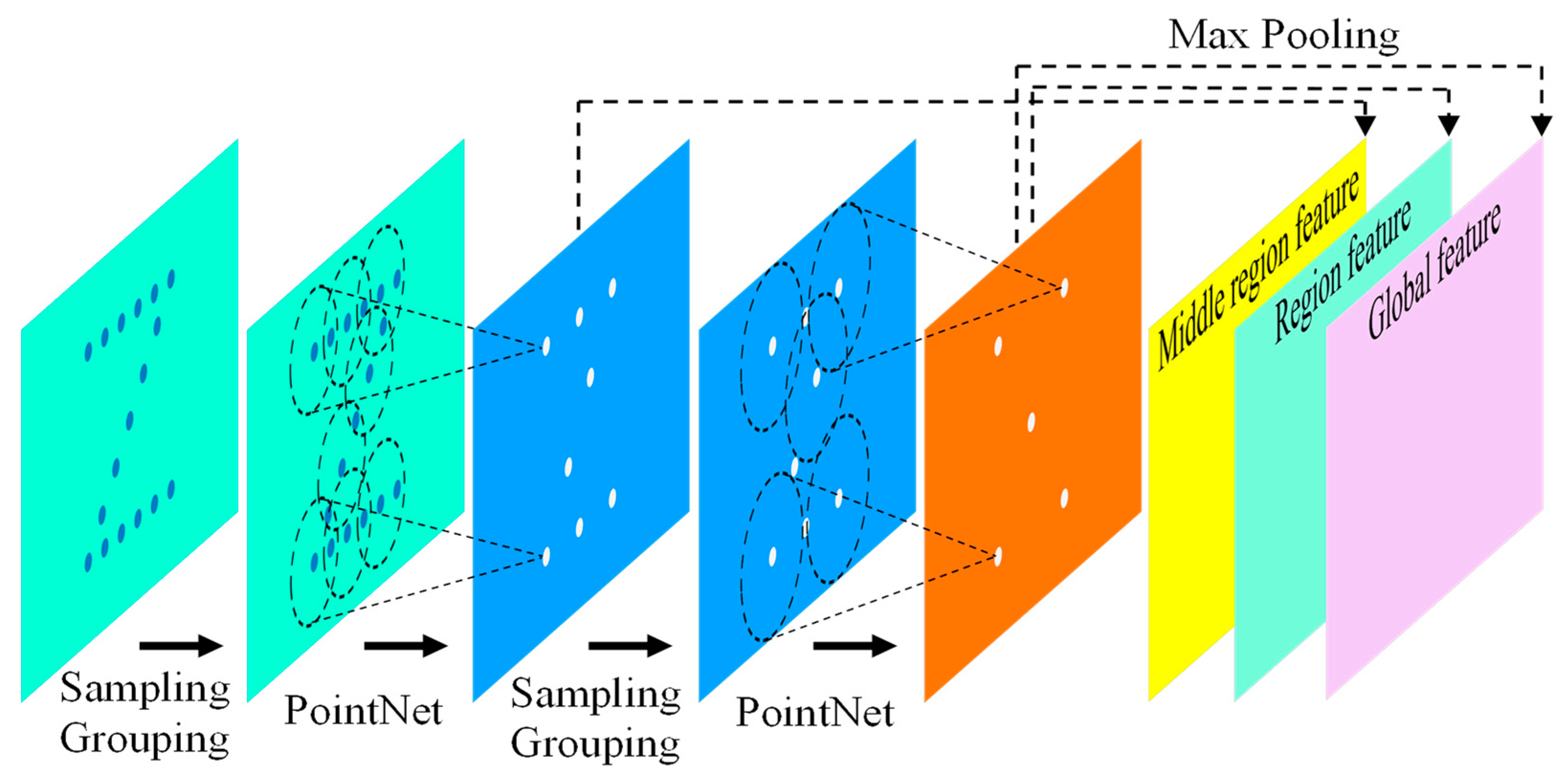

3.3. Point Cloud Feature Extraction and Feature Fusion

3.4. Pose Estimation and Pose Optimization

3.5. Reducing the Number of Model Parameters

4. Experiments

4.1. Datasets

- (1) Linemod dataset: Linemod is a benchmark dataset for object pose estimation. It contains video frames of 13 weakly textured objects. Its cluttered scenes, illumination variations, and weakly textured objects make object pose estimation challenging.

- (2) Occlusion Linemod dataset: Occlusion Linemod was created by adding annotations to each scene of the Linemod dataset, and the objects in each image are occluded to varying degrees. Estimating the pose of severely occluded objects is the main challenge of this dataset.

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Results on the Benchmark Datasets

4.4.1. Results on the Linemod Dataset

4.4.2. Results on the Occlusion Linemod Dataset

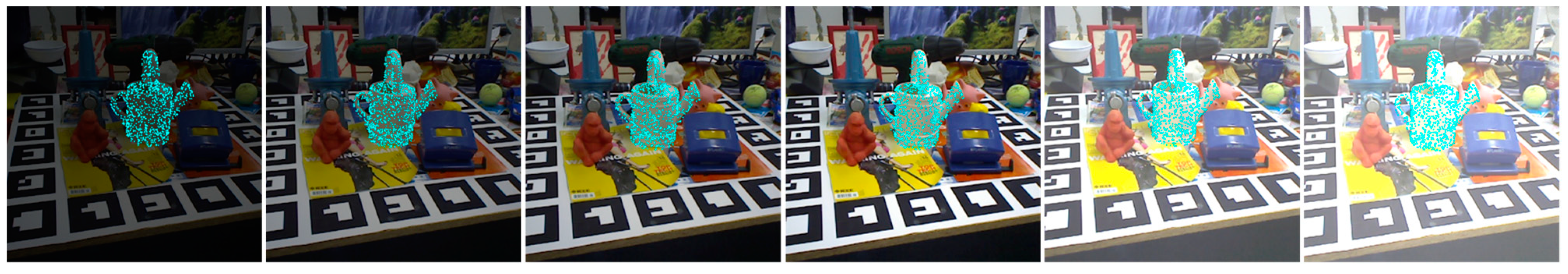

4.4.3. Noise Experiment

4.5. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Object | PoseCNN | PVNet | CDPN | SSD6D-ICP | PointFusion | DenseFusion | PVN3D | Ours (per) | Ours (iter) |
|---|---|---|---|---|---|---|---|---|---|
| ape | 77.0 | 43.6 | 64.4 | 65.0 | 70.4 | 92.3 | 97.3 | 95.1 | 99.0 |
| benchvise | 97.5 | 99.9 | 97.8 | 80.0 | 80.7 | 93.2 | 99.7 | 97.5 | 99.9 |
| camera | 93.5 | 86.9 | 91.7 | 78.0 | 60.8 | 94.4 | 99.6 | 99.0 | 99.1 |
| can | 96.5 | 95.5 | 95.9 | 86.0 | 61.1 | 93.1 | 99.5 | 97.4 | 98.7 |
| cat | 82.1 | 79.3 | 83.8 | 70.0 | 79.1 | 96.5 | 99.8 | 98.6 | 99.6 |
| driller | 95.0 | 96.4 | 96.2 | 73.0 | 47.3 | 87.0 | 99.3 | 97.1 | 100.0 |
| duck | 77.7 | 52.6 | 66.8 | 66.0 | 63.0 | 92.3 | 98.2 | 97.5 | 99.2 |
| eggbox | 97.1 | 99.2 | 99.7 | 100.0 | 99.9 | 99.8 | 99.8 | 99.9 | 100.0 |
| glue | 99.4 | 95.7 | 99.6 | 100.0 | 99.3 | 100.0 | 100.0 | 99.8 | 100.0 |
| holepuncher | 52.8 | 82.0 | 85.8 | 49.0 | 71.8 | 92.1 | 99.9 | 98.0 | 99.4 |
| iron | 98.3 | 98.9 | 97.9 | 78.0 | 83.2 | 97.0 | 99.7 | 98.9 | 99.3 |
| lamp | 97.5 | 99.3 | 97.9 | 73.0 | 62.3 | 95.3 | 99.8 | 97.8 | 99.9 |
| phone | 87.7 | 92.4 | 90.8 | 79.0 | 78.8 | 92.8 | 99.5 | 98.5 | 99.4 |
| average | 88.6 | 86.3 | 89.9 | 79.0 | 73.7 | 94.3 | 99.4 | 98.1 | 99.5 |

| Object | PVNet | Hinterstoisser | Michel | PoseCNN | DenseFusion | ANDC | Ours |
|---|---|---|---|---|---|---|---|
| ape | 15.8 | 81.4 | 80.7 | 76.2 | 73.2 | 68.4 | 79.4 |
| can | 63.3 | 94.7 | 88.5 | 87.4 | 88.6 | 92.6 | 89.7 |
| cat | 16.6 | 55.2 | 57.8 | 52.2 | 72.2 | 77.9 | 80.7 |
| driller | 65.6 | 86.0 | 94.7 | 90.3 | 92.5 | 95.1 | 94.4 |
| duck | 25.2 | 79.7 | 74.4 | 77.7 | 59.6 | 62.1 | 76.9 |
| eggbox | 50.1 | 65.5 | 47.6 | 72.2 | 94.2 | 96.0 | 96.9 |
| glue | 49.6 | 52.1 | 73.8 | 76.7 | 92.6 | 93.5 | 95.5 |
| holepuncher | 39.6 | 95.5 | 96.3 | 91.4 | 78.7 | 83.6 | 80.8 |
| average | 40.7 | 76.3 | 76.7 | 78.0 | 81.4 | 83.6 | 86.8 |

| Noise Range (mm) | 0.0 | 5.0 | 10.0 | 15.0 | 20.0 | 25.0 | 30.0 |
|---|---|---|---|---|---|---|---|
| Accuracy | 99.5% | 99.5% | 99.4% | 99.3% | 99.2% | 99.1% | 99.1% |

| Object | DenseFusion (per-pixel) | DenseFusion (iterative) | Ours (per-rs) | Ours (per-mo) | Ours (iter-rs) | Ours (iter-mo) |
|---|---|---|---|---|---|---|
| ape | 79.5 | 92.3 | 95.1 | 95.6 | 99.0 | 98.0 |
| benchvise | 84.2 | 93.2 | 97.5 | 97.6 | 99.9 | 99.1 |
| camera | 76.5 | 94.4 | 99.0 | 98.9 | 99.1 | 98.9 |
| can | 86.6 | 93.1 | 97.4 | 97.2 | 98.7 | 98.7 |
| cat | 88.8 | 96.5 | 98.6 | 98.6 | 99.6 | 99.4 |
| driller | 77.7 | 87.0 | 97.1 | 97.0 | 100.0 | 99.3 |
| duck | 76.3 | 92.3 | 97.5 | 97.4 | 99.2 | 99.1 |
| eggbox | 99.9 | 99.8 | 99.9 | 99.8 | 100.0 | 99.4 |
| glue | 99.4 | 100.0 | 99.8 | 99.8 | 100.0 | 99.5 |
| holepuncher | 79.0 | 92.1 | 98.0 | 97.6 | 99.4 | 98.9 |
| iron | 92.1 | 97.0 | 98.9 | 99.0 | 99.3 | 99.4 |
| lamp | 92.3 | 95.3 | 97.8 | 97.8 | 99.9 | 99.6 |
| phone | 88.0 | 92.8 | 98.5 | 98.4 | 99.4 | 99.2 |
| average | 86.2 | 94.3 | 98.1 | 98.0 | 99.5 | 99.1 |

| | ResNet152 + PSPNet | ResNet101 + PSPNet | ResNet50 + PSPNet | ResNet34 + PSPNet | ResNet18 + PSPNet | MobileNetV2 + PSPNet |
|---|---|---|---|---|---|---|
| Space (MB) | 376 | 313.6 | 237.8 | 133.9 | 93.6 | 49.3 |
| Run-time (ms/frame) | 243 | 161 | 120 | 91 | 75 | 45 |
| Train-time (h/epoch) | 3.1 | 2.5 | 2.1 | 1.09 | 0.65 | 0.35 |
| Parameters | 184,111,680 | 152,916,544 | 115,036,736 | 63,083,072 | 42,900,416 | 24,542,336 |
| Accuracy | 99.5% | 99.5% | 99.5% | 99.4% | 99.5% | 99.1% |
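The trade-off in the backbone comparison table above can be made explicit with a little arithmetic. The sketch below copies three columns from that table and computes the relative storage, parameter count, speed, and accuracy of one backbone against another. The dictionary layout and the helper name `relative_to` are hypothetical, introduced only for this illustration.

```python
# Values copied from the backbone comparison table above (each paired with PSPNet).
backbones = {
    "ResNet152": {"space_mb": 376.0, "runtime_ms": 243.0,
                  "params": 184_111_680, "accuracy": 99.5},
    "ResNet18": {"space_mb": 93.6, "runtime_ms": 75.0,
                 "params": 42_900_416, "accuracy": 99.5},
    "MobileNetV2": {"space_mb": 49.3, "runtime_ms": 45.0,
                    "params": 24_542_336, "accuracy": 99.1},
}


def relative_to(name: str, baseline: str) -> dict:
    """Compare backbone `name` against `baseline` (hypothetical helper)."""
    a, b = backbones[name], backbones[baseline]
    return {
        "space_ratio": a["space_mb"] / b["space_mb"],     # < 1 means smaller on disk
        "param_ratio": a["params"] / b["params"],         # < 1 means fewer parameters
        "speedup": b["runtime_ms"] / a["runtime_ms"],     # > 1 means faster per frame
        "accuracy_drop": round(b["accuracy"] - a["accuracy"], 1),  # percentage points
    }


vs_resnet18 = relative_to("MobileNetV2", "ResNet18")
vs_resnet152 = relative_to("MobileNetV2", "ResNet152")

for label, result in (("vs ResNet18", vs_resnet18), ("vs ResNet152", vs_resnet152)):
    print(label, {key: round(value, 3) for key, value in result.items()})
```

Read this way, the MobileNetV2 variant uses about half the storage of the ResNet18 variant and runs about 1.7 times faster per frame, at a cost of 0.4 percentage points of accuracy.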

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zuo, L.; Xie, L.; Pan, H.; Wang, Z. A Lightweight Two-End Feature Fusion Network for Object 6D Pose Estimation. Machines 2022, 10, 254. https://doi.org/10.3390/machines10040254
