Abstract

During actual operations, Automatic Guided Vehicles (AGVs) inevitably encounter overexposed or shadowed areas and unclear or even damaged guide wires, all of which interfere with guide wire identification. This paper therefore aims to overcome the shortcomings of existing technology at the software level. Firstly, a Fast Guide Filter (FGF) is combined with a two-dimensional gamma function with variable parameters, and an image preprocessing algorithm for complex illumination environments is designed to remove the interference of illumination. Secondly, an ant colony edge detection algorithm is proposed, and the guide wire is accurately extracted by secondary screening combined with the guide wire characteristics; a variable universe Fuzzy Sliding Mode Control (FSMC) algorithm is designed as a lateral motion control method to realize accurate tracking of the AGV. Finally, an experimental platform is used to comprehensively verify the series of algorithms designed in this paper. The experimental results show that the maximum deviation can be limited to 1.2 mm, and the variance of the deviation is less than 0.2688 mm².

1. Introduction

In recent years, with the development of vision-guided Automatic Guided Vehicle (AGV) systems, fast and accurate guide wire detection has attracted extensive research attention [1,2]. Guide wire detection is usually realized with images captured by a camera installed on the vehicle; the collected image is then processed to extract meaningful information, such as the position, road marks, road boundary, and guidance direction. As the most basic traffic sign, the guide line boundary is the most important constraint on AGV driving. If the technology for recognizing guide lines can be improved, the extraction of the other information becomes easier to solve [3]. In addition, the identification and extraction of guide wires can not only provide motion guidance for AGVs, but can also be applied to the active safety control systems of manned vehicles [4]. However, with nonuniform pavement structures, damaged and stained guide wires, and changes in light, the detection of guide wires has become more and more difficult.

The identification technology of guide wires is mainly divided into three steps: image preprocessing, guide wire extraction, and navigation deviation calculation. Many scholars have carried out research on image processing under complex lighting conditions. Xing and other scholars proposed an illumination adaptive image segmentation method based on a relaxed constraint support vector machine (SVM) classifier and a kernel function to distinguish the original color features and illumination artifacts of road images. Different illumination regions were successfully partitioned and processed accordingly. However, this research method is highly dependent on color, and it is necessary to establish accurate and complete SVM classification learning samples in advance [5]. Wang designed an adaptive image enhancement algorithm based on Retinex theory, extracted the illumination component through the weighted sum of a multiscale Gaussian function, and finally used postprocessing for image enhancement. However, the edge preserving ability of Gaussian filter is very poor, so it cannot be used directly for lead extraction [6]. Huang proposed an adaptive image enhancement method based on chicken swarm optimization. The design objective function is the gray information entropy of the image, and the image enhancement problem is transformed into the problem of obtaining the optimal solution of the objective function [7]. This method is similar to many optimization algorithms. The continuous optimization and adjustment steps greatly reduce the real-time performance, so it is difficult to apply the algorithm to AGV. Liu and others extracted the illumination component through a multiscale Gaussian function, used the gamma function with fixed parameters to reasonably adjust the brightness value according to the uneven illumination area, and finally completed the adaptive brightness correction of the uneven illumination image. 
The algorithm has high real-time performance, but the edge preservation ability of Gaussian filtering is very poor; for guide wire recognition, the preservation of edge details takes precedence over the preservation of color. Therefore, the simple use of a multiscale Gaussian function does not even perform as well as a low-scale Gaussian function alone [8]. At the same time, the parameters of the two-dimensional gamma function are fixed, which makes the image enhancement effect inconsistent under different lighting conditions. Shun and other scholars proposed a physical illumination model. As long as the parameters in the model are correctly estimated, the low-illumination image can be directly restored by solving the model, but the result depends on the accuracy of parameter estimation. At the same time, the algorithm has certain limitations for generating pseudocolor images in extremely dark areas [9]. Oh estimated the reflectivity through the brightness channel, used a nonlinear reflectivity reconstruction function to adaptively control the contrast, and reconstructed the brightness channel to reduce the number of halo artifacts in the processed image, but the applicability of the model needs to be further verified [10].

The control problem of path lateral tracking can be divided into two parts: the selection of preview points and lateral motion control. The driver preview model was first proposed by academician Guo. The driver’s awareness was expressed by establishing the driver’s preview model, and finally an in-loop controller considering the driver’s input was formed. Ding and other scholars established the driver model according to the optimal curvature under single-point preview. After selecting a preview point according to the vehicle speed, they obtained the optimal curvature to find the optimal path approaching the preview point [11]. Dong modified the steering wheel angle based on the traditional optimal preview driver model and designed an improved preview driver model [12]. Ren and other scholars established the vehicle lateral deviation dynamic and a yaw angle error model based on single-point preview [13]. Most studies, including the above studies, use the method based on vehicle speed and preview distance to calculate the preview point, but do not describe in detail how to find a reasonable preview point on the specified path—only using the preview point for subsequent motion control. At the same time, single-point preview has certain limitations. When the current attitude angle deviation of the vehicle is too large or the path curvature is too large, it is difficult to find a suitable preview point. At present, there is little research on multipoint preview. Zhao and others selected multiple preview points at equal intervals after selecting the first preview point; Xu and other scholars from the University of Michigan [14] proposed a path tracking algorithm based on optimal preview, introduced the multipoint preview road curvature in the finite time window into the augmented state vector, and reconstructed the nonlinear optimal control problem into an augmented LQR problem, but did not introduce the calculation of preview points in detail [15]. 
The lateral control scheme of path tracking can be based on a geometric model, a kinematic model, or a dynamic model. The geometric model relies on geometric relationships such as the relationship between vehicle attitude and position and Ackermann steering. The kinematic model further considers the motion equations of the vehicle, but does not involve the physical properties of the vehicle or the forces acting on it. In short, path tracking control based on a geometric or kinematic model ignores the dynamic characteristics of the vehicle system and has a limited scope of application. Therefore, to obtain a more accurate tracking control effect, the dynamic characteristics of the vehicle must be considered when designing the path tracking control, so that the vehicle can be tracked accurately under large curvature. At present, the algorithms applied on the basis of a dynamic model mainly include PID control [16,17,18], fuzzy control [19,20,21], sliding mode control [22,23], and model predictive control [24,25,26]. Many authors have also applied PID and LQR to the control of quadrotors, and the experimental results have shown that PID has good real-time performance but poor robustness, while LQR has better robustness and a small steady-state error, but suffers from delay [27,28]. Each algorithm has its own advantages; model predictive control, especially, has been widely used [29]. Although sliding mode control does not need an accurate dynamic model and uses nonlinear theory to deal effectively with multidimensional complex coupling problems, the chatter phenomenon hinders its further application. Therefore, many scholars have studied how to suppress chatter, and many use neural networks to adaptively approximate the uncertainty, so as to optimize the controller [30,31].
However, many deep learning algorithms, including neural networks, need a large number of accurate and reasonable training datasets, and the datasets have to be obtained by other algorithms. Chen and Zhang combined fuzzy control and sliding mode control, and adjusted the gain coefficient of switching term by using fuzzy rules, so as to achieve better control [32,33]. In addition to the improvement of the chatter phenomenon, we must focus on the analysis of its self-adaptability—that is, fuzzy control can change the parameters in SMC, which greatly improves the adaptability to different working conditions, and can achieve better control through the adjustment of parameters by fuzzy rules under different working conditions.

2. Establishment of Light Pretreatment Model

The main purpose of image preprocessing is to remove irrelevant information that interferes with the extraction of information of interest, restore the original information of the region of interest in the image, enhance the detectability of useful information, and improve the reliability of information matching, feature recognition, and region extraction. Generally, the main purpose of image preprocessing is to filter out all kinds of noise that may exist in the image to make the processed image smoother and clearer. However, the purpose of image preprocessing in this paper is to correct the image brightness, darken the area significantly higher than the average brightness, and brighten the area significantly lower than the average brightness, so as to get rid of the influence of light and make the final image brightness more uniform.

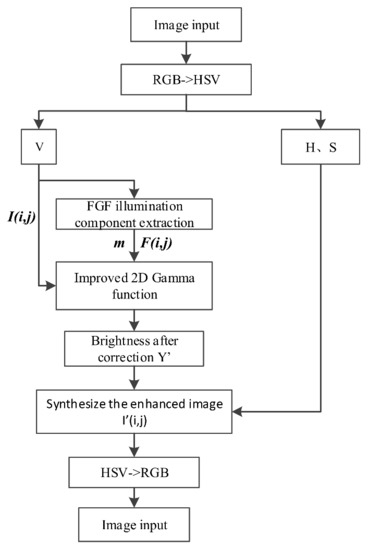

The image preprocessing process in a complex lighting environment is shown in Figure 1. When the system works, a camera with a frame rate of 30 fps continuously provides the image source as the system input. When the image processor receives an image, it first extracts the region of interest (ROI) according to the preview distance to avoid processing useless regions and thus speed up processing. In practice, the size of the ROI depends on factors such as camera angle and height. A larger ROI carries more information, but it also lowers the real-time performance, so the computing power of the processor should be considered when selecting the ROI. Image preprocessing is then carried out. Inspired by the Retinex algorithm, and in order to solve the problem that uneven illumination in the ROI interferes with the extraction, an image correction algorithm based on fast guided filtering and a variable-scale two-dimensional gamma function is proposed. Because only the HSV color space model provides a brightness component that meets the requirements of this paper, with parameters related to the transmittance or reflectance of the object, the color space must be transformed. Firstly, the RGB color space is converted to the HSV color space, and the lightness component V is adjusted according to the illumination distribution. According to the distribution of the illumination component, the overly bright or dark parts of the image are corrected by a variable-scale two-dimensional gamma function, so that the processed image is close to the image under uniform illumination. Finally, the adjusted V component is combined with the H and S components again, and the processed image is obtained for subsequent guide wire extraction.

Figure 1. Image preprocessing flowchart.

Inspired by the Retinex algorithm and introducing the guided filter with faster processing speed and stronger edge preservation to replace the traditional Retinex Gaussian filter, this paper designs an illumination component extraction algorithm based on a fast guided filter. I is the guide image and p is the input image. The input image p is processed to obtain the output image, so that the gradient of the output image is similar to the guide image, while the gray or brightness is similar to the input image. The algorithm mainly consists of the following steps:

- (1) Calculate the average value of each window of the guide image I and the filtered input image p and store it in meanofi and meanofp. In this paper, the guide image is the same as the filtered input image. In this case, guided filtering is an edge-preserving filtering operation, which better ensures that the edge details remain in their original state while the illumination component is extracted.

- (2) Calculate the variance of I and the covariance of I and p and store them in varofI and covofIp for the subsequent least-squares solution.

- (3) Solve for a and b by least squares to minimize the reconstruction error between the output image and the original image, i.e., a = covofIp / (varofI + ε) and b = meanofp − a · meanofi. The regularization parameter used in this paper is ε = 0.05. Where the local variance is much less than ε, the window is regarded as a flat region and smoothed; where it is much larger, the gradient is maintained.

- (4) Calculate the mean value of each window in a and b and save it in meanofa and meanofb. The local window radius r used in this paper is 16 and the subsampling ratio is 4.

- (5) Compute q = meanofa .* I + meanofb, where q is the illumination component finally extracted.

The above algorithm repeatedly computes the mean value over each window of the image. Here, the box filtering method is used [34].
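The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the paper: it assumes the V component has been scaled to [0, 1] (so that ε = 0.05 is on a comparable scale), it omits the subsampling step of the fast variant, and the function names `box_mean` and `guided_filter` are chosen here for illustration.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window using an integral image.
    Windows are clipped at the image border."""
    h, w = img.shape
    integral = np.zeros((h + 1, w + 1), dtype=np.float64)
    integral[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    # Window bounds for every row and column, clipped to the image.
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    total = (integral[y1][:, x1] - integral[y0][:, x1]
             - integral[y1][:, x0] + integral[y0][:, x0])
    count = (y1 - y0)[:, None] * (x1 - x0)[None, :]
    return total / count

def guided_filter(I, p, r=16, eps=0.05):
    """Guided filter following steps (1)-(5); I is the guide, p the input."""
    I = np.asarray(I, dtype=np.float64)
    p = np.asarray(p, dtype=np.float64)
    mean_I = box_mean(I, r)                          # step (1)
    mean_p = box_mean(p, r)
    var_I = box_mean(I * I, r) - mean_I ** 2         # step (2)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    a = cov_Ip / (var_I + eps)                       # step (3)
    b = mean_p - a * mean_I
    mean_a = box_mean(a, r)                          # step (4)
    mean_b = box_mean(b, r)
    return mean_a * I + mean_b                       # step (5)
```

Calling `guided_filter(v, v)` with the same array as guide and input corresponds to the self-guided case described in step (1).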

After the light component is extracted from the original image, it needs to be adjusted according to the brightness of the light at each pixel—that is, with different degrees of brightness correction according to the brightness of the light component in each region, so as to get rid of the influence of the light component. Gamma correction is a nonlinear global correction method. Nowadays, many scholars use a two-dimensional gamma function for image correction to effectively correct the uneven brightness of an image. On this basis, this paper makes some improvements and extensions, so that the adjustment force depends on the brightness of the illumination component, in order to improve the adaptability and rationality of the algorithm.

In general, gamma correction takes the following standard form:

$$V_{out}(i,j) = 255\left(\frac{V_{in}(i,j)}{255}\right)^{\gamma}$$

where $V_{in}$ is the input image (the original V component), $V_{out}$ is the output image, and γ is the control parameter. The image darkens as a whole when γ > 1, is lightened as a whole when γ < 1, and the correction has no effect when γ = 1. Scholars often use $\gamma = 2^{(f(i,j)-128)/128}$ to calculate γ, where f(i, j) is the illumination component. The gray value of white is 255 and the gray value of black is 0; the reason why 128 is taken as the mean value of the illumination component and 2 as the base has not been clearly explained by scholars, and both are usually chosen from experience.

Based on the above research, this paper makes some adjustments to the traditional two-dimensional gamma function and establishes a relationship with the gray value distribution characteristics of the image to be processed, so that the image enhancement algorithm designed in this paper can have different adjustment intensity and strong environmental adaptability under different conditions. The value of γ is calculated as follows:

$$\gamma = \alpha^{\frac{f(i,j)-m}{m}}$$

where m is the luminance mean value of the illumination component f(i, j). Using m instead of the fixed value 128 represents the mean value of the current illumination component rather than the absolute mean value in the theoretical sense. The other change is the use of a variable scale α in place of the fixed base 2; the calculation of α is derived as follows.

Take the expected value of the improved gamma function formula, which should be equal to 128 in theory. The derivation is as follows:

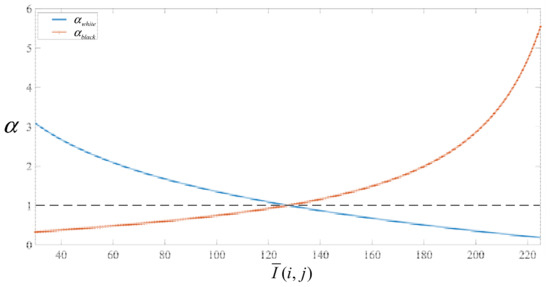

Two limit cases are taken for analysis; the illumination component F(i, j) is all black or all white. At this time, two limit cases can be obtained for α values:

The two equations are represented by curves, as shown in Figure 2. Considering that the gray value of an actual image is not greater than 225 or less than 25, 25 and 225 are selected as the lower and upper limits of the interval of the independent variable (the algorithm cannot be applied to extremely bright or dark scenes beyond this range). When the average gray value of the original image is 128, the two curves coincide. In this paper, the curve above the dotted line is taken as α: when the mean value of illumination is less than 128, the $\alpha_{white}$ curve is used; otherwise, the $\alpha_{black}$ curve is used. The advantage is that α is tied to the average gray value of the original image, so that the intensity of the image correction changes with the content of the original image. Images that are too bright or too dark are adjusted significantly, while more balanced images remain almost unchanged.

Figure 2. The α value curve.
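To make the brightness correction concrete, the following is a minimal sketch of a variable-parameter gamma correction. It assumes the exponent takes the form γ = α^((f − m)/m) with α > 1, so that regions brighter than the mean illumination are darkened and darker regions are brightened, as the text describes. The function name `adaptive_gamma` is hypothetical, and α is passed in as a plain number because its closed-form expression is not reproduced here.

```python
import numpy as np

def adaptive_gamma(v, illum, alpha):
    """Correct the V channel `v` using the illumination component `illum`.

    v, illum : float arrays with values in [0, 255], same shape
    alpha    : base of the exponent (assumed > 1; an input, not derived here)
    """
    v = np.asarray(v, dtype=np.float64)
    illum = np.asarray(illum, dtype=np.float64)
    m = illum.mean()                       # mean of the illumination component
    if m <= 0:
        raise ValueError("illumination component must have a positive mean")
    gamma = alpha ** ((illum - m) / m)     # > 1 where bright, < 1 where dark
    return 255.0 * (v / 255.0) ** gamma
```

With uniform illumination the exponent is 1 everywhere and the image is returned unchanged, which matches the statement that balanced images almost maintain their original state.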

3. Extraction Method of Guide Wire against Line Interference

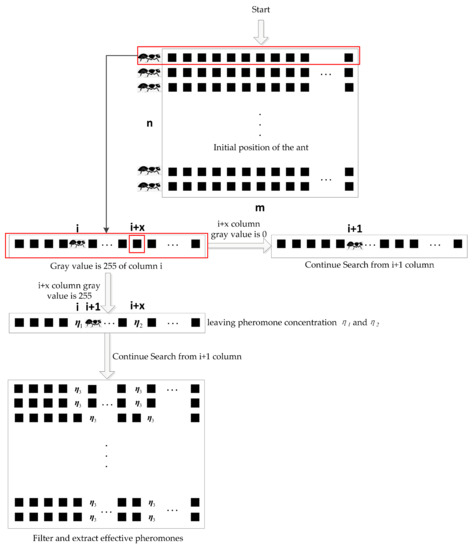

An ant colony algorithm is designed to extract the edge of guide wire. The pheromone released when the ant colony passes through the path is used as the judgment basis of edge points, screened through volatilization and aggregation mechanism, and the finally retained pheromone is extracted as the guide edge. The specific steps of the ant colony-like algorithm designed in this paper are as follows:

- (1) Initial position. The number of pixel rows is determined from the resolution of the collected image, and the number of ants is set equal to the number of rows; that is, exactly one ant is placed in each row. The initial position of each ant is the leftmost or rightmost column of the image. This paper takes the leftmost column as an example.

- (2) Transfer. Each ant can only walk to the right. When it passes the first point with a gray value of 255 (column I), it records the column number I and leaves pheromone concentration η1. If there is no pixel with a gray value of 255 in column I + X, the pheromone in column I is volatilized and the search continues to the right from column I + 1. If there is a point with a gray value of 255 in column I + X, its column number is recorded and pheromone η2 is left there; at the same time, the pheromone concentration in column I is set to η2 and the search continues to the right, with I + 1 as the starting column. When an ant passes through pheromone η2, it continues to judge the gray value X pixels away until it exceeds the range of the image matrix. X is related to the width of the guide wire, i.e., the number of pixels occupied by the guide wire in each row of the collected image. Small distortions of the guide wire in the image, or the perspective effect that makes it look narrower when far and wider when near, are handled by a tolerance band around X, and the specific value is obtained through calibration.

- (3) Share. If the difference between the pheromone column numbers left by ants in adjacent rows is less than 3, the pheromone concentrations η1 and η2 are set to η3. If the pheromone concentration is η3 and the number of rows in a blank area is less than y, the pheromone concentration of the blank pixels in the same column is set to η3 so as to form a complete line, from which a complete closed contour is formed later. The gray value of the pixels holding η3 is set to 255 and the rest is set to 0, so as to distinguish the extracted line from the background. This step mainly removes scattered interference points while retaining arcs; y is related to the degree of damage and staining of the guide wire on the actual site, and the specific value is obtained through calibration.
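The transfer and share steps can be illustrated with a simplified row scan in plain Python. This sketch makes several assumptions that are not in the paper: the input is a binary edge image given as a list of rows, the pheromone levels are reduced to "kept" or "dropped", the gap-filling over fewer than y blank rows is omitted, and the names `scan_rows`, `share`, `width`, and `tol` are illustrative.

```python
def scan_rows(binary, width, tol=0):
    """Transfer step: one 'ant' per row walks from left to right.

    For each row, return (left, right) for the first 255 pixel that has another
    255 pixel `width` columns to its right (within +/- tol), else None.
    Assumes width - tol >= 1.
    """
    marks = []
    for row in binary:
        found = None
        n = len(row)
        for col in range(n):
            if row[col] != 255:
                continue
            lo = col + width - tol
            hi = min(col + width + tol, n - 1)
            partner = next((c for c in range(lo, hi + 1) if row[c] == 255), None)
            if partner is not None:        # pheromone kept on both edges
                found = (col, partner)
                break
            # otherwise the pheromone evaporates and the ant keeps walking
        marks.append(found)
    return marks

def share(marks, max_shift=3):
    """Share step: keep a row's mark only if an adjacent row has a mark whose
    left edge is fewer than `max_shift` columns away."""
    kept = []
    for i, mark in enumerate(marks):
        neighbours = [marks[j] for j in (i - 1, i + 1) if 0 <= j < len(marks)]
        supported = mark is not None and any(
            nb is not None and abs(nb[0] - mark[0]) < max_shift
            for nb in neighbours
        )
        kept.append(mark if supported else None)
    return kept
```

A row whose only candidate pair lies far from the pairs in neighbouring rows is discarded by `share`, which corresponds to removing scattered interference while keeping a continuous line.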

All of the above steps are shown in Figure 3.

Figure 3. Flowchart of lead extraction based on ant-like algorithm.

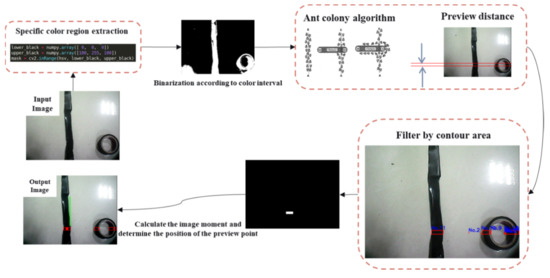

The above algorithm is only a preliminary, rough extraction of all lines, including the edges of the guide wire. When there are stray lines on the ground with a width similar to that of the guide wire, the above algorithm alone cannot ensure that only the guide wire is extracted and that the other interfering lines are eliminated. Therefore, based on the above algorithm, this paper carries out a secondary screening of guide wires, i.e., it limits the contour area to a specific range to reject lines that resemble the guide wire. The specific steps are as follows: determine the upper and lower limits of the y-axis coordinates from the preview distance over all the obtained edges and take this range as the two horizontal edges of the contour area; take the edge lines preliminarily extracted by the ant colony-like algorithm in the previous step as the two vertical edges of the contour; and then filter according to the contour area. Firstly, the NumPy library is used to count the number of contour areas, and then the OpenCV library is used to calculate the area of each region extracted by the ant colony-like algorithm. Only a contour whose area lies within a certain interval is regarded as the guide area, and the guidance parameters are calculated from it in the next step. The interval is determined by the width of the guide wire.
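The area-based screening can be sketched as follows. The paper performs this step with NumPy and OpenCV; to keep the example dependency-free, this sketch swaps in a plain 4-connected flood fill and uses the pixel count of each region as its area. The function names and the area limits are illustrative assumptions, and the limits would in practice come from the calibrated guide wire width and the ROI height.

```python
from collections import deque

def connected_regions(mask):
    """Return the 4-connected regions of non-zero pixels as lists of (row, col)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            pixels = []
            while queue:                      # breadth-first flood fill
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(pixels)
    return regions

def screen_by_area(mask, min_area, max_area):
    """Keep only the regions whose pixel area lies in [min_area, max_area]."""
    return [r for r in connected_regions(mask) if min_area <= len(r) <= max_area]
```

Regions that are too small (scattered noise) or too large (wide stains or markings) fall outside the interval and are rejected before the guidance parameters are computed.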

In short, the lead extraction process is divided into two parts after binarization. The first part is the preliminary extraction by the ant colony algorithm, and the second part is the secondary screening based on the contour area method. The complete steps of extracting the entire guide wire are shown in Figure 4.

Figure 4. Overall flow of lead extraction algorithm.

4. Calculation of Guidance Deviation

After the guide wire is extracted, the guidance parameters need to be extracted to guide the AGV. At present, most scholars use inverse perspective transformation and geometric derivation to obtain the actual deviation value. Although this method can obtain the actual deviation more accurately, it demands considerable computing power and is not suitable for low-cost AGVs. Therefore, this paper proposes a method to calculate the guidance deviation by using the image moment.

Image information description and extraction is one of the key problems with image recognition. Simpler and more representative data is more suitable as description data. With the continuous development of image processing technology, image-invariant moment has gradually become the most commonly used description means in academic and industrial scenes. It has been widely used in many fields, such as pattern recognition, target classification, target recognition, and so on. Different kinds of geometric features in images can usually be described by image-invariant moments.

For each pixel in an image, its position in the image is fixed, so its position can be represented by two-dimensional coordinates (x, y), and the gray image can be represented by a two-dimensional gray density function. Hu first proposed the concept of the Hu moment invariant in 1961. The Hu moment invariant is a highly concentrated image feature with translation, scale, and rotation invariance. The principle of the Hu moment is as follows:

The (p + q)-order geometric moment $m_{pq}$ and central moment $\mu_{pq}$ of an image of size M × N are, respectively:

$$m_{pq} = \sum_{i=1}^{M}\sum_{j=1}^{N} i^{p} j^{q} f(i,j)$$

$$\mu_{pq} = \sum_{i=1}^{M}\sum_{j=1}^{N} (i-\bar{i})^{p} (j-\bar{j})^{q} f(i,j)$$

where f(i, j) represents the gray value of the image at pixel (i, j); $m_{00}$ is the zeroth-order moment, the sum of the gray levels of the target image area, which reflects the mass of the target area. The first-order moments $m_{10}$ and $m_{01}$ are used to determine the gray center of the image, so the centroid coordinates can be obtained as $\bar{i} = m_{10}/m_{00}$ and $\bar{j} = m_{01}/m_{00}$. The central moment $\mu_{pq}$ represents the distribution of the overall gray scale of the image relative to its gray center of mass. Therefore, whether or not the area contour is regular, the centroid coordinates can be used to indicate the gray barycenter of the guide wire.

After the image moment is used to obtain the gray center of the guide wire, the difference is determined via the absolute center coordinates of the image (when the resolution of the image captured by the camera is constant and the image width is constant, the absolute center position coordinates are half of the image width). Finally, the guidance deviation in the image is obtained.
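As an illustration of this step, the sketch below computes the gray centroid from the zeroth- and first-order moments and subtracts half of the image width to obtain the deviation in pixels. It is a minimal example with assumed names (`gray_centroid`, `image_deviation`); it treats the column index as the lateral direction and returns a signed value, positive when the guide wire lies to the right of the image center.

```python
import numpy as np

def gray_centroid(img):
    """Return the (row, col) gray centroid m10/m00, m01/m00, or None if empty."""
    img = np.asarray(img, dtype=np.float64)
    m00 = img.sum()                          # zeroth-order moment
    if m00 == 0:
        return None                          # no guide wire pixels in the image
    rows, cols = np.indices(img.shape)
    m10 = (rows * img).sum()                 # first-order moment along rows
    m01 = (cols * img).sum()                 # first-order moment along columns
    return m10 / m00, m01 / m00

def image_deviation(img):
    """Signed lateral deviation of the centroid from the image center, in pixels."""
    img = np.asarray(img)
    centroid = gray_centroid(img)
    if centroid is None:
        return None
    return centroid[1] - img.shape[1] / 2.0
```

The result is still an image-space quantity and would have to be converted to a physical deviation, for example through the mapping table discussed in this section.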

Since bird’s-eye view transformation is not performed in this article, the actual deviations corresponding to different y-coordinates of the guidance deviation in the image are different. Therefore, it is necessary to establish a mapping relationship table between the image deviation and the actual deviation to convert the image deviation into the actual deviation.

5. Lateral Motion Controller

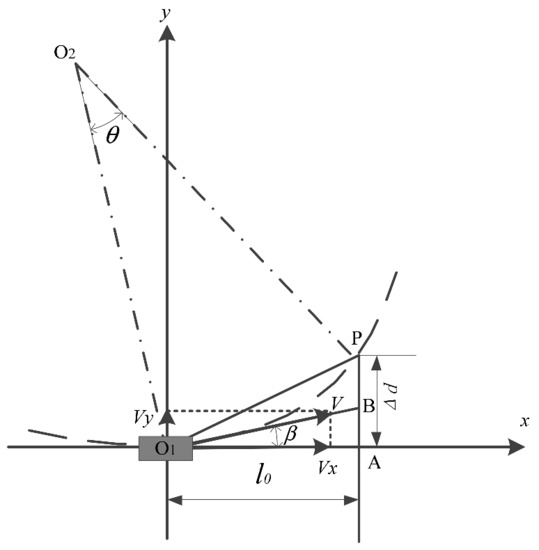

Assuming that the vehicle is moving in a steady state under nonlimiting conditions, an appropriate preview distance is selected first, and the image processing module then calculates the distance (lateral deviation) between the preview point and the corresponding point on the center of the desired trajectory. Next, the optimal expected front wheel angle is derived from the obtained lateral deviation and the vehicle dynamics model, and finally the required front wheel angle (control quantity) calculated by the lateral motion controller is used to steer the vehicle along the desired path. This process is the optimal preview control theory. Taking into account the low-speed motion characteristics of the AGV studied in this article, the motion of the vehicle over a short future period can be regarded as steady-state motion under nonlimiting conditions, as shown in Figure 5.

Figure 5. The vehicle track under steady circular motion.

As shown in Figure 5, xO1y is the vehicle coordinate system, point O1 is the current vehicle centroid position, l0 is the preview distance, point A is the x-axis coordinate of the preview position determined according to the preview distance, P is the combination of the x coordinate of point A and the image position of the preview point determined by the y-coordinate obtained after recognition, β is the side slip angle of the vehicle’s center of mass, Δd is the lateral deviation of the vehicle and the target trajectory centerline, and O2 is the intersection of the vertical line of the resultant velocity v and the vertical line of O1P.

Assuming that within time tp, the car makes a steady circular motion and travels from the starting point O1 to the target point P at a yaw rate, its trajectory can be regarded as an arc with O2 as the center of the arc and R as the radius O1P.

The speed v of the vehicle can be decomposed into longitudinal speed vx and lateral speed vy:

If tp is the preview time, then the longitudinal distance traveled by the car can be expressed as follows:

In the steady state of the car, the longitudinal speed vx of the car can be considered to be much greater than the lateral speed vy [34]. Under this condition, the vehicle can be regarded as traveling along a circular arc with a radius of R. Under this condition, the steady-state yaw rate wd is shown by the following formula [35]:

The direction of the vehicle’s total speed v is tangential to the arc, and the values of ∠PO1B and ∠PO2O1 can be obtained according to the geometric relationship:

According to the geometric relationship, the relationship with ∠PGC, the right-angled side PC, and the right-angled side OC can be derived as follows:

Combining the above formulas, the value of the angle θ of rotation around the steering center can be obtained:

Substituting Equation (14) into Equation (10), the ideal yaw rate can be expressed as the following equation:

The vehicle’s center of mass slip angle is the angle between the direction of the vehicle’s center of mass velocity and the front of the vehicle. Since the AGV often travels at low speeds, there is basically no risk of “sideslip, drift”, etc., so the center of mass slip angle can be approximated to 0. Therefore, the ideal yaw rate can be simplified as follows:
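For orientation, the geometry with a zero side slip angle can be worked through directly. This is a sketch based on standard circle geometry and is not claimed to reproduce the simplified equation of the paper: the arc is assumed to leave O1 tangent to the x-axis and to pass through the preview point P = (l0, Δd), and the steady-state yaw rate is taken as v/R. The function name and argument names are illustrative.

```python
def preview_yaw_rate(v, l0, delta_d):
    """Yaw rate of the arc from the origin, tangent to the x-axis, through (l0, delta_d).

    The arc center lies at (0, R), so l0**2 + (delta_d - R)**2 = R**2, which gives
    R = (l0**2 + delta_d**2) / (2 * delta_d). The yaw rate of steady circular
    motion is v / R; the sign follows the sign of delta_d.
    """
    chord_sq = l0 ** 2 + delta_d ** 2
    if chord_sq == 0:
        raise ValueError("preview point coincides with the vehicle position")
    curvature = 2.0 * delta_d / chord_sq     # 1 / R, zero on a straight path
    return v * curvature
```

A zero lateral deviation yields a zero yaw rate, and a larger preview distance l0 yields a gentler response to the same deviation.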

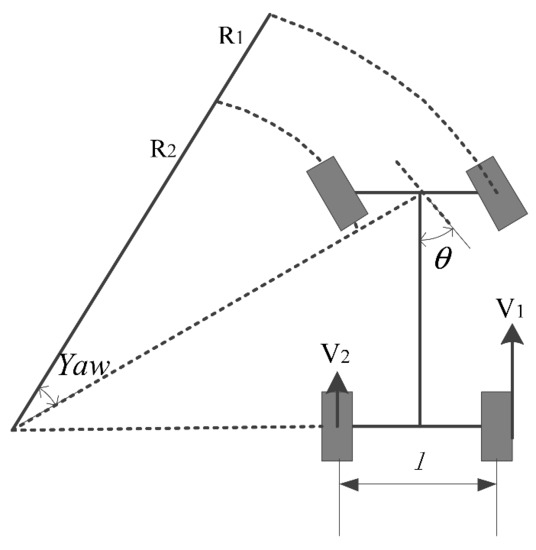

After the desired yaw rate is calculated, the current actual yaw rate needs to be estimated, as shown in Figure 6.

Figure 6. Actual yaw rate estimation.

This article estimates based on the wheel speed difference method, and the final actual yaw rate can be calculated by the following formula:

where v1 is the outer wheel speed, v2 is the inner wheel speed, l is the track, and δf is the actual front wheel rotation angle.

Assuming that the vehicle is steering in a steady state with a small steering angle, the dynamics equations of the vehicle are as follows [36]:

In Equation (18), m represents the mass of the whole vehicle; vx and vy represent the longitudinal speed and lateral speed of the vehicle when driving, respectively; ω represents the yaw rate of the vehicle; lf and lr represent the distance from the front and rear wheels to the center of mass of the vehicle, respectively; Fyf and Fyr represent the lateral force of the front and rear wheels, respectively; and Iz is the moment of inertia at the center of mass of the vehicle. When the vehicle is in a nonlimiting condition, the tire is in the linear region. At this time, the relationship between the lateral force and the tire can be approximately expressed as follows:

The slip angle of the front and rear wheels of the vehicle can be expressed as follows:

The side slip angle of the center of mass when the vehicle is turning at a small angle can be expressed as $\beta \approx v_y / v_x$. Using Equations (18)–(20), we can get an equation for the first-order differential relationship between the yaw rate w and the side slip angle β of the center of mass:

where Cf and Cr are the cornering stiffness of the front and rear wheels, respectively.
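For readers who want to see the structure of such a model, a commonly used textbook form of the linear two-degree-of-freedom (bicycle) model is given below. It is a sketch under an assumed sign convention (positive cornering stiffness, front slip angle $\alpha_f = \delta_f - \beta - l_f\omega/v_x$, rear slip angle $\alpha_r = -\beta + l_r\omega/v_x$, lateral forces $F_{yf} = C_f\alpha_f$ and $F_{yr} = C_r\alpha_r$) and may differ in signs from the equations of the paper.

$$m\,(\dot{v}_y + v_x\omega) = F_{yf} + F_{yr}, \qquad I_z\,\dot{\omega} = l_f F_{yf} - l_r F_{yr}$$

Substituting the linear tire forces and $v_y \approx v_x\beta$ gives

$$\dot{\beta} = -\frac{C_f + C_r}{m v_x}\,\beta + \left(\frac{l_r C_r - l_f C_f}{m v_x^{2}} - 1\right)\omega + \frac{C_f}{m v_x}\,\delta_f$$

$$\dot{\omega} = \frac{l_r C_r - l_f C_f}{I_z}\,\beta - \frac{l_f^{2} C_f + l_r^{2} C_r}{I_z v_x}\,\omega + \frac{l_f C_f}{I_z}\,\delta_f$$

Both equations are linear in β, ω, and the front wheel angle $\delta_f$, which is what allows the yaw rate dynamics to be written as a state equation with a single control input.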

For an uncertain system, when both external disturbance and internal parameter perturbation are considered, the system state equation can be expressed as follows:

where ΔA and ΔB are the parameter perturbations within the system, E(t) is the disturbance including the uncertainty of the system and the external interference, x is the state quantity, and u is the control quantity.

According to Equation (22), we can get:

where

, , , , , .

According to the above formula and combined with the controlled system studied in this article, the state equation is as shown in the following formula:

where f(wr) can be expressed by the side slip angle of the center of mass and the yaw rate:

The gain value of the sliding mode control reaching law is:

where η > 0.

The center of mass slip angle cannot be controlled directly, and when the vehicle is unstable its ideal and actual values usually differ by a large error; the yaw rate, in contrast, reflects the motion of the vehicle as a whole better than the wheel angle does. Therefore, the difference between the actual yaw rate and the ideal yaw rate is selected as the tracking error of the system:

$$e = w_r - w_d$$

where wd is the ideal yaw rate and wr is the actual yaw rate. One can design the sliding surface (switching surface) of the controller as follows:

Differentiating the switching function gives:

The sliding mode control law is designed as follows:

To prove the stability of the initially designed sliding mode controller, the Lyapunov function is chosen as follows:

Its time derivative is:

Substituting the sliding mode control law of Equation (31) into Equation (33), we get:

where η > 0, so the designed control law meets the stability requirements.
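As a minimal illustration of this type of argument, the generic constant-rate reaching law can be checked with the usual quadratic Lyapunov function. The specific choice V = s²/2 and the reaching law \dot s = −η sgn(s) are assumptions for this sketch and are not a reproduction of Equations (31)–(34).

```latex
\begin{aligned}
V &= \tfrac{1}{2}\,s^{2}, \\
\dot V &= s\,\dot s = s\bigl(-\eta\,\operatorname{sgn}(s)\bigr) = -\eta\,|s| \le 0,
  \qquad \eta > 0, \\
t_r &\le \frac{|s(0)|}{\eta} .
\end{aligned}
```

Because \dot V is negative whenever s ≠ 0, the state reaches the sliding surface s = 0 in finite time, bounded by t_r.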

Substituting this into Equation (29) produces the following equation:

where wr and wd can be obtained by formula x and formula y, respectively. Let ; since the vehicle is moving in an approximately uniform circular motion at this time, the yaw angular acceleration is approximately zero, so the control variable, the front wheel angle, can be expressed as follows:

According to the sliding mode control law,

The switching coefficient K(t) determines how fast a moving point that is not on the sliding surface approaches it. The larger K(t) is, the faster the moving point approaches the sliding surface, that is, the faster the control responds; however, a faster approach gives poorer steady-state convergence and causes more chattering. If K(t) is smaller, the moving point approaches the sliding surface more slowly and the dynamic convergence is poorer. The chattering of sliding mode control is mainly caused by the approach term K(t)sgn(s), so the chattering of the sliding mode controller must be limited. In this paper, a fuzzy controller with a variable universe is designed to adjust the switching gain coefficient K(t).
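The trade-off described above can be reproduced with a small numerical experiment. The sketch below integrates only the approach term, ds/dt = −k·sgn(s), with a constant gain k; the step size, initial value, and the two gain values are hypothetical choices for illustration and are not parameters from this paper.

```python
def sign(x):
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def simulate_reaching(k, s0=1.003, dt=0.01, steps=400):
    """Euler-integrate ds/dt = -k * sgn(s) and return the trajectory of s."""
    s = [s0]
    for _ in range(steps):
        s.append(s[-1] - k * sign(s[-1]) * dt)
    return s


def reaching_step(traj, k, dt=0.01):
    """Index of the first sample inside the chatter band |s| < k * dt."""
    return next(i for i, s in enumerate(traj) if abs(s) < k * dt)


def chatter_amplitude(traj, tail=100):
    """Largest |s| over the last `tail` samples, after the surface is reached."""
    return max(abs(s) for s in traj[-tail:])


slow = simulate_reaching(k=2.0)   # small gain: slow approach, small chatter
fast = simulate_reaching(k=10.0)  # large gain: fast approach, large chatter
print("k=2 :", reaching_step(slow, 2.0), chatter_amplitude(slow))
print("k=10:", reaching_step(fast, 10.0), chatter_amplitude(fast))
```

In this discretized setting the residual oscillation around s = 0 is bounded by k·dt, which is why a fixed large gain responds quickly but chatters more, and why adjusting the gain online is attractive.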

According to Equation (36), although the sign function can effectively reject unknown disturbances, it inevitably leads to chatter. FSMC, which has become increasingly common, alleviates this drawback to a certain extent. Compared with various deep learning algorithms such as neural networks, fuzzy control offers good adaptive approximation and does not require precise prior knowledge, which makes it easier to apply widely. Therefore, this paper adopts adaptive fuzzy control based on fuzzification of the switching term to improve the trajectory of the system near the sliding mode surface. This enables the system to adapt to various working conditions and to reject disturbances of different intensities; replacing the discontinuous sign function with a continuous fuzzy approximation effectively reduces chatter.

The variable switching gain designed in this paper uses fuzzy rules to adjust the switching gain according to the relative position and movement trend of the system and the sliding mode surface.

Taking s and ṡ as the fuzzy input quantities and k as the output quantity, the corresponding fuzzy linguistic variables are {NB NM NS ZO PS PM PB}. If sṡ > 0, the sliding mode function and its rate of change have the same sign, and the system tends to move away from the sliding mode surface; in this case, the switching gain k(t) should be increased. If sṡ < 0, the system is already approaching the sliding mode surface, and the switching gain k(t) should be reduced. The magnitude of |sṡ| also needs to be considered when designing the fuzzy rules: when |sṡ| is large, the change in k should also be large, and vice versa.

In the design of a fuzzy controller, the design of fuzzy rules is an important part of determining its performance. This article gives 49 fuzzy rules for the switching gain coefficient k(t) in Table 1:

Table 1.

Fuzzy rule table [37].

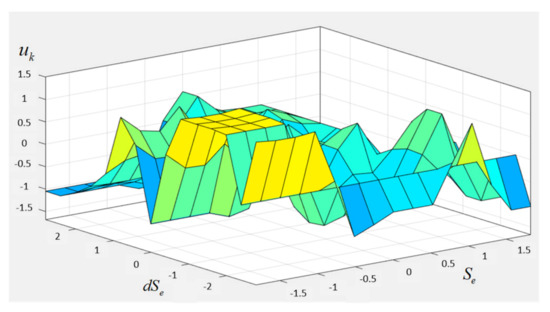

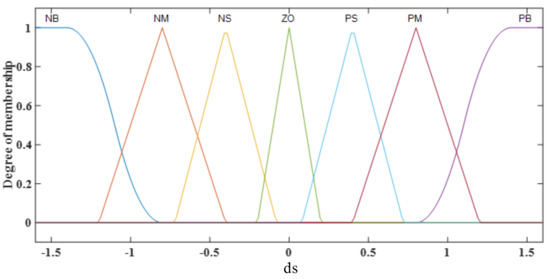

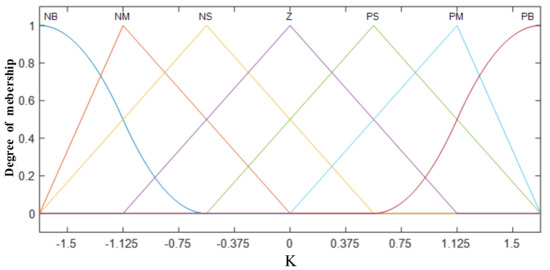

According to the above fuzzy rules, the correspondence between the two input fuzzy sets and the output fuzzy set, together with their value ranges, is shown in Figure 7:

Figure 7.

Corresponding relationship of input and output fuzzy sets of fuzzy control.

This paper uses a product inference engine, a singleton fuzzifier, and a center-average defuzzifier. The output y(x) of the fuzzy system can be written as follows:

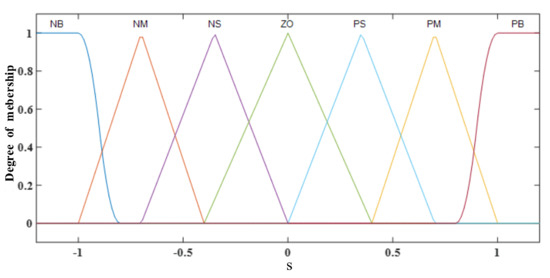

In this section, the switching function s(t) is used as the input of the fuzzy system, where is a fuzzy set, is a fuzzy set is the membership function of si, μNB uses the Z-type membership function, μPB uses the S-type membership function, and all the other fuzzy variables use triangular membership functions. The initial membership functions are shown in Figures 8–10:

Figure 8.

The s membership function diagram.

Figure 9.

The ds membership function diagram.

Figure 10.

K membership function diagram.
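The inference chain named above (singleton fuzzifier, product inference engine, center-average defuzzifier) can be sketched in a few lines. Everything numeric here is an assumption for illustration: the normalized universe [−3, 3], the unit-width triangles, the piecewise-linear shoulders standing in for the Z-type and S-type functions, and in particular the rule consequents, which are a hypothetical stand-in because Table 1 is only available as an image.

```python
# Centres of the seven fuzzy sets NB NM NS ZO PS PM PB on an assumed universe.
CENTERS = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]


def memberships(x):
    """Membership degrees of a crisp input in the seven sets.

    Inputs are clipped to [-3, 3], so NB and PB saturate at the ends; this is
    a piecewise-linear simplification of the Z-type and S-type shapes.
    """
    x = max(-3.0, min(3.0, x))
    return [max(0.0, 1.0 - abs(x - c)) for c in CENTERS]


def rule_output(c_s, c_ds):
    """HYPOTHETICAL rule consequent (singleton centre), not the paper's Table 1.

    It only encodes the stated tendency: same signs of s and ds raise the
    gain, opposite signs lower it, and larger |s * ds| gives a larger change.
    """
    return max(-3.0, min(3.0, c_s * c_ds / 3.0))


def fuzzy_gain_change(s, ds):
    """Center-average defuzzified output for crisp inputs s and ds."""
    num = 0.0
    den = 0.0
    for c_s, m_s in zip(CENTERS, memberships(s)):
        for c_ds, m_ds in zip(CENTERS, memberships(ds)):
            w = m_s * m_ds  # product inference: rule firing strength
            num += w * rule_output(c_s, c_ds)
            den += w
    return num / den


print(fuzzy_gain_change(1.5, 1.5), fuzzy_gain_change(2.0, -1.0))
```

With 7 × 7 = 49 label combinations, the double loop visits the same number of rules as the rule table; only the rules whose two antecedents both have nonzero membership contribute to the output.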

According to the principle of fuzzy control, for a fuzzy system to approximate a changing function with arbitrary accuracy, an infinite number of fuzzy rules would in theory be needed, which is impractical. In addition, the initial universe interval is difficult to choose when the fuzzy universe is set. Therefore, a more accurate approximation can be achieved through adaptive adjustment of the universe while the number of rules and the membership functions remain unchanged.

According to the abovementioned fuzzy controller design, the input and output domains are scaled and adjusted. This paper uses the exponential expansion factor in the function model to adaptively adjust the fuzzy universe of three variables:

where x is the input variable of the controller and λ is the scale factor, which determines the degree of scaling. After repeated trials and adjustments, λ is set to 0.6 for the input universe and 0.3 for the output universe in this article.
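To make the scaling concrete, the sketch below uses one commonly cited exponential form of the expansion factor, α(x) = 1 − λ·exp(−k·x²). This form, the shape parameter k, and the initial half-width of 3 are assumptions for illustration; only the values λ = 0.6 and λ = 0.3 come from the text.

```python
import math


def expansion_factor(x, lam, k=0.5):
    """Assumed exponential factor alpha(x) = 1 - lam * exp(-k * x**2).

    lam in (0, 1) sets how far the universe contracts near x = 0;
    k > 0 (hypothetical value) sets how quickly it re-expands as |x| grows.
    """
    return 1.0 - lam * math.exp(-k * x * x)


def scaled_universe(initial_half_width, x, lam, k=0.5):
    """Return the scaled universe [-alpha * E, alpha * E] for the current x."""
    a = expansion_factor(x, lam, k)
    return (-a * initial_half_width, a * initial_half_width)


# Input universe (lam = 0.6) and output universe (lam = 0.3) at a few inputs.
for x in (0.0, 1.0, 3.0):
    print(x, scaled_universe(3.0, x, lam=0.6), scaled_universe(3.0, x, lam=0.3))
```

Near x = 0 the universe shrinks to (1 − λ) times its initial width, which concentrates the fixed set of rules on small errors, and it returns towards the initial width as |x| grows.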

Due to the introduction of the expansion factor of the input and output domains, the initial domain designed in the previous paragraph needs to be adjusted according to the expansion factor, so that the product of the adjusted domain and the expansion factor is centered on the initial domain.

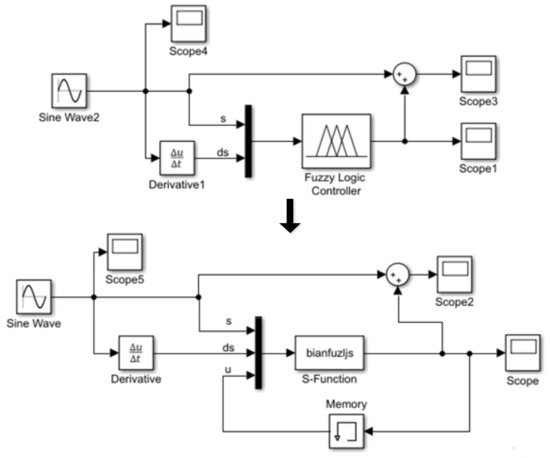

Since the fuzzy logic module in Matlab/Simulink cannot realize a variable universe, an S-function is used to replace the original fuzzy logic module, as shown in Figure 11. Compared with the traditional FSMC, the variable universe FSMC adds a feedback link: the controller output (control quantity) at the previous moment is used as the independent variable of the expansion factor. The variable universe adjusts the fuzzy universe according to the actual input and output ranges. When the output control quantity increases, the universe expands in time, so that the control quantity is not restricted by the maximum range of the universe and the stability of the system is ensured. When the output control quantity becomes smaller, the universe contracts in time, which increases the control accuracy, guarantees steady-state convergence, and finally achieves more precise and rapid control.

Figure 11.

Structure comparison of variable universe FSMC and traditional FSMC.

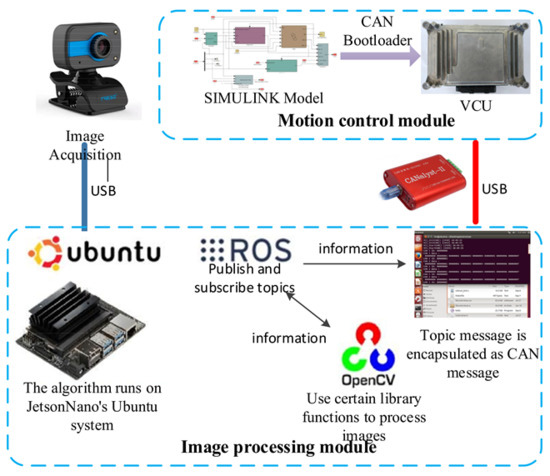

6. Construction of Vision-Guided AGV Test Platform and Real Vehicle Tests

The software implementation is divided into two parts. The upper-layer image processing algorithm is developed on the ROS framework, in which messages are passed between nodes through topic publishing and subscription. The upper layer packages the final calculated key variables as topic messages, and then repackages some of them (such as the navigation deviation) as CAN messages and sends them to the CAN bus for the VCU to read. The core code for image preprocessing, guide wire extraction, and the topic messages of each node is written in C++ and Python, and functions from the OpenCV library are used for the image processing work. The lower-layer motion control follows a rapid prototyping approach: the motion control algorithm is built in Matlab/Simulink, code is generated automatically, and the program is finally flashed to the VCU. The overall architecture of the software system is shown in Figure 12.

Figure 12.

Software system architecture.

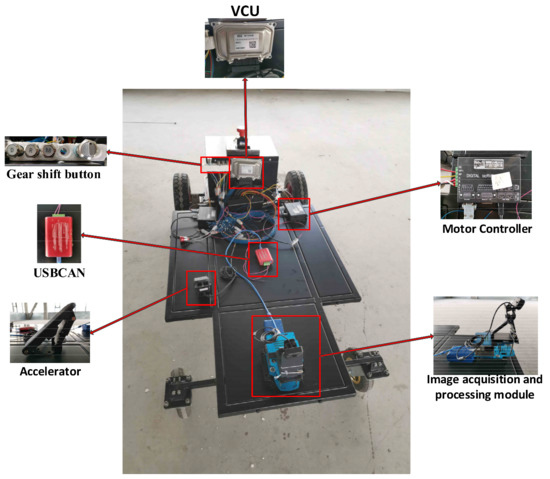
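The step in which a topic value such as the navigation deviation is repackaged as a CAN message can be illustrated as follows. The CAN identifier, the 0.01 mm per bit scaling, and the little-endian signed 16-bit layout are hypothetical choices for this sketch; the actual message definition used on the test platform is not given in the paper. Only the Python standard library is used, and no real CAN interface is opened.

```python
import struct

NAV_DEVIATION_CAN_ID = 0x120  # hypothetical identifier
MM_PER_BIT = 0.01             # hypothetical scaling of the raw value


def encode_deviation(deviation_mm):
    """Pack a deviation in mm into an 8-byte CAN payload (int16 + padding)."""
    raw = int(round(deviation_mm / MM_PER_BIT))
    raw = max(-32768, min(32767, raw))  # saturate to the int16 range
    return struct.pack("<h6x", raw)


def decode_deviation(payload):
    """Recover the deviation in mm from a payload built by encode_deviation."""
    (raw,) = struct.unpack_from("<h", payload)
    return raw * MM_PER_BIT


payload = encode_deviation(-2.01)
print(hex(NAV_DEVIATION_CAN_ID), payload.hex(), decode_deviation(payload))
```

A fixed-point encoding of this kind keeps the frame within the 8-byte classic CAN payload and avoids floating-point handling on the controller side, at the cost of a bounded range and resolution.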

The hardware system of the vision-guided AGV mainly includes a body, power supply, vision system, motion control module, and manipulation module. The test chassis is a 1/4 mini vehicle model with a length × width of 1.2 m × 0.6 m; for safety in the test stage, the maximum speed is 2 m/s. The power supply is a 24 V lithium iron phosphate battery, the motor is a servo motor with a rated power of 400 W, the lower controller is a 24 V vehicle controller (VCU) with slow-charge wake-up and CAN wake-up, the upper controller is an NVIDIA Jetson Nano, and the camera is a driver-free UVC camera. Among these components, the VCU and motor are powered by the 24 V battery pack, the image processing module is powered by a separate 12 V power supply, and the image acquisition module is powered over USB. The vision system mainly includes an image acquisition module (camera) and an image processing module (host). The camera resolution is 640 × 480 pixels and the frame rate is 30 fps; at this frame rate, insufficient image processing speed will lead to abnormal vehicle driving. The motion control module mainly includes a vehicle controller, drive motor, and motor controller.

The connection and communication modes between the above modules are shown in Figure 13. The camera provides the system input by capturing images of the path ahead and is connected to the image processing module through USB. The image processing module processes the collected images in real time and uses a USB-CAN tool that supports Ubuntu to send the calculated navigation deviation to the CAN bus. The VCU reads the CAN message, performs the motion control, and sends the desired left and right speeds to the motor controllers over CAN. Finally, the two motor controllers drive the motors, so that the AGV follows the guide line.

Figure 13.

Hardware layout of test platform.

The whole hardware layout is shown in Figure 13.

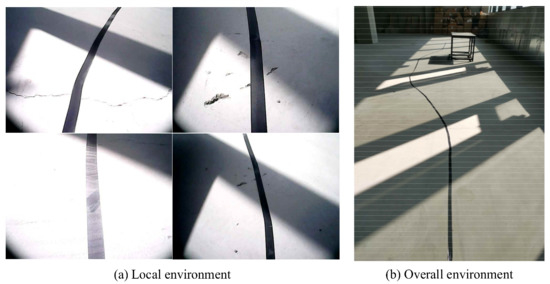

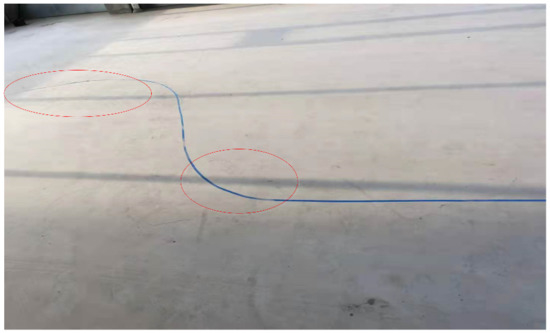
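Since the VCU sends separate left and right desired speeds to the two motor controllers, one common way to obtain them from a forward speed and a yaw-rate command is the differential-drive relation sketched below. The track width of 0.5 m and the per-wheel clamping are assumptions for illustration; only the 2 m/s speed limit is taken from the platform description, and the paper does not state how its controller output is mapped to wheel speeds.

```python
def wheel_speeds(v, yaw_rate, track_width=0.5, v_max=2.0):
    """Map forward speed v (m/s) and yaw rate (rad/s) to (left, right) speeds.

    track_width is a hypothetical distance between the drive wheels in metres;
    each wheel speed is clamped to +/- v_max as a simple safety limit.
    """
    half = yaw_rate * track_width / 2.0
    left = v - half    # inner wheel slows down for a positive (left) turn
    right = v + half   # outer wheel speeds up
    return (max(-v_max, min(v_max, left)), max(-v_max, min(v_max, right)))


print(wheel_speeds(1.0, 0.0))
print(wheel_speeds(1.0, 0.4))
```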

In this paper, a real vehicle test is carried out in a warehouse scene. In order to verify the operation effect of the algorithm designed in this paper under extreme conditions, interference items are added to the existing scene, such as selecting the area with strong light and dark contrast for testing, adding messy lines on the ground, etc. The test scenario after layout is shown in Figure 14.

Figure 14.

“Double line shifting” test scenario.

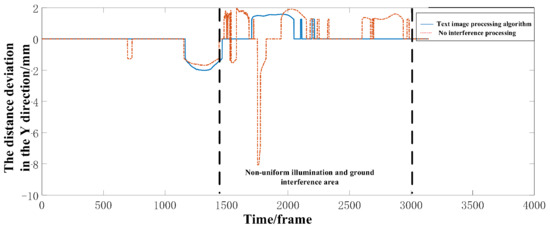

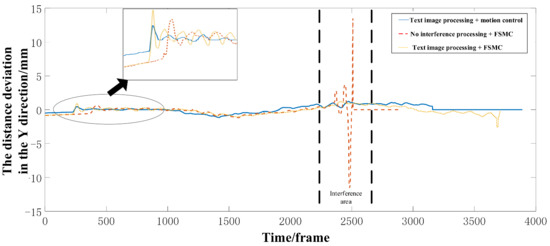

In this paper, deviations within a band 15 pixels wide are regarded as zero error during the test. The test results for this scene are shown in Figure 15.

Figure 15.

Comparison of real vehicle operation effect with and without anti-interference treatment.
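The zero-error band used in the test can be read as a dead band applied to the pixel deviation. The sketch below assumes that the 15-pixel width is the total width of the band, that is, ±7.5 pixels around the line center; this interpretation and the function name are illustrative assumptions rather than details given in the paper.

```python
def apply_deadband(deviation_px, band_width_px=15.0):
    """Return 0.0 for deviations inside the band, else the deviation itself."""
    if abs(deviation_px) <= band_width_px / 2.0:
        return 0.0
    return deviation_px


print([apply_deadband(d) for d in (-10.0, -7.5, 0.0, 5.0, 12.0)])
```

A dead band of this kind keeps pixel-level recognition noise from being passed on to the motion controller as a steering demand.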

In Figure 15, the Y-axis is the deviation between the center line of the vehicle and the center of the guide wire, the X-axis is the image frame number, and the test route is approximately a single line shift. In both cases, the motion control algorithm is the variable universe fuzzy sliding mode control designed in this paper. In terms of image processing, the algorithm in this paper is compared with recognition without anti-interference processing, which applies no preprocessing and uses traditional Canny edge detection to extract the guide wires. This comparison therefore reflects the stability and reliability of the image recognition algorithm designed in this paper. With the image preprocessing and guide line extraction algorithms designed in this paper, the preview points essentially fall on the guide line. Only a few frames with small recognition errors appear in the interference area, which shows up in the driving error curve as a limited number of small jumps; otherwise, the vehicle drives stably along the guide line. The guide wire recognition algorithm without anti-interference processing performs poorly in obtaining the preview point and is very vulnerable to the external environment. As shown in Figure 15, although the preview point does not deviate from the guide line in areas with uniform illumination, it frequently deviates after entering the interference area, resulting in continuous jumps of the calculated navigation error, which seriously interferes with the normal driving of the AGV.

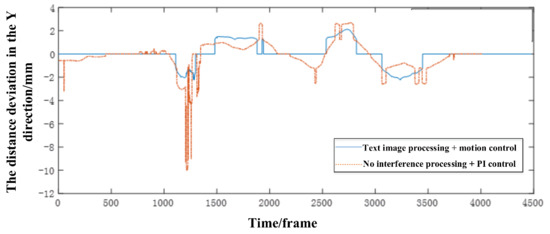

In order to comprehensively show the actual control effect of the image processing and motion control algorithms designed in this paper, another group of comparative tests is carried out on a test route that approximates a double line shift, as shown in Figure 16. The PI control parameters are determined after repeated debugging, with the final set chosen according to the weighted sum of the deviation variance and the average deviation. Firstly, the anti-interference image processing algorithms and the variable universe fuzzy sliding mode control algorithm are tested. There is no error jump in the whole test; the vehicle follows the line promptly when a curve appears ahead, and the maximum error stays within ±2 mm throughout. After that, the guide wire identification algorithm without anti-interference processing and PI control are tested at the same site. There is a large continuous jump at the first bend, and there are many false identifications during the test, resulting in more sudden changes and a larger amplitude of driving error. In terms of motion quality, the steady-state accuracy is lower than that of the variable universe FSMC and is difficult to maintain. The transient characteristics are also poorer, and the settling time is longer than that of the variable universe FSMC. Moreover, the PI control parameters need to be readjusted according to the actual operating environment, so it is difficult to handle all scenarios with one set of parameters, whereas the variable universe FSMC adapts to various working conditions without manual adjustment, which makes it convenient to deploy in different scenarios.

Figure 16.

Comparison of real vehicle operation effect under overall algorithm.

In order to test the control effect of the motion control algorithm under a large curvature path and the identification under the condition that the guide wire is not obvious, another comparative test is also carried out on the scene shown in Figure 17. The guide route is “S-bend,” the interference area in the route has local pollution and line interference, and there is uneven illumination or strong light reflection in some sections.

Figure 17.

“S-bend” test scenario.

In this scenario, the FSMC algorithm is first compared with the variable universe FSMC algorithm designed in this paper. It can be seen from Figure 18 that a large-curvature bend appearing ahead is equivalent to applying a pulse to the sliding mode system. When the error suddenly appears, the variable universe FSMC responds faster and limits the error more quickly. Moreover, it further reduces the frequency and amplitude of chatter relative to FSMC and further improves the motion quality of the AGV. After the 3000th frame of the experiment, the FSMC algorithm shows a large steady-state error and finally fails to converge to the zero-deviation position. Owing to the timely adjustment of the universe, the variable universe FSMC algorithm converges quickly and accurately to the steady state and maintains it. Secondly, the image processing algorithms designed in this paper are verified in this scenario and compared with the algorithm without anti-interference processing. The interference area contains many disturbances, such as uneven illumination and unclear guide wires. The guide wire identification algorithm without anti-interference processing produces frequent “false identifications,” which cause large left-right swings of the AGV in the interference area, or even an interruption midway so that the vehicle fails to complete the path, seriously interfering with the normal driving of the AGV. The ant colony algorithm fits the qualifying broken line segments, thereby avoiding wrong identification caused by contamination of parts of the line. At the same time, the preprocessing algorithm designed in this paper handles the impact of uneven illumination and ensures the correct identification of guide wires.

Figure 18.

Comparison of real vehicle operation effect.

It can be seen from Table 2 that, in the single line shifting test shown in Figure 15, where both runs use the same motion control algorithm (variable universe FSMC), the image processing algorithm designed in this paper significantly reduces the deviation during driving. With this algorithm, the maximum deviation is only 2.0125 mm and the variance of the deviation is 0.5345 mm², compared with 6.05 mm and 0.6313 mm², respectively, for the algorithm without anti-interference processing. The significant reduction of the maximum deviation shows that the image processing algorithm designed in this paper largely avoids misidentifying the preview point under external interference and keeps the preview point on the guide line as far as possible. The reduction of the deviation variance shows that the preview point rarely deviates from the guide line; compared with the algorithm without anti-interference processing, the frequency of false detections of the preview point is greatly reduced.

Table 2.

Comprehensive performance test results.

In the double shift line test shown in Figure 16, when the algorithms designed in this paper are used for both image processing and motion control, the maximum deviation is reduced by 7.8125 mm and the variance of the deviation is reduced by 0.9661 mm² compared with image processing without anti-interference and PI motion control. When the algorithms designed in this paper are used in both the upper (image processing) and lower (motion control) layers, the improvement in the actual motion of the AGV is more pronounced: the stability of the tracking process is greatly improved, and the amplitude and frequency of the driving deviation are reduced. The experiment also shows that errors accumulated in each link are amplified in the final error, which greatly affects the normal operation of the AGV.

In the “S-bend” route test shown in Figure 18, when the algorithms designed in this paper are used for image processing and motion control, the maximum deviation is reduced by 1.375 mm and the variance of the deviation is reduced by 0.0399 mm² compared with the case in which the motion control is replaced with FSMC, indicating that the control accuracy and motion quality are further improved relative to the FSMC algorithm. When the image processing algorithm without anti-interference processing is combined with the FSMC motion control algorithm, there is a large range of continuous fluctuation in the interference area, resulting in a maximum deviation of 13.5 mm and a deviation variance of 1.0783 mm². Therefore, anti-interference image processing and high-precision motion control are necessary in a visual navigation scheme, and the algorithms designed in this paper provide a substantial improvement in both respects.
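The two statistics discussed above, maximum deviation and variance of the deviation, can be computed from a logged deviation series as sketched below. The sample values are synthetic placeholders and not measurements from the tests; taking the maximum of the absolute deviation and using the population variance are assumptions, since the text does not state how either quantity was computed.

```python
from statistics import pvariance


def deviation_stats(deviations_mm):
    """Return (maximum absolute deviation in mm, population variance in mm^2)."""
    max_dev = max(abs(d) for d in deviations_mm)
    return max_dev, pvariance(deviations_mm)


sample = [0.5, -1.0, 0.25, 0.0]  # synthetic per-frame deviations in mm
max_dev, var = deviation_stats(sample)
print(f"max |deviation| = {max_dev} mm, variance = {var} mm^2")
```

Reporting both values is useful because the maximum captures isolated misidentifications, while the variance reflects how often and how far the preview point wanders over the whole run.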

7. Conclusions

With the increasing requirements for automation and intelligence in today’s society, Automatic Guided Vehicles (AGVs) will play an increasingly important role in efficient automatic transportation, and visual guidance has gradually become one of the most important and popular perception modes. This paper aimed to ensure that AGVs continue to drive stably along guide lines in extreme cases such as uneven illumination, line interference, and unclear or even damaged guide lines, and to improve the motion quality and robustness of vision-guided AGVs. To this end, it focused on the difficulty of accurately extracting the guide wire when a vision-guided AGV runs in a complex working environment, and explored applications of machine vision technology in the AGV field. Navigation deviation and lateral motion control were also studied, providing new methods and ideas for the further development of vision-guided AGVs in terms of both image recognition and motion control.

Author Contributions

Conceptualization, L.X., X.S. and H.Z.; methodology, L.X. and J.L.; software, L.X., J.L. and H.Z.; validation, L.X., J.L. and H.Z.; formal analysis, J.L., H.Z. and X.S.; investigation, L.X.; resources, H.Z.; data curation, X.S.; writing—original draft preparation, L.X., J.L. and H.Z.; writing—review and editing, L.X., J.L., X.S. and H.Z.; visualization, J.L.; supervision, H.Z.; project administration, X.S. and H.Z.; funding acquisition, X.S. and H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Initial Funding for Advanced Talents at Jiangsu University, grant number 13JDG034. The APC was funded by Jiangsu University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Han, J.; Kim, D.; Lee, M.; Sunwoo, M. Enhanced road boundary and obstacle detection using a downward-looking LIDAR sensor. IEEE Trans. Veh. Technol. 2012, 61, 971–985.

2. Saunders, R. Towards autonomous creative systems: A computational approach. Cogn. Comput. 2012, 4, 216–225.

3. Czubenko, M.; Kowalczuk, Z.; Ordys, A. Autonomous driver based on an intelligent system of decision-making. Cogn. Comput. 2015, 7, 1–13.

4. Rodríguez, L.F.; Ramos, F. Development of computational models of emotions for autonomous agents: A review. Cogn. Comput. 2014, 6, 351–375.

5. Wu, X.; Sun, C.; Zou, T.; Li, L.; Wang, L.; Liu, H. SVM-based image partitioning for vision recognition of Automatic Guided Vehicle (AGV) guide paths under complex illumination conditions. Robot. Comput. Integr. Manuf. 2019, 61, 1–14.

6. Wang, Y. Adaptive Image Enhancement Based on the Retinex Theory. Master’s Thesis, Dalian University of Technology, Dalian, China, 2015.

7. Huang, Y. Research on Image Enhancement Method Based on Chicken Colony. Master’s Thesis, Chongqing University of Posts and Telecommunications, Chongqing, China, 2018.

8. Liu, Z.; Wang, D. Adaptive Adjustment Algorithm for Non-Uniform Illumination Images Based on 2D Gamma Function. Trans. Beijing Inst. Technol. 2016, 36, 2.

9. Yu, S.; Zhu, H. Low-Illumination Image Enhancement Algorithm Based on a Physical Lighting Model. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 28–37.

10. Oh, J.; Hong, M.-C. Adaptive Image Rendering Using a Nonlinear Mapping-Function-Based Retinex Model. Sensors 2019, 19, 969.

11. Ding, N.; Rang, X.; Zhang, H. Driver Model for Single Track Vehicle Based on Single Point Preview Optimal Curvature Model. J. Mech. Eng. 2008, 44, 220–223.

12. Dong, T. Lane Keeping System Based on Improved Preview Driver Model. Automobile Appl. Technol. 2019, 24, 143–145.

13. Ren, D.; Cui, S.; Wu, H. Preview Control for Lane Keeping and Its Steady-state Error Analysis. Automot. Eng. 2016, 38, 192–199.

14. Xu, S.; Peng, H. Design, analysis, and experiments of preview path tracking control for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 21, 48–58.

15. Xiong, L.; Yang, X.; Zhuo, G.; Leng, B.; Zhang, R. Review on Motion Control of Autonomous Vehicles. J. Mech. Eng. 2020, 56, 127–143.

16. Zhao, X.; Chen, H. A study on lateral control method for the path tracking of intelligent vehicles. Automot. Eng. 2011, 33, 382–387.

17. Xu, X.; Min, J.; Wang, F.; Ma, S.; Tao, T. Design of differential braking control system of travel trailer based on multi-objective PID. J. Jiangsu Univ. (Nat. Sci. Ed.) 2020, 41, 172–180.

18. Marino, R.; Scalzi, S.; Netto, M. Nested PID steering control for lane keeping in autonomous vehicles. Control. Eng. Pract. 2011, 19, 1459–1467.

19. Ma, Y.; Zhang, Z.; Niu, Z.; Ding, N. Design and verification of integrated control strategy for tractor-semitrailer AFS/DYC. J. Jiangsu Univ. (Nat. Sci. Ed.) 2018, 39, 530–536.

20. Liu, Y.; Yang, X.; Xia, J. Obstacle avoidance and attitude control of AUV based on fuzzy algorithm. J. Jiangsu Univ. (Nat. Sci. Ed.) 2021, 42, 655–660.

21. Jiang, H.; Zhou, X.; Li, A. Analysis of human-like steering control driver model for intelligent vehicle. J. Jiangsu Univ. (Nat. Sci. Ed.) 2021, 42, 373–381.

22. Tagne, G.; Talj, R.; Charara, A. Higher-order sliding mode control for lateral dynamics of autonomous vehicles, with experimental validation. In Proceedings of the Intelligent Vehicles Symposium, Gold Coast, QLD, Australia, 23–26 June 2013; pp. 678–683.

23. Jiang, H.; Cao, F.; Zhu, W. Control method of intelligent vehicles cluster motion based on SMC. J. Jiangsu Univ. (Nat. Sci. Ed.) 2018, 39, 385–390.

24. Falcone, P.; Borrelli, F.; Tseng, H.E.; Asgari, J.; Hrovat, D. Linear time-varying model predictive control and its application to active steering systems: Stability analysis and experimental validation. Int. J. Robust Nonlinear Control. 2008, 18, 862–875.

25. Falcone, P.; Tseng, H.E.; Borrelli, F.; Asgari, J.; Hrovat, D. MPC-based yaw and lateral stabilisation via active front steering and braking. Veh. Syst. Dyn. 2008, 46, 611–628.

26. Yu, W.; Zhou, S. Cooperative control of damping and body height based on model prediction. J. Jiangsu Univ. (Nat. Sci. Ed.) 2021, 42, 513–519.

27. Demirhan, M.; Premachandra, C. Development of an Automated Camera-Based Drone Landing System. IEEE Access 2020, 8, 202111–202121.

28. Shehzad, M.; Bilal, A.; Ahmad, H. Position & Attitude Control of an Aerial Robot (Quadrotor) with Intelligent PID and State feedback LQR Controller: A Comparative Approach. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 340–346.

29. Gong, J.; Jiang, Y.; Xu, W. Model Predictive Control for Self-Driving Vehicles; Beijing Institute of Technology Press: Beijing, China, 2014.

30. Ji, X.; He, X.; Lv, C.; Liu, Y.; Wu, J. Adaptive-neural-network-based robust lateral motion control for autonomous vehicle at driving limits. Control. Eng. Pract. 2018, 76, 41–53.

31. Ge, S.S.; Lee, T.H.; Hang, C.C.; Zhang, T. Stable Adaptive Neural Network Control; Kluwer Academic Publishers: Boston, MA, USA, 2001.

32. Wai, R.-J.; Lin, C.-M.; Hsu, C.-F. Adaptive fuzzy sliding-mode control for electrical servo drive. Fuzzy Sets Syst. 2004, 143, 295–310.

33. Zhang, H.; Liang, J. Stability Research of Distributed Drive Electric Vehicle by Adaptive Direct Yaw Moment Control. IEEE Access 2019, 7, 2169–3536.

34. Liu, W. Research and Application of Digital Matting Technique Based on Sp-Graph-Cut. Master’s Thesis, Northeastern University, Boston, MA, USA, 2015.

35. Chen, W.; Tang, D.; Wang, H.; Wang, J.; Xia, G. A Class of Driver Directional Control Model Based on Trajectory Prediction. J. Mech. Eng. 2016, 52, 106–115.

36. Zhang, J. Research on Lateral Control of Intelligent Pure Electric Vehicles Based on EPS. Master’s Thesis, Hefei University of Technology, Hefei, China, 2018.

37. Zhang, H.; Liang, J.; Jiang, H.; Cai, Y.; Xu, X. Lane line recognition based on improved 2D-gamma function and variable threshold Canny algorithm under complex environment. Meas. Control. 2020, 53, 1694–1708.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).