Abstract

Advances in technology and digitalization offer huge potential for improving the performance of production systems. However, human limitations often act as an obstacle to realizing these improvements. This article argues that the discrepancy between potential and reality is caused by design features of technologies, known as the ironies of automation. To this end, the psychological mechanisms that cause these ironies are illustrated using the example of operators of a chocolate wrapping machine, and their effects are explained by basic theories of engineering psychology. This article concludes that engineers need to understand these basic theories and that interdisciplinary teamwork is necessary to improve the design of digital technologies and enable harmonious, high-performance human–machine cooperation.

1. Introduction

Progress is moving at a fast pace. The integration of new technologies such as machine learning or digital twins promises immense gains in efficiency and productivity. However, these promises tend not to be fulfilled when the only question engineers and designers learn to ask is ‘which tasks can be automated?’.

Almost four decades ago, Ironies of Automation [1] was published. This influential work was meant to alert the public to the various ironies caused by advances in automation technology. It describes three main reasons why highly automated systems do not reach their potential and why human intervention will be needed for the foreseeable future to prevent and fix failures, namely: (1) Automation is meant to replace unreliable and inefficient human operators, but it is itself designed by unreliable humans. (2) When dependable and efficient automation fails, unreliable humans are still needed to detect and compensate for this failure. (3) Human takeover in case of automation failure requires a detailed understanding of the current situation, extensive process knowledge to deduce the correct action, and the skills to execute it, but prolonged passive monitoring hampers the building and maintenance of this knowledge and these skills [1]. Even now, almost forty years later, these ironies of automation have not been resolved. In fact, technological progress has made them even more poignant [2,3].

In our opinion, the main reason for the current problems of automated systems is that engineers and designers seldom possess knowledge about human cognitive capabilities and processes and thus cannot develop systems that reconcile technical possibilities with human needs. This is because cross-domain knowledge acquisition is rare and effortful. Even impactful, decades-old publications, such as Ironies of Automation [1], have largely remained within the psychological research community. Scientific papers are accessible to the public, but motivated readers may find them impossible to understand due to their specialized terminology, or may struggle to transfer and apply research findings to their own work. However, it is becoming steadily clearer that modern, highly complex systems cannot succeed unless the view that humans are an unnecessary source of variance and error is challenged and system and interaction design is reshaped to allow human operators to unlock the full potential of technical innovations. This article aims to take a first step in this endeavor by explaining the ironies caused by the rising level of automation. We detail the challenges that automated systems pose for machine operators and introduce psychological theories that explain how these challenges are rooted in system design. To boost interdisciplinary understanding and transfer, the underlying mechanisms of human behavior are discussed using examples from food production. Where necessary, the discussion is supplemented by examples from well-researched domains such as aviation. The final section provides a brief outlook on useful cognitive engineering methods for creating better human-centered operator support systems and human–machine interfaces (HMI).

2. Challenges for Machine Operators in Industrial Production

The search for scientific solutions that enable humans to cope with the rising complexity of production systems dates back to the start of the 20th century, when scientific management theory was introduced [4]. Although multiple methods and interventions for making complexity manageable have been tried since then, most have not only failed to solve the problems but have instead intensified them [5]. Current production plants have all the characteristics of a complex system: opaque dependencies between a large number of variables, dynamic system behavior, and multiple conflicting goals [6,7]. This means that machine operators interact with systems whose reactions they can predict only partially, or not at all, as they are only partly aware of the relevant variables and their interactions. In food packaging, machine stoppages occur frequently, since the processing properties of bio-based materials change with environmental conditions. For example, it is well known that chocolate temperature and machine speed are among the variables that affect the packaging of chocolate bars. However, most machine operators do not know that outside temperature and air humidity in the facility may affect packaging as well. Chocolate bars cast at a relatively high temperature, for instance, can be packed successfully if the cooling temperature is reduced. During a hot summer, the temperature reduction would likely need to be greater than during winter, even when the production site is equipped with air conditioning. However, when humidity is high, condensation might bead on the extra-cold chocolate bars, which may reduce traction on conveyor belts and thus change handling characteristics. Moreover, even if all relevant properties were known and a metric for their interaction existed, it would still not be possible to predict an optimal parameter set.
While environmental conditions can be monitored closely, many material properties can only be measured indirectly or not at all, and thus the sensor measurements that would allow for automatic handling are not (yet) available.
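The condensation effect described above follows a simple physical rule: moisture condenses on a surface that is colder than the dew point of the surrounding air. The sketch below is not taken from the article; it assumes the widely used Magnus approximation for the dew point, and the bar surface temperature and hall conditions in the example are hypothetical values chosen only to contrast a humid summer with a dry winter.

```python
import math

# Magnus-formula coefficients (a common parameterization for water vapour over
# liquid water, roughly valid between -45 °C and 60 °C).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # °C


def dew_point(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point (°C) of the hall air via the Magnus formula."""
    if not 0 < rel_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_A * air_temp_c / (MAGNUS_B + air_temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def condensation_risk(bar_surface_temp_c: float, air_temp_c: float, rel_humidity_pct: float) -> bool:
    """True if the bar surface is colder than the dew point, so moisture can condense on it."""
    return bar_surface_temp_c < dew_point(air_temp_c, rel_humidity_pct)


if __name__ == "__main__":
    # Hypothetical scenarios: the same 15 °C bar in a humid summer hall vs. a dry winter hall.
    for air, rh in [(28.0, 70.0), (20.0, 40.0)]:
        td = dew_point(air, rh)
        print(f"air {air} °C, RH {rh} %: dew point {td:.1f} °C, "
              f"condensation on 15 °C bar: {condensation_risk(15.0, air, rh)}")
```

With these assumed values the dew point is about 22 °C in the summer case and about 6 °C in the winter case, so the same extra-cold bar is at risk only in summer. This is one reason why a fixed cooling setting cannot be optimal year-round.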

Working under these conditions, operators often struggle to find and fix the cause of production stops, especially because they often receive little to no specialized job training [8]. To facilitate work, current assistance systems analyze machine data to present instructions for fixing the fault [9]. However, these instructions often lead operators to treat fault symptoms (e.g., removing jammed chocolate bars) instead of eliminating the fault cause (e.g., adjusting an incorrectly placed guide rail in the product feed). When the fault cause persists, it often causes repeated production stops. As a given fault symptom can have many different causes, operators need to understand the complex interaction of production parameters and machine settings to diagnose and remove fault causes [8]. Current production systems provide operators with a wealth of data but make it virtually impossible for operators to prioritize and understand it. The causes are outdated design philosophies that prevail despite huge advances in graphical visualization and data processing. One example of this is the continued use of single-sensor single-indicator displays (SSSI displays) [10]. This type of display presents each sensor measurement in isolation, forcing operators to make sequential observations, which taxes their working memory immensely and complicates control tasks [11]. To facilitate successful task execution, data should be presented in context with other task-relevant information, and characteristics of human information processing should be used to visualize interrelations in an easily understandable way.

However, machine builders’ motivation to optimize design is often low, even when engineers know that unsuitable operator actions are limiting production performance. This reluctance stems from the persistent opinion that humans will soon be eliminated from factories. Automation specialists envision perfect, fault-free, fully automated production systems. Even though the vision of unmanned production (the dark factory [12]) has been dismissed for the time being as technologically and economically unrealistic [13], many retain the mindset that it may be possible in the far future and refrain from considering human needs in the design of new, innovative solutions. This mindset not only severely limits the performance of current production systems but also fails to recognize the changed role humans need to fulfil in highly automated systems: the role of experienced experts, decision-makers, and coordinators [14,15,16]. One of the main reasons why humans will continue to play an important role in production is stated in the first irony of automation.

2.1. System Designers View Human Operators as Unreliable and Inefficient, but Designers Are Also Human, and Thus Also Unreliable

An often-stated argument for reducing human involvement in production systems is that human behavior is unpredictable and error-prone at best, and malevolent and destructive at worst. Human error is considered the primary source of food contamination in industrial production [17]. Strict adherence to cleaning and disinfection procedures is a prerequisite for food safety, and manual work steps are prone to being forgotten or executed incorrectly. However, on closer inspection, operators’ violations of formal procedures often seem very rational when the actual workload and timing constraints are considered [18], and human behavior is rarely a product of chance. In most cases, operators do not simply decide to act against procedures but instead judge that following the procedure will produce undesirable results, e.g., missing the production quota. Leveson [19] states that higher system reliability (e.g., an input steadily producing the same output) is not equivalent to higher system safety. Indeed, reliability and safety might even be opposing factors. Decisions and subsequent actions result from the combination of characteristics of the production system (i.e., the food processing plant) and the work and life experience of operators. When critical situations or accidents occur, human failure is often blamed in hindsight, despite being only one of several contributing factors [20]. In fact, human failure often has a systemic origin, which means failures may be caused, facilitated, or enabled by the work system [21]. In complex systems, several latent failures are always present; they only turn into active failures when unforeseen combinations of circumstances circumvent all redundancies and layers of defense [20].
Thus, it is necessary to take a more systemic approach to error analysis and to investigate whether system design or other systemic factors are fully or partly responsible for critical situations and accidents, instead of simply blaming flawed human nature [19].

When building a new system, designers try to anticipate all possible operating situations. Despite the complexity of the system, people typically believe they understand its functional principles very well, but their knowledge is often imprecise, incoherent, and shallow [22]. This illusion of understanding can be traced back to the fact that people think about systems in overly abstract terms [23]. Even the most diligent person cannot consider all possible influences or foresee all the functional changes that might affect plant dynamics [5,24], such as later modifications during maintenance.

Decision-making in complex systems is a joint process of designer, automation, and operator [25]. While only automation and operator are actively involved in the situation, designers shape the workflow by allocating tasks to automation and operator, regulating the availability of information, and limiting actions. While the system automation is fixed once its programming is complete, human behavior is variable. Operators adapt the system to maximize production and minimize failures [20]. When system designers place extensive restrictions on these adaptations to maximize system reliability, recovery from emerging failures becomes almost impossible and system safety declines. An example of this is the landing accident of a Lufthansa Airbus A320 at Warsaw airport in 1993. Upon landing, the automatic braking system was not activated and the plane crashed. The cause was not a technical failure but a design decision. The automatic braking system was designed to be activated only after ground contact had been detected. Due to heavy crosswind and a wet runway, neither the minimum weight on each main landing gear strut nor the minimum wheel speed was detected, and manual override of this interlock was not possible [26]. While all components functioned as they were intended to (i.e., reliably), the restriction on the crew’s adaptation of the system to the unforeseen circumstances allowed latent design failures to lead to a catastrophic accident.
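The design restriction in the Warsaw example can be expressed as a small interlock. The sketch below is a simplified, hypothetical model written for this discussion, not the actual aircraft logic: the threshold values are illustrative placeholders, and the `override_allowed` flag is an assumed design parameter that contrasts the "no override" design with one that leaves the crew a degree of freedom.

```python
from dataclasses import dataclass

# Illustrative placeholder thresholds, NOT the certified values of any aircraft.
MIN_STRUT_LOAD_T = 10.0    # minimum load per main gear strut, in tonnes
MIN_WHEEL_SPEED_KT = 70.0  # minimum wheel spin-up speed, in knots


@dataclass
class GearState:
    left_strut_load_t: float
    right_strut_load_t: float
    wheel_speed_kt: float


def ground_contact_detected(state: GearState) -> bool:
    """Hypothetical ground-contact logic: both struts loaded OR wheels spun up."""
    both_struts_loaded = (state.left_strut_load_t >= MIN_STRUT_LOAD_T
                          and state.right_strut_load_t >= MIN_STRUT_LOAD_T)
    wheels_spun_up = state.wheel_speed_kt >= MIN_WHEEL_SPEED_KT
    return both_struts_loaded or wheels_spun_up


def braking_enabled(state: GearState, crew_requests_braking: bool,
                    override_allowed: bool) -> bool:
    """Braking is released by the sensors, or by the crew if the design permits an override."""
    if ground_contact_detected(state):
        return True
    return override_allowed and crew_requests_braking


if __name__ == "__main__":
    # Hypothetical touchdown on one gear leg with slowly spinning wheels on a wet runway:
    # every sensor works as designed, yet the interlock stays closed.
    touchdown = GearState(left_strut_load_t=2.0, right_strut_load_t=20.0, wheel_speed_kt=40.0)
    print("rigid design:   ", braking_enabled(touchdown, True, override_allowed=False))  # False
    print("flexible design:", braking_enabled(touchdown, True, override_allowed=True))   # True
```

Both variants are equally "reliable" in the sense that they behave deterministically; only the second leaves room for the crew to compensate for a situation the designer did not anticipate.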

Human reasoning may be faulty and incomplete, but designers of automated systems are also human. Thus, it is highly unlikely that any man-made system will ever be completely fault-free, and even then, unforeseen interactions between perfectly functioning components or unforeseen operating conditions may lead to failures [19]. Consequently, operator intervention is indispensable for compensating for design faults. New solutions should therefore be developed with the awareness that flexible human intervention will always be necessary and that operators need degrees of freedom in selecting their actions. However, intervening correctly when automation fails is quite a challenge.

2.2. Takeover in Abnormal Situations: Experienced Operators Become Inexperienced Ones but Need to Be More Rather Than Less Skilled

It is often assumed that new technologies facilitate operator jobs, but in fact, automation has a dual influence on task demands [14,15,27]. When the system works smoothly, the operator’s role is limited to monitoring and executing easy manual tasks that cannot (yet) be automated. However, when faults occur, operators are confronted with complex planning and problem-solving tasks. When production stops, operators instantly need to switch from monotonous monitoring duty to fully alert troubleshooting. This results in a rapid change from low to high demands as operators need to be fully aware of all relevant information and the possible consequences of their actions to decide on the correct remedial or compensatory action.

In the past, function allocation by substitution has been used to exchange human tasks for automated ones based on a rationale of who does what best [28]; for example, ‘while machines are good at performing according to strict rules, humans are good at improvising’. Digital assistance solutions, such as the machine learning system proposed by Yang [29] that monitors cleaning processes and alerts operators to anomalies, often take over the complete process while expecting operators to deal with failures when notified. However, this approach fails to consider that performance in the remaining tasks might be influenced by the loss of the other tasks [30]. A human–machine system is not simply the sum of its parts; instead, the integration of different functions into a whole often causes unforeseen side effects [28].

2.3. Through Prolonged Passive Monitoring Operators Lose the Necessary Activation and in the Long Term the Necessary Competence for Effective Takeover

Automation often pushes operators into a passive role while providing little to no feedback about its own mechanisms and actions. This black-box approach can have fatal consequences, as in the roller-coaster-like flight of an Airbus A310 on approach to Moskva-Sheremetyevo Airport on 11 February 1991. When the landing had to be aborted, the crew activated emergency take-off mode. As the pilot thought the climb angle was too steep, he intervened manually. However, this caused the plane to go into a high-power climb until it stalled and tumbled toward the ground. It could be stabilized only shortly before crashing. This sequence repeated several times until the crew understood that the pilot’s manual correction had been categorized as an outside influence and was thus counteracted by the automated flight mode [31]. Without a system message that an outside influence had been detected and compensated for, the flight crew had no opportunity to understand the capabilities and limits of the automation. Interaction and feedback are key factors in successful learning [32,33]. The crew needed to repeat its actions multiple times to understand how the pilot’s behavior affected the plane’s automation. However, when control functions become automated, operators spend most of their time passively monitoring and have few opportunities to interact with the system. This has two major consequences: First, operators who are no longer actively involved in a task experience monotony, which may reduce the attention paid to the situation and thus inhibit the active processing of information. Second, the small amount of interaction with the system and the resulting lack of learning opportunities lead to a decay in competencies. Both impacts are discussed in more detail in the following subsections.

2.3.1. Situation Awareness and Out-of-the-Loop Performance Problems

The short-term consequences of a passive operator role are a feeling of monotony due to repetitive work tasks and work underload, which result in reduced vigilance (i.e., reduced alertness and attention). Reduced vigilance in turn leads to reduced situation awareness [34]. Situation awareness consists of three levels: first, the perception of elements of the current situation; second, the comprehension of the current situation; and third, the projection of a future status [35]. Good situation awareness allows operators to quickly find a solution when automated regulation fails. In dynamic systems, the situation changes continuously even without operator intervention [7]. Consequently, situation awareness needs to be updated continuously, meaning that operators need to perceive all process changes and adapt their predictions accordingly. In practice, this means an operator of a chocolate packaging machine first needs to survey the production line to perceive all elements of the current situation. He looks at the location and orientation of chocolate bars on the conveyor belt, the overall cleanliness of the packaging machine, the soiling of specific machine components, and the filling level of the buffer storage. In the next step, the operator needs to combine these elements into a coherent picture of the current situation. Skewed or overturned chocolate bars on the conveyor belt could indicate improper adjustment of the height and speed of the conveyor belts relative to each other, while general soiling might indicate the need for a short production stop for cleaning. In contrast, more pronounced soiling on guide rails might indicate improper adjustment of machine components to chocolate bar height and width. Usually, a mix of all factors in varying degrees is present, and operators need to assess which fault cause is the most likely.
Additionally, the filling level of the buffer storage indicates how time-critical decision and intervention are. As a final step, the operator then needs to predict how all possible interventions will affect future system performance; for example, whether stopping production for a thorough cleaning might overtax the capacity of the buffer storage, or whether calling a technician to fix the guide rails might cause an even longer production stop. An operator who is already aware of all these possibilities when a fault occurs can decide almost instantly what to do and have the packaging machine working again in almost no time. However, automation of active control tasks makes constant attention to the process unnecessary, and thus humans often cease to update their situation awareness. This causes out-of-the-loop performance problems [36], meaning that operators may not be aware of all fault possibilities, detect problems late, and need to delay problem diagnosis until they have reoriented themselves [34]. While an operator perceives all information, integrates it into a coherent picture, and predicts the consequences of possible actions, packaging is halted, and this delay alone might be enough to overtax buffer capacity. Consequently, the rest of the production process might have to be stopped at great financial cost.
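The three levels of situation awareness in this example can be made concrete as a tiny decision aid. The sketch is hypothetical: the cue names, the 0 to 1 "strength" scale, the buffer figures, and the intervention durations are invented for illustration, and picking the single strongest cue is a deliberate simplification of the weighing that operators actually do.

```python
import math
from dataclasses import dataclass

# Level 2 (comprehension): cues from the text mapped to the fault causes they may indicate.
CUE_TO_CAUSE = {
    "skewed_or_overturned_bars": "conveyor belts misadjusted in height/speed",
    "general_soiling": "short production stop for cleaning needed",
    "guide_rail_soiling": "components misadjusted to bar height/width",
}


@dataclass
class Observation:
    """Level 1 (perception): what the operator sees on the line."""
    cue_strength: dict[str, float]  # 0..1, how pronounced each cue is (hypothetical scale)
    buffer_level: float             # bars currently held in the buffer storage
    buffer_capacity: float          # maximum number of bars the buffer can hold
    upstream_rate: float            # bars per minute still arriving while packaging is halted


def most_likely_cause(obs: Observation) -> str:
    """Level 2: pick the cause whose cue is most pronounced."""
    strongest_cue = max(obs.cue_strength, key=obs.cue_strength.get)
    return CUE_TO_CAUSE[strongest_cue]


def minutes_until_buffer_full(obs: Observation) -> float:
    """Level 3 (projection): how long packaging may stay halted before the buffer overflows."""
    if obs.upstream_rate <= 0:
        return math.inf
    return (obs.buffer_capacity - obs.buffer_level) / obs.upstream_rate


def feasible_interventions(obs: Observation, durations_min: dict[str, float]) -> list[str]:
    """Level 3: keep only interventions that finish before the buffer is full."""
    limit = minutes_until_buffer_full(obs)
    return [name for name, minutes in durations_min.items() if minutes <= limit]


if __name__ == "__main__":
    obs = Observation(
        cue_strength={"skewed_or_overturned_bars": 0.2, "general_soiling": 0.3,
                      "guide_rail_soiling": 0.7},
        buffer_level=600, buffer_capacity=1000, upstream_rate=40,
    )
    durations = {"adjust guide rails": 5, "thorough cleaning": 25, "call technician": 45}
    print(most_likely_cause(obs))                  # components misadjusted to bar height/width
    print(minutes_until_buffer_full(obs))          # 10.0
    print(feasible_interventions(obs, durations))  # ['adjust guide rails']
```

An operator who keeps these three answers up to date can act as soon as the machine stops; an out-of-the-loop operator has to compute all of them after the stop, while the buffer is already filling.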

A common approach to avoiding this is to integrate instructions for action into fault messages delivered by the HMI or an assistance system. This ostensibly solves the problem of delayed reaction, as operators will know what to do while skipping the three levels of situation awareness (i.e., perception, comprehension, and projection). This enables fast execution of remedial action. However, to check whether the instructions provided by the system suit the current situation, operators nevertheless need to build situation awareness. When operators lack the motivation or time to do this, the automated suggestions replace active information seeking and processing, and decisions are strongly biased in favor of the automated suggestions. This tendency is called automation bias [37]; it does not impair performance as long as the provided instructions are correct [38]. At this point, the first irony surfaces again, as it is extremely unlikely that system designers have weighed all possible operational situations, interrelated production parameters, and changing environmental conditions to suggest the optimal solution for every possible situation. Thus, there is always some remaining uncertainty, which may result in suboptimal or plainly wrong instructions. When operators accept these instructions uncritically, they show over-trust in the assistance system.

2.3.2. System Knowledge and Decay of Competence

When operators need to find and evaluate action alternatives and fix the causes of production stops, Rasmussen’s skills–rules–knowledge framework [39,40,41] can be used to describe how the availability of system knowledge determines their success and how the regularity of practice determines their speed.

The framework describes how skill-based, rule-based, and knowledge-based behavior can be used to solve problems. The kind of behavior used depends on the familiarity of the situation. New and unfamiliar situations require knowledge-based behavior. Here, the environment is analyzed, the goal is formulated, and a plan is developed based on empirical observation or a mental model of the system. In our example, the goal is to center the print image on a package. An operator for whom this print-image problem is unfamiliar would likely try different actions, such as changing placement parameters in the user interface and then inspecting the produced packages to see whether the correction reached its goal. Alternatively, the operator could deduce which components are involved in the lateral placement of the label and predict how they need to be modified to achieve the necessary correction. This step-by-step procedure is rather slow. Additionally, only one task can be executed at a time, as knowledge-based behavior draws on limited cognitive resources such as attention and mental effort. Through practice, an unknown situation becomes a set of routine tasks that can be executed faster, whereas task execution may revert to the knowledge-based level when there is insufficient opportunity for practice.

Our operator could now use this experience to build a rule such as ‘if the label is displaced slightly to the right, change the film transport to cut the film 2 mm later’. Thus, when the problem emerges again, rule-based behavior is possible. During rule-based behavior, stored rules or procedures are used to consciously select a set of subroutines. As rule-related actions and their consequences are well known, usually no feedback is needed to know that the problem is solved. While rule activation is still a conscious process, the problem can be solved without active reasoning or trial and error.
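The rule from the text can be written down as a stored condition-action pair, which is essentially what rule-based behavior retrieves. In this hypothetical sketch, the setting name `film_cut_delay_mm`, the 3 mm bound for "slightly", and the mirror rule for a leftward shift are assumptions; only the first rule comes from the example above. When no stored rule matches, the function returns `None`, standing in for the fallback to slower knowledge-based reasoning.

```python
from typing import Callable, NamedTuple, Optional

SLIGHT_MM = 3.0  # hypothetical upper bound for a "slight" displacement


class Rule(NamedTuple):
    name: str
    applies: Callable[[float], bool]  # condition on the measured label offset (mm, + = right)
    parameter: str                    # machine setting to change (hypothetical name)
    change_mm: float                  # adjustment to apply to that setting


RULES = [
    # The rule from the text: label slightly to the right -> cut the film 2 mm later.
    Rule("label slightly right", lambda off: 0 < off <= SLIGHT_MM, "film_cut_delay_mm", +2.0),
    # Hypothetical mirror rule, not stated in the text.
    Rule("label slightly left", lambda off: -SLIGHT_MM <= off < 0, "film_cut_delay_mm", -2.0),
]


def select_action(offset_mm: float) -> Optional[tuple[str, float]]:
    """Rule-based level: return the stored action, or None if no rule matches."""
    for rule in RULES:
        if rule.applies(offset_mm):
            return rule.parameter, rule.change_mm
    return None  # unfamiliar situation: fall back to knowledge-based reasoning


settings = {"film_cut_delay_mm": 0.0}
action = select_action(1.5)
if action is not None:
    parameter, change = action
    settings[parameter] += change
print(settings)            # {'film_cut_delay_mm': 2.0}
print(select_action(8.0))  # None -> knowledge-based behavior required
```

The table lookup is fast precisely because it skips reasoning; it is only as good as the rules someone has already learned, which is why the practice opportunities discussed below matter.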

If displacement of the label is a routine problem that emerges multiple times a day, skill-based behavior makes the correction seem almost automatic. Skill-based behavior is composed of physical activities that follow an intention and are executed in smooth, automated patterns that need next to no conscious control. As soon as the operator notices the displacement, his fingers navigate to the correct menu on the HMI, and he taps the correct spot to execute the adjustment almost without a thought.

However, most production problems are more complex than a displaced label and need to be solved with a mix of skill-based, rule-based, and knowledge-based behavior. While the rules and the steps needed to execute them might be relatively straightforward, deciding which rule to apply is harder, especially in a complex system in which multiple competing goals need to be satisfied [6,7]. Keeping the system stable within the boundaries of safe and economic operation while maintaining an acceptable workload for operators requires continuous weighing and prioritization of production goals [18]. When packaging chocolate bars into pouches, operators need to adjust sealing parameters so that the machine produces firmly and tightly sealed packages that can withstand the strains of transportation. However, sealing should not be so firm that consumers can no longer open the packages comfortably. Several combinations of sealing temperature, time, and pressure fit these quality specifications [42]. In addition to physical limitations and process safety, economic operation also limits the operable parameter range. Raising the sealing temperature may speed up the process and thus allow for higher production output, but raising it above a certain threshold may melt the chocolate inside or destroy the multilayer film. Operators need to weigh these conflicting goals and decide on a course of action. In the absence of formal knowledge about the interaction of sealing parameters, operators may use knowledge-based behavior to learn which parameter set meets quality, safety, and production standards. This requires regular interaction with the system and optimization of parameters by trial and error [43]. However, maladjusted parameters increase the number of rejects and thus lead to financial loss.
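The trade-off described above can be framed as a constrained search over the parameter space. The sketch below is hypothetical throughout: the seal-strength formula, the parameter grid, and all limits are toy values, not data from [42] or any real machine. It merely shows that several parameter sets can satisfy the quality window and that an economic goal (shortest sealing time) is then used to choose among them.

```python
from itertools import product

# Toy seal-strength model in arbitrary units: hotter, longer, and harder sealing gives a
# stronger seal. This formula and all limits below are invented for illustration only.
def seal_strength(temp_c: float, time_s: float, pressure_bar: float) -> float:
    return max(temp_c - 100.0, 0.0) * time_s * pressure_bar


MIN_STRENGTH = 10.0  # seal must survive transport (hypothetical)
MAX_STRENGTH = 20.0  # consumers must still be able to open the pouch (hypothetical)
MAX_TEMP_C = 150.0   # above this, chocolate or multilayer film may be damaged (hypothetical)

TEMPS_C = [120, 130, 140, 150, 160]
TIMES_S = [0.2, 0.3, 0.4, 0.5]
PRESSURES_BAR = [2, 3, 4]


def feasible_settings():
    """All (temperature, time, pressure) combinations that meet quality and safety limits."""
    return [
        (t, s, p) for t, s, p in product(TEMPS_C, TIMES_S, PRESSURES_BAR)
        if t <= MAX_TEMP_C and MIN_STRENGTH <= seal_strength(t, s, p) <= MAX_STRENGTH
    ]


def most_economic(settings):
    """Shortest sealing time = highest output; ties broken by lower temperature, then pressure."""
    return min(settings, key=lambda c: (c[1], c[0], c[2]))


if __name__ == "__main__":
    options = feasible_settings()
    print(len(options), "feasible combinations")
    print("chosen:", most_economic(options))  # (120, 0.2, 3)
```

Note that the choice depends on the tie-breaking goals: preferring lower pressure instead of lower temperature would pick a different set. This is exactly the kind of prioritization the text attributes to operators.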

A currently favored solution to the optimization problem is the use of digital technologies, especially machine learning, to automatically provide optimal parameter settings. These systems, too, push operators out of the loop and thus favor automation bias, which works well only as long as the automatically generated parameter set is indeed optimal. Manual optimization of parameters is often prevented, either by corporate policy or by design, even though the fallible human designer cannot account for all possible scenarios. However, even highly competent operators depend on practice opportunities to retain their system knowledge. This was illustrated in a study by Childs et al. [44] that measured pilots’ flight-skill retention. When pilots did not practice their manual flying skills, written test scores as well as cognitive procedural flight skills declined drastically after a period of only eight months. Transferred back to our operators, this means that without practice opportunities, manual optimization becomes impossible in the long run, even when it is needed.

3. Avoiding the Ironies of Automation by a New Approach to Interaction Design

The previous sections outlined in detail which problems can be caused by suboptimal system design. This section rounds off the topic with a brief outlook on how cognitive engineering methods can be used to avoid the ironies of automation. While all the problems mentioned can be addressed on multiple levels, such as hardware design or operator training, for brevity this section only touches on design implications for HMIs and assistance systems, using exemplary problems.

To counteract the irony of the unreliable human designer, design concepts need to stop providing easy yet highly limiting solutions to complex problems that prevent any human intervention, including helpful intervention. When designing new technology, it is necessary to keep in mind that operators work in a complex system. The law of requisite variety states that only variety in the regulator’s responses can compensate for the variety of disturbances in a highly variable system [45]. In other words, complex systems need complex control options. Consequently, assistance technology should be designed to give operators as much freedom of action as possible. Only then can they adapt the system to deal with unforeseen situations or changes in the production setting. Naturally, it is not possible to give operators total freedom of action. This is prevented by work safety concerns and legal regulations as well as economic needs. Additionally, total freedom would almost certainly overtax operators’ capacities. The optimal scope of action needs to be assessed case by case by considering the likelihood and cost of possible mistakes. For instance, producing pharmaceutical drugs allows for a smaller scope of action than producing chocolate. To this end, requirements and risk analysis should not focus exclusively on how human errors could cause problems, but also on the question ‘what information does the operator need to react well?’. This can be achieved with psychological methods of work analysis, such as cognitive work analysis [46], which do not focus exclusively on the sequence of actions necessary to accomplish a goal but also take into account the constraints imposed by the environment and the cognitive processes of users.
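The law of requisite variety can be illustrated with a toy regulation table in the spirit of Ashby’s examples. In this hypothetical sketch, each disturbance stands for a production condition and each response for an operator action; the modular outcome rule is an assumption chosen so that every disturbance has exactly one cancelling response. A regulator restricted to fewer responses than there are disturbances necessarily leaves some of them uncompensated.

```python
def regulated_fraction(n_disturbances: int, responses: set[int]) -> float:
    """Fraction of disturbances the regulator can cancel.

    Toy model in the style of Ashby's outcome tables: disturbance d and response r
    combine to outcome (d + r) mod n, and the goal is outcome 0.
    """
    handled = sum(
        1 for d in range(n_disturbances)
        if any((d + r) % n_disturbances == 0 for r in responses)
    )
    return handled / n_disturbances


if __name__ == "__main__":
    n = 6  # six distinct production conditions (hypothetical)
    print(regulated_fraction(n, set(range(n))))  # 1.0: one response per disturbance
    print(regulated_fraction(n, {0, 1}))         # 0.333...: only two permitted responses
```

Restricting the permitted responses, as a rigid design does, shrinks the set of situations that can be brought back to the goal, no matter how reliably each permitted response is executed.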

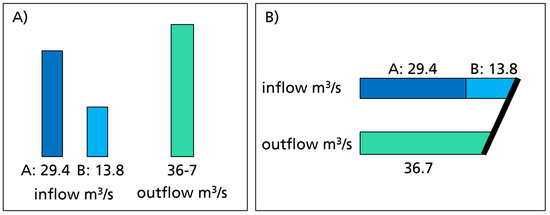

To counteract the lack of situation awareness and avoid automation bias, assistance technology should support information acquisition and integration instead of focusing only on action execution. To build and maintain situation awareness, operators need continuous access to current process data and feedback on the system’s state and actions. However, this information should not be ‘dumped’ on operators. It needs to be organized according to the task structure and should allow crucial elements of situation awareness to be reinstated at a glance. In practice, this means that results from work analysis can be used to assess task-specific informational needs and related design options. Single-sensor single-indicator displays like the one depicted in Figure 1A require slow and effortful knowledge-based reasoning even for well-known tasks. In this case, the operator needs to add up the two inflow streams into a tank and compare the result to its outflow to decide whether the tank is currently filling up. An alternative way of representing the data is representation aiding, whose goal is to support decision-making by facilitating information search and integration [47]. To reach this goal, emergent features (i.e., features that are dependent on the identity of the parts they result from and are perceptually salient [48]) can be used to provide clear signals that can be associated with rule-based behavior. Emergent features can be easily perceived from afar and allow operators to understand system states without effortful cognitive processing. An example of an emergent feature is the bold line in Figure 1B. It connects the combined inflow from two sources into a tank to its outflow. The fact that the line slopes to the right indicates that inflow is greater than outflow, alerting the operator that the tank is filling up.

Figure 1.

Example of a single-sensor single-indicator visualization (A) and a visualization with emergent features (B).
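The difference between the two displays can be illustrated by the computation the operator must perform mentally with Figure 1A. The Python sketch below is a hypothetical illustration (the function name, units, and dead-band tolerance are assumptions, not taken from the figure): it integrates three separate flow readings into a single tank state. A display with emergent features shows the sign of this net flow directly, as the tilt of the bold line in Figure 1B, so the operator perceives the result instead of calculating it.

```python
from enum import Enum


class TankTrend(Enum):
    FILLING = "filling"
    DRAINING = "draining"
    STEADY = "steady"


def tank_trend(inflow_a: float, inflow_b: float, outflow: float,
               tolerance: float = 0.01) -> TankTrend:
    """Integrate three separate flow readings into one tank state.

    This is the arithmetic a single-sensor single-indicator display leaves
    to the operator: add both inflows, then compare the sum with the outflow.
    `tolerance` (same unit as the flows) is a hypothetical dead band so that
    small sensor noise does not flip the reported state.
    """
    net_flow = (inflow_a + inflow_b) - outflow
    if net_flow > tolerance:
        return TankTrend.FILLING
    if net_flow < -tolerance:
        return TankTrend.DRAINING
    return TankTrend.STEADY


if __name__ == "__main__":
    # Hypothetical readings, e.g. in litres per minute.
    print(tank_trend(2.0, 1.5, 3.0).value)  # filling
    print(tank_trend(1.0, 1.0, 2.5).value)  # draining
    print(tank_trend(1.2, 0.8, 2.0).value)  # steady
```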

Although representation aiding offers the potential for quick reinstatement of situation awareness (i.e., the awareness that the tank is filling up), it offers no clues about how to deal with the situation and thus does not counteract the fact that highly automated systems provide few opportunities for learning and practice. To remedy this, assistance systems can be used to provide system knowledge and knowledge about faults, ideally in a way that promotes learning. A study by Jackson and Wall [49] illustrates the benefits of such learning: operators in that study were able to reduce downtimes by over 80%. After being taught about typical product variations and faults, they were able to adjust production parameters in anticipation of changed material characteristics and thus prevent faults from occurring. Assistance systems can convey such knowledge in several ways, for example by giving situation-specific advice through case-based reasoning, by offering learning opportunities in the form of tutorials, or by providing simulations in which interventions can be tested before they are executed.
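To illustrate the retrieval step of case-based reasoning, the Python sketch below finds the documented past fault most similar to the current situation and returns its recorded remedy. It is a minimal sketch under loud assumptions: the `Case` structure, the feature names, the faults, and the remedies are all hypothetical and not taken from [49]; similarity is plain Euclidean distance on features assumed to be pre-scaled to 0..1; and the later case-based reasoning steps (adapting the remedy and storing the new case) are omitted.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One documented past fault (all fields are hypothetical examples)."""
    features: tuple[float, ...]  # readings describing the situation, scaled to 0..1
    fault: str
    remedy: str


def retrieve_similar(cases: list[Case], query: tuple[float, ...],
                     k: int = 1) -> list[Case]:
    """Return the k stored cases closest to the current situation.

    Similarity is Euclidean distance, which assumes all features have
    already been scaled to comparable ranges.
    """
    return sorted(cases, key=lambda case: math.dist(case.features, query))[:k]


if __name__ == "__main__":
    # Hypothetical case base for a wrapping machine, with features
    # (film tension, product temperature, machine speed), each scaled to 0..1.
    case_base = [
        Case((0.9, 0.4, 0.7), "film tears", "reduce film tension"),
        Case((0.5, 0.9, 0.6), "products stick to film", "lower product temperature"),
        Case((0.5, 0.5, 1.0), "misaligned wraps", "reduce machine speed"),
    ]
    current = (0.55, 0.85, 0.65)
    for case in retrieve_similar(case_base, current):
        print(f"Similar past fault: {case.fault}; suggested remedy: {case.remedy}")
```

Presenting the matched case together with its fault description, rather than only the remedy, is one way such a system could support learning instead of mere instruction following.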

An especially interesting approach is ecological interface design [50]. It aims to help operators predict the consequences of a situation and identify possible actions by visualizing system relations in a way that lets them judge the available options for action and their consequences. To reach this goal, it is crucial to visualize not only individual data points but also the dependencies among parameters and between parameters and the environment.
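Ecological interface design concerns how relations are visualized, which code alone cannot capture; what can be sketched is the kind of relation such a display makes visible. The hypothetical Python sketch below reuses the tank example from Figure 1: it predicts the tank volume under several candidate outflow settings and flags the options that would overflow or run dry within a given horizon, i.e., the options for action and their consequences that an operator should be able to judge. The one-minute time step, the units, the constant flows, and the simple mass balance are all simplifying assumptions.

```python
def predict_volume(volume: float, inflow_total: float, outflow: float,
                   minutes: int) -> list[float]:
    """Tank volume minute by minute under constant flows (simple mass balance).

    Volume is clipped at zero because a tank cannot hold a negative amount;
    it is not clipped at capacity, so an overflow remains visible.
    """
    trajectory = [volume]
    for _ in range(minutes):
        volume = max(0.0, volume + inflow_total - outflow)
        trajectory.append(volume)
    return trajectory


def evaluate_options(volume: float, inflow_total: float,
                     candidate_outflows: list[float], minutes: int,
                     capacity: float) -> dict[float, tuple[float, bool]]:
    """Map each candidate outflow to (final volume, stays within limits).

    'Within limits' is a hypothetical criterion: the tank neither runs dry
    nor exceeds its capacity at any minute of the horizon.
    """
    results = {}
    for outflow in candidate_outflows:
        trajectory = predict_volume(volume, inflow_total, outflow, minutes)
        safe = all(0.0 < v <= capacity for v in trajectory)
        results[outflow] = (trajectory[-1], safe)
    return results


if __name__ == "__main__":
    # Hypothetical state: 50 L in a 100 L tank, combined inflow 3.5 L/min.
    options = evaluate_options(50.0, 3.5, [3.0, 3.5, 4.0], minutes=120, capacity=100.0)
    for outflow, (final, safe) in options.items():
        status = "within limits" if safe else "limit violated"
        print(f"outflow {outflow} L/min -> {final:.1f} L after 2 h, {status}")
```

An interface built on this idea would show such predicted trajectories against the capacity limits graphically, rather than as a table of numbers.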

Cognitive psychology thus offers many methods and ideas for designing systems that respect and support human cognitive needs. However, creating high-performance design solutions also requires intimate knowledge of machines, materials, and processes. Only when knowledge from psychology and engineering is brought together can new and future innovations reach their full potential.

4. Conclusions

The design of technical systems influences human performance, for better and for worse. As technical possibilities evolve rapidly, new technologies offer tremendous potential for high-performance systems. However, current system performance is held back by the performance deficits of human operators. These deficits are not inherent to human nature; instead, they are caused by an approach to digitalization and technical innovation that is indifferent to human needs and provides easy solutions that ultimately heighten the ironies of automation. The consequence of these ironies is not that we should halt innovation or stop automating production; rather, characteristics of human cognition need to be considered a key factor in the design of technological innovations. When the characteristics of both humans and machines, as well as the context in which they work together, are taken into account, human capabilities can be integrated harmoniously into production systems and improve performance instead of lowering it. Once aware of these psychological basics, engineers can draw on a wealth of psychological theories and methods to enhance the design of technical innovations. Combining this knowledge with the possibilities of digitalization can result in machines and assistance systems that support operators in dealing with increasingly complex production settings. This will allow operators to become more rather than less competent and to play their part in realizing the full potential of digital technologies in highly automated production systems.

However, system designers and engineers first need to understand these problems and the impact of their design choices. Then they can start to rethink their assumptions, methods, and goals and devise designs that help operators cope with the high task demands of complex systems. To this end, cross-domain knowledge sharing needs to become commonplace. Psychological knowledge, such as the basic theories of engineering psychology cited in this text, should be readily available in cross-domain publications and prepared for readers outside the field. Going a step further, selected interdisciplinary topics could be integrated into the engineering curriculum to shape a new mindset for interaction design, one that replaces human–machine competition with successful human–machine cooperation.

Author Contributions

Conceptualization, R.P.; writing—original draft preparation, R.P.; writing—review and editing, L.O.; visualization, R.P.; supervision, L.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Bainbridge, L. Ironies of Automation. Automatica 1983, 19, 775–779.

2. Baxter, G.; Rooksby, J.; Wang, Y.; Khajeh-Hosseini, A. The Ironies of Automation: Still Going Strong at 30? In Proceedings of the 30th European Conference on Cognitive Ergonomics, Edinburgh, UK, 28 August 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 65–71.

3. Strauch, B. Ironies of Automation: Still Unresolved After All These Years. IEEE Trans. Hum. Mach. Syst. 2017, 48, 419–433.

4. Taylor, F.W. Scientific Management; Harper and Row: New York, NY, USA, 1947.

5. Hollnagel, E. Coping with Complexity: Past, Present and Future. Cogn. Technol. Work 2012, 14, 199–205.

6. Fischer, A.; Greiff, S.; Funke, J. The Process of Solving Complex Problems. J. Probl. Solving 2012, 4, 19–42.

7. Kluge, A. Controlling Complex Technical Systems: The Control Room Operator’s Tasks in Process Industries. In The Acquisition of Knowledge and Skills for Taskwork and Teamwork to Control Complex Technical Systems; Springer: Dordrecht, The Netherlands, 2014; pp. 11–47. ISBN 978-94-007-5048-7.

8. Müller, R.; Oehm, L. Process Industries versus Discrete Processing: How System Characteristics Affect Operator Tasks. Cogn. Technol. Work 2019, 21, 337–356.

9. Klaeger, T.; Schult, A.; Oehm, L. Using Anomaly Detection to Support Classification of Fast Running (Packaging) Processes. In Proceedings of the 2019 IEEE 17th International Conference on Industrial Informatics (INDIN), Helsinki, Finland, 22–25 July 2019.

10. Goodstein, L.P. Discriminative Display Support for Process Operators. In Human Detection and Diagnosis of System Failures; Rasmussen, J., Rouse, W.B., Eds.; Springer: Boston, MA, USA, 1981; pp. 433–449. ISBN 978-1-4615-9232-7.

11. Smith, P.J.; Bennett, K.B.; Stone, R.B. Representation Aiding to Support Performance on Problem-Solving Tasks. Rev. Hum. Factors Ergon. 2006, 2, 74–108.

12. Kagermann, H.; Wahlster, W.; Helbig, J. Recommendations for Implementing the Strategic Initiative INDUSTRIE 4.0: Final Report of the Industrie 4.0 Working Group; Forschungsunion: Berlin, Germany, 2013.

13. Kinkel, S.; Friedewald, M.; Hüsing, B.; Lay, G.; Lindner, R. Arbeiten in Der Zukunft–Strukturen Und Trends Der Industriearbeit—Zukunftsreport; Büro Für Technikfolgen-Abschätzung beim Deutschen Bundestag: Berlin, Germany, 2007.

14. Hirsch-Kreinsen, H. Wandel von Produktionsarbeit–“Industrie 4.0”. WSI-Mitt. 2014, 67, 421–429.

15. Hirsch-Kreinsen, H. Digitalisation and Low-Skilled Work. WISO Diskurs 2016, 19, 2016.

16. Kagermann, H. Chancen von Industrie 4.0 nutzen. In Industrie 4.0 in Produktion, Automatisierung und Logistik; Bauernhansl, T., ten Hompel, M., Vogel-Heuser, B., Eds.; Springer Fachmedien Wiesbaden: Wiesbaden, Germany, 2014; pp. 603–614. ISBN 978-3-658-04681-1.

17. Mauermann, M. Methode Zur Analyse von Reinigungsprozessen in Nicht Immergierten Systemen der Lebensmittelindustrie; Technische Universität Dresden: Dresden, Germany, 2012.

18. Rasmussen, J. Risk Management in a Dynamic Society: A Modelling Problem. Saf. Sci. 1997, 27, 183–213.

19. Leveson, N.G. Applying Systems Thinking to Analyze and Learn from Events. Saf. Sci. 2011, 49, 55–64.

20. Cook, R.I. How Complex Systems Fail; Cognitive Technologies Laboratory, University of Chicago: Chicago, IL, USA, 1998.

21. Whittingham, R.B. The Blame Machine: Why Human Error Causes Accidents; Elsevier, Butterworth-Heinemann: Oxford, UK, 2004; ISBN 978-0-7506-5510-1.

22. Rozenblit, L.; Keil, F. The Misunderstood Limits of Folk Science: An Illusion of Explanatory Depth. Cogn. Sci. 2002, 26, 521–562.

23. Alter, A.L.; Oppenheimer, D.M.; Zemla, J.C. Missing the Trees for the Forest: A Construal Level Account of the Illusion of Explanatory Depth. J. Personal. Soc. Psychol. 2010, 99, 436.

24. Perrow, C. Normal Accidents: Living with High Risk Technologies—Updated Edition; Princeton University Press: Princeton, NJ, USA, 2011; ISBN 978-1-4008-2849-4.

25. Rasmussen, J.; Goodstein, L.P. Decision Support in Supervisory Control of High-Risk Industrial Systems. Automatica 1987, 23, 663–671.

26. Report on the Accident to Airbus A320-211 Aircraft in Warsaw on 14 September 1993; Main Commission Aircraft Accident Investigation Warsaw: Warsaw, Poland, 1994.

27. Windelband, L.; Fenzl, C.; Hunecker, F.; Riehle, T.; Spöttl, G.; Städtler, H.; Hribernik, K.; Thoben, K.-D. Zukünftige Qualifikationsanforderungen Durch Das “Internet Der Dinge” in Der Logistik. In Frequenz.net (Hrsg.): Qualifikationserfordernisse Durch das Internet der Dinge in der Logistik. Zusammenfassung der Studienergebnisse; Bremer Institut für Produktion und Logistik GmbH (BIBA): Bremen, Germany, 2011; pp. 5–9.

28. Hollnagel, E.; Woods, D.D. Cognitive Systems Engineering: New Wine in New Bottles. Int. J. Hum. Comput. Stud. 1999, 51, 339–356.

29. Yang, J.; Jensen, B.B.B.; Nordkvist, M.; Rasmussen, P.; Pedersen, B.; Kokholm, A.; Jensen, L.; Gernaey, K.V.; Krühne, U. Anomaly Analysis in Cleaning-in-Place Operations of an Industrial Brewery Fermenter. Ind. Eng. Chem. Res. 2018, 57, 12871–12883.

30. Dekker, S.W.A.; Woods, D.D. MABA-MABA or Abracadabra? Progress on Human-Automation Co-Ordination. Cogn. Technol. Work 2002, 4, 240–244.

31. Flugunfalluntersuchungsstelle. Aircraft Incident Report Airbus A-310-304 near Moscow on February 11, 1991 File-No.: & X 002-0/91; Flugunfalluntersuchungsstelle beim Luftfahrt-Bundesamt: Braunschweig, Germany, 1994.

32. Hattie, J.; Timperley, H. The Power of Feedback. Rev. Educ. Res. 2007, 77, 81–112.

33. Mory, E.H. Feedback Research Revisited. In Handbook of Research on Educational Communications and Technology; Routledge: London, UK, 2013; pp. 738–776.

34. Endsley, M.R.; Kiris, E.O. The Out-of-the-Loop Performance Problem and Level of Control in Automation. Hum. Factors 1995, 37, 381–394.

35. Endsley, M.R. Design and Evaluation for Situation Awareness Enhancement. Proc. Hum. Factors Soc. Annu. Meet. 1988, 32, 97–101.

36. Endsley, M.R. The Application of Human Factors to the Development of Expert Systems for Advanced Cockpits. Proc. Hum. Factors Soc. Annu. Meet. 1987, 31, 1388–1392.

37. Mosier, K.L.; Skitka, L.J. Human Decision Makers and Automated Decision Aids: Made for Each Other? In Automation and Human Performance: Theory and Applications; CRC Press: New York, NY, USA, 1996; p. 120.

38. Parasuraman, R.; Manzey, D.H. Complacency and Bias in Human Use of Automation: An Attentional Integration. Hum. Factors J. Hum. Factors Ergon. Soc. 2010, 52, 381–410.

39. Rasmussen, J. The Human Data Processor as a System Component. Bits and Pieces of a Model; Riso-M-1722; Danish Atomic Energy Commission: Roskilde, Denmark, 1974.

40. Rasmussen, J. Skills, Rules, and Knowledge; Signals, Signs, and Symbols, and Other Distinctions in Human Performance Models. IEEE Trans. Syst. Man Cybern. 1983, SMC-13, 257–266.

41. Rasmussen, J. Information Processing and Human-Machine Interaction. An Approach to Cognitive Engineering; North-Holland Series in System Science and Engineering; North-Holland: New York, NY, USA, 1986; ISBN 978-0-444-00987-6.

42. Kott, M.; Oehm, L.; Klaeger, T. Nahtqualität im Prozess bewerten. Fleischwirtschaft 2019, 9, 40–41.

43. Rasmussen, J. Human Errors. A Taxonomy for Describing Human Malfunction in Industrial Installations. J. Occup. Accid. 1982, 4, 311–333.

44. Childs, J.M.; Spears, W.D.; Prophet, W.W. Private Pilot Flight Skill Retention 8, 16, and 24 Months Following Certification; Embry-Riddle Aeronautical University: Daytona Beach, FL, USA, 1983.

45. Ashby, W.R. An Introduction to Cybernetics; Chapman & Hall: London, UK, 1956.

46. Vicente, K.J. Cognitive Work Analysis: Toward Safe, Productive, and Healthy Computer-Based Work; CRC Press: Boca Raton, FL, USA, 1999.

47. Bennett, K.B. Representation Aiding: Complementary Decision Support for a Complex, Dynamic Control Task. IEEE Control Syst. 1992, 12, 19–24.

48. Pomerantz, J.R. Visual Form Perception: An Overview. In Pattern Recognition by Humans and Machines: Visual Perception; Academic Press: Cambridge, MA, USA, 1986; Volume 2, pp. 1–30.

49. Jackson, P.R.; Wall, T.D. How Does Operator Control Enhance Performance of Advanced Manufacturing Technology? Ergonomics 1991, 34, 13.

50. Vicente, K.J.; Rasmussen, J. Ecological Interface Design: Theoretical Foundations. IEEE Trans. Syst. Man Cybern. 1992, 22, 589–606.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).