Human-like Attention-Driven Saliency Object Estimation in Dynamic Driving Scenes

Abstract

1. Introduction

- (1)

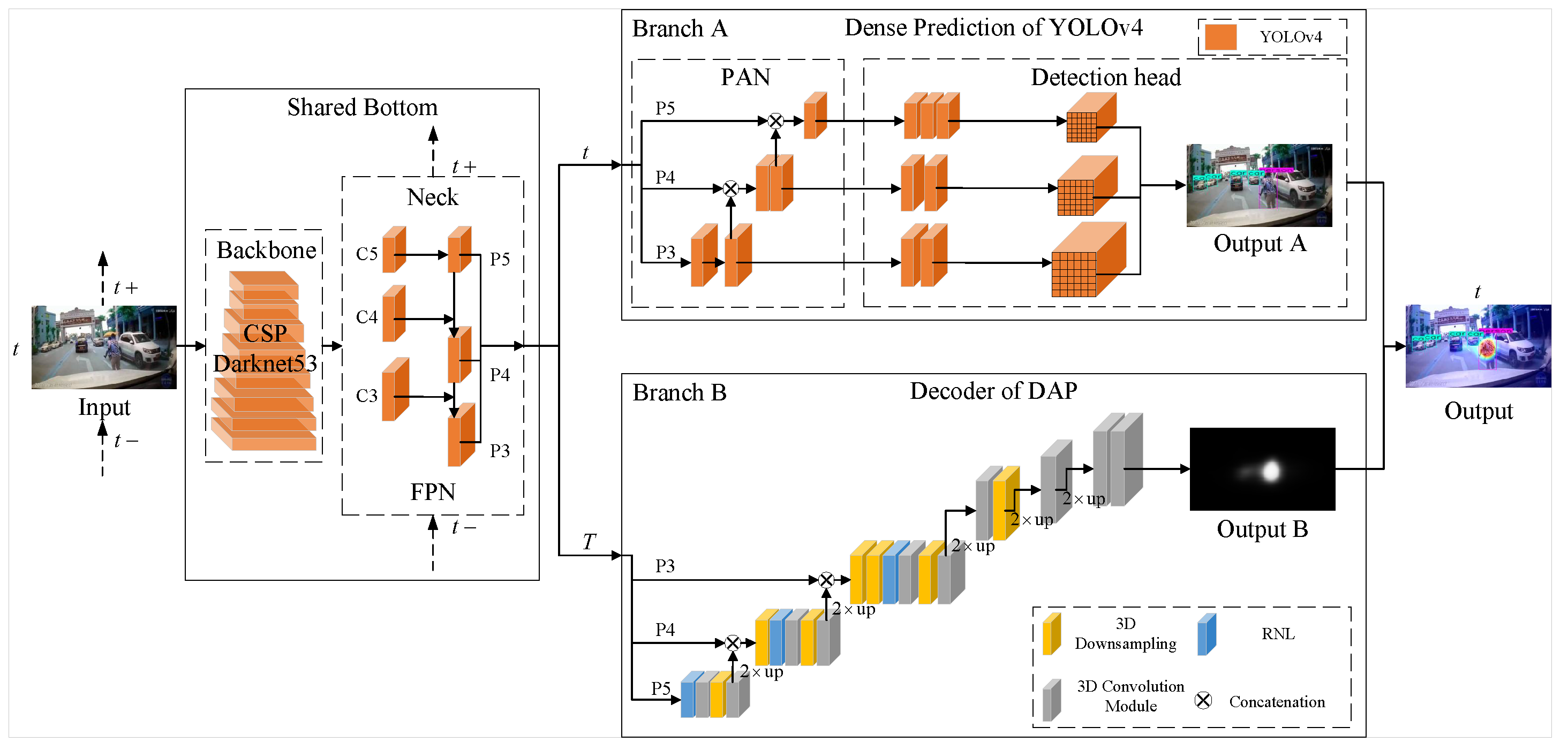

- Inspired by the human attention mechanism, we propose a human-like attention-driven SOE method based on a shared-bottom multi-task structure in dynamic driving scenes that can predict and detect the saliency, category, and location of objects in real time.

- (2)

- We propose a U-shaped encoder–decoder DAP network that is capable of performing feature-level fusion with any object detection network, achieving good portability and avoiding the disadvantage of repeatedly extracting bottom-level features.

- (3)

- We combine faster R-CNN and YOLOv4 with DAP to create SOE-F and SOE-Y, respectively. The experimental results on the DADA-2000 dataset demonstrate that our method can predict driver attention distribution and identify and locate salient objects in driving scenes with greater accuracy than competing methods.
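The structural point behind contributions (1) and (2), computing bottom-level features once and reusing them in both task branches, can be illustrated with a toy sketch. It is not the authors' network: `SharedBottom`, `attention_head` and `detection_head` are hypothetical placeholders, and the "features" are simply scaled input values. The sketch only counts how often the bottom-level extractor runs in a shared-bottom layout compared with two independent networks.

```python
from dataclasses import dataclass


@dataclass
class SharedBottom:
    """Hypothetical stand-in for a detector backbone; counts its executions."""
    calls: int = 0

    def __call__(self, frame: list[float]) -> list[float]:
        self.calls += 1
        # Placeholder "feature extraction": halve every input value.
        return [0.5 * x for x in frame]


def attention_head(features: list[float]) -> list[float]:
    """Hypothetical DAP branch: rescale features so they sum to 1."""
    total = sum(features) or 1.0
    return [f / total for f in features]


def detection_head(features: list[float], threshold: float = 0.2) -> list[int]:
    """Hypothetical detection branch: indices of cells whose feature exceeds a threshold."""
    return [i for i, f in enumerate(features) if f > threshold]


def shared_bottom_forward(bottom: SharedBottom, frame: list[float]):
    """Shared-bottom layout: extract features once, feed both task branches."""
    features = bottom(frame)
    return attention_head(features), detection_head(features)


def separate_networks_forward(bottom: SharedBottom, frame: list[float]):
    """Baseline layout: each task runs its own pass of the bottom-level extractor."""
    return attention_head(bottom(frame)), detection_head(bottom(frame))


if __name__ == "__main__":
    frame = [0.1, 0.8, 0.3, 0.6]
    shared, separate = SharedBottom(), SharedBottom()
    print(shared_bottom_forward(shared, frame), shared.calls)
    separate_networks_forward(separate, frame)
    print(separate.calls)
```

In the demo the shared layout calls the extractor once per frame and the separate layout calls it twice; in a real detector the saving would be one backbone pass per frame.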

2. Related Works

2.1. Driver Attention Prediction

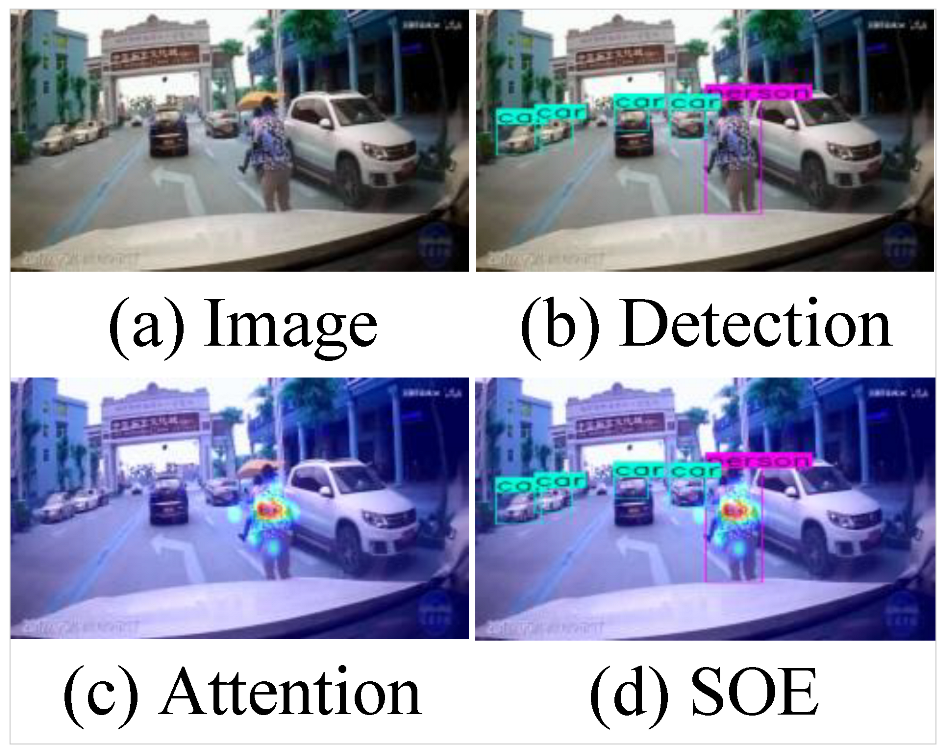

2.2. Saliency Object Estimation

2.3. Multi-Task Learning and Domain Adaptation

3. Methods

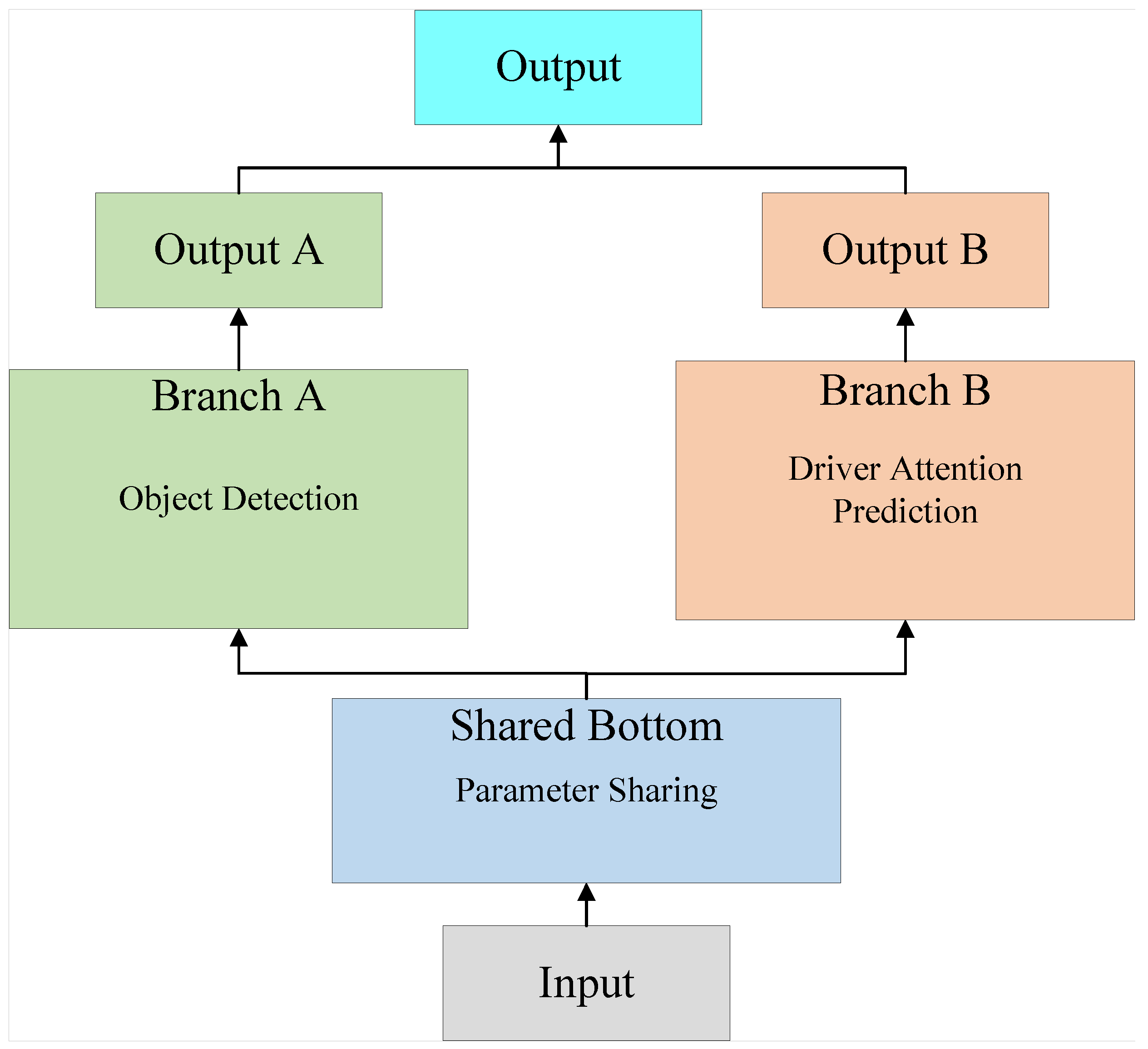

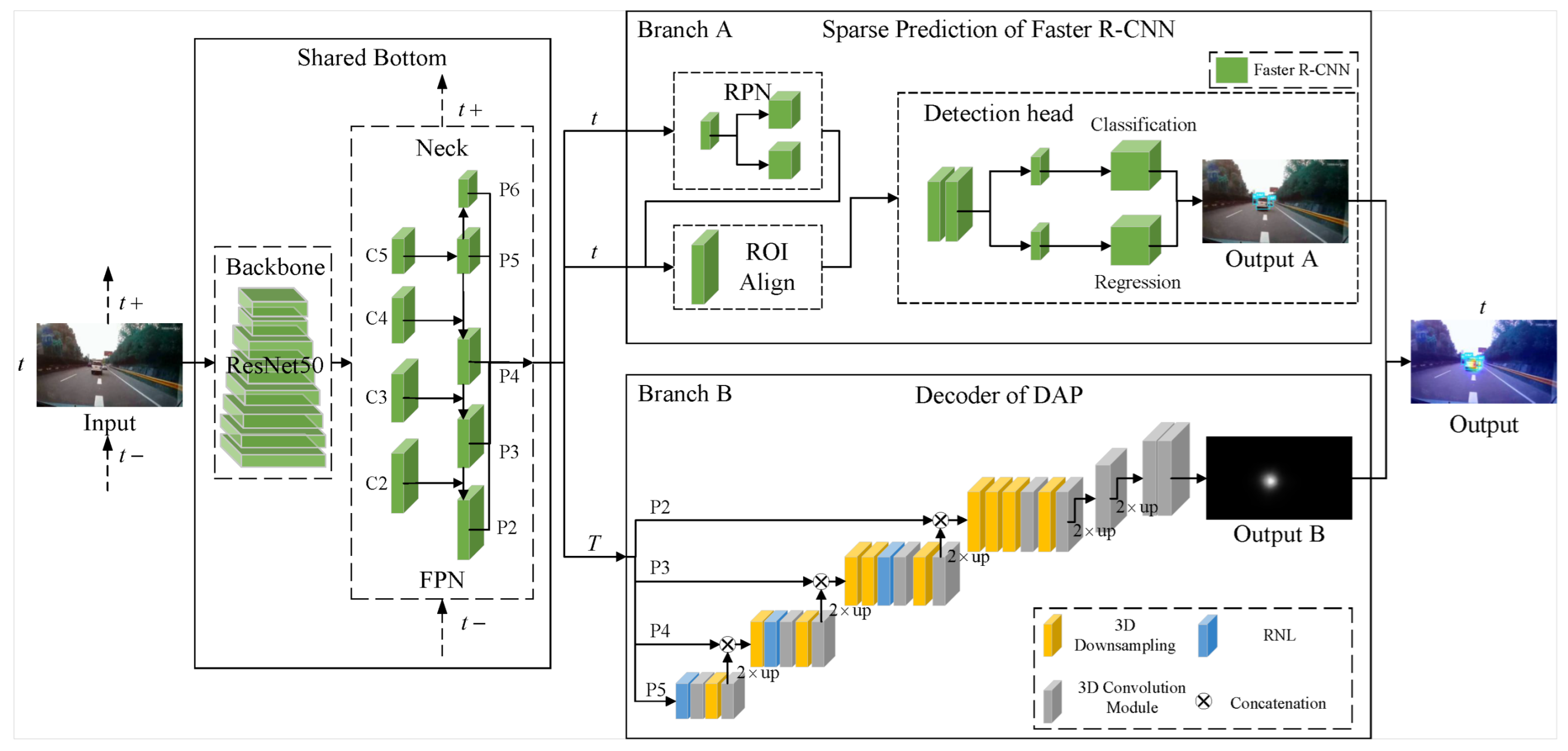

3.1. Saliency Object Estimation Framework

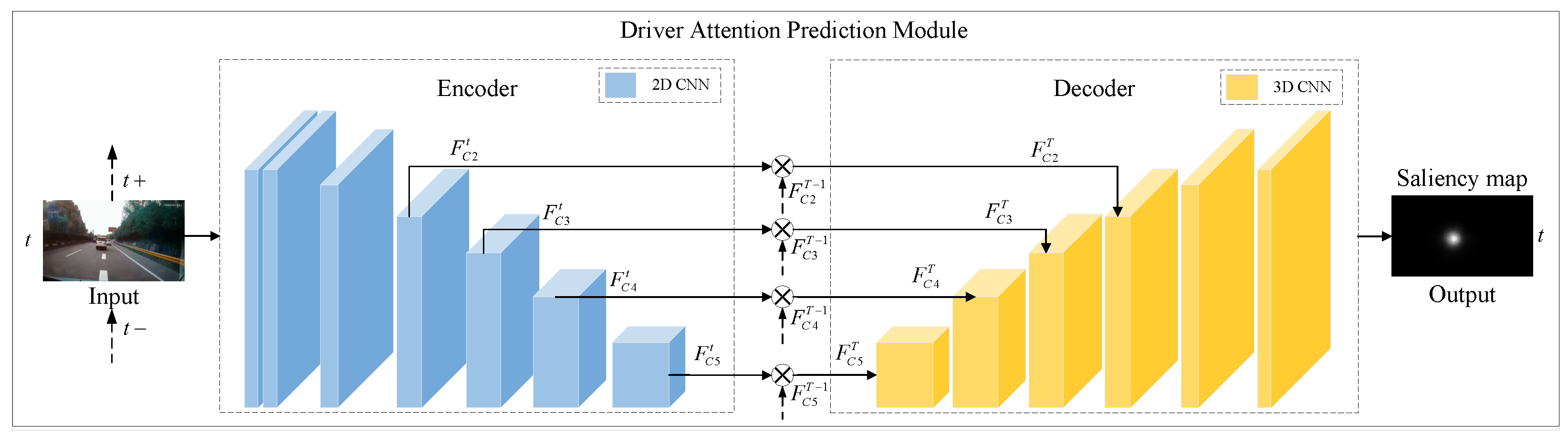

3.2. Driver Attention Prediction Module

3.3. Saliency Object Estimation Network

3.4. Loss Functions

4. Experiments

4.1. Experiment Setup

4.2. Performance Comparison

4.3. Ablation Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

[1] Suman, V.; Bera, A. RAIST: Learning Risk Aware Traffic Interactions via Spatio-Temporal Graph Convolutional Networks. arXiv 2020, arXiv:2011.08722.

[2] Wolfe, J.M.; Horowitz, T.S. Five factors that guide attention in visual search. Nat. Hum. Behav. 2017, 1, 58.

[3] Zhang, Z.; Tawari, A.; Martin, S.; Crandall, D. Interaction graphs for object importance estimation in on-road driving videos. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 8920–8927.

[4] Wang, W.; Shen, J.; Guo, F.; Cheng, M.M.; Borji, A. Revisiting video saliency: A large-scale benchmark and a new model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4894–4903.

[5] Alletto, S.; Palazzi, A.; Solera, F.; Calderara, S.; Cucchiara, R. DR(eye)VE: A dataset for attention-based tasks with applications to autonomous and assisted driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 54–60.

[6] Fang, J.; Yan, D.; Qiao, J.; Xue, J.; Wang, H.; Li, S. DADA-2000: Can driving accident be predicted by driver attention? Analyzed by a benchmark. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4303–4309.

[7] Xia, Y.; Zhang, D.; Kim, J.; Nakayama, K.; Zipser, K.; Whitney, D. Predicting driver attention in critical situations. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 658–674.

[8] Deng, T.; Yan, H.; Qin, L.; Ngo, T.; Manjunath, B. How do drivers allocate their potential attention? Driving fixation prediction via convolutional neural networks. IEEE Trans. Intell. Transp. Syst. 2019, 21, 2146–2154.

[9] Li, Q.; Liu, C.; Chang, F.; Li, S.; Liu, H.; Liu, Z. Adaptive Short-Temporal Induced Aware Fusion Network for Predicting Attention Regions Like a Driver. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18695–18706.

[10] Caruana, R. Multitask learning. Mach. Learn. 1997, 28, 41–75.

[11] Droste, R.; Jiao, J.; Noble, J.A. Unified image and video saliency modeling. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 419–435.

[12] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

[13] Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

[14] Lai, Q.; Wang, W.; Sun, H.; Shen, J. Video saliency prediction using spatiotemporal residual attentive networks. IEEE Trans. Image Process. 2019, 29, 1113–1126.

[15] Min, K.; Corso, J.J. TASED-Net: Temporally-aggregating spatial encoder-decoder network for video saliency detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2394–2403.

[16] Palazzi, A.; Abati, D.; Solera, F.; Cucchiara, R. Predicting the Driver’s Focus of Attention: The DR(eye)VE Project. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1720–1733.

[17] Fang, J.; Yan, D.; Qiao, J.; Xue, J.; Yu, H. DADA: Driver attention prediction in driving accident scenarios. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4959–4971.

[18] Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

[19] Gao, M.; Tawari, A.; Martin, S. Goal-oriented object importance estimation in on-road driving videos. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5509–5515.

[20] Xu, D.; Ouyang, W.; Wang, X.; Sebe, N. PAD-Net: Multi-tasks guided prediction-and-distillation network for simultaneous depth estimation and scene parsing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 675–684.

[21] Gao, Y.; Ma, J.; Zhao, M.; Liu, W.; Yuille, A.L. NDDR-CNN: Layerwise feature fusing in multi-task CNNs by neural discriminative dimensionality reduction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3205–3214.

[22] Chang, W.G.; You, T.; Seo, S.; Kwak, S.; Han, B. Domain-specific batch normalization for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7354–7362.

[23] Ma, J.; Zhao, Z.; Yi, X.; Chen, J.; Hong, L.; Chi, E.H. Modeling task relationships in multi-task learning with multi-gate mixture-of-experts. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1930–1939.

[24] Khattar, A.; Hegde, S.; Hebbalaguppe, R. Cross-domain multi-task learning for object detection and saliency estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3639–3648.

[25] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

[26] Huang, G.; Bors, A.G. Region-based non-local operation for video classification. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 10010–10017.

[27] Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

[28] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

[29] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

[30] Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

[31] Li, J.; Xia, C.; Song, Y.; Fang, S.; Chen, X. A data-driven metric for comprehensive evaluation of saliency models. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 190–198.

[32] Bylinskii, Z.; Judd, T.; Oliva, A.; Torralba, A.; Durand, F. What do different evaluation metrics tell us about saliency models? IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 740–757.

[33] Perry, J.S.; Geisler, W.S. Gaze-contingent real-time simulation of arbitrary visual fields. In Proceedings of the Human Vision and Electronic Imaging VII, San Jose, CA, USA, 19 January 2002; SPIE: Bellingham, WA, USA, 2002; Volume 4662, pp. 57–69.

[34] Jiang, M.; Huang, S.; Duan, J.; Zhao, Q. SALICON: Saliency in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1072–1080.

[35] Zhang, K.; Chen, Z. Video saliency prediction based on spatial-temporal two-stream network. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 3544–3557.

[36] Cornia, M.; Baraldi, L.; Serra, G.; Cucchiara, R. A deep multi-level network for saliency prediction. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3488–3493.

| Methods | NSS↑ | AUC-J↑ | s-AUC↑ | SIM↑ | CC↑ | KL↓ |
|---|---|---|---|---|---|---|
| SALICON [34] | 2.71 | 0.91 | 0.65 | 0.30 | 0.43 | 2.17 |
| Two-Stream [35] | 1.48 | 0.84 | 0.64 | 0.14 | 0.23 | 2.85 |
| MLNet [36] | 0.30 | 0.59 | 0.54 | 0.07 | 0.04 | 11.78 |
| BDD-A [7] | 2.15 | 0.86 | 0.63 | 0.25 | 0.33 | 3.32 |
| DR(eye)VE [16] | 2.92 | 0.91 | 0.64 | 0.32 | 0.45 | 2.27 |
| ACLNet [4] | 3.15 | 0.91 | 0.64 | 0.35 | 0.48 | 2.51 |
| SCAFNet [17] | 3.34 | 0.92 | 0.66 | 0.37 | 0.50 | 2.19 |
| ASIAF-Net [9] | 3.39 | 0.93 | 0.78 | 0.36 | 0.49 | 1.66 |
| SOE-Y | 3.38 | 0.93 | 0.81 | 0.34 | 0.50 | 1.64 |
| SOE-F | 3.47 | 0.93 | 0.84 | 0.34 | 0.51 | 1.60 |

Fixation point map metrics: NSS, AUC-J, s-AUC. Saliency map metrics: SIM, CC, KL. ↑: higher is better; ↓: lower is better.
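For readers who want to compute these scores themselves, the following sketch implements four of the metrics (NSS, CC, SIM, KL) on flattened maps using their common textbook definitions, as surveyed by Bylinskii et al. [32]. It is an assumption that the authors used exactly these definitions: the population standard deviation in NSS and the `EPS` regulariser in KL are choices of this sketch, so it will not necessarily reproduce the table. AUC-J and s-AUC are omitted because they require threshold sweeps and, for s-AUC, negative fixations sampled from other frames.

```python
import math

# float64 machine epsilon, used as a small regulariser in KL
EPS = 2.220446049250313e-16


def _normalise_sum(m: list[float]) -> list[float]:
    """Rescale a non-negative map so that its values sum to 1."""
    total = sum(m)
    return [v / total for v in m]


def nss(pred: list[float], fixations: list[int]) -> float:
    """Normalized Scanpath Saliency: mean z-scored prediction at fixated pixels (1 = fixated)."""
    n = len(pred)
    mean = sum(pred) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in pred) / n)  # population std
    z = [(v - mean) / std for v in pred]
    hits = [zi for zi, f in zip(z, fixations) if f]
    return sum(hits) / len(hits)


def cc(pred: list[float], gt: list[float]) -> float:
    """Pearson linear correlation coefficient between two maps."""
    n = len(pred)
    mp, mg = sum(pred) / n, sum(gt) / n
    cov = sum((p - mp) * (g - mg) for p, g in zip(pred, gt))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sg = math.sqrt(sum((g - mg) ** 2 for g in gt))
    return cov / (sp * sg)


def sim(pred: list[float], gt: list[float]) -> float:
    """Similarity: histogram intersection of the two sum-normalised maps."""
    p, g = _normalise_sum(pred), _normalise_sum(gt)
    return sum(min(a, b) for a, b in zip(p, g))


def kl(pred: list[float], gt: list[float]) -> float:
    """KL(gt || pred) between the sum-normalised maps; lower is better."""
    p, g = _normalise_sum(pred), _normalise_sum(gt)
    return sum(gi * math.log(EPS + gi / (pi + EPS)) for pi, gi in zip(p, g))
```

NSS is the only one of the four that takes the binary fixation point map; the other three compare the prediction with the continuous ground-truth saliency map, which matches the grouping of columns in the table.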

| Methods | Shared Bottom (MB) | Branch A (MB) | Branch B (MB) | Total Model Size (MB) | Runtime (s) |
|---|---|---|---|---|---|
| SOE-Y | 170.2 | 86.8 | 31.4 | 288.4 | 0.03 |
| SOE-F | 110.1 | 57.4 | 15.2 | 182.7 | 0.08 |
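As a quick consistency check on the table above, each total equals the sum of the shared bottom and the two branches, and the shared bottom accounts for roughly 59% (SOE-Y) and 60% (SOE-F) of the total model size:

$$
\begin{aligned}
\text{SOE-Y:}\quad & 170.2 + 86.8 + 31.4 = 288.4\ \text{MB}, & \frac{170.2}{288.4} &\approx 0.590 \\
\text{SOE-F:}\quad & 110.1 + 57.4 + 15.2 = 182.7\ \text{MB}, & \frac{110.1}{182.7} &\approx 0.603
\end{aligned}
$$

This shared part is the portion that both task branches reuse rather than duplicate.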

| Methods | Model Size (MB) | Runtime (s) |
|---|---|---|
| SALICON [34] | 117 | 0.5 |
| Two-Stream [35] | 315 | 20 |
| ACLNet [4] | 250 | 0.02 |
| DR(eye)VE [16] | 155 | 0.03 |
| SOE-Y | 288.4 | 0.03 |
| SOE-F | 182.7 | 0.08 |
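To relate the runtime column to the real-time claim, the sketch below converts runtimes into throughput. It assumes the column reports inference time per frame; the tables state neither the hardware nor whether pre-processing is included. Under that assumption SOE-Y runs at about 33 fps and SOE-F at 12.5 fps.

```python
# Runtimes copied from the table, in seconds; assumed here to be per-frame inference time.
RUNTIMES_S = {
    "SALICON": 0.5,
    "Two-Stream": 20.0,
    "ACLNet": 0.02,
    "DR(eye)VE": 0.03,
    "SOE-Y": 0.03,
    "SOE-F": 0.08,
}


def frames_per_second(runtime_s: float) -> float:
    """Throughput implied by a per-frame runtime."""
    if runtime_s <= 0:
        raise ValueError("runtime must be positive")
    return 1.0 / runtime_s


if __name__ == "__main__":
    for name, t in RUNTIMES_S.items():
        print(f"{name:>10}: {frames_per_second(t):7.2f} fps")
```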

| Methods | NSS↑ | AUC-J↑ | s-AUC↑ | SIM↑ | CC↑ | KL↓ |
|---|---|---|---|---|---|---|
| SOE-F (without RNL) | 3.447 | 0.931 | 0.839 | 0.340 | 0.513 | 1.608 |
| SOE-F | 3.471 | 0.932 | 0.840 | 0.340 | 0.514 | 1.596 |
| SOE-Y (without RNL) | 3.363 | 0.929 | 0.813 | 0.341 | 0.502 | 1.641 |
| SOE-Y | 3.384 | 0.929 | 0.814 | 0.343 | 0.503 | 1.637 |

Fixation point map metrics: NSS, AUC-J, s-AUC. Saliency map metrics: SIM, CC, KL. ↑: higher is better; ↓: lower is better.
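Because KL runs in the opposite direction to the other five metrics, the effect of RNL in the ablation table is easiest to read as a signed gain where positive always means "better with RNL". The sketch below computes that from the table values; the function name `rnl_gain` is hypothetical.

```python
# Metric order as in the ablation table; KL is the only lower-is-better metric.
HIGHER_IS_BETTER = {"NSS": True, "AUC-J": True, "s-AUC": True,
                    "SIM": True, "CC": True, "KL": False}
METRICS = list(HIGHER_IS_BETTER)

# (without RNL, with RNL) rows copied from the ablation table.
ABLATION = {
    "SOE-F": ((3.447, 0.931, 0.839, 0.340, 0.513, 1.608),
              (3.471, 0.932, 0.840, 0.340, 0.514, 1.596)),
    "SOE-Y": ((3.363, 0.929, 0.813, 0.341, 0.502, 1.641),
              (3.384, 0.929, 0.814, 0.343, 0.503, 1.637)),
}


def rnl_gain(without: tuple, with_rnl: tuple) -> dict:
    """Signed improvement per metric, rounded to 3 decimals; positive means better with RNL."""
    gains = {}
    for name, before, after in zip(METRICS, without, with_rnl):
        delta = after - before if HIGHER_IS_BETTER[name] else before - after
        gains[name] = round(delta, 3)
    return gains


if __name__ == "__main__":
    for model, (without, with_rnl) in ABLATION.items():
        print(model, rnl_gain(without, with_rnl))
```

For both variants no metric gets worse with RNL, but the gains are small: at most 0.024 in NSS (SOE-F) and 0.012 in KL (SOE-F), with SIM unchanged for SOE-F and AUC-J unchanged for SOE-Y.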

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, L.; Ji, B.; Guo, B. Human-like Attention-Driven Saliency Object Estimation in Dynamic Driving Scenes. Machines 2022, 10, 1172. https://doi.org/10.3390/machines10121172


Chicago/Turabian style: Jin, Lisheng, Bingdong Ji, and Baicang Guo. 2022. "Human-like Attention-Driven Saliency Object Estimation in Dynamic Driving Scenes" Machines 10, no. 12: 1172. https://doi.org/10.3390/machines10121172
