1. Introduction

Grasping objects is a canonical problem in robotic manipulation, with applications in assembly [1], welding [2], and so on. In particular, vision-based robotic grasping has been studied for many years with great progress: visual perception is used to select an appropriate grasping posture for the object. However, because objects differ in size and shape, are often chaotically stacked, and must not collide with each other during grasping, fast and accurate grasping remains very challenging. According to [3], vision-based robotic grasping mainly includes three essential tasks: object localization, object pose estimation, and grasp estimation.

Early robotic grasping research focused on matching 3D models of objects to estimate the object pose. The process is as follows. In the offline stage, a virtual camera is rendered around the 3D model to extract the features of the object at each camera pose; the features and the corresponding pose transformation between the object and the camera are then saved in a grasping database. In the online stage, after the camera captures an image, features are extracted and compared with those from the offline stage, and the entry with the highest similarity is selected as the pose transformation between the camera and the object. Traditional methods use hand-crafted features for matching, mainly SIFT [4], Linemod [5], and PPF [6]. However, these feature-based methods fail when the objects lack rich textures. Even when the scene contains multiple objects with rich textures, a template must be created for each object. In addition, a 3D model of the object is not always available. With the significant progress of deep learning in image processing, vision- and learning-based techniques have been applied to estimate the pose of unknown objects [7]. For example, PoseCNN [8] was proposed to estimate the 6D object pose directly: features are extracted by a multi-layer convolutional neural network, two fully convolutional networks estimate the 3D translation of the object, and fully connected networks regress a quaternion representation of the 3D rotation. PVNet [9] votes on projected 2D feature points and then uses their correspondences to compute the 6D pose of the object. However, most deep-learning-based pose estimation methods require large-scale computing resources, which limits the application of robotic grasping in the real world.

Many researchers study the direct estimation of grasping poses. For example, Morrison et al. proposed the Generative Grasping Convolutional Neural Network (GG-CNN) [10], which is trained on the Cornell Grasp Dataset and predicts a pixel-wise grasp quality. Dex-Net [11,12,13] learns from a dataset of over 10,000 object models and 2.5 million parallel-jaw grasps and achieves good performance in grasping unknown objects. The 3D-CNN [14] converts irregular point clouds into a regularly distributed representation that a neural network can process. To extract 3D spatial structure features, PointNet [15] processes the input point cloud directly. These methods assume that objects in a scene are scattered. However, occlusions usually occur: in a cluttered environment, objects are self-occluded or occluded by other objects, so designing effective grasping strategies for stacked objects remains challenging. Refs. [16,17,18,19] combine deep learning and reinforcement learning for robotic grasping, mapping RGB-D images to specific action policies. Kalashnikov et al. proposed QT-Opt [20], a scalable reinforcement learning grasping method with a final grasping accuracy of around 96%. In their method, the robot was trained on 800,000 grasping attempts, and training took as long as 3000 h. Mahler et al. used the Dex-Net 4.0 policy to clear 25 unknown items at an average rate of 300 grasps/h, showing that the model is highly adaptable in unknown environments [21]. However, these methods require large amounts of data, which often take significant time and resources to collect.

To achieve fast and accurate robotic grasping in cluttered environments, the synergy of two primitive actions (pushing and grasping) from a single fixed viewpoint has been applied to robotic grasping and achieves good performance. For instance, based on data from a fixed-viewpoint camera, ref. [22] proposed a visual push-to-grasp strategy to improve the grasping success rate in cluttered and occluded environments. However, pushing may cause objects to collide with each other, which makes it unsuitable for grasping fragile objects.

The above methods all belong to passive perception; that is, the pose of the camera is fixed. A fixed camera captures insufficient information, in particular nothing about the back side of objects, which makes it difficult to decide on a grasp when the full object geometry is unavailable. While pushing can separate objects from each other, it is not suitable for scenarios where objects must not collide during grasping. In addition, pushing may move an object outside the fixed camera’s FOV, making it challenging to remove the object from the scene.

The active vision framework was proposed to solve the problems of fixed single-viewpoint methods by actively moving the camera to the best viewpoint [7]. Morrison et al. [23] proposed a multi-view method that selects informative viewpoints based on the distribution of grasping poses under clutter and occlusions. Ref. [24] explores the relationship between viewpoints and grasp performance and proposes a smart viewpoint selection algorithm: when a rough grasping pose of an object is known, an optimal viewpoint is calculated to improve grasping accuracy. Calli et al. [25] employ reinforcement learning to obtain a viewpoint optimization policy that improves the quality of the synthesized grasp over time and thereby raises the success rate. An active vision strategy based on extremum seeking control is proposed in [26] to optimize the viewpoint, which improves the quality of the data supplied to the underlying algorithm. Besides actively adjusting the camera viewpoint, actively changing the scene also achieves good grasp performance. For example, ref. [27] proposes a strategy that uses lateral pushing motions to separate an object from surrounding clutter consisting of previously unseen objects. It is designed to separate a single specific target, not all objects in a complex environment. Through a literature survey, we found that active visual perception methods achieve higher success rates than passive ones. However, in the active multi-view method, the robot must move at least twice to grasp an object. As objects are grasped and removed from the workspace, the complexity of the scene decreases, and the robot can obtain a grasping pose without adjusting the camera pose. Adjusting the camera pose every time is therefore redundant and results in low grasping efficiency in many scenarios.

In the above methods, the robot cannot independently adjust the viewpoint according to the scene, which leads to a low grasp success rate and scene clearing rate when grasping objects in a cluttered environment. Inspired by human dexterity, the robot should be able to decide, according to the scene, whether it needs to adjust the viewpoint to obtain a better grasp pose. Therefore, we propose a Viewpoint Adjusting and Grasping Synergy (VAGS) strategy that enables the robot to adjust the camera viewpoint independently. To summarize, the main contributions of the paper are:

We propose a deep reinforcement learning-based VAGS strategy for fast and accurate grasping in cluttered scenes. To the best of our knowledge, the strategy is the first to synergize viewpoint adjusting and direct grasping through self-supervised trials. Furthermore, our experiments show that the strategy is effective and provides good results for robotic grasping.

A Dynamic Action Exploration Space (DAES) method based on ε-greedy is proposed to speed up the training of VAGS. Unlike the traditional ε-greedy action exploration strategy, in which the robot explores the entire workspace, the robot only selects pixels that contain objects as grasping actions, which suppresses unreasonable actions.

A staged training scheme is proposed to address the problem that viewpoint adjusting and direct grasping cannot be synergized during synchronous training because the direct grasping network and the viewpoint adjustment network have different output channels. The scheme provides a new training method for the synergy of two action primitives.

3. Method

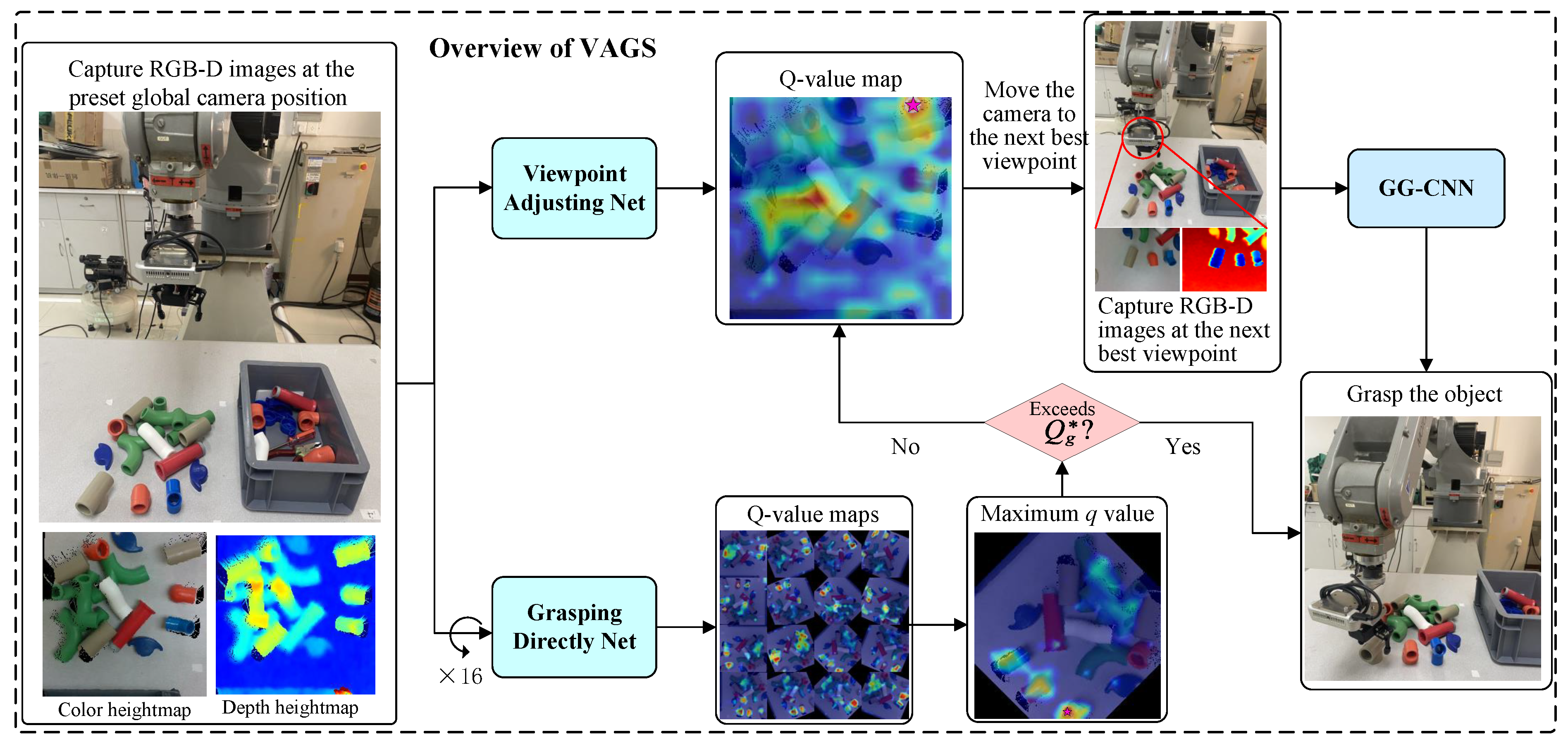

This section discusses the overall learning-based grasping framework and the details.

Using a robot to adjust the viewpoint and grasp an object is a synergy optimization problem. We model it as a Markov Decision Process $(S, A, P, R)$, which includes the state space $S$, action space $A$, transition probability function $P$, and reward function $R$. In this paper, off-policy Q-learning is used to train a synergy strategy that chooses the best primitive action (viewpoint adjusting or grasping) by maximizing the Q-function. In the synergy strategy, the state $s_t$ at time $t$ is defined as a pair of state maps: a color heightmap and a depth heightmap. They are computed as follows. First, the eye-in-hand camera captures color and depth images at a preset global camera position from which the entire workspace can be observed. Second, to avoid the effect of resolution, the color and depth images are projected onto a 3D point cloud and transformed into the robot coordinate system. Then, the transformed point cloud is projected vertically along the direction of gravity, yielding a heightmap image representation with both color (RGB) and height-from-bottom (D) channels, as shown in Figure 2. Based on the size of the workspace (0.448 m × 0.448 m) and the physical size represented by each pixel (2 mm), we set the resolution of the heightmaps to $224 \times 224$.
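The vertical projection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `build_depth_heightmap`, the workspace origin arguments `x_min`, `y_min`, and `z_bottom`, and the choice of mapping x to columns and y to rows are all hypothetical. The sketch keeps the highest point that falls into each 2 mm cell, which corresponds to viewing the point cloud from directly above.

```python
import math


def build_depth_heightmap(points, x_min, y_min, z_bottom, size=224, pixel_m=0.002):
    """Project robot-frame points (x, y, z), in meters, onto a top-down height grid.

    Hypothetical helper: the axis conventions and argument names are assumptions.
    """
    heightmap = [[0.0] * size for _ in range(size)]
    for x, y, z in points:
        col = math.floor((x - x_min) / pixel_m)
        row = math.floor((y - y_min) / pixel_m)
        if not (0 <= row < size and 0 <= col < size):
            continue  # the point lies outside the workspace
        height = z - z_bottom  # height above the workspace bottom
        if height > heightmap[row][col]:
            heightmap[row][col] = height  # keep the topmost surface per cell
    return heightmap
```

A color heightmap could be built in the same way by storing the RGB value of the topmost point in each cell.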

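For the action $a_t$, inspired by [22], we define it as in (1):

$$a_t = (\psi, q) \mid \psi \in \{\text{viewpoint adjusting}, \text{grasping}\},\ q \rightarrow p \in s_t \tag{1}$$

where $\psi$ is the action primitive (viewpoint adjusting or grasping directly) executed at the 3D pose $q$, and $p$ is the heightmap pixel onto which $q$ is mapped. The details of the action are defined as follows: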

Viewpoint Adjusting: $q$ denotes the pose of the camera mounted on the end of the robot. The camera pose has six degrees of freedom: three for the camera position and three for the camera orientation. To reduce complexity and improve training efficiency, we simplify the camera pose to the three positional degrees of freedom $(x, y, z)$; the orientation is preset. We parameterize the simplified pose with a pixel $p = (r, c)$ of the heightmap image representation of the state $s_t$, where $r$ and $c$ are the row and column coordinates of the pixel. Based on experience, $z$ is set 25 cm above the height at that pixel of the depth heightmap. Thus $q$ is mapped to $p$.

Grasping: following [22], $q$ represents the center position of a top-down parallel-jaw gripper, and one of $k = 16$ grasping orientations gives the grasping angle of the gripper. When grasping the object, the center point of the gripper jaws moves to 3 cm below $q$ (in the direction of gravity).

The overview of our proposed grasping system is shown in Figure 2. An RGB-D camera is mounted at the end of the robot. When the camera is moved to the preset global camera position, the color and depth images are captured and converted to a color heightmap and a depth heightmap. Then, the heightmaps are used as the input of the viewpoint adjusting net to obtain a q-value map. At the same time, the heightmaps are rotated 16 times, in increments of 22.5 degrees, and fed to the grasping directly net to obtain 16 q-value maps. If the maximum grasping q value output by the grasping directly net is greater than the grasping threshold, the robot grasps the object directly. Otherwise, the camera is moved to the next viewpoint according to the output of the viewpoint adjusting net, where it captures a depth image. Our experiments show that when there are few objects in the scene, the grasping success rate of grasp detection with the Grasping Directly Net (GDNet) differs little from that with GG-CNN. In addition, our graphics card has only 8 GB of memory, which would be exceeded if GDNet were also used for grasp detection at the next viewpoint. Therefore, GG-CNN pretrained on the Cornell Grasp Dataset is used to predict the grasping pose at the next viewpoint, and the robot then performs the grasping action.
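The decision rule in this overview (grasp directly if the best grasping score exceeds the threshold, otherwise adjust the viewpoint) can be sketched as below. This is an illustrative sketch, not the authors' code: `argmax_2d` and `select_action` are hypothetical names, the q-value maps are assumed to be already expressed in the heightmap frame, and the camera position is returned relative to the heightmap origin with x along columns and y along rows, which is an assumed convention. The 25 cm camera offset and the 22.5 degree rotation step are taken from the text.

```python
def argmax_2d(q_map):
    """Return (best_value, row, col) for a 2D list of scores."""
    best = (float("-inf"), -1, -1)
    for r, row in enumerate(q_map):
        for c, q in enumerate(row):
            if q > best[0]:
                best = (q, r, c)
    return best


def select_action(grasp_q_maps, view_q_map, depth_heightmap, grasp_threshold,
                  pixel_m=0.002, camera_offset_m=0.25, angle_step_deg=22.5):
    """Choose between grasping directly and adjusting the viewpoint."""
    # Best grasp over all rotation channels (16 in the text).
    best_q, best_k, best_r, best_c = float("-inf"), -1, -1, -1
    for k, q_map in enumerate(grasp_q_maps):
        q, r, c = argmax_2d(q_map)
        if q > best_q:
            best_q, best_k, best_r, best_c = q, k, r, c
    if best_q > grasp_threshold:
        return {"primitive": "grasp",
                "pixel": (best_r, best_c),
                "angle_deg": best_k * angle_step_deg}
    # Otherwise place the camera 25 cm above the pixel preferred by the viewpoint net.
    _, r, c = argmax_2d(view_q_map)
    z = depth_heightmap[r][c] + camera_offset_m
    return {"primitive": "adjust_viewpoint",
            "pixel": (r, c),
            "camera_xyz": (c * pixel_m, r * pixel_m, z)}
```

Keeping the threshold as an explicit argument makes it easy to sweep over candidate values when tuning it.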

3.1. Optimal Action Value Function and Policy

The strategy network includes the Viewpoint Adjustment Net (VANet) and the Grasping Directly Net (GDNet). Both use parallel 121-layer DenseNets [28], pretrained on ImageNet [29], as the backbone to extract features from the color heightmap and the depth heightmap. The features extracted by the DenseNets are concatenated and fed to the following network, which consists of two additional convolutional layers with nonlinear activation functions (ReLU) [30] and spatial batch normalization [31] for further feature embedding. Finally, a bilinear interpolation layer produces q value maps with the same size and resolution as the heightmaps. The heightmaps representing the state $s_t$ are used as input to the networks. The q value output by the GDNet at a pixel $p$ represents the grasping quality score for executing the grasping action at $p$. Similarly, the q value output by the VANet at a pixel $p$ represents the score for executing the viewpoint adjusting action at $p$.

The GDNet and VANet use the same loss function [22], defined as:

where

is the weight parameter of the current network,

is the weight parameter of the target network,

is the output of the current network in the state and action

,

is the output of the target network.
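For orientation, a loss built from a current network and a target network is commonly a temporal-difference loss. The sketch below assumes a Huber-style form and a discount factor of 0.5, as is common in deep Q-learning for grasping; both are assumptions for illustration, and the exact loss used in this paper may differ. The names `td_target` and `huber_loss` are hypothetical.

```python
def td_target(reward, next_q_values_target, gamma=0.5, terminal=False):
    """Target value: reward plus discounted best q value from the target network.

    gamma=0.5 is an assumed discount factor, not a value stated in the text.
    """
    if terminal:
        return reward
    return reward + gamma * max(next_q_values_target)


def huber_loss(q_pred, target, delta=1.0):
    """Huber loss between the current network output and the target value."""
    err = abs(q_pred - target)
    if err < delta:
        return 0.5 * err * err  # quadratic near zero
    return delta * (err - 0.5 * delta)  # linear for large errors


# Example: one transition with reward 1 and two candidate next-state q values.
y = td_target(1.0, [0.2, 0.6])
loss = huber_loss(1.0, y)
```

In practice the gradient would be propagated only through the pixel and rotation channel of the executed action, with the target network weights held fixed.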

3.2. Training Details

The GDNet and VANet have different numbers of output channels: 16 for the GDNet and 1 for the VANet. The reward function considers only whether the grasp succeeds, not the time it takes. If we train the GDNet and VANet together, the robot always grasps the object after adjusting the viewpoint to obtain the maximum reward. Therefore, we train the model in three stages. The GDNet is trained in the first stage and the VANet in the second. In the last stage, the two networks are trained alternately in scenes with 1 to 10 objects to improve the synergy between them. Training the entire model takes about 15 h.

(1) Grasping Directly: To train the GDNet, the clutter from random stacking in the scene needs to be reduced. Therefore, no more than 5 objects are selected at this stage. Their colors and shapes are randomly chosen to increase the robustness of the network during training. The grasping reward function is defined as:

$$R_g = \begin{cases} 1, & \text{if the grasp succeeds} \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

As shown in (3), the reward is 1 when the object is successfully grasped and 0 otherwise.

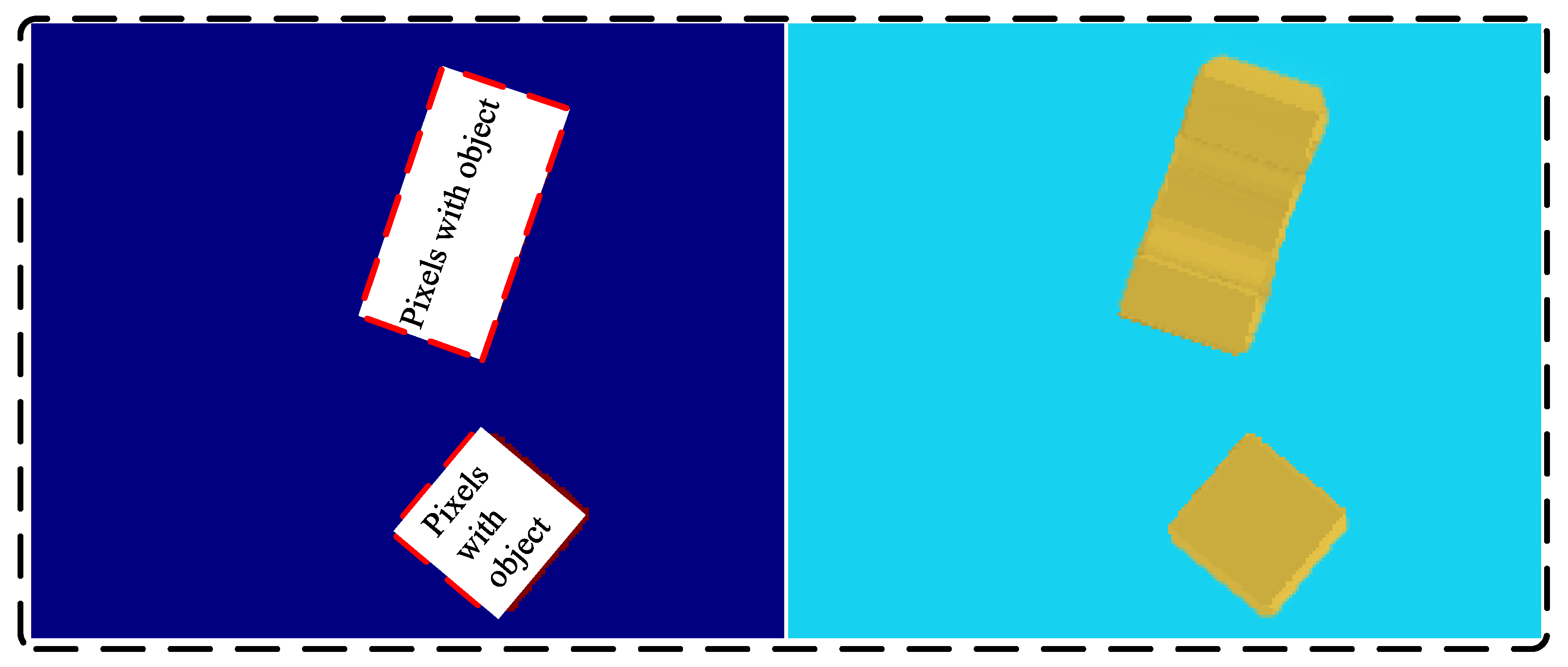

We train the strategy with prioritized experience replay [32] and an $\epsilon$-greedy exploration strategy. However, the traditional $\epsilon$-greedy exploration strategy usually treats the entire workspace as the exploration space, so the robot performs many invalid actions while exploring, resulting in low learning efficiency. In robotic grasping tasks, grasping is only feasible when the grasping point is located on an object. Therefore, a Dynamic Action Exploration Space (DAES) method is proposed to suppress unreasonable actions of the robot. Specifically, the robot only selects pixels that contain objects as grasping points, as shown in (4):

$$a_t = \begin{cases} \arg\max_{a} Q(s_t, a), & g > \epsilon \\ \text{a random pixel in } S \text{ with a random rotation}, & \text{otherwise} \end{cases} \tag{4}$$

where $\epsilon$ represents the exploration probability, and $g$ is a random number generated from a uniform distribution in $[0, 1]$. If $g > \epsilon$, the grasping point and the grasp rotation about the z-axis are predicted by the GDNet: the pixel coordinates of the maximum grasp score output by the network are transformed into the grasping point, and the index of the q value map containing the largest grasping score is multiplied by 22.5° (360°/16) to give the grasp rotation angle about the z-axis. Otherwise, a pixel in the set of object pixels, denoted $S$ in (4), is selected as the grasping point after the coordinate transformation, and an integer between 0 and 15 is randomly selected and multiplied by 22.5° to give the grasp rotation about the z-axis.
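The DAES selection rule can be sketched as follows. This is a minimal illustration under assumptions, not the authors' implementation: the names `object_pixels` and `daes_select` are hypothetical, object pixels are assumed to be those whose height in the depth heightmap exceeds a small threshold, and exploitation is assumed to happen when the random number exceeds the exploration probability.

```python
import random


def object_pixels(depth_heightmap, min_height=0.0):
    """Pixels whose height exceeds min_height (assumed test for 'contains an object')."""
    return [(r, c)
            for r, row in enumerate(depth_heightmap)
            for c, h in enumerate(row)
            if h > min_height]


def daes_select(grasp_q_maps, depth_heightmap, epsilon, rng=random,
                angle_step_deg=22.5):
    """Return ((row, col), angle_deg) using epsilon-greedy restricted to object pixels."""
    g = rng.random()  # uniform random number in [0, 1)
    candidates = object_pixels(depth_heightmap)
    if g > epsilon or not candidates:
        # Exploit: take the pixel and rotation channel with the highest grasp score.
        best_q, k, r, c = float("-inf"), 0, 0, 0
        for ki, q_map in enumerate(grasp_q_maps):
            for ri, row in enumerate(q_map):
                for ci, q in enumerate(row):
                    if q > best_q:
                        best_q, k, r, c = q, ki, ri, ci
    else:
        # Explore: sample only among object pixels, with a random rotation index.
        r, c = rng.choice(candidates)
        k = rng.randrange(len(grasp_q_maps))
    return (r, c), k * angle_step_deg
```

Passing a seeded `random.Random` instance as `rng` makes exploration reproducible, which is convenient when comparing training runs.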

As shown in Figure 3, there are two objects in the workspace. If the entire workspace is used for exploration, the robot needs to explore 802,816 ($224 \times 224 \times 16$) actions. With our DAES method, only the pixels belonging to the objects are counted, giving an action space of 61,968. The exploration space of the robot is thus significantly reduced, which helps accelerate the convergence of network training.
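The two action-space sizes quoted above can be checked with a few lines of arithmetic. The sketch assumes a 224 × 224 heightmap (0.448 m at 2 mm per pixel) and 16 rotation angles per pixel; `action_space_size` is a hypothetical helper name.

```python
def action_space_size(num_pixels, num_rotations=16):
    """Number of (pixel, rotation) grasp actions."""
    return num_pixels * num_rotations


full_space = action_space_size(224 * 224)   # every pixel of the heightmap
daes_space = 61968                          # value reported for the two-object scene
object_pixel_count = daes_space // 16       # object pixels implied by that value
reduction_ratio = daes_space / full_space   # fraction of the full space that remains
```

Under these assumptions the reported DAES action space corresponds to 3873 object pixels, so the robot explores well under a tenth of the full action space.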

(2) Viewpoint Adjusting: In this stage of training, the parameters of the GDNet model obtained in the first stage are fixed, and 1 to 10 objects are randomly placed in the scene. The heightmaps captured by the camera at the global camera position are input to the VANet to predict a q value map. At the same time, as shown in Figure 2, the heightmaps are rotated and input to the GDNet to predict 16 q value maps. If the maximum grasping score output by the GDNet is greater than the grasping threshold, the robot grasps the object directly. Otherwise, the pixel with the maximum score in the VANet output is selected, after the coordinate transformation, as the next best viewpoint of the camera. The camera is then moved to this viewpoint to observe the local scene and obtain a better grasping pose. The reward function in this stage is shown in (5):

Equation (5) compares two quantities: the largest grasping score output by the GDNet after viewpoint adjusting and grasping, and the largest grasping score output by the GDNet before viewpoint adjusting and grasping. When the robot executes viewpoint adjusting followed by grasping, the reward is largest if the object is successfully grasped and the score afterwards is higher than the score before. This means that the robot has grasped the object successfully and has also created a better condition for grasping the next object directly, so the reward is maximal, with its value determined by the condition created. The other procedures are the same as in the first stage.

(3) Alternating Training: In the first stage, the GDNet is trained in scenes with fewer objects and less stacking, while in the second stage the VANet is trained in scenes with more objects and more stacking. This leads to a distribution mismatch: because the GDNet is trained before the VANet and in scenes with fewer objects, it cannot accurately predict grasps in new scenes with more objects. Therefore, at this stage, the VANet and the GDNet are trained alternately to enhance the synergy of the two action primitives.

Figure 4 shows the training process. In Figure 4, the weight parameters of the GDNet and the VANet are those trained in the first and second stages, respectively. The camera at the robot’s end is moved to the global camera position to capture RGB-D images, which are then converted to a color heightmap and a depth heightmap. As shown in Figure 2, the heightmaps are rotated and input into the GDNet. If the largest grasping score output by the GDNet is greater than the grasping threshold, the action $a_t$ is grasping directly; otherwise, $a_t$ is viewpoint adjusting. If $a_t$ is grasping directly, the robot executes the grasping action to interact with the environment, and the reward $r_t$ and the next state $s_{t+1}$ are obtained according to (2). The tuple $(s_t, a_t, r_t, s_{t+1})$ is then stored as an experience in the grasping directly experience pool. A group of experiences different from the one just stored is randomly selected from the experience pool and input to the target GDNet to obtain the target value $y_t$. The parameters of the GDNet are then updated with the update method described in the first stage. If $a_t$ is viewpoint adjusting, the parameters of the VANet are updated in the same way as those of the GDNet.

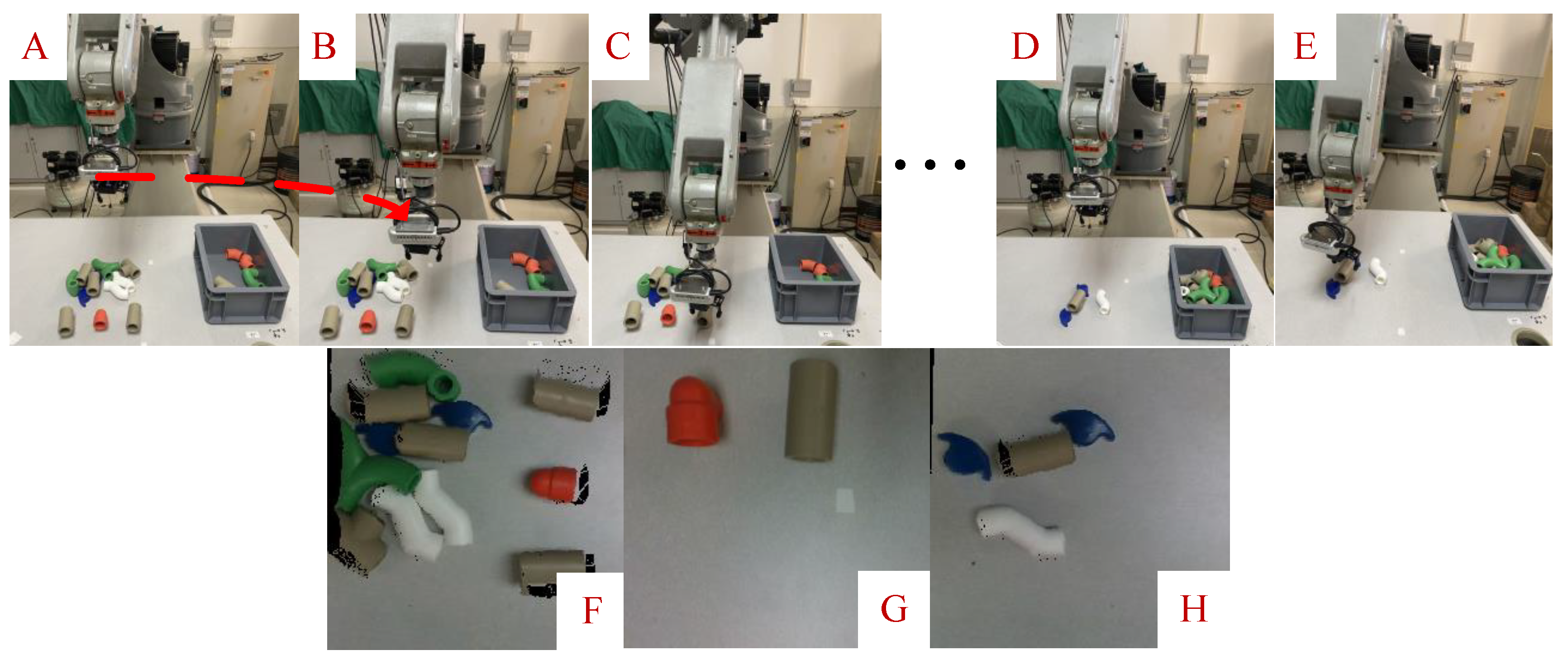

4. Experiment

To test the proposed strategy, several experiments were conducted in both simulation and the real world. The goals of the experiments are: (1) to test whether the proposed VAGS strategy is effective for grasping randomly stacked objects and improves the grasping success rate and scene clearing rate in simulation (Section 4.4); (2) to demonstrate that the proposed DAES method effectively shortens training, and to test the grasp detection performance of the VANet (Section 4.5); (3) to test the grasping performance of the proposed VAGS strategy in real-world scenarios (Section 4.6).

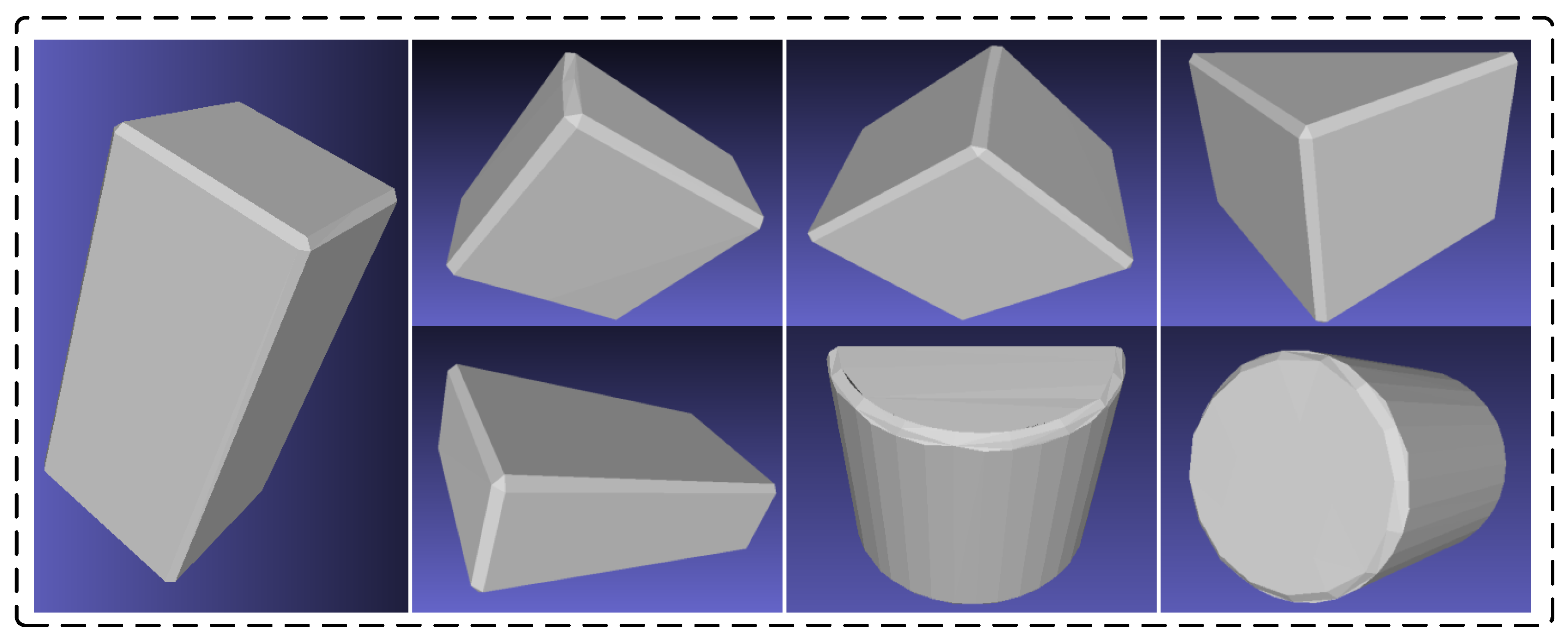

4.1. Configuration of Experimental Environment

The code is implemented with the PyTorch framework on Ubuntu 20.04 LTS, running on an Intel Core i7-7700K CPU, 16 GB of RAM, and an NVIDIA GeForce GTX 1080 graphics card with 8 GB of memory. Simulation experiments are carried out in V-REP [33], in which we build a UR5 manipulator with an RG2 gripper and an RGB-D camera (as shown in Figure 4). The remote API of V-REP is called to obtain the pose of the UR5 manipulator and the RGB-D images with a resolution

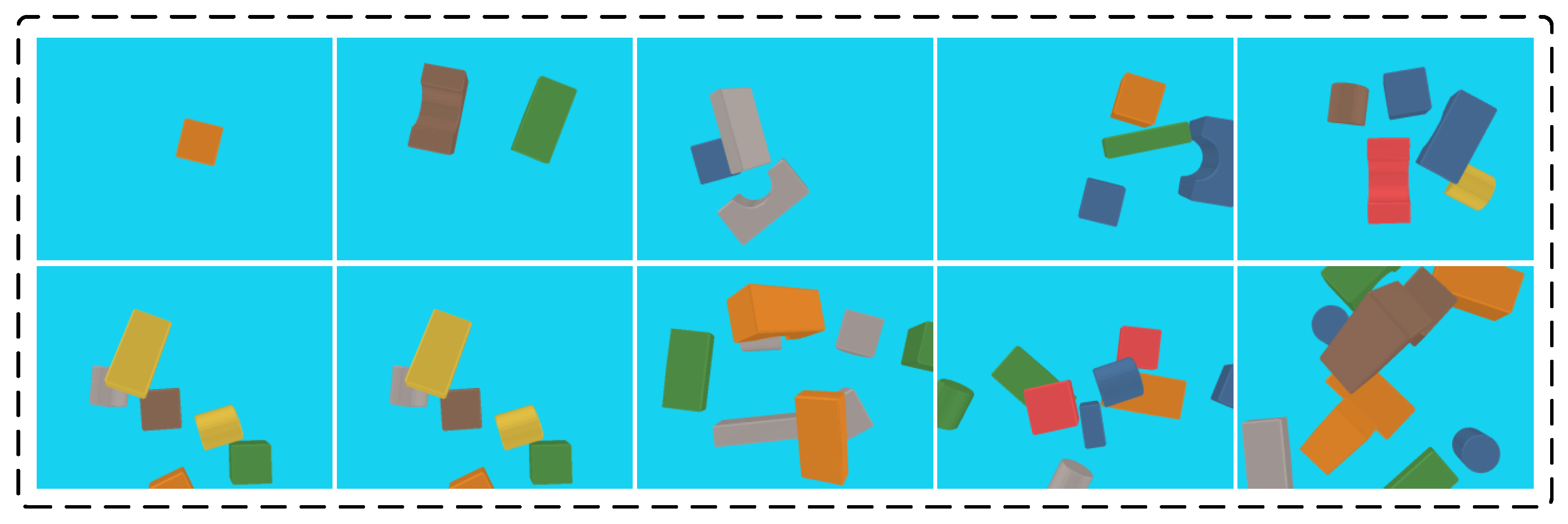

during the grasping process. The shapes of the grasped objects are shown in Figure 5. In the real-world experiments, the setup consists of a Yaskawa MOTOMAN-GP8 industrial robot with a servo-driven mechanical gripper, controlled remotely through MOTOCOM32. An Intel RealSense D435 camera captures the RGB-D images at a resolution of

. Relative to the robot coordinate system, the global camera position is set

in the simulation experiment and

in the real experiment, and the unit is meters.

4.2. Baseline Methods

The grasping performance of our VAGS strategy is compared with that of four baseline methods: GG-CNN, active multi-view based on entropy, next best viewpoint selection, and visual pushing for grasping. The procedure of each method is as follows:

GG-CNN: GG-CNN [10] provides a grasp prediction for each pixel of the input depth image obtained by a fixed RGB-D camera and directly produces a grasping pose in real time.

Active Multi-View based on Entropy (MVP): Morrison et al. [23] increased the grasping success rate with their Multi-View Picking method, which works as follows. First, the camera captures the scene image at the global camera position. Second, the next best viewpoint is calculated from the entropy of the current scene image, and the robot, with the camera at its end, moves to that viewpoint. The viewpoint prediction is repeated until the termination condition is reached, and the robot then executes the grasp.

Next Best Viewpoint Selecting (NBV): We increase the grasping success rate with the viewpoint selection experience enhancement algorithm, which works as follows. First, the scene image is captured by the camera at the global camera position. Second, the image is fed into the model, which predicts pixel-level q values, and the position of the largest q value is selected as the next best viewpoint. Then, the robot moves the camera to the target viewpoint, and a grasping pose is estimated by GG-CNN. Finally, the robot executes the grasping action.

Visual Pushing for Grasping (VPG): VPG [22] is a push-to-grasp method with a parallel architecture, in which the pushing or grasping action with the maximum Q-value is selected.

4.3. Evaluation Metrics

The following performance metrics, previously used by [23], are used to test the proposed method.

Grasp Success Rate: The number of times the robot successfully grasps an object and places it into the specified box, divided by the total number of grasping attempts. It mainly evaluates the grasping ability of the robot.

Scene Clearing Rate: The number of rounds in which the scene is cleared, out of n rounds of grasping experiments, divided by n. It evaluates the robot’s ability to handle scenes with different stacking levels of objects.

Motion Number: The average number of robot motions needed to grasp an object. It evaluates the robot’s ability to make judgments based on the scene’s complexity.

Mean Picks Per Hour: The number of objects that the robot successfully places into the specified box per hour. It mainly evaluates the grasping efficiency of the whole system.
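The four metrics can be computed from simple counters, as in the sketch below. The function names and the choice to return 0.0 for an empty denominator are assumptions made for the example, and Motion Number is assumed here to be the total number of robot motions divided by the number of grasped objects.

```python
def grasp_success_rate(successful_grasps, grasp_attempts):
    """Successful grasps divided by all grasp attempts."""
    return successful_grasps / grasp_attempts if grasp_attempts else 0.0


def scene_clearing_rate(cleared_rounds, total_rounds):
    """Rounds in which the scene was fully cleared, divided by all rounds."""
    return cleared_rounds / total_rounds if total_rounds else 0.0


def motion_number(total_motions, grasped_objects):
    """Average number of robot motions per grasped object (assumed definition)."""
    return total_motions / grasped_objects if grasped_objects else 0.0


def mean_picks_per_hour(successful_grasps, elapsed_seconds):
    """Successful picks scaled to one hour of operation."""
    return successful_grasps * 3600.0 / elapsed_seconds if elapsed_seconds else 0.0


# Example with made-up counters (not results from the paper).
rate = grasp_success_rate(8, 10)
picks = mean_picks_per_hour(5, 60.0)
```

Keeping the counters separate from the formulas makes it straightforward to report the same metrics per object count or per scene.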

4.4. Simulation Experiments

In the simulation experiments, 1 to 10 objects with randomly selected shapes, volumes, and colors are randomly placed in the workspace, and the robot performs 50 rounds of grasping tasks per group.

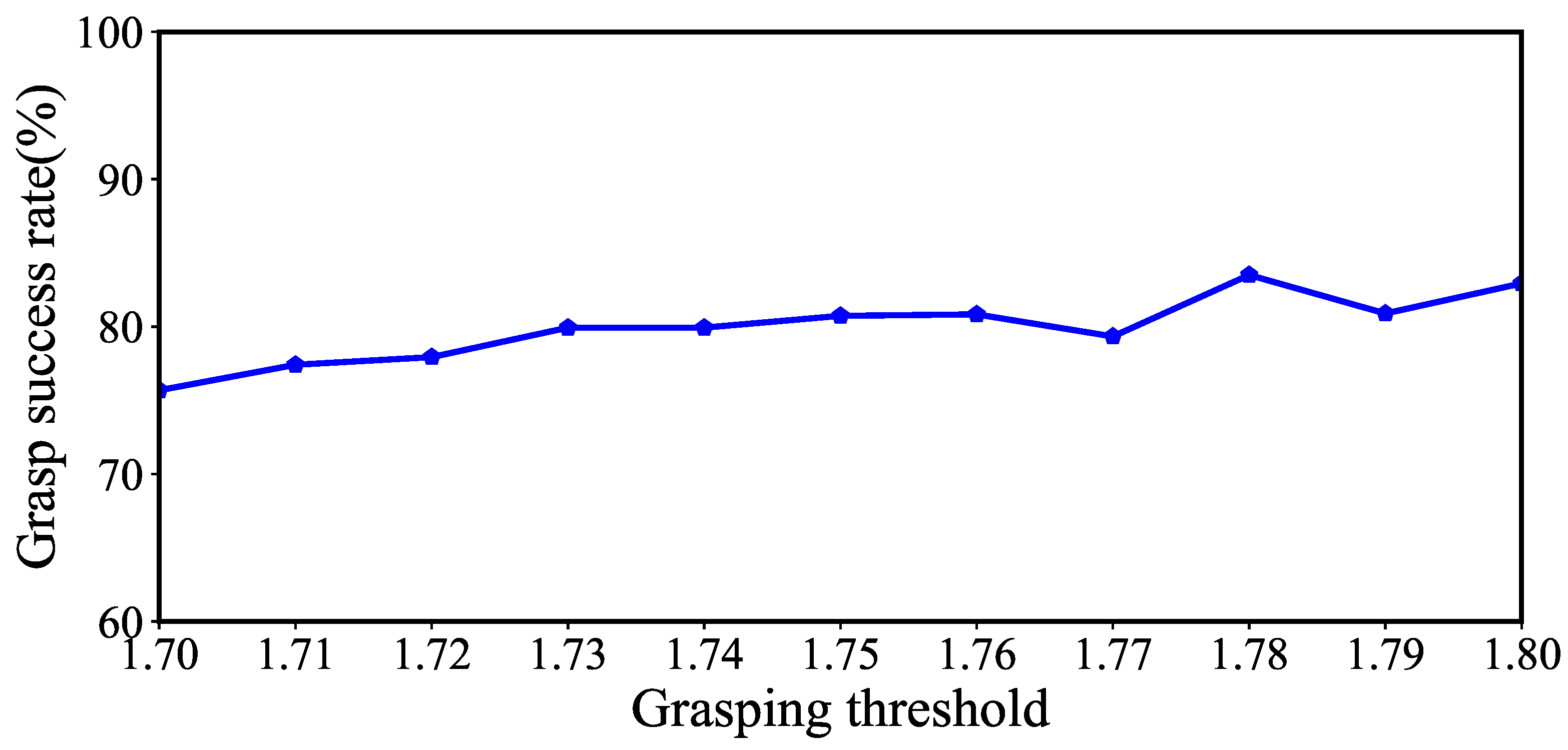

First, we test the effect of the grasping threshold on the grasping success rate. We vary the grasping threshold from 1.7 to 1.8 in steps of 0.01 and record the grasping success rate. Figure 6 shows the grasping success rate as a function of the grasping threshold. The grasping success rate does not change noticeably with the threshold. The best grasping success rate, 83.50%, is obtained with a grasping threshold of 1.78, so we set the grasping threshold to 1.78 in the following experiments.

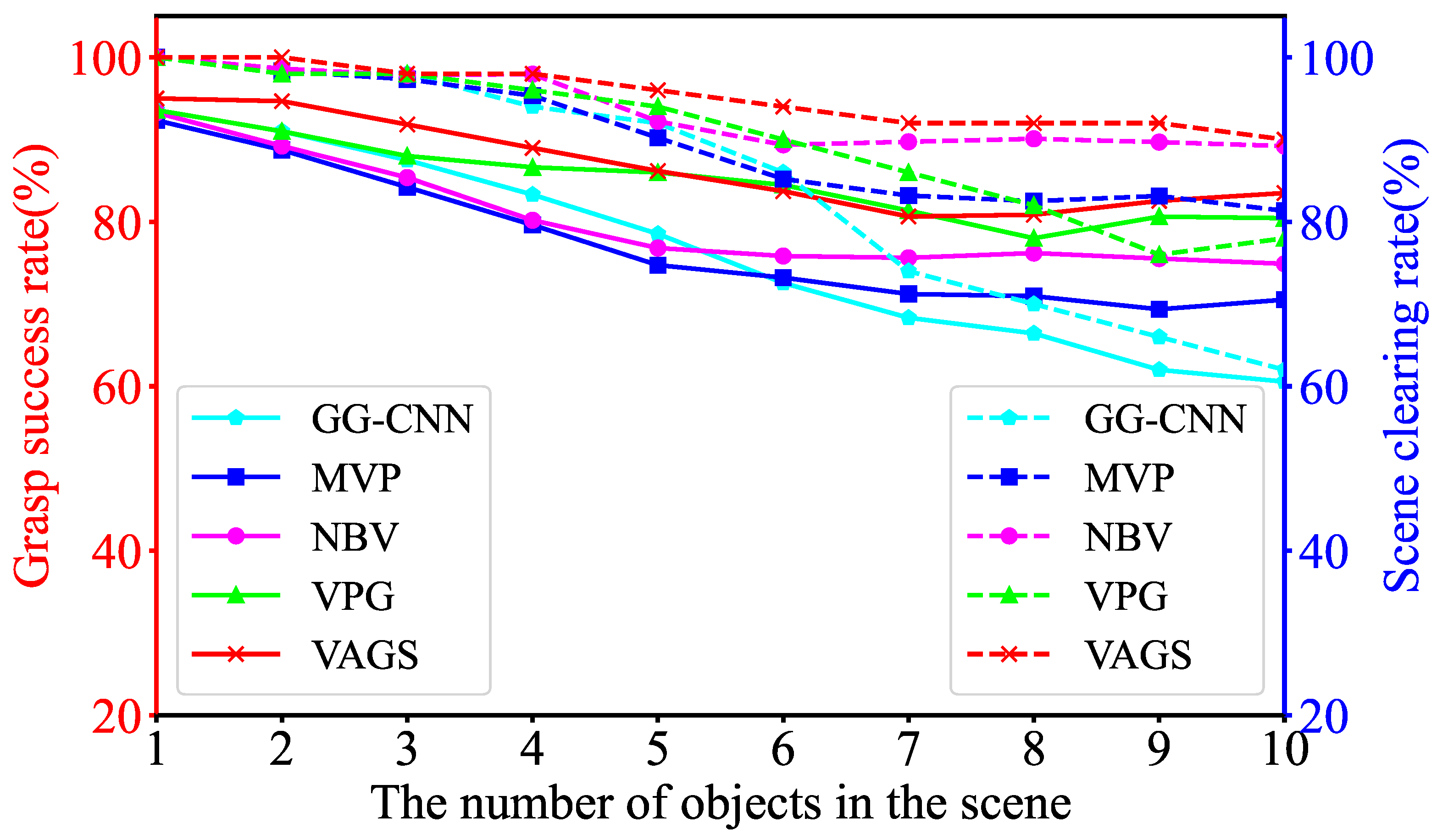

Then, we compare the proposed VAGS with the baseline methods in simulation. The results are shown in Figure 7 and Table 1. Figure 7 shows that as the number of objects in the scene increases, the grasp success rate and scene clearing rate decrease significantly for all methods. This is because, with more objects, the stack in the workspace becomes more cluttered, and some objects are located at the edge of the field of view, as shown in Figure 8.

Table 1 shows that VAGS outperforms the baseline methods across all metrics. The scene clearing rate of VPG is poor compared to NBV and VAGS, likely because the push action of VPG pushes objects at the edge out of the workspace. GG-CNN degrades the most: its grasp success rate drops from 92.37% to 60.89%, and its scene clearing rate drops from 100% to 41.0%. This is because some objects are at the edge of the field of view, which makes them difficult to detect. NBV performs worse than VAGS in terms of grasp success rate and scene clearing rate, because the viewpoint adjustment network of NBV has only three layers, whereas the backbone of VAGS is DenseNet-121 [28].

4.5. Ablation Study

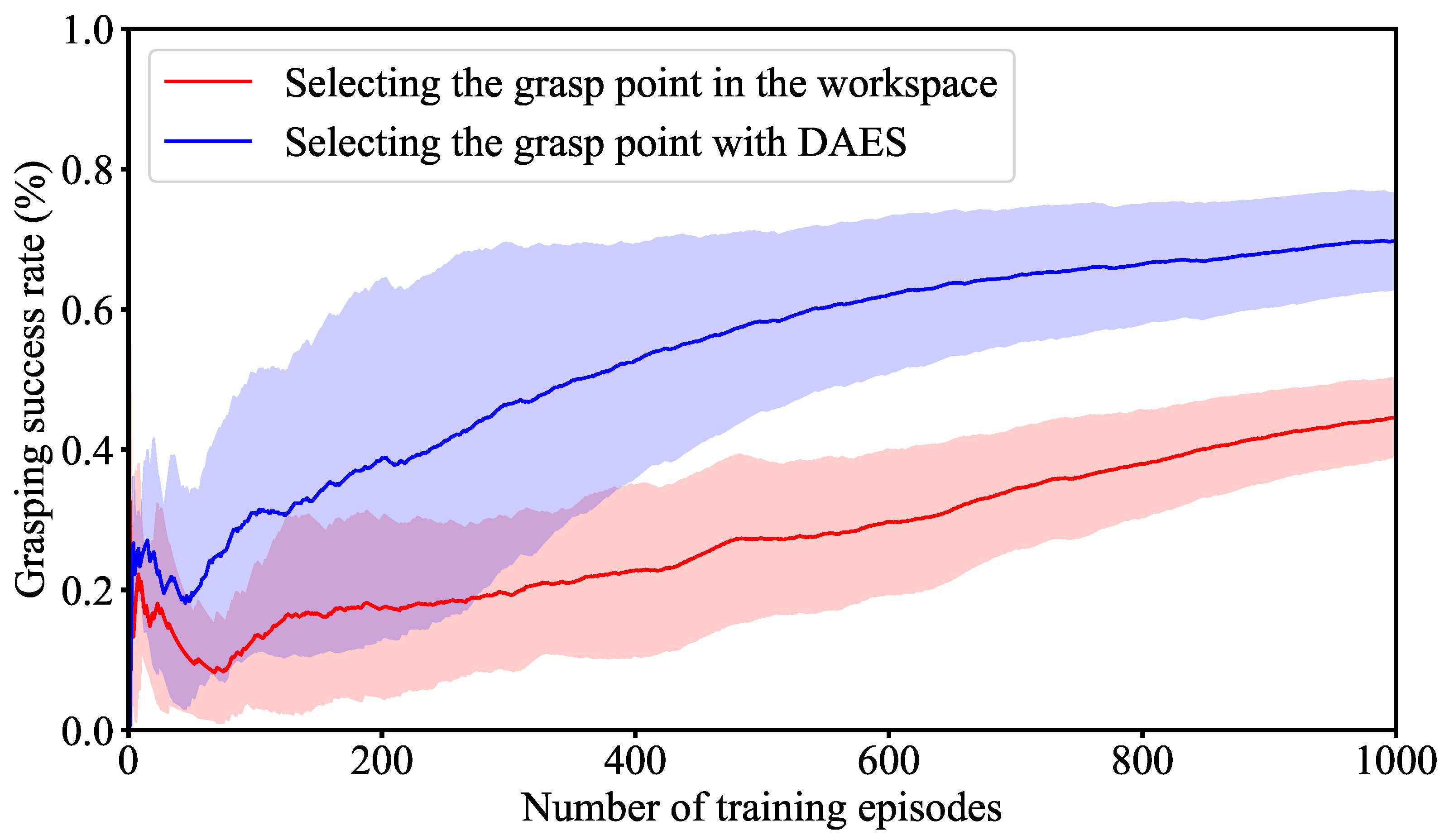

The proposed methods are compared with several ablated variants to test (1) whether the proposed DAES method improves training efficiency and (2) whether the VAGS method improves grasping performance.

We train the GDNet in V-REP according to the first-stage training details in Section 3. Objects with randomly selected shapes, volumes, and colors are randomly placed in the workspace. The training results with and without the DAES method are shown in Figure 9. With DAES, the grasping success rate stabilizes above 60% after about 600 training episodes. Without DAES, the grasping success rate is still below 40% after 800 training episodes.

We also investigate the importance of viewpoint adjusting. We test the method with viewpoint adjusting (w/ VANet) and without viewpoint adjusting (w/o VANet) in V-REP, using the same objects as in the simulation experiments. The robot performs 50 rounds of grasping tasks per group. The results are shown in Table 2. The viewpoint adjusting network improves the grasp success rate and the scene clearing rate by 10.49% and 11%, respectively, and the improvement becomes significant as the number of objects in the scene increases.

4.6. Real-World Experiments

As mentioned in Section 4.4, the color, shape, number, and pose of objects are randomly generated during training, which enhances the model’s generalization ability. In addition, the model is trained on the pose of the gripper, so the choice of robot has relatively little impact on the model. Therefore, in this section, we evaluate the VAGS strategy, trained in simulation without extra fine-tuning, in the real world. The grasped objects are standard industrial workpieces, as shown in Figure 10, and 10 of them are randomly placed in the workspace. We test 30 scenes for each method. Note that none of the methods is retrained when its model is moved from simulation to the real world. We record the grasping success rate, the scene clearing rate, the average time the robot takes to successfully grasp an object starting from the preset global camera position, and the number of movements. Table 3 shows the comparison between the proposed VAGS strategy and the baseline approaches.

As shown in Table 3, VAGS has the best performance in terms of Mean Picks Per Hour, meaning that the robot successfully grasps more objects in one hour. The grasping success rates of MVP and NBV are almost the same as that of the proposed VAGS strategy. However, with MVP and NBV the robot must move at least twice to perform a complete grasping action. With VAGS, in the initial grasping stage, objects in the scene are heavily stacked, so the robot needs to adjust the viewpoint to observe a local scene where the degree of stacking is lower. As objects are gradually grasped and removed from the workspace, the degree of stacking decreases, and the robot can obtain a grasping pose and grasp the object successfully from the preset global camera position. Therefore, the robot only needs to move 1.36 times on average to grasp an object. The results show that the proposed VAGS improves the robot’s grasping performance in stacking scenes.