1. Introduction

Simultaneous localization and mapping (SLAM) [1] is an active research topic in both the computer vision and robotics communities [2]. SLAM systems are widely applied in many fields, including civil industry, military industry, agriculture, and the security protection industry. Examples include sweeping robots, unmanned vehicles, autonomous navigation of unmanned aerial vehicles (UAVs), autonomous navigation of unmanned missiles, and cooperative operation of autonomous unmanned systems [1,2,3]. The SLAM system is a crucial part of automatic driving and autonomous navigation, and its working steps mainly include motion tracking, local mapping, and loop-closure detection [1,2,3]. In a visual SLAM system, feature points are extracted from the images captured by the camera, and pose and motion are estimated from the geometric relationships between the feature points and the map points to realize the positioning function [1,2,3]. Therefore, the key to accurate pose estimation and motion tracking without tracking loss is the accurate detection and correct matching of feature points.

Feature points are locally distinctive in texture and are often points where the direction of an object boundary changes abruptly or where two or more edge segments intersect. They have a well-defined position in image space. The accurate detection and correct matching of feature points are the core steps of the whole SLAM system.

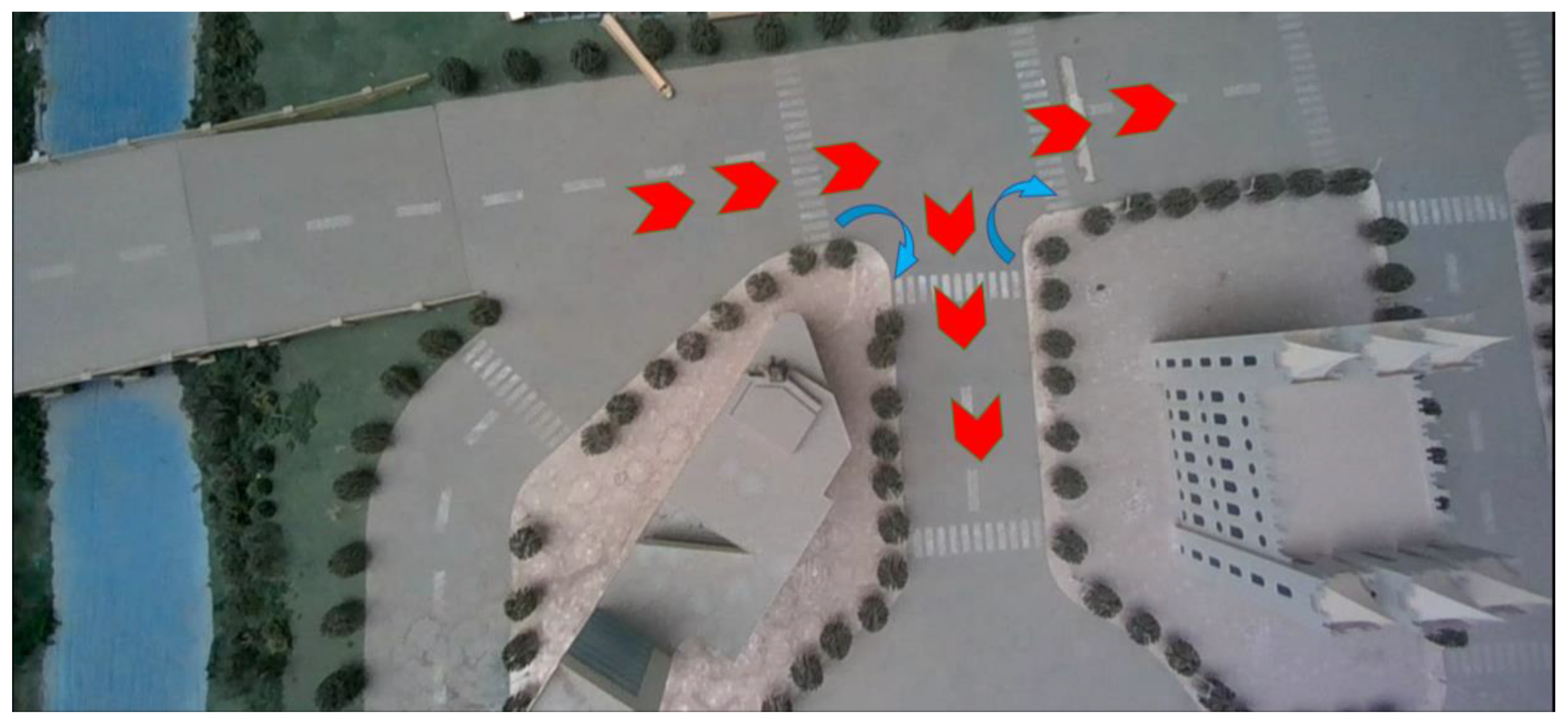

In practical applications of SLAM, such as visual navigation and positioning on unmanned vehicles and unmanned aerial vehicles (UAVs), sudden turns of a vehicle and rapid changes in a UAV's flight trajectory often cause large camera viewpoint changes and sparse image frames. In such complex scenarios, traditional SLAM algorithms often lose track because feature matching fails, which causes visual navigation and positioning to fail and limits the application of smart devices. Designing features that are repeatable and invariant to scale, rotation, environmental change, and illumination in complex environments is therefore the core problem of feature point design. The features from accelerated segment test (FAST) [4] corner detection method is adopted in the SVO [5] system. Instead of calculating descriptors, SVO performs block matching on the 4 × 4 patches around the key points to track and match them [5]. In PTAM [6], FAST [4] corners are used for feature extraction, and motion tracking and mapping run in parallel. The speeded-up robust features (SURF) [7] detection method is adopted in RTAB-Map [8] to realize feature-based visual odometry and a bag-of-words model for loop-closure detection. The features used in these SLAM schemes are easily affected by changes in illumination and viewing angle and are not robust to weak or repeated textures. Therefore, feature matching is prone to loss and error.

ORB-SLAM2 [2] and ORB-SLAM3 [3] are two of the best traditional SLAM algorithms, and both adopt the ORB [9] feature for feature detection. The ORB feature uses FAST as the corner detector and BRIEF as the descriptor, which gives it rotation and scale invariance [10]. However, the ORB-SLAM system has shortcomings, such as spatially concentrated feature point detection, poor robustness of pose calculation, and weak tracking ability in complex environments (for example, under changes in texture, viewing angle, and illumination), which lead to large pose calculation errors and easy loss of tracking.

Deep learning has advanced rapidly in various fields. In computer vision, outstanding work has been carried out on feature detection, feature matching, position recognition, and depth estimation. In particular, convolutional neural networks have shown strong performance in almost all image processing tasks [11,12,13,14,15]. Among them, the Siamese convolutional network has been well developed for object tracking and loop-closure detection and has achieved excellent results [16,17]. Applied to SLAM, Siamese convolutional neural networks [16,18] have achieved good results on problems of traditional SLAM systems, such as feature detection and similarity detection in complex scenes. However, most feature points detected by existing methods either have no descriptors or have descriptors that do not describe the feature points well under viewpoint changes, illumination changes, and weak textures. SLAM systems require both detectors and descriptors to match map points, yet most deep learning algorithms lack transformation-invariant feature detection, description, or both. In addition, their invariance to illumination, viewing angle change, and weak texture is limited, so tracking is easily lost. Therefore, to solve these problems, we propose a new multifunction feature extraction and feature description pseudoinverse Siamese network for the SLAM system; this network has cross-view, illumination, and texture invariance for feature detection and description in complex environments.

In this paper, our system places a transformation module at the front end, followed by a backbone network module for feature extraction, and links the feature point detector and the descriptor through a shared network with shared data. Finally, the whole network is trained with a purpose-designed loss function and is built into a Siamese convolutional neural network for transformation-invariant feature detection and description in a SLAM system.

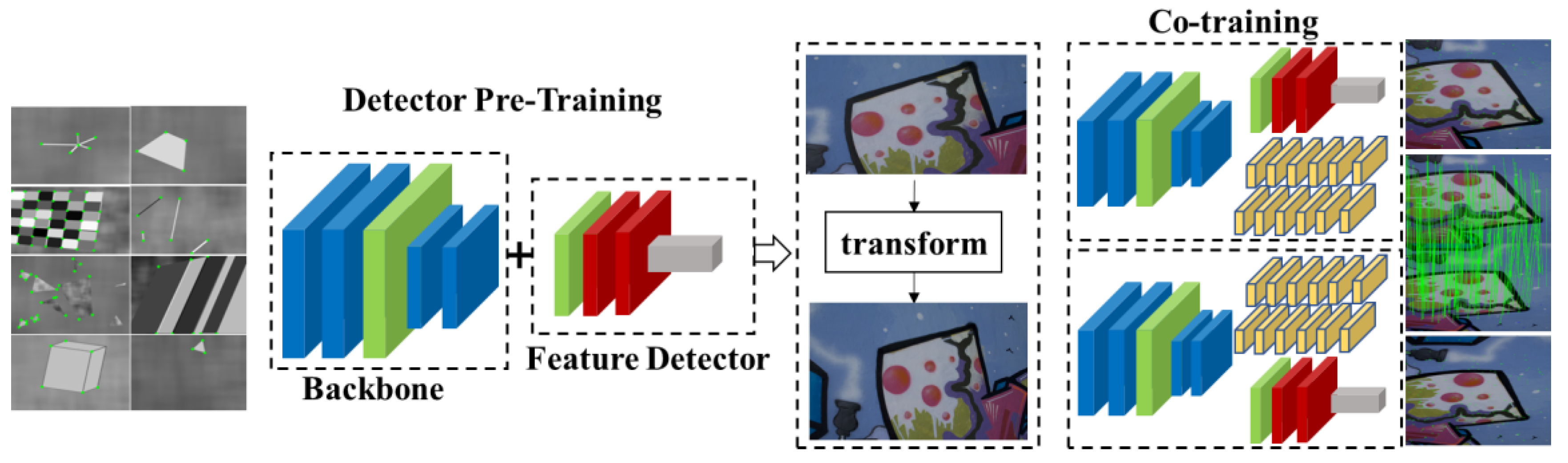

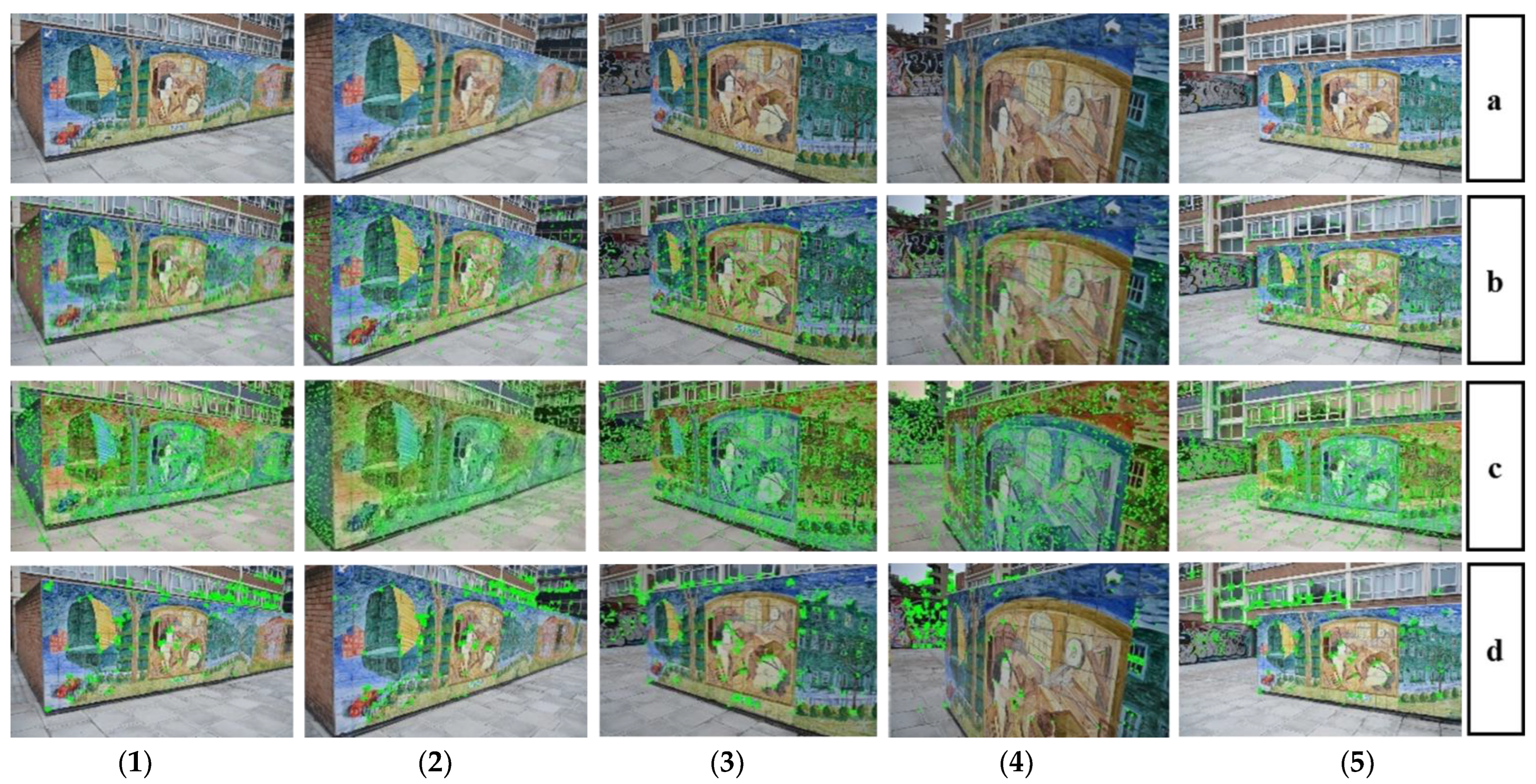

Our main contributions in this paper can be summarized as follows: (1) We propose a multifunctional feature detection and description method based on a Siamese convolutional network that, compared with traditional methods, offers illumination, angle, and texture invariance in complex environments. (2) Since a convolutional neural network does not have transformation invariance by itself, the proposed method learns an invariant spatial transform and a viewpoint-covariant detector and descriptor. (3) We design a single network that learns both feature detection and the corresponding feature descriptor calculation with a self-supervised approach, as shown in Figure 1. (4) We propose a novel SLAM system based on a pseudoinverse Siamese convolutional network, which addresses problems of traditional SLAM systems, such as too few detected features, weak transformation invariance, and easy loss of tracking, and improves the localization and mapping performance of the SLAM system.

2. Related Work

The ORB descriptor is a binary vector that allows high-performance matching. It can work in both indoor and outdoor environments and supports relocalization and automatic initialization [1]. ORB features work well in traditional SLAM systems and represent the state of the art among traditional algorithms. However, in complex or changeable scenes, such as scenes with insufficient light, excessive illumination, viewing angle changes, or weak texture, their performance is unstable, and they may fail altogether. In [15], a deep-learning visual SLAM system based on a multitask feature extraction network and self-supervised feature points is proposed. The system makes full use of the advantages of deep learning to extract feature points, and the CNN structure for detecting feature points and computing descriptors is simplified. GCN-SLAM [11] proposed a new learning scheme for generating geometric correspondences to be used for visual odometry. However, these methods do not address cross-view, lighting, and texture invariance in complex environments.

SuperPoint [19] proposes a self-supervised training framework for interest point detectors and descriptors suitable for a large number of multi-view geometry problems in computer vision. Unlike patch-based neural networks, it uses a fully convolutional model that operates on full-size images and computes pixel-level interest point locations and the associated descriptors in a single forward pass. Homographic adaptation, a multiscale, multi-homography approach, is used to improve the repeatability of interest point detection and to perform cross-domain adaptation. However, its descriptors are not robust to viewpoint and illumination changes. GIFT [20] introduces a novel dense descriptor with provable invariance to a certain group of transformations. This method computes transformation-invariant descriptors for given feature points but has no feature point detection function. LIFT [21] introduces a novel deep network architecture that combines the three components of the standard pipeline for local feature detection and description into a single differentiable network. Patch2Pix [22] proposed a detect-to-refine method, which first detects patch-level match proposals and then refines them with a refinement network. The refinement network regresses pixel-level matches from the local regions defined by those proposals and jointly rejects outlier matches using confidence scores. Through the two steps of coarse matching and fine matching, the method detects and matches feature points and achieves good performance. However, these methods do not have transformation invariance and are not robust to changes in perspective. In addition, they compute no descriptor, so their features cannot be used for local map matching and bundle adjustment (BA) [23] optimization in a SLAM system; they are therefore not suitable for SLAM systems.

Ref. [24] proposed LIFT-SLAM, which uses LIFT as the feature extraction module at the front end and achieves good results in general environments, particularly in scenes with rich textures. However, LIFT-SLAM has not been validated in complex scenes, such as scenes with illumination changes, viewpoint changes, or low texture. Real-world applications involve many such scenarios, for example, the sudden turn of an unmanned car or the sudden change in course of a UAV. Other tasks, such as relocalization and loop detection, are performed from different or widely separated viewpoints. Ref. [25] proposed a sparse visual odometry model for RGB-D images that uses edge features to minimize the photometric error in pose estimation. Unlike traditional feature-based methods, this method extracts features from image edges and assigns each edge point a prior probability based on its degree of exposure. Joint photometric error minimization and the probabilistic model are used to improve sparse point extraction, which makes feature matching more robust and computationally efficient. However, in complex scenes, such as scenes with low texture and unclear edges, the sparse feature points are often too few to meet the needs of motion tracking in a SLAM algorithm. In particular, the photometric adjustment method relies on a strong photometric consistency assumption, so it is not applicable in complex environments.

In this paper, we propose a pseudoinverse Siamese convolutional neural network for transformation-invariant feature detection and description in SLAM systems operating in complex environments. We exploit the strengths of convolutional networks in both feature description and feature detection. A backbone network structure and feature detection and description submodules based on group features are designed. In addition, feature detection and description are performed by a single neural network with shared parameters.

3. Method

In this section, we first describe the general framework of the proposed method. Second, the backbone network of the pseudoinverse Siamese convolutional network for transformation-invariant feature extraction is described in detail. Third, the feature detector subnetwork and the feature descriptor subnetwork are described. Finally, we describe in detail a novel SLAM system that incorporates the convolutional neural network.

3.1. Overall Framework

The proposed pseudoinverse Siamese convolutional neural network for transformation-invariant feature detection and description in a SLAM system consists of a backbone network for feature extraction and two branch networks based on Siamese networks: a feature detector subnetwork and a feature descriptor subnetwork. The backbone network is shared by the two branch networks. The feature detector subnetwork detects feature points on the input image, and the feature descriptor subnetwork describes the detected feature points and forms feature vectors, with information shared between the two. In addition, a tracking module and a local mapping module are designed to jointly contribute to the map.

As shown in Figure 2, the input image is transformed to form an image group, and the backbone network module then extracts features from the image group. The extracted features are fed into the feature detection module and the feature description module for feature detection and description, respectively, again sharing their information. The tracking module preprocesses the Siamese feature points, descriptors, and depth image, generating coordinates for pose prediction, local map tracking, and new keyframe decisions. The local mapping module inserts and deletes keyframes, creates and deletes map points, and performs local BA. The map module includes map points, keyframes, a covisibility graph, and a spanning tree.

3.2. The Backbone Network of Feature Detection and Description

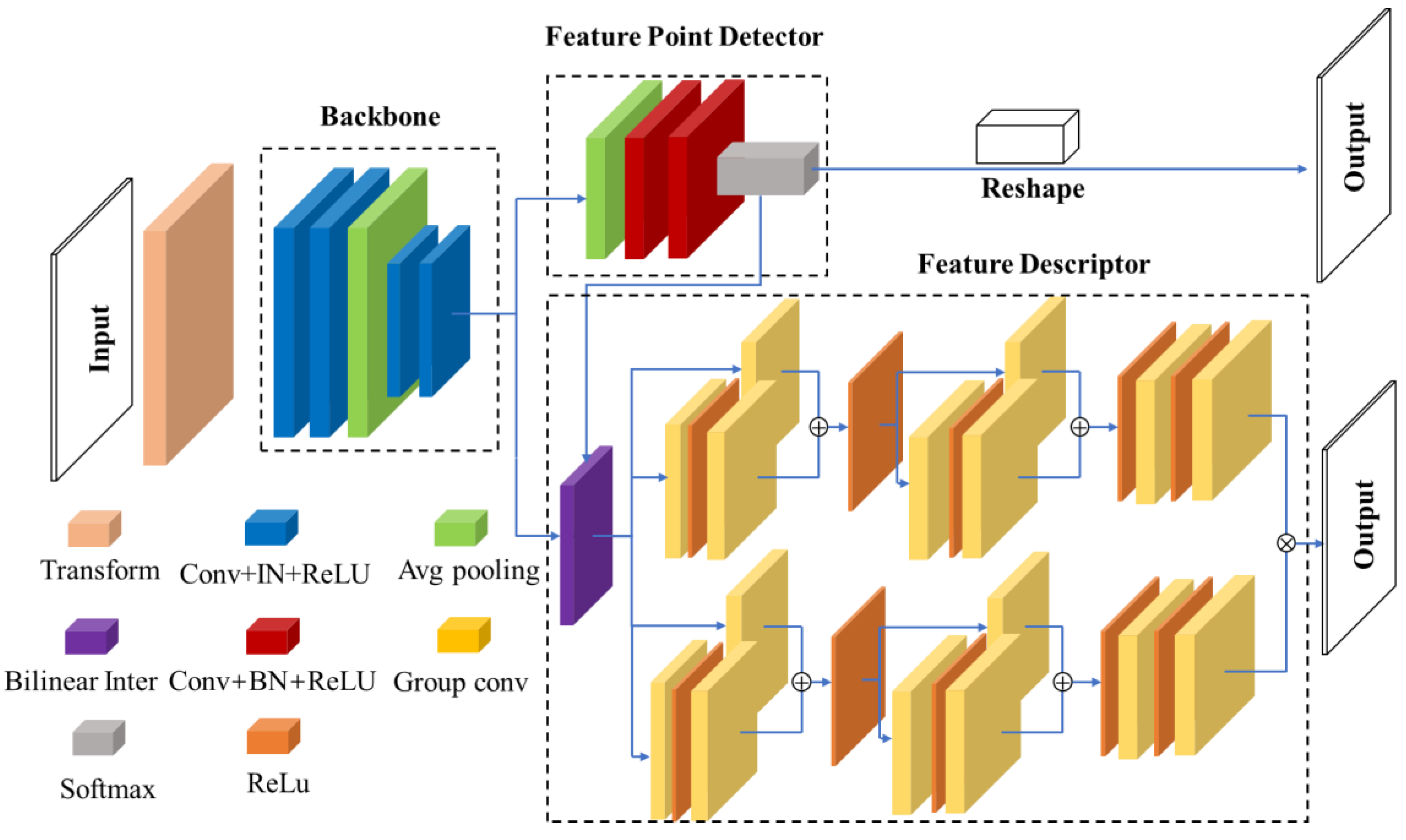

As shown in Figure 3, our backbone network adopts a vanilla CNN-style design that reduces the spatial dimension of the images through convolution, pooling, and nonlinear activation functions. First, an image transformation module transforms the input image according to changes in perspective, illumination, scale, and rotation. Given an image I and a transformation t ∊ T, the module outputs I′ ∊ G, so that the image group G is obtained by applying the transformation module T. The transformation module is followed by two convolution layers, each followed by a ReLU nonlinear activation function. Between each convolution layer and its activation function, we use an instance normalization (IN) layer rather than a batch normalization (BN) layer. BN is suitable for discriminative models, such as image classification models. The BN layer normalizes each batch to keep the data distribution consistent, and the result of a discriminative model depends on the overall distribution of the data. However, BN is sensitive to the batch size: since the mean and variance are calculated on one batch at a time, if the batch size is too small, the calculated mean and variance do not represent the entire data distribution. IN is useful in generative models, such as image style transfer. Because the generated result depends mainly on an individual image instance, normalizing over the whole batch is not suitable for image stylization. The IN layer in style transfer not only accelerates model convergence but also maintains the independence of each image instance. The convolution layers are followed by an average pooling layer for downsampling. Finally, we apply two more convolution layers with ReLU activation, again with an IN layer between each convolution layer and its activation function, as shown in Figure 3.
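To make the difference between the two normalization layers concrete, the following minimal NumPy sketch normalizes a small batch both ways. It is an illustration only, not the authors' implementation: the function names `instance_norm` and `batch_norm` are hypothetical, the learned scale and shift parameters are omitted, and the two synthetic inputs merely stand in for a dark and a bright warp of the same scene.

```python
import numpy as np

def instance_norm(x, eps=1e-5):
    """Normalize every (sample, channel) plane over its own spatial dims.

    x has shape (N, C, H, W); statistics never mix different images.
    """
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x, eps=1e-5):
    """Normalize every channel over the batch and spatial dims together.

    Training-mode statistics only; no running averages, no scale/shift.
    """
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
# Two synthetic "images" with very different brightness (assumed data).
dark = rng.normal(0.0, 1.0, size=(3, 8, 8))
bright = rng.normal(10.0, 1.0, size=(3, 8, 8))
x = np.stack([dark, bright])          # shape (2, 3, 8, 8)

y_in = instance_norm(x)
y_bn = batch_norm(x)
```

With instance normalization, each image ends up with zero mean per channel regardless of the other images in the batch, whereas batch normalization leaves the brightness offset between the two images in place.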

Given an input image I and transformations t0, t1, t2, …, tm ∊ T, the warped images form a transformation image group O0, O1, O2, …, Om ∊ Ogroup. The backbone network feature extractor calculates a feature map for each image in the transformation image group, so warped images and feature maps are in one-to-one correspondence. For each feature point, a feature vector is extracted from every feature map to form the feature vector group; this is performed in the feature descriptor subnetwork. In addition, the feature map extracted from the original image is input into the feature detector subnetwork to detect and calculate the feature point positions.
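The construction of the transformation image group can be sketched as follows. This is a simplified illustration under stated assumptions: the transformation set is restricted to 90° rotations and global brightness gains, the image is a grayscale array with values in [0, 1], and `make_transform_group` is a hypothetical helper. The transformations described in the text (perspective, scale, rotation, illumination) are richer than this.

```python
import numpy as np

def make_transform_group(image, gains=(0.5, 1.0, 1.5)):
    """Return a list of ((angle_deg, gain), warped_image) pairs.

    Each pair is one element of the transformation image group: the
    input rotated by a multiple of 90 degrees and scaled in brightness.
    """
    group = []
    for k in range(4):                       # 0, 90, 180, 270 degrees
        rotated = np.rot90(image, k)
        for g in gains:                      # simple illumination change
            warped = np.clip(rotated * g, 0.0, 1.0)
            group.append(((k * 90, g), warped))
    return group

rng = np.random.default_rng(0)
image = rng.random((6, 4))                   # assumed toy input image
group = make_transform_group(image)
```

Each warped image would then be passed through the same backbone so that one feature map is obtained per group element.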

3.3. The Feature Detector Subnetwork

The feature detector subnetwork computes feature point positions. Acting as a decoder, it consists of one average pooling layer followed by two convolution layers, as shown in Figure 3. The input image I passes through the two convolution layers and then into a channel-wise softmax. For each input pixel p ∊ I, the decoder outputs the probability of each pixel point as a feature point:

where p is the probability that the pixel is a feature point. At the last convolution layer, the data of each channel are fused, and the dimension is reduced by a 1 × 1 convolution instead of pooling or striding. This reduces the amount of computation and avoids data loss and distortion. The input is convolved onto 64 channels, and the channel-wise convolution slides along the channel dimension, which avoids the dense full connection between input and output channels of an ordinary convolution while being less rigid than group convolution. Each of the 64 channels corresponds to one pixel of a nonoverlapping 8 × 8 region, and the last channel, which follows the 64 channels, represents the response value of the 8 × 8 region. Finally, the feature point positions are mapped back onto the original-size image, and the feature point coordinate p(x, y) is output.
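The decoding step described above, from per-cell channel scores to pixel coordinates, can be sketched in NumPy as follows. This is a sketch under assumptions rather than the authors' decoder: it assumes a SuperPoint-style layout with 64 channels for the pixels of each 8 × 8 cell plus one extra last channel, and the function name `decode_keypoints` and the threshold value are hypothetical.

```python
import numpy as np

def decode_keypoints(logits, cell=8, threshold=0.015):
    """Turn coarse channel scores into a full-resolution keypoint map.

    logits: array of shape (cell*cell + 1, Hc, Wc). Channel dy*cell + dx
    scores pixel (dy, dx) of a cell; the last channel is the extra bin.
    Returns the (Hc*cell, Wc*cell) probability map and the (x, y)
    coordinates whose probability exceeds the threshold.
    """
    channels, hc, wc = logits.shape
    assert channels == cell * cell + 1
    # Channel-wise softmax, shifted by the maximum for numerical stability.
    e = np.exp(logits - logits.max(axis=0, keepdims=True))
    prob = e / e.sum(axis=0, keepdims=True)
    prob = prob[:-1]                                  # drop the extra bin
    # (dy, dx, Hc, Wc) -> (Hc, dy, Wc, dx) -> (Hc*cell, Wc*cell)
    prob = prob.reshape(cell, cell, hc, wc).transpose(2, 0, 3, 1)
    heat = prob.reshape(hc * cell, wc * cell)
    ys, xs = np.nonzero(heat > threshold)
    return heat, np.stack([xs, ys], axis=1)

# Toy input: the extra bin wins everywhere except one confident pixel.
logits = np.full((65, 2, 3), -10.0)
logits[64] = 0.0
logits[10, 1, 2] = 10.0            # cell (row 1, col 2), dy = 1, dx = 2
heat, points = decode_keypoints(logits)
```

In the toy input, the only detected point lies in cell row 1, column 2 at offset (dy, dx) = (1, 2), that is, at pixel x = 2·8 + 2 = 18, y = 1·8 + 1 = 9.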

3.4. The Feature Descriptor Subnetwork

In this section, we design the feature descriptor subnetwork, which extends the group convolution [26] features of the feature modules. The subnetwork takes the feature map group output by the backbone network and the feature points detected by the feature detector subnetwork, and it samples the feature maps at the feature point locations by bilinear interpolation. As the feature map group is obtained by convolving the transformed image group formed from the original image, the same transformations are also applied to the feature point locations detected on the original image. The feature points are thus mapped into the corresponding feature maps one by one, and each feature map is sampled by bilinear interpolation. The resulting feature vector group is processed by a group convolutional neural network [26]. The group convolution module is divided into two branches, A and B, each with eight layers, which convolve the group feature vectors. Group features [20,26] and the bilinear model [27] are merged to generate descriptors, as shown in Figure 3:

where

is the bilinear interpolation function,

B is the backbone network feature detection function,

is the transformation and

P is the feature point detector. There are many ways to achieve transformation invariance. However, in feature description, equivariance is more important than plain invariance, because an invariant feature alone does not describe well how properties differ between spatial states. Group convolutional neural networks can learn equivariant feature maps. This means:

where

and

need not be the same. In other words, although feature maps undergo different transformations, such as changes in the angle of view and illumination, their descriptors are the same. If d represents the descriptor of an image and d′ represents the descriptor after transformation, then d = d′. To obtain the descriptor d, the pooling function P aggregates the bilinear features at each feature point location across the image group. The calculation process of this descriptor is as follows:

Here, fA and fB represent feature functions.
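The two operations this section relies on, bilinear interpolation of a feature map at a feature point and bilinear pooling of two group feature sets, can be sketched as follows. The sketch assumes a GIFT-style bilinear pooling in which the features from branches A and B are combined by an outer product summed over the group; the function names, array shapes, and the final L2 normalization are illustrative assumptions, not the authors' exact formulation.

```python
import numpy as np

def bilinear_sample(feature_map, x, y):
    """Sample a (C, H, W) feature map at a continuous location (x, y)."""
    _, h, w = feature_map.shape
    x = float(np.clip(x, 0, w - 1))
    y = float(np.clip(y, 0, h - 1))
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * feature_map[:, y0, x0] + ax * feature_map[:, y0, x1]
    bottom = (1 - ax) * feature_map[:, y1, x0] + ax * feature_map[:, y1, x1]
    return (1 - ay) * top + ay * bottom

def bilinear_pool(feats_a, feats_b):
    """Pool two group feature sets into one unit-length descriptor.

    feats_a: (G, Ca) and feats_b: (G, Cb), one row per group element.
    The sum over the group index G makes the result independent of the
    order of the group elements.
    """
    d = (feats_a.T @ feats_b).reshape(-1)     # sum_g outer(a_g, b_g)
    return d / (np.linalg.norm(d) + 1e-12)

rng = np.random.default_rng(0)
fa = rng.normal(size=(4, 3))                  # assumed branch-A features
fb = rng.normal(size=(4, 2))                  # assumed branch-B features
perm = [2, 0, 3, 1]                           # a reordering of the group
d = bilinear_pool(fa, fb)
d_perm = bilinear_pool(fa[perm], fb[perm])
```

Because a transformation of the input reorders the group features, a pooled descriptor that ignores the order of the group elements yields d = d′, which is the property stated above.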

3.5. Loss Functions

The network loss function consists of two parts: the feature point loss Lp and the loss Ld of the descriptors corresponding to the feature points. We train on pairs of synthetically warped images whose ground truth correspondence is given by a randomly generated homography H that relates the two images. Since information is shared between the two parts, for a given pair of images we introduce a weight term into the final loss function, so that the total loss Lsum is a weighted sum of the two.

The feature point loss Lp is a cross-entropy loss, as shown in the formula below:

Here, HC and WC are the height and width of the rectangular region over which the cross-entropy is calculated, respectively, and x is the value of the rectangular region at position (i, j) in the different channels m.

For the descriptor loss, a triplet loss is adopted, which minimizes the distance between d and dp and maximizes the distance between d and dn. The formula is as follows:

Here, d is the descriptor in an image, dp is the matching (positive) descriptor, dn is a non-matching (negative) descriptor, and θ is the margin between positive and negative pairs.

The final total loss is the sum of the feature point loss and the descriptor loss, where α is used to balance the two:

where Lp′ is the feature point loss after transformation.
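The three loss terms described in this section can be sketched in NumPy as follows. The forms used here are assumptions chosen to match the prose: a per-cell softmax cross-entropy averaged over the HC × WC grid, a hinge-style triplet loss with Euclidean distance, and a total of the form Lp + Lp′ + α·Ld. The function names and the default margin and weight values are hypothetical and are not taken from the paper.

```python
import numpy as np

def keypoint_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over an Hc x Wc grid of cells.

    logits: (M, Hc, Wc) channel scores; labels: (Hc, Wc) integer index
    of the ground-truth channel in each cell.
    """
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_softmax = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    hc, wc = labels.shape
    ii, jj = np.meshgrid(np.arange(hc), np.arange(wc), indexing="ij")
    return float(-log_softmax[labels, ii, jj].mean())

def triplet_loss(d, d_pos, d_neg, margin=0.5):
    """Hinge triplet loss: pull d toward d_pos, push it away from d_neg."""
    pos = np.linalg.norm(d - d_pos, axis=-1)
    neg = np.linalg.norm(d - d_neg, axis=-1)
    return float(np.maximum(0.0, pos - neg + margin).mean())

def total_loss(l_p, l_p_warped, l_d, alpha=1.0):
    """Assumed combination: Lsum = Lp + Lp' + alpha * Ld."""
    return l_p + l_p_warped + alpha * l_d

# Toy values: uniform scores give a cross-entropy of log(M).
l_p = keypoint_cross_entropy(np.zeros((65, 2, 2)), np.zeros((2, 2), dtype=int))
anchor = np.array([[0.0, 0.0]])
l_easy = triplet_loss(anchor, anchor, np.array([[3.0, 4.0]]))   # negative is far
l_hard = triplet_loss(anchor, anchor, anchor)                   # negative coincides
```

A well-separated negative contributes no loss, while a negative that coincides with the anchor is penalized by exactly the margin.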

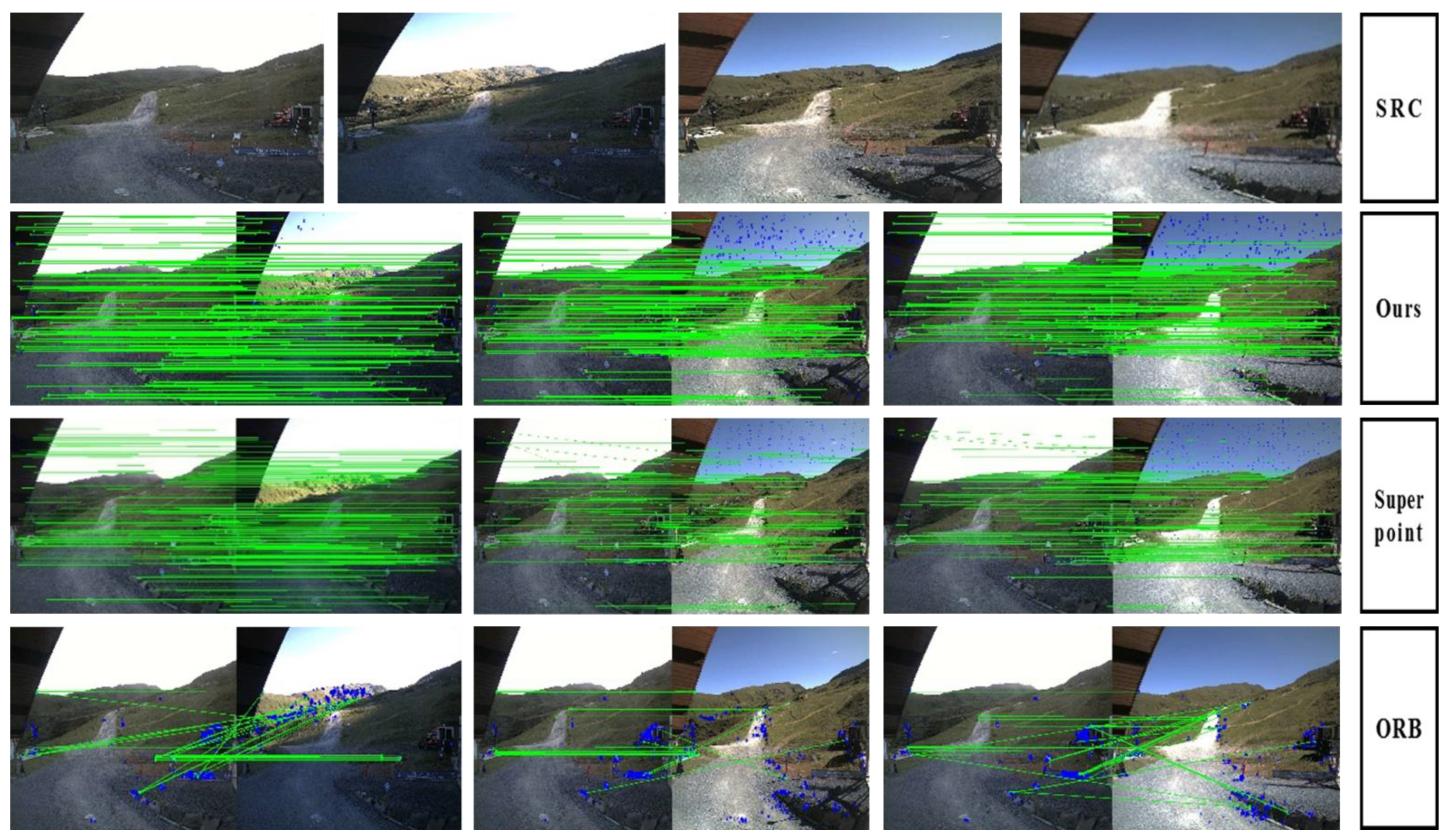

3.6. A Siamese Convolutional Network of Transformation Invariance Feature Detection and Description for a SLAM System

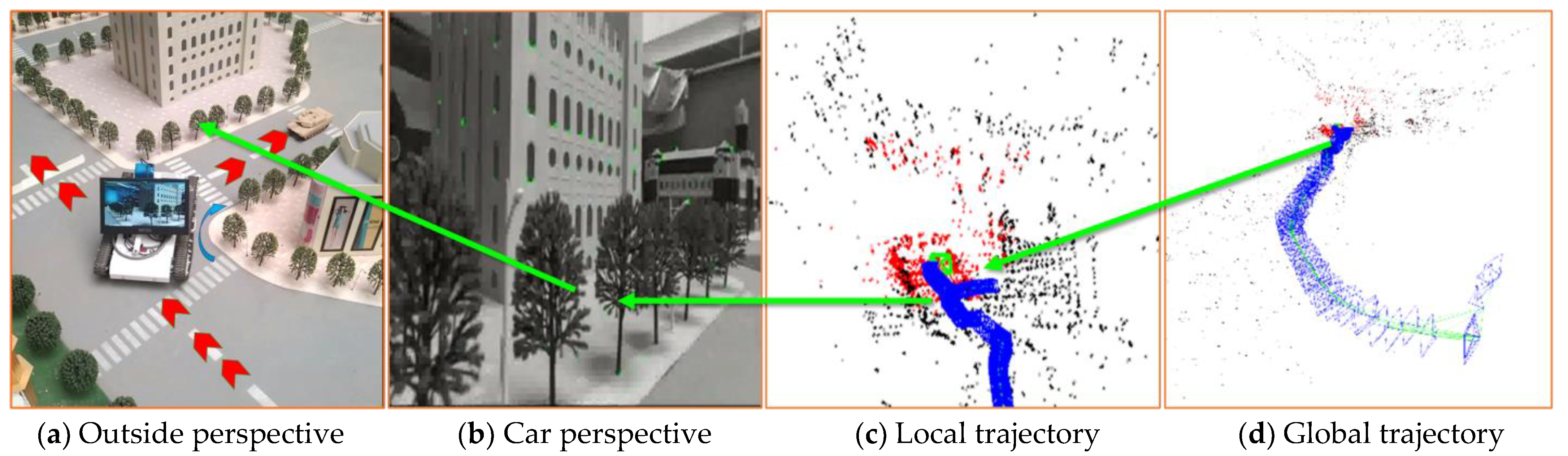

As shown in Figure 4, the proposed method is built on ORB-SLAM2 [2] and replaces its front-end feature detection, description, and matching parts. The proposed method exploits the advantages of convolutional networks for feature detection and description and improves robustness and stability under changes in viewing angle, texture, and illumination. Detection and description are performed by a single network so that information is shared between them, which improves the validity of the information.

For the sake of the simplicity of the experiment, we did not add the loop closure detection part and the global BA part into the system. Therefore, our system mainly consists of the front end of the tracking and local map parts.

3.6.1. Tracking

The tracking thread runs in the main thread of the system and is responsible for feature extraction, pose estimation, map tracking, and keyframe selection for each input frame. The proposed method processes RGB-D camera images and is also applicable to stereo and monocular cameras. Here, we discard the original feature matching method and match features using the descriptors generated by the Siamese convolutional neural network. Then, a nonlinear optimization method is used to minimize the reprojection error and optimize the tracked pose.
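The reprojection error that the pose optimization minimizes can be sketched as follows. The sketch assumes an undistorted pinhole camera with intrinsics fx, fy, cx, cy and a world-to-camera pose (R, t); the function name `reprojection_residuals` and the toy values are illustrative, and robust kernels and information matrices used in a full system are omitted.

```python
import numpy as np

def reprojection_residuals(points_w, pixels, R, t, fx, fy, cx, cy):
    """Residuals between projected map points and observed pixels.

    points_w: (N, 3) map points in world coordinates.
    pixels:   (N, 2) matched feature point observations (u, v).
    R, t:     rotation (3, 3) and translation (3,) from world to camera.
    Returns an (N, 2) array of (projected - observed) differences.
    """
    pc = points_w @ R.T + t                   # points in the camera frame
    u = fx * pc[:, 0] / pc[:, 2] + cx
    v = fy * pc[:, 1] / pc[:, 2] + cy
    return np.stack([u, v], axis=1) - pixels

# Toy setup (assumed values): identity pose and two map points.
points_w = np.array([[0.0, 0.0, 2.0], [1.0, 0.0, 2.0]])
pixels = np.array([[50.0, 40.0], [100.0, 40.0]])
res = reprojection_residuals(points_w, pixels, np.eye(3), np.zeros(3),
                             fx=100.0, fy=100.0, cx=50.0, cy=40.0)
cost = 0.5 * float((res ** 2).sum())          # value a solver would minimize
```

A nonlinear least-squares solver would adjust R and t to reduce `cost`; in this toy setup the pose already explains the observations, so the cost is zero.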

We followed the keyframe method in ORB-SLAM [1] and ORB-SLAM2 [2] and divided the feature points into close-range map points and long-range map points. Because long-range points are noisy and have large errors, pose estimation is carried out only when there are enough close-range feature points, which ensures its accuracy and stability. We therefore use the number of close-range points as the criterion for inserting new keyframes. In addition, the current frame must have moved a certain amount relative to the last keyframe, which avoids wasting computing resources on static images. To ensure the accuracy of pose estimation, a suitable number of feature points should be provided.

3.6.2. Local Mapping

The local mapping thread is responsible for processing new keyframes, culling map points, adding map points, fusing map points, local BA optimization, and culling keyframes. The keyframe insertion module first updates the covisibility graph by inserting the keyframe as a node and by updating and inserting the edges it shares with other local keyframes through commonly observed map points. Then, the connection relationships between keyframes are updated.

Although the high-quality feature points detected in the current keyframe have not been matched successfully, they are added to the map points, according to the tracking position and pose transformation relationship, to maintain the number and scale of local map points by the following formula:

In the local bundle adjustment section, the poses of the local keyframes and the local map points are optimized at the same time. All variables to be optimized are collected into the variable x, as shown in Equation (9), and the cost function is shown in Equation (10):

Take the square root of the diagonal elements of JᵀJ to form a nonnegative diagonal matrix A. Then, we solve the incremental equation:

Here, λ is the Lagrange multiplier. To limit the scale and computation of the local map, local keyframes that share no covisible points with the current frame and are not adjacent to it are deleted from the set of local keyframes.
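The damped update described above matches the usual Levenberg-Marquardt step with Marquardt scaling, which can be sketched as follows. The exact equation is an assumption reconstructed from the prose: the sketch solves (JᵀJ + λ AᵀA) Δx = −Jᵀr with A = diag(sqrt(diag(JᵀJ))), and the function name `lm_step` and the toy Jacobian are illustrative.

```python
import numpy as np

def lm_step(J, r, lam):
    """One damped Gauss-Newton (Levenberg-Marquardt) increment.

    J:   (m, n) Jacobian of the residuals with respect to x.
    r:   (m,) residual vector at the current estimate.
    lam: damping factor (lambda); lam = 0 gives a Gauss-Newton step.
    """
    JTJ = J.T @ J
    # Nonnegative diagonal scaling matrix from the diagonal of J^T J.
    A = np.diag(np.sqrt(np.diag(JTJ)))
    return np.linalg.solve(JTJ + lam * (A.T @ A), -J.T @ r)

# Toy problem (assumed values): identity Jacobian, residual (1, 2).
J = np.eye(2)
r = np.array([1.0, 2.0])
delta_gn = lm_step(J, r, lam=0.0)     # undamped step
delta_lm = lm_step(J, r, lam=1.0)     # damped step, half as long here
```

Larger values of λ shorten the step and make it behave more like scaled gradient descent, while smaller values approach the Gauss-Newton step.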