Recognizing human nonverbal behaviors requires identifying features of the humans themselves (e.g., the human skeleton) [1]. This is achievable with devices such as cameras, red-green-blue-depth (RGBD) cameras, or other sensors that can identify human features. In the following, we discuss recognition models of human behavior based on human features.

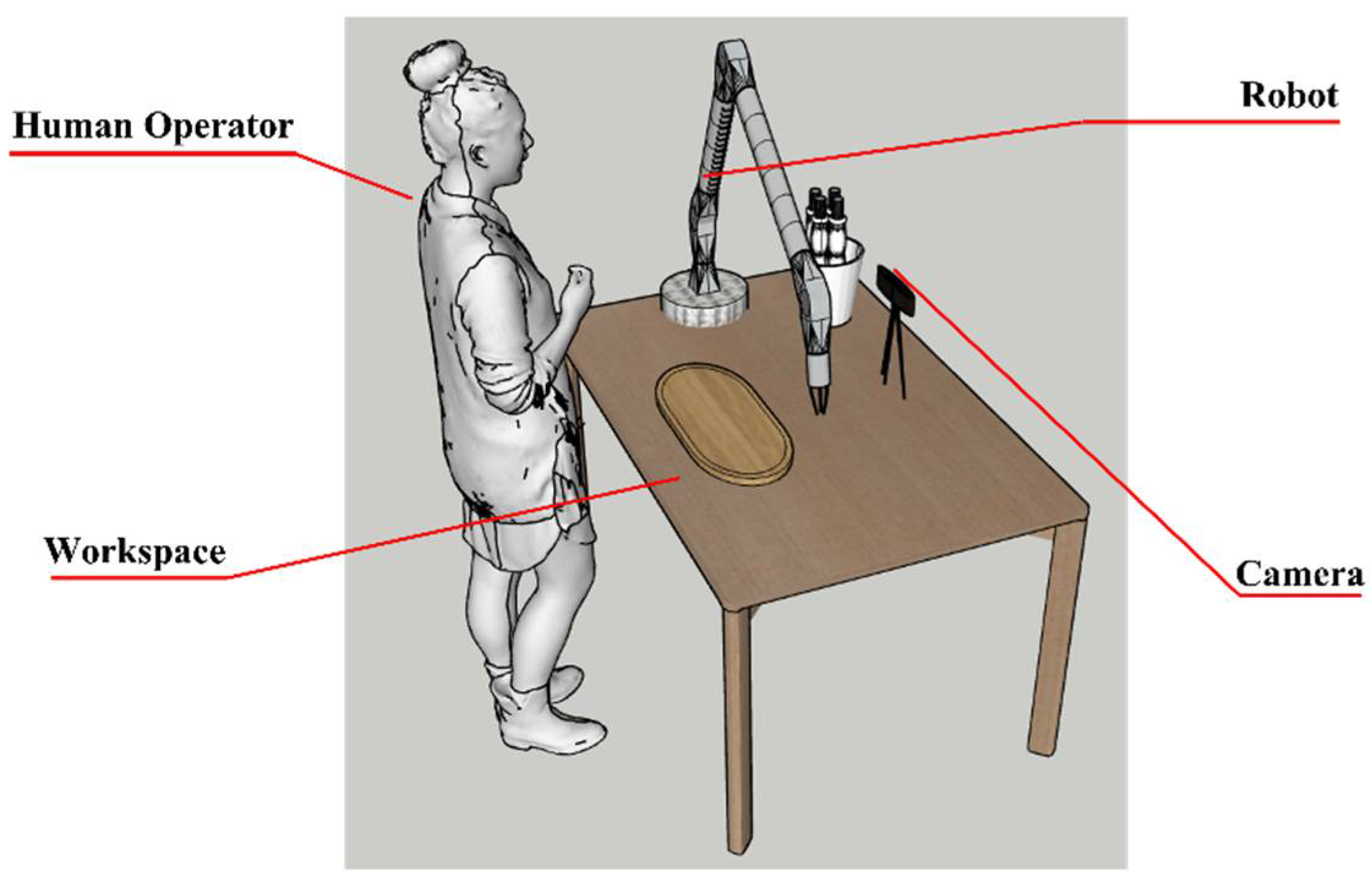

2.1. Human–Robot Interaction

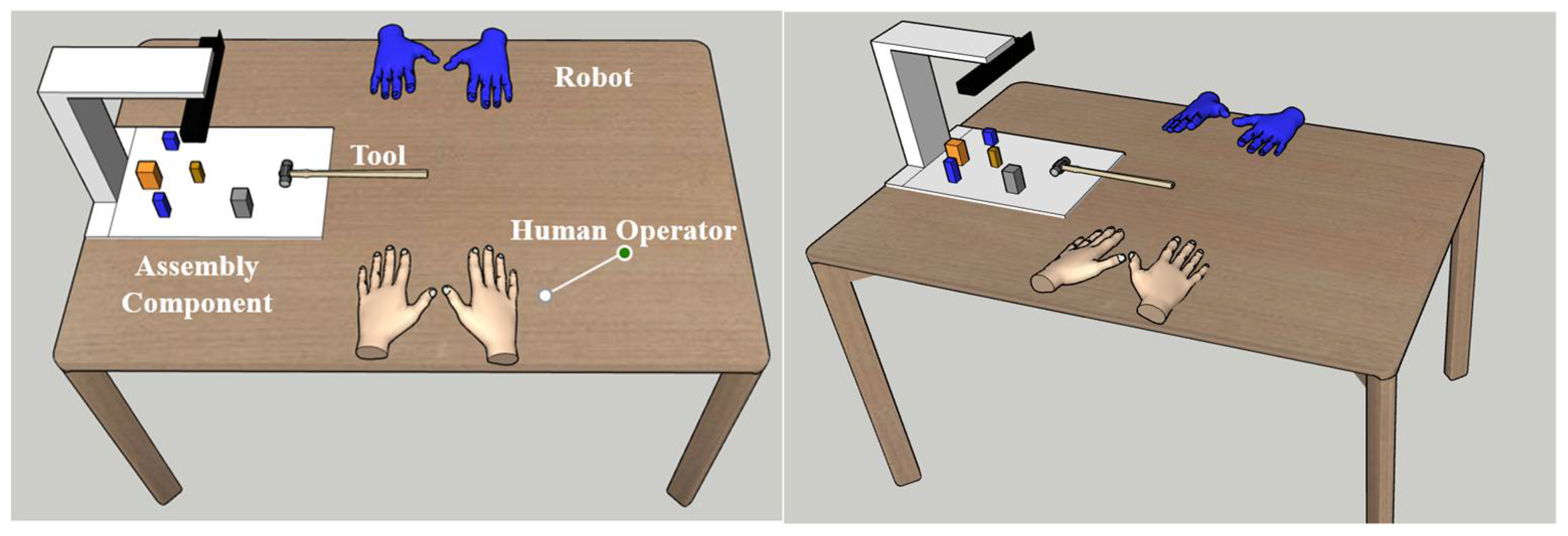

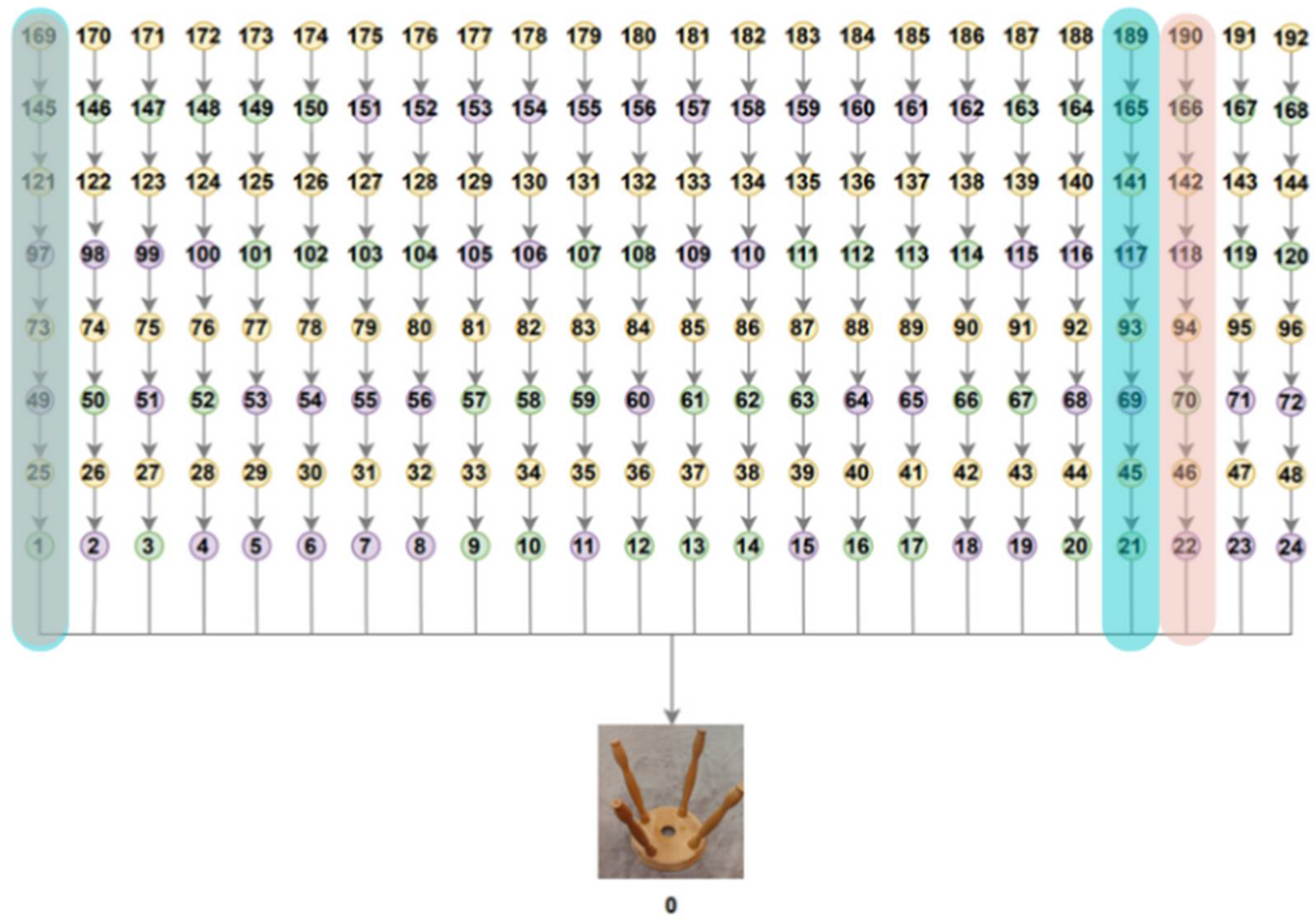

Collaborative robots, also known as “Cobots”, can physically interact with people in a shared workspace. Cobots are readily reprogrammable, even by non-specialists. This allows them to be repurposed within constantly updating workflows [8]. Introduced to the manufacturing sector several years ago, Cobots are currently used in a variety of tasks. However, further research is still required to maximize the productive potential of interactions between humans and robots, as shown in Figure 2. No particular strategy has yet been proposed to increase the level of productivity during the construction of intelligent multiagent robotic systems for HR interaction. This is because guaranteeing coordinated interaction between the technological and biological components of an unpredictable intelligent robotic system is a highly challenging process [7].

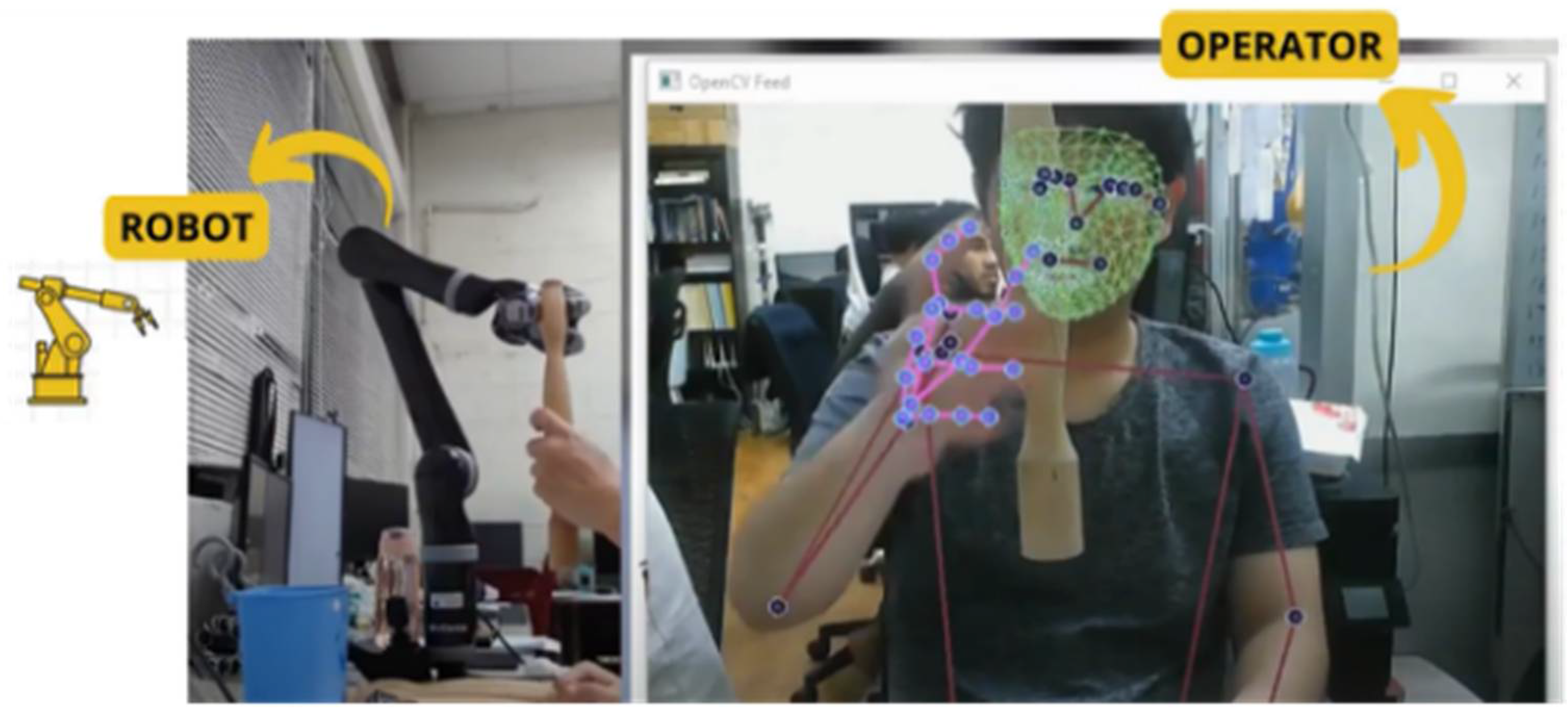

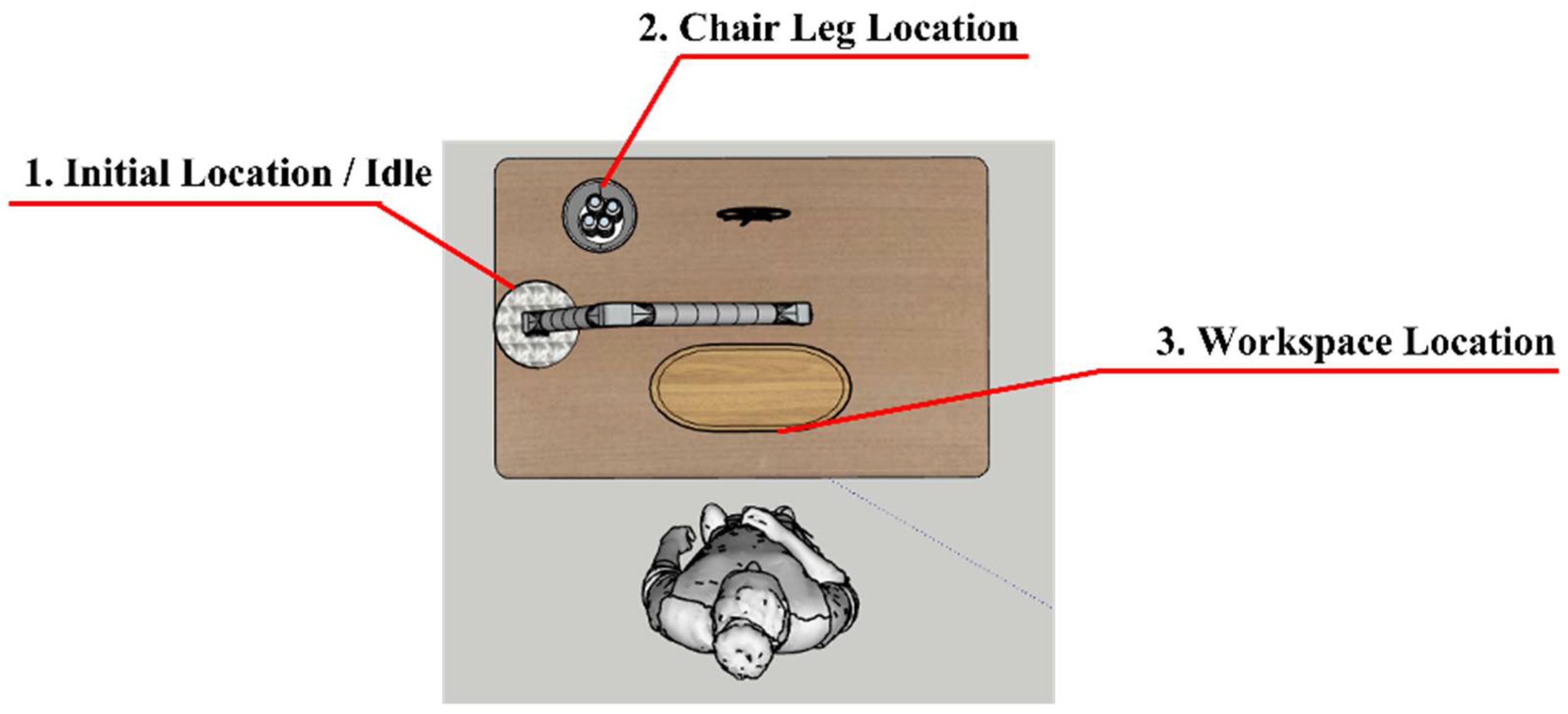

In [9], a gesture-based HR interaction architecture was proposed, in which a robot helped a human coworker by holding and handing tools and parts during assembly operations. Inertial measurement unit (IMU) sensors were used to capture human upper-body motions. The captured data were then partitioned into static and dynamic blocks by using an unsupervised sliding window technique. Finally, these static and dynamic data blocks were fed into an artificial neural network (ANN) to classify static, dynamic and composed gestures.
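The partitioning step can be illustrated with a minimal sketch. It assumes a single IMU channel, non-overlapping windows and a simple variance threshold; the function names, window length and threshold are hypothetical and are not taken from [9], whose actual segmentation criterion may differ.

```python
from statistics import pvariance


def label_windows(signal, window=5, threshold=0.01):
    """Label each non-overlapping window as 'static' or 'dynamic'.

    signal: list of floats from one IMU channel (assumed input format).
    window, threshold: illustrative values, not taken from [9].
    """
    labels = []
    for start in range(0, len(signal) - window + 1, window):
        chunk = signal[start:start + window]
        # A window with little variation is treated as a static posture.
        labels.append("dynamic" if pvariance(chunk) > threshold else "static")
    return labels


def merge_blocks(labels, window=5):
    """Merge consecutive windows with the same label into (label, start, end) blocks."""
    blocks = []
    for i, label in enumerate(labels):
        start, end = i * window, (i + 1) * window
        if blocks and blocks[-1][0] == label:
            # Extend the previous block instead of opening a new one.
            blocks[-1] = (label, blocks[-1][1], end)
        else:
            blocks.append((label, start, end))
    return blocks


if __name__ == "__main__":
    demo = [0.0] * 10 + [0.0, 1.0, 0.0, 1.0, 0.0] + [0.5] * 5
    print(merge_blocks(label_windows(demo)))
```

Each resulting block could then be passed to a classifier; that stage is omitted here.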

In [10], a technique was proposed for the teleoperation of a team of mobile robots with diverse capabilities by means of human gestures. Both unmanned aerial vehicle–unmanned ground vehicle and unmanned aerial vehicle–unmanned aerial vehicle configurations were tested. In addition, high-level gesture patterns were developed at a distant station and an easily trainable ANN classifier was provided to recognize the skeletal data gathered by an RGBD camera. The classifier used these data to generate gesture patterns, which allowed the use of natural and intuitive motions for the teleoperation of mobile robots.

In [11], a deep-learning-based model for generating smart operator advice was investigated. Two mechanisms formed the core of the model: an object detection mechanism, which involved a convolutional neural network (CNN), and a motion identification mechanism, which involved a decision tree classifier. Motion identification was performed by three separate, parallel decision tree classifiers, each fed by one of three cameras. The inputs to these classifiers were the coordinates and speeds of the observed objects. A majority vote across the three classifiers selected the final motion. Overall, the operator guidance model contributed to the final output by providing task checking and advice.
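The voting step can be sketched as follows. This is a minimal illustration that assumes each per-camera classifier has already produced a motion label; the function name and the tie-handling rule (returning `None`) are assumptions and are not described in [11].

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label predicted by most classifiers, or None on a tie.

    predictions: one label per camera-specific classifier (assumed format).
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions).most_common()
    # No strict winner when the two most frequent labels have equal counts.
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


if __name__ == "__main__":
    # Hypothetical labels from the three camera classifiers.
    print(majority_vote(["pick", "pick", "place"]))
```

With three voters and more than two possible labels, a three-way disagreement is possible, which is why the sketch makes the tie case explicit.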

Control systems based on vision play a substantial role in the development of modern robots. Developing an effective algorithm for identifying human actions and the working environment and creating easily understandable gesture commands is an essential step in operationalizing such a system. In [12], an algorithm was proposed to recognize actions in robotics and factory automation. In the next section, we discuss the role of human feature recognition in HR interaction.

2.2. Recognition of Human Features

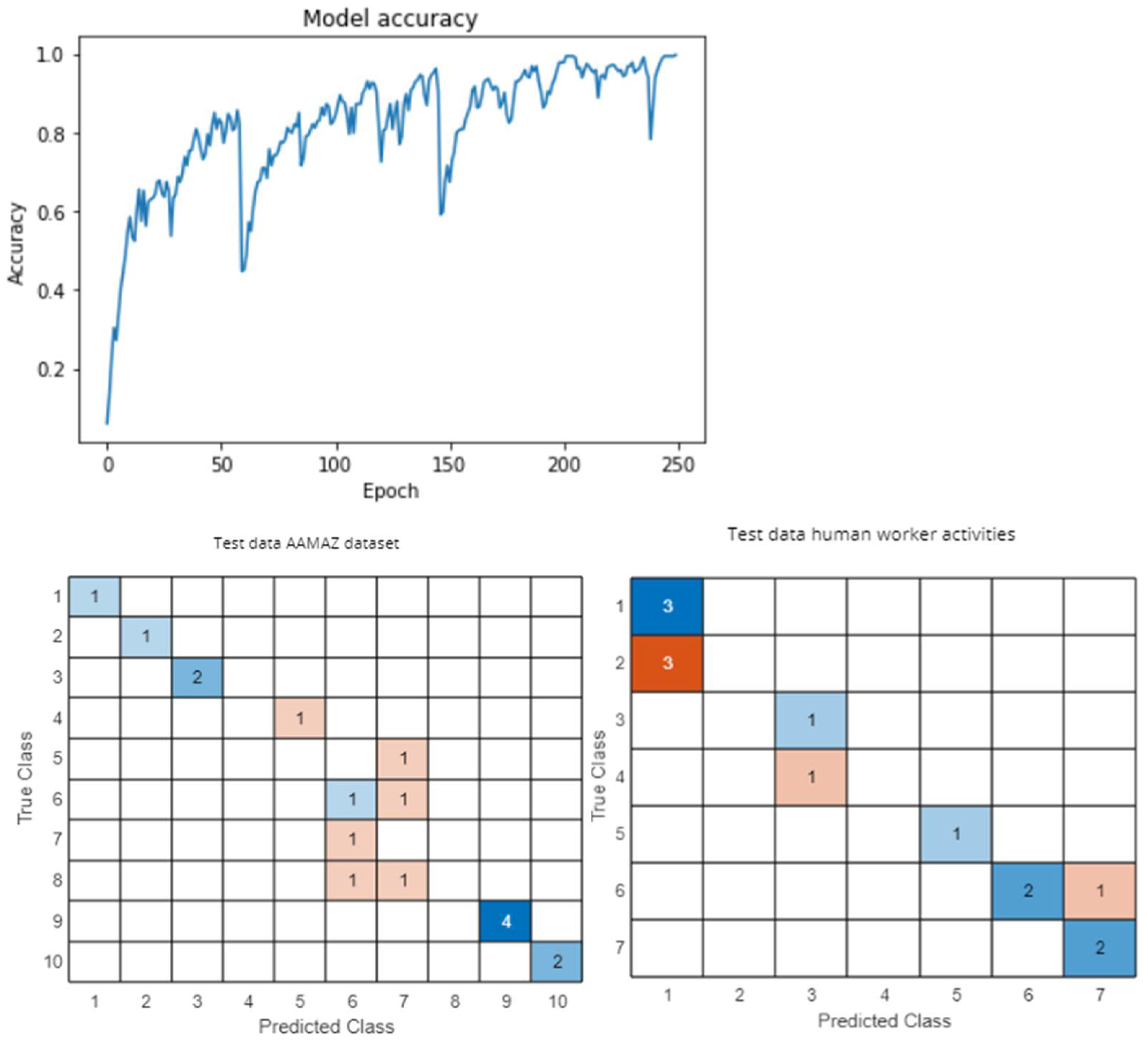

In [13], a subway driver system combining a CNN model and a time series diagram was presented as an example of nonverbal behavior serving as the input to a behavior recognition system, with hand movement used as the input for recognizing behavior. In [14], an infrared hand gesture estimation approach based on CNNs was investigated. This approach helped distinguish a teacher’s behavior by studying the teacher’s hand movements in a real classroom and in a live video. A two-step algorithm for detecting arms in image sequences was proposed, and the relative locations of the whiteboard and the teacher’s arm were used to determine hand gestures in the classroom. According to the experimental results, the developed network outperformed state-of-the-art approaches on two infrared image datasets.

Human posture estimation is considered a crucial phase because it directly affects the quality of a behavior identification system. Skeleton data are usually obtained in either a two-dimensional (2D) or three-dimensional (3D) format. For instance, in [15], a state-of-the-art error correction system combining algorithms for person identification and posture estimation was developed. A Microsoft Kinect sensor was used to capture RGB-depth images from which 3D skeletal data were gathered. The OpenPose framework was then used to identify 2D human postures. In addition, a deep neural network was used to train the classification model. To identify behaviors, both previously proposed approaches and skeleton-based approaches were evaluated.

Generally, activity-based analysis of human behavior remains substantially complicated. Body movements, skeletal structure and/or spatial qualities can be used to describe an individual in a video sequence. In [16], a highly effective model for 2D or 3D skeleton-based action recognition called the Pose Refinement Graph Convolutional Network was proposed. OpenPose was used to extract 2D human poses, and the pose data were refined by gradually merging the motion and location data. In [17], a hybrid model combining a transfer-learning CNN model, human tracking, skeletal characteristics and a deep-learning recurrent neural network model with a gated recurrent unit was proposed to improve the cognitive capabilities of the system and obtain excellent results. In [18], an At-seq2seq model was proposed for human motion prediction, which considerably decreased the difference between the predicted and true values and greatly extended the time span over which human motion could be predicted accurately. However, most of the current prediction methods are used for a single individual in a simple scenario and their practicality remains limited.

Techniques based on vision and wearable technology are considered the most developed for establishing a gesture-based interaction interface. In [19], an experimental comparison was conducted between the data of two movement estimation systems: position data collected from Microsoft Kinect RGBD cameras and acceleration data collected from IMUs. The results indicated that the IMU-based approach, also called OperaBLE, achieved a recognition accuracy rate reaching up to 8.5 times that of Microsoft Kinect, which turned out to be dependent on the movement execution plane, the subject’s posture and the focal distance.

Automated video analysis of interacting objects or people has several uses, including activity recognition and the detection of archetypal or unusual actions [20]. Current approaches typically use temporal sequences of quantifiable real-valued features, such as object location or orientation. However, several qualitative representations have also been proposed. In one novel and reliable qualitative method for identifying and clustering pair activities, qualitative trajectory calculus (QTC) was used to express the relative motion of two objects and their interactions as a trajectory of QTC states.
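To make the idea of a trajectory of QTC states concrete, the sketch below encodes a simplified version of the basic QTC variant: for each time step it records whether each object moves towards (`-`), away from (`+`), or neither towards nor away from (`0`) the other object's position at the start of the step. The function names, the tolerance `eps` and the 2D tuple format are assumptions for illustration; the cited work may use a richer variant.

```python
import math


def _symbol(dist_before, dist_after, eps=1e-9):
    """Map a change in distance to a qualitative symbol."""
    if dist_after < dist_before - eps:
        return "-"  # moving towards the other object
    if dist_after > dist_before + eps:
        return "+"  # moving away from the other object
    return "0"      # distance unchanged within tolerance


def qtc_state(k_t, k_next, l_t, l_next):
    """Return (symbol for k w.r.t. l, symbol for l w.r.t. k) for one time step."""
    k_sym = _symbol(math.dist(k_t, l_t), math.dist(k_next, l_t))
    l_sym = _symbol(math.dist(l_t, k_t), math.dist(l_next, k_t))
    return (k_sym, l_sym)


def qtc_trajectory(track_k, track_l):
    """Convert two position tracks of (x, y) tuples into a list of QTC states."""
    steps = min(len(track_k), len(track_l)) - 1
    return [
        qtc_state(track_k[i], track_k[i + 1], track_l[i], track_l[i + 1])
        for i in range(steps)
    ]


if __name__ == "__main__":
    k = [(0, 0), (1, 0), (1, 0)]
    l = [(5, 0), (5, 0), (6, 0)]
    print(qtc_trajectory(k, l))
```

Because the states are symbolic, two interactions with different speeds or scales can map to the same state sequence, which is what makes such sequences suitable for clustering.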

2.3. Human Robot Collaboration and Its Safety Measurement Approaches

In industry, the human–robot collaboration (HRC) paradigm influences how safety regulations are implemented. However, enforcing these rules might result in very inefficient robot behavior. Current HRC research [21] focuses on increasing robot and human collaboration, lowering the possibility of injury owing to mechanical characteristics and offering user-friendly programming strategies. According to [22], more than half of HRC studies do not employ safety requirements to address these challenges. This is a fundamental problem, since industrial robots or robot collaboration might endanger human employees if suitable safety systems are not implemented.

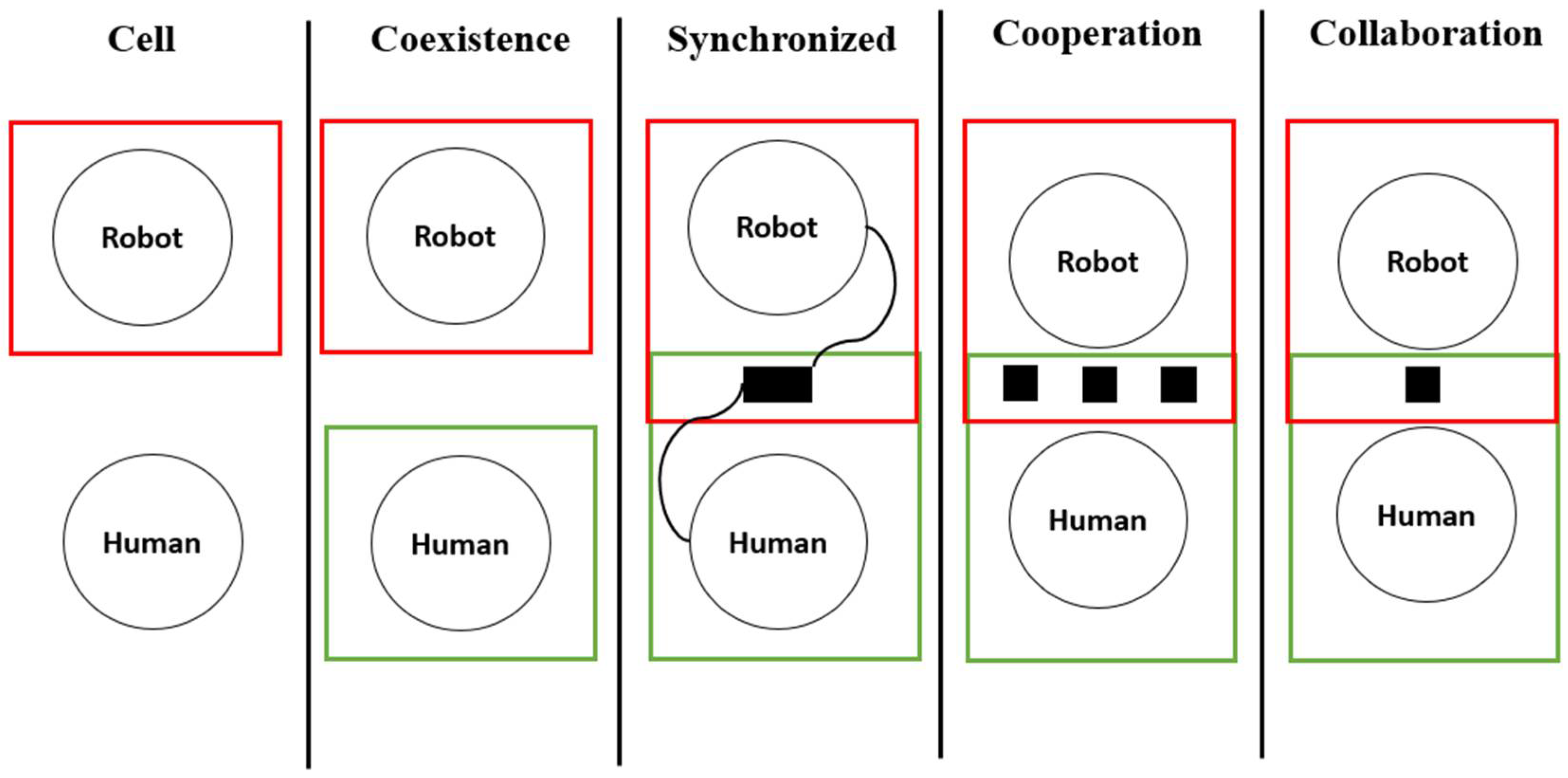

There are five types of human-robot collaboration: (1) cell, (2) cohabitation, (3) synchronized, (4) cooperation and (5) collaboration. Actual collaboration between humans and robots in a shared workstation begins at the synchronized, cooperation and collaboration levels. These three stages are defined by the moment humans enter the robot workstation. At the synchronized level, robots and humans work on identical products but take part in the work at different times. At the cooperation level, robots and humans work in the same workplace and at the same time, but their jobs are distinct. At the collaboration level, robots and humans work concurrently, share the same workspace and perform the same activity. As a result, people and robots can work together to complete jobs quickly. The HRC workplace design may be adapted to the industry, but it must adhere to the regulations outlined in ISO 10218-1:2011 and ISO 10218-2:2011. Because the standards for collaborative robots have been amended recently, it is vital to consider safety following ISO/TS 15066:2016. To reduce work accidents, four collaboration modes are employed in this regulation: Safety-rated Monitored Stop (SMS), Hand-guiding (HG), Speed and Separation Monitoring (SSM) and Power and Force Limiting (PFL).

As an extension of HR collaboration research, safety research based on the current standard has been carried out. Based on the ISO technical specification 15066, Marvel and Norcross [23] gave guidance on safe operation and elaborated on the mechanism during HR collaboration. Building on this, Andres et al. [24] defined the minimum separation distance by combining static and dynamic safety regulations. The authors argue that their proposed dynamic safety regulations are more practical than traditional safety regulations (SSM).
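As a rough illustration of how a separation distance of this kind can be computed, the sketch below uses the general additive form associated with speed and separation monitoring in ISO/TS 15066, simplified to constant human and robot speeds. It is not the specific formulation of [23] or [24]; the function names, parameter names and example values are assumptions.

```python
def protective_separation_distance(v_h, v_r, t_r, t_s, s_stop,
                                   c=0.0, z_d=0.0, z_r=0.0):
    """Simplified protective separation distance in metres.

    v_h: human approach speed (m/s), assumed constant
    v_r: robot speed towards the human (m/s), assumed constant
    t_r: reaction time of the robot system (s)
    t_s: stopping time of the robot (s)
    s_stop: distance travelled by the robot while stopping (m)
    c: intrusion distance; z_d, z_r: position uncertainties of the
       human sensing and of the robot (m)
    """
    s_h = v_h * (t_r + t_s)  # human travel during reaction and stopping
    s_r = v_r * t_r          # robot travel during the reaction time
    return s_h + s_r + s_stop + c + z_d + z_r


def must_stop(current_distance, **params):
    """Return True if the measured distance is below the protective distance."""
    return current_distance < protective_separation_distance(**params)


if __name__ == "__main__":
    # Illustrative values only.
    params = dict(v_h=1.6, v_r=1.0, t_r=0.1, t_s=0.4, s_stop=0.2)
    print(protective_separation_distance(**params))
    print(must_stop(0.9, **params))
```

A dynamic variant would re-evaluate this distance continuously from the measured speeds rather than using fixed worst-case values.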

Subsequently, applications based on dynamic safety zones for standard collaborative robots began to grow rapidly. Cosmo et al. [25] verified the possibility of collision during collaboration using their proposed dynamic safety regulation. According to their results, the proposed system yields better completion times than traditional static safety zones. Similarly, Byner et al. [26] proposed two methods to calculate robot velocity by considering the separation distance. This approach is applicable in industry, but it relies on sensors that provide precise human position information. In addition, an integrated fuzzy logic safety approach strengthened the protective barrier between people and machines [27]. It improved HR collaboration by maximizing the production time while guaranteeing the safety of human operators inside the shared workspace.

Research based on external sensors is also being developed in this field. In [28], humans are detected using a heat sensor on the robot arm, which makes the robot aware of human presence in its working space. A safety standard is then applied in HRC by including a potential run-away motion area. This method allows the industry to create a protective separation distance that anticipates the robot’s speed. Kino-dynamic research [29] employed a 2D laser scanner to maintain a safety distance based on a calculated minimum collaboration distance. A safety standard with two layers was also used in [30], in which trajectory scaling limits the robot’s speed and avoids interference during collaboration. Moreover, Ref. [31] presents a safety zone that adapts to the robot’s direction and location.

Apart from the robotic and instrumental point of view, HRC can be achieved using a human skeleton or human movement as the input of the recognition model (i.e., vision-based approaches). In one study, the SMI Begaze program was applied to expressive faces to create two areas of interest (AOIs), one around the eyes and one around the mouth, to understand human emotion perception [32]. Analysis of the AOIs revealed a relationship between callous-unemotional traits, externalizing problem behavior, emotion recognition and attention. Real-time classification for autonomous drowsiness detection using the eye aspect ratio has also been conducted [33].
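The eye aspect ratio (EAR) mentioned above is commonly computed from six eye landmarks as the ratio of the two vertical eyelid distances to twice the horizontal eye width. The sketch below follows that common definition; the landmark ordering, the threshold of 0.2 and the frame count are illustrative assumptions and are not taken from [33].

```python
import math


def eye_aspect_ratio(landmarks):
    """Compute the EAR from six (x, y) eye landmarks.

    Assumed ordering: p1 and p4 are the eye corners, p2 and p3 lie on the
    upper eyelid, p6 and p5 lie on the lower eyelid below p2 and p3.
    """
    p1, p2, p3, p4, p5, p6 = landmarks
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def is_drowsy(ear_values, threshold=0.2, min_frames=3):
    """Flag drowsiness when the EAR stays below the threshold for
    min_frames consecutive frames (both values are illustrative)."""
    run = 0
    for ear in ear_values:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))
    print(is_drowsy([0.30, 0.10, 0.10, 0.10]))
```

Requiring several consecutive low-EAR frames keeps ordinary blinks from being classified as drowsiness.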

Furthermore, the per-object situation awareness of a driver was identified using gaze behavior [34]. Combining eye tracking with computational road scene perception made this work possible. A low-cost monocular system that was as accurate as commercial systems for visual fixation registration and analysis was also developed [35]. Two mini cameras were used to track human eye gaze. The algorithm for ocular data acquisition used random sample consensus (RANSAC) and computer vision (CV) functions to improve pupil edge detection. The main goal of this mask-style eye tracker system was to provide researchers with a cost-effective technology solution for high-quality recording of eye fixation behaviors, for example in studies of human adaptation and relationships in several professional performance domains.

To conclude, various approaches to safety are still being developed in HR collaboration research. There are two mainstream approaches in this area, namely SSM and PFL. Standard-based SSM works well to limit the robot’s motion when the operator approaches certain areas of the robot’s workspace. However, the SSM-based model is less effective when the operator and robot are within a close distance of each other. Most SSM-based approaches command the robot to stop or idle until the operator leaves the robot’s working area. In contrast to SSM, the PFL-based framework uses a built-in or external force sensor to detect the initial collision between the operator and the robot when the contact force exceeds a threshold.