Tool Remaining Useful Life Prediction Method Based on Multi-Sensor Fusion under Variable Working Conditions

Abstract

1. Introduction

- The influence of changing working conditions on tool RUL prediction has not been considered. Most current studies focus only on constant working conditions, and few prediction methods address tool RUL under variable conditions.

- Most existing studies feed the signals from multiple sensors into the model simultaneously to predict tool RUL, but not all sensor signals are useful for the prediction, and the contribution of each sensor to the prediction result is not considered. As a result, the model obtains limited tool degradation information and predicts poorly.

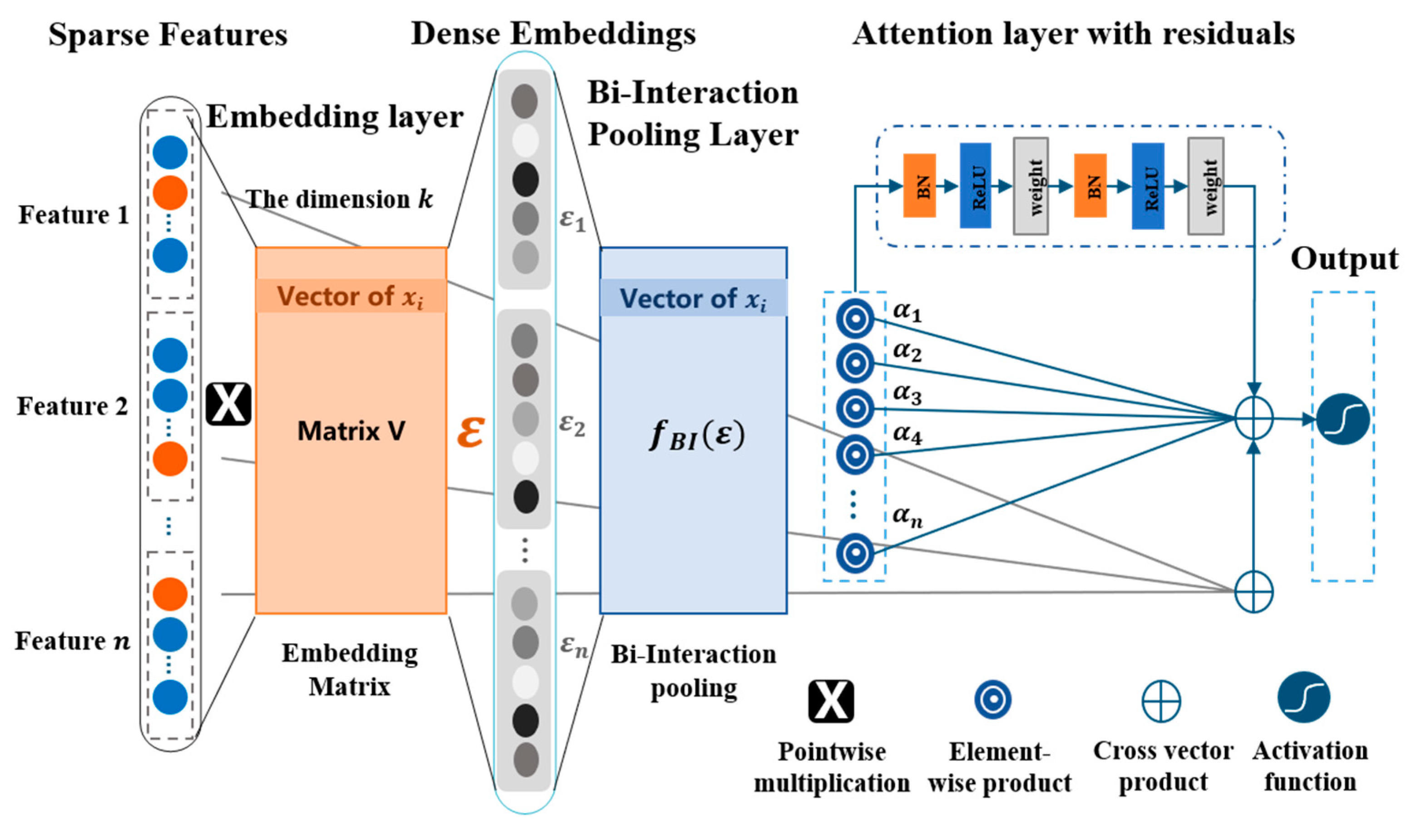

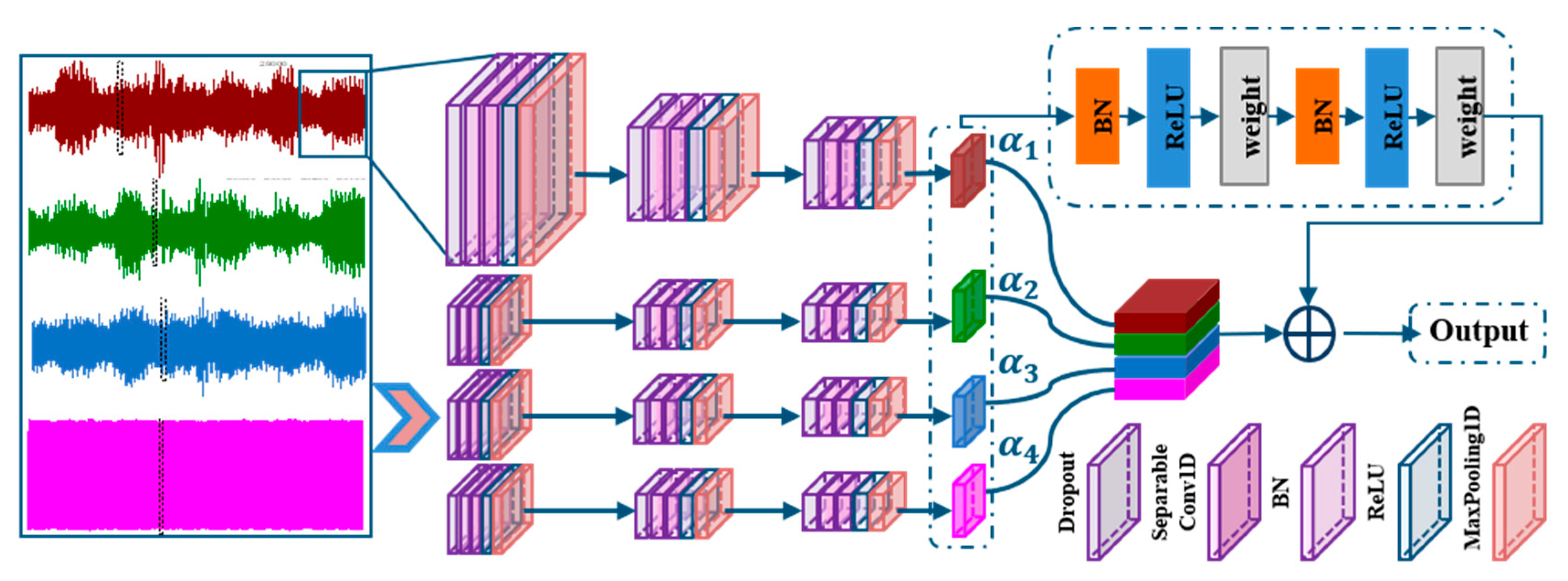

- A factorization machine is used to extract the nonlinear machining features from the low-frequency working condition signal, and one-dimensional separable convolution layers are used to extract features from the multi-channel high-frequency sensor signals. The model thus integrates the working condition information with the high-frequency sensor state information.

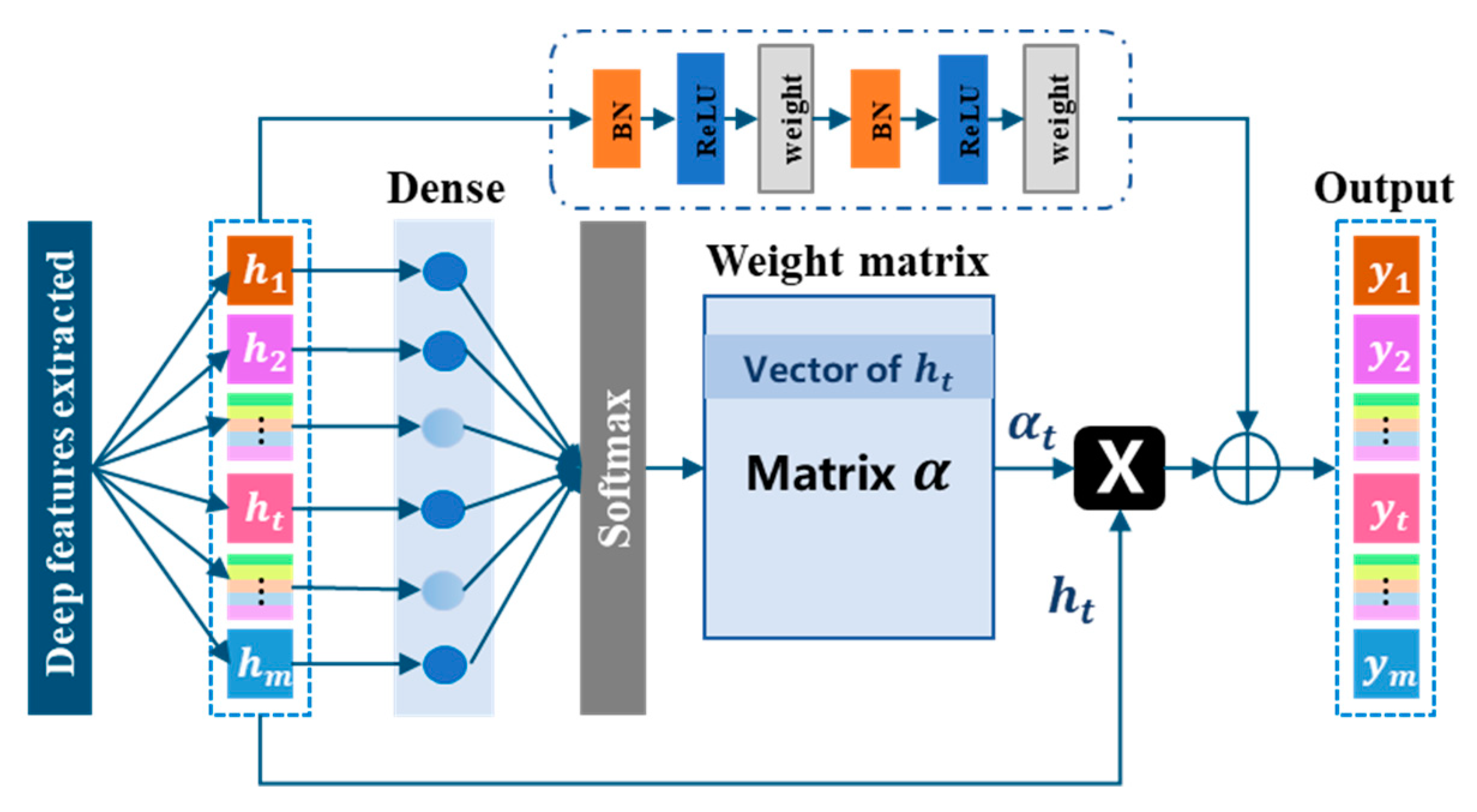

- An attention mechanism with residual connections is applied to fuse the features from the different signals using adaptively determined weights. The residual path transmits low-level features to the higher layers, which avoids the bottleneck in the upper layers caused by network degradation.

- The method is verified experimentally on Foxconn’s publicly available dataset. The experiments show that the proposed method effectively improves the prediction accuracy and stability of the model.
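The factorization machine mentioned above models pairwise interactions between input features through low-rank latent vectors. The following is a minimal NumPy sketch of the standard second-order FM term, not the authors' FMRA implementation. The function names, the latent dimension, and the choice of `spindle_load`, `x`, `y`, `z` as the four input features are assumptions made for illustration.

```python
import numpy as np


def fm_second_order(x, V):
    """Second-order FM term sum_{i<j} <v_i, v_j> x_i x_j in O(n * k) time.

    x: feature vector of shape (n,)
    V: latent factor matrix of shape (n, k), one k-dim vector per feature
    """
    xv = x @ V                    # (k,): sum_i v_{i,f} * x_i
    x2v2 = (x ** 2) @ (V ** 2)    # (k,): sum_i v_{i,f}^2 * x_i^2
    return 0.5 * float(np.sum(xv ** 2 - x2v2))


def fm_second_order_naive(x, V):
    """Same quantity computed pair by pair, kept only as a reference check."""
    n = len(x)
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += float(V[i] @ V[j]) * x[i] * x[j]
    return total


def fm_predict(x, w0, w, V):
    """Full FM output: bias + linear term + pairwise interaction term."""
    return w0 + float(w @ x) + fm_second_order(x, V)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical normalized working-condition sample:
    # [spindle_load, x, y, z]
    sample = np.array([0.42, 0.10, 0.75, 0.33])
    V = rng.normal(scale=0.1, size=(4, 3))   # k = 3 is an arbitrary choice
    w = rng.normal(scale=0.1, size=4)
    print(fm_predict(sample, 0.0, w, V))
```

The reformulated sum avoids the explicit double loop, which is why the FM stays cheap even when the number of working condition features grows.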

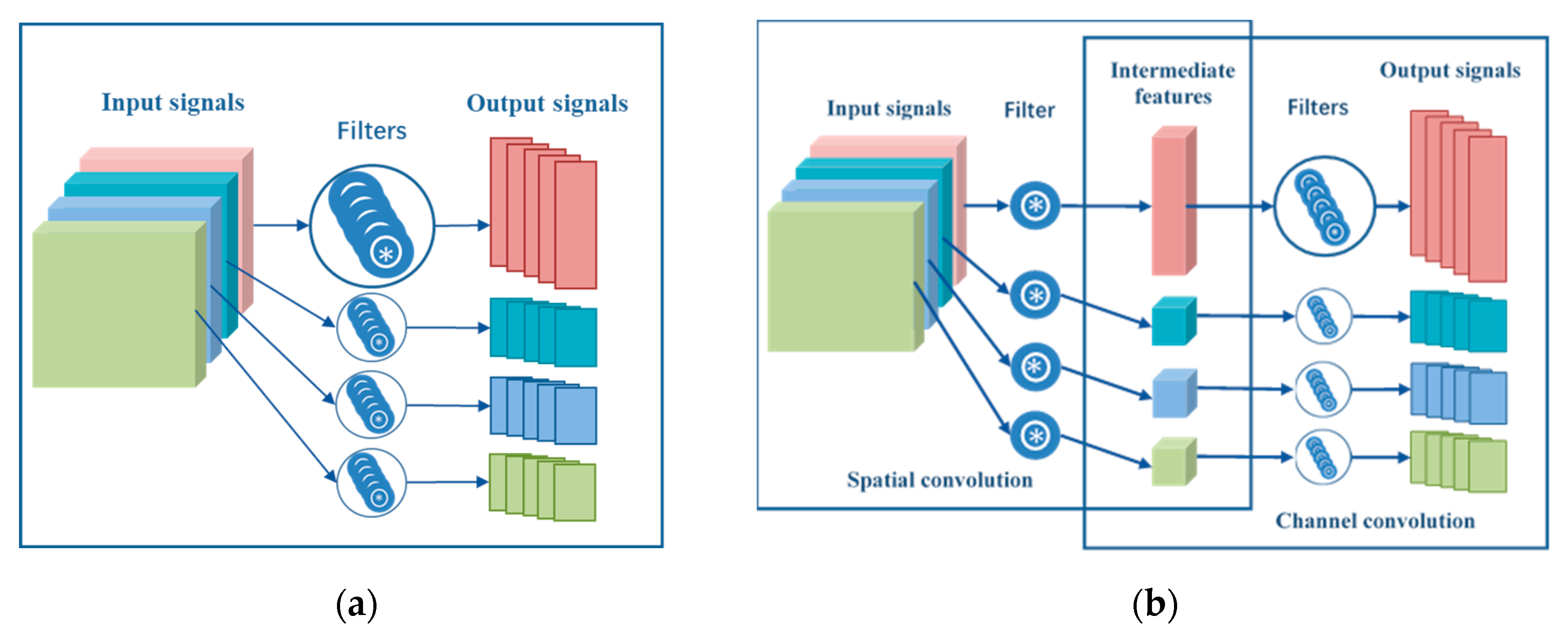

2. Related Theory

3. Tool RUL Prediction Method Based on Multi-Sensor Fusion under Variable Operating Conditions

3.1. The FMRA_SCNRA Overall Framework

3.2. FMRA Network Fusion Working Condition Information

3.3. The SCNRA Network Integrates Multi-Sensor Information

3.4. Residual Attention Network

4. Process of Tool RUL Prediction Based on Multi-Sensor Fusion under Variable Operating Conditions

- Data acquisition, preprocessing, and normalization: different signals are collected from the CNC machine tool through multiple sensors, and the operating condition signals are collected through the PLC. The collected data are then preprocessed, which includes data cleaning and normalization to the range [0, 1].

- Model construction and training: after the model is built, it is trained on the training samples, and the network parameters are adjusted according to evaluation indicators and visual analysis.

- Model prediction validation: the preprocessed and normalized test samples are input to the trained model for validation, and the prediction performance of the model is verified through comparative experiments.
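The cleaning and [0, 1] normalization step described above can be sketched as follows. This is an illustrative sketch only: the text does not specify the cleaning rules or which statistics are used for scaling, so dropping rows with non-finite values and fitting the minimum and maximum on the training samples are assumptions, and the function names are hypothetical.

```python
import numpy as np


def clean_rows(data):
    """Drop rows containing NaN or infinite values (assumed cleaning rule)."""
    data = np.asarray(data, dtype=float)
    return data[np.isfinite(data).all(axis=1)]


def min_max_normalize(train, test, eps=1e-12):
    """Scale each column to [0, 1] using the training minimum and maximum.

    Test samples reuse the training statistics, so test values outside the
    training range can fall slightly outside [0, 1].
    """
    train = np.asarray(train, dtype=float)
    test = np.asarray(test, dtype=float)
    lo = train.min(axis=0)
    hi = train.max(axis=0)
    scale = np.maximum(hi - lo, eps)   # guard against constant columns
    return (train - lo) / scale, (test - lo) / scale


if __name__ == "__main__":
    raw_train = [[0.0, 10.0], [5.0, np.nan], [5.0, 20.0], [10.0, 30.0]]
    raw_test = [[2.5, 15.0]]
    train_n, test_n = min_max_normalize(clean_rows(raw_train), raw_test)
    print(train_n)
    print(test_n)
```

Reusing the training statistics for the test samples keeps information from the test set out of the preprocessing stage.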

5. Experimental Validation

5.1. Introduction of the Experimental Dataset

5.2. Data Preprocessing

5.3. Model Parameter Setting

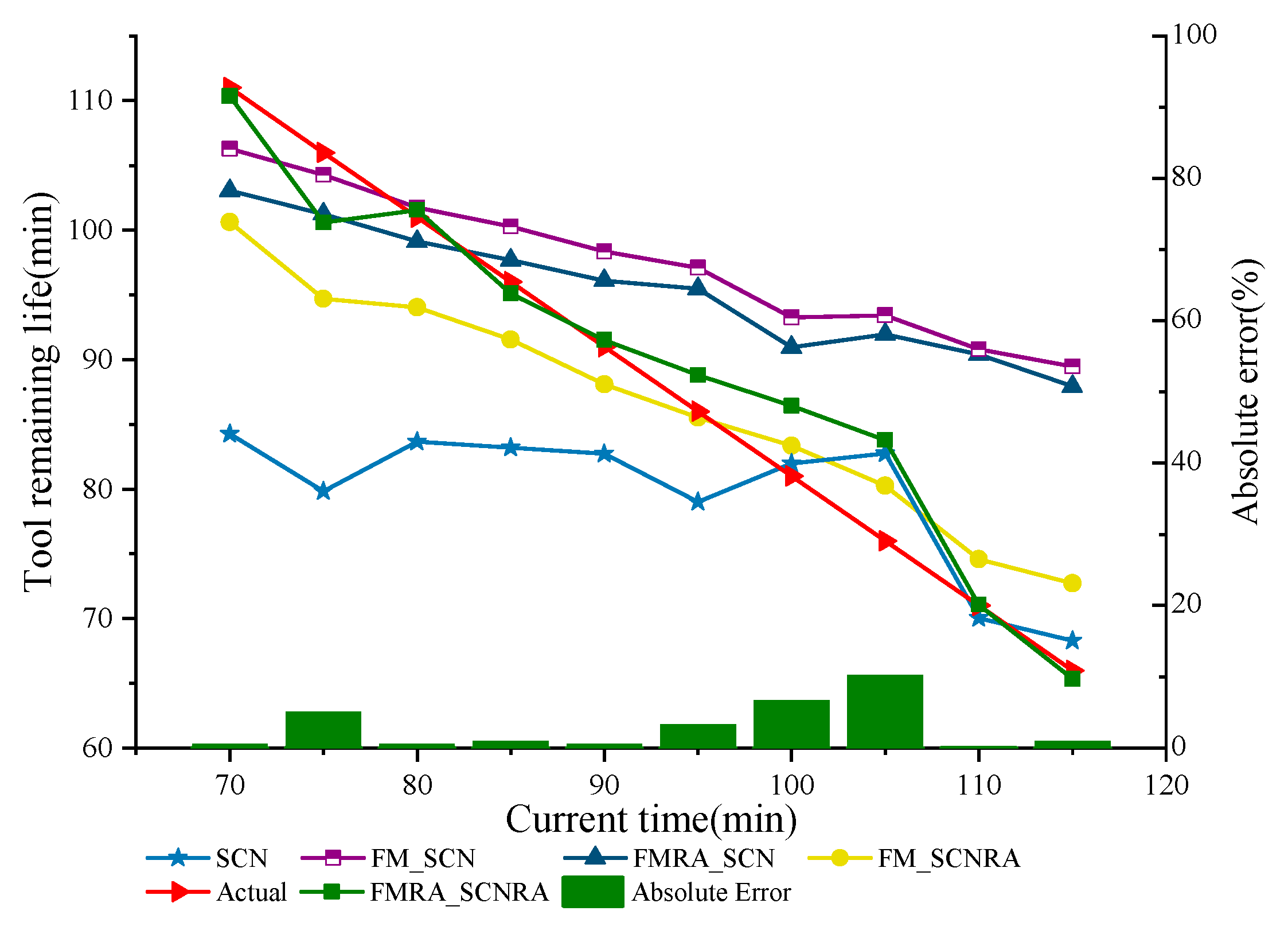

5.4. Experimental Results and Comparative Analysis

- (1) SCN: uses only three layers of parallel one-dimensional separable convolution modules to extract the multi-sensor features, which are then merged and input directly into three fully connected layers;

- (2) FM_SCN: uses the same SCN to extract the multi-sensor features and an FM network to extract the working condition features. The two sets of features are then combined and input into the three fully connected layers;

- (3) FMRA_SCN: based on the FM_SCN model, with the residual attention mechanism applied for adaptive weight allocation on the extracted working condition features;

- (4) FM_SCNRA: based on the FM_SCN model, with the residual attention mechanism applied to the extracted sensor features.

In the comparison experiments, the parameters of the network branches shared between models were kept the same.
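The residual attention mechanism that separates FMRA_SCN and FM_SCNRA from FM_SCN can be illustrated with a small NumPy sketch. The exact formulation used in the paper is not given in this text, so the form below (softmax attention weights multiplied into the features, plus an identity shortcut that passes the original features through) is an assumption, as are the function names and shapes.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def residual_attention(features, W, b):
    """Re-weight features with learned attention and add a residual shortcut.

    features: array of shape (n_samples, d)
    W, b:     parameters of a dense scoring layer, shapes (d, d) and (d,)
    Returns the fused features and the attention weights, both (n_samples, d).
    """
    scores = features @ W + b
    weights = softmax(scores, axis=-1)      # adaptive weights, rows sum to 1
    fused = features + weights * features   # shortcut keeps low-level features
    return fused, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(2, 4))         # hypothetical extracted features
    W = rng.normal(scale=0.1, size=(4, 4))
    b = np.zeros(4)
    fused, weights = residual_attention(feats, W, b)
    print(weights.sum(axis=1))
    print(fused.shape)
```

Because of the shortcut, the output can never fall below the unweighted features in information content: even if the attention weights are poorly trained, the original features still reach the following layers.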

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| File Type | Sampling Frequency | Number of Files | Data | Description |
|---|---|---|---|---|
| Sensor data | 25,600 Hz | 48 | vibration_1 | x-axis vibration signal |
| | | | vibration_2 | y-axis vibration signal |
| | | | vibration_3 | z-axis vibration signal |
| | | | current | First phase current |
| PLC data | 33 Hz | 1 | time | Record time |
| | | | spindle_load | Spindle load |
| | | | x | x-axis coordinate |
| | | | y | y-axis coordinate |
| | | | z | z-axis coordinate |
| | | | csv_no | Number of corresponding sensor files |

| Layer | Type | Parameter Setting | Output Size |
|---|---|---|---|
| 1 | Dropout 1 | 0.5 | (n, 776, 1) |
| 2 | SeparableConv1D 1 | 32, 11, 1 | (n, 776, 32) |
| 3 | Batch Normalization 1 | - | (n, 776, 32) |
| 4 | Activation 1 | ReLU | (n, 776, 32) |
| 5 | MaxPooling1D 1 | 11 | (n, 71, 32) |
| 6 | Dropout 2 | 0.5 | (n, 71, 32) |
| 7 | SeparableConv1D 2 | 64, 9, 1 | (n, 71, 64) |
| 8 | Batch Normalization 2 | - | (n, 71, 64) |
| 9 | Activation 2 | ReLU | (n, 71, 64) |
| 10 | MaxPooling1D 2 | 9 | (n, 8, 64) |
| 11 | Dropout 3 | 0.5 | (n, 8, 64) |
| 12 | SeparableConv1D 3 | 128, 7, 1 | (n, 8, 128) |
| 13 | Batch Normalization 3 | - | (n, 8, 128) |
| 14 | Activation 3 | ReLU | (n, 8, 128) |
| 15 | MaxPooling1D 3 | 7 | (n, 2, 128) |
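The output sizes in the layer table can be reproduced with a few lines of arithmetic. The sketch below assumes that each convolution preserves the sequence length (stride 1 with "same" padding), that each pooling layer uses a stride equal to its pool size with "same" padding, and that the SeparableConv1D settings are listed as filters, kernel size, stride. None of these are stated explicitly in the text; they are simply the reading under which the listed sizes are consistent. The helper names are hypothetical.

```python
import math


def pooled_length(length, pool_size):
    """Sequence length after max pooling with stride == pool_size, 'same' padding."""
    return math.ceil(length / pool_size)


def trace_lengths(input_length, pool_sizes):
    """Sequence length after each conv + pool stage (conv keeps the length)."""
    lengths = []
    for pool_size in pool_sizes:
        input_length = pooled_length(input_length, pool_size)
        lengths.append(input_length)
    return lengths


def separable_conv1d_weights(kernel_size, in_channels, out_channels):
    """Weights (no bias) of a depthwise separable 1D convolution:
    one depthwise kernel per input channel plus a 1x1 pointwise mixing layer."""
    return kernel_size * in_channels + in_channels * out_channels


def standard_conv1d_weights(kernel_size, in_channels, out_channels):
    """Weights (no bias) of an ordinary 1D convolution, for comparison."""
    return kernel_size * in_channels * out_channels


if __name__ == "__main__":
    print(trace_lengths(776, [11, 9, 7]))        # [71, 8, 2]
    print(separable_conv1d_weights(9, 32, 64))   # 2336
    print(standard_conv1d_weights(9, 32, 64))    # 18432
```

Under these assumptions the second convolution stage needs roughly an eighth of the weights of an ordinary convolution with the same kernel size and channel counts, which is the usual motivation for separable convolutions.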

| Model | MAE | RMSE | Accuracy (%) | P-P Value |
|---|---|---|---|---|
| SCN | 11.91 | 13.92 | 87.93 | 26.58 |
| FM_SCN | 10.28 | 12.71 | 88.05 | 23.46 |
| FMRA_SCN | 9.80 | 11.91 | 88.65 | 21.93 |
| FM_SCNRA | 5.34 | 6.27 | 94.31 | 11.30 |
| FMRA_SCNRA | 2.48 | 3.59 | 97.18 | 7.78 |
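Two of the metrics in the table, MAE and RMSE, have standard definitions and can be computed as in the sketch below. The accuracy and P-P value columns are not sketched because their definitions do not appear in this text. The RUL values in the example are made up for illustration and are not taken from the experiments.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error between true and predicted RUL."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


def rmse(y_true, y_pred):
    """Root mean square error; penalizes large deviations more than MAE."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


if __name__ == "__main__":
    # Hypothetical RUL values, for illustration only.
    rul_true = [10.0, 20.0, 30.0]
    rul_pred = [12.0, 18.0, 33.0]
    print(mae(rul_true, rul_pred))
    print(rmse(rul_true, rul_pred))
```

RMSE is never smaller than MAE on the same predictions, so a gap between the two columns indicates that a model's errors are unevenly distributed.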

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Q.; Qian, C.; Li, C.; Han, Y.; Zhang, Y.; Xie, H. Tool Remaining Useful Life Prediction Method Based on Multi-Sensor Fusion under Variable Working Conditions. Machines 2022, 10, 884. https://doi.org/10.3390/machines10100884
