Edgeworth Coefficients for Standard Multivariate Estimates

Abstract

1. Introduction and Summary

2. Multivariate Edgeworth Expansions

3. Secondary or Derived Expansions

- For of (56), set

- Thus, . For and ,

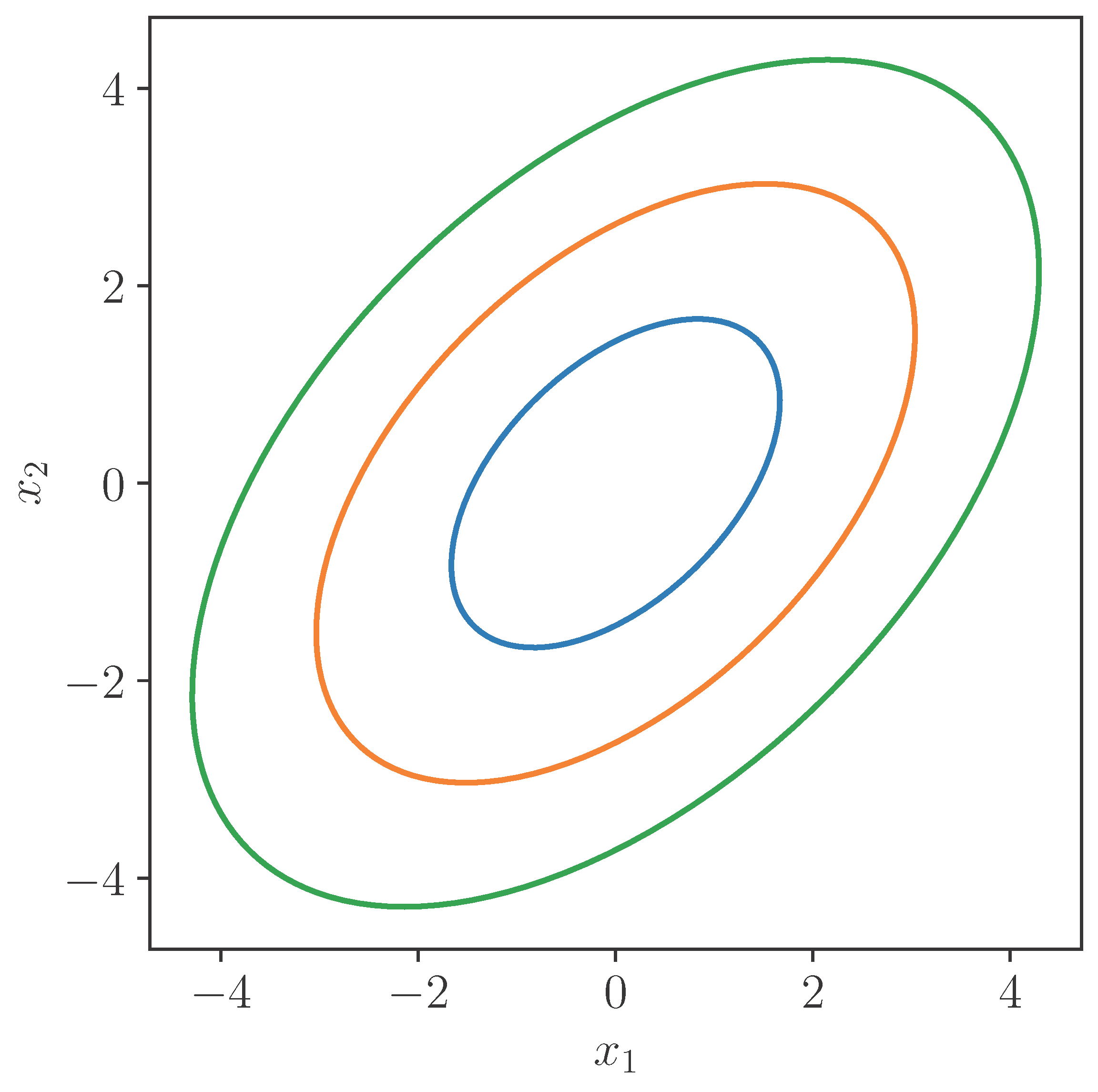

4. The Distribution of $X_n = n^{1/2}()$ for $q = 2$

5. Conclusions

6. Discussion

- Ref. [13] showed how to generalise the expansions of Cornish and Fisher about the normal distribution to expansions about an arbitrary continuous distribution. Their results are cumbersome as they involve partition theory. In [16], I overcame this using Bell polynomials. It would be straightforward to apply these to expansions about in Example 3 to obtain the percentiles of and . However, in the latter case, we first need to derive the cumulant coefficients of from those of . This can be done by applying the results of [1].

- It would be very useful to obtain the multivariate of (44) explicitly.

- The multivariate expansions considered here have been about the multivariate normal. However, as noted at the end of Section 1, expansions about other distributions can greatly reduce the number of terms in each and by matching bias and/or skewness. While this was derived for by Withers and Nadarajah [10,14,15] about Student’s distribution, the F-distribution and the gamma distribution, it has yet to be derived for multivariate expansions about, for example, a multivariate gamma distribution.

- The results here can be extended to tilted (saddle-point) expansions by applying the results of [2]. These are very useful where convergence fails, that is, where the CLT cannot be improved upon, typically due to being in a tail. The tilted versions of the multivariate distribution and density of a standard estimate are given by Corollaries 3 and 4 there. Tilting was first used in statistics by Daniels [27], who gave an approximation to the density of a sample mean. See also [49]. Ref. [7] gave a univariate extension to where was the sum of N independent and identically distributed observations, and N was Poisson. The extension of the present results from to would be useful for both univariate and multivariate observations. For a review of references on tilting, see [2].

- Ref. [41] provides a Python program to obtain both analytic and numerical values of multivariate normal moments and multivariate Hermite polynomials when . It would be useful to have these extended to and 4. (The alternative notation for and when or 4 is straightforward.)

- The end of Appendix C suggests a way of giving more theorems for Edgeworth expansions.
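To make the remark on multivariate normal moments concrete, the following is a minimal sketch, assuming a zero-mean normal vector with covariance matrix V, of how such moments can be computed numerically from the pairing formula of Isserlis [50]. It is not the program of [41]; the names `pairings` and `normal_moment` are hypothetical and chosen only for this illustration.

```python
def pairings(idx):
    """Yield every way to split the tuple idx into unordered pairs."""
    if not idx:
        yield []
        return
    first, rest = idx[0], idx[1:]
    for k, partner in enumerate(rest):
        remaining = rest[:k] + rest[k + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


def normal_moment(V, idx):
    """Return E[X_{i1} ... X_{ik}] for X ~ N(0, V), with idx = (i1, ..., ik).

    Assumption: the mean is zero. By Isserlis' theorem the moment is the
    sum, over all pairings of the indices, of the product of the paired
    covariances; moments of odd order vanish.
    """
    idx = tuple(idx)
    if len(idx) % 2:
        return 0.0
    total = 0.0
    for pairing in pairings(idx):
        term = 1.0
        for a, b in pairing:
            term *= V[a][b]
        total += term
    return total


if __name__ == "__main__":
    V = [[1.0, 0.5], [0.5, 2.0]]
    print(normal_moment(V, (0, 0, 0, 0)))  # 3 * V[0][0]**2
    print(normal_moment(V, (0, 0, 1, 1)))  # V[0][0]*V[1][1] + 2*V[0][1]**2
```

The number of pairings of 2m indices is (2m − 1)!!, so this direct enumeration is only practical for low orders. It is meant as a check on small cases rather than as an efficient method.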

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. The Edgeworth Coefficients Needed for (18)

Appendix B. $\mu_{ab}$ and $H_{ab}$ of (66) for $a + b \le 9$

Appendix C. Regularity Conditions for the Edgeworth Expansions of (22)

Appendix D. Some Corrigenda to the References

References

- [1] Withers, C.S. 5th-Order multivariate Edgeworth expansions for parametric estimates. Mathematics 2024, 12, 905.

- [2] Withers, C.S.; Nadarajah, S. Tilted Edgeworth expansions for asymptotically normal vectors. Ann. Inst. Stat. Math. 2010, 62, 1113–1142.

- [3] Cornish, E.A.; Fisher, R.A. Moments and Cumulants in the Specification of Distributions. Rev. l’Inst. Int. Statist. 1937, 5, 307–322.

- [4] Withers, C.S. Asymptotic expansions for distributions and quantiles with power series cumulants. J. R. Statist. Soc. B 1984, 46, 389–396; Erratum in J. R. Statist. Soc. B 1986, 48, 256.

- [5] Stuart, A.; Ord, K. Kendall’s Advanced Theory of Statistics, 2, 5th ed.; Griffin: London, UK, 1991.

- [6] Kolassa, J.E. Series Approximation Methods in Statistics; Springer: Berlin/Heidelberg, Germany, 1997.

- [7] Jensen, J.L. Uniform saddlepoint approximations. Adv. Appl. Prob. 1988, 20, 622–634.

- [8] Fisher, R.A.; Cornish, E.A. The percentile points of distributions having known cumulants. Technometrics 1960, 2, 209–225.

- [9] Withers, C.S. A simple expression for the multivariate Hermite polynomials. Stat. Prob. Lett. 2000, 47, 165–169.

- [10] Withers, C.S.; Nadarajah, S.N. Improved confidence regions based on Edgeworth expansions. Comput. Stat. Data Anal. 2012, 56, 4366–4380.

- [11] Edgeworth, F.Y. The law of error. Proc. Camb. Philos. Soc. 1905, 20, 36–65.

- [12] Withers, C.S.; Nadarajah, S.N. Charlier and Edgeworth expansions via Bell polynomials. Probab. Math. Stat. 2009, 29, 271–280.

- [13] Hill, G.W.; Davis, A.W. Generalised asymptotic expansions of Cornish-Fisher type. Ann. Math. Statist. 1968, 39, 1264–1273.

- [14] Withers, C.S.; Nadarajah, S. Generalized Cornish-Fisher expansions. Bull. Braz. Math. Soc. New Ser. 2011, 42, 213–242.

- [15] Withers, C.S.; Nadarajah, S. Expansions about the gamma for the distribution and quantiles of a standard estimate. Methodol. Comput. Appl. Prob. 2014, 16, 693–713.

- [16] Withers, C.S.; Nadarajah, S. Edgeworth-Cornish-Fisher-Hill-Davis expansions for normal and non-normal limits via Bell polynomials. Stochastics Int. J. Probab. Stoch. Processes 2015, 87, 794–805.

- [17] Simonato, J.G. The performance of Johnson distributions for computing value at risk and expected shortfall. J. Deriv. 2011, 19, 7–24.

- [18] Zhang, L.; Mykland, P.A.; Ait-Sahalia, Y. Edgeworth expansions for realised volatility and related estimators. J. Econom. 2011, 160, 190–203.

- [19] Withers, C.S.; Nadarajah, S.N. Moment generating functions for Rayleigh Random Variables. Wirel. Pers. Commun. 2008, 46, 463–468.

- [20] Song, T.; Wang, S.; An, W. GPS positioning accuracy estimation using Cornish-Fisher expansions. In Proceedings of the 2009 WRI International Conference on Communications and Mobile Computing, Kunming, China, 6–8 January 2009; pp. 152–155.

- [21] Abdel-Wahed, A.R.; Winterbottom, A. Approximating posterior distributions of system reliability. Statistician 1983, 32, 224–228.

- [22] Winterbottom, A. Asymptotic expansions to improve large sample confidence intervals for system reliability. Biometrika 1980, 67, 351–357.

- [23] Winterbottom, A. The interval estimation of system reliability component test data. Oper. Res. 1984, 32, 628–640.

- [24] Sellentin, E.; Jaffe, A.H.; Heavens, A.F. On the use of the Edgeworth expansion in cosmology I: How to foresee and evade its pitfalls. arXiv 2017, arXiv:1709.03452.

- [25] Perninge, M. Stochastic Optimal Power Flow by Multivariate Edgeworth Expansions; Electric Power Systems Research; Elsevier: Amsterdam, The Netherlands, 2014.

- [26] Withers, C.S.; Nadarajah, S.N. Cornish-Fisher expansions about the F-distribution. Appl. Math. Comput. 2012, 218, 7947–7957.

- [27] Daniels, H.E. Saddlepoint approximations in statistics. Ann. Math. Statist. 1954, 25, 631–650.

- [28] Cramer, H. Mathematical Methods of Statistics; Princeton University Press: Princeton, NJ, USA, 1946.

- [29] Bhattacharya, R.N.; Ranga Rao, R. Normal Approximation and Asymptotic Expansions; Wiley: New York, NY, USA, 1976.

- [30] Skovgaard, I.M. On multivariate Edgeworth expansions. Int. Statist. Rev. 1986, 54, 169–186.

- [31] Comtet, L. Advanced Combinatorics; Reidel: Dordrecht, The Netherlands, 1974.

- [32] Withers, C.S. Nonparametric confidence intervals for functions of several distributions. Ann. Inst. Statist. Math. 1988, 40, 727–746.

- [33] Withers, C.S. Accurate confidence intervals when nuisance parameters are present. Comm. Statist.-Theory Methods 1989, 18, 4229–4259.

- [34] Takeuchi, K. A multivariate generalization of Cornish-Fisher expansion and its applications. Keizaigaku Ronshu 1978, 44, 1–12. (In Japanese)

- [35] Takemura, A.; Takeuchi, K. Some results on univariate and multivariate Cornish-Fisher expansions: Algebraic properties and validity. Sankhya Ser. A 1988, 50, 111–136.

- [36] Anderson, T.W. An Introduction to Multivariate Analysis; John Wiley: New York, NY, USA, 1958.

- [37] Gradshteyn, I.S.; Ryzhik, I.M. Tables of Integrals, Series and Products, 6th ed.; Academic Press: New York, NY, USA, 2000. (The generalized hypergeometric function is defined in Section 9.14.)

- [38] Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions; Applied Mathematics Series 55; U.S. Department of Commerce; National Bureau of Standards: Gaithersburg, MD, USA, 1964.

- [39] Bentkus, V.; Götze, F.; van Zwet, W.R. An Edgeworth expansion for symmetric statistics. Ann. Stat. 1997, 25, 851–896.

- [40] Xu, J.; Gupta, A.K. Improved confidence regions for a mean vector under general conditions. Comput. Stat. Data Anal. 2006, 51, 1051–1062.

- [41] Teal, P. A code to calculate bivariate Hermite polynomials. Its input is V11,V12,V22 and y1,y2, not V11,V12,V22 and x1,x2. 2024. Available online: https://github.com/paultnz/bihermite/blob/main/bihermite.py (accessed on 26 June 2025).

- [42] Hall, P. The Bootstrap and Edgeworth Expansion; Springer: New York, NY, USA, 1992.

- [43] Hall, P. Rejoinder: Theoretical Comparison of Bootstrap Confidence Intervals. Ann. Stat. 1988, 16, 981–985.

- [44] Skovgaard, I.M. Edgeworth expansions of the distributions of maximum likelihood estimators in the general (non i.i.d.) case. Scand. J. Statist. 1981, 8, 227–236.

- [45] Skovgaard, I.M. Transformation of an Edgeworth expansion by a sequence of smooth functions. Scand. J. Statist. 1981, 8, 207–217.

- [46] Withers, C.S.; Nadarajah, S. The distribution and percentiles of channel capacity for multiple arrays. Sadhana SADH Indian Acad. Sci. 2020, 45, 155.

- [47] Withers, C.S. Edgeworth-Cornish-Fisher expansion for the mean when sampling from a stationary process. Axioms 2025, 14, 406.

- [48] Withers, C.S. Expansions for the distribution and quantiles of a regular functional of the empirical distribution with applications to nonparametric confidence intervals. Annals Statist. 1983, 11, 577–587.

- [49] Field, C.A.; Hampel, F.R. Small sample asymptotic distributions of M-estimators of location. Biometrika 1982, 69, 29–46.

- [50] Isserlis, L. On a formula for the product-moment coefficient of any order of a normal frequency distribution in any number of variables. Biometrika 1918, 12, 134–139.

- [51] Withers, C.S. New methods for multivariate normal moments. Stats 2025, 8, 46.


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Withers, C.S. Edgeworth Coefficients for Standard Multivariate Estimates. Axioms 2025, 14, 632. https://doi.org/10.3390/axioms14080632
