1. Introduction

Truncated distributions have applications across many scientific disciplines, particularly communication networks, economics, hydrology, materials science, and physics. A truncated distribution is a conditional distribution that arises when the domain of the original distribution is restricted to a narrower range. It occurs when events above or below a specified threshold, or outside a defined range, cannot be observed or recorded. Truncated data are common in reliability studies, particularly when the variable of interest relates to the failure rates of items. Under truncation, information about items outside the observed range is unavailable. An example in manufacturing arises when a sample of items is chosen for analysis from a population from which items that failed to meet established criteria have already been excluded.

Several truncated distributions have been presented by various authors. El Gazar et al. [1,2,3] studied truncation for the inverse power Ailamujia, moment exponential, and inverse power Ishita distributions, with applications in different fields. Elgarhy et al. [4] studied different estimation methods for the inverse power Ailamujia and truncated inverse power Ailamujia distributions under a progressive type-II censoring scheme. Thiamsorn et al. [5] studied a variant of the truncated Ishita distribution. Singh et al. [6] developed a truncated variant of the Lindley distribution, analyzed its statistical characteristics, and showed on real data that it models the data better than the Weibull, Lindley, and exponential distributions. Almetwally et al. [7] investigated the truncated Cauchy power Weibull-G family, emphasizing the relevance of their findings to population research and other fields that involve truncated distributions. Chesneau et al. [8] applied the truncated composite approach to the Burr X distribution, which led to a novel truncated Burr X generated family. The truncated version of the Chris–Jerry model and its basic properties were studied by Jabarah et al. [9]. Zaninetti et al. [10] found that the truncated Pareto distribution outperforms the Pareto distribution on astronomical data. Nadarajah [11] examined several truncated distributions, including the t-distribution and inverted distributions, and also investigated the beta and Lévy distributions. Hassan et al. [12] presented the truncated Lomax-G family power Lomax distribution and, in a subsequent study [13], suggested the power truncation of the Lomax-G family. Hussein and Ahmed [14] presented the truncated Gompertz-exponential distribution on the unit interval, highlighting its distinctive properties and practical applications. Khalaf et al. [15] discussed the truncated Rayleigh Pareto distribution and its applications. Altawil [16] introduced the truncated Lomax-uniform distribution on the unit interval and clarified its properties. Elah et al. [17] investigated a novel zero-truncated distribution and its use for count datasets. Recently, Nader et al. [18] discussed a new truncated Fréchet-inverted Weibull distribution with many applications in the medical field.

In parallel, recent years have seen growing interest in new developments in distribution theory, particularly around the Perk (PE) distribution. This interest arises from data that depart from conventional distributions such as the gamma, exponential, and Weibull models. In finance, for example, heavy-tailed datasets are often poorly represented by conventional models, which leads to erroneous risk evaluations and possible financial losses. Faced with such data, researchers must develop new distributions that match the observed patterns more closely and that apply across many fields. Modifying existing models has been a prevalent strategy for handling the diversity present in datasets, and over the last twenty years many new models have been obtained by extending and modifying existing ones.

The PE distribution, which originates from the Gompertz–Makeham distribution and was developed by [19], is used in actuarial science, specifically for the analysis of mortality data at older ages, as noted by Richards [20]. Singh et al. [21] explored the exponentiated Perk distribution. The odd Perk-G family of distributions was introduced by Elbatal et al. [22] and is characterized by two scale parameters. Recently, Hussain et al. [23] discussed the inverse power Perk distribution, with applications in engineering and actuarial fields.

Suppose that a random variable X follows the Perk distribution; then its probability density function (PDF) and cumulative distribution function (CDF) are given, respectively, by the following relations:

where α and λ are scale parameters.
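For orientation, the two-parameter Perks form that is commonly used in the actuarial literature is sketched below. It is an assumption that Equations (1) and (2) take exactly this form and parameterization; the symbols f and F are introduced here only for illustration.

```latex
% Assumed Perk (Perks) PDF and CDF; x > 0, \alpha > 0, \lambda > 0.
f(x;\alpha,\lambda) = \frac{\alpha\lambda(1+\alpha)\,e^{\lambda x}}{\left(1+\alpha e^{\lambda x}\right)^{2}},
\qquad
F(x;\alpha,\lambda) = 1-\frac{1+\alpha}{1+\alpha e^{\lambda x}} .
```

Under this form the hazard rate is \alpha\lambda e^{\lambda x}/(1+\alpha e^{\lambda x}), which is logistic in x and explains the use of the model for old-age mortality.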

This paper focuses on a novel truncated distribution, termed the truncated Perk (TPE) distribution, and examines its basic characteristics. It is noteworthy for several reasons:

From a functional perspective, it is exceedingly straightforward, has merely two parameters, and is still novel in the current literature;

The associated PDF may be decreasing, right-skewed, or reversed-J-shaped, while the hazard rate function (HRF) can be increasing or J-shaped. These attributes are advantageous in various contexts, including survival analysis, reliability assessment, and uncertainty analysis;

This paper analyzes three distinct datasets to provide practical examples that motivate the research. The first dataset comes from economics, the second from agriculture (milk production), and the third from entomology (insect counts under irradiation). We illustrate that the TPE distribution can serve as a superior alternative to strong competitors, which confirms the flexibility of the proposed model in handling different types of datasets.

Modeling real-world data using flexible statistical distributions is crucial, especially when dealing with truncated, skewed, or bounded datasets. The truncated Perk (TPE) distribution offers such flexibility, which makes it suitable across various fields. In this study, we apply the TPE distribution to three datasets from economics, agriculture, and entomology. These include economic growth indicators with asymmetric patterns, biologically constrained milk yields from SINDI cows, and truncated data on insect counts under different irradiation treatments. In all cases, the TPE distribution provides a superior fit and realistic representation, supporting more accurate inference and decision-making. These applications underscore its adaptability and practical relevance in diverse scientific domains.

The remainder of this paper is structured as follows. Section 2 presents the construction of the new truncated model. Several fundamental characteristics of the proposed model are derived in Section 3, supplemented by figures and numerical tables as necessary. The parameters of the TPE distribution are estimated in Section 4 using seven classical estimation techniques. Section 5 presents a simulation study to illustrate the adaptability of the new model. Section 6 explains Bayesian estimation of the parameters. Section 7 demonstrates the application of the TPE distribution to three real datasets, highlighting its adaptability. Finally, Section 8 provides concluding notes, summarizing the major findings and prospective avenues for future research.

2. Truncated Perk Model

A novel truncated distribution, referred to as the truncated Perk (TPE) model, is proposed in this section. From the PDF and CDF of the Perk (PE) distribution in Equations (1) and (2), the PDF and CDF of the TPE distribution on the unit interval are obtained, respectively, as follows:

and

The probability density function (PDF) of the TPE distribution exhibits notable behavior near the boundaries of the unit interval. Specifically, depending on the parameter values, the density may approach zero, diverge, or remain bounded as x → 0 or x → 1. For instance, under certain configurations, the density tends to zero at both ends, while, in other cases, it may increase sharply near one of the boundaries, reflecting the distribution's flexibility in capturing edge behavior. This behavior can be analyzed formally by taking the limits of the PDF as x → 0 and x → 1, which provide insight into the tail characteristics and boundedness of the model.
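The truncation step can be sketched numerically. The block below assumes the baseline Perk CDF F(x) = 1 − (1 + α)/(1 + α e^{λx}), which is not confirmed by the text above, and builds the truncated CDF as F(x)/F(1) on (0, 1). The function names `tpe_pdf`, `tpe_cdf`, and `simpson` are illustrative only.

```python
import math

# ASSUMPTION: baseline Perk CDF F(x) = 1 - (1 + a) / (1 + a * exp(l * x)).
# Truncating to (0, 1) gives G(x) = F(x) / F(1) and g(x) = f(x) / F(1).

def tpe_cdf(x, alpha, lam):
    """CDF of the assumed Perk law truncated to the unit interval."""
    num = math.expm1(lam * x) * (1.0 + alpha * math.exp(lam))
    den = math.expm1(lam) * (1.0 + alpha * math.exp(lam * x))
    return num / den

def tpe_pdf(x, alpha, lam):
    """Density obtained by differentiating tpe_cdf with respect to x."""
    e = math.exp(lam * x)
    const = lam * (1.0 + alpha) * (1.0 + alpha * math.exp(lam)) / math.expm1(lam)
    return const * e / (1.0 + alpha * e) ** 2

def simpson(f, a, b, n=1000):
    """Composite Simpson rule with an even number n of sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

# Boundary values and a normalization check for one parameter choice.
alpha, lam = 0.5, 3.0
print("g(0) =", tpe_pdf(0.0, alpha, lam), " g(1) =", tpe_pdf(1.0, alpha, lam))
print("integral of g over (0, 1) =", simpson(lambda x: tpe_pdf(x, alpha, lam), 0.0, 1.0))
```

Evaluating the density at the two endpoints for several (α, λ) pairs is a quick way to explore the boundary behavior discussed above under this assumed form.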

It is worth noting that the behavior of the TPE distribution’s probability density function (PDF), when it is set as

can be analytically examined. Specifically, under the condition that the parameters satisfy

, the PDF approaches a finite constant value:

This limit indicates that the TPE model is right-bounded and exhibits well-defined behavior at the lower boundary of the support. Additionally, depending on the specific values of the parameters, the TPE distribution's PDF can exhibit different modalities. For instance, it may be unimodal or monotonically decreasing. This flexibility enhances the usefulness of the model in capturing diverse real-world phenomena.

Furthermore, the TPE distribution demonstrates diverse modality patterns depending on the parameters. It can be unimodal, exhibiting a single peak, or nearly flat, which allows it to adapt to various empirical data shapes. The location of the mode (if it exists) is sensitive to the interaction between the parameters, and in some instances numerical methods are required to determine it explicitly. This adaptability makes the TPE distribution particularly suitable for modeling skewed or truncated data within the unit interval.

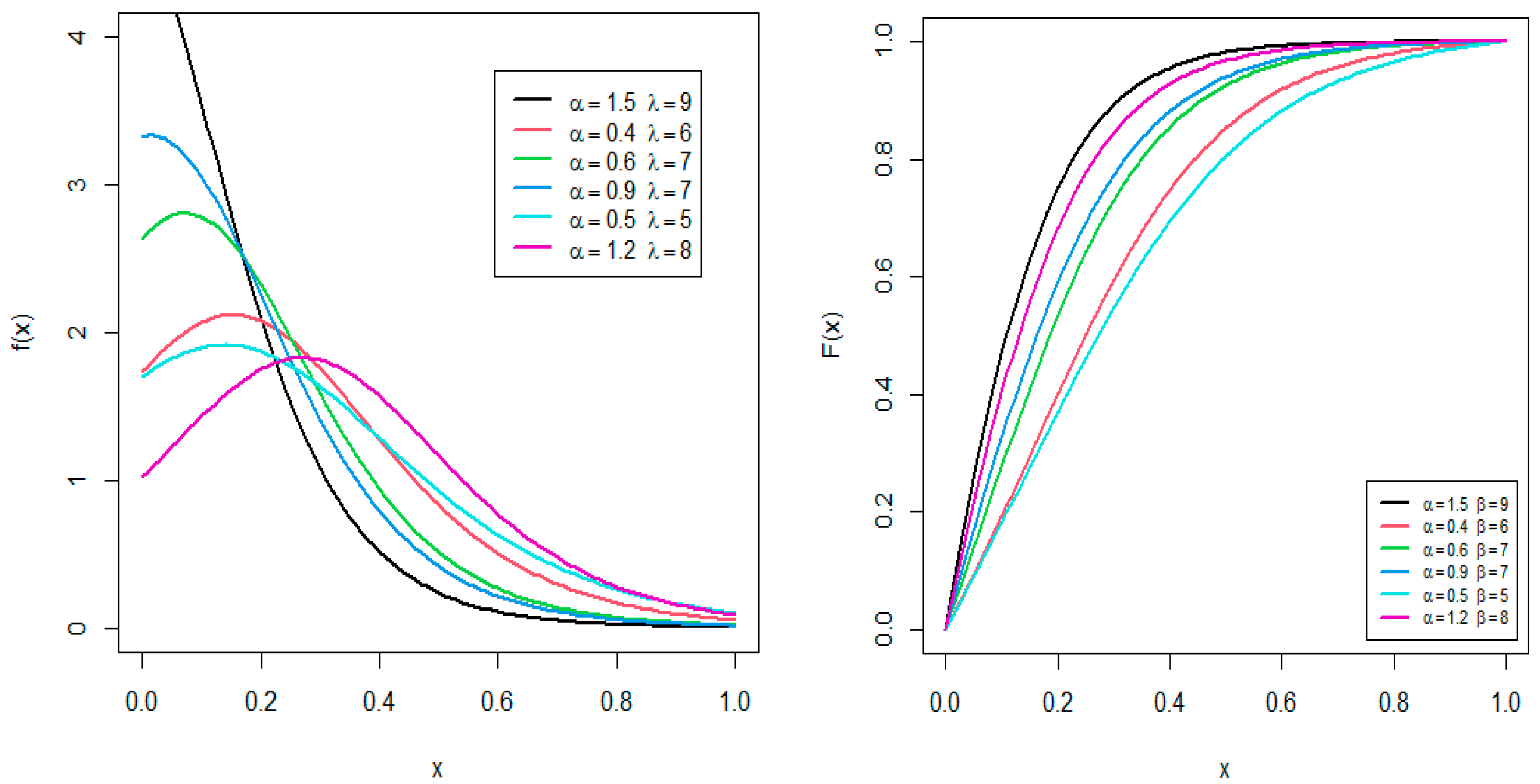

Figure 1 displays a variety of possible shapes of the PDF and CDF of the TPE distribution for selected parameter values. It can be seen that the PDF exhibits right-skewed and unimodal behavior, while the CDF is monotonically increasing.

According to Equations (3) and (4), the survival function (SF), hazard rate function (HRF), reversed hazard rate function, and cumulative hazard rate function are derived, respectively, as follows:

Figure 2 depicts the survival and hazard rate curves for several values of α and λ. The HRF of the TPE model is clearly increasing and can take a J shape.
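The four reliability functions follow directly from the PDF and CDF. The sketch below again assumes the Perk form F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1); all function names are hypothetical and the values are only meaningful under that assumption.

```python
import math

# ASSUMPTION: TPE = Perk law with CDF 1 - (1 + a) / (1 + a * exp(l * x)), truncated to (0, 1).

def tpe_cdf(x, alpha, lam):
    num = math.expm1(lam * x) * (1.0 + alpha * math.exp(lam))
    den = math.expm1(lam) * (1.0 + alpha * math.exp(lam * x))
    return num / den

def tpe_pdf(x, alpha, lam):
    e = math.exp(lam * x)
    const = lam * (1.0 + alpha) * (1.0 + alpha * math.exp(lam)) / math.expm1(lam)
    return const * e / (1.0 + alpha * e) ** 2

def survival(x, alpha, lam):
    """SF: S(x) = 1 - G(x)."""
    return 1.0 - tpe_cdf(x, alpha, lam)

def hazard(x, alpha, lam):
    """HRF: h(x) = g(x) / S(x), defined for 0 <= x < 1."""
    return tpe_pdf(x, alpha, lam) / survival(x, alpha, lam)

def reversed_hazard(x, alpha, lam):
    """Reversed HRF: r(x) = g(x) / G(x), defined for 0 < x <= 1."""
    return tpe_pdf(x, alpha, lam) / tpe_cdf(x, alpha, lam)

def cumulative_hazard(x, alpha, lam):
    """Cumulative HRF: H(x) = -log S(x)."""
    return -math.log(survival(x, alpha, lam))

# Tabulate the hazard rate on a small grid for one parameter choice.
for x in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(x, round(hazard(x, 0.5, 2.0), 4))
```

Because the support ends at 1, the survival function reaches zero there and the hazard rate grows without bound as x approaches 1, which is consistent with an increasing or J-shaped HRF.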

3. Basic Properties

This section studies the fundamental features of the TPE model, namely its moments, moment-generating function, coefficients of skewness and kurtosis, probability-weighted moments, Rényi entropy, Tsallis entropy, order statistics, and quantile function.

3.1. Moments

Let X be a random variable that follows the TPE distribution with the PDF in Equation (3); then the rth moment can be stated as follows:

Using Equation (3) in Equation (5), we obtain the following:

By using the generalized binomial expansions, we obtain the following:

Therefore, we obtain the following:

Let

, then

is provided as shown below.

where

is the incomplete gamma function.

In particular, the mean and the variance of X are given by:

Also, the skewness, the kurtosis, and the coefficient of variation of the TPE distribution can be written as follows:

Table 1 provides the first four moments, the variance, and the coefficients of skewness, kurtosis, and variation for different parameter values. The results reveal that, for a fixed value of λ, increasing α leads to a consistent increase in all moment-related measures, including the mean and variance. In contrast, the skewness and kurtosis coefficients decrease as α increases, indicating a tendency toward a more symmetric and less peaked distribution. These patterns suggest that the parameter α plays a significant role in shaping the distribution's tail behavior and overall shape. In addition, 3D plots of these measures are presented in Figure 3 for further clarification.

Figure 3 presents three-dimensional charts depicting the variance and mean of the TPE distribution.
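The moment-based measures of this subsection can be cross-checked by numerical integration instead of the series expansion. The sketch below assumes the Perk form F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1) and uses a plain Simpson rule; `raw_moment` and `summary` are illustrative names, and the output is not a reproduction of Table 1.

```python
import math

# ASSUMPTION: TPE density derived from the Perk CDF 1 - (1 + a) / (1 + a * exp(l * x)) on (0, 1).

def tpe_pdf(x, alpha, lam):
    e = math.exp(lam * x)
    const = lam * (1.0 + alpha) * (1.0 + alpha * math.exp(lam)) / math.expm1(lam)
    return const * e / (1.0 + alpha * e) ** 2

def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def raw_moment(r, alpha, lam):
    """r-th raw moment E[X^r], integrated over the unit interval."""
    return simpson(lambda x: x ** r * tpe_pdf(x, alpha, lam), 0.0, 1.0)

def summary(alpha, lam):
    """Mean, variance, skewness, kurtosis and coefficient of variation."""
    m1, m2, m3, m4 = (raw_moment(r, alpha, lam) for r in range(1, 5))
    var = m2 - m1 ** 2
    sd = math.sqrt(var)
    skew = (m3 - 3.0 * m1 * m2 + 2.0 * m1 ** 3) / sd ** 3
    kurt = (m4 - 4.0 * m1 * m3 + 6.0 * m1 ** 2 * m2 - 3.0 * m1 ** 4) / var ** 2
    return {"mean": m1, "variance": var, "skewness": skew,
            "kurtosis": kurt, "cv": sd / m1}

for alpha in (0.2, 0.5, 0.9):
    print(alpha, summary(alpha, 2.5))
```

Numerical integration is chosen here because it avoids truncating an infinite series and gives an independent check on any closed-form expression.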

3.2. Moment-Generating Function

The MGF of the TPE distribution is derived using the PDF of Equation (3) in Equation (7), as follows:

Then, by using the exponential series expansion, we obtain the following:

By using Equation (6), the MGF of the TPE distribution is given as follows:

3.3. Probability-Weighted Moment

The probability-weighted moment (PWM) approach is commonly used for estimating parameters of distributions that lack a straightforward inverse form. First introduced in [24], this method has gained significant recognition in hydrological studies. The PWM of the TPE distribution is obtained as follows:

By substituting Equations (3) and (4) into Equation (8), we obtain the following:

where

.

For any real number a, b > 0 and |ε| < 1, the generalized binomial series is defined as follows:

. Then, by using this series, we obtain the following:

and

By substituting in Equation (9), we obtain the following:

By using the incomplete Gamma function, the PWM is obtained as follows:

3.4. Renyi Entropy

Rényi entropy, proposed by Rényi [25], measures the variation in uncertainty of a distribution. The Rényi entropy is defined as follows:

For any real number a, b > 0 and |ε| < 1, the generalized binomial series is defined as follows:

Then, we obtain the following:

Therefore, the Rényi entropy is obtained as follows:

3.5. Tsallis Entropy

Tsallis [26] introduced an entropy, known as the Tsallis entropy, that generalizes standard statistical mechanics. It is defined as follows:

For any real number a, b > 0 and |ε| < 1, the generalized binomial series is defined as follows:

Therefore, Tsallis entropy is given as follows:
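Both entropies of Sections 3.4 and 3.5 depend on the density only through the integral of g(x)^δ, so they can be evaluated numerically as a check on the series forms. The sketch below uses the standard definitions (Rényi: log of the integral divided by 1 − δ; Tsallis: one minus the integral, divided by δ − 1) and assumes the Perk form truncated to (0, 1). The order symbol `delta` and the function names are illustrative.

```python
import math

# ASSUMPTION: TPE density derived from the Perk CDF 1 - (1 + a) / (1 + a * exp(l * x)) on (0, 1).

def tpe_pdf(x, alpha, lam):
    e = math.exp(lam * x)
    const = lam * (1.0 + alpha) * (1.0 + alpha * math.exp(lam)) / math.expm1(lam)
    return const * e / (1.0 + alpha * e) ** 2

def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def power_integral(delta, alpha, lam):
    """Integral of g(x)^delta over the unit interval."""
    return simpson(lambda x: tpe_pdf(x, alpha, lam) ** delta, 0.0, 1.0)

def renyi_entropy(delta, alpha, lam):
    """Renyi entropy of order delta (delta > 0, delta != 1)."""
    return math.log(power_integral(delta, alpha, lam)) / (1.0 - delta)

def tsallis_entropy(delta, alpha, lam):
    """Tsallis entropy of order delta (delta > 0, delta != 1)."""
    return (1.0 - power_integral(delta, alpha, lam)) / (delta - 1.0)

for delta in (0.5, 2.0, 3.0):
    print(delta, renyi_entropy(delta, 0.5, 3.0), tsallis_entropy(delta, 0.5, 3.0))
```

Since the support has length one, the uniform density gives zero entropy under both definitions, so values for a non-uniform TPE density are negative.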

3.6. Order Statistics

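Assume that $X_1, X_2, \ldots, X_n$ is a random sample of size $n$ drawn from the TPE distribution with PDF and CDF as defined in Equation (3) and Equation (4), respectively. The corresponding order statistics are denoted by $X_{(1)} \le X_{(2)} \le \cdots \le X_{(n)}$, where $X_{(1)} = \min(X_1, \ldots, X_n)$ and $X_{(n)} = \max(X_1, \ldots, X_n)$.

The PDF of the $k$th order statistic is obtained as follows: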
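As a reminder of the general result that this derivation starts from, the density of the kth order statistic of any continuous sample is shown below. The symbols g and G for the TPE PDF and CDF, and the index k, are notation assumed here for illustration.

```latex
% General density of the k-th order statistic, k = 1, ..., n, for 0 < x < 1.
g_{k:n}(x) = \frac{n!}{(k-1)!\,(n-k)!}\; g(x)\,\bigl[G(x)\bigr]^{k-1}\,\bigl[1-G(x)\bigr]^{n-k}.
```

Setting k = 1 and k = n gives the densities of the sample minimum and maximum, respectively.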

3.7. Quantile Function

A quantile function, referred to as the inverse distribution function, is a function that associates a probability value (ranging from 0 to 1) with the corresponding value in a dataset or distribution. It essentially offers a method to determine the threshold within which a specified percentage of data reside. The quantile function (QF) is crucial for actuaries in determining premium rates and assessing capital reserves, as it provides immediate information in the form of a loss distribution. The quantile function of the TPE model is derived from the inverse of the CDF as presented in Equation (4). The QF of the TPE distribution has a closed form as follows:

By simplifying, we obtain the following:

By taking the log function for both sides, we can obtain the QF as follows:

Specifically, by setting the probability level to 0.25, 0.5, and 0.75, we obtain the first, second (median), and third quartiles. Furthermore, based on the quantiles, Bowley's skewness and Moors' kurtosis are given, respectively, by the following relations:

and

These measures provide insight into the skewness and kurtosis of the TPE distribution and have the advantage of existing for all parameter values.

Table 2 displays the quantile values, Bowley's skewness, and Moors' kurtosis for a designated set of parameter values, encompassing the real and positive roots. Table 2 illustrates that, as the parameter values increase, the quantiles exhibit a downward trend, whereas Bowley's skewness and Moors' kurtosis demonstrate an upward trend.
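The inversion of the CDF and the two quantile-based shape measures can be sketched as follows. The closed form below is derived by solving G(x) = u under the assumed Perk CDF F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1), so it may differ in appearance from the expression in the text. Bowley's skewness and Moors' kurtosis use their standard quartile and octile definitions; the function names are illustrative.

```python
import math

# ASSUMPTION: TPE CDF G(x) = (e^{l x} - 1)(1 + a e^{l}) / ((e^{l} - 1)(1 + a e^{l x})) on (0, 1).

def tpe_cdf(x, alpha, lam):
    num = math.expm1(lam * x) * (1.0 + alpha * math.exp(lam))
    den = math.expm1(lam) * (1.0 + alpha * math.exp(lam * x))
    return num / den

def tpe_quantile(u, alpha, lam):
    """Solve G(x) = u for x; u must lie in [0, 1]."""
    a_term = 1.0 + alpha * math.exp(lam)
    b_term = math.expm1(lam)
    ratio = (a_term + u * b_term) / (a_term - alpha * u * b_term)
    return math.log(ratio) / lam

def bowley_skewness(alpha, lam):
    """(Q3 + Q1 - 2*Q2) / (Q3 - Q1), based on the quartiles."""
    q1, q2, q3 = (tpe_quantile(p, alpha, lam) for p in (0.25, 0.5, 0.75))
    return (q3 + q1 - 2.0 * q2) / (q3 - q1)

def moors_kurtosis(alpha, lam):
    """(E7 - E5 + E3 - E1) / (E6 - E2), based on the octiles E1..E7."""
    o = [tpe_quantile(k / 8.0, alpha, lam) for k in range(1, 8)]
    return (o[6] - o[4] + o[2] - o[0]) / (o[5] - o[1])

alpha, lam = 0.5, 3.0
print("quartiles:", [tpe_quantile(p, alpha, lam) for p in (0.25, 0.5, 0.75)])
print("Bowley:", bowley_skewness(alpha, lam), " Moors:", moors_kurtosis(alpha, lam))
```

A closed-form quantile function is also what makes inversion sampling straightforward: feeding uniform random numbers into `tpe_quantile` yields TPE variates.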

4. Estimation Methods

In this section, we discuss seven classical methods for estimating the parameters of the TPE model. Each technique optimizes an objective function, by either maximization or minimization, to identify the most suitable estimator.

4.1. Maximum Likelihood Method

The maximum likelihood (E1) technique is predominantly employed to estimate the parameters of statistical models because of its consistency and asymptotic efficiency. Let $x_1, x_2, \ldots, x_n$ be a random sample of size $n$ from the TPE distribution with the PDF in Equation (3); then the likelihood function of the random sample can be expressed as follows:

By taking the log function of both sides of Equation (10), we obtain the following:

By differentiating the log-likelihood function with respect to the parameters α and λ, we obtain the following:

By setting Equations (11) and (12) to zero and solving the resulting system numerically, the estimators of the unknown parameters α and λ can be determined.

The likelihood equations in (11) and (12) do not admit closed-form solutions, so numerical optimization methods are required to obtain the maximum likelihood estimates. The standard regularity conditions required for the asymptotic properties of the maximum likelihood estimators (MLEs) are summarized as follows: the parameter space is an open subset of $\mathbb{R}^2$; the probability density function is differentiable with respect to the parameters; the Fisher information matrix is positive definite; the true parameter value lies in the interior of the parameter space; and the log-likelihood function satisfies smoothness and integrability conditions that permit differentiation under the integral sign. These assumptions ensure the consistency, asymptotic normality, and efficiency of the MLEs. In our numerical experiments, the optimization procedure consistently converged to stable estimates, indicating that the likelihood surface is well-behaved over the considered parameter space.

Moreover, given that the regularity conditions for the MLEs are satisfied for the proposed TPE distribution, the maximum likelihood estimators enjoy desirable asymptotic properties. Specifically, they are consistent, asymptotically normal, and asymptotically efficient. These properties justify the use of the MLE for inference and support its application in the real data analysis provided in this study.
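Because the likelihood equations have no closed-form solution, the estimates must be found numerically. The sketch below is one possible approach, not the procedure used in this study: it assumes the Perk form F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1), writes the log-density in a numerically stable way, and minimizes the negative log-likelihood over (log α, log λ) with a simple derivative-free pattern search. All function names are hypothetical.

```python
import math
import random

# ASSUMPTION: TPE = Perk law with CDF 1 - (1 + a) / (1 + a * exp(l * x)), truncated to (0, 1).

def log1pexp(t):
    """Stable evaluation of log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def tpe_logpdf(x, alpha, lam):
    """Log-density of the assumed TPE form."""
    la = math.log(alpha)
    return (math.log(lam) + math.log1p(alpha) + log1pexp(la + lam)
            + lam * x - lam - math.log(-math.expm1(-lam))
            - 2.0 * log1pexp(la + lam * x))

def tpe_quantile(u, alpha, lam):
    """Inverse CDF, used here only to simulate test data."""
    a_term = 1.0 + alpha * math.exp(lam)
    b_term = math.expm1(lam)
    return math.log((a_term + u * b_term) / (a_term - alpha * u * b_term)) / lam

def neg_loglik(theta, data):
    """Negative log-likelihood in theta = (log alpha, log lambda)."""
    try:
        alpha, lam = math.exp(theta[0]), math.exp(theta[1])
        return -sum(tpe_logpdf(x, alpha, lam) for x in data)
    except (OverflowError, ValueError):
        return math.inf  # reject parameter values outside the numeric range

def pattern_search(obj, start, step=0.5, tol=1e-6, max_iter=10000):
    """Coordinate pattern search: try +/- step per coordinate, halve on failure."""
    point, best = list(start), obj(list(start))
    iteration = 0
    while step > tol and iteration < max_iter:
        improved = False
        for j in range(len(point)):
            for delta in (step, -step):
                trial = list(point)
                trial[j] += delta
                value = obj(trial)
                if value < best:
                    point, best, improved = trial, value, True
                    break
        if not improved:
            step /= 2.0
        iteration += 1
    return point, best

def fit_mle(data, start=(0.0, 0.0)):
    """Return ((alpha_hat, lambda_hat), minimized negative log-likelihood)."""
    theta, nll = pattern_search(lambda t: neg_loglik(t, data), start)
    return (math.exp(theta[0]), math.exp(theta[1])), nll

rng = random.Random(2024)
sample = [tpe_quantile(rng.random(), 0.5, 3.0) for _ in range(300)]
print(fit_mle(sample))
```

Optimizing on the log scale keeps both parameters positive without explicit constraints. A quasi-Newton routine from a numerical library would usually converge faster; the pattern search is used only to keep the sketch dependency-free.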

4.2. Least Square and Weighted Least Square Methods

The least square (E2) method is a statistical technique that determines the best fit to a dataset by minimizing the sum of squared differences between the data points and the fitted curve. Swain et al. [27] presented least square and weighted least square estimators for the parameters of beta distributions. We use the same technique for the TPE distribution. The E2 estimates of the parameters α and λ of the TPE model are derived by minimizing the following equation:

with respect to the parameters α and λ, where $G(x_{(i)})$ represents the distribution function of the ordered random variables $x_{(1)} \le x_{(2)} \le \cdots \le x_{(n)}$ and $\{x_1, x_2, \ldots, x_n\}$ is a random sample of size $n$ from the CDF in Equation (4). Hence, in this case, the E2 estimates of α and λ can be found by minimizing the next formula with respect to α and λ:

The weighted least square (E3) estimates of the parameters α and λ are obtained by minimizing the following equation:

with respect to α and λ, where the weights $w_i$ are equal to

Hence, in this case, the E3 estimates of α and λ can be determined by minimizing the next formula with respect to α and λ, as follows:
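For reference, the usual forms of the two objective functions, following Swain et al., are sketched below. The notation G for the TPE CDF and the names LS and WLS are assumptions made for illustration.

```latex
% Least squares (E2) and weighted least squares (E3) objective functions.
\mathrm{LS}(\alpha,\lambda) = \sum_{i=1}^{n}\left[G\!\left(x_{(i)};\alpha,\lambda\right)-\frac{i}{n+1}\right]^{2},
\qquad
\mathrm{WLS}(\alpha,\lambda) = \sum_{i=1}^{n} w_i\left[G\!\left(x_{(i)};\alpha,\lambda\right)-\frac{i}{n+1}\right]^{2},
\qquad
w_i = \frac{(n+1)^{2}(n+2)}{i\,(n-i+1)} .
```

The value i/(n+1) is the expected value of the CDF evaluated at the ith order statistic, and w_i is the reciprocal of its variance, so WLS gives more weight to the better-determined extreme order statistics.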

4.3. Anderson Darling and Right-Tail Anderson Darling Methods

The Anderson–Darling (E4) method, introduced by Anderson and Darling [28], is another type of minimum distance estimator. The E4 estimates of the parameters α and λ are obtained by minimizing the following equation:

These estimates can also be determined by solving the following equations:

where the two derivative terms are the first partial derivatives of the CDF of the TPE model with respect to α and λ, respectively.

Similarly, the right-tail Anderson–Darling (E5) estimates of the parameters α and λ are obtained by minimizing the following equation:

These estimates can be obtained by solving the following equations:

4.4. Cramér-Von-Mises Method

The Cramér–von Mises (E6) estimation is based on the discrepancy between the estimated CDF and the empirical distribution function. According to the empirical evidence in MacDonald [29], the bias of these estimators is smaller than that of other minimum distance estimators. The E6 estimates of α and λ are determined by minimizing the following equation:

The next nonlinear equations can also be utilized to calculate these estimates.
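The three minimum distance criteria of Sections 4.3 and 4.4 are usually written in the following standard forms. The symbols A, R, C, and G (the TPE CDF) are notation assumed here; the ordered sample is x_{(1)} \le \dots \le x_{(n)}.

```latex
% Anderson-Darling (E4), right-tail Anderson-Darling (E5), Cramer-von Mises (E6).
A(\alpha,\lambda) = -n-\frac{1}{n}\sum_{i=1}^{n}(2i-1)\left[\log G\!\left(x_{(i)}\right)+\log\!\left(1-G\!\left(x_{(n+1-i)}\right)\right)\right],
\\[4pt]
R(\alpha,\lambda) = \frac{n}{2}-2\sum_{i=1}^{n}G\!\left(x_{(i)}\right)-\frac{1}{n}\sum_{i=1}^{n}(2i-1)\log\!\left(1-G\!\left(x_{(n+1-i)}\right)\right),
\\[4pt]
C(\alpha,\lambda) = \frac{1}{12n}+\sum_{i=1}^{n}\left[G\!\left(x_{(i)}\right)-\frac{2i-1}{2n}\right]^{2}.
```

Differentiating each criterion with respect to α and λ and equating to zero gives the corresponding nonlinear estimating equations.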

4.5. Percentile Method

A closed-form distribution function allows the parameters of a distribution to be estimated by fitting a linear representation to the percentile points. This approach was proposed for the parameters of the Weibull distribution by Kao [30] and Kao [31]. Given a random sample $x_1, x_2, \ldots, x_n$ of size $n$ from the distribution function, where $x_{(i)}$ denotes the ordered sample, the percentile (E7) estimates of the parameters α and λ can be derived by minimizing the next formula:

where $p_i = i/(n+1)$ is an unbiased estimator of $G(x_{(i)})$. Hence, the E7 estimates can be derived by differentiating Equation (13) with respect to α and λ, respectively. By equating the resulting equations to zero, we obtain the estimated parameter values.

5. Simulation Analysis

The performance of the estimators is assessed for different sample sizes n. A numerical analysis is conducted to evaluate the estimators of the TPE model in terms of relative bias and mean squared error (MSE). The simulation is carried out in R, version 4.5.0. The procedure, which draws random samples from the TPE distribution by the inversion method, is as follows:

Random samples of sizes n = 50, 150, 350, and 500 are generated from the TPE model using the inversion approach;

The parameter values considered are α = 0.2, 0.3, 0.5, 0.7, 0.8, and 0.9 and λ = 2.5, 3.5, 4.5, 5, 6, and 6.5. The TPE model estimators are evaluated for each combination of parameter values and sample sizes;

Looking at the results in Table 3, Table 4, Table 5, Table 6, Table 7 and Table 8, we note that the relative biases and mean squared errors decrease as the sample size increases. The simulation outcomes report the rank of each estimator, shown as a superscript in each row, along with the total sum of the ranks. The results in Table 9 show the performance order of all estimators, both individually and collectively. Table 9 demonstrates that the maximum likelihood (E1) method, with a total score of 52, outperforms all other methods for the TPE distribution. The weighted least square (E3) method, with a total score of 77.5, may be regarded as a competitor to the E1 method.
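The simulation loop described above can be sketched as follows. The study itself uses R; Python is used here only for illustration. The quantile function assumes the Perk CDF F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1), and, to keep the example short, the sample median stands in for a real estimator, with the true median as its target. Any of the seven estimators could be passed in place of `statistics.median` once implemented. All names are hypothetical.

```python
import math
import random
import statistics

# ASSUMPTION: quantile function of the Perk law truncated to (0, 1).
def tpe_quantile(u, alpha, lam):
    a_term = 1.0 + alpha * math.exp(lam)
    b_term = math.expm1(lam)
    return math.log((a_term + u * b_term) / (a_term - alpha * u * b_term)) / lam

def draw_sample(n, alpha, lam, rng):
    """Inversion method: push uniform draws through the quantile function."""
    return [tpe_quantile(rng.random(), alpha, lam) for _ in range(n)]

def simulate(n, reps, alpha, lam, estimator, rng):
    """Apply `estimator` to `reps` independent samples of size n."""
    return [estimator(draw_sample(n, alpha, lam, rng)) for _ in range(reps)]

def relative_bias(estimates, true_value):
    """Mean estimation error divided by the true value."""
    return (sum(estimates) / len(estimates) - true_value) / true_value

def mse(estimates, true_value):
    """Mean squared error of the replicated estimates."""
    return sum((e - true_value) ** 2 for e in estimates) / len(estimates)

rng = random.Random(12345)
alpha, lam = 0.5, 3.5
target = tpe_quantile(0.5, alpha, lam)  # true median, the stand-in estimand
for n in (50, 150, 350, 500):
    est = simulate(n, 200, alpha, lam, statistics.median, rng)
    print(n, relative_bias(est, target), mse(est, target))
```

With a consistent estimator, both summaries should shrink toward zero as n grows, which is the pattern reported for the estimators in the tables.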

6. Bayesian Estimation

For Bayesian estimation of the TPE distribution, it is assumed that α and λ have the following independent gamma prior distributions:

The hyperparameters $a_i$ and $b_i$, for i = 1, 2, are selected to reflect prior beliefs about the unknown parameters and are assumed to be known. They were chosen using empirical Bayes logic: prior means were set close to the MLEs, and variances were selected to reflect moderate prior uncertainty. Combining the likelihood function with the prior distribution gives a structured Bayesian framework for parameter estimation and leads to the posterior distribution of the parameters α and λ, which is denoted as:

To make Bayesian statistical inference more practical and meaningful, it is necessary to consider symmetric and asymmetric loss functions. As noted in [32], a loss function is a real-valued function defined for all admissible parameter values and estimates. An asymmetric loss function enhances the flexibility and applicability of Bayesian inference in situations where the consequences of overestimation and underestimation differ. These functions ensure that the inference process remains robust and relevant across various parameter estimators. Consider the squared error loss (SEL) function, which is defined as follows:

where

This loss function is symmetric, meaning that it equally penalizes both overestimation and underestimation.

For any function of α and λ, the Bayes estimate under the SEL function is expressed as follows:

Note that the ratio of integrals in Equation (15) cannot be obtained in closed form. Therefore, the MCMC method is employed to generate samples from the joint posterior density in Equation (14). Specifically, the MCMC technique is implemented using the Metropolis–Hastings (M−H) within Gibbs sampling process. The conditional posterior distributions are as follows:

The Bayesian estimation of the parameters α and λ for the TPE distribution is analytically intractable because of the model's complexity, and closed-form solutions are not available. To overcome this challenge, we use the MCMC method, a robust computational approach for approximating posterior distributions when analytical solutions are unavailable; see Equation (14). It is evident from Equations (16) and (17) that the conditional posterior distributions of α and λ do not correspond to any standard form. As a result, Gibbs sampling with M−H steps is an appropriate choice for drawing samples within the MCMC framework. The MCMC method generates a sequence of samples that approximate the posterior distribution of the model parameters by simulating a Markov chain that converges to the target distribution. These samples can be used to estimate posterior statistics, such as the posterior mean, which serves as the Bayes estimate of α and λ under the SEL function.
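A Metropolis–Hastings within Gibbs sampler of the kind described above can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in this study: the likelihood assumes the Perk form F(x) = 1 − (1 + α)/(1 + α e^{λx}) truncated to (0, 1), the gamma hyperparameters (all set to 1), proposal step sizes, chain length, and burn-in are arbitrary choices, and the function names are hypothetical. The data are the 21 normalized values of the third dataset in Section 7.

```python
import math
import random

# ASSUMPTION: TPE log-density from the Perk CDF 1 - (1 + a) / (1 + a * exp(l * x)) on (0, 1).
def log1pexp(t):
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def tpe_logpdf(x, alpha, lam):
    la = math.log(alpha)
    return (math.log(lam) + math.log1p(alpha) + log1pexp(la + lam)
            + lam * x - lam - math.log(-math.expm1(-lam))
            - 2.0 * log1pexp(la + lam * x))

def log_posterior(alpha, lam, data, a1, b1, a2, b2):
    """Log-likelihood plus independent gamma(a_i, b_i) log-priors, up to a constant."""
    if alpha <= 0.0 or lam <= 0.0:
        return -math.inf
    loglik = sum(tpe_logpdf(x, alpha, lam) for x in data)
    logprior = ((a1 - 1.0) * math.log(alpha) - b1 * alpha
                + (a2 - 1.0) * math.log(lam) - b2 * lam)
    return loglik + logprior

def mh_within_gibbs(data, n_iter=3000, burn=1000, start=(1.0, 1.0),
                    step=(0.3, 0.5), hyper=(1.0, 1.0, 1.0, 1.0), seed=0):
    """Update alpha and lambda in turn with Gaussian random-walk M-H steps."""
    rng = random.Random(seed)
    theta = list(start)
    lp = log_posterior(theta[0], theta[1], data, *hyper)
    draws = []
    for it in range(n_iter):
        for j in (0, 1):
            prop = list(theta)
            prop[j] = rng.gauss(theta[j], step[j])
            lp_prop = log_posterior(prop[0], prop[1], data, *hyper)
            # Symmetric proposal: accept with probability min(1, posterior ratio).
            if math.log(1.0 - rng.random()) < lp_prop - lp:
                theta, lp = prop, lp_prop
        if it >= burn:
            draws.append((theta[0], theta[1]))
    return draws

def posterior_means(draws):
    """Bayes estimates under squared error loss: the posterior means."""
    n = len(draws)
    return sum(d[0] for d in draws) / n, sum(d[1] for d in draws) / n

data3 = [0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211, 0.502924,
         0.532164, 0.497076, 0.380117, 0.350877, 0.368421, 0.871345, 0.847953,
         0.894737, 0.625731, 0.602339, 0.625731, 0.473684, 0.432749, 0.467836]
print(posterior_means(mh_within_gibbs(data3)))
```

In practice, the step sizes would be tuned to reach a reasonable acceptance rate, and trace plots or histograms of the retained draws would be inspected to judge convergence.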

In this study, a comprehensive comparison between the MLE and Bayesian estimation approaches was carried out to evaluate the performance and flexibility of the proposed model. The MLE method, known for its asymptotic efficiency and computational simplicity, provides point estimates based solely on observed data. In contrast, Bayesian estimation incorporates prior beliefs and yields full posterior distributions, offering a more informative framework, particularly in the presence of limited or uncertain data.

The significance of this comparison lies in its ability to reveal the relative advantages and practical implications of each estimation technique. While the MLE method may perform well in large samples, Bayesian methods can offer superior inference in complex models or under prior knowledge constraints. By analyzing and contrasting the estimates, confidence intervals, and computational aspects of both methods, we provide a more holistic understanding of the model's behavior in Table 10, Table 11, Table 12 and Table 13.

A rigorous comparison between the MLE and Bayesian estimation methods was performed to evaluate the effectiveness of each approach in estimating the parameters of the proposed distribution. The analysis revealed that the MLE method exhibited superior performance compared to the Bayesian approach, particularly in terms of estimation accuracy and sensitivity to sample size. Specifically, the MLE yielded parameter estimates with lower mean squared errors (MSEs), and its precision improved significantly as the sample size increased, demonstrating its well-known asymptotic properties.

Although Bayesian estimation provides a flexible framework that incorporates prior knowledge and generates full posterior distributions, its accuracy was comparatively limited, especially when non-informative priors were used or when the sample size was moderately large. This limitation was further illustrated through histograms of posterior samples obtained via Markov chain Monte Carlo (MCMC) simulations. These graphical representations revealed wider posterior spreads and higher uncertainty around the parameter estimates, particularly in smaller samples.

The comparative results emphasize the practical advantage of MLEs under the examined conditions, suggesting their suitability for applications that involve large datasets where minimizing estimation error is critical. Nonetheless, Bayesian estimation remains valuable in contexts where prior information is available or when dealing with complex hierarchical models.

To support the Bayesian analysis, posterior samples were generated using Markov chain Monte Carlo (MCMC) techniques. The resulting histograms of these posterior samples were examined to assess their convergence and the shape of the posterior distributions. As shown in Figure 4, these summaries serve not only as diagnostic tools but also as intuitive visual representations of parameter uncertainty, reinforcing the credibility of the Bayesian inference results.

7. Applications

This section examines the versatility and importance of the TPE model by analyzing three real-world datasets. Data plots illustrate the model's applicability and show how closely the TPE model follows the data compared with competing models. The practical significance of the model is demonstrated on economic growth data, the total milk production of a cohort of cows, and counts of adult insect progeny under irradiation treatments, for which the TPE model outperforms competing distributions. The suggested TPE model is expected to be applicable across multiple disciplines, including medical sciences, engineering, economics, and reliability studies. A detailed description of the datasets is presented in the following points:

The first dataset consists of the trade share variable from the well-known "Determinants of Economic Growth Data," which contain growth rates of 61 different nations along with characteristics potentially associated with growth. The data are accessible online as a supplement to [33]. This analysis utilizes empirical data to underscore the practical significance and promise of the TPE distribution in modeling complicated events that involve inflation and unemployment rates, and hence offers valuable insights for many sectors that are dependent on forecasting future labor market fluctuations. The trade share values are as follows:

0.1405, 0.1566, 0.1577, 0.1604, 0.1608, 0.2215, 0.2994, 0.3131, 0.3246, 0.3247, 0.3295, 0.3300, 0.3379, 0.3397, 0.3523, 0.3589, 0.3933, 0.4176, 0.4258, 0.4356, 0.4421, 0.4444, 0.4505, 0.4558, 0.4683, 0.4733, 0.4846, 0.4889, 0.5096, 0.5177, 0.5278, 0.5347, 0.5433, 0.5442, 0.5508, 0.5527, 0.5606, 0.5607, 0.5671, 0.5753, 0.5828, 0.6030, 0.6050, 0.6136, 0.6261, 0.6395, 0.6469, 0.6512, 0.6816, 0.6994, 0.7048, 0.7292, 0.7430, 0.7455, 0.7798, 0.7984, 0.8147, 0.8230, 0.8302, 0.8342, and 0.9794;

The second dataset originates from the comprehensive investigation conducted by [34] and later analyzed by [35]. This dataset comprises the total milk production from the first calving of 107 SINDI breed cows. These data illustrate how well the TPE model describes the milk output of this breed of cows. The dataset is as follows:

0.4365, 0.4260, 0.5140, 0.6907, 0.7471, 0.2605, 0.6196, 0.8781, 0.4990, 0.6058, 0.6891, 0.5770, 0.5394, 0.1479, 0.2356, 0.6012, 0.1525, 0.5483, 0.6927, 0.7261, 0.3323, 0.0671, 0.2361, 0.4800, 0.5707, 0.7131, 0.5853, 0.6768, 0.5350, 0.4151, 0.6789, 0.4576, 0.3259, 0.2303, 0.7687, 0.4371, 0.3383, 0.6114, 0.3480, 0.4564, 0.7804, 0.3406, 0.4823, 0.5912, 0.5744, 0.5481, 0.1131, 0.7290, 0.0168, 0.5529, 0.4530, 0.3891, 0.4752, 0.3134, 0.3175, 0.1167, 0.6750, 0.5113, 0.5447, 0.4143, 0.5627, 0.5150, 0.0776, 0.3945, 0.4553, 0.4470, 0.5285, 0.5232, 0.6465, 0.0650, 0.8492, 0.8147, 0.3627, 0.3906, 0.4438, 0.4612, 0.3188, 0.2160, 0.6707, 0.6220, 0.5629, 0.4675, 0.6844, 0.3413, 0.4332, 0.0854, 0.3821, 0.4694, 0.3635, 0.4111, 0.5349, 0.3751, 0.1546, 0.4517, 0.2681, 0.4049, 0.5553, 0.5878, 0.4741, 0.3598, 0.7629, 0.5941, 0.6174, 0.6860, 0.0609, 0.6488, and 0.2747;

The third dataset is derived from the research introduced by [36], which includes the number of F1 adult progeny of Stegobium paniceum L. produced in unirradiated peppermint packets and in packets subjected to gamma irradiation (6, 8, 10 kGy) or microwave exposure (1–3 min). The results of the choice packet test were as follows: 165, 170, 168, 114, 120, 117, 86, 91, 85, 65, 60, 63, 149, 145, 153, 107, 103, 107, 81, 74, 80. Prior to use, these data must be normalized to the interval [0, 1] through the transformation y = x/171 (each count divided by one more than the sample maximum, as implied by the values listed next); hence, the transformed data are as follows:

0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211, 0.502924, 0.532164, 0.497076, 0.380117, 0.350877, 0.368421, 0.871345, 0.847953, 0.894737, 0.625731, 0.602339, 0.625731, 0.473684, 0.432749, and 0.467836.
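The transformed values listed above are consistent with dividing each raw count by 171, one more than the largest observation. The following minimal Python sketch checks this; the divisor is inferred from the printed values rather than quoted from the source, and the variable names are illustrative only (the analysis in the paper itself was carried out in R).

```python
# Raw progeny counts from the choice packet test, as listed in the text.
counts = [165, 170, 168, 114, 120, 117, 86, 91, 85, 65, 60, 63,
          149, 145, 153, 107, 103, 107, 81, 74, 80]

# Transformed values as printed in the text (six decimal places).
reported = [0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211,
            0.502924, 0.532164, 0.497076, 0.380117, 0.350877, 0.368421,
            0.871345, 0.847953, 0.894737, 0.625731, 0.602339, 0.625731,
            0.473684, 0.432749, 0.467836]

# Assumption: the divisor is max(counts) + 1, inferred from the data.
divisor = max(counts) + 1
scaled = [c / divisor for c in counts]

# Check that every scaled value agrees with the printed one up to rounding.
matches = all(abs(s - r) < 1e-6 for s, r in zip(scaled, reported))
print(divisor, matches)
```

Dividing by one more than the maximum keeps every transformed value strictly below 1, which suits a distribution supported on the unit interval.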

The three datasets are summarized in Table 14.

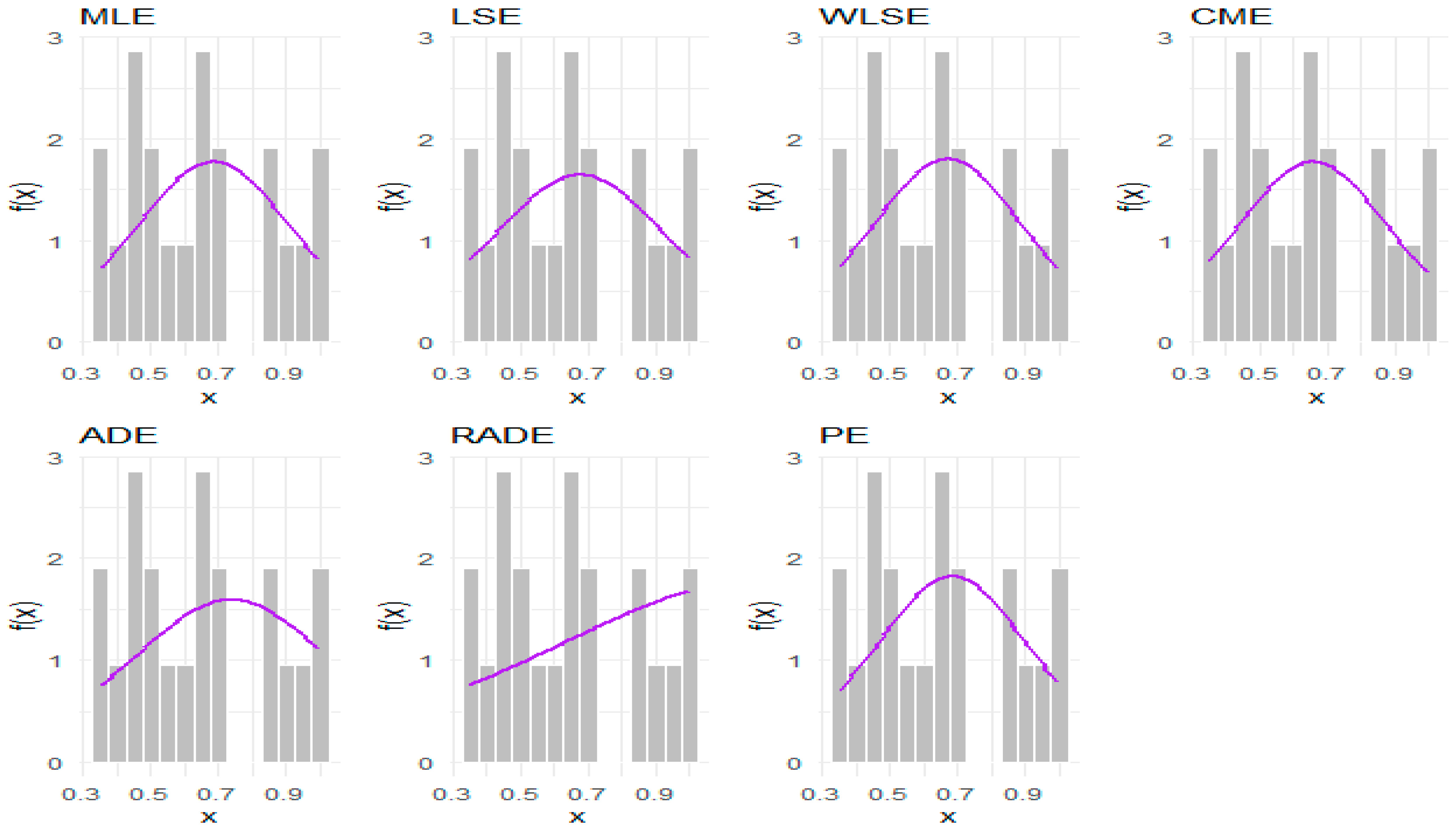

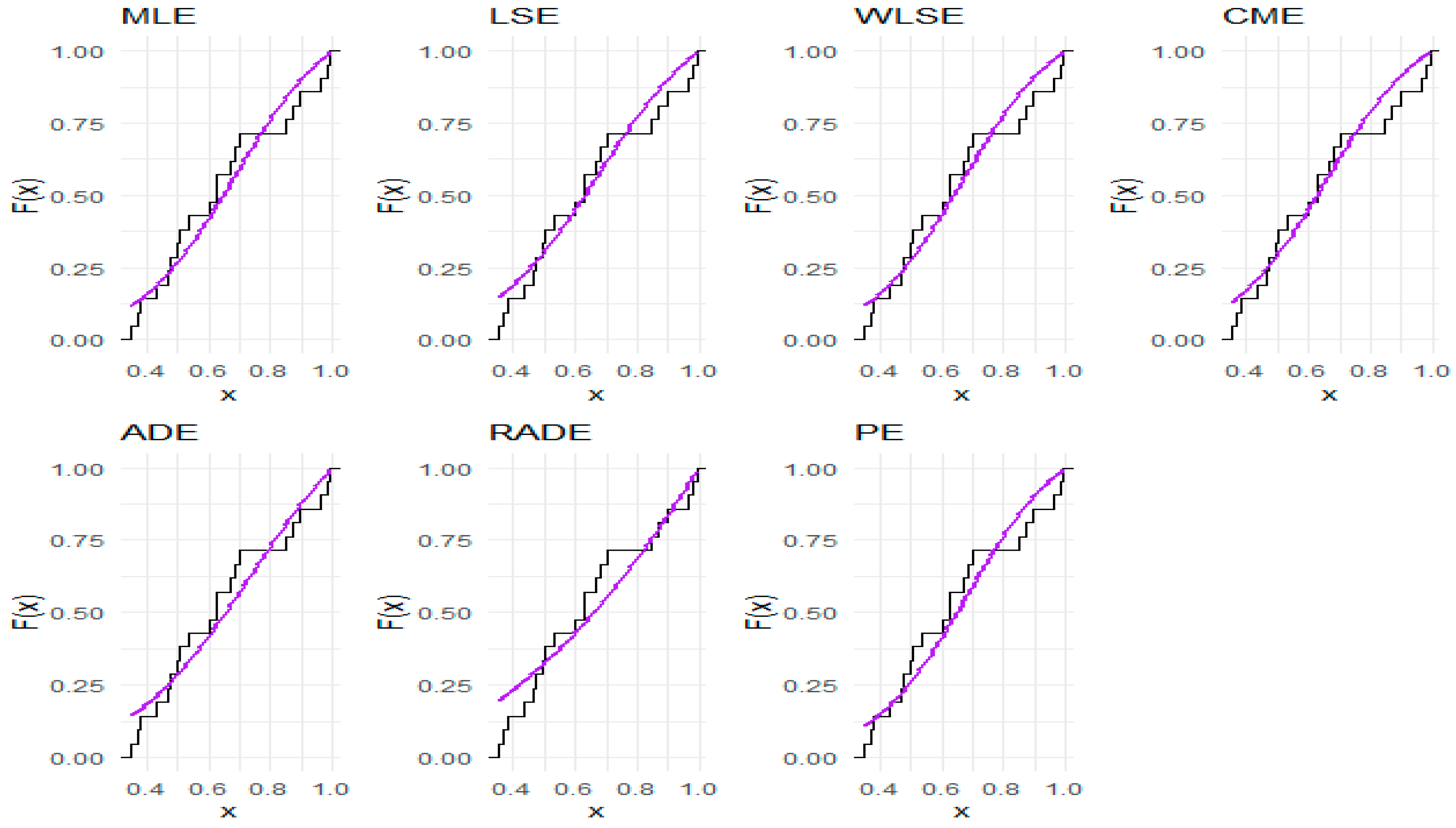

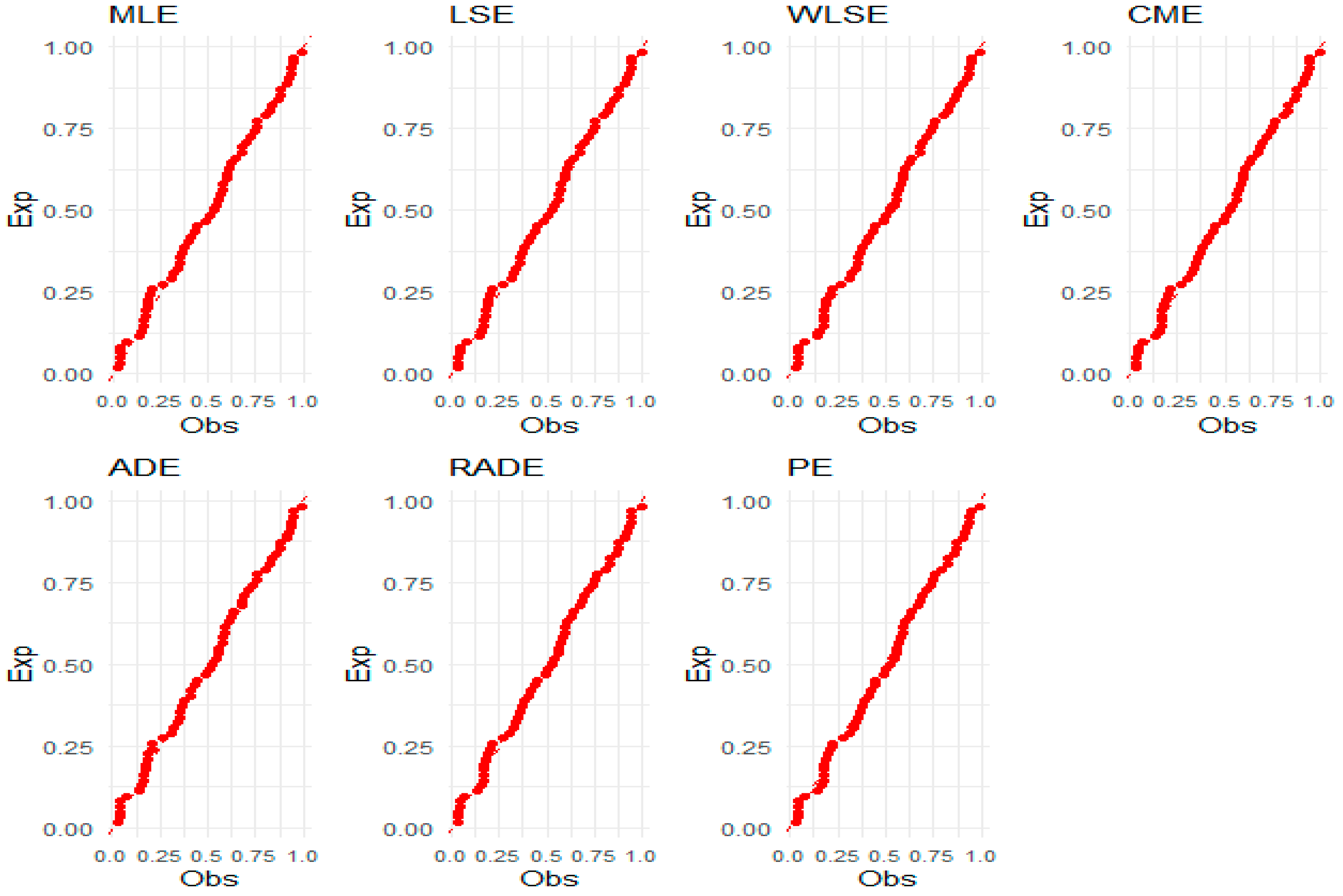

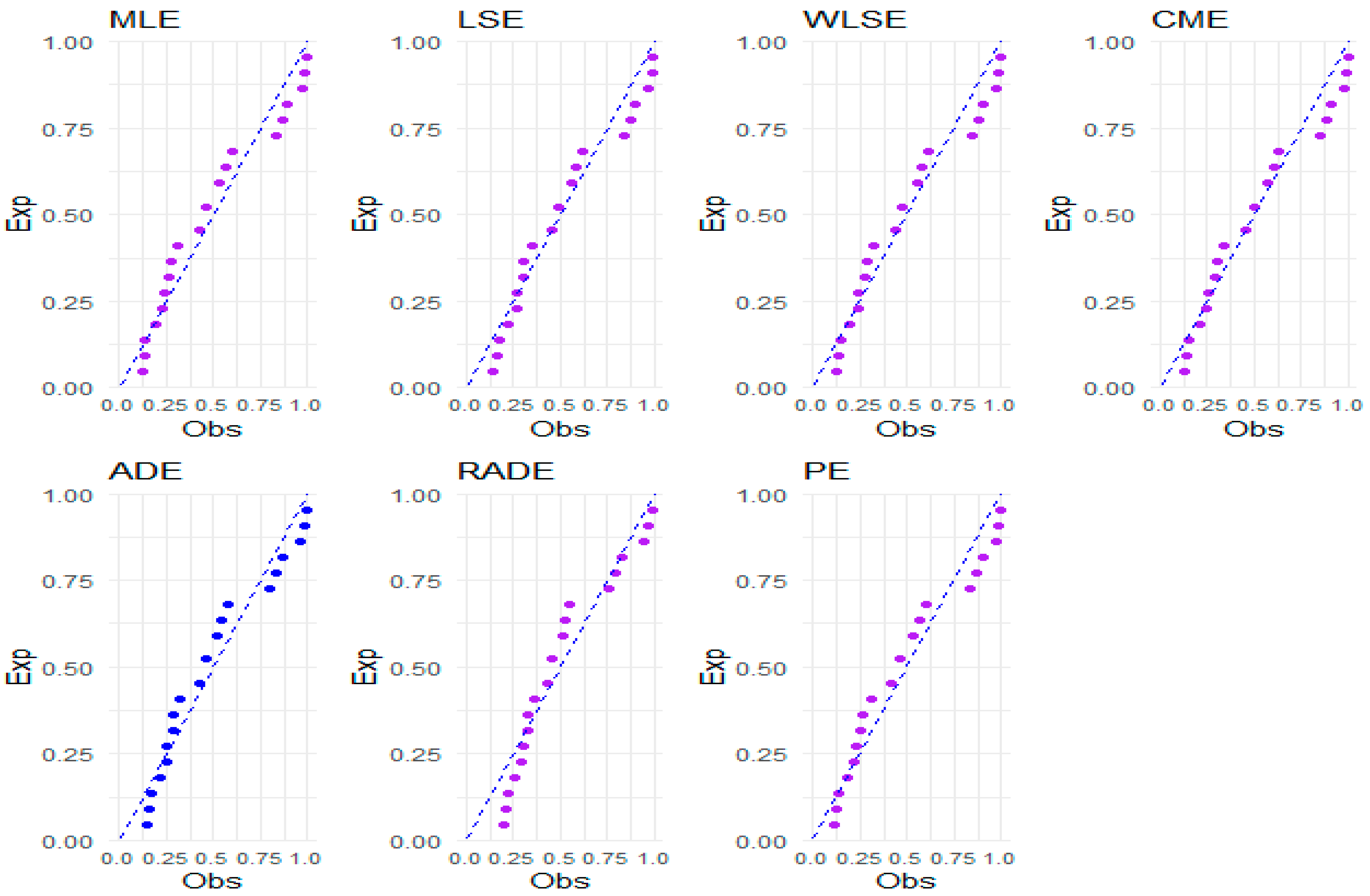

Figure 5, Figure 6, and Figure 7 show graphical summaries of the first, second, and third datasets, respectively. These comprise histograms, kernel density estimates, violin plots, box plots, total time on test (TTT) plots, and quantile–quantile (QQ) plots. These figures suggest that all of the datasets have an increasing HRF. Also, the first and third datasets are slightly right-skewed, while the second dataset is left-skewed. The first and second datasets are almost symmetrical with no visible outliers, while the third dataset exhibits a non-normal distribution with no visible outliers. These characteristics can be accommodated by the TPE distribution, as developed in the theoretical results.

Key statistical aspects are indicated by colors and symbols: in the violin plot, the green area indicates density, and the white dot is the median; in the box plot, the orange box represents the middle 50% of the data, the red dot is the mean, and the blue dots are data points. Pink and yellow are used in the TTT and QQ plots to distinguish empirical from theoretical behavior.
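The TTT plots mentioned above rest on the empirical scaled total time on test transform. The sketch below, written in Python purely for illustration, computes its points for the third (transformed) dataset using the standard definition T(i/n) = [x(1) + ... + x(i) + (n − i) x(i)] / (x(1) + ... + x(n)), where x(1) ≤ ... ≤ x(n) are the ordered observations. The function name `scaled_ttt` is hypothetical, and this is not the authors' plotting code.

```python
def scaled_ttt(data):
    """Return the points (i/n, T_i) of the empirical scaled TTT transform."""
    x = sorted(data)
    n = len(x)
    total = sum(x)
    points = [(0.0, 0.0)]
    running = 0.0
    for i, xi in enumerate(x, start=1):
        running += xi
        # Total time on test up to the i-th order statistic, scaled by the sum.
        points.append((i / n, (running + (n - i) * xi) / total))
    return points

# Third dataset after normalization, as listed in the text.
data3 = [0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211,
         0.502924, 0.532164, 0.497076, 0.380117, 0.350877, 0.368421,
         0.871345, 0.847953, 0.894737, 0.625731, 0.602339, 0.625731,
         0.473684, 0.432749, 0.467836]

pts = scaled_ttt(data3)
# Count interior points lying above the 45-degree line.
above = sum(1 for u, t in pts[1:-1] if t > u)
print(f"{above} of {len(pts) - 2} interior points lie above the diagonal")
```

A curve that is concave and lies above the diagonal is conventionally read as evidence of an increasing hazard rate, which is the pattern the text reports for all three datasets.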

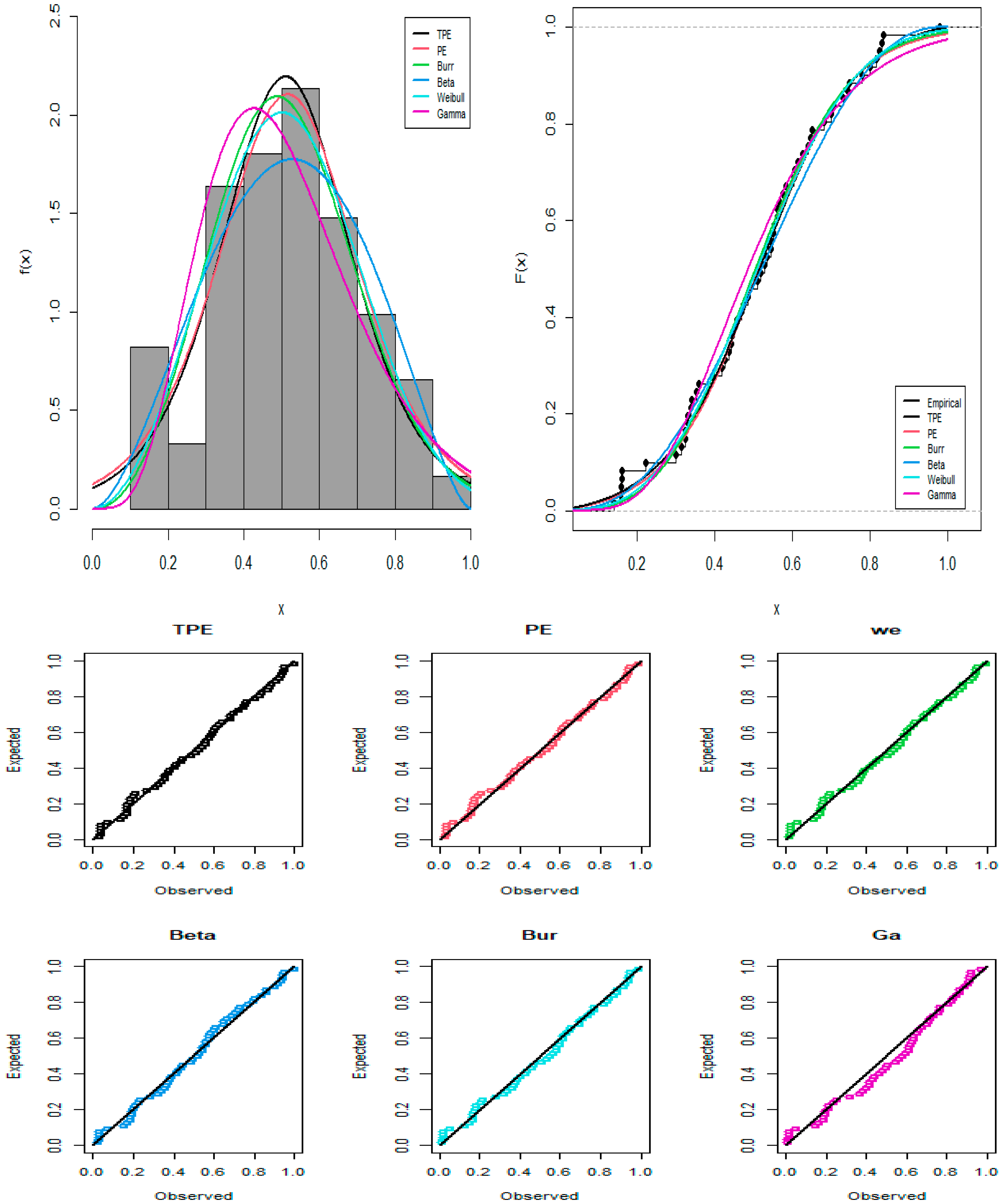

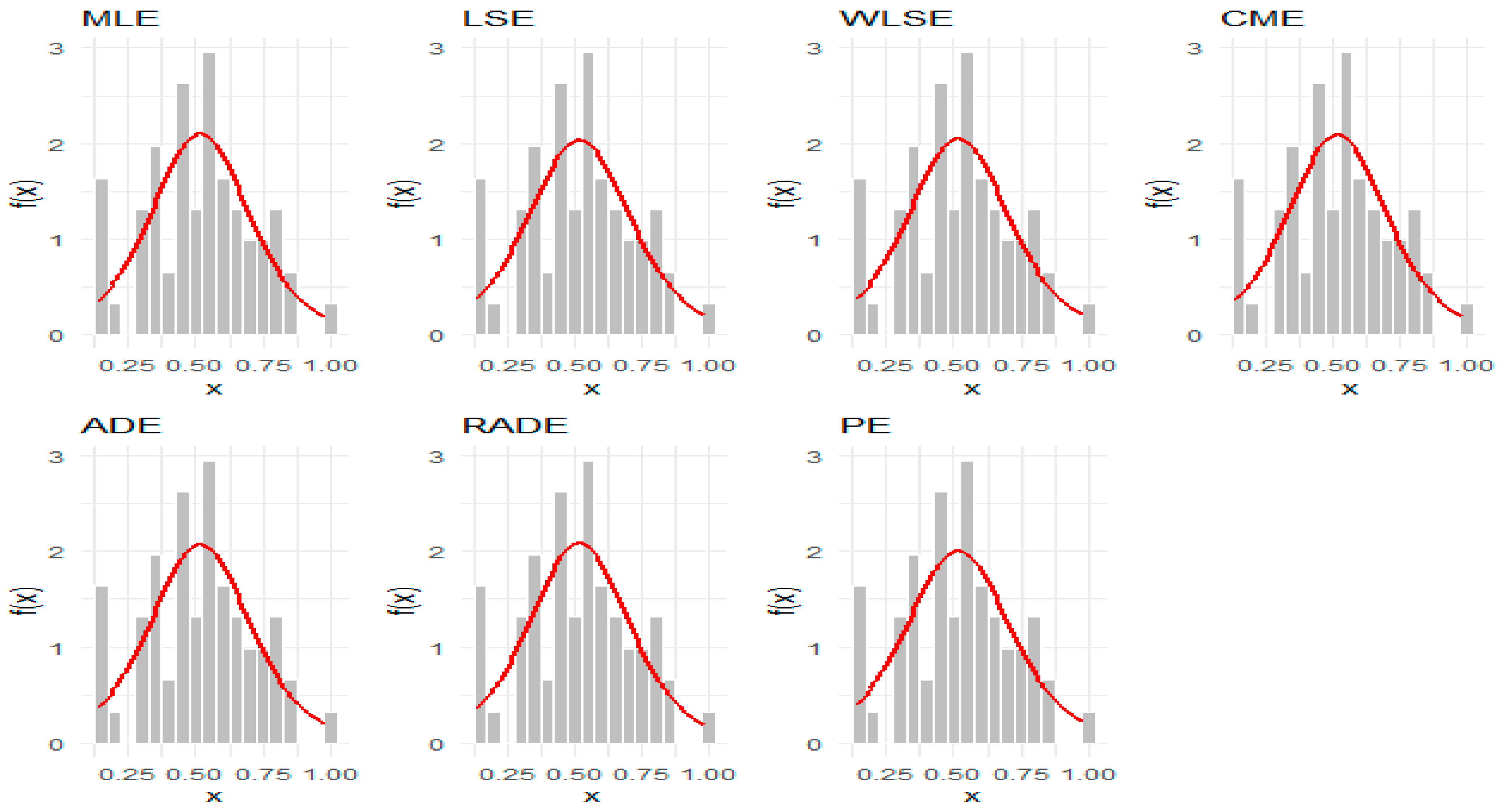

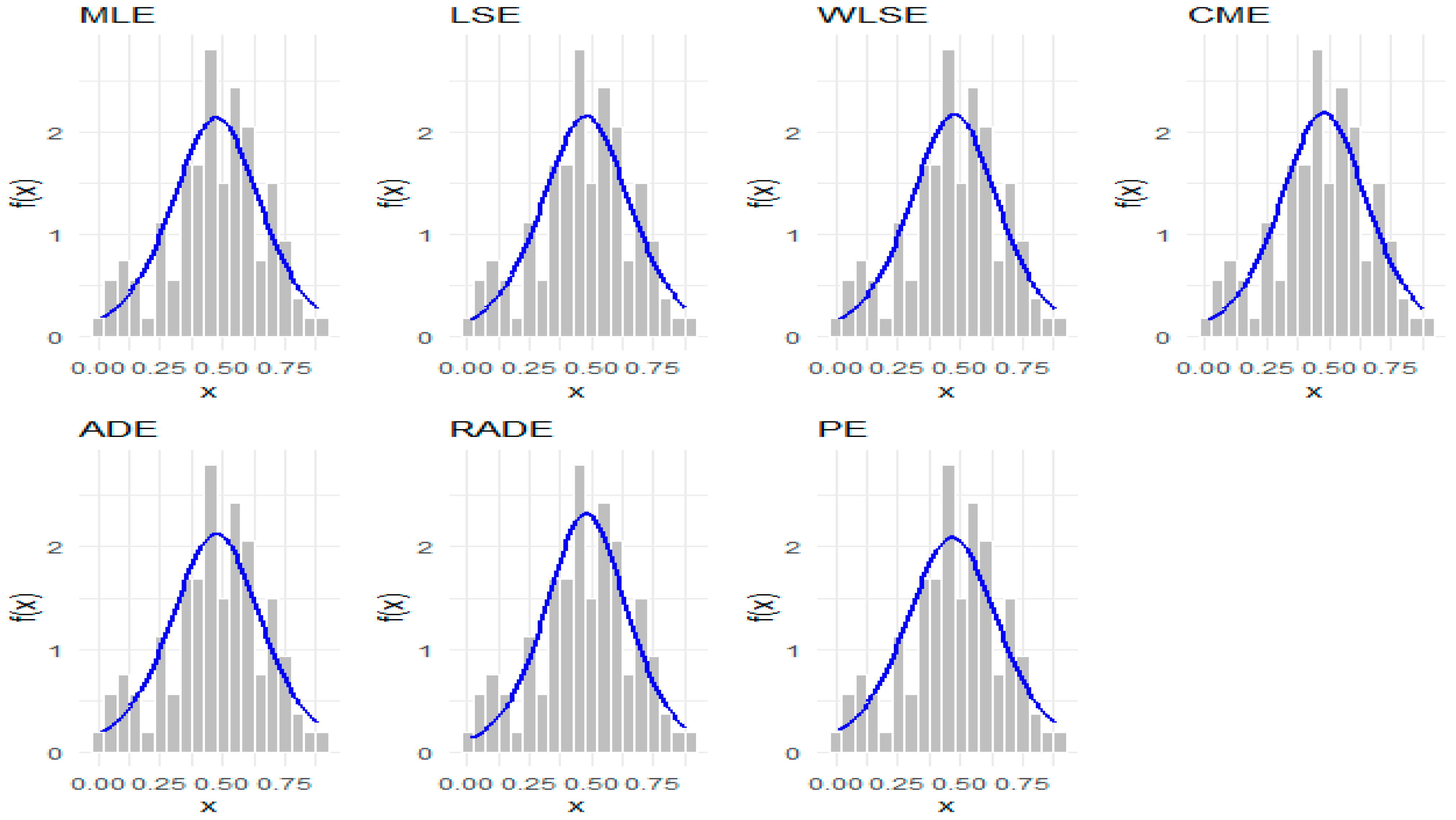

This comparative analysis evaluates the TPE model against several rival models, namely the Weibull (We), Beta, Gamma (Ga), Burr XII, and Perk (PE) distributions. Parameter estimation by the maximum likelihood method and the goodness-of-fit evaluation were carried out using the R programming language.
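To make the maximum likelihood step concrete, the following sketch fits one of the rival models, the two-parameter Weibull distribution, to the third (transformed) dataset. It is an illustrative Python stand-in, not the authors' R code: the function names are hypothetical, and the shape parameter is obtained by bisection on the profile score equation, a choice made here only to keep the example free of external libraries.

```python
import math

def weibull_loglik(data, shape, scale):
    """Log-likelihood of the Weibull density (k/s)(x/s)^(k-1) exp(-(x/s)^k)."""
    n = len(data)
    return (n * math.log(shape) - n * shape * math.log(scale)
            + (shape - 1.0) * sum(math.log(x) for x in data)
            - sum((x / scale) ** shape for x in data))

def weibull_mle(data, tol=1e-10):
    """Weibull MLE via bisection on the profile score in the shape parameter."""
    n = len(data)
    logs = [math.log(x) for x in data]
    mean_log = sum(logs) / n

    def score(k):
        # Derivative of the profile log-likelihood with respect to k, over n.
        w = [x ** k for x in data]
        return 1.0 / k + mean_log - sum(wi * li for wi, li in zip(w, logs)) / sum(w)

    lo, hi = 1e-3, 1.0
    while score(hi) > 0:          # expand until the root is bracketed
        hi *= 2.0
    while hi - lo > tol:          # the score is decreasing in k
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    shape = 0.5 * (lo + hi)
    # For a fixed shape, the scale has the closed form (mean of x^k)^(1/k).
    scale = (sum(x ** shape for x in data) / n) ** (1.0 / shape)
    return shape, scale, weibull_loglik(data, shape, scale)

# Third dataset after normalization, as listed in the text.
data3 = [0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211,
         0.502924, 0.532164, 0.497076, 0.380117, 0.350877, 0.368421,
         0.871345, 0.847953, 0.894737, 0.625731, 0.602339, 0.625731,
         0.473684, 0.432749, 0.467836]

shape, scale, loglik = weibull_mle(data3)
print(f"shape={shape:.4f}, scale={scale:.4f}, loglik={loglik:.4f}")
```

The same pattern (write the log-likelihood, maximize it, keep the maximized value) applies to the other competing models, although most of them need a general-purpose numerical optimizer rather than a one-dimensional root search.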

Table 15, Table 16 and Table 17 present the parameter estimates and their corresponding standard errors. The findings from Table 18, Table 19 and Table 20 indicate that the TPE model performs best among all compared distributions: it attains the lowest values of the goodness-of-fit measures AIC, BIC, CAIC, HQIC, and K-S, as well as the greatest p-value. The estimates obtained from the various estimation methods applied to the TPE model for these datasets are displayed in Table 21, Table 22 and Table 23, which indicate that the E2 method outperforms the others for the first dataset, the E7 method excels for the second dataset, and the E1 method leads for the third dataset, each attaining the highest p-value. Additionally, Figure 8, Figure 9 and Figure 10 present the fitted densities, empirical cumulative distribution functions, and P–P plots of all competing models for these data, facilitating a visual assessment of the models’ fit. Also, a visual comparison of the shape of the three datasets in the fitted PDFs, CDFs, and P–P plots is presented in Figure 11, Figure 12, Figure 13, Figure 14, Figure 15, Figure 16, Figure 17, Figure 18 and Figure 19.
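The goodness-of-fit measures named above can be computed from a maximized log-likelihood and a fitted CDF. The sketch below is a hedged Python illustration rather than the authors' procedure. Two assumptions are made explicit: CAIC is taken to be the small-sample corrected AIC, since the text does not state which definition is used, and the fitted model is Beta(a, 1), a one-parameter special case of the Beta rival model chosen only because its MLE has the closed form a = −n / Σ ln x. The function names are hypothetical.

```python
import math

def information_criteria(loglik, k, n):
    """AIC, BIC, CAIC and HQIC for k parameters and n observations."""
    return {
        "AIC": 2 * k - 2 * loglik,
        "BIC": k * math.log(n) - 2 * loglik,
        # Assumption: CAIC is the small-sample corrected AIC.
        "CAIC": 2 * k * n / (n - k - 1) - 2 * loglik,
        "HQIC": 2 * k * math.log(math.log(n)) - 2 * loglik,
    }

def ks_statistic(data, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF and `cdf`."""
    x = sorted(data)
    n = len(x)
    return max(max(i / n - cdf(xi), cdf(xi) - (i - 1) / n)
               for i, xi in enumerate(x, start=1))

# Third dataset after normalization, as listed in the text.
data3 = [0.964912, 0.994152, 0.982456, 0.666667, 0.701754, 0.684211,
         0.502924, 0.532164, 0.497076, 0.380117, 0.350877, 0.368421,
         0.871345, 0.847953, 0.894737, 0.625731, 0.602339, 0.625731,
         0.473684, 0.432749, 0.467836]

n = len(data3)
sum_log = sum(math.log(x) for x in data3)

# Closed-form MLE for Beta(a, 1): density a * x^(a-1), CDF x^a on (0, 1).
a_hat = -n / sum_log
loglik = n * math.log(a_hat) + (a_hat - 1.0) * sum_log

crit = information_criteria(loglik, k=1, n=n)
d_stat = ks_statistic(data3, lambda x: x ** a_hat)
print({name: round(value, 4) for name, value in crit.items()}, round(d_stat, 4))
```

Lower values of each criterion and of the K-S distance indicate a better fit, which is how the tables referenced above rank the competing models. The K-S p-value is omitted here because it requires the Kolmogorov distribution.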

8. Conclusions

This paper proposes the truncated Perk (TPE) distribution for modeling data on the unit interval, which stems from an exploration of an appropriate transformation. The statistical features of the proposed model, including its quantile function, Rényi entropy, Tsallis entropy, order statistics, and moments, with some associated measures, were presented. The parameter estimators of the proposed distribution were determined using seven classical estimation techniques (E1, E2, E3, E4, E5, E6, and E7) with the R programming language. The performance of these estimation approaches was examined for finite samples through an extensive simulation study, in terms of the bias and mean squared error of the estimates. The paper also compares the MLE method with Bayesian estimation to assess the performance of the proposed model. While the MLE method is known for its efficiency in large samples, Bayesian methods offer advantages when prior information is available or sample sizes are limited. Three empirical datasets with differing shapes, together with their comparative analyses, were used to illustrate the efficacy of the proposed distribution for data modeling. During the analytical development of the TPE distribution, several challenges arose due to the complex form of the density and its bounded support. Obtaining closed-form expressions for moments, entropies, and the moment generating function required advanced manipulations, including series expansions and variable transformations. The generalized binomial theorem was used to simplify moment derivations, while beta-type integral techniques were applied to obtain the Rényi and Tsallis entropies. These steps ensured analytical tractability and reflect a novel methodological contribution to the study of bounded distributions.

Future research will consider an assessment of the stress–strength reliability model for the TPE distribution.