1. Introduction

Problems of m-term approximation arise naturally in image processing, statistical learning, artificial neural networks, the numerical solution of PDEs, and other fields, and greedy-type algorithms are known to generate such approximations effectively. We begin with an overview of some important results on greedy algorithms for m-term approximation.

Let

H be a real Hilbert space with an inner product

. The norm induced by this inner product is

. A subset

of

H is called a dictionary if

for any

and the closure of span of

is

H. For any element

, we define its best

m-term approximation error by

We use greedy algorithms to obtain an

m-term approximation. The core idea behind greedy algorithms is to reduce the problem of best

m-term approximation to that of best one-term approximation. It is not difficult to see that the best one-term approximation of

f is

where

. Then, by iterating the best one-term approximation

m times, one can obtain an

m-term approximation of

f. This algorithm is called the Pure Greedy Algorithm (PGA), which was defined in [

1] as follows:

Define the next approximant to be

and proceed to Step

.
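The iteration described above can be sketched in code. The following is a minimal illustration only, assuming the finite-dimensional Hilbert space R^n with the Euclidean inner product and a finite dictionary of unit-norm vectors (so that the supremum in the greedy selection is attained). The names `dot` and `pure_greedy` are hypothetical and are not taken from the cited references.

```python
def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def pure_greedy(f, dictionary, steps):
    """Pure Greedy Algorithm sketch in R^n (assumes unit-norm atoms)."""
    approx = [0.0] * len(f)
    for _ in range(steps):
        residual = [a - b for a, b in zip(f, approx)]
        # Greedy selection: the atom with the largest |<residual, g>|.
        atom = max(dictionary, key=lambda g: abs(dot(residual, g)))
        coeff = dot(residual, atom)
        if coeff == 0.0:
            break  # the residual is orthogonal to every atom
        # Add the best one-term approximation of the residual.
        approx = [a + coeff * g for a, g in zip(approx, atom)]
    return approx


# With an orthonormal dictionary, each step picks the largest remaining coordinate.
f = [3.0, -2.0, 1.0]
basis = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
f2 = pure_greedy(f, basis, 2)
```

In this toy case, two steps keep the two largest coordinates of `f`, which is also the best two-term approximation. For a redundant dictionary, the PGA output is generally not the best m-term approximation.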

To obtain a convergence rate for the PGA, one assumes that the target element

f belongs to a basic sparse class; see [

2]. Let

be a dictionary and

M be a positive real number. We define the class

and define

to be its closure in

H. Let

For

, we define its norm by

.

It is known from [

1] that the optimal convergence rate of the best

m-term approximation on

for all dictionaries

is

. Livshitz and Temlyakov in [

3] proved that there is a dictionary

, a constant

, and an element

such that

Thus, the PGA fails to reach the optimal convergence rate

on

for all dictionaries

. This leads to various modifications of the PGA. Among others, the Orthogonal Greedy Algorithm (OGA) and the Rescaled Pure Greedy Algorithm (RPGA) were shown to achieve the optimal convergence rate

.

We first recall the OGA from [

1].

Denote

Define the next approximant to be

where

is the orthogonal projection of

f on

, and proceed to Step

.
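The OGA step can be sketched in the same way. This is a minimal illustration under the same assumptions as before (R^n with the Euclidean inner product and a finite dictionary). The orthogonal projection is computed here by keeping a Gram–Schmidt orthonormal basis of the selected elements, which is one possible implementation choice and not necessarily the one used in the cited references. The names `dot` and `orthogonal_greedy` are hypothetical.

```python
import math


def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def orthogonal_greedy(f, dictionary, steps, tol=1e-12):
    """OGA sketch: greedy selection, then projection of f onto the selected span."""
    ortho = []  # orthonormal basis of the span of the selected atoms
    approx = [0.0] * len(f)
    for _ in range(steps):
        residual = [a - b for a, b in zip(f, approx)]
        atom = max(dictionary, key=lambda g: abs(dot(residual, g)))
        if abs(dot(residual, atom)) <= tol:
            break
        # Gram-Schmidt: remove the components of the atom along the current basis.
        q = list(atom)
        for e in ortho:
            c = dot(q, e)
            q = [a - c * b for a, b in zip(q, e)]
        norm = math.sqrt(dot(q, q))
        if norm <= tol:
            break
        ortho.append([a / norm for a in q])
        # Orthogonal projection of f onto the span of all selected atoms.
        approx = [sum(dot(f, e) * e[i] for e in ortho) for i in range(len(f))]
    return approx


f = [1.0, 1.0]
atoms = [[1.0, 0.0], [0.6, 0.8]]
f2 = orthogonal_greedy(f, atoms, 2)
```

Here the two atoms span R^2, so the projection after two steps reproduces `f` up to rounding. The cost of each step grows with the number of selected atoms, which is the m-dimensional optimization referred to later in the text.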

Clearly,

is the best approximation of

f on

. DeVore and Temlyakov in [

1] obtained the following convergence rate of the OGA for

:

Then, we recall from [

4] another modification of the PGA, which is called the Rescaled Pure Greedy Algorithm (RPGA).

With

define the next approximant to be

and proceed to Step

.

Note that if the output at each Step m were and not , this would be the PGA. However, the RPGA does not use but the best approximation of f from the one-dimensional space , that is, . It is clear that the RPGA is simpler than the OGA: at Step m, the OGA needs to solve an m-dimensional optimization problem, while the RPGA only needs to solve a one-dimensional one. Hence, the RPGA has lower computational complexity per step.
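To make the difference from the PGA concrete, the following sketch adds the rescaling step to a PGA-type update. It is an illustration only, assuming R^n with the Euclidean inner product, a finite unit-norm dictionary, and that the rescaling factor is the coefficient of the best approximation of f from the line spanned by the PGA-type update. The names `dot` and `rescaled_pure_greedy` are hypothetical.

```python
def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def rescaled_pure_greedy(f, dictionary, steps):
    """RPGA sketch in R^n (assumes unit-norm atoms)."""
    approx = [0.0] * len(f)
    for _ in range(steps):
        residual = [a - b for a, b in zip(f, approx)]
        atom = max(dictionary, key=lambda g: abs(dot(residual, g)))
        lam = dot(residual, atom)
        if lam == 0.0:
            break
        # PGA-type update (the intermediate approximant).
        candidate = [a + lam * g for a, g in zip(approx, atom)]
        denom = dot(candidate, candidate)
        if denom == 0.0:
            break  # degenerate case, not expected in practice
        # Rescaling: best approximation of f from span{candidate},
        # a one-dimensional least-squares problem with a closed form.
        s = dot(f, candidate) / denom
        approx = [s * c for c in candidate]
    return approx


f = [1.0, 1.0]
atoms = [[1.0, 0.0], [0.6, 0.8]]
f2 = rescaled_pure_greedy(f, atoms, 2)
residual = [a - b for a, b in zip(f, f2)]
err = dot(residual, residual) ** 0.5
```

Because the output is a projection of `f` onto a line, the residual is orthogonal to the current approximant. In this toy example the error after two steps is about 0.08, compared with 0.12 for the unrescaled PGA update, while each step still solves only a one-dimensional problem.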

Moreover, Petrova in [

4] derived the following convergence rate of the RPGA

:

Theorem 1 ([

4])

. If , then the output of the RPGA satisfies

Theorem 1 shows that the RPGA can achieve the optimal convergence rate on

. Owing to these advantages, the RPGA has been successfully applied to machine learning and signal processing; see [

5,

6,

7,

8,

9]. Moreover, a generalization of the RPGA can be used to solve convex optimization problems; see [

10].

Note that when selecting

in the above algorithms, the supremum in the equality

may not be attainable. Thus, we replace the greedy condition by a weaker condition:

where

. Greedy algorithms that use this weak condition are called weak greedy algorithms. In this way, we can obtain the Weak Rescaled Pure Greedy Algorithm (WRPGA).
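The weak selection rule can be illustrated as follows. This is a sketch under the assumption of a finite dictionary in R^n, where the supremum is in fact attained, so the example only shows how the weak condition enlarges the set of admissible elements. The weakness parameter `t` is assumed to lie in (0, 1], and the names `dot` and `weak_select` are hypothetical.

```python
def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def weak_select(residual, dictionary, t):
    """Return the first atom g with |<residual, g>| >= t * max over the dictionary."""
    scores = [abs(dot(residual, g)) for g in dictionary]
    threshold = t * max(scores)
    for g, s in zip(dictionary, scores):
        if s >= threshold:
            return g


e1, e2 = [1.0, 0.0], [0.0, 1.0]
residual = [1.0, 0.9]
weak_pick = weak_select(residual, [e2, e1], 0.5)    # e2 is admissible for t = 0.5
greedy_pick = weak_select(residual, [e2, e1], 1.0)  # only the maximizer e1 is admissible
```

With `t = 1` the rule coincides with the greedy condition. A smaller `t` allows the search to stop at any element that is good enough, which is useful when the exact maximizer is expensive or impossible to find.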

The following convergence rate of the WRPGA

was obtained in [

4]:

Theorem 2 ([

4])

. If , then the output of the WRPGA satisfies the inequality

Some greedy algorithms have been extended to the setting of Banach spaces. Let

X be a real Banach space with norm

. A set

is called a dictionary if each

has norm

, and the closure of

is

X. Let

denote the set of all continuous linear functionals on

X. It is known from the Hahn–Banach theorem that for every non-zero

, there exists

such that

The functional

is called the norming functional of

x. The modulus of smoothness of

X is defined as

If

, then the Banach space

X is called uniformly smooth. We say that the modulus of smoothness

is of power type

if

with some

. The sparse class

in Banach space

X is defined in the same way as in Hilbert space

H.

We recall from [

4] the definition of the WRPGA in the setting of Banach spaces.

With

choose

such that

Define the next approximant to be

and proceed to Step

.

Petrova in [

4] derived the following convergence rate of the WRPGA

:

Theorem 3 ([

4])

. Let X be a Banach space with , . If , then the output of the WRPGA satisfies

Observe that the best

m-term approximation problem with respect to a given dictionary

is essentially an optimization problem for a norm function

. In fact, the norming functional

used in the selection of

in the greedy algorithms is closely related to the function

. Their relationship is given in

Section 3. Naturally, one can use a similar technique, such as a greedy strategy used in

m-term approximation, to handle optimization problems, especially convex optimization problems.

Now, we turn to the problem of convex optimization. Convex optimization occurs in many fields of modern science and engineering, such as automatic control systems, data analysis and modeling, statistical estimation and learning, and communications and networks; see [

11,

12,

13,

14,

15,

16] and the references therein. The problem of convex optimization can be formulated as follows:

Let

X be a Banach space and

be a bounded convex subset of

X. Let

E be a function on

X satisfying

We aim to find an approximate solution to the problem

Usually, the domain

X of the objective function

E in classical convex optimization is finite-dimensional, while some applications of convex optimization require that

X has a large dimension, even an infinite dimension, which may require the rate of convergence of a numerical algorithm to be independent of the dimension of

X. Otherwise, it may suffer from the curse of dimensionality. It is well known that the convergence rates of greedy-type algorithms are independent of the dimension of

X. Thus, greedy-type algorithms are increasingly used in solving (

5); see [

17,

18,

19]. The main idea is that the algorithm outputs

after

m iterations such that

is a good approximation of

Generally,

is a linear combination of

m elements from a dictionary of

X. These algorithms usually choose

as an initial approximant and select

as

Some greedy-type convex optimization algorithms, such as the Weak Chebyshev Greedy Algorithm (WCGA(co)), the Weak Greedy Algorithm with Free Relaxation (WGAFR(co)), and the Rescaled Weak Relaxed Greedy Algorithm (RWRGA(co)), have been successfully used to solve convex optimization problems; see [

17,

20,

21,

22]. Furthermore, Gao and Petrova in [

10] proposed the Weak Rescaled Pure Greedy Algorithm (WRPGA(co)) for convex optimization in Banach space

X. This algorithm is a generalization of the WRPGA

for

m-term approximation. To give the definition of the WRPGA(co), we first recall some concepts and notations.

We assume that the function

E is Fréchet-differentiable at any

. That is, there is a continuous linear functional

in Banach space

X such that

where

. We call the functional

the Fréchet derivative of

E. Under the assumptions that

E is convex and Fréchet-differentiable, it is well known that the minimizer of

E exists and is attainable; see [

17]. Throughout this article, we assume that the minimizer of

E belongs to the basic sparse class

.

We say that a convex function E satisfies Condition 0 if E has a Fréchet derivative at every , and

If there exist constants

, and

, such that for all

with

and

then we say that

E satisfies the

Uniform Smoothness (US) condition on

.

The Weak Rescaled Pure Greedy Algorithm with a weakness sequence

, and a parameter sequence

satisfying

is defined as follows:

Assume has been defined and . Perform the following steps:

- -

Choose an element

such that

- -

Compute

, given by the formula

- -

- -

Define the next approximant as

If , then finish the algorithm and define for .

If , then proceed to Step .

The following convergence rate of the WRPGA(co) was also obtained in [

10]:

Theorem 4 ([

10])

. Let E be a convex function defined on X that satisfies Condition 0 and the US condition. Moreover, we assume its minimizer . Then, the output of the WRPGA(co) with satisfies the following inequality:

where .

It follows from the definition of the WRPGA(co)

and Theorem 4 that the weakness sequence

and the parameter sequence

are important for the algorithm. The weakness sequence

allows us to have more freedom to choose element

in the dictionary

. The parameter sequence

gives more choice in how much to advance along the selected element

. We remark that the function

is increasing on

and decreasing on

, with a global maximum at

. Thus, if we set

and

for

, then WRPGA(co)

satisfies the error

where

C is a constant. This setting of

and

ensures that the value on the right-hand side of inequality (

7) is minimized. For any particular target function

E, we can optimize some constants related to parameter

to obtain the optimal convergence results in terms of optimal constants. For example, the authors in [

23] selected

in Hilbert space as an example.

Noise and errors are often inevitable when an algorithm is implemented, so it is important to take them into account and to understand how the algorithm depends on them. If the convergence rate of an algorithm changes relatively little under noise and errors, then we say that the algorithm exhibits a certain level of stability. Stability allows us to describe and analyze the behavior of the algorithm and provides a theoretical basis for its design and improvement. The study of the stability of greedy algorithms for

m-term approximation in Banach spaces began in [

24] and was further developed in [

25]. In [

17,

20,

22], the stability of WCGA(co) and some relaxed-type greedy algorithms for convex optimization was studied, where computational inaccuracies were allowed in the process of error reduction and biorthogonality.

To the best of our knowledge, no relevant results exist concerning the numerical stability of the WRPGA(co). In the present article, we study the stability of the WRPGA(co). We consider the perturbation value

such that

instead of

Then, we investigate the dependence of WRPGA(co) on the error in the computation of

in the second step of the algorithm. We obtain the convergence rates of WRPGA(co) with noise and errors. The results show that WRPGA(co) is stable in some sense, and the associated convergence rates remain independent of the spatial dimension. This addresses real-world scenarios where exact computations are rare, making the algorithm more practically applicable, especially for high-dimensional applications. We verify this conclusion through numerical experiments. Furthermore, we apply WRPGA(co) with errors to the problem of

m-term approximation and derive the optimal convergence rate, which indicates its flexibility and wide utility across applied mathematics and signal processing. Notably, the condition we use differs from that used in [

10]. We just use one condition: that

has the power type

. However, in [

10], the authors need to use not only the

US condition but also additional conditions to ensure that the

US condition still holds for the sequence generated from WRPGA(co). This is also one of the strengths of our article.

We organize the article as follows: In

Section 2, we state the results for the convergence rates of WRPGA(co)

with noise and errors. In

Section 3, we apply WRPGA(co)

with errors to

m-term approximation. In

Section 4, we provide two numerical examples to illustrate the stability of WRPGA(co). In

Section 5, we make some concluding remarks and discuss future work.

2. Convergence Rate of WRPGA(co) with Noise and Error

In this section, we will discuss the performance of WRPGA(co) in the presence of noise and computational errors. We first recall some notions. The modulus of smoothness,

, of

E on the set

is defined as

Clearly,

is an even function with respect to

u. If

as

, then we call

E uniformly smooth on

. It is known from [

23] that the condition that

is closely related to the

US condition (

6). However, the

US condition on the set

is not sufficient to derive the convergence result. It also requires

Condition 0 so that the output sequence

of the algorithm remains in

, where the

US condition holds. Thus, we use condition (

8).

Now, we discuss the performance of WRPGA(co) with data noise. Suppose that

is influenced by noise. That is, in the implementation of the algorithm, we consider the perturbation value

such that

We obtain the following convergence rates of WRPGA(co) in that case:

Theorem 5. Let be a uniformly smooth Fréchet-differentiable convex function with , . Take and such that

with some number . Then, for WRPGA(co) with , we have

Taking

and

for

in Theorem 5, we obtain

It is clear that the function

is decreasing on

and increasing on

, with a global minimum at

. Thus, if we set

and

for

, WRPGA(co) satisfies

This setting of

and

ensures that the value on the right-hand side of inequality (

9) is minimized.

Theorem 5 implies the following result for the noiseless case ():

Corollary 1. Let the convex function be uniformly smooth and Fréchet-differentiable with , . If , then for WRPGA(co) with , the following inequality holds:

Corollary 1 gives the convergence rate of WRPGA(co) with noiseless data, which is different from the result in Theorem 4. It is known from [

23] that the

US Condition is equivalent to the condition

in Hilbert spaces. Theorem 5 and Corollary 1 do not require

Condition 0. From this point of view, our assumption is weaker than that of Theorem 4 for general Banach spaces. By choosing an appropriate

, we can achieve the same error as Theorem 4.

To prove Theorem 5, we first recall some lemmas.

Lemma 1 ([

18]).

Let E be a convex function on X. Assume that it is Fréchet-differentiable. Then, for every , and ,

Lemma 2 ([

2])

. For any and any dictionary of X, we have

Lemma 3 ([

18])

. Let E be a convex function on X. Assume that it is Fréchet-differentiable at every point in Ω. Then, for each and ,

Lemma 4 ([

4])

. Let and and let and be sequences of positive numbers satisfying

Then, the following inequality holds:

The following lemma was stated in [

21] without proof, and we have not found a proof elsewhere. For convenience, we provide one here.

Lemma 5. Let E be a uniformly smooth, Fréchet-differentiable convex function on X. Let L be a subspace of X with finite dimension. If satisfies

then, for all , we have

Proof. We prove the lemma by contradiction. Suppose that there exists a

such that

and

. Note that

. Then, applying Lemma 1 to

y and

with

, we have

which implies

Since

, we can find

such that

. Then, we have

This inequality contradicts the assumption that

E reaches its minimum at

in space

L. Thus, the proof of this lemma is finished. □

Proof of Theorem 5. It is known from the definition of WRPGA(co)

that

Then, applying Lemma 1 to (

11) with

and

, we obtain

where we have used the fact that

. Substituting

into

after a simple calculation, we obtain

Combining (

11) with (

12), we have

Thus, the sequence

is decreasing. If

, then from the monotonicity of

, we have

, and hence inequality (

9) holds.

We proceed to the case that

. We first estimate the lower bound of

. From the choice of

and Lemma 2, we have

Applying Lemma 5 to

with

, we have

Then, from Lemma 3, we have

Combining (

13) with (

14) and (

15), we obtain

Subtracting

from both sides of (

16), we derive

For each

, we define

Then, the sequence

is decreasing and

From the above discussion, it is sufficient to consider the case that . The details are as follows:

Case 1: . It follows from the monotonicity of the sequence

that either all elements of

belong to

, or for some

, it holds that

. Then, for

, inequality (

9) clearly holds.

Applying Lemma 4 to all positive numbers in

with

,

,

,

we have

which implies that

Hence, inequality (

9) holds.

Case 2: Taking

in inequality (

17), we obtain

Hence,

. Combining this with the monotonicity of

gives inequality (

9). □

Proof of Corollary 1. If , then and . Thus, Corollary 1 follows from Theorem 5. □

Now, we compare the performance of WRPGA(co) with the Weak Chebyshev Greedy Algorithm with errors (WCGA(Δ,co)). Dereventsov and Temlyakov in [17] investigated the numerical stability of WCGA(Δ,co) and established the corresponding convergence rate with data noise. We recall the definition of WCGA(Δ,co). Let

be a weakness sequence and

be an error sequence with

and

for

The WCGA(Δ,co) is defined as follows:

- (1)

Select

satisfying

- (2)

Denote

and define the next approximant

from

such that

- (3)

The approximant

satisfies

Note that in the definition of the WCGA(Δ,co), the dictionary

must be symmetric; that is, if

, then

. It is well known that the WCGA(co) has the best approximation properties; see [

26] for example. Let

be a constant such that

Assume that the set

is bounded. Under this assumption, the authors in [

17] obtained the following convergence rate of the WCGA(Δ,co):

Theorem 6 ([

17])

. Let E be a convex function with , . Take an element and a number such that

with some number . Then, for the WCGA(Δ,co) with a constant weakness sequence and an error sequence with , , we have

Notice that when for all , the WCGA(Δ,co) reduces to the WCGA(co). Theorem 6 shows that the WCGA(Δ,co) maintains the optimal convergence rate despite the presence of noise and computational errors.

Comparing (

10) with (

18), we see that the convergence rate of the WRPGA(co) with data noise is the same as that of the WCGA(Δ,co). We remark that the rate

is independent of the dimension of the space

X. Moreover, the WRPGA(co) has lower computational complexity than the WCGA(co). Each step involves only a one-dimensional optimization problem rather than an

m-dimensional optimization problem for the WCGA(co).

Next, we discuss the performance of the WRPGA(co) in the presence of computational errors. There are some results on the WRPGA for

m-term approximation in this direction. In [

4], Petrova investigated the WRPGA(

H,

) with some errors in the computation of the inner product

. That is, in Step

m of the WRPGA

, the coefficient

is computed according to the following formula:

It was shown there that for any

, the output

of the WRPGA

with errors

satisfies

Jiang et al. in [

27] studied the stability of the WRPGA

for

m-term approximation, which allows for computational inaccuracies in calculating the coefficient

. That is,

is calculated based on

They called this algorithm the Approximate Weak Rescaled Pure Greedy Algorithm (AWRPGA

). Moreover, they derived the following convergence rate of the AWRPGA

.

Theorem 7 ([

27])

. Let X be a Banach space with , . For all , the AWRPGA satisfies

More generally, we consider the dependence of the WRPGA(co) on the computational error in the second step of each iteration. We assume that in Step

m of the algorithm, the coefficient

is calculated by using the following formula:

Such an algorithm is an approximate version of the WRPGA(co), denoted by AWRPGA(co). For simplicity, we use the notation

. We obtain the following error bound for the AWRPGA(co):

Theorem 8. Let the convex function be uniformly smooth and Fréchet-differentiable with minimizer and , . Assume that the error sequence satisfies for any . Then, for the AWRPGA(co) with where .

Proof. Just like in the proof of inequality (

13), we obtain

Note that

. Thus,

Then, we estimate the lower bound of

. Since

, for any fixed

,

can be represented as

, with

Applying Lemma 5 to

with

, we have

. Therefore,

Let

. We have

Then, by using Lemma 3, we obtain

and hence

Combining (

19) with (

20), we derive

Thus, it holds that

Applying Lemma 4 to the sequence

with

,

we obtain

Thus, the proof is completed. □

By limiting the error to a certain range , we derive the following corollary from Theorem 8:

Corollary 2. Let the convex function be uniformly smooth and Fréchet-differentiable with minimizer and , . For any , let for all . Then, for the AWRPGA(co) with , it holds that where and .

Proof. Since

and

, we have

. It is easy to check that the minimum of the function

is

. Taking

, we have

Then, from Theorem 8, we have

where

and

, which completes the proof of Corollary 2. □

Observe that the above rate of convergence of the AWRPGA(co) is independent of the dimension of the space X. Comparing the convergence rates in Theorem 4 with those in Corollary 2, we can see that when the noise amplitude changes relatively little, the WRPGA(co) is stable.

We remark that when the

US condition (

6) holds on the whole domain

X,

Condition 0 in Theorem 4 is not necessary. In that case, we obtain the following theorem:

Theorem 9. Let the convex function be uniformly smooth and Fréchet-differentiable with minimizer . Assume that there are constants and such that for all ,

Given an error sequence that satisfies for any , for the AWRPGA(co) with , the inequality

holds with .

Proof. Taking

and

in (

21), we have

where we have used the definition of

and the assumption

.

Note that

. Thus, using (

22), we derive

The rest of the proof is similar to that of Theorem 8, so we omit it. □

Note that when

, the above convergence rate is consistent with that of Theorem 4. Moreover, the parameter

just satisfies

instead of

It follows from Theorems 5, 8, and 9 and Corollaries 1 and 2 that the convergence rates of the WRPGA(co) do not change significantly despite the presence of noise and computational errors. This is crucial for the practical implementation of the algorithm, since it implies that the WRPGA(co) is robust to noise and errors to a certain degree.

3. Convergence Rate of the AWRPGA

In this section, we will apply the AWRPGA(co) to solve the problem of

m-term approximation with respect to dictionaries in Banach spaces. Let

X be a Banach space and

be a fixed element in

X. If we set

in convex optimization problem (

5), then problem (

5) reduces to an

m-term approximation problem. Similarly, the discussion about the stability of the greedy-type convex optimization algorithms reduces to that of the greedy algorithm for

m-term approximation. Since the function

is not a uniformly smooth function in the sense of the smoothness of a convex function, we consider

with

. Recall that the authors in [

28] pointed out that if the modulus of smoothness,

, of

X defined as (

4) satisfies

,

, then

is uniformly smooth and satisfies

. It is known from [

2] that the functional

defined as (

3) is the derivative of the norming function

. Then,

Thus, the conditions of Theorem 8 are satisfied.

In this case, the AWRPGA(co) is reduced to the AWRPGA, which is defined as follows.

Assume has been defined and . Perform the following steps:

- -

Choose an element

such that

- -

Compute

, given by the formula

- -

- -

Choose

such that

and define the next approximant as

If , then finish the algorithm and define for .

If , then proceed to Step .

It is clear that the AWRPGA generates an m-term approximation of after m iterations. Applying Theorem 8 to , we obtain the following convergence rate of this new algorithm.

Theorem 10. Let and, for suitable γ, satisfy , . Assume that the error sequence satisfies for any . Then, for the AWRPGA with , it holds that

When for all , the AWRPGA reduces to the ARPGA. Taking , , , and we obtain the following convergence rate of the ARPGA with error .

Theorem 11. Let and, for suitable γ, satisfy , . Assume that the error sequence satisfies for all with . Then, for the ARPGA with , it holds that

We proceed to remark that the rate of Theorem 11 is sharp. We take Lebesgue spaces as examples. Let

be a measure space; that is,

is a measure on the

-algebra

of subsets of

O. For

, define the Lebesgue space

as

It is known from [

29] that the modulus of smoothness of

satisfies

Applying Theorem 11 to

, we obtain the following theorem.

Theorem 12. Let . Assume that the error sequence satisfies for all with . Then, for the ARPGA with , it holds that

Jiang et al. in [

27] have shown that the rate

is sharp.

Now, we apply the ARPGA to Hilbert spaces. When , a Hilbert space H with the inner product and the norm , the AWRPGA takes the following form:

Assume has been defined and . Perform the following steps:

- -

Choose an element

such that

- -

Compute

, given by the formula

- -

- -

Define the next approximant as

If , then finish the algorithm and define for .

If , then proceed to Step .
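The steps above can be sketched in code for a finite dictionary in R^n. This is an illustration only. In particular, the form of the perturbation, a relative error `eps` multiplying the computed coefficient by `1 + eps`, is an assumption of this sketch, as are the names `dot`, `approximate_rpga`, and `error_norm` and the choice of weak selection rule.

```python
def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def approximate_rpga(f, dictionary, errors, t=1.0):
    """Weak rescaled greedy steps in R^n with an inexact coefficient.

    errors[m] is a hypothetical relative error in the coefficient at step m + 1.
    """
    approx = [0.0] * len(f)
    for eps in errors:
        residual = [a - b for a, b in zip(f, approx)]
        scores = [abs(dot(residual, g)) for g in dictionary]
        top = max(scores)
        if top == 0.0:
            break
        # Weak selection: first atom reaching t times the largest score.
        atom = next(g for g, s in zip(dictionary, scores) if s >= t * top)
        lam = (1.0 + eps) * dot(residual, atom)  # perturbed coefficient (assumed form)
        candidate = [a + lam * g for a, g in zip(approx, atom)]
        denom = dot(candidate, candidate)
        if denom == 0.0:
            break
        s = dot(f, candidate) / denom  # exact one-dimensional rescaling
        approx = [s * c for c in candidate]
    return approx


def error_norm(f, approx):
    diff = [a - b for a, b in zip(f, approx)]
    return dot(diff, diff) ** 0.5


f = [1.0, 1.0]
atoms = [[1.0, 0.0], [0.6, 0.8]]
exact = approximate_rpga(f, atoms, [0.0] * 5)
noisy = approximate_rpga(f, atoms, [0.1, -0.1, 0.1, -0.1, 0.1])
```

Since the rescaling step is a projection onto a line, the residual stays orthogonal to the approximant and the error never exceeds the norm of `f`, whatever the perturbation of the coefficient. Comparing `error_norm(f, exact)` with `error_norm(f, noisy)` gives a quick empirical check of how sensitive the iteration is to such errors.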

For the AWRPGA, we obtain the following corollary from Theorem 9:

Corollary 3. Let . Assume that the error sequence satisfies for any . Then, the output of the AWRPGA with

satisfies the following inequality:

Proof. It is well known that

is Fréchet-differentiable on Hilbert space

H. Moreover, its Fréchet derivative

acts on

as

; see [

10] for instance. Therefore, we obtain

Thus,

satisfies the conditions of Theorem 9 with

and

. Then, Corollary 3 follows from Theorem 9. □

We remark that the result of Corollary 3 for

,

, was obtained in [

4,

27].

4. Examples and Numerical Results

Observe that the convergence results of the WRPGA(co) in Banach spaces apply to any dictionary, including those in infinite-dimensional Banach spaces. In this case, dictionaries contain infinitely many elements. This is a somewhat surprising result, which shows the power of the WRPGA(co). On the other hand, in practice, we deal with optimization problems in finite high-dimensional spaces. A typical example is sparse signal recovery in compressive sensing. Let

, and for any

, define its norm by

Let

be a

d-dimensional compressible vector and

be the measurement matrix with

. Our goal is to find the best approximation of the unknown

by utilizing

and the given data

. It is well known that this problem is equivalent to solving the following convex optimization problem on

:

where

denote the columns of

. Note that the dimension

d is larger than

N, which means that the column vectors of the measurement matrix

are linearly dependent. Thus, the column vectors of

can be considered a dictionary of

. Notably, when

, solving problem (

23) is difficult. Observe that problem (

23) can also be considered an approximation problem with a dictionary in space

X. Naturally, one can adapt greedy approximation algorithms to handle this problem. It turns out that greedy algorithms are powerful tools for solving problem (

23); see [

7,

30,

31,

32].

Next, we will present two numerical examples to illustrate the stability of the WRPGA(co) with noise and errors, which are discussed in

Section 2 and

Section 3. We begin with the first example to verify Theorems 5 and 8 (see

Figure 1,

Figure 2 and

Figure 3). We will randomly generate a Banach space

and a dictionary

based on a given distribution of parameter

p. We will choose a suitable target functional

and then apply the WRPGA(co) to solve the convex optimization problem (

5); that is, we seek to find a sparse minimizer to approximate

In this process, we assume that the WRPGA(co) is affected by noise and error. We evaluate the performance of the WRPGA(co) using

Now, we give the details of this example. Let

and

. We consider a dictionary with size 10,000, where every element is the normalized linear combination of the standard basis,

, of

X and coefficients follow a normal distribution, that is,

where

We choose the target functional

as

where

is randomly generated in the form of

with

, where

is a permutation of {1, …, 10,000} and

. Although the example is simple, it is a classical problem in compressed sensing and arises in many practical settings. We will use the WRPGA(co) to derive an approximate minimizer with a sparsity of 100. The following results are based on 100 simulations.

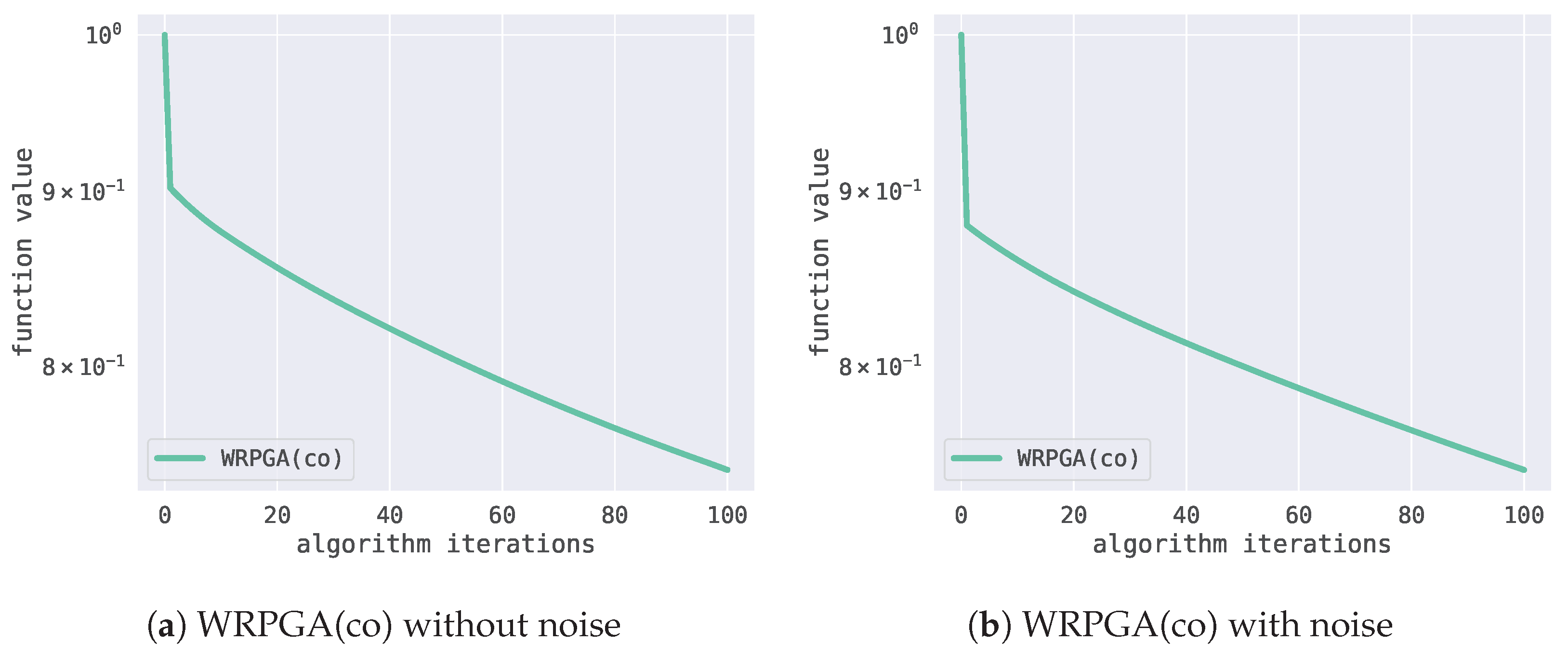

Figure 1 demonstrates the relationship between the number of iterations of the WRPGA(co) and the function value (

24) in data noise and noiseless cases, which verifies Theorem 5. Here, the noise is randomly generated with small amplitude values. In both cases, we randomly choose a parameter

slightly larger than 2 for simplicity. It shows that the function value (

24) does not change greatly under this noise, which implies that the WRPGA(co) is stable in a sense and will perform well in practice.

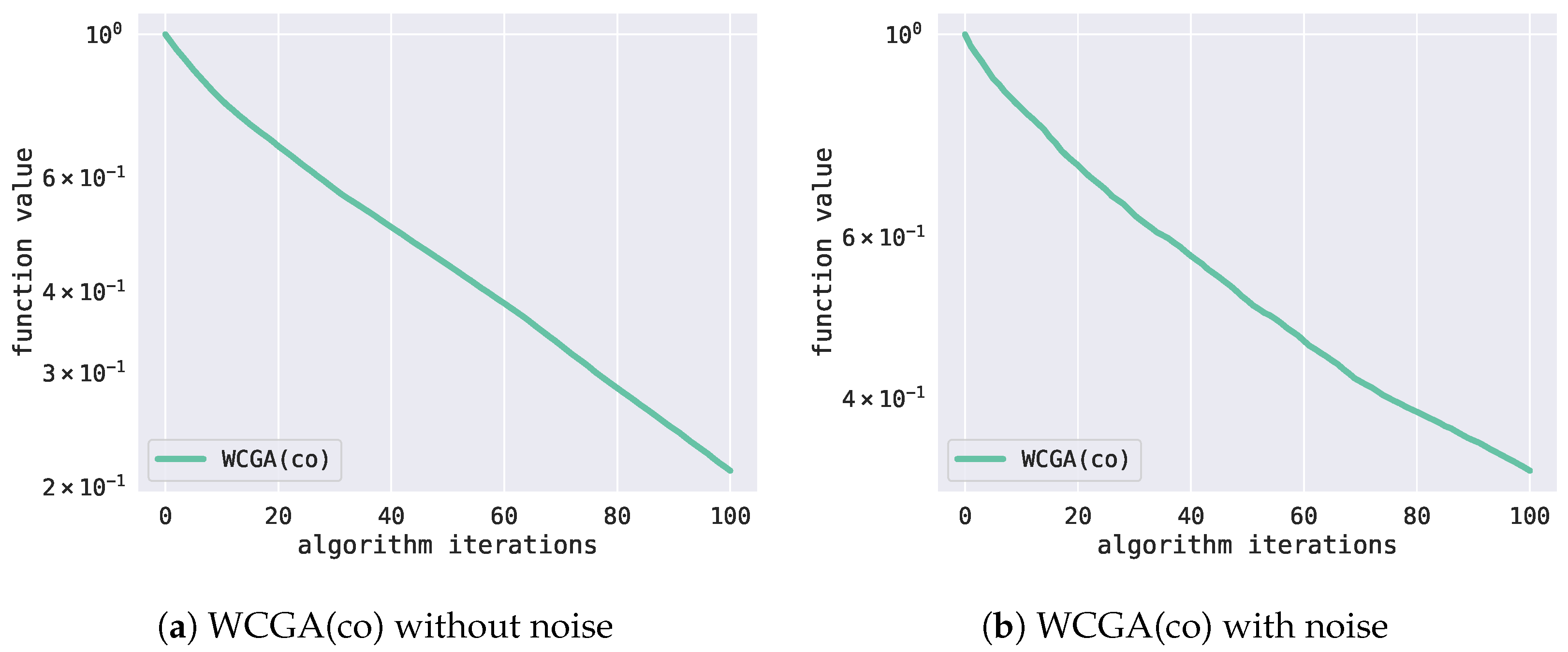

Furthermore, we use the WCGA(co) to obtain an approximate minimizer with a sparsity of 100 for the above problem. The corresponding setting is the same as that of the WRPGA(co). In the field of signal processing, the WCGA(co) is known as the Orthogonal Matching Pursuit (OMP). After 100 simulations, we obtained the relationship between the number of iterations of the WCGA(co) and the function value with data noise and that without noise, as shown in

Figure 2. Here, the choice of noise is the same as that of the WRPGA(co).

It follows from

Figure 1 and

Figure 2 that the approximation performance of the WCGA(co) is better than that of the WRPGA(co) even in the presence of noise. However, unlike for the WCGA(co), the function value of the WRPGA(co) changes little when data noise is added. This means that the WRPGA(co) is not sensitive to the noise, which shows its stability. There is thus a trade-off between computational complexity and approximation performance.

Figure 3 reveals the relationship between the number of iterations of the AWRPGA(co) and the function value (

24) in the presence of computational error and the ideal case, which verifies Theorem 8. Here, the computational error,

, is randomly selected from

. From

Figure 3, we know that in the error case, the function value fluctuates but does not change significantly, which indicates that the WRPGA(co) with errors is weakly stable.

Now, we consider the stability of the special AWRPGA

with

and

for all

, that is, the RPGA with computational error

. We will illustrate Corollary 3 with the following numerical experiment on regression learning. One can also refer to [

8] for more details about the Rescaled Pure Greedy Algorithms in learning problems.

Let

,

. We uniformly select 500 sample points from this function. Set

. Let

be a set of 200 equally spaced points in

. We choose

as a dictionary. We seek to approximate

by using the RPGA based on the sampling points

. In the implementation of the algorithm, Gaussian noise from

with

is allowed. The function values with noise are used as inputs to the regression. To measure the performance of the algorithm, we use the mean square error (MSE) of

f on the unlabeled samples

, which is defined as

To make the performance of the RPGA clear, we also discuss the performance of the OGA. Moreover, we add computational inaccuracies in the implementation of the RPGA. By repeating the test 100 times, we obtain the following

Table 1 and

Figure 4.
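The error measure used in this experiment can be sketched as follows. This is a minimal illustration assuming the usual definition of the mean square error as the average squared difference between the target function and the approximant over the test points. The name `mean_square_error` and the sample values are hypothetical.

```python
def mean_square_error(target, estimate, points):
    """Average of (target(t) - estimate(t))^2 over the given test points."""
    return sum((target(t) - estimate(t)) ** 2 for t in points) / len(points)


# Toy check: the zero function as an estimate of the identity on three points.
mse = mean_square_error(lambda t: t, lambda t: 0.0, [1.0, 2.0, 3.0])
```

In the toy check, the squared differences are 1, 4, and 9, so the value is 14/3.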

Figure 4 demonstrates that the OGA, on both noisy and noiseless data, outperforms the RPGA with computational error

in the regression learning problem. However, the running times in Table 1 show that the RPGA runs faster than the OGA even in the noisy case. This is a consequence of the design of the two algorithms: the OGA updates the approximant by solving an m-term optimization problem, whereas the RPGA only needs to solve a one-dimensional optimization problem.
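
The difference in per-step cost can be made concrete with a small sketch. It is not the implementation used in the experiment: the target function, the Gaussian-bump dictionary, the bump width, the noise level, and all function names below are hypothetical choices made for illustration, and the RPGA is written in its standard Hilbert-space form without computational error. The OGA step projects onto the span of all selected atoms (here via Gram-Schmidt), while the RPGA step only computes one scalar rescaling.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def select_atom(residual, atoms):
    """Index of the atom with the largest |<residual, atom>|."""
    return max(range(len(atoms)), key=lambda j: abs(dot(residual, atoms[j])))


def rpga(f, atoms, steps):
    """RPGA sketch: a pure greedy step followed by a one-dimensional rescaling."""
    approx = [0.0] * len(f)
    for _ in range(steps):
        residual = [a - b for a, b in zip(f, approx)]
        g = atoms[select_atom(residual, atoms)]
        c = dot(residual, g)
        candidate = [a + c * b for a, b in zip(approx, g)]
        norm_sq = dot(candidate, candidate)
        if norm_sq == 0.0:
            break
        s = dot(f, candidate) / norm_sq  # best scalar multiple of candidate
        approx = [s * a for a in candidate]
    return approx


def oga(f, atoms, steps):
    """OGA sketch: project f onto the span of all atoms selected so far."""
    basis = []  # orthonormal basis of that span, built by Gram-Schmidt
    approx = [0.0] * len(f)
    for _ in range(steps):
        residual = [a - b for a, b in zip(f, approx)]
        g = list(atoms[select_atom(residual, atoms)])
        for q in basis:
            c = dot(g, q)
            g = [a - c * b for a, b in zip(g, q)]
        norm = math.sqrt(dot(g, g))
        if norm < 1e-10:  # selected atom adds nothing new to the span
            break
        q = [a / norm for a in g]
        basis.append(q)
        c = dot(f, q)
        approx = [a + c * b for a, b in zip(approx, q)]
    return approx


def mse(u, v):
    """Mean square error between two sample vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


# Hypothetical setup: 500 samples, 200 normalized Gaussian bumps as atoms.
random.seed(0)
xs = [i / 499 for i in range(500)]
centers = [j / 199 for j in range(200)]
clean = [math.sin(2 * math.pi * x) + 0.5 * math.cos(6 * math.pi * x) for x in xs]
noisy = [v + random.gauss(0.0, 0.1) for v in clean]

atoms = []
for center in centers:
    bump = [math.exp(-((x - center) ** 2) / (2 * 0.05 ** 2)) for x in xs]
    bump_norm = math.sqrt(dot(bump, bump))
    atoms.append([b / bump_norm for b in bump])

fits = {name: algo(noisy, atoms, 10) for name, algo in (("RPGA", rpga), ("OGA", oga))}
for name, fit in fits.items():
    print(name, "MSE against the clean samples:", mse(fit, clean))
```

In this sketch the work of the OGA update grows with the number of atoms already selected, whereas the RPGA update costs the same at every step, which matches the qualitative behaviour of the running times discussed above.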

The above two numerical examples can also be solved by the Least Absolute Shrinkage and Selection Operator (LASSO). The LASSO introduces sparsity through $\ell_1$-regularization; see [33]. When the design matrix X satisfies certain conditions and the regularization parameter is appropriately chosen, the solution is guaranteed to be unique. However, its robustness to noise in practice depends critically on the proper selection of this parameter. In contrast, greedy algorithms (e.g., the OGA) iteratively build sparse solutions with low per-step complexity. While they converge quickly in low-noise scenarios, their inability to revise earlier decisions makes them prone to error accumulation and less robust to noise than the LASSO; see [34,35].
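
To illustrate how the regularization parameter controls sparsity in the LASSO, the following minimal sketch solves the problem by coordinate descent with soft-thresholding. It is an illustration only and not taken from [33]: the objective scaling (one half of the squared residual norm plus the parameter times the $\ell_1$-norm), the names `soft_threshold`, `lasso_cd`, and `lam`, and the toy data are all assumptions.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values with |z| <= lam become exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_cd(columns, y, lam, sweeps=100):
    """Coordinate descent for min_w 0.5 * ||y - X w||^2 + lam * ||w||_1.

    `columns` holds the columns of the design matrix X.
    """
    w = [0.0] * len(columns)
    residual = list(y)  # equals y - X w for the current w
    for _ in range(sweeps):
        for j, col in enumerate(columns):
            z = sum(a * a for a in col)
            if z == 0.0:
                continue
            # Correlation of column j with the residual that ignores w[j].
            rho = sum(a * r for a, r in zip(col, residual)) + z * w[j]
            new_w = soft_threshold(rho, lam) / z
            delta = new_w - w[j]
            if delta != 0.0:
                residual = [r - delta * a for r, a in zip(residual, col)]
                w[j] = new_w
    return w


# Hypothetical toy data with orthonormal columns.
columns = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
y = [3.0, 0.5, -0.2, 0.0]
for lam in (0.1, 1.0):
    w = lasso_cd(columns, y, lam)
    print(lam, w, "nonzeros:", sum(1 for v in w if v != 0.0))
```

A larger value of `lam` sets more coefficients exactly to zero, which is why the quality of the LASSO solution under noise hinges on this choice, whereas a greedy algorithm controls sparsity directly through the number of iterations.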

5. Conclusions and Discussions

It is well known that the Weak Rescaled Pure Greedy Algorithm for convex optimization (WRPGA(co)) is simpler than other greedy-type convex optimization algorithms, such as the Weak Chebyshev Greedy Algorithm (WCGA(co)), the Weak Greedy Algorithm with Free Relaxation (WGAFR(co)), and the Rescaled Weak Relaxed Greedy Algorithm (RWRGA(co)), while having the same approximation properties. The rate of convergence of all these algorithms is of the optimal order

, which is independent of the spatial dimension; see [10,17,21]. In this article, we study the stability of the WRPGA(co) in real Banach spaces and obtain the corresponding convergence rates in the presence of noise and error. We use the condition that

has power type

instead of the US condition since the US condition of E on

is restrictive and requires additional conditions to ensure that the US condition still holds for the sequence generated by the WRPGA(co). Our results show that the WRPGA(co) is stable in the sense that its rate of convergence is essentially unchanged, which demonstrates that the WRPGA(co) maintains desirable properties even in imperfect computation scenarios. Moreover, we apply the WRPGA(co) with error to the problem of m-term approximation and derive the optimal convergence rate. This indicates that the WRPGA(co) is flexible and can be widely utilized in applied mathematics and signal processing. The numerical simulation results agree with our theoretical results. The stability of the WRPGA(co) under noise and error is crucial for practical implementation: it makes the results predictable and consistent when the algorithm is run repeatedly. This advantage, together with the advantages in computational complexity and convergence rate, indicates that the WRPGA(co) is efficient in solving high-dimensional and even infinite-dimensional convex optimization problems.

In summary, the novelty of our work is as follows:

Under weaker conditions, , we show that the WRPGA(co) with noise has the same convergence rate as that of the WRPGA(co) without noise.

We introduce and study a new algorithm—the Approximate Weak Rescaled Pure Greedy Algorithm for convex optimization (AWRPGA(co)). We show that the AWRPGA(co) has almost the same convergence rate as that of the WRPGA(co).

We apply, for the first time, the WRPGA(co) with error to m-term approximation problems in Banach spaces. The convergence rate we obtained is optimal.

We compare the efficiency of the WRPGA(co) with that of the WCGA(co) through numerical experiments. The results show that the WCGA(co) has better approximation performance but higher computational cost than the WRPGA(co).

Next, we discuss some future work. The introduction of the weakness sequence

,

, into the algorithm allows for controlled relaxation in selecting elements from the dictionary, which increases the flexibility of the algorithm. Observe that when

for all

, the algorithm can achieve the optimal error, which is sharp as a general result. However, the supremum in the greedy condition (2) may not be attained. Our results provide the same rate of convergence for the weak versions (in the case

) as for the strong versions (

) of the algorithms. Thus, taking

may be a good choice. However, there is no general answer to the question of how to choose the optimal

t, which depends on the specific convex optimization problem. Recently, some researchers have used strategies such as exploiting the a posteriori information gained from previous iterations of the algorithm to improve its convergence rate; see [36,37]. This suggests using the same strategy to improve our results. In addition, it is known from [26,38] that research on the WCGA and the WGAFR for m-term approximation in real Banach spaces has been extended to complex Banach spaces. Thus, it would be interesting to extend our study to the setting of complex Banach spaces.
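
The role of the weakness parameter can be illustrated by a simplified sketch. It does not reproduce the greedy condition (2) of the Banach-space setting: it assumes a finite dictionary in Euclidean space and lets a residual vector play the role of the negative derivative of the objective. The function name `admissible_atoms` and the data are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def admissible_atoms(residual, atoms, t):
    """Indices j with |<residual, atoms[j]>| >= t * max_k |<residual, atoms[k]>|."""
    scores = [abs(dot(residual, g)) for g in atoms]
    threshold = t * max(scores)
    return [j for j, s in enumerate(scores) if s >= threshold]


# Hypothetical data: three unit-norm atoms in the plane.
atoms = [[1.0, 0.0], [0.0, 1.0], [0.8, 0.6]]
residual = [1.0, 0.0]
for t in (1.0, 0.5):
    print("t =", t, "admissible atoms:", admissible_atoms(residual, atoms, t))
```

With the weakness parameter equal to 1, only maximizers are admissible, which requires the supremum to be attained. A smaller value enlarges the admissible set, so any atom that is good enough may be selected, at the price of a smaller guaranteed decrease per step.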

In summary, we will concentrate on the following problems in our future work:

For a given convex optimization problem, we will explore the optimal selection of the parameter t.

In the implementation of the WRPGA(co), we will take the a posteriori information into account to further improve our convergence rates and numerical results.

We will investigate the performance of greedy-type algorithms in complex Banach spaces for m-term approximation or convex optimization.