1. Introduction

Solving nonlinear equations of the form $f(x) = 0$, where $f: I \subseteq \mathbb{R} \to \mathbb{R}$ is a continuously differentiable function defined on an open interval I, is a fundamental yet challenging problem encountered across many disciplines of science and engineering. In many practical applications, such equations do not admit closed-form analytical solutions, which necessitates iterative numerical techniques that approximate the roots to a desired accuracy.

Among these techniques, Newton’s method [1] remains one of the most widely used because of its quadratic convergence and simplicity, particularly when the function f and its derivative f′ are easy to compute. Newton’s method is a classical iterative technique that starts with an initial approximation $x_0$ and generates a sequence $\{x_n\}$ from the following relation:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \qquad n = 0, 1, 2, \ldots \tag{1}$$
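To make iteration (1) concrete, here is a minimal Python sketch of Newton’s method. The function name `newton`, the step-size stopping rule, the tolerance and the test equation $x^2 - 2 = 0$ are illustrative assumptions, not taken from the paper.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton's method (1): x_{n+1} = x_n - f(x_n) / f'(x_n).

    Returns (approximate root, iterations used). Stops when two consecutive
    iterates differ by less than tol (an assumed stopping rule).
    """
    x = x0
    for n in range(1, max_iter + 1):
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("f'(x_n) = 0: the Newton step is undefined")
        x_new = x - f(x) / dfx
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    return x, max_iter


# Illustrative equation (not from the paper): x^2 - 2 = 0, whose positive root is sqrt(2).
root, iters = newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0)
print(root, iters)
```

Each step costs one evaluation of f and one of f′, which is why Newton’s method is counted as using two function evaluations per iteration.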

Multipoint methods overcome the theoretical limits of one-point methods such as Newton’s method (1) with respect to the order of convergence and informational and computational efficiency [2]. Thus, they are of greater practical importance than one-point methods. A comprehensive study of multipoint iterative methods for simple roots can be found in [2,3,4]. To accelerate the convergence of Newton’s method, many third-order two-point methods, requiring evaluations of either the first derivative or the first and second derivatives, have also been proposed (see [1,2,5,6,7,8,9]).

Kung and Traub [10] conjectured that a multipoint method without memory requiring n function evaluations per iteration can attain an order of convergence of at most $2^{n-1}$; methods that attain this bound are called optimal. Guided by this conjecture, several optimal fourth- and eighth-order methods have been derived and investigated in recent years (see, for example, [4,11,12,13,14,15,16,17,18,19,20]).

The efficiency index of an iterative scheme (IS) is computed using the well-known formula due to Ostrowski [1]:

$$E = p^{1/m}, \tag{2}$$

where p is the order of convergence of the IS and m is the total number of function evaluations used by the scheme per iteration. For instance, Newton’s method uses two evaluations (f and f′) per iteration, so its efficiency index is $2^{1/2} \approx 1.414$. An optimal fourth-order scheme, such as the well-known King method [21], has an efficiency index of $4^{1/3} \approx 1.587$. The new method designed in this paper has a higher computational efficiency, namely $3^{1/2} \approx 1.732$.

The main motivation of this work is to improve the convergence order and efficiency of the well-known King–Werner method [22] by introducing cubic interpolation in its second step. This enhancement raises the order of convergence from $1+\sqrt{2} \approx 2.414$ to 3 and the efficiency index from $(1+\sqrt{2})^{1/2} \approx 1.554$ to $3^{1/2} \approx 1.732$, exceeding the efficiency indices of optimal fourth- and eighth-order schemes. Our aim is to present a more effective and computationally competitive alternative iterative scheme for solving nonlinear equations.

To this end, we propose a novel third-order multipoint iterative method and analyze its convergence as well as its complex dynamics. The method is validated through several numerical and graphical experiments, which demonstrate both its robustness and its superior performance. In terms of the efficiency index (2), the iteration method derived in this paper is more efficient than the optimal methods described above. Moreover, higher-order convergent techniques free from derivative evaluations, such as the schemes presented in [23,24], are of particular significance, since many nonlinear equations involve non-differentiable terms.

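We consider the derivative-free King–Werner method [22]:

$$x_{n+1} = x_n - \frac{f(x_n)}{[x_n, y_n; f]}, \qquad y_{n+1} = x_{n+1} - \frac{f(x_{n+1})}{[x_n, y_n; f]}, \qquad n = 0, 1, 2, \ldots, \tag{3}$$

where $x_0$ and $y_0$ are initial points and $[x, y; f] = \dfrac{f(x) - f(y)}{x - y}$ is the first-order divided difference. We improve the convergence order of the King–Werner method (3) from $1+\sqrt{2}$ to 3 under the same hypotheses.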
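The following Python sketch implements the derivative-free King–Werner iteration, assuming the form $x_{n+1} = x_n - f(x_n)/[x_n, y_n; f]$, $y_{n+1} = x_{n+1} - f(x_{n+1})/[x_n, y_n; f]$, with the next divided difference built from $x_{n+1}$ and $y_{n+1}$. It is an illustrative sketch, not the authors’ code; the name `king_werner`, the tolerance and the stopping rules are assumptions. Each pass costs two new function evaluations, $f(x_{n+1})$ and $f(y_{n+1})$.

```python
def king_werner(f, x0, y0, tol=1e-12, max_iter=50):
    """Derivative-free King-Werner iteration; returns (approximate root, iterations)."""
    if x0 == y0:
        raise ValueError("x0 and y0 must differ")
    x, y = x0, y0
    fx, fy = f(x), f(y)
    for n in range(1, max_iter + 1):
        if fx == fy:
            # [x_n, y_n; f] is zero in floating point. Near convergence this means both
            # points already agree with the root to machine precision; for poor starting
            # points it signals failure instead.
            return x, n - 1
        dd = (fx - fy) / (x - y)        # first-order divided difference [x_n, y_n; f]
        x_new = x - fx / dd             # first substep
        fx_new = f(x_new)               # evaluation 1 of 2 in this iteration
        y_new = x_new - fx_new / dd     # second substep reuses the same divided difference
        if fx_new == 0 or y_new == x_new or abs(x_new - x) < tol:
            return x_new, n
        x, y = x_new, y_new
        fx, fy = fx_new, f(y_new)       # evaluation 2 of 2
    return x, max_iter


# Illustrative equation (not from the paper): x^2 - 2 = 0 with starting points 1 and 2.
root, iters = king_werner(lambda x: x * x - 2, 1.0, 2.0)
print(root, iters)
```

Reusing one divided difference in both substeps is what keeps the cost at two evaluations per iteration while lifting the order above that of the secant method.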

The contents of the paper are organized as follows. The development of the new method is presented in Section 2. In Section 3, the convergence analysis of the new method is investigated. In Section 4, numerical examples, including some real-world applications, are used to verify the theoretical results and to compare the new method with some existing methods. Section 5 presents the complex dynamics and stability analysis of the new method, compared with some existing well-known methods, using basins of attraction. Finally, Section 6 summarizes the key findings.

2. The Method

We define a two-step iterative scheme given by:

where C is the interpolating cubic curve given by

satisfying the following conditions:

Now, applying the conditions (6) to R(t), we express the coefficients b, c and d in terms of the coefficient a:

where,

Simple calculations yield:

Hence, substituting Equation (10) into the iteration scheme (4) yields:

The iterative scheme (11) includes a free parameter a, which plays a crucial role in the behavior and performance of the proposed method. By varying the value of a, different iterative methods can be derived from the same general formulation. This flexibility allows the method to be tuned for favorable convergence characteristics under different problem conditions. In this manuscript, however, we focus on a specific case obtained by fixing the value of a, namely

. The convergence analysis of the iteration scheme (11) is investigated in the next section.

4. Numerical Results and Comparison

This section evaluates the efficacy and validity of the proposed method (11) using the Mathematica 11 programming environment with arbitrary-precision arithmetic. For the sake of comparison, we consider the second-order methods of Newton [25] and Steffensen [26]; the third-order methods of Özban [8], Weerakoon–Fernando [9], Halley [7] and Ostrowski [1]; and the King–Werner method (3). These methods are given as follows:

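Newton’s method:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}.$$

Steffensen’s method:

$$x_{n+1} = x_n - \frac{f(x_n)^2}{f\big(x_n + f(x_n)\big) - f(x_n)}.$$

Özban’s method:

Weerakoon–Fernando’s method:

$$y_n = x_n - \frac{f(x_n)}{f'(x_n)}, \qquad x_{n+1} = x_n - \frac{2 f(x_n)}{f'(x_n) + f'(y_n)}.$$

Halley’s method:

$$x_{n+1} = x_n - \frac{2 f(x_n) f'(x_n)}{2 f'(x_n)^2 - f(x_n) f''(x_n)}.$$

Ostrowski’s method: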
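Two of the comparison methods, Steffensen’s and Halley’s, can be sketched in Python as follows. The formulas are the standard textbook forms; the function names, tolerances and the illustrative equation $x^2 - 2 = 0$ are assumptions. The residual check `ftol` is added only so that the loops stop cleanly once $f(x_n)$ reaches rounding level, where Steffensen’s difference quotient $f(x_n + f(x_n)) - f(x_n)$ would degenerate.

```python
def steffensen(f, x0, tol=1e-12, ftol=1e-14, max_iter=100):
    """Steffensen's method: x_{n+1} = x_n - f(x_n)^2 / (f(x_n + f(x_n)) - f(x_n))."""
    x = x0
    for n in range(1, max_iter + 1):
        fx = f(x)
        if abs(fx) <= ftol:              # residual at rounding level: stop
            return x, n - 1
        x_new = x - fx * fx / (f(x + fx) - fx)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    return x, max_iter


def halley(f, df, d2f, x0, tol=1e-12, ftol=1e-14, max_iter=100):
    """Halley's method: x_{n+1} = x_n - 2 f f' / (2 f'^2 - f f'')."""
    x = x0
    for n in range(1, max_iter + 1):
        fx, dfx = f(x), df(x)
        if abs(fx) <= ftol:
            return x, n - 1
        x_new = x - 2 * fx * dfx / (2 * dfx * dfx - fx * d2f(x))
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    return x, max_iter


def f(x):
    """Illustrative test function (not from the paper)."""
    return x * x - 2


r_s, n_s = steffensen(f, 1.5)
r_h, n_h = halley(f, lambda x: 2 * x, lambda x: 2.0, 1.5)
print(r_s, n_s, r_h, n_h)
```

Steffensen’s method needs only two evaluations of f per step and no derivatives, whereas Halley’s method needs f, f′ and f″ (three evaluations) to reach third order.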

Numerical results displayed in Tables 1–4 include the number of required iterations n, the absolute error between two consecutive iterations, $|x_n - x_{n-1}|$

, for the first three iterative steps (where

denotes

), the approximated computational order of convergence (ACOC), and the CPU time (s) elapsed during execution, measured with the Mathematica command TimeUsed[]. The ACOC is calculated using the following expression [27]:

$$\text{ACOC} \approx \frac{\ln\left(|x_{n+1} - x_n| \,/\, |x_n - x_{n-1}|\right)}{\ln\left(|x_n - x_{n-1}| \,/\, |x_{n-1} - x_{n-2}|\right)},$$

based on the last four iterative approximations of the required root. The number of required iterations n is chosen such that it satisfies the stopping criterion

. For numerical tests, we consider the following four examples, the first three of which arise from real-world applications.
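The ACOC formula can be checked with a short Python sketch. Meaningful ACOC values need many correct digits, so the sketch uses the standard-library `decimal` module as a stand-in for Mathematica’s arbitrary-precision arithmetic. The Newton iterates for $x^2 - 2 = 0$, the starting point and the 100-digit precision are illustrative assumptions; for Newton’s method the result should come out close to 2.

```python
from decimal import Decimal, localcontext


def acoc(iterates):
    """ACOC from the last four iterates x_{n-2}, x_{n-1}, x_n, x_{n+1} (Decimal values)."""
    if len(iterates) < 4:
        raise ValueError("ACOC needs at least four iterates")
    a, b, c, d = iterates[-4:]
    return (abs(d - c) / abs(c - b)).ln() / (abs(c - b) / abs(b - a)).ln()


def newton_iterates(x0, steps):
    """Newton iterates for the illustrative equation x^2 - 2 = 0 (not from the paper)."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - (x * x - 2) / (2 * x))
    return xs


with localcontext() as ctx:
    ctx.prec = 100                      # 100 significant digits for all Decimal operations
    xs = newton_iterates(Decimal(1), 6)
    rho = acoc(xs)

print(float(rho))
```

In double precision the same computation breaks down after a few iterations, because the differences between iterates fall below machine epsilon; this is why high-precision arithmetic is used for the tables.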

Example 1. Kepler’s equation

First, we analyze Kepler’s equation, given as follows [28]:

where

and

. For different values of

K and

, a numerical investigation is presented in [28]. For

and

, the solution of (33) is

. Numerical comparisons of different methods for this problem are shown in Table 1, which demonstrates that the proposed method (11) achieves the desired accuracy in four iterations, fewer than any of the other methods, and that its ACOC confirms the theoretical convergence order of 3. All the other third-order methods require 5 iterations and attain lower accuracy than the proposed method. This indicates the improved efficiency and reduced computational cost of the new method.
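As a rough illustration of the kind of computation behind this example, the Python sketch below solves Kepler’s equation with Newton’s method (1), one of the comparison methods. It assumes the standard form $E - e \sin E = K$ with eccentricity e and mean anomaly K; the values e = 0.9 and K = 0.5 and the starting point $E_0 = \pi$ are hypothetical choices and are not the values used for Table 1.

```python
import math


def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton's method (1) with an assumed step-size stopping rule."""
    x = x0
    for n in range(1, max_iter + 1):
        x_new = x - f(x) / df(x)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    return x, max_iter


# Hypothetical orbit parameters (illustration only, not the values of Example 1).
e, K = 0.9, 0.5


def kepler(E):
    """Kepler's equation written as f(E) = E - e sin E - K = 0."""
    return E - e * math.sin(E) - K


def kepler_prime(E):
    """f'(E) = 1 - e cos E, which stays positive for 0 <= e < 1."""
    return 1 - e * math.cos(E)


E, iters = newton(kepler, kepler_prime, math.pi)
print(E, iters, kepler(E))
```

Since $f'(E) \geq 1 - e > 0$, every Newton step is well defined here; for eccentricities close to 1, however, f′ becomes small near E = 0, which is where derivative-free schemes are attractive.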

Example 2. Isentropic supersonic flow

Consider isentropic supersonic flow around a sharp expansion corner. The relationship between the Mach number before the corner

and after the corner

, as given by Hoffman [29], is as follows:

where

and

denotes the specific heat ratio of the gas. For

and

, Equation (34) is solved for

as a particular case, as follows:

where

A solution of the above equation is

. The numerical comparison of several methods for this problem is shown in

Table 2 for an initial guess

Again, the proposed method (11) achieves convergence in 5 iterations, surpassing the other methods in accuracy and speed. The higher ACOC and significantly lower absolute errors of the proposed method indicate more rapid convergence, making it particularly effective for nonlinear equations of the form (35).

Example 3. Population growth

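The law of population growth is given by [30]:

$$\frac{dN(t)}{dt} = \lambda N(t) + v, \tag{36}$$

where $\lambda$ is the constant birth rate of the population, $N(t)$ is the population at time t and v is the rate of immigration. If $N_0$ is the initial population, then Equation (36) has the following solution:

$$N(t) = N_0 e^{\lambda t} + \frac{v}{\lambda}\left(e^{\lambda t} - 1\right).$$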

As a particular problem, suppose that a certain population initially contains 1,000,000 individuals, that 435,000 individuals immigrate into the community during the first year, and that 1,564,000 individuals are present at the end of one year. Then, to find the birth rate, we must solve the following equation:

$$1{,}564{,}000 = 1{,}000{,}000\, e^{\lambda} + \frac{435{,}000}{\lambda}\left(e^{\lambda} - 1\right),$$

wherein

. A solution to this problem is

. The numerical outcomes for this problem are presented in Table 3, which shows that the proposed method (11) outperforms the others, converging in 5 iterations with an ACOC of 3. The superior performance of the proposed method confirms its robustness and wide applicability, even in cases where other well-known methods fail.
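The birth-rate equation of this example can be solved with a derivative-free scheme in a few lines of Python. The sketch below uses a compact King–Werner iteration of the form $x_{n+1} = x_n - f(x_n)/[x_n, y_n; f]$, $y_{n+1} = x_{n+1} - f(x_{n+1})/[x_n, y_n; f]$ (not the proposed method (11), whose formula is not reproduced here). The population data come from the example; the starting points 0.05 and 0.15, the tolerance and the use of `math.expm1` for accuracy are assumptions.

```python
import math


def king_werner(f, x0, y0, tol=1e-12, max_iter=50):
    """Compact derivative-free King-Werner iteration; returns (approximate root, iterations)."""
    x, y = x0, y0
    fx, fy = f(x), f(y)
    for n in range(1, max_iter + 1):
        if fx == fy:                    # divided difference vanished in floating point
            return x, n - 1
        dd = (fx - fy) / (x - y)        # [x_n, y_n; f]
        x_new = x - fx / dd
        fx_new = f(x_new)
        y_new = x_new - fx_new / dd
        if fx_new == 0 or y_new == x_new or abs(x_new - x) < tol:
            return x_new, n
        x, y, fx, fy = x_new, y_new, fx_new, f(y_new)
    return x, max_iter


N0, v, N1 = 1_000_000, 435_000, 1_564_000   # data of Example 3, with t = 1 year


def growth(lam):
    """N(1) - N1 for N(t) = N0 e^{lam t} + (v / lam)(e^{lam t} - 1) evaluated at t = 1."""
    return N0 * math.exp(lam) + v * math.expm1(lam) / lam - N1


lam, iters = king_werner(growth, 0.05, 0.15)
print(lam, iters)
```

The starting points bracket the root only for convenience; the King–Werner iteration itself does not require a bracket.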

Example 4. Transcendental-Polynomial Piecewise Function

Let us consider a nonlinear function as follows:

The function above has three zeros,

, where the root

is a multiple root of multiplicity 3. The simple zero at

is our desired root. The corresponding numerical outcomes are presented in Table 4, which shows that the proposed method (11) converges in only 4 iterations, outperforming all the other methods in both speed and accuracy.

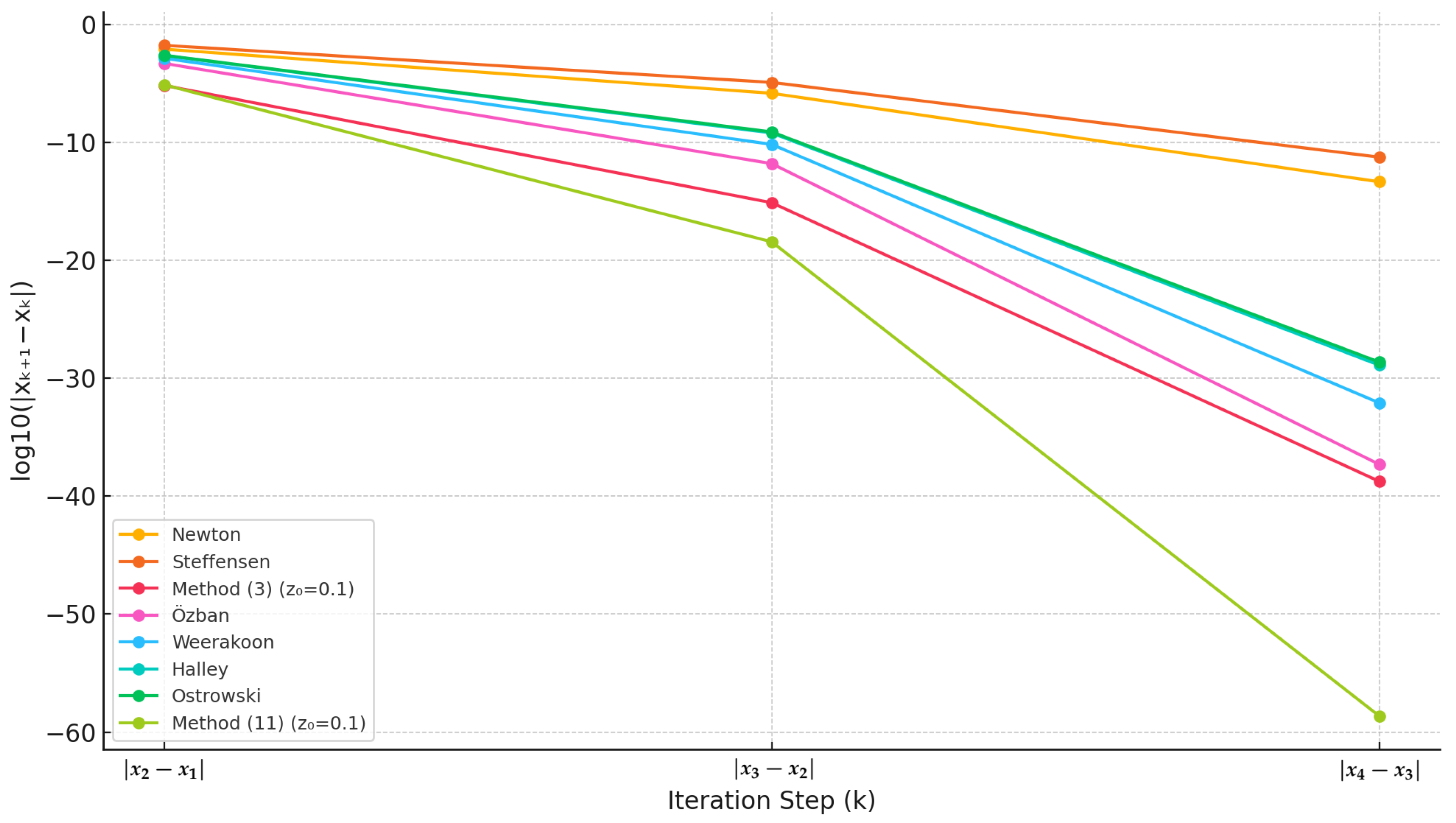

Figure 1 presents a graphical representation of the numerical results shown in Table 1. It is evident from this figure that the proposed method (11) exhibits better convergence behavior than the existing methods. Specifically, method (11) reaches the desired root in fewer iterations, indicating faster convergence and higher computational efficiency. This confirms the advantage of the new method in solving nonlinear equations.

It is observed from the numerical results that, for each test problem, the ACOC of the proposed method (11) consistently confirms the theoretical convergence order. Furthermore, our method attains better accuracy than the existing methods while using only two function evaluations per iteration. These results confirm the practical advantage of the proposed method (11) over existing methods in terms of both convergence speed and accuracy.

Comparing the efficiency indices of the different methods using (2) offers additional insight into their computational efficiency. The efficiency indices of the different methods are computed as follows:

We observe that the new method (11) is more efficient than the King–Werner method (3) and the second- and third-order methods considered above. Moreover, its efficiency index, $3^{1/2} \approx 1.732$, is even higher than the efficiency indices of optimal fourth-order methods ($4^{1/3} \approx 1.587$) and optimal eighth-order methods ($8^{1/4} \approx 1.682$) [11,13,31,32,33].

5. Complex Dynamics and Comparison

This section compares the dynamics of several iteration schemes in terms of their basins of attraction on the nonlinear problems discussed in Section 4. Plotting the attractor basins, i.e., the regions of the complex plane from which an iteration converges to the required root, gives a better understanding of the behavior of root-finding techniques. Each iterative scheme produces its own distinct basins for the same nonlinear problem, which makes such plots a valuable tool for investigating root-finding techniques. The boundary between the basins of distinct zeros of f typically exhibits a complex fractal structure, and assigning a distinct hue to every basin yields visuals that showcase the effectiveness of iterative techniques. Stewart [34] in 2001 and Varona [35] in 2002 were the first to compare a few classical iterative techniques graphically in this way, and plotting the basins of attraction of root-finding algorithms has since become a common way to compare them. More recent studies of this type include, for example, [11,14,16,36]. All these papers compared various iterative techniques by plotting their attractor basins for simple polynomial functions of the form

, in the complex plane. In contrast, we examine the attractor basins of different methods by visualizing their phase portraits on the diverse nonlinear equations presented in Section 4.

To draw the attractor basins for a nonlinear function f, each point of a square I in the complex plane containing the required root is taken as an initial guess. Each point of I from which an iteration method converges to a simple root of f is painted with a color specific to that root (blue is reserved for failure). If, for an initial point, the method fails to converge to any of the roots within 25 iterations to the prescribed tolerance, that point is marked blue (indicating divergence or failure). Brighter shades within each basin represent initial points that converge more quickly, requiring fewer iterations.

A mathematical explanation to obtain basins of attraction for an iteration method is given as follows.

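Let $f: \mathbb{C} \to \mathbb{C}$ be a nonlinear function, and let $r_1, r_2, \ldots, r_k$ denote its simple roots, i.e., $f(r_j) = 0$ and $f'(r_j) \neq 0$. Consider an iterative method defined by $z_{n+1} = \Phi(z_n)$, $n = 0, 1, 2, \ldots$, to approximate the roots of f.

The resulting plot is a phase portrait, or basins of attraction, that visualizes the sets

$$\mathcal{B}(r_j) = \{\, z_0 \in I : z_n \to r_j \text{ as } n \to \infty \,\}, \qquad j = 1, \ldots, k,$$

highlighting the regions in the complex plane from which the iteration converges to each root, as well as the speed of convergence.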
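The classification described above can be sketched in plain Python. The sketch below applies an arbitrary iteration map to every point of an n × n grid over a square, records which root (if any) is reached within 25 iterations, and returns per-root counts, the number of non-convergent points (NCP) and the average number of iterations (AVGIT). It is a hypothetical illustration: the name `basin_stats`, the tolerance 1e-10, counting failures as 25 iterations in AVGIT, and the demonstration with Newton’s method on $z^2 - 1$ are all assumptions, not the paper’s setup.

```python
def basin_stats(step, roots, box, n, max_iter=25, tol=1e-10):
    """Classify each grid point of the square box x box by the root its iteration reaches."""
    lo, hi = box
    counts = {r: 0 for r in roots}
    ncp = 0                 # non-convergent points (painted blue in the portraits)
    total_iters = 0
    for i in range(n):
        for j in range(n):
            z = complex(lo + (hi - lo) * i / (n - 1), lo + (hi - lo) * j / (n - 1))
            hit = None
            for k in range(1, max_iter + 1):
                try:
                    z = step(z)
                except (ZeroDivisionError, OverflowError):
                    break   # the iteration map is undefined here: count as failure
                hit = next((r for r in roots if abs(z - r) < tol), None)
                if hit is not None:
                    break
            if hit is None:
                ncp += 1
                total_iters += max_iter   # assumption: failures count as max_iter steps
            else:
                counts[hit] += 1
                total_iters += k          # k = iterations needed (brighter shade if small)
    return counts, ncp, total_iters / (n * n)


# Demonstration: Newton's method on z^2 - 1 over the square [-2, 2] x [-2, 2].
counts, ncp, avgit = basin_stats(lambda z: z - (z * z - 1) / (2 * z),
                                 (1 + 0j, -1 + 0j), (-2.0, 2.0), 41)
print(counts, ncp, avgit)
```

For this demonstration the 41 grid points on the imaginary axis never converge, since Newton iterates started there stay on the axis, so NCP is 41. The iteration count k per point is what a plotting routine would map to color brightness.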

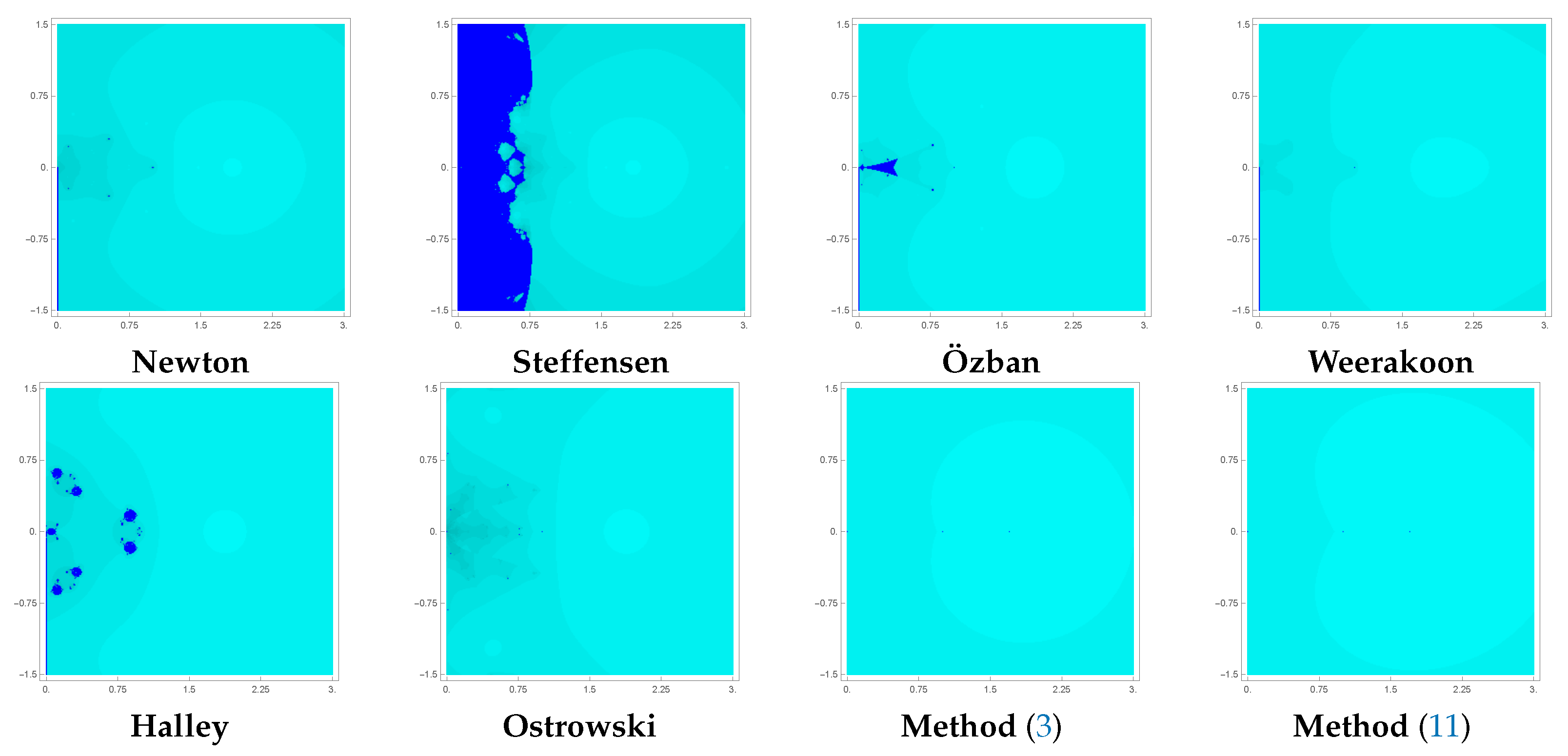

For the attractor basins of Kepler’s equation (33), which has a root at

, we chose a grid of

points in

. Owing to limited space, the root is reported with fewer significant digits. The color cyan is assigned to each point in

I for which the iteration scheme converges to the root

. The corresponding phase portraits of the basins of attraction are displayed in Figure 2. The proposed method (11) and the method (3) perform far better than the other classical methods in terms of both convergence speed and the extent of the convergence regions.

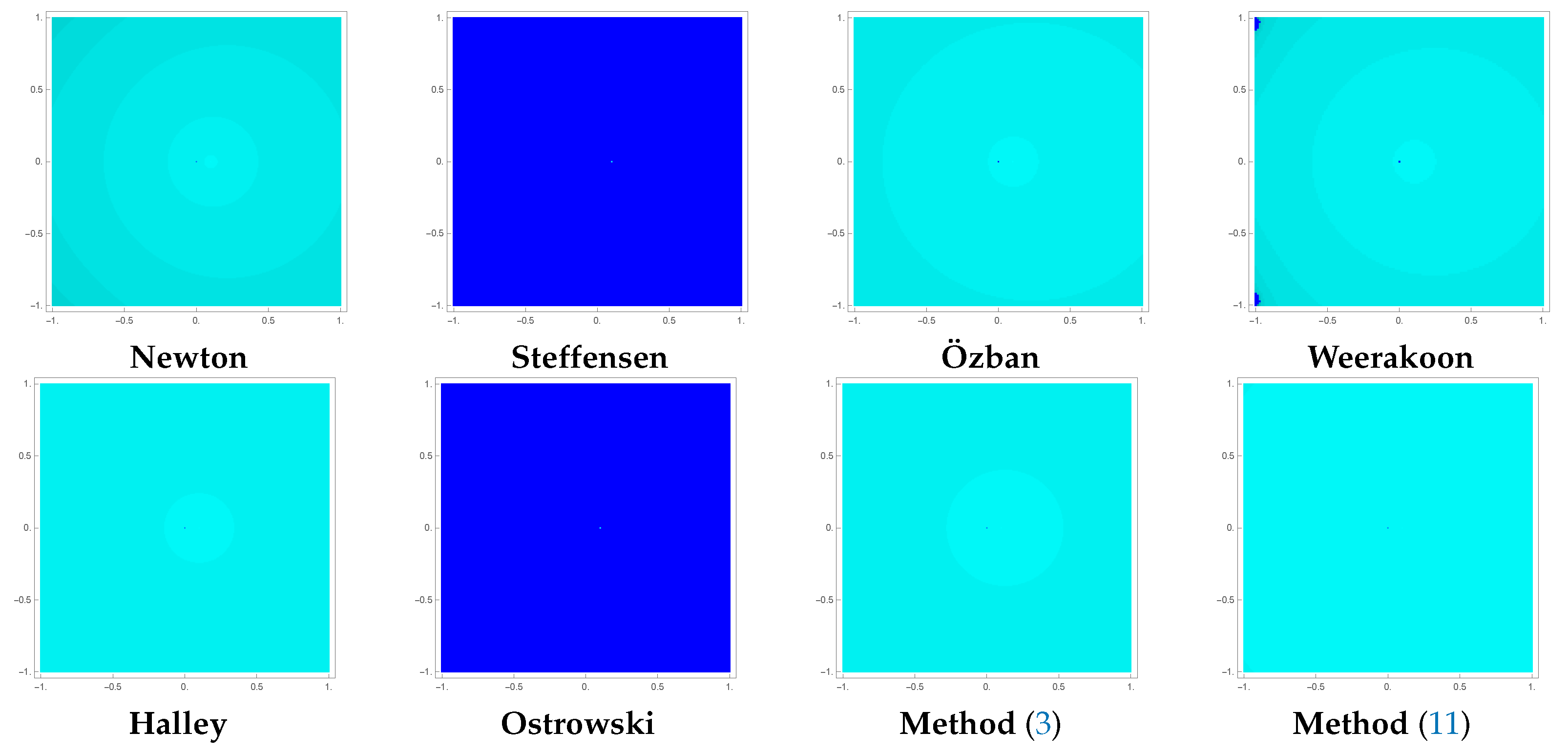

For the isentropic flow equation (35), which has a zero at

, we chose a grid of

points in

. As in the previous example, each point of the square I is painted cyan if a method started there converges to the root

. The corresponding basin portraits are shown in Figure 3, which exhibits the superior performance of the proposed method (11) in terms of convergence speed and convergence region. The proposed method has only two non-convergent points in the selected region.

We have taken a grid of

points in a square

to plot the phase portraits for the population growth equation (38), which has a zero at

. The color cyan is assigned to each point in

I for which an iterative scheme converges to this zero.

Figure 4 depicts the corresponding portraits, showing the worst behavior (divergence) for the methods of Steffensen (27) and Ostrowski (31), while our method (11) exhibits wider regions of convergence. In particular, the brighter basins of the proposed method illustrate its faster convergence compared with the other methods.

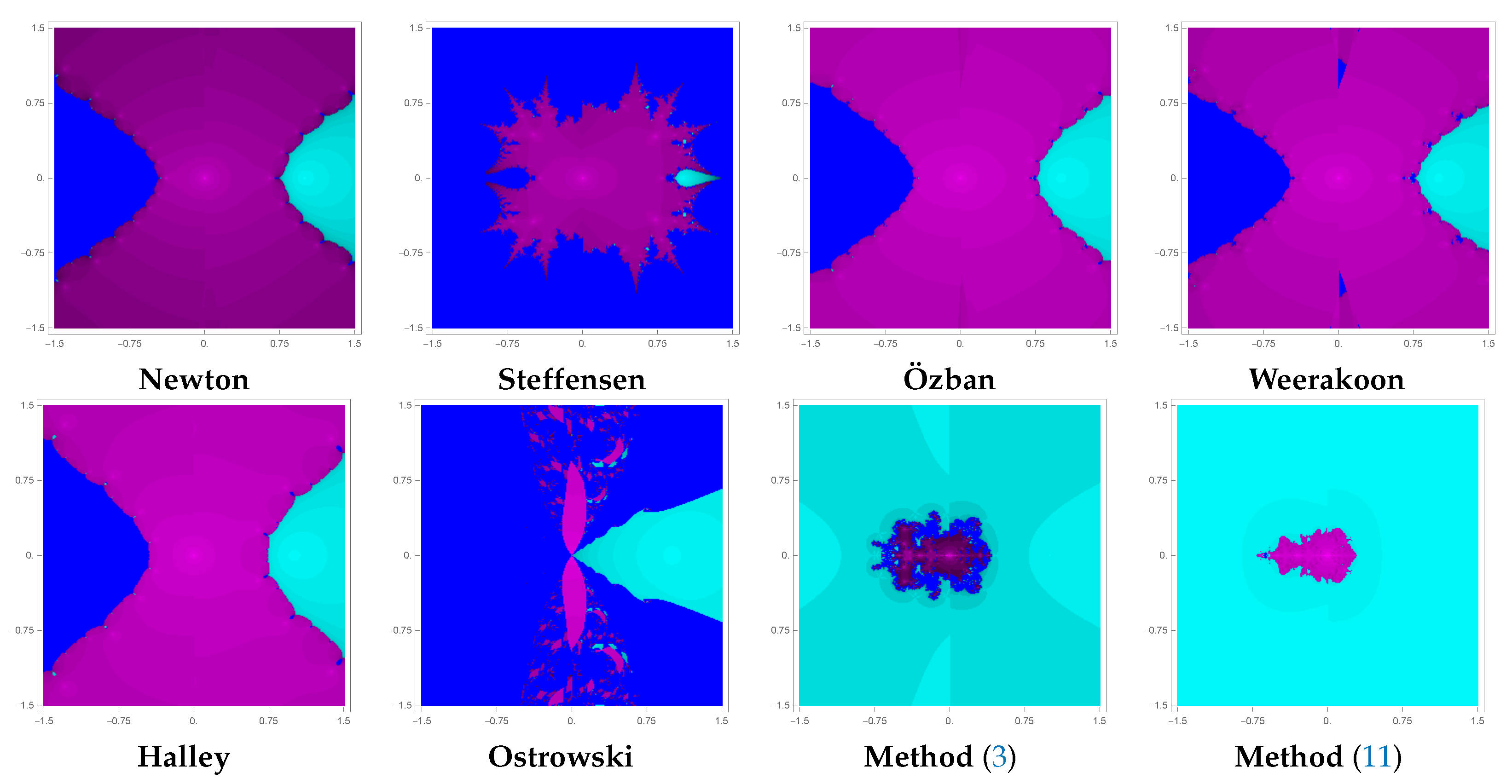

Figure 5 shows the basins of attraction for the piecewise function (39), for which a grid of

points is taken in the square

containing two of its roots: a multiple root at 0 and a simple root at 1. Points of the square I from which an iterative scheme converges to the roots 0 and 1 are colored magenta and cyan, respectively, and blue indicates that the method fails to converge to either zero.

Figure 5 shows that the methods of Steffensen, Ostrowski, Newton, Özban, Weerakoon–Fernando and Halley have smaller areas of convergence, while the proposed method (11) has wide regions of convergence for the desired simple root at 1.

Figures 2–5 depict the basins of attraction for the nonlinear problems (33), (35), (38) and (39), providing a visual representation of the convergence behavior of several iterative methods discussed in Section 4. The figures show that, in several instances, the proposed iterative scheme (11) exhibits significantly wider areas of convergence than the existing methods, indicating its robustness and efficiency in solving complex nonlinear problems. This implies that the proposed method can converge from a broader set of initial guesses, which is a key advantage in practical applications where selecting initial values can be challenging.

Tables 5–8 compare the different iteration schemes in terms of the average number of iterations (AVGIT), the number of non-convergent initial points (NCP) and the CPU time (e-time) needed to plot the basins for problems (33), (35), (38) and (39), respectively. The proposed method outperformed the other methods in all metrics, requiring less time and fewer iterations on average to plot the basin portraits of all functions. Moreover, the basins generated by the proposed method are brighter and wider, with fewer diverging points, than those of the existing iteration methods.

6. Conclusions

In this paper, we have introduced and analyzed a novel higher-order multipoint iteration method for solving nonlinear equations, which employs cubic interpolation to enhance the convergence of the King–Werner method [22]. The proposed approach increases the convergence order from $1+\sqrt{2}$ to 3 and improves the efficiency index from $(1+\sqrt{2})^{1/2} \approx 1.554$ to $3^{1/2} \approx 1.732$, surpassing the efficiency of even optimal fourth- and eighth-order methods [11,13,31,32,33]. Through extensive numerical and dynamical experiments, the method’s robustness and superiority have been validated using metrics such as absolute error, computational order of convergence, CPU time, and regions of convergence of attractor basins. The method was tested on real-world problems, including Kepler’s equation, isentropic supersonic flow, and the law of population growth, consistently outperforming existing well-known methods. The proposed method is derivative-free and therefore remains applicable even when $f'(x) \approx 0$ at or near the root. This gives it a significant advantage over Newton’s method, particularly for functions whose derivatives are unavailable or expensive to evaluate, or that have stationary points near the root. Because each iteration relies only on function evaluations rather than derivative evaluations, the method is broadly applicable.

Furthermore, the study extended the analysis of convergence and stability beyond traditional polynomial test functions to diverse nonlinear functions, providing a more comprehensive understanding of the method’s applicability. The proposed scheme generates basins of attraction faster and with wider convergence regions, highlighting its stability and efficiency. These results underscore the method’s potential as a reliable and high-performance tool for solving nonlinear equations in both theoretical and practical applications. The proposed method is designed for solving nonlinear equations in which the function is sufficiently differentiable. Its applicability to non-smooth or discontinuous functions is limited, since convergence cannot be guaranteed in such cases. Extending the method to handle such non-smooth functions is a subject for future research.