On Quasi-Monotone Stochastic Variational Inequalities with Applications

Abstract

1. Introduction

2. Preliminaries

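A mapping $F$ is said to be

- (i) strongly monotone if there exists a constant $\mu > 0$ such that $\langle F(x) - F(y), x - y \rangle \ge \mu \|x - y\|^2$ for all $x, y$;

- (ii) monotone if $\langle F(x) - F(y), x - y \rangle \ge 0$ for all $x, y$;

- (iii) pseudomonotone if, for all $x, y$, $\langle F(y), x - y \rangle \ge 0$ implies $\langle F(x), x - y \rangle \ge 0$;

- (iv) quasi-monotone if, for all $x, y$, $\langle F(y), x - y \rangle > 0$ implies $\langle F(x), x - y \rangle \ge 0$.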
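The four notions above are nested: each one implies the next, and quasi-monotonicity is strictly the weakest. The following minimal sketch checks this on a finite grid for the scalar map $F(x) = x^2$, a standard textbook example chosen here for illustration and not taken from the paper. The helper names are hypothetical, and a grid check is only a sanity test, not a proof.

```python
from itertools import product

def F(x):
    # Illustrative scalar map on the real line (an assumption for this sketch).
    return x * x

def is_pseudomonotone_on(grid, F):
    # <F(y), x - y> >= 0 must imply <F(x), x - y> >= 0.
    return all(F(x) * (x - y) >= 0
               for x, y in product(grid, repeat=2)
               if F(y) * (x - y) >= 0)

def is_quasimonotone_on(grid, F):
    # <F(y), x - y> > 0 must imply <F(x), x - y> >= 0.
    return all(F(x) * (x - y) >= 0
               for x, y in product(grid, repeat=2)
               if F(y) * (x - y) > 0)

grid = range(-5, 6)
print(is_quasimonotone_on(grid, F), is_pseudomonotone_on(grid, F))  # True False
```

The pseudomonotone check fails at $y = 0$, $x = -1$: the premise holds because $F(0) = 0$, yet $\langle F(x), x - y \rangle = -1 < 0$. The strict inequality in the quasi-monotone premise excludes exactly such pairs.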

- (i)

- and

- (ii)

- (iii)

- if and only if

- (iv)

- Let Then, for all strictly positive.

- (A) The solution set

- (B) (i) For all and almost everywhere is a measurable function such that for almost (ii) There exists and such that and

- (C) The mapping F is quasi-monotone.

- (D) Let and a positive sequence such that and Furthermore, as

3. Proposed Algorithm

- 1. In Step 1 of the proposed algorithm, we incorporate an inertial term, also known as a momentum term, inspired by Nesterov's acceleration and Polyak's heavy-ball method. Inertia can yield faster convergence, reduced variance, better stability on ill-conditioned problems, and improved practical performance. Without inertia, stochastic approximation (SA) can stall in flat regions or on plateaus caused by noise. In stochastic games, inertial SA often requires fewer iterations to reach a desired accuracy. These observations motivate its use in the algorithm, which in this respect improves on [5,21,23,25,27,28,30,39,41].

- 2. The algorithm is built on the subgradient extragradient (SEG) method, which requires only one projection onto the feasible set per iteration. It handles non-smooth problems and maintains feasibility of the iterates, a key requirement in constrained stochastic optimization problems. It is therefore preferable to algorithms that require two projections onto the feasible set per iteration, and it compares favorably with the works in [5,14,15,20,21,22,25,27,33,41,42].

- 3. Since SA-based algorithms are very sensitive to the stepsize, we use a self-adaptive stepsize that adjusts dynamically, ensuring robustness across problem scales and conditions. A self-adaptive stepsize in SA accelerates convergence, reduces sensitivity to noise, eliminates heavy manual tuning, ensures stability, and improves efficiency near the solution. This contrasts with Armijo-type linesearch methods, which are time-consuming and thereby degrade the performance of iterative algorithms (see, e.g., [4,13,14,15,17,21,31,33,40,41,42] and the references therein).

- 4. Real-world stochastic systems often have non-symmetric or partially monotone structures. This motivates the use of a quasi-monotone operator, a weaker assumption, so that the proposed scheme can handle nonlinear, asymmetric, or discontinuous mappings more realistically. Quasi-monotone operators also avoid the projection-correction or strong-regularization techniques required for non-monotone problems. Algorithm 1 therefore offers greater modeling flexibility, wider applicability, weaker assumptions for convergence, and lower computational cost than schemes that rely on the strong monotonicity, monotonicity, or pseudo-monotonicity assumptions commonly found in the literature. In this sense, our scheme improves on many existing results in this research direction.
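Remark 2 rests on the fact that the second projection in an SEG step is explicit. As a reminder, under the standard construction of Censor, Gibali, and Reich [28], the half-space and its projection can be written as below. The symbols $w_k$, $y_k$, $\lambda_k$, and $a_k$ are notation chosen here for illustration and are not necessarily those of Algorithm 1.

$$
y_k = P_X\bigl(w_k - \lambda_k F(w_k)\bigr), \qquad a_k = w_k - \lambda_k F(w_k) - y_k, \qquad T_k = \{\, v : \langle a_k, v - y_k \rangle \le 0 \,\},
$$

$$
P_{T_k}(z) = z - \frac{\max\{0, \langle a_k, z - y_k \rangle\}}{\|a_k\|^2}\, a_k \quad (a_k \ne 0), \qquad P_{T_k}(z) = z \quad (a_k = 0).
$$

By the characterization of the projection onto a closed convex set, $X \subseteq T_k$. Evaluating $P_{T_k}$ costs one inner product and one vector update, whereas $P_X$ may itself require solving an optimization problem.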

| Algorithm 1 Inertial Self-Adaptive Subgradient Extragradient Algorithm |
|---|
| Step 0: Select Take Take the sample rate with Set |
| Step 1: Given the current iterates construct the inertial term as follows: |
| Step 2: Draw an i.i.d. sample from and compute |
| Step 3: Consider a constructible set and calculate |
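The update formulas of Algorithm 1 are not reproduced in the text above, so the following is only a minimal sketch of a generic iteration with the same four ingredients: inertial extrapolation with a capped weight, a mini-batch oracle, one projection onto the feasible set followed by an explicit half-space projection, and a norm-ratio stepsize. Everything specific is an assumption made for illustration: the box feasible set $[0,1]^n$, the toy map $F(x,\xi) = x - c + \xi$ with Gaussian noise, the batch size $k+1$, the cap $1/(k+1)^2$, sharing one batch between both evaluations, and all function and parameter names.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def axpy(alpha, u, v):
    """Return alpha * u + v for list-based vectors."""
    return [alpha * a + b for a, b in zip(u, v)]

def project_box(z, lo=0.0, hi=1.0):
    """Projection onto the feasible set X = [lo, hi]^n (assumed for this sketch)."""
    return [min(max(t, lo), hi) for t in z]

def project_halfspace(z, a, y):
    """Projection onto T = {v : <a, v - y> <= 0}; explicit, no inner solver."""
    viol = dot(a, axpy(-1.0, y, z))  # <a, z - y>
    aa = dot(a, a)
    if aa == 0.0 or viol <= 0.0:
        return list(z)
    return axpy(-viol / aa, a, z)

def inertial_seg(c, x0, iters=200, lam=0.5, mu=0.5, theta=0.3, sigma=0.1, seed=0):
    rng = random.Random(seed)
    x_prev, x = list(x0), list(x0)
    for k in range(iters):
        # Step 1: inertial extrapolation with a capped weight (assumed cap).
        diff = axpy(-1.0, x_prev, x)  # x - x_prev
        d = norm(diff)
        theta_k = theta if d == 0.0 else min(theta, 1.0 / ((k + 1) ** 2 * d))
        w = axpy(theta_k, diff, x)

        # Step 2: one mini-batch of growing size, shared by both evaluations.
        batch = k + 1
        noise = [sum(rng.gauss(0.0, sigma) for _ in range(batch)) / batch for _ in c]

        def F_hat(v):
            # Averaged sample of the toy map F(v, xi) = v - c + xi.
            return [vi - ci + ni for vi, ci, ni in zip(v, c, noise)]

        Fw = F_hat(w)
        u = axpy(-lam, Fw, w)  # w - lam * F_hat(w)
        y = project_box(u)     # the only projection onto X

        # Step 3: explicit projection onto the half-space through y.
        Fy = F_hat(y)
        a = axpy(-1.0, y, u)   # normal vector u - y
        x_prev, x = x, project_halfspace(axpy(-lam, Fy, w), a, y)

        # Self-adaptive, non-increasing stepsize from a norm ratio.
        den = norm(axpy(-1.0, Fy, Fw))
        if den > 0.0:
            lam = min(lam, mu * norm(axpy(-1.0, y, w)) / den)
    return x, lam

c = [0.3, 0.7]  # the toy problem's solution, since F(c) = 0 in expectation
x_final, lam_final = inertial_seg(c, x0=[1.0, 0.0])
print(x_final, lam_final)
```

For this toy map the solution is $x^* = c$, so the distance of the returned iterate from `c` gives a direct accuracy check. Setting `sigma=0.0` recovers a deterministic inertial SEG run, which is convenient for debugging before noise is added.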

4. Convergence Analysis

4.1. Almost Sure Convergence

- 2. From Algorithm 1 and (7), it follows that for any given provided that This is one of the striking advantages of Algorithm 1: its execution requires merely a single projection onto the feasible set, which keeps the scheme efficient and smooth-running.

- 3. Let From Step 2 of Algorithm 1, we know that i.e., is the projection of onto Using the projection property, we obtain that where z is the point being projected, i.e., Using the definition of from the algorithm, we obtain that for all So,

- 4. In view of (5), we define and the oracle errors for all If for some then Indeed, assume that for some positive We know from Lemma 1 (i) that Noting that we immediately have that Indeed, for all defines a martingale difference, i.e., This follows from the fact that since and we obtain that Taking in (13) Therefore,
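The martingale-difference property of the oracle errors can be illustrated numerically. The sketch below assumes the simplest possible setting, which is not taken from the paper: a scalar oracle whose samples equal the true value plus independent $N(0, \sigma^2)$ noise, averaged over a mini-batch. Under that assumption the batch error has zero mean and variance $\sigma^2 / N$, which is the mechanism behind growing sample sizes. The function names are hypothetical.

```python
import random
import statistics

def batch_error(batch_size, sigma, rng):
    # Error of a mini-batch average when each sample is the true value
    # plus independent N(0, sigma^2) noise (an assumption of this sketch).
    return sum(rng.gauss(0.0, sigma) for _ in range(batch_size)) / batch_size

def error_stats(batch_size, sigma=1.0, trials=2000, seed=0):
    # Empirical mean and variance of the oracle error over repeated draws.
    rng = random.Random(seed)
    errs = [batch_error(batch_size, sigma, rng) for _ in range(trials)]
    return statistics.mean(errs), statistics.pvariance(errs)

for n in (1, 10, 100):
    mean, var = error_stats(n)
    print(n, round(mean, 4), round(var, 4))
```

The printed means should stay close to zero for every batch size, while the variances should shrink roughly in proportion to $1/N$.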

4.2. Rate of Convergence

5. Applications and Numerical Illustrations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Royset, J.O. Risk-adaptive approaches to stochastic optimization: A survey. SIAM Rev. 2025, 67, 3–70.

2. Liang, H.; Zhuang, W. Stochastic modeling and optimization in a microgrid: A survey. Energies 2014, 7, 2027–2050.

3. Sclocchi, A.; Wyart, M. On the different regimes of stochastic gradient descent. Proc. Natl. Acad. Sci. USA 2024, 121, e2316301121.

4. Dong, D.; Liu, J.; Tang, G. Sample average approximation for stochastic vector variational inequalities. Appl. Anal. 2023, 103, 1649–1668.

5. Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

6. Farh, H.M.H.; Al-Shamma’a, A.A.; Alaql, F.; Omotoso, H.O.; Alfraidi, W.; Mohamed, M.A. Optimization and uncertainty analysis of hybrid energy systems using Monte Carlo simulation integrated with genetic algorithm. Comput. Electr. Eng. 2024, 120, 109833.

7. Stampacchia, G. Formes bilinéaires coercitives sur les ensembles convexes. Comptes Rendus l’Académie Sci. Série A 1964, 258, 4413–4416.

8. Nagurney, A. Network Economics: A Variational Inequality Approach; Kluwer Academic: Dordrecht, The Netherlands, 1999.

9. Shehu, Y.; Iyiola, O.S.; Reich, S. A modified inertial subgradient extragradient method for solving variational inequalities. Optim. Eng. 2022, 23, 421–449.

10. Liu, L.; Cho, S.Y.; Yao, J.C. Convergence analysis of an inertial Tseng’s extragradient algorithm for solving pseudomonotone variational inequalities and applications. J. Nonlinear Var. Anal. 2021, 2, 47–63.

11. Nwawuru, F.O.; Echezona, G.N.; Okeke, C.C. Finding a common solution of variational inequality and fixed point problems using subgradient extragradient techniques. Rend. Circ. Mat. Palermo II Ser. 2024, 73, 1255–1275.

12. Dilshad, M.; Alamrani, F.M.; Alamer, A.; Alshaban, E.; Alshehri, M.G. Viscosity-type inertial iterative methods for variational inclusion and fixed point problems. AIMS Math. 2024, 9, 18553–18573.

13. Facchinei, F.; Pang, J.S. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer: New York, NY, USA, 2003.

14. Jiang, H.; Xu, H. Stochastic approximation approaches to stochastic variational inequality problems. IEEE Trans. Autom. Control 2008, 53, 1462–1475.

15. Shapiro, A. Monte Carlo sampling methods. In Handbooks in Operations Research and Management Science: Stochastic Programming; Ruszczyński, A., Shapiro, A., Eds.; Elsevier: Amsterdam, The Netherlands, 2003; pp. 353–425.

16. Wang, M.Z.; Lin, G.H.; Gao, Y.L.; Ali, M.M. Sample average approximation method for a class of stochastic variational inequality problems. J. Syst. Sci. Complex. 2011, 24, 1143–1153.

17. He, S.X.; Zhang, P.; Hu, X.; Hu, R. A sample average approximation method based on a D-gap function for stochastic variational inequality problems. J. Ind. Manag. Optim. 2014, 10, 977–987.

18. Cherukuri, A. Sample average approximation of conditional value-at-risk based variational inequalities. Optim. Lett. 2024, 18, 471–496.

19. Zhou, Z.; Honnappa, H.; Pasupathy, R. Drift optimization of regulated stochastic models using sample average approximation. arXiv 2025, arXiv:2506.06723.

20. Yousefian, F.; Nedić, A.; Shanbhag, U.V. Distributed adaptive steplength stochastic approximation schemes for Cartesian stochastic variational inequality problems. arXiv 2013, arXiv:1301.1711.

21. Koshal, J.; Nedić, A.; Shanbhag, U.V. Regularized iterative stochastic approximation methods for stochastic variational inequality problems. IEEE Trans. Autom. Control 2013, 58, 594–609.

22. Iusem, A.N.; Jofré, A.; Thompson, P. Incremental constraint projection methods for monotone stochastic variational inequalities. Math. Oper. Res. 2019, 44, 236–263.

23. Yang, Z.P.; Zhang, J.; Wang, Y.; Lin, G.H. Variance-based subgradient extragradient method for stochastic variational inequality problems. J. Sci. Comput. 2021, 89, 4.

24. Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Matekon 1976, 12, 747–756.

25. Iusem, A.N.; Jofré, A.; Oliveira, R.I.; Thompson, P. Extragradient method with variance reduction for stochastic variational inequalities. SIAM J. Optim. 2017, 27, 686–724.

26. Nwawuru, F.O. Approximation of solutions of split monotone variational inclusion problems and fixed point problems. Pan-Am. J. Math. 2023, 2, 1.

27. Iusem, A.N.; Jofré, A.; Oliveira, R.I.; Thompson, P. Variance-based extragradient methods with line search for stochastic variational inequalities. SIAM J. Optim. 2019, 29, 175–206.

28. Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

29. Nwawuru, F.O.; Ezeora, J.N.; ur Rehman, H.; Yao, J.-C. Self-adaptive subgradient extragradient algorithm for solving equilibrium and fixed point problems in Hilbert spaces. Numer. Algorithms 2025.

30. Wang, S.; Tao, H.; Lin, R.; Cho, Y.J. A self-adaptive stochastic subgradient extragradient algorithm for the stochastic pseudomonotone variational inequality problem with application. Z. Angew. Math. Phys. 2022, 73, 164.

31. Liu, L.; Qin, X. An accelerated stochastic extragradient-like algorithm with new stepsize rules for stochastic variational inequalities. Comput. Math. Appl. 2024, 163, 117–135.

32. Polyak, B.T. Some methods of speeding up the convergence of iterative methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

33. Nwawuru, F.O.; Ezeora, J.N. Inertial-based extragradient algorithm for approximating a common solution of split-equilibrium problems and fixed-point problems of nonexpansive semigroups. J. Inequalities Appl. 2023, 2023, 22.

34. Ezeora, J.N.; Enyi, C.D.; Nwawuru, F.O.; Ogbonna, R.C. An algorithm for split equilibrium and fixed-point problems using inertial extragradient techniques. Comput. Appl. Math. 2023, 42, 103.

35. Nwawuru, F.O.; Narian, O.; Dilshad, M.; Ezeora, J.N. Splitting method involving two-step inertial iterations for solving inclusion and fixed point problems with applications. Fixed Point Theory Algorithms Sci. Eng. 2025, 2025, 8.

36. Enyi, C.D.; Ezeora, J.N.; Ugwunnadi, G.C.; Nwawuru, F.O.; Mukiawa, S.E. Generalized split feasibility problem: Solution by iteration. Carpathian J. Math. 2024, 40, 655–679.

37. Nesterov, Y.E. A method for solving a convex programming problem with convergence rate $O(1/k^2)$. Dokl. Akad. Nauk SSSR 1983, 269, 543–547.

38. Long, X.J.; He, Y.H. A fast stochastic approximation-based subgradient extragradient algorithm with variance reduction for solving stochastic variational inequality problems. J. Comput. Appl. Math. 2023, 420, 114786.

39. Zhang, X.; Du, X.; Yang, Z.; Lin, G. An infeasible stochastic approximation and projection algorithm for stochastic variational inequalities. J. Optim. Theory Appl. 2019, 183, 1053–1076.

40. Fang, C.; Chen, S. Some extragradient algorithms for variational inequalities. In Advances in Variational and Hemivariational Inequalities; Han, W., Migórski, S., Sofonea, M., Eds.; Advances in Mechanics and Mathematics; Springer: Cham, Switzerland, 2015; Volume 33, pp. 145–171.

41. Ezeora, J.N.; Nwawuru, F.O. An inertial-based hybrid and shrinking projection methods for solving split common fixed point problems in real reflexive spaces. Int. J. Nonlinear Anal. Appl. 2023, 14, 2541–2556.

42. Robbins, H.; Siegmund, D. A convergence theorem for nonnegative almost supermartingales and some applications. In Optimizing Methods in Statistics; Rustagi, J.S., Ed.; Academic Press: New York, NY, USA, 1971; pp. 233–257.

43. Li, T.; Cai, X.; Song, Y.; Ma, Y. Improved variance reduction extragradient method with line search for stochastic variational inequalities. J. Glob. Optim. 2023, 87, 423–446.

44. Yang, Z.P.; Lin, G.H. Variance-based single-call proximal extragradient algorithms for stochastic mixed variational inequalities. J. Optim. Theory Appl. 2021, 190, 393–427.

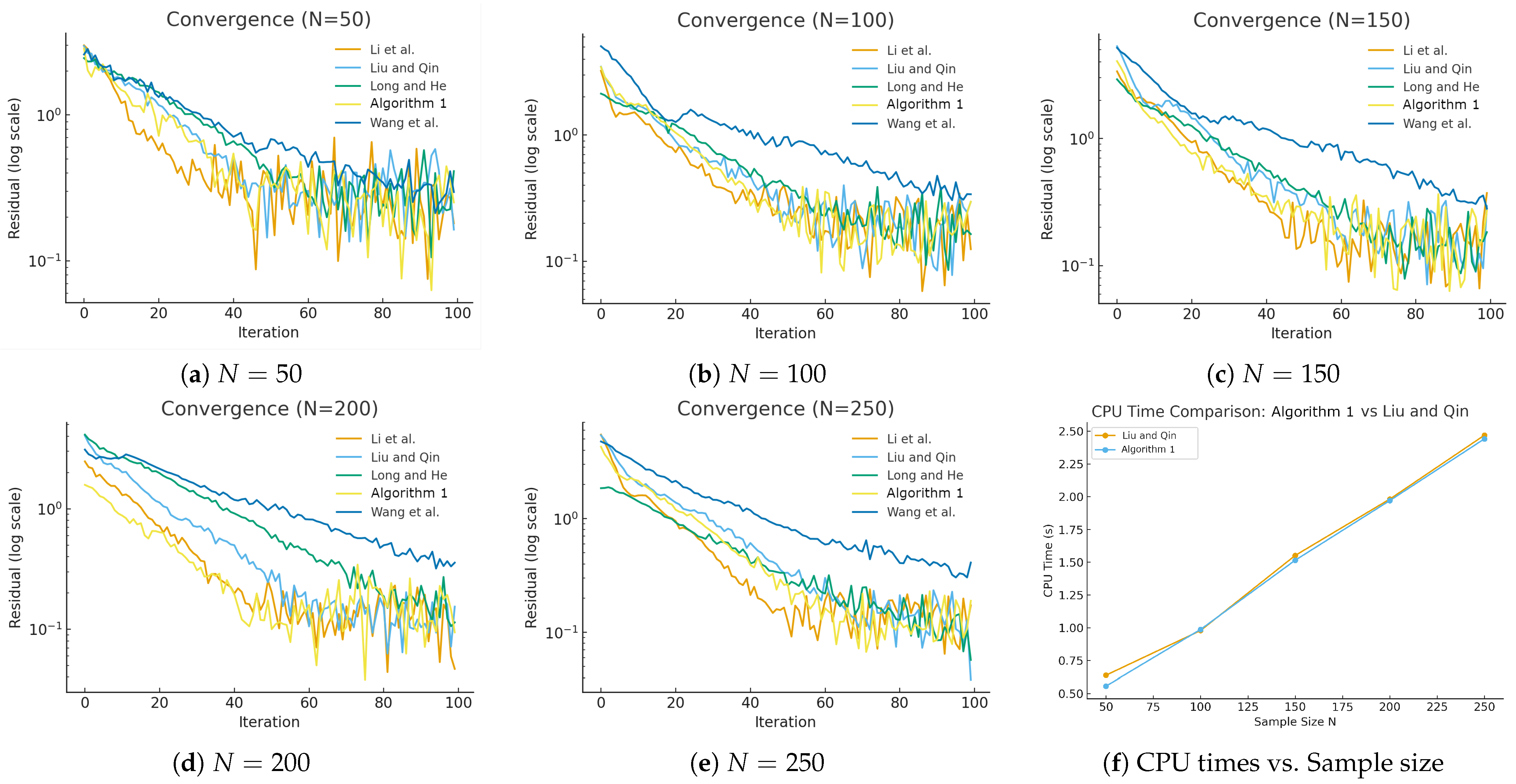

| Method | Per-Iteration Oracle & Projections | Stepsize Control | Extras | Convergence Behavior (Qualitative) |
|---|---|---|---|---|
| Li et al. [43] | Two sample sets (); two projections to X | Residual-based linesearch, adaptive warm-start | None (no inertia) | A.s. convergence with growing batches; often takes larger steps, which gives faster practical progress |
| Liu & Qin [31] | Two averaged maps; one projection to X + one to half-space | Discrete backtracking on ; adaptive | Inertial extrapolation with cap | A.s. convergence under monotone/quasi-monotone maps; inertia accelerates but is safeguarded |
| Long & He [38] | Uses for , for ; one projection to X + one to half-space | Backtracking on via two-point inequality | None | A.s. convergence for monotone-type F; very robust to noise, steady progress |
| Algorithm 1 | One projection to X + one to half-space; two batch evaluations | Adaptive via norm ratio rule | Inertial with adaptive cap | A.s. convergence under quasi-monotone F; good balance of stability and speed |
| Wang et al. [30] | One projection to X + one to half-space; two batch evaluations | Adaptive via squared-norm ratio | None | A.s. convergence for monotone mappings; conservative but very stable |

| Algorithm | Time (s) | Iter | Conv | Time (s) | Iter | Conv | Time (s) | Iter | Conv | Time (s) | Iter | Conv | Time (s) | Iter | Conv |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Li et al. [43] | 0.002 | 35 | Yes | 0.003 | 30 | Yes | 0.004 | 28 | Yes | 0.005 | 27 | Yes | 0.006 | 25 | Yes |
| Liu and Qin [31] | 0.002 | 40 | Yes | 0.004 | 36 | Yes | 0.004 | 33 | Yes | 0.005 | 31 | Yes | 0.006 | 29 | Yes |
| Long and He [38] | 0.001 | 32 | Yes | 0.002 | 29 | Yes | 0.003 | 27 | Yes | 0.003 | 25 | Yes | 0.004 | 24 | Yes |
| Algorithm 1 | 0.002 | 38 | Yes | 0.003 | 34 | Yes | 0.004 | 31 | Yes | 0.004 | 29 | Yes | 0.005 | 28 | Yes |
| Wang et al. [30] | 0.001 | 45 | Yes | 0.002 | 41 | Yes | 0.003 | 39 | Yes | 0.004 | 36 | Yes | 0.004 | 34 | Yes |

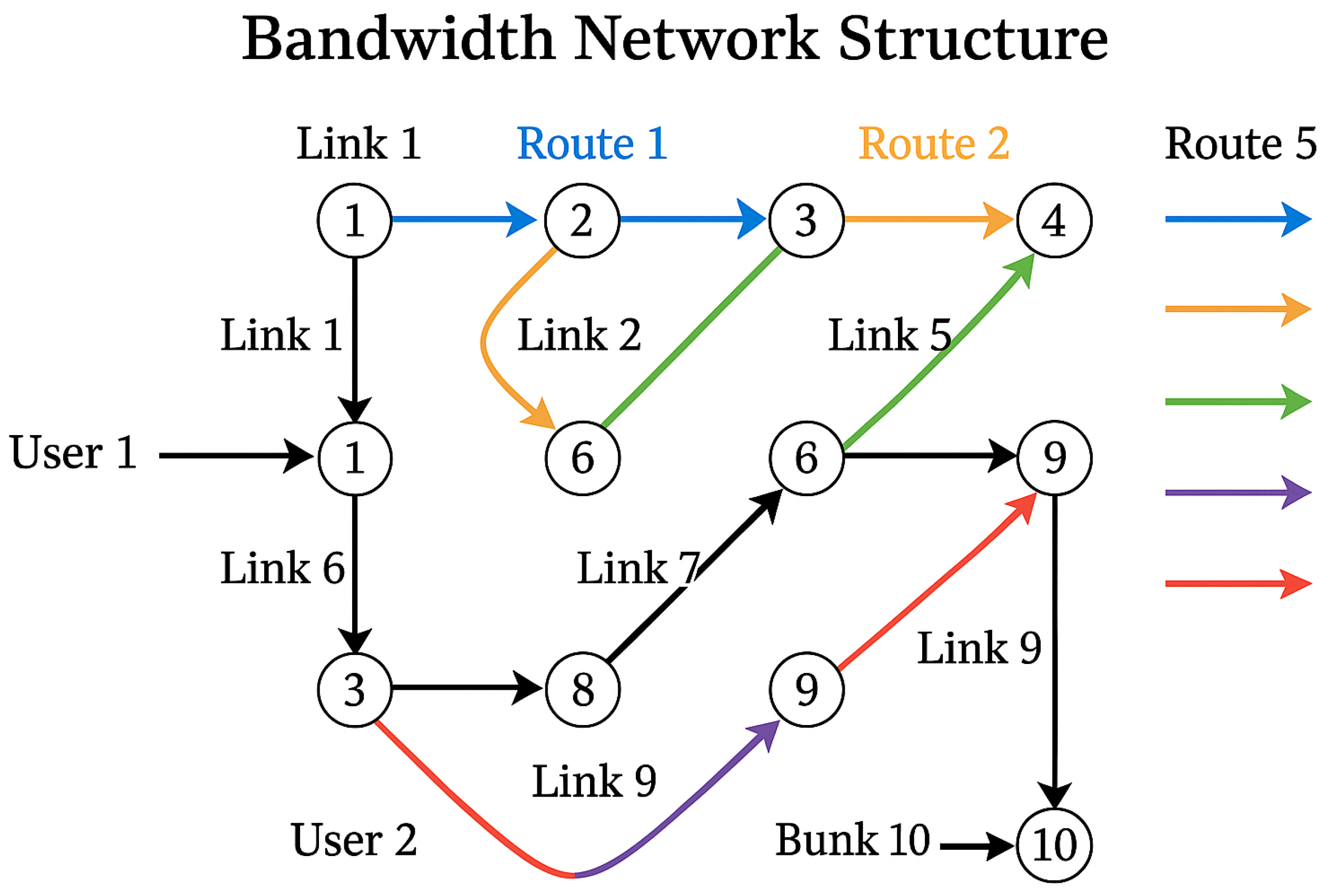

| User | Route | Random Parameter | Distribution Range |
|---|---|---|---|
| User 1 | | | |
| User 2 | | | |

| Link | Incident Routes | Example Load | Suggested Capacity | Slack (-Load) |
|---|---|---|---|---|
| 1 | {1} | | | |
| 2 | {1,2} | | | |
| 3 | {1,2} | | | |
| 4 | {2,3} | | | |
| 5 | {3} | | | |
| 6 | {4} | | | |
| 7 | {4} | | | |
| 8 | {4,5} | | | |
| 9 | {5} | | | |
| 10 | {5} | | | |

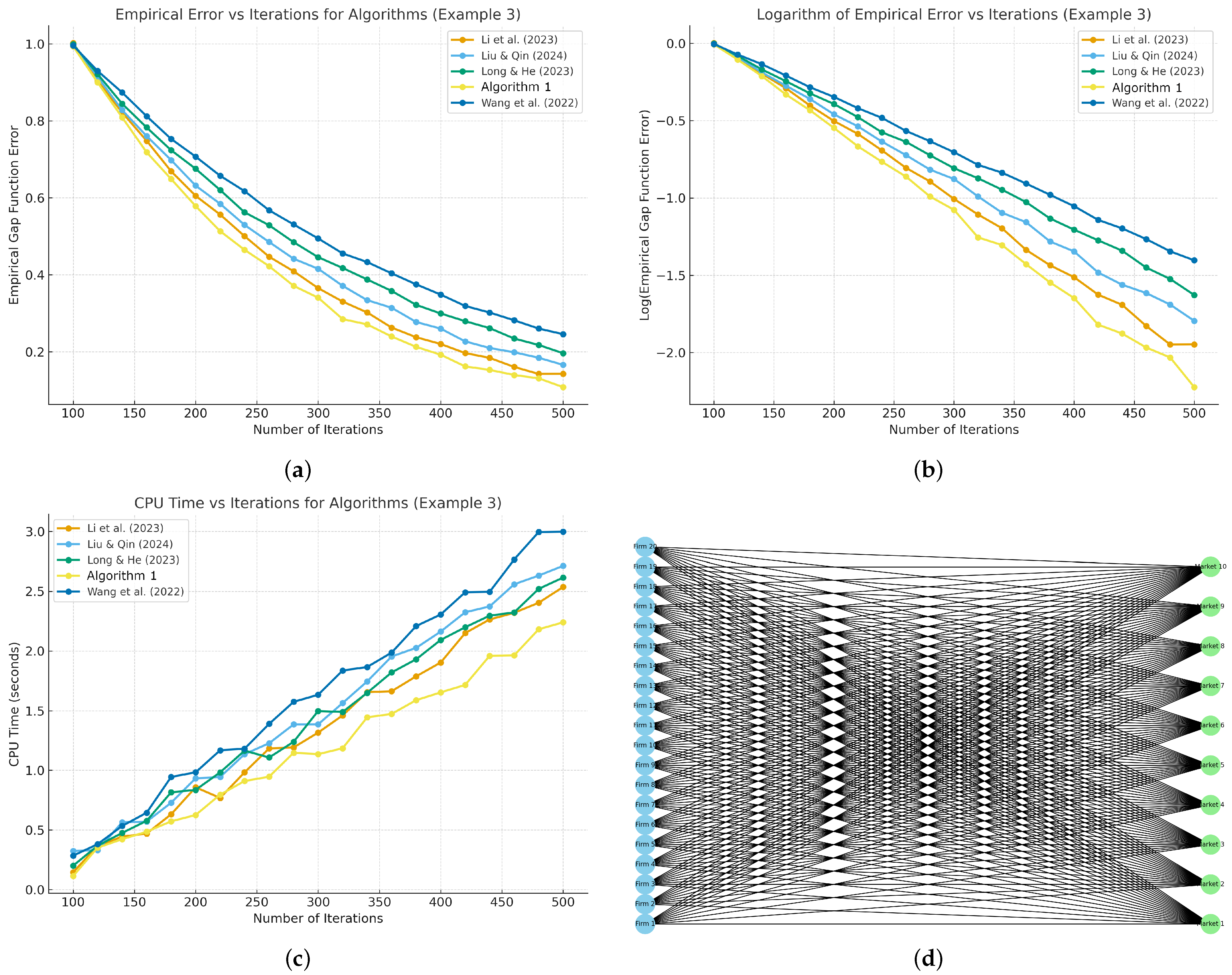

| Market | Price Parameters | Firms Connected | Capacity per Link |
|---|---|---|---|
| Market 1 | | Firm 1–Firm 20 | 2 |
| Market 2 | | Firm 1–Firm 20 | 2 |
| Market 3 | | Firm 1–Firm 20 | 2 |
| Market 4 | | Firm 1–Firm 20 | 2 |
| Market 5 | | Firm 1–Firm 20 | 2 |
| Market 6 | | Firm 1–Firm 20 | 2 |
| Market 7 | | Firm 1–Firm 20 | 2 |
| Market 8 | | Firm 1–Firm 20 | 2 |
| Market 9 | | Firm 1–Firm 20 | 2 |
| Market 10 | | Firm 1–Firm 20 | 2 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dilshad, M.; Al-Dayel, I.; Nwawuru, F.O.; Ezeora, J.N. On Quasi-Monotone Stochastic Variational Inequalities with Applications. Axioms 2025, 14, 912. https://doi.org/10.3390/axioms14120912