Estimation for Longitudinal Varying Coefficient Partially Nonlinear Models Based on QR Decomposition

Abstract

1. Introduction

2. Models and Methods

2.1. Estimation of Parameter Vector

2.2. Estimation of the Coefficient Functions

3. Main Conclusions

- (C1) The support of the random variable U is bounded, and its probability density function has continuous second-order derivatives.

- (C2) The varying coefficient functions are continuously differentiable of order r on , where .

- (C3) For arbitrary Z, exhibits continuity with respect to , and has continuous partial derivatives of order r.

- (C4) holds, and there exists some satisfying .

- (C5) The covariates and are assumed to satisfy the following conditions: , ,

- (C6) Let be interior nodes on . Furthermore, let then a constant exists such that:

- (C7) Define , then we have:

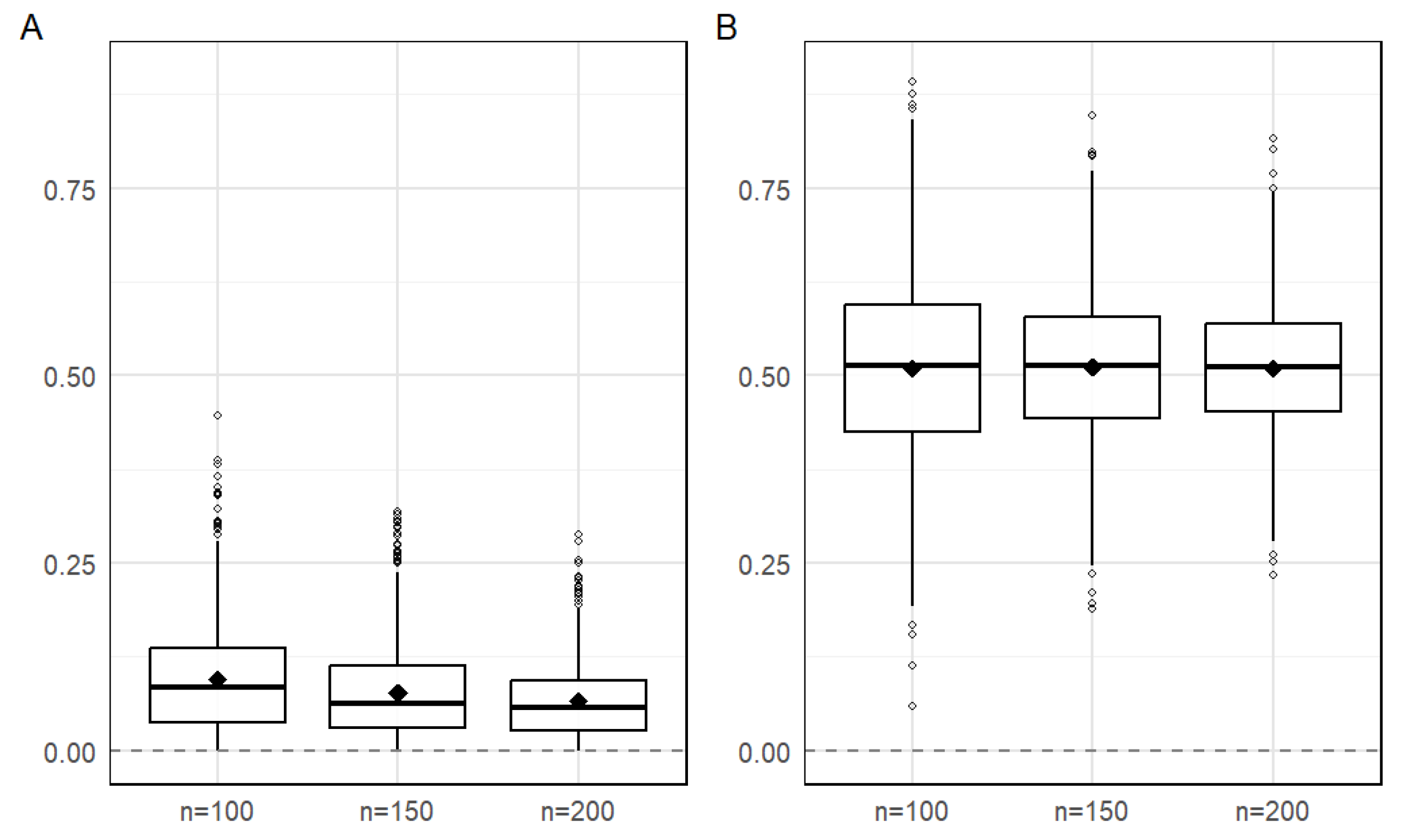

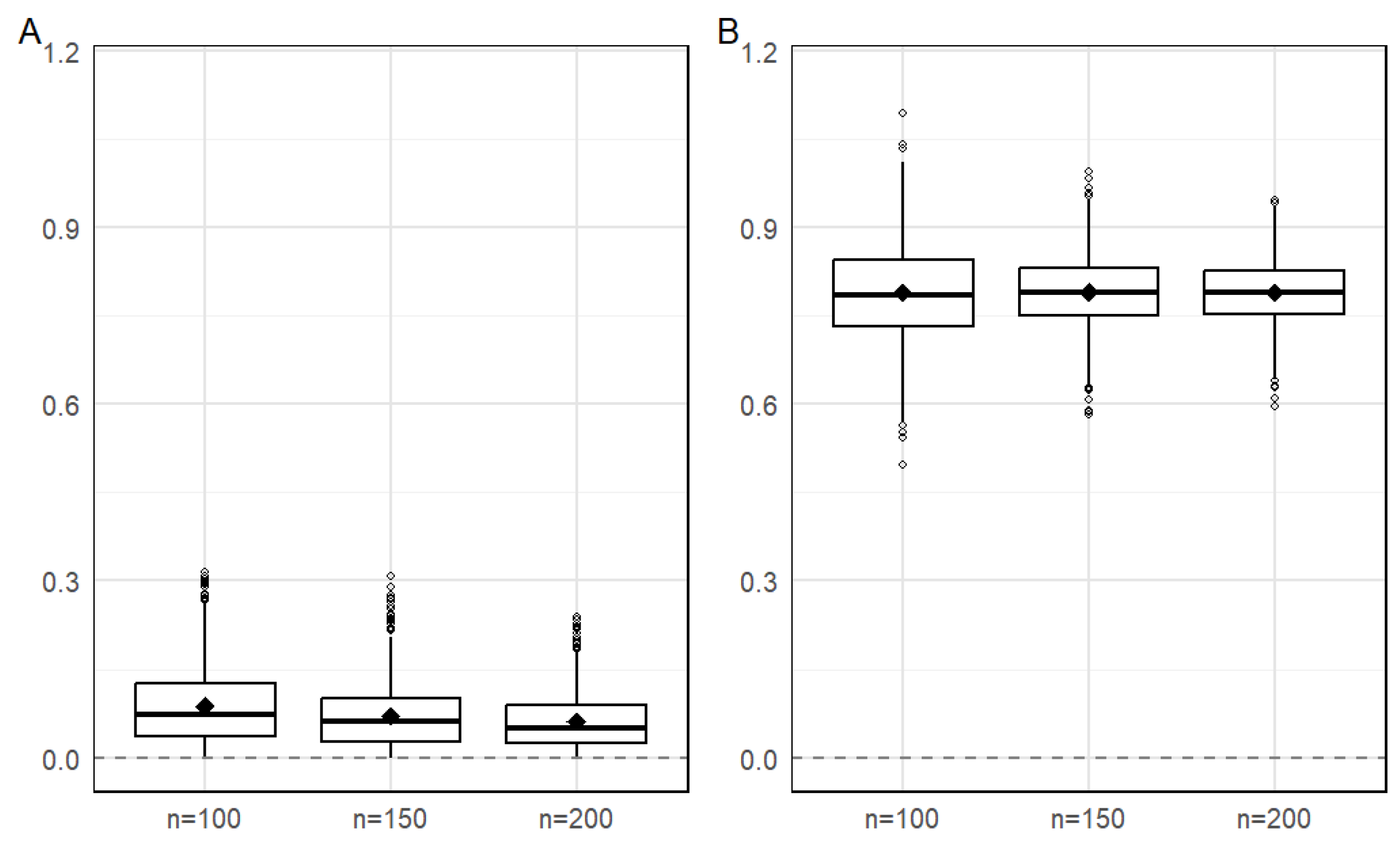

4. Simulation Study

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VCPNLM | Varying coefficient partially nonlinear model |
| QIF | Quadratic inference function |
| OQIF | The combination of QR decomposition and QIF |
| PNLS | Profile nonlinear least squares |

Appendix A

| Method | Parameter | Bias | SD | Bias | SD | Bias | SD |
|---|---|---|---|---|---|---|---|
| OQIF | | 0.00257 | 0.02012 | 0.00123 | 0.01890 | 0.00079 | 0.01568 |
| | | −0.00390 | 0.03890 | −0.00235 | 0.03568 | −0.00157 | 0.02946 |
| PNLS | | 0.01457 | 0.03012 | 0.01235 | 0.02789 | 0.00988 | 0.02346 |
| | | −0.02789 | 0.05890 | −0.02457 | 0.05235 | −0.02012 | 0.04457 |

| Method | Parameter | Length | Coverage | Length | Coverage | Length | Coverage |
|---|---|---|---|---|---|---|---|
| OQIF | | 0.07671 | 0.943 | 0.07190 | 0.947 | 0.05971 | 0.951 |
| | | 0.14853 | 0.937 | 0.13551 | 0.941 | 0.11329 | 0.948 |
| PNLS | | 0.11329 | 0.911 | 0.10065 | 0.918 | 0.08541 | 0.925 |
| | | 0.20995 | 0.899 | 0.18773 | 0.905 | 0.15946 | 0.912 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ge, J.; Zhou, X.; Wang, C. Estimation for Longitudinal Varying Coefficient Partially Nonlinear Models Based on QR Decomposition. Axioms 2025, 14, 875. https://doi.org/10.3390/axioms14120875