Structural Results on the HMLasso

Abstract

1. Introduction

2. Preliminaries

3. Main Results

3.1. Lipschitz Continuity

3.2. Strong Convexity

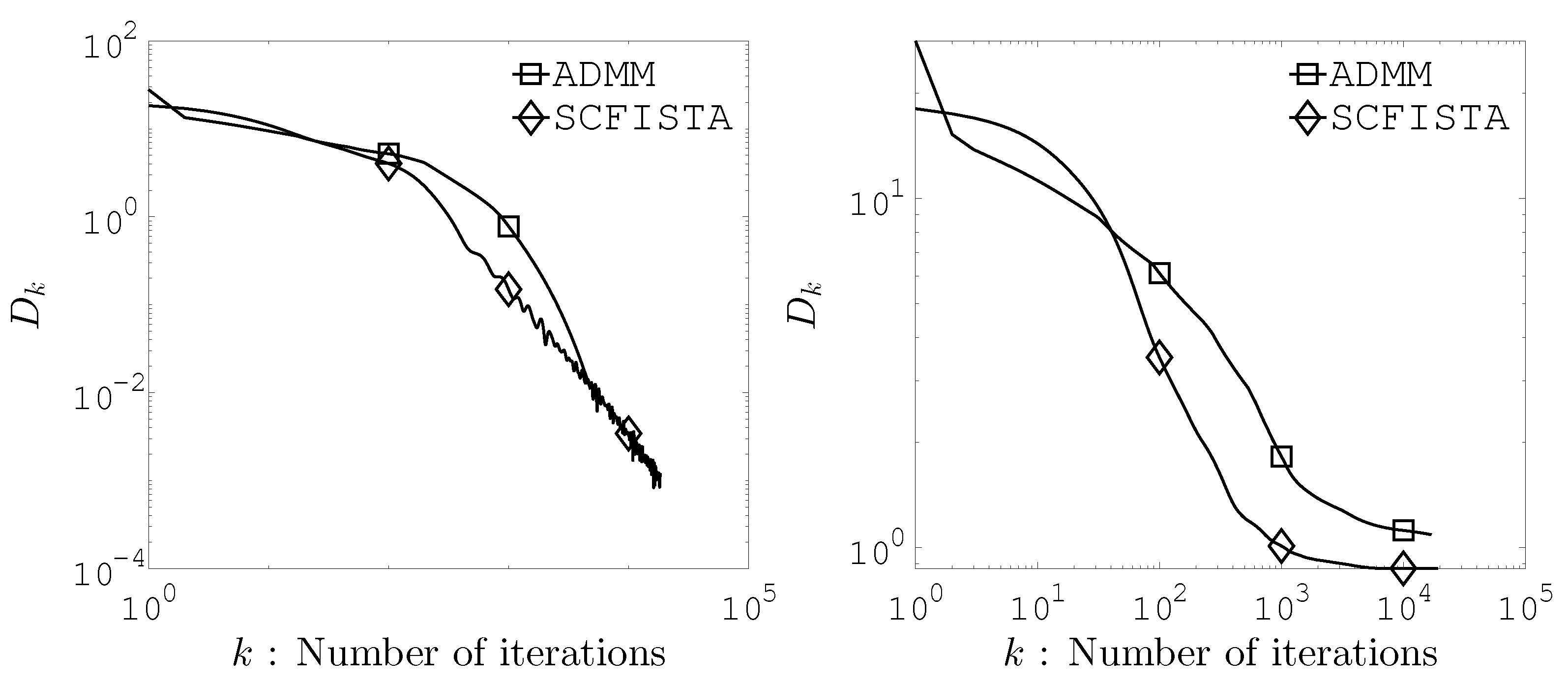

4. Numerical Experiments

4.1. Strongly Convex FISTA

Algorithm 1: Strongly convex FISTA [6]
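The sketch below is not a reproduction of Algorithm 1. It shows one common constant-momentum form of FISTA for strongly convex composite problems, in the spirit of the accelerated methods surveyed in [6]. It assumes a hypothetical quadratic objective 0.5 xᵀAx − bᵀx + λ‖x‖₁ with A symmetric positive definite, so that the smooth part is μ-strongly convex and L-smooth with μ and L the extreme eigenvalues of A. The function names `strongly_convex_fista` and `soft_threshold`, the stopping rule, and the zero starting point are illustrative choices, not taken from the paper.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal map of tau * ||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def strongly_convex_fista(A, b, lam, max_iter=500, tol=1e-10):
    """Hypothetical sketch: constant-momentum accelerated proximal gradient for
    min_x 0.5 * x^T A x - b^T x + lam * ||x||_1, with A symmetric positive definite.
    This is an illustrative variant, not the paper's Algorithm 1.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    eigs = np.linalg.eigvalsh(A)       # eigenvalues of symmetric A, ascending
    mu, L = eigs[0], eigs[-1]          # strong convexity and Lipschitz constants of the gradient
    if mu <= 0:
        raise ValueError("A must be positive definite for this variant")
    q = np.sqrt(L / mu)                # square root of the condition number L / mu
    momentum = (q - 1.0) / (q + 1.0)   # constant momentum, used instead of FISTA's t_k sequence
    x = np.zeros_like(b)
    y = x.copy()
    for _ in range(max_iter):
        grad = A @ y - b               # gradient of the smooth quadratic at y
        x_new = soft_threshold(y - grad / L, lam / L)  # proximal gradient step, step size 1/L
        y = x_new + momentum * (x_new - x)             # extrapolation
        if np.linalg.norm(x_new - x) <= tol:
            x = x_new
            break
        x = x_new
    return x
```

For example, with A = diag(2, 4), b = (3, −1) and λ = 1 the minimizer is (1, 0), which can be checked by hand from the soft-thresholding formula. Replacing the FISTA sequence t_k by the constant momentum (√κ − 1)/(√κ + 1), with κ = L/μ, is what lets this variant exploit strong convexity to obtain a linear rate.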

4.2. Residential Building Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tibshirani, R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288.

- Takada, M.; Fujisawa, H.; Nishikawa, T. HMLasso: Lasso with High Missing Rate. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI), Macao, 10–16 August 2019; pp. 3541–3547.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; CMS Books in Mathematics; Springer: Berlin/Heidelberg, Germany, 2017.

- Escalante, R.; Raydan, M. Alternating Projection Methods; SIAM: Philadelphia, PA, USA, 2011.

- Beck, A. First-Order Methods in Optimization; MOS-SIAM Series on Optimization; SIAM: Philadelphia, PA, USA, 2017.

- D’Aspremont, A.; Scieur, D.; Taylor, A. Acceleration Methods. Found. Trends Optim. 2021, 5, 1–245.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2009, 2, 183–202.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Matsushita, S.-y.; Sasaki, H. Structural Results on the HMLasso. Axioms 2025, 14, 843. https://doi.org/10.3390/axioms14110843