1. Introduction

Linear regression models are widely employed in the physical sciences to analyze relationships between variables in fields such as environmental monitoring, materials science, chemistry, and geophysics. Reliable estimation in these models requires that the predictor variables not be strongly correlated; however, physical systems often exhibit strong interdependencies and correlations among measured factors. This multicollinearity presents significant challenges in regression analysis, as it obscures the individual effect of each variable and leads to unstable coefficient estimates. In some cases, parameter estimates may fluctuate dramatically or change signs unexpectedly, undermining the physical interpretability of the model. Such instability reduces the reliability of conclusions drawn about complex physical phenomena and limits the model's predictive accuracy, complicating the understanding and management of environmental and physical processes.

While many researchers have proposed different shrinkage or ridge parameters to address multicollinearity, only a few are widely examined in the literature. Hoerl and Kennard [1] first developed ridge regression, which introduces a penalty parameter ($k$) that reduces the influence of highly correlated variables and thereby mitigates the effect of multicollinearity. In this shrinkage technique, a penalty term is added to stabilize the coefficient estimates, which can improve the predictive accuracy of the statistical model. However, the ridge parameter introduces some bias into the estimators. When the penalty parameter is set to zero, ridge regression reduces to ordinary least squares (OLS). Over the years, various improvements have been made to ridge regression to enhance its effectiveness in tackling multicollinearity. Rao and Toutenburg [2] proposed generalized ridge regression, which offers more flexibility in selecting the penalty terms. Furthermore, the authors of [3,4] improved ridge estimators and the process of shrinking coefficients, enhancing the model's stability and precision. More recently, the authors of [5,6,7,8,9] developed new shrinkage parameters to handle severe multicollinearity, further improving the accuracy, reliability, and predictive performance of regression models. In one recent study, Ref. [10] introduced several two-ridge estimators, based on the structure of the data, for handling collinear datasets.

It is clear from the literature that no single ridge estimator performs best across all scenarios, which leaves a research gap in addressing multicollinearity effectively. Existing methods often do not incorporate factors such as the sample size, the standard error, and the number of predictors, which are important for improving the accuracy and reliability of regression estimates. To bridge this gap, we propose three new estimators, denoted SPS1, SPS2, and SPS3, that incorporate the sample size ($n$), the standard error ($\hat{\sigma}$), and the number of predictors ($p$) in their formulations. The proposed estimators are designed to mitigate multicollinearity, shrink the regression coefficients, and achieve a lower mean squared error (MSE) than OLS and other existing estimators. In extensive simulations and two practical applications, the SPS estimators generally outperform both OLS and the existing estimators across a range of scenarios.

The remainder of this paper is organized as follows. Section 2 introduces the statistical models, discusses various ridge estimators, and presents the proposed shrinkage parameters. Section 3 details the simulation study conducted to evaluate the performance of the proposed estimators. In Section 4, two real-life datasets are analyzed to demonstrate the practical advantages of the proposed estimators. Finally, Section 5 concludes the paper with key findings and remarks.

2. Materials and Methods

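Consider the following multiple linear regression model:

$$ y = X\beta + \varepsilon, \tag{1} $$

where $y$ is an $n \times 1$ vector of observed responses, $X$ is an $n \times p$ design matrix, $\beta$ is a $p \times 1$ vector of unknown parameters, and $\varepsilon$ is an $n \times 1$ error vector with $E(\varepsilon) = 0$ and $\operatorname{Cov}(\varepsilon) = \sigma^2 I_n$.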

The OLS estimator is given as follows:

$$ \hat{\beta} = (X'X)^{-1}X'y, \tag{2} $$

where $X'$ denotes the transpose of $X$. However, when predictors are highly correlated, $X'X$ becomes nearly singular ($|X'X| \approx 0$), causing unstable estimates and large variances [11]. To address this issue, the ridge regression method introduces a shrinkage parameter $k \geq 0$. The ridge regression estimator is given as follows:

$$ \hat{\beta}(k) = (X'X + kI_p)^{-1}X'y, \tag{3} $$

where $I_p$ is the $p \times p$ identity matrix. The ridge parameter $k$ biases the estimates but reduces their variance compared to the OLS estimator [1], so ridge regression stabilizes the estimates by trading a small bias for reduced variance. When $k = 0$, the ridge regression estimator equals the OLS estimator ($\hat{\beta}(0) = \hat{\beta}$), and as $k \to \infty$, $\hat{\beta}(k) \to 0$.
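Equations (2) and (3) can be illustrated with a minimal NumPy sketch. It assumes `X` and `y` are NumPy arrays. The function names and the toy collinear data are hypothetical and not taken from the study. The sketch solves the normal equations instead of forming an explicit inverse. It also shows that $k = 0$ recovers OLS, while increasing $k$ shrinks the coefficient vector toward zero.

```python
import numpy as np


def ols(X, y):
    """OLS estimator of Equation (2): solves (X'X) b = X'y."""
    return np.linalg.solve(X.T @ X, X.T @ y)


def ridge(X, y, k):
    """Ridge estimator of Equation (3): solves (X'X + k I_p) b = X'y, k >= 0."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + k * np.eye(p), X.T @ y)


# Hypothetical collinear data: x2 is x1 plus a little noise.
rng = np.random.default_rng(0)
x1 = rng.normal(size=50)
X = np.column_stack([x1, x1 + 0.01 * rng.normal(size=50)])
y = X @ np.array([1.0, 1.0]) + rng.normal(size=50)

for k in (0.0, 0.1, 1.0, 10.0):
    b = ridge(X, y, k)
    # The Euclidean norm of the ridge coefficients decreases as k grows.
    print(f"k = {k:5.1f}  beta = {b}  norm = {np.linalg.norm(b):.3f}")
```

With nearly duplicated columns, the OLS coefficients (the $k = 0$ row) can be far from the true values. Even a small $k$ pulls the coefficients toward a more stable solution.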

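The regression model (1) can be expressed in its canonical form as follows:

$$ y = Z\alpha + \varepsilon, \tag{4} $$

where $Z = XD$, $\alpha = D'\beta$, and $D$ is an orthogonal matrix such that $Z'Z = D'X'XD = \Lambda$, with $\Lambda = \operatorname{diag}(\lambda_1, \dots, \lambda_p)$ containing the eigenvalues of $X'X$. In this form, the OLS and ridge regression estimators are given in Equations (5) and (6), respectively:

$$ \hat{\alpha} = \Lambda^{-1}Z'y, \tag{5} $$

$$ \hat{\alpha}(k) = (\Lambda + kI_p)^{-1}Z'y, \tag{6} $$

where $k \geq 0$ and $\lambda_i > 0$ for all $i = 1, \dots, p$.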
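The canonical transformation can be sketched as follows. The sketch assumes that NumPy's symmetric eigensolver `np.linalg.eigh` supplies $D$ and $\lambda_1, \dots, \lambda_p$; the function names are hypothetical. Because $\Lambda$ is diagonal, Equations (5) and (6) reduce to elementwise division. Mapping back with $\hat{\beta}(k) = D\hat{\alpha}(k)$ reproduces Equation (3).

```python
import numpy as np


def canonical(X):
    """Return Z = XD, the eigenvalues lam of X'X, and the orthogonal D."""
    lam, D = np.linalg.eigh(X.T @ X)  # X'X is symmetric, so eigh applies
    return X @ D, lam, D


def alpha_ols(Z, lam, y):
    """Equation (5): Lambda^{-1} Z'y, computed elementwise."""
    return (Z.T @ y) / lam


def alpha_ridge(Z, lam, y, k):
    """Equation (6): (Lambda + k I_p)^{-1} Z'y, computed elementwise."""
    return (Z.T @ y) / (lam + k)


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 3))
y = rng.normal(size=30)
Z, lam, D = canonical(X)

print(np.round(Z.T @ Z, 8))             # (numerically) diagonal, with lam on the diagonal
print(D @ alpha_ridge(Z, lam, y, 0.5))  # ridge estimate mapped back to the beta scale
```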

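The MSEs of the OLS and ridge regression estimators are given in Equations (7) and (8), respectively:

$$ \operatorname{MSE}(\hat{\alpha}) = \sigma^2 \sum_{i=1}^{p} \frac{1}{\lambda_i}, \tag{7} $$

$$ \operatorname{MSE}(\hat{\alpha}(k)) = \sigma^2 \sum_{i=1}^{p} \frac{\lambda_i}{(\lambda_i + k)^2} + k^2 \sum_{i=1}^{p} \frac{\alpha_i^2}{(\lambda_i + k)^2}. \tag{8} $$

The first term in Equation (8) is the total variance, while the second term is the squared bias introduced by ridge regression. As $k$ increases, the variance decreases and the squared bias increases.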
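Evaluating Equations (7) and (8) directly makes the bias-variance trade-off concrete. This sketch uses hypothetical eigenvalues, a unit-norm $\alpha$, and $\sigma^2 = 1$, chosen only for illustration. It reports the variance and squared-bias terms separately.

```python
import numpy as np


def mse_ols(lam, sigma2):
    """Equation (7): sigma^2 * sum(1 / lambda_i)."""
    return sigma2 * np.sum(1.0 / lam)


def mse_ridge_terms(lam, alpha, sigma2, k):
    """Equation (8), returned as (variance term, squared-bias term)."""
    variance = sigma2 * np.sum(lam / (lam + k) ** 2)
    bias_sq = k**2 * np.sum(alpha**2 / (lam + k) ** 2)
    return variance, bias_sq


# Hypothetical setting: one near-zero eigenvalue mimics strong collinearity.
lam = np.array([10.0, 1.0, 0.01])
alpha = np.array([1.0, 0.0, 0.0])
sigma2 = 1.0

print(f"OLS MSE: {mse_ols(lam, sigma2):.3f}")
for k in (0.0, 0.01, 0.1, 1.0):
    v, b2 = mse_ridge_terms(lam, alpha, sigma2, k)
    print(f"k = {k:4}  variance = {v:8.3f}  bias^2 = {b2:.5f}  MSE = {v + b2:8.3f}")
```

At $k = 0$ the two terms sum to the OLS MSE. Small positive values of $k$ remove most of the variance contributed by the near-zero eigenvalue while adding very little bias.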

2.1. Ridge Regression Estimators

In ridge regression, $k$ is often referred to as the ridge, shrinkage, or penalty parameter, and it plays an important role in addressing collinearity in the data. Its value must be estimated from the data, and much of the recent research in ridge regression has focused on methods for determining an optimal value. In this section, we review various statistical approaches for estimating $k$ and their applications in addressing multicollinearity.

2.1.1. Existing Ridge Estimators

Hoerl and Kennard [1] showed that the optimal ridge parameter $k$ can be estimated as the estimated error variance divided by the square of the largest OLS coefficient estimate, as presented in Equation (9):

$$ \hat{k}_{HK} = \frac{\hat{\sigma}^2}{\hat{\alpha}_{\max}^2}, \tag{9} $$

where $\hat{\sigma}^2$ is the estimated error variance and $\hat{\alpha}_{\max}$ is the maximum element of $\hat{\alpha}$. This estimator is known as the HK estimator.
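Equation (9) can be sketched in NumPy as follows. This sketch makes two assumptions. First, the error variance is estimated as $\hat{\sigma}^2 = (y - Z\hat{\alpha})'(y - Z\hat{\alpha})/(n - p)$, which fits a model without an intercept; the text does not state this. Second, $\hat{\alpha}_{\max}^2$ is taken as the largest squared element of $\hat{\alpha}$. The helper `canonical_ols` is hypothetical.

```python
import numpy as np


def canonical_ols(X, y):
    """Return canonical OLS estimates alpha_hat and sigma2_hat.

    Assumption: sigma2_hat = RSS / (n - p), i.e. no intercept column.
    """
    n, p = X.shape
    lam, D = np.linalg.eigh(X.T @ X)
    Z = X @ D
    alpha_hat = (Z.T @ y) / lam
    resid = y - Z @ alpha_hat
    sigma2_hat = resid @ resid / (n - p)
    return alpha_hat, sigma2_hat


def k_hk(alpha_hat, sigma2_hat):
    """Equation (9): sigma2_hat divided by the largest squared alpha_hat_i."""
    return sigma2_hat / np.max(alpha_hat**2)


rng = np.random.default_rng(2)
X = rng.normal(size=(40, 4))
y = X @ np.array([0.5, 0.5, 0.5, 0.5]) + rng.normal(size=40)
alpha_hat, sigma2_hat = canonical_ols(X, y)
print(f"k_HK = {k_hk(alpha_hat, sigma2_hat):.4f}")
```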

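Hoerl et al. [12] further modified the HK estimator and developed the BHK estimator, with the estimated $k$ defined as follows:

$$ \hat{k}_{BHK} = \frac{p\hat{\sigma}^2}{\hat{\alpha}'\hat{\alpha}} = \frac{p\hat{\sigma}^2}{\sum_{i=1}^{p}\hat{\alpha}_i^2}. \tag{10} $$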
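Equation (10) needs only the canonical OLS estimates and $\hat{\sigma}^2$. In the minimal sketch below, the inputs are hypothetical values chosen so the result is easy to check by hand.

```python
import numpy as np


def k_bhk(alpha_hat, sigma2_hat):
    """Equation (10): p * sigma2_hat / (alpha_hat' alpha_hat)."""
    p = alpha_hat.size
    return p * sigma2_hat / (alpha_hat @ alpha_hat)


alpha_hat = np.array([1.0, 2.0, 4.0])  # hypothetical canonical OLS estimates
sigma2_hat = 16.0                      # hypothetical error-variance estimate
print(k_bhk(alpha_hat, sigma2_hat))    # equals 3 * 16 / 21
```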

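Kibria [13] introduced ridge estimators based on the arithmetic mean, geometric mean, and median for collinear datasets. These estimators are denoted KAM, KGM, and Kmed in this work. Their estimated $k$ values are defined as follows:

$$ \hat{k}_{KAM} = \frac{1}{p}\sum_{i=1}^{p}\frac{\hat{\sigma}^2}{\hat{\alpha}_i^2}, \tag{11} $$

$$ \hat{k}_{KGM} = \frac{\hat{\sigma}^2}{\left(\prod_{i=1}^{p}\hat{\alpha}_i^2\right)^{1/p}}, \tag{12} $$

$$ \hat{k}_{Kmed} = \operatorname{median}\left\{\frac{\hat{\sigma}^2}{\hat{\alpha}_i^2}\right\}_{i=1}^{p}. \tag{13} $$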
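Equations (11) to (13) are all summaries of the ratios $\hat{\sigma}^2/\hat{\alpha}_i^2$, which the sketch below makes explicit. The inputs are hypothetical values chosen to give round answers. Computing the geometric mean through logarithms is an implementation choice that avoids overflow in the product. It is not part of the original definitions.

```python
import numpy as np


def kibria_ks(alpha_hat, sigma2_hat):
    """Equations (11)-(13): KAM, KGM and Kmed ridge parameters."""
    ratios = sigma2_hat / alpha_hat**2    # sigma2_hat / alpha_hat_i^2
    k_am = np.mean(ratios)                # arithmetic mean, Eq. (11)
    # Geometric mean of alpha_hat_i^2 via logs, Eq. (12).
    k_gm = sigma2_hat / np.exp(np.mean(np.log(alpha_hat**2)))
    k_med = np.median(ratios)             # median, Eq. (13)
    return k_am, k_gm, k_med


alpha_hat = np.array([1.0, 2.0, 4.0])  # hypothetical canonical OLS estimates
sigma2_hat = 16.0                      # hypothetical error-variance estimate
k_am, k_gm, k_med = kibria_ks(alpha_hat, sigma2_hat)
print(k_am, k_gm, k_med)  # the underlying ratios are 16, 4 and 1
```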

Khalaf et al. [14] developed an estimator, denoted KMS, based on eigenvalue adjustments. The estimated $k$ value for this estimator is expressed as follows:

Most recently, Ref. [15] introduced several shrinkage parameters to better handle collinear data. Among these, the Balanced Log Ridge Estimator (BLRE) is chosen for comparison with our proposed estimators. The $k$ value for the BLRE is defined as follows:

In this research, the newly proposed SPS estimators are compared with OLS and with the ridge estimators above: HK, BHK, KAM, KGM, Kmed, KMS, and BLRE.

2.1.2. Proposed Ridge-Type Estimators

In this study, we propose three new ridge parameters that define three new ridge estimators, referred to as SPS1, SPS2, and SPS3. They are based on data components such as the sample size $n$, the number of predictor variables $p$, and the standard error $\hat{\sigma}$. The $k$ values for the three proposed estimators are defined as follows:

The proposed $k$ values help control the influence of correlated independent variables. SPS1 adjusts the penalty with a logarithmic term that depends on both the number of predictors and the sample size. SPS2 introduces a correction factor based on the relationship between these data components. SPS3 incorporates both the standard error and the sum of the sample size and the number of predictors, providing a more balanced adjustment to the penalty term.

2.2. Mean Squared Error

The estimators' performance was evaluated using the MSE criterion, a metric widely used in similar previous studies [16,17,18]. The MSE of an estimator $\tilde{\alpha}$ of $\alpha$ is defined as follows:

$$ \operatorname{MSE}(\tilde{\alpha}) = E\left[(\tilde{\alpha} - \alpha)'(\tilde{\alpha} - \alpha)\right]. \tag{19} $$

Because theoretical comparisons of these ridge estimators (Equations (9)–(18)) are complex, we examined their performance via Monte Carlo simulations, as described in the next section.

3. Monte Carlo Simulation Approach

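To assess the ridge estimators, the predictor variables are generated using Equation (20), a standard approach widely used by researchers [19,20,21]:

$$ x_{ij} = (1 - \rho^2)^{1/2} z_{ij} + \rho\, z_{i,p+1}, \quad i = 1, \dots, n, \; j = 1, \dots, p, \tag{20} $$

where the $z_{ij}$ are independent standard normal pseudo-random numbers and $\rho$ controls the correlation between predictors, so that any two predictors have correlation $\rho^2$.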
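Equation (20) translates directly into vectorized NumPy. The sketch below shows this and then checks empirically that the pairwise correlation is close to $\rho^2$. The function name, the seed, and the large $n$ used for the check are illustrative choices only.

```python
import numpy as np


def generate_predictors(n, p, rho, rng):
    """Equation (20): x_ij = sqrt(1 - rho^2) z_ij + rho z_{i,p+1}."""
    z = rng.standard_normal((n, p + 1))
    # z[:, [p]] keeps shape (n, 1), so it broadcasts across all p columns.
    return np.sqrt(1.0 - rho**2) * z[:, :p] + rho * z[:, [p]]


rng = np.random.default_rng(3)
X = generate_predictors(n=100_000, p=4, rho=0.9, rng=rng)
corr = np.corrcoef(X, rowvar=False)
print(np.round(corr, 3))  # off-diagonal entries should be close to rho^2 = 0.81
```

Each generated column has unit variance, because $(1 - \rho^2) + \rho^2 = 1$. All columns share the common component $z_{i,p+1}$, which produces the correlation.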

To generate the dependent variable, we assumed the following model:

$$ y_i = \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip} + \varepsilon_i, \quad i = 1, \dots, n, \tag{21} $$

where $y_i$ represents the dependent variable, $x_{i1}, \dots, x_{ip}$ are the predictor variables, and $\varepsilon_i$ is the error term. The number of observations is denoted by $n$, and the $\beta$ coefficients are selected under the constraint $\beta'\beta = 1$. For the model in Equation (21), the intercept term is set to zero.

The correlation between the predictor variables is controlled by $\rho$, which ranges from 0.80 to 0.99. The error terms follow a normal distribution with zero mean and variance $\sigma^2$. The study explored the impact of varying factors such as the number of independent variables ($p$), the error variance ($\sigma^2$), and the sample size ($n$). The specific values used in the analysis are as follows:

Sample sizes: $n$ ranging from 10 to 100 (see Tables A1–A8).

Number of independent variables: $p$ = 4, 6, 8, and 10.

Error variance: $\sigma^2$ ranging from 0.5 to 11.

These variations were considered to examine the influence of these factors on the model's behavior and results. To evaluate the MSE across different values of $n$, $p$, $\rho$, and $\sigma^2$, the simulations were run in R. The results are presented in Tables A1–A8 (Appendix A).

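The following steps were used to calculate the MSE for the estimators:

1. Generate the predictors using Equation (20), standardize them, and compute the eigenvalues ($\lambda_1, \lambda_2, \dots, \lambda_p$) and eigenvectors ($e_1, e_2, \dots, e_p$) of $X'X$, with $P = [e_1, \dots, e_p]$. Set the true coefficient vector $\beta$ equal to $e_{\max}$, the normalized eigenvector corresponding to the largest eigenvalue, and generate the errors from $N(0, \sigma^2)$.
2. Compute the response values using Equation (21), and obtain the OLS and ridge estimates using their respective formulas.
3. Repeat the procedure for $R$ Monte Carlo replications, and calculate the MSE as follows:

$$ \operatorname{MSE}(\tilde{\alpha}) = \frac{1}{R}\sum_{r=1}^{R}(\tilde{\alpha}_r - \alpha)'(\tilde{\alpha}_r - \alpha), \tag{22} $$

where $\tilde{\alpha}_r$ is the estimate of $\alpha$ in the $r$th replication.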
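The three steps above can be combined into one NumPy function. The original study used R, so this Python version is only an illustrative sketch under several assumptions:

- The design matrix is drawn once per setting and held fixed across replications.
- Columns are standardized to zero mean and unit variance.
- $\hat{\sigma}^2$ uses $n - p$ degrees of freedom.
- Only OLS, HK, and KAM are included, because the KMS, BLRE, and SPS formulas are not reproduced here.

The function name `simulate_mse` is hypothetical.

```python
import numpy as np


def simulate_mse(n, p, rho, sigma2, R=1000, seed=0):
    """Monte Carlo MSE (Equation (22)) for OLS, HK and KAM.

    Illustrative sketch: X is drawn once per setting and held fixed, and
    sigma2_hat uses n - p degrees of freedom (both are assumptions).
    """
    rng = np.random.default_rng(seed)

    # Step 1: predictors via Equation (20), then column-wise standardization.
    z = rng.standard_normal((n, p + 1))
    X = np.sqrt(1.0 - rho**2) * z[:, :p] + rho * z[:, [p]]
    X = (X - X.mean(axis=0)) / X.std(axis=0)

    # Eigen-decomposition of X'X; eigh returns eigenvalues in ascending order.
    lam, D = np.linalg.eigh(X.T @ X)
    beta = D[:, -1]     # eigenvector of the largest eigenvalue, so beta'beta = 1
    alpha = D.T @ beta  # true canonical coefficients
    Z = X @ D

    totals = {"OLS": 0.0, "HK": 0.0, "KAM": 0.0}
    for _ in range(R):
        # Step 2: responses from Equation (21) with N(0, sigma2) errors.
        y = X @ beta + rng.normal(0.0, np.sqrt(sigma2), size=n)
        zy = Z.T @ y
        a_ols = zy / lam
        resid = y - Z @ a_ols
        s2 = resid @ resid / (n - p)

        ks = {
            "OLS": 0.0,                     # k = 0 gives OLS
            "HK": s2 / np.max(a_ols**2),    # Equation (9)
            "KAM": np.mean(s2 / a_ols**2),  # Equation (11)
        }
        # Step 3: accumulate the squared estimation error of each estimator.
        for name, k in ks.items():
            err = zy / (lam + k) - alpha
            totals[name] += err @ err

    return {name: total / R for name, total in totals.items()}


res = simulate_mse(n=20, p=4, rho=0.99, sigma2=1.0, R=200, seed=0)
for name, mse in res.items():
    print(f"{name:>4}: {mse:.4f}")
```

To add another estimator, extend the `ks` dictionary with its $k$ formula. The canonical form keeps each replication to elementwise operations on $Z'y$ and the eigenvalues.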

Discussion of the Simulation Results

The simulation results presented in Tables A1–A8 provide a detailed comparison of the performance of OLS, the existing ridge estimators (HK, BHK, KMS, KAM, KGM, Kmed, and BLRE), and our newly proposed ridge estimators (SPS1, SPS2, and SPS3). The study evaluated the effects of the sample size $n$, the number of predictors $p$, the correlation coefficient $\rho$, and the error variance $\sigma^2$ on the MSE of these estimators. The results highlight the robustness and efficiency of the newly proposed estimators across a wide range of scenarios. Key observations from the tables are summarized below.

Performance Across Sample Sizes: The performance of the estimators varies considerably with sample size. As the sample size increased (from $n$ = 10 and 20 to $n$ = 100), the MSE tended to decrease for most estimators, as expected, because larger samples provide more information about the coefficients. For instance, in Table A1 ($p$ = 4, $n$ = 20), the MSE for most estimators was higher, whereas in Table A3 ($p$ = 4, $n$ = 100), the MSE values were noticeably lower. This improvement is particularly evident for the SPS estimators, especially SPS1, which consistently exhibited lower MSE than OLS and the other existing estimators. This suggests that the SPS estimators, particularly SPS1, become more stable and efficient as the sample size increases.

Impact of Number of Predictors: The number of predictors also influenced the MSE of the estimators. As the number of predictors increased from $p$ = 4 through 6 and 8 to $p$ = 10, the MSE generally increased, especially for OLS, which struggles more as model complexity increases. For example, in Table A4 ($p$ = 10, $n$ = 20), the MSE for OLS was considerably higher than in the $p$ = 4 scenarios, reflecting the difficulty OLS has with more complex models. The proposed SPS estimators continued to perform well across different values of $p$. SPS1 in particular tended to outperform the other estimators even as $p$ increased. This indicates that the SPS estimators remain effective as the number of predictors grows, whereas OLS may fail to perform well.

Effect of Correlation Coefficient: The correlation coefficient had a notable effect on the MSE, with higher correlation making estimation more difficult. As $\rho$ increased from 0.80 to 0.99, the MSE for most estimators increased, especially for OLS. This is particularly apparent in Tables A1, A2, and A3, where the MSE for OLS at $\rho$ = 0.99 was much higher than at $\rho$ = 0.80. The newly proposed SPS estimators, particularly SPS1, maintained relatively low MSE even as the correlation increased. This indicates that the SPS estimators are more robust to high correlation, a challenging condition for OLS and many existing estimators. This robustness makes them particularly attractive when predictors are highly correlated.

Influence of Error Variance: The error variance was another critical factor affecting the performance of the estimators. As $\sigma^2$ increased from 0.5 to 11, the MSE generally increased for all estimators, indicating that higher error variance leads to greater estimation uncertainty. However, the newly proposed SPS estimators showed a distinct advantage under high error variance. For instance, in Table A4 ($p$ = 10, $n$ = 20) at $\sigma^2$ = 11, SPS1 still outperformed OLS and several existing estimators, suggesting that it handles larger error variances more effectively. In contrast, KGM and Kmed showed substantial increases in MSE as the error variance rose, especially at $\rho$ = 0.99. This indicates that the SPS estimators, particularly SPS1, are more robust to high error variance and provide more reliable estimates under such conditions.

Comparison with Existing Estimators: Compared with the existing estimators (HK, BHK, KMS, KAM, KGM, Kmed, and BLRE), the SPS estimators generally outperform OLS and most existing estimators across different conditions, especially under higher correlation and larger error variance. Among the existing estimators, KAM, KMS, and BHK were the most competitive, showing lower MSE than OLS in many scenarios, particularly at larger sample sizes and moderate to high correlation. However, SPS1 performed best across a wide range of conditions, including high correlation ($\rho$ = 0.99) and large error variance ($\sigma^2$ = 11). It provided the lowest MSE in most settings, making it the most reliable estimator under challenging conditions. SPS2 and SPS3 were competitive but had slightly higher MSE than SPS1, particularly under very high correlation and large error variance. Nonetheless, they still outperformed OLS and several existing estimators, making them valuable alternatives in many situations.

4. Environmental and Chemical Science Data Applications

Regression analysis of multicollinear data is of particular interest in environmental, chemical, and physical studies, where predictor interdependence is common.

The performance of our newly developed estimators, OLS, and other existing ridge estimators was evaluated using two real datasets: the environmental Air Pollution Dataset [22] and the chemical Hald Cement Dataset [11]; see also Ref. [23]. These real datasets share features similar to those considered in our simulation study.

4.1. Air Pollution Dataset

This environmental dataset contains 20 real-world measurements of urban nitrogen dioxide (NO₂) levels ($y$), along with humidity ($x_1$), temperature ($x_2$), and air pressure ($x_3$). From the 15 available predictors, we selected only these three because they are clearly correlated. NO₂ ranges from 0.05 ppm (light) to 0.25 ppm (moderate pollution). The natural correlations between weather variables, such as humidity and air pressure, make this dataset useful for testing regression models on atmospheric data. The linear regression model for this dataset can be written as follows:

$$ y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \varepsilon. $$

In this equation, $\beta_0$ represents the intercept, while $\beta_1$, $\beta_2$, and $\beta_3$ are the coefficients of $x_1$, $x_2$, and $x_3$, respectively. The term $\varepsilon$ is the error term, which accounts for the difference between the actual and predicted values.

To check whether the dataset is affected by multicollinearity, three tools were used: the Variance Inflation Factor (VIF), the Condition Number (CN), and a heatmap. The CN is computed from the eigenvalues of the data, which are as follows:

The presence of near-zero eigenvalues (0.05 and 0.003) shows that the predictors are highly interdependent, that is, nearly linearly dependent.

A CN greater than 30 is commonly taken to indicate multicollinearity. The CN, calculated as the ratio of the largest to the smallest eigenvalue, is as follows:

Additionally, the VIF values for each predictor are as follows:

All VIF values far exceed the commonly used threshold of 10, which confirms high multicollinearity in the dataset.
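The VIF and CN diagnostics can be sketched in NumPy. This sketch makes two assumptions:

- The VIFs are taken as the diagonal of the inverse correlation matrix of the predictors, which equals $1/(1 - R_j^2)$.
- The CN is computed as the ratio of the largest to the smallest eigenvalue of that same correlation matrix. The text does not say which matrix was used, so this is also an assumption.

The toy data are hypothetical and are not the Air Pollution Dataset.

```python
import numpy as np


def vif(X):
    """VIF_j = [R^{-1}]_jj, where R is the predictors' correlation matrix."""
    R = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(R))


def condition_number(X):
    """Ratio of the largest to the smallest eigenvalue of the correlation matrix."""
    lam = np.linalg.eigvalsh(np.corrcoef(X, rowvar=False))
    return lam.max() / lam.min()


# Hypothetical toy predictors: the second column nearly duplicates the first.
X = np.array([
    [1.0, 1.1],
    [2.0, 1.9],
    [3.0, 3.05],
    [4.0, 3.95],
    [5.0, 5.0],
])
print("VIF:", np.round(vif(X), 1))
print("CN:", round(condition_number(X), 1))
```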

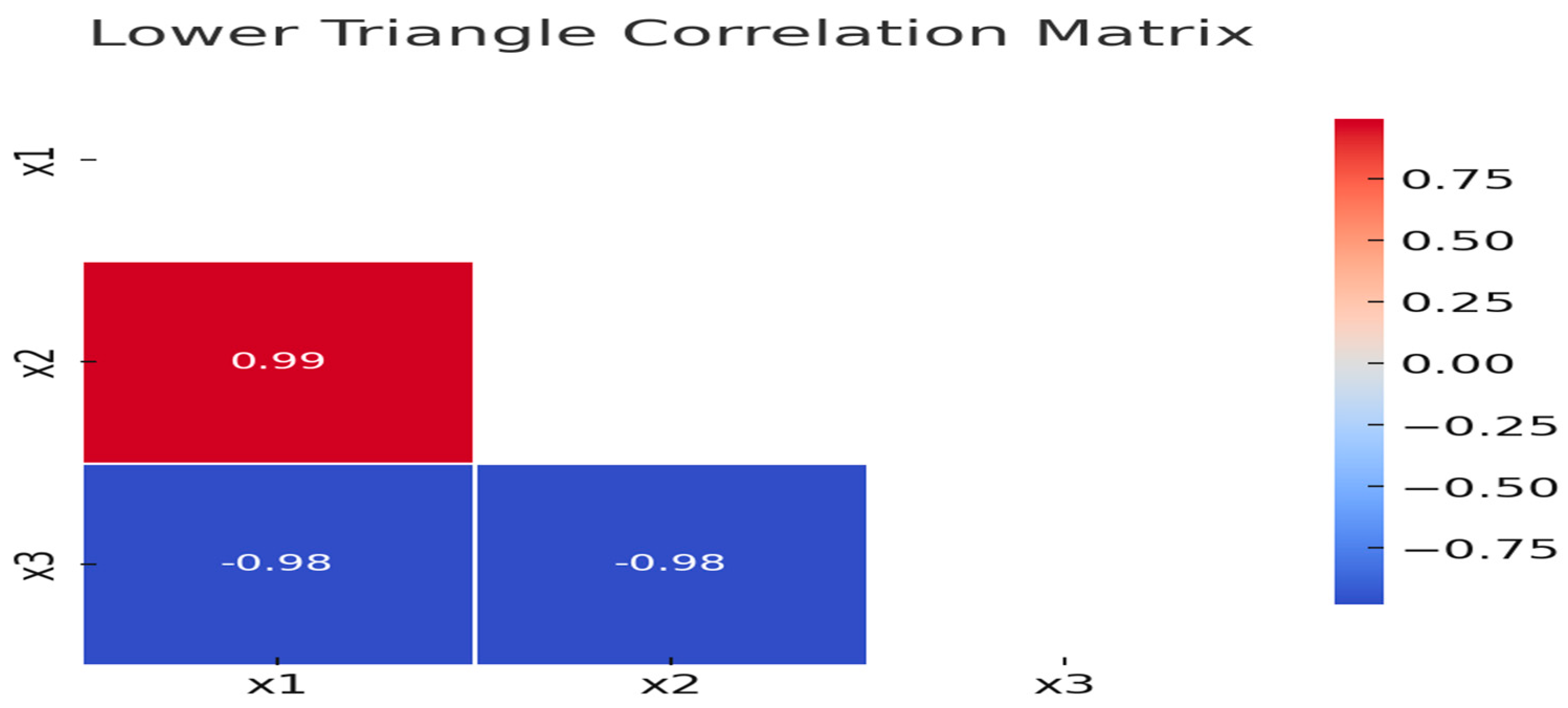

Figure 1 also shows that the variables are strongly correlated with each other; therefore, strong multicollinearity exists in the data. Because OLS regression would produce unstable coefficient estimates, we applied our newly developed estimators, which are designed to handle highly collinear data, and compared them with OLS and other existing methods.

In this application, the proposed estimators performed better than OLS and the other existing approaches. These findings, presented in Table 1, are consistent with our earlier simulation results.

4.2. Hald Cement Dataset

This dataset consists of 13 observations of five numerical variables: the dependent variable ($y$) and four independent variables ($x_1$, $x_2$, $x_3$, $x_4$). The regression model is expressed as follows:

To check whether the dataset is affected by multicollinearity, two indicators were used: the CN and the heatmap. The CN is computed from the eigenvalues of the data, which are as follows:

The resulting CN is approximately 986, which is greater than the threshold of 30. This indicates that the data exhibit multicollinearity.

Figure 2 clearly shows that the independent variables are correlated with each other.

The newly proposed and existing estimators were employed to compare their performance based on MSE, in order to identify the best estimator for mitigating multicollinearity.

Table 2 presents the MSE and regression coefficients for the Hald Cement Dataset. It covers the newly proposed estimators (SPS1, SPS2, and SPS3), OLS, and the existing estimators (HK, BHK, KAM, KGM, Kmed, KMS, and BLRE). The results align well with the simulation results. The SPS1 estimator performed best, with the lowest MSE, substantially lower than that of OLS and the other methods. SPS2 and SPS3 also performed well, each with an MSE of 0.381985, outperforming all existing methods except Kmed (0.375257).

Overall, the SPS estimators offer a promising alternative for more accurate predictions in modeling this dataset.

5. Conclusions

This study comprehensively evaluated the performance of the newly proposed ridge-type shrinkage estimators (SPS1, SPS2, and SPS3) against OLS and existing estimators, namely HK, BHK, KAM, KGM, Kmed, KMS, and BLRE. The scenarios differed in the degree of multicollinearity, sample size, number of predictors, and error variance. Both the simulations and the real-world data analyses showed that the proposed SPS estimators performed better at mitigating multicollinearity and the instability it induces, while maintaining estimation efficiency.

SPS estimators are recommended for regression analysis involving multicollinear data, particularly in environmental and physical science studies, where predictor interdependence is common.

Future work could explore theoretical extensions to nonlinear or heteroscedastic settings and applications to larger, more complex environmental and physical science datasets.