1. Introduction

As a result of rapid advances in networking, communication technology, and computing, the management of modern control systems closely intertwines the cyber and physical realms. To ensure the stability of these systems, the signals transmitted among their components over a shared communication network must be dependable. As demonstrated in [1,2], improving performance and reliability becomes challenging when resources are constrained. Because of such constraints, measurement errors are inevitable when predicting the behavior of real systems and making decisions. In such cases, these errors are often handled in probabilistic terms to achieve better results [3,4].

Linear–quadratic–Gaussian (LQG) control, a classical and widely used optimal control method in modern control theory, primarily addresses the optimal control of linear stochastic systems. It obtains optimal feedback control laws by solving the Riccati equation, combining state feedback with Kalman filtering. LQG control has been successfully applied in fields such as aerospace, robotic control, and power systems. However, as modern industries move towards intelligence and networking, control systems have become significantly more dependent on networks. In this context, the impact of communication constraints on system performance cannot be overlooked [5,6,7]. Taking smart grids as an example, limited communication bandwidth restricts the amount of real-time data exchanged between power nodes. When grid loads change suddenly, nodes may fail to exchange power adjustment information in time, which exacerbates voltage fluctuations and can trigger local grid failures. Aghaee et al. focused on the impact of communication delays and packet loss on distributed secondary control of microgrids [8]. The study in [9] investigates the safety of autonomously controlled surgical robots, focusing on the modeling and LQG-based control of nonlinear tissue compression and heating. The authors of [10] characterize the trade-off between control cost and data transmission rate in infinite-horizon LQG systems. Furthermore, the work in [11] examines the optimal tracking performance of single-input single-output networked control systems over limited communication channels. In a similar vein, this paper studies the LQG control problem under communication constraints.

Prior research [12,13,14] has examined energy transmission in systems equipped with energy harvesters, which convert various forms of environmental energy into electricity. In [15], the authors demonstrated that a distributed resource allocation game algorithm significantly improves energy efficiency and reduces network interference, thereby ensuring successful data transmission. In [16], the authors investigated energy transmission for an energy-harvesting sensor used in remote state estimation, using a continuous-time approach together with perturbation analysis. Building on this work, they proved the existence of an optimal deterministic and stationary transmission power allocation policy [17]. These studies indicate that integrating energy harvesters can substantially improve system performance. Accordingly, we assume that the energy selector can harvest energy efficiently.

Generally speaking, control strategies and energy selection strategies must be co-designed. For example, researchers have shown that the optimal controller does not, in general, exhibit the separation principle [2]. On the other hand, they gave conditions under which the controller exhibits a separation principle [5]. Based on the separation principle proposed in [5], a novel framework is introduced in [18] that integrates a controller with a quantization selector, enabling the optimal quantization level to be chosen dynamically from a given set of quantization levels. Nevertheless, the optimal structure of the controller and the energy selector has not been sufficiently investigated. This paper adopts a similar approach and investigates the separation structure between the controller and the energy selector. In contrast to [18], this paper considers an unreliable communication channel that may drop packets. In [19], the authors addressed energy management for a controller that transmits information to a sensor over communication channels with unknown packet loss. Unlike [19], this paper examines a scenario in which the plant transmits information to the filter over an unknown packet-dropping link. Similar to the channel model in [17], the transmission energy affects the packet loss probability. Unlike works that assume perfect receipt acknowledgments [17,20], this study also considers a feedback channel from the filter to the plant that is itself an imperfect packet-dropping link.

This study examines the joint control and energy scheduling problem that arises from a faulty feedback channel. At each time step, the energy selector determines the power allocated for data transmission based on imperfect acknowledgments and the currently available energy. Simultaneously, the controller generates control inputs that maintain an optimal trade-off between control performance and cost. Under the optimal state estimate, the optimal control problem is characterized by a Riccati equation that incorporates packet loss. The energy selection problem is addressed by recasting it as a Markov decision process (MDP) with perfect acknowledgments. In summary, the main contributions of this study are as follows:

(1) The LQG optimal control problem under the optimal estimated state with given energy levels is investigated by considering imperfect acknowledgments and energy constraints.

(2) Accounting for packet loss information and transmission cost, a novel optimal controller structure under the optimal estimated state is derived using backward induction.

(3) The optimal energy selection strategies to ensure filtering performance and the suboptimal controller gain are co-designed, which can be computed offline and independently.

Notations: The symbols and tr(X) denote the transpose and the trace of a real-valued matrix denoted by X, respectively. The inverse of the matrix X is expressed as . The sets of non-negative integers and positive integers are represented by and , respectively. The n-dimensional Euclidean space is referred to as . Additionally, signifies the probability of X, while indicates the conditional expectation of X given Y.

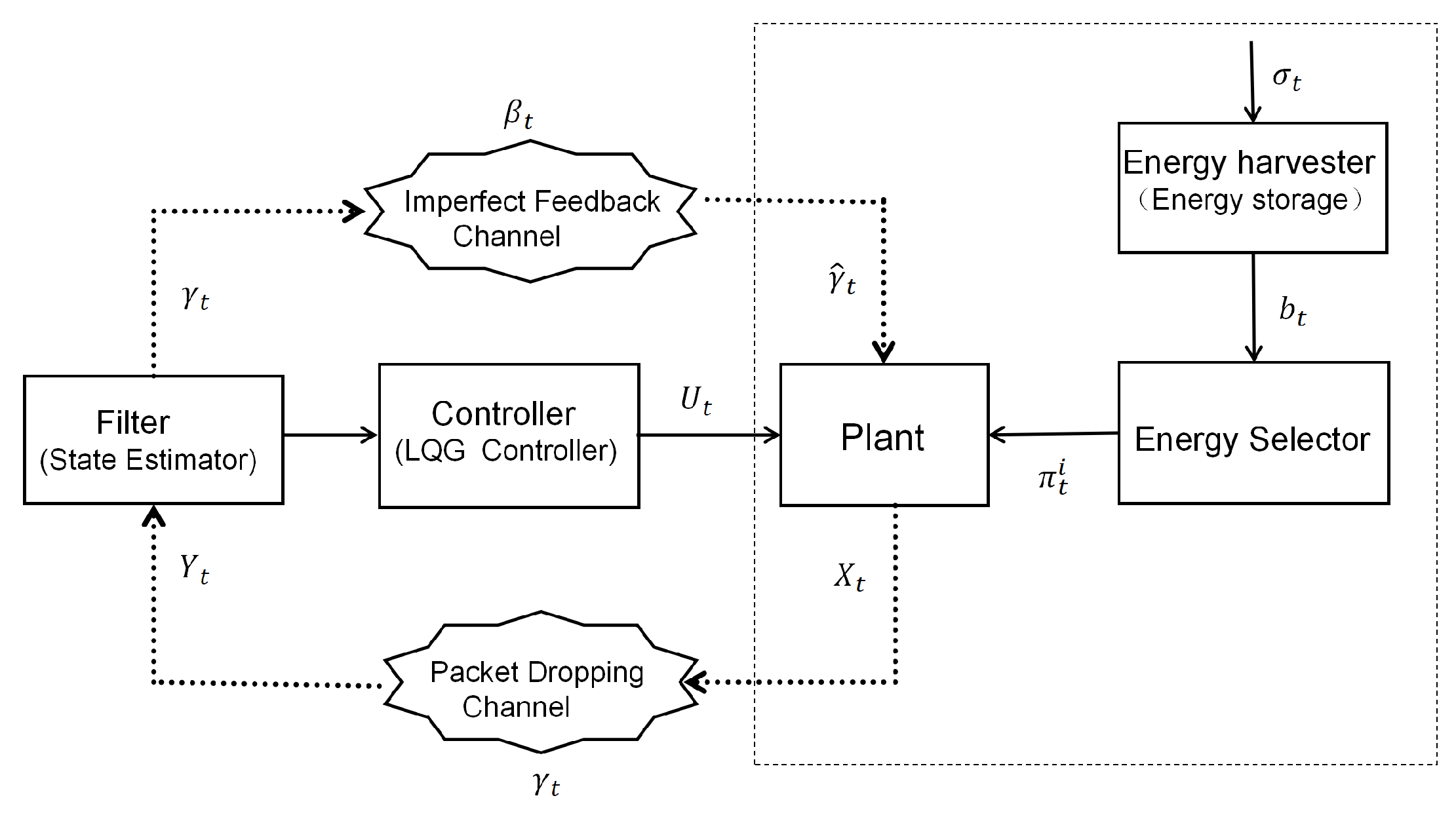

As shown in Figure 1, the plant acquires energy from the environment through its integrated energy-harvesting subsystem, which comprises an energy collector, an energy storage battery, and an energy selector. The harvested energy is stored in the battery, and the energy selector then chooses the optimal transmission energy from M energy levels to power the transmission of the state value. The plant transmits its own state to the filter over a wireless channel subject to packet loss. The filter estimates the state from the received state values and sends the estimate to the controller. Based on its own information set, the controller computes the optimal control signal in accordance with the separation principle and feeds it back to the plant to stabilize the state. Meanwhile, the filter sends an imperfect acknowledgment signal to the plant, which uses this feedback to adjust its subsequent energy selection. Together, these components form a closed loop of “state → energy optimization → estimation → control → feedback regulation” that enables stable operation under energy constraints and imperfect feedback. The following sections describe each submodule of the system in Figure 1 in detail.

3. Filter and Imperfect Feedback

The filter receives the state value transmitted by the plant, performs state estimation based on historical measurements and control information, and transmits the estimates to the controller; at the same time, it sends an imperfect feedback signal to the plant.

3.1. Imperfect Feedback

A signal acknowledging transmission is dispatched from the filter to the plant at every time step. This study addresses a feedback channel that may contain errors. The packet loss sequence

remains unknown to the plant. Instead, an imperfect acknowledgment sequence

is received by the plant from the filter. According to [4], the erroneous feedback channel is modeled as follows:

When

, all feedback signals are completely lost with a specified dropout probability

where

. Conversely, when

, a transmission error occurs with probability

such that

. This error in transmission results in

if

and

if

. The conditional probability matrix for the feedback channel is formulated as follows:

where

for

and

. The system receives perfect packet reception acknowledgments when both

and

are set to 0.
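To make the erasure-then-flip feedback model concrete, the sketch below builds the conditional probability matrix and samples imperfect acknowledgments. It is a minimal illustration under stated assumptions: the names `eps_e` (erasure probability) and `eps_f` (flip probability), and the encoding of an erased acknowledgment as `2`, are hypothetical labels for the probabilities described above, not the paper's notation.

```python
import numpy as np


def feedback_matrix(eps_e: float, eps_f: float) -> np.ndarray:
    """Return M with M[gamma, ack] = P(ack | gamma).

    gamma: 0 = packet lost at the filter, 1 = packet received.
    ack:   0 = "lost" reported, 1 = "received" reported, 2 = feedback erased.
    eps_e and eps_f are hypothetical names for the erasure and flip probabilities.
    """
    keep = (1.0 - eps_e) * (1.0 - eps_f)  # not erased and not flipped
    flip = (1.0 - eps_e) * eps_f          # not erased but flipped
    return np.array([
        [keep, flip, eps_e],  # gamma = 0
        [flip, keep, eps_e],  # gamma = 1
    ])


def sample_ack(gamma: int, M: np.ndarray, rng: np.random.Generator) -> int:
    """Draw one imperfect acknowledgment given the true arrival indicator gamma."""
    return int(rng.choice(3, p=M[gamma]))


M = feedback_matrix(eps_e=0.1, eps_f=0.05)
rng = np.random.default_rng(0)
print(M)
print([sample_ack(1, M, rng) for _ in range(10)])
```

Setting both probabilities to zero recovers the perfect-acknowledgment case, in which each row of the matrix becomes a unit vector.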

3.2. State Estimation

Let us define the sets as follows: represents the set of state history, represents the history of state values that the filter can receive, denotes the control history, corresponds to the history of feedback signals, and finally, signifies the history of transmission selection.

The data accessible to the controller at time

t is represented as

with the initial state being

, where

a

algebra. Based on the definition in Equation (9), one can interpret an admissible control strategy as a function that maps

to

. We label these strategies as

. In contrast to the feedback-based control methods described in [2], our approach involves transmitting

rather than

at time

t. It is important to note that

can be easily calculated using the values of

,

, and

.

Now, we introduce notation , which we will call the prediction of . Additionally, we define as the filtered version of . Consequently, we express .

Using (1) and because

is

-measurable, we have

Let us introduce the error

. From this, it can be derived that

where

. The estimation error of the state

is influenced by the sequence

via the variables

. However, it is independent of the control strategy

.
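The claim that the estimation error depends on the packet-loss sequence but not on the control strategy can be checked numerically. The sketch below is an illustration only: it assumes a plant x_{t+1} = A x_t + B u_t + w_t, a filter that receives the exact state when a packet arrives and otherwise propagates its previous estimate with the known control, and a counter `tau` of consecutive losses. These modeling choices and all names are assumptions standing in for the paper's model equations.

```python
import numpy as np


def run_filter(A, B, x0, u_seq, w_seq, gamma_seq):
    """Simulate the plant and a filter with intermittent, error-free state packets.

    Returns the estimation errors x_t - x_hat_t and the consecutive-loss
    counts tau_t (0 right after a successful transmission).
    """
    x = x0.copy()
    x_hat = x0.copy()  # assume the filter knows the initial state
    errors, taus, tau = [], [], 0
    for u, w, gamma in zip(u_seq, w_seq, gamma_seq):
        x_pred = A @ x_hat + B @ u  # filter prediction using the known control
        x = A @ x + B @ u + w       # true plant update
        if gamma:
            x_hat, tau = x.copy(), 0      # packet arrives: state known exactly
        else:
            x_hat, tau = x_pred, tau + 1  # packet lost: keep the prediction
        errors.append(x - x_hat)
        taus.append(tau)
    return np.array(errors), taus


rng = np.random.default_rng(0)
A = np.array([[1.1, 0.2], [0.0, 0.9]])
B = np.array([[0.0], [1.0]])
x0 = np.array([1.0, -1.0])
w_seq = rng.normal(size=(5, 2))
gamma_seq = [1, 0, 0, 1, 0]
u_a = np.zeros((5, 1))          # no control at all
u_b = rng.normal(size=(5, 1))   # an arbitrary, different control sequence
err_a, taus = run_filter(A, B, x0, u_a, w_seq, gamma_seq)
err_b, _ = run_filter(A, B, x0, u_b, w_seq, gamma_seq)
print(np.allclose(err_a, err_b), taus)  # True [0, 1, 2, 0, 1]
```

Because the known control enters both the plant and the prediction, it cancels in the error, which then evolves only through A, the noise, and the loss pattern.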

4. Suboptimal Control and Energy Selection Strategy

The controller receives the state estimation transmitted by the filter and, based on its own information set

, outputs the optimal control signal

to ensure the stability of the plant state and minimize the total cost. The energy transmission selector at time

t has access to the information given by

with

. The strategies for selecting the transmitter can likewise be considered as a mapping:

to

. These strategies are denoted as

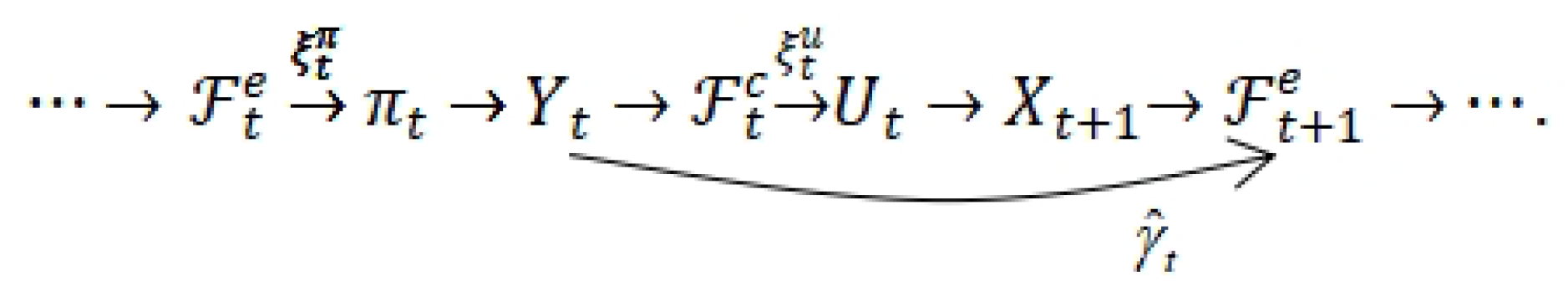

. Thus, the decision-making process during a single time step is illustrated in

Figure 2.

The controller and the energy transmission selector cooperatively minimize a finite-horizon quadratic cost function, expressed as follows:

where

,

,

,

,

represents the entire sequence

; likewise,

is defined similarly.

Remark 1. The overall cost function to be minimized is a weighted combination of control performance and transmission cost, and it directly reflects the trade-off between them. The quadratic terms penalize the deviation of the state from the equilibrium and the control input consumption; smaller values indicate better control stability. The energy term penalizes high-cost energy selection, weighted by Λ; smaller values indicate lower energy expenditure. To avoid the complexity of joint optimization, we use the separation principle to decompose the problem into two decoupled but mutually constrained subproblems, ensuring that neither control efficiency nor the energy selection cost (weighted by Λ) is sacrificed excessively.

It is essential to identify the optimal mappings

and

that minimize the cost function (14) across all admissible strategies:

4.1. Optimal Control Under the Optimal Estimated State with the Separation Principle

When packet loss occurs during the process of transmission, the controller cannot receive

, and we have the following function:

where

. It is clear that the values of

belong to a countably infinite collection:

.

We may introduce a random variable

to represent the number of consecutive time steps elapsed since the most recent successful transmission before time

t:

The analysis above suggests a structural separation between the controller and the transmission energy selector. In the following, we formally demonstrate that a separation principle holds for this problem. Associated with the cost function (14), let us define the value function as

Expression (18) is rewritten as follows using the dynamic programming principle:

If

and

serve to minimize the right-hand side of (19), then we have

and

. Additionally, from (18), we can derive the following:

Here, the expectation in Equation (20) pertains to the random variables

and

. For conciseness in the upcoming analysis, we will denote

as follows:

where

is the combined information set. Note that the information set

(and, consequently,

) encompasses the realization

of the state

. The subsequent theorem describes the optimal policy

for every

.

Theorem 1. Given the information set available to the controller at time k, the optimal control policy , which operates under the optimal estimated state to minimize the right-hand side of Equation (19), can be expressed as follows: This holds for every .

Proof. For conciseness, we write

rather than

, and

will denote

. The proof of this theorem relies on the dynamic programming principle. The central concept is to establish that the value function linked to the optimal control problem, assuming the best estimate of the state, is represented as follows:

where

signifies the outcome of the state

, and

is defined as in (23b); this holds for all

.

The matrix

and the scalar

are defined by the following equations:

Neither

nor

depends on previous or future control or energy selection decisions. Thus, these values can be calculated offline. Additionally, from (12), it can be deduced that

is independent of the earlier control history

, being solely determined by

. Consequently,

is not influenced by the control action

. Equation (25) can be reformulated as follows.

Based on the definition of

presented in (18), we can express

as follows:

Following this, we need to confirm that

conforms to the structure given in (24):

Substituting Equation (1) and simplifying yields the following expression:

In Equation (30), the term

is the sole component that is influenced by

. Therefore,

serves as the minimum mean squared estimate for

. Then,

After replacing the optimal

in (29), we derive

Consequently, applying the definitions of

and

leads us to the conclusion that

Therefore, the expression

is indeed of the form given by Equation (24). To confirm that Equation (24) also holds at time k, we apply backward induction, assuming that it holds at time

. Toward that end, we obtain

Using Equations (1), (23a), and (23b), one can obtain

The optimal control, denoted as

, which is derived under the best estimated state to minimize (35), is expressed as follows:

After substituting the optimal control under the optimal estimated state from (36) into (35), we obtain

Consequently, the value function can be expressed in the form of (24), and the optimal control based on the best estimated state at times

is provided in (36). □
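Because the gain matrices do not depend on realized packet losses or energy decisions, they can be computed offline. The sketch below uses the standard finite-horizon LQ Riccati recursion with terminal weight `Q_T`. Treating this as what (23a) to (23c) reduce to when the control input reaches the plant without loss is an assumption; the paper's recursion may contain additional packet-loss terms, and all names here are hypothetical.

```python
import numpy as np


def lq_gains(A, B, Q, R, Q_T, T):
    """Finite-horizon LQ gains via the backward Riccati recursion.

    Returns [K_0, ..., K_{T-1}] with u_t = -K_t @ x_hat_t, and S_0.
    """
    S = Q_T.copy()  # terminal cost matrix S_T
    gains = [None] * T
    for t in reversed(range(T)):
        # K_t = (B' S_{t+1} B + R)^{-1} B' S_{t+1} A, solved without an explicit inverse
        K = np.linalg.solve(B.T @ S @ B + R, B.T @ S @ A)
        # S_t = Q + A' S_{t+1} A - A' S_{t+1} B K_t
        S = Q + A.T @ S @ A - A.T @ S @ B @ K
        gains[t] = K
    return gains, S


one = np.array([[1.0]])
gains, S0 = lq_gains(one, one, one, one, one, T=2)
print([float(K[0, 0]) for K in gains], float(S0[0, 0]))
```

Under certainty equivalence, the control at each step is then simply u_t = -K_t applied to the filter's estimate.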

Remark 2. The optimal feedback controller for the separated control problem is given by Theorem 1 in [5], which shows that a separation principle exists if the policy satisfies the structure of Equation (4) in [5]. In this paper, Equation (23a) has exactly the same structure as Equation (4) in [5], which shows that the proposed optimal control strategy satisfies the separation principle.

Remark 3. Designing a controller based on the filter requires considering filtering performance and control performance simultaneously. A typical approach is to guarantee filtering performance first and then ensure control performance. For example, the separation structure between the controller and the quantizer is studied in [18] to guarantee filtering performance and control performance separately. Using a similar analysis, we find that the state estimation error at the filter depends only on the energy selection policy through the variable and not on the control . Likewise, we obtain a separation principle between the energy selector and the controller. Note that, in contrast to [18], this paper takes into account delay:

. In the presence of delay, the information available at the controller is affected because the arrival of some measurements is delayed, and hence the state estimates are affected as well. Theorem 1 accounts for this delay and provides the optimal controller structure for this scenario:

.

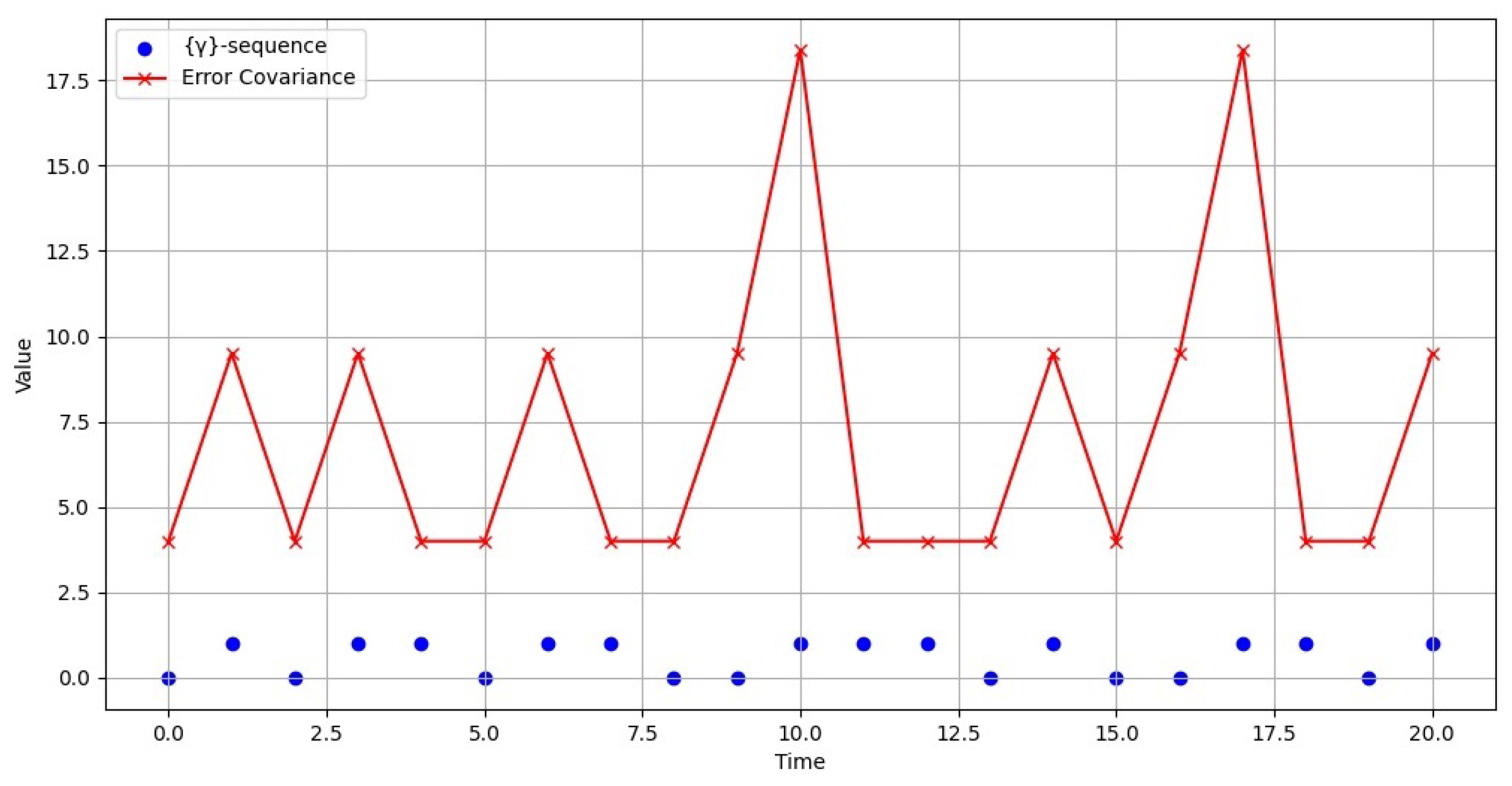

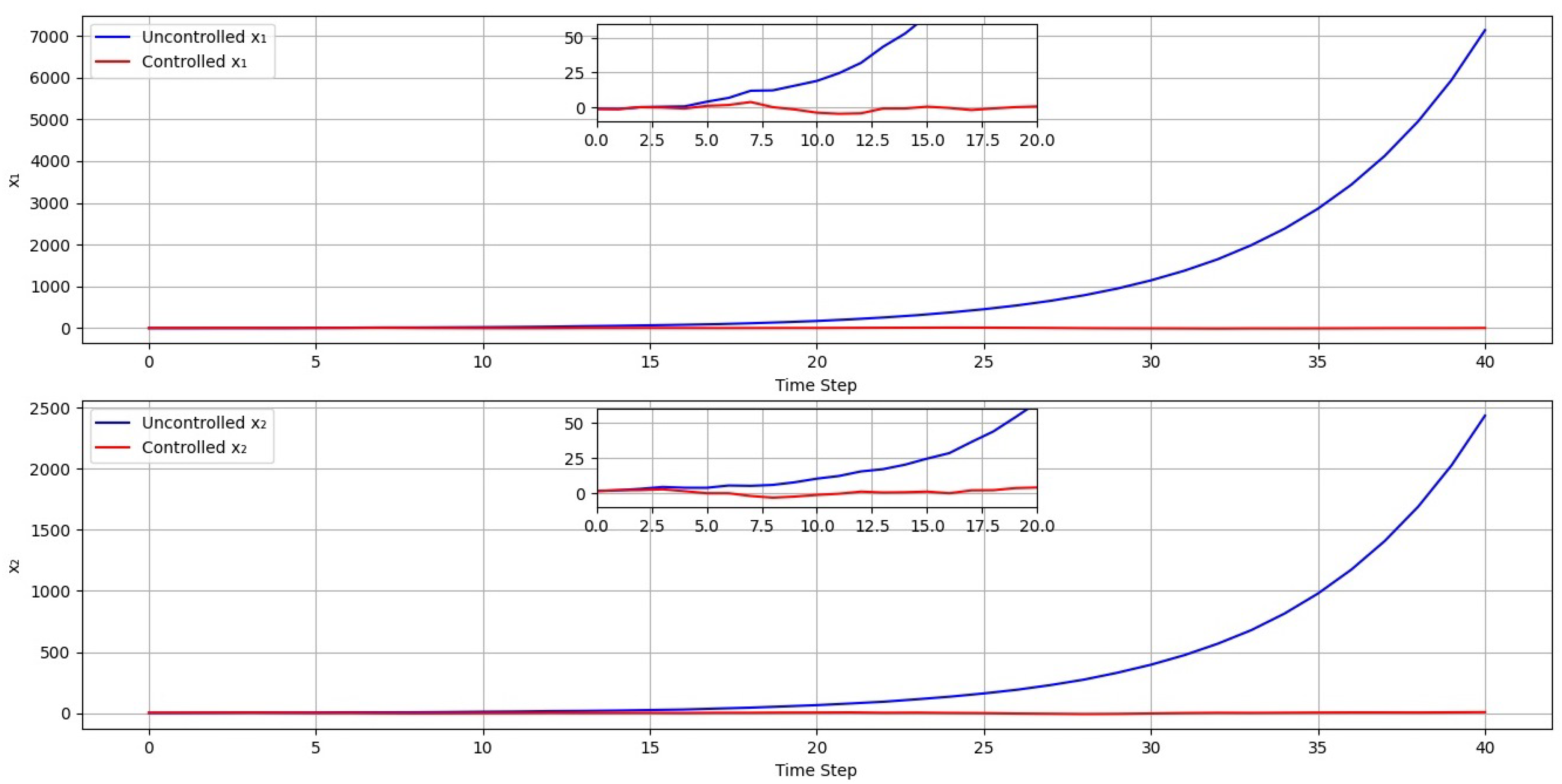

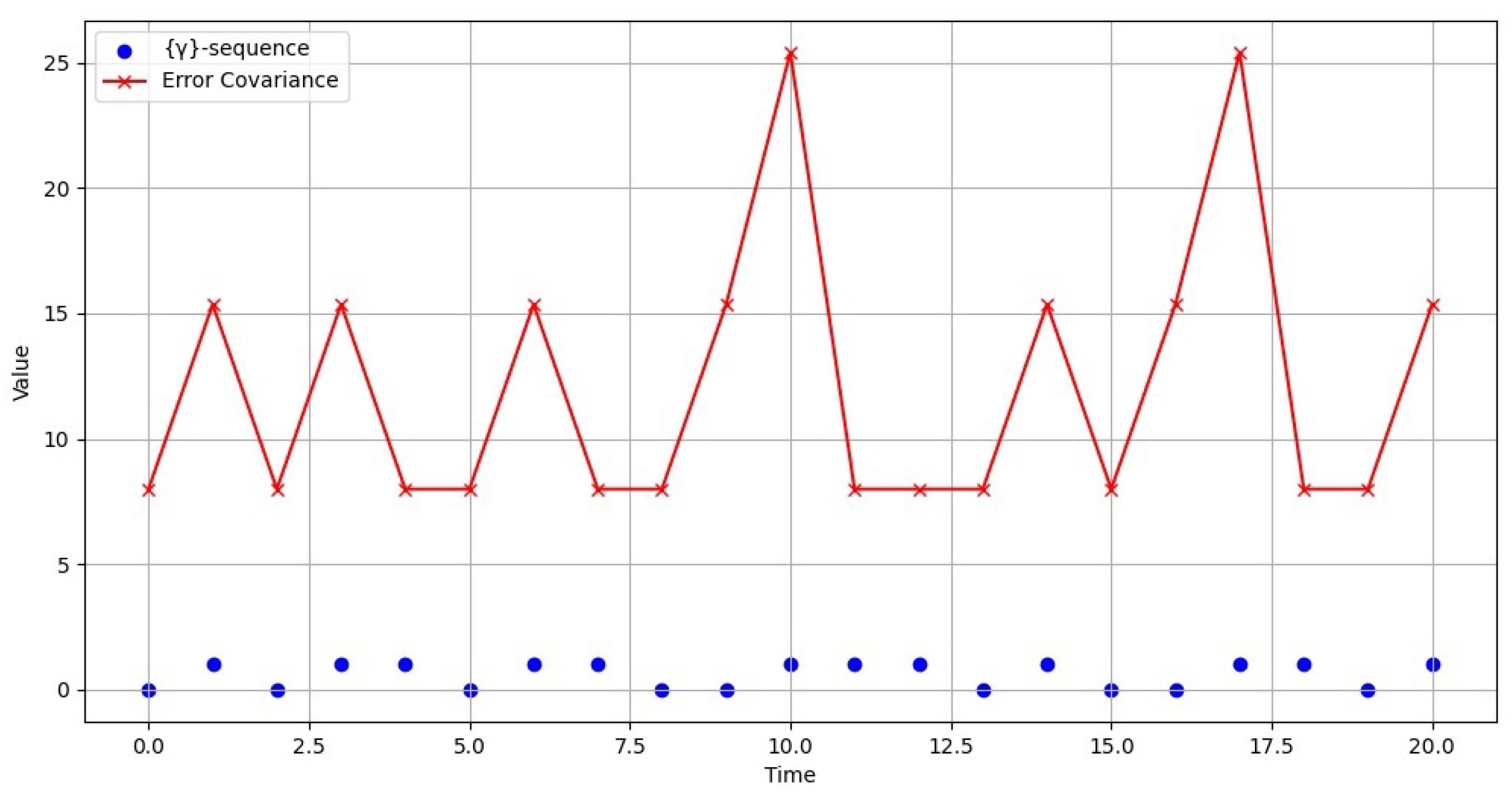

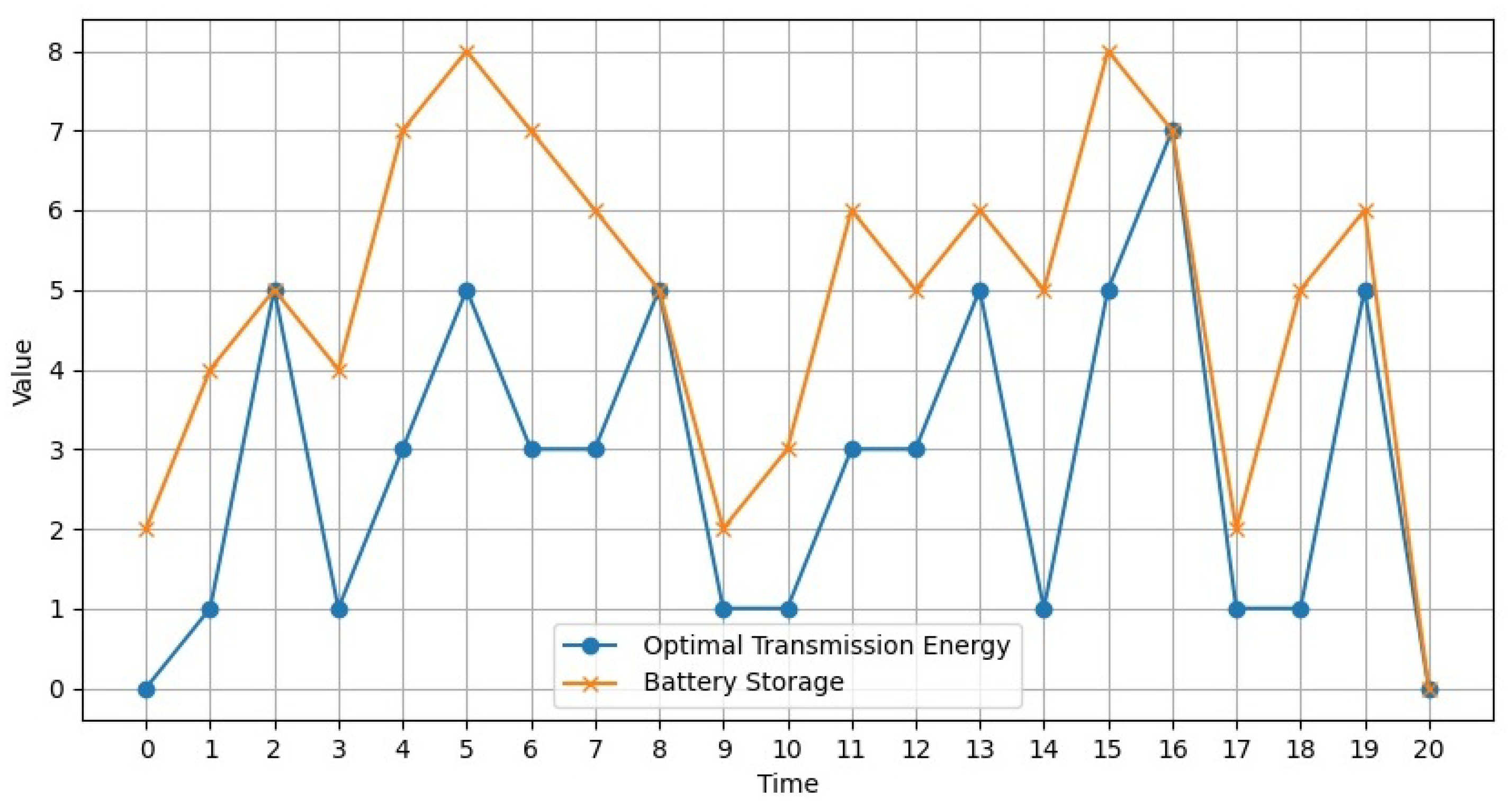

4.2. Optimal Energy Selection to Ensure Filtering Performance and MDP

Because of the energy constraint, packet dropouts may occur when the plant transmits information. The system therefore selects the transmission energy that reduces both the error covariance at the filter and the energy selection cost. The optimization problem can be reformulated as follows:

This subsection aims to identify the ideal energy selection strategy that guarantees filtering efficacy while minimizing the above cost function. Due to the presence of an imperfect feedback communication channel, the system cannot verify the receipt of packets by the filter. Thus, at time t, the system acquires “imperfect state information” regarding through the acknowledgment sequence . This optimization problem can be interpreted as a Markov decision process (MDP) with flawed state information. Furthermore, the issue characterized as an MDP with imperfect acknowledgments may be transformed into an MDP with accurate acknowledgments by utilizing the information-state framework.
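As a hedged illustration of the information-state idea, suppose the error covariance at the filter is fully determined by the number n of consecutive losses, so that a probability vector over n in {0, ..., N} (truncated at N) can stand in for the density over covariances. The sketch performs one prediction-and-correction step: the chosen energy sets a success probability `lam_e`, and the acknowledgment is weighed through a likelihood matrix `M[gamma, ack]` with the erasure-then-flip structure of Section 3.1. All names, the truncation, and this discretization are assumptions; Lemma 1 gives the general recursion.

```python
import numpy as np


def update_information_state(belief, lam_e, M, ack):
    """One Bayes step for a belief over the consecutive-loss count n in {0, ..., N}.

    belief[n]: probability that n packets have been lost in a row, given past acks.
    lam_e:     packet success probability for the chosen transmission energy.
    M:         M[gamma, ack] = P(ack | gamma); ack 2 means the feedback was erased.
    """
    N = len(belief) - 1
    # Prediction through the packet channel: success resets n to 0, loss increments it.
    lost = np.zeros_like(belief)
    lost[1:] = (1.0 - lam_e) * belief[:-1]
    lost[N] += (1.0 - lam_e) * belief[N]  # counts beyond N are lumped into N
    received = lam_e * belief.sum()
    # Correction with the acknowledgment likelihood.
    post = M[0, ack] * lost
    post[0] += M[1, ack] * received
    total = post.sum()
    if total == 0.0:
        raise ValueError("acknowledgment has zero probability under the model")
    return post / total


M_perfect = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
b = np.full(4, 0.25)
print(update_information_state(b, 0.7, M_perfect, ack=1))
print(update_information_state(b, 0.7, M_perfect, ack=0))
```

With perfect acknowledgments the posterior collapses to a point mass, which is the perfect-state-information case; an erased acknowledgment leaves the prediction unchanged.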

Let all observations obtained from the filter up to time

t be represented as

for

, with

. Next, we define the information state as

representing the conditional probability of the estimation error covariance

given

and

. The following lemma shows how

can be determined from

in conjunction with

and

. From [4], we have the following lemma.

Lemma 1 ([4]). The dynamics of the information-state function are described as follows: with the initial condition given by , where δ denotes the Dirac delta function. In light of the function

, the estimation error covariances at the filter satisfy the following iteration expression:

The condition of having perfect state information is characterized by the information-state

. Define a binary random variable

, akin to

. For a given

P, we express the following

as the operator of the random Riccati equation. Let

denote the collection of all non-negative definite matrices. We denote the space of all probability density functions defined on

as

, characterized by the condition

for any

. Building on the recursion of the information-state, we define

for a given

, as well as for

and

p. It is important to highlight that when both

and

are set to zero, the optimization problem reduces to a stochastic control problem with perfect state information.
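For readers implementing the recursion, the sketch below writes out one common form of the random Riccati operator, the one used in Kalman filtering with intermittent observations. It assumes a measurement model y_t = C x_t + v_t with process and measurement noise covariances `W` and `V`; these symbols and the exact form are assumptions, since the operator defined above may differ in detail.

```python
import numpy as np


def h(P, A, W):
    """Open-loop covariance prediction, applied when the packet is lost."""
    return A @ P @ A.T + W


def g(P, A, C, W, V, gamma):
    """Random Riccati operator: h(P) minus the Kalman correction when gamma = 1."""
    correction = A @ P @ C.T @ np.linalg.solve(C @ P @ C.T + V, C @ P @ A.T)
    return h(P, A, W) - gamma * correction


one = np.array([[1.0]])
print(g(one, one, one, one, one, gamma=0))  # [[2.]]
print(g(one, one, one, one, one, gamma=1))  # [[1.5]]
```

With C = I and V = 0, a successful reception resets the predicted covariance to W, which matches a filter that receives the exact state.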

We formulate a Markov decision process (MDP) to address the stochastic control problem. At time

t, the MDP is characterized by the state

, which resides within the state space

. Let

represent the set of actions corresponding to transmission energy, while

denotes the collection of permissible actions for the state

. The reward for a single stage is given by

The optimal energy selection policy, which guarantees effective filtering performance, is computed offline using the Bellman dynamic programming equations below.

Theorem 2. Given the initial condition , the finite-horizon minimization problem with imperfect acknowledgments satisfies the following Bellman optimality equation: and the terminal condition is where all available energy is used for transmission at the final time T. Accordingly, the optimal selection policy

is obtained by

Proof. For this proof, please consult Theorem

in reference [21]. □

To facilitate the calculations, we express

where the energy harvesting sequence

is characterized by a finite-state Markov chain. The matrix

represents the state transition probabilities of the energy harvesting processes, and

.
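A minimal numerical sketch of the backward recursion in Theorem 2 is given below for the perfect-acknowledgment case, where the information state collapses to a point mass. It relies on simplifying assumptions that are not taken from the paper: battery and energy levels are integers, the error covariance is summarized by a scalar cost `P_seq[n]` indexed by the consecutive-loss count n (truncated at N), each energy level i has a known success probability `lam[i]`, the battery evolves as b' = min(b - e + h, C), and the harvested energy follows a finite-state Markov chain with transition matrix `H_trans`. The terminal stage spends the largest affordable energy level, mirroring the terminal condition of Theorem 2. All names are hypothetical.

```python
import numpy as np


def solve_energy_dp(T, C, levels, lam, P_seq, Lam, harvest, H_trans):
    """Backward dynamic programming for energy selection with perfect acknowledgments.

    State (b, n, h): battery level, consecutive-loss count, harvesting-chain state.
    Returns the stage-0 value function and policy[t, b, n, h] (index into levels).
    """
    N = len(P_seq) - 1
    nH = len(harvest)
    V = np.zeros((C + 1, N + 1, nH))  # value after the horizon is zero
    policy = np.zeros((T, C + 1, N + 1, nH), dtype=int)
    for t in reversed(range(T)):
        V_new = np.empty_like(V)
        for b in range(C + 1):
            feasible = [i for i, e in enumerate(levels) if e <= b]
            if t == T - 1:
                # Terminal stage: spend the largest affordable energy level.
                feasible = [max(feasible, key=lambda i: levels[i])]
            for n in range(N + 1):
                n_loss = min(n + 1, N)
                for h in range(nH):
                    best_cost, best_i = np.inf, feasible[0]
                    for i in feasible:
                        b_next = min(b - levels[i] + harvest[h], C)
                        # One-stage cost: expected covariance cost plus weighted energy.
                        stage = lam[i] * P_seq[0] + (1 - lam[i]) * P_seq[n_loss] + Lam * levels[i]
                        # Cost-to-go averaged over packet outcome and next harvesting state.
                        cont = lam[i] * V[b_next, 0, :] + (1 - lam[i]) * V[b_next, n_loss, :]
                        total = stage + H_trans[h] @ cont
                        if total < best_cost:
                            best_cost, best_i = total, i
                    V_new[b, n, h] = best_cost
                    policy[t, b, n, h] = best_i
        V = V_new
    return V, policy


# Hypothetical example: scalar covariance growth P_{n+1} = 1.44 P_n + 1 from P_0 = 1.
P_seq = [1.0]
for _ in range(5):
    P_seq.append(1.44 * P_seq[-1] + 1.0)
H_trans = np.array([[0.7, 0.3], [0.4, 0.6]])
V0, pol = solve_energy_dp(T=10, C=4, levels=[0, 1, 2], lam=[0.0, 0.6, 0.9],
                          P_seq=P_seq, Lam=0.5, harvest=[0, 1], H_trans=H_trans)
print(pol[0, :, 0, 0])  # stage-0 choices for n = 0, h = 0 across battery levels
```

The imperfect-acknowledgment case replaces the state n by a discretized belief over n, which enlarges the state space but keeps the same backward structure.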

Remark 4. The energy selection problem with imperfect state information is reduced to one with perfect state information using the notion of information-state [21]. The information state is the entire probability density function, not just its value at any particular . Discretized versions of the Bellman equations (46), which in particular include a discretization of the space Ψ of probability density functions, are used in the numerical computation to find a suboptimal solution to the energy selection problem. As the discretization is refined, the suboptimal solution converges to the optimal solution [22]. The Bellman optimality equation (46) can be solved with the value iteration algorithm [23].

4.3. Algorithm

Algorithm 1 summarizes the overall procedure of this article.

Algorithm 1. Joint Optimization of Control and Energy Selection for LQG Systems.

Require: time horizon T, system matrices, noise covariances, state penalty Q, control cost R, energy cost vector, energy levels, battery capacity C, energy harvesting transition matrix T, harvesting set, noise spectral density S, bandwidth K, feedback error probabilities.

Initialize the distributions: initial system state and initial battery level; initial value of the estimation error covariance (Dirac delta distribution) and the information state; energy selection decision variable.

Offline calculation of the controller and energy policy (backward induction):

1: for t = T down to 0 do

2: Solve the Riccati equation for the optimal control gain

3: if t = T then

4: Initialize the terminal matrix (Equation (23c))

5: end if

6: Calculate the quantity in Equation (23b)

7: Compute the control gain (Equation (23a))

8: Store the control gain

9: Run MDP value iteration for the optimal energy policy

10: while the MDP value function has not converged do

11: Predict the information state: update it via Lemma 1

12: Update the value function: calculate the one-stage reward (Equation (45))

13: Update the value function (Equation (46))

14: end while

15: Determine the optimal energy policy (Equation (48))

16: Store the energy policy

17: end for

Online simulation of system operation (forward propagation):

1: for each time step t do

2: Select the transmission energy, subject to the feasibility constraint

3: Compute the packet loss probability

4: Generate the binary loss indicator

5: Generate the measurement (Equation (5))

6: Calculate the continuous loss duration (Equation (17))

7: Compute the filtered state

8: Compute the optimal control (Equation (22))

9: Update the state (Equation (1))

10: Update the battery (Equation (6))

11: Generate the next harvested energy

12: Record the performance indicators: estimation error covariance, transmission cost, and control cost

13: end for
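The online phase of Algorithm 1 can be prototyped for a scalar plant, as sketched below. In this sketch the control gain `K`, the success probabilities `lam`, the harvesting chain, and the energy rule `policy_fn` are all hypothetical placeholders (in practice the gain and policy would come from the offline phase). The filter is assumed to receive the exact state when a packet arrives, no measurement noise is modeled, and the rule is given the true loss count, which presumes perfect acknowledgments.

```python
import numpy as np


def simulate_online(T, A, B, K, levels, lam, C, harvest, H_trans, policy_fn,
                    x0=1.0, b0=2, h0=0, seed=0):
    """Forward simulation of a scalar plant with energy-limited, lossy state packets."""
    rng = np.random.default_rng(seed)
    x, x_pred, b, h, tau = x0, x0, b0, h0, 0
    log = {"gamma": [], "err2": [], "energy": [], "state2": [], "u2": [], "battery": []}
    for t in range(T):
        i = policy_fn(t, b, tau)              # index of the chosen energy level
        if levels[i] > b:
            raise ValueError("selected energy exceeds the battery level")
        gamma = rng.random() < lam[i]         # did the packet reach the filter?
        x_hat = x if gamma else x_pred        # filtered state
        tau = 0 if gamma else tau + 1         # consecutive-loss count
        u = -K * x_hat                        # certainty-equivalent control
        log["gamma"].append(bool(gamma))
        log["err2"].append((x - x_hat) ** 2)
        log["energy"].append(levels[i])
        log["state2"].append(x ** 2)
        log["u2"].append(u ** 2)
        log["battery"].append(b)
        x_pred = A * x_hat + B * u            # filter's prediction for the next step
        x = A * x + B * u + rng.normal()      # plant update with unit-variance noise
        b = min(b - levels[i] + harvest[h], C)
        h = int(rng.choice(len(harvest), p=H_trans[h]))
    return log


# Hypothetical rule: spend more energy after a loss, subject to the battery.
def policy_fn(t, b, tau):
    if b >= 2 and tau >= 1:
        return 2
    return 1 if b >= 1 else 0


log = simulate_online(T=50, A=1.2, B=1.0, K=0.8, levels=[0, 1, 2], lam=[0.0, 0.6, 0.9],
                      C=4, harvest=[0, 1], H_trans=np.array([[0.7, 0.3], [0.4, 0.6]]),
                      policy_fn=policy_fn)
print(np.mean(log["err2"]), sum(log["energy"]))
```

Recording the squared estimation error, the energy spent, and the quadratic state and control terms per step gives the three performance indicators listed in the last step of the online phase.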