Discovering New Recurrence Relations for Stirling Numbers: Leveraging a Poisson Expectation Identity for Higher-Order Moments

Abstract

1. Introduction

2. Preliminary

3. Expectation Identity

4. Analytical Derivation of the First Four Origin Moments

5. Analytical Determination of the kth Origin Moment

6. Table of Coefficients for the First 10 Origin Moments

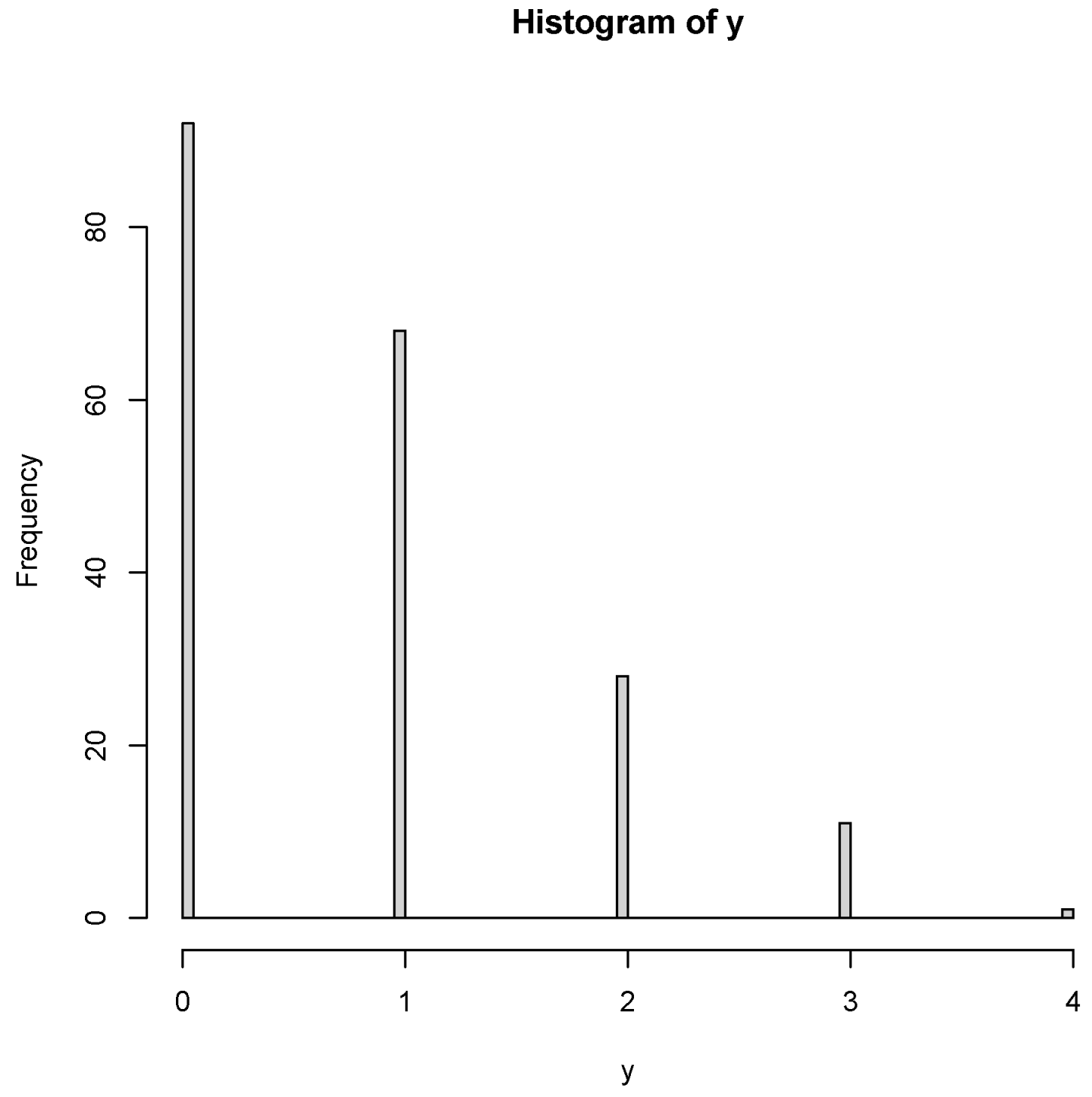

7. Simulations

8. Two Empirical Data Examples

9. Conclusions and Discussions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

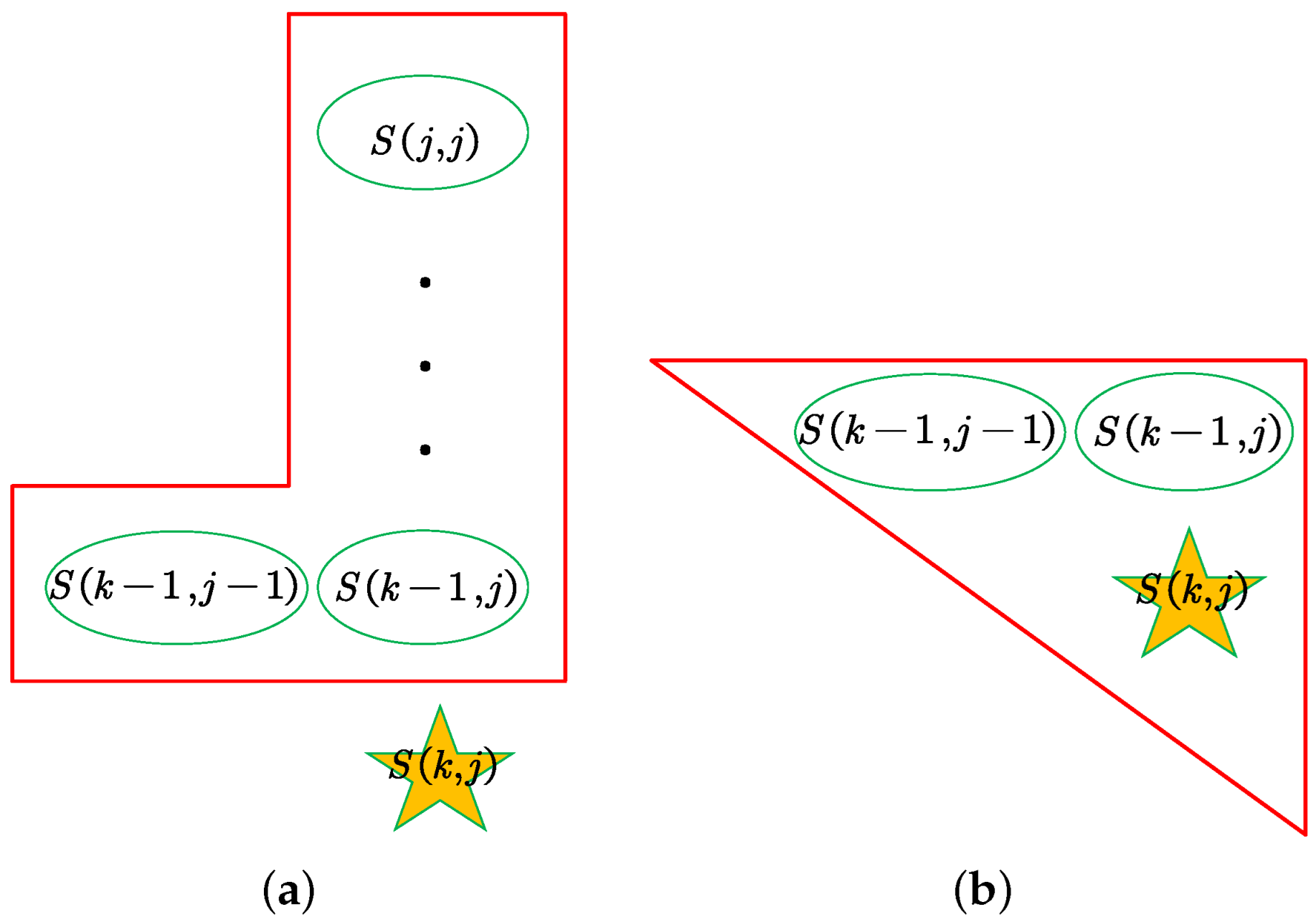

Appendix A


| k | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | | | | | | | | | | |
| 1 | 0 | 1 | | | | | | | | | |
| 2 | 0 | 1 | 1 | | | | | | | | |
| 3 | 0 | 1 | 3 | 1 | | | | | | | |
| 4 | 0 | 1 | 7 | 6 | 1 | | | | | | |
| 5 | 0 | 1 | 15 | 25 | 10 | 1 | | | | | |
| 6 | 0 | 1 | 31 | 90 | 65 | 15 | 1 | | | | |
| 7 | 0 | 1 | 63 | 301 | 350 | 140 | 21 | 1 | | | |
| 8 | 0 | 1 | 127 | 966 | 1701 | 1050 | 266 | 28 | 1 | | |
| 9 | 0 | 1 | 255 | 3025 | 7770 | 6951 | 2646 | 462 | 36 | 1 | |
| 10 | 0 | 1 | 511 | 9330 | 34,105 | 42,525 | 22,827 | 5880 | 750 | 45 | 1 |
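The triangle above matches the Stirling numbers of the second kind named in the title. As a cross-check, the Python sketch below rebuilds it with the classical textbook recurrence S(k, i) = i·S(k−1, i) + S(k−1, i−1). This is not one of the new recurrences the article derives, and the function name `stirling2_table` is a hypothetical helper introduced only for illustration.

```python
def stirling2_table(max_k):
    """Return rows S[k][i] for 0 <= i <= k <= max_k (Stirling numbers of the second kind)."""
    table = [[1]]  # S(0, 0) = 1
    for k in range(1, max_k + 1):
        prev = table[-1]          # row k-1, which has indices 0..k-1
        row = [0] * (k + 1)       # S(k, 0) = 0 for k >= 1
        for i in range(1, k + 1):
            above = prev[i] if i < k else 0   # S(k-1, i), taken as zero when i = k
            row[i] = i * above + prev[i - 1]  # classical recurrence
        table.append(row)
    return table


if __name__ == "__main__":
    for k, row in enumerate(stirling2_table(10)):
        print(k, row)
```

Each printed row can be compared entry by entry with the corresponding table row.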

| k | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | 22.0 | 94.0 | 454.0 | 2430.0 | 14,214.0 | 89,918.0 | 610,182.0 | 4,412,798.0 |
| | | 6.030 | 22.1 | 94.8 | 458.6 | 2458.2 | 14,399.5 | 91,216.8 | 619,823.5 | 4,488,357.1 |
| | | | | | | | | | | −75,559.1 |
| | | | 22.3 | 94.5 | 446.5 | 2306.8 | 12,844.8 | 76,342.3 | 480,766.0 | 3,188,704.9 |
| | | | | | | | 123.2 | 1369.2 | 13,575.7 | 129,416.0 | 1,224,093.1 |
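The well-known Poisson identity E[X g(X)] = λ E[g(X + 1)], applied with g(x) = x^k, gives the recurrence E[X^(k+1)] = λ Σ_j C(k, j) E[X^j]. The Python sketch below uses that recurrence to compute origin moments. Two things here are assumptions rather than statements from the article: that this is the form of the expectation identity meant in Section 3, and the rate λ = 2, which is inferred only because it reproduces the values 22.0, 94.0, 454.0, ... in the table above. The function name is hypothetical.

```python
from math import comb


def poisson_origin_moments(lam, max_k):
    """Return [E[X^0], ..., E[X^max_k]] for X ~ Poisson(lam)."""
    moments = [1.0]  # E[X^0] = 1
    for k in range(max_k):
        # E[X^(k+1)] = lam * E[(X + 1)^k], expanded with the binomial theorem
        nxt = lam * sum(comb(k, j) * moments[j] for j in range(k + 1))
        moments.append(nxt)
    return moments


if __name__ == "__main__":
    # lam = 2.0 is an assumed rate, not a value stated in the text
    for k, m in enumerate(poisson_origin_moments(2.0, 10)):
        print(k, m)
```

With the assumed rate of 2, the moments of order 3 through 10 equal 22, 94, 454, 2430, 14,214, 89,918, 610,182, and 4,412,798.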

| Number of calls received | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| Frequency of occurrence | 7 | 10 | 12 | 8 | 3 | 2 | 0 |
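A kth sample origin moment for grouped counts such as these is the frequency-weighted mean of x^k. The Python sketch below computes it from the two rows above. The function name and the use of plain lists are illustrative choices, not taken from the article.

```python
# Grouped data copied from the table above: value and how often it occurred
calls = [0, 1, 2, 3, 4, 5, 6]
freq = [7, 10, 12, 8, 3, 2, 0]


def sample_origin_moment(values, counts, k):
    """Frequency-weighted mean of values**k (kth sample origin moment)."""
    n = sum(counts)  # total number of observations
    return sum(c * v**k for v, c in zip(values, counts)) / n


if __name__ == "__main__":
    for k in range(1, 11):
        print(k, round(sample_origin_moment(calls, freq, k), 1))
```

For these data the sample size is 42 and the sample mean is 80/42 ≈ 1.905. The third and fourth sample origin moments round to 18.2 and 68.3.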

| k | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| | | 5.5 | 19.7 | 81.9 | 385.8 | 2015.8 | 11,521.8 | 71,279.5 | 473,353.4 | |
| | | | 18.2 | 68.3 | 277.6 | 1194.0 | 5343.9 | 24,605.4 | 115,626.2 | 551,468.3 |
| | | 0.1 | 1.5 | 13.6 | 108.2 | 821.8 | 6177.9 | 46,674.1 | 357,727.2 | 2,800,506.9 |

| Number of customers | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Frequency of occurrence | 92 | 68 | 28 | 11 | 1 | 0 |

| k | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| | | 1.453 | 3.271 | 8.9 | 28.1 | 100.5 | 398.9 | 1735.0 | 8184.5 | 41,535.2 |
| | | | 3.265 | 8.3 | 23.3 | 69.9 | 220.5 | 724.7 | 2465.3 | 8634.3 |
| | | | 0.006 | 0.6 | 4.8 | 30.6 | 178.4 | 1010.3 | 5719.2 | 32,901.0 |
| | | | 0.2% | 6.5% | 17.1% | 30.5% | 44.7% | 58.2% | 69.9% | 79.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.-Y.; Pan, D.-D. Discovering New Recurrence Relations for Stirling Numbers: Leveraging a Poisson Expectation Identity for Higher-Order Moments. Axioms 2025, 14, 747. https://doi.org/10.3390/axioms14100747
