Abstract

Advances in science and technology have produced vast amounts of complex, heterogeneous data from multiple sources with random sample sizes. This paper investigates the extreme behavior of competing risks with random sample sizes. Two accelerated mixed types of stable distributions are obtained as the extreme limit laws of randomly sampled competing risks under linear and power normalization, respectively. The theoretical findings are illustrated by typical examples and numerical studies. The developed methodology and models provide new insights into modeling complex data across numerous fields.

MSC:

60G70; 62P05; 62P12; 62G32

1. Introduction

Extreme value theory (EVT) focuses on modeling extreme events within a long sequence of independent and identically distributed (i.i.d.) random variables. Its applications span diverse fields such as finance, insurance, environmental science, and engineering [1,2]. Let $X_1, X_2, \ldots$ be a sequence of i.i.d. random variables with a common distribution function (d.f.) $F$, and denote by $M_n = \max_{1\le i\le n} X_i$ the sample maxima. The risk $X$ is said to belong to the max-domain of attraction of $G$ (cf. Definition 3.3.1 in [2]) if there exist normalization constants $a_n > 0$, $b_n \in \mathbb{R}$ and a non-degenerate d.f. $G$ such that (with convergence in distribution, i.e., weak convergence)

$$P(M_n \le a_n x + b_n) = F^n(a_n x + b_n) \to G(x), \qquad n \to \infty. \qquad (1)$$

The limit distribution $G$ is the so-called generalized extreme value (GEV) distribution, which satisfies the stability relation $G^n(A_n x + B_n) = G(x)$ for every integer $n \ge 1$, where $A_n > 0$ and $B_n$ are suitable constants. The GEV distribution $G$ is thus an l-max stable law, written as

$$G(x; \gamma, \mu, \sigma) = \exp\left\{-\left(1 + \gamma\,\frac{x-\mu}{\sigma}\right)_+^{-1/\gamma}\right\}, \qquad (2)$$

where $(x)_+ = \max(x, 0)$ is the positive part of $x$, and the case $\gamma = 0$ is interpreted as the limit $\exp\{-e^{-(x-\mu)/\sigma}\}$. We denote this by $F \in D_l(G)$. Here, $\gamma \in \mathbb{R}$, $\mu \in \mathbb{R}$, and $\sigma > 0$ are called the shape, location, and scale parameters, respectively. In addition, the tail behavior of the potential risk $X$ is classified into the Fréchet, Gumbel, and Weibull domains, corresponding to $\gamma > 0$, $\gamma = 0$, and $\gamma < 0$, respectively [3].
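To make the three domains in Equation (2) concrete, the following minimal Python sketch evaluates the GEV d.f. under the standard parametrization $G(x;\gamma,\mu,\sigma)=\exp\{-(1+\gamma(x-\mu)/\sigma)_+^{-1/\gamma}\}$, with $\gamma = 0$ read as the Gumbel limit. The function name `gev_cdf` is our own illustrative choice and is not part of the paper.

```python
import math


def gev_cdf(x: float, gamma: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """GEV d.f. G(x; gamma, mu, sigma) = exp{-(1 + gamma*(x - mu)/sigma)_+^(-1/gamma)}."""
    if sigma <= 0.0:
        raise ValueError("scale sigma must be positive")
    z = (x - mu) / sigma
    if gamma == 0.0:
        # Gumbel domain: the gamma -> 0 limit exp(-exp(-z)); guard against overflow
        return math.exp(-math.exp(-z)) if z > -700.0 else 0.0
    t = 1.0 + gamma * z
    if t <= 0.0:
        # Outside the support: below the lower endpoint when gamma > 0 (Frechet),
        # above the upper endpoint when gamma < 0 (Weibull)
        return 0.0 if gamma > 0.0 else 1.0
    return math.exp(-t ** (-1.0 / gamma))
```

For example, `gev_cdf(x, 0.5)` evaluates a Fréchet-type law with lower endpoint $\mu - 2\sigma$, while `gev_cdf(x, -0.5)` evaluates a Weibull-type law that equals one above its upper endpoint $\mu + 2\sigma$.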

Given the wide applications of EVT, numerous studies have explored limit theorems similar to Equation (1). Pantcheva [4] first extended the GEV limits under the linear normalization in Equation (1) to power limit laws: suppose there exist power normalization constants $\alpha_n > 0$, $\beta_n > 0$ and a non-degenerate d.f. $H$ such that

$$P\left(|M_n/\alpha_n|^{1/\beta_n}\,\mathrm{sign}(M_n) \le x\right) = F^n\left(\alpha_n |x|^{\beta_n}\,\mathrm{sign}(x)\right) \to H(x), \qquad n \to \infty, \qquad (3)$$

where the sign function equals 1, −1, and 0 for $x$ positive, negative, and zero, respectively. It is well known that $H$ in Equation (3) is a p-max stable distribution; that is, for any integer $n \ge 1$, there exist suitable constants $A_n > 0$, $B_n > 0$ such that $H^n(A_n |x|^{B_n}\,\mathrm{sign}(x)) = H(x)$. The limit distribution $H$ consists of six types of distributions, which can be written uniformly, with suitable constants, in terms of the GEV distribution $G$ defined in Equation (2), as in Equation (4) below [5]:

In what follows, we denote this by $F \in D_p(H)$. We refer to [6] for exponential normalization, which yields generalized Pareto families of asymmetric distributions as limit laws and further extends the p-stable laws under power normalization.
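A small computation shows how power normalization produces a p-max stable limit. The sketch below is our own illustration, assuming a Pareto risk with $F(u) = 1 - u^{-\alpha}$, $u \ge 1$, and the illustrative constants $\alpha_n = n^{1/\alpha}$, $\beta_n = 1/\alpha$; these choices are not taken from the paper. Inverting the power normalization gives the exact probability $(1 - 1/(nx))^n$ for $nx \ge 1$, which tends to $H(x) = \exp(-1/x)$, $x > 0$, one of the p-max stable types.

```python
import math


def power_normalized_pareto_max_cdf(x: float, n: int, tail_index: float) -> float:
    """Exact P(|M_n/alpha_n|**(1/beta_n) * sign(M_n) <= x) for n i.i.d. Pareto draws.

    Assumes F(u) = 1 - u**(-tail_index), u >= 1, and the illustrative power
    constants alpha_n = n**(1/tail_index), beta_n = 1/tail_index.
    """
    if x <= 0.0:
        return 0.0  # M_n >= 1 > 0, so the normalized maximum is positive
    alpha_n = n ** (1.0 / tail_index)
    beta_n = 1.0 / tail_index
    u = alpha_n * x ** beta_n  # invert the (increasing) power normalization
    if u < 1.0:
        return 0.0
    return (1.0 - u ** (-tail_index)) ** n


def unit_frechet(x: float) -> float:
    """Candidate p-max stable limit H(x) = exp(-1/x) for x > 0, and 0 otherwise."""
    return math.exp(-1.0 / x) if x > 0.0 else 0.0
```

With `n = 10_000`, the two functions agree to within about $10^{-4}$ at $x \in \{0.5, 1, 2, 5\}$ for any tail index, since the exact probability does not depend on $\alpha$ under these constants.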

Recently, Cao and Zhang [7] and Hu et al. [8] explored the limit behavior of extremes under linear and power normalization in the setting of competing risks, motivated by the practical need to aggregate multiple heterogeneous sources of information in terms of geography, environment, and socioeconomics [9]. Namely, the sample maxima under study are obtained from k heterogeneous subsamples drawn from k different sources (populations). Such modeling is desirable in the big data era given the complexity of real applications [10,11]. The limit behavior of these maxima obtained from multiple sources is called the limit theory of competing risks, since the overall maximum is the largest of the k competing subsample maxima:

The limit laws obtained for the competing risks in Equation (5), which extend the classical extreme value theory of Equations (1) and (3), correspond to the so-called accelerated l-max stable and accelerated p-max stable distributions (see Cao and Zhang [7] (Theorem 2.1) and Hu et al. [8] (Theorem 2.1)). Note that the key factor determining the accelerated limit is the interplay between the sample lengths and the tail behavior of the competing risks.
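The role of this interplay can be seen in an exact computation for two independent Pareto sources. The sketch below is our own illustration, assuming Pareto cdfs $1 - u^{-\alpha_j}$, $u \ge 1$, deterministic sizes $n_1 = n_2 = n$, and common linear constants $a_n = n^{1/\alpha_1}$, $b_n = 0$; all function names are hypothetical. With equal tail indices, both sources contribute and the limit is the product $\exp(-2x^{-\alpha})$; with $\alpha_1 < \alpha_2$, the heavier-tailed source dominates and the limit is $\exp(-x^{-\alpha_1})$. These correspond to the comparable-tail and dominated regimes distinguished in Remark 1(b).

```python
import math


def pareto_cdf(u: float, tail_index: float) -> float:
    """Pareto d.f. F(u) = 1 - u**(-tail_index) for u >= 1 (assumed parametrization)."""
    return 1.0 - u ** (-tail_index) if u >= 1.0 else 0.0


def competing_max_cdf(x: float, n1: int, n2: int, tail1: float, tail2: float) -> float:
    """Exact P(max(M1, M2) <= a_n * x) for independent Pareto samples of sizes n1, n2.

    The common linear constants a_n = n1**(1/tail1), b_n = 0 are an illustrative
    choice tied to the first source.
    """
    u = n1 ** (1.0 / tail1) * x
    # independence of the two sources makes the joint d.f. factorize
    return pareto_cdf(u, tail1) ** n1 * pareto_cdf(u, tail2) ** n2


def balanced_limit(x: float, tail_index: float) -> float:
    """Product of two identical Frechet limits: exp(-2 * x**(-tail_index))."""
    return math.exp(-2.0 * x ** (-tail_index)) if x > 0.0 else 0.0


def dominated_limit(x: float, tail1: float) -> float:
    """Only the heavier-tailed source survives: exp(-x**(-tail1))."""
    return math.exp(-x ** (-tail1)) if x > 0.0 else 0.0
```

For instance, with `n = 100_000`, `competing_max_cdf(1.5, n, n, 2.0, 2.0)` is close to `balanced_limit(1.5, 2.0)`, and `competing_max_cdf(1.5, n, n, 1.0, 2.0)` is close to `dominated_limit(1.5, 1.0)`.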

As advances in science and technology have produced vast amounts of complex, heterogeneous data from multiple sources with random sample sizes, a natural question is how the extreme limit law of competing risks changes when the sample sizes are uncertain. This situation is very common in environmental and financial fields; for instance, consider the largest claim among k insured policyholders, each holding insurance for a random number of days, with heterogeneous claim risks and a random total number of claims [12]. Another example is the extreme daily precipitation among k regions, where each region is exposed to a wet period of random length with different extreme precipitation risks [13]. Although the study of such heterogeneous risks under random sampling is key to risk management for decision-makers, their extreme behavior remains an unresolved issue. This paper aims to establish the limit theory of competing risks under both linear and power normalization when the sample sizes are random rather than deterministic.

Many authors have refined the extreme limit theory of sample maxima from a single risk $X$ under linear/power normalization with random sample sizes $N_n$, in two common cases:

- Case (I), random sample size independent of the basic risk. The random sample is assumed to be independent of the sample size $N_n$, and $N_n/n$ converges weakly to a non-degenerate distribution function [14,15,16,17];

- Case (II), random sample size not necessarily independent of the basic risk. There exists a positive random variable $V$ such that $N_n/n$ converges to $V$ in probability, which allows dependence between the basic risk and the sample size $N_n$ [14,18,19].

The limit behavior of extremes with random sample sizes has been investigated for a range of extensions, including sample minima [20], extreme order statistics under power normalization [19,21], stationary Gaussian processes [16], stationary chi-processes [17], and, more recently, multivariate extremes [22].

This paper focuses on the limit behavior of competing extremes (maxima of maxima defined by Equation (5) and minima of minima in Equation (14)) under linear and power normalization. We extend the accelerated l-max and p-max stable distributions to mixed forms by allowing the sample sizes to be random sequences satisfying the conditions of Cases (I) and (II) above. The theoretical results are illustrated by typical examples with random sample sizes following time-shifted Poisson, binomial, geometric, and negative binomial distributions (cf. Section 3), and by numerical studies (cf. Section 4). Our theoretical findings are expected to find applications in finance, insurance, and hydrology [23,24]. The developed methodology and models provide new insights into modeling complex data across numerous fields.

The remainder of the paper is organized as follows. Section 2 presents the main results for maxima of maxima under both linear and power normalization with random sample sizes. Extensions to competing minima and typical examples are given in Section 3. Numerical studies illustrating our theoretical findings are presented in Section 4. The proofs of all theoretical results are deferred to Appendix A.

2. Main Results

Notation. Recall that the competing risks defined in Equation (5) are generated from k independent samples, one from each of the k risks. The random sample sizes are given by k mutually independent sequences of positive integer-valued random variables, one for each source. Similar to Equation (5), we write

Here, and . Throughout this paper, for any risk $X$ with cumulative distribution function (cdf) $F$, we write , standing for the cdf of . Further, we denote by $\xrightarrow{P}$ convergence in probability, and all limits are taken as $n \to \infty$.

To simplify notation, in what follows we consider competing risks from two sources, namely $k = 2$. We present the limit behavior for Cases (I) and (II) in Sections 2.1 and 2.2, respectively.

2.1. Limit Theorem for Case (I) with Independent Sample Size

In this section, we present our main results on the limit behavior of competing risks under linear and power normalization, in Theorems 1 and 2, respectively. We focus on the following random sample size scenario: assume that there exist k positive random variables $V_1, \ldots, V_k$ such that

Condition (7) is commonly used in the study of extremes with random sample sizes [16,21,25]. More generally, one may consider a random stopping sampling process [26]. Typical examples of random sample sizes satisfying Equation (7) are given in Section 3.2 (cf. Examples 1∼3); see also Peng et al. [15] for more examples. In addition, it is worth noting that, under condition (7), the limiting random variables inherit the mutual independence of the random sample size sequences.

1. Limit behavior under linear normalization. For $j = 1, 2$, there exist constants $a_n^{(j)} > 0$ and $b_n^{(j)}$ such that the sample maxima from the $j$th source satisfy Equation (1). It further follows from Theorem 6.2.1 in Galambos [18] (Ch. 6, p. 330), together with condition (7) and a subsequence argument based on the Skorokhod representation theorem, that the randomly indexed maxima from the $j$th source converge to a mixed GEV distribution defined by

Below, we show in Theorem 1 that, under condition (7), the limit theorem for competing extremes holds with an accelerated mixed GEV distribution, which can be written as a product of the mixed GEV distributions of the form in Equation (8).
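The mechanism behind such mixed limits can be sketched by conditioning on the sample size. The display below is our own formal sketch, not the paper's proof: it assumes a single risk with d.f. $F$ satisfying Equation (1) at $u_n = a_n x + b_n$, a random size $N$ (depending on $n$) independent of the risk, and $N/n \to V$ in distribution, where $V$ has d.f. $A$. The interchange of limit and expectation is only formal here; its rigorous justification is the content of Theorem 6.2.1 in Galambos [18].

$$
\begin{aligned}
P\Big(\max_{1\le i\le N} X_i \le u_n\Big)
  &= \sum_{m\ge 1} P(N=m)\,F^{m}(u_n)
   = \mathbb{E}\Big[\big(F^{n}(u_n)\big)^{N/n}\Big] \\
  &\longrightarrow \mathbb{E}\big[G(x)^{V}\big]
   = \int_0^\infty G^{v}(x)\,\mathrm{d}A(v).
\end{aligned}
$$

For two independent sources with independent random sizes $N_1, N_2$ and a common threshold $u_n$, independence gives

$$P\Big(\max_{j=1,2}\,\max_{1\le i\le N_j} X^{(j)}_i \le u_n\Big)=\prod_{j=1}^{2} P\Big(\max_{1\le i\le N_j} X^{(j)}_i \le u_n\Big),$$

so the competing limit is a product of factors of the mixed form above, where a factor tends to one when its source is dominated on the common scale.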

Theorem 1.

Remark 1. (a) Theorem 1 reduces to Theorem 2.1 of Cao and Zhang [7], the limit theorem for competing maxima with deterministic sample sizes, if all limiting variables in condition (7) are degenerate at one (that is, the normalized sample sizes converge to one in probability).

(b) In addition, the two results in (i) and (ii) correspond, respectively, to two competing risks with comparable tails and a balanced sampling process, and to the dominated case.

(c) In general, our results introduce a considerably larger class of accelerated mixed GEV distributions, namely products of mixed GEV distributions of the form in Equation (8).

2. Limit behavior under power normalization. For $j = 1, 2$, there exist power normalization constants $\alpha_n^{(j)} > 0$ and $\beta_n^{(j)} > 0$ such that the sample maxima from the $j$th source satisfy Equation (3). We show in Theorem 2 that, under condition (7), the limit theorem for competing extremes holds with an accelerated mixed distribution, P, which can be written as a product of the mixed p-max stable distributions given below

Here, the p-max stable limits involved are of the same p-type as H defined in Equation (4).

Theorem 2.

Let the competing maxima be given by Equation (6), with the basic risks and the random sample sizes mutually independent. Assume that condition (3) holds for the sample maxima with suitable constants and that condition (7) holds for the random sample sizes. Suppose further that there exist two non-negative constants α and β such that

as $n \to \infty$. The following claims hold with the mixed p-stable distributions defined by Equation (10).

- (i).

- (ii).

- The following limit distribution holds provided that one of the following four conditions is satisfied (notation: $x_F = \sup\{x : F(x) < 1\}$ denotes the right endpoint of a d.f. $F$)

- (a).

- When is one of the same p-types of , and is one of the same p-types of .

- (b).

- When is one of the same p-types of , and is one of the same p-types of for . In addition, Equation (11) holds with and .

- (c).

- When is one of the same p-types of , and is one of the same p-types of for . In addition, Equation (11) holds with and or and .

- (d).

Remark 2.

(a) Theorem 2 reduces to the extreme limit behavior of competing risks with deterministic sample sizes, discussed extensively in Hu et al. [8], if all limiting variables in condition (7) are degenerate at one. Random sample sizes are very common in practice. For instance, in physics and insurance, the sample size often follows a shifted Poisson distribution whose mean grows with n, so that the normalized sample size converges to one. For more examples, see Example 1 below and Remark 2.2 of Abd Elgawad et al. [27].

(b) In addition, the two results in (i) and (ii) correspond to the two different cases of the constants in condition (11). They describe, respectively, the limit behavior of two competing risks with comparable tails and a balanced sampling process, and the dominated case.

(c) Theorem 2 extends Theorem 2.1 of Barakat and Nigm [19] beyond the non-competing setting, where the extremes come from a single source. In general, the family of accelerated mixed power-stable distributions is a larger class that includes those of the form in Equation (10).

2.2. Limit Theorem for Case (II) with Non-Independent Sample Size

In this section, we focus on Case (II), where we relax the independence condition between the basic risk and the random sample size. In return, we strengthen convergence in distribution to convergence in probability, as stated below. Assume that there exist positive random variables such that

Theorem 3.

Theorem 4.

Remark 3.

Recalling that G and H given by Equations (2) and (4) are the so-called l-max stable and p-max stable distributions, we call L and P accelerated mixed l-max stable and accelerated mixed p-max stable distributions if they can be written as products of mixed l-max stable and mixed p-max stable distributions, respectively. Thus, the limit laws obtained in Theorems 3 and 4 belong to considerably larger families of accelerated mixed l-max stable and accelerated mixed p-max stable distributions, respectively.

3. Extension and Examples

In this section, we first extend our results to competing minima in Section 3.1, and then present typical examples of random sample sizes with the resulting mixed extreme distributions in Section 3.2.

3.1. Extreme Limit Theory for Competing Minima Risks

In some practical applications, such as lifetime analysis in reliability studies or athletes' race times in sports studies, extreme minima play an important role. As shown in Corollaries 1 and 2 below, analogous claims hold for competing risks with random sample sizes in terms of minima of minima. Essentially, just as the right-tail behavior of X is captured by its sample maxima, the left-tail behavior of X is captured by the sample minima $m_n = \min_{1\le i\le n} X_i$. Further, the left tail of X can be studied through the right tail of −X, since (cf. Theorem 1.8.3 in Leadbetter et al. [1] and Grigelionis [28])

Note that the condition that the sample maxima of $-X_1, \ldots, -X_n$ satisfy Equation (1) for some constants $a_n > 0$ and $b_n$ is equivalent to

where is of the same l-type of GEV distributions given in Equation (2).
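As a worked version of this equivalence, suppose (for illustration) that the maxima of $-X_1, \ldots, -X_n$ satisfy Equation (1) with constants $a_n > 0$, $b_n$ and a GEV limit $G$, and write $m_n = \min_{1\le i\le n} X_i = -\max_{1\le i\le n}(-X_i)$. Since GEV d.f.s are continuous, for every real $x$ we have

$$P(m_n \le a_n x - b_n) = 1 - P\Big(\max_{1\le i\le n}(-X_i) < a_n(-x) + b_n\Big) \longrightarrow 1 - G(-x),$$

so the minima are attracted to the reflected law $1 - G(-x)$. Applying the same identity source by source, a minimum of minima becomes a maximum of maxima of the negated risks:

$$\min_{1\le j\le k}\,\min_{1\le i\le n_j} X^{(j)}_i = -\max_{1\le j\le k}\,\max_{1\le i\le n_j}\big(-X^{(j)}_i\big).$$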

Corollary 1.

Suppose the same conditions as for Theorems 1 or 3 are satisfied.

Noting that , the following corollary holds for the power normalized minima of minima specified in Equation (14).

Corollary 2.

Suppose that the same conditions as for Theorems 2 or 4 are satisfied. The following claims hold for , with the mixed distributions defined in Equation (10).

- (i).

- (ii).

- The following limit distribution holds provided that one of the conditions (a)∼(d) in Theorem 2 holds.

3.2. Examples

Below, we give three examples (Examples 1∼3) to illustrate the main results in Theorems 1 and 2. Specifically, we consider random sample sizes following time-shifted versions of the Poisson and binomial distributions, as well as geometric and negative binomial distributions, with parameters satisfying certain average stability conditions [15].

Example 1

(Time-shifted binomial/Poisson distributed random sample size). Let follow a time-shifted binomial distribution with probability mass function (pmf) given as

Under a suitable condition on its parameters, the normalized sample size $N_n/n$ converges in probability to one. Similarly, for a time-shifted Poisson distributed sample size with a suitable mean, $N_n/n$ converges in probability to one [15] (Lemma 4.3). For these random sample sizes, the claims of Theorems 1 and 2 reduce to the deterministic sample size cases; see Remarks 1(a) and 2(a).
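The concentration of $N_n/n$ around one can be checked with a small simulation. This is a sketch under our own assumptions: we take $N_n = m + \mathrm{Poisson}(n)$ with the hypothetical values $n = 20\,000$ and $m = 5$, which need not match the parametrization used in [15]. The Poisson draw counts unit-rate exponential arrivals in $[0, n]$, using only Python's standard library.

```python
import random


def shifted_poisson(mean: float, shift: int, rng: random.Random) -> int:
    """Draw shift + Poisson(mean) by counting unit-rate arrivals in [0, mean]."""
    t, count = 0.0, 0
    while True:
        t += rng.expovariate(1.0)  # exponential inter-arrival time
        if t > mean:
            return shift + count
        count += 1


rng = random.Random(2024)
n, m = 20_000, 5  # hypothetical: N_n = m + Poisson(n)
ratios = [shifted_poisson(n, m, rng) / n for _ in range(50)]
# Each ratio N_n / n should lie close to one (standard deviation about 1/sqrt(n)).
print(min(ratios), max(ratios))
```

Because the standard deviation of $N_n/n$ is about $n^{-1/2} \approx 0.007$ here, all 50 ratios should fall well within a few percent of one, consistent with a degenerate limit $V \equiv 1$.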

Example 2

(Time-shifted geometric distributed sample size). In the case of linear normalization, suppose that the basic risk is attracted to G, one of the three l-types of distributions specified in Equation (2). Suppose that the random sample size follows a geometric distribution whose mean tends to infinity. Then the sample size, normalized by its mean, converges in distribution to a random scale V following a standard exponential distribution. Consequently, Theorem 1 holds with an accelerated mixed l-max stable distribution, which is the product of mixed l-max stable distributions of the form L described below.

which is taken as its limit for .
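Because V is standard exponential, the mixture in Example 2 can be evaluated in closed form. The following short computation is ours, assuming $0 < G(x) \le 1$ (the mixture equals zero where $G(x) = 0$):

$$\int_0^\infty G^{v}(x)\,e^{-v}\,\mathrm{d}v = \int_0^\infty e^{-v\,(1-\log G(x))}\,\mathrm{d}v = \frac{1}{1-\log G(x)}.$$

For instance, for the Fréchet type $G(x) = \exp(-x^{-\alpha})$, $x > 0$, this gives the log-logistic d.f. $1/(1 + x^{-\alpha})$. Replacing G by a p-max stable H yields the analogous power-normalized form $1/(1 - \log H(x))$.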

Similarly, for the power normalization case, recalling that H in Equation (4) is a p-max type limit distribution, Theorem 2 holds with an accelerated mixed p-max stable distribution, the product of mixed p-max stable distributions of the form P described below (cf. Barakat and Nigm [19] [Example 2.1]).

Example 3

(Time-shifted negative binomial distributed sample size). As an extension of m-shifted geometric distributions, we consider a time-shifted negative binomial distributed sample size with pmf given by

It follows from Lemma 4.1 of Peng et al. [15] that, as $n \to \infty$, the normalized sample size converges in distribution to V, a gamma random variable with shape parameter r and scale parameter 1; i.e., the cdf of V is given by

where $\Gamma(\cdot)$ denotes the gamma function. It then follows from Theorem 1 that

Similarly, Theorem 2 follows with the accelerated mixed p-max stable distributions, which are products of cdfs of form P given below.
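Since $V \sim \mathrm{Gamma}(r, 1)$ has Laplace transform $\mathbb{E}[e^{-sV}] = (1+s)^{-r}$, taking $s = -\log G(x)$ suggests the closed form $\mathbb{E}[G(x)^V] = (1 - \log G(x))^{-r}$ for the mixed law in Example 3, with $r = 1$ recovering the standard exponential (geometric) case. The Python sketch below is ours, not taken from the source; it checks this identity numerically with Simpson's rule. The function names, the truncation point 80, and the restriction $r \ge 1$ are illustrative choices.

```python
import math


def gamma_mixture_closed_form(g: float, r: float) -> float:
    """E[g**V] for V ~ Gamma(shape r, scale 1), i.e. (1 - log g)**(-r) for 0 < g <= 1."""
    if g <= 0.0:
        return 0.0
    return (1.0 - math.log(g)) ** (-r)


def gamma_mixture_numeric(g: float, r: float, upper: float = 80.0, steps: int = 20_000) -> float:
    """Composite Simpson approximation of the integral of g**v times the Gamma(r, 1) density."""
    if r < 1.0:
        raise ValueError("this simple quadrature assumes r >= 1 (integrand bounded at 0)")
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    if g <= 0.0:
        return 0.0
    rate = 1.0 - math.log(g)  # g**v * exp(-v) = exp(-rate * v)
    log_norm = math.lgamma(r)

    def integrand(v: float) -> float:
        return v ** (r - 1.0) * math.exp(-rate * v - log_norm)

    h = upper / steps
    total = integrand(0.0) + integrand(upper)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * integrand(i * h)
    return total * h / 3.0
```

For a Fréchet-type $G(x) = \exp(-x^{-\alpha})$, passing `g = G(x)` to `gamma_mixture_closed_form` gives $(1 + x^{-\alpha})^{-r}$.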

4. Numerical Studies

We conduct a Monte Carlo simulation to illustrate Theorems 1 and 2 with the m-shifted random sample sizes given in Examples 1∼3. In the following simulations, we set the shift parameter to the same value for all time-shifted random sample size distributions. The basic risks are drawn from Pareto and Pareto (recall the cdf of Pareto is given as ), and the random sample sizes are mutually independent. Additionally, the number of repetitions is taken as . We illustrate the main results of Theorem 1 using the three examples given in Section 3.2 above.
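The simulation design can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code. It assumes two independent Pareto sources with the same tail index $\alpha = 2$ and cdf $1 - x^{-\alpha}$, $x \ge 1$; independent 5-shifted geometric sample sizes with mean about $n = 1000$ (the $r = 1$ negative binomial case); linear constants $a_n = n^{1/\alpha}$, $b_n = 0$; and 20 000 replications. All helper names are hypothetical. Under these assumptions, each source contributes the mixed factor $\mathbb{E}[\exp(-Vx^{-\alpha})] = 1/(1 + x^{-\alpha})$ with V standard exponential, so the competing limit is the product $(1 + x^{-\alpha})^{-2}$.

```python
import math
import random


def shifted_geometric(mean: float, shift: int, rng: random.Random) -> int:
    """shift + Geometric on {1, 2, ...} with success probability 1/mean."""
    p = 1.0 / mean
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    # inversion: P(G > k) = (1 - p)**k
    return shift + max(1, math.ceil(math.log(u) / math.log1p(-p)))


def pareto_sample_max(size: int, tail_index: float, rng: random.Random) -> float:
    """Max of `size` i.i.d. Pareto draws (F(u) = 1 - u**-tail_index) by inverting F**size."""
    u = rng.random()
    while u == 0.0:
        u = rng.random()
    # solve (1 - y**-tail_index)**size = u, using expm1 for accuracy when size is large
    return (-math.expm1(math.log(u) / size)) ** (-1.0 / tail_index)


def simulate_competing_maxima(n: int, tail_index: float, shift: int = 5,
                              reps: int = 20_000, seed: int = 1) -> list:
    """Linearly normalized maxima of maxima of two Pareto sources with random sizes."""
    rng = random.Random(seed)
    a_n = n ** (1.0 / tail_index)  # b_n = 0 for Pareto tails
    sample = []
    for _ in range(reps):
        z = max(pareto_sample_max(shifted_geometric(n, shift, rng), tail_index, rng)
                for _ in range(2))
        sample.append(z / a_n)
    return sample


def mixed_frechet_product(x: float, tail_index: float) -> float:
    """Limit candidate: (1 / (1 + x**-tail_index))**2, one mixed factor per source."""
    return (1.0 + x ** (-tail_index)) ** (-2) if x > 0.0 else 0.0


sample = simulate_competing_maxima(n=1000, tail_index=2.0)
for x in (0.5, 1.0, 2.0):
    empirical = sum(z <= x for z in sample) / len(sample)
    print(x, round(empirical, 3), round(mixed_frechet_product(x, 2.0), 3))
```

Sampling each subsample maximum directly by inversion, rather than drawing all individual observations, keeps the cost independent of the random sample sizes. A histogram of `sample` can then be compared with the density of the limit, as in Figure 2.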

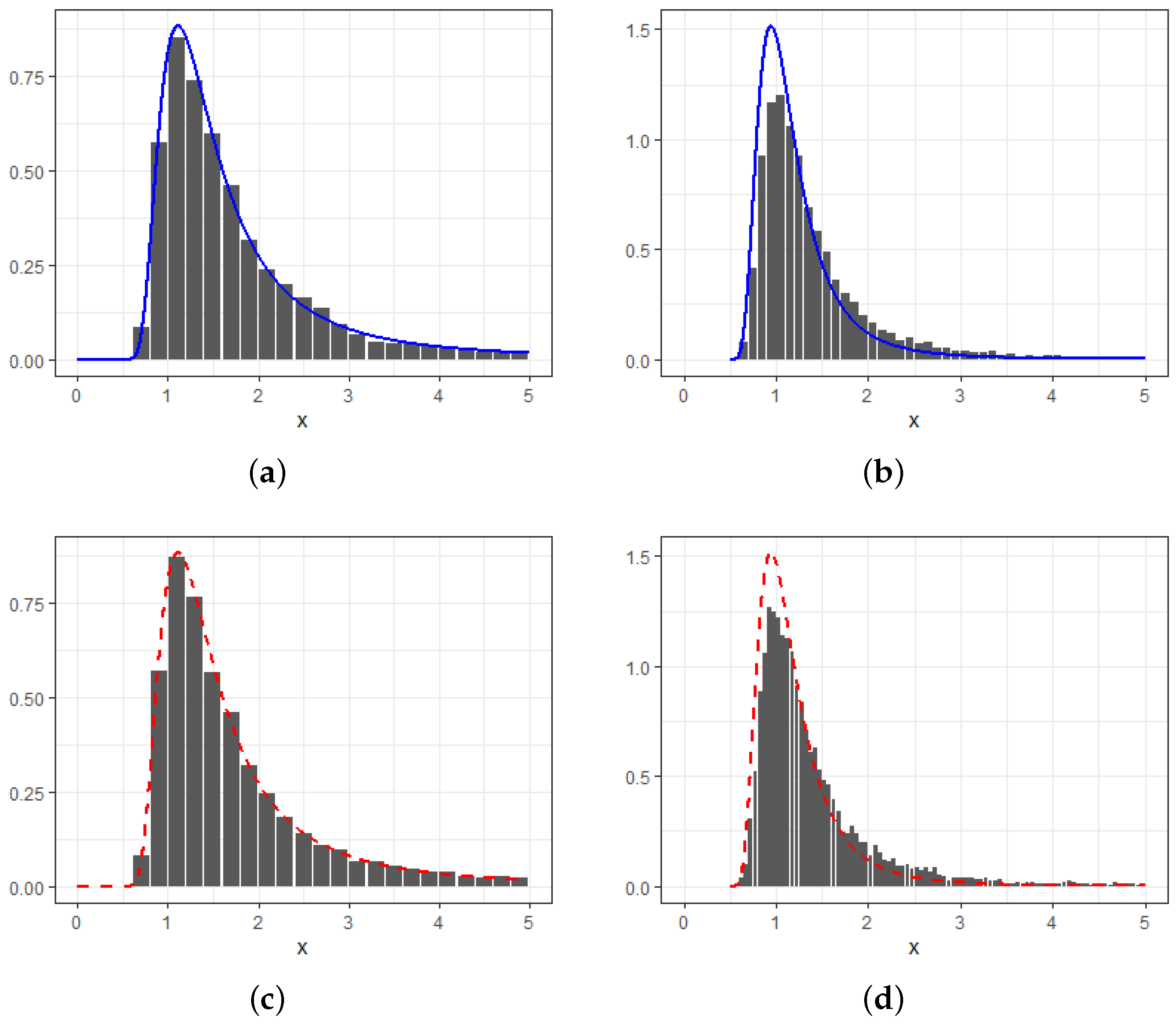

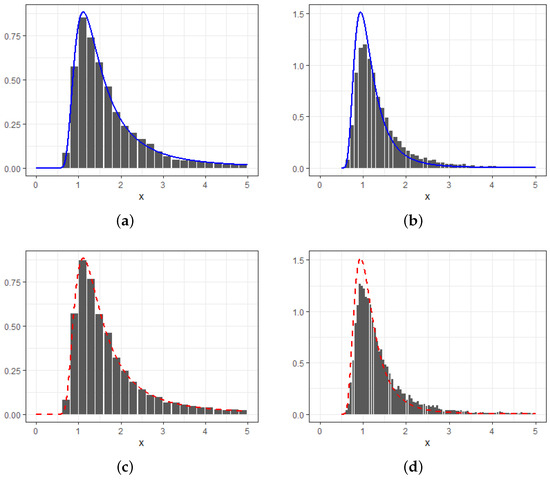

1. Comparison of Pareto competing extremes with deterministic sample sizes and Poisson distributed random sample sizes. In Figure 1, we demonstrate that competing extremes with Poisson distributed sample sizes behave similarly to the case with non-random sample sizes. Let follow an m-shifted Poisson distribution with mean parameters . We then generate competing Pareto extremes with basic risks following Pareto for given It follows from Theorems 1 and 2 and Example 1, together with Example 4.6 of Hu et al. [8], that (recall , the Fréchet distribution)

Figure 1.

Distribution approximation of linear normalized (a,b) and (c,d) with both ’s from Pareto and Poisson distributed sample size with mean . Here, and with in (b,d) and (a,c) by and , respectively.

- For or with , we have

- For with , we have

Note that the power-normalized extremes behave similarly to the linearly normalized ones, up to a power transformation. Therefore, we focus on linear normalization in the numerical studies below.

In Figure 1, we take and with to show the above two cases. Overall, the competing Pareto extremes are well fitted by the accelerated GEV distribution; the fit is slightly better for the non-random sample size than for the random sample size cases. Furthermore, the accelerated GEV approximation (Figure 1a,c) is closer to the empirical competing extremes than the approximation in the dominated case.

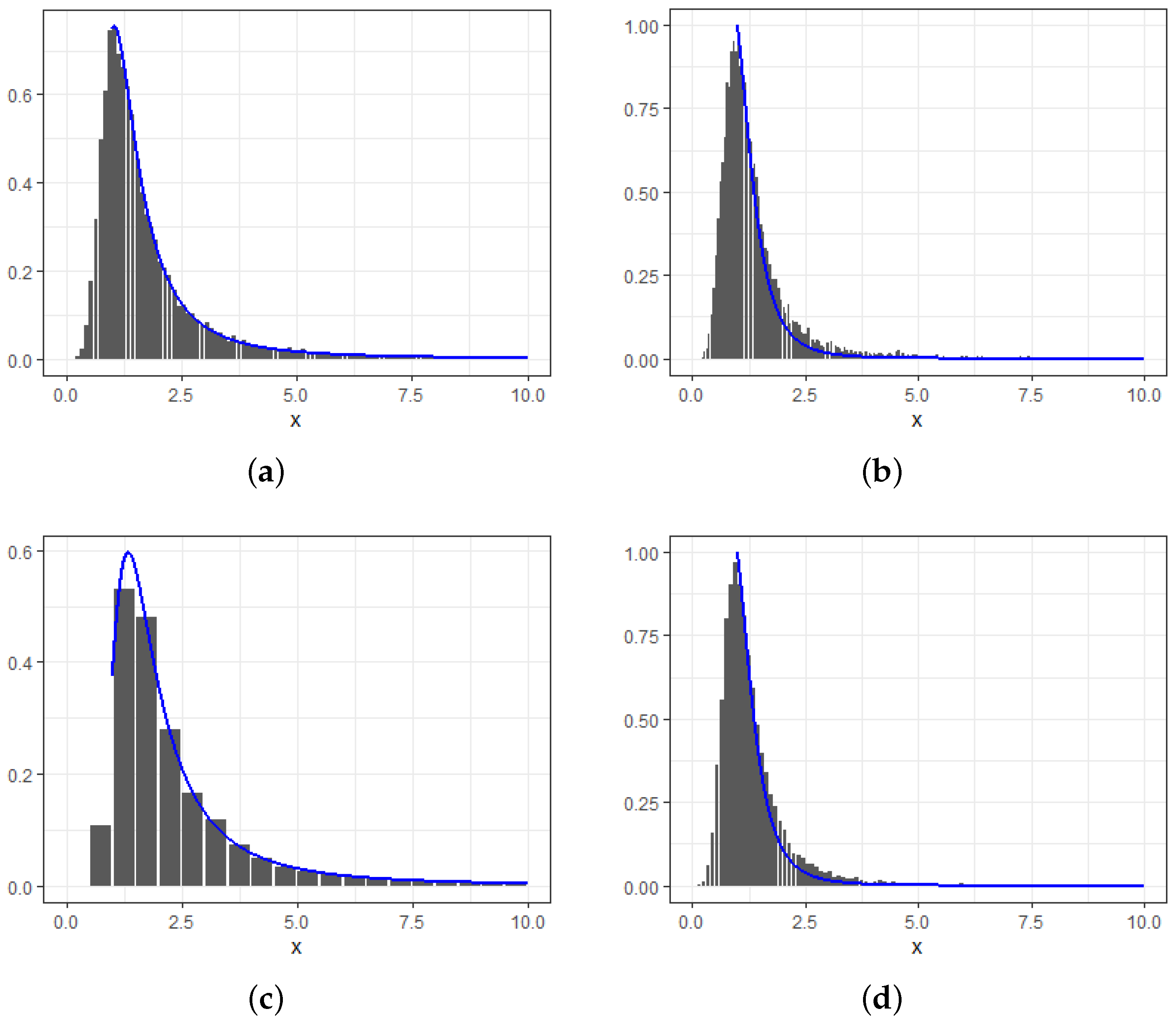

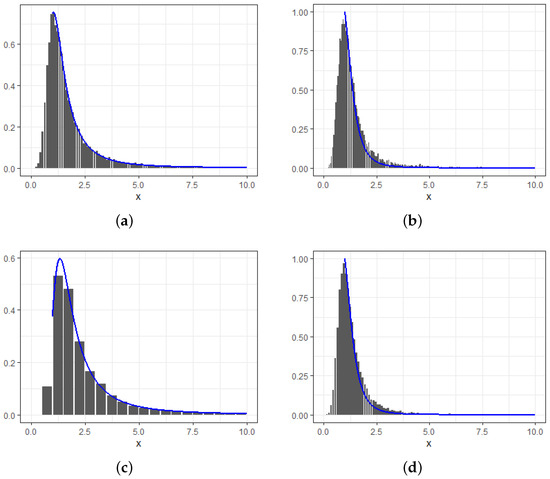

2. Comparison of Pareto competing extremes with geometric and negative binomial distributed sample sizes. We consider the maxima of maxima with basic risks and random sample sizes following an m-shifted negative binomial distribution with probability , and . It follows from Example 4.6 of Hu et al. [8], Theorem 1 (a, b), and Example 3 that, with

- For or with , we have

- For with , we have

Thus, its density function is given by

In Figure 2, we set with in (a, c) and (b, d), respectively. The random sample size follows a 5-shifted negative binomial distribution with r = 1 in (a, b) (namely, the geometric distribution), in (c, d), and probability . The Pareto basic risks are set with and . Consequently, the sub-maxima are fully competing when , resulting in the accelerated mixed extreme limit distributions shown in Figure 2a,c. In contrast, the dominated limit behavior is shown in Figure 2b,d as .

Figure 2.

Distribution approximation of linear normalized with both ’s from Pareto. The random sample size follows a negative binomial distribution with (the geometric distribution) (a,b), (c,d), and probability . Here, and with in (a,c) and (b,d) with pdf curves of and , respectively.

In general, the theoretical density curve given by Equation (16) approximates the histogram very well (Figure 2). Further, the approximation with geometric distributed random sample sizes is slightly better than with negative binomial ones. In addition, when negative binomial random sample sizes apply, the approximation for the dominated case (Figure 2d) is slightly better than for the accelerated case.

Author Contributions

Conceptualization, L.B., K.H. and C.L.; methodology, K.H. and C.L.; software, L.B.; validation, L.B., Z.T. and C.L.; formal analysis, L.B., K.H. and C.L.; investigation, K.H.; writing—original draft preparation, C.L. and K.H.; writing—review and editing, C.W., Z.T. and C.L.; visualization, C.L.; supervision, C.L.; project administration, C.L.; funding acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

Long Bai is supported by National Natural Science Foundation of China Grant no. 11901469, Natural Science Foundation of the Jiangsu Higher Education Institutions of China grant no. 19KJB110022, and University Research Development Fund no. RDF-21-02-071. Chengxiu Ling is supported by the Research Development Fund [RDF1912017] and the Post-graduate Research Fund [PGRS2112022] at Xi’an Jiaotong-Liverpool University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

We thank the editors and all reviewers for their constructive suggestions and comments that greatly helped to improve the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Proofs of Theorems 1∼4

We first present a lemma, followed by the proofs of the theorems established in Section 2.

Lemma A1

(Theorem 2.1 by Cao and Zhang [7]). If and satisfy Equation (1) with , the limit distribution of as can be determined in the following cases:

The proof of Lemma A1 is omitted; a detailed proof is given in Cao and Zhang [7] (p. 250).

Below, we present the proofs of Theorems 1∼4 in turn.

Proof of Theorem 1.

In view of Theorem 6.2.1 by Galambos [18], it follows by the independence of the basic risk, , and the random sample size, , and conditions (1) and (7) that

Further, it follows by the mutual independence between and that

The straightforward application of Equation (A1) gives

Next, we turn to show the limit behavior of . First, we rewrite as

Case (i). It follows by conditions (1) and (9) with that (see also the relevant proof of Lemma A1 for Cao and Zhang [7]) (Theorem 2.1, page 250)

Therefore, in view of Theorem 6.2.1 in Galambos [18], we have by condition (7) and the dominated convergence theorem

Case (ii). Arguments similar to those for Case (i) imply that .

Consequently, we complete the proof of Theorem 1. □

Proof of Theorem 2.

Noting that and are independent, we rewrite the left-hand side of Equation (12) as follows.

Since condition (3) holds for and condition (7) is satisfied for , it follows by Theorem 2.1 in Barakat and Nigm [19] that

Next, we show the limit of . We rewrite as

where with given by Equation (11).

Case (i). Noting that as , condition (3) holds uniformly for and thus

Therefore, using again Theorem 2.1 in Barakat and Nigm [19], we have by the dominated convergence theorem

Case (ii). It remains to show that , which can be confirmed by applying Theorem 2.1 in Barakat and Nigm [19] together with the dominated convergence theorem and condition (7) if we can show

In what follows, we show that Equation (A6) holds if one of the four conditions specified in Theorem 2 (ii) is satisfied.

- (a).

- Since and are one of the same p-types of and , respectively, we have, for ,We thus obtain Equation (A6).

- (b).

- For being one of the same p-types of , and being one of the same p-types of with , we have, forholds for all . Therefore, Equation (A6) follows.

- (c).

- For being one of the same p-types of , and being the same p-type of with , we have, for or and anyWe have thus .

- (d).

- For being one of the same p-types of , we have, for or , and anyindicating that .

We complete the proof of Theorem 2. □

Proof of Theorem 3.

Proof of Theorem 4.

We first show that the claim holds for the jth sample maxima with normalizing constants , i.e.,

Denote by the probability mass function of . We have

It follows from the law of total probability and the independence between the basic risks and the sample size that

Since as , we have . Therefore,

Note that condition (7) implies that there exists a subsequence such that . Thus, it follows from Theorem 2.1 in Berman [25] that, for every ,

References

- [1] Leadbetter, M.R.; Lindgren, G.; Rootzén, H. Extremes and Related Properties of Random Sequences and Processes; Springer: New York, NY, USA, 1983.

- [2] Embrechts, P.; Klüppelberg, C.; Mikosch, T. Modelling Extremal Events; Stochastic Modelling and Applied Probability; Springer: Berlin/Heidelberg, Germany, 1997.

- [3] Beirlant, J.; Teugels, J.L. Limit distributions for compounded sums of extreme order statistics. J. Appl. Probab. 1992, 29, 557–574.

- [4] Pantcheva, E. Limit Theorems for Extreme Order Statistics under Nonlinear Normalization; Springer: Berlin/Heidelberg, Germany, 1985; pp. 284–309.

- [5] Nasri-Roudsari, D. Limit distributions of generalized order statistics under power normalization. Commun. Stat.-Theory Methods 1999, 28, 1379–1389.

- [6] Barakat, H.M.; Khaled, O.M.; Rakha, N.K. Modeling of extreme values via exponential normalization compared with linear and power normalization. Symmetry 2020, 12, 1876.

- [7] Cao, W.; Zhang, Z. New extreme value theory for maxima of maxima. Stat. Theory Relat. Fields 2021, 5, 232–252.

- [8] Hu, K.; Wang, K.; Constantinescu, C.; Zhang, Z.; Ling, C. Extreme Limit Theory of Competing Risks under Power Normalization. arXiv 2023, arXiv:2305.02742.

- [9] Chen, Y.; Guo, K.; Ji, Q.; Zhang, D. “Not all climate risks are alike”: Heterogeneous responses of financial firms to natural disasters in China. Financ. Res. Lett. 2023, 52, 103538.

- [10] Cui, Q.; Xu, Y.; Zhang, Z.; Chan, V. Max-linear regression models with regularization. J. Econom. 2021, 222, 579–600.

- [11] Zhang, Z. Five critical genes related to seven COVID-19 subtypes: A data science discovery. J. Data Sci. 2021, 19, 142–150.

- [12] Soliman, A.A. Bayes Prediction in a Pareto Lifetime Model with Random Sample Size. J. R. Stat. Soc. Ser. 2000, 49, 51–62.

- [13] Korolev, V.; Gorshenin, A. Probability models and statistical tests for extreme precipitation based on generalized negative binomial distributions. Mathematics 2020, 8, 604.

- [14] Barakat, H.; Nigm, E. Convergence of random extremal quotient and product. J. Stat. Plan. Inference 1999, 81, 209–221.

- [15] Peng, Z.; Jiang, Q.; Nadarajah, S. Limiting distributions of extreme order statistics under power normalization and random index. Stochastics 2012, 84, 553–560.

- [16] Tan, Z.Q. The limit theorems for maxima of stationary Gaussian processes with random index. Acta Math. Sin. 2014, 30, 1021–1032.

- [17] Tan, Z.; Wu, C. Limit laws for the maxima of stationary chi-processes under random index. Test 2014, 23, 769–786.

- [18] Galambos, J. The Asymptotic Theory of Extreme Order Statistics; Wiley Series in Probability and Mathematical Statistics; Wiley: New York, NY, USA, 1978.

- [19] Barakat, H.; Nigm, E. Extreme order statistics under power normalization and random sample size. Kuwait J. Sci. Eng. 2002, 29, 27–41.

- [20] Dorea, C.C.; Gonçalves, C.R. Asymptotic distribution of extremes of randomly indexed random variables. Extremes 1999, 2, 95–109.

- [21] Peng, Z.; Shuai, Y.; Nadarajah, S. On convergence of extremes under power normalization. Extremes 2013, 16, 285–301.

- [22] Hashorva, E.; Padoan, S.A.; Rizzelli, S. Multivariate extremes over a random number of observations. Scand. J. Stat. 2021, 48, 845–880.

- [23] Shi, P.; Valdez, E.A. Multivariate negative binomial models for insurance claim counts. Insur. Math. Econ. 2014, 55, 18–29.

- [24] Ribereau, P.; Masiello, E.; Naveau, P. Skew generalized extreme value distribution: Probability-weighted moments estimation and application to block maxima procedure. Commun. Stat.-Theory Methods 2016, 45, 5037–5052.

- [25] Berman, S.M. Limiting distribution of the maximum term in sequences of dependent random variables. Ann. Math. Stat. 1962, 33, 894–908.

- [26] Freitas, A.; Hüsler, J.; Temido, M.G. Limit laws for maxima of a stationary random sequence with random sample size. Test 2012, 21, 116–131.

- [27] Abd Elgawad, M.; Barakat, H.; Qin, H.; Yan, T. Limit theory of bivariate dual generalized order statistics with random index. Statistics 2017, 51, 572–590.

- [28] Grigelionis, B. On the extreme-value theory for stationary diffusions under power normalization. Lith. Math. J. 2004, 44, 36–46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).